Fixes #3043

`surface_texture.present()` will cause panics if no work is done on a given frame. "Views" are how we queue up work. Without any cameras, no work is produced. This adds a "clear pass" for windows without views, which ensures we clear windows (thus doing work) every frame.

This is a "quick fix". It can be made much cleaner once we make "render targets" a concept and move some responsibilities around. Then we just clear the "render target" once instead of clearing "views". I _might_ have time to tackle that work prior to 0.6, but I doubt it. If "render targets" don't make it into 0.6, they will be one of the first things I tackle after release.

# Objective

Port bevy_ui to pipelined-rendering (see #2535)

## Solution

I made some changes during the port:

- [X] separate color from the texture asset (as suggested [here](https://discord.com/channels/691052431525675048/743663924229963868/874353914525413406))

- [X] ~give the vertex shader a per-instance buffer instead of per-vertex buffer~ (incompatible with batching)

Remaining features to implement to reach parity with the old renderer:

- [x] textures

- [X] TextBundle

I'd also like to add these features, but they need some design discussion:

- [x] batching

- [ ] separate opaque and transparent phases

- [ ] multiple windows

- [ ] texture atlases

- [ ] (maybe) clipping

Updates the wireframe rendering initially implemented in https://github.com/bevyengine/bevy/pull/562 to the new renderer.

It lives in `bevy_pbr2` instead of `bevy_render2` because that way it can reuse the `MeshPipeline`.

# Objective

- Rendering before MainPass should be possible, so clearing needs to happen in an earlier pass.

- Fixes #3190.

## Solution

- I added a "Clear" SubGraph, a "ClearPassNode" Node that clears the color and depth attachments of all views, and a "ClearNodeDriver" Node that schedules the "ClearPassNode" before MainPass.

- Make sure that the 2d and 3d draw passes do not clear their attachments anymore.

### Notes

- With the current pipeline examples, nothing should change in behaviour.

- I would like to add an example that adds a pass between ClearPass and MainPass, but I do not understand enough about the new render architecture to do that yet.

- Clears all attachments for all views: I do not know enough about rendering in general to say whether there is a use case for not clearing.

- Does not solve #3043 as we still need Cameras/ViewTargets to clear.

# Objective

The new renderer does not support any options yet for wgpu. These are needed for example for rendering wireframes (see #3193).

## Solution

I've ported WgpuOptions to bevy_render2.

The defaults match the defaults that were used before this PR (meaning, some specific options when target_arch = wasm32).

Additionally, I removed `Auto` from WgpuBackends and added `Primary`. The default will use primary or GL based on the target_arch.

# Objective

Implement clustered-forward rendering.

## Solution

~~FIXME - in the interest of keeping the merge train moving, I'm submitting this PR now before the description is ready. I want to add in some comments into the code with references for the various bits and pieces and I want to describe some of the key decisions I made here. I'll do that as soon as I can.~~ Anyone reviewing is welcome to add review comments where you want to know more about how something or other works.

* The summary of the technique is that the view frustum is divided into a grid of sub-volumes called clusters. Point lights are tested against each of the clusters to see if they would affect that volume within the scene and, if so, are added to a list of lights affecting that cluster. Then, when shading a fragment (a point on the surface of a mesh within the scene), the point is mapped to a cluster and only the lights affecting that cluster are used in lighting calculations. This brings huge performance and scalability benefits, as most of the time lights are placed so that not many of them overlap in terms of their sphere of influence, even though there may be many distinct point lights visible in the scene. Doing all the lighting calculations for all visible lights in the scene for every pixel on the screen quickly becomes a performance limitation. Clustered forward rendering allows us to make an approximate list of lights that affect each pixel, indeed each surface in the scene (as it works along the view z axis too, unlike tiled/forward+).

* WebGL2 is a platform we want to support and it does not support storage buffers. Uniform buffer bindings are limited to a maximum of 16384 bytes per binding. I used bit shifting and masking to pack the cluster light lists and various indices into a uniform buffer, and the 16kB limit is very likely the first bottleneck in scaling the number of lights in a scene at the moment if the lights can affect many clusters due to their range or proximity to the camera (there are a lot of clusters close to the camera, which is an area for improvement). We could store the information in textures instead of uniform buffers to remove this bottleneck, though I don’t know if there are performance implications to reading from textures instead of uniform buffers.

* Because of the uniform buffer binding size limitations we can support a maximum of 256 lights with the current size of the PointLight struct

* The z-slicing method (i.e. the mapping from view space z to a depth slice which defines the near and far planes of a cluster) is using the Doom 2016 method. I need to add comments with references to this. It’s an exponential function that simplifies well for the purposes of optimising the fragment shader. xy grid divisions are regular in screen space.

* Some optimisation work was done on the allocation of lights to clusters, which involves intersection tests, and for this number of clusters and lights the system has insignificant cost using a fairly naïve algorithm. I think for more lights / finer-grained clusters we could use a BVH, but at some point it would be just much better to use compute shaders and storage buffers.

* Something else to note is that it is absolutely infeasible to use plain cube map point light shadow mapping for many lights. It does not scale in terms of performance nor memory usage. There are some interesting methods I saw discussed in reference material that I will add a link to which render and update shadow maps piece-wise, but they also need compute shaders to work well. Basically for now you need to sacrifice point light shadows for all but a handful of point lights if you don’t want to kill performance. I set the limit to 10 but that’s just what we had from before where 10 was the maximum number of point lights before this PR.

* I added a couple of debug visualisations behind a shader def that were useful for seeing performance impact of light distribution - I should make the debug mode configurable without modifying the shader code. One mode shows the number of lights affecting each cluster by tinting toward red for few lights or green for many lights (maxes out at 16, but not sure that’s a reasonable max). The other shows which cluster the surface at a fragment belongs to by tinting it with a randomish colour. This can help to understand deeper performance issues due to screen space tiles spanning multiple clusters in depth with divergent shader execution times.
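
The exponential z-slicing described above can be sketched as follows. This uses the commonly cited closed form for the Doom 2016 scheme and is not necessarily the exact code in this PR; `z_slice`, `near`, `far`, and `num_slices` are illustrative names, and `view_z` is assumed to be a positive view-space depth.

```rust
/// Maps a positive view-space depth to a depth-slice index.
/// Slice boundaries lie at `near * (far / near)^(i / num_slices)`.
fn z_slice(view_z: f32, near: f32, far: f32, num_slices: u32) -> u32 {
    let n = num_slices as f32;
    let log_ratio = (far / near).ln();
    // Both factors are constant per view, so they can be precomputed on the
    // CPU, leaving one log, one multiply and one subtract per fragment.
    let scale = n / log_ratio;
    let bias = n * near.ln() / log_ratio;
    let slice = (view_z.ln() * scale - bias).floor();
    // Depths in front of `near` or beyond `far` land in the first or last slice.
    slice.clamp(0.0, n - 1.0) as u32
}
```

With `near = 1`, `far = 16` and four slices, the boundaries fall at depths 1, 2, 4, 8 and 16, so slices close to the camera are much thinner than distant ones.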

Also, there are more things that could be done as improvements, and I will document those somewhere (I'm not sure where will be the best place... in a todo alongside the code, a GitHub issue, somewhere else?) but I think it works well enough and brings significant performance and scalability benefits that it's worth integrating already now and then iterating on.

* Calculate the light’s effective range based on its intensity and physical falloff and either just use this, or take the minimum of the user-supplied range and this. This would avoid unnecessary lighting calculations for clusters that cannot be affected. This would need to take into account HDR tone mapping as in my not-fully-understanding-the-details understanding, the threshold is relative to how bright the scene is.

* Improve the z-slicing to use a larger first slice.

* More gracefully handle the cluster light list uniform buffer binding size limitations by prioritising which lights are included (some heuristic for most significant like closest to the camera, brightest, affecting the most pixels, …)

* Switch to using a texture instead of uniform buffer

* Figure out the / a better story for shadows
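
As a rough illustration of the effective-range idea in the first item, here is a minimal sketch. It assumes plain inverse-square falloff (`intensity / distance^2`) and a fixed brightness threshold; both are assumptions for illustration, and the sketch ignores the tone-mapping dependence mentioned above. The function names are hypothetical.

```rust
/// Distance at which inverse-square falloff drops to `threshold`.
/// Assumes illuminance = intensity / distance^2 (illustrative model only).
fn effective_range(intensity: f32, threshold: f32) -> f32 {
    (intensity / threshold).sqrt()
}

/// Range used for cluster assignment: never larger than what the user asked
/// for, and never larger than what the light can physically affect.
fn culling_range(intensity: f32, threshold: f32, user_range: f32) -> f32 {
    effective_range(intensity, threshold).min(user_range)
}
```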

I will also probably add an example that demonstrates some of the issues:

* What situations exhaust the space available in the uniform buffers

* Light range too large making lights affect many clusters and so exhausting the space for the lists of lights that affect clusters

* Light range set to be too small producing visible artifacts where clusters the light would physically affect are not affected by the light

* Perhaps some performance issues

* How many lights can be closely packed or affect large portions of the view before performance drops?

# Objective

- Checks for NaN in computed NDC space coordinates, fixing unexpected NaN in a fallible (`Option<T>`) function.

## Solution

- Adds a NaN check, in addition to the existing NDC bounds checks.

- This is a helper function, and should have no performance impact to the engine itself.

- This will help prevent hard-to-trace NaN propagation in user code, by returning `None` instead of `Some(NaN)`.
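
A minimal sketch of why the extra check matters, assuming the bounds test is written as a rejection of out-of-range values (`checked_ndc` is a hypothetical stand-in for the real helper, and the z range of `[0, 1]` is an assumption):

```rust
/// Returns the NDC position only if it is a real point inside the view volume.
fn checked_ndc(ndc: [f32; 3]) -> Option<[f32; 3]> {
    let [x, y, z] = ndc;
    // Every comparison with NaN is false, so the "outside the bounds" test
    // below would let NaN through unless it is rejected explicitly.
    if x.is_nan() || y.is_nan() || z.is_nan() {
        return None;
    }
    if x < -1.0 || x > 1.0 || y < -1.0 || y > 1.0 || z < 0.0 || z > 1.0 {
        return None;
    }
    Some(ndc)
}
```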

Depends on https://github.com/bevyengine/bevy/pull/3269 for CI error fix.

# Objective

I'm exposing these command encoders so Bevy users can create their own command encoders. This is useful when you want to copy a texture to a texture or create a compute pass manually, for example.

Note: I formatted this file, which might have changed the order of some exports.

## Solution

Just re-export `CommandEncoder` and `CommandEncoderDescriptor`.

# Objective

- Fixes #3188

- Allow creating a `PipelinedSpriteBundle` without an image, just a plain color

```rust
PipelinedSpriteBundle {
    sprite: Sprite {
        color: Color::rgba(0.8, 0.0, 0.0, 0.3),
        custom_size: Some(Vec2::new(500.0, 500.0)),
        ..Default::default()
    },
    ..Default::default()
}
```

## Solution

- The default impl for `Image` was creating a one pixel image with all values at `1`. I changed it to `255` as picking `1` for it doesn't really make sense, it should be either `0` or `255`

- I created a static handle and added the default image to the assets with this handle

- I changed the default impl for `PipelinedSpriteBundle` to use this handle

# Objective

Fixes recent pipeline errors:

```
error: use of deprecated associated function `std::array::IntoIter::<T, N>::new`: use `IntoIterator::into_iter` instead
   --> crates/bevy_render/src/mesh/mesh.rs:467:54
    |
467 |             .flat_map(|normal| std::array::IntoIter::new([normal, normal, normal]))
    |                                                      ^^^
    |
    = note: `-D deprecated` implied by `-D warnings`

   Compiling bevy_render2 v0.5.0 (/home/runner/work/bevy/bevy/pipelined/bevy_render2)
error: use of deprecated associated function `std::array::IntoIter::<T, N>::new`: use `IntoIterator::into_iter` instead
   --> pipelined/bevy_render2/src/mesh/mesh/mod.rs:287:54
    |
287 |             .flat_map(|normal| std::array::IntoIter::new([normal, normal, normal]))
    |                                                      ^^^
    |
    = note: `-D deprecated` implied by `-D warnings`

error: could not compile `bevy_render` due to previous error
```

## Solution

- Replaced `IntoIter::new` with `IntoIterator::into_iter`
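
For reference, the replacement looks roughly like this in isolation. `duplicate_normals` is a hypothetical helper written for illustration, not the function in `mesh.rs`:

```rust
/// Repeats each face normal three times, once per triangle vertex.
fn duplicate_normals(normals: &[[f32; 3]]) -> Vec<[f32; 3]> {
    normals
        .iter()
        .copied()
        // Arrays implement by-value `IntoIterator` since Rust 1.53, which is
        // what makes `std::array::IntoIter::new` redundant.
        .flat_map(|normal| IntoIterator::into_iter([normal, normal, normal]))
        .collect()
}
```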

## Suggestions

To me, these look like two equivalent `Mesh` structs with the same methods. Should we refactor them, or will they diverge in the near future?

Co-authored-by: CrazyRoka <rokarostuk@gmail.com>

# Objective

- New clippy lints with rust 1.57 are failing

## Solution

- Fixed clippy lints following suggestions

- I ignored clippy in the old renderer because there were many lints and it will be removed soon

# Objective

- Add support for `#else` for shader defs

## Solution

- When entering a scope with `#ifdef` or `#ifndef`, if the parent scope is truthy and the shader definition is also truthy, then a new truthy scope is pushed onto the scope stack; otherwise a falsy scope is pushed. When encountering a subsequent else clause within a scope, if the parent is truthy and the current scope is truthy, then the current scope becomes falsy. If the parent scope is truthy and the current scope is falsy, then the current scope becomes truthy. If the parent scope is falsy, then the current scope remains falsy, as the parent scope takes precedence.

- I added a simple test for an else case.
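
The scope-stack rules above can be sketched in a few lines. This is a simplified stand-in for the real preprocessor (no regex matching, no WGSL awareness); `preprocess` and its error strings are made up for illustration.

```rust
use std::collections::HashSet;

/// Keeps only the lines whose enclosing `#ifdef` / `#ifndef` / `#else`
/// scopes are all active for the given set of shader defs.
fn preprocess(source: &str, defs: &HashSet<&str>) -> Result<String, String> {
    // scopes[0] is the always-active root scope.
    let mut scopes = vec![true];
    let mut out = String::new();
    for line in source.lines() {
        let trimmed = line.trim();
        if let Some(name) = trimmed.strip_prefix("#ifdef") {
            let parent = *scopes.last().unwrap();
            scopes.push(parent && defs.contains(name.trim()));
        } else if let Some(name) = trimmed.strip_prefix("#ifndef") {
            let parent = *scopes.last().unwrap();
            scopes.push(parent && !defs.contains(name.trim()));
        } else if trimmed.starts_with("#else") {
            if scopes.len() < 2 {
                return Err("#else without a matching #ifdef".to_string());
            }
            let current = scopes.pop().unwrap();
            let parent = *scopes.last().unwrap();
            // Flip the scope, but a falsy parent always wins.
            scopes.push(parent && !current);
        } else if trimmed.starts_with("#endif") {
            if scopes.len() < 2 {
                return Err("#endif without a matching #ifdef".to_string());
            }
            scopes.pop();
        } else if *scopes.last().unwrap() {
            out.push_str(line);
            out.push('\n');
        }
    }
    if scopes.len() != 1 {
        return Err("missing #endif".to_string());
    }
    Ok(out)
}
```

Because each pushed scope is already combined with its parent, a nested block inside an inactive scope stays inactive on both sides of its `#else`.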

A sample implementation of how to have `iter()` work on mutable queries without breaking aliasing rules.

# Objective

- Fixes #753

## Solution

- Added a ReadOnlyFetch to WorldQuery that is the `&T` version of `&mut T` that is used to specify the return type for read only operations like `iter()`.

- ~~As the comment suggests specifying the bound doesn't work due to restrictions on defining recursive implementations (like `Or`). However bounds on the functions are fine~~ Never mind I misread how `Or` was constructed, bounds now exist.

- Note that the only mutable one has a new `Fetch` for readonly as the `State` has to be the same for any of this to work

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

- Changing the underlying image would not update a sprite

## Solution

- 'Detect' if the underlying image changes to update the sprite

Currently, we don't support change detection on `RenderAssets`, so we have to manually check it.

This method at least maintains the bind groups when the image isn't changing. They were cached, so I assume that's important.

This gives us correct behaviour here.

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

- Shadow maps should only be sampled if the mesh is a shadow receiver AND shadow mapping is enabled for the light

## Solution

- Fix the logic in the shader

See #3165 and #3175

# Objective

- @superdump was having trouble with this loop in the GLTF loader.

## Solution

- Make it probably linear.

- Measured times:

- Old: 40s, new: 200ms

I think there's still room for improvement. For example, I think making the nodes be in `Arc`s could be a significant gain, since currently there's duplication all the way down the tree.

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

- Use `std140_size_static()` everywhere instead of manual sizes as the crevice rewrite appears to have fixed all the problems as it claimed to do.

I've tested `3d_scene_pipelined`, `bevymark_pipelined`, and `load_gltf_pipelined` and all three look fine.

# Objective

I need to queue up my own textures for font rendering (texture arrays), and I noticed that a bunch of `ImageX` types, like `ImageDataLayout`, were missing from the render resources exports.

## Solution

Add new exports to render resources.

# Objective

Allow shadow mapping to be enabled/disabled per-light.

## Solution

- NOTE: This PR is on top of https://github.com/bevyengine/bevy/pull/3072

- Add `shadows_enabled` boolean property to `PointLight` and `DirectionalLight` components.

- Do not update the frusta for the light if shadows are disabled.

- Do not check for visible entities for the light if shadows are disabled.

- Do not fetch shadows for lights with shadows disabled.

- I reworked a few types for clarity: `ViewLight` -> `ShadowView`, the bulk of `ViewLights` members -> `ViewShadowBindings`, the entities Vec in `ViewLights` -> `ViewLightEntities`, the uniform offset in `ViewLights` for `GpuLights` -> `ViewLightsUniformOffset`

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

## Shader Imports

This adds "whole file" shader imports. These come in two flavors:

### Asset Path Imports

```rust
// /assets/shaders/custom.wgsl
#import "shaders/custom_material.wgsl"

[[stage(fragment)]]
fn fragment() -> [[location(0)]] vec4<f32> {
    return get_color();
}
```

```rust
// /assets/shaders/custom_material.wgsl
[[block]]
struct CustomMaterial {
    color: vec4<f32>;
};

[[group(1), binding(0)]]
var<uniform> material: CustomMaterial;
```

### Custom Path Imports

Enables defining custom import paths. These are intended to be used by crates to export shader functionality:

```rust
// bevy_pbr2/src/render/pbr.wgsl
#import bevy_pbr::mesh_view_bind_group
#import bevy_pbr::mesh_bind_group

[[block]]
struct StandardMaterial {
    base_color: vec4<f32>;
    emissive: vec4<f32>;
    perceptual_roughness: f32;
    metallic: f32;
    reflectance: f32;
    flags: u32;
};

/* rest of PBR fragment shader here */
```

```rust
impl Plugin for MeshRenderPlugin {
    fn build(&self, app: &mut bevy_app::App) {
        let mut shaders = app.world.get_resource_mut::<Assets<Shader>>().unwrap();
        // Register each shader under a handle and a custom import path.
        shaders.set_untracked(
            MESH_BIND_GROUP_HANDLE,
            Shader::from_wgsl(include_str!("mesh_bind_group.wgsl"))
                .with_import_path("bevy_pbr::mesh_bind_group"),
        );
        shaders.set_untracked(
            MESH_VIEW_BIND_GROUP_HANDLE,
            Shader::from_wgsl(include_str!("mesh_view_bind_group.wgsl"))
                .with_import_path("bevy_pbr::mesh_view_bind_group"),
        );
        // rest of the plugin setup elided
    }
}
```

By convention these should use rust-style module paths that start with the crate name. Ultimately we might enforce this convention.

Note that this feature implements _run time_ import resolution. Ultimately we should move the import logic into an asset preprocessor once Bevy gets support for that.
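
Conceptually, run-time "whole file" import resolution amounts to splicing registered sources in place of `#import` lines. The sketch below is a simplified model, not the actual Bevy implementation: the `registry` map, the `resolve_imports` function and the cycle check are all assumptions made for illustration.

```rust
use std::collections::HashMap;

/// Replaces every `#import <path>` line with the source registered for that
/// path, resolving nested imports recursively. `stack` tracks the imports
/// currently being expanded so that cycles are reported instead of looping.
fn resolve_imports(
    source: &str,
    registry: &HashMap<String, String>,
    stack: &mut Vec<String>,
) -> Result<String, String> {
    let mut out = String::new();
    for line in source.lines() {
        match line.trim().strip_prefix("#import") {
            Some(rest) => {
                // Accept both `#import "asset/path.wgsl"` and `#import a::b`.
                let path = rest.trim().trim_matches('"').to_string();
                if stack.contains(&path) {
                    return Err(format!("import cycle at {}", path));
                }
                let imported = registry
                    .get(&path)
                    .ok_or_else(|| format!("unresolved import: {}", path))?;
                stack.push(path);
                out.push_str(&resolve_imports(imported, registry, stack)?);
                stack.pop();
            }
            None => {
                out.push_str(line);
                out.push('\n');
            }
        }
    }
    Ok(out)
}
```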

## Decouple Mesh Logic from PBR Logic via MeshRenderPlugin

This breaks out mesh rendering code from PBR material code, which improves the legibility of the code, decouples mesh logic from PBR logic, and opens the door for a future `MaterialPlugin<T: Material>` that handles all of the pipeline setup for arbitrary shader materials.

## Removed `RenderAsset<Shader>` in favor of extracting shaders into RenderPipelineCache

This simplifies the shader import implementation and removes the need to pass around `RenderAssets<Shader>`.

## RenderCommands are now fallible

This allows us to cleanly handle pipelines+shaders not being ready yet. We can abort a render command early in these cases, preventing bevy from trying to bind group / do draw calls for pipelines that couldn't be bound. This could also be used in the future for things like "components not existing on entities yet".
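
A minimal sketch of the early-abort idea, using hypothetical stand-in types (`CommandResult`, `Pass`, and the free functions are illustrative and much simpler than the real render command traits):

```rust
#[derive(Debug, PartialEq)]
enum CommandResult {
    Success,
    Failure,
}

/// Stand-in for a render pass that records what was issued.
struct Pass {
    bound_pipeline: Option<u32>,
    draw_calls: u32,
}

/// Fails if the pipeline (for example, its shader) is not ready yet.
fn set_pipeline(pass: &mut Pass, ready_pipeline: Option<u32>) -> CommandResult {
    match ready_pipeline {
        Some(id) => {
            pass.bound_pipeline = Some(id);
            CommandResult::Success
        }
        None => CommandResult::Failure,
    }
}

fn draw(pass: &mut Pass) -> CommandResult {
    pass.draw_calls += 1;
    CommandResult::Success
}

/// Runs the commands in order and stops at the first failure, so no draw
/// call is issued for a pipeline that could not be bound.
fn run_commands(pass: &mut Pass, ready_pipeline: Option<u32>) -> CommandResult {
    if set_pipeline(pass, ready_pipeline) == CommandResult::Failure {
        return CommandResult::Failure;
    }
    draw(pass)
}
```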

# Next Steps

* Investigate using Naga for "partial typed imports" (ex: `#import bevy_pbr::material::StandardMaterial`, which would import only the StandardMaterial struct)

* Implement `MaterialPlugin<T: Material>` for low-boilerplate custom material shaders

* Move shader import logic into the asset preprocessor once bevy gets support for that.

Fixes #3132

This is a squash-and-rebase of @Ku95's documentation of the new renderer onto the latest `pipelined-rendering` branch.

Original PR is #2884.

Co-authored-by: dataphract <dataphract@gmail.com>

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

Fix nested shader defs. For example, in:

```rust
#ifdef A
#ifdef B
some code here
#endif
#endif
```

...before this PR, if `A` *is not* defined, and `B` *is* defined, then `some code here` will be output.

## Solution

- Combine the logic of whether the parent and child scope guards are defined and use that as the resulting child scope guard boolean value

# Objective

Add depth prepass and support for opaque, alpha mask, and alpha blend modes for the 3D PBR target.

## Solution

NOTE: This is based on top of #2861 frustum culling. Just lining it up to keep @cart loaded with the review train. 🚂

There are a lot of important details here. Big thanks to @cwfitzgerald of wgpu, naga, and rend3 fame for explaining how to do it properly!

* An `AlphaMode` component is added that defines whether a material should be considered opaque, an alpha mask (with a cutoff value that defaults to 0.5, the same as glTF), or transparent and should be alpha blended

* Two depth prepasses are added:

* Opaque does a plain vertex stage

* Alpha mask does the vertex stage but also a fragment stage that samples the colour for the fragment and discards if its alpha value is below the cutoff value

* Both are sorted front to back, not that it matters for these passes. (Maybe there should be a way to skip sorting?)

* Three main passes are added:

* Opaque and alpha mask passes use a depth comparison function of Equal such that only the geometry that was closest is processed further, due to early-z testing

* The transparent pass uses the Greater depth comparison function so that only transparent objects that are closer than anything opaque are rendered

* The opaque fragment shading is as before except that alpha is explicitly set to 1.0

* Alpha mask fragment shading sets the alpha value to 1.0 if it is equal to or above the cutoff, as defined by glTF

* Opaque and alpha mask are sorted front to back (again not that it matters as we will skip anything that is not equal... maybe sorting is no longer needed here?)

* Transparent is sorted back to front. Transparent fragment shading uses the alpha blending over operator
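
The per-mode alpha handling described above can be summarised in a small sketch. The variant names and the `resolve_alpha` helper are illustrative and written on the CPU side for clarity; in the PR this logic lives in the fragment shader.

```rust
#[derive(Clone, Copy, Debug, PartialEq)]
enum AlphaMode {
    Opaque,
    /// Alpha mask with a cutoff value (glTF defaults to 0.5).
    Mask(f32),
    Blend,
}

/// Returns the alpha written for a fragment, or `None` if it is discarded.
fn resolve_alpha(mode: AlphaMode, alpha: f32) -> Option<f32> {
    match mode {
        // Opaque ignores the sampled alpha entirely.
        AlphaMode::Opaque => Some(1.0),
        // At or above the cutoff the fragment is fully opaque, else discarded.
        AlphaMode::Mask(cutoff) => {
            if alpha >= cutoff {
                Some(1.0)
            } else {
                None
            }
        }
        // Blended fragments keep their alpha for the "over" operator.
        AlphaMode::Blend => Some(alpha),
    }
}
```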

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

Adds new `EntityRenderCommand`, `EntityPhaseItem`, and `CachedPipelinePhaseItem` traits to make it possible to reuse RenderCommands across phases. This should be helpful for features like #3072. It also makes the trait impls slightly less generic-ey in the common cases.

This also fixes the custom shader examples to account for the recent Frustum Culling and MSAA changes (the UX for these things will be improved later).

# Objective

- Update vendor crevice to have the latest update from crevice 0.8.0

- Using https://github.com/ElectronicRU/crevice/tree/arrays which has the changes to make arrays work

## Solution

- Also updated glam and hexasphere to only have one version of glam

- From the original PR, using crevice to write GLSL code containing arrays would probably not work but it's not something used by Bevy

# Objective

Implement frustum culling for much better performance on more complex scenes. With the Amazon Lumberyard Bistro scene, I was getting roughly 15fps without frustum culling and 60+fps with frustum culling on a MacBook Pro 16 with i9 9980HK 8c/16t CPU and Radeon Pro 5500M.

macOS does weird things with vsync so even though vsync was off, it really looked like sometimes other applications or the desktop window compositor were interfering, but the difference could be even more as I even saw up to 90+fps sometimes.

## Solution

- Until the https://github.com/bevyengine/rfcs/pull/12 RFC is completed, I wanted to implement at least some of the bounding volume functionality we needed to be able to unblock a bunch of rendering features and optimisations such as frustum culling, fitting the directional light orthographic projection to the relevant meshes in the view, clustered forward rendering, etc.

- I have added `Aabb`, `Frustum`, and `Sphere` types with only the necessary intersection tests for the algorithms used. I also added `CubemapFrusta` which contains a `[Frustum; 6]` and can be used by cube maps such as environment maps, and point light shadow maps.

- I did do a bit of benchmarking and optimisation on the intersection tests. I compared the [rafx parallel-comparison bitmask approach](c91bd5fcfd/rafx-visibility/src/geometry/frustum.rs (L64-L92)) with a naïve loop that has an early-out in case of a bounding volume being outside of any one of the `Frustum` planes and found them to be very similar, so I chose the simpler and more readable option. I also compared using Vec3 and Vec3A and it turned out that promoting Vec3s to Vec3A improved performance of the culling significantly due to Vec3A operations using SIMD optimisations where Vec3 uses plain scalar operations.

- When loading glTF models, the vertex attribute accessors generally store the minimum and maximum values, which allows for adding AABBs to meshes loaded from glTF for free.

- For meshes without an AABB (`PbrBundle` deliberately does not have an AABB by default), a system is executed that scans over the vertex positions to find the minimum and maximum values along each axis. This is used to construct the AABB.

- The `Frustum::intersects_obb` and `Sphere::intersects_obb` algorithm is from Foundations of Game Engine Development 2: Rendering by Eric Lengyel. There is no OBB type yet; rather, an AABB and the model matrix are passed in as arguments. This calculates a 'relative radius' of the AABB with respect to the plane normal (the plane normal in the Sphere case being something I came up with as the direction pointing from the centre of the sphere to the centre of the AABB) such that it can then do a sphere-sphere intersection test in practice.

- `RenderLayers` were copied over from the current renderer.

- `VisibleEntities` was copied over from the current renderer and a `CubemapVisibleEntities` was added to support `PointLight`s for now. `VisibleEntities` are added to views (cameras and lights) and contain a `Vec<Entity>` that is populated by culling/visibility systems that run in PostUpdate of the app world, and are iterated over in the render world for, for example, queuing up meshes to be drawn by lights for shadow maps and the main pass for cameras.

- `Visibility` and `ComputedVisibility` components were added. The `Visibility` component is user-facing so that, for example, the entity can be marked as not visible in an editor. `ComputedVisibility` on the other hand is the result of the culling/visibility systems and takes `Visibility` into account. So if an entity is marked as not being visible in its `Visibility` component, that will skip culling/visibility intersection tests and just mark the `ComputedVisibility` as false.

- The `ComputedVisibility` is used to decide which meshes to extract.

- I had to add a way to get the far plane from the `CameraProjection` in order to define an explicit far frustum plane for culling. This should perhaps be optional as it is not always desired and in that case, testing 5 planes instead of 6 is a performance win.
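
The naïve early-out loop with a 'relative radius' can be sketched as below. To stay short, this version assumes the AABB is already in the same space as the frustum planes (no model matrix), so it is a simplification of `intersects_obb`; all type and function names here are illustrative.

```rust
/// Plane in the form dot(normal, p) + d = 0, with the normal pointing inward.
struct Plane {
    normal: [f32; 3],
    d: f32,
}

struct Aabb {
    center: [f32; 3],
    half_extents: [f32; 3],
}

fn dot(a: [f32; 3], b: [f32; 3]) -> f32 {
    a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
}

/// Half-width of the box when projected onto `normal`.
fn relative_radius(aabb: &Aabb, normal: [f32; 3]) -> f32 {
    let abs_normal = [normal[0].abs(), normal[1].abs(), normal[2].abs()];
    dot(abs_normal, aabb.half_extents)
}

/// Conservative test: returns false as soon as the box lies fully behind
/// any one plane, true otherwise.
fn intersects_frustum(planes: &[Plane], aabb: &Aabb) -> bool {
    for plane in planes {
        let signed_distance = dot(plane.normal, aabb.center) + plane.d;
        if signed_distance + relative_radius(aabb, plane.normal) < 0.0 {
            return false;
        }
    }
    true
}
```

Passing five planes instead of six to `intersects_frustum` corresponds to skipping the far plane, as discussed in the last item.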

I think that's about all. I discussed some of the design with @cart on Discord already so hopefully it's not too far from being mergeable. It works well at least. 😄

# Objective

- Support tangent vertex attributes, and normal maps

- Support loading these from glTF models

## Solution

- Make two pipelines in both the shadow and pbr passes, one for without normal maps, one for with normal maps

- Select the correct pipeline to bind based on the presence of the normal map texture

- Share the vertex attribute layout between shadow and pbr passes

- Refactored pbr.wgsl to share a bunch of common code between the normal map and non-normal map entry points. I tried to do this in a way that will allow custom shader reuse.

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

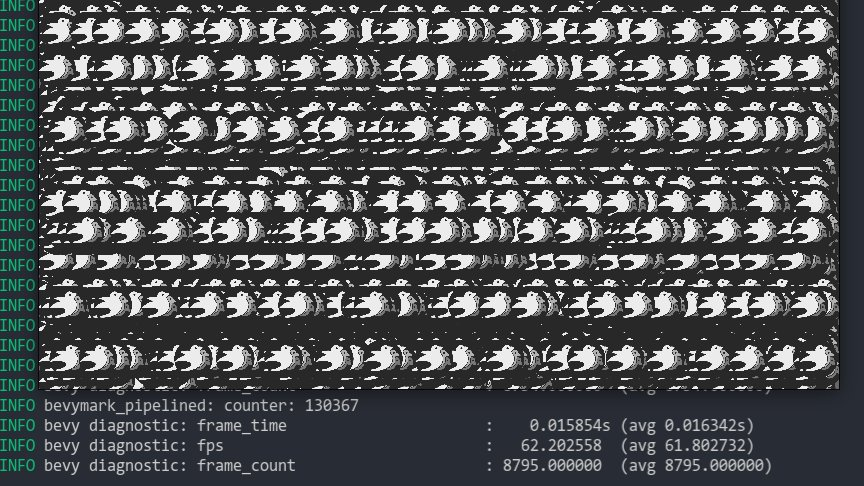

This implements the following:

* **Sprite Batching**: Collects sprites in a vertex buffer to draw many sprites with a single draw call. Sprites are batched by their `Handle<Image>` within a specific z-level. When possible, sprites are opportunistically batched _across_ z-levels (when no sprites with a different texture exist between two sprites with the same texture on different z levels). With these changes, I can now get ~130,000 sprites at 60fps on the `bevymark_pipelined` example.

* **Sprite Color Tints**: The `Sprite` type now has a `color` field. Non-white color tints result in a specialized render pipeline that passes the color in as a vertex attribute. I chose to specialize this because passing vertex colors has a measurable price (without colors I get ~130,000 sprites on bevymark, with colors I get ~100,000 sprites). "Colored" sprites cannot be batched with "uncolored" sprites, but I think this is fine because the chance of a "colored" sprite needing to batch with other "colored" sprites is generally probably way higher than an "uncolored" sprite needing to batch with a "colored" sprite.

* **Sprite Flipping**: Sprites can be flipped on their x or y axis using `Sprite::flip_x` and `Sprite::flip_y`. This is also true for `TextureAtlasSprite`.

* **Simpler BufferVec/UniformVec/DynamicUniformVec Clearing**: improved the clearing interface by removing the need to know the size of the final buffer at the initial clear.
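
The batching rule in the first item (merge sprites that share an image as long as no sprite with a different image sits between them in z order) can be sketched as follows. The types are simplified stand-ins: `image` is a plain integer rather than a `Handle<Image>`, and no vertex data is written.

```rust
struct ExtractedSprite {
    image: u32,
    z: f32,
}

#[derive(Debug, PartialEq)]
struct Batch {
    image: u32,
    sprite_count: usize,
}

/// Sorts sprites back to front, then merges consecutive runs that share an
/// image into one batch (one draw call each).
fn batch_sprites(sprites: &mut [ExtractedSprite]) -> Vec<Batch> {
    sprites.sort_by(|a, b| a.z.total_cmp(&b.z));
    let mut batches: Vec<Batch> = Vec::new();
    for sprite in sprites.iter() {
        if let Some(batch) = batches.last_mut() {
            if batch.image == sprite.image {
                batch.sprite_count += 1;
                continue;
            }
        }
        batches.push(Batch { image: sprite.image, sprite_count: 1 });
    }
    batches
}
```

Because only adjacent runs are merged, sprites on different z-levels end up in the same batch exactly when nothing with another image lies between them.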

Note that this moves sprites away from entity-driven rendering and back to extracted lists. We _could_ use entities here, but it necessitates that an intermediate list is allocated / populated to collect and sort extracted sprites. This redundant copy, combined with the normal overhead of spawning extracted sprite entities, brings bevymark down to ~80,000 sprites at 60fps. I think making sprites a bit more fixed (by default) is worth it. I view this as acceptable because batching makes normal entity-driven rendering pretty useless anyway (and we would want to batch most custom materials too). We can still support custom shaders with custom bindings, we'll just need to define a specific interface for it.

Adds support for MSAA to the new renderer. This is done using the new [pipeline specialization](#3031) support to specialize on sample count. This is an alternative implementation to #2541 that cuts out the need for complicated render graph edge management by moving the relevant target information into View entities. This reuses @superdump's clever MSAA bitflag range code from #2541.

Note that wgpu currently only supports 1 or 4 samples due to those being the values supported by WebGPU. However they do plan on exposing ways to [enable/query for natively supported sample counts](https://github.com/gfx-rs/wgpu/issues/1832). When this happens we should integrate

# Objective

Make it possible to use the wgpu GLES backend in the browser (wasm32 + WebGL2).

## Solution

This builds on an older patch by @cart that initializes windows before wgpu. It also:

- initializes wgpu with `Backends::GL` and proper `wgpu::Limits` on wasm32

- changes default texture format to `wgpu::TextureFormat::Rgba8UnormSrgb`

Co-authored-by: Mariusz Kryński <mrk@sed.pl>

# Objective

- Fixes #2919

- Initial pixel was hard coded and not dependent on texture format

- Replace #2920 as I noticed this needed to be done also on pipeline rendering branch

## Solution

- Replace the hard coded pixel with one using the texture pixel size
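
The idea, in a minimal sketch: size the initial pixel from the format rather than hard-coding four bytes. The `Format` enum below is a hypothetical subset made up for illustration, not the wgpu `TextureFormat` type.

```rust
/// Hypothetical subset of texture formats, for illustration only.
#[derive(Clone, Copy)]
enum Format {
    R8Unorm,
    Rg8Unorm,
    Rgba8UnormSrgb,
}

impl Format {
    /// Number of bytes occupied by one pixel.
    fn pixel_size(self) -> usize {
        match self {
            Format::R8Unorm => 1,
            Format::Rg8Unorm => 2,
            Format::Rgba8UnormSrgb => 4,
        }
    }
}

/// Builds a single fully-set pixel whose length matches the format.
fn initial_pixel(format: Format) -> Vec<u8> {
    vec![255; format.pixel_size()]
}
```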

# Objective

While testing wgpu/WebGL on a mobile GPU, I noticed that Bevy always forces the vertex index format to 32-bit (and ignores the mesh settings).

## Solution

The solution is to pass the proper vertex index format in `GpuIndexInfo` to `render_pass`.

## New Features

This adds the following to the new renderer:

* **Shader Assets**

* Shaders are assets again! Users no longer need to call `include_str!` for their shaders

* Shader hot-reloading

* **Shader Defs / Shader Preprocessing**

* Shaders now support `# ifdef NAME`, `# ifndef NAME`, and `# endif` preprocessor directives

* **Bevy RenderPipelineDescriptor and RenderPipelineCache**

* Bevy now provides its own `RenderPipelineDescriptor` and the wgpu version is now exported as `RawRenderPipelineDescriptor`. This allows users to define pipelines with `Handle<Shader>` instead of needing to manually compile and reference `ShaderModules`, enables passing in shader defs to configure the shader preprocessor, makes hot reloading possible (because the descriptor can be owned and used to create new pipelines when a shader changes), and opens the doors to pipeline specialization.

* The `RenderPipelineCache` now handles compiling and re-compiling Bevy RenderPipelineDescriptors. It has internal PipelineLayout and ShaderModule caches. Users receive a `CachedPipelineId`, which can be used to look up the actual `&RenderPipeline` during rendering.

* **Pipeline Specialization**

  * This enables defining per-entity-configurable pipelines that specialize on arbitrary custom keys. In practice this will involve specializing based on things like MSAA values, Shader Defs, Bind Group existence, and Vertex Layouts.

  * Adds a `SpecializedPipeline` trait and `SpecializedPipelines<MyPipeline>` resource. This is a simple layer that generates Bevy RenderPipelineDescriptors based on a custom key defined for the pipeline.

  * Specialized pipelines are also hot-reloadable.

  * This was the result of experimentation with two different approaches:

    1. **"generic immediate mode multi-key hash pipeline specialization"**

       * Breaks up the pipeline into multiple "identities" (the core pipeline definition, shader defs, mesh layout, bind group layout). Each of these identities has its own key. Looking up / compiling a specific version of a pipeline requires composing all of these keys together.

       * The benefit of this approach is that it works for all pipelines / the pipeline is fully identified by the keys. The multiple keys allow pre-hashing parts of the pipeline identity where possible (e.g. pre-computing the mesh identity for all meshes).

       * The downside is that any per-entity data that informs the values of these keys could require expensive re-hashes. Computing each key for each sprite tanked bevymark performance (sprites don't actually need this level of specialization yet ... but things like PBR and future sprite scenarios might).

       * This is the approach rafx used last time I checked.

    2. **"custom key specialization"**

       * Pipelines by default are not specialized

       * Pipelines that need specialization implement a `SpecializedPipeline` trait with a custom key associated type

       * This allows specialization keys to encode exactly the amount of information required (instead of needing to be a combined hash of the entire pipeline). Generally this should fit in a small number of bytes. Per-entity specialization barely registers anymore on things like bevymark. It also makes things like "shader defs" way cheaper to hash because we can use context-specific bitflags instead of strings.

       * Despite the extra trait, it actually generally makes pipeline definitions + lookups simpler: managing multiple keys (and making the appropriate calls to manage these keys) was way more complicated.

  * I opted for custom key specialization. It performs better generally and in my opinion is better UX. Fortunately the way this is implemented also allows for custom caches, as this all builds on a common abstraction: the `RenderPipelineCache`. The built-in custom key trait is just a simple / pre-defined way to interact with the cache.
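To make the "custom key specialization" idea concrete, here is a rough, self-contained sketch. It borrows the names `SpecializedPipeline` and `SpecializedPipelines` from the description above, but the trait signature, the `PipelineDescriptor` struct, `SpriteKey`, and `SpritePipeline` are simplified assumptions for illustration and do not reproduce the real definitions:

```rust
use std::collections::HashMap;
use std::hash::Hash;

/// Hypothetical, heavily simplified descriptor; a real one would hold
/// shader handles, layouts, and more.
#[derive(Clone, Debug, PartialEq)]
struct PipelineDescriptor {
    label: String,
    shader_defs: Vec<String>,
    sample_count: u32,
}

/// Simplified stand-in for the trait: each pipeline picks its own key type.
trait SpecializedPipeline {
    type Key: Clone + Hash + Eq;
    fn specialize(&self, key: Self::Key) -> PipelineDescriptor;
}

/// A one-byte, bitflag-style key: cheap to hash compared to strings.
#[derive(Clone, Copy, Debug, Hash, PartialEq, Eq)]
struct SpriteKey(u8);

impl SpriteKey {
    const COLORED: u8 = 1 << 0;
    const MSAA_4X: u8 = 1 << 1;
}

struct SpritePipeline;

impl SpecializedPipeline for SpritePipeline {
    type Key = SpriteKey;

    fn specialize(&self, key: SpriteKey) -> PipelineDescriptor {
        let mut shader_defs = Vec::new();
        if key.0 & SpriteKey::COLORED != 0 {
            shader_defs.push("COLORED".to_string());
        }
        PipelineDescriptor {
            label: "sprite_pipeline".to_string(),
            shader_defs,
            sample_count: if key.0 & SpriteKey::MSAA_4X != 0 { 4 } else { 1 },
        }
    }
}

/// Simplified cache: one descriptor per distinct key, addressed by an id.
struct SpecializedPipelines<P: SpecializedPipeline> {
    ids: HashMap<P::Key, usize>,
    descriptors: Vec<PipelineDescriptor>,
}

impl<P: SpecializedPipeline> SpecializedPipelines<P> {
    fn new() -> Self {
        Self { ids: HashMap::new(), descriptors: Vec::new() }
    }

    /// Returns the id for `key`, generating the descriptor only on first use.
    fn specialize(&mut self, pipeline: &P, key: P::Key) -> usize {
        if let Some(&id) = self.ids.get(&key) {
            return id;
        }
        let id = self.descriptors.len();
        self.descriptors.push(pipeline.specialize(key.clone()));
        self.ids.insert(key, id);
        id
    }
}
```

The per-entity cost in this sketch is a single small-key hash lookup, which is the property the second approach is trading for; the real cache additionally compiles the descriptor into a GPU pipeline, which this sketch leaves out.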

## Callouts

* The SpecializedPipeline trait makes it easy to inherit pipeline configuration in custom pipelines. The changes to `custom_shader_pipelined` and the new `shader_defs_pipelined` example illustrate how much simpler it is to define custom pipelines based on the PbrPipeline.

* The shader preprocessor is currently pretty naive (it just uses regexes to process each line). Ultimately we might want to build a more custom parser for more performance + better error handling, but for now I'm happy to optimize for "easy to implement and understand".
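For a sense of what a naive line-by-line preprocessor looks like, here is a small sketch supporting only `ifdef`, `ifndef`, and `endif`. It is not the actual implementation: the function name `preprocess` is made up, and it uses plain string matching from the standard library where the text above says the real one uses regexes.

```rust
use std::collections::HashSet;

/// Keeps or drops lines based on `#ifdef` / `#ifndef` / `#endif` directives.
/// Whitespace after the `#` is tolerated, so `# ifdef NAME` also works.
fn preprocess(source: &str, defs: &HashSet<&str>) -> Result<String, String> {
    // Stack of "is this scope active?" flags; the bottom entry is the file itself.
    let mut scopes = vec![true];
    let mut out = String::new();

    for line in source.lines() {
        if let Some(rest) = line.trim_start().strip_prefix('#') {
            let mut words = rest.split_whitespace();
            match words.next() {
                Some("ifdef") => {
                    let name = words.next().ok_or("missing name after ifdef")?;
                    let parent = *scopes.last().unwrap();
                    scopes.push(parent && defs.contains(name));
                    continue;
                }
                Some("ifndef") => {
                    let name = words.next().ok_or("missing name after ifndef")?;
                    let parent = *scopes.last().unwrap();
                    scopes.push(parent && !defs.contains(name));
                    continue;
                }
                Some("endif") => {
                    if scopes.len() == 1 {
                        return Err("endif without a matching ifdef/ifndef".to_string());
                    }
                    scopes.pop();
                    continue;
                }
                // Any other line starting with '#' is treated as ordinary text.
                _ => {}
            }
        }
        if *scopes.last().unwrap() {
            out.push_str(line);
            out.push('\n');
        }
    }

    if scopes.len() != 1 {
        return Err("unterminated ifdef/ifndef".to_string());
    }
    Ok(out)
}
```

Nested blocks are handled by the scope stack: a block inside an inactive block stays inactive regardless of its own condition.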

## Next Steps

* Port compute pipelines to the new system

* Add more preprocessor directives (else, elif, import)

* More flexible vertex attribute specialization / enable cheaply specializing on specific mesh vertex layouts

# Objective

The current TODO comment is out of date

## Solution

I switched up the comment

Co-authored-by: William Batista <45850508+billyb2@users.noreply.github.com>

Upgrades both the old and new renderer to wgpu 0.11 (and naga 0.7). This builds on @zicklag's work in #2556.

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

Using RenderQueue in BufferVec allows removal of the staging buffer entirely, as well as removal of the SpriteNode.

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

- Removed the unused `RenderResourceId` and `SwapChainFrame` (already unified with `TextureView`)

- Added `Deref` to `BindGroup`, which makes conversion to `wgpu::BindGroup` easier
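As an illustration of why a `Deref` impl helps here, the sketch below wraps a hypothetical `RawBindGroup` (standing in for `wgpu::BindGroup`) in a cheaply clonable wrapper. The field names and the `Arc` are assumptions for the example, not a copy of the real type:

```rust
use std::ops::Deref;
use std::sync::Arc;

/// Hypothetical stand-in for the raw `wgpu::BindGroup`.
#[derive(Debug)]
struct RawBindGroup {
    label: &'static str,
}

/// Cheaply clonable wrapper around the raw bind group.
#[derive(Clone, Debug)]
struct BindGroup {
    value: Arc<RawBindGroup>,
}

impl Deref for BindGroup {
    type Target = RawBindGroup;

    fn deref(&self) -> &Self::Target {
        &self.value
    }
}

/// An API that only knows about the raw type.
fn raw_label(raw: &RawBindGroup) -> &'static str {
    raw.label
}
```

With the impl in place, `raw_label(&bind_group)` compiles through deref coercion, so callers do not need an explicit accessor to reach the raw value.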

## Solution

- cleans up the API