# Objective

- Fixes #7889.

## Solution

- Change the glTF loader to insert a `Camera3dBundle` instead of a manually constructed bundle. This might prevent future issues when new components are required for a 3D Camera to work correctly.

- Register the `ColorGrading` type because `bevy_scene` was complaining about it.

# Objective

- Update `glam` to the latest version.

## Solution

- Update `glam` to version `0.23`.

Since the breaking change in `glam` only affects the `scalar-math` feature, this should cause no issues.

# Objective

Alternative to #7490. I wrote all of the code in this PR, but I have added @robtfm as co-author on commits that build on ideas from #7490. I would not have been able to solve these problems on my own without much more time investment and I'm largely just rephrasing the ideas from that PR.

Fixes #7435

Fixes #7361

Fixes #5721

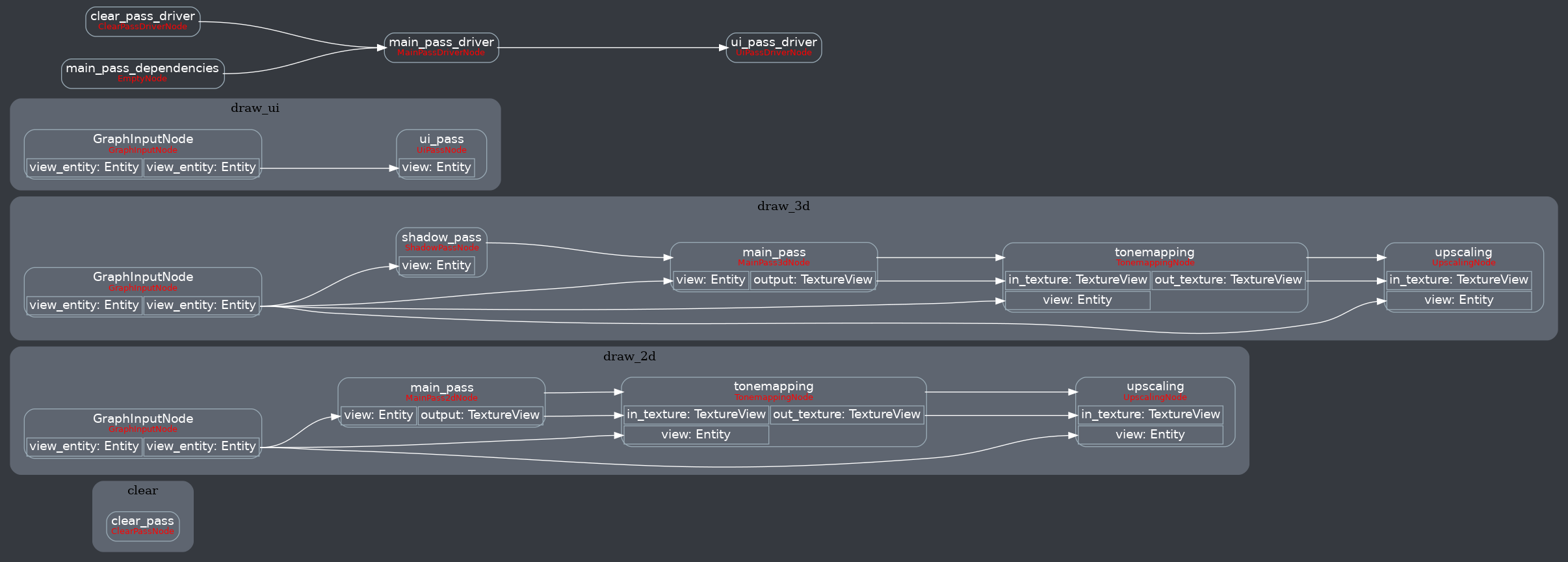

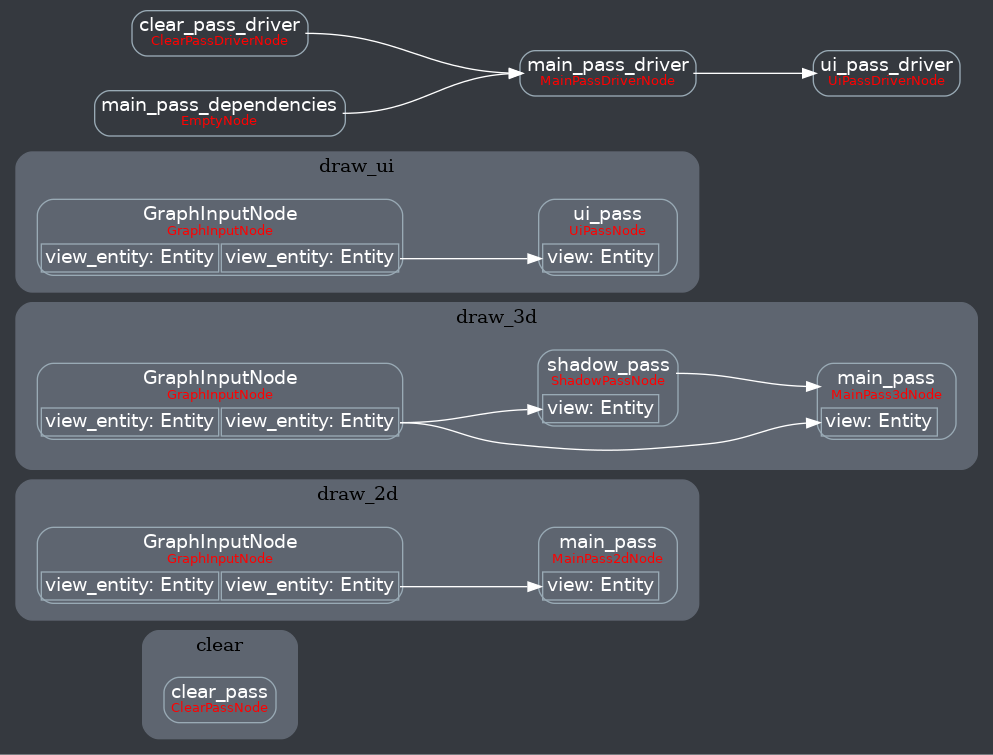

## Solution

This implements the solution I [outlined here](https://github.com/bevyengine/bevy/pull/7490#issuecomment-1426580633).

* Adds "msaa writeback" as an explicit "msaa camera feature" and defaults to `msaa_writeback: true` for each camera. If this is true, the camera has MSAA enabled, and it isn't the first camera for its target, a writeback is added before the main pass for that camera.

* Adds a CameraOutputMode, which can be used to configure if (and how) the results of a camera's rendering will be written to the final RenderTarget output texture (via the upscaling node). The `blend_state` and `color_attachment_load_op` are now configurable, giving much more control over how a camera will write to the output texture.

* Made cameras with the same target share the same main_texture tracker by using `Arc<AtomicUsize>`, which ensures continuity across cameras. This was previously broken / could produce weird results in some cases. `ViewTarget::main_texture()` is now correct in every context.

* Added a new generic / specializable BlitPipeline, which the new MsaaWritebackNode uses internally. The UpscalingPipelineNode now uses BlitPipeline instead of its own pipeline. We might ultimately need to fork this back out if we choose to add more configurability to the upscaling, but for now this will save on binary size by not embedding the same shader twice.

* Moved the "camera sorting" logic from the camera driver node to its own system. The results are now stored in the `SortedCameras` resource, which can be used anywhere in the renderer. MSAA writeback makes use of this.
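The writeback rule and the shared `main_texture` tracker from the list above can be sketched without any engine types. This is a minimal illustration, not the PR's code: `CameraView`, `build_views`, `needs_writeback`, and the `u32` target id are hypothetical names, and flipping between two textures with `fetch_xor` is an assumption about how the tracker is used.

```rust
use std::collections::{HashMap, HashSet};
use std::sync::atomic::{AtomicUsize, Ordering};
use std::sync::Arc;

/// Hypothetical, simplified stand-in for one camera's view of its render target.
struct CameraView {
    target: u32, // hypothetical render target id
    msaa_enabled: bool,
    msaa_writeback: bool,
    /// Shared by every camera that renders to the same target.
    main_texture: Arc<AtomicUsize>,
}

impl CameraView {
    /// Index (0 or 1) of the texture that currently holds the latest results.
    fn main_texture(&self) -> usize {
        self.main_texture.load(Ordering::SeqCst)
    }

    /// Flip to the other texture after a post-processing write (assumed behavior).
    fn post_process_write(&self) {
        self.main_texture.fetch_xor(1, Ordering::SeqCst);
    }
}

/// Builds views from `(target, msaa_enabled, msaa_writeback)` tuples that are
/// already sorted in render order. Cameras with the same target share a tracker.
fn build_views(cameras: &[(u32, bool, bool)]) -> Vec<CameraView> {
    let mut trackers: HashMap<u32, Arc<AtomicUsize>> = HashMap::new();
    cameras
        .iter()
        .map(|&(target, msaa_enabled, msaa_writeback)| CameraView {
            target,
            msaa_enabled,
            msaa_writeback,
            main_texture: trackers
                .entry(target)
                .or_insert_with(|| Arc::new(AtomicUsize::new(0)))
                .clone(),
        })
        .collect()
}

/// For each view, whether a writeback is needed before its main pass:
/// MSAA on, writeback on, and not the first camera for its target.
fn needs_writeback(views: &[CameraView]) -> Vec<bool> {
    let mut seen_targets = HashSet::new();
    views
        .iter()
        .map(|view| {
            let first_for_target = seen_targets.insert(view.target);
            view.msaa_enabled && view.msaa_writeback && !first_for_target
        })
        .collect()
}

fn main() {
    let views = build_views(&[(0, true, true), (0, true, true), (1, true, true)]);
    println!("{:?}", needs_writeback(&views));
    views[0].post_process_write();
    println!("second camera on target 0 sees texture {}", views[1].main_texture());
}
```

Because the tracker is an `Arc`, a write made while rendering the first camera is visible to every later camera on the same target, which is the continuity the bullet describes.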

---

## Changelog

- Added `Camera::msaa_writeback` which can enable and disable msaa writeback.

- Added specializable `BlitPipeline` and ported the upscaling node to use this.

- Added SortedCameras, exposing information that was previously internal to the camera driver node.

- Made cameras with the same target share the same main_texture tracker, which ensures continuity across cameras.

# Objective

Support the following syntax for adding systems:

```rust

App::new()

.add_system(setup.on_startup())

.add_systems((

show_menu.in_schedule(OnEnter(GameState::Paused)),

menu_system.in_set(OnUpdate(GameState::Paused)),

hide_menu.in_schedule(OnExit(GameState::Paused)),

))

```

## Solution

Add the traits `IntoSystemAppConfig{s}`, which provide the extension methods necessary for configuring which schedule a system belongs to. These extension methods return `IntoSystemAppConfig{s}`, which `App::add_system{s}` uses to choose which schedule to add systems to.

---

## Changelog

+ Added the extension methods `in_schedule(label)` and `on_startup()` for configuring the schedule a system belongs to.

## Future Work

* Replace all uses of `add_startup_system` in the engine.

* Deprecate this method

# Objective

While working on #7784, I noticed that a `#define VAR` in a `.wgsl` file is always effective, even if its scope is not accepting lines.

Example:

```c

#define A

#ifndef A

#define B

#endif

```

Currently, `B` will be defined although it shouldn't. This PR fixes that.

## Solution

Move the branch responsible for `#define` lines into the last else branch, which is only evaluated if the current scope is accepting lines.
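The control flow described above can be sketched as a tiny standalone preprocessor. This is a simplified illustration, not the shader preprocessor itself: `collect_defines` is a hypothetical helper, and it only understands `#ifdef`, `#ifndef`, `#endif`, and `#define`.

```rust
use std::collections::HashSet;

/// Returns the set of names defined by `source`, honoring `#define` only
/// when the current scope is accepting lines.
fn collect_defines(source: &str) -> HashSet<String> {
    let mut defs: HashSet<String> = HashSet::new();
    // One entry per open scope; `true` means the scope accepts lines.
    let mut scopes: Vec<bool> = vec![true];

    for line in source.lines() {
        let line = line.trim();
        let accepting = *scopes.last().unwrap();
        if let Some(name) = line.strip_prefix("#ifdef ") {
            scopes.push(accepting && defs.contains(name.trim()));
        } else if let Some(name) = line.strip_prefix("#ifndef ") {
            scopes.push(accepting && !defs.contains(name.trim()));
        } else if line == "#endif" {
            if scopes.len() > 1 {
                scopes.pop();
            }
        } else if accepting {
            // Last branch: only reached when the current scope accepts lines,
            // so a `#define` inside a rejected scope is ignored.
            if let Some(name) = line.strip_prefix("#define ") {
                defs.insert(name.trim().to_string());
            }
        }
    }
    defs
}

fn main() {
    let defs = collect_defines("#define A\n#ifndef A\n#define B\n#endif");
    println!("A defined: {}, B defined: {}", defs.contains("A"), defs.contains("B"));
}
```

With the example from the Objective, only `A` ends up defined, because the `#define B` line sits in a scope that rejects lines.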

# Objective

An earlier PR, [#6129](https://github.com/bevyengine/bevy/pull/6129), introduced support for storage buffers in the `AsBindGroup` macro, but it does not give granular control over the storage buffer: it always copies all the data, no matter which part of it was updated. Another open PR, #6669, tries to achieve exactly that, but it is not up to date and seems abandoned (sorry if that is not right). In this PR I'm proposing a solution that lets both approaches co-exist, using `#[storage(n, buffer)]` and `#[storage(n)]` to distinguish between the cases.

We could also discuss in this PR if there is a need to extend this support to DynamicBuffers as well.

# Objective

- Nothing renders:

```

ERROR bevy_render::render_resource::pipeline_cache: failed to process shader: Invalid shader def definition for '_import_path': bevy_pbr

```

## Solution

- Fix define regex so that it must have one whitespace after `define`
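The error above names `_import_path` as the shader def, which suggests a line such as `#define_import_path bevy_pbr` was being matched as a `#define`. A minimal sketch of the stricter check, using plain string operations instead of the actual regex (`define_body` is a hypothetical helper):

```rust
/// Returns the text after `#define` if `line` is a define directive.
/// At least one whitespace character must follow the keyword, so
/// `#define_import_path ...` is not mistaken for a define.
fn define_body(line: &str) -> Option<&str> {
    let rest = line.trim_start().strip_prefix("#define")?;
    if rest.starts_with(char::is_whitespace) {
        Some(rest.trim())
    } else {
        None
    }
}

fn main() {
    println!("{:?}", define_body("#define_import_path bevy_pbr"));
    println!("{:?}", define_body("#define MAX_LIGHTS 16"));
}
```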

# Objective

- Fixes #7494

- It is now possible to define a ShaderDef from inside a shader. This can be useful to centralise a value, or to make sure an import is only interpreted once

## Solution

- Support `#define <SHADERDEF_NAME> <optional value>`
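As a rough sketch of parsing that syntax: the function below splits a directive into a name and an optional value. The `DefValue` enum, the choice of value types, and the rule that a missing value means `true` are assumptions made for illustration, not confirmed details of the PR.

```rust
/// Hypothetical value type for a shader def.
#[derive(Debug, PartialEq)]
enum DefValue {
    Bool(bool),
    Int(i32),
    UInt(u32),
}

/// Parses `#define <NAME> <optional value>`.
/// Returns `None` if the line is not a define or the value is not understood.
fn parse_define(line: &str) -> Option<(String, DefValue)> {
    let rest = line.trim().strip_prefix("#define")?;
    if !rest.starts_with(char::is_whitespace) {
        return None;
    }
    let mut parts = rest.split_whitespace();
    let name = parts.next()?.to_string();
    let value = match parts.next() {
        // Assumption: a bare `#define NAME` behaves like a `true` flag.
        None => DefValue::Bool(true),
        Some(raw) => {
            if let Ok(v) = raw.parse::<u32>() {
                DefValue::UInt(v)
            } else if let Ok(v) = raw.parse::<i32>() {
                DefValue::Int(v)
            } else if let Ok(v) = raw.parse::<bool>() {
                DefValue::Bool(v)
            } else {
                return None;
            }
        }
    };
    Some((name, value))
}

fn main() {
    println!("{:?}", parse_define("#define MAX_LIGHTS 16"));
    println!("{:?}", parse_define("#define ALREADY_IMPORTED"));
}
```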

# Objective

- ambiguities bad

## Solution

- solve ambiguities

- by either ignoring (e.g. on `queue_mesh_view_bind_groups` since `LightMeta` access is different)

- by introducing a dependency (`prepare_windows -> prepare_*` because the latter use the fallback Msaa)

- make `prepare_assets` public so that we can do a proper `.after`

# Objective

- Fix the environment map shader not working under webgl due to textureNumLevels() not being supported

- Fixes https://github.com/bevyengine/bevy/issues/7722

## Solution

- Instead of using textureNumLevels(), put an extra field in the GpuLights uniform to store the mip count

# Objective

Splits tone mapping from https://github.com/bevyengine/bevy/pull/6677 into a separate PR.

Address https://github.com/bevyengine/bevy/issues/2264.

Adds tone mapping options:

- None: Bypasses tonemapping for instances where users want colors output to match those set.

- Reinhard

- Reinhard Luminance: Bevy's existing tonemapping

- [ACES](https://github.com/TheRealMJP/BakingLab/blob/master/BakingLab/ACES.hlsl) (Fitted version, based on the same implementation that Godot 4 uses) see https://github.com/bevyengine/bevy/issues/2264

- [AgX](https://github.com/sobotka/AgX)

- SomewhatBoringDisplayTransform

- TonyMcMapface

- Blender Filmic
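To make the difference between the two Reinhard options concrete, here is a sketch of the textbook forms of both operators on linear RGB. It is an illustration under the usual definitions (per-channel `c / (1 + c)`, and the same curve applied to Rec. 709 luminance); the shader code in the PR may differ in detail.

```rust
type Rgb = [f32; 3];

/// Relative luminance using Rec. 709 weights.
fn luminance(c: Rgb) -> f32 {
    0.2126 * c[0] + 0.7152 * c[1] + 0.0722 * c[2]
}

/// Reinhard applied to each channel independently: c / (1 + c).
fn reinhard(c: Rgb) -> Rgb {
    c.map(|x| x / (1.0 + x))
}

/// Reinhard applied to luminance only. All channels are scaled by the same
/// factor, so the ratios between channels are preserved.
fn reinhard_luminance(c: Rgb) -> Rgb {
    let l_old = luminance(c);
    if l_old <= 0.0 {
        return [0.0; 3];
    }
    let l_new = l_old / (1.0 + l_old);
    c.map(|x| x * (l_new / l_old))
}

fn main() {
    let hdr = [4.0, 1.0, 0.25];
    println!("per-channel: {:?}", reinhard(hdr));
    println!("luminance:   {:?}", reinhard_luminance(hdr));
}
```

The per-channel form compresses bright channels more than dim ones, which shifts hue; the luminance form keeps channel ratios but can leave individual channels above 1.0.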

This PR also adds support for EXR images so they can be used to compare tonemapping options with reference images.

## Migration Guide

- Tonemapping is now an enum with NONE and the various tonemappers.

- The DebandDither is now a separate component.

Co-authored-by: JMS55 <47158642+JMS55@users.noreply.github.com>

# Objective

Closes #7573

- Make `StartupSet` a base set

## Solution

- Add `#[system_set(base)]` to the enum declaration

- Replace `.in_set(StartupSet::...)` with `.in_base_set(StartupSet::...)`

**Note**: I don't really know what I'm doing and what exactly the difference between base and non-base sets are. I mostly opened this PR based on discussion in Discord. I also don't really know how to test that I didn't break everything. Your reviews are appreciated!

---

## Changelog

- `StartupSet` is now a base set

## Migration Guide

`StartupSet` is now a base set. This means that you have to use `.in_base_set` instead of `.in_set`:

### Before

```rs

app.add_system(foo.in_set(StartupSet::PreStartup))

```

### After

```rs

app.add_system(foo.in_base_set(StartupSet::PreStartup))

```

# Objective

Allow creating pipelines that use push constants. Fixes #4825

As of right now, trying to call `RenderPass::set_push_constants` will trigger the following error:

```

thread 'main' panicked at 'wgpu error: Validation Error

Caused by:

In a RenderPass

note: encoder = `<CommandBuffer-(0, 59, Vulkan)>`

In a set_push_constant command

provided push constant is for stage(s) VERTEX | FRAGMENT | VERTEX_FRAGMENT, however the pipeline layout has no push constant range for the stage(s) VERTEX | FRAGMENT | VERTEX_FRAGMENT

```

## Solution

Add a field `push_constant_ranges` to `RenderPipelineDescriptor` and `ComputePipelineDescriptor`.

This PR supersedes #4908 which now contains merge conflicts due to significant changes to `bevy_render`.

This PR also makes the `layout` field of `RenderPipelineDescriptor` and `ComputePipelineDescriptor` non-optional. If the user does not need to specify the bind group layouts, they can simply supply an empty vector here, so there is no need for the field to be optional.

---

## Changelog

- Add a field push_constant_ranges to RenderPipelineDescriptor and ComputePipelineDescriptor

- Made the `layout` field of RenderPipelineDescriptor and ComputePipelineDescriptor non-optional.

## Migration Guide

- Add push_constant_ranges: Vec::new() to every `RenderPipelineDescriptor` and `ComputePipelineDescriptor`

- Unwrap the optional values on the `layout` field of `RenderPipelineDescriptor` and `ComputePipelineDescriptor`. If the descriptor has no layout, supply an empty vector.

Co-authored-by: Zhixing Zhang <me@neoto.xin>

# Objective

Fixes #7295

Should we maybe default to 4x if 2x/8x is selected but not supported?

---

## Changelog

- Added 2x and 8x sample counts for MSAA.

# Objective

- Environment maps use these formats, and in the future rendering LUTs will need textures loaded by default in the engine

## Solution

- Make ktx2 and zstd part of the default feature

- Let examples assume these features are enabled

---

## Changelog

- `ktx2` and `zstd` are now part of bevy's default enabled features

## Migration Guide

- If you used the `ktx2` or `zstd` features, you no longer need to explicitly enable them, as they are now part of bevy's default enabled features

# Objective

- Fixes #5432

- Fixes #6680

## Solution

- move code responsible for generating the `impl TypeUuid` from `type_uuid_derive` into a new function, `gen_impl_type_uuid`.

- this allows the new proc macro, `impl_type_uuid`, to call the code for generation.

- added struct `TypeUuidDef` and implemented `syn::Parse` to allow parsing of the input for the new macro.

- finally, used the new macro `impl_type_uuid` to implement `TypeUuid` for the standard library (in `crates/bevy_reflect/src/type_uuid_impl.rs`).

- fixes #6680 by doing a wrapping add of the param's index to its `TYPE_UUID`

Co-authored-by: dis-da-moe <84386186+dis-da-moe@users.noreply.github.com>

# Objective

We have a few old system labels that are now system sets but are still named or documented as labels. Documentation also generally mentioned system labels in some places.

## Solution

- Clean up naming and documentation regarding system sets

## Migration Guide

`PrepareAssetLabel` is now called `PrepareAssetSet`

# Objective

- Fixes: #7187

Since avoiding the `SRes::into_inner` call does not seem to be possible, this PR tries to at least document its usage.

I am not sure if I explained the lifetime issue correctly, please let me know if something is incorrect.

## Solution

- Add information about the `SRes::into_inner` usage on both `RenderCommand` and `Res`

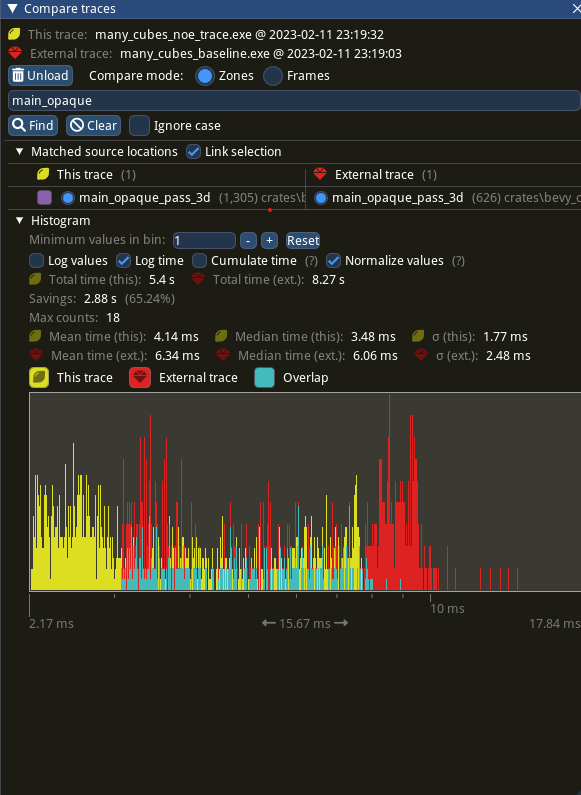

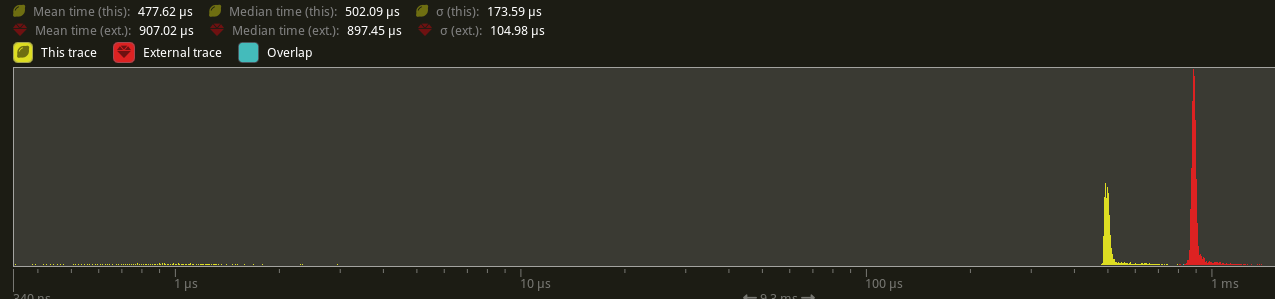

Profiles show that in extremely hot loops, like the draw loops in the renderer, invoking the trace! macro has noticeable overhead, even if the trace log level is not enabled.

Solve this by introducing a wrapper `detailed_trace!` macro around `trace!` that wraps the `trace!` log statement in a trivially false `if` statement unless a cargo feature is enabled.

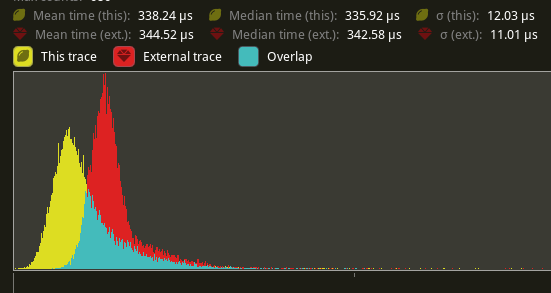

# Objective

- Eliminate significant overhead observed with trace-level logging in render hot loops, even when trace log level is not enabled.

- This is an alternative solution to the one proposed in #7223

## Solution

- Introduce a wrapper around the `trace!` macro called `detailed_trace!`. This macro wraps the `trace!` macro with an if statement that is conditional on a new cargo feature, `detailed_trace`. When the feature is not enabled (the default), then the if statement is trivially false and should be optimized away at compile time.

- Convert the observed hot occurrences of trace logging in `TrackedRenderPass` with this new macro.
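The wrapper idea can be sketched in a dependency-free form. In this illustration `println!` stands in for `trace!`, and `draw_all` is a hypothetical hot loop; only the `detailed_trace` name and the feature-gated `if` come from the description above.

```rust
/// `cfg!` expands to a compile-time `true` or `false`, so the branch below is
/// trivially false when the `detailed_trace` feature is not enabled.
const DETAILED_TRACE: bool = cfg!(feature = "detailed_trace");

/// Sketch of the wrapper macro. `println!` stands in for `trace!` here so the
/// example compiles without a logging crate.
macro_rules! detailed_trace {
    ($($arg:tt)*) => {
        if DETAILED_TRACE {
            println!($($arg)*);
        }
    };
}

/// Hypothetical hot loop. Returns how many times the log arguments were
/// evaluated, to show that a disabled trace does no work per iteration.
fn draw_all(count: u32) -> u32 {
    let mut evaluated = 0;
    for i in 0..count {
        detailed_trace!("draw call {}", {
            evaluated += 1;
            i
        });
    }
    evaluated
}

fn main() {
    let evaluated = draw_all(1000);
    println!("log arguments evaluated {} times", evaluated);
}
```

Built without the feature, the arguments are never evaluated, which is the property that lets the compiler drop the whole statement.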

Testing the results of

```

cargo run --profile stress-test --features bevy/trace_tracy --example many_cubes -- spheres

```

shows significant improvement of the `main_opaque_pass_3d` of the renderer, a median time decrease from 6.0ms to 3.5ms.

---

## Changelog

- For performance reasons, some detailed renderer trace logs now require the use of cargo feature `detailed_trace` in addition to setting the log level to `TRACE` in order to be shown.

## Migration Guide

- Some detailed bevy trace events now require the use of the cargo feature `detailed_trace` in addition to enabling `TRACE` level logging to view. Should you wish to see these logs, please compile your code with the bevy feature `detailed_trace`. Currently, the only logs that are affected are the renderer logs pertaining to `TrackedRenderPass` functions

# Objective

There was issue #191 requesting subdivisions on the shape::Plane.

I also could have used this recently, so I wrote the solution.

Fixes #191

## Solution

I changed `shape::Plane` to include a `subdivisions` field, along with the code to create the subdivisions. I don't know how people usually count subdivisions, so, as I put in the doc comments, 0 subdivisions results in the original geometry of the `Plane`.

A value greater than 0 is the number of lines dividing the plane.
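The counting convention above can be illustrated with a standalone mesh generator. This is a sketch only: `subdivided_plane` is a hypothetical function, and the XZ orientation and triangle winding are assumptions rather than details taken from the PR.

```rust
/// Returns `(positions, indices)` for a square plane of side `size`, centered
/// on the origin in the XZ plane. `subdivisions` is the number of dividing
/// lines along each axis, so 0 gives the original single quad.
fn subdivided_plane(size: f32, subdivisions: u32) -> (Vec<[f32; 3]>, Vec<u32>) {
    let verts_per_side = subdivisions + 2;
    let cells_per_side = subdivisions + 1;

    let mut positions = Vec::with_capacity((verts_per_side * verts_per_side) as usize);
    for z in 0..verts_per_side {
        for x in 0..verts_per_side {
            let tx = x as f32 / cells_per_side as f32;
            let tz = z as f32 / cells_per_side as f32;
            positions.push([(tx - 0.5) * size, 0.0, (tz - 0.5) * size]);
        }
    }

    let mut indices = Vec::with_capacity((cells_per_side * cells_per_side * 6) as usize);
    for z in 0..cells_per_side {
        for x in 0..cells_per_side {
            let quad = z * verts_per_side + x;
            // Two triangles per cell (winding order is an assumption).
            indices.extend_from_slice(&[
                quad,
                quad + verts_per_side,
                quad + 1,
                quad + 1,
                quad + verts_per_side,
                quad + verts_per_side + 1,
            ]);
        }
    }
    (positions, indices)
}

fn main() {
    for subdivisions in 0..3 {
        let (positions, indices) = subdivided_plane(2.0, subdivisions);
        println!(
            "{} subdivisions: {} vertices, {} triangles",
            subdivisions,
            positions.len(),
            indices.len() / 3
        );
    }
}
```

With `n` subdivisions this produces `(n + 2)^2` vertices and `2 * (n + 1)^2` triangles.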

I didn't know if it would be better to create a new struct that implemented this feature, say SubdivisionPlane or change Plane. I decided on changing Plane as that was what the original issue was.

It would be trivial to alter this to use another struct instead of altering Plane.

The issues of migration, although small, would be eliminated if a new struct was implemented.

## Changelog

### Added

Added subdivisions field to shape::Plane

## Migration Guide

All the examples needed to be updated to initialize the subdivisions field.

Also there were two tests in tests/window that needed to be updated.

A user would have to update all their uses of shape::Plane to initialize the subdivisions field.

Fixes #6799

# Objective

We should be able to reuse the `Globals` or `View` shader struct definitions from anywhere (including third party plugins) without needing to worry about defining unrelated shader defs.

Also we'd like to refactor these structs to not be repeatedly defined.

## Solution

Refactor both `Globals` and `View` into separate importable shaders.

Use the imports throughout.

Co-authored-by: Torstein Grindvik <52322338+torsteingrindvik@users.noreply.github.com>

# Objective

- This makes code a little more readable now.

## Solution

- Use `position` provided by `Iter` instead of `enumerating` indices and `map`ping to the index.
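For readers unfamiliar with the pattern, here is a small side-by-side sketch. The function names and the `u32` slice are hypothetical; the exact shape of the replaced code in the PR may differ (for example, it could have used `find_map`).

```rust
/// Roughly the older shape: enumerate, find the match, then map to the index.
fn index_via_enumerate(items: &[u32], wanted: u32) -> Option<usize> {
    items
        .iter()
        .enumerate()
        .find(|(_, item)| **item == wanted)
        .map(|(index, _)| index)
}

/// The same lookup using `Iterator::position`.
fn index_via_position(items: &[u32], wanted: u32) -> Option<usize> {
    items.iter().position(|item| *item == wanted)
}

fn main() {
    let items = [10, 20, 30];
    println!("{:?}", index_via_enumerate(&items, 30));
    println!("{:?}", index_via_position(&items, 30));
}
```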

This was missed in #7205.

Should be fixed now. 😄

## Migration Guide

- `SpecializedComputePipelines::specialize` now takes a `&PipelineCache` instead of a `&mut PipelineCache`

(Before)

(After)

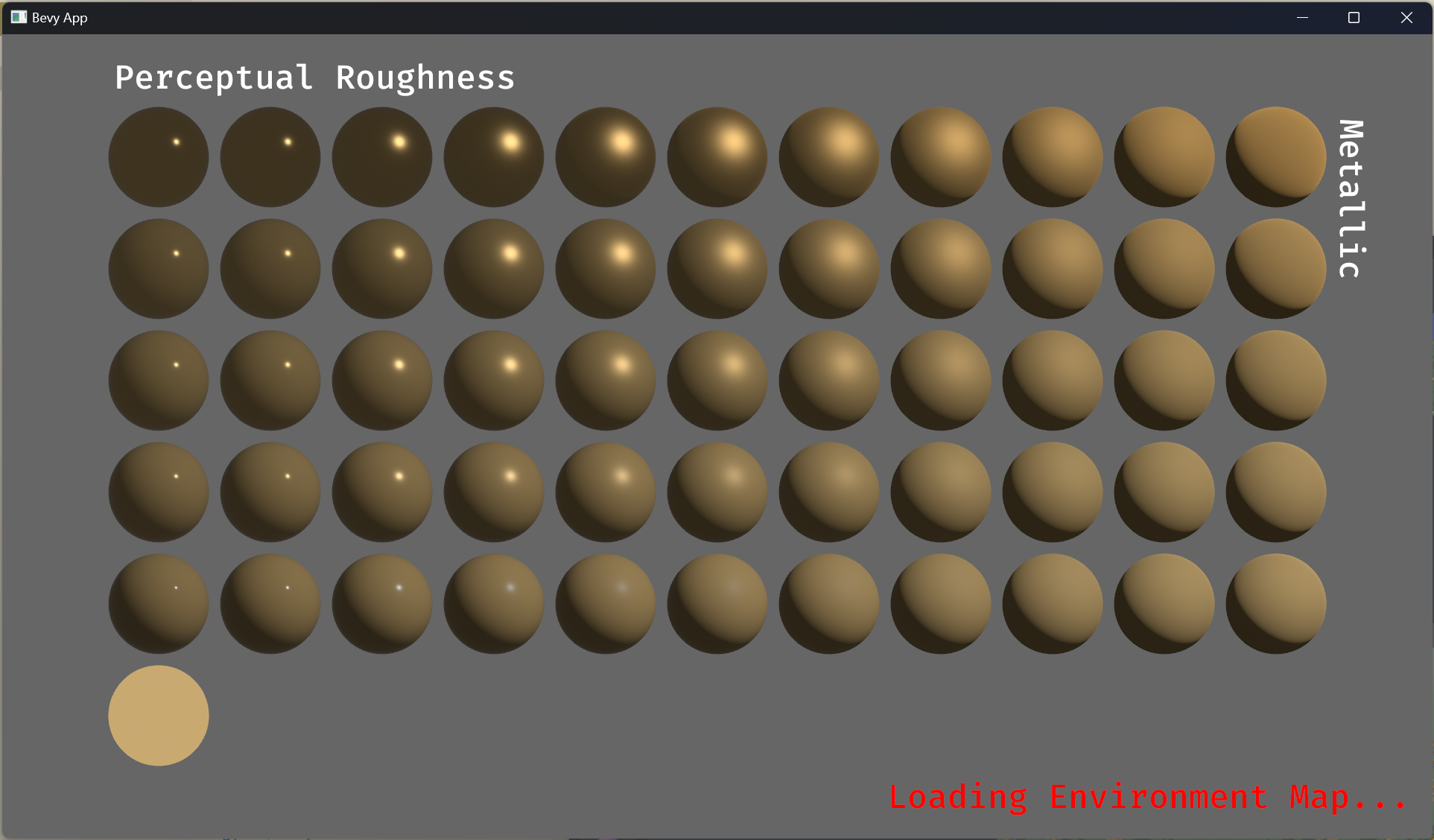

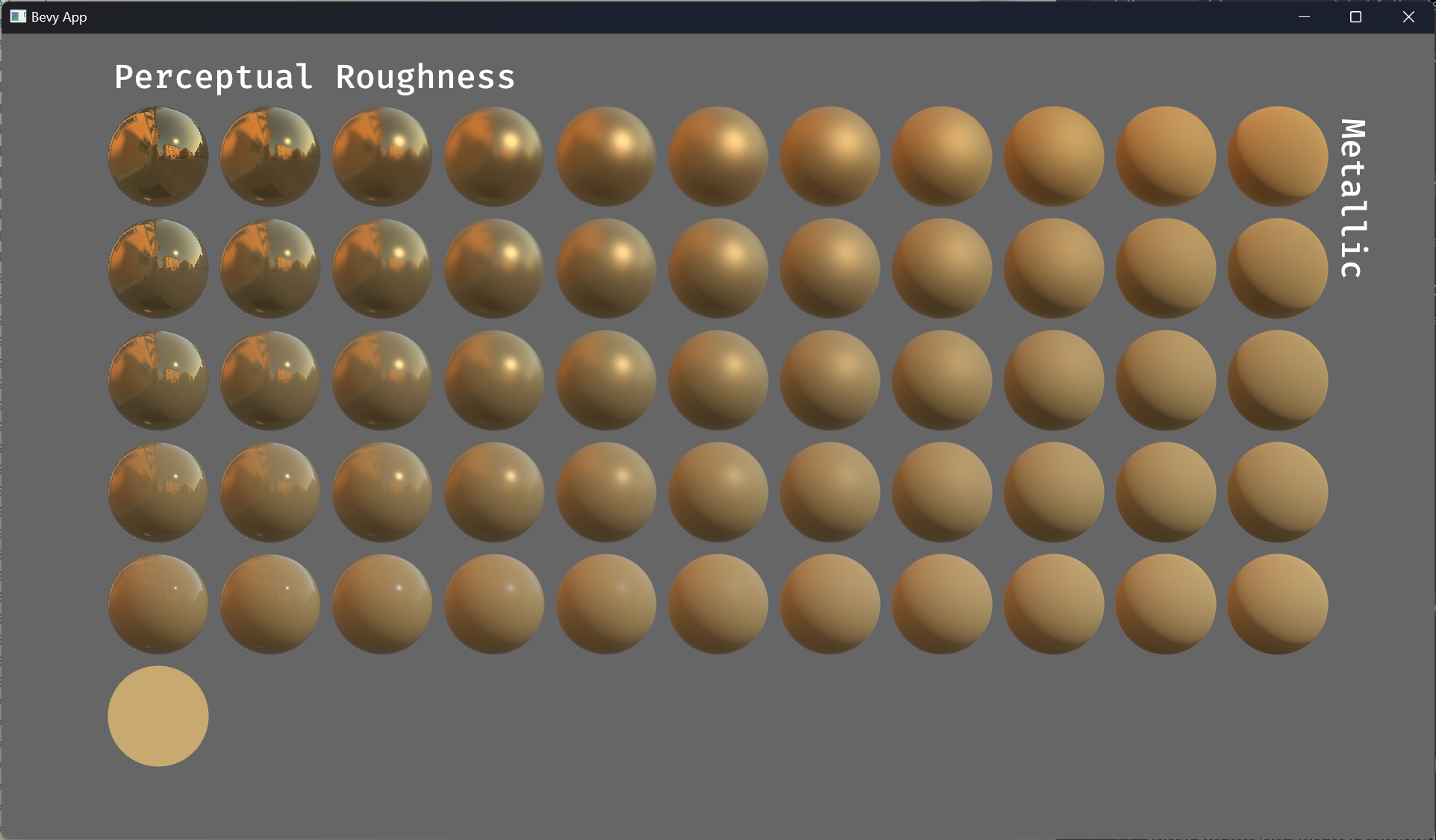

# Objective

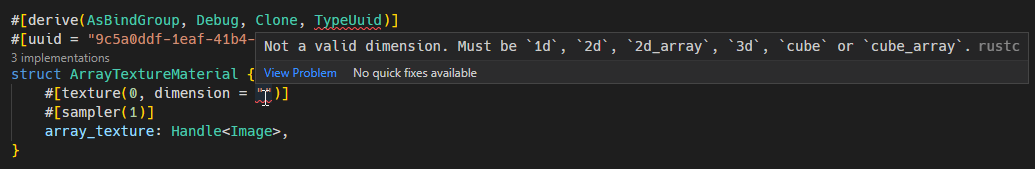

- Improve lighting; especially reflections.

- Closes https://github.com/bevyengine/bevy/issues/4581.

## Solution

- Implement environment maps, providing better ambient light.

- Add microfacet multibounce approximation for specular highlights from Filament.

- Occlusion is no longer incorrectly applied to direct lighting. It now only applies to diffuse indirect light. Unsure if it's also supposed to apply to specular indirect light - the glTF specification just says "indirect light". In the case of ambient occlusion, for instance, that's usually only calculated as diffuse though. For now, I'm choosing to apply this just to indirect diffuse light, and not specular.

- Modified the PBR example to use an environment map, and have labels.

- Added `FallbackImageCubemap`.

## Implementation

- IBL technique references can be found in environment_map.wgsl.

- It's more accurate to use a LUT for the scale/bias. Filament has a good reference on generating this LUT. For now, I just used an analytic approximation.

- For now, environment maps must first be prefiltered outside of bevy using a 3rd party tool. See the `EnvironmentMap` documentation.

- Eventually, we should have our own prefiltering code, so that we can have dynamically changing environment maps, as well as let users drop in an HDR image and use asset preprocessing to create the needed textures using only bevy.

---

## Changelog

- Added an `EnvironmentMapLight` camera component that adds additional ambient light to a scene.

- StandardMaterials will now appear brighter and more saturated at high roughness, due to internal material changes. This is more physically correct.

- Fixed StandardMaterial occlusion being incorrectly applied to direct lighting.

- Added `FallbackImageCubemap`.

Co-authored-by: IceSentry <c.giguere42@gmail.com>

Co-authored-by: James Liu <contact@jamessliu.com>

Co-authored-by: Rob Parrett <robparrett@gmail.com>

# Objective

- Terminology used in field names and docs isn't accurate

- `window_origin` doesn't have any effect when `scaling_mode` is `ScalingMode::None`

- `left`, `right`, `bottom`, and `top` are set automatically unless `scaling_mode` is `None`. Fields that only sometimes give feedback are confusing.

- `ScalingMode::WindowSize` has no arguments, which is inconsistent with other `ScalingMode`s. 1 pixel = 1 world unit is also typically way too wide.

- `OrthographicProjection` feels generally less streamlined than its `PerspectiveProjection` counterpart

- Fixes #5818

- Fixes #6190

## Solution

- Improve consistency in `OrthographicProjection`'s public fields (they should either always give feedback or never give feedback).

- Improve consistency in `ScalingMode`'s arguments

- General usability improvements

- Improve accuracy of terminology:

- "Window" should refer to the physical window on the desktop

- "Viewport" should refer to the component in the window that images are drawn on (typically all of it)

- "View frustum" should refer to the volume captured by the projection

---

## Changelog

### Added

- Added argument to `ScalingMode::WindowSize` that specifies the number of pixels that equals one world unit.

- Added documentation for fields and enums

### Changed

- Renamed `window_origin` to `viewport_origin`, which now:

- Affects all `ScalingMode`s

- Takes a fraction of the viewport's width and height instead of an enum

- Removed `WindowOrigin` enum as it's obsolete

- Renamed `ScalingMode::None` to `ScalingMode::Fixed`, which now:

- Takes arguments to specify the projection size

- Replaced `left`, `right`, `bottom`, and `top` fields with a single `area: Rect`

- `scale` is now applied before updating `area`. Reading from it will take `scale` into account.

- Documentation changes to make terminology more accurate and consistent

## Migration Guide

- Change `window_origin` to `viewport_origin`; replace `WindowOrigin::Center` with `Vec2::new(0.5, 0.5)` and `WindowOrigin::BottomLeft` with `Vec2::new(0.0, 0.0)`

- For shadow projections and such, replace `left`, `right`, `bottom`, and `top` with `area: Rect::new(left, bottom, right, top)`

- For camera projections, remove l/r/b/t values from `OrthographicProjection` instantiations, as they no longer have any effect in any `ScalingMode`

- Change `ScalingMode::None` to `ScalingMode::Fixed`

- Replace manual changes of l/r/b/t with:

- Arguments in `ScalingMode::Fixed` to specify size

- `viewport_origin` to specify offset

- Change `ScalingMode::WindowSize` to `ScalingMode::WindowSize(1.0)`
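To show how `viewport_origin`, `scale`, and the new `ScalingMode` arguments might combine into `area`, here is a standalone sketch. The `Rect`, `ScalingMode`, and `projection_area` definitions are simplified stand-ins written for this example, and the exact formula is an assumption based on the descriptions above, not a copy of the engine code.

```rust
#[derive(Debug, Clone, Copy, PartialEq)]
struct Rect {
    min: [f32; 2],
    max: [f32; 2],
}

#[derive(Clone, Copy)]
enum ScalingMode {
    /// Fixed projection size in world units.
    Fixed { width: f32, height: f32 },
    /// Number of pixels that equals one world unit.
    WindowSize(f32),
}

/// Computes the projected area. `viewport_origin` is a fraction of the
/// viewport's width and height, e.g. `[0.5, 0.5]` for the center.
fn projection_area(
    mode: ScalingMode,
    viewport_origin: [f32; 2],
    scale: f32,
    window_size: [f32; 2],
) -> Rect {
    let (width, height) = match mode {
        ScalingMode::Fixed { width, height } => (width, height),
        ScalingMode::WindowSize(pixels_per_unit) => (
            window_size[0] / pixels_per_unit,
            window_size[1] / pixels_per_unit,
        ),
    };
    let origin_x = width * viewport_origin[0];
    let origin_y = height * viewport_origin[1];
    Rect {
        min: [scale * -origin_x, scale * -origin_y],
        max: [scale * (width - origin_x), scale * (height - origin_y)],
    }
}

fn main() {
    let centered = projection_area(ScalingMode::WindowSize(1.0), [0.5, 0.5], 1.0, [800.0, 600.0]);
    let bottom_left = projection_area(ScalingMode::WindowSize(1.0), [0.0, 0.0], 1.0, [800.0, 600.0]);
    println!("{:?}", centered);
    println!("{:?}", bottom_left);
}
```

Under these assumptions, an origin of `[0.5, 0.5]` reproduces the old `WindowOrigin::Center` behavior and `[0.0, 0.0]` reproduces `WindowOrigin::BottomLeft`.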

# Objective

Fix #7377

Fix #7513

## Solution

Record the changes made to the Bevy `Window` from `winit` as 'canon' to avoid Bevy sending those changes back to `winit` again, causing a feedback loop.
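The "canon" idea can be sketched with two copies of the window state. Everything here is hypothetical (`WindowState`, `TrackedWindow`, and the method names); it only illustrates how recording backend-originated changes prevents them from being echoed back.

```rust
#[derive(Debug, Clone, PartialEq)]
struct WindowState {
    width: u32,
    height: u32,
    title: String,
}

struct TrackedWindow {
    /// What user code sees and may modify.
    current: WindowState,
    /// Last state known to agree with the windowing backend ("canon").
    canon: WindowState,
}

impl TrackedWindow {
    fn new(state: WindowState) -> Self {
        Self {
            canon: state.clone(),
            current: state,
        }
    }

    /// The backend reported a change: update both copies so the change is
    /// not sent back to the backend on the next comparison.
    fn apply_backend_event(&mut self, state: WindowState) {
        self.current = state.clone();
        self.canon = state;
    }

    /// Returns the state to send to the backend, if user code changed anything.
    fn take_outgoing_change(&mut self) -> Option<WindowState> {
        if self.current == self.canon {
            return None;
        }
        self.canon = self.current.clone();
        Some(self.current.clone())
    }
}

fn main() {
    let mut window = TrackedWindow::new(WindowState {
        width: 800,
        height: 600,
        title: "demo".to_string(),
    });
    window.apply_backend_event(WindowState {
        width: 1024,
        height: 768,
        title: "demo".to_string(),
    });
    println!("after backend resize: {:?}", window.take_outgoing_change());
    window.current.title = "renamed".to_string();
    println!("after user change: {:?}", window.take_outgoing_change());
}
```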

## Changelog

* Removed `ModifiesWindows` system label.

Neither `despawn_window` nor `changed_window` actually modify the `Window` component so all the `.after(ModifiesWindows)` shouldn't be necessary.

* Moved `changed_window` and `despawn_window` systems to `CoreStage::Last` to avoid systems making changes to the `Window` between `changed_window` and the end of the frame as they would be ignored.

## Migration Guide

The `ModifiesWindows` system label was removed.

Co-authored-by: devil-ira <justthecooldude@gmail.com>

# Objective

Some render systems have a system set that is used only as a label, so that they can be referenced from somewhere else.

The 1:1 translation from `add_system_to_stage(Prepare, prepare_lights.label(PrepareLights))` is `add_system(prepare_lights.in_set(Prepare).in_set(PrepareLights))`, but configuring the `PrepareLights` set to be in `Prepare` would match the intention better (there are no systems in `PrepareLights` outside of `Prepare`) and it is easier for visualization tools to deal with.

## Solution

- replace

```rust

prepare_lights in PrepareLights

prepare_lights in Prepare

```

with

```rs

prepare_lights in PrepareLights

PrepareLights in Prepare

```

**Before**

**After**

# Objective

Buffers in Bevy do not allow setting buffer usage flags. Flags such as `COPY_SRC`, `MAP_READ`, and `MAP_WRITE` are useful because they allow buffers to be copied from the GPU to the CPU for inspection.

## Solution

Add buffer_usage field to buffers and a set_usage function to set them

# Objective

- Fixes #766

## Solution

- Add a new `Lcha` member to `bevy_render::color::Color` enum

---

## Changelog

- Add a new `Lcha` member to `bevy_render::color::Color` enum

- Add `bevy_render::color::LchRepresentation` struct

# Objective

[as noted](https://github.com/bevyengine/bevy/pull/5950#discussion_r1080762807) by james, transmuting arcs may be UB.

we now store a `*const ()` pointer internally, and only rely on `ptr.cast::<()>().cast::<T>() == ptr`.

as a happy side effect this removes the need for boxing the value, so todo: potentially use this for release mode as well
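A minimal sketch of the pointer round trip, assuming the value in question is an `Arc<T>` that needs to be stored type-erased. `erase` and `restore` are hypothetical helpers; the PR's actual storage type is not shown here.

```rust
use std::sync::Arc;

/// Erases the type of an `Arc<T>` by storing only a thin `*const ()` pointer.
fn erase<T>(value: Arc<T>) -> *const () {
    Arc::into_raw(value).cast::<()>()
}

/// Rebuilds the `Arc<T>` from an erased pointer.
///
/// # Safety
/// `ptr` must come from `erase::<T>` with the same `T`, and must be restored
/// at most once.
unsafe fn restore<T>(ptr: *const ()) -> Arc<T> {
    // Relies only on the cast round trip giving back the original pointer.
    unsafe { Arc::from_raw(ptr.cast::<T>()) }
}

fn main() {
    let original = Arc::new(42u32);
    let typed_ptr: *const u32 = Arc::as_ptr(&original);

    let erased = erase(original);
    // The property relied upon: casting to `()` and back yields the same pointer.
    assert_eq!(erased.cast::<u32>(), typed_ptr);

    let restored: Arc<u32> = unsafe { restore(erased) };
    println!("restored value: {}", *restored);
}
```

Unlike a transmute between `Arc` types, this never reinterprets the `Arc` itself; it only moves a raw pointer through `*const ()`.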

# Objective

NOTE: This depends on #7267 and should not be merged until #7267 is merged. If you are reviewing this before that is merged, I highly recommend viewing the Base Sets commit instead of trying to find my changes amongst those from #7267.

"Default sets" as described by the [Stageless RFC](https://github.com/bevyengine/rfcs/pull/45) have some [unfortunate consequences](https://github.com/bevyengine/bevy/discussions/7365).

## Solution

This adds "base sets" as a variant of `SystemSet`:

A set is a "base set" if `SystemSet::is_base` returns `true`. Typically this will be opted-in to using the `SystemSet` derive:

```rust

#[derive(SystemSet, Clone, Hash, Debug, PartialEq, Eq)]

#[system_set(base)]

enum MyBaseSet {

A,

B,

}

```

**Base sets are exclusive**: a system can belong to at most one "base set". Adding a system to more than one will result in an error. When possible we fail immediately during system-config-time with a nice file + line number. For the more nested graph-ey cases, this will fail at the final schedule build.

**Base sets cannot belong to other sets**: this is where the word "base" comes from

Systems and Sets can only be added to base sets using `in_base_set`. Calling `in_set` with a base set will fail. As will calling `in_base_set` with a normal set.

```rust

app.add_system(foo.in_base_set(MyBaseSet::A))

// X must be a normal set ... base sets cannot be added to base sets

.configure_set(X.in_base_set(MyBaseSet::A))

```

Base sets can still be configured like normal sets:

```rust

app.configure_set(MyBaseSet::B.after(MyBaseSet::A))

```

The primary use case for base sets is enabling a "default base set":

```rust

schedule.set_default_base_set(CoreSet::Update)

// this will belong to CoreSet::Update by default

.add_system(foo)

// this will override the default base set with PostUpdate

.add_system(bar.in_base_set(CoreSet::PostUpdate))

```

This allows us to build APIs that work by default in the standard Bevy style. This is a rough analog to the "default stage" model, but it uses the new "stageless sets" model instead, with all of the ordering flexibility (including exclusive systems) that it provides.

---

## Changelog

- Added "base sets" and ported CoreSet to use them.

## Migration Guide

TODO

Huge thanks to @maniwani, @devil-ira, @hymm, @cart, @superdump and @jakobhellermann for the help with this PR.

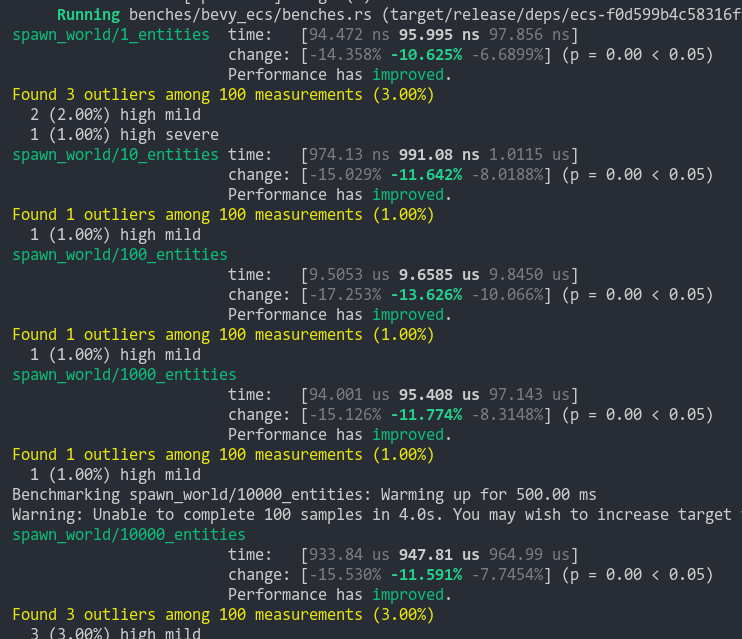

# Objective

- Followup #6587.

- Minimal integration for the Stageless Scheduling RFC: https://github.com/bevyengine/rfcs/pull/45

## Solution

- [x] Remove old scheduling module

- [x] Migrate new methods to no longer use extension methods

- [x] Fix compiler errors

- [x] Fix benchmarks

- [x] Fix examples

- [x] Fix docs

- [x] Fix tests

## Changelog

### Added

- a large number of methods on `App` to work with schedules ergonomically

- the `CoreSchedule` enum

- `App::add_extract_system` via the `RenderingAppExtension` trait extension method

- the private `prepare_view_uniforms` system now has a public system set for scheduling purposes, called `ViewSet::PrepareUniforms`

### Removed

- stages, and all code that mentions stages

- states have been dramatically simplified, and no longer use a stack

- `RunCriteriaLabel`

- `AsSystemLabel` trait

- `on_hierarchy_reports_enabled` run criteria (now just uses an ad hoc resource checking run condition)

- systems in `RenderSet/Stage::Extract` no longer warn when they do not read data from the main world

- `transform_propagate_system_set`: this was a nonstandard pattern that didn't actually provide enough control. The systems are already `pub`: the docs have been updated to ensure that the third-party usage is clear.

### Changed

- `System::default_labels` is now `System::default_system_sets`.

- `App::add_default_labels` is now `App::add_default_sets`

- `CoreStage` and `StartupStage` enums are now `CoreSet` and `StartupSet`

- `App::add_system_set` was renamed to `App::add_systems`

- The `StartupSchedule` label is now defined as part of the `CoreSchedules` enum

- `.label(SystemLabel)` is now referred to as `.in_set(SystemSet)`

- `SystemLabel` trait was replaced by `SystemSet`

- `SystemTypeIdLabel<T>` was replaced by `SystemSetType<T>`

- The `ReportHierarchyIssue` resource now has a public constructor (`new`), and implements `PartialEq`

- Fixed time steps now use a schedule (`CoreSchedule::FixedTimeStep`) rather than a run criteria.

- Adding rendering extraction systems now panics rather than silently failing if no subapp with the `RenderApp` label is found.

- the `calculate_bounds` system, with the `CalculateBounds` label, is now in `CoreSet::Update`, rather than in `CoreSet::PostUpdate` before commands are applied.

- `SceneSpawnerSystem` now runs under `CoreSet::Update`, rather than `CoreStage::PreUpdate.at_end()`.

- `bevy_pbr::add_clusters` is no longer an exclusive system

- the top level `bevy_ecs::schedule` module was replaced with `bevy_ecs::scheduling`

- `tick_global_task_pools_on_main_thread` is no longer run as an exclusive system. Instead, it has been replaced by `tick_global_task_pools`, which uses a `NonSend` resource to force running on the main thread.

## Migration Guide

- Calls to `.label(MyLabel)` should be replaced with `.in_set(MySet)`

- Stages have been removed. Replace these with system sets, and then add command flushes using the `apply_system_buffers` exclusive system where needed.

- The `CoreStage`, `StartupStage`, `RenderStage` and `AssetStage` enums have been replaced with `CoreSet`, `StartupSet`, `RenderSet` and `AssetSet`. The same scheduling guarantees have been preserved.

- Systems are no longer added to `CoreSet::Update` by default. Add systems manually if this behavior is needed, although you should consider adding your game logic systems to `CoreSchedule::FixedTimestep` instead for more reliable framerate-independent behavior.

- Similarly, startup systems are no longer part of `StartupSet::Startup` by default. In most cases, this won't matter to you.

- For example, `add_system_to_stage(CoreStage::PostUpdate, my_system)` should be replaced with

- `add_system(my_system.in_set(CoreSet::PostUpdate))`

- When testing systems or otherwise running them in a headless fashion, simply construct and run a schedule using `Schedule::new()` and `World::run_schedule` rather than constructing stages

- Run criteria have been renamed to run conditions. These can now be combined with each other and with states.

- Looping run criteria and state stacks have been removed. Use an exclusive system that runs a schedule if you need this level of control over system control flow.

- For app-level control flow over which schedules get run when (such as for rollback networking), create your own schedule and insert it under the `CoreSchedule::Outer` label.

- Fixed timesteps are now evaluated in a schedule, rather than controlled via run criteria. The `run_fixed_timestep` system runs this schedule between `CoreSet::First` and `CoreSet::PreUpdate` by default.

- Command flush points introduced by `AssetStage` have been removed. If you were relying on these, add them back manually.

- Adding extract systems is now typically done directly on the main app. Make sure the `RenderingAppExtension` trait is in scope, then call `app.add_extract_system(my_system)`.

- the `calculate_bounds` system, with the `CalculateBounds` label, is now in `CoreSet::Update`, rather than in `CoreSet::PostUpdate` before commands are applied. You may need to order your movement systems to occur before this system in order to avoid system order ambiguities in culling behavior.

- the `RenderLabel` `AppLabel` was renamed to `RenderApp` for clarity

- `App::add_state` now takes 0 arguments: the starting state is set based on the `Default` impl.

- Instead of creating `SystemSet` containers for systems that run in stages, simply use `.on_enter::<State::Variant>()` or its `on_exit` or `on_update` siblings.

- `SystemLabel` derives should be replaced with `SystemSet`. You will also need to add the `Debug`, `PartialEq`, `Eq`, and `Hash` traits to satisfy the new trait bounds.

- `with_run_criteria` has been renamed to `run_if`. Run criteria have been renamed to run conditions for clarity, and should now simply return a bool.

- States have been dramatically simplified: there is no longer a "state stack". To queue a transition to the next state, call `NextState::set`
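The fixed timestep bullet above describes a system that runs a schedule zero or more times per frame. A standalone sketch of that accumulator loop follows; `FixedTime` here is a simplified stand-in written for this example, and a closure plays the role of the schedule.

```rust
use std::time::Duration;

/// Simplified stand-in for a fixed timestep accumulator.
struct FixedTime {
    accumulated: Duration,
    period: Duration,
}

impl FixedTime {
    /// Adds the frame's elapsed time to the accumulator.
    fn tick(&mut self, delta: Duration) {
        self.accumulated += delta;
    }

    /// Consumes one period if enough time has accumulated.
    fn expend(&mut self) -> bool {
        if self.accumulated >= self.period {
            self.accumulated -= self.period;
            true
        } else {
            false
        }
    }
}

/// Stand-in for the driving system: runs `schedule` once per elapsed period
/// and returns how many times it ran this frame. `period` must be non-zero.
fn run_fixed_timestep(time: &mut FixedTime, delta: Duration, mut schedule: impl FnMut()) -> u32 {
    time.tick(delta);
    let mut runs = 0;
    while time.expend() {
        schedule();
        runs += 1;
    }
    runs
}

fn main() {
    let mut time = FixedTime {
        accumulated: Duration::ZERO,
        period: Duration::from_millis(10),
    };
    for frame_ms in [25, 5, 3] {
        let runs = run_fixed_timestep(&mut time, Duration::from_millis(frame_ms), || {});
        println!("frame of {} ms -> {} fixed updates", frame_ms, runs);
    }
}
```

Leftover time carries over to the next frame, so the number of fixed updates tracks elapsed time rather than frame count.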

## TODO

- [x] remove dead methods on App and World

- [x] add `App::add_system_to_schedule` and `App::add_systems_to_schedule`

- [x] avoid adding the default system set at inappropriate times

- [x] remove any accidental cycles in the default plugins schedule

- [x] migrate benchmarks

- [x] expose explicit labels for the built-in command flush points

- [x] migrate engine code

- [x] remove all mentions of stages from the docs

- [x] verify docs for States

- [x] fix uses of exclusive systems that use .end / .at_start / .before_commands

- [x] migrate RenderStage and AssetStage

- [x] migrate examples

- [x] ensure that transform propagation is exported in a sufficiently public way (the systems are already pub)

- [x] ensure that on_enter schedules are run at least once before the main app

- [x] re-enable opt-in to execution order ambiguities

- [x] revert change to `update_bounds` to ensure it runs in `PostUpdate`

- [x] test all examples

- [x] unbreak directional lights

- [x] unbreak shadows (see 3d_scene, 3d_shape, lighting, transparency_3d examples)

- [x] game menu example shows loading screen and menu simultaneously

- [x] display settings menu is a blank screen

- [x] `without_winit` example panics

- [x] ensure all tests pass

- [x] SubApp doc test fails

- [x] runs_spawn_local tasks fails

- [x] [Fix panic_when_hierachy_cycle test hanging](https://github.com/alice-i-cecile/bevy/pull/120)

## Points of Difficulty and Controversy

**Reviewers, please give feedback on these and look closely**

1. Default sets, from the RFC, have been removed. These added a tremendous amount of implicit complexity and result in hard to debug scheduling errors. They're going to be tackled in the form of "base sets" by @cart in a followup.

2. The outer schedule controls which schedule is run when `App::update` is called.

3. I implemented `Label` for `Box<dyn Label>` for our label types. This enables us to store schedule labels in concrete form, and then later run them. I ran into the same set of problems when working with one-shot systems. We've previously investigated this pattern in depth, and it does not appear to lead to extra indirection with nested boxes.

4. `SubApp::update` simply runs the default schedule once. This sucks, but this whole API is incomplete and this was the minimal changeset.

5. `time_system` and `tick_global_task_pools_on_main_thread` no longer use exclusive systems to attempt to force scheduling order

6. Implementation strategy for fixed timesteps

7. `AssetStage` was migrated to `AssetSet` without reintroducing command flush points. These did not appear to be used, and it's nice to remove these bottlenecks.

8. Migration of `bevy_render/lib.rs` and pipelined rendering. The logic here is unusually tricky, as we have complex scheduling requirements.

## Future Work (ideally before 0.10)

- Rename schedule_v3 module to schedule or scheduling

- Add a derive macro to states, and likely a `EnumIter` trait of some form

- Figure out what exactly to do with the "systems added should basically work by default" problem

- Improve ergonomics for working with fixed timesteps and states

- Polish FixedTime API to match Time

- Rebase and merge #7415

- Resolve all internal ambiguities (blocked on better tools, especially #7442)

- Add "base sets" to replace the removed default sets.

# Objective

Avoid the ‘Unable to find a GPU! Make sure you have installed required drivers!’ error, which occurs because many devices support OpenGL but not Vulkan.

Fixes #3191

## Solution

Use all backends supported by wgpu.

# Objective

Currently, shaders may only have syntax such as

```wgsl
#ifdef FOO
// foo code
#else
    #ifdef BAR
    // bar code
    #else
        #ifdef BAZ
        // baz code
        #else
        // fallback code
        #endif
    #endif
#endif
```

This is hard to read and follow.

Add a way to allow writing `#else ifdef DEFINE` to reduce the number of scopes introduced and to increase readability.

## Solution

Refactor the current preprocessing a bit and add logic to allow `#else ifdef DEFINE`.

This includes per-scope tracking of whether a branch has been accepted.

Add a few tests for this feature.

With these changes we may now write:

```wgsl
#ifdef FOO
// foo code
#else ifdef BAR
// bar code
#else ifdef BAZ
// baz code
#else
// fallback code
#endif
```

instead.
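
The per-scope tracking described above can be sketched in a few lines of plain Rust. This is a toy line-based preprocessor, not Bevy's actual implementation: the `Scope` struct and the `preprocess` function are hypothetical names, and the sketch assumes each directive sits alone on its own line.

```rust
use std::collections::HashSet;

/// State for one `#ifdef` chain (hypothetical type for this sketch).
struct Scope {
    /// Whether lines in the current branch are emitted.
    active: bool,
    /// Whether any branch of this chain has already been accepted.
    accepted: bool,
}

/// Toy preprocessor handling `#ifdef`, `#else ifdef`, `#else` and `#endif`.
fn preprocess(source: &str, defs: &HashSet<&str>) -> Result<String, String> {
    let mut scopes: Vec<Scope> = Vec::new();
    let mut out = String::new();
    for line in source.lines() {
        let trimmed = line.trim();
        if let Some(name) = trimmed.strip_prefix("#ifdef ") {
            // A new scope is only taken if every enclosing scope is active.
            let parent_active = scopes.iter().all(|s| s.active);
            let take = parent_active && defs.contains(name.trim());
            scopes.push(Scope { active: take, accepted: take });
        } else if let Some(name) = trimmed.strip_prefix("#else ifdef ") {
            let (current, parents) = scopes
                .split_last_mut()
                .ok_or("#else ifdef without #ifdef")?;
            let parent_active = parents.iter().all(|s| s.active);
            // Only the first matching branch of a chain is accepted.
            current.active =
                parent_active && !current.accepted && defs.contains(name.trim());
            current.accepted |= current.active;
        } else if trimmed == "#else" {
            let (current, parents) =
                scopes.split_last_mut().ok_or("#else without #ifdef")?;
            let parent_active = parents.iter().all(|s| s.active);
            // The fallback runs only if no earlier branch was accepted.
            current.active = parent_active && !current.accepted;
            current.accepted = true;
        } else if trimmed == "#endif" {
            scopes.pop().ok_or("#endif without #ifdef")?;
        } else if scopes.iter().all(|s| s.active) {
            out.push_str(line);
            out.push('\n');
        }
    }
    if scopes.is_empty() {
        Ok(out)
    } else {
        Err("unterminated #ifdef".to_string())
    }
}
```

Because `accepted` lives on the scope rather than on the branch, a chain of `#else ifdef` lines needs only one stack entry, which is what removes the nested `#endif`s.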

---

## Changelog

- Add `#else ifdef` to shader preprocessing.

# Objective

- Trying to move some of the fixes from https://github.com/bevyengine/bevy/pull/7267 to make that one easier to review

- The MainThreadExecutor is how the render world runs nonsend systems on the main thread for pipelined rendering.

- The multithread executor for stageless wasn't using the MainThreadExecutor.

- MainThreadExecutor was declared in the old executor_parallel module that is getting deleted.

- The way the MainThreadExecutor was getting passed to the scope was actually unsound as the resource could be dropped from the World while the schedule was running

## Solution

- Move MainThreadExecutor to the new multithreaded_executor's file.

- Make the multithreaded executor use the MainThreadExecutor

- Clone the MainThreadExecutor onto the stack and pass that ref in

## Changelog

- Move MainThreadExecutor for stageless migration.

# Objective

In simple cases we might want to derive the `ExtractComponent` trait.

This adds symmetry to the existing `ExtractResource` derive.

## Solution

Add an implementation of `#[derive(ExtractComponent)]`.

The implementation is adapted from the existing `ExtractResource` derive macro.

Additionally, there is an attribute called `extract_component_filter`. This allows specifying a query filter type used when extracting.

If not specified, no filter (equal to `()`) is used.

So:

```rust
#[derive(Component, Clone, ExtractComponent)]
#[extract_component_filter(With<Fuel>)]
pub struct Car {
    pub wheels: usize,
}
```

would expand to (a bit cleaned up here):

```rust
impl ExtractComponent for Car {
    type Query = &'static Self;
    type Filter = With<Fuel>;
    type Out = Self;

    fn extract_component(item: QueryItem<'_, Self::Query>) -> Option<Self::Out> {
        Some(item.clone())
    }
}
```

---

## Changelog

- Added the ability to `#[derive(ExtractComponent)]` with an optional filter.

# Objective

- Fixes #4592

## Solution

- Implement `SrgbColorSpace` for `u8` via `f32`

- Convert KTX2 R8 and R8G8 non-linear sRGB to wgpu `R8Unorm` and `Rg8Unorm`, as non-linear sRGB is not supported by wgpu for these formats

- Convert KTX2 R8G8B8 formats to `Rgba8Unorm` and `Rgba8UnormSrgb` by adding an alpha channel as the Rgb variants don't exist in wgpu
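
As a rough illustration of the two conversions listed above, the sketch below decodes an 8-bit non-linear sRGB channel by going through `f32`, and pads RGB8 data to RGBA8. The function names are hypothetical and are not the names used in the PR; the transfer function is the standard sRGB decoding curve, and the opaque alpha value of 255 is an assumption of this sketch.

```rust
/// Standard sRGB decoding: non-linear value in [0, 1] to linear.
fn nonlinear_to_linear_srgb(c: f32) -> f32 {
    if c <= 0.04045 {
        c / 12.92
    } else {
        ((c + 0.055) / 1.055).powf(2.4)
    }
}

/// The same conversion for a `u8` channel, going through `f32` and rounding back.
fn nonlinear_to_linear_srgb_u8(c: u8) -> u8 {
    let linear = nonlinear_to_linear_srgb(c as f32 / 255.0);
    (linear * 255.0).round() as u8
}

/// Expands tightly packed RGB8 pixels to RGBA8 by appending an alpha byte.
/// Using 255 (fully opaque) is an assumption made for this sketch.
fn rgb8_to_rgba8(rgb: &[u8]) -> Vec<u8> {
    let mut rgba = Vec::with_capacity(rgb.len() / 3 * 4);
    for pixel in rgb.chunks_exact(3) {
        rgba.extend_from_slice(pixel);
        rgba.push(255);
    }
    rgba
}
```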

---

## Changelog

- Added: Support for KTX2 `R8_SRGB`, `R8_UNORM`, `R8G8_SRGB`, `R8G8_UNORM`, `R8G8B8_SRGB`, `R8G8B8_UNORM` formats by converting to supported wgpu formats as appropriate

# Objective

Add a `FromReflect` derive to the `Aabb` type, like all other math types, so we can reflect `Vec<Aabb>`.

## Solution

Just add it :)

---

## Changelog

### Added

- Implemented `FromReflect` for `Aabb`.

# Objective

Update Bevy to wgpu 0.15.

## Changelog

- Update to wgpu 0.15, wgpu-hal 0.15.1, and naga 0.11

- Users can now use the [DirectX Shader Compiler](https://github.com/microsoft/DirectXShaderCompiler) (DXC) on Windows with DX12 for faster shader compilation and ShaderModel 6.0+ support (requires `dxcompiler.dll` and `dxil.dll`, which are included in DXC downloads from [here](https://github.com/microsoft/DirectXShaderCompiler/releases/latest))

## Migration Guide

### WGSL Top-Level `let` is now `const`

All top level constants are now declared with `const`, catching up with the wgsl spec.

`let` is no longer allowed at the global scope, only within functions.

```diff
-let SOME_CONSTANT = 12.0;
+const SOME_CONSTANT = 12.0;
```

### `TextureDescriptor` and `SurfaceConfiguration` now require a `view_formats` field

The new `view_formats` field in the `TextureDescriptor` is used to specify a list of formats the texture can be re-interpreted to in a texture view. Currently only changing srgb-ness is allowed (ex. `Rgba8Unorm` <=> `Rgba8UnormSrgb`). You should set `view_formats` to `&[]` (empty) unless you have a specific reason not to.

### The DirectX Shader Compiler (DXC) is now supported on DX12

DXC is now the default shader compiler when using the DX12 backend. DXC is Microsoft's replacement for their legacy FXC compiler, and is faster, less buggy, and allows for modern shader features to be used (ShaderModel 6.0+). DXC requires `dxcompiler.dll` and `dxil.dll` to be available, otherwise it will log a warning and fall back to FXC.

You can get `dxcompiler.dll` and `dxil.dll` by downloading the latest release from [Microsoft's DirectXShaderCompiler github repo](https://github.com/microsoft/DirectXShaderCompiler/releases/latest) and copying them into your project's root directory. These must be included when you distribute your Bevy game/app/etc if you plan on supporting the DX12 backend and are using DXC.

`WgpuSettings` now has a `dx12_shader_compiler` field which can be used to choose between either FXC or DXC (if you pass None for the paths for DXC, it will check for the .dlls in the working directory).

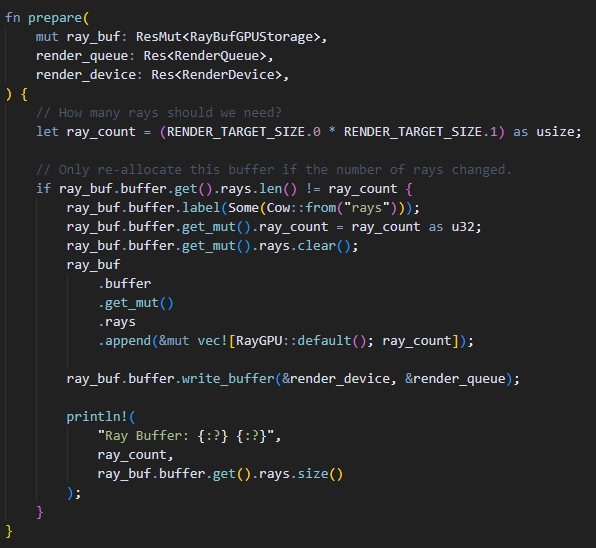

# Objective

## Use Case

A render node which calls `post_process_write()` on a `ViewTarget` multiple times during a single run of the node accesses both main textures of this view target.

If the source texture (which alternates between main textures **a** and **b**) is accessed in a shader during those iterations, those textures have to be bound using bind groups.

Preparing bind groups for both main textures ahead of time is desired, which means having access to the _other_ main texture is needed.

## Solution

Add a method on `ViewTarget` for accessing the other main texture.
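
To make the use case concrete, here is a toy model of a ping-pong target in plain Rust. It is a sketch under stated assumptions, not the `ViewTarget` implementation: `PingPongTarget` is a hypothetical type, the textures are stand-in strings, and the assumption is that each `post_process_write()` reads from the current main texture, writes to the other one, and then flips which one is "main".

```rust
use std::sync::atomic::{AtomicUsize, Ordering};

/// Hypothetical stand-in for a view target with two main textures.
struct PingPongTarget {
    textures: [&'static str; 2],
    /// Counts flips; its parity selects the current main texture.
    main_texture: AtomicUsize,
}

impl PingPongTarget {
    fn new() -> Self {
        Self {
            textures: ["a", "b"],
            main_texture: AtomicUsize::new(0),
        }
    }

    /// The texture that currently holds the latest image.
    fn main_texture(&self) -> &'static str {
        self.textures[self.main_texture.load(Ordering::SeqCst) % 2]
    }

    /// The texture that the next post-process write would target.
    fn main_texture_other(&self) -> &'static str {
        self.textures[(self.main_texture.load(Ordering::SeqCst) + 1) % 2]
    }

    /// Returns `(source, destination)` and flips the main texture.
    fn post_process_write(&self) -> (&'static str, &'static str) {
        let old = self.main_texture.fetch_add(1, Ordering::SeqCst);
        (self.textures[old % 2], self.textures[(old + 1) % 2])
    }
}
```

With both accessors available, bind groups for **a** and **b** can be prepared once, before a node starts alternating between them.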

---

## Changelog

### Added

- `main_texture_other` API on `ViewTarget`

# Objective

I found several misspelled words in the code and docs. These should be fixed.

## Solution

- Fix several minor typos

Co-authored-by: Chris Ohk <utilforever@gmail.com>

# Objective

Fixes #6952

## Solution

- Request WGPU capabilities `SAMPLED_TEXTURE_AND_STORAGE_BUFFER_ARRAY_NON_UNIFORM_INDEXING`, `SAMPLER_NON_UNIFORM_INDEXING` and `UNIFORM_BUFFER_AND_STORAGE_TEXTURE_ARRAY_NON_UNIFORM_INDEXING` when corresponding features are enabled.

- Add an example (`shaders/texture_binding_array`) illustrating (and testing) the use of non-uniform indexed textures and samplers.

## Changelog

- Added new capabilities for shader validation.

- Added example `shaders/texture_binding_array`.

Co-authored-by: Robert Swain <robert.swain@gmail.com>

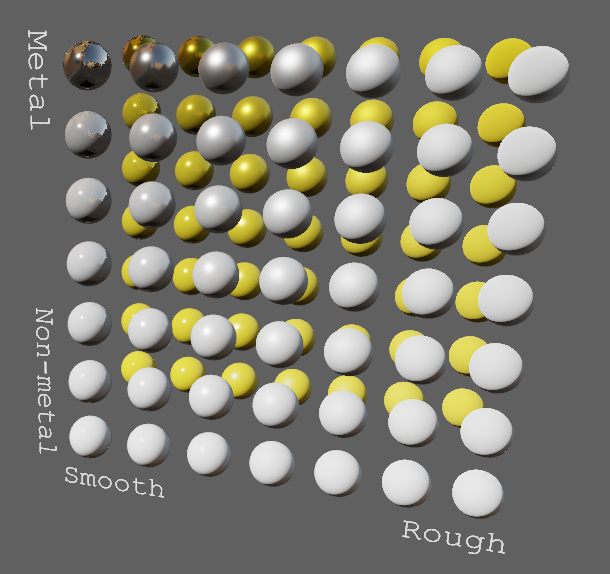

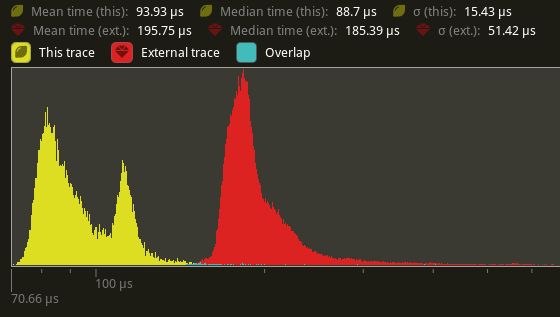

# Objective

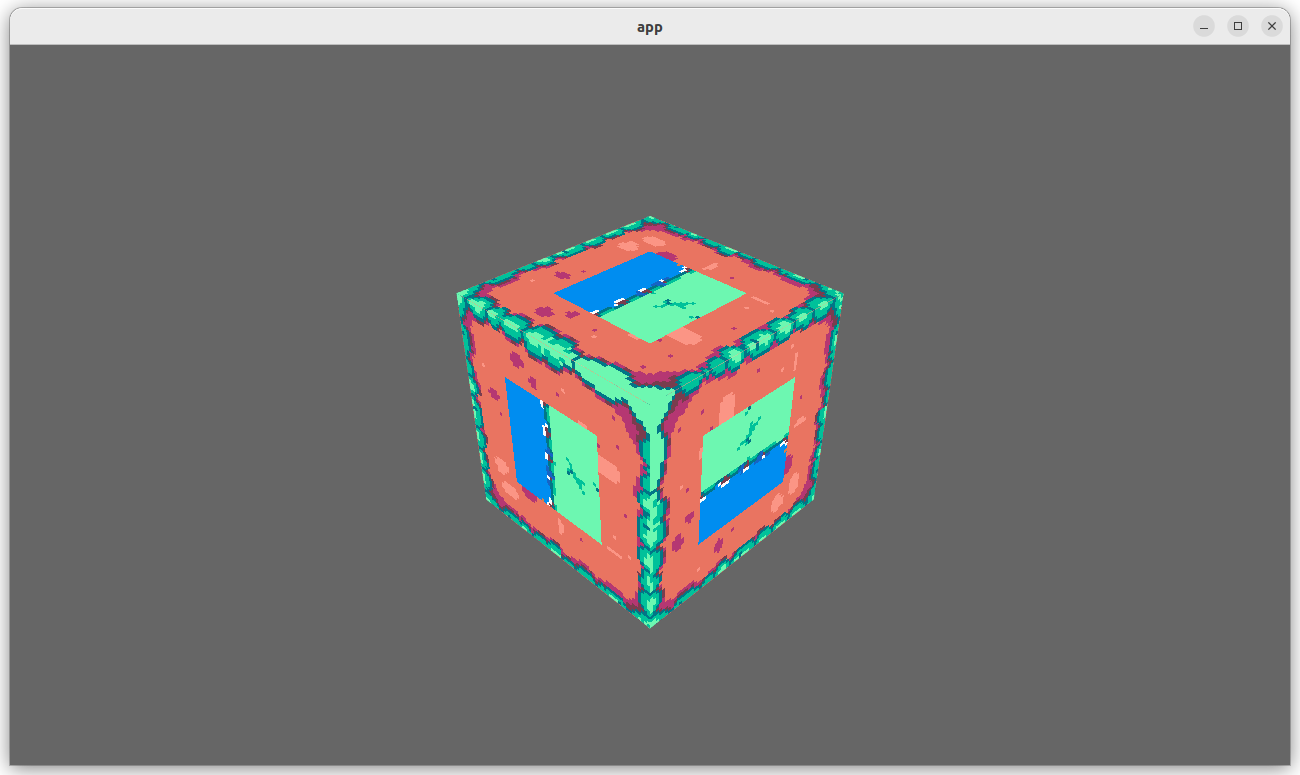

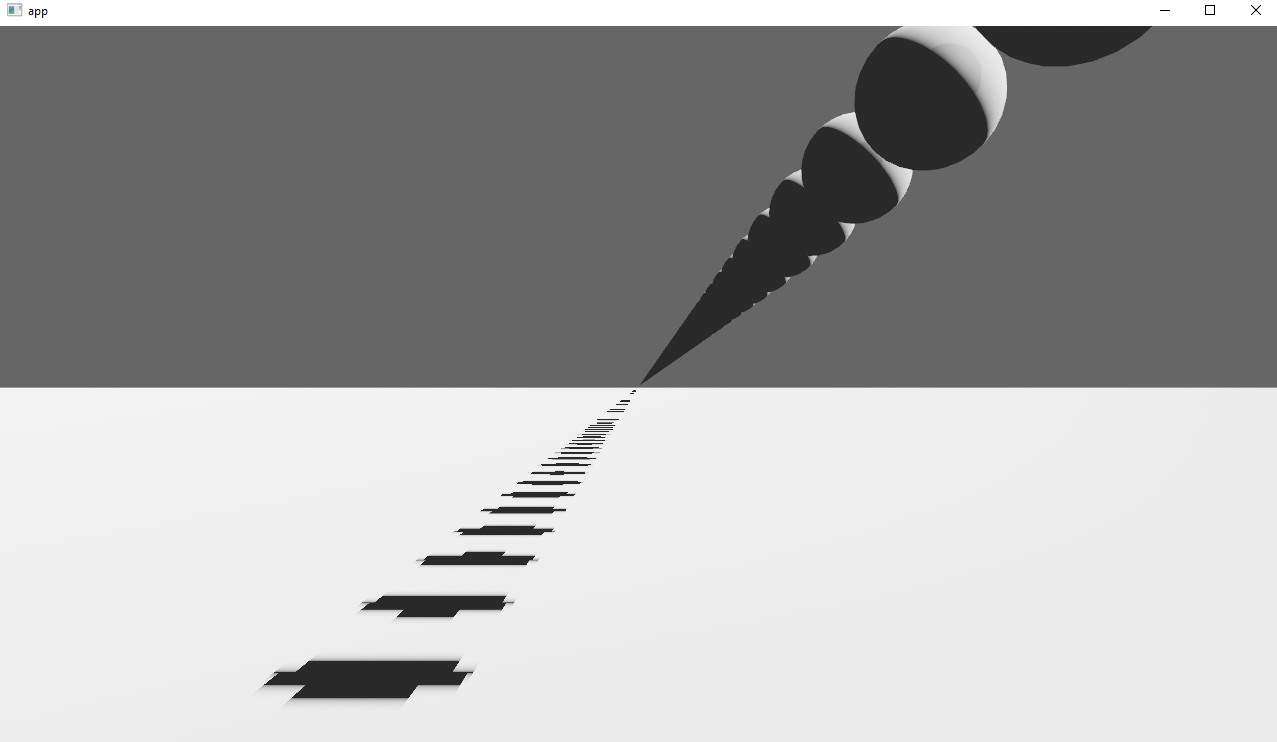

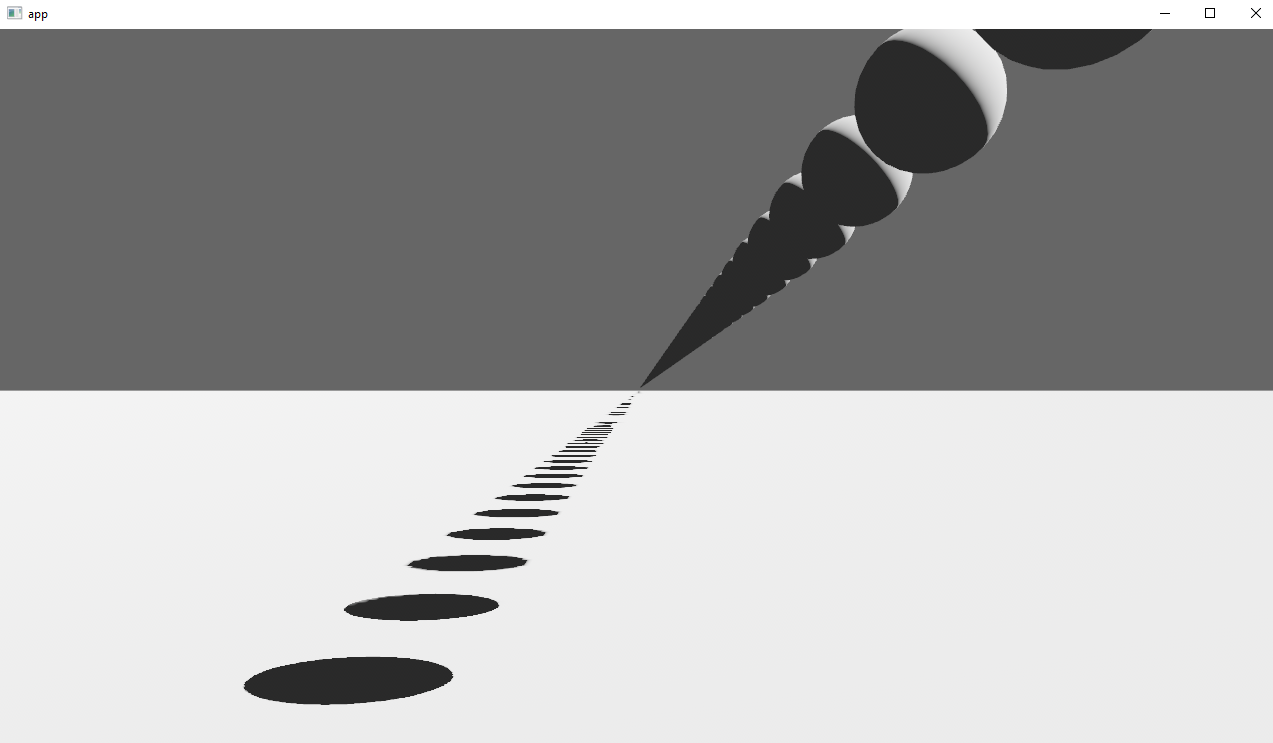

Implements cascaded shadow maps for directional lights, which produces better quality shadows without needing excessively large shadow maps.

Fixes #3629

Before

After

## Solution

Rather than rendering a single shadow map for directional light, the view frustum is divided into a series of cascades, each of which gets its own shadow map. The correct cascade is then sampled for shadow determination.

---

## Changelog

Directional lights now use cascaded shadow maps for improved shadow quality.

## Migration Guide

You no longer have to manually specify a `shadow_projection` for a directional light, and these settings should be removed. If customization of how cascaded shadow maps work is desired, modify the `CascadeShadowConfig` component instead.

# Objective

Fixes #7286. Both `App::add_sub_app` and `App::insert_sub_app` are rather redundant. Before 0.10 is shipped, one of them should be removed.

## Solution

Remove `App::add_sub_app` to prefer `App::insert_sub_app`.

Also hid away `SubApp::extract` since that can be a footgun if someone mutates it for whatever reason. Willing to revert this change if there are objections.

Perhaps we should make `SubApp: Deref<Target=App>`? Might change if we decide to move `!Send` resources into it.

---

## Changelog

Added: `SubApp::new`

Removed: `App::add_sub_app`

## Migration Guide

`App::add_sub_app` has been removed in favor of `App::insert_sub_app`. Use `SubApp::new` and insert it via `App::insert_sub_app`.

Old:

```rust
let mut sub_app = App::new();
// Build subapp here
app.add_sub_app(MySubAppLabel, sub_app);
```

New:

```rust
let mut sub_app = App::new();
// Build subapp here
app.insert_sub_app(MySubAppLabel, SubApp::new(sub_app, extract_fn));
```

# Objective

`RenderContext`, the core abstraction for running the render graph, currently only supports recording one `CommandBuffer` across the entire render graph. This means the entire buffer must be recorded sequentially, usually via the render graph itself. This prevents parallelization and forces users to only encode their commands in the render graph.

## Solution

Allow `RenderContext` to store a `Vec<CommandBuffer>` that it progressively appends to. By default, the context will not have a command encoder, but will create one as soon as either `begin_tracked_render_pass` or the `command_encoder` accessor is first called. `RenderContext::add_command_buffer` allows users to interrupt the current command encoder, flush it to the vec, append a user-provided `CommandBuffer` and reset the command encoder to start a new buffer. Users or the render graph will call `RenderContext::finish` to retrieve the series of buffers for submitting to the queue.

This allows users to encode their own `CommandBuffer`s outside of the render graph, potentially in different threads, and store them in components or resources.
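
The flush-and-append behavior can be modeled without any GPU types. The sketch below uses mock `CommandBuffer` and `CommandEncoder` types that only record strings; they are stand-ins invented for illustration and share nothing with wgpu beyond their names. The helper `flush_encoder` is also hypothetical.

```rust
/// Mock command buffer: just the list of recorded command names.
#[derive(Debug, PartialEq)]
struct CommandBuffer(Vec<String>);

/// Mock encoder that accumulates commands until it is finished.
#[derive(Default)]
struct CommandEncoder {
    commands: Vec<String>,
}

impl CommandEncoder {
    fn finish(self) -> CommandBuffer {
        CommandBuffer(self.commands)
    }
}

/// Toy model of a context that lazily creates an encoder and
/// collects finished buffers in submission order.
#[derive(Default)]
struct RenderContext {
    command_encoder: Option<CommandEncoder>,
    command_buffers: Vec<CommandBuffer>,
}

impl RenderContext {
    /// Creates the encoder on first access.
    fn command_encoder(&mut self) -> &mut CommandEncoder {
        self.command_encoder
            .get_or_insert_with(CommandEncoder::default)
    }

    /// Finishes the current encoder, if any, and stores its buffer.
    fn flush_encoder(&mut self) {
        if let Some(encoder) = self.command_encoder.take() {
            self.command_buffers.push(encoder.finish());
        }
    }

    /// Flushes pending work first so the external buffer keeps its place in the order.
    fn add_command_buffer(&mut self, buffer: CommandBuffer) {
        self.flush_encoder();
        self.command_buffers.push(buffer);
    }

    /// Returns every buffer, in order, ready for submission.
    fn finish(mut self) -> Vec<CommandBuffer> {
        self.flush_encoder();
        self.command_buffers
    }
}
```

Recording "pass A", adding an external buffer, then recording "pass B" yields three buffers in that order, so externally encoded work is submitted exactly where it was inserted.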

Ideally, in the future, the core pipeline passes can run in `RenderStage::Render` systems and end up saving the completed command buffers to either `Commands` or a field in `RenderPhase`.

## Alternatives

The alternative is to use wgpu's `RenderBundle`s, which can achieve similar results; however, they are not universally available (no OpenGL, WebGL, and DX11).

---

## Changelog

Added: `RenderContext::new`

Added: `RenderContext::add_command_buffer`

Added: `RenderContext::finish`

Changed: `RenderContext::render_device` is now private. Use the accessor `RenderContext::render_device()` instead.

Changed: `RenderContext::command_encoder` is now private. Use the accessor `RenderContext::command_encoder()` instead.

Changed: `RenderContext` now supports adding external `CommandBuffer`s for inclusion into the render graphs. These buffers can be encoded outside of the render graph (i.e. in a system).

## Migration Guide

`RenderContext`'s fields are now private. Use the accessors on `RenderContext` instead, and construct it with `RenderContext::new`.

# Objective

Fixes #6931

Continues #6954 by squashing `Msaa` to a flat enum

Helps out #7215

## Solution

```rust
pub enum Msaa {
    Off = 1,
    #[default]
    Sample4 = 4,
}
```

## Changelog

- Modified
  - `Msaa` is now an enum
  - Defaults to 4 samples
  - Uses the `.samples()` method to get the sample count as a `u32`
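
One plausible way to provide `.samples()` on a flat enum is to read the discriminant directly. The following is a standalone sketch with only the two variants shown in this PR; the derives are assumptions added so that the example compiles on its own.

```rust
/// Standalone copy of the flat enum, with derives assumed for this sketch.
#[derive(Clone, Copy, Debug, Default, PartialEq, Eq)]
pub enum Msaa {
    Off = 1,
    #[default]
    Sample4 = 4,
}

impl Msaa {
    /// Returns the sample count, which is stored as the enum discriminant.
    pub fn samples(&self) -> u32 {
        *self as u32
    }
}
```

Encoding the count in the discriminant means invalid sample counts cannot be constructed at all, which a struct with a free `samples: u32` field could not guarantee.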

## Migration Guide

```rust
let multi = Msaa { samples: 4 };
// is now
let multi = Msaa::Sample4;

multi.samples
// is now
multi.samples()
```

Co-authored-by: Sjael <jakeobrien44@gmail.com>

After #6503, bevy_render uses the `send_blocking` method introduced in async-channel 1.7, but depended only on ^1.4.

I saw this after pulling main without running cargo update.

# Objective

- Fix minimum dependency version of async-channel

## Solution

- Bump async-channel version constraint to ^1.8, which is currently the latest version.

NOTE: Both bevy_ecs and bevy_tasks also depend on async-channel but they didn't use any newer features.

# Objective

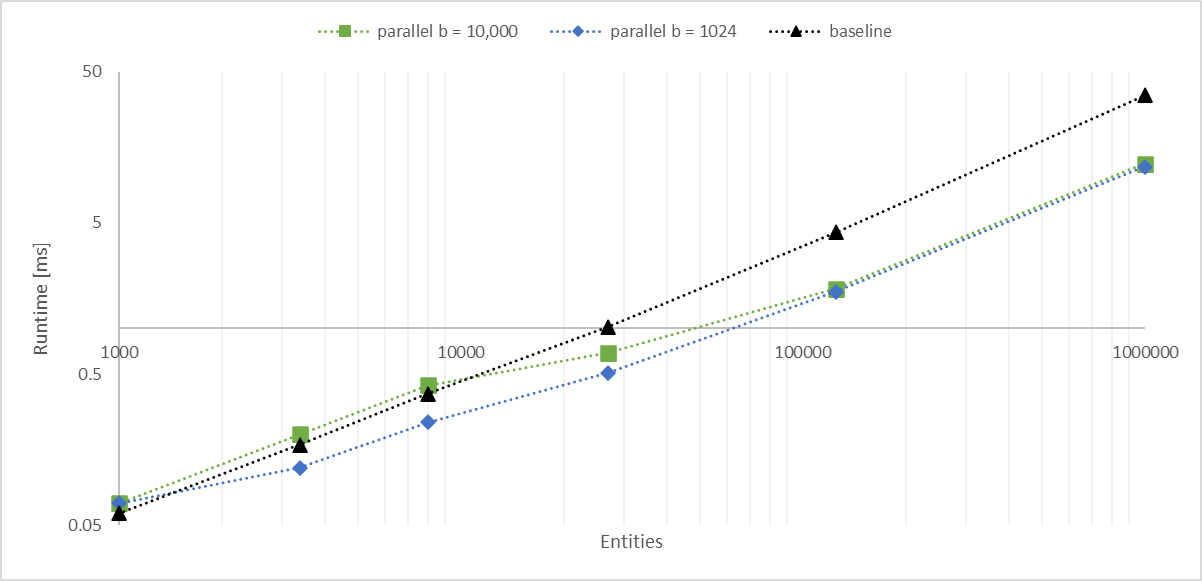

Fixes #3184. Fixes #6640. Fixes #4798. Using `Query::par_for_each(_mut)` currently requires a `batch_size` parameter, which affects how it chunks up large archetypes and tables into smaller chunks to run in parallel. Tuning this value is difficult, as the performance characteristics depend entirely on the state of the `World` it's being run on. Typically, users will just use a flat constant and tune it by hand until it performs well in some benchmarks. However, this is both error-prone and risks overfitting the tuning to that benchmark.

This PR proposes a naive automatic batch-size computation based on the current state of the `World`.

## Background

`Query::par_for_each(_mut)` schedules a new Task for every archetype or table that it matches. Archetypes/tables larger than the batch size are chunked into smaller tasks. Assuming every entity matched by the query has an identical workload, the worst case is a batch size equal to the size of the largest matched archetype or table. Conversely, a batch size of `max {archetype, table} size / thread count * COUNT_PER_THREAD` is likely the sweet spot where the overhead of scheduling tasks is minimized, at least without grouping small archetypes/tables together.

There is also likely a strict minimum batch size below which the overhead of scheduling these tasks is heavier than running the entire thing single-threaded.

## Solution

- [x] Remove the `batch_size` from `Query(State)::par_for_each` and friends.

- [x] Add a check to compute `batch_size = max {archetype/table} size / thread count * COUNT_PER_THREAD`

- [x] ~~Panic if thread count is 0.~~ Defer to `for_each` if the thread count is 1 or less.

- [x] Early return if there is no matched table/archetype.

- [x] Add an override option for users who have queries that strongly violate the initial assumption that all iterated entities have an equal workload.
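
The checklist above can be sketched as a small planning function. This is an illustration, not the code from the PR: `IterationPlan` and `plan_iteration` are hypothetical names, and the formula is read here as dividing the largest matched size by `thread count * batches per thread`, which is an assumption about the intended grouping.

```rust
/// Hypothetical outcome of planning a parallel iteration.
#[derive(Debug, PartialEq, Eq)]
enum IterationPlan {
    /// Nothing matched, so there is nothing to schedule.
    Skip,
    /// One thread or fewer: fall back to the sequential `for_each` path.
    Sequential,
    /// Split each archetype/table into chunks of `batch_size` entities.
    Parallel { batch_size: usize },
}

/// `matched_sizes` holds the entity count of every matched archetype or table.
/// `batches_per_thread` plays the role of `COUNT_PER_THREAD` (assumed meaning).
fn plan_iteration(
    matched_sizes: &[usize],
    thread_count: usize,
    batches_per_thread: usize,
) -> IterationPlan {
    let max_size = matched_sizes.iter().copied().max().unwrap_or(0);
    if max_size == 0 {
        return IterationPlan::Skip;
    }
    if thread_count <= 1 {
        return IterationPlan::Sequential;
    }
    let batches = thread_count * batches_per_thread.max(1);
    // Ceiling division, so the batch size is never zero.
    let batch_size = (max_size + batches - 1) / batches;
    IterationPlan::Parallel { batch_size }
}
```

For example, with archetypes of 100 and 1000 entities, 4 threads, and 2 batches per thread, the largest archetype is split into batches of 125.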

---

## Changelog

Changed: `Query::par_for_each(_mut)` has been changed to `Query::par_iter(_mut)` and will now automatically try to produce a batch size for callers based on the current `World` state.

## Migration Guide

The `batch_size` parameter for `Query(State)::par_for_each(_mut)` has been removed. These calls will automatically compute a batch size for you. Remove these parameters from all calls to these functions.

Before:

```rust
fn parallel_system(query: Query<&MyComponent>) {
    query.par_for_each(32, |comp| {
        // ...
    });
}
```

After:

```rust
fn parallel_system(query: Query<&MyComponent>) {
    query.par_iter().for_each(|comp| {
        // ...
    });
}
```

Co-authored-by: Arnav Choubey <56453634+x-52@users.noreply.github.com>

Co-authored-by: Robert Swain <robert.swain@gmail.com>

Co-authored-by: François <mockersf@gmail.com>

Co-authored-by: Corey Farwell <coreyf@rwell.org>

Co-authored-by: Aevyrie <aevyrie@gmail.com>

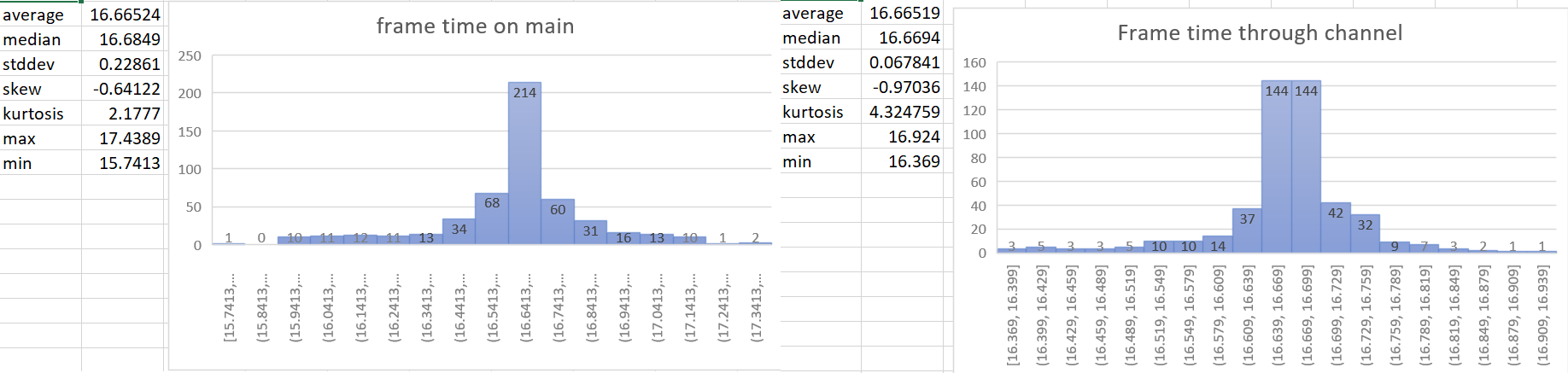

# Objective

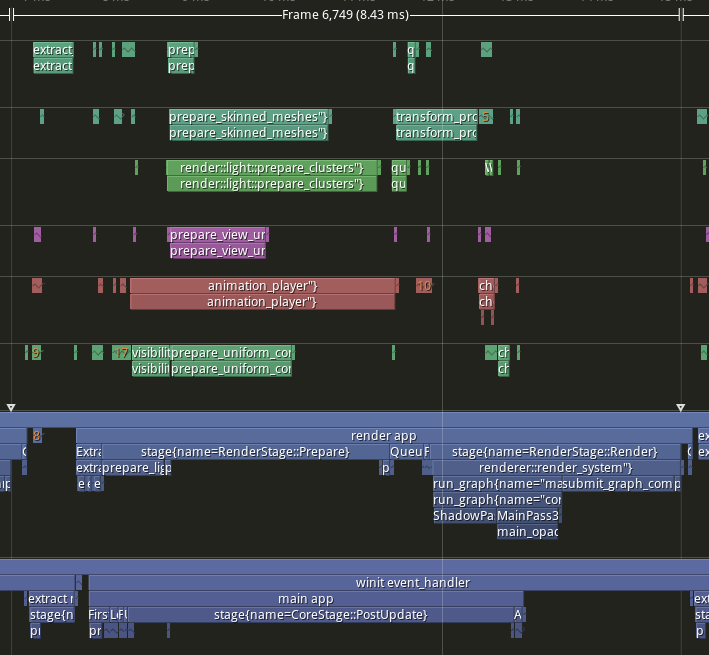

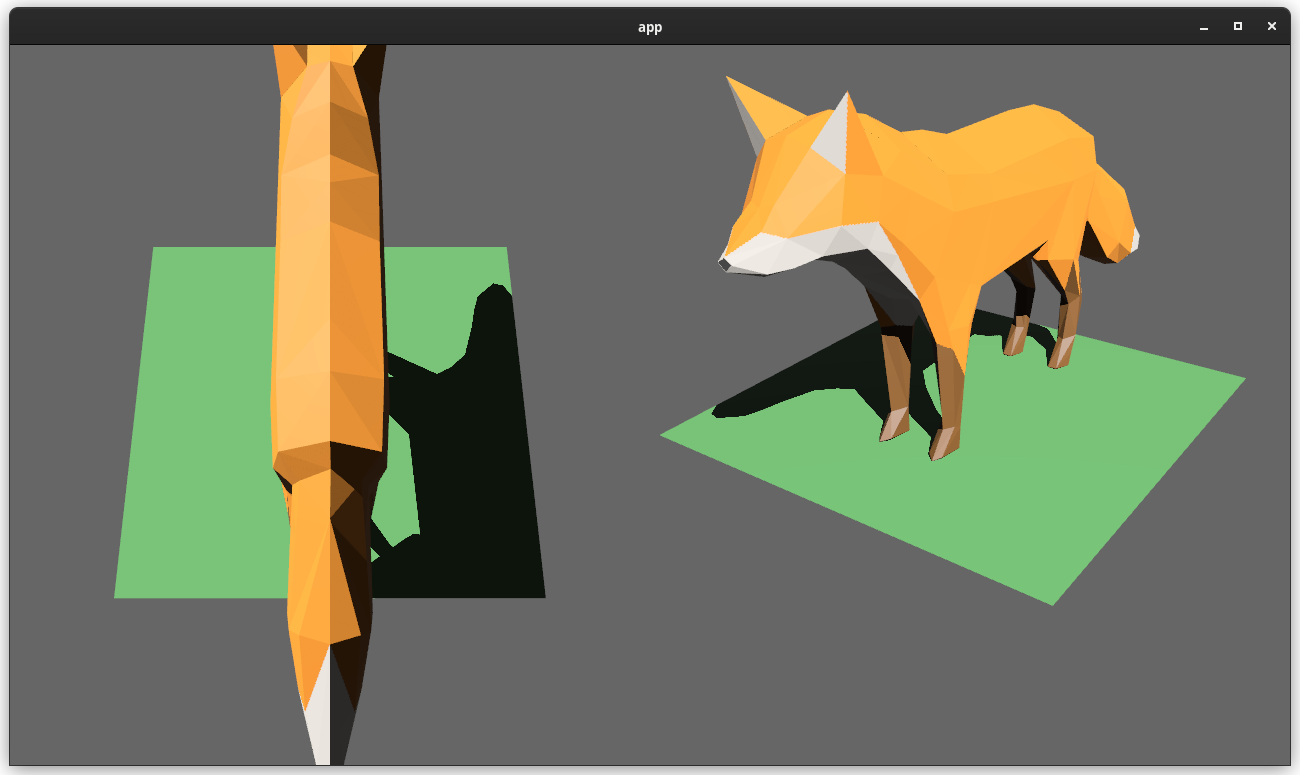

- Implement pipelined rendering

- Fixes #5082

- Fixes #4718

## User Facing Description

Bevy now implements pipelined rendering! Pipelined rendering allows the app logic and rendering logic to run on different threads, leading to large gains in performance.

*tracy capture of many_foxes example*

To use pipelined rendering, you just need to add the `PipelinedRenderingPlugin`. If you're using `DefaultPlugins` then it will automatically be added for you on all platforms except wasm. Bevy does not currently support multithreading on wasm which is needed for this feature to work. If you aren't using `DefaultPlugins` you can add the plugin manually.

```rust
use bevy::prelude::*;
use bevy::render::pipelined_rendering::PipelinedRenderingPlugin;

fn main() {
    App::new()
        // whatever other plugins you need
        .add_plugin(RenderPlugin)
        // needs to be added after RenderPlugin
        .add_plugin(PipelinedRenderingPlugin)
        .run();
}
```

If for some reason pipelined rendering needs to be removed, you can disable the plugin the normal way.

```rust
use bevy::prelude::*;
use bevy::render::pipelined_rendering::PipelinedRenderingPlugin;

fn main() {
    App::new()
        .add_plugins(DefaultPlugins.build().disable::<PipelinedRenderingPlugin>())
        .run();
}
```

### A setup function was added to plugins

An optional plugin lifecycle function was added to the `Plugin` trait. This function is called after all plugins have been built, but before the app runner is called. This allows for some final setup to be done. In the case of pipelined rendering, the function removes the sub app from the main app and sends it to the render thread.

```rust
struct MyPlugin;

impl Plugin for MyPlugin {
    fn build(&self, app: &mut App) {}

    // optional function
    fn setup(&self, app: &mut App) {
        // do some final setup before runner is called
    }
}
```

### A Stage for Frame Pacing

In the `RenderExtractApp` there is a stage labelled `BeforeIoAfterRenderStart` that systems can be added to. The specific use case for this stage is for a frame pacing system that can delay the start of main app processing in render bound apps to reduce input latency i.e. "frame pacing". This is not currently built into bevy, but exists as `bevy`

```text

|-------------------------------------------------------------------|

| | BeforeIoAfterRenderStart | winit events | main schedule |

| extract |---------------------------------------------------------|

| | extract commands | rendering schedule |

|-------------------------------------------------------------------|

```

### Small API additions

* `Schedule::remove_stage`

* `App::insert_sub_app`

* `App::remove_sub_app`

* `TaskPool::scope_with_executor`

## Problems and Solutions

### Moving render app to another thread

Most of the hard bits for this were done with the render redo. This PR just sends the render app back and forth through channels which seems to work ok. I originally experimented with using a scope to run the render task. It was cuter, but that approach didn't allow render to start before i/o processing. So I switched to using channels. There is much complexity in the coordination that needs to be done, but it's worth it. By moving rendering during i/o processing the frame times should be much more consistent in render bound apps. See https://github.com/bevyengine/bevy/issues/4691.
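
The channel hand-off can be illustrated with the standard library alone. This is a simplified sketch, not the plugin's code: `RenderApp` here is a hypothetical struct with two counters, `run_frames` is an invented driver, and the real coordination with extraction and i/o processing is reduced to a single send and receive per frame.

```rust
use std::sync::mpsc;
use std::thread;

/// Hypothetical stand-in for the render sub app.
struct RenderApp {
    frames_rendered: u32,
    last_extracted_frame: u32,
}

/// Sends the render app to a render thread and back once per frame.
fn run_frames(frames: u32) -> RenderApp {
    let (to_render_tx, to_render_rx) = mpsc::channel::<RenderApp>();
    let (to_main_tx, to_main_rx) = mpsc::channel::<RenderApp>();

    let render_thread = thread::spawn(move || {
        // The loop ends when the main thread drops its sender.
        for mut app in to_render_rx {
            app.frames_rendered += 1; // stand-in for running the render schedule
            if to_main_tx.send(app).is_err() {
                break;
            }
        }
    });

    let mut render_app = RenderApp {
        frames_rendered: 0,
        last_extracted_frame: 0,
    };
    for frame in 1..=frames {
        // "Extract": the main thread owns the render app at this point.
        render_app.last_extracted_frame = frame;
        to_render_tx.send(render_app).expect("render thread is alive");
        // The main app would run its own update here, in parallel with rendering.
        render_app = to_main_rx.recv().expect("render thread is alive");
    }

    drop(to_render_tx);
    render_thread.join().expect("render thread panicked");
    render_app
}
```

Ownership moves with the message, so only one thread can touch the render app at any time and no locking is needed around it.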

### Unsoundness with Sending World with NonSend resources

Dropping !Send things on threads other than the thread they were spawned on is considered unsound. The render world doesn't have any nonsend resources. So if we tell the users to "pretty please don't spawn nonsend resource on the render world", we can avoid this problem.

More seriously there is this https://github.com/bevyengine/bevy/pull/6534 pr, which patches the unsoundness by aborting the app if a nonsend resource is dropped on the wrong thread. ~~That PR should probably be merged before this one.~~ For a longer term solution we have this discussion going https://github.com/bevyengine/bevy/discussions/6552.

### NonSend Systems in render world

The render world doesn't have any !Send resources, but it does have a non send system. While Window is Send, winit does have some APIs that can only be accessed on the main thread. `prepare_windows` in the render schedule thus needs to be scheduled on the main thread. Currently we run nonsend systems by running them on the thread the TaskPool::scope runs on. When we move render to another thread this no longer works.

To fix this, a new `scope_with_executor` method was added that takes an optional `ThreadExecutor` that can only be ticked on the thread it was initialized on. The render world then holds a `MainThreadExecutor` resource which can be passed to the scope in the parallel executor, which uses it to spawn its non-send systems.
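
The key property, that any thread may queue work but only the creating thread may run it, can be sketched with a thread ID check. `ThreadBoundExecutor`, `spawn`, and `try_tick` are hypothetical names for this illustration; the sketch runs plain closures rather than futures and is not the `bevy_tasks` implementation.

```rust
use std::collections::VecDeque;
use std::sync::Mutex;
use std::thread::{self, ThreadId};

type Task = Box<dyn FnOnce() + Send>;

/// Toy executor whose tasks only ever run on the thread that created it.
struct ThreadBoundExecutor {
    owner: ThreadId,
    queue: Mutex<VecDeque<Task>>,
}

impl ThreadBoundExecutor {
    fn new() -> Self {
        Self {
            owner: thread::current().id(),
            queue: Mutex::new(VecDeque::new()),
        }
    }

    /// Any thread may enqueue work.
    fn spawn(&self, task: impl FnOnce() + Send + 'static) {
        self.queue.lock().unwrap().push_back(Box::new(task));
    }

    /// Runs one queued task, but only when called from the owning thread.
    /// Returns `false` on any other thread or when the queue is empty.
    fn try_tick(&self) -> bool {
        if thread::current().id() != self.owner {
            return false;
        }
        // The lock is released at the end of this statement, before the task runs.
        let task = self.queue.lock().unwrap().pop_front();
        match task {
            Some(task) => {
                task();
                true
            }
            None => false,
        }
    }
}
```

A render thread can therefore hand main-thread-only work to such an executor and rely on the main thread to tick it.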

### Scopes executors between render and main should not share tasks

The render world and the app world share the `ComputeTaskPool`. Because `scope` has executors for the ComputeTaskPool, a system from the main world could run on the render thread, or a render system could run on the main thread. This can cause performance problems because it can delay a stage from finishing. See https://github.com/bevyengine/bevy/pull/6503#issuecomment-1309791442 for more details.

To avoid this problem, `TaskPool::scope` has been changed to not tick the ComputeTaskPool when it's used by the parallel executor. In the future when we move closer to the 1 thread to 1 logical core model we may want to overprovide threads, because the render and main app threads don't do much when executing the schedule.

## Performance

My machine is Windows 11, AMD Ryzen 5600x, RX 6600

### Examples

#### This PR with pipelining vs Main

> Note that these were run on an older version of main and the performance profile has probably changed due to optimizations

Seeing a perf gain from 29% on many lights to 7% on many sprites.

| tracy frame time | percent mean | percent median | percent sigma | Diff mean | Diff median | Diff sigma | Main mean | Main median | Main sigma | PR mean | PR median | PR sigma |
| -- | -- | -- | -- | -- | -- | -- | -- | -- | -- | -- | -- | -- |
| many foxes | 27.01% | 27.34% | -47.09% | 1.58 | 1.55 | -1.78 | 5.85 | 5.67 | 3.78 | 4.27 | 4.12 | 5.56 |
| many lights | 29.35% | 29.94% | -10.84% | 3.02 | 3.03 | -0.57 | 10.29 | 10.12 | 5.26 | 7.27 | 7.09 | 5.83 |
| many animated sprites | 13.97% | 15.69% | 14.20% | 3.79 | 4.17 | 1.41 | 27.12 | 26.57 | 9.93 | 23.33 | 22.4 | 8.52 |
| 3d scene | 25.79% | 26.78% | 7.46% | 0.49 | 0.49 | 0.15 | 1.9 | 1.83 | 2.01 | 1.41 | 1.34 | 1.86 |
| many cubes | 11.97% | 11.28% | 14.51% | 1.93 | 1.78 | 1.31 | 16.13 | 15.78 | 9.03 | 14.2 | 14 | 7.72 |
| many sprites | 7.14% | 9.42% | -85.42% | 1.72 | 2.23 | -6.15 | 24.09 | 23.68 | 7.2 | 22.37 | 21.45 | 13.35 |

#### This PR with pipelining disabled vs Main

Mostly regressions here. I don't think this should be a problem, as users that are disabling pipelined rendering are probably running single threaded and not using the parallel executor. The regression is probably mostly due to the switch to use `async_executor::run` instead of `try_tick` and also having one less thread to run systems on. I'll do a writeup on why switching to `run` causes regressions, so we can try to eventually fix it. Using `try_tick` causes issues when pipelined rendering is enabled, as seen [here](https://github.com/bevyengine/bevy/pull/6503#issuecomment-1380803518).

| tracy frame time | percent mean | percent median | percent sigma | Diff mean | Diff median | Diff sigma | Main mean | Main median | Main sigma | PR no pipelining mean | PR no pipelining median | PR no pipelining sigma |
| -- | -- | -- | -- | -- | -- | -- | -- | -- | -- | -- | -- | -- |
| many foxes | -3.72% | -4.42% | -1.07% | -0.21 | -0.24 | -0.04 | 5.64 | 5.43 | 3.74 | 5.85 | 5.67 | 3.78 |
| many lights | 0.29% | -0.30% | 4.75% | 0.03 | -0.03 | 0.25 | 10.29 | 10.12 | 5.26 | 10.26 | 10.15 | 5.01 |
| many animated sprites | 0.22% | 1.81% | -2.72% | 0.06 | 0.48 | -0.27 | 27.12 | 26.57 | 9.93 | 27.06 | 26.09 | 10.2 |
| 3d scene | -15.79% | -14.75% | -31.34% | -0.3 | -0.27 | -0.63 | 1.9 | 1.83 | 2.01 | 2.2 | 2.1 | 2.64 |
| many cubes | -2.85% | -3.30% | 0.00% | -0.46 | -0.52 | 0 | 16.13 | 15.78 | 9.03 | 16.59 | 16.3 | 9.03 |
| many sprites | 2.49% | 2.41% | 0.69% | 0.6 | 0.57 | 0.05 | 24.09 | 23.68 | 7.2 | 23.49 | 23.11 | 7.15 |

### Benchmarks

Mostly the same except empty_systems has got a touch slower. The maybe_pipelining+1 column has the compute task pool with an extra thread over default added. This is because pipelining loses one thread over main to execute systems on, since the main thread no longer runs normal systems.

<details>

<summary>Click Me</summary>

```text

group main maybe-pipelining+1

----- ------------------------- ------------------

busy_systems/01x_entities_03_systems 1.07 30.7±1.32µs ? ?/sec 1.00 28.6±1.35µs ? ?/sec

busy_systems/01x_entities_06_systems 1.10 52.1±1.10µs ? ?/sec 1.00 47.2±1.08µs ? ?/sec

busy_systems/01x_entities_09_systems 1.00 74.6±1.36µs ? ?/sec 1.00 75.0±1.93µs ? ?/sec

busy_systems/01x_entities_12_systems 1.03 100.6±6.68µs ? ?/sec 1.00 98.0±1.46µs ? ?/sec

busy_systems/01x_entities_15_systems 1.11 128.5±3.53µs ? ?/sec 1.00 115.5±1.02µs ? ?/sec

busy_systems/02x_entities_03_systems 1.16 50.4±2.56µs ? ?/sec 1.00 43.5±3.00µs ? ?/sec

busy_systems/02x_entities_06_systems 1.00 87.1±1.27µs ? ?/sec 1.05 91.5±7.15µs ? ?/sec

busy_systems/02x_entities_09_systems 1.04 139.9±6.37µs ? ?/sec 1.00 134.0±1.06µs ? ?/sec

busy_systems/02x_entities_12_systems 1.05 179.2±3.47µs ? ?/sec 1.00 170.1±3.17µs ? ?/sec

busy_systems/02x_entities_15_systems 1.01 219.6±3.75µs ? ?/sec 1.00 218.1±2.55µs ? ?/sec

busy_systems/03x_entities_03_systems 1.10 70.6±2.33µs ? ?/sec 1.00 64.3±0.69µs ? ?/sec

busy_systems/03x_entities_06_systems 1.02 130.2±3.11µs ? ?/sec 1.00 128.0±1.34µs ? ?/sec

busy_systems/03x_entities_09_systems 1.00 195.0±10.11µs ? ?/sec 1.00 194.8±1.41µs ? ?/sec

busy_systems/03x_entities_12_systems 1.01 261.7±4.05µs ? ?/sec 1.00 259.8±4.11µs ? ?/sec

busy_systems/03x_entities_15_systems 1.00 318.0±3.04µs ? ?/sec 1.06 338.3±20.25µs ? ?/sec

busy_systems/04x_entities_03_systems 1.00 82.9±0.63µs ? ?/sec 1.02 84.3±0.63µs ? ?/sec

busy_systems/04x_entities_06_systems 1.01 181.7±3.65µs ? ?/sec 1.00 179.8±1.76µs ? ?/sec

busy_systems/04x_entities_09_systems 1.04 265.0±4.68µs ? ?/sec 1.00 255.3±1.98µs ? ?/sec

busy_systems/04x_entities_12_systems 1.00 335.9±3.00µs ? ?/sec 1.05 352.6±15.84µs ? ?/sec

busy_systems/04x_entities_15_systems 1.00 418.6±10.26µs ? ?/sec 1.08 450.2±39.58µs ? ?/sec

busy_systems/05x_entities_03_systems 1.07 114.3±0.95µs ? ?/sec 1.00 106.9±1.52µs ? ?/sec

busy_systems/05x_entities_06_systems 1.08 229.8±2.90µs ? ?/sec 1.00 212.3±4.18µs ? ?/sec

busy_systems/05x_entities_09_systems 1.03 329.3±1.99µs ? ?/sec 1.00 319.2±2.43µs ? ?/sec

busy_systems/05x_entities_12_systems 1.06 454.7±6.77µs ? ?/sec 1.00 430.1±3.58µs ? ?/sec

busy_systems/05x_entities_15_systems 1.03 554.6±6.15µs ? ?/sec 1.00 538.4±23.87µs ? ?/sec

contrived/01x_entities_03_systems 1.00 14.0±0.15µs ? ?/sec 1.08 15.1±0.21µs ? ?/sec

contrived/01x_entities_06_systems 1.04 28.5±0.37µs ? ?/sec 1.00 27.4±0.44µs ? ?/sec

contrived/01x_entities_09_systems 1.00 41.5±4.38µs ? ?/sec 1.02 42.2±2.24µs ? ?/sec

contrived/01x_entities_12_systems 1.06 55.9±1.49µs ? ?/sec 1.00 52.6±1.36µs ? ?/sec

contrived/01x_entities_15_systems 1.02 68.0±2.00µs ? ?/sec 1.00 66.5±0.78µs ? ?/sec

contrived/02x_entities_03_systems 1.03 25.2±0.38µs ? ?/sec 1.00 24.6±0.52µs ? ?/sec

contrived/02x_entities_06_systems 1.00 46.3±0.49µs ? ?/sec 1.04 48.1±4.13µs ? ?/sec

contrived/02x_entities_09_systems 1.02 70.4±0.99µs ? ?/sec 1.00 68.8±1.04µs ? ?/sec

contrived/02x_entities_12_systems 1.06 96.8±1.49µs ? ?/sec 1.00 91.5±0.93µs ? ?/sec

contrived/02x_entities_15_systems 1.02 116.2±0.95µs ? ?/sec 1.00 114.2±1.42µs ? ?/sec

contrived/03x_entities_03_systems 1.00 33.2±0.38µs ? ?/sec 1.01 33.6±0.45µs ? ?/sec

contrived/03x_entities_06_systems 1.00 62.4±0.73µs ? ?/sec 1.01 63.3±1.05µs ? ?/sec

contrived/03x_entities_09_systems 1.02 96.4±0.85µs ? ?/sec 1.00 94.8±3.02µs ? ?/sec

contrived/03x_entities_12_systems 1.01 126.3±4.67µs ? ?/sec 1.00 125.6±2.27µs ? ?/sec

contrived/03x_entities_15_systems 1.03 160.2±9.37µs ? ?/sec 1.00 156.0±1.53µs ? ?/sec

contrived/04x_entities_03_systems 1.02 41.4±3.39µs ? ?/sec 1.00 40.5±0.52µs ? ?/sec

contrived/04x_entities_06_systems 1.00 78.9±1.61µs ? ?/sec 1.02 80.3±1.06µs ? ?/sec

contrived/04x_entities_09_systems 1.02 121.8±3.97µs ? ?/sec 1.00 119.2±1.46µs ? ?/sec

contrived/04x_entities_12_systems 1.00 157.8±1.48µs ? ?/sec 1.01 160.1±1.72µs ? ?/sec

contrived/04x_entities_15_systems 1.00 197.9±1.47µs ? ?/sec 1.08 214.2±34.61µs ? ?/sec

contrived/05x_entities_03_systems 1.00 49.1±0.33µs ? ?/sec 1.01 49.7±0.75µs ? ?/sec

contrived/05x_entities_06_systems 1.00 95.0±0.93µs ? ?/sec 1.00 94.6±0.94µs ? ?/sec

contrived/05x_entities_09_systems 1.01 143.2±1.68µs ? ?/sec 1.00 142.2±2.00µs ? ?/sec

contrived/05x_entities_12_systems 1.00 191.8±2.03µs ? ?/sec 1.01 192.7±7.88µs ? ?/sec

contrived/05x_entities_15_systems 1.02 239.7±3.71µs ? ?/sec 1.00 235.8±4.11µs ? ?/sec

empty_systems/000_systems 1.01 47.8±0.67ns ? ?/sec 1.00 47.5±2.02ns ? ?/sec

empty_systems/001_systems 1.00 1743.2±126.14ns ? ?/sec 1.01 1761.1±70.10ns ? ?/sec

empty_systems/002_systems 1.01 2.2±0.04µs ? ?/sec 1.00 2.2±0.02µs ? ?/sec

empty_systems/003_systems 1.02 2.7±0.09µs ? ?/sec 1.00 2.7±0.16µs ? ?/sec

empty_systems/004_systems 1.00 3.1±0.11µs ? ?/sec 1.00 3.1±0.24µs ? ?/sec

empty_systems/005_systems 1.00 3.5±0.05µs ? ?/sec 1.11 3.9±0.70µs ? ?/sec

empty_systems/010_systems 1.00 5.5±0.12µs ? ?/sec 1.03 5.7±0.17µs ? ?/sec

empty_systems/015_systems 1.00 7.9±0.19µs ? ?/sec 1.06 8.4±0.16µs ? ?/sec

empty_systems/020_systems 1.00 10.4±1.25µs ? ?/sec 1.02 10.6±0.18µs ? ?/sec

empty_systems/025_systems 1.00 12.4±0.39µs ? ?/sec 1.14 14.1±1.07µs ? ?/sec

empty_systems/030_systems 1.00 15.1±0.39µs ? ?/sec 1.05 15.8±0.62µs ? ?/sec

empty_systems/035_systems 1.00 16.9±0.47µs ? ?/sec 1.07 18.0±0.37µs ? ?/sec

empty_systems/040_systems 1.00 19.3±0.41µs ? ?/sec 1.05 20.3±0.39µs ? ?/sec

empty_systems/045_systems 1.00 22.4±1.67µs ? ?/sec 1.02 22.9±0.51µs ? ?/sec

empty_systems/050_systems 1.00 24.4±1.67µs ? ?/sec 1.01 24.7±0.40µs ? ?/sec

empty_systems/055_systems 1.05 28.6±5.27µs ? ?/sec 1.00 27.2±0.70µs ? ?/sec

empty_systems/060_systems 1.02 29.9±1.64µs ? ?/sec 1.00 29.3±0.66µs ? ?/sec

empty_systems/065_systems 1.02 32.7±3.15µs ? ?/sec 1.00 32.1±0.98µs ? ?/sec

empty_systems/070_systems 1.00 33.0±1.42µs ? ?/sec 1.03 34.1±1.44µs ? ?/sec

empty_systems/075_systems 1.00 34.8±0.89µs ? ?/sec 1.04 36.2±0.70µs ? ?/sec

empty_systems/080_systems 1.00 37.0±1.82µs ? ?/sec 1.05 38.7±1.37µs ? ?/sec

empty_systems/085_systems 1.00 38.7±0.76µs ? ?/sec 1.05 40.8±0.83µs ? ?/sec

empty_systems/090_systems 1.00 41.5±1.09µs ? ?/sec 1.04 43.2±0.82µs ? ?/sec

empty_systems/095_systems 1.00 43.6±1.10µs ? ?/sec 1.04 45.2±0.99µs ? ?/sec

empty_systems/100_systems 1.00 46.7±2.27µs ? ?/sec 1.03 48.1±1.25µs ? ?/sec

```

</details>

## Migration Guide

### App `runner` and SubApp `extract` functions are now required to be Send

This was changed to enable pipelined rendering. If this breaks your use case, please report it, as these new bounds might be able to be relaxed.
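As a rough illustration of what a `Send` bound means for user code, here is a standalone sketch that does not use Bevy. `run_on_worker` and `counting_runner` are hypothetical names invented for this example; they only stand in for an API that, like the new `runner` and `extract` bounds, accepts nothing but `Send` functions.

```rust
use std::sync::{Arc, Mutex};
use std::thread;

/// Hypothetical stand-in for an API that only accepts `Send` functions.
pub fn run_on_worker<F>(f: F) -> u32
where
    F: FnOnce() -> u32 + Send + 'static,
{
    // Moving `f` to another thread is only allowed because it is `Send`.
    thread::spawn(f).join().expect("worker thread panicked")
}

/// Builds a closure that shares state through `Arc<Mutex<_>>`.
/// Capturing an `Rc<RefCell<_>>` instead would fail to compile,
/// because `Rc` is not `Send`.
pub fn counting_runner(counter: Arc<Mutex<u32>>) -> impl FnOnce() -> u32 + Send + 'static {
    move || {
        let mut guard = counter.lock().unwrap();
        *guard += 1;
        *guard
    }
}
```

If a runner or extract function stops compiling after this change, the usual culprit is a captured non-`Send` value such as an `Rc`; swapping it for a thread-safe equivalent is often enough.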

## ToDo

* [x] redo benchmarking

* [x] reinvestigate the perf of the try_tick -> run change for task pool scope

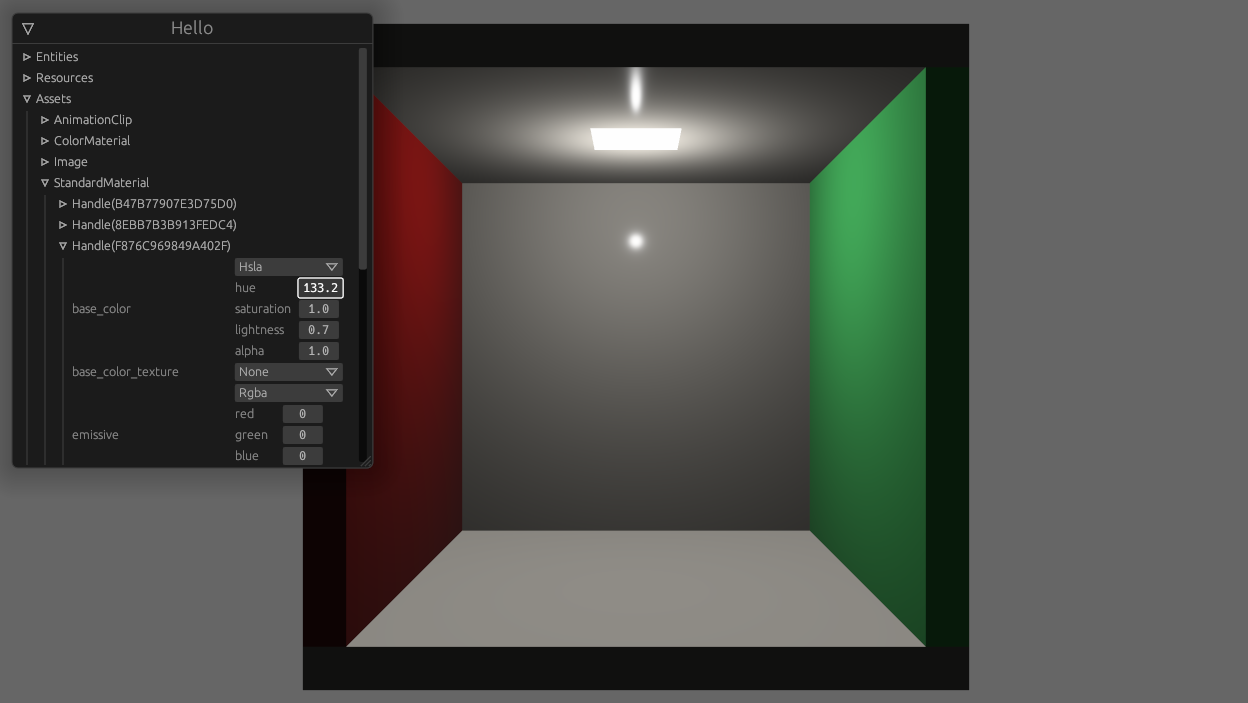

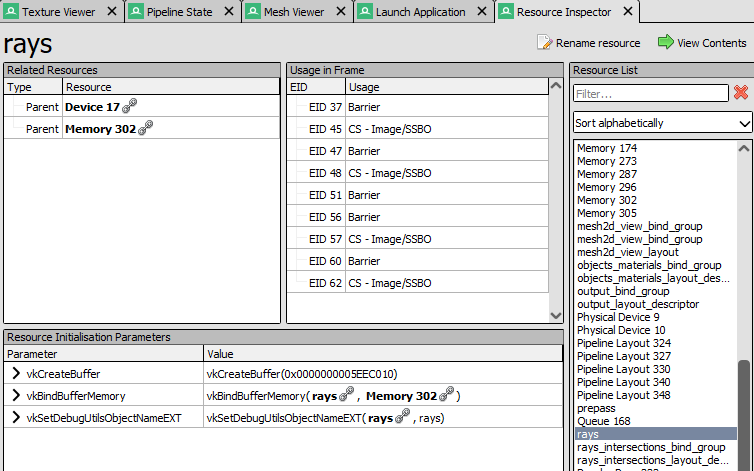

# Objective

- Add a configurable prepass

- A depth prepass is useful for various shader effects and to reduce overdraw. It can be expensive depending on the scene, so it's important to be able to disable it if you don't need any effects that use it or don't suffer from excessive overdraw.

- The goal is to eventually use it for things like TAA, Ambient Occlusion, SSR and various other techniques that can benefit from having a prepass.

## Solution

The prepass node is inserted before the main pass. It runs for each `Camera3d` with a prepass component (`DepthPrepass`, `NormalPrepass`). The presence of one of those components is used to determine which textures are generated in the prepass. When any prepass is enabled, the depth buffer generated will be used by the main pass to reduce overdraw.

The prepass runs for each `Material` created with the `MaterialPlugin::prepass_enabled` option set to `true`. You can override the shader used by the prepass with `Material::prepass_vertex_shader()` and/or `Material::prepass_fragment_shader()`. It will also use `Material::specialize()` for more advanced use cases. It is enabled by default on all materials.

The prepass works on opaque materials and materials using an alpha mask. Transparent materials are ignored.

The `StandardMaterial` overrides the prepass fragment shader to support alpha masks and normal maps.
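The rules above can be summarized in a small standalone model. This is only a sketch of the decision logic as described in this section, not Bevy's implementation: `AlphaMode`, `CameraPrepass`, and `material_in_prepass` are simplified, hypothetical stand-ins defined locally.

```rust
/// Simplified stand-in for a material's alpha mode (not Bevy's type).
#[derive(Clone, Copy, Debug, PartialEq)]
pub enum AlphaMode {
    Opaque,
    /// Alpha-masked with a cutoff threshold.
    Mask(f32),
    /// Transparent (alpha-blended).
    Blend,
}

/// Which prepass components are present on a camera.
#[derive(Clone, Copy, Debug, Default, PartialEq)]
pub struct CameraPrepass {
    pub depth: bool,
    pub normal: bool,
}

impl CameraPrepass {
    /// The prepass runs for a camera when at least one prepass component is present.
    pub fn runs(self) -> bool {
        self.depth || self.normal
    }
}

/// A material is drawn in the prepass only if its plugin enabled the prepass
/// and it is opaque or alpha-masked; transparent materials are ignored.
pub fn material_in_prepass(prepass_enabled: bool, alpha: AlphaMode) -> bool {
    prepass_enabled && !matches!(alpha, AlphaMode::Blend)
}
```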

---

## Changelog

- Add a new `PrepassNode` that runs before the main pass

- Add a `PrepassPlugin` to extract/prepare/queue the necessary data

- Add a `DepthPrepass` and `NormalPrepass` component to control which textures will be created by the prepass and available in later passes.

- Add a new `prepass_enabled` flag to the `MaterialPlugin` that will control if a material uses the prepass or not.

- Add a new `prepass_enabled` flag to the `PbrPlugin` to control if the StandardMaterial uses the prepass. Currently defaults to false.

- Add `Material::prepass_vertex_shader()` and `Material::prepass_fragment_shader()` to control the prepass from the `Material`

## Notes

In bevy's sample 3d scene, the performance is actually worse when enabling the prepass, but on more complex scenes the performance is generally better. I would like more testing on this, but @DGriffin91 has reported very noticeable improvements in some scenes.

The prepass is also used by @JMS55 for TAA and GTAO.

discord thread: <https://discord.com/channels/691052431525675048/1011624228627419187>

This PR was built on top of the work of multiple people

Co-Authored-By: @superdump

Co-Authored-By: @robtfm

Co-Authored-By: @JMS55

Co-authored-by: Charles <IceSentry@users.noreply.github.com>

Co-authored-by: JMS55 <47158642+JMS55@users.noreply.github.com>

# Objective

Fix https://github.com/bevyengine/bevy/issues/4530

- Make it easier to open/close/modify windows by setting them up as `Entity`s with a `Window` component.

- Make multiple windows very simple to set up. (just add a `Window` component to an entity and it should open)

## Solution

- Move all properties of window descriptor to ~components~ a component.

- Replace `WindowId` with `Entity`.

- ~Use change detection for components to update backend rather than events/commands. (The `CursorMoved`/`WindowResized`/... events are kept for user convenience.)~

Check each field individually to see what needs updating; events are still kept for user convenience.
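A field-by-field check of this kind might look roughly like the following standalone sketch. Everything here is hypothetical and heavily reduced: `WindowModel`, `BackendUpdate`, and `diff_window` are invented for illustration and are not the types or functions used in the PR.

```rust
/// Hypothetical, heavily reduced model of a window component.
#[derive(Clone, Debug, PartialEq)]
pub struct WindowModel {
    pub title: String,
    pub resolution: (f32, f32),
    pub decorations: bool,
}

/// Hypothetical requests sent to the windowing backend.
#[derive(Clone, Debug, PartialEq)]
pub enum BackendUpdate {
    SetTitle(String),
    SetResolution(f32, f32),
    SetDecorations(bool),
}

/// Compares the cached previous state with the current one, field by field,
/// and returns only the updates the backend actually needs.
pub fn diff_window(previous: &WindowModel, current: &WindowModel) -> Vec<BackendUpdate> {
    let mut updates = Vec::new();
    if previous.title != current.title {
        updates.push(BackendUpdate::SetTitle(current.title.clone()));
    }
    if previous.resolution != current.resolution {
        let (width, height) = current.resolution;
        updates.push(BackendUpdate::SetResolution(width, height));
    }
    if previous.decorations != current.decorations {
        updates.push(BackendUpdate::SetDecorations(current.decorations));
    }
    updates
}
```

Comparing against a cached copy means an untouched field never triggers a backend call, even if the component as a whole was flagged as changed.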

---

## Changelog

- `WindowDescriptor` renamed to `Window`.

- Width/height consolidated into a `WindowResolution` component.

- Requesting maximization/minimization is done on the [`Window::state`] field.

- `WindowId` is now `Entity`.

## Migration Guide

- Replace `WindowDescriptor` with `Window`.

- Combine the `width` and `height` fields into a `WindowResolution`, either by doing

```rust

WindowResolution::new(width, height) // Explicitly

// or using From<_> for tuples for convenience

(1920., 1080.).into()

```

- Replace any `WindowCommand` code with direct modifications of the `Window`'s fields. Creating and closing windows is now done by spawning and despawning an entity with a `Window` component, like so:

```rust

let window = commands.spawn(Window { ... }).id(); // open window

commands.entity(window).despawn(); // close window

```

## Unresolved

- ~How do we tell when a window is minimized by a user?~

~Currently using the `Resize(0, 0)` as an indicator of minimization.~

No longer attempting to tell, given how finicky this was across platforms. The user can now only request that a window be maximized/minimized.

## Future work

- Move `exit_on_close` functionality out from windowing and into app(?)

- https://github.com/bevyengine/bevy/issues/5621

- https://github.com/bevyengine/bevy/issues/7099

- https://github.com/bevyengine/bevy/issues/7098

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

Speed up the render phase of rendering. An extension of #6885.

`SystemState::get` increments the `World`'s change tick atomically every time it's called. This is notably more expensive than an unsynchronized increment, even without contention. It also updates the archetypes, even when there has been nothing to update when it's called repeatedly.

## Solution

Piggyback off of #6885. Split `SystemState::validate_world_and_update_archetypes` into `SystemState::validate_world` and `SystemState::update_archetypes`, and make the latter `pub`. Then create safe variants of `SystemState::get_unchecked_manual` that still validate the `World` but do not update archetypes and do not increment the change tick, using `World::read_change_tick` and `World::change_tick` instead. Update `RenderCommandState` to call `SystemState::update_archetypes` in `Draw::prepare` and `SystemState::get_manual` in `Draw::draw`.
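The cost difference between incrementing and merely reading the tick can be sketched with a standalone miniature. `TickWorld` is a hypothetical type invented for this example; its method names mirror the ones mentioned above, but the bodies are an assumption about the general technique, not a copy of Bevy's code.

```rust
use std::sync::atomic::{AtomicU32, Ordering};

/// Hypothetical miniature of a world that tracks a change tick.
pub struct TickWorld {
    change_tick: AtomicU32,
}

impl TickWorld {
    pub fn new() -> Self {
        Self { change_tick: AtomicU32::new(1) }
    }

    /// Atomic read-modify-write through `&self`. Returns the previous tick.
    /// This is the most expensive of the three, even without contention.
    pub fn increment_change_tick(&self) -> u32 {
        self.change_tick.fetch_add(1, Ordering::AcqRel)
    }

    /// Atomic load through `&self`; nothing is written.
    pub fn read_change_tick(&self) -> u32 {
        self.change_tick.load(Ordering::Acquire)
    }

    /// Exclusive access allows a plain read with no synchronization at all.
    pub fn change_tick(&mut self) -> u32 {
        *self.change_tick.get_mut()
    }
}
```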

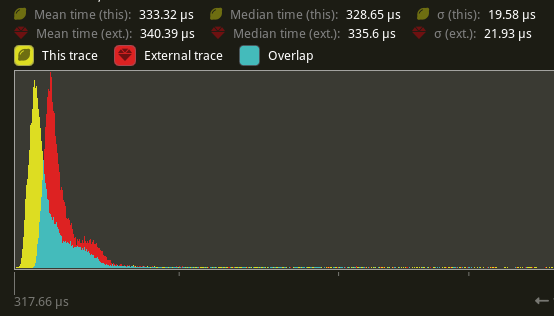

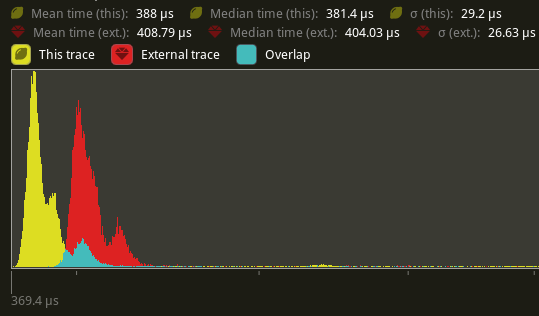

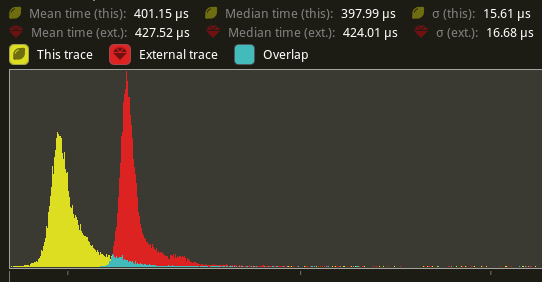

## Performance

There's a slight perf benefit (~2%) for `main_opaque_pass_3d` on `many_foxes` (340.39 us -> 333.32 us)

## Alternatives

We can change `SystemState::get` to not increment the `World`'s change tick, though this would still put updating the archetypes and an atomic read on the hot path.

---

## Changelog

Added: `SystemState::get_manual`

Added: `SystemState::get_manual_mut`

Added: `SystemState::update_archetypes`

# Objective

- Allow rendering queue systems to use a `Res<PipelineCache>` even for queueing up new rendering pipelines. This is part of unblocking parallel execution of queue systems.

## Solution

- Make `PipelineCache` internally mutable with respect to queueing new pipelines. Pipelines are no longer immediately updated into the cache state, but rather queued into a Vec. The Vec of pending new pipelines is then later processed at the same time we actually create the queued pipelines on the GPU device.
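The interior-mutability pattern described here can be sketched in isolation. `MiniPipelineCache` is a hypothetical miniature written for this example, with strings standing in for pipeline descriptors; the use of a `Mutex` is an assumption about one reasonable way to do it, not a statement about the actual field types in `PipelineCache`.

```rust
use std::sync::Mutex;

/// Hypothetical miniature of a cache that accepts new work through `&self`.
pub struct MiniPipelineCache {
    /// Pipelines that have been fully created.
    pipelines: Vec<String>,
    /// Descriptors queued through a shared reference, awaiting processing.
    pending: Mutex<Vec<String>>,
}

impl MiniPipelineCache {
    pub fn new() -> Self {
        Self { pipelines: Vec::new(), pending: Mutex::new(Vec::new()) }
    }

    /// Queues a pipeline and returns the id it will have once processed.
    /// Only needs `&self`, so several systems could call it in parallel.
    pub fn queue(&self, descriptor: &str) -> usize {
        let mut pending = self.pending.lock().unwrap();
        let id = self.pipelines.len() + pending.len();
        pending.push(descriptor.to_string());
        id
    }

    /// Moves all pending descriptors into the cache. Needs `&mut self`.
    pub fn process_queue(&mut self) {
        let pending = self.pending.get_mut().unwrap();
        self.pipelines.append(pending);
    }

    /// Returns the pipeline for `id`, or `None` if it is not processed yet.
    pub fn get(&self, id: usize) -> Option<&str> {
        self.pipelines.get(id).map(String::as_str)
    }
}
```

Ids are handed out at queue time, so callers can hold on to them before the pipeline exists; lookups simply return `None` until `process_queue` has run.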

---

## Changelog

`PipelineCache` no longer requires mutable access in order to queue render / compute pipelines.

## Migration Guide

* Most usages of `resource_mut::<PipelineCache>` and `ResMut<PipelineCache>` can be changed to `resource::<PipelineCache>` and `Res<PipelineCache>` as long as they don't use any methods requiring mutability - the only public method requiring it is `process_queue`.

# Objective

The documentation of the bevy_render crate is still pretty incomplete.

This PR follows up on #6885 and improves the documentation of the `render_phase` module.

This module contains one of our most important rendering abstractions and the current documentation is pretty confusing. This PR tries to clarify what all of these pieces are for and how they work together to form bevy's modular rendering logic.

## Solution

### Code Reformatting

- I have moved the `rangefinder` into the `render_phase` module since it is only used there.

- I have moved the `PhaseItem` (and the `BatchedPhaseItem`) from `render_phase::draw` over to `render_phase::mod`. This does not change the public-facing API since they are reexported anyway, but this change makes the relation between `RenderPhase` and `PhaseItem` clear and easier to discover.

### Documentation

- revised all documentation in the `render_phase` module

- added a module-level explanation of how `RenderPhase`s, `RenderPass`es, `PhaseItem`s, `Draw` functions, and `RenderCommands` relate to each other and how they are used

---

## Changelog

- The `rangefinder` module has been moved into the `render_phase` module.

## Migration Guide

- The `rangefinder` module has been moved into the `render_phase` module.

```rust

// old

use bevy::render::rangefinder::*;

// new

use bevy::render::render_phase::rangefinder::*;

```

# Objective

- There is a warning when building in release:

```

warning: unused import: `bevy_ecs::system::Local`

--> crates/bevy_render/src/extract_resource.rs:5:5

|

5 | use bevy_ecs::system::Local;

| ^^^^^^^^^^^^^^^^^^^^^^^

|

= note: `#[warn(unused_imports)]` on by default

```

- It's used 59751d6e33/crates/bevy_render/src/extract_resource.rs (L47)

- Fix it

## Solution

- Gate the import

- repeat of #5320

As mentioned in https://github.com/bevyengine/bevy/pull/6530. This avoids creating a new constant while still making it show up in the documentation when someone searches for "transparent" (case insensitive) in rustdoc search.
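One way to get this effect is rustdoc's `#[doc(alias = "...")]` attribute. The sketch below assumes that is the mechanism meant here; `Rgba` and its `NONE` constant are hypothetical names used only for illustration.

```rust
/// Hypothetical color type used only for this illustration.
#[derive(Clone, Copy, Debug, PartialEq)]
pub struct Rgba {
    pub r: f32,
    pub g: f32,
    pub b: f32,
    pub a: f32,
}

impl Rgba {
    /// Fully transparent black.
    ///
    /// The alias makes rustdoc search list this constant for "transparent",
    /// without adding a second constant to the API.
    #[doc(alias = "transparent")]
    pub const NONE: Rgba = Rgba { r: 0.0, g: 0.0, b: 0.0, a: 0.0 };
}
```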

cc @alice-i-cecile

# Objective

- Fixes #7066

## Solution

- Split the ChangeDetection trait into ChangeDetection and ChangeDetectionMut

- Added `Ref` as an equivalent to `&T` with change detection

---

## Changelog

- Support for `Ref`, which allows inspecting change detection flags in an immutable way

## Migration Guide

- While the bevy prelude includes both ChangeDetection and ChangeDetectionMut, any code explicitly referencing ChangeDetection might need to be updated to ChangeDetectionMut or both. Specifically, any reading logic requires ChangeDetection, while writes require ChangeDetectionMut.

`use bevy_ecs::change_detection::DetectChanges` -> `use bevy_ecs::change_detection::{DetectChanges, DetectChangesMut}`
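The read/write split can be illustrated with a standalone miniature. The traits below reuse the names from the import line above but are locally defined, simplified stand-ins, and `MiniRef` / `MiniMut` are hypothetical wrapper types invented for this sketch; none of this is Bevy's actual implementation.

```rust
use std::ops::Deref;

/// Simplified stand-in for the read half of change detection.
pub trait DetectChanges {
    fn is_changed(&self) -> bool;
}

/// Simplified stand-in for the write half of change detection.
pub trait DetectChangesMut: DetectChanges {
    fn set_changed(&mut self);
}

/// Hypothetical shared reference that can still inspect the change flag.
pub struct MiniRef<'a, T> {
    pub value: &'a T,
    pub changed: &'a bool,
}

/// Hypothetical exclusive reference that can also set the change flag.
pub struct MiniMut<'a, T> {
    pub value: &'a mut T,
    pub changed: &'a mut bool,
}

impl<T> Deref for MiniRef<'_, T> {
    type Target = T;
    fn deref(&self) -> &T {
        self.value
    }
}

impl<T> DetectChanges for MiniRef<'_, T> {
    fn is_changed(&self) -> bool {
        *self.changed
    }
}

impl<T> DetectChanges for MiniMut<'_, T> {
    fn is_changed(&self) -> bool {
        *self.changed
    }
}

impl<T> DetectChangesMut for MiniMut<'_, T> {
    fn set_changed(&mut self) {
        *self.changed = true;
    }
}
```

Only the mutable wrapper implements the write trait, which is why code that merely reads flags needs one import while code that sets them needs both.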