# Objective

`bevy::render::texture::ImageSettings` was added to prelude in #5566, so these `use` statements are unnecessary and the examples can be made a bit more concise.

## Solution

Remove `use bevy::render::texture::ImageSettings`

# Objective

- Fix / support KTX2 array / cubemap / cubemap array textures

- Fixes#4495 . Supersedes #4514 .

## Solution

- Add `Option<TextureViewDescriptor>` to `Image` to enable configuration of the `TextureViewDimension` of a texture.

- This allows users to set `D2Array`, `D3`, `Cube`, `CubeArray` or whatever they need

- Automatically configure this when loading KTX2

- Transcode all layers and faces instead of just one

- Use the UASTC block size of 128 bits, and the number of blocks in x/y for a given mip level in order to determine the offset of the layer and face within the KTX2 mip level data

- `wgpu` wants data ordered as layer 0 mip 0..n, layer 1 mip 0..n, etc. See https://docs.rs/wgpu/latest/wgpu/util/trait.DeviceExt.html#tymethod.create_texture_with_data

- Reorder the data KTX2 mip X layer Y face Z to `wgpu` layer Y face Z mip X order

- Add a `skybox` example to demonstrate / test loading cubemaps from PNG and KTX2, including ASTC 4x4, BC7, and ETC2 compression for support everywhere. Note that you need to enable the `ktx2,zstd` features to be able to load the compressed textures.

---

## Changelog

- Fixed: KTX2 array / cubemap / cubemap array textures

- Fixes: Validation failure for compressed textures stored in KTX2 where the width/height are not a multiple of the block dimensions.

- Added: `Image` now has an `Option<TextureViewDescriptor>` field to enable configuration of the texture view. This is useful for configuring the `TextureViewDimension` when it is not just a plain 2D texture and the loader could/did not identify what it should be.

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

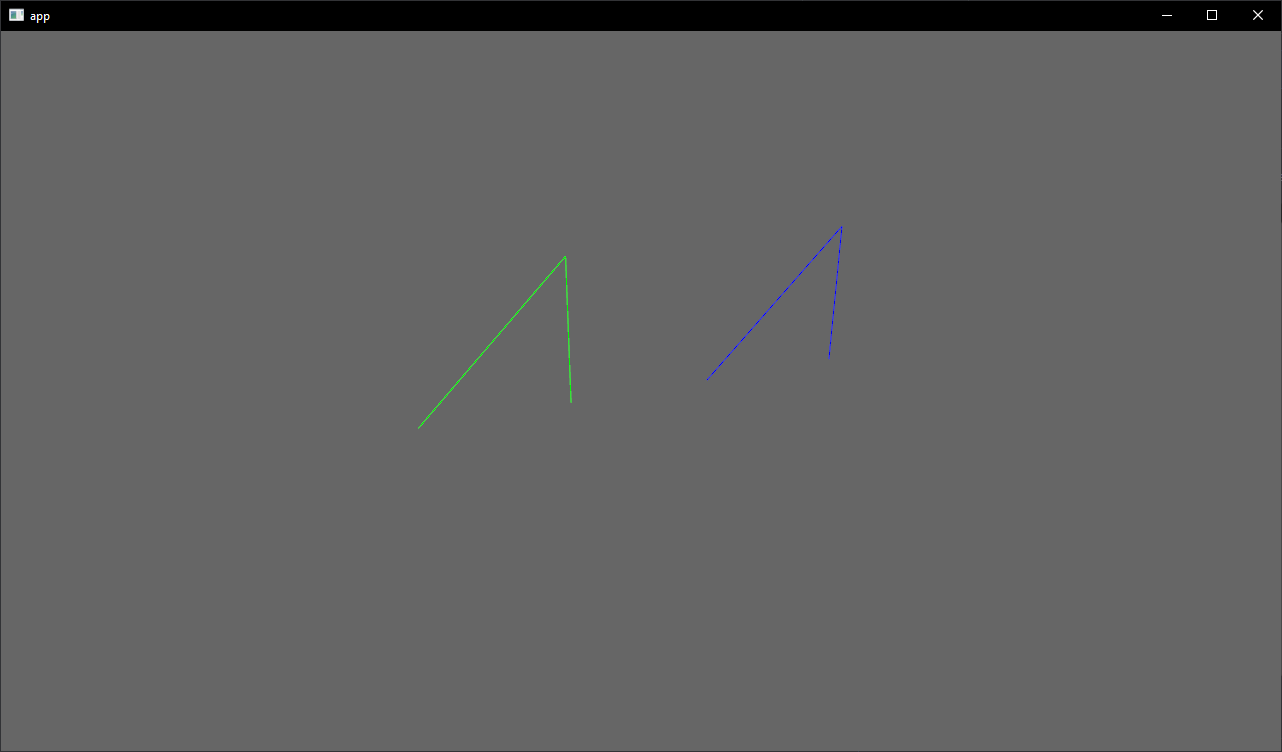

# Objective

- Showcase how to use a `Material` and `Mesh` to spawn 3d lines

## Solution

- Add an example using a simple `Material` and `Mesh` definition to draw a 3d line

- Shows how to use `LineList` and `LineStrip` in combination with a specialized `Material`

## Notes

This isn't just a primitive shape because it needs a special Material, but I think it's a good showcase of the power of the `Material` and `AsBindGroup` abstractions. All of this is easy to figure out when you know these options are a thing, but I think they are hard to discover which is why I think this should be an example and not shipped with bevy.

Co-authored-by: Charles <IceSentry@users.noreply.github.com>

Remove unnecessary calls to `iter()`/`iter_mut()`.

Mainly updates the use of queries in our code, docs, and examples.

```rust

// From

for _ in list.iter() {

for _ in list.iter_mut() {

// To

for _ in &list {

for _ in &mut list {

```

We already enable the pedantic lint [clippy::explicit_iter_loop](https://rust-lang.github.io/rust-clippy/stable/) inside of Bevy. However, this only warns for a few known types from the standard library.

## Note for reviewers

As you can see the additions and deletions are exactly equal.

Maybe give it a quick skim to check I didn't sneak in a crypto miner, but you don't have to torture yourself by reading every line.

I already experienced enough pain making this PR :)

Co-authored-by: devil-ira <justthecooldude@gmail.com>

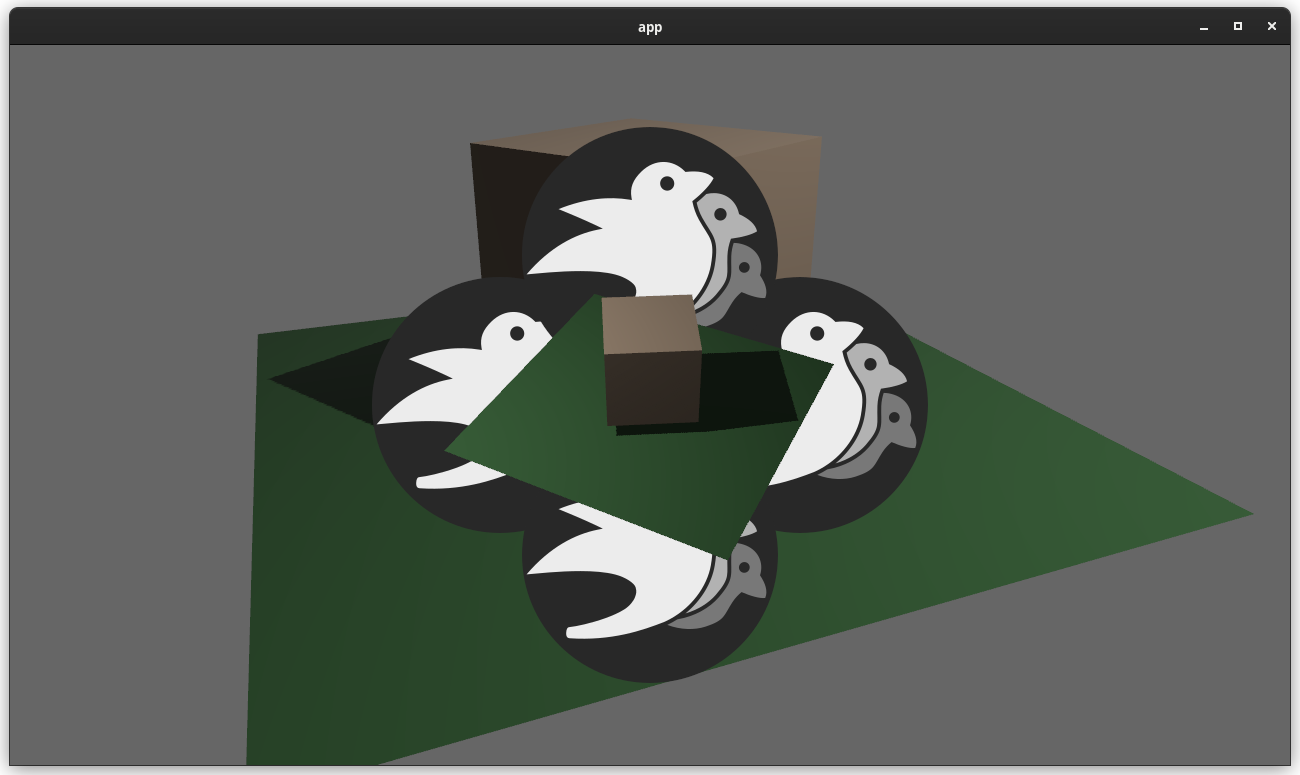

# Objective

add spotlight support

## Solution / Changelog

- add spotlight angles (inner, outer) to ``PointLight`` struct. emitted light is linearly attenuated from 100% to 0% as angle tends from inner to outer. Direction is taken from the existing transform rotation.

- add spotlight direction (vec3) and angles (f32,f32) to ``GpuPointLight`` struct (60 bytes -> 80 bytes) in ``pbr/render/lights.rs`` and ``mesh_view_bind_group.wgsl``

- reduce no-buffer-support max point light count to 204 due to above

- use spotlight data to attenuate light in ``pbr.wgsl``

- do additional cluster culling on spotlights to minimise cost in ``assign_lights_to_clusters``

- changed one of the lights in the lighting demo to a spotlight

- also added a ``spotlight`` demo - probably not justified but so reviewers can see it more easily

## notes

increasing the size of the GpuPointLight struct on my machine reduces the FPS of ``many_lights -- sphere`` from ~150fps to 140fps.

i thought this was a reasonable tradeoff, and felt better than handling spotlights separately which is possible but would mean introducing a new bind group, refactoring light-assignment code and adding new spotlight-specific code in pbr.wgsl. the FPS impact for smaller numbers of lights should be very small.

the cluster culling strategy reintroduces the cluster aabb code which was recently removed... sorry. the aabb is used to get a cluster bounding sphere, which can then be tested fairly efficiently using the strategy described at the end of https://bartwronski.com/2017/04/13/cull-that-cone/. this works well with roughly cubic clusters (where the cluster z size is close to the same as x/y size), less well for other cases like single Z slice / tiled forward rendering. In the worst case we will end up just keeping the culling of the equivalent point light.

Co-authored-by: François <mockersf@gmail.com>

# Objective

Users often ask for help with rotations as they struggle with `Quat`s.

`Quat` is rather complex and has a ton of verbose methods.

## Solution

Add rotation helper methods to `Transform`.

Co-authored-by: devil-ira <justthecooldude@gmail.com>

# Objective

- Spawning a scene is handled as a special case with a command `spawn_scene` that takes an handle but doesn't let you specify anything else. This is the only handle that works that way.

- Workaround for this have been to add the `spawn_scene` on `ChildBuilder` to be able to specify transform of parent, or to make the `SceneSpawner` available to be able to select entities from a scene by their instance id

## Solution

Add a bundle

```rust

pub struct SceneBundle {

pub scene: Handle<Scene>,

pub transform: Transform,

pub global_transform: GlobalTransform,

pub instance_id: Option<InstanceId>,

}

```

and instead of

```rust

commands.spawn_scene(asset_server.load("models/FlightHelmet/FlightHelmet.gltf#Scene0"));

```

you can do

```rust

commands.spawn_bundle(SceneBundle {

scene: asset_server.load("models/FlightHelmet/FlightHelmet.gltf#Scene0"),

..Default::default()

});

```

The scene will be spawned as a child of the entity with the `SceneBundle`

~I would like to remove the command `spawn_scene` in favor of this bundle but didn't do it yet to get feedback first~

Co-authored-by: François <8672791+mockersf@users.noreply.github.com>

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

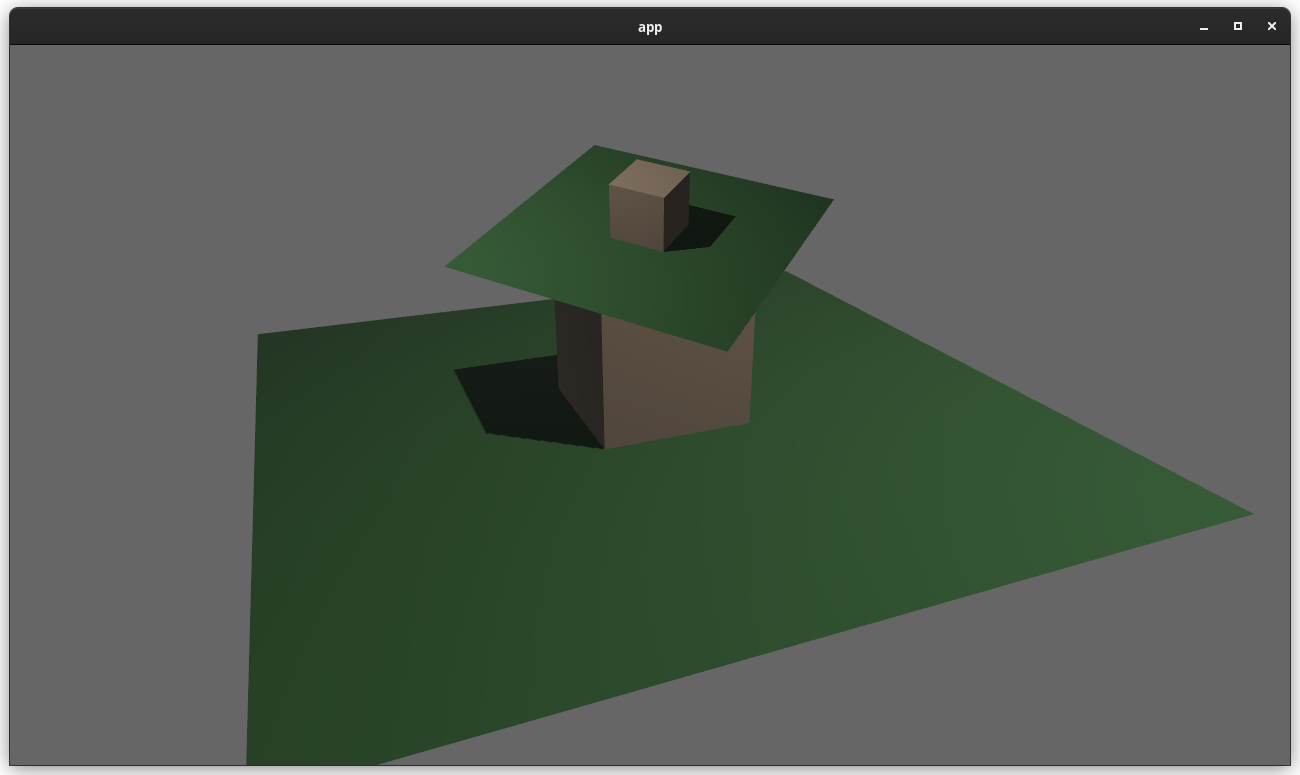

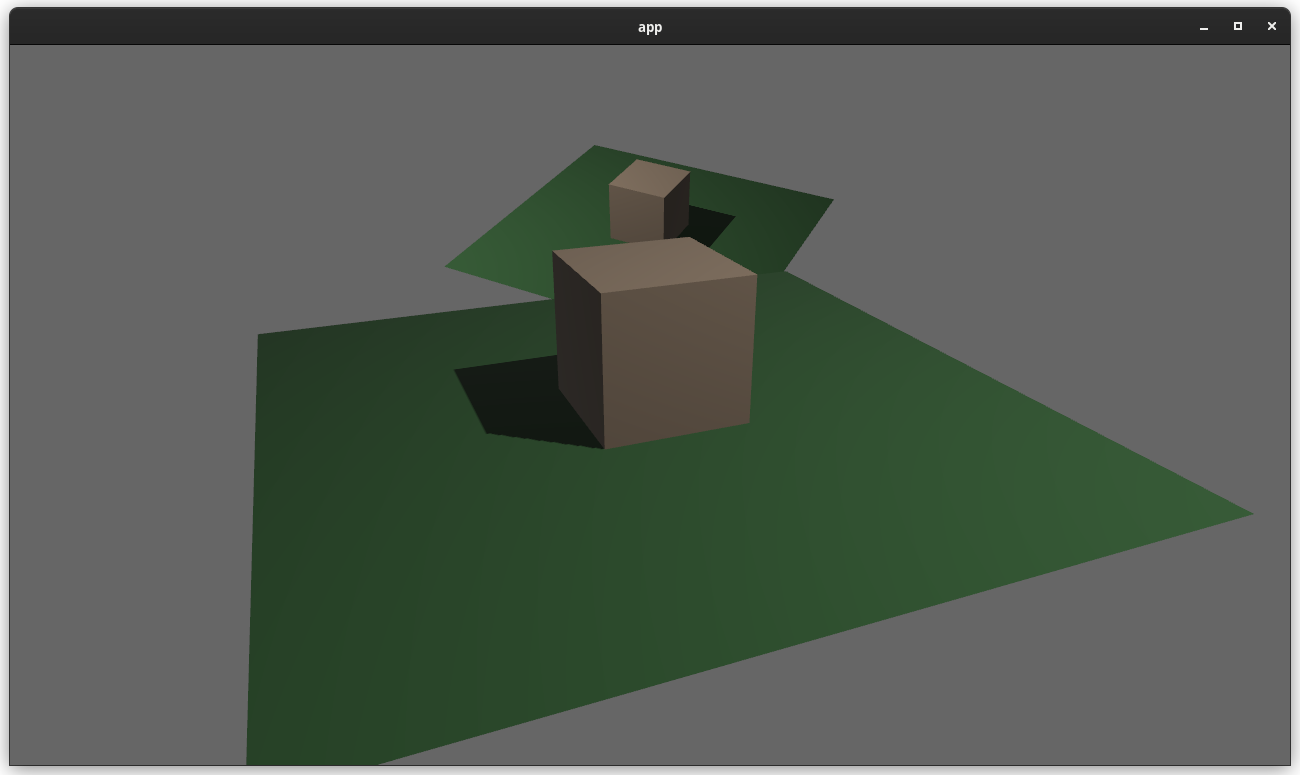

Users should be able to configure depth load operations on cameras. Currently every camera clears depth when it is rendered. But sometimes later passes need to rely on depth from previous passes.

## Solution

This adds the `Camera3d::depth_load_op` field with a new `Camera3dDepthLoadOp` value. This is a custom type because Camera3d uses "reverse-z depth" and this helps us record and document that in a discoverable way. It also gives us more control over reflection + other trait impls, whereas `LoadOp` is owned by the `wgpu` crate.

```rust

commands.spawn_bundle(Camera3dBundle {

camera_3d: Camera3d {

depth_load_op: Camera3dDepthLoadOp::Load,

..default()

},

..default()

});

```

### two_passes example with the "second pass" camera configured to the default (clear depth to 0.0)

### two_passes example with the "second pass" camera configured to "load" the depth

---

## Changelog

### Added

* `Camera3d` now has a `depth_load_op` field, which can configure the Camera's main 3d pass depth loading behavior.

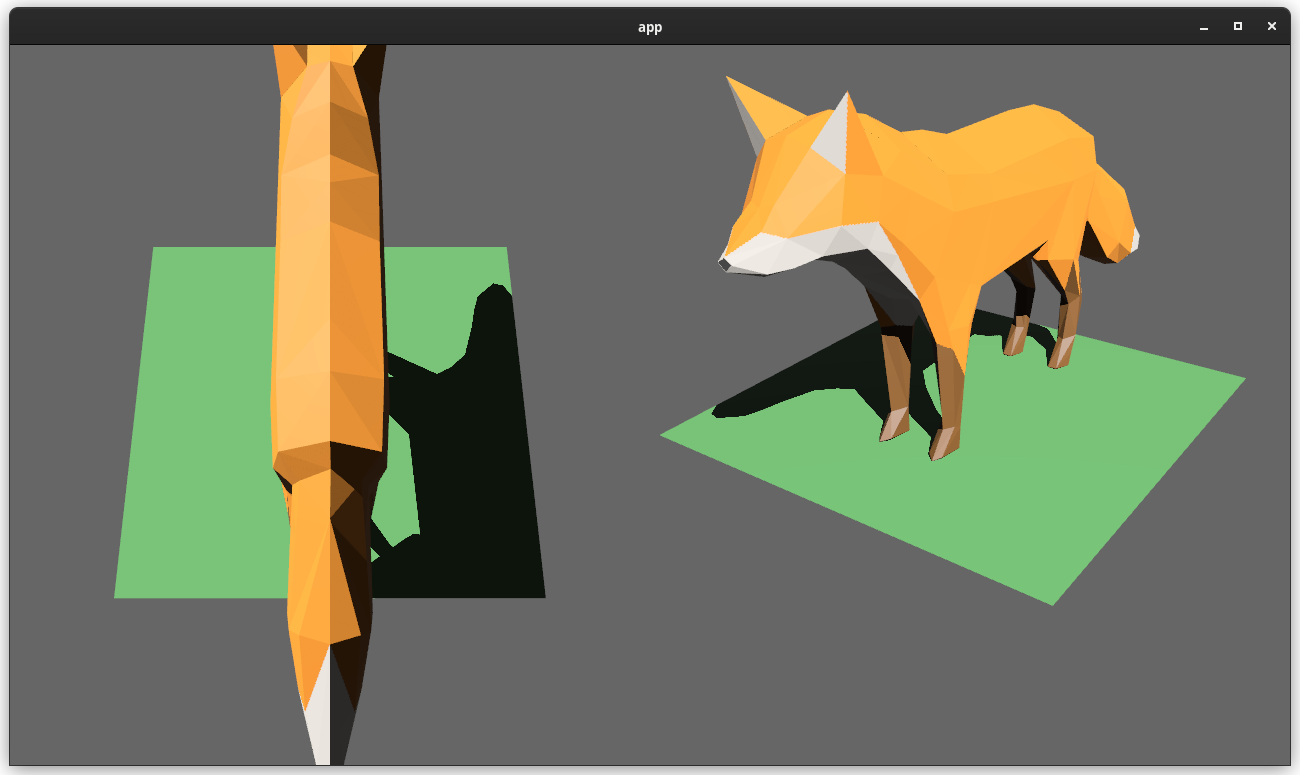

# Objective

Users should be able to render cameras to specific areas of a render target, which enables scenarios like split screen, minimaps, etc.

Builds on the new Camera Driven Rendering added here: #4745Fixes: #202

Alternative to #1389 and #3626 (which are incompatible with the new Camera Driven Rendering)

## Solution

Cameras can now configure an optional "viewport", which defines a rectangle within their render target to draw to. If a `Viewport` is defined, the camera's `CameraProjection`, `View`, and visibility calculations will use the viewport configuration instead of the full render target.

```rust

// This camera will render to the first half of the primary window (on the left side).

commands.spawn_bundle(Camera3dBundle {

camera: Camera {

viewport: Some(Viewport {

physical_position: UVec2::new(0, 0),

physical_size: UVec2::new(window.physical_width() / 2, window.physical_height()),

depth: 0.0..1.0,

}),

..default()

},

..default()

});

```

To account for this, the `Camera` component has received a few adjustments:

* `Camera` now has some new getter functions:

* `logical_viewport_size`, `physical_viewport_size`, `logical_target_size`, `physical_target_size`, `projection_matrix`

* All computed camera values are now private and live on the `ComputedCameraValues` field (logical/physical width/height, the projection matrix). They are now exposed on `Camera` via getters/setters This wasn't _needed_ for viewports, but it was long overdue.

---

## Changelog

### Added

* `Camera` components now have a `viewport` field, which can be set to draw to a portion of a render target instead of the full target.

* `Camera` component has some new functions: `logical_viewport_size`, `physical_viewport_size`, `logical_target_size`, `physical_target_size`, and `projection_matrix`

* Added a new split_screen example illustrating how to render two cameras to the same scene

## Migration Guide

`Camera::projection_matrix` is no longer a public field. Use the new `Camera::projection_matrix()` method instead:

```rust

// Bevy 0.7

let projection = camera.projection_matrix;

// Bevy 0.8

let projection = camera.projection_matrix();

```

This adds "high level camera driven rendering" to Bevy. The goal is to give users more control over what gets rendered (and where) without needing to deal with render logic. This will make scenarios like "render to texture", "multiple windows", "split screen", "2d on 3d", "3d on 2d", "pass layering", and more significantly easier.

Here is an [example of a 2d render sandwiched between two 3d renders (each from a different perspective)](https://gist.github.com/cart/4fe56874b2e53bc5594a182fc76f4915):

Users can now spawn a camera, point it at a RenderTarget (a texture or a window), and it will "just work".

Rendering to a second window is as simple as spawning a second camera and assigning it to a specific window id:

```rust

// main camera (main window)

commands.spawn_bundle(Camera2dBundle::default());

// second camera (other window)

commands.spawn_bundle(Camera2dBundle {

camera: Camera {

target: RenderTarget::Window(window_id),

..default()

},

..default()

});

```

Rendering to a texture is as simple as pointing the camera at a texture:

```rust

commands.spawn_bundle(Camera2dBundle {

camera: Camera {

target: RenderTarget::Texture(image_handle),

..default()

},

..default()

});

```

Cameras now have a "render priority", which controls the order they are drawn in. If you want to use a camera's output texture as a texture in the main pass, just set the priority to a number lower than the main pass camera (which defaults to `0`).

```rust

// main pass camera with a default priority of 0

commands.spawn_bundle(Camera2dBundle::default());

commands.spawn_bundle(Camera2dBundle {

camera: Camera {

target: RenderTarget::Texture(image_handle.clone()),

priority: -1,

..default()

},

..default()

});

commands.spawn_bundle(SpriteBundle {

texture: image_handle,

..default()

})

```

Priority can also be used to layer to cameras on top of each other for the same RenderTarget. This is what "2d on top of 3d" looks like in the new system:

```rust

commands.spawn_bundle(Camera3dBundle::default());

commands.spawn_bundle(Camera2dBundle {

camera: Camera {

// this will render 2d entities "on top" of the default 3d camera's render

priority: 1,

..default()

},

..default()

});

```

There is no longer the concept of a global "active camera". Resources like `ActiveCamera<Camera2d>` and `ActiveCamera<Camera3d>` have been replaced with the camera-specific `Camera::is_active` field. This does put the onus on users to manage which cameras should be active.

Cameras are now assigned a single render graph as an "entry point", which is configured on each camera entity using the new `CameraRenderGraph` component. The old `PerspectiveCameraBundle` and `OrthographicCameraBundle` (generic on camera marker components like Camera2d and Camera3d) have been replaced by `Camera3dBundle` and `Camera2dBundle`, which set 3d and 2d default values for the `CameraRenderGraph` and projections.

```rust

// old 3d perspective camera

commands.spawn_bundle(PerspectiveCameraBundle::default())

// new 3d perspective camera

commands.spawn_bundle(Camera3dBundle::default())

```

```rust

// old 2d orthographic camera

commands.spawn_bundle(OrthographicCameraBundle::new_2d())

// new 2d orthographic camera

commands.spawn_bundle(Camera2dBundle::default())

```

```rust

// old 3d orthographic camera

commands.spawn_bundle(OrthographicCameraBundle::new_3d())

// new 3d orthographic camera

commands.spawn_bundle(Camera3dBundle {

projection: OrthographicProjection {

scale: 3.0,

scaling_mode: ScalingMode::FixedVertical,

..default()

}.into(),

..default()

})

```

Note that `Camera3dBundle` now uses a new `Projection` enum instead of hard coding the projection into the type. There are a number of motivators for this change: the render graph is now a part of the bundle, the way "generic bundles" work in the rust type system prevents nice `..default()` syntax, and changing projections at runtime is much easier with an enum (ex for editor scenarios). I'm open to discussing this choice, but I'm relatively certain we will all come to the same conclusion here. Camera2dBundle and Camera3dBundle are much clearer than being generic on marker components / using non-default constructors.

If you want to run a custom render graph on a camera, just set the `CameraRenderGraph` component:

```rust

commands.spawn_bundle(Camera3dBundle {

camera_render_graph: CameraRenderGraph::new(some_render_graph_name),

..default()

})

```

Just note that if the graph requires data from specific components to work (such as `Camera3d` config, which is provided in the `Camera3dBundle`), make sure the relevant components have been added.

Speaking of using components to configure graphs / passes, there are a number of new configuration options:

```rust

commands.spawn_bundle(Camera3dBundle {

camera_3d: Camera3d {

// overrides the default global clear color

clear_color: ClearColorConfig::Custom(Color::RED),

..default()

},

..default()

})

commands.spawn_bundle(Camera3dBundle {

camera_3d: Camera3d {

// disables clearing

clear_color: ClearColorConfig::None,

..default()

},

..default()

})

```

Expect to see more of the "graph configuration Components on Cameras" pattern in the future.

By popular demand, UI no longer requires a dedicated camera. `UiCameraBundle` has been removed. `Camera2dBundle` and `Camera3dBundle` now both default to rendering UI as part of their own render graphs. To disable UI rendering for a camera, disable it using the CameraUi component:

```rust

commands

.spawn_bundle(Camera3dBundle::default())

.insert(CameraUi {

is_enabled: false,

..default()

})

```

## Other Changes

* The separate clear pass has been removed. We should revisit this for things like sky rendering, but I think this PR should "keep it simple" until we're ready to properly support that (for code complexity and performance reasons). We can come up with the right design for a modular clear pass in a followup pr.

* I reorganized bevy_core_pipeline into Core2dPlugin and Core3dPlugin (and core_2d / core_3d modules). Everything is pretty much the same as before, just logically separate. I've moved relevant types (like Camera2d, Camera3d, Camera3dBundle, Camera2dBundle) into their relevant modules, which is what motivated this reorganization.

* I adapted the `scene_viewer` example (which relied on the ActiveCameras behavior) to the new system. I also refactored bits and pieces to be a bit simpler.

* All of the examples have been ported to the new camera approach. `render_to_texture` and `multiple_windows` are now _much_ simpler. I removed `two_passes` because it is less relevant with the new approach. If someone wants to add a new "layered custom pass with CameraRenderGraph" example, that might fill a similar niche. But I don't feel much pressure to add that in this pr.

* Cameras now have `target_logical_size` and `target_physical_size` fields, which makes finding the size of a camera's render target _much_ simpler. As a result, the `Assets<Image>` and `Windows` parameters were removed from `Camera::world_to_screen`, making that operation much more ergonomic.

* Render order ambiguities between cameras with the same target and the same priority now produce a warning. This accomplishes two goals:

1. Now that there is no "global" active camera, by default spawning two cameras will result in two renders (one covering the other). This would be a silent performance killer that would be hard to detect after the fact. By detecting ambiguities, we can provide a helpful warning when this occurs.

2. Render order ambiguities could result in unexpected / unpredictable render results. Resolving them makes sense.

## Follow Up Work

* Per-Camera viewports, which will make it possible to render to a smaller area inside of a RenderTarget (great for something like splitscreen)

* Camera-specific MSAA config (should use the same "overriding" pattern used for ClearColor)

* Graph Based Camera Ordering: priorities are simple, but they make complicated ordering constraints harder to express. We should consider adopting a "graph based" camera ordering model with "before" and "after" relationships to other cameras (or build it "on top" of the priority system).

* Consider allowing graphs to run subgraphs from any nest level (aka a global namespace for graphs). Right now the 2d and 3d graphs each need their own UI subgraph, which feels "fine" in the short term. But being able to share subgraphs between other subgraphs seems valuable.

* Consider splitting `bevy_core_pipeline` into `bevy_core_2d` and `bevy_core_3d` packages. Theres a shared "clear color" dependency here, which would need a new home.

# Objective

- Coming from 7a596f1910 (r876310734)

- Simplify the examples regarding addition of `Msaa` Resource with default value.

## Solution

- Remove addition of `Msaa` Resource with default value from examples,

# Objective

Provide a starting point for #3951, or a partial solution.

Providing a few comment blocks to discuss, and hopefully find better one in the process.

## Solution

Since I am pretty new to pretty much anything in this context, I figured I'd just start with a draft for some file level doc blocks. For some of them I found more relevant details (or at least things I considered interessting), for some others there is less.

## Changelog

- Moved some existing comments from main() functions in the 2d examples to the file header level

- Wrote some more comment blocks for most other 2d examples

TODO:

- [x] 2d/sprite_sheet, wasnt able to come up with something good yet

- [x] all other example groups...

Also: Please let me know if the commit style is okay, or to verbose. I could certainly squash these things, or add more details if needed.

I also hope its okay to raise this PR this early, with just a few files changed. Took me long enough and I dont wanted to let it go to waste because I lost motivation to do the whole thing. Additionally I am somewhat uncertain over the style and contents of the commets. So let me know what you thing please.

# Objective

Add support for vertex colors

## Solution

This change is modeled after how vertex tangents are handled, so the shader is conditionally compiled with vertex color support if the mesh has the corresponding attribute set.

Vertex colors are multiplied by the base color. I'm not sure if this is the best for all cases, but may be useful for modifying vertex colors without creating a new mesh.

I chose `VertexFormat::Float32x4`, but I'd prefer 16-bit floats if/when support is added.

## Changelog

### Added

- Vertex colors can be specified using the `Mesh::ATTRIBUTE_COLOR` mesh attribute.

# Objective

- As requested here: https://github.com/bevyengine/bevy/pull/4520#issuecomment-1109302039

- Make it easier to spot issues with built-in shapes

## Solution

https://user-images.githubusercontent.com/200550/165624709-c40dfe7e-0e1e-4bd3-ae52-8ae66888c171.mp4

- Add an example showcasing the built-in 3d shapes with lighting/shadows

- Rotate objects in such a way that all faces are seen by the camera

- Add a UV debug texture

## Discussion

I'm not sure if this is what @alice-i-cecile had in mind, but I adapted the little "torus playground" from the issue linked above to include all built-in shapes.

This exact arrangement might not be particularly scalable if many more shapes are added. Maybe a slow camera pan, or cycling with the keyboard or on a timer, or a sidebar with buttons would work better. If one of the latter options is used, options for showing wireframes or computed flat normals might add some additional utility.

Ideally, I think we'd have a better way of visualizing normals.

Happy to rework this or close it if there's not a consensus around it being useful.

# Objective

Fixes https://github.com/bevyengine/bevy/issues/3499

## Solution

Uses a `HashMap` from `RenderTarget` to sampled textures when preparing `ViewTarget`s to ensure that two passes with the same render target get sampled to the same texture.

This builds on and depends on https://github.com/bevyengine/bevy/pull/3412, so this will be a draft PR until #3412 is merged. All changes for this PR are in the last commit.

# Objective

- Several examples are useful for qualitative tests of Bevy's performance

- By contrast, these are less useful for learning material: they are often relatively complex and have large amounts of setup and are performance optimized.

## Solution

- Move bevymark, many_sprites and many_cubes into the new stress_tests example folder

- Move contributors into the games folder: unlike the remaining examples in the 2d folder, it is not focused on demonstrating a clear feature.

# Objective

Add a system parameter `ParamSet` to be used as container for conflicting parameters.

## Solution

Added two methods to the SystemParamState trait, which gives the access used by the parameter. Did the implementation. Added some convenience methods to FilteredAccessSet. Changed `get_conflicts` to return every conflicting component instead of breaking on the first conflicting `FilteredAccess`.

Co-authored-by: bilsen <40690317+bilsen@users.noreply.github.com>

# Objective

A common pattern in Rust is the [newtype](https://doc.rust-lang.org/rust-by-example/generics/new_types.html). This is an especially useful pattern in Bevy as it allows us to give common/foreign types different semantics (such as allowing it to implement `Component` or `FromWorld`) or to simply treat them as a "new type" (clever). For example, it allows us to wrap a common `Vec<String>` and do things like:

```rust

#[derive(Component)]

struct Items(Vec<String>);

fn give_sword(query: Query<&mut Items>) {

query.single_mut().0.push(String::from("Flaming Poisoning Raging Sword of Doom"));

}

```

> We could then define another struct that wraps `Vec<String>` without anything clashing in the query.

However, one of the worst parts of this pattern is the ugly `.0` we have to write in order to access the type we actually care about. This is why people often implement `Deref` and `DerefMut` in order to get around this.

Since it's such a common pattern, especially for Bevy, it makes sense to add a derive macro to automatically add those implementations.

## Solution

Added a derive macro for `Deref` and another for `DerefMut` (both exported into the prelude). This works on all structs (including tuple structs) as long as they only contain a single field:

```rust

#[derive(Deref)]

struct Foo(String);

#[derive(Deref, DerefMut)]

struct Bar {

name: String,

}

```

This allows us to then remove that pesky `.0`:

```rust

#[derive(Component, Deref, DerefMut)]

struct Items(Vec<String>);

fn give_sword(query: Query<&mut Items>) {

query.single_mut().push(String::from("Flaming Poisoning Raging Sword of Doom"));

}

```

### Alternatives

There are other alternatives to this such as by using the [`derive_more`](https://crates.io/crates/derive_more) crate. However, it doesn't seem like we need an entire crate just yet since we only need `Deref` and `DerefMut` (for now).

### Considerations

One thing to consider is that the Rust std library recommends _not_ using `Deref` and `DerefMut` for things like this: "`Deref` should only be implemented for smart pointers to avoid confusion" ([reference](https://doc.rust-lang.org/std/ops/trait.Deref.html)). Personally, I believe it makes sense to use it in the way described above, but others may disagree.

### Additional Context

Discord: https://discord.com/channels/691052431525675048/692572690833473578/956648422163746827 (controversiality discussed [here](https://discord.com/channels/691052431525675048/692572690833473578/956711911481835630))

---

## Changelog

- Add `Deref` derive macro (exported to prelude)

- Add `DerefMut` derive macro (exported to prelude)

- Updated most newtypes in examples to use one or both derives

Co-authored-by: MrGVSV <49806985+MrGVSV@users.noreply.github.com>

# Objective

- Make the example a little easier to follow by removing unnecessary steps.

## Solution

- `Assets<Image>` will give us a handle for our render texture if we call `add()` instead of `set()`. No need to set it manually; one less thing to think about while reading the example.

**Problem**

- whenever you want more than one of the builtin cameras (for example multiple windows, split screen, portals), you need to add a render graph node that executes the correct sub graph, extract the camera into the render world and add the correct `RenderPhase<T>` components

- querying for the 3d camera is annoying because you need to compare the camera's name to e.g. `CameraPlugin::CAMERA_3d`

**Solution**

- Introduce the marker types `Camera3d`, `Camera2d` and `CameraUi`

-> `Query<&mut Transform, With<Camera3d>>` works

- `PerspectiveCameraBundle::new_3d()` and `PerspectiveCameraBundle::<Camera3d>::default()` contain the `Camera3d` marker

- `OrthographicCameraBundle::new_3d()` has `Camera3d`, `OrthographicCameraBundle::new_2d()` has `Camera2d`

- remove `ActiveCameras`, `ExtractedCameraNames`

- run 2d, 3d and ui passes for every camera of their respective marker

-> no custom setup for multiple windows example needed

**Open questions**

- do we need a replacement for `ActiveCameras`? What about a component `ActiveCamera { is_active: bool }` similar to `Visibility`?

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

- Make the many_cubes example more interesting (and look more like many_sprites)

## Solution

- Actually display many cubes

- Move the camera around

Adds a `default()` shorthand for `Default::default()` ... because life is too short to constantly type `Default::default()`.

```rust

use bevy::prelude::*;

#[derive(Default)]

struct Foo {

bar: usize,

baz: usize,

}

// Normally you would do this:

let foo = Foo {

bar: 10,

..Default::default()

};

// But now you can do this:

let foo = Foo {

bar: 10,

..default()

};

```

The examples have been adapted to use `..default()`. I've left internal crates as-is for now because they don't pull in the bevy prelude, and the ergonomics of each case should be considered individually.

# Objective

- In the large majority of cases, users were calling `.unwrap()` immediately after `.get_resource`.

- Attempting to add more helpful error messages here resulted in endless manual boilerplate (see #3899 and the linked PRs).

## Solution

- Add an infallible variant named `.resource` and so on.

- Use these infallible variants over `.get_resource().unwrap()` across the code base.

## Notes

I did not provide equivalent methods on `WorldCell`, in favor of removing it entirely in #3939.

## Migration Guide

Infallible variants of `.get_resource` have been added that implicitly panic, rather than needing to be unwrapped.

Replace `world.get_resource::<Foo>().unwrap()` with `world.resource::<Foo>()`.

## Impact

- `.unwrap` search results before: 1084

- `.unwrap` search results after: 942

- internal `unwrap_or_else` calls added: 4

- trivial unwrap calls removed from tests and code: 146

- uses of the new `try_get_resource` API: 11

- percentage of the time the unwrapping API was used internally: 93%

# Objective

Will fix#3377 and #3254

## Solution

Use an enum to represent either a `WindowId` or `Handle<Image>` in place of `Camera::window`.

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

- `WgpuOptions` is mutated to be updated with the actual device limits and features, but this information is readily available to both the main and render worlds through the `RenderDevice` which has .limits() and .features() methods

- Information about the adapter in terms of its name, the backend in use, etc were not being exposed but have clear use cases for being used to take decisions about what rendering code to use. For example, if something works well on AMD GPUs but poorly on Intel GPUs. Or perhaps something works well in Vulkan but poorly in DX12.

## Solution

- Stop mutating `WgpuOptions `and don't insert the updated values into the main and render worlds

- Return `AdapterInfo` from `initialize_renderer` and insert it into the main and render worlds

- Use `RenderDevice` limits in the lighting code that was using `WgpuOptions.limits`.

- Renamed `WgpuOptions` to `WgpuSettings`

# Objective

- Bevy currently has no simple way to make an "empty" Entity work correctly in a Hierachy.

- The current Solution is to insert a Tuple instead:

```rs

.insert_bundle((Transform::default(), GlobalTransform::default()))

```

## Solution

* Add a `TransformBundle` that combines the Components:

```rs

.insert_bundle(TransformBundle::default())

```

* The code is based on #2331, except for missing the more controversial usage of `TransformBundle` as a Sub-bundle in preexisting Bundles.

Co-authored-by: MinerSebas <66798382+MinerSebas@users.noreply.github.com>

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

#3457 adds the `doc_markdown` clippy lint, which checks doc comments to make sure code identifiers are escaped with backticks. This causes a lot of lint errors, so this is one of a number of PR's that will fix those lint errors one crate at a time.

This PR fixes lints in the `examples` folder.

This makes the [New Bevy Renderer](#2535) the default (and only) renderer. The new renderer isn't _quite_ ready for the final release yet, but I want as many people as possible to start testing it so we can identify bugs and address feedback prior to release.

The examples are all ported over and operational with a few exceptions:

* I removed a good portion of the examples in the `shader` folder. We still have some work to do in order to make these examples possible / ergonomic / worthwhile: #3120 and "high level shader material plugins" are the big ones. This is a temporary measure.

* Temporarily removed the multiple_windows example: doing this properly in the new renderer will require the upcoming "render targets" changes. Same goes for the render_to_texture example.

* Removed z_sort_debug: entity visibility sort info is no longer available in app logic. we could do this on the "render app" side, but i dont consider it a priority.

Add an example that demonstrates the difference between no MSAA and MSAA 4x. This is also useful for testing panics when resizing the window using MSAA. This is on top of #3042 .

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

Upgrades both the old and new renderer to wgpu 0.11 (and naga 0.7). This builds on @zicklag's work here #2556.

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

This implements the most minimal variant of #1843 - a derive for marker trait. This is a prerequisite to more complicated features like statically defined storage type or opt-out component reflection.

In order to make component struct's purpose explicit and avoid misuse, it must be annotated with `#[derive(Component)]` (manual impl is discouraged for compatibility). Right now this is just a marker trait, but in the future it might be expanded. Making this change early allows us to make further changes later without breaking backward compatibility for derive macro users.

This already prevents a lot of issues, like using bundles in `insert` calls. Primitive types are no longer valid components as well. This can be easily worked around by adding newtype wrappers and deriving `Component` for them.

One funny example of prevented bad code (from our own tests) is when an newtype struct or enum variant is used. Previously, it was possible to write `insert(Newtype)` instead of `insert(Newtype(value))`. That code compiled, because function pointers (in this case newtype struct constructor) implement `Send + Sync + 'static`, so we allowed them to be used as components. This is no longer the case and such invalid code will trigger a compile error.

Co-authored-by: = <=>

Co-authored-by: TheRawMeatball <therawmeatball@gmail.com>

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

This changes how render logic is composed to make it much more modular. Previously, all extraction logic was centralized for a given "type" of rendered thing. For example, we extracted meshes into a vector of ExtractedMesh, which contained the mesh and material asset handles, the transform, etc. We looked up bindings for "drawn things" using their index in the `Vec<ExtractedMesh>`. This worked fine for built in rendering, but made it hard to reuse logic for "custom" rendering. It also prevented us from reusing things like "extracted transforms" across contexts.

To make rendering more modular, I made a number of changes:

* Entities now drive rendering:

* We extract "render components" from "app components" and store them _on_ entities. No more centralized uber lists! We now have true "ECS-driven rendering"

* To make this perform well, I implemented #2673 in upstream Bevy for fast batch insertions into specific entities. This was merged into the `pipelined-rendering` branch here: #2815

* Reworked the `Draw` abstraction:

* Generic `PhaseItems`: each draw phase can define its own type of "rendered thing", which can define its own "sort key"

* Ported the 2d, 3d, and shadow phases to the new PhaseItem impl (currently Transparent2d, Transparent3d, and Shadow PhaseItems)

* `Draw` trait and and `DrawFunctions` are now generic on PhaseItem

* Modular / Ergonomic `DrawFunctions` via `RenderCommands`

* RenderCommand is a trait that runs an ECS query and produces one or more RenderPass calls. Types implementing this trait can be composed to create a final DrawFunction. For example the DrawPbr DrawFunction is created from the following DrawCommand tuple. Const generics are used to set specific bind group locations:

```rust

pub type DrawPbr = (

SetPbrPipeline,

SetMeshViewBindGroup<0>,

SetStandardMaterialBindGroup<1>,

SetTransformBindGroup<2>,

DrawMesh,

);

```

* The new `custom_shader_pipelined` example illustrates how the commands above can be reused to create a custom draw function:

```rust

type DrawCustom = (

SetCustomMaterialPipeline,

SetMeshViewBindGroup<0>,

SetTransformBindGroup<2>,

DrawMesh,

);

```

* ExtractComponentPlugin and UniformComponentPlugin:

* Simple, standardized ways to easily extract individual components and write them to GPU buffers

* Ported PBR and Sprite rendering to the new primitives above.

* Removed staging buffer from UniformVec in favor of direct Queue usage

* Makes UniformVec much easier to use and more ergonomic. Completely removes the need for custom render graph nodes in these contexts (see the PbrNode and view Node removals and the much simpler call patterns in the relevant Prepare systems).

* Added a many_cubes_pipelined example to benchmark baseline 3d rendering performance and ensure there were no major regressions during this port. Avoiding regressions was challenging given that the old approach of extracting into centralized vectors is basically the "optimal" approach. However thanks to a various ECS optimizations and render logic rephrasing, we pretty much break even on this benchmark!

* Lifetimeless SystemParams: this will be a bit divisive, but as we continue to embrace "trait driven systems" (ex: ExtractComponentPlugin, UniformComponentPlugin, DrawCommand), the ergonomics of `(Query<'static, 'static, (&'static A, &'static B, &'static)>, Res<'static, C>)` were getting very hard to bear. As a compromise, I added "static type aliases" for the relevant SystemParams. The previous example can now be expressed like this: `(SQuery<(Read<A>, Read<B>)>, SRes<C>)`. If anyone has better ideas / conflicting opinions, please let me know!

* RunSystem trait: a way to define Systems via a trait with a SystemParam associated type. This is used to implement the various plugins mentioned above. I also added SystemParamItem and QueryItem type aliases to make "trait stye" ecs interactions nicer on the eyes (and fingers).

* RenderAsset retrying: ensures that render assets are only created when they are "ready" and allows us to create bind groups directly inside render assets (which significantly simplified the StandardMaterial code). I think ultimately we should swap this out on "asset dependency" events to wait for dependencies to load, but this will require significant asset system changes.

* Updated some built in shaders to account for missing MeshUniform fields

This updates the `pipelined-rendering` branch to use the latest `bevy_ecs` from `main`. This accomplishes a couple of goals:

1. prepares for upcoming `custom-shaders` branch changes, which were what drove many of the recent bevy_ecs changes on `main`

2. prepares for the soon-to-happen merge of `pipelined-rendering` into `main`. By including bevy_ecs changes now, we make that merge simpler / easier to review.

I split this up into 3 commits:

1. **add upstream bevy_ecs**: please don't bother reviewing this content. it has already received thorough review on `main` and is a literal copy/paste of the relevant folders (the old folders were deleted so the directories are literally exactly the same as `main`).

2. **support manual buffer application in stages**: this is used to enable the Extract step. we've already reviewed this once on the `pipelined-rendering` branch, but its worth looking at one more time in the new context of (1).

3. **support manual archetype updates in QueryState**: same situation as (2).

# Objective

Forward perspective projections have poor floating point precision distribution over the depth range. Reverse projections fair much better, and instead of having to have a far plane, with the reverse projection, using an infinite far plane is not a problem. The infinite reverse perspective projection has become the industry standard. The renderer rework is a great time to migrate to it.

## Solution

All perspective projections, including point lights, have been moved to using `glam::Mat4::perspective_infinite_reverse_rh()` and so have no far plane. As various depth textures are shared between orthographic and perspective projections, a quirk of this PR is that the near and far planes of the orthographic projection are swapped when the Mat4 is computed. This has no impact on 2D/3D orthographic projection usage, and provides consistency in shaders, texture clear values, etc. throughout the codebase.

## Known issues

For some reason, when looking along -Z, all geometry is black. The camera can be translated up/down / strafed left/right and geometry will still be black. Moving forward/backward or rotating the camera away from looking exactly along -Z causes everything to work as expected.

I have tried to debug this issue but both in macOS and Windows I get crashes when doing pixel debugging. If anyone could reproduce this and debug it I would be very grateful. Otherwise I will have to try to debug it further without pixel debugging, though the projections and such all looked fine to me.