# Objective

- Significantly reduce the size of MeshUniform by only including

necessary data.

## Solution

Local-to-world (model) transforms are affine, so a 4x3 matrix is enough to represent them.

`MeshUniform` stores the current and previous model transforms, and the inverse transpose of the current model transform, all as 4x4 matrices. Instead, we can store the current and previous model transforms as 4x3 matrices, and we only need the upper-left 3x3 part of the inverse transpose of the current model transform. This change reduces the serialized `MeshUniform` size from 208 bytes to 144 bytes, which is over a 30% saving in data to serialize, and in VRAM bandwidth and space.
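
The byte counts can be checked with a little arithmetic. The sketch below is only an illustration: it assumes a `u32` flags field next to the matrices and a struct size rounded up to 16 bytes, and the helper names are hypothetical rather than taken from Bevy.

```rust
/// Round `size` up to the next multiple of `align`.
fn round_up(size: usize, align: usize) -> usize {
    (size + align - 1) / align * align
}

const F32_SIZE: usize = 4;
// Assumption: the struct size is rounded up to a multiple of 16 bytes.
const STRUCT_ALIGN: usize = 16;
// Assumption: a single `u32` flags field sits next to the matrices.
const FLAGS_SIZE: usize = 4;

/// Old layout: three 4x4 matrices plus the flags field.
fn old_mesh_uniform_size() -> usize {
    let mat4 = 16 * F32_SIZE;
    round_up(3 * mat4 + FLAGS_SIZE, STRUCT_ALIGN)
}

/// New layout: two 4x3 affine transforms, one 3x3 matrix, plus the flags field.
fn new_mesh_uniform_size() -> usize {
    let affine = 12 * F32_SIZE;
    let mat3 = 9 * F32_SIZE;
    round_up(2 * affine + mat3 + FLAGS_SIZE, STRUCT_ALIGN)
}
```

Under these assumptions the old layout comes to 208 bytes and the new one to 144 bytes, a saving of 64 bytes per mesh.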

## Benchmarks

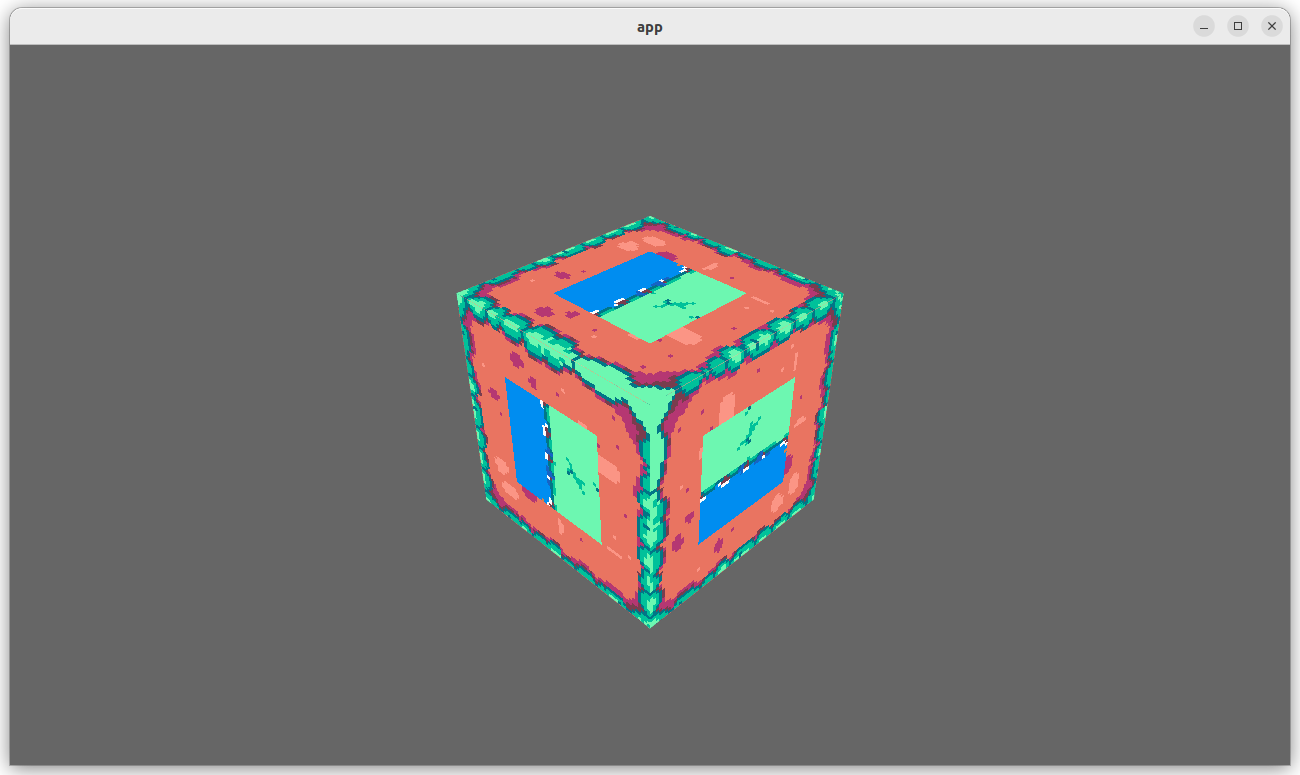

On an M1 Max, running `many_cubes -- sphere`, main is in yellow, this PR

is in red:

<img width="1484" alt="Screenshot 2023-08-11 at 02 36 43"

src="https://github.com/bevyengine/bevy/assets/302146/7d99c7b3-f2bb-4004-a8d0-4c00f755cb0d">

A reduction in frame time of ~14%.

---

## Changelog

- Changed: Redefined `MeshUniform` to improve performance by using 4x3

affine transforms and reconstructing 4x4 matrices in the shader. Helper

functions were added to `bevy_pbr::mesh_functions` to unpack the data.

`affine_to_square` converts the packed 4x3 in 3x4 matrix data to a 4x4

matrix. `mat2x4_f32_to_mat3x3` converts the 3x3 in mat2x4 + f32 matrix

data back into a 3x3.
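
As a rough CPU-side illustration of what the unpacking does, here is a sketch in Rust. It assumes the packed data is three rows of four floats (the 4x3 affine transform stored transposed) and that the 4x4 result is column-major. The name `affine_to_square_cpu` and the array types are hypothetical and are not part of `bevy_pbr::mesh_functions`.

```rust
/// Three rows of four floats: a 4x3 affine transform stored transposed
/// (assumed packing for this sketch).
type PackedAffine = [[f32; 4]; 3];

/// Column-major 4x4 matrix, indexed as `m[column][row]`.
type Mat4 = [[f32; 4]; 4];

/// Rebuild the full 4x4 matrix by transposing the packed rows and
/// appending the implicit bottom row (0, 0, 0, 1).
fn affine_to_square_cpu(packed: PackedAffine) -> Mat4 {
    let mut out = [[0.0f32; 4]; 4];
    for col in 0..4 {
        for row in 0..3 {
            out[col][row] = packed[row][col];
        }
    }
    // The last row of an affine transform is always (0, 0, 0, 1).
    out[3][3] = 1.0;
    out
}
```

For an identity rotation with translation (5, 6, 7), the last column of the result is (5, 6, 7, 1).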

## Migration Guide

Shader code before:

```

var model = mesh[instance_index].model;

```

Shader code after:

```

#import bevy_pbr::mesh_functions affine_to_square

var model = affine_to_square(mesh[instance_index].model);

```

naga and wgpu should polyfill WGSL instance_index functionality where it

is not available in GLSL. Until that is done, we can work around it in

bevy using a push constant which is converted to a uniform by naga and

wgpu.

# Objective

- Fixes #9375

## Solution

- Use a push constant to pass in the base instance to the shader on

WebGL2 so that base instance + gl_InstanceID is used to correctly

represent the instance index.
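
The arithmetic behind the workaround is small enough to sketch. The Rust function below only models the idea and is not the shader code: `gl_InstanceID` counts from zero within each draw, so the base instance has to be added back to index the right element. The function name is hypothetical.

```rust
/// Index into the per-batch mesh data array.
/// `base_instance` models the value passed in through the push constant;
/// `gl_instance_id` models `gl_InstanceID`, which starts at zero for every draw.
fn effective_instance_index(base_instance: u32, gl_instance_id: u32) -> u32 {
    base_instance + gl_instance_id
}
```

A draw covering instances 10..13 would therefore see `gl_InstanceID` values 0, 1, 2 and resolve them to 10, 11, 12.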

## TODO

- [ ] Benchmark vs per-object dynamic offset MeshUniform as this will

now push a uniform value per-draw as well as update the dynamic offset

per-batch.

- [x] Test on DX12 AMD/NVIDIA to check that this PR does not regress any

problems that were observed there. (@Elabajaba @robtfm were testing that

last time - help appreciated. <3 )

---

## Changelog

- Added: `bevy_render::instance_index` shader import which includes a

workaround for the lack of a WGSL `instance_index` polyfill for WebGL2

in naga and wgpu for the time being. It uses a push_constant which gets

converted to a plain uniform by naga and wgpu.

## Migration Guide

Shader code before:

```

struct Vertex {

@builtin(instance_index) instance_index: u32,

...

}

@vertex

fn vertex(vertex_no_morph: Vertex) -> VertexOutput {

...

var model = mesh[vertex_no_morph.instance_index].model;

```

After:

```

#import bevy_render::instance_index

struct Vertex {

@builtin(instance_index) instance_index: u32,

...

}

@vertex

fn vertex(vertex_no_morph: Vertex) -> VertexOutput {

...

var model = mesh[bevy_render::instance_index::get_instance_index(vertex_no_morph.instance_index)].model;

```

# Objective

The `post_processing` example is currently broken when run with webgl2.

```

cargo run --example post_processing --target=wasm32-unknown-unknown

```

```

wasm.js:387 panicked at 'wgpu error: Validation Error

Caused by:

In Device::create_render_pipeline

note: label = `post_process_pipeline`

In the provided shader, the type given for group 0 binding 2 has a size of 4. As the device does not support `DownlevelFlags::BUFFER_BINDINGS_NOT_16_BYTE_ALIGNED`, the type must have a size that is a multiple of 16 bytes.

```

I bisected the breakage to c7eaedd6a1.

## Solution

Add padding to the uniform when using WebGL2, so that its size is a multiple of 16 bytes.
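
A minimal sketch of the padding idea, using only the standard library. The struct and field names are hypothetical, and the padding is applied unconditionally here, whereas a real fix would presumably add it only for the WebGL2 target.

```rust
/// Settings uniform with one meaningful `f32`, padded to 16 bytes.
#[repr(C)]
#[derive(Clone, Copy, Debug, Default)]
struct PaddedSettings {
    intensity: f32,
    // Padding so the struct size is a multiple of 16 bytes,
    // as required when `BUFFER_BINDINGS_NOT_16_BYTE_ALIGNED` is unsupported.
    _padding: [f32; 3],
}

/// Size in bytes of the padded struct as laid out by `repr(C)`.
fn padded_settings_size() -> usize {
    std::mem::size_of::<PaddedSettings>()
}
```

Without the padding field the struct would be 4 bytes, which is the size the validation error complains about.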

# Objective

This PR continues https://github.com/bevyengine/bevy/pull/8885

It aims to improve the `Mesh` documentation in the following ways:

- Put everything at the "top level" instead of the "impl".

- Explain better what a `Mesh` is, how it can be created, and that it can be edited.

- Explain it can be used with a `Material`, and mention

`StandardMaterial`, `PbrBundle`, `ColorMaterial`, and

`ColorMesh2dBundle` since those cover most cases

- Mention the glTF/Bevy vocabulary discrepancy for "Mesh"

- Add an image for the example

- Various nitpicky modifications

## Note

- The image I added is 90.3 kB, which I think is small enough?

- Since rustdoc doesn't allow cross-references to items that are not in a subcrate's dependencies [yet](https://github.com/rust-lang/rust/issues/74481), I have a lot of backtick references that are not links :(

- Since rustdoc doesn't allow linking to code in the crate (?), I put a link to GitHub directly.

- Since rustdoc doesn't allow embedding images in docs [yet](https://github.com/rust-lang/rust/issues/32104), maybe [soon](https://github.com/rust-lang/rfcs/pull/3397), I had to put only a link to the image. I don't think it's worth adding [embed_doc_image](https://docs.rs/embed-doc-image/latest/embed_doc_image/) as a dependency for this.

# Objective

- Fix shader_material_glsl example

## Solution

- Expose the `PER_OBJECT_BUFFER_BATCH_SIZE` shader def through the

default `MeshPipeline` specialization.

- Make use of it in the `custom_material.vert` shader to access the mesh

binding.

---

## Changelog

- Added: Exposed the `PER_OBJECT_BUFFER_BATCH_SIZE` shader def through

the default `MeshPipeline` specialization to use in custom shaders not

using bevy_pbr::mesh_bindings that still want to use the mesh binding in

some way.

# Objective

- Reduce the number of rebindings to enable batching of draw commands

## Solution

- Use the new `GpuArrayBuffer` for `MeshUniform` data to store all

`MeshUniform` data in arrays within fewer bindings

- Sort opaque/alpha mask prepass, opaque/alpha mask main, and shadow

phases also by the batch per-object data binding dynamic offset to

improve performance on WebGL2.

---

## Changelog

- Changed: Per-object `MeshUniform` data is now managed by

`GpuArrayBuffer` as arrays in buffers that need to be indexed into.

## Migration Guide

Accessing the `model` member of an individual mesh object's shader

`Mesh` struct the old way where each `MeshUniform` was stored at its own

dynamic offset:

```wgsl

struct Vertex {

@location(0) position: vec3<f32>,

};

fn vertex(vertex: Vertex) -> VertexOutput {

var out: VertexOutput;

out.clip_position = mesh_position_local_to_clip(

mesh.model,

vec4<f32>(vertex.position, 1.0)

);

return out;

}

```

The new way where one needs to index into the array of `Mesh`es for the

batch:

```wgsl

struct Vertex {

@builtin(instance_index) instance_index: u32,

@location(0) position: vec3<f32>,

};

fn vertex(vertex: Vertex) -> VertexOutput {

var out: VertexOutput;

out.clip_position = mesh_position_local_to_clip(

mesh[vertex.instance_index].model,

vec4<f32>(vertex.position, 1.0)

);

return out;

}

```

Note that using the instance_index is the default way to pass the

per-object index into the shader, but if you wish to do custom rendering

approaches you can pass it in however you like.

---------

Co-authored-by: robtfm <50659922+robtfm@users.noreply.github.com>

Co-authored-by: Elabajaba <Elabajaba@users.noreply.github.com>

# Objective

#5703 caused the normal prepass to fail as the prepass uses

`pbr_functions::apply_normal_mapping`, which uses

`mesh_view_bindings::view` to determine mip bias, which conflicts with

`prepass_bindings::view`.

## Solution

Pass the mip bias to the `apply_normal_mapping` function explicitly.

# Objective

Fixes #8967

## Solution

I think this example was just missed in #5703. I made the same sort of

changes to `fallback_image` that were made in other examples in that PR.

# Objective

operate on naga IR directly to improve handling of shader modules.

- give codespan reporting into imported modules

- allow glsl to be used from wgsl and vice-versa

the ultimate objective is to make it possible to

- provide user hooks for core shader functions (to modify light

behaviour within the standard pbr pipeline, for example)

- make automatic binding slot allocation possible

but ... since this is already big, adds some value and (i think) is at

feature parity with the existing code, i wanted to push this now.

## Solution

i made a crate called naga_oil (https://github.com/robtfm/naga_oil -

unpublished for now, could be part of bevy) which manages modules by

- building each module independently to naga IR

- creating "header" files for each supported language, which are used to

build dependent modules/shaders

- make final shaders by combining the shader IR with the IR for imported

modules

then integrated this into bevy, replacing some of the existing shader

processing stuff. also reworked examples to reflect this.

## Migration Guide

shaders that don't use `#import` directives should work without changes.

the most notable user-facing difference is that imported

functions/variables/etc need to be qualified at point of use, and

there's no "leakage" of visible stuff into your shader scope from the

imports of your imports, so if you used things imported by your imports,

you now need to import them directly and qualify them.

the current strategy of including/'spreading' `mesh_vertex_output`

directly into a struct doesn't work any more, so these need to be

modified as per the examples (e.g. color_material.wgsl, or many others).

mesh data is assumed to be in bindgroup 2 by default, if mesh data is

bound into bindgroup 1 instead then the shader def `MESH_BINDGROUP_1`

needs to be added to the pipeline shader_defs.

# Objective

- Add morph targets to `bevy_pbr` (closes #5756) & load them from glTF

- Supersedes #3722

- Fixes #6814

[Morph targets][1] (also known as shape interpolation, shape keys, or blend shapes) allow animating individual vertices with fine-grained control. This is typically used for facial expressions. By specifying multiple poses as vertex offsets, and providing a weight for each pose, it is possible to define surprisingly realistic transitions between poses. Blending between multiple poses also allows composition. Morph targets are part of the [glTF standard][2] and are a feature of Unity, Unreal, and Babylon.js, so it is only natural to implement them in Bevy.

## Solution

This implementation of morph targets uses a 3d texture where each pixel

is a component of an animated attribute. Each layer is a different

target. We use a 2d texture for each target, because the number of

attribute×components×animated vertices is expected to always exceed the

maximum pixel row size limit of webGL2. It copies fairly closely the way

skinning is implemented on the CPU side, while on the GPU side, the

shader morph target implementation is a relatively trivial detail.

We add an optional `morph_texture` to the `Mesh` struct. The

`morph_texture` is built through a method that accepts an iterator over

attribute buffers.

The user-accessible `MorphWeights` component controls the blend of poses used by mesh instances (so that multiple copies of the same mesh may have different weights). All the weights are uploaded to a uniform buffer of 256 `f32`. We limit to 16 poses per mesh, and a total of 256 poses.
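
To make the blending concrete, here is a small CPU-side sketch of the usual morph target formula, where the blended position is the base position plus the weighted sum of per-pose offsets. It is not the shader implementation from this PR, and the type alias and function name are hypothetical.

```rust
type Vec3 = [f32; 3];

/// Blend one vertex position: base + sum(weight_i * offset_i).
/// `offsets[i]` is the displacement of this vertex in pose `i`,
/// and `weights[i]` is the weight of that pose.
fn morph_position(base: Vec3, offsets: &[Vec3], weights: &[f32]) -> Vec3 {
    let mut out = base;
    for (offset, weight) in offsets.iter().zip(weights) {
        for axis in 0..3 {
            out[axis] += weight * offset[axis];
        }
    }
    out
}
```

The same formula applies to the other animated attributes (normals and tangents), each with its own offsets.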

More literature:

* Old Babylon.js implementation (vertex attribute-based):
https://www.eternalcoding.com/dev-log-1-morph-targets/

* Babylon.js implementation (similar to ours):
https://www.youtube.com/watch?v=LBPRmGgU0PE

* GPU Gems 3:
https://developer.nvidia.com/gpugems/gpugems3/part-i-geometry/chapter-3-directx-10-blend-shapes-breaking-limits

* Development Discord thread:
https://discord.com/channels/691052431525675048/1083325980615114772

https://user-images.githubusercontent.com/26321040/231181046-3bca2ab2-d4d9-472e-8098-639f1871ce2e.mp4

https://github.com/bevyengine/bevy/assets/26321040/d2a0c544-0ef8-45cf-9f99-8c3792f5a258

## Acknowledgements

* Thanks to `storytold` for sponsoring the feature

* Thanks to `superdump` and `james7132` for guidance and help figuring

out stuff

## Future work

- Handling of fewer or more attributes (e.g. animated UVs, animated arbitrary attributes)

- Dynamic pose allocation (so that zero-weighted poses aren't uploaded to the GPU, for example, which would allow many more total poses)

- Better animation API, see #8357

----

## Changelog

- Add morph targets to bevy meshes

- Support up to 64 poses per mesh of individually up to 116508 vertices,

animation currently strictly limited to the position, normal and tangent

attributes.

- Load a morph target using `Mesh::set_morph_targets`

- Add `VisitMorphTargets` and `VisitMorphAttributes` traits to

`bevy_render`, this allows defining morph targets (a fairly complex and

nested data structure) through iterators (ie: single copy instead of

passing around buffers), see documentation of those traits for details

- Add `MorphWeights` component exported by `bevy_render`

- `MorphWeights` control mesh's morph target weights, blending between

various poses defined as morph targets.

- `MorphWeights` are directly inherited by direct children (single level

of hierarchy) of an entity. This allows controlling several mesh

primitives through a unique entity _as per GLTF spec_.

- Add `MorphTargetNames` component, naming each index of the loaded morph targets.

- Load morph targets weights and buffers in `bevy_gltf`

- handle morph targets animations in `bevy_animation` (previously, it

was a `warn!` log)

- Add the `MorphStressTest.gltf` asset for morph targets testing, taken

from the glTF samples repo, CC0.

- Add morph target manipulation to `scene_viewer`

- Separate the animation code in `scene_viewer` from the rest of the

code, reducing `#[cfg(feature)]` noise

- Add the `morph_targets.rs` example to show off how to manipulate morph targets, loading `MorphStressTest.gltf`

## Migration Guide

- (very specialized, unlikely to be touched by 3rd parties)

- `MeshPipeline` now has a single `mesh_layouts` field rather than

separate `mesh_layout` and `skinned_mesh_layout` fields. You should

handle all possible mesh bind group layouts in your implementation

- You should also properly handle the new `MORPH_TARGETS` shader def and mesh pipeline key. A new function is exposed to make this easier: `setup_morph_and_skinning_defs`

- The `MeshBindGroup` is now `MeshBindGroups`, cached bind groups are

now accessed through the `get` method.

[1]: https://en.wikipedia.org/wiki/Morph_target_animation

[2]:

https://registry.khronos.org/glTF/specs/2.0/glTF-2.0.html#morph-targets

---------

Co-authored-by: François <mockersf@gmail.com>

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

Fixes #6920

## Solution

From the issue discussion:

> From looking at the `AsBindGroup` derive macro implementation, the

fallback image's `TextureView` is used when the binding's

`Option<Handle<Image>>` is `None`. Because this relies on already having

a view that matches the desired binding dimensions, I think the solution

will require creating a separate `GpuImage` for each possible

`TextureViewDimension`.

---

## Changelog

Users can now rely on `FallbackImage` to work with a texture binding of

any dimension.

# Objective

Since #8446, example `shader_prepass` logs the following error on my mac

m1:

```

ERROR bevy_render::render_resource::pipeline_cache: failed to process shader:

error: Entry point fragment at Fragment is invalid

= Argument 1 varying error

= Capability MULTISAMPLED_SHADING is not supported

```

The example displays the 3D scene, but it doesn't change with the prepass selected.

Maybe related to this update in naga:

cc3a8ac737

## Solution

- Disable MSAA in the example, and check if it's enabled in the shader

# Objective

Fix the screenspace_texture example not working on the WebGPU examples

page. Currently it fails with the following error in the browser

console:

```

1 error(s) generated while compiling the shader:

:213:9 error: redeclaration of 'uv'

let uv = coords_to_viewport_uv(position.xy, view.viewport);

^^

:211:14 note: 'uv' previously declared here

@location(2) uv: vec2<f32>,

```

## Solution

Rename the shader variable `uv` to `viewport_uv` to prevent variable

redeclaration error.

# Objective

The objective is to be able to load data from "application-specific"

(see glTF spec 3.7.2.1.) vertex attribute semantics from glTF files into

Bevy meshes.

## Solution

Rather than probe the glTF for the specific attributes supported by

Bevy, this PR changes the loader to iterate through all the attributes

and map them onto `MeshVertexAttribute`s. This mapping includes all the

previously supported attributes, plus it is now possible to add mappings

using the `add_custom_vertex_attribute()` method on `GltfPlugin`.

## Changelog

- Add support for loading custom vertex attributes from glTF files.

- Add the `custom_gltf_vertex_attribute.rs` example to illustrate

loading custom vertex attributes.

## Migration Guide

- If you were instantiating `GltfPlugin` using the unit-like struct

syntax, you must instead use `GltfPlugin::default()` as the type is no

longer unit-like.

# Objective

- The old post processing example doesn't use the actual post processing

features of bevy. It also has some issues with resizing. It's also

causing some confusion for people because accessing the prepass textures

from it is not easy.

- There's already a render to texture example

- At this point, it's mostly obsolete since the post_process_pass

example is more complete and shows the recommended way to do post

processing in bevy. It's a bit more complicated, but it's well

documented and I'm working on simplifying it even more

## Solution

- Remove the old post_processing example

- Rename post_process_pass to post_processing

## Reviewer Notes

The diff is really noisy because of the rename, but I didn't change any

code in the example.

---------

Co-authored-by: James Liu <contact@jamessliu.com>

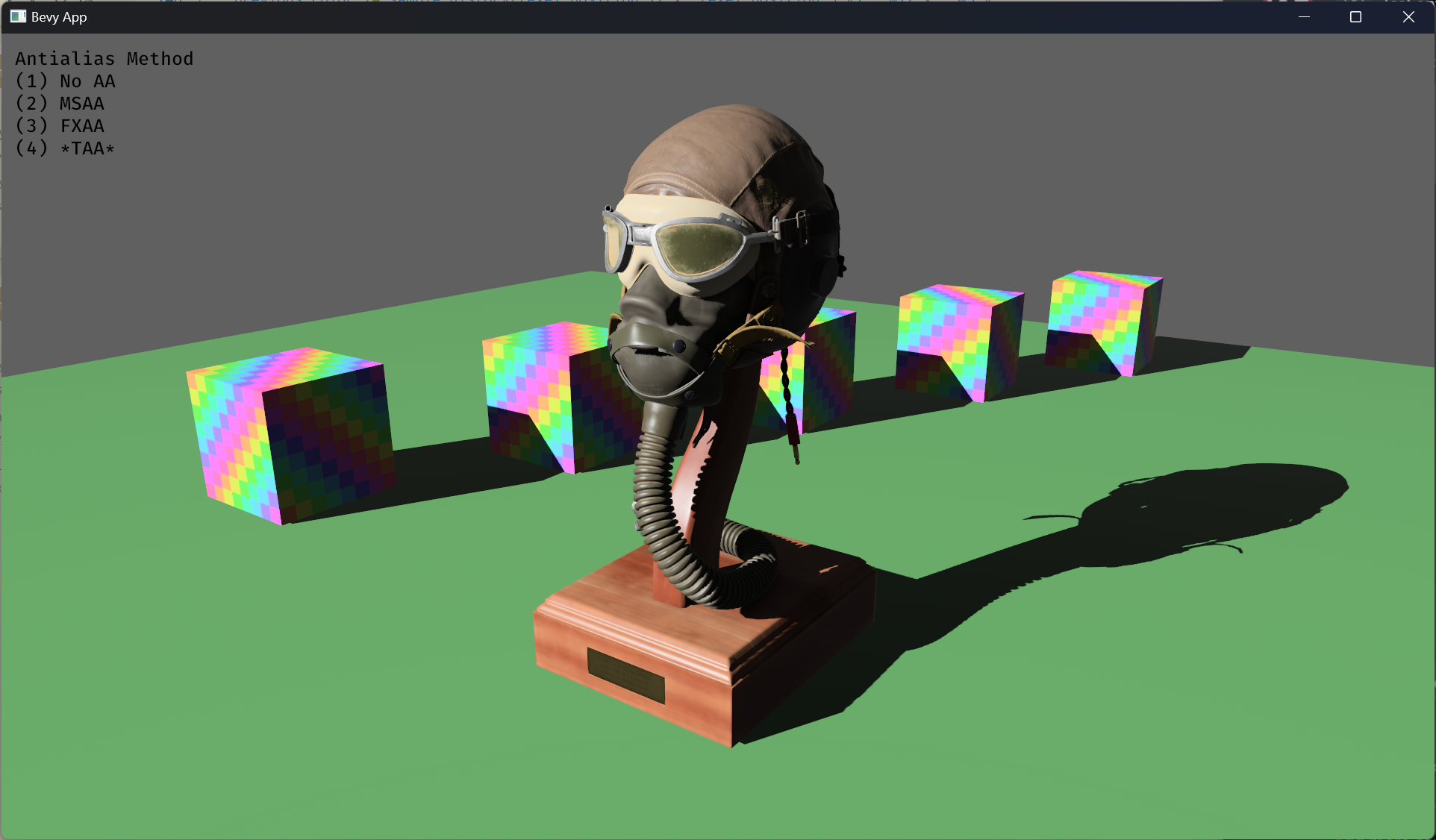

# Objective

- Implement an alternative antialias technique

- TAA scales based off of view resolution, not geometry complexity

- TAA filters textures, firefly pixels, and other aliasing not covered

by MSAA

- TAA additionally will reduce noise / increase quality in future

stochastic rendering techniques

- Closes https://github.com/bevyengine/bevy/issues/3663

## Solution

- Add a temporal jitter component

- Add a motion vector prepass

- Add a TemporalAntialias component and plugin

- Combine existing MSAA and FXAA examples and add TAA

## Followup Work

- Prepass motion vector support for skinned meshes

- Move uniforms needed for motion vectors into a separate bind group,

instead of using different bind group layouts

- Reuse previous frame's GPU view buffer for motion vectors, instead of

recomputing

- Mip biasing for sharper textures, and or unjitter texture UVs

https://github.com/bevyengine/bevy/issues/7323

- Compute shader for better performance

- Investigate FSR techniques

- Historical depth based disocclusion tests, for geometry disocclusion

- Historical luminance/hue based tests, for shading disocclusion

- Pixel "locks" to reduce blending rate / revamp history confidence

mechanism

- Orthographic camera support for TemporalJitter

- Figure out COD's 1-tap bicubic filter

---

## Changelog

- Added MotionVectorPrepass and TemporalJitter

- Added TemporalAntialiasPlugin, TemporalAntialiasBundle, and

TemporalAntialiasSettings

---------

Co-authored-by: IceSentry <c.giguere42@gmail.com>

Co-authored-by: IceSentry <IceSentry@users.noreply.github.com>

Co-authored-by: Robert Swain <robert.swain@gmail.com>

Co-authored-by: Daniel Chia <danstryder@gmail.com>

Co-authored-by: robtfm <50659922+robtfm@users.noreply.github.com>

Co-authored-by: Brandon Dyer <brandondyer64@gmail.com>

Co-authored-by: Edgar Geier <geieredgar@gmail.com>

# Objective

Co-Authored-By: davier

[bricedavier@gmail.com](mailto:bricedavier@gmail.com)

Fixes #3576.

Adds a `resources` field in scene serialization data to allow

de/serializing resources that have reflection enabled.

## Solution

Most of this code is taken from a previous closed PR:

https://github.com/bevyengine/bevy/pull/3580. Most of the credit goes to

@Davier , what I did was mostly getting it to work on the latest main

branch of Bevy, along with adding a few asserts in the currently

existing tests to be sure everything is working properly.

This PR changes the scene format to include resources in this way:

```

(

resources: {

// List of resources here, keyed by resource type name.

},

entities: [

// Previous scene format here

],

)

```

An example taken from the tests:

```

(

resources: {

"bevy_scene::serde::tests::MyResource": (

foo: 123,

),

},

entities: {

// Previous scene format here

},

)

```

For this, a `resources` field has been added to the `DynamicScene` and `DynamicSceneBuilder` structs. The latter now also has a method named `extract_resources` to properly extract the existing resources registered in the local type registry, in a similar way to `extract_entities`.

---

## Changelog

Added: Reflect resources registered in the type registry used by dynamic

scenes will now be properly de/serialized in scene data.

## Migration Guide

Since the scene format has been changed, the user may not be able to use

scenes saved prior to this PR due to the `resources` scene field being

missing. ~~To preserve backwards compatibility, I will try to make the

`resources` fully optional so that old scenes can be loaded without

issue.~~

## TODOs

- [x] I may have to update a few doc blocks still referring to dynamic

scenes as mere container of entities, since they now include resources

as well.

- [x] ~~I want to make the `resources` key optional, as specified in the

Migration Guide, so that old scenes will be compatible with this

change.~~ Since this would only be trivial for ron format, I think it

might be better to consider it in a separate PR/discussion to figure out

if it could be done for binary serialization too.

- [x] I suppose it might be a good idea to add a resource to the scene example so that users will quickly notice they can serialize resources just like entities.

---------

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

- Use the prepass textures in webgl

## Solution

- Bind the prepass textures even when using webgl, but only if msaa is disabled

- Also did some refactors to centralize how textures are bound, similar to the EnvironmentMapLight PR

- ~~Also did some refactors of the example to make it work in webgl~~

- ~~To make the example work in webgl, I needed to use a sampler for the depth texture, the resulting code looks a bit weird, but it's simple enough and I think it's worth it to show how it works when using webgl~~

# Objective

Splits tone mapping from https://github.com/bevyengine/bevy/pull/6677 into a separate PR.

Address https://github.com/bevyengine/bevy/issues/2264.

Adds tone mapping options:

- None: Bypasses tonemapping for instances where users want colors output to match those set.

- Reinhard

- Reinhard Luminance: Bevy's existing tonemapping

- [ACES](https://github.com/TheRealMJP/BakingLab/blob/master/BakingLab/ACES.hlsl) (Fitted version, based on the same implementation that Godot 4 uses) see https://github.com/bevyengine/bevy/issues/2264

- [AgX](https://github.com/sobotka/AgX)

- SomewhatBoringDisplayTransform

- TonyMcMapface

- Blender Filmic
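
For the two simplest operators in the list, a CPU-side sketch may help. It uses one common formulation of Reinhard and of luminance-based Reinhard, with Rec. 709 luminance weights as an assumption. The function names are hypothetical, and the shader code in this PR may differ in detail.

```rust
type Rgb = [f32; 3];

/// Relative luminance using Rec. 709 weights (an assumption of this sketch).
fn luminance(c: Rgb) -> f32 {
    0.2126 * c[0] + 0.7152 * c[1] + 0.0722 * c[2]
}

/// Per-channel Reinhard: x / (1 + x).
fn reinhard(c: Rgb) -> Rgb {
    c.map(|x| x / (1.0 + x))
}

/// Reinhard applied to the luminance only; the color is then rescaled
/// so that its luminance matches the compressed value.
fn reinhard_luminance(c: Rgb) -> Rgb {
    let l_old = luminance(c);
    if l_old <= 0.0 {
        // Black (or invalid) input: nothing to rescale.
        return c;
    }
    let l_new = l_old / (1.0 + l_old);
    c.map(|x| x * (l_new / l_old))
}
```

The per-channel version compresses each channel independently and can shift hues, while the luminance version scales all channels by the same factor and so keeps channel ratios intact.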

This PR also adds support for EXR images so they can be used to compare tonemapping options with reference images.

## Migration Guide

- Tonemapping is now an enum with NONE and the various tonemappers.

- The DebandDither is now a separate component.

Co-authored-by: JMS55 <47158642+JMS55@users.noreply.github.com>

(Before)

(After)

# Objective

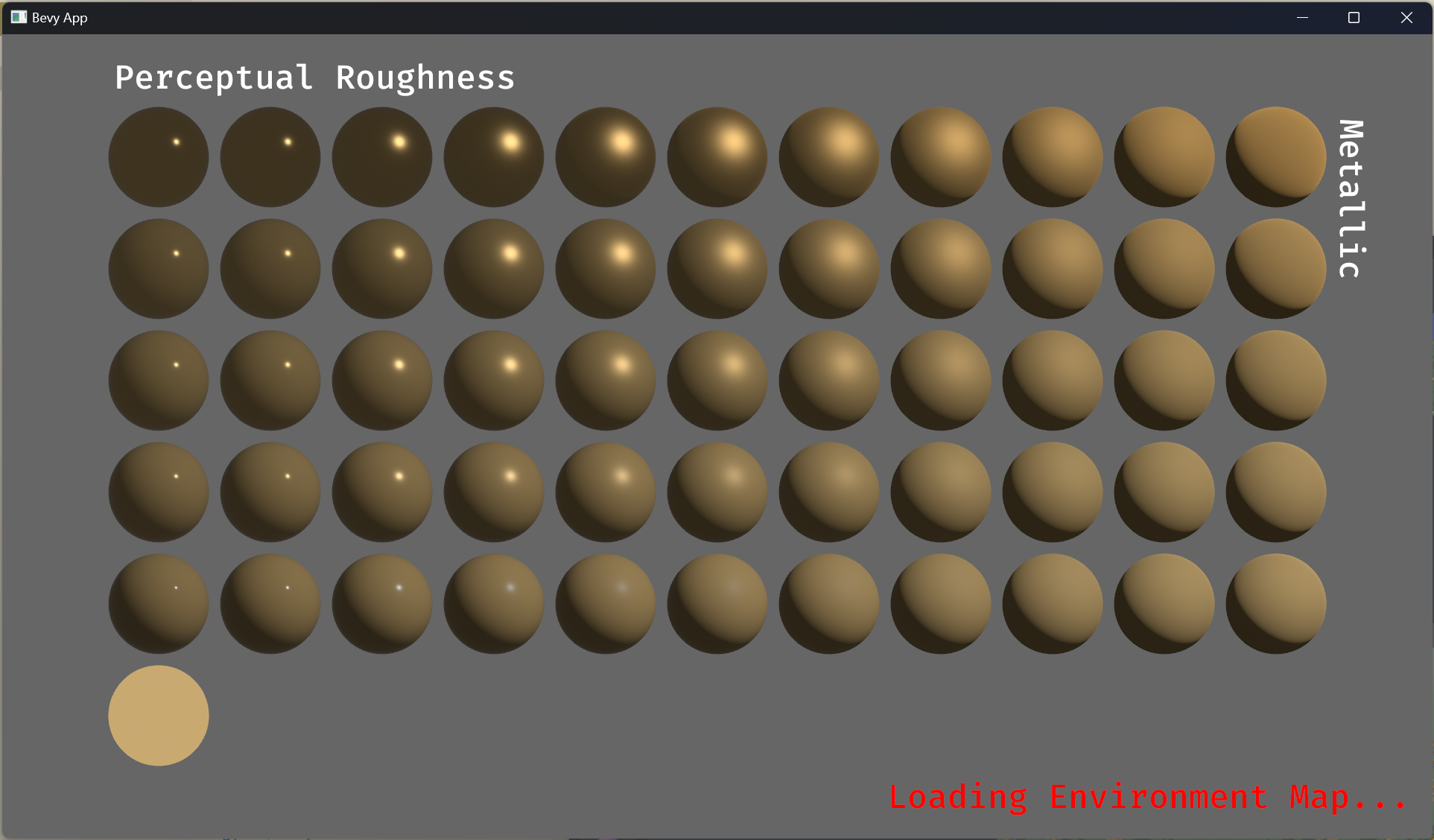

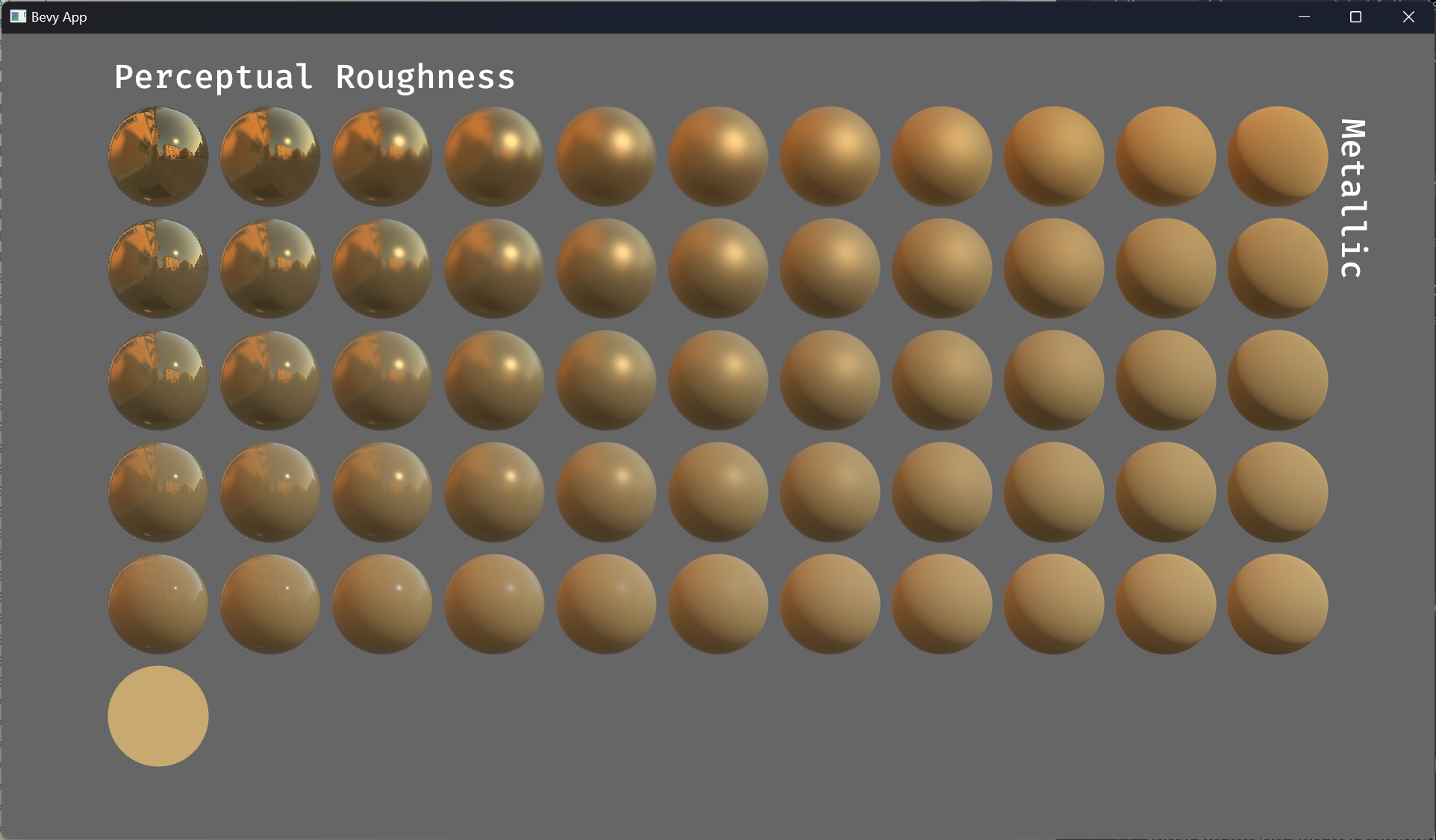

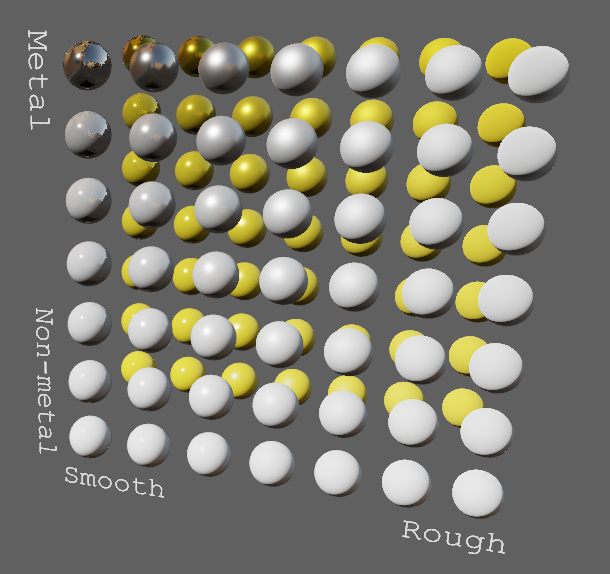

- Improve lighting; especially reflections.

- Closes https://github.com/bevyengine/bevy/issues/4581.

## Solution

- Implement environment maps, providing better ambient light.

- Add microfacet multibounce approximation for specular highlights from Filament.

- Occlusion is no longer incorrectly applied to direct lighting. It now only applies to diffuse indirect light. Unsure if it's also supposed to apply to specular indirect light - the glTF specification just says "indirect light". In the case of ambient occlusion, for instance, that's usually only calculated as diffuse though. For now, I'm choosing to apply this just to indirect diffuse light, and not specular.

- Modified the PBR example to use an environment map, and have labels.

- Added `FallbackImageCubemap`.

## Implementation

- IBL technique references can be found in environment_map.wgsl.

- It's more accurate to use a LUT for the scale/bias. Filament has a good reference on generating this LUT. For now, I just used an analytic approximation.

- For now, environment maps must first be prefiltered outside of bevy using a 3rd party tool. See the `EnvironmentMap` documentation.

- Eventually, we should have our own prefiltering code, so that we can have dynamically changing environment maps, as well as let users drop in an HDR image and use asset preprocessing to create the needed textures using only bevy.

---

## Changelog

- Added an `EnvironmentMapLight` camera component that adds additional ambient light to a scene.

- StandardMaterials will now appear brighter and more saturated at high roughness, due to internal material changes. This is more physically correct.

- Fixed StandardMaterial occlusion being incorrectly applied to direct lighting.

- Added `FallbackImageCubemap`.

Co-authored-by: IceSentry <c.giguere42@gmail.com>

Co-authored-by: James Liu <contact@jamessliu.com>

Co-authored-by: Rob Parrett <robparrett@gmail.com>

# Objective

- Fix a shader error caused by a missing import.

- `pbr_functions.wgsl` was missing an import for the `ambient_light()` function, as `array_texture` doesn't import it.

- Closes #7542.

## Solution

- Add `#import bevy_pbr::pbr_ambient` into `array_texture`

# Objective

- Fix `post_processing` and `shader_prepass` examples as they fail when compiling shaders due to missing shader defs

- Fixes #6799

- Fixes #6996

- Fixes #7375

- Supersedes #6997

- Supersedes #7380

## Solution

- The prepass was broken due to a missing `MAX_CASCADES_PER_LIGHT` shader def. Add it.

- The shader used in the `post_processing` example is applied to a 2D mesh, so use the correct mesh2d_view_bindings shader import.

<img width="1392" alt="image" src="https://user-images.githubusercontent.com/418473/203873533-44c029af-13b7-4740-8ea3-af96bd5867c9.png">

<img width="1392" alt="image" src="https://user-images.githubusercontent.com/418473/203873549-36be7a23-b341-42a2-8a9f-ceea8ac7a2b8.png">

# Objective

- Add support for the “classic” distance fog effect, as well as a more advanced atmospheric fog effect.

## Solution

This PR:

- Introduces a new `FogSettings` component that controls distance fog per-camera.

- Adds support for three widely used “traditional” fog falloff modes: `Linear`, `Exponential` and `ExponentialSquared`, as well as a more advanced `Atmospheric` fog;

- Adds support for directional light influence over fog color;

- Extracts fog via `ExtractComponent`, then uses a prepare system that sets up a new dynamic uniform struct (`Fog`), similar to other mesh view types;

- Renders fog in PBR material shader, as a final adjustment to the `output_color`, after PBR is computed (but before tone mapping);

- Adds a new `StandardMaterial` flag to enable fog; (`fog_enabled`)

- Adds convenience methods for easier artistic control when creating non-linear fog types;

- Adds documentation around fog.
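
The three traditional falloff modes follow the classic distance fog formulas. The sketch below shows them on the CPU, returning a fog intensity between 0 (no fog) and 1 (fully fogged). The `Falloff` enum and `fog_intensity` function are hypothetical stand-ins, not the `FogFalloff` type added in this PR, and the `Atmospheric` mode is left out.

```rust
/// Simplified stand-in for the three traditional falloff modes.
#[derive(Clone, Copy, Debug)]
enum Falloff {
    Linear { start: f32, end: f32 },
    Exponential { density: f32 },
    ExponentialSquared { density: f32 },
}

/// Fog intensity in [0, 1] at a given distance from the camera.
fn fog_intensity(falloff: Falloff, distance: f32) -> f32 {
    match falloff {
        // Ramps from 0 at `start` to 1 at `end`.
        Falloff::Linear { start, end } => {
            ((distance - start) / (end - start)).clamp(0.0, 1.0)
        }
        // 1 - e^(-density * d)
        Falloff::Exponential { density } => 1.0 - (-density * distance).exp(),
        // 1 - e^(-(density * d)^2)
        Falloff::ExponentialSquared { density } => {
            1.0 - (-(density * distance).powi(2)).exp()
        }
    }
}
```

The resulting intensity would then be used to blend the fog color over the shaded color, for example `mix(color, fog_color, intensity)`.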

---

## Changelog

### Added

- Added support for distance-based fog effects for PBR materials, controllable per-camera via the new `FogSettings` component;

- Added `FogFalloff` enum for selecting between three widely used “traditional” fog falloff modes: `Linear`, `Exponential` and `ExponentialSquared`, as well as a more advanced `Atmospheric` fog;

# Objective

Fixes #6952

## Solution

- Request WGPU capabilities `SAMPLED_TEXTURE_AND_STORAGE_BUFFER_ARRAY_NON_UNIFORM_INDEXING`, `SAMPLER_NON_UNIFORM_INDEXING` and `UNIFORM_BUFFER_AND_STORAGE_TEXTURE_ARRAY_NON_UNIFORM_INDEXING` when corresponding features are enabled.

- Add an example (`shaders/texture_binding_array`) illustrating (and testing) the use of non-uniform indexed textures and samplers.

## Changelog

- Added new capabilities for shader validation.

- Added example `shaders/texture_binding_array`.

# Objective

- The functions added to utils.wgsl by the prepass assume that mesh_view_bindings are present, which isn't always the case

- Fixes https://github.com/bevyengine/bevy/issues/7353

## Solution

- Move these functions to their own `prepass_utils.wgsl` file

Co-authored-by: IceSentry <IceSentry@users.noreply.github.com>

# Objective

- Add a configurable prepass

- A depth prepass is useful for various shader effects and to reduce overdraw. It can be expensive depending on the scene, so it's important to be able to disable it if you don't need any effects that use it or don't suffer from excessive overdraw.

- The goal is to eventually use it for things like TAA, Ambient Occlusion, SSR and various other techniques that can benefit from having a prepass.

## Solution

The prepass node is inserted before the main pass. It runs for each `Camera3d` with a prepass component (`DepthPrepass`, `NormalPrepass`). The presence of one of those components is used to determine which textures are generated in the prepass. When any prepass is enabled, the depth buffer generated will be used by the main pass to reduce overdraw.

The prepass runs for each `Material` created with the `MaterialPlugin::prepass_enabled` option set to `true`. You can overload the shader used by the prepass by using `Material::prepass_vertex_shader()` and/or `Material::prepass_fragment_shader()`. It will also use the `Material::specialize()` for more advanced use cases. It is enabled by default on all materials.

The prepass works on opaque materials and materials using an alpha mask. Transparent materials are ignored.

The `StandardMaterial` overloads the prepass fragment shader to support alpha mask and normal maps.

---

## Changelog

- Add a new `PrepassNode` that runs before the main pass

- Add a `PrepassPlugin` to extract/prepare/queue the necessary data

- Add a `DepthPrepass` and `NormalPrepass` component to control which textures will be created by the prepass and available in later passes.

- Add a new `prepass_enabled` flag to the `MaterialPlugin` that will control if a material uses the prepass or not.

- Add a new `prepass_enabled` flag to the `PbrPlugin` to control if the StandardMaterial uses the prepass. Currently defaults to false.

- Add `Material::prepass_vertex_shader()` and `Material::prepass_fragment_shader()` to control the prepass from the `Material`

## Notes

In Bevy's sample 3D scene, the performance is actually worse when enabling the prepass, but on more complex scenes the performance is generally better. I would like more testing on this, but @DGriffin91 has reported very noticeable improvements in some scenes.

The prepass is also used by @JMS55 for TAA and GTAO

discord thread: <https://discord.com/channels/691052431525675048/1011624228627419187>

This PR was built on top of the work of multiple people

Co-Authored-By: @superdump

Co-Authored-By: @robtfm

Co-Authored-By: @JMS55

Co-authored-by: Charles <IceSentry@users.noreply.github.com>

Co-authored-by: JMS55 <47158642+JMS55@users.noreply.github.com>

# Objective

- Fixes #4019

- Fix lighting of double-sided materials when using a negative scale

- The FlightHelmet.gltf model's hose uses a double-sided material. Loading the model with a uniform scale of -1.0, and comparing against Blender, it was identified that negating the world-space tangent, bitangent, and interpolated normal produces incorrect lighting. Discussion with Morten Mikkelsen clarified that this is both incorrect and unnecessary.

## Solution

- Remove the code that negates the T, B, and N vectors (the interpolated world-space tangent, calculated world-space bitangent, and interpolated world-space normal) when seeing the back face of a double-sided material with negative scale.

- Negate the world normal for a double-sided back face only when not using normal mapping
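
The rule in the last bullet can be sketched on the CPU side as follows. This is a hypothetical Rust port for illustration only, not the shader's actual code: the function mirrors the name `prepare_world_normal` from the migration guide, but the `uses_normal_mapping` parameter is an assumption standing in for the shader's compile-time defines.

```rust
/// Hypothetical CPU-side sketch of the back-face rule; the real logic
/// lives in WGSL and selects the normal-mapping case with shader defines.
fn prepare_world_normal(
    world_normal: [f32; 3],
    double_sided: bool,
    is_front: bool,
    uses_normal_mapping: bool, // assumption: replaces the shader defines
) -> [f32; 3] {
    // Flip only for the back face of a double-sided material, and only
    // when no normal map is applied afterwards.
    if double_sided && !is_front && !uses_normal_mapping {
        [-world_normal[0], -world_normal[1], -world_normal[2]]
    } else {
        world_normal
    }
}

fn main() {
    let n = [0.0, 1.0, 0.0];
    // Back face, double-sided, no normal map: the normal is negated.
    println!("{:?}", prepare_world_normal(n, true, false, false));
    // Same face with normal mapping: the normal is passed through.
    println!("{:?}", prepare_world_normal(n, true, false, true));
}
```

Note that T and B are never negated in this sketch, which matches the first bullet: only the plain world normal is flipped, and only in the case without normal mapping.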

### Before, on `main`, flipping T, B, and N

<img width="932" alt="Screenshot 2022-08-22 at 15 11 53" src="https://user-images.githubusercontent.com/302146/185965366-f776ff2c-cfa1-46d1-9c84-fdcb399c273c.png">

### After, on this PR

<img width="932" alt="Screenshot 2022-08-22 at 15 12 11" src="https://user-images.githubusercontent.com/302146/185965420-8be493e2-3b1a-4188-bd13-fd6b17a76fe7.png">

### Double-sided material without normal maps

https://user-images.githubusercontent.com/302146/185988113-44a384e7-0b55-4946-9b99-20f8c803ab7e.mp4

---

## Changelog

- Fixed: Lighting of normal-mapped, double-sided materials applied to models with negative scale

- Fixed: Lighting and shadowing of back faces with no normal-mapping and a double-sided material

## Migration Guide

`prepare_normal` from the `bevy_pbr::pbr_functions` shader import has been reworked.

Before:

```rust

pbr_input.world_normal = in.world_normal;

pbr_input.N = prepare_normal(

pbr_input.material.flags,

in.world_normal,

#ifdef VERTEX_TANGENTS

#ifdef STANDARDMATERIAL_NORMAL_MAP

in.world_tangent,

#endif

#endif

in.uv,

in.is_front,

);

```

After:

```rust

pbr_input.world_normal = prepare_world_normal(

in.world_normal,

(material.flags & STANDARD_MATERIAL_FLAGS_DOUBLE_SIDED_BIT) != 0u,

in.is_front,

);

pbr_input.N = apply_normal_mapping(

pbr_input.material.flags,

pbr_input.world_normal,

#ifdef VERTEX_TANGENTS

#ifdef STANDARDMATERIAL_NORMAL_MAP

in.world_tangent,

#endif

#endif

in.uv,

);

```

# Objective

Entities are unique, however, this is not reflected in the scene format. Currently, entities are stored in a list where a user could inadvertently create a duplicate of the same entity.

## Solution

Switch from the list representation to a map representation for entities.
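
As a rough illustration of why a map fits better, the sketch below converts a list of entities into a map and rejects duplicate IDs. `EntityData` and `entities_to_map` are hypothetical names used only here; they are not part of `bevy_scene`.

```rust
use std::collections::BTreeMap;

// Hypothetical stand-in for the per-entity data stored in a scene file.
#[derive(Debug, Clone, PartialEq)]
struct EntityData {
    components: Vec<String>,
}

/// Converts the list form into the map form, rejecting duplicate entity IDs.
fn entities_to_map(
    list: Vec<(u32, EntityData)>,
) -> Result<BTreeMap<u32, EntityData>, String> {
    let mut map = BTreeMap::new();
    for (id, data) in list {
        // `insert` returns the previous value if the key was already present.
        if map.insert(id, data).is_some() {
            return Err(format!("duplicate entity {id}"));
        }
    }
    Ok(map)
}

fn main() {
    let data = EntityData { components: vec!["Transform".to_string()] };
    let unique = vec![(12, data.clone()), (13, data.clone())];
    println!("{:?}", entities_to_map(unique).map(|m| m.len()));

    let duplicated = vec![(12, data.clone()), (12, data)];
    println!("{:?}", entities_to_map(duplicated).map(|m| m.len()));
}
```

With the list form, the duplicate in the second call would be representable in the file; with the map form the key itself enforces uniqueness.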

---

## Changelog

* The `entities` field in the scene format is now a map of entity ID to entity data

## Migration Guide

The scene format now stores its collection of entities in a map rather than a list:

```rust

// OLD

(

entities: [

(

entity: 12,

components: {

"bevy_transform::components::transform::Transform": (

translation: (

x: 0.0,

y: 0.0,

z: 0.0

),

rotation: (0.0, 0.0, 0.0, 1.0),

scale: (

x: 1.0,

y: 1.0,

z: 1.0

),

),

},

),

],

)

// NEW

(

entities: {

12: (

components: {

"bevy_transform::components::transform::Transform": (

translation: (

x: 0.0,

y: 0.0,

z: 0.0

),

rotation: (0.0, 0.0, 0.0, 1.0),

scale: (

x: 1.0,

y: 1.0,

z: 1.0

),

),

},

),

},

)

```

# Objective

Currently scenes define components using a list:

```rust

[

(

entity: 0,

components: [

{

"bevy_transform::components::transform::Transform": (

translation: (

x: 0.0,

y: 0.0,

z: 0.0

),

rotation: (0.0, 0.0, 0.0, 1.0),

scale: (

x: 1.0,

y: 1.0,

z: 1.0

),

),

},

{

"my_crate::Foo": (

text: "Hello World",

),

},

{

"my_crate::Bar": (

baz: 123,

),

},

],

),

]

```

However, this representation has some drawbacks (as pointed out by @Metadorius in [this](https://github.com/bevyengine/bevy/pull/4561#issuecomment-1202215565) comment):

1. Increased nesting and more characters (minor effect on overall size)

2. More importantly, by definition, entities cannot have more than one instance of any given component. Therefore, such data is best stored as a map— where all values are meant to have unique keys.

## Solution

Change `components` to store a map of components rather than a list:

```rust

[

(

entity: 0,

components: {

"bevy_transform::components::transform::Transform": (

translation: (

x: 0.0,

y: 0.0,

z: 0.0

),

rotation: (0.0, 0.0, 0.0, 1.0),

scale: (

x: 1.0,

y: 1.0,

z: 1.0

),

),

"my_crate::Foo": (

text: "Hello World",

),

"my_crate::Bar": (

baz: 123

),

},

),

]

```
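
The conversion from the old shape (a list of single-entry maps) to the new shape (one map) can be sketched like this. Component values are kept as plain strings purely for illustration, and `merge_components` and `entry` are hypothetical helpers, not Bevy APIs.

```rust
use std::collections::BTreeMap;

/// Old format: one single-entry map per component.
/// New format: one map shared by all components of the entity.
fn merge_components(
    list: Vec<BTreeMap<String, String>>,
) -> Result<BTreeMap<String, String>, String> {
    let mut merged = BTreeMap::new();
    for single in list {
        for (type_name, value) in single {
            // An entity cannot hold two instances of the same component.
            if merged.contains_key(&type_name) {
                return Err(format!("component `{type_name}` listed twice"));
            }
            merged.insert(type_name, value);
        }
    }
    Ok(merged)
}

/// Builds one old-style single-entry map (illustrative helper).
fn entry(type_name: &str, value: &str) -> BTreeMap<String, String> {
    BTreeMap::from([(type_name.to_string(), value.to_string())])
}

fn main() {
    let old = vec![
        entry("my_crate::Foo", "(text: \"Hello World\")"),
        entry("my_crate::Bar", "(baz: 123)"),
    ];
    println!("{:?}", merge_components(old));
}
```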

#### Code Representation

This change only affects the scene format itself. `DynamicEntity` still stores its components as a list. The reason for this is that storing such data as a map is not really needed since:

1. The "key" of each value is easily found by just calling `Reflect::type_name` on it

2. We should be generating such structs using the `World` itself which upholds the one-component-per-entity rule

One could in theory manually create a `DynamicEntity` with duplicate components, but this isn't something I think we should focus on in this PR. `DynamicEntity` can be broken in other ways (e.g. storing a non-component in the components list), and resolving its issues can be done in a separate PR.

---

## Changelog

* The scene format now uses a map to represent the collection of components rather than a list

## Migration Guide

The scene format now uses a map to represent the collection of components. Scene files will need to update from the old list format.

<details>

<summary>Example Code</summary>

```rust

// OLD

[

(

entity: 0,

components: [

{

"bevy_transform::components::transform::Transform": (

translation: (

x: 0.0,

y: 0.0,

z: 0.0

),

rotation: (0.0, 0.0, 0.0, 1.0),

scale: (

x: 1.0,

y: 1.0,

z: 1.0

),

),

},

{

"my_crate::Foo": (

text: "Hello World",

),

},

{

"my_crate::Bar": (

baz: 123,

),

},

],

),

]

// NEW

[

(

entity: 0,

components: {

"bevy_transform::components::transform::Transform": (

translation: (

x: 0.0,

y: 0.0,

z: 0.0

),

rotation: (0.0, 0.0, 0.0, 1.0),

scale: (

x: 1.0,

y: 1.0,

z: 1.0

),

),

"my_crate::Foo": (

text: "Hello World",

),

"my_crate::Bar": (

baz: 123

),

},

),

]

```

</details>

# Objective

Scenes are currently represented as a list of entities. This is all we need currently, but we may want to add more data to this format in the future (metadata, asset lists, etc.).

It would be nice to update the format in preparation for possible future changes. Doing so now (i.e., before 0.9) could mean reduced[^1] breakage for things added in 0.10.

[^1]: Obviously, adding features runs the risk of breaking things regardless. But if all features added are for whatever reason optional or well-contained, then users should at least have an easier time updating.

## Solution

Made the scene root a struct rather than a list.

```rust

(

entities: [

// Entity data here...

]

)

```

---

## Changelog

* The scene format now puts the entity list in a newly added `entities` field, rather than having it be the root object

## Migration Guide

The scene file format now uses a struct as the root object rather than a list of entities. The list of entities is now found in the `entities` field of this struct.

```rust

// OLD

[

(

entity: 0,

components: [

// Components...

]

),

]

// NEW

(

entities: [

(

entity: 0,

components: [

// Components...

]

),

]

)

```

Co-authored-by: Gino Valente <49806985+MrGVSV@users.noreply.github.com>

> Note: This is rebased off #4561 and can be viewed as a competitor to that PR. See `Comparison with #4561` section for details.

# Objective

The current serialization format used by `bevy_reflect` is both verbose and error-prone. Taking the following structs[^1] for example:

```rust

// -- src/inventory.rs

#[derive(Reflect)]

struct Inventory {

id: String,

max_storage: usize,

items: Vec<Item>

}

#[derive(Reflect)]

struct Item {

name: String

}

```

Given an inventory of a single item, this would serialize to something like:

```rust

// -- assets/inventory.ron

{

"type": "my_game::inventory::Inventory",

"struct": {

"id": {

"type": "alloc::string::String",

"value": "inv001",

},

"max_storage": {

"type": "usize",

"value": 10

},

"items": {

"type": "alloc::vec::Vec<alloc::string::String>",

"list": [

{

"type": "my_game::inventory::Item",

"struct": {

"name": {

"type": "alloc::string::String",

"value": "Pickaxe"

},

},

},

],

},

},

}

```

Aside from being really long and difficult to read, it also has a few "gotchas" that users need to be aware of if they want to edit the file manually. A major one is the requirement that you use the proper keys for a given type. For structs, you need `"struct"`. For lists, `"list"`. For tuple structs, `"tuple_struct"`. And so on.

It also ***requires*** that the `"type"` entry come before the actual data. Despite being a map— which in programming is almost always orderless by default— the entries need to be in a particular order. Failure to follow the ordering convention results in a failure to deserialize the data.

This makes it very prone to errors and annoyances.
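
To see why the ordering matters, consider a reader that consumes entries one at a time: it cannot interpret the value until it knows the type. The following is a minimal sketch of that constraint and is not `bevy_reflect`'s actual deserializer; `Value` and `deserialize_entries` are hypothetical names.

```rust
#[derive(Debug, PartialEq)]
enum Value {
    Usize(usize),
    Str(String),
}

/// Reads `(key, raw_text)` entries in file order, as a streaming parser would.
fn deserialize_entries(entries: &[(&str, &str)]) -> Result<Value, String> {
    let mut type_name: Option<&str> = None;
    for &(key, raw) in entries {
        match key {
            "type" => type_name = Some(raw),
            "value" => {
                // Without a type seen earlier, the raw text cannot be interpreted.
                let ty = type_name
                    .ok_or_else(|| "`value` appeared before `type`".to_string())?;
                return match ty {
                    "usize" => raw
                        .parse::<usize>()
                        .map(Value::Usize)
                        .map_err(|e| e.to_string()),
                    "alloc::string::String" => Ok(Value::Str(raw.to_string())),
                    other => Err(format!("unknown type `{other}`")),
                };
            }
            other => return Err(format!("unexpected key `{other}`")),
        }
    }
    Err("missing `value`".to_string())
}

fn main() {
    // Correct order: type first, then data.
    println!("{:?}", deserialize_entries(&[("type", "usize"), ("value", "10")]));
    // Swapped order: fails even though the same entries are present.
    println!("{:?}", deserialize_entries(&[("value", "10"), ("type", "usize")]));
}
```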

## Solution

Using #4042, we can remove a lot of the boilerplate and metadata needed by this older system. Since we now have static access to type information, we can simplify our serialized data to look like:

```rust

// -- assets/inventory.ron

{

"my_game::inventory::Inventory": (

id: "inv001",

max_storage: 10,

items: [

(

name: "Pickaxe"

),

],

),

}

```

This is much more digestible and a lot less error-prone (no more key requirements and no more extra type names).

Additionally, it is a lot more familiar to users as it follows conventional serde mechanics. For example, the struct is represented with `(...)` when serialized to RON.

#### Custom Serialization

Additionally, this PR adds the opt-in ability to specify a custom serde implementation to be used rather than the one created via reflection. For example[^1]:

```rust

// -- src/inventory.rs

#[derive(Reflect, Serialize)]

#[reflect(Serialize)]

struct Item {

#[serde(alias = "id")]

name: String

}

```

```rust

// -- assets/inventory.ron

{

"my_game::inventory::Inventory": (

id: "inv001",

max_storage: 10,

items: [

(

id: "Pickaxe"

),

],

),

}

```

By allowing users to define their own serialization methods, we do two things:

1. We give more control over how data is serialized/deserialized to the end user

2. We avoid having to re-define serde's attributes and forcing users to apply both (e.g. we don't need a `#[reflect(alias)]` attribute).

### Improved Formats

One of the improvements this PR provides is the ability to represent data in ways that are more conventional and/or familiar to users. Many users are familiar with RON so here are some of the ways we can now represent data in RON:

###### Structs

```js

{

"my_crate::Foo": (

bar: 123

)

}

// OR

{

"my_crate::Foo": Foo(

bar: 123

)

}

```

<details>

<summary>Old Format</summary>

```js

{

"type": "my_crate::Foo",

"struct": {

"bar": {

"type": "usize",

"value": 123

}

}

}

```

</details>

###### Tuples

```js

{

"(f32, f32)": (1.0, 2.0)

}

```

<details>

<summary>Old Format</summary>

```js

{

"type": "(f32, f32)",

"tuple": [

{

"type": "f32",

"value": 1.0

},

{

"type": "f32",

"value": 2.0

}

]

}

```

</details>

###### Tuple Structs

```js

{

"my_crate::Bar": ("Hello World!")

}

// OR

{

"my_crate::Bar": Bar("Hello World!")

}

```

<details>

<summary>Old Format</summary>

```js

{

"type": "my_crate::Bar",

"tuple_struct": [

{

"type": "alloc::string::String",

"value": "Hello World!"

}

]

}

```

</details>

###### Arrays

It may be a bit surprising to some, but arrays now also use the tuple format. This is because they essentially _are_ tuples (a sequence of values with a fixed size), but only allow for homogeneous types. Additionally, this is how RON handles them and is probably a result of the 32-capacity limit imposed on them (both by [serde](https://docs.rs/serde/latest/serde/trait.Serialize.html#impl-Serialize-for-%5BT%3B%2032%5D) and by [bevy_reflect](https://docs.rs/bevy/latest/bevy/reflect/trait.GetTypeRegistration.html#impl-GetTypeRegistration-for-%5BT%3B%2032%5D)).

```js

{

"[i32; 3]": (1, 2, 3)

}

```

<details>

<summary>Old Format</summary>

```js

{

"type": "[i32; 3]",

"array": [

{

"type": "i32",

"value": 1

},

{

"type": "i32",

"value": 2

},

{

"type": "i32",

"value": 3

}

]

}

```

</details>

###### Enums

To make things simple, I'll just put a struct variant here, but the style applies to all variant types:

```js

{

"my_crate::ItemType": Consumable(

name: "Healing potion"

)

}

```

<details>

<summary>Old Format</summary>

```js

{

"type": "my_crate::ItemType",

"enum": {

"variant": "Consumable",

"struct": {

"name": {

"type": "alloc::string::String",

"value": "Healing potion"

}

}

}

}

```

</details>

### Comparison with #4561

This PR is a rebased version of #4561. The two are split because this PR creates a _very_ different scene format. You may notice that the descriptions of the two PRs are pretty similar. This was done to better convey the changes depending on which (if any) gets merged first. If #4561 makes it in first, I will update this PR description accordingly.

---

## Changelog

* Re-worked serialization/deserialization for reflected types

* Added `TypedReflectDeserializer` for deserializing data with known `TypeInfo`

* Renamed `ReflectDeserializer` to `UntypedReflectDeserializer`

* ~~Replaced usages of `deserialize_any` with `deserialize_map` for non-self-describing formats~~ Reverted this change since there are still some issues that need to be sorted out (in a separate PR). By reverting this, crates like `bincode` can throw an error when attempting to deserialize non-self-describing formats (`bincode` results in `DeserializeAnyNotSupported`)

* Structs, tuples, tuple structs, arrays, and enums are now all de/serialized using conventional serde methods

## Migration Guide

* This PR reduces the verbosity of the scene format. Scenes will need to be updated accordingly:

```js

// Old format

{

"type": "my_game::item::Item",

"struct": {

"id": {

"type": "alloc::string::String",

"value": "bevycraft:stone",

},

"tags": {

"type": "alloc::vec::Vec<alloc::string::String>",

"list": [

{

"type": "alloc::string::String",

"value": "material"

},

],

},

}

}

// New format

{

"my_game::item::Item": (

id: "bevycraft:stone",

tags: ["material"]

)

}

```

[^1]: Some derives omitted for brevity.

# Objective

Fixes #5946

## Solution

Adjust the cluster index calculation for the viewport origin.

From reading point 2 of the rasterization algorithm description in https://gpuweb.github.io/gpuweb/#rasterization, it looks like framebuffer space (and so `@builtin(position)`) is not meant to be adjusted for the viewport origin, so we need to subtract the origin to get the right cluster index.

- add viewport origin to rust `ExtractedView` and wgsl `View` structs

- subtract from frag coord for cluster index calculation
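
A minimal sketch of the adjustment, assuming square screen-space cluster tiles of a fixed pixel size. The real shader derives the index from its own cluster factors, so `cluster_xy` and `cluster_size_px` are illustrative assumptions rather than Bevy's code.

```rust
/// Computes the x/y cluster tile for a fragment.
/// `frag_coord` is in framebuffer space, so the viewport origin is
/// subtracted first to get viewport-relative coordinates.
fn cluster_xy(
    frag_coord: [f32; 2],
    viewport_origin: [f32; 2],
    cluster_size_px: f32, // assumption: uniform square tiles
) -> [u32; 2] {
    let local_x = frag_coord[0] - viewport_origin[0];
    let local_y = frag_coord[1] - viewport_origin[1];
    [
        (local_x / cluster_size_px).floor() as u32,
        (local_y / cluster_size_px).floor() as u32,
    ]
}

fn main() {
    // A fragment near the top-left corner of a viewport placed at (640, 360).
    let frag = [650.5, 370.5];
    println!("with origin:    {:?}", cluster_xy(frag, [640.0, 360.0], 32.0));
    // Ignoring the origin selects a tile far away from the viewport's first one.
    println!("without origin: {:?}", cluster_xy(frag, [0.0, 0.0], 32.0));
}
```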

# Objective

- Fix / support KTX2 array / cubemap / cubemap array textures

- Fixes #4495. Supersedes #4514.

## Solution

- Add `Option<TextureViewDescriptor>` to `Image` to enable configuration of the `TextureViewDimension` of a texture.

- This allows users to set `D2Array`, `D3`, `Cube`, `CubeArray` or whatever they need

- Automatically configure this when loading KTX2

- Transcode all layers and faces instead of just one

- Use the UASTC block size of 128 bits, and the number of blocks in x/y for a given mip level in order to determine the offset of the layer and face within the KTX2 mip level data

- `wgpu` wants data ordered as layer 0 mip 0..n, layer 1 mip 0..n, etc. See https://docs.rs/wgpu/latest/wgpu/util/trait.DeviceExt.html#tymethod.create_texture_with_data

- Reorder the data KTX2 mip X layer Y face Z to `wgpu` layer Y face Z mip X order

- Add a `skybox` example to demonstrate / test loading cubemaps from PNG and KTX2, including ASTC 4x4, BC7, and ETC2 compression for support everywhere. Note that you need to enable the `ktx2,zstd` features to be able to load the compressed textures.
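
The offset calculation and reordering described above can be sketched as follows. This is an illustrative sketch under stated assumptions, not the loader's code: it assumes 4x4-texel UASTC blocks of 16 bytes (128 bits), treats each layer/face combination as one "slice", and the function names are hypothetical.

```rust
const UASTC_BLOCK_BYTES: usize = 16; // 128 bits per block
const UASTC_BLOCK_DIM: u32 = 4; // 4x4 texels per block

/// Byte size of one layer/face slice at the given mip level.
fn level_slice_bytes(width: u32, height: u32, level: u32) -> usize {
    let w = (width >> level).max(1);
    let h = (height >> level).max(1);
    // Round up so partially covered blocks are still counted.
    let blocks_x = (w + UASTC_BLOCK_DIM - 1) / UASTC_BLOCK_DIM;
    let blocks_y = (h + UASTC_BLOCK_DIM - 1) / UASTC_BLOCK_DIM;
    blocks_x as usize * blocks_y as usize * UASTC_BLOCK_BYTES
}

/// `levels[mip]` holds every slice of that mip back to back (mip-major).
/// The output is slice-major: slice 0 mip 0..n, slice 1 mip 0..n, and so on.
/// Panics if a level is shorter than `slices` full slices.
fn reorder_mip_major_to_slice_major(
    levels: &[Vec<u8>],
    width: u32,
    height: u32,
    slices: usize,
) -> Vec<u8> {
    let mut out = Vec::new();
    for slice in 0..slices {
        for (mip, data) in levels.iter().enumerate() {
            let size = level_slice_bytes(width, height, mip as u32);
            let start = slice * size;
            out.extend_from_slice(&data[start..start + size]);
        }
    }
    out
}

fn main() {
    // 8x8 texture, 2 mips, 2 slices; each byte tags its (slice, mip) origin.
    let levels = vec![
        [vec![10u8; 64], vec![20u8; 64]].concat(),
        [vec![11u8; 16], vec![21u8; 16]].concat(),
    ];
    let out = reorder_mip_major_to_slice_major(&levels, 8, 8, 2);
    println!("{} bytes, first tags: {} {}", out.len(), out[0], out[64]);
}
```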

---

## Changelog

- Fixed: KTX2 array / cubemap / cubemap array textures

- Fixed: Validation failure for compressed textures stored in KTX2 where the width/height are not a multiple of the block dimensions.

- Added: `Image` now has an `Option<TextureViewDescriptor>` field to enable configuration of the texture view. This is useful for configuring the `TextureViewDimension` when it is not just a plain 2D texture and the loader could/did not identify what it should be.

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

- Make `game_of_life.wgsl` easier to read and understand

## Solution

- Remove unused code in the shader

- `location_f32` was unused in `init`

- `color` was unused in `update`

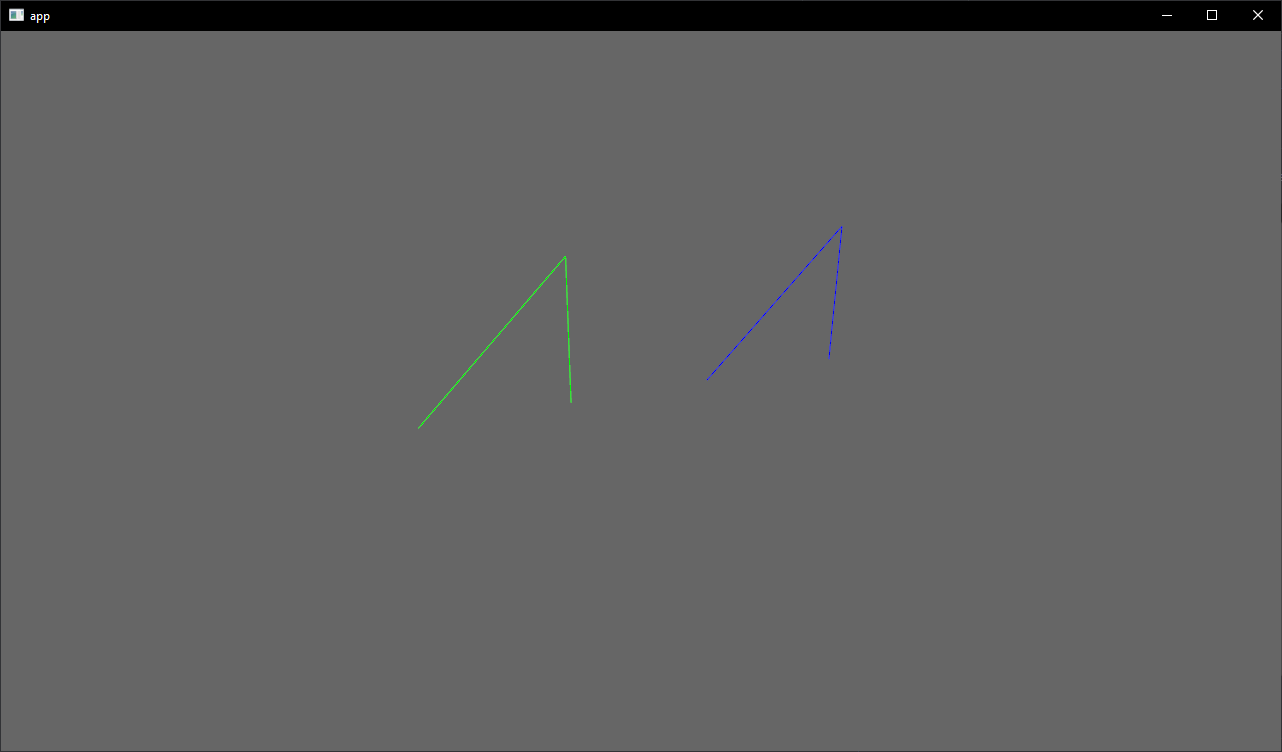

# Objective

- Showcase how to use a `Material` and `Mesh` to spawn 3d lines

## Solution

- Add an example using a simple `Material` and `Mesh` definition to draw a 3d line

- Shows how to use `LineList` and `LineStrip` in combination with a specialized `Material`

## Notes

This isn't just a primitive shape because it needs a special Material, but I think it's a good showcase of the power of the `Material` and `AsBindGroup` abstractions. All of this is easy to figure out when you know these options are a thing, but I think they are hard to discover which is why I think this should be an example and not shipped with bevy.

Co-authored-by: Charles <IceSentry@users.noreply.github.com>

# Objective

Add texture sampling to the GLSL shader example, as naga does not support the commonly used `sampler2D` type.

Fixes #5059

## Solution

- Align the shader_material_glsl example behaviour with the shader_material example, as the latter includes texture sampling.

- Update the GLSL shader to do texture sampling the way naga supports it, and document the way naga does not support it.

## Changelog

- The shader_material_glsl example has been updated to demonstrate texture sampling using the GLSL shading language.

Co-authored-by: Carter Anderson <mcanders1@gmail.com>