# Objective

Speed up entity moves between tables by reducing the number of copies conducted. Currently three separate copies are conducted: `src[index] -> swap scratch`, `src[last] -> src[index]`, and `swap scratch -> dst[target]`. The first and last copies can be merged by directly using the copy `src[index] -> dst[target]`, which can save quite some time if the component(s) in question are large.

## Solution

This PR does the following:

- Adds `BlobVec::swap_remove_unchecked(usize, PtrMut<'_>)`, which is identical to `swap_remove_and_forget_unchecked`, but skips the `swap_scratch` and directly copies the component into the provided `PtrMut<'_>`.

- Build `Column::initialize_from_unchecked(&mut Column, usize, usize)` on top of it, which uses the above to directly initialize a row from another column.

- Update most of the table move APIs to use `initialize_from_unchecked` instead of a combination of `swap_remove_and_forget_unchecked` and `initialize`.

This is an alternative, though orthogonal, approach to achieve the same performance gains as seen in #4853. This (hopefully) shouldn't run into the same Miri limitations that said PR currently does. After this PR, `swap_remove_and_forget_unchecked` is still in use for Resources and swap_scratch likely still should be removed, so #4853 still has use, even if this PR is merged.

## Performance

TODO: Microbenchmark

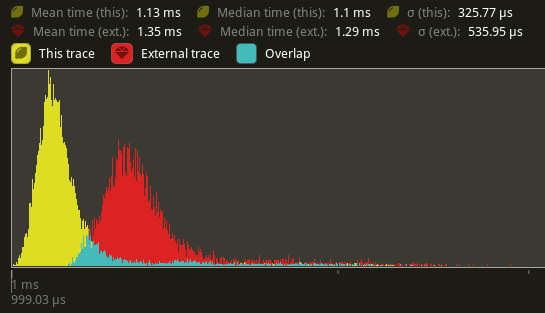

This PR shows similar improvements to commands that add or remove table components that result in a table move. When tested on `many_cubes sphere`, some of the more command heavy systems saw notable improvements. In particular, `prepare_uniform_components<T>`, this saw a reduction in time from 1.35ms to 1.13ms (a 16.3% improvement) on my local machine, a similar if not slightly better gain than what #4853 showed [here](https://github.com/bevyengine/bevy/pull/4853#issuecomment-1159346106).

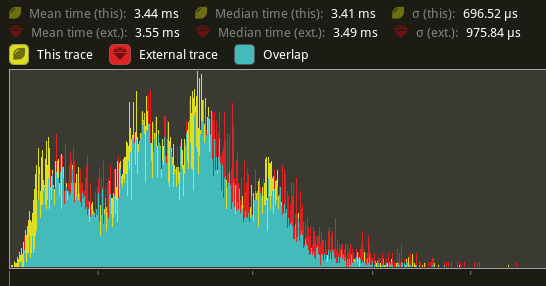

The command heavy `Extract` stage also saw a smaller overall improvement:

---

## Changelog

Added: `BlobVec::swap_remove_unchecked`.

Added: `Column::initialize_from_unchecked`.

# Objective

Currently, `Reflect` is unsafe to implement because of a contract in which `any` and `any_mut` must return `self`, or `downcast` will cause UB. This PR makes `Reflect` safe, makes `downcast` not use unsafe, and eliminates this contract.

## Solution

This PR adds a method to `Reflect`, `any`. It also renames the old `any` to `as_any`.

`any` now takes a `Box<Self>` and returns a `Box<dyn Any>`.

---

## Changelog

### Added:

- `any()` method

- `represents()` method

### Changed:

- `Reflect` is now a safe trait

- `downcast()` is now safe

- The old `any` is now called `as_any`, and `any_mut` is now `as_mut_any`

## Migration Guide

- Reflect derives should not have to change anything

- Manual reflect impls will need to remove the `unsafe` keyword, add `any()` implementations, and rename the old `any` and `any_mut` to `as_any` and `as_mut_any`.

- Calls to `any`/`any_mut` must be changed to `as_any`/`as_mut_any`

## Points of discussion:

- Should renaming `any` be avoided and instead name the new method `any_box`?

- ~~Could there be a performance regression from avoiding the unsafe? I doubt it, but this change does seem to introduce redundant checks.~~

- ~~Could/should `is` and `type_id()` be implemented differently? For example, moving `is` onto `Reflect` as an `fn(&self, TypeId) -> bool`~~

Co-authored-by: PROMETHIA-27 <42193387+PROMETHIA-27@users.noreply.github.com>

# Objective

Add support for custom `AssetIo` implementations to trigger reloading of an asset.

## Solution

- Add a public method to `AssetServer` to allow forcing the reloading of an asset.

---

## Changelog

- Add method `reload_asset` to `AssetServer`.

Co-authored-by: Robert G. Jakabosky <rjakabosky+neopallium@neoawareness.com>

# Objective

- Fixes#5083

## Solution

I looked at the implementation of those events. I noticed that they both are adaptations of `winit`'s `DeviceEvent`/`WindowEvent` enum variants. Therefore I based the description of the items on the documentation provided by the upstream crate. I also added a link to `CursorMoved`, just like `MouseMotion` already has.

## Observations

- Looking at the implementation of `MouseMotion`, I noticed the `DeviceId` field of the `winit` event is discarded by `bevy_input`. This means that in the case a machine has multiple pointing devices, it is impossible to distinguish to which one the event is referring to. **EDIT:** just tested, `MouseMotion` events are emitted for movement of both mice.

# Objective

Attempt to more clearly document `ImageSettings` and setting a default sampler for new images, as per #5046

## Changelog

- Moved ImageSettings into image.rs, image::* is already exported. Makes it simpler for linking docs.

- Renamed "DefaultImageSampler" to "RenderDefaultImageSampler". Not a great name, but more consistent with other render resources.

- Added/updated related docs

# Objective

- Allow custom shaders to reuse the HDR results of PBR.

## Solution

- Separate `pbr()` and `tone_mapping()` into 2 functions in `pbr_functions.wgsl`.

# Objective

- Simplify the process of obtaining a `ComponentId` instance corresponding to a `Component`.

- Resolves#5060.

## Solution

- Add a `component_id::<T: Component>(&self)` function to both `World` and `Components` to retrieve the `ComponentId` associated with `T` from a immutable reference.

---

## Changelog

- Added `World::component_id::<C>()` and `Components::component_id::<C>()` to retrieve a `Component`'s corresponding `ComponentId` if it exists.

# Objective

Update pbr mesh shader to use correct normals for skinned meshes.

## Solution

Only use `mesh_normal_local_to_world` for normals if `SKINNED` is not defined.

# Objective

Partially addresses #4291.

Speed up the sort phase for unbatched render phases.

## Solution

Split out one of the optimizations in #4899 and allow implementors of `PhaseItem` to change what kind of sort is used when sorting the items in the phase. This currently includes Stable, Unstable, and Unsorted. Each of these corresponds to `Vec::sort_by_key`, `Vec::sort_unstable_by_key`, and no sorting at all. The default is `Unstable`. The last one can be used as a default if users introduce a preliminary depth prepass.

## Performance

This will not impact the performance of any batched phases, as it is still using a stable sort. 2D's only phase is unchanged. All 3D phases are unbatched currently, and will benefit from this change.

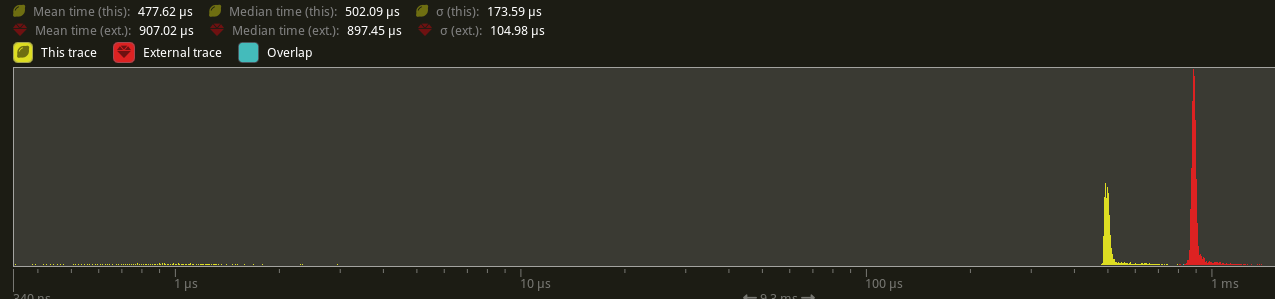

On `many_cubes`, where the primary phase is opaque, this change sees a speed up from 907.02us -> 477.62us, a 47.35% reduction.

## Future Work

There were prior discussions to add support for faster radix sorts in #4291, which in theory should be a `O(n)` instead of a `O(nlog(n))` time. [`voracious`](https://crates.io/crates/voracious_radix_sort) has been proposed, but it seems to be optimize for use cases with more than 30,000 items, which may be atypical for most systems.

Another optimization included in #4899 is to reduce the size of a few of the IDs commonly used in `PhaseItem` implementations to shrink the types to make swapping/sorting faster. Both `CachedPipelineId` and `DrawFunctionId` could be reduced to `u32` instead of `usize`.

Ideally, this should automatically change to use stable sorts when `BatchedPhaseItem` is implemented on the same phase item type, but this requires specialization, which may not land in stable Rust for a short while.

---

## Changelog

Added: `PhaseItem::sort`

## Migration Guide

RenderPhases now default to a unstable sort (via `slice::sort_unstable_by_key`). This can typically improve sort phase performance, but may produce incorrect batching results when implementing `BatchedPhaseItem`. To revert to the older stable sort, manually implement `PhaseItem::sort` to implement a stable sort (i.e. via `slice::sort_by_key`).

Co-authored-by: Federico Rinaldi <gisquerin@gmail.com>

Co-authored-by: Robert Swain <robert.swain@gmail.com>

Co-authored-by: colepoirier <colepoirier@gmail.com>

# Objective

DioxusLabs and Bevy have taken over maintaining what was our abandoned ui layout dependency [stretch](https://github.com/vislyhq/stretch). Dioxus' fork has had a lot of work done on it by @alice-i-cecile, @Weibye , @jkelleyrtp, @mockersf, @HackerFoo, @TimJentzsch and a dozen other contributors and now is in much better shape than stretch was. The updated crate is called taffy and is available on github [here](https://github.com/DioxusLabs/taffy) ([taffy](https://crates.io/crates/taffy) on crates.io). The goal of this PR is to replace stretch v0.3.2 with taffy v0.1.0.

## Solution

I changed the bevy_ui Cargo.toml to depend on taffy instead of stretch and fixed all the errors rustc complained about.

---

## Changelog

Changed bevy_ui layout dependency from stretch to taffy (the maintained fork of stretch).

fixes#677

## Migration Guide

The public api of taffy is different from that of stretch so please advise me on what to do here @alice-i-cecile.

# Objective

- Builds on top of #4938

- Make clustered-forward PBR lighting/shadows functionality callable

- See #3969 for details

## Solution

- Add `PbrInput` struct type containing a `StandardMaterial`, occlusion, world_position, world_normal, and frag_coord

- Split functionality to calculate the unit view vector, and normal-mapped normal into `bevy_pbr::pbr_functions`

- Split high-level shading flow into `pbr(in: PbrInput, N: vec3<f32>, V: vec3<f32>, is_orthographic: bool)` function in `bevy_pbr::pbr_functions`

- Rework `pbr.wgsl` fragment stage entry point to make use of the new functions

- This has been benchmarked on an M1 Max using `many_cubes -- sphere`. `main` had a median frame time of 15.88ms, this PR 15.99ms, which is a 0.69% frame time increase, which is within noise in my opinion.

---

## Changelog

- Added: PBR shading code is now callable. Import `bevy_pbr::pbr_functions` and its dependencies, create a `PbrInput`, calculate the unit view and normal-mapped normal vectors and whether the projection is orthographic, and call `pbr()`!

# Objective

Closes#1557. Partially addresses #3362.

Cleanup the public facing API for storage types. Most of these APIs are difficult to use safely when directly interfacing with these types, and is also currently impossible to interact with in normal ECS use as there is no `World::storages_mut`. The majority of these types should be easy enough to read, and perhaps mutate the contents, but never structurally altered without the same checks in the rest of bevy_ecs code. This both cleans up the public facing types and helps use unused code detection to remove a few of the APIs we're not using internally.

## Solution

- Mark all APIs that take `&mut T` under `bevy_ecs::storage` as `pub(crate)` or `pub(super)`

- Cleanup after it all.

Entire type visibility changes:

- `BlobVec` is `pub(super)`, only storage code should be directly interacting with it.

- `SparseArray` is now `pub(crate)` for the entire type. It's an implementation detail for `Table` and `(Component)SparseSet`.

- `TableMoveResult` is now `pub(crate)

---

## Changelog

TODO

## Migration Guide

Dear God, I hope not.

# Objective

Further speed up visibility checking by removing the main sources of contention for the system.

## Solution

- ~~Make `ComputedVisibility` a resource wrapping a `FixedBitset`.~~

- ~~Remove `ComputedVisibility` as a component.~~

~~This adds a one-bit overhead to every entity in the app world. For a game with 100,000 entities, this is 12.5KB of memory. This is still small enough to fit entirely in most L1 caches. Also removes the need for a per-Entity change detection tick. This reduces the memory footprint of ComputedVisibility 72x.~~

~~The decreased memory usage and less fragmented memory locality should provide significant performance benefits.~~

~~Clearing visible entities should be significantly faster than before:~~

- ~~Setting one `u32` to 0 clears 32 entities per cycle.~~

- ~~No archetype fragmentation to contend with.~~

- ~~Change detection is applied to the resource, so there is no per-Entity update tick requirement.~~

~~The side benefit of this design is that it removes one more "computed component" from userspace. Though accessing the values within it are now less ergonomic.~~

This PR changes `crossbeam_channel` in `check_visibility` to use a `Local<ThreadLocal<Cell<Vec<Entity>>>` to mark down visible entities instead.

Co-Authored-By: TheRawMeatball <therawmeatball@gmail.com>

Co-Authored-By: Aevyrie <aevyrie@gmail.com>

# Objective

The descriptions included in the API docs of `entity` module, `Entity` struct, and `Component` trait have some issues:

1. the concept of entity is not clearly defined,

2. descriptions are a little bit out of place,

3. in a case the description leak too many details about the implementation,

4. some descriptions are not exhaustive,

5. there are not enough examples,

6. the content can be formatted in a much better way.

## Solution

1. ~~Stress the fact that entity is an abstract and elementary concept. Abstract because the concept of entity is not hardcoded into the library but emerges from the interaction of `Entity` with every other part of `bevy_ecs`, like components and world methods. Elementary because it is a fundamental concept that cannot be defined with other terms (like point in euclidean geometry, or time in classical physics).~~ We decided to omit the definition of entity in the API docs ([see why]). It is only described in its relationship with components.

2. Information has been moved to relevant places and links are used instead in the other places.

3. Implementation details about `Entity` have been reduced.

4. Descriptions have been made more exhaustive by stating how to obtain and use items. Entity operations are enriched with `World` methods.

5. Examples have been added or enriched.

6. Sections have been added to organize content. Entity operations are now laid out in a table.

### Todo list

- [x] Break lines at sentence-level.

## For reviewers

- ~~I added a TODO over `Component` docs, make sure to check it out and discuss it if necessary.~~ ([Resolved])

- You can easily check the rendered documentation by doing `cargo doc -p bevy_ecs --no-deps --open`.

[see why]: https://github.com/bevyengine/bevy/pull/4767#discussion_r875106329

[Resolved]: https://github.com/bevyengine/bevy/pull/4767#discussion_r874127825

# Objective

`bevy_ui` doesn't support correctly touch inputs because of two problems in the focus system:

- It attempts to retrieve touch input with a specific `0` id

- It doesn't retrieve touch positions and bases its focus solely on mouse position, absent from mobile devices

## Solution

I added a few methods to the `Touches` resource, allowing to check if **any** touch input was pressed, released or cancelled and to retrieve the *position* of the first pressed touch input and adapted the focus system.

I added a test button to the *iOS* example and it works correclty on emulator. I did not test on a real touch device as:

- Android is not working (https://github.com/bevyengine/bevy/issues/3249)

- I don't have an iOS device

# Objective

- Fixes#4996

## Solution

- Panicking on the global task pool is probably bad. This changes the panicking test to use a single threaded stage to run the test instead.

- I checked the other #[should_panic]

- I also added explicit ordering between the transform propagate system and the parent update system. The ambiguous ordering didn't seem to be causing problems, but the tests are probably more correct this way. The plugins that add these systems have an explicit ordering. I can remove this if necessary.

## Note

I don't have a 100% mental model of why panicking is causing intermittent failures. It probably has to do with a task for one of the other tests landing on the panicking thread when it actually panics. Why this causes a problem I'm not sure, but this PR seems to fix things.

## Open questions

- there are some other #[should_panic] tests that run on the task pool in stage.rs. I don't think we restart panicked threads, so this might be killing most of the threads on the pool. But since they're not causing test failures, we should probably decide what to do about that separately. The solution in this PR won't work since those tests are explicitly testing parallelism.

builds on top of #4780

# Objective

`Reflect` and `Serialize` are currently very tied together because `Reflect` has a `fn serialize(&self) -> Option<Serializable<'_>>` method. Because of that, we can either implement `Reflect` for types like `Option<T>` with `T: Serialize` and have `fn serialize` be implemented, or without the bound but having `fn serialize` return `None`.

By separating `ReflectSerialize` into a separate type (like how it already is for `ReflectDeserialize`, `ReflectDefault`), we could separately `.register::<Option<T>>()` and `.register_data::<Option<T>, ReflectSerialize>()` only if the type `T: Serialize`.

This PR does not change the registration but allows it to be changed in a future PR.

## Solution

- add the type

```rust

struct ReflectSerialize { .. }

impl<T: Reflect + Serialize> FromType<T> for ReflectSerialize { .. }

```

- remove `#[reflect(Serialize)]` special casing.

- when serializing reflect value types, look for `ReflectSerialize` in the `TypeRegistry` instead of calling `value.serialize()`

- changed `EntityCountDiagnosticsPlugin` to not use an exclusive system to get its entity count

- removed mention of `WgpuResourceDiagnosticsPlugin` in example `log_diagnostics` as it doesn't exist anymore

- added ability to enable, disable ~~or toggle~~ a diagnostic (fix#3767)

- made diagnostic values lazy, so they are only computed if the diagnostic is enabled

- do not log an average for diagnostics with only one value

- removed `sum` function from diagnostic as it isn't really useful

- ~~do not keep an average of the FPS diagnostic. it is already an average on the last 20 frames, so the average FPS was an average of the last 20 frames over the last 20 frames~~

- do not compute the FPS value as an average over the last 20 frames but give the actual "instant FPS"

- updated log format to use variable capture

- added some doc

- the frame counter diagnostic value can be reseted to 0

# Objective

- Optional dependencies were enabled by some features as a side effect. for example, enabling the `webgl` feature enables the `bevy_pbr` optional dependency

## Solution

- Use the syntax introduced in rust 1.60 to specify weak dependency features: https://blog.rust-lang.org/2022/04/07/Rust-1.60.0.html#new-syntax-for-cargo-features

> Weak dependency features tackle the second issue where the `"optional-dependency/feature-name"` syntax would always enable `optional-dependency`. However, often you want to enable the feature on the optional dependency only if some other feature has enabled the optional dependency. Starting in 1.60, you can add a ? as in `"package-name?/feature-name"` which will only enable the given feature if something else has enabled the optional dependency.

# Objective

- `.x` is not the correct syntax to access a column in a matrix in WGSL: https://www.w3.org/TR/WGSL/#matrix-access-expr

- naga accepts it and translates it correctly, but it's not valid when shaders are kept as is and used directly in WGSL

## Solution

- Use the correct syntax

# Objective

- Update hashbrown to 0.12

## Solution

- Replace #4004

- As the 0.12 is already in Bevy dependency tree, it shouldn't be an issue to update

- The exception for the 0.11 should be removed once https://github.com/zakarumych/gpu-descriptor/pull/21 is merged and released

- Also removed a few exceptions that weren't needed anymore

# Objective

- KTX2 UASTC format mapping was incorrect. For some reason I had written it to map to a set of data formats based on the count of KTX2 sample information blocks, but the mapping should be done based on the channel type in the sample information.

- This is a valid change pulled out from #4514 as the attempt to fix the array textures there was incorrect

## Solution

- Fix the KTX2 UASTC `DataFormat` enum to contain the correct formats based on the channel types in section 3.10.2 of https://github.khronos.org/KTX-Specification/ (search for "Basis Universal UASTC Format")

- Correctly map from the sample information channel type to `DataFormat`

- Correctly configure transcoding and the resulting texture format based on the `DataFormat`

---

## Changelog

- Fixed: KTX2 UASTC format handling

# Use Case

Seems generally useful, but specifically motivated by my work on the [`bevy_datasize`](https://github.com/BGR360/bevy_datasize) crate.

For that project, I'm implementing "heap size estimators" for all of the Bevy internal types. To do this accurately for `Mesh`, I need to get the lengths of all of the mesh's attribute vectors.

Currently, in order to accomplish this, I am doing the following:

* Checking all of the attributes that are mentioned in the `Mesh` class ([see here](0531ec2d02/src/builtins/render/mesh.rs (L46-L54)))

* Providing the user with an option to configure additional attributes to check ([see here](0531ec2d02/src/config.rs (L7-L21)))

This is both overly complicated and a bit wasteful (since I have to check every attribute name that I know about in case there are attributes set for it).

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

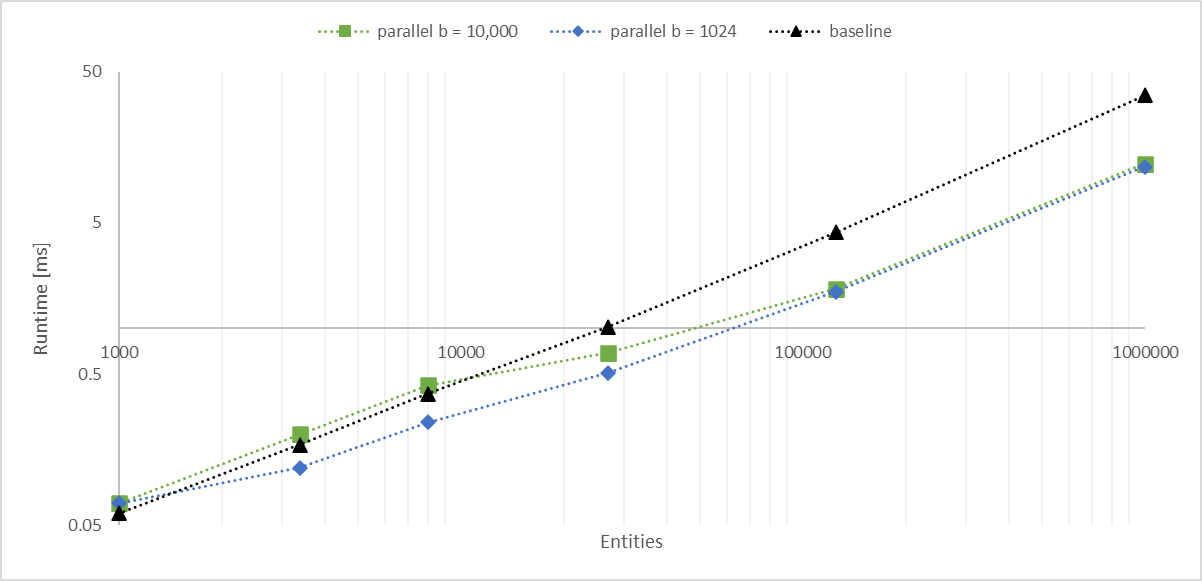

Working with a large number of entities with `Aabbs`, rendered with an instanced shader, I found the bottleneck became the frustum culling system. The goal of this PR is to significantly improve culling performance without any major changes. We should consider constructing a BVH for more substantial improvements.

## Solution

- Convert the inner entity query to a parallel iterator with `par_for_each_mut` using a batch size of 1,024.

- This outperforms single threaded culling when there are more than 1,000 entities.

- Below this they are approximately equal, with <= 10 microseconds of multithreading overhead.

- Above this, the multithreaded version is significantly faster, scaling linearly with core count.

- In my million-entity-workload, this PR improves my framerate by 200% - 300%.

## log-log of `check_visibility` time vs. entities for single/multithreaded

---

## Changelog

Frustum culling is now run with a parallel query. When culling more than a thousand entities, this is faster than the previous method, scaling proportionally with the number of available cores.

# Objective

- Builds on top of #4901

- Separate out PBR lighting, shadows, clustered forward, and utils from `pbr.wgsl` as part of making the PBR code more reusable and extensible.

- See #3969 for details.

## Solution

- Add `bevy_pbr::utils`, `bevy_pbr::clustered_forward`, `bevy_pbr::lighting`, `bevy_pbr::shadows` shader imports exposing many shader functions for external use

- Split `PI`, `saturate()`, `hsv2rgb()`, and `random1D()` into `bevy_pbr::utils`

- Split clustered-forward-specific functions into `bevy_pbr::clustered_forward`, including moving the debug visualization code into a `cluster_debug_visualization()` function in that import

- Split PBR lighting functions into `bevy_pbr::lighting`

- Split shadow functions into `bevy_pbr::shadows`

---

## Changelog

- Added: `bevy_pbr::utils`, `bevy_pbr::clustered_forward`, `bevy_pbr::lighting`, `bevy_pbr::shadows` shader imports exposing many shader functions for external use

- Split `PI`, `saturate()`, `hsv2rgb()`, and `random1D()` into `bevy_pbr::utils`

- Split clustered-forward-specific functions into `bevy_pbr::clustered_forward`, including moving the debug visualization code into a `cluster_debug_visualization()` function in that import

- Split PBR lighting functions into `bevy_pbr::lighting`

- Split shadow functions into `bevy_pbr::shadows`

# Objective

- Add reusable shader functions for transforming positions / normals / tangents between local and world / clip space for 2D and 3D so that they are done in a simple and correct way

- The next step in #3969 so check there for more details.

## Solution

- Add `bevy_pbr::mesh_functions` and `bevy_sprite::mesh2d_functions` shader imports

- These contain `mesh_` and `mesh2d_` versions of the following functions:

- `mesh_position_local_to_world`

- `mesh_position_world_to_clip`

- `mesh_position_local_to_clip`

- `mesh_normal_local_to_world`

- `mesh_tangent_local_to_world`

- Use them everywhere where it is appropriate

- Notably not in the sprite and UI shaders where `mesh2d_position_world_to_clip` could have been used, but including all the functions depends on the mesh binding so I chose to not use the function there

- NOTE: The `mesh_` and `mesh2d_` functions are currently identical. However, if I had defined only `bevy_pbr::mesh_functions` and used that in bevy_sprite, then bevy_sprite would have a runtime dependency on bevy_pbr, which seems undesirable. I also expect that when we have a proper 2D rendering API, these functions will diverge between 2D and 3D.

---

## Changelog

- Added: `bevy_pbr::mesh_functions` and `bevy_sprite::mesh2d_functions` shader imports containing `mesh_` and `mesh2d_` versions of the following functions:

- `mesh_position_local_to_world`

- `mesh_position_world_to_clip`

- `mesh_position_local_to_clip`

- `mesh_normal_local_to_world`

- `mesh_tangent_local_to_world`

## Migration Guide

- The `skin_tangents` function from the `bevy_pbr::skinning` shader import has been replaced with the `mesh_tangent_local_to_world` function from the `bevy_pbr::mesh_functions` shader import

# Objective

- Fix a type inference regression introduced by #3001

- Make read only bounds on world queries more user friendly

ptrification required you to write `Q::Fetch: ReadOnlyFetch` as `for<'w> QueryFetch<'w, Q>: ReadOnlyFetch` which has the same type inference problem as `for<'w> QueryFetch<'w, Q>: FilterFetch<'w>` had, i.e. the following code would error:

```rust

#[derive(Component)]

struct Foo;

fn bar(a: Query<(&Foo, Without<Foo>)>) {

foo(a);

}

fn foo<Q: WorldQuery>(a: Query<Q, ()>)

where

for<'w> QueryFetch<'w, Q>: ReadOnlyFetch,

{

}

```

`for<..>` bounds are also rather user unfriendly..

## Solution

Remove the `ReadOnlyFetch` trait in favour of a `ReadOnlyWorldQuery` trait, and remove `WorldQueryGats::ReadOnlyFetch` in favor of `WorldQuery::ReadOnly` allowing the previous code snippet to be written as:

```rust

#[derive(Component)]

struct Foo;

fn bar(a: Query<(&Foo, Without<Foo>)>) {

foo(a);

}

fn foo<Q: ReadOnlyWorldQuery>(a: Query<Q, ()>) {}

```

This avoids the `for<...>` bound which makes the code simpler and also fixes the type inference issue.

The reason for moving the two functions out of `FetchState` and into `WorldQuery` is to allow the world query `&mut T` to share a `State` with the `&T` world query so that it can have `type ReadOnly = &T`. Presumably it would be possible to instead have a `ReadOnlyRefMut<T>` world query and then do `type ReadOnly = ReadOnlyRefMut<T>` much like how (before this PR) we had a `ReadOnlyWriteFetch<T>`. A side benefit of the current solution in this PR is that it will likely make it easier in the future to support an API such as `Query<&mut T> -> Query<&T>`. The primary benefit IMO is just that `ReadOnlyRefMut<T>` and its associated fetch would have to reimplement all of the logic that the `&T` world query impl does but this solution avoids that :)

---

## Changelog/Migration Guide

The trait `ReadOnlyFetch` has been replaced with `ReadOnlyWorldQuery` along with the `WorldQueryGats::ReadOnlyFetch` assoc type which has been replaced with `<WorldQuery::ReadOnly as WorldQueryGats>::Fetch`

- Any where clauses such as `QueryFetch<Q>: ReadOnlyFetch` should be replaced with `Q: ReadOnlyWorldQuery`.

- Any custom world query impls should implement `ReadOnlyWorldQuery` insead of `ReadOnlyFetch`

Functions `update_component_access` and `update_archetype_component_access` have been moved from the `FetchState` trait to `WorldQuery`

- Any callers should now call `Q::update_component_access(state` instead of `state.update_component_access` (and `update_archetype_component_access` respectively)

- Any custom world query impls should move the functions from the `FetchState` impl to `WorldQuery` impl

`WorldQuery` has been made an `unsafe trait`, `FetchState` has been made a safe `trait`. (I think this is how it should have always been, but regardless this is _definitely_ necessary now that the two functions have been moved to `WorldQuery`)

- If you have a custom `FetchState` impl make it a normal `impl` instead of `unsafe impl`

- If you have a custom `WorldQuery` impl make it an `unsafe impl`, if your code was sound before it is going to still be sound

This upgrade should bring some significant performance improvements to

instrumentation. These are mostly achieved by disabling features (by

default) that are likely not widely used by default – collection of

callstacks and support for fibers that wasn't used for anything in

particular yet. For callstack collection it might be worthwhile to

provide a mechanism to enable this at runtime by calling

`TracyLayer::with_stackdepth`.

These should bring the cost of a single span down from 30+µs per span to

a more reasonable 1.5µs or so and down to the ns scale for events (on my

1st gen Ryzen machine, anyway.) There is still a fair amount of overhead

over plain tracy_client instrumentation in formatting and such, but

dealing with it requires significant effort and this is a

straightforward improvement to have for the time being.

Co-authored-by: Simonas Kazlauskas <git@kazlauskas.me>

# Objective

- Fixes#4271

## Solution

- Check for a pending transition in addition to a scheduled operation.

- I don't see a valid reason for updating the state unless both `scheduled` and `transition` are empty.

# Objective

Overflow::Hidden doesn't work correctly with scale_factor_override.

If you run the Bevy UI example with scale_factor_override 3 you'll see half clipped text around the edges of the scrolling listbox.

The problem seems to be that the corners of the node are transformed before the amount of clipping required is calculated. But then that transformed clip is compared to the original untransformed size of the node rect to see if it should be culled or not. With a higher scale factor the relative size of the untransformed node rect is going to be really big, so the overflow isn't culled.

# Solution

Multiply the size of the node rect by extracted_uinode.transform before the cull test.

# Objective

Fix#4958

There was 4 issues:

- this is not true in WASM and on macOS: f28b921209/examples/3d/split_screen.rs (L90)

- ~~I made sure the system was running at least once~~

- I'm sending the event on window creation

- in webgl, setting a viewport has impacts on other render passes

- only in webgl and when there is a custom viewport, I added a render pass without a custom viewport

- shaderdef NO_ARRAY_TEXTURES_SUPPORT was not used by the 2d pipeline

- webgl feature was used but not declared in bevy_sprite, I added it to the Cargo.toml

- shaderdef NO_STORAGE_BUFFERS_SUPPORT was not used by the 2d pipeline

- I added it based on the BufferBindingType

The last commit changes the two last fixes to add the shaderdefs in the shader cache directly instead of needing to do it in each pipeline

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

- Closes#4464

## Solution

- Specify default mag and min filter types for `Image` instead of using `wgpu`'s defaults.

---

## Changelog

### Changed

- Default `Image` filtering changed from `Nearest` to `Linear`.

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

> Resolves#4504

It can be helpful to have access to type information without requiring an instance of that type. Especially for `Reflect`, a lot of the gathered type information is known at compile-time and should not necessarily require an instance.

## Solution

Created a dedicated `TypeInfo` enum to store static type information. All types that derive `Reflect` now also implement the newly created `Typed` trait:

```rust

pub trait Typed: Reflect {

fn type_info() -> &'static TypeInfo;

}

```

> Note: This trait was made separate from `Reflect` due to `Sized` restrictions.

If you only have access to a `dyn Reflect`, just call `.get_type_info()` on it. This new trait method on `Reflect` should return the same value as if you had called it statically.

If all you have is a `TypeId` or type name, you can get the `TypeInfo` directly from the registry using the `TypeRegistry::get_type_info` method (assuming it was registered).

### Usage

Below is an example of working with `TypeInfo`. As you can see, we don't have to generate an instance of `MyTupleStruct` in order to get this information.

```rust

#[derive(Reflect)]

struct MyTupleStruct(usize, i32, MyStruct);

let info = MyTupleStruct::type_info();

if let TypeInfo::TupleStruct(info) = info {

assert!(info.is::<MyTupleStruct>());

assert_eq!(std::any::type_name::<MyTupleStruct>(), info.type_name());

assert!(info.field_at(1).unwrap().is::<i32>());

} else {

panic!("Expected `TypeInfo::TupleStruct`");

}

```

### Manual Implementations

It's not recommended to manually implement `Typed` yourself, but if you must, you can use the `TypeInfoCell` to automatically create and manage the static `TypeInfo`s for you (which is very helpful for blanket/generic impls):

```rust

use bevy_reflect::{Reflect, TupleStructInfo, TypeInfo, UnnamedField};

use bevy_reflect::utility::TypeInfoCell;

struct Foo<T: Reflect>(T);

impl<T: Reflect> Typed for Foo<T> {

fn type_info() -> &'static TypeInfo {

static CELL: TypeInfoCell = TypeInfoCell::generic();

CELL.get_or_insert::<Self, _>(|| {

let fields = [UnnamedField:🆕:<T>()];

let info = TupleStructInfo:🆕:<Self>(&fields);

TypeInfo::TupleStruct(info)

})

}

}

```

## Benefits

One major benefit is that this opens the door to other serialization methods. Since we can get all the type info at compile time, we can know how to properly deserialize something like:

```rust

#[derive(Reflect)]

struct MyType {

foo: usize,

bar: Vec<String>

}

// RON to be deserialized:

(

type: "my_crate::MyType", // <- We now know how to deserialize the rest of this object

value: {

// "foo" is a value type matching "usize"

"foo": 123,

// "bar" is a list type matching "Vec<String>" with item type "String"

"bar": ["a", "b", "c"]

}

)

```

Not only is this more compact, but it has better compatibility (we can change the type of `"foo"` to `i32` without having to update our serialized data).

Of course, serialization/deserialization strategies like this may need to be discussed and fully considered before possibly making a change. However, we will be better equipped to do that now that we can access type information right from the registry.

## Discussion

Some items to discuss:

1. Duplication. There's a bit of overlap with the existing traits/structs since they require an instance of the type while the type info structs do not (for example, `Struct::field_at(&self, index: usize)` and `StructInfo::field_at(&self, index: usize)`, though only `StructInfo` is accessible without an instance object). Is this okay, or do we want to handle it in another way?

2. Should `TypeInfo::Dynamic` be removed? Since the dynamic types don't have type information available at runtime, we could consider them `TypeInfo::Value`s (or just even just `TypeInfo::Struct`). The intention with `TypeInfo::Dynamic` was to keep the distinction from these dynamic types and actual structs/values since users might incorrectly believe the methods of the dynamic type's info struct would map to some contained data (which isn't possible statically).

4. General usefulness of this change, including missing/unnecessary parts.

5. Possible changes to the scene format? (One possible issue with changing it like in the example above might be that we'd have to be careful when handling generic or trait object types.)

## Compile Tests

I ran a few tests to compare compile times (as suggested [here](https://github.com/bevyengine/bevy/pull/4042#discussion_r876408143)). I toggled `Reflect` and `FromReflect` derive macros using `cfg_attr` for both this PR (aa5178e773) and main (c309acd432).

<details>

<summary>See More</summary>

The test project included 250 of the following structs (as well as a few other structs):

```rust

#[derive(Default)]

#[cfg_attr(feature = "reflect", derive(Reflect))]

#[cfg_attr(feature = "from_reflect", derive(FromReflect))]

pub struct Big001 {

inventory: Inventory,

foo: usize,

bar: String,

baz: ItemDescriptor,

items: [Item; 20],

hello: Option<String>,

world: HashMap<i32, String>,

okay: (isize, usize, /* wesize */),

nope: ((String, String), (f32, f32)),

blah: Cow<'static, str>,

}

```

> I don't know if the compiler can optimize all these duplicate structs away, but I think it's fine either way. We're comparing times, not finding the absolute worst-case time.

I only ran each build 3 times using `cargo build --timings` (thank you @devil-ira), each of which were preceeded by a `cargo clean --package bevy_reflect_compile_test`.

Here are the times I got:

| Test | Test 1 | Test 2 | Test 3 | Average |

| -------------------------------- | ------ | ------ | ------ | ------- |

| Main | 1.7s | 3.1s | 1.9s | 2.33s |

| Main + `Reflect` | 8.3s | 8.6s | 8.1s | 8.33s |

| Main + `Reflect` + `FromReflect` | 11.6s | 11.8s | 13.8s | 12.4s |

| PR | 3.5s | 1.8s | 1.9s | 2.4s |

| PR + `Reflect` | 9.2s | 8.8s | 9.3s | 9.1s |

| PR + `Reflect` + `FromReflect` | 12.9s | 12.3s | 12.5s | 12.56s |

</details>

---

## Future Work

Even though everything could probably be made `const`, we unfortunately can't. This is because `TypeId::of::<T>()` is not yet `const` (see https://github.com/rust-lang/rust/issues/77125). When it does get stabilized, it would probably be worth coming back and making things `const`.

Co-authored-by: MrGVSV <49806985+MrGVSV@users.noreply.github.com>

# Objective

- Spawning a scene is handled as a special case with a command `spawn_scene` that takes an handle but doesn't let you specify anything else. This is the only handle that works that way.

- Workaround for this have been to add the `spawn_scene` on `ChildBuilder` to be able to specify transform of parent, or to make the `SceneSpawner` available to be able to select entities from a scene by their instance id

## Solution

Add a bundle

```rust

pub struct SceneBundle {

pub scene: Handle<Scene>,

pub transform: Transform,

pub global_transform: GlobalTransform,

pub instance_id: Option<InstanceId>,

}

```

and instead of

```rust

commands.spawn_scene(asset_server.load("models/FlightHelmet/FlightHelmet.gltf#Scene0"));

```

you can do

```rust

commands.spawn_bundle(SceneBundle {

scene: asset_server.load("models/FlightHelmet/FlightHelmet.gltf#Scene0"),

..Default::default()

});

```

The scene will be spawned as a child of the entity with the `SceneBundle`

~I would like to remove the command `spawn_scene` in favor of this bundle but didn't do it yet to get feedback first~

Co-authored-by: François <8672791+mockersf@users.noreply.github.com>

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

Following #4855, `Column` is just a parallel `BlobVec`/`Vec<UnsafeCell<ComponentTicks>>` pair, which is identical to the dense and ticks vecs in `ComponentSparseSet`, which has some code duplication with `Column`.

## Solution

Replace dense and ticks in `ComponentSparseSet` with a `Column`.

# Objective

Most of our `Iterator` impls satisfy the requirements of `std::iter::FusedIterator`, which has internal specialization that optimizes `Interator::fuse`. The std lib iterator combinators do have a few that rely on `fuse`, so this could optimize those use cases. I don't think we're using any of them in the engine itself, but beyond a light increase in compile time, it doesn't hurt to implement the trait.

## Solution

Implement the trait for all eligible iterators in first party crates. Also add a missing `ExactSizeIterator` on an iterator that could use it.

Right now, a direct reference to the target TaskPool is required to launch tasks on the pools, despite the three newtyped pools (AsyncComputeTaskPool, ComputeTaskPool, and IoTaskPool) effectively acting as global instances. The need to pass a TaskPool reference adds notable friction to spawning subtasks within existing tasks. Possible use cases for this may include chaining tasks within the same pool like spawning separate send/receive I/O tasks after waiting on a network connection to be established, or allowing cross-pool dependent tasks like starting dependent multi-frame computations following a long I/O load.

Other task execution runtimes provide static access to spawning tasks (i.e. `tokio::spawn`), which is notably easier to use than the reference passing required by `bevy_tasks` right now.

This PR makes does the following:

* Adds `*TaskPool::init` which initializes a `OnceCell`'ed with a provided TaskPool. Failing if the pool has already been initialized.

* Adds `*TaskPool::get` which fetches the initialized global pool of the respective type or panics. This generally should not be an issue in normal Bevy use, as the pools are initialized before they are accessed.

* Updated default task pool initialization to either pull the global handles and save them as resources, or if they are already initialized, pull the a cloned global handle as the resource.

This should make it notably easier to build more complex task hierarchies for dependent tasks. It should also make writing bevy-adjacent, but not strictly bevy-only plugin crates easier, as the global pools ensure it's all running on the same threads.

One alternative considered is keeping a thread-local reference to the pool for all threads in each pool to enable the same `tokio::spawn` interface. This would spawn tasks on the same pool that a task is currently running in. However this potentially leads to potential footgun situations where long running blocking tasks run on `ComputeTaskPool`.

# Objective

Users should be able to configure depth load operations on cameras. Currently every camera clears depth when it is rendered. But sometimes later passes need to rely on depth from previous passes.

## Solution

This adds the `Camera3d::depth_load_op` field with a new `Camera3dDepthLoadOp` value. This is a custom type because Camera3d uses "reverse-z depth" and this helps us record and document that in a discoverable way. It also gives us more control over reflection + other trait impls, whereas `LoadOp` is owned by the `wgpu` crate.

```rust

commands.spawn_bundle(Camera3dBundle {

camera_3d: Camera3d {

depth_load_op: Camera3dDepthLoadOp::Load,

..default()

},

..default()

});

```

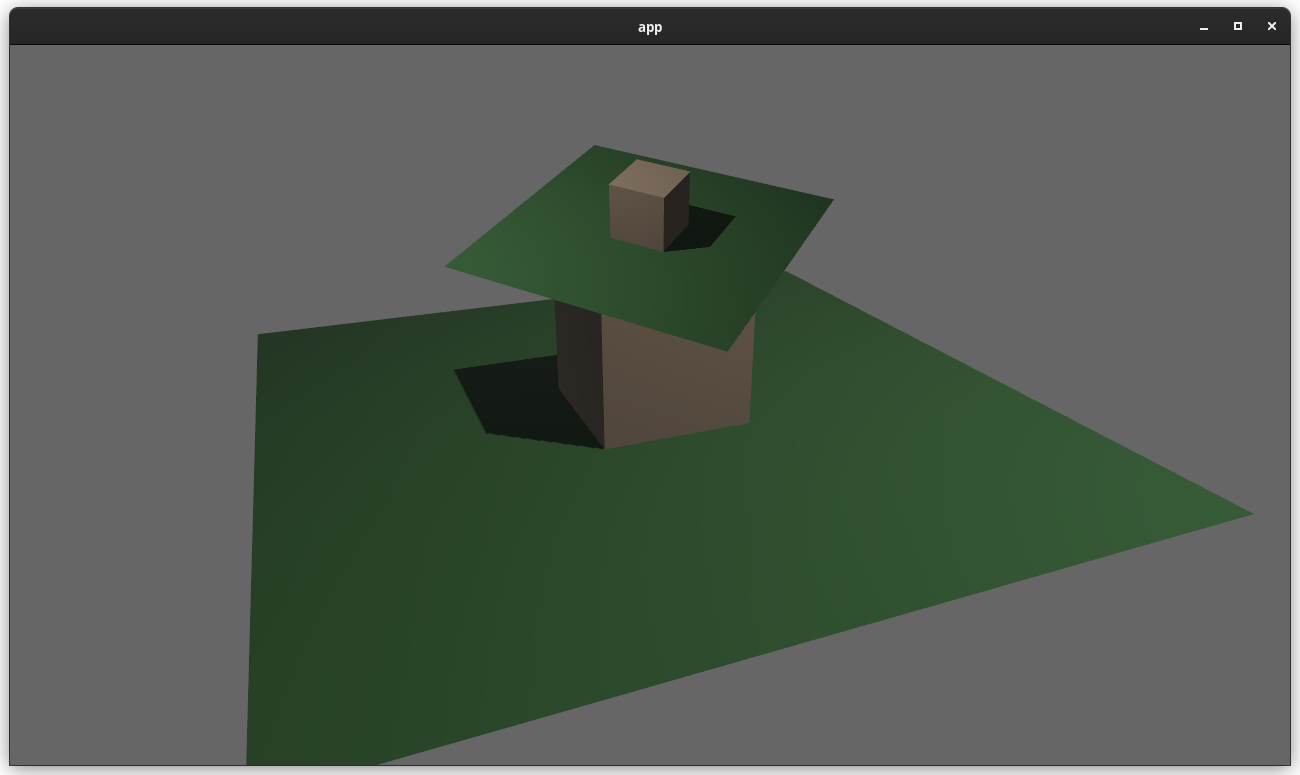

### two_passes example with the "second pass" camera configured to the default (clear depth to 0.0)

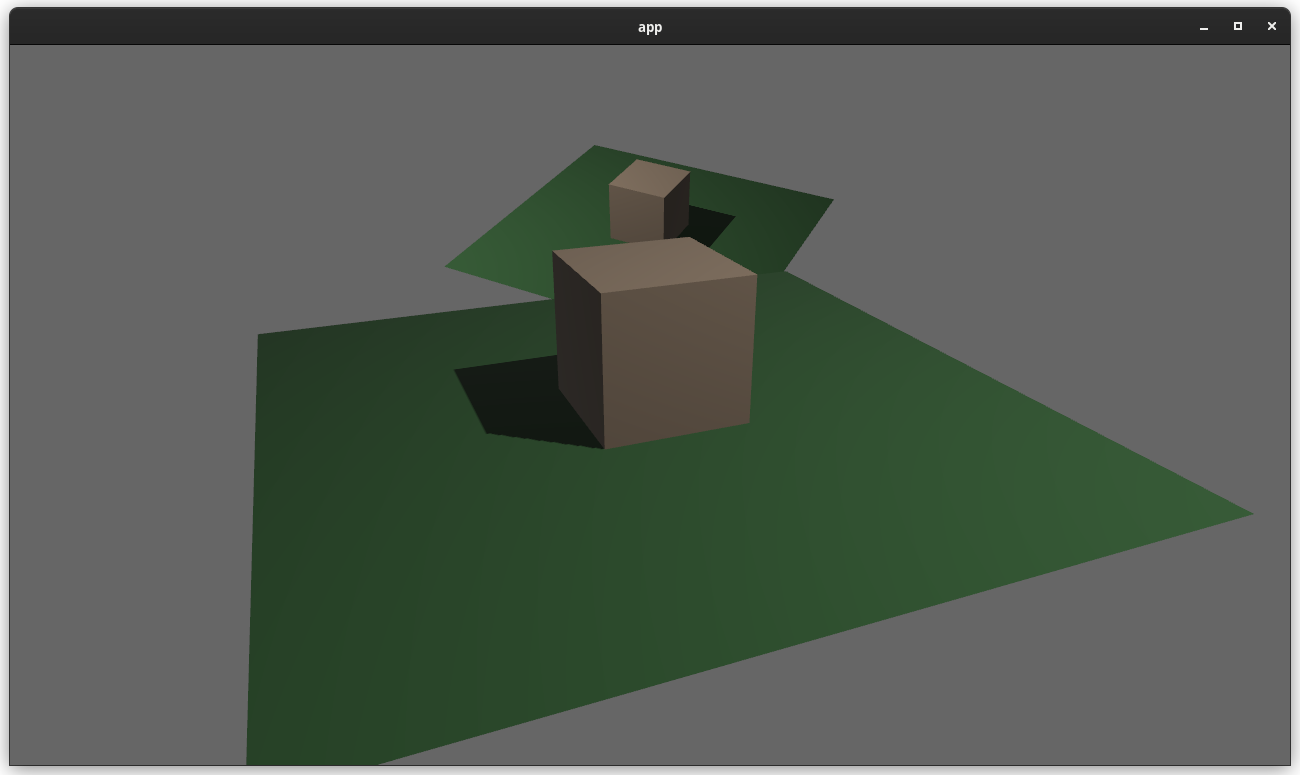

### two_passes example with the "second pass" camera configured to "load" the depth

---

## Changelog

### Added

* `Camera3d` now has a `depth_load_op` field, which can configure the Camera's main 3d pass depth loading behavior.

While working on a refactor of `bevy_mod_picking` to include viewport-awareness, I found myself writing these functions to test if a cursor coordinate was inside the camera's rendered area.

# Objective

- Simplify conversion from physical to logical pixels

- Add methods that returns the dimensions of the viewport as a min-max rect

---

## Changelog

- Added `Camera::to_logical`

- Added `Camera::physical_viewport_rect`

- Added `Camera::logical_viewport_rect`