Currently, Bevy rebuilds the buffer containing all the transforms for

joints every frame, during the extraction phase. This is inefficient in

cases in which many skins are present in the scene and their joints

don't move, such as the Caldera test scene.

To address this problem, this commit switches skin extraction to use a set of retained GPU buffers with allocations managed by the offset allocator. I use fine-grained change detection in order to determine which skins need updating. Note that the granularity is at the level of an entire skin, not individual joints. Change detection at the level of individual joints would yield poor performance in the common case in which an entire skin is animated at once. Additionally, this patch gains performance because changing joint transforms no longer requires the skinned mesh to be re-extracted.

Note that this optimization can be a double-edged sword. In

`many_foxes`, fine-grained change detection regressed the performance of

`extract_skins` by 3.4x. This is because every joint is updated every

frame in that example, so change detection is pointless and is pure

overhead. Because the `many_foxes` workload is actually representative

of animated scenes, this patch includes a heuristic that disables

fine-grained change detection if the number of transformed entities in

the frame exceeds a certain fraction of the total number of joints.

Currently, this threshold is set to 25%. Note that this is a crude

heuristic, because it doesn't distinguish between the number of

transformed *joints* and the number of transformed *entities*; however,

it should be good enough to yield the optimum code path most of the

time.
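To make the retained-offset scheme and the 25% heuristic concrete, here is a minimal CPU-side sketch. Everything in it (`RetainedJointBuffer`, `SkinUpdate`, `extract_skins`, the append-only allocation) is a hypothetical simplification. The patch itself uses Bevy's offset allocator and GPU buffers, and their real types and signatures are not shown here.

```rust
use std::collections::HashMap;

// Hypothetical stand-ins for Bevy's entity and matrix types.
type SkinId = u32;
type Mat4 = [f32; 16];

/// Skip per-skin change detection once the transformed entities exceed
/// this fraction of the total joint count (25%).
const CHANGE_DETECTION_THRESHOLD: f64 = 0.25;

/// A retained joint buffer in which each skin keeps the same offset every frame.
#[derive(Default)]
struct RetainedJointBuffer {
    joints: Vec<Mat4>,
    /// Maps each skin to the offset of its first joint.
    offsets: HashMap<SkinId, usize>,
}

impl RetainedJointBuffer {
    /// Returns the skin's existing offset, or appends space for a new skin.
    /// (A real offset allocator would also free and reuse ranges.)
    fn offset_for(&mut self, skin: SkinId, joint_count: usize) -> usize {
        if let Some(&offset) = self.offsets.get(&skin) {
            return offset;
        }
        let offset = self.joints.len();
        self.joints.resize(offset + joint_count, [0.0; 16]);
        self.offsets.insert(skin, offset);
        offset
    }
}

/// The crude heuristic: compares transformed *entities* against total *joints*.
fn use_change_detection(transformed_entities: usize, total_joints: usize) -> bool {
    (transformed_entities as f64) <= CHANGE_DETECTION_THRESHOLD * total_joints as f64
}

/// One skin to extract this frame.
struct SkinUpdate {
    id: SkinId,
    /// Set when any joint of this skin moved (newly added skins count as changed).
    changed: bool,
    joints: Vec<Mat4>,
}

/// Writes joint matrices into the retained buffer; returns how many skins were rewritten.
fn extract_skins(
    buffer: &mut RetainedJointBuffer,
    skins: &[SkinUpdate],
    transformed_entities: usize,
) -> usize {
    let total_joints: usize = skins.iter().map(|skin| skin.joints.len()).sum();
    let fine_grained = use_change_detection(transformed_entities, total_joints);
    let mut rewritten = 0;
    for skin in skins {
        let offset = buffer.offset_for(skin.id, skin.joints.len());
        // With fine-grained change detection, unchanged skins keep last frame's data.
        if fine_grained && !skin.changed {
            continue;
        }
        buffer.joints[offset..offset + skin.joints.len()].copy_from_slice(&skin.joints);
        rewritten += 1;
    }
    rewritten
}
```

Once a skin's offset is allocated, it never moves. Anything that stores that offset therefore stays valid from frame to frame.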

Finally, this patch fixes a bug whereby skinned meshes are incorrectly retained if the buffer offsets of their joints change from frame to frame. To fix this without retaining skins, we would have to re-extract every skinned mesh every frame, which was a significant regression on Caldera. With this PR, by contrast, mesh joints stay at the same buffer offset, so we don't have to update the `MeshInputUniform` containing the buffer offset every frame. This also makes PR #17717 easier to implement. That PR uses the buffer offset from the previous frame, and calculating it is simpler if the previous frame's buffer offset is guaranteed to be identical to that of the current frame.

On Caldera, this patch reduces the time spent in `extract_skins` from

1.79 ms to near zero. On `many_foxes`, this patch regresses the

performance of `extract_skins` by approximately 10%-25%, depending on

the number of foxes. This has only a small impact on frame rate.

The GPU can fill out many of the fields in `IndirectParametersMetadata`

using information it already has:

* `early_instance_count` and `late_instance_count` are always

initialized to zero.

* `mesh_index` is already present in the work item buffer as the

`input_index` of the first work item in each batch.

This patch moves these fields to a separate buffer, the *GPU indirect parameters metadata* buffer. That way, it avoids having to write them on the CPU during `batch_and_prepare_binned_render_phase`. This effectively reduces the number of bits that that function must write per mesh from 160 to 64 (in addition to the 64 bits per mesh *instance*).

Additionally, this PR refactors `UntypedPhaseIndirectParametersBuffers` to add another layer, `MeshClassIndirectParametersBuffers`, which allows abstracting over the buffers corresponding to indexed and non-indexed meshes. This patch doesn't make much use of this abstraction, but forthcoming patches will, and it's a cleaner approach overall.

This didn't seem to have much of an effect by itself on

`batch_and_prepare_binned_render_phase` time, but subsequent PRs

dependent on this PR yield roughly a 2× speedup.

# Objective

Fix panic in `custom_render_phase`.

This example was broken by #17764, but that breakage evolved into a

panic after #17849. This new panic seems to illustrate the problem in a

pretty straightforward way.

```
2025-02-15T00:44:11.833622Z INFO bevy_diagnostic::system_information_diagnostics_plugin::internal: SystemInfo { os: "macOS 15.3 Sequoia", kernel: "24.3.0", cpu: "Apple M4 Max", core_count: "16", memory: "64.0 GiB" }
2025-02-15T00:44:11.908328Z INFO bevy_render::renderer: AdapterInfo { name: "Apple M4 Max", vendor: 0, device: 0, device_type: IntegratedGpu, driver: "", driver_info: "", backend: Metal }
2025-02-15T00:44:12.314930Z INFO bevy_winit::system: Creating new window App (0v1)
thread 'Compute Task Pool (1)' panicked at /Users/me/src/bevy/crates/bevy_ecs/src/system/function_system.rs:216:28:
bevy_render::batching::gpu_preprocessing::batch_and_prepare_sorted_render_phase<custom_render_phase::Stencil3d, custom_render_phase::StencilPipeline> could not access system parameter ResMut<PhaseBatchedInstanceBuffers<Stencil3d, MeshUniform>>
```

## Solution

Add a `SortedRenderPhasePlugin` for the custom phase.

## Testing

`cargo run --example custom_render_phase`

The `output_index` field is only used in direct mode, and the

`indirect_parameters_index` field is only used in indirect mode.

Consequently, we can combine them into a single field, reducing the size

of `PreprocessWorkItem`, which

`batch_and_prepare_{binned,sorted}_render_phase` must construct every

frame for every mesh instance, from 96 bits to 64 bits.
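The size arithmetic can be checked with a hypothetical `#[repr(C)]` mirror of the work item. The field names below are illustrative assumptions, not Bevy's exact definitions. The point is that three `u32` fields occupy 96 bits and two occupy 64.

```rust
use std::mem::size_of;

/// Hypothetical layout before the change: both indices stored separately.
#[repr(C)]
#[allow(dead_code)]
struct PreprocessWorkItemBefore {
    input_index: u32,
    output_index: u32,              // read only in direct mode
    indirect_parameters_index: u32, // read only in indirect mode
}

/// Hypothetical layout after the change: one field whose meaning depends on the mode.
#[repr(C)]
struct PreprocessWorkItem {
    input_index: u32,
    /// Output index in direct mode; indirect parameters index in indirect mode.
    output_or_indirect_parameters_index: u32,
}

/// Builds a work item, storing whichever index the current mode needs.
fn make_work_item(
    input_index: u32,
    indirect_mode: bool,
    output_index: u32,
    indirect_parameters_index: u32,
) -> PreprocessWorkItem {
    PreprocessWorkItem {
        input_index,
        output_or_indirect_parameters_index: if indirect_mode {
            indirect_parameters_index
        } else {
            output_index
        },
    }
}

/// Sizes in bits of the old and new layouts.
fn work_item_bits() -> (usize, usize) {
    (
        size_of::<PreprocessWorkItemBefore>() * 8,
        size_of::<PreprocessWorkItem>() * 8,
    )
}
```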

Currently, invocations of `batch_and_prepare_binned_render_phase` and

`batch_and_prepare_sorted_render_phase` can't run in parallel because

they write to scene-global GPU buffers. After PR #17698,

`batch_and_prepare_binned_render_phase` started accounting for the

lion's share of the CPU time, causing us to be strongly CPU-bound on

scenes like Caldera when occlusion culling was on (because of the

overhead of batching for the Z-prepass). Although I eventually plan to

optimize `batch_and_prepare_binned_render_phase`, we can obtain

significant wins now by parallelizing that system across phases.

This commit splits all GPU buffers that `batch_and_prepare_binned_render_phase` and `batch_and_prepare_sorted_render_phase` touch into separate buffers for each phase so that the scheduler can run those phases in parallel.

At the end of batch preparation, we gather the render phases up into a

single resource with a new *collection* phase. Because we already run

mesh preprocessing separately for each phase in order to make occlusion

culling work, this is actually a cleaner separation. For example, mesh

output indices (the unique ID that identifies each mesh instance on GPU)

are now guaranteed to be sequential starting from 0, which will simplify

the forthcoming work to remove them in favor of the compute dispatch ID.
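This rough sketch shows why per-phase buffers enable parallelism. It uses `std::thread::scope` as a stand-in for Bevy's ECS scheduler, which is what actually runs the systems in parallel. `PhaseBuffers` and `batch_and_prepare_phase` are hypothetical. Each phase owns its buffers, so no locking is needed, and output indices start at 0 within each phase.

```rust
use std::thread;

/// Hypothetical CPU-side staging data for one phase's GPU buffers.
#[derive(Debug)]
struct PhaseBuffers {
    phase_name: &'static str,
    /// Output index of each mesh instance, local to this phase.
    output_indices: Vec<u32>,
}

/// Stand-in for batch preparation: assigns sequential output indices starting at 0.
fn batch_and_prepare_phase(phase_name: &'static str, instance_count: u32) -> PhaseBuffers {
    PhaseBuffers {
        phase_name,
        output_indices: (0..instance_count).collect(),
    }
}

/// Prepares every phase in parallel (there is no shared buffer to contend on),
/// then gathers the per-phase results into one collection.
fn prepare_all_phases(phases: &[(&'static str, u32)]) -> Vec<PhaseBuffers> {
    thread::scope(|scope| {
        let handles: Vec<_> = phases
            .iter()
            .map(|&(name, count)| scope.spawn(move || batch_and_prepare_phase(name, count)))
            .collect();
        // Collection step: join in phase order.
        handles.into_iter().map(|handle| handle.join().unwrap()).collect()
    })
}
```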

On Caldera, this brings the frame time down to approximately 9.1 ms with

occlusion culling on.

Currently, we look up each `MeshInputUniform` index in a hash table that

maps the main entity ID to the index every frame. This is inefficient,

cache unfriendly, and unnecessary, as the `MeshInputUniform` index for

an entity remains the same from frame to frame (even if the input

uniform changes). This commit changes the `IndexSet` in the `RenderBin`

to an `IndexMap` that maps the `MainEntity` to `MeshInputUniformIndex`

(a new type that this patch adds for more type safety).

On Caldera with parallel `batch_and_prepare_binned_render_phase`, this

patch improves that function from 3.18 ms to 2.42 ms, a 31% speedup.

# Objective

Because of mesh preprocessing, users cannot rely on

`@builtin(instance_index)` in order to reference external data, as the

instance index is not stable, either from frame to frame or relative to

the total spawn order of mesh instances.

## Solution

Add a user-supplied mesh index that can be used for referencing external data when drawing instanced meshes.

Closes #13373

## Testing

Benchmarked `many_cubes` showing no difference in total frame time.

## Showcase

https://github.com/user-attachments/assets/80620147-aafc-4d9d-a8ee-e2149f7c8f3b

---------

Co-authored-by: IceSentry <IceSentry@users.noreply.github.com>

# Objective

https://github.com/bevyengine/bevy/issues/17746

## Solution

- Change `Image.data` from a `Vec<u8>` to an `Option<Vec<u8>>`

- Add functions to help with creating images

## Testing

- Did you test these changes? If so, how?

All current tests pass

Tested a variety of existing examples to make sure they don't crash

(they don't)

- If relevant, what platforms did you test these changes on, and are

there any important ones you can't test?

Linux x86 64-bit NixOS

---

## Migration Guide

Code that directly accesses `Image` data will now need to use `unwrap` or handle the case where no data is provided.

The behaviour of `new_fill` has changed slightly, but not in a way that is likely to affect anything. It no longer panics, and it fills the whole texture instead of leaving black pixels when the length of the provided data does not evenly divide the size of the image.
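Here is a sketch of the migration, using a stand-in `Image` struct rather than Bevy's real type. `fill_repeating` is a hypothetical illustration of filling the whole buffer by cycling the pixel bytes. It is not `new_fill`'s actual implementation.

```rust
/// Hypothetical stand-in for Bevy's `Image`; only the `data` field matters here.
struct Image {
    data: Option<Vec<u8>>,
}

/// Before the change, code could index `image.data` directly. Now the `None`
/// case (no CPU-side data) has to be handled explicitly.
fn first_byte(image: &Image) -> Option<u8> {
    image.data.as_ref()?.first().copied()
}

/// One way to fill a buffer by repeating a pixel so that every byte is
/// written, even when the byte length is not a multiple of the pixel size.
/// This is an assumption for illustration, not `new_fill`'s actual code.
fn fill_repeating(byte_len: usize, pixel: &[u8]) -> Vec<u8> {
    pixel.iter().copied().cycle().take(byte_len).collect()
}
```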

---------

Co-authored-by: IceSentry <IceSentry@users.noreply.github.com>

# Objective

- It's currently very hard for beginners and advanced users to get a

full understanding of a complete render phase.

## Solution

- Implement a full custom render phase

- The render phase in the example is intended to show a custom stencil

phase that renders the stencil in red directly on the screen

---

## Showcase

<img width="1277" alt="image" src="https://github.com/user-attachments/assets/e9dc0105-4fb6-463f-ad53-0529b575fd28" />

## Notes

More documentation explaining what is going on is still needed, but the example works and can already help some people.

We might want to consider using a batched phase and cold specialization

in the future, but the example is already complex enough as it is.

---------

Co-authored-by: Christopher Biscardi <chris@christopherbiscardi.com>

PR #17684 broke occlusion culling because it neglected to set the

indirect parameter offsets for the late mesh preprocessing stage if the

work item buffers were already set. This PR moves the update of those

values to a new function, `init_work_item_buffers`, which is

unconditionally called for every phase every frame.

Note that there's some complexity in order to handle the case in which

occlusion culling was enabled on one frame and disabled on the next, or

vice versa. This was necessary in order to make the occlusion culling

toggle in the `occlusion_culling` example work again.

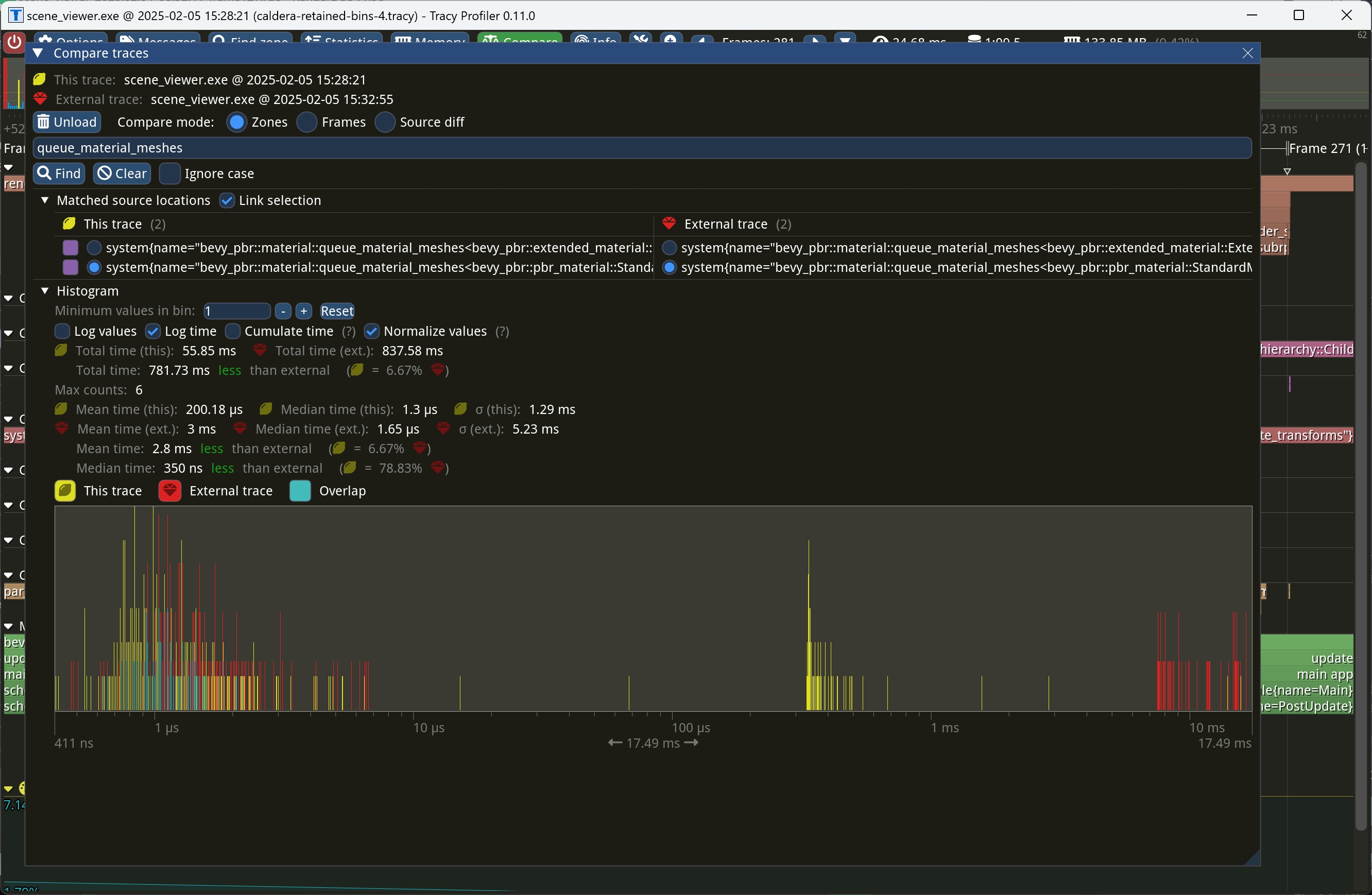

This PR makes Bevy keep entities in bins from frame to frame if they

haven't changed. This reduces the time spent in `queue_material_meshes`

and related functions to near zero for static geometry. This patch uses

the same change tick technique that #17567 uses to detect when meshes

have changed in such a way as to require re-binning.

In order to quickly find the relevant bin for an entity when that entity

has changed, we introduce a new type of cache, the *bin key cache*. This

cache stores a mapping from main world entity ID to cached bin key, as

well as the tick of the most recent change to the entity. As we iterate

through the visible entities in `queue_material_meshes`, we check the

cache to see whether the entity needs to be re-binned. If it doesn't,

then we mark it as clean in the `valid_cached_entity_bin_keys` bit set.

If it does, then we insert it into the correct bin, and then mark the

entity as clean. At the end, all entities not marked as clean are

removed from the bins.
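The bin key cache logic described above can be sketched as follows. This is a hypothetical simplification: the bit set is replaced by a `HashSet`, keys are plain integers, and `RetainedBins` and `queue` are illustrative names rather than Bevy's API.

```rust
use std::collections::{HashMap, HashSet};

// Hypothetical simplified types; real bin keys carry pipeline, mesh, and material IDs.
type MainEntity = u32;
type BinKey = u64;
type Tick = u32;

/// Cached bin key plus the tick of the entity's most recent relevant change.
struct CachedBinKey {
    bin_key: BinKey,
    change_tick: Tick,
}

#[derive(Default)]
struct RetainedBins {
    bins: HashMap<BinKey, HashSet<MainEntity>>,
    cache: HashMap<MainEntity, CachedBinKey>,
}

impl RetainedBins {
    /// `visible` lists each visible entity with its current bin key and last change tick.
    fn queue(&mut self, visible: &[(MainEntity, BinKey, Tick)]) {
        let RetainedBins { bins, cache } = self;
        // Stand-in for the `valid_cached_entity_bin_keys` bit set.
        let mut clean = HashSet::new();
        for &(entity, bin_key, change_tick) in visible {
            let stale = match cache.get(&entity) {
                Some(cached) => cached.change_tick != change_tick,
                None => true,
            };
            if stale {
                // Move the entity out of its old bin (if any) and into the new one.
                if let Some(old) = cache.get(&entity) {
                    if let Some(bin) = bins.get_mut(&old.bin_key) {
                        bin.remove(&entity);
                    }
                }
                bins.entry(bin_key).or_default().insert(entity);
                cache.insert(entity, CachedBinKey { bin_key, change_tick });
            }
            clean.insert(entity);
        }
        // Sweep: entities not marked clean this frame are removed from their bins.
        cache.retain(|entity, cached| {
            if clean.contains(entity) {
                return true;
            }
            if let Some(bin) = bins.get_mut(&cached.bin_key) {
                bin.remove(entity);
            }
            false
        });
        bins.retain(|_, bin| !bin.is_empty());
    }
}
```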

This patch has a dramatic effect on the rendering performance of most benchmarks, as it effectively eliminates `queue_material_meshes` from the profile. Note, however, that it generally also regresses `batch_and_prepare_binned_render_phase` slightly (though not by enough to outweigh the win). I believe that's because, before this patch, `queue_material_meshes` brought the bins into the CPU cache for `batch_and_prepare_binned_render_phase` to use, while with this patch, `batch_and_prepare_binned_render_phase` must load the bins into the CPU cache itself.

On Caldera, this reduces the time spent in `queue_material_meshes` from over 5 ms to between 0.2 and 0.3 ms. Note that benchmarking on that scene is very noisy right now because of https://github.com/bevyengine/bevy/issues/17535.

We were calling `clear()` on the work item buffer table, which caused us

to deallocate all the CPU-side buffers. This patch changes the logic to

instead just clear the buffers individually, but leave their backing

stores. This has two consequences:

1. To effectively retain work item buffers from frame to frame, we need

to key them off `RetainedViewEntity` values and not the render world

`Entity`, which is transient. This PR changes those buffers accordingly.

2. We need to clean up work item buffers that belong to views that went

away. Amusingly enough, we actually have a system,

`delete_old_work_item_buffers`, that tries to do this already, but it

wasn't doing anything because the `clear_batched_gpu_instance_buffers`

system already handled that. This patch actually makes the

`delete_old_work_item_buffers` system useful, by removing the clearing

behavior from `clear_batched_gpu_instance_buffers` and instead making

`delete_old_work_item_buffers` delete buffers corresponding to

nonexistent views.
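Here is a minimal sketch of the two consequences. Hypothetical types (`WorkItemBufferTable`, `clear_each`, `get_or_create`, `delete_old`) stand in for Bevy's, and the view key is simplified to a `(main entity, subview)` tuple. Clearing each `Vec` keeps its capacity, whereas clearing the map would free every allocation.

```rust
use std::collections::{HashMap, HashSet};

/// Hypothetical stand-in for `RetainedViewEntity`: (main entity, subview ID).
type RetainedViewEntity = (u64, u32);

/// CPU-side work items for one view (simplified to plain indices).
#[derive(Default)]
struct WorkItemBuffers {
    work_items: Vec<u32>,
}

#[derive(Default)]
struct WorkItemBufferTable {
    buffers: HashMap<RetainedViewEntity, WorkItemBuffers>,
}

impl WorkItemBufferTable {
    /// Start of frame: empty every buffer but keep its allocation.
    /// (Calling `self.buffers.clear()` would drop every allocation instead.)
    fn clear_each(&mut self) {
        for buffers in self.buffers.values_mut() {
            buffers.work_items.clear();
        }
    }

    /// Fetch the buffers for a view, creating them on first use.
    fn get_or_create(&mut self, view: RetainedViewEntity) -> &mut WorkItemBuffers {
        self.buffers.entry(view).or_default()
    }

    /// Drop the buffers of views that no longer exist.
    fn delete_old(&mut self, live_views: &HashSet<RetainedViewEntity>) {
        self.buffers.retain(|view, _| live_views.contains(view));
    }
}
```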

On Bistro, this PR improves the performance of

`batch_and_prepare_binned_render_phase` from 61.2 µs to 47.8 µs, a 28%

speedup.

## Objective

Bevy 0.15 introduced a new method in the `Material2d` trait, `alpha_mode`. Before that, newly created materials had alpha blending; now they do not.

## Solution

While I am okay with that, it would be useful to add an implementation of the new trait method to one of the examples so that users are more aware of it.

---------

Co-authored-by: IceSentry <IceSentry@users.noreply.github.com>

*Occlusion culling* allows the GPU to skip the vertex and fragment

shading overhead for objects that can be quickly proved to be invisible

because they're behind other geometry. A depth prepass already

eliminates most fragment shading overhead for occluded objects, but the

vertex shading overhead, as well as the cost of testing and rejecting

fragments against the Z-buffer, is presently unavoidable for standard

meshes. We currently perform occlusion culling only for meshlets. But

other meshes, such as skinned meshes, can benefit from occlusion culling

too in order to avoid the transform and skinning overhead for unseen

meshes.

This commit adapts the same [*two-phase occlusion culling*] technique

that meshlets use to Bevy's standard 3D mesh pipeline when the new

`OcclusionCulling` component, as well as the `DepthPrepass` component,

are present on the camera. It has these steps:

1. *Early depth prepass*: We use the hierarchical Z-buffer from the

previous frame to cull meshes for the initial depth prepass, effectively

rendering only the meshes that were visible in the last frame.

2. *Early depth downsample*: We downsample the depth buffer to create

another hierarchical Z-buffer, this time with the current view

transform.

3. *Late depth prepass*: We use the new hierarchical Z-buffer to test

all meshes that weren't rendered in the early depth prepass. Any meshes

that pass this check are rendered.

4. *Late depth downsample*: Again, we downsample the depth buffer to

create a hierarchical Z-buffer in preparation for the early depth

prepass of the next frame. This step is done after all the rendering, in

order to account for custom phase items that might write to the depth

buffer.
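The four steps can be simulated on the CPU to show how the early and late passes interact. This toy model is an assumption-heavy sketch, not the patch's shader code. The screen is one-dimensional, and each mesh is a span at a single depth. The camera is static, so last frame's hierarchical Z is reused as is. The hierarchical Z-buffer has a single level that stores the farthest depth per tile. `Object`, `downsample`, `occluded`, and `render_frame` are hypothetical names.

```rust
/// A mesh reduced to a 1D screen span [x0, x1) at a single depth (smaller = closer).
#[derive(Clone, Copy)]
struct Object {
    x0: usize,
    x1: usize, // assumed > x0
    depth: f32,
}

/// Depth of an empty pixel.
const FAR: f32 = f32::INFINITY;

fn rasterize(depth: &mut [f32], obj: &Object) {
    for d in &mut depth[obj.x0..obj.x1] {
        *d = d.min(obj.depth);
    }
}

/// One hierarchical Z level: the *farthest* depth in each tile, so tests stay conservative.
fn downsample(depth: &[f32], tile: usize) -> Vec<f32> {
    depth.chunks(tile).map(|c| c.iter().copied().fold(0.0, f32::max)).collect()
}

/// An object is occluded if it lies behind the farthest depth of every tile it covers.
fn occluded(hi_z: &[f32], tile: usize, obj: &Object) -> bool {
    let (t0, t1) = (obj.x0 / tile, (obj.x1 - 1) / tile + 1);
    let farthest = hi_z[t0..t1].iter().copied().fold(0.0, f32::max);
    obj.depth > farthest
}

/// Runs the four steps for one frame; returns which objects were drawn
/// and the hierarchical Z-buffer to use next frame.
fn render_frame(objects: &[Object], prev_hi_z: &[f32], width: usize, tile: usize) -> (Vec<bool>, Vec<f32>) {
    let mut depth = vec![FAR; width];
    let mut drawn = vec![false; objects.len()];
    // 1. Early depth prepass: cull against the previous frame's hierarchical Z.
    for (i, obj) in objects.iter().enumerate() {
        if !occluded(prev_hi_z, tile, obj) {
            rasterize(&mut depth, obj);
            drawn[i] = true;
        }
    }
    // 2. Early depth downsample.
    let hi_z = downsample(&depth, tile);
    // 3. Late depth prepass: retest only what the early pass skipped.
    for (i, obj) in objects.iter().enumerate() {
        if !drawn[i] && !occluded(&hi_z, tile, obj) {
            rasterize(&mut depth, obj);
            drawn[i] = true;
        }
    }
    // 4. Late depth downsample, in preparation for the next frame.
    (drawn, downsample(&depth, tile))
}
```

In the tests, an object hidden behind a wall is skipped in both passes. Once the wall disappears, the late pass catches the object in that same frame rather than drawing it a frame late.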

Note that this patch has no effect on the per-mesh CPU overhead for occluded objects, which remains high for a GPU-driven renderer due to the lack of `cold-specialization` and retained bins. If `cold-specialization` and retained bins weren't on the horizon, then for most scenes a more traditional approach like potentially visible sets (PVS) or low-res CPU rendering would probably be more efficient than the GPU-driven approach that this patch implements. However, at this point the amount of effort required to implement a PVS baking tool or a low-res CPU renderer would probably be greater than landing `cold-specialization` and retained bins, and the GPU-driven approach is the more modern one anyway. It does mean that the performance improvements from occlusion culling as implemented in this patch *today* are likely to be limited, because of the high CPU overhead for occluded meshes.

Note also that this patch currently doesn't implement occlusion culling

for 2D objects or shadow maps. Those can be addressed in a follow-up.

Additionally, note that the techniques in this patch require compute

shaders, which excludes support for WebGL 2.

This PR is marked experimental because of known precision issues with

the downsampling approach when applied to non-power-of-two framebuffer

sizes (i.e. most of them). These precision issues can, in rare cases,

cause objects to be judged occluded that in fact are not. (I've never

seen this in practice, but I know it's possible; it's more likely to happen with small meshes.) As a follow-up to this patch, we intend to

switch to the [SPD-based hi-Z buffer shader from the Granite engine],

which doesn't suffer from these problems, at which point we should be

able to graduate this feature from experimental status. I opted not to

include that rewrite in this patch for two reasons: (1) @JMS55 is

planning on doing the rewrite to coincide with the new availability of

image atomic operations in Naga; (2) to reduce the scope of this patch.

A new example, `occlusion_culling`, has been added. It demonstrates

objects becoming quickly occluded and disoccluded by dynamic geometry

and shows the number of objects that are actually being rendered. Also,

a new `--occlusion-culling` switch has been added to `scene_viewer`, in

order to make it easy to test this patch with large scenes like Bistro.

[*two-phase occlusion culling*]: https://medium.com/@mil_kru/two-pass-occlusion-culling-4100edcad501

[Aaltonen SIGGRAPH 2015]: https://www.advances.realtimerendering.com/s2015/aaltonenhaar_siggraph2015_combined_final_footer_220dpi.pdf

[Some literature]: https://gist.github.com/reduz/c5769d0e705d8ab7ac187d63be0099b5?permalink_comment_id=5040452#gistcomment-5040452

[SPD-based hi-Z buffer shader from the Granite engine]: https://github.com/Themaister/Granite/blob/master/assets/shaders/post/hiz.comp

## Migration Guide

* When enqueuing a custom mesh pipeline, work item buffers are now

created with

`bevy::render::batching::gpu_preprocessing::get_or_create_work_item_buffer`,

not `PreprocessWorkItemBuffers::new`. See the

`specialized_mesh_pipeline` example.

## Showcase

Occlusion culling example:

Bistro zoomed out, before occlusion culling:

Bistro zoomed out, after occlusion culling:

In this scene, occlusion culling reduces the number of meshes Bevy has

to render from 1591 to 585.

This commit allows Bevy to use `multi_draw_indirect_count` for drawing

meshes. The `multi_draw_indirect_count` feature works just like

`multi_draw_indirect`, but it takes the number of indirect parameters

from a GPU buffer rather than specifying it on the CPU.

Currently, the CPU constructs the list of indirect draw parameters with

the instance count for each batch set to zero, uploads the resulting

buffer to the GPU, and dispatches a compute shader that bumps the

instance count for each mesh that survives culling. Unfortunately, this

is inefficient when we support `multi_draw_indirect_count`. Draw

commands corresponding to meshes for which all instances were culled

will remain present in the list when calling

`multi_draw_indirect_count`, causing overhead. Proper use of

`multi_draw_indirect_count` requires eliminating these empty draw

commands.

To address this inefficiency, this PR makes Bevy fully construct the

indirect draw commands on the GPU instead of on the CPU. Instead of

writing instance counts to the draw command buffer, the mesh

preprocessing shader now writes them to a separate *indirect metadata

buffer*. A second compute dispatch known as the *build indirect

parameters* shader runs after mesh preprocessing and converts the

indirect draw metadata into actual indirect draw commands for the GPU.

The build indirect parameters shader operates on a batch at a time,

rather than an instance at a time, and as such each thread writes only 0

or 1 indirect draw parameters, simplifying the logic in `mesh_preprocessing`, which currently has to special-case the first mesh in each batch. The build indirect parameters shader emits

draw commands in a tightly packed manner, enabling maximally efficient

use of `multi_draw_indirect_count`.
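The compaction performed by the build indirect parameters shader can be sketched on the CPU. `BatchMetadata` and `build_indirect_parameters` are hypothetical. The command struct follows the standard five-field indexed indirect layout used by wgpu and Vulkan.

```rust
/// Indexed indirect draw command layout: the same five 32-bit fields as
/// wgpu's `DrawIndexedIndirectArgs` and Vulkan's `VkDrawIndexedIndirectCommand`.
#[repr(C)]
#[derive(Clone, Copy, Debug, PartialEq)]
struct DrawIndexedIndirectArgs {
    index_count: u32,
    instance_count: u32,
    first_index: u32,
    base_vertex: i32,
    first_instance: u32,
}

/// Hypothetical per-batch metadata written by mesh preprocessing.
struct BatchMetadata {
    index_count: u32,
    first_index: u32,
    base_vertex: i32,
    first_instance: u32,
    /// Number of instances that survived culling.
    instance_count: u32,
}

/// CPU stand-in for the build indirect parameters pass: one "thread" per batch,
/// each writing 0 or 1 commands. On the GPU, the slot would come from an atomic
/// increment of the draw count, so the packed order may differ from batch order.
fn build_indirect_parameters(batches: &[BatchMetadata]) -> (Vec<DrawIndexedIndirectArgs>, u32) {
    let mut commands = Vec::new();
    let mut draw_count = 0u32;
    for batch in batches {
        // Fully culled batches emit nothing, so the command list stays tightly packed.
        if batch.instance_count == 0 {
            continue;
        }
        commands.push(DrawIndexedIndirectArgs {
            index_count: batch.index_count,
            instance_count: batch.instance_count,
            first_index: batch.first_index,
            base_vertex: batch.base_vertex,
            first_instance: batch.first_instance,
        });
        draw_count += 1;
    }
    // `draw_count` is the value a `multi_draw_indirect_count` call would read from a GPU buffer.
    (commands, draw_count)
}
```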

Along the way, this patch switches mesh preprocessing to dispatch one

compute invocation per render phase per view, instead of dispatching one

compute invocation per view. This is preparation for two-phase occlusion

culling, in which we will have two mesh preprocessing stages. In that

scenario, the first mesh preprocessing stage must only process opaque

and alpha tested objects, so the work items must be separated into those

that are opaque or alpha tested and those that aren't. Thus this PR

splits out the work items into a separate buffer for each phase. As this

patch rewrites so much of the mesh preprocessing infrastructure, it was

simpler to just fold the change into this patch instead of deferring it

to the forthcoming occlusion culling PR.

Finally, this patch changes mesh preprocessing so that it runs

separately for indexed and non-indexed meshes. This is because draw

commands for indexed and non-indexed meshes have different sizes and

layouts. *The existing code is actually broken for non-indexed meshes*,

as it attempts to overlay the indirect parameters for non-indexed meshes

on top of those for indexed meshes. Consequently, right now the

parameters will be read incorrectly when multiple non-indexed meshes are

multi-drawn together. *This is a bug fix* and, as with the change to

dispatch phases separately noted above, was easiest to include in this

patch as opposed to separately.
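The size mismatch behind the non-indexed bug is easy to check. These `#[repr(C)]` structs mirror the standard indexed (20-byte) and non-indexed (16-byte) indirect command layouts, and `command_offset` is a hypothetical helper. Reading non-indexed commands with the indexed stride misplaces every command after the first. That is consistent with the bug appearing only when multiple non-indexed meshes are multi-drawn together.

```rust
use std::mem::size_of;

/// Indexed draw command: five 32-bit fields (20 bytes).
#[repr(C)]
#[allow(dead_code)]
struct DrawIndexedIndirectArgs {
    index_count: u32,
    instance_count: u32,
    first_index: u32,
    base_vertex: i32,
    first_instance: u32,
}

/// Non-indexed draw command: four 32-bit fields (16 bytes).
#[repr(C)]
#[allow(dead_code)]
struct DrawIndirectArgs {
    vertex_count: u32,
    instance_count: u32,
    first_vertex: u32,
    first_instance: u32,
}

/// Byte offset of the `i`th command in a tightly packed buffer of `T`.
fn command_offset<T>(i: usize) -> usize {
    i * size_of::<T>()
}
```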

## Migration Guide

* Systems that add custom phase items now need to populate the indirect

drawing-related buffers. See the `specialized_mesh_pipeline` example for

an example of how this is done.

We won't be able to retain render phases from frame to frame if the keys

are unstable. It's not as simple as keying off the main world

entity, however, because some main world entities extract to multiple

render world entities. For example, directional lights extract to

multiple shadow cascades, and point lights extract to one view per

cubemap face. Therefore, we key off a new type, `RetainedViewEntity`,

which contains the main entity plus a *subview ID*.

This is part of the preparation for retained bins.
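Here is a minimal sketch of such a key, with hypothetical field names (`main_entity`, `subview_id`) and a stand-in `MainEntity`. Because the key is derived from stable main world data, the six views of a point light map to the same six keys every frame, so per-view state survives.

```rust
use std::collections::HashMap;

/// Hypothetical stand-in for a main world entity ID.
#[derive(Clone, Copy, PartialEq, Eq, Hash, Debug)]
struct MainEntity(u64);

/// Main entity plus a subview ID (e.g. a shadow cascade or cubemap face index).
/// The field names are illustrative, not necessarily Bevy's.
#[derive(Clone, Copy, PartialEq, Eq, Hash, Debug)]
struct RetainedViewEntity {
    main_entity: MainEntity,
    subview_id: u32,
}

/// A point light extracts to one view per cubemap face.
fn point_light_views(light: MainEntity) -> Vec<RetainedViewEntity> {
    (0..6)
        .map(|face| RetainedViewEntity { main_entity: light, subview_id: face })
        .collect()
}

/// Per-view state (here just a frame counter) keyed by the stable view ID.
fn bump_frame_counts(state: &mut HashMap<RetainedViewEntity, u32>, views: &[RetainedViewEntity]) {
    for view in views {
        *state.entry(*view).or_insert(0) += 1;
    }
}
```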

---------

Co-authored-by: ickshonpe <david.curthoys@googlemail.com>

# Objective

Many instances of `clippy::too_many_arguments` linting happen to be on

systems - functions which we don't call manually, and thus there's not

much reason to worry about the argument count.

## Solution

Allow `clippy::too_many_arguments` globally, and remove all lint

attributes related to it.

Currently, our batchable binned items are stored in a hash table that

maps bin key, which includes the batch set key, to a list of entities.

Multidraw is handled by sorting the bin keys and accumulating adjacent

bins that can be multidrawn together (i.e. have the same batch set key)

into multidraw commands during `batch_and_prepare_binned_render_phase`.

This is reasonably efficient right now, but it will complicate future

work to retain indirect draw parameters from frame to frame. Consider

what must happen when we have retained indirect draw parameters and the

application adds a bin (i.e. a new mesh) that shares a batch set key

with some pre-existing meshes. (That is, the new mesh can be multidrawn

with the pre-existing meshes.) To be maximally efficient, our goal in

that scenario will be to update *only* the indirect draw parameters for

the batch set (i.e. multidraw command) containing the mesh that was

added, while leaving the others alone. That means that we have to

quickly locate all the bins that belong to the batch set being modified.

In the existing code, we would have to sort the list of bin keys so that

bins that can be multidrawn together become adjacent to one another in

the list. Then we would have to do a binary search through the sorted

list to find the location of the bin that was just added. Next, we would

have to widen our search to adjacent indexes that contain the same batch

set, doing expensive comparisons against the batch set key every time.

Finally, we would reallocate the indirect draw parameters and update the

stored pointers to the indirect draw parameters that the bins store.

By contrast, it'd be dramatically simpler if we changed the way

bins are stored to first map from batch set key (i.e. multidraw command)

to the bins (i.e. meshes) within that batch set key, and then from each

individual bin to the mesh instances. That way, the scenario above in

which we add a new mesh will be simpler to handle. First, we will look

up the batch set key corresponding to that mesh in the outer map to find

an inner map corresponding to the single multidraw command that will

draw that batch set. We will know how many meshes the multidraw command

is going to draw by the size of that inner map. Then we simply need to

reallocate the indirect draw parameters and update the pointers to those

parameters within the bins as necessary. There will be no need to do any

binary search or expensive batch set key comparison: only a single hash

lookup and an iteration over the inner map to update the pointers.
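The nested layout can be sketched with plain `HashMap`s. `BinnedPhase`, `add`, and `assign_indirect_slots` are hypothetical illustrations, not the patch's types. Adding a mesh takes one outer lookup, and the inner map's size gives the multidraw command's draw count. Reassigning indirect slots touches only that batch set.

```rust
use std::collections::HashMap;

// Hypothetical simplified keys and entity type.
type BatchSetKey = u32; // identifies one multidraw command
type BinKey = u32; // identifies one mesh within that command
type Entity = u32;

#[derive(Default)]
struct BinnedPhase {
    /// Batch set key -> (bin key -> mesh instances).
    batch_sets: HashMap<BatchSetKey, HashMap<BinKey, Vec<Entity>>>,
}

impl BinnedPhase {
    /// Adds a mesh instance and returns how many draws its multidraw command
    /// now covers: a single hash lookup, with no sorting or binary search.
    fn add(&mut self, batch_set_key: BatchSetKey, bin_key: BinKey, entity: Entity) -> usize {
        let batch_set = self.batch_sets.entry(batch_set_key).or_default();
        batch_set.entry(bin_key).or_default().push(entity);
        batch_set.len()
    }

    /// Assigns each bin in one batch set a slot in that command's indirect
    /// parameters, starting at `first_slot`; other batch sets are untouched.
    fn assign_indirect_slots(&self, batch_set_key: BatchSetKey, first_slot: u32) -> HashMap<BinKey, u32> {
        self.batch_sets
            .get(&batch_set_key)
            .into_iter()
            .flat_map(|bins| bins.keys())
            .zip(first_slot..)
            .map(|(&bin_key, slot)| (bin_key, slot))
            .collect()
    }
}
```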

This patch implements the above technique. Because we don't have

retained bins yet, this PR provides no performance benefits. However, it

opens the door to maximally efficient updates when only a small number

of meshes change from frame to frame.

The main churn that this patch causes is that the *batch set key* (which

uniquely specifies a multidraw command) and *bin key* (which uniquely

specifies a mesh *within* that multidraw command) are now separate,

instead of the batch set key being embedded *within* the bin key.

In order to isolate potential regressions, I think that at least #16890,

#16836, and #16825 should land before this PR does.

## Migration Guide

* The *batch set key* is now separate from the *bin key* in

`BinnedPhaseItem`. The batch set key is used to collect multidrawable

meshes together. If you aren't using the multidraw feature, you can

safely set the batch set key to `()`.

Currently, `check_visibility` is parameterized over a query filter that

specifies the type of potentially-visible object. This has the

unfortunate side effect that we need a separate system,

`mark_view_visibility_as_changed_if_necessary`, to trigger view

visibility change detection. That system is quite slow because it must

iterate sequentially over all entities in the scene.

This PR moves the query filter from `check_visibility` to a new

component, `VisibilityClass`. `VisibilityClass` stores a list of type

IDs, each corresponding to one of the query filters we used to use.

Because `check_visibility` is no longer specialized to the query filter

at the type level, Bevy now only needs to invoke it once, leading to

better performance as `check_visibility` can do change detection on the

fly rather than delegating it to a separate system.

This commit also has ergonomic improvements, as there's no need for

applications that want to add their own custom renderable components to

add specializations of the `check_visibility` system to the schedule.

Instead, they only need to ensure that the `ViewVisibility` component is

properly kept up to date. The recommended way to do this, and the way

that's demonstrated in the `custom_phase_item` and

`specialized_mesh_pipeline` examples, is to make `ViewVisibility` a

required component and to add the type ID to it in a component add hook.

This patch does this for `Mesh3d`, `Mesh2d`, `Sprite`, `Light`, and

`Node`, which means that most app code doesn't need to change at all.
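This sketch illustrates the idea using `std::any::TypeId`, with a few simplifications. `VisibilityClass` is modeled as a plain `Vec<TypeId>`. `add_visibility_class` plays the role of the component add hook, and `check_visibility` is a single non-generic pass. All of these are simplified stand-ins, not Bevy's actual definitions.

```rust
use std::any::TypeId;
use std::collections::HashMap;

// Hypothetical stand-ins for renderable component types.
struct Mesh3d;
struct Sprite;

/// List of type IDs describing which kinds of renderable an entity is.
#[derive(Default)]
struct VisibilityClass(Vec<TypeId>);

/// What a component add hook might do: register the component's type ID once.
fn add_visibility_class<T: 'static>(class: &mut VisibilityClass) {
    let id = TypeId::of::<T>();
    if !class.0.contains(&id) {
        class.0.push(id);
    }
}

/// A single, non-generic visibility pass: each visible entity is recorded
/// under every class it belongs to. `in_view` stands in for the frustum test.
fn check_visibility(entities: &[(u32, &VisibilityClass, bool)]) -> HashMap<TypeId, Vec<u32>> {
    let mut visible: HashMap<TypeId, Vec<u32>> = HashMap::new();
    for &(entity, class, in_view) in entities {
        if !in_view {
            continue;
        }
        for &id in &class.0 {
            visible.entry(id).or_default().push(entity);
        }
    }
    visible
}
```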

Note that, although this patch has a large impact on the performance of

visibility determination, it doesn't actually improve the end-to-end

frame time of `many_cubes`. That's because the render world was already

effectively hiding the latency from

`mark_view_visibility_as_changed_if_necessary`. This patch is, however,

necessary for *further* improvements to `many_cubes` performance.

`many_cubes` trace before:

`many_cubes` trace after:

## Migration Guide

* `check_visibility` no longer takes a `QueryFilter`, and there's no

need to add it manually to your app schedule anymore for custom

rendering items. Instead, entities with custom renderable components

should add the appropriate type IDs to `VisibilityClass`. See

`custom_phase_item` for an example.

This commit allows Bevy to bind 16 lightmaps at a time, if the current

platform supports bindless textures. Naturally, if bindless textures

aren't supported, Bevy falls back to binding only a single lightmap at a

time. As lightmaps are usually heavily atlased, I doubt many scenes will

use more than 16 lightmap textures.

This has little performance impact now, but it's desirable for us to

reap the benefits of multidraw and bindless textures on scenes that use

lightmaps. Otherwise, we might have to break batches in order to switch

those lightmaps.

Additionally, this PR slightly reduces the cost of binning because it

makes the lightmap index in `Opaque3dBinKey` 32 bits instead of an

`AssetId`.
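This sketch shows one way lightmaps might be grouped into slabs so that the bin key only needs a 32-bit slab index. `LightmapSlabs` and its append-only allocation are hypothetical, and the patch's actual allocation strategy may differ.

```rust
/// Lightmaps bound at once when bindless textures are supported.
const LIGHTMAPS_PER_SLAB: usize = 16;

/// Hypothetical stand-in for a lightmap image's asset ID.
type LightmapImage = u64;

/// Packs lightmap images into slabs (binding arrays). Only the slab index
/// would go into the bin key; how the per-mesh slot reaches the shader is
/// left out of this sketch.
struct LightmapSlabs {
    capacity: usize,
    slabs: Vec<Vec<LightmapImage>>,
}

impl LightmapSlabs {
    fn new(bindless: bool) -> Self {
        // Without bindless textures, each slab holds a single lightmap.
        let capacity = if bindless { LIGHTMAPS_PER_SLAB } else { 1 };
        LightmapSlabs { capacity, slabs: Vec::new() }
    }

    /// Returns (slab index, slot within slab), reusing an existing slot if present.
    fn allocate(&mut self, image: LightmapImage) -> (u32, u32) {
        for (slab_index, slab) in self.slabs.iter().enumerate() {
            if let Some(slot) = slab.iter().position(|&i| i == image) {
                return (slab_index as u32, slot as u32);
            }
        }
        // Start a new slab if there is none yet or the last one is full.
        if self.slabs.last().map_or(true, |slab| slab.len() == self.capacity) {
            self.slabs.push(Vec::new());
        }
        let slab_index = self.slabs.len() - 1;
        self.slabs[slab_index].push(image);
        (slab_index as u32, (self.slabs[slab_index].len() - 1) as u32)
    }
}
```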

## Migration Guide

* The `Opaque3dBinKey::lightmap_image` field is now

`Opaque3dBinKey::lightmap_slab`, which is a lightweight identifier for

an entire binding array of lightmaps.

This patch replaces the undocumented `NoGpuCulling` component with a new

component, `NoIndirectDrawing`, effectively turning indirect drawing on

by default. Indirect mode is needed for the recently-landed multidraw

feature (#16427). Since multidraw is such a win for performance, when

that feature is supported the small performance tax that indirect mode

incurs is virtually always worth paying.

To ensure that custom drawing code such as that in the

`custom_shader_instancing` example continues to function, this commit

additionally makes GPU culling take the `NoFrustumCulling` component

into account.

This PR is an alternative to #16670 that doesn't break the

`custom_shader_instancing` example. **PR #16755 should land first in

order to avoid breaking deferred rendering, as multidraw currently

breaks it**.

## Migration Guide

* Indirect drawing (GPU culling) is now enabled by default, so the

`GpuCulling` component is no longer available. To disable indirect mode,

which may be useful with custom render nodes, add the new

`NoIndirectDrawing` component to your camera.

This commit makes `StandardMaterial` use bindless textures, as

implemented in PR #16368. Non-bindless mode, as used for example in

Metal and WebGL 2, remains fully supported via a plethora of `#ifdef

BINDLESS` preprocessor definitions.

Unfortunately, this PR introduces quite a bit of unsightliness into the

PBR shaders. This is a result of the fact that WGSL supports neither

passing binding arrays to functions nor passing individual *elements* of

binding arrays to functions, except directly to texture sample

functions. Thus we're unable to use the `sample_texture` abstraction

that helped abstract over the meshlet and non-meshlet paths. I don't

think there's anything we can do to help this other than to suggest

improvements to upstream Naga.

# Objective

Fixes typos in bevy project, following suggestion in

https://github.com/bevyengine/bevy-website/pull/1912#pullrequestreview-2483499337

## Solution

I used https://github.com/crate-ci/typos to find them.

I included only the ones that feel undebatable to me, but I'm not a game

engine developer, so some terms may be intentional.

I left out the following typos:

- `reparametrize` => `reparameterize`: There are a lot of occurrences, so I

believe this spelling was intentional

- `semicircles` => `hemicircles`: 2 occurrences, may mean something

specific in geometry

- `invertation` => `inversion`: may mean something specific

- `unparented` => `parentless`: may mean something specific

- `metalness` => `metallicity`: may mean something specific

## Testing

- Did you test these changes? If so, how? I did not test the changes;

most changes are related to raw text. I expect the others to be tested

by the CI.

- Are there any parts that need more testing? I do not think so.

- How can other people (reviewers) test your changes? Is there anything

specific they need to know? To me, there is nothing to test.


---


## Questions

- [x] Should I include the above typos? No

(https://github.com/bevyengine/bevy/pull/16702#issuecomment-2525271152)

- [ ] Should I add `typos` to the CI? (I will check how to configure it

properly)

This project looks awesome, I really enjoy reading the progress made,

thanks to everyone involved.

This commit adds support for *multidraw*, which is a feature that allows

multiple meshes to be drawn in a single drawcall. `wgpu` currently

implements multidraw on Vulkan, so this feature is only enabled there.

Multiple meshes can be drawn at once if they're in the same vertex and

index buffers and are otherwise placed in the same bin. (Thus, for

example, at present the materials and textures must be identical, but

see #16368.) Multidraw is a significant performance improvement during

the draw phase because it reduces the number of rebindings, as well as

the number of drawcalls.
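To illustrate why sharing a bin matters, here is a minimal, std-only sketch of collapsing consecutive draws that share the same bindings into one multidraw call. The `BinKey`, `IndexedIndirectArgs`, and `MultiDraw` types and the `build_multidraws` function are hypothetical stand-ins, not Bevy or `wgpu` APIs; the argument fields simply mirror the usual layout of an indexed indirect draw command.

```rust
/// Hypothetical per-draw arguments, mirroring the fields of an indexed
/// indirect draw command.
#[derive(Clone, Copy, Debug, PartialEq)]
struct IndexedIndirectArgs {
    index_count: u32,
    instance_count: u32,
    first_index: u32,
    base_vertex: i32,
    first_instance: u32,
}

/// Hypothetical bin key: draws may share one multidraw only if everything
/// that would otherwise force a rebind is identical.
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
struct BinKey {
    pipeline: u32,
    material_bind_group: u32,
    vertex_buffer: u32,
    index_buffer: u32,
}

/// One multidraw: a single set of bindings plus a run of indirect args.
#[derive(Debug)]
struct MultiDraw {
    key: BinKey,
    args: Vec<IndexedIndirectArgs>,
}

/// Merges consecutive draws with the same key; a key change starts a new
/// multidraw (and therefore a rebind).
fn build_multidraws(draws: &[(BinKey, IndexedIndirectArgs)]) -> Vec<MultiDraw> {
    let mut out: Vec<MultiDraw> = Vec::new();
    for &(key, args) in draws {
        let same_bin = matches!(out.last(), Some(last) if last.key == key);
        if same_bin {
            out.last_mut().unwrap().args.push(args);
        } else {
            out.push(MultiDraw { key, args: vec![args] });
        }
    }
    out
}

/// Two meshes sharing buffers and a material, then one with another material.
fn demo_draws() -> Vec<(BinKey, IndexedIndirectArgs)> {
    let shared = BinKey { pipeline: 0, material_bind_group: 0, vertex_buffer: 0, index_buffer: 0 };
    let other = BinKey { material_bind_group: 1, ..shared };
    let mesh = |first_index: u32, base_vertex: i32| IndexedIndirectArgs {
        index_count: 36,
        instance_count: 1,
        first_index,
        base_vertex,
        first_instance: 0,
    };
    vec![(shared, mesh(0, 0)), (shared, mesh(36, 24)), (other, mesh(72, 48))]
}

fn main() {
    let draws = demo_draws();
    let multidraws = build_multidraws(&draws);
    // Without multidraw this would be 3 drawcalls; here it is one per bin run.
    println!("{} draws -> {} multidraw calls", draws.len(), multidraws.len());
}
```

The sketch only merges *consecutive* draws with equal keys, which is why binning (and sorting by bin key) beforehand is what makes the savings possible.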

This feature is currently only enabled when GPU culling is used: i.e.

when `GpuCulling` is present on a camera. Therefore, if you run for

example `scene_viewer`, you will not see any performance improvements,

because `scene_viewer` doesn't add the `GpuCulling` component to its

camera.

Additionally, the multidraw feature is only implemented for opaque 3D

meshes and not for shadows or 2D meshes. I plan to make GPU culling the

default and to extend the feature to shadows in the future. Also, in the

future I suspect that polyfilling multidraw on APIs that don't support

it will be fruitful, as even without driver-level support, using

multidraw allows us to avoid expensive `wgpu` rebindings.

The bindless PR (#16368) broke some examples:

* `specialized_mesh_pipeline` and `custom_shader_instancing` failed

because they expect to be able to render a mesh with no material, by

overriding enough of the render pipeline to be able to do so. This PR

fixes the issue by restoring the old behavior in which we extract meshes

even if they have no material.

* `texture_binding_array` broke because it doesn't implement

`AsBindGroup::unprepared_bind_group`. This was tricky to fix because

there's a very good reason why `texture_binding_array` doesn't implement

that method: there's no sensible way to do so with `wgpu`'s current

bindless API, due to its multiple levels of borrowed references. To fix

the example, I split `MaterialBindGroup` into

`MaterialBindlessBindGroup` and `MaterialNonBindlessBindGroup`, and

allow direct custom implementations of `AsBindGroup::as_bind_group` for

the latter type of bind groups. To opt in to the new behavior, return

the `AsBindGroupError::CreateBindGroupDirectly` error from your

`AsBindGroup::unprepared_bind_group` implementation, and Bevy will call

your custom `AsBindGroup::as_bind_group` method as before.

## Migration Guide

* Bevy will now unconditionally call

`AsBindGroup::unprepared_bind_group` for your materials, so you must no

longer panic in that function. Instead, return the new

`AsBindGroupError::CreateBindGroupDirectly` error, and Bevy will fall

back to calling `AsBindGroup::as_bind_group` as before.
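The opt-in boils down to a fallback: always try the engine-driven path first, and call the material's own bind group constructor only when the material asks for that. The following std-only sketch models that control flow with a hypothetical `MaterialBindings` trait and `BindGroupError` enum; they are simplified stand-ins, not Bevy's real `AsBindGroup` trait or `AsBindGroupError` type, and strings stand in for GPU objects.

```rust
/// Hypothetical error type; only the fallback variant matters here.
#[derive(Debug, PartialEq)]
enum BindGroupError {
    /// Asks the caller to use the material's direct constructor instead.
    CreateBindGroupDirectly,
    /// Some other failure, propagated unchanged.
    #[allow(dead_code)]
    RetryNextUpdate,
}

/// Strings stand in for GPU bind groups.
#[derive(Debug, PartialEq)]
enum PreparedBindGroup {
    /// Built by the engine from the material's unprepared bindings.
    FromUnprepared(Vec<String>),
    /// Built directly by the material's own code.
    Direct(String),
}

/// Hypothetical trait with the two entry points described above.
trait MaterialBindings {
    fn unprepared_bind_group(&self) -> Result<Vec<String>, BindGroupError>;
    fn as_bind_group(&self) -> Result<String, BindGroupError>;
}

/// Always calls `unprepared_bind_group` first and falls back to
/// `as_bind_group` only on `CreateBindGroupDirectly`.
fn prepare<M: MaterialBindings>(material: &M) -> Result<PreparedBindGroup, BindGroupError> {
    match material.unprepared_bind_group() {
        Ok(bindings) => Ok(PreparedBindGroup::FromUnprepared(bindings)),
        Err(BindGroupError::CreateBindGroupDirectly) => {
            material.as_bind_group().map(PreparedBindGroup::Direct)
        }
        Err(other) => Err(other),
    }
}

/// An ordinary material: the engine can build its bind group.
struct SimpleMaterial;

impl MaterialBindings for SimpleMaterial {
    fn unprepared_bind_group(&self) -> Result<Vec<String>, BindGroupError> {
        Ok(vec!["color".to_string(), "texture".to_string()])
    }
    fn as_bind_group(&self) -> Result<String, BindGroupError> {
        unreachable!("not called when unprepared_bind_group succeeds")
    }
}

/// A material like the one in `texture_binding_array`: it opts out instead of panicking.
struct BindingArrayMaterial;

impl MaterialBindings for BindingArrayMaterial {
    fn unprepared_bind_group(&self) -> Result<Vec<String>, BindGroupError> {
        Err(BindGroupError::CreateBindGroupDirectly)
    }
    fn as_bind_group(&self) -> Result<String, BindGroupError> {
        Ok("custom binding array".to_string())
    }
}

fn main() {
    println!("{:?}", prepare(&SimpleMaterial));
    println!("{:?}", prepare(&BindingArrayMaterial));
}
```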

This patch adds the infrastructure necessary for Bevy to support

*bindless resources*, by adding a new `#[bindless]` attribute to

`AsBindGroup`.

Classically, only a single texture (or sampler, or buffer) can be

attached to each shader binding. This means that switching materials

requires breaking a batch and issuing a new drawcall, even if the mesh

is otherwise identical. This adds significant overhead not only in the

driver but also in `wgpu`, as switching bind groups increases the amount

of validation work that `wgpu` must do.

*Bindless resources* are the typical solution to this problem. Instead

of switching bindings between each texture, the renderer instead

supplies a large *array* of all textures in the scene up front, and the

material contains an index into that array. This pattern is repeated for

buffers and samplers as well. The renderer now no longer needs to switch

binding descriptor sets while drawing the scene.

Unfortunately, as things currently stand, this approach won't quite work

for Bevy. Two aspects of `wgpu` conspire to make this ideal approach

unacceptably slow:

1. In the DX12 backend, all binding arrays (bindless resources) must

have a constant size declared in the shader, and all textures in an

array must be bound to actual textures. Changing the size requires a

recompile.

2. Changing even one texture incurs revalidation of all textures, a

process that takes time that's linear in the total size of the binding

array.

This means that declaring a large array of textures big enough to

encompass the entire scene is presently unacceptably slow. For example,

if you declare 4096 textures, then `wgpu` will have to revalidate all

4096 textures if even a single one changes. This process can take

multiple frames.

To work around this problem, this PR groups bindless resources into

small *slabs* and maintains a free list for each. The size of each slab

for the bindless arrays associated with a material is specified via the

`#[bindless(N)]` attribute. For instance, consider the following

declaration:

```rust
use bevy::prelude::*;
use bevy::render::render_resource::AsBindGroup;

#[derive(AsBindGroup)]
#[bindless(16)]
struct MyMaterial {
    #[buffer(0)]
    color: Vec4,
    #[texture(1)]
    #[sampler(2)]
    diffuse: Handle<Image>,
}
```

The `#[bindless(N)]` attribute specifies that, if bindless arrays are

supported on the current platform, each resource becomes a binding array

of N instances of that resource. So, for `MyMaterial` above, the `color`

field is exposed to the shader as `binding_array<vec4<f32>, 16>`,

the `diffuse` texture is exposed to the shader as

`binding_array<texture_2d<f32>, 16>`, and the `diffuse` sampler is

exposed to the shader as `binding_array<sampler, 16>`. Inside the

material's vertex and fragment shaders, the applicable index is

available via the `material_bind_group_slot` field of the `Mesh`

structure. So, for instance, you can access the current color like so:

```wgsl
// `uniform` binding arrays are a non-sequitur, so `uniform` is automatically promoted
// to `storage` in bindless mode.
@group(2) @binding(0) var<storage> material_color: binding_array<vec4<f32>, 16>;
...
@fragment
fn fragment(in: VertexOutput) -> @location(0) vec4<f32> {
    let color = material_color[mesh[in.instance_index].material_bind_group_slot];
    ...
}
```

Note that portable shader code can't guarantee that the current platform

supports bindless textures. Indeed, bindless mode is only available in

Vulkan and DX12. The `BINDLESS` shader definition is available for your

use to determine whether you're on a bindless platform or not. Thus a

portable version of the shader above would look like:

```wgsl
#ifdef BINDLESS
@group(2) @binding(0) var<storage> material_color: binding_array<vec4<f32>, 16>;
#else // BINDLESS
@group(2) @binding(0) var<uniform> material_color: vec4<f32>;
#endif // BINDLESS
...
@fragment
fn fragment(in: VertexOutput) -> @location(0) vec4<f32> {
#ifdef BINDLESS
    let color = material_color[mesh[in.instance_index].material_bind_group_slot];
#else // BINDLESS
    let color = material_color;
#endif // BINDLESS
    ...
}
```

Importantly, this PR *doesn't* update `StandardMaterial` to be bindless.

So, for example, `scene_viewer` will currently not run any faster. I

intend to update `StandardMaterial` to use bindless mode in a follow-up

patch.

A new example, `shaders/shader_material_bindless`, has been added to

demonstrate how to use this new feature.
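The slab idea described earlier can be sketched as a small allocator: resources are handed out in fixed-size slabs, each with its own free list, so a change only touches one slab of N entries rather than one huge binding array. This std-only sketch is an illustration under that assumption; `SlabAllocator` and `SlabSlot` are hypothetical names, not Bevy's `MaterialBindGroupAllocator`. The returned `slab` would select which bind group to bind, and `slot` plays the role of the per-mesh `material_bind_group_slot` index used by the shaders.

```rust
/// A material's location: which slab (bind group) and which index inside
/// that slab's binding arrays.
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
struct SlabSlot {
    slab: usize,
    slot: u32,
}

/// Hypothetical allocator grouping bindless resources into slabs of
/// `slab_size` entries, with one free list per slab.
struct SlabAllocator {
    slab_size: u32,
    /// `free_lists[s]` holds the unused slots of slab `s`.
    free_lists: Vec<Vec<u32>>,
}

impl SlabAllocator {
    fn new(slab_size: u32) -> Self {
        assert!(slab_size > 0, "slab_size must be non-zero");
        Self { slab_size, free_lists: Vec::new() }
    }

    fn allocate(&mut self) -> SlabSlot {
        // Reuse a free slot in an existing slab if there is one...
        for (slab, free) in self.free_lists.iter_mut().enumerate() {
            if let Some(slot) = free.pop() {
                return SlabSlot { slab, slot };
            }
        }
        // ...otherwise open a new slab. Slots are stored in reverse so that
        // `pop` hands them out in ascending order.
        let mut free: Vec<u32> = (0..self.slab_size).rev().collect();
        let slot = free.pop().unwrap(); // non-empty because slab_size > 0
        self.free_lists.push(free);
        SlabSlot { slab: self.free_lists.len() - 1, slot }
    }

    fn free(&mut self, location: SlabSlot) {
        self.free_lists[location.slab].push(location.slot);
    }
}

fn main() {
    // Matches `#[bindless(16)]`: each slab holds 16 materials.
    let mut allocator = SlabAllocator::new(16);
    let first = allocator.allocate();
    let second = allocator.allocate();
    allocator.free(first);
    let third = allocator.allocate(); // reuses slot 0 of slab 0
    println!("{first:?} {second:?} {third:?}");
}
```

Allocation here scans slabs linearly, which keeps the sketch short; a production allocator would more likely track which slabs still have room.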

Here's a Tracy profile of `submit_graph_commands` of this patch and an

additional patch (not submitted yet) that makes `StandardMaterial` use

bindless. Red shows those patches; yellow shows `main`. The scene was Bistro

Exterior with a hack that forces all textures to opaque. You can see a

1.47x mean speedup.

## Migration Guide

* `RenderAssets::prepare_asset` now takes an `AssetId` parameter.

* Bin keys now have Bevy-specific material bind group indices instead of

`wgpu` material bind group IDs, as part of the bindless change. Use the

new `MaterialBindGroupAllocator` to map from bind group index to bind

group ID.

# Objective

Glam has some common and useful types and helpers that are not in the

prelude of `bevy_math`. This includes shorthand constructors like

`vec3`, or even `Vec3A`, the aligned version of `Vec3`.

```rust
// The "normal" way to create a 3D vector
let vec = Vec3::new(2.0, 1.0, -3.0);
// Shorthand version
let vec = vec3(2.0, 1.0, -3.0);
```

## Solution

Add the following types and methods to the prelude:

- `vec2`, `vec3`, `vec3a`, `vec4`

- `uvec2`, `uvec3`, `uvec4`

- `ivec2`, `ivec3`, `ivec4`

- `bvec2`, `bvec3`, `bvec3a`, `bvec4`, `bvec4a`

- `mat2`, `mat3`, `mat3a`, `mat4`

- `quat` (not sure if anyone uses this, but for consistency)

- `Vec3A`

- `BVec3A`, `BVec4A`

- `Mat3A`

I did not add the u16, i16, or f64 variants like `dvec2`, since there

are currently no existing types like those in the prelude.

The shorthand constructors are currently used a lot in some places in

Bevy, and not at all in others. In a follow-up, we might want to

consider if we have a preference for the shorthand, and make a PR to

change the codebase to use it more consistently.

# Objective

Fixes #15940

## Solution

Remove the `pub use` and fix the compile errors.

Make `bevy_image` available as `bevy::image`.

## Testing

Feature Frenzy would be good here! Maybe I'll learn how to use it if I

have some time this weekend, or maybe a reviewer can use it.

## Migration Guide

Use `bevy_image` instead of `bevy_render::texture` items.

---------

Co-authored-by: chompaa <antony.m.3012@gmail.com>

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

- wgpu 0.20 made workgroup vars stop being zero-initialized by default. This

broke some applications (cough foresight cough), and we now work around

it. wgpu exposes a compilation option that zero-initializes workgroup

memory by default, but Bevy does not expose it.

## Solution

- Expose the compilation option that wgpu gives us.

## Testing

- Ran examples: 3d_scene, compute_shader_game_of_life, gpu_readback,

lines, specialized_mesh_pipeline. They all work.

- Confirmed that this fixes our own problems.

---

## Migration Guide

- Add `zero_initialize_workgroup_memory: false,` to

`ComputePipelineDescriptor` or `RenderPipelineDescriptor` structs to

preserve Bevy 0.14 behavior, or add `zero_initialize_workgroup_memory: true,`

to restore Bevy 0.13 behavior.

The two additional linear texture samplers that PCSS added caused us to

blow past the limit on Apple Silicon macOS and WebGL. To fix the issue,

this commit adds a `pbr_pcss` Cargo feature gate; PCSS is disabled unless

that feature is enabled.

Closes #15345.

Closes #15525.

Closes #15821.

---------

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

Co-authored-by: IceSentry <IceSentry@users.noreply.github.com>

# Objective

Continue improving the user experience of our UI Node API in the

direction specified by [Bevy's Next Generation Scene / UI

System](https://github.com/bevyengine/bevy/discussions/14437)

## Solution

As specified in the document above, merge `Style` fields into `Node`,

and move "computed Node fields" into `ComputedNode` (I chose this name

over something like `ComputedNodeLayout` because it currently contains

more than just layout info. If we want to break this up / rename these

concepts, let's do that in a separate PR). `Style` has been removed.

This accomplishes a number of goals:

## Ergonomics wins

Specifying both `Node` and `Style` is no longer required for

non-default styles.

Before:

```rust
commands.spawn((
    Node::default(),
    Style {
        width: Val::Px(100.),
        ..default()
    },
));
```

After:

```rust
commands.spawn(Node {
    width: Val::Px(100.),
    ..default()
});
```

## Conceptual clarity

`Style` was never a comprehensive "style sheet". It only defined "core"

style properties that all `Nodes` shared. Any "styled property" that

couldn't fit that mold had to be in a separate component. A "real" style

system would style properties _across_ components (`Node`, `Button`,

etc). We have plans to build a true style system (see the doc linked

above).

By moving the `Style` fields to `Node`, we fully embrace `Node` as the

driving concept and remove the "style system" confusion.

## Next Steps

* Consider identifying and splitting out "style properties that aren't

core to Node". This should not happen for Bevy 0.15.

---

## Migration Guide

Move any fields set on `Style` into `Node` and replace all `Style`

component usage with `Node`.

Before:

```rust
commands.spawn((
    Node::default(),
    Style {
        width: Val::Px(100.),
        ..default()
    },
));
```

After:

```rust
commands.spawn(Node {
    width: Val::Px(100.),
    ..default()
});
```

For any usage of the "computed node properties" that used to live on

`Node`, use `ComputedNode` instead:

Before:

```rust
fn system(nodes: Query<&Node>) {
    for node in &nodes {
        let computed_size = node.size();
    }
}
```

After:

```rust
fn system(computed_nodes: Query<&ComputedNode>) {
    for computed_node in &computed_nodes {
        let computed_size = computed_node.size();
    }
}
```

Fixes #15834

## Migration Guide

The APIs of `Time`, `Timer` and `Stopwatch` have been cleaned up for

consistency with each other and the standard library's `Duration` type.

The following methods have been renamed:

- `Stopwatch::paused` -> `Stopwatch::is_paused`

- `Time::elapsed_seconds` -> `Time::elapsed_secs` (including `_f64` and

`_wrapped` variants)

# Objective

Fixes #15891

## Solution

Just remove the invalid triangle. I'm assuming that line of code was

originally copied from one that was drawing a quad.

## Testing

- `cargo run --example specialized_mesh_pipeline`

- hover over the triangles

Tested on macOS.

# Objective

Clean up naming and docs, and add the missing migration guide for #15591.

All text root nodes now use `Text` (UI) / `Text2d`.

All text readers/writers use `Text<Type>Reader`/`Text<Type>Writer`

convention.

---

## Migration Guide

Doubles as #15591 migration guide.

Text bundles (`TextBundle` and `Text2dBundle`) were removed in favor of

`Text` and `Text2d`.

Shared configuration fields were replaced with `TextLayout`, `TextFont`

and `TextColor` components.

`TextBundle`'s additional field turned into the `TextNodeFlags`

component, while `Text2dBundle`'s additional fields turned into the

`TextBounds` and `Anchor` components.

Text sections were removed in favor of a hierarchy-based approach.

For root text entities with `Text` or `Text2d` components, child

entities with `TextSpan` will act as additional text sections.

To still access text spans by index, use the new `TextUiReader`,

`Text2dReader` and `TextUiWriter`, `Text2dWriter` system parameters.

# Objective

The type `AssetLoadError` has `PartialEq` and `Eq` impls, which is

problematic due to the fact that the `AssetLoaderError` and

`AddAsyncError` variants lie in their impls: they will return `true` for

any `Box<dyn Error>` with the same `TypeId`, even if the actual value is

different. This can lead to subtle bugs if a user relies on the equality

comparison to ensure that two values are equal.

The same is true for `DependencyLoadState`,

`RecursiveDependencyLoadState`.

More generally, it is an anti-pattern for large error types involving

dynamic dispatch, such as `AssetLoadError`, to have equality

comparisons. Directly comparing two errors for equality is usually not

desired -- if some logic needs to branch based on the value of an error,

it is usually more correct to check for specific variants and inspect

their fields.

As far as I can tell, the only reason these errors have equality

comparisons is because the `LoadState` enum wraps `AssetLoadError` for

its `Failed` variant. This equality comparison is only used to check for

`== LoadState::Loaded`, which we can easily replace with an `is_loaded`

method.
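The equality problem is easy to reproduce in isolation. In this std-only sketch, `ErasedError` is a hypothetical type-erased wrapper (not Bevy's code) whose `PartialEq` compares only the concrete `TypeId`, which is the behavior described above: two `ParseIntError`s with different causes compare equal.

```rust
use std::any::TypeId;
use std::error::Error;

/// Hypothetical type-erased error that remembers the concrete type of the
/// error it wraps.
struct ErasedError {
    type_id: TypeId,
    inner: Box<dyn Error>,
}

impl ErasedError {
    fn new<E: Error + 'static>(error: E) -> Self {
        Self { type_id: TypeId::of::<E>(), inner: Box::new(error) }
    }
}

impl PartialEq for ErasedError {
    /// The problematic impl: only the *type* is compared, never the value.
    fn eq(&self, other: &Self) -> bool {
        self.type_id == other.type_id
    }
}

/// Wraps the error from parsing `input` as an `i32` (panics if parsing succeeds).
fn parse_error(input: &str) -> ErasedError {
    ErasedError::new(input.parse::<i32>().unwrap_err())
}

fn main() {
    let empty = parse_error("");
    let invalid = parse_error("abc");
    // Prints two different messages...
    println!("{} / {}", empty.inner, invalid.inner);
    // ...yet the two errors compare equal.
    println!("equal: {}", empty == invalid);
}
```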

## Solution

Remove the `{Partial}Eq` impls from `LoadState`, which also allows us to

remove it from the error types.

## Migration Guide

The types `bevy_asset::AssetLoadError` and `bevy_asset::LoadState` no

longer support equality comparisons. If you need to check for an asset's

load state, consider checking for a specific variant using

`LoadState::is_loaded` or the `matches!` macro. Similarly, consider

using the `matches!` macro to check for specific variants of the

`AssetLoadError` type if you need to inspect the value of an asset load

error in your code.

`DependencyLoadState` and `RecursiveDependencyLoadState` are not

released yet, so no migration is needed.
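A minimal sketch of the suggested migration, using a simplified, hypothetical `LoadState` stand-in (not the real `bevy_asset::LoadState`) that deliberately has no `PartialEq`: check the variant with a helper like `is_loaded` or with `matches!`, and inspect the error inside `Failed` directly instead of comparing whole states.

```rust
use std::error::Error;
use std::sync::Arc;

/// Hypothetical, simplified load state. No `PartialEq`: comparing wrapped
/// errors for equality is exactly what we want to avoid.
#[derive(Debug)]
enum LoadState {
    NotLoaded,
    Loading,
    Loaded,
    Failed(Arc<dyn Error + Send + Sync>),
}

impl LoadState {
    /// Replaces `state == LoadState::Loaded`.
    fn is_loaded(&self) -> bool {
        matches!(self, LoadState::Loaded)
    }
}

fn describe(state: &LoadState) -> String {
    match state {
        LoadState::NotLoaded => "not loaded".to_string(),
        LoadState::Loading => "loading".to_string(),
        LoadState::Loaded => "loaded".to_string(),
        // Inspect the error itself instead of comparing whole states.
        LoadState::Failed(error) => format!("failed: {error}"),
    }
}

fn main() {
    let failed = LoadState::Failed(Arc::new(std::io::Error::new(
        std::io::ErrorKind::NotFound,
        "missing.png",
    )));
    for state in [LoadState::NotLoaded, LoadState::Loading, LoadState::Loaded, failed] {
        let still_waiting = matches!(state, LoadState::NotLoaded | LoadState::Loading);
        println!("{} (loaded: {}, waiting: {})", describe(&state), state.is_loaded(), still_waiting);
    }
}
```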

---------

Co-authored-by: Joseph <21144246+JoJoJet@users.noreply.github.com>

# Objective

- Closes #15866

## Solution

- Simply migrate where possible.

## Testing

- I expect CI to do most of the work. Running the examples is another way of

testing this, as most of the changes are in that area.

---

## Notes

For now, this PR doesn't migrate `QueryState::single` and friends, as

that looks like a separate issue. So, for example, QueryBuilders that

used single or `World::query` calls that used single weren't migrated. If there

is an easy way to migrate those, please let me know.

Most of the uses of `Query::single` were removed; the only other uses

that I found were related to tests of said methods, so they will probably be

removed when we remove `Query::single`.

# Objective

- Required components replace bundles, but `SpatialBundle` is yet to be

deprecated

## Solution

- Deprecate `SpatialBundle`

- Insert `Transform` and `Visibility` instead in examples using it

- In `spawn` or `insert` calls that insert a default `Transform` or `Visibility`

alongside a component that already requires either, remove those components from

the tuple

## Testing

- Did you test these changes? If so, how?

Yes, I ran the examples I changed and tests

- Are there any parts that need more testing?

The `gamepad_viewer` and `custom_shader_instancing` examples don't

work as intended due to entirely unrelated code; I didn't check them on main.

- How can other people (reviewers) test your changes? Is there anything

specific they need to know?

Run examples, or just check that all spawned values are identical

- If relevant, what platforms did you test these changes on, and are

there any important ones you can't test?

Linux, Wayland through X11 (because that's the default feature)

---

## Migration Guide

`SpatialBundle` is now deprecated; insert `Transform` and `Visibility`

instead, which will automatically insert all other components that were

in the bundle. If you do not specify these values and any other

component in your `spawn`/`insert` call already requires either of

these components, you can leave that one out.

before:

```rust

commands.spawn(SpatialBundle::default());

```

after:

```rust

commands.spawn((Transform::default(), Visibility::default()));

```
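Required components are what make this work: inserting a component also inserts defaults for the components it requires, unless they are already present. The following std-only toy model shows that rule; the `Entity`, `Component`, `Transform`, `Visibility`, and `Sprite` types here are hypothetical stand-ins, not Bevy's ECS.

```rust
use std::any::{Any, TypeId};
use std::collections::HashMap;

/// Hypothetical entity: a map from component type to boxed component.
#[derive(Default)]
struct Entity {
    components: HashMap<TypeId, Box<dyn Any>>,
}

/// Hypothetical component trait. `insert_required` adds defaults for the
/// components this one requires; the default is "requires nothing".
trait Component: Any {
    fn insert_required(_entity: &mut Entity) {}
}

impl Entity {
    fn insert<C: Component>(&mut self, component: C) -> &mut Self {
        C::insert_required(self);
        self.components.insert(TypeId::of::<C>(), Box::new(component));
        self
    }

    /// Inserts `C::default()` only if the entity has no `C` yet, so
    /// explicitly provided values are never overwritten.
    fn insert_if_missing<C: Component + Default>(&mut self) {
        if !self.contains::<C>() {
            self.insert(C::default());
        }
    }

    fn contains<C: Component>(&self) -> bool {
        self.components.contains_key(&TypeId::of::<C>())
    }

    fn get<C: Component>(&self) -> Option<&C> {
        self.components.get(&TypeId::of::<C>())?.downcast_ref::<C>()
    }
}

#[derive(Default, Debug, PartialEq)]
struct Transform {
    translation: [f32; 3],
}

#[derive(Default, Debug, PartialEq)]
enum Visibility {
    #[default]
    Inherited,
    Hidden,
}

/// Stands in for a component such as `Sprite` that requires others.
#[derive(Debug, PartialEq)]
struct Sprite;

impl Component for Transform {}
impl Component for Visibility {}
impl Component for Sprite {
    fn insert_required(entity: &mut Entity) {
        entity.insert_if_missing::<Transform>();
        entity.insert_if_missing::<Visibility>();
    }
}

fn main() {
    // Like spawning just `Sprite`: Transform and Visibility are filled in.
    let mut implicit = Entity::default();
    implicit.insert(Sprite);
    println!("{:?} {:?}", implicit.get::<Transform>(), implicit.get::<Visibility>());

    // An explicitly provided value is kept rather than replaced by the default.
    let mut explicit = Entity::default();
    explicit.insert(Visibility::Hidden).insert(Sprite);
    println!("{:?}", explicit.get::<Visibility>());
}
```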

# Objective

Currently text is recomputed unnecessarily on any changes to its color,

which is extremely expensive.

## Solution

Split up `TextStyle` into two separate components `TextFont` and

`TextColor`.
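Why the split helps: relayout is driven by change detection, so a component that mixes layout-relevant data (the font) with cheap render-only data (the color) makes every color tweak look like a layout change. This std-only sketch models that with a hypothetical `Tracked<T>` change flag and hypothetical `TextFont`/`TextColor` structs; a counter stands in for the expensive shaping and layout work. It is an illustration, not Bevy's text pipeline.

```rust
/// Hypothetical font settings: changing these affects layout.
#[derive(Clone, Debug, PartialEq)]
struct TextFont {
    font: String,
    font_size: f32,
}

/// Hypothetical color: changing it never affects layout.
#[derive(Clone, Copy, Debug, PartialEq)]
struct TextColor([f32; 4]);

/// A value plus a "changed since last update" flag, loosely modeled on
/// change detection.
struct Tracked<T> {
    value: T,
    changed: bool,
}

impl<T> Tracked<T> {
    fn new(value: T) -> Self {
        Self { value, changed: true }
    }
    fn set(&mut self, value: T) {
        self.value = value;
        self.changed = true;
    }
}

struct TextEntity {
    font: Tracked<TextFont>,
    color: Tracked<TextColor>,
    /// Counts how often the expensive shaping/layout step ran.
    layout_runs: u32,
}

/// Per-frame update: only a font change pays for relayout. A color change is
/// simply consumed here (a renderer would re-extract the new color).
fn update_text(text: &mut TextEntity) {
    if text.font.changed {
        text.layout_runs += 1;
    }
    text.font.changed = false;
    text.color.changed = false;
}

fn new_text() -> TextEntity {
    TextEntity {
        font: Tracked::new(TextFont { font: "FiraSans-Bold.ttf".to_string(), font_size: 20.0 }),
        color: Tracked::new(TextColor([1.0, 1.0, 1.0, 1.0])),
        layout_runs: 0,
    }
}

fn main() {
    let mut text = new_text();
    update_text(&mut text); // first frame: layout runs once
    text.color.set(TextColor([1.0, 0.0, 0.0, 1.0]));
    update_text(&mut text); // color only: no relayout
    println!("layout ran {} time(s); color is {:?}", text.layout_runs, text.color.value);
}
```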

## Testing

I added this system to `many_buttons`:

```rust
fn set_text_colors_changed(mut colors: Query<&mut TextColor>) {
    for mut text_color in colors.iter_mut() {
        text_color.set_changed();
    }
}
```

With that system, `many_buttons` reports ~4 fps on main and ~50 fps with this PR.

## Migration Guide

`TextStyle` has been renamed to `TextFont` and its `color` field has

been moved to a separate component named `TextColor` which newtypes

`Color`.

# Objective

In the Render World, there are a number of collections that are derived

from Main World entities and are used to drive rendering. The most

notable are:

- `VisibleEntities`, which is generated in the `check_visibility` system

and contains visible entities for a view.

- `ExtractedInstances`, which maps entity ids to asset ids.

In the old model, these collections were trivially kept in sync -- any

extracted phase item could look itself up because the render entity id

was guaranteed to always match the corresponding main world id.

After #15320, this became much more complicated, and was leading to a

number of subtle bugs in the Render World. The main rendering systems,

i.e. `queue_material_meshes` and `queue_material2d_meshes`, follow a

similar pattern:

```rust
for visible_entity in visible_entities.iter::<With<Mesh2d>>() {
    let Some(mesh_instance) = render_mesh_instances.get_mut(visible_entity) else {
        continue;
    };
    // Look some more stuff up and specialize the pipeline...
    let bin_key = Opaque2dBinKey {
        pipeline: pipeline_id,
        draw_function: draw_opaque_2d,
        asset_id: mesh_instance.mesh_asset_id.into(),
        material_bind_group_id: material_2d.get_bind_group_id().0,
    };
    opaque_phase.add(
        bin_key,
        *visible_entity,
        BinnedRenderPhaseType::mesh(mesh_instance.automatic_batching),
    );
}
```

In this case, `visible_entities` and `render_mesh_instances` are both

collections that are created and keyed by Main World entity ids, and so

this lookup happens to work by coincidence. However, there is a major

unintentional bug here: namely, because `visible_entities` is a

collection of Main World ids, the phase item being queued is created

with a Main World id rather than its correct Render World id.

This happens to not break mesh rendering because the render commands

used for drawing meshes do not access the `ItemQuery` parameter, but

demonstrates the confusion that is now possible: our UI phase items are

correctly being queued with Render World ids while our meshes aren't.

Additionally, this makes it very easy to accidentally use the wrong

entity id to look up things like assets. For example, if instead we

ignored visibility checks and queued our meshes via a query, we'd have

to be extra careful to use `&MainEntity` instead of the natural

`Entity`.

## Solution

Make all collections that are derived from Main World data use

`MainEntity` as their key, to ensure type safety and avoid accidentally

looking up data with the wrong entity id:

```rust

pub type MainEntityHashMap<V> = hashbrown::HashMap<MainEntity, V, EntityHash>;

```

Additionally, we make all `PhaseItem` be able to provide both their Main

and Render World ids, to allow render phase implementors maximum

flexibility as to what id should be used to look up data.

You can think of this like tracking at the type level whether something

in the Render World should use its "primary key", i.e. entity id, or

needs to use a foreign key, i.e. `MainEntity`.
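The type-safety argument can be shown with plain newtypes. In this std-only sketch, `MainEntity`, `RenderEntity`, and `PhaseItem` are simplified hypothetical stand-ins, and `std::collections::HashMap` replaces the `hashbrown` map with `EntityHash` used above. Because the two id types are distinct, looking up a main-world-keyed collection with a render-world id is a compile error rather than a silent miss.

```rust
use std::collections::HashMap;

/// Hypothetical id of an entity in the main world.
#[derive(Clone, Copy, Debug, PartialEq, Eq, Hash)]
struct MainEntity(u64);

/// Hypothetical id of an entity in the render world.
#[derive(Clone, Copy, Debug, PartialEq, Eq, Hash)]
struct RenderEntity(u64);

/// Collections derived from main-world data are keyed by `MainEntity` only.
type MainEntityHashMap<V> = HashMap<MainEntity, V>;

/// A phase item that can report both of its ids.
#[derive(Clone, Copy, Debug)]
struct PhaseItem {
    entity: RenderEntity,
    main_entity: MainEntity,
}

/// Looks up the mesh asset for a queued item. Writing
/// `mesh_assets.get(&item.entity)` here would not compile, because the map
/// expects a `&MainEntity`, not a `&RenderEntity`.
fn mesh_asset_for(
    item: &PhaseItem,
    mesh_assets: &MainEntityHashMap<&'static str>,
) -> Option<&'static str> {
    mesh_assets.get(&item.main_entity).copied()
}

fn main() {
    let mut mesh_assets: MainEntityHashMap<&'static str> = HashMap::new();
    mesh_assets.insert(MainEntity(7), "cube.glb");
    // The render-world id is unrelated to the main-world id.
    let item = PhaseItem { entity: RenderEntity(42), main_entity: MainEntity(7) };
    println!("{:?} -> {:?}", item.entity, mesh_asset_for(&item, &mesh_assets));
}
```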

## Testing

##### TODO:

This will require extensive testing to make sure things didn't break!

Additionally, some extraction logic has become more complicated and

needs to be checked for regressions.

## Migration Guide

With the advent of the retained render world, collections that contain

references to `Entity` that are extracted into the render world have

been changed to contain `MainEntity` in order to prevent errors where a

render world entity id is used to look up an item by accident. Custom

rendering code may need to be changed to query for `&MainEntity` in

order to look up the correct item from such a collection. Additionally,

users who implement their own extraction logic for collections of main

world entities should strongly consider extracting into a different

collection that uses `MainEntity` as a key.

Additionally, render phases now require specifying both the `Entity` and

`MainEntity` for a given `PhaseItem`. Custom render phases should ensure

`MainEntity` is available when queuing a phase item.

# Objective

- Closes #15716

- Closes #15718

## Solution

- Replace `Handle<MeshletMesh>` with a new `MeshletMesh3d` component

- As expected, there were some random things that needed fixing:

  - A couple of tests were storing handles, I believe just to prevent them from being dropped, which seems to have been unnecessary in some of them.

  - The `SpriteBundle` still had a `Handle<Image>` field. I've removed this.

  - Tests in `bevy_sprite` incorrectly added a `Handle<Image>` field outside of the `Sprite` component.

  - A few examples were still inserting `Handle`s; I switched those to their corresponding wrappers.

  - 2 examples that were still querying for `Handle<Image>` were changed to query `Sprite`.

## Testing

- I've verified that the changed examples work now

## Migration Guide

`Handle` can no longer be used as a `Component`. All existing Bevy types

using this pattern have been wrapped in their own semantically

meaningful type. You should do the same for any custom `Handle`

components your project needs.

The `Handle<MeshletMesh>` component is now `MeshletMesh3d`.

The `WithMeshletMesh` type alias has been removed. Use

`With<MeshletMesh3d>` instead.

**Ready for review. Examples migration progress: 100%.**

# Objective

- Implement https://github.com/bevyengine/bevy/discussions/15014

## Solution

This implements [cart's

proposal](https://github.com/bevyengine/bevy/discussions/15014#discussioncomment-10574459)

faithfully except for one change. I separated `TextSpan` from

`TextSpan2d` because `TextSpan` needs to require the `GhostNode`

component, which is a `bevy_ui` component only usable by UI.

Extra changes:

- Added `EntityCommands::commands_mut` that returns a mutable reference.

This is a blocker for extension methods that return something other than

`self`. Note that `sickle_ui`'s `UiBuilder::commands` returns a mutable

reference for this reason.

## Testing

- [x] Text examples all work.

---

## Showcase

TODO: showcase-worthy

## Migration Guide

TODO: very breaking

### Accessing text spans by index

Text sections are now separate span entities in a

hierarchy. Use the new `TextReader` and `TextWriter` system parameters

to access spans by index.

Before:

```rust
fn refresh_text(mut query: Query<&mut Text, With<TimeText>>, time: Res<Time>) {
    let mut text = query.single_mut();
    text.sections[1].value = format_time(time.elapsed());
}
```

After:

```rust
fn refresh_text(
    query: Query<Entity, With<TimeText>>,
    mut writer: UiTextWriter,
    time: Res<Time>,
) {
    let entity = query.single();
    *writer.text(entity, 1) = format_time(time.elapsed());
}
```

### Iterating text spans

Text spans are now entities in a hierarchy, so the new `UiTextReader`

and `UiTextWriter` system parameters provide ways to iterate that

hierarchy. The `UiTextReader::iter` method will give you a normal

iterator over spans, and `UiTextWriter::for_each` lets you visit each of

the spans.
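Conceptually, the span index used by these readers and writers addresses the root entity and its spans in hierarchy order: index 0 is the root's own text, and later indices follow its `TextSpan` descendants. This std-only sketch assumes a depth-first, pre-order walk (an assumption about the traversal order, so check the `UiTextReader` docs before relying on it); `TextTree` and `text_mut` are hypothetical names, not Bevy APIs.

```rust
/// Hypothetical arena: each node has its own text and child ids. A root
/// plays the role of `Text`, its descendants the role of `TextSpan`s.
struct TextTree {
    text: Vec<String>,
    children: Vec<Vec<usize>>,
}

impl TextTree {
    /// Depth-first, pre-order walk: index 0 is the root's own text, index 1
    /// its first span, and so on.
    fn spans(&self, root: usize) -> Vec<usize> {
        let mut order = Vec::new();
        let mut stack = vec![root];
        while let Some(node) = stack.pop() {
            order.push(node);
            // Push children in reverse so the first child is visited first.
            stack.extend(self.children[node].iter().rev());
        }
        order
    }

    /// Mutable access to the text of the `index`-th span under `root`.
    fn text_mut(&mut self, root: usize, index: usize) -> Option<&mut String> {
        let node = *self.spans(root).get(index)?;
        self.text.get_mut(node)
    }
}

fn main() {
    // "Time: " is the root text; "00:00" is its only span (index 1).
    let mut tree = TextTree {
        text: vec!["Time: ".to_string(), "00:00".to_string()],
        children: vec![vec![1], vec![]],
    };
    if let Some(span) = tree.text_mut(0, 1) {
        *span = "01:23".to_string();
    }
    let full: String = tree.spans(0).iter().map(|&i| tree.text[i].as_str()).collect();
    println!("{full}");
}
```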

---------

Co-authored-by: ickshonpe <david.curthoys@googlemail.com>

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

Continue migration of bevy APIs to required components, following

guidance of https://hackmd.io/@bevy/required_components/

## Solution

- Make `Sprite` require `Transform` and `Visibility` and

`SyncToRenderWorld`

- move image and texture atlas handles into `Sprite`

- deprecate `SpriteBundle`

- remove engine uses of `SpriteBundle`

## Testing

Ran `cargo test` on `bevy_sprite` and tested several sprite examples.

---

## Migration Guide

Replace all uses of `SpriteBundle` with `Sprite`. There are several new

convenience constructors: `Sprite::from_image`,

`Sprite::from_atlas_image`, `Sprite::from_color`.

WARNING: use of `Handle<Image>` and `TextureAtlas` as components on

sprite entities will NO LONGER WORK. Use the fields on `Sprite` instead.

I would have removed the `Component` impls from `TextureAtlas` and

`Handle<Image>`, except that they are still used within UI. We should fix this

as the migration moves forward.

# Objective

Yet another PR for migrating stuff to required components. This time,

cameras!

## Solution

As per the [selected

proposal](https://hackmd.io/tsYID4CGRiWxzsgawzxG_g#Combined-Proposal-1-Selected),

deprecate `Camera2dBundle` and `Camera3dBundle` in favor of `Camera2d`

and `Camera3d`.

Adding a `Camera` without `Camera2d` or `Camera3d` now logs a warning,

as suggested by Cart [on

Discord](https://discord.com/channels/691052431525675048/1264881140007702558/1291506402832945273).

I would personally like cameras to work a bit differently and be split

into a few more components, to avoid some footguns and confusing

semantics, but that is more controversial, and shouldn't block this core

migration.

## Testing

I ran a few 2D and 3D examples, and tried cameras with and without

render graphs.

---

## Migration Guide

`Camera2dBundle` and `Camera3dBundle` have been deprecated in favor of

`Camera2d` and `Camera3d`. Inserting them will now also insert the other

components required by them automatically.

# Objective

A big step in the migration to required components: meshes and

materials!

## Solution

As per the [selected

proposal](https://hackmd.io/@bevy/required_components/%2Fj9-PnF-2QKK0on1KQ29UWQ):

- Deprecate `MaterialMesh2dBundle`, `MaterialMeshBundle`, and

`PbrBundle`.

- Add `Mesh2d` and `Mesh3d` components, which wrap a `Handle<Mesh>`.

- Add `MeshMaterial2d<M: Material2d>` and `MeshMaterial3d<M: Material>`,

which wrap a `Handle<M>`.

- Meshes *without* a mesh material should be rendered with a default

material. The existence of a material is determined by

`HasMaterial2d`/`HasMaterial3d`, which is required by

`MeshMaterial2d`/`MeshMaterial3d`. This gets around problems with the

generics.

Previously:

```rust
commands.spawn(MaterialMesh2dBundle {
    mesh: meshes.add(Circle::new(100.0)).into(),
    material: materials.add(Color::srgb(7.5, 0.0, 7.5)),
    transform: Transform::from_translation(Vec3::new(-200., 0., 0.)),
    ..default()
});
```

Now:

```rust
commands.spawn((
    Mesh2d(meshes.add(Circle::new(100.0))),
    MeshMaterial2d(materials.add(Color::srgb(7.5, 0.0, 7.5))),
    Transform::from_translation(Vec3::new(-200., 0., 0.)),
));
```

If the mesh material is missing, previously nothing was rendered. Now,

it renders a white default `ColorMaterial` in 2D and a

`StandardMaterial` in 3D (this can be overridden). Below, only every

other entity has a material:

Why white? This is still open for discussion, but I think white makes sense for a *default* material. By contrast, *invalid* asset handles pointing to nothing should get something like a pink material to indicate that something is broken (I don't handle that in this PR yet). This is a mix of Godot and Unity. Godot just renders a white material for non-existent materials. Unity renders nothing when no materials exist, but renders pink for invalid materials. I can also change the default material to pink if that is preferable, though.
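
The proposed policy can be sketched as a small resolver: white for a missing material, pink for a handle that points to nothing. The pink case is explicitly *not* implemented in this PR. `Color`, `resolve`, `ERROR_PINK`, and the plain `HashMap` standing in for asset storage are all hypothetical.

```rust
use std::collections::HashMap;

#[derive(Clone, Copy, Debug, PartialEq)]
struct Color {
    r: f32,
    g: f32,
    b: f32,
}

/// Default for meshes that have no material at all.
const WHITE: Color = Color { r: 1.0, g: 1.0, b: 1.0 };
/// Hypothetical "something is broken" color (pink/magenta).
const ERROR_PINK: Color = Color { r: 1.0, g: 0.0, b: 1.0 };

/// No handle -> white default; handle with no loaded asset -> pink;
/// otherwise the asset's color.
fn resolve(handle: Option<u32>, assets: &HashMap<u32, Color>) -> Color {
    match handle {
        None => WHITE,
        Some(id) => assets.get(&id).copied().unwrap_or(ERROR_PINK),
    }
}

fn main() {
    let mut assets = HashMap::new();
    assets.insert(1, Color { r: 0.2, g: 0.4, b: 0.6 });
    assert_eq!(resolve(None, &assets), WHITE);
    assert_eq!(resolve(Some(99), &assets), ERROR_PINK);
    assert_eq!(resolve(Some(1), &assets), Color { r: 0.2, g: 0.4, b: 0.6 });
}
```

Keeping the two fallbacks distinct makes "forgot to add a material" and "material handle is dangling" visually distinguishable.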

## Testing

I ran some 2D and 3D examples to test whether anything changed visually. However, I have not tested all examples or features yet. If anyone wants to test more extensively, it would be appreciated!

## Implementation Notes

- The relationship between `bevy_render` and `bevy_pbr` is weird here. `bevy_render` needs `Mesh3d` for its own systems, but `bevy_pbr` has all of the material logic, and `bevy_render` doesn't depend on it. I feel the two crates should be refactored in some way, but I think that's out of scope for this PR.
- I didn't migrate meshlets to required components yet. That can probably be done in a follow-up, as this is already a huge PR.
- It is becoming increasingly clear to me that we really, *really* want to disallow raw asset handles as components. They already caused me a *ton* of headaches here. It took me a long time to find every place that queried for them or inserted them directly on entities, since there were no compiler errors for it. If we don't remove the `Component` derive, I expect raw asset handles to be a *huge* footgun for users as we transition to wrapper components, especially since handles as components have been the norm so far. I personally consider this to be a blocker for 0.15: we need to migrate to wrapper components for asset handles everywhere and remove the `Component` derive. Also see https://github.com/bevyengine/bevy/issues/14124.
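
Here is a toy illustration of why removing the derive matters. If only wrapper types are allowed as components, inserting a raw handle is rejected at compile time instead of being silently ignored by the renderer. `Handle`, `Entity`, `Component`, and `mesh_to_draw` are hypothetical stand-ins, not Bevy's types.

```rust
use std::any::{Any, TypeId};
use std::collections::HashMap;
use std::marker::PhantomData;

/// Hypothetical typed asset handle (not Bevy's `Handle`).
struct Handle<T> {
    id: u32,
    _asset: PhantomData<T>,
}

impl<T> Handle<T> {
    fn new(id: u32) -> Self {
        Handle { id, _asset: PhantomData }
    }
}

struct Mesh;

/// Wrapper component whose type states the handle's role.
struct Mesh3d(Handle<Mesh>);

/// Only types implementing this marker may be inserted as components.
trait Component: Any {}
impl Component for Mesh3d {}
// Deliberately no `impl<T: 'static> Component for Handle<T>`: inserting a raw
// handle is a compile error instead of a component the renderer never reads.

#[derive(Default)]
struct Entity {
    components: HashMap<TypeId, Box<dyn Any>>,
}

impl Entity {
    fn insert<C: Component>(&mut self, component: C) {
        self.components.insert(TypeId::of::<C>(), Box::new(component));
    }

    fn get<C: Component>(&self) -> Option<&C> {
        self.components.get(&TypeId::of::<C>())?.downcast_ref::<C>()
    }
}

/// Stand-in for a render system that only looks for `Mesh3d`.
fn mesh_to_draw(entity: &Entity) -> Option<u32> {
    entity.get::<Mesh3d>().map(|mesh| mesh.0.id)
}

fn main() {
    let mut entity = Entity::default();
    entity.insert(Mesh3d(Handle::new(7)));
    assert_eq!(mesh_to_draw(&entity), Some(7));
    // entity.insert(Handle::<Mesh>::new(7)); // error: `Handle<Mesh>` is not a `Component`
}
```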

---

## Migration Guide

Asset handles for meshes and mesh materials must now be wrapped in the `Mesh2d` and `MeshMaterial2d` or `Mesh3d` and `MeshMaterial3d` components for 2D and 3D respectively. Raw handles as components no longer render meshes.

Additionally, `MaterialMesh2dBundle`, `MaterialMeshBundle`, and `PbrBundle` have been deprecated. Instead, use the mesh and material components directly.

Previously:

```rust
commands.spawn(MaterialMesh2dBundle {
    mesh: meshes.add(Circle::new(100.0)).into(),
    material: materials.add(Color::srgb(7.5, 0.0, 7.5)),
    transform: Transform::from_translation(Vec3::new(-200., 0., 0.)),
    ..default()
});
```

Now:

```rust
commands.spawn((
    Mesh2d(meshes.add(Circle::new(100.0))),
    MeshMaterial2d(materials.add(Color::srgb(7.5, 0.0, 7.5))),
    Transform::from_translation(Vec3::new(-200., 0., 0.)),
));
```

If the mesh material is missing, a white default material is now used. Previously, nothing was rendered if the material was missing.

The `WithMesh2d` and `WithMesh3d` query filter type aliases have also been removed. Simply use `With<Mesh2d>` or `With<Mesh3d>`.

---------

Co-authored-by: Tim Blackbird <justthecooldude@gmail.com>

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

Another step in the migration to required components: lights!

Note that this does not include `EnvironmentMapLight` or reflection probes yet, because their API has not been finalized.

## Solution

As per the [selected proposals](https://hackmd.io/@bevy/required_components/%2FLLnzwz9XTxiD7i2jiUXkJg):

- Deprecate `PointLightBundle` in favor of the `PointLight` component
- Deprecate `SpotLightBundle` in favor of the `SpotLight` component
- Deprecate `DirectionalLightBundle` in favor of the `DirectionalLight` component

## Testing

I ran some examples with lights.

---

## Migration Guide

`PointLightBundle`, `SpotLightBundle`, and `DirectionalLightBundle` have been deprecated. Use the `PointLight`, `SpotLight`, and `DirectionalLight` components instead. Adding them will now automatically insert the other components they require.

# Objective

- The `shader_instancing` example can be misleading, since it doesn't explain that Bevy has built-in automatic instancing.

## Solution

- Explain that Bevy has built-in instancing and that this example is for advanced users.
- Add a new `automatic_instancing` example that shows how to use the built-in automatic instancing.
- Rename the `shader_instancing` example to `custom_shader_instancing` to highlight that it is a more advanced implementation.
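
Conceptually, automatic instancing means grouping entities that share the same mesh and material. Each group can then be drawn with one instanced draw call instead of one call per entity. The sketch below only illustrates that grouping idea and is not Bevy's batching code. `DrawItem` and `batch` are hypothetical. A real renderer must also respect draw order (for example, for sorted transparent items), which this map-based grouping ignores.

```rust
use std::collections::BTreeMap;

/// Hypothetical draw item: which mesh and material an entity uses.
#[derive(Clone, Copy)]
struct DrawItem {
    entity: u32,
    mesh: u32,
    material: u32,
}

/// Groups entities by (mesh, material); each group could become a single
/// instanced draw instead of one draw per entity.
fn batch(items: &[DrawItem]) -> BTreeMap<(u32, u32), Vec<u32>> {
    let mut batches: BTreeMap<(u32, u32), Vec<u32>> = BTreeMap::new();
    for item in items {
        batches
            .entry((item.mesh, item.material))
            .or_default()
            .push(item.entity);
    }
    batches
}

fn main() {
    let items = [
        DrawItem { entity: 0, mesh: 1, material: 1 },
        DrawItem { entity: 1, mesh: 1, material: 1 },
        DrawItem { entity: 2, mesh: 2, material: 1 },
        DrawItem { entity: 3, mesh: 1, material: 1 },
    ];
    let batches = batch(&items);
    // Four entities collapse into two draws.
    assert_eq!(batches.len(), 2);
    assert_eq!(batches[&(1u32, 1u32)], vec![0, 1, 3]);
}
```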

---------

Co-authored-by: JMS55 <47158642+JMS55@users.noreply.github.com>

# Objective

Adds a new `Readback` component to request readback of a `Handle<Image>` or `Handle<ShaderStorageBuffer>` to the CPU in a future frame.

## Solution

We track the `Readback` component, allocate a target buffer to write the GPU resource into, and map it back asynchronously. Once mapping completes, a trigger fires on the entity in the main world. This process is asynchronous and generally takes a few frames.
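
The asynchronous flow described above (request now, get a callback a few frames later) can be modeled with a channel. A worker thread stands in for the GPU copy and buffer mapping, and a per-frame poll fires a completion callback once the data arrives. This is a std-only sketch. `request_readback`, `run_frames`, `PendingReadback`, and the 30 ms delay are hypothetical and not part of Bevy or wgpu.

```rust
use std::sync::mpsc::{channel, Receiver, TryRecvError};
use std::thread;
use std::time::Duration;

/// Hypothetical pending readback: the receiving end of an async "map" request.
struct PendingReadback {
    rx: Receiver<Vec<u32>>,
}

/// Starts a simulated copy + map on another thread and returns immediately.
fn request_readback(data: Vec<u32>) -> PendingReadback {
    let (tx, rx) = channel();
    thread::spawn(move || {
        thread::sleep(Duration::from_millis(30)); // pretend mapping takes a while
        let _ = tx.send(data);
    });
    PendingReadback { rx }
}

/// Polls once per "frame" without blocking; calls `on_complete` exactly once
/// when data arrives and returns how many frames that took.
fn run_frames(pending: PendingReadback, mut on_complete: impl FnMut(Vec<u32>)) -> u32 {
    let mut frame = 0;
    loop {
        frame += 1;
        match pending.rx.try_recv() {
            Ok(data) => {
                on_complete(data);
                return frame;
            }
            // Not ready yet: simulate the rest of this frame, then poll again.
            Err(TryRecvError::Empty) => thread::sleep(Duration::from_millis(10)),
            Err(TryRecvError::Disconnected) => panic!("readback was dropped"),
        }
    }
}

fn main() {
    let pending = request_readback(vec![0u32; 16]);
    let mut received = Vec::new();
    let frames = run_frames(pending, |data| received = data);
    println!("readback completed after {frames} frames");
    assert_eq!(received, vec![0u32; 16]);
}
```

The exact frame count varies from run to run, which matches the caveat that readback "generally takes a few frames" rather than a fixed number.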

## Showcase

```rust
let mut buffer = ShaderStorageBuffer::from(vec![0u32; 16]);
// The buffer is the source of a GPU copy during readback, so it needs COPY_SRC.
buffer.buffer_description.usage |= BufferUsages::COPY_SRC;
let buffer = buffers.add(buffer);

commands
    .spawn(Readback::buffer(buffer.clone()))
    .observe(|trigger: Trigger<ReadbackComplete>| {
        info!("Buffer data from previous frame {:?}", trigger.event());
    });
```

---------

Co-authored-by: Kristoffer Søholm <k.soeholm@gmail.com>

Co-authored-by: IceSentry <IceSentry@users.noreply.github.com>