# Objective

Fixes #5304

## Solution

Instead of using a simple utility function for loading, which uses a default allocation limit of 512MB, we use a Reader object which can be configured ad hoc.

## Changelog


- Allows loading of textures larger than 512MB

# Objective

When someone searches rustdoc for `world_to_screen`, they will now find `world_to_viewport`. The method was renamed in 0.8, so it would be nice to let users find the new name more easily.

---

# Objective

- Added a bunch of backticks to things that should have them, like equations and abstract variable names.

- Changed all small x, y, and z to capitals X, Y, Z.

This might be more annoying than helpful; feel free to refuse this PR.

# Objective

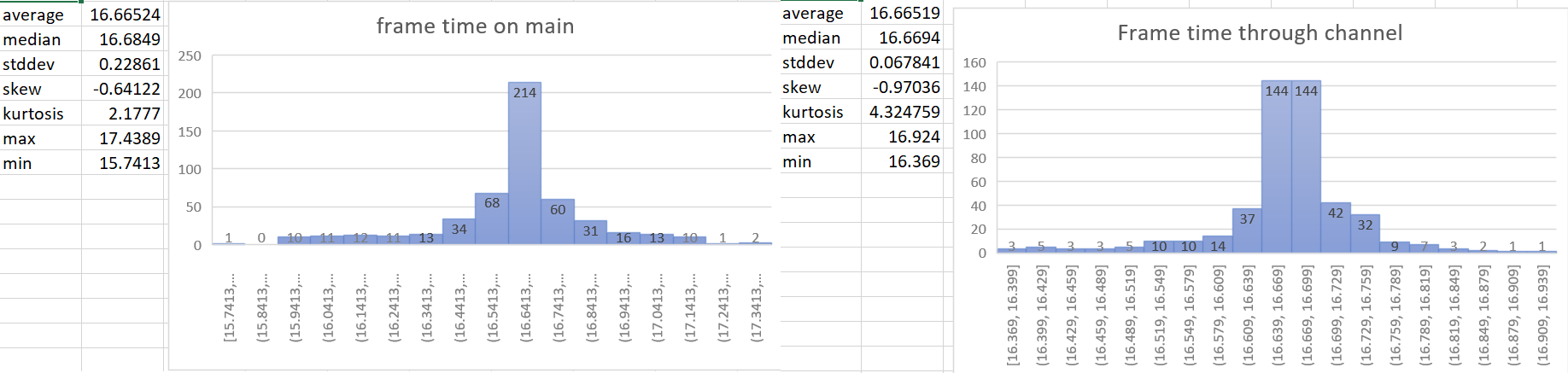

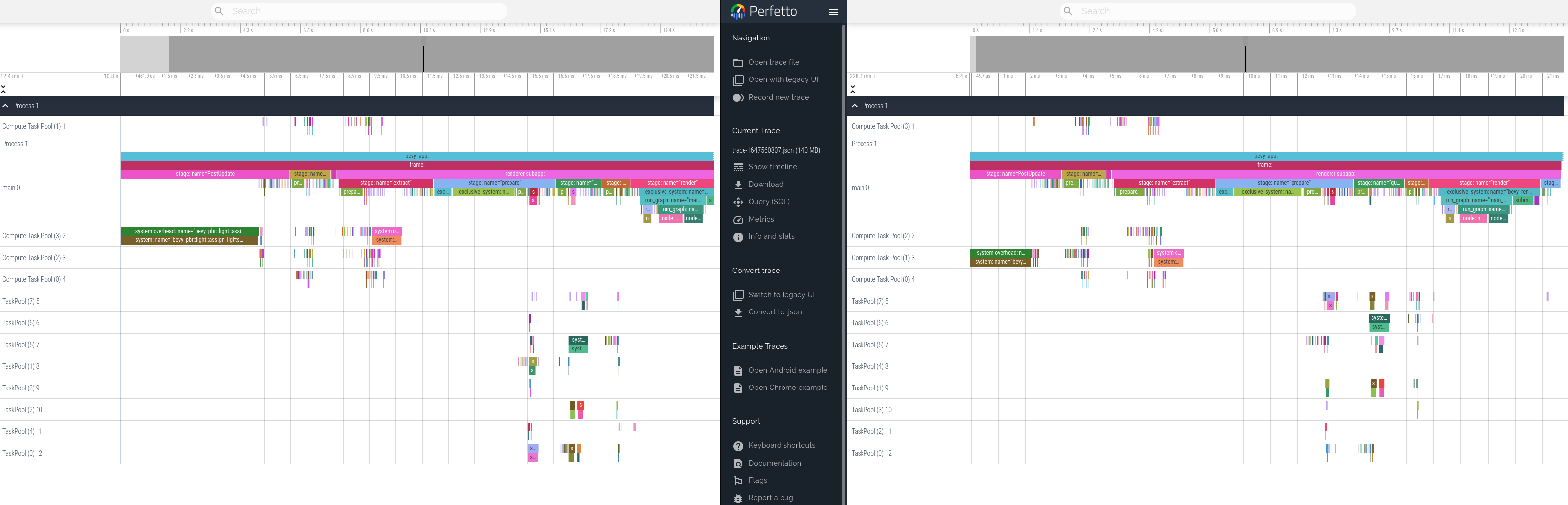

- The time update is currently done in the wrong part of the schedule. For a single frame the current order of things is update input, update time (First stage), other stages, render stage (frame presentation). So when we update the time it includes the input processing of the current frame and the frame presentation of the previous frame. This is a problem when vsync is on. When input processing takes a longer amount of time for a frame, the vsync wait time gets shorter. So when these are not paired correctly we can potentially have a long input processing time added to the normal vsync wait time in the previous frame. This leads to inaccurate frame time reporting and more variance of the time than actually exists. For more details of why this is an issue see the linked issue below.

- Helps with https://github.com/bevyengine/bevy/issues/4669

- Supersedes https://github.com/bevyengine/bevy/pull/4728 and https://github.com/bevyengine/bevy/pull/4735. This PR should be less controversial than those because it doesn't add to the API surface.

## Solution

- The most accurate frame time would come from hardware. We currently don't have access to that for multiple reasons, so the next best thing we can do is measure the frame time as close to frame presentation as possible. This PR takes an `Instant::now()` timestamp immediately after frame presentation in the render system and then sends that time to the app world through a channel.

- Implements the suggestion from @aevyrie in https://github.com/bevyengine/bevy/pull/4728#discussion_r872010606
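
The channel hand-off described in this section can be sketched with only the standard library. This is a minimal illustration under stated assumptions, not the code in this PR: the spawned thread stands in for the render system, and `frame_deltas`, `simulate` and the sleep duration are hypothetical names and values chosen for the example.

```rust
use std::sync::mpsc;
use std::thread;
use std::time::{Duration, Instant};

/// Converts a sequence of "frame presented" timestamps into frame deltas.
/// The first timestamp only establishes a baseline, so `n` timestamps
/// yield `n - 1` deltas.
fn frame_deltas(presented: impl IntoIterator<Item = Instant>) -> Vec<Duration> {
    let mut previous: Option<Instant> = None;
    let mut deltas = Vec::new();
    for now in presented {
        if let Some(prev) = previous {
            deltas.push(now.duration_since(prev));
        }
        previous = Some(now);
    }
    deltas
}

/// Simulates `frames` presentations on a stand-in render thread and returns
/// the deltas computed on the receiving ("app world") side.
fn simulate(frames: usize) -> Vec<Duration> {
    let (sender, receiver) = mpsc::channel::<Instant>();
    let render = thread::spawn(move || {
        for _ in 0..frames {
            thread::sleep(Duration::from_millis(1)); // pretend to render and present
            // Take the timestamp immediately after "presentation".
            sender.send(Instant::now()).expect("receiver was dropped");
        }
        // `sender` is dropped here, which ends the receiver's iterator.
    });
    let deltas = frame_deltas(receiver.iter());
    render.join().expect("render thread panicked");
    deltas
}
```

Calling `simulate(4)` yields three deltas, because the first timestamp has nothing to be compared against.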

## Statistics

---

## Changelog

- Make frame time reporting more accurate.

## Migration Guide

`time.delta()` now reports zero for 2 frames on startup instead of 1 frame.

Remove unnecessary calls to `iter()`/`iter_mut()`.

Mainly updates the use of queries in our code, docs, and examples.

```rust
// From
for _ in list.iter() {}
for _ in list.iter_mut() {}

// To
for _ in &list {}
for _ in &mut list {}
```

We already enable the pedantic lint [clippy::explicit_iter_loop](https://rust-lang.github.io/rust-clippy/stable/) inside of Bevy. However, this only warns for a few known types from the standard library.
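
The shorter loop form works for any type whose references implement `IntoIterator`. As a rough sketch of that pattern outside Bevy (the `Scores` wrapper and `double_and_sum` are hypothetical and exist only for this example):

```rust
/// Hypothetical wrapper type, used only to illustrate the pattern.
struct Scores(Vec<u32>);

impl Scores {
    fn iter(&self) -> std::slice::Iter<'_, u32> {
        self.0.iter()
    }

    fn iter_mut(&mut self) -> std::slice::IterMut<'_, u32> {
        self.0.iter_mut()
    }
}

// Implementing `IntoIterator` for references is what enables `for x in &scores`.
impl<'a> IntoIterator for &'a Scores {
    type Item = &'a u32;
    type IntoIter = std::slice::Iter<'a, u32>;

    fn into_iter(self) -> Self::IntoIter {
        self.iter()
    }
}

impl<'a> IntoIterator for &'a mut Scores {
    type Item = &'a mut u32;
    type IntoIter = std::slice::IterMut<'a, u32>;

    fn into_iter(self) -> Self::IntoIter {
        self.iter_mut()
    }
}

/// Doubles every score in place, then returns the sum.
fn double_and_sum(scores: &mut Scores) -> u32 {
    for score in &mut *scores {
        *score *= 2;
    }
    let mut total = 0;
    for score in &*scores {
        total += *score;
    }
    total
}
```

Since `Scores` is not a standard library type, a lint limited to known standard types would not flag explicit `.iter()` calls on it, which is why the replacements here were made by hand.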

## Note for reviewers

As you can see the additions and deletions are exactly equal.

Maybe give it a quick skim to check I didn't sneak in a crypto miner, but you don't have to torture yourself by reading every line.

I already experienced enough pain making this PR :)

Co-authored-by: devil-ira <justthecooldude@gmail.com>

# Objective

- Validate the format of the values with the expected attribute format.

- Currently, if you pass the wrong format, it will crash somewhere unrelated with a very cryptic error message, so it's really hard to debug for beginners.

## Solution

- Compare the format and panic when an unexpected format is passed.
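
A minimal sketch of this kind of check, using only the standard library. All types and names here (`Format`, `Attribute`, `Values`, `check_format`, `insert_attribute`) are hypothetical stand-ins and not Bevy's actual mesh API:

```rust
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum Format {
    Float32x2,
    Float32x3,
}

/// Hypothetical attribute descriptor: a name plus the format it expects.
struct Attribute {
    name: &'static str,
    format: Format,
}

/// Hypothetical value container; each variant knows its own format.
enum Values {
    Float32x2(Vec<[f32; 2]>),
    Float32x3(Vec<[f32; 3]>),
}

impl Values {
    fn format(&self) -> Format {
        match self {
            Values::Float32x2(_) => Format::Float32x2,
            Values::Float32x3(_) => Format::Float32x3,
        }
    }
}

/// Returns a readable message when the formats disagree.
fn check_format(attribute: &Attribute, values: &Values) -> Result<(), String> {
    let actual = values.format();
    if actual == attribute.format {
        Ok(())
    } else {
        Err(format!(
            "attribute `{}` expects {:?}, but the values are {:?}",
            attribute.name, attribute.format, actual
        ))
    }
}

/// Fails at the insertion site instead of somewhere unrelated later on.
fn insert_attribute(attribute: &Attribute, values: Values) -> Values {
    if let Err(message) = check_format(attribute, &values) {
        eprintln!("{message}"); // human-friendly line first
        panic!("invalid vertex attribute format");
    }
    values // a real mesh would store the values here
}
```

Keeping the comparison in a function that returns `Result` makes the check testable on its own; the panic is confined to the insertion wrapper.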

## Note

- I used a separate `error!()` for a human friendly message because the panic message is very noisy and hard to parse for beginners. I don't mind changing this to only a panic if people prefer that.

- This could potentially be something that runs only in debug mode, but I don't think inserting attributes is done often enough for this to be an issue.

Co-authored-by: Charles <IceSentry@users.noreply.github.com>

Small optimization. `.collect()` from arrays generates very nice code without reallocations: https://rust.godbolt.org/z/6E6c595bq

Co-authored-by: Kornel <kornel@geekhood.net>

# Objective

Currently some TextureFormats are not supported by the Image type.

The `TextureFormat::R16Unorm` format is useful for storing heightmaps.

This small change would unblock releasing my terrain plugin on bevy 0.8.

## Solution

Added `TextureFormat::R16Unorm` support to Image.

This is an alternative (short term solution) to the large texture format issue https://github.com/bevyengine/bevy/pull/4124.

# Objective

- Extracting resources currently always uses commands, which requires *at least* one additional move of the extracted value, as well as dynamic dispatch.

- Addresses https://github.com/bevyengine/bevy/pull/4402#discussion_r911634931

## Solution

- Write the resource into a `ResMut<R>` directly.

- Fall back to commands if the resource hasn't been added yet.

# Objective

- Currently, the `Extract` `RenderStage` is executed on the main world, with the render world available as a resource.

- However, when needing access to resources in the render world (e.g. to mutate them), the only way to do so was to get exclusive access to the whole `RenderWorld` resource.

- This meant that effectively only one extract which wrote to resources could run at a time.

- Previously, writing to the world during `Extract` was not treated as a non-happy path, even though we want to discourage it.

## Solution

- Move the extract stage to run on the render world.

- Add the main world as a `MainWorld` resource.

- Add an `Extract` `SystemParam` as a convenience to access a (read only) `SystemParam` in the main world during `Extract`.

## Future work

It should be possible to avoid needing to use `get_or_spawn` for the render commands, since now the `Commands`' `Entities` matches up with the world being executed on.

We need to determine how this interacts with https://github.com/bevyengine/bevy/pull/3519

It's theoretically possible to remove the need for the `value` method on `Extract`. However, that requires slightly changing the `SystemParam` interface, which would make it more complicated. That would probably mess up the `SystemState` api too.

## Todo

I still need to add doc comments to `Extract`.

---

## Changelog

### Changed

- The `Extract` `RenderStage` now runs on the render world (instead of the main world as before).

You must use the `Extract` `SystemParam` to access the main world during the extract phase.

Resources on the render world can now be accessed using `ResMut` during extract.

### Removed

- `Commands::spawn_and_forget`. Use `Commands::get_or_spawn(e).insert_bundle(bundle)` instead

## Migration Guide

The `Extract` `RenderStage` now runs on the render world (instead of the main world as before).

You must use the `Extract` `SystemParam` to access the main world during the extract phase. `Extract` takes a single type parameter, which is any system parameter (such as `Res`, `Query` etc.). It will extract this from the main world, and returns the result of this extraction when `value` is called on it.

For example, if previously your extract system looked like:

```rust
fn extract_clouds(mut commands: Commands, clouds: Query<Entity, With<Cloud>>) {
    for cloud in clouds.iter() {
        commands.get_or_spawn(cloud).insert(Cloud);
    }
}
```

the new version would be:

```rust
fn extract_clouds(mut commands: Commands, mut clouds: Extract<Query<Entity, With<Cloud>>>) {
    for cloud in clouds.value().iter() {
        commands.get_or_spawn(cloud).insert(Cloud);
    }
}
```

The diff is:

```diff
--- a/src/clouds.rs
+++ b/src/clouds.rs
@@ -1,5 +1,5 @@
-fn extract_clouds(mut commands: Commands, clouds: Query<Entity, With<Cloud>>) {
-    for cloud in clouds.iter() {
+fn extract_clouds(mut commands: Commands, mut clouds: Extract<Query<Entity, With<Cloud>>>) {
+    for cloud in clouds.value().iter() {
         commands.get_or_spawn(cloud).insert(Cloud);
     }
 }
```

You can now also access resources from the render world using the normal system parameters during `Extract`:

```rust
fn extract_assets(mut render_assets: ResMut<MyAssets>, source_assets: Extract<Res<MyAssets>>) {
    *render_assets = source_assets.clone();
}
```

Please note that all existing extract systems need to be updated to match this new style; even if they currently compile they will not run as expected. A warning will be emitted on a best-effort basis if this is not met.

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

Support removing attributes from meshes. For an example use case, meshes created using the `bevy::prelude::shape` types or loaded from external files may have attributes that are not needed for the materials they will be rendered with.

This was extracted from PR #5222.

## Solution

Implement Mesh::remove_attribute().

# Objective

add spotlight support

## Solution / Changelog

- add spotlight angles (inner, outer) to ``PointLight`` struct. emitted light is linearly attenuated from 100% to 0% as angle tends from inner to outer. Direction is taken from the existing transform rotation.

- add spotlight direction (vec3) and angles (f32,f32) to ``GpuPointLight`` struct (60 bytes -> 80 bytes) in ``pbr/render/lights.rs`` and ``mesh_view_bind_group.wgsl``

- reduce no-buffer-support max point light count to 204 due to above

- use spotlight data to attenuate light in ``pbr.wgsl``

- do additional cluster culling on spotlights to minimise cost in ``assign_lights_to_clusters``

- changed one of the lights in the lighting demo to a spotlight

- also added a ``spotlight`` demo - probably not justified but so reviewers can see it more easily
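
As a rough illustration of the linear falloff described in the first bullet, here is a literal reading of that sentence in plain Rust. The function name `spot_attenuation` and the choice to work on angles in radians are assumptions made for this sketch; the shader in this PR may compute the factor differently.

```rust
/// Full intensity inside the inner angle, zero outside the outer angle,
/// and a linear ramp in between. Angles are in radians, measured from the
/// spotlight direction.
fn spot_attenuation(angle: f32, inner: f32, outer: f32) -> f32 {
    if outer <= inner {
        // Degenerate cone: treat the outer angle as a hard edge.
        return if angle <= outer { 1.0 } else { 0.0 };
    }
    ((outer - angle) / (outer - inner)).clamp(0.0, 1.0)
}
```

With `inner = 0.25` and `outer = 0.75`, an angle of `0.5` gives a factor of one half.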

## notes

increasing the size of the GpuPointLight struct on my machine reduces the FPS of ``many_lights -- sphere`` from ~150fps to 140fps.

i thought this was a reasonable tradeoff, and felt better than handling spotlights separately which is possible but would mean introducing a new bind group, refactoring light-assignment code and adding new spotlight-specific code in pbr.wgsl. the FPS impact for smaller numbers of lights should be very small.

the cluster culling strategy reintroduces the cluster aabb code which was recently removed... sorry. the aabb is used to get a cluster bounding sphere, which can then be tested fairly efficiently using the strategy described at the end of https://bartwronski.com/2017/04/13/cull-that-cone/. this works well with roughly cubic clusters (where the cluster z size is close to the same as x/y size), less well for other cases like single Z slice / tiled forward rendering. In the worst case we will end up just keeping the culling of the equivalent point light.
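
for reference, a cone-versus-bounding-sphere test in the spirit of the linked article can be sketched as below. this is a transcription into plain Rust under my own assumptions (array-based vectors, a unit-length `forward`, the name `cone_may_touch_sphere`), not the code used in ``assign_lights_to_clusters``.

```rust
type Vec3 = [f32; 3];

fn sub(a: Vec3, b: Vec3) -> Vec3 {
    [a[0] - b[0], a[1] - b[1], a[2] - b[2]]
}

fn dot(a: Vec3, b: Vec3) -> f32 {
    a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
}

/// Returns `false` only when the sphere is certainly outside the cone.
/// `forward` must be normalized; `half_angle` is in radians and `range`
/// is the length of the cone along `forward`.
fn cone_may_touch_sphere(
    origin: Vec3,
    forward: Vec3,
    range: f32,
    half_angle: f32,
    center: Vec3,
    radius: f32,
) -> bool {
    let v = sub(center, origin);
    let v_len_sq = dot(v, v);
    let along = dot(v, forward); // distance along the cone axis
    let lateral = (v_len_sq - along * along).max(0.0).sqrt(); // distance from the axis
    // Signed distance from the sphere center to the cone's side surface.
    let to_side = half_angle.cos() * lateral - along * half_angle.sin();

    let angle_cull = to_side > radius;
    let front_cull = along > radius + range;
    let back_cull = along < -radius;
    !(angle_cull || front_cull || back_cull)
}
```

the test is conservative: it can keep a sphere that does not actually intersect the cone, but it should not reject one that does.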

Co-authored-by: François <mockersf@gmail.com>

# Objective

Reduce the boilerplate code needed to make draw order sorting work correctly when queuing items through new common functionality. Also fix several instances in the bevy code-base (mostly examples) where this boilerplate appears to be incorrect.

## Solution

- Moved the logic for handling back-to-front vs front-to-back draw ordering into the PhaseItems by inverting the sort key ordering of Opaque3d and AlphaMask3d. This means that all the standard 3d rendering phases measure distance in the same way. Clients of these structs no longer need to know to negate the distance.

- Added a new utility struct, ViewRangefinder3d, which encapsulates the maths needed to calculate a "distance" from an ExtractedView and a mesh's transform matrix.

- Converted all the occurrences of the distance calculations in Bevy and its examples to use ViewRangefinder3d. Several of these occurrences appear to be buggy because they don't invert the view matrix or don't negate the distance where appropriate. This leads me to the view that Bevy should expose a facility to correctly perform this calculation.
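
The idea behind the points above can be sketched without any Bevy types. Everything in this block is an illustrative assumption: `Rangefinder`, the array-based `Mat4`, and the convention that the camera looks down the negative z axis (so farther objects have a more negative view-space z). It is not the actual `ViewRangefinder3d` implementation.

```rust
/// Column-major 4x4 matrix: `m[column][row]`.
type Mat4 = [[f32; 4]; 4];

/// Keeps only the matrix row needed to compute a view-space z value.
struct Rangefinder {
    inverse_view_row_2: [f32; 4],
}

impl Rangefinder {
    /// `inverse_view` is assumed to map world space into view space.
    fn from_inverse_view(inverse_view: &Mat4) -> Self {
        Rangefinder {
            inverse_view_row_2: [
                inverse_view[0][2],
                inverse_view[1][2],
                inverse_view[2][2],
                inverse_view[3][2],
            ],
        }
    }

    /// View-space z of the mesh origin (column 3 of its transform).
    fn distance(&self, transform: &Mat4) -> f32 {
        let translation = transform[3];
        (0..4).map(|i| self.inverse_view_row_2[i] * translation[i]).sum()
    }
}

/// Ascending distance: farthest first under the assumed convention,
/// as wanted for transparent items.
fn back_to_front(distances: &[f32]) -> Vec<usize> {
    let mut order: Vec<usize> = (0..distances.len()).collect();
    order.sort_by(|&a, &b| distances[a].total_cmp(&distances[b]));
    order
}

/// Same key with the comparison reversed: nearest first, as wanted for
/// opaque items. Callers never negate the distance themselves.
fn front_to_back(distances: &[f32]) -> Vec<usize> {
    let mut order: Vec<usize> = (0..distances.len()).collect();
    order.sort_by(|&a, &b| distances[b].total_cmp(&distances[a]));
    order
}

/// Helper for the example: a pure translation matrix.
fn translation(x: f32, y: f32, z: f32) -> Mat4 {
    [
        [1.0, 0.0, 0.0, 0.0],
        [0.0, 1.0, 0.0, 0.0],
        [0.0, 0.0, 1.0, 0.0],
        [x, y, z, 1.0],
    ]
}
```

The point of the design is that both orderings consume the same distance value; only the comparison inside the phase differs.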

## Migration Guide

Code which creates Opaque3d, AlphaMask3d, or Transparent3d phase items _should_ use ViewRangefinder3d to calculate the distance value.

Code which manually calculated the distance for Opaque3d or AlphaMask3d phase items and correctly negated the z value will no longer depth sort correctly. However, incorrect depth sorting for these types will not impact the rendered output as sorting is only a performance optimisation when drawing with depth-testing enabled. Code which manually calculated the distance for Transparent3d phase items will continue to work as before.

# Objective

We don't have reflection for resources.

## Solution

Introduce reflection for resources.

Continues #3580 (by @Davier), related to #3576.

---

## Changelog

### Added

* Reflection on a resource type (by adding `ReflectResource`):

```rust
#[derive(Reflect)]
#[reflect(Resource)]
struct MyResource;
```

### Changed

* Rename `ReflectComponent::add_component` into `ReflectComponent::insert_component` for consistency.

## Migration Guide

* Rename `ReflectComponent::add_component` into `ReflectComponent::insert_component`.

# Objective

Transform screen-space coordinates into world space in shaders. (My use case is for generating rays for ray tracing with the same perspective as the 3d camera).

## Solution

Add `inverse_projection` and `inverse_view_proj` fields to shader view uniform
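
For reference, the order of the factors in an inverse view-projection matrix follows from the general rule for inverting a product. A short derivation, assuming (this is not stated above) that the forward matrix is built as the projection matrix $P$ times a world-to-view matrix $W$:

$$
(P\,W)^{-1} = W^{-1}\,P^{-1}, \qquad p_{\text{world}} \propto W^{-1}\,P^{-1}\,p_{\text{ndc}}
$$

Under that assumption the inverse projection is applied first and the inverse of the world-to-view matrix second. The result is a homogeneous coordinate, so it still needs the usual division by its $w$ component.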

---

## Changelog

### Added

`inverse_projection` and `inverse_view_proj` fields to shader view uniform

## Note

It'd probably be good to double-check that I did the matrix multiplication in the right order for `inverse_view_proj`. Thanks!

# Objective

- Enable `wgpu` profiling spans

## Solution

- `wgpu` uses the `profiling` crate to add profiling span instrumentation to their code

- `profiling` offers multiple 'backends' for profiling, including `tracing`

- When the `bevy` `trace` feature is used, add the `profiling` crate with its `profile-with-tracing` feature to enable appropriate profiling spans in `wgpu` using `tracing` which fits nicely into our infrastructure

- Bump our default `tracing` subscriber filter to `wgpu=info` from `wgpu=error` so that the profiling spans are not filtered out as they are created at the `info` level.

---

## Changelog

- Added: `tracing` profiling support for `wgpu` when using bevy's `trace` feature

- Changed: The default `tracing` filter statement for `wgpu` has been changed from the `error` level to the `info` level to not filter out the wgpu profiling spans

Removed `const_vec2`/`const_vec3` and replaced them with the equivalent `.from_array`.

# Objective

Fixes #5112

## Solution

- `encase` needs to update to `glam` as well. See teoxoy/encase#4 on progress on that.

- `hexasphere` also needs to be updated, see OptimisticPeach/hexasphere#12.

# Objective

- Nightly clippy lints should be fixed before they get stable and break CI

## Solution

- fix new clippy lints

- ignore `significant_drop_in_scrutinee` since it isn't relevant in our loop https://github.com/rust-lang/rust-clippy/issues/8987

```rust
for line in io::stdin().lines() {
    ...
}
```

Co-authored-by: Jakob Hellermann <hellermann@sipgate.de>

# Objective

Fixes #5153

## Solution

Search for all enums and manually check if they have default impls that can use this new derive.

By my reckoning:

| enum | num |
|-|-|
| total | 159 |
| has default impl | 29 |
| default is unit variant | 23 |
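
For illustration, and assuming "this new derive" refers to `#[derive(Default)]` on enums with a `#[default]` unit variant (available since Rust 1.62), the conversion looks roughly like this. The enum names are hypothetical and not taken from the Bevy code base:

```rust
// Before: a hand-written impl that just picks a unit variant.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum FilterManual {
    Nearest,
    Linear,
}

impl Default for FilterManual {
    fn default() -> Self {
        FilterManual::Nearest
    }
}

// After: the derive picks the variant marked with `#[default]`.
#[derive(Debug, Default, Clone, Copy, PartialEq, Eq)]
enum Filter {
    #[default]
    Nearest,
    Linear,
}
```

Only enums whose default is a unit variant can use the attribute, which is why the last row of the table is smaller than the row above it.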

# Objective

This PR reworks Bevy's Material system, making the user experience of defining Materials _much_ nicer. Bevy's previous material system leaves a lot to be desired:

* Materials require manually implementing the `RenderAsset` trait, which involves manually generating the bind group, handling gpu buffer data transfer, looking up image textures, etc. Even the simplest single-texture material involves writing ~80 unnecessary lines of code. This was never the long term plan.

* There are two material traits, which is confusing, hard to document, and often redundant: `Material` and `SpecializedMaterial`. `Material` implicitly implements `SpecializedMaterial`, and `SpecializedMaterial` is used in most high level apis to support both use cases. Most users shouldn't need to think about specialization at all (I consider it a "power-user tool"), so the fact that `SpecializedMaterial` is front-and-center in our apis is a miss.

* Implementing either material trait involves a lot of "type soup". The "prepared asset" parameter is particularly heinous: `&<Self as RenderAsset>::PreparedAsset`. Defining vertex and fragment shaders is also more verbose than it needs to be.

## Solution

Say hello to the new `Material` system:

```rust
#[derive(AsBindGroup, TypeUuid, Debug, Clone)]
#[uuid = "f690fdae-d598-45ab-8225-97e2a3f056e0"]
pub struct CoolMaterial {
    #[uniform(0)]
    color: Color,
    #[texture(1)]
    #[sampler(2)]
    color_texture: Handle<Image>,
}

impl Material for CoolMaterial {
    fn fragment_shader() -> ShaderRef {
        "cool_material.wgsl".into()
    }
}
```

That's it! This same material would have required [~80 lines of complicated "type heavy" code](https://github.com/bevyengine/bevy/blob/v0.7.0/examples/shader/shader_material.rs) in the old Material system. Now it is just 14 lines of simple, readable code.

This is thanks to a new consolidated `Material` trait and the new `AsBindGroup` trait / derive.

### The new `Material` trait

The old "split" `Material` and `SpecializedMaterial` traits have been removed in favor of a new consolidated `Material` trait. All of the functions on the trait are optional.

The difficulty of implementing `Material` has been reduced by simplifying dataflow and removing type complexity:

```rust
// Old
impl Material for CustomMaterial {
    fn fragment_shader(asset_server: &AssetServer) -> Option<Handle<Shader>> {
        Some(asset_server.load("custom_material.wgsl"))
    }

    fn alpha_mode(render_asset: &<Self as RenderAsset>::PreparedAsset) -> AlphaMode {
        render_asset.alpha_mode
    }
}

// New
impl Material for CustomMaterial {
    fn fragment_shader() -> ShaderRef {
        "custom_material.wgsl".into()
    }

    fn alpha_mode(&self) -> AlphaMode {
        self.alpha_mode
    }
}
```

Specialization is still supported, but it is hidden by default under the `specialize()` function (more on this later).

### The `AsBindGroup` trait / derive

The `Material` trait now requires the `AsBindGroup` derive. This can be implemented manually relatively easily, but deriving it will almost always be preferable.

Field attributes like `uniform` and `texture` are used to define which fields should be bindings, what their binding type is, and what index they should be bound at:

```rust
#[derive(AsBindGroup)]
struct CoolMaterial {
    #[uniform(0)]
    color: Color,
    #[texture(1)]
    #[sampler(2)]
    color_texture: Handle<Image>,
}
```

In WGSL shaders, the binding looks like this:

```wgsl
struct CoolMaterial {
    color: vec4<f32>;
};

[[group(1), binding(0)]]
var<uniform> material: CoolMaterial;

[[group(1), binding(1)]]
var color_texture: texture_2d<f32>;

[[group(1), binding(2)]]
var color_sampler: sampler;
```

Note that the "group" index is determined by the usage context. It is not defined in `AsBindGroup`. Bevy material bind groups are bound to group 1.

The following field-level attributes are supported:

* `uniform(BINDING_INDEX)`
    * The field will be converted to a shader-compatible type using the `ShaderType` trait, written to a `Buffer`, and bound as a uniform. `ShaderType` can also be derived for custom structs.
* `texture(BINDING_INDEX)`
    * This field's `Handle<Image>` will be used to look up the matching `Texture` gpu resource, which will be bound as a texture in shaders. The field will be assumed to implement `Into<Option<Handle<Image>>>`. In practice, most fields should be a `Handle<Image>` or `Option<Handle<Image>>`. If the value of an `Option<Handle<Image>>` is `None`, the new `FallbackImage` resource will be used instead. This attribute can be used in conjunction with a `sampler` binding attribute (with a different binding index).
* `sampler(BINDING_INDEX)`
    * Behaves exactly like the `texture` attribute, but sets the Image's sampler binding instead of the texture.

Note that fields without field-level binding attributes will be ignored.

```rust
#[derive(AsBindGroup)]
struct CoolMaterial {
    #[uniform(0)]
    color: Color,
    this_field_is_ignored: String,
}
```

As mentioned above, `Option<Handle<Image>>` is also supported:

```rust
#[derive(AsBindGroup)]
struct CoolMaterial {
    #[uniform(0)]
    color: Color,
    #[texture(1)]
    #[sampler(2)]
    color_texture: Option<Handle<Image>>,
}
```

This is useful if you want a texture to be optional. When the value is `None`, the `FallbackImage` will be used for the binding instead, which defaults to "pure white".

Field uniforms with the same binding index will be combined into a single binding:

```rust
#[derive(AsBindGroup)]
struct CoolMaterial {
    #[uniform(0)]
    color: Color,
    #[uniform(0)]
    roughness: f32,
}
```

In WGSL shaders, the binding would look like this:

```wgsl
struct CoolMaterial {
    color: vec4<f32>;
    roughness: f32;
};

[[group(1), binding(0)]]
var<uniform> material: CoolMaterial;
```

Some less common scenarios will require "struct-level" attributes. These are the currently supported struct-level attributes:

* `uniform(BINDING_INDEX, ConvertedShaderType)`
    * Similar to the field-level `uniform` attribute, but instead the entire `AsBindGroup` value is converted to `ConvertedShaderType`, which must implement `ShaderType`. This is useful if more complicated conversion logic is required.
* `bind_group_data(DataType)`
    * The `AsBindGroup` type will be converted to some `DataType` using `Into<DataType>` and stored as `AsBindGroup::Data` as part of the `AsBindGroup::as_bind_group` call. This is useful if data needs to be stored alongside the generated bind group, such as a unique identifier for a material's bind group. The most common use case for this attribute is "shader pipeline specialization".

The previous `CoolMaterial` example illustrating "combining multiple field-level uniform attributes with the same binding index" can also be equivalently represented with a single struct-level uniform attribute:

```rust
#[derive(AsBindGroup)]
#[uniform(0, CoolMaterialUniform)]
struct CoolMaterial {
    color: Color,
    roughness: f32,
}

#[derive(ShaderType)]
struct CoolMaterialUniform {
    color: Color,
    roughness: f32,
}

impl From<&CoolMaterial> for CoolMaterialUniform {
    fn from(material: &CoolMaterial) -> CoolMaterialUniform {
        CoolMaterialUniform {
            color: material.color,
            roughness: material.roughness,
        }
    }
}
```

### Material Specialization

Material shader specialization is now _much_ simpler:

```rust
#[derive(AsBindGroup, TypeUuid, Debug, Clone)]
#[uuid = "f690fdae-d598-45ab-8225-97e2a3f056e0"]
#[bind_group_data(CoolMaterialKey)]
struct CoolMaterial {
    #[uniform(0)]
    color: Color,
    is_red: bool,
}

#[derive(Copy, Clone, Hash, Eq, PartialEq)]
struct CoolMaterialKey {
    is_red: bool,
}

impl From<&CoolMaterial> for CoolMaterialKey {
    fn from(material: &CoolMaterial) -> CoolMaterialKey {
        CoolMaterialKey {
            is_red: material.is_red,
        }
    }
}

impl Material for CoolMaterial {
    fn fragment_shader() -> ShaderRef {
        "cool_material.wgsl".into()
    }

    fn specialize(
        pipeline: &MaterialPipeline<Self>,
        descriptor: &mut RenderPipelineDescriptor,
        layout: &MeshVertexBufferLayout,
        key: MaterialPipelineKey<Self>,
    ) -> Result<(), SpecializedMeshPipelineError> {
        if key.bind_group_data.is_red {
            let fragment = descriptor.fragment.as_mut().unwrap();
            fragment.shader_defs.push("IS_RED".to_string());
        }
        Ok(())
    }
}
```

Setting `bind_group_data` is not required for specialization (it defaults to `()`). Scenarios like "custom vertex attributes" also benefit from this system:

```rust
impl Material for CustomMaterial {
    fn vertex_shader() -> ShaderRef {
        "custom_material.wgsl".into()
    }

    fn fragment_shader() -> ShaderRef {
        "custom_material.wgsl".into()
    }

    fn specialize(
        pipeline: &MaterialPipeline<Self>,
        descriptor: &mut RenderPipelineDescriptor,
        layout: &MeshVertexBufferLayout,
        key: MaterialPipelineKey<Self>,
    ) -> Result<(), SpecializedMeshPipelineError> {
        let vertex_layout = layout.get_layout(&[
            Mesh::ATTRIBUTE_POSITION.at_shader_location(0),
            ATTRIBUTE_BLEND_COLOR.at_shader_location(1),
        ])?;
        descriptor.vertex.buffers = vec![vertex_layout];
        Ok(())
    }
}
```

### Ported `StandardMaterial` to the new `Material` system

Bevy's built-in PBR material uses the new Material system (including the AsBindGroup derive):

```rust
#[derive(AsBindGroup, Debug, Clone, TypeUuid)]
#[uuid = "7494888b-c082-457b-aacf-517228cc0c22"]
#[bind_group_data(StandardMaterialKey)]
#[uniform(0, StandardMaterialUniform)]
pub struct StandardMaterial {
    pub base_color: Color,
    #[texture(1)]
    #[sampler(2)]
    pub base_color_texture: Option<Handle<Image>>,
    /* other fields omitted for brevity */
```

### Ported Bevy examples to the new `Material` system

The overall complexity of Bevy's "custom shader examples" has gone down significantly. Take a look at the diffs if you want a dopamine spike.

Please note that while this PR has a net increase in "lines of code", most of those extra lines come from added documentation. There is a significant reduction in the overall complexity of the code (even accounting for the new derive logic).

---

## Changelog

### Added

* `AsBindGroup` trait and derive, which make it much easier to transfer data to the gpu and generate bind groups for a given type.

### Changed

* The old `Material` and `SpecializedMaterial` traits have been replaced by a consolidated (much simpler) `Material` trait. Materials no longer implement `RenderAsset`.

* `StandardMaterial` was ported to the new material system. There are no user-facing api changes to the `StandardMaterial` struct api, but it now implements `AsBindGroup` and `Material` instead of `RenderAsset` and `SpecializedMaterial`.

## Migration Guide

The Material system has been reworked to be much simpler. We've removed a lot of boilerplate with the new `AsBindGroup` derive and the `Material` trait is simpler as well!

### Bevy 0.7 (old)

```rust
#[derive(Debug, Clone, TypeUuid)]
#[uuid = "f690fdae-d598-45ab-8225-97e2a3f056e0"]
pub struct CustomMaterial {
    color: Color,
    color_texture: Handle<Image>,
}

#[derive(Clone)]
pub struct GpuCustomMaterial {
    _buffer: Buffer,
    bind_group: BindGroup,
}

impl RenderAsset for CustomMaterial {
    type ExtractedAsset = CustomMaterial;
    type PreparedAsset = GpuCustomMaterial;
    // `RenderAssets<Image>` is needed for the `gpu_images` lookup below.
    type Param = (
        SRes<RenderDevice>,
        SRes<RenderAssets<Image>>,
        SRes<MaterialPipeline<Self>>,
    );

    fn extract_asset(&self) -> Self::ExtractedAsset {
        self.clone()
    }

    fn prepare_asset(
        extracted_asset: Self::ExtractedAsset,
        (render_device, gpu_images, material_pipeline): &mut SystemParamItem<Self::Param>,
    ) -> Result<Self::PreparedAsset, PrepareAssetError<Self::ExtractedAsset>> {
        let color = Vec4::from_slice(&extracted_asset.color.as_linear_rgba_f32());

        let byte_buffer = [0u8; Vec4::SIZE.get() as usize];
        let mut buffer = encase::UniformBuffer::new(byte_buffer);
        buffer.write(&color).unwrap();

        let buffer = render_device.create_buffer_with_data(&BufferInitDescriptor {
            contents: buffer.as_ref(),
            label: None,
            usage: BufferUsages::UNIFORM | BufferUsages::COPY_DST,
        });

        let (texture_view, texture_sampler) = if let Some(result) = material_pipeline
            .mesh_pipeline
            .get_image_texture(gpu_images, &Some(extracted_asset.color_texture.clone()))
        {
            result
        } else {
            return Err(PrepareAssetError::RetryNextUpdate(extracted_asset));
        };

        // Binding indices must match the layout entries declared below (0, 1, 2).
        let bind_group = render_device.create_bind_group(&BindGroupDescriptor {
            entries: &[
                BindGroupEntry {
                    binding: 0,
                    resource: buffer.as_entire_binding(),
                },
                BindGroupEntry {
                    binding: 1,
                    resource: BindingResource::TextureView(texture_view),
                },
                BindGroupEntry {
                    binding: 2,
                    resource: BindingResource::Sampler(texture_sampler),
                },
            ],
            label: None,
            layout: &material_pipeline.material_layout,
        });

        Ok(GpuCustomMaterial {
            _buffer: buffer,
            bind_group,
        })
    }
}

impl Material for CustomMaterial {
    fn fragment_shader(asset_server: &AssetServer) -> Option<Handle<Shader>> {
        Some(asset_server.load("custom_material.wgsl"))
    }

    fn bind_group(render_asset: &<Self as RenderAsset>::PreparedAsset) -> &BindGroup {
        &render_asset.bind_group
    }

    fn bind_group_layout(render_device: &RenderDevice) -> BindGroupLayout {
        render_device.create_bind_group_layout(&BindGroupLayoutDescriptor {
            entries: &[
                BindGroupLayoutEntry {
                    binding: 0,
                    visibility: ShaderStages::FRAGMENT,
                    ty: BindingType::Buffer {
                        ty: BufferBindingType::Uniform,
                        has_dynamic_offset: false,
                        min_binding_size: Some(Vec4::min_size()),
                    },
                    count: None,
                },
                BindGroupLayoutEntry {
                    binding: 1,
                    visibility: ShaderStages::FRAGMENT,
                    ty: BindingType::Texture {
                        multisampled: false,
                        sample_type: TextureSampleType::Float { filterable: true },
                        view_dimension: TextureViewDimension::D2Array,
                    },
                    count: None,
                },
                BindGroupLayoutEntry {
                    binding: 2,
                    visibility: ShaderStages::FRAGMENT,
                    ty: BindingType::Sampler(SamplerBindingType::Filtering),
                    count: None,
                },
            ],
            label: None,
        })
    }
}
```

### Bevy 0.8 (new)

```rust
impl Material for CustomMaterial {
    fn fragment_shader() -> ShaderRef {
        "custom_material.wgsl".into()
    }
}

#[derive(AsBindGroup, TypeUuid, Debug, Clone)]
#[uuid = "f690fdae-d598-45ab-8225-97e2a3f056e0"]
pub struct CustomMaterial {
    #[uniform(0)]
    color: Color,
    #[texture(1)]
    #[sampler(2)]
    color_texture: Handle<Image>,
}
```

## Future Work

* Add support for more binding types (cubemaps, buffers, etc). This PR intentionally includes a bare minimum number of binding types to keep "reviewability" in check.

* Consider optionally eliding binding indices using binding names. `AsBindGroup` could pass in (optional?) reflection info as a "hint".

    * This would make it possible for the derive to do this:
        ```rust
        #[derive(AsBindGroup)]
        pub struct CustomMaterial {
            #[uniform]
            color: Color,
            #[texture]
            #[sampler]
            color_texture: Option<Handle<Image>>,
            alpha_mode: AlphaMode,
        }
        ```
    * Or this
        ```rust
        #[derive(AsBindGroup)]
        pub struct CustomMaterial {
            #[binding]
            color: Color,
            #[binding]
            color_texture: Option<Handle<Image>>,
            alpha_mode: AlphaMode,
        }
        ```
    * Or even this (if we flip to "include bindings by default")
        ```rust
        #[derive(AsBindGroup)]
        pub struct CustomMaterial {
            color: Color,
            color_texture: Option<Handle<Image>>,
            #[binding(ignore)]
            alpha_mode: AlphaMode,
        }
        ```

* If we add the option to define custom draw functions for materials (which could be done in a type-erased way), I think that would be enough to support extra non-material bindings. Worth considering!

# Objective

Documents the `BufferVec` render resource.

`BufferVec` is a fairly low-level object that will likely be managed by a higher-level API (e.g. through [`encase`](https://github.com/bevyengine/bevy/issues/4272)) in the future. For now, since it is still used by some simple example crates (e.g. [bevy-vertex-pulling](https://github.com/superdump/bevy-vertex-pulling)), it will be helpful to provide some simple documentation on what `BufferVec` does.

## Solution

I looked through Discord discussion on `BufferVec`, and found [a comment](https://discord.com/channels/691052431525675048/953222550568173580/956596218857918464) by @superdump to be particularly helpful, in the general discussion around `encase`.

I have taken care to clarify where the data is stored (host-side), when the device-side buffer is created (through calls to `reserve`), and when data writes from host to device are scheduled (using `write_buffer` calls).

---

## Changelog

- Added doc string for `BufferVec` and two of its methods: `reserve` and `write_buffer`.

Co-authored-by: Brian Merchant <bhmerchant@gmail.com>

# Objective

Attempt to more clearly document `ImageSettings` and setting a default sampler for new images, as per #5046

## Changelog

- Moved `ImageSettings` into `image.rs`; `image::*` is already exported. This makes it simpler to link docs.

- Renamed "DefaultImageSampler" to "RenderDefaultImageSampler". Not a great name, but more consistent with other render resources.

- Added/updated related docs

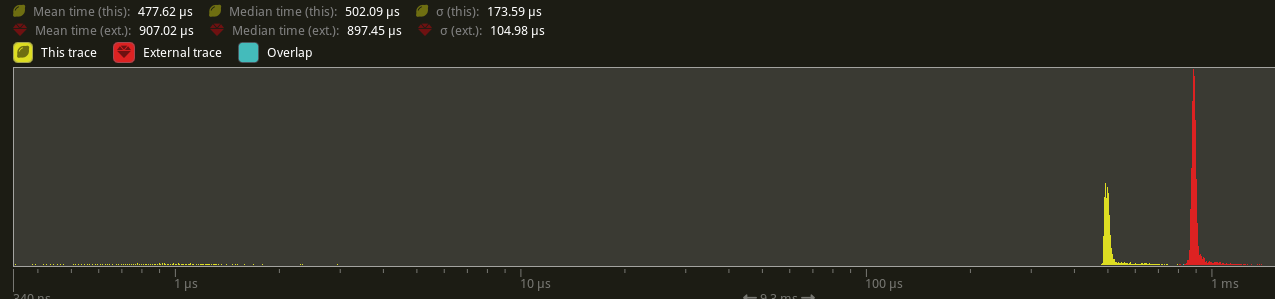

# Objective

Partially addresses #4291.

Speed up the sort phase for unbatched render phases.

## Solution

Split out one of the optimizations in #4899 and allow implementors of `PhaseItem` to change what kind of sort is used when sorting the items in the phase. This currently includes Stable, Unstable, and Unsorted, which correspond to `slice::sort_by_key`, `slice::sort_unstable_by_key`, and no sorting at all, respectively. The default is `Unstable`. The last one can be used as a default if users introduce a preliminary depth prepass.
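As a rough illustration of the three modes, here is a minimal std-only sketch. `SortMode`, `Item`, and `sort_items` are hypothetical names invented for this example; they are not the actual `PhaseItem` API, which lives in `bevy_render`.

```rust
/// Hypothetical stand-in for the sort choice a phase item could expose.
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
pub enum SortMode {
    /// Preserves the relative order of items with equal keys.
    Stable,
    /// May reorder items with equal keys, but is typically faster.
    Unstable,
    /// Leaves the items in submission order.
    Unsorted,
}

/// Hypothetical phase item: a sort key plus the entity index it came from.
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
pub struct Item {
    pub key: u32,
    pub entity: u32,
}

/// Sorts `items` by key according to the requested mode.
pub fn sort_items(items: &mut [Item], mode: SortMode) {
    match mode {
        SortMode::Stable => items.sort_by_key(|item| item.key),
        SortMode::Unstable => items.sort_unstable_by_key(|item| item.key),
        SortMode::Unsorted => {}
    }
}
```

Only the stable mode guarantees that items with equal keys keep their submission order, which is why batched phases still need it.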

## Performance

This will not impact the performance of any batched phases, as it is still using a stable sort. 2D's only phase is unchanged. All 3D phases are unbatched currently, and will benefit from this change.

On `many_cubes`, where the primary phase is opaque, this change sees a speed up from 907.02us -> 477.62us, a 47.35% reduction.

## Future Work

There were prior discussions to add support for faster radix sorts in #4291, which in theory should run in `O(n)` instead of `O(n log(n))` time. [`voracious`](https://crates.io/crates/voracious_radix_sort) has been proposed, but it seems to be optimized for use cases with more than 30,000 items, which may be atypical for most systems.

Another optimization included in #4899 is to reduce the size of a few of the IDs commonly used in `PhaseItem` implementations to shrink the types to make swapping/sorting faster. Both `CachedPipelineId` and `DrawFunctionId` could be reduced to `u32` instead of `usize`.

Ideally, this should automatically change to use stable sorts when `BatchedPhaseItem` is implemented on the same phase item type, but this requires specialization, which may not land in stable Rust for a short while.

---

## Changelog

Added: `PhaseItem::sort`

## Migration Guide

RenderPhases now default to an unstable sort (via `slice::sort_unstable_by_key`). This can typically improve sort phase performance, but may produce incorrect batching results when implementing `BatchedPhaseItem`. To revert to the older stable sort, manually implement `PhaseItem::sort` to implement a stable sort (i.e. via `slice::sort_by_key`).

Co-authored-by: Federico Rinaldi <gisquerin@gmail.com>

Co-authored-by: Robert Swain <robert.swain@gmail.com>

Co-authored-by: colepoirier <colepoirier@gmail.com>

# Objective

Further speed up visibility checking by removing the main sources of contention for the system.

## Solution

- ~~Make `ComputedVisibility` a resource wrapping a `FixedBitset`.~~

- ~~Remove `ComputedVisibility` as a component.~~

~~This adds a one-bit overhead to every entity in the app world. For a game with 100,000 entities, this is 12.5KB of memory. This is still small enough to fit entirely in most L1 caches. Also removes the need for a per-Entity change detection tick. This reduces the memory footprint of ComputedVisibility 72x.~~

~~The decreased memory usage and less fragmented memory locality should provide significant performance benefits.~~

~~Clearing visible entities should be significantly faster than before:~~

- ~~Setting one `u32` to 0 clears 32 entities per cycle.~~

- ~~No archetype fragmentation to contend with.~~

- ~~Change detection is applied to the resource, so there is no per-Entity update tick requirement.~~

~~The side benefit of this design is that it removes one more "computed component" from userspace. Though accessing the values within it are now less ergonomic.~~

This PR changes `crossbeam_channel` in `check_visibility` to use a `Local<ThreadLocal<Cell<Vec<Entity>>>>` to mark down visible entities instead.

Co-Authored-By: TheRawMeatball <therawmeatball@gmail.com>

Co-Authored-By: Aevyrie <aevyrie@gmail.com>

Builds on top of #4780.

# Objective

`Reflect` and `Serialize` are currently very tied together because `Reflect` has a `fn serialize(&self) -> Option<Serializable<'_>>` method. Because of that, we can either implement `Reflect` for types like `Option<T>` with `T: Serialize` and have `fn serialize` be implemented, or without the bound but having `fn serialize` return `None`.

By separating `ReflectSerialize` into a separate type (like how it already is for `ReflectDeserialize`, `ReflectDefault`), we could separately `.register::<Option<T>>()` and `.register_data::<Option<T>, ReflectSerialize>()` only if the type `T: Serialize`.

This PR does not change the registration but allows it to be changed in a future PR.

## Solution

- add the type

```rust

struct ReflectSerialize { .. }

impl<T: Reflect + Serialize> FromType<T> for ReflectSerialize { .. }

```

- remove `#[reflect(Serialize)]` special casing.

- when serializing reflect value types, look for `ReflectSerialize` in the `TypeRegistry` instead of calling `value.serialize()`
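The lookup described above can be sketched without any dependencies. This is only an illustration of the "type data in a registry" idea: `ToText` stands in for `serde::Serialize`, and `ReflectToText`, `Registry`, `from_type`, and `register_to_text` are hypothetical names, not the real `bevy_reflect` API.

```rust
use std::any::{Any, TypeId};
use std::collections::HashMap;

/// Stand-in for `serde::Serialize` so the sketch needs no dependencies.
pub trait ToText {
    fn to_text(&self) -> String;
}

impl ToText for u32 {
    fn to_text(&self) -> String {
        self.to_string()
    }
}

/// Hypothetical type data: knows how to serialize one concrete type
/// when handed a type-erased value.
#[derive(Clone, Copy)]
pub struct ReflectToText {
    to_text: fn(&dyn Any) -> Option<String>,
}

impl ReflectToText {
    /// Plays the role of `FromType<T>`: only callable when `T: ToText`.
    pub fn from_type<T: ToText + 'static>() -> Self {
        Self {
            to_text: |value| value.downcast_ref::<T>().map(T::to_text),
        }
    }
}

/// Hypothetical registry mapping a type to its optional serialization data.
#[derive(Default)]
pub struct Registry {
    to_text: HashMap<TypeId, ReflectToText>,
}

impl Registry {
    /// Registers serialization data for `T`; requires `T: ToText`.
    pub fn register_to_text<T: ToText + 'static>(&mut self) {
        self.to_text
            .insert(TypeId::of::<T>(), ReflectToText::from_type::<T>());
    }

    /// Looks up the type data in the registry instead of asking the value itself.
    pub fn serialize(&self, value: &dyn Any) -> Option<String> {
        let data = self.to_text.get(&value.type_id())?;
        (data.to_text)(value)
    }
}
```

Because the serialization data is registered separately, a type without the `ToText` bound can still exist in the program; it simply has no entry and `serialize` returns `None` for it.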

# Objective

- KTX2 UASTC format mapping was incorrect. For some reason I had written it to map to a set of data formats based on the count of KTX2 sample information blocks, but the mapping should be done based on the channel type in the sample information.

- This is a valid change pulled out from #4514 as the attempt to fix the array textures there was incorrect

## Solution

- Fix the KTX2 UASTC `DataFormat` enum to contain the correct formats based on the channel types in section 3.10.2 of https://github.khronos.org/KTX-Specification/ (search for "Basis Universal UASTC Format")

- Correctly map from the sample information channel type to `DataFormat`

- Correctly configure transcoding and the resulting texture format based on the `DataFormat`

---

## Changelog

- Fixed: KTX2 UASTC format handling

# Use Case

Seems generally useful, but specifically motivated by my work on the [`bevy_datasize`](https://github.com/BGR360/bevy_datasize) crate.

For that project, I'm implementing "heap size estimators" for all of the Bevy internal types. To do this accurately for `Mesh`, I need to get the lengths of all of the mesh's attribute vectors.

Currently, in order to accomplish this, I am doing the following:

* Checking all of the attributes that are mentioned in the `Mesh` class ([see here](0531ec2d02/src/builtins/render/mesh.rs (L46-L54)))

* Providing the user with an option to configure additional attributes to check ([see here](0531ec2d02/src/config.rs (L7-L21)))

This is both overly complicated and a bit wasteful (since I have to check every attribute name that I know about in case there are attributes set for it).

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

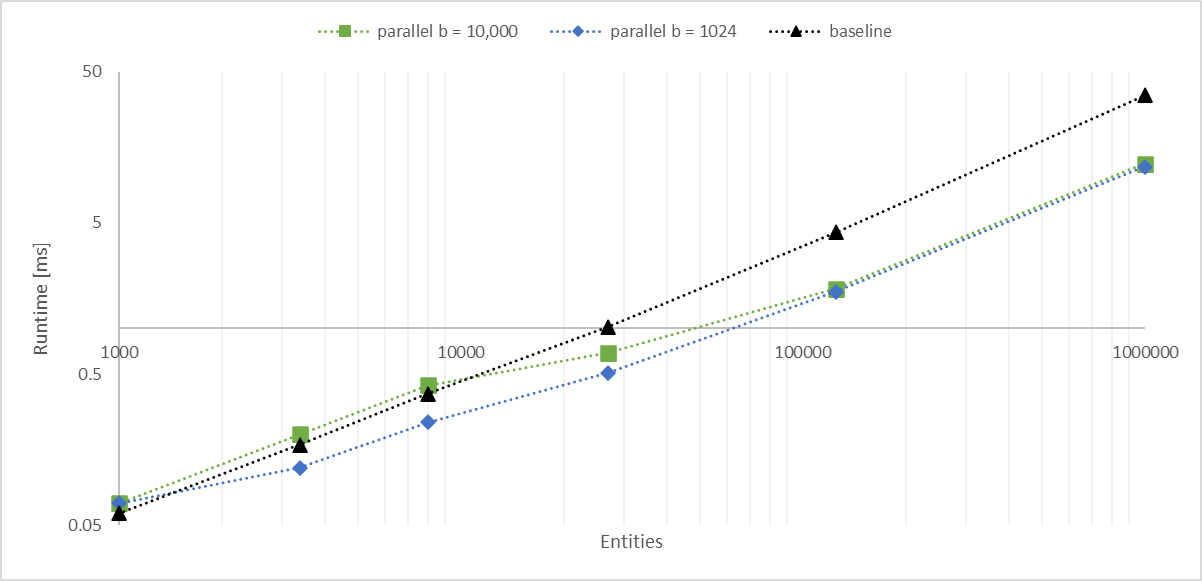

# Objective

Working with a large number of entities with `Aabbs`, rendered with an instanced shader, I found the bottleneck became the frustum culling system. The goal of this PR is to significantly improve culling performance without any major changes. We should consider constructing a BVH for more substantial improvements.

## Solution

- Convert the inner entity query to a parallel iterator with `par_for_each_mut` using a batch size of 1,024.

- This outperforms single threaded culling when there are more than 1,000 entities.

- Below this they are approximately equal, with <= 10 microseconds of multithreading overhead.

- Above this, the multithreaded version is significantly faster, scaling linearly with core count.

- In my million-entity-workload, this PR improves my framerate by 200% - 300%.
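To make the batching idea concrete, here is a minimal std-only sketch. It does not use Bevy's `par_for_each_mut` or task pool; it spawns one scoped thread per batch, which a real task pool would avoid. `Bounds`, `BATCH_SIZE`, and this `check_visibility` signature are assumptions made for the example, and the "culling test" is reduced to a single distance comparison.

```rust
use std::thread;

/// Hypothetical batch size, mirroring the 1,024 mentioned above.
pub const BATCH_SIZE: usize = 1024;

/// Simplified stand-in for an entity's bounds: just a distance from the camera.
#[derive(Clone, Copy)]
pub struct Bounds {
    pub distance: f32,
}

/// Marks each entity visible if it lies within `far`, processing one batch
/// of up to `BATCH_SIZE` entities per scoped thread.
pub fn check_visibility(bounds: &[Bounds], visible: &mut [bool], far: f32) {
    assert_eq!(bounds.len(), visible.len());
    thread::scope(|scope| {
        for (bounds_batch, visible_batch) in
            bounds.chunks(BATCH_SIZE).zip(visible.chunks_mut(BATCH_SIZE))
        {
            scope.spawn(move || {
                for (b, v) in bounds_batch.iter().zip(visible_batch.iter_mut()) {
                    *v = b.distance <= far;
                }
            });
        }
    });
}
```

Each batch writes to a disjoint slice of `visible`, so no locking is needed.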

## log-log of `check_visibility` time vs. entities for single/multithreaded

---

## Changelog

Frustum culling is now run with a parallel query. When culling more than a thousand entities, this is faster than the previous method, scaling proportionally with the number of available cores.

# Objective

Fix #4958

There were 4 issues:

- this is not true in WASM and on macOS: f28b921209/examples/3d/split_screen.rs (L90)

- ~~I made sure the system was running at least once~~

- I'm sending the event on window creation

- in webgl, setting a viewport has impacts on other render passes

- only in webgl and when there is a custom viewport, I added a render pass without a custom viewport

- shaderdef NO_ARRAY_TEXTURES_SUPPORT was not used by the 2d pipeline

- webgl feature was used but not declared in bevy_sprite, I added it to the Cargo.toml

- shaderdef NO_STORAGE_BUFFERS_SUPPORT was not used by the 2d pipeline

- I added it based on the BufferBindingType

The last commit changes the two last fixes to add the shaderdefs in the shader cache directly instead of needing to do it in each pipeline

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

- Closes #4464

## Solution

- Specify default mag and min filter types for `Image` instead of using `wgpu`'s defaults.

---

## Changelog

### Changed

- Default `Image` filtering changed from `Nearest` to `Linear`.

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

Most of our `Iterator` impls satisfy the requirements of `std::iter::FusedIterator`, which has internal specialization that optimizes `Iterator::fuse`. A few of the std lib iterator combinators rely on `fuse`, so this could optimize those use cases. I don't think we're using any of them in the engine itself, but beyond a light increase in compile time, it doesn't hurt to implement the trait.

## Solution

Implement the trait for all eligible iterators in first party crates. Also add a missing `ExactSizeIterator` on an iterator that could use it.
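For reference, this is what opting in looks like on a small iterator. `Countdown` is a hypothetical type invented for this sketch, not one of the engine's iterators.

```rust
use std::iter::FusedIterator;

/// Hypothetical iterator that counts down from `start` to 1.
pub struct Countdown {
    remaining: usize,
}

impl Countdown {
    pub fn new(start: usize) -> Self {
        Self { remaining: start }
    }
}

impl Iterator for Countdown {
    type Item = usize;

    fn next(&mut self) -> Option<usize> {
        if self.remaining == 0 {
            // Once exhausted we keep returning `None`, which is the
            // guarantee `FusedIterator` requires.
            return None;
        }
        let current = self.remaining;
        self.remaining -= 1;
        Some(current)
    }

    fn size_hint(&self) -> (usize, Option<usize>) {
        // Exact bounds are what make `ExactSizeIterator` valid.
        (self.remaining, Some(self.remaining))
    }
}

// Both are marker-style impls: the behavior above already upholds them.
impl ExactSizeIterator for Countdown {}
impl FusedIterator for Countdown {}
```

Both traits are promises about behavior the iterator already has, so the impls carry no extra logic.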

While working on a refactor of `bevy_mod_picking` to include viewport-awareness, I found myself writing these functions to test if a cursor coordinate was inside the camera's rendered area.

# Objective

- Simplify conversion from physical to logical pixels

- Add methods that return the dimensions of the viewport as a min-max rect

---

## Changelog

- Added `Camera::to_logical`

- Added `Camera::physical_viewport_rect`

- Added `Camera::logical_viewport_rect`
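A minimal sketch of the conversion and the "is the cursor inside the viewport" test that motivated these methods. The `Viewport` struct, its `scale_factor` field, and `contains_logical` are assumptions for illustration; the real methods live on `Camera` and may differ in signature and return type.

```rust
/// Minimal stand-in for a 2D float vector.
#[derive(Clone, Copy, Debug, PartialEq)]
pub struct Vec2 {
    pub x: f32,
    pub y: f32,
}

/// Hypothetical viewport description in physical pixels.
pub struct Viewport {
    pub physical_position: (u32, u32),
    pub physical_size: (u32, u32),
    /// Physical pixels per logical pixel (assumed to come from the window).
    pub scale_factor: f32,
}

impl Viewport {
    /// Converts a physical pixel coordinate into logical pixels.
    pub fn to_logical(&self, physical: (u32, u32)) -> Vec2 {
        Vec2 {
            x: physical.0 as f32 / self.scale_factor,
            y: physical.1 as f32 / self.scale_factor,
        }
    }

    /// Min and max corners of the viewport in physical pixels.
    pub fn physical_rect(&self) -> ((u32, u32), (u32, u32)) {
        let (x, y) = self.physical_position;
        let (w, h) = self.physical_size;
        ((x, y), (x + w, y + h))
    }

    /// Min and max corners of the viewport in logical pixels.
    pub fn logical_rect(&self) -> (Vec2, Vec2) {
        let (min, max) = self.physical_rect();
        (self.to_logical(min), self.to_logical(max))
    }

    /// Returns true if a logical cursor position falls inside the viewport.
    pub fn contains_logical(&self, cursor: Vec2) -> bool {
        let (min, max) = self.logical_rect();
        cursor.x >= min.x && cursor.x < max.x && cursor.y >= min.y && cursor.y < max.y
    }
}
```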

# Objective

Currently, providing the wrong number of inputs to a render graph node triggers this assertion:

```

thread 'main' panicked at 'assertion failed: `(left == right)`

left: `1`,

right: `2`', /[redacted]/bevy/crates/bevy_render/src/renderer/graph_runner.rs:164:13

note: run with `RUST_BACKTRACE=1` environment variable to display a backtrace

```

This does not provide the user any context.

## Solution

Add a new `RenderGraphRunnerError` variant to handle this case. The new message looks like this:

```

ERROR bevy_render::renderer: Error running render graph:

ERROR bevy_render::renderer: > node (name: 'Some("outline_pass")') has 2 input slots, but was provided 1 values

```

---

## Changelog

### Changed

`RenderGraphRunnerError` now has a new variant, `MismatchedInputCount`.

## Migration Guide

Exhaustive matches on `RenderGraphRunnerError` will need to add a branch to handle the new `MismatchedInputCount` variant.
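A std-only sketch of the pattern: replace the bare assertion with an error variant that carries the context. `GraphRunnerError`, `check_inputs`, and the field names `node_name`, `slot_count`, and `value_count` are assumptions for this example; only the variant name `MismatchedInputCount` and the message text come from the description above.

```rust
use std::fmt;

/// Hypothetical, reduced version of the runner error type.
#[derive(Debug, PartialEq, Eq)]
pub enum GraphRunnerError {
    MismatchedInputCount {
        node_name: Option<String>,
        slot_count: usize,
        value_count: usize,
    },
}

impl fmt::Display for GraphRunnerError {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        match self {
            GraphRunnerError::MismatchedInputCount {
                node_name,
                slot_count,
                value_count,
            } => write!(
                f,
                "node (name: '{:?}') has {} input slots, but was provided {} values",
                node_name, slot_count, value_count
            ),
        }
    }
}

impl std::error::Error for GraphRunnerError {}

/// Returns an error instead of panicking when the counts disagree.
pub fn check_inputs(
    node_name: Option<&str>,
    slot_count: usize,
    value_count: usize,
) -> Result<(), GraphRunnerError> {
    if slot_count != value_count {
        return Err(GraphRunnerError::MismatchedInputCount {
            node_name: node_name.map(str::to_owned),
            slot_count,
            value_count,
        });
    }
    Ok(())
}
```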

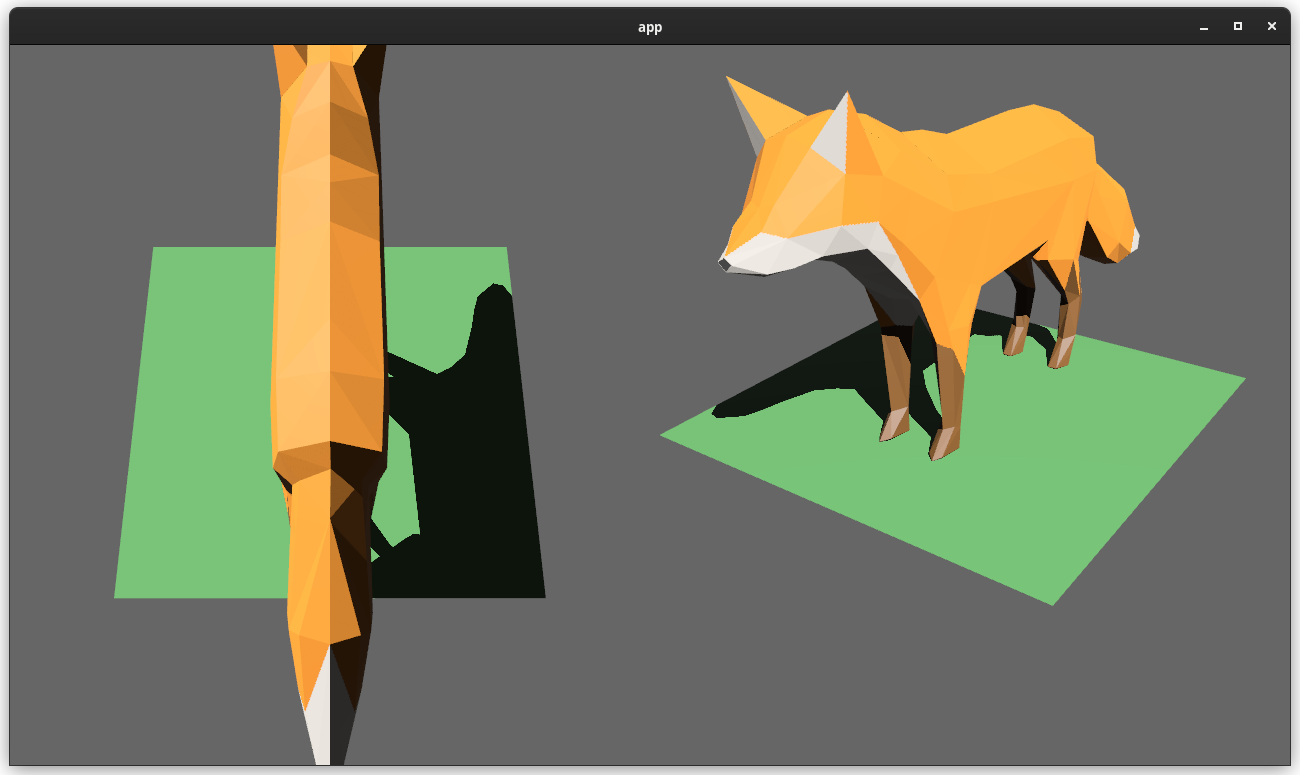

# Objective

Users should be able to render cameras to specific areas of a render target, which enables scenarios like split screen, minimaps, etc.

Builds on the new Camera Driven Rendering added here: #4745

Fixes: #202

Alternative to #1389 and #3626 (which are incompatible with the new Camera Driven Rendering)

## Solution

Cameras can now configure an optional "viewport", which defines a rectangle within their render target to draw to. If a `Viewport` is defined, the camera's `CameraProjection`, `View`, and visibility calculations will use the viewport configuration instead of the full render target.

```rust

// This camera will render to the first half of the primary window (on the left side).

commands.spawn_bundle(Camera3dBundle {

camera: Camera {

viewport: Some(Viewport {

physical_position: UVec2::new(0, 0),

physical_size: UVec2::new(window.physical_width() / 2, window.physical_height()),

depth: 0.0..1.0,

}),

..default()

},

..default()

});

```

To account for this, the `Camera` component has received a few adjustments:

* `Camera` now has some new getter functions:

* `logical_viewport_size`, `physical_viewport_size`, `logical_target_size`, `physical_target_size`, `projection_matrix`

* All computed camera values are now private and live on the `ComputedCameraValues` field (logical/physical width/height, the projection matrix). They are now exposed on `Camera` via getters/setters. This wasn't _needed_ for viewports, but it was long overdue.

---

## Changelog

### Added

* `Camera` components now have a `viewport` field, which can be set to draw to a portion of a render target instead of the full target.

* `Camera` component has some new functions: `logical_viewport_size`, `physical_viewport_size`, `logical_target_size`, `physical_target_size`, and `projection_matrix`

* Added a new split_screen example illustrating how to render two cameras to the same scene

## Migration Guide

`Camera::projection_matrix` is no longer a public field. Use the new `Camera::projection_matrix()` method instead:

```rust

// Bevy 0.7

let projection = camera.projection_matrix;

// Bevy 0.8

let projection = camera.projection_matrix();

```

# Objective

At the moment all extra capabilities are disabled when validating shaders with naga:

c7c08f95cb/crates/bevy_render/src/render_resource/shader.rs (L146-L149)

This means these features can't be used even if the corresponding wgpu features are active.

## Solution

With these changes capabilities are now set corresponding to `RenderDevice::features`.

---

I have validated these changes for push constants with a project I am currently working on. Though bevy does not support creating pipelines with push constants yet, so I was only able to see that shaders are validated and compiled as expected.

This adds "high level camera driven rendering" to Bevy. The goal is to give users more control over what gets rendered (and where) without needing to deal with render logic. This will make scenarios like "render to texture", "multiple windows", "split screen", "2d on 3d", "3d on 2d", "pass layering", and more significantly easier.

Here is an [example of a 2d render sandwiched between two 3d renders (each from a different perspective)](https://gist.github.com/cart/4fe56874b2e53bc5594a182fc76f4915):

Users can now spawn a camera, point it at a RenderTarget (a texture or a window), and it will "just work".

Rendering to a second window is as simple as spawning a second camera and assigning it to a specific window id:

```rust

// main camera (main window)

commands.spawn_bundle(Camera2dBundle::default());

// second camera (other window)

commands.spawn_bundle(Camera2dBundle {

camera: Camera {

target: RenderTarget::Window(window_id),

..default()

},

..default()

});

```

Rendering to a texture is as simple as pointing the camera at a texture:

```rust

commands.spawn_bundle(Camera2dBundle {

camera: Camera {

target: RenderTarget::Texture(image_handle),

..default()

},

..default()

});

```

Cameras now have a "render priority", which controls the order they are drawn in. If you want to use a camera's output texture as a texture in the main pass, just set the priority to a number lower than the main pass camera (which defaults to `0`).

```rust

// main pass camera with a default priority of 0

commands.spawn_bundle(Camera2dBundle::default());

commands.spawn_bundle(Camera2dBundle {

camera: Camera {

target: RenderTarget::Texture(image_handle.clone()),

priority: -1,

..default()

},

..default()

});

commands.spawn_bundle(SpriteBundle {

texture: image_handle,

..default()

})

```

Priority can also be used to layer cameras on top of each other for the same RenderTarget. This is what "2d on top of 3d" looks like in the new system:

```rust

commands.spawn_bundle(Camera3dBundle::default());

commands.spawn_bundle(Camera2dBundle {

camera: Camera {

// this will render 2d entities "on top" of the default 3d camera's render

priority: 1,

..default()

},

..default()

});

```

There is no longer the concept of a global "active camera". Resources like `ActiveCamera<Camera2d>` and `ActiveCamera<Camera3d>` have been replaced with the camera-specific `Camera::is_active` field. This does put the onus on users to manage which cameras should be active.

Cameras are now assigned a single render graph as an "entry point", which is configured on each camera entity using the new `CameraRenderGraph` component. The old `PerspectiveCameraBundle` and `OrthographicCameraBundle` (generic on camera marker components like Camera2d and Camera3d) have been replaced by `Camera3dBundle` and `Camera2dBundle`, which set 3d and 2d default values for the `CameraRenderGraph` and projections.

```rust

// old 3d perspective camera

commands.spawn_bundle(PerspectiveCameraBundle::default())

// new 3d perspective camera

commands.spawn_bundle(Camera3dBundle::default())

```

```rust

// old 2d orthographic camera

commands.spawn_bundle(OrthographicCameraBundle::new_2d())

// new 2d orthographic camera

commands.spawn_bundle(Camera2dBundle::default())

```

```rust

// old 3d orthographic camera

commands.spawn_bundle(OrthographicCameraBundle::new_3d())

// new 3d orthographic camera

commands.spawn_bundle(Camera3dBundle {

projection: OrthographicProjection {

scale: 3.0,

scaling_mode: ScalingMode::FixedVertical,

..default()

}.into(),

..default()

})

```

Note that `Camera3dBundle` now uses a new `Projection` enum instead of hard coding the projection into the type. There are a number of motivators for this change: the render graph is now a part of the bundle, the way "generic bundles" work in the rust type system prevents nice `..default()` syntax, and changing projections at runtime is much easier with an enum (e.g. for editor scenarios). I'm open to discussing this choice, but I'm relatively certain we will all come to the same conclusion here. Camera2dBundle and Camera3dBundle are much clearer than being generic on marker components / using non-default constructors.

If you want to run a custom render graph on a camera, just set the `CameraRenderGraph` component:

```rust

commands.spawn_bundle(Camera3dBundle {

camera_render_graph: CameraRenderGraph::new(some_render_graph_name),

..default()

})

```

Just note that if the graph requires data from specific components to work (such as `Camera3d` config, which is provided in the `Camera3dBundle`), make sure the relevant components have been added.

Speaking of using components to configure graphs / passes, there are a number of new configuration options:

```rust

commands.spawn_bundle(Camera3dBundle {

camera_3d: Camera3d {

// overrides the default global clear color

clear_color: ClearColorConfig::Custom(Color::RED),

..default()

},

..default()

});

commands.spawn_bundle(Camera3dBundle {

camera_3d: Camera3d {

// disables clearing

clear_color: ClearColorConfig::None,

..default()

},

..default()

})

```

Expect to see more of the "graph configuration Components on Cameras" pattern in the future.

By popular demand, UI no longer requires a dedicated camera. `UiCameraBundle` has been removed. `Camera2dBundle` and `Camera3dBundle` now both default to rendering UI as part of their own render graphs. To disable UI rendering for a camera, disable it using the CameraUi component:

```rust

commands

.spawn_bundle(Camera3dBundle::default())

.insert(CameraUi {

is_enabled: false,

..default()

})

```

## Other Changes

* The separate clear pass has been removed. We should revisit this for things like sky rendering, but I think this PR should "keep it simple" until we're ready to properly support that (for code complexity and performance reasons). We can come up with the right design for a modular clear pass in a followup pr.

* I reorganized bevy_core_pipeline into Core2dPlugin and Core3dPlugin (and core_2d / core_3d modules). Everything is pretty much the same as before, just logically separate. I've moved relevant types (like Camera2d, Camera3d, Camera3dBundle, Camera2dBundle) into their relevant modules, which is what motivated this reorganization.

* I adapted the `scene_viewer` example (which relied on the ActiveCameras behavior) to the new system. I also refactored bits and pieces to be a bit simpler.

* All of the examples have been ported to the new camera approach. `render_to_texture` and `multiple_windows` are now _much_ simpler. I removed `two_passes` because it is less relevant with the new approach. If someone wants to add a new "layered custom pass with CameraRenderGraph" example, that might fill a similar niche. But I don't feel much pressure to add that in this pr.

* Cameras now have `target_logical_size` and `target_physical_size` fields, which makes finding the size of a camera's render target _much_ simpler. As a result, the `Assets<Image>` and `Windows` parameters were removed from `Camera::world_to_screen`, making that operation much more ergonomic.

* Render order ambiguities between cameras with the same target and the same priority now produce a warning. This accomplishes two goals:

1. Now that there is no "global" active camera, by default spawning two cameras will result in two renders (one covering the other). This would be a silent performance killer that would be hard to detect after the fact. By detecting ambiguities, we can provide a helpful warning when this occurs.

2. Render order ambiguities could result in unexpected / unpredictable render results. Resolving them makes sense.
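The ordering and ambiguity rules can be sketched in a few lines of std-only Rust. Everything here (`Target`, the reduced `Camera` struct, `sort_cameras`, and the choice of `isize` for the priority) is a hypothetical model for illustration, not the actual implementation in `bevy_render`.

```rust
use std::collections::HashMap;

/// Hypothetical, reduced render target identifier.
#[derive(Clone, Copy, Debug, PartialEq, Eq, Hash)]
pub enum Target {
    Window(u32),
    Texture(u32),
}

/// Hypothetical, reduced camera: only the fields that affect ordering.
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
pub struct Camera {
    pub id: u32,
    pub target: Target,
    pub priority: isize,
    pub is_active: bool,
}

/// Returns the active cameras in draw order (lowest priority first) together
/// with every (target, priority) pair shared by more than one active camera.
pub fn sort_cameras(cameras: &[Camera]) -> (Vec<Camera>, Vec<(Target, isize)>) {
    let mut active: Vec<Camera> = cameras.iter().copied().filter(|c| c.is_active).collect();
    active.sort_by_key(|c| c.priority);

    let mut counts: HashMap<(Target, isize), usize> = HashMap::new();
    for camera in &active {
        *counts.entry((camera.target, camera.priority)).or_insert(0) += 1;
    }
    let mut ambiguities: Vec<(Target, isize)> = counts
        .into_iter()
        .filter(|(_, count)| *count > 1)
        .map(|(key, _)| key)
        .collect();
    ambiguities.sort_by_key(|(_, priority)| *priority);

    (active, ambiguities)
}
```

A caller would log a warning for each returned ambiguity; resolving one is a matter of giving one of the cameras a different priority or deactivating it.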

## Follow Up Work

* Per-Camera viewports, which will make it possible to render to a smaller area inside of a RenderTarget (great for something like splitscreen)

* Camera-specific MSAA config (should use the same "overriding" pattern used for ClearColor)

* Graph Based Camera Ordering: priorities are simple, but they make complicated ordering constraints harder to express. We should consider adopting a "graph based" camera ordering model with "before" and "after" relationships to other cameras (or build it "on top" of the priority system).

* Consider allowing graphs to run subgraphs from any nest level (aka a global namespace for graphs). Right now the 2d and 3d graphs each need their own UI subgraph, which feels "fine" in the short term. But being able to share subgraphs between other subgraphs seems valuable.

* Consider splitting `bevy_core_pipeline` into `bevy_core_2d` and `bevy_core_3d` packages. There's a shared "clear color" dependency here, which would need a new home.

# Objective

Models can be produced that do not have vertex tangents but do have normal map textures. The tangents can be generated. There is a way that the vertex tangents can be generated to be exactly invertible to avoid introducing error when recreating the normals in the fragment shader.

## Solution

- After attempts to get https://github.com/gltf-rs/mikktspace to integrate simple glam changes and version bumps, with releases of that crate taking weeks or not being made at all (no offense intended to the authors/maintainers; bevy just has its own timelines and needs to take care of), it was decided to fork that repository. The following steps were taken:

- mikktspace was forked to https://github.com/bevyengine/mikktspace in order to preserve the repository's history in case the original is ever taken down

- The README in that repo was edited to add a note stating from where the repository was forked and explaining why

- The repo was locked for changes as its only purpose is historical

- The repo was integrated into the bevy repo using `git subtree add --prefix crates/bevy_mikktspace git@github.com:bevyengine/mikktspace.git master`

- In `bevy_mikktspace`:

- The travis configuration was removed

- `cargo fmt` was run

- The `Cargo.toml` was conformed to bevy's (just adding bevy to the keywords, changing the homepage and repository, changing the version to 0.7.0-dev - importantly the license is exactly the same)

- Remove the features, remove `nalgebra` entirely, only use `glam`, suppress clippy.

- This was necessary because our CI runs clippy with `--all-features` and the `nalgebra` and `glam` features are mutually exclusive, plus I don't want to modify this highly numerically-sensitive code just to appease clippy and diverge even more from upstream.

- Rebase https://github.com/bevyengine/bevy/pull/1795

- @jakobhellermann said it was fine to copy and paste but it ended up being almost exactly the same with just a couple of adjustments when validating correctness so I decided to actually rebase it and then build on top of it.

- Use the exact same fragment shader code to ensure correct normal mapping.

- Tested with both https://github.com/KhronosGroup/glTF-Sample-Models/tree/master/2.0/NormalTangentMirrorTest which has vertex tangents and https://github.com/KhronosGroup/glTF-Sample-Models/tree/master/2.0/NormalTangentTest which requires vertex tangent generation

Co-authored-by: alteous <alteous@outlook.com>

Adds ability to specify scaling factor for `WindowSize`, size of the fixed axis for `FixedVertical` and `FixedHorizontal` and a new `ScalingMode` that is a mix of `FixedVertical` and `FixedHorizontal`

# The issue

Currently, the only available options are to:

* Have one of the axes fixed to value 1

* Have viewport size match the window size

* Manually adjust viewport size

For most games these options are not enough, and more advanced scaling methods have to be used.

## Solution

The solution is to provide additional parameters to current scaling modes, like scaling factor for `WindowSize`. Additionally, a more advanced `Auto` mode is added, which dynamically switches between behaving like `FixedVertical` and `FixedHorizontal` depending on the window's aspect ratio.
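One way the modes could compute the projection size is sketched below. The enum name `SketchScalingMode`, the parameter meanings (pixels per unit for `WindowSize`, `min_width`/`min_height` for `Auto`), and the aspect-ratio rule are assumptions for illustration; the actual variants and fields in the PR may differ.

```rust
/// Hypothetical scaling modes, parameterized as described above.
#[derive(Clone, Copy, Debug, PartialEq)]
pub enum SketchScalingMode {
    /// Projection matches the window, divided by a pixels-per-unit factor.
    WindowSize(f32),
    /// Projection height is fixed; width follows the aspect ratio.
    FixedVertical(f32),
    /// Projection width is fixed; height follows the aspect ratio.
    FixedHorizontal(f32),
    /// Keeps at least `min_width` x `min_height` visible.
    Auto { min_width: f32, min_height: f32 },
}

/// Returns the (width, height) of the projection for a given window size.
pub fn projection_size(mode: SketchScalingMode, window_w: f32, window_h: f32) -> (f32, f32) {
    match mode {
        SketchScalingMode::WindowSize(pixels_per_unit) => {
            (window_w / pixels_per_unit, window_h / pixels_per_unit)
        }
        SketchScalingMode::FixedVertical(height) => (window_w * height / window_h, height),
        SketchScalingMode::FixedHorizontal(width) => (width, window_h * width / window_w),
        SketchScalingMode::Auto { min_width, min_height } => {
            if window_w * min_height > min_width * window_h {
                // Window is wider than the minimum area: behave like FixedVertical.
                (window_w * min_height / window_h, min_height)
            } else {
                // Window is taller (or equal): behave like FixedHorizontal.
                (min_width, window_h * min_width / window_w)
            }
        }
    }
}
```

With this rule the minimum area is always fully visible, and the extra space appears along whichever axis the window has to spare.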

Co-authored-by: Daniikk1012 <49123959+Daniikk1012@users.noreply.github.com>

# Objective

- Add an `ExtractResourcePlugin` for convenience and consistency

## Solution

- Add an `ExtractResourcePlugin` similar to `ExtractComponentPlugin` but for ECS `Resource`s. The system that is executed simply clones the main world resource into a render world resource, if and only if the main world resource was either added or changed since the last execution of the system.

- Add an `ExtractResource` trait with a `fn extract_resource(res: &Self) -> Self` function. This is used by the `ExtractResourcePlugin` to extract the resource

- Add a derive macro for `ExtractResource` on a `Resource` with the `Clone` trait, that simply returns `res.clone()`

- Use `ExtractResourcePlugin` wherever both possible and appropriate
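A dependency-free sketch of the extraction step. The `extract_resource` signature is the one given above; `Tracked`, its `changed` flag, the `extract` function, and this reduced `ClearColor` are hypothetical simplifications (Bevy's change detection is tick-based, not a boolean that gets reset).

```rust
/// Trait with the signature described above.
pub trait ExtractResource: Sized {
    fn extract_resource(res: &Self) -> Self;
}

/// Example resource; the impl below is what a derive on a `Clone` type would do.
#[derive(Clone, Debug, PartialEq)]
pub struct ClearColor(pub [f32; 4]);

impl ExtractResource for ClearColor {
    fn extract_resource(res: &Self) -> Self {
        res.clone()
    }
}

/// Hypothetical main-world slot with a simplified "added or changed" flag.
pub struct Tracked<R> {
    pub value: R,
    pub changed: bool,
}

/// Copies the main-world resource into the render-world slot only if it
/// changed since the last run. Returns whether an extraction happened.
pub fn extract<R: ExtractResource>(main: &mut Tracked<R>, render: &mut Option<R>) -> bool {
    if !main.changed {
        return false;
    }
    *render = Some(R::extract_resource(&main.value));
    main.changed = false;
    true
}
```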

This was first done in 7b4e3a5, but was then reverted when the new

renderer for 0.6 was merged (ffecb05).

I'm assuming it was simply a mistake when merging.

# Objective

- Same as #2740, I think it was reverted by mistake when merging.

> # Objective

>

> - Make it easy to use HexColorError with `thiserror`, i.e. converting it into other error types.

>

> Makes this possible:

>

> ```rust

> #[derive(Debug, thiserror::Error)]

> pub enum LdtkError {

> #[error("An error occured while deserializing")]

> Json(#[from] serde_json::Error),

> #[error("An error occured while parsing a color")]

> HexColor(#[from] bevy::render::color::HexColorError),

> }

> ```

>

> ## Solution

>

> - Derive thiserror::Error the same way we do elsewhere (see query.rs for instance)

# Objective

One way to avoid texture atlas bleeding is to ensure that every vertex is

placed at an integer pixel coordinate. This is a particularly appealing

solution for regular structures like tile maps.

Doing so is currently harder than necessary when the WindowSize scaling

mode and Center origin are used: For odd window width or height, the

origin of the coordinate system is placed in the middle of a pixel at

some .5 offset.

## Solution

Avoid this issue by rounding the half width and height values.
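The effect is easy to see with a small calculation. `centered_bounds` is a hypothetical helper written for this sketch; it assumes the right edge is derived from the rounded left edge so the total width is preserved, which may not match the PR's code exactly.

```rust
/// Left and right edges of a centered projection for a window `width`
/// pixels wide, with or without rounding the half width.
pub fn centered_bounds(width: f32, round: bool) -> (f32, f32) {
    let half = width / 2.0;
    let half = if round { half.round() } else { half };
    let left = -half;
    // Keep the full width so one projection unit still equals one pixel.
    (left, left + width)
}
```

For a width of 801 the unrounded edges are -400.5 and 400.5, so every integer coordinate falls in the middle of a pixel; with rounding they become -401 and 400, and integer coordinates land on pixel boundaries again. Even widths are unaffected.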

# Objective

Make the function consistent with returned values and `as_hsla` method

Fixes #4826

## Solution

- Rename the method

## Migration Guide

- Rename the method

# Objective

- We do a lot of function pointer calls in a hot loop (clearing entities in render). This is slow, since calling function pointers cannot be optimised out. We can avoid that in the cases where the function call is a no-op.

- Alternative to https://github.com/bevyengine/bevy/pull/2897

- On my machine, in `many_cubes`, this reduces dropping time from ~150μs to ~80μs.

## Solution

- Make `drop` in `BlobVec` an `Option`, recording whether the given drop impl is required or not.

- Note that this does add branching in some cases - we could consider splitting this into two fields, i.e. unconditionally call the `drop` fn pointer.

- My intuition of how often types stored in `World` should have non-trivial drops makes me think that would be slower, however.
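A minimal sketch of how such an optional drop function could be chosen, using `std::mem::needs_drop`. `DropFn`, `drop_ptr`, and `drop_fn_for` are hypothetical names for illustration; this is not the `BlobVec` code itself.

```rust
use std::mem::needs_drop;
use std::ptr::drop_in_place;

/// Type-erased drop function, as a blob-style storage might keep it.
pub type DropFn = unsafe fn(*mut u8);

/// Drops the `T` behind `ptr`.
///
/// Safety: `ptr` must point at a valid, initialized `T` that is not used again.
unsafe fn drop_ptr<T>(ptr: *mut u8) {
    unsafe { drop_in_place(ptr.cast::<T>()) }
}

/// Returns `None` for types whose drop is a no-op, so a hot loop can skip
/// the function pointer call entirely for them.
pub fn drop_fn_for<T>() -> Option<DropFn> {
    if needs_drop::<T>() {
        Some(drop_ptr::<T> as DropFn)
    } else {
        None
    }
}
```

A clear loop can then check the `Option` once, outside the loop, and skip iterating entirely when it is `None`.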

N.B. Even once this lands, we should still test having a 'drop_multiple' variant - for types with a real `Drop` impl, the current implementation is definitely optimal.

# Objective

- Fixes #4456

## Solution

- Removed the `near` and `far` fields from the camera and the views.

---

## Changelog

- Removed the `near` and `far` fields from the camera and the views.

- Removed the `ClusterFarZMode::CameraFarPlane` far z mode.

## Migration Guide

- Cameras no longer accept near and far values during initialization

- `ClusterFarZMode::Constant` should be used with the far value instead of `ClusterFarZMode::CameraFarPlane`

# Objective

The frame marker event was emitted in the loop of presenting all the windows. This would mark the frame as finished multiple times if more than one window is used.

## Solution

Move the frame marker to after the `for`-loop, so that it gets executed only once.

# Objective

Make it easy to get position and index data from Meshes.

## Solution

It was previously possible to get the mesh data by manually matching on `Mesh::VertexAttributeValues` and `Mesh::Indices` as in the bodies of these two methods (`VertexAttributeValues::as_float3(&self)` and `Indices::iter(&self)`), but that's needless duplication that making these methods `pub` fixes.
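To show the kind of matching these accessors hide, here is a reduced std-only sketch. The enums below are simplified stand-ins with only two variants each, and the boxed iterator return type is an assumption; the real types have more variants and may use a different return type.

```rust
/// Simplified stand-in for mesh index storage.
pub enum Indices {
    U16(Vec<u16>),
    U32(Vec<u32>),
}

impl Indices {
    /// Iterates the indices as `usize`, regardless of the stored width.
    pub fn iter(&self) -> Box<dyn Iterator<Item = usize> + '_> {
        match self {
            Indices::U16(values) => Box::new(values.iter().map(|i| usize::from(*i))),
            Indices::U32(values) => Box::new(values.iter().map(|i| *i as usize)),
        }
    }
}

/// Simplified stand-in for vertex attribute storage.
pub enum VertexAttributeValues {
    Float32(Vec<f32>),
    Float32x3(Vec<[f32; 3]>),
}

impl VertexAttributeValues {
    /// Returns the values as a slice of `[f32; 3]` if they are stored that way.
    pub fn as_float3(&self) -> Option<&[[f32; 3]]> {
        match self {
            VertexAttributeValues::Float32x3(values) => Some(values.as_slice()),
            _ => None,
        }
    }
}
```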

# Objective

Fixes #3180, builds from https://github.com/bevyengine/bevy/pull/2898

## Solution

Support requesting a window to be closed and closing a window in `bevy_window`, and handle this in `bevy_winit`.

This is a stopgap until we move to windows as entities, which I'm sure I'll get around to eventually.

## Changelog

### Added

- `Window::close` to allow closing windows.

- `WindowClosed` to allow reacting to windows being closed.

### Changed

Replaced `bevy::input::system::exit_on_esc_system` with `bevy::window::close_on_esc`.

### Fixed

The app no longer exits when any window is closed. This difference is only observable when there are multiple windows.

## Migration Guide

`bevy::input::system::exit_on_esc_system` has been removed. Use `bevy::window::close_on_esc` instead.

`CloseWindow` has been removed. Use `Window::close` instead.

The `Close` variant has been added to `WindowCommand`. Handle this by closing the relevant window.

# Objective

Fixes #4556

## Solution

StorageBuffer must use the Size of the std430 representation to calculate the buffer size, as the std430 representation is the data that will be written to it.

# Objective

Add support for vertex colors

## Solution

This change is modeled after how vertex tangents are handled, so the shader is conditionally compiled with vertex color support if the mesh has the corresponding attribute set.

Vertex colors are multiplied by the base color. I'm not sure if this is the best for all cases, but may be useful for modifying vertex colors without creating a new mesh.

I chose `VertexFormat::Float32x4`, but I'd prefer 16-bit floats if/when support is added.

## Changelog

### Added

- Vertex colors can be specified using the `Mesh::ATTRIBUTE_COLOR` mesh attribute.

# Objective

Bevy users often want to create circles and other simple shapes.

All the machinery is in place to accomplish this, and there are external crates that help. But when writing code for e.g. a new bevy example, it's not really possible to draw a circle without bringing in a new asset, writing a bunch of scary looking mesh code, or adding a dependency.

In particular, this PR was inspired by this interaction in another PR: https://github.com/bevyengine/bevy/pull/3721#issuecomment-1016774535

## Solution

This PR adds `shape::RegularPolygon` and `shape::Circle` (which is just a `RegularPolygon` that defaults to a large number of sides).
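
For readers wondering what the mesh generation boils down to, here is a rough, standalone sketch. It is not the code in this PR: the function names, the vertex ordering, and the triangle-fan layout are all assumptions made for illustration.

```rust
use std::f32::consts::TAU;

/// Corner positions of a regular polygon centred on the origin in the XY plane.
fn regular_polygon_vertices(radius: f32, sides: usize) -> Vec<[f32; 2]> {
    assert!(sides >= 3, "a polygon needs at least three sides");
    (0..sides)
        .map(|i| {
            let angle = TAU * i as f32 / sides as f32;
            [radius * angle.cos(), radius * angle.sin()]
        })
        .collect()
}

/// Triangle-fan indices: every triangle shares vertex 0.
fn fan_indices(sides: usize) -> Vec<u32> {
    assert!(sides >= 3, "a polygon needs at least three sides");
    (1..sides as u32 - 1).flat_map(|i| [0, i, i + 1]).collect()
}
```

A "circle" in this sense is the same call with a large `sides` value, which is how the description above characterises `shape::Circle`.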

## Discussion

There's a lot of ongoing discussion about shapes in <https://github.com/bevyengine/rfcs/pull/12> and at least one other lingering shape PR (although it seems incomplete).

That RFC currently includes `RegularPolygon` and `Circle` shapes, so I don't think that having working mesh generation code in the engine for those shapes would add much burden to an author of an implementation.

But if we'd prefer not to add additional shapes until after that's sorted out, I'm happy to close this for now.

## Alternatives for users

For any users stumbling on this issue, here are some plugins that will help if you need more shapes.

- https://github.com/Nilirad/bevy_prototype_lyon

- https://github.com/johanhelsing/bevy_smud

- https://github.com/Weasy666/bevy_svg

- https://github.com/redpandamonium/bevy_more_shapes

- https://github.com/ForesightMiningSoftwareCorporation/bevy_polyline

# Objective

- After #3412, `Camera::world_to_screen` got a little bit uglier to use by needing to provide both `Windows` and `Assets<Image>`, even though only one would be needed b697e73c3d/crates/bevy_render/src/camera/camera.rs (L117-L123)

- Sometimes exact coordinates are not needed and normalized device coordinates are enough

## Solution

- Add a function to just get NDC
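
To show why NDC alone can be enough, here is a small standalone sketch. The function names are hypothetical and the origin convention (bottom-left, Y up) is an assumption. Checking whether a point is on screen needs no window or image size at all; the size only matters for the final conversion to viewport coordinates.

```rust
/// Maps normalized device coordinates (each axis in [-1, 1]) to
/// viewport coordinates in [0, width] x [0, height].
fn ndc_to_viewport(ndc: [f32; 2], viewport_size: [f32; 2]) -> [f32; 2] {
    [
        (ndc[0] + 1.0) / 2.0 * viewport_size[0],
        (ndc[1] + 1.0) / 2.0 * viewport_size[1],
    ]
}

/// True if the NDC point lies inside the visible square.
fn is_on_screen(ndc: [f32; 2]) -> bool {
    ndc.iter().all(|c| (-1.0..=1.0).contains(c))
}
```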

### Problem

It currently isn't possible to construct the default value of a reflected type. Because of that, it isn't possible to use `add_component` of `ReflectComponent` to add a new component to an entity because you can't know what the initial value should be.

### Solution

1. add `ReflectDefault` type

```rust
#[derive(Clone)]
pub struct ReflectDefault {
    default: fn() -> Box<dyn Reflect>,
}

impl ReflectDefault {
    pub fn default(&self) -> Box<dyn Reflect> {
        (self.default)()
    }
}

impl<T: Reflect + Default> FromType<T> for ReflectDefault {
    fn from_type() -> Self {
        ReflectDefault {
            default: || Box::new(T::default()),
        }
    }
}
```

2. add `#[reflect(Default)]` to all component types that implement `Default` and are user facing (so not `ComputedSize`, `CubemapVisibleEntities` etc.)

This makes it possible to add the default value of a component to an entity without any compile-time information:

```rust
fn main() {
    let mut app = App::new();
    app.register_type::<Camera>();

    let type_registry = app.world.get_resource::<TypeRegistry>().unwrap();
    let type_registry = type_registry.read();

    let camera_registration = type_registry.get(std::any::TypeId::of::<Camera>()).unwrap();
    let reflect_default = camera_registration.data::<ReflectDefault>().unwrap();
    let reflect_component = camera_registration
        .data::<ReflectComponent>()
        .unwrap()
        .clone();

    let default = reflect_default.default();

    drop(type_registry);

    let entity = app.world.spawn().id();
    reflect_component.add_component(&mut app.world, entity, &*default);

    let camera = app.world.entity(entity).get::<Camera>().unwrap();
    dbg!(&camera);
}
```

### Open questions

- should we have `ReflectDefault` or `ReflectFromWorld` or both?

# Objective

- While optimising many_cubes, I noticed that all material handles are extracted regardless of whether the entity to which the handle belongs is visible or not. As such >100k handles are extracted when only <20k are visible.

## Solution

- Only extract material handles of visible entities.

- This improves `many_cubes -- sphere` from ~42fps to ~48fps. It reduces not only the extraction time but also system commands time. `Handle<StandardMaterial>` extraction and its system commands went from 0.522ms + 3.710ms to 0.267ms + 0.227ms respectively, an 88% reduction for this system in this case. It's very view dependent but...
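
The idea can be sketched without any engine types. Everything below is hypothetical (the `Item` struct, the `u32` standing in for a material handle) and only illustrates filtering on visibility before copying handles, not the actual extraction system.

```rust
/// Stand-in for an entity with a visibility flag and a material handle id.
struct Item {
    visible: bool,
    material: u32,
}

/// Collects material handles only for items that are currently visible.
fn extract_visible_materials(items: &[Item]) -> Vec<u32> {
    items
        .iter()
        .filter(|item| item.visible)
        .map(|item| item.material)
        .collect()
}
```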

# Objective

`bevy_ecs` has large amounts of unsafe code which is hard to get right and makes it difficult to audit for soundness.

## Solution

Introduce lifetimed, type-erased pointers: `Ptr<'a>`, `PtrMut<'a>`, `OwningPtr<'a>` and `ThinSlicePtr<'a, T>`, which are newtypes around a raw pointer with a lifetime, conceptually representing strong invariants about the pointee and the validity of the pointer.
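
For a feel of what such a newtype looks like, here is a simplified standalone sketch of a shared, type-erased pointer. It is an illustration under my own assumptions (the `from_ref` and `deref` method names in particular), not the exact definitions added by this PR.

```rust
use std::marker::PhantomData;
use std::ptr::NonNull;

/// Type-erased shared pointer that cannot outlive the borrow `'a`.
#[derive(Clone, Copy)]
struct Ptr<'a>(NonNull<u8>, PhantomData<&'a u8>);

impl<'a> Ptr<'a> {
    /// Erases the type of a shared reference while keeping its lifetime.
    fn from_ref<T>(value: &'a T) -> Self {
        Ptr(NonNull::from(value).cast::<u8>(), PhantomData)
    }

    /// Recovers a typed reference.
    ///
    /// # Safety
    /// `T` must be the type this pointer was created from.
    unsafe fn deref<T>(self) -> &'a T {
        // SAFETY: the caller guarantees the pointee is a valid `T`,
        // and the lifetime `'a` keeps the original borrow alive.
        unsafe { &*self.0.cast::<T>().as_ptr() }
    }
}
```

Compared to a bare `*const u8`, the borrow checker now rejects any use of the pointer after the original borrow ends, so only the type has to be upheld manually.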

The process of converting bevy_ecs to use these has already caught multiple cases of unsound behavior.

## Changelog

TL;DR for release notes: `bevy_ecs` now uses lifetimed, type-erased pointers internally, significantly improving safety and legibility without sacrificing performance. This should have approximately no end user impact, unless you were meddling with the (unfortunately public) internals of `bevy_ecs`.

- `Fetch`, `FilterFetch` and `ReadOnlyFetch` traits no longer have a `'state` lifetime

  - this was unneeded

- `ReadOnly/Fetch` associated types on `WorldQuery` are now on a new `WorldQueryGats<'world>` trait

  - was required to work around lack of Generic Associated Types (we wish to express `type Fetch<'a>: Fetch<'a>`)

- `derive(WorldQuery)` no longer requires `'w` lifetime on struct

  - this was unneeded, and improves the end user experience

- `EntityMut::get_unchecked_mut` returns `&'_ mut T` not `&'w mut T`

  - allows easier use of unsafe API with fewer footguns, and can be worked around via lifetime transmutery as a user

- `Bundle::from_components` now takes a `ctx` parameter to pass to the `FnMut` closure

  - required because closure return types can't borrow from captures

- `Fetch::init` takes `&'world World`, `Fetch::set_archetype` takes `&'world Archetype` and `&'world Tables`, `Fetch::set_table` takes `&'world Table`

  - allows types implementing `Fetch` to store borrows into world

- `WorldQuery` trait now has a `shrink` fn to shorten the lifetime in `Fetch::<'a>::Item`

  - this works around lack of subtyping of assoc types, rust doesn't allow you to turn `<T as Fetch<'static>>::Item` into `<T as Fetch<'a>>::Item`

  - `QueryCombinationsIter` requires this

- Most types implementing `Fetch` now have a lifetime `'w`

  - allows the fetches to store borrows of world data instead of using raw pointers

## Migration guide

- `EntityMut::get_unchecked_mut` returns a more restricted lifetime, there is no general way to migrate this as it depends on your code

- `Bundle::from_components` implementations must pass the `ctx` arg to `func`

- `Bundle::from_components` callers have to use a fn arg instead of closure captures for borrowing from world

- Remove lifetime args on `derive(WorldQuery)` structs as it is nonsensical

- `<Q as WorldQuery>::ReadOnly/Fetch` should be changed to either `RO/QueryFetch<'world>` or `<Q as WorldQueryGats<'world>>::ReadOnly/Fetch`

- `<F as Fetch<'w, 's>>` should be changed to `<F as Fetch<'w>>`

- Change the fn sigs of `Fetch::init/set_archetype/set_table` to match respective trait fn sigs

- Implement the required `fn shrink` on any `WorldQuery` implementations

- Move assoc types `Fetch` and `ReadOnlyFetch` on `WorldQuery` impls to `WorldQueryGats` impls

- Pass an appropriate `'world` lifetime to whatever fetch struct you are for some reason using

### Type inference regression

In some cases rustc may give spurious errors when attempting to infer the `F` parameter on a query/querystate. This can be fixed by manually specifying the type, i.e. `QueryState::<_, ()>::new(world)`. The error is rather confusing:

```text
error[E0271]: type mismatch resolving `<() as Fetch<'_>>::Item == bool`
    --> crates/bevy_pbr/src/render/light.rs:1413:30
     |
1413 |             main_view_query: QueryState::new(world),
     |                              ^^^^^^^^^^^^^^^ expected `bool`, found `()`
     |
     = note: required because of the requirements on the impl of `for<'x> FilterFetch<'x>` for `<() as WorldQueryGats<'x>>::Fetch`
note: required by a bound in `bevy_ecs::query::QueryState::<Q, F>::new`
    --> crates/bevy_ecs/src/query/state.rs:49:32
     |
49   |     for<'x> QueryFetch<'x, F>: FilterFetch<'x>,
     |                                ^^^^^^^^^^^^^^^ required by this bound in `bevy_ecs::query::QueryState::<Q, F>::new`
```

---

Made with help from @BoxyUwU and @alice-i-cecile

Co-authored-by: Boxy <supbscripter@gmail.com>

# Objective

Reduce from-scratch build time.

## Solution

Reduce the size of the critical path by removing dependencies between crates where not necessary. For `cargo check --no-default-features` this reduced build time from ~51s to ~45s. For some commits I am not completely sure whether the build time reduction is worth the convenience lost by the commit. If not, I can drop them.

# Objective

Fix wonky torus normals.

## Solution

I attempted this previously in #3549, but it looks like I botched it. It seems like I mixed up the y/z axes. Somehow, the result looked okay from that particular camera angle.

This video shows toruses generated with

- [left, orange] original torus mesh code

- [middle, pink] PR 3549

- [right, purple] This PR

https://user-images.githubusercontent.com/200550/164093183-58a7647c-b436-4512-99cd-cf3b705cefb0.mov
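
For reference, this is the math I'd expect for a correct normal, written as a standalone sketch rather than the mesh code in this PR. It assumes the torus lies in the XZ plane with Y up, with `theta` going around the main ring and `phi` around the tube; the function and parameter names are made up for illustration.

```rust
/// Position and unit normal of a point on a torus lying in the XZ plane (Y up).
/// `theta` goes around the main ring, `phi` around the tube.
fn torus_point(
    ring_radius: f32,
    tube_radius: f32,
    theta: f32,
    phi: f32,
) -> ([f32; 3], [f32; 3]) {
    let (sin_t, cos_t) = theta.sin_cos();
    let (sin_p, cos_p) = phi.sin_cos();
    let position = [
        (ring_radius + tube_radius * cos_p) * cos_t,
        tube_radius * sin_p,
        (ring_radius + tube_radius * cos_p) * sin_t,
    ];
    // The normal points from the tube's centre line towards the surface point.
    let normal = [cos_p * cos_t, sin_p, cos_p * sin_t];
    (position, normal)
}
```

Swapping the Y and Z components of `normal` while leaving `position` alone gives the kind of axis mix-up described above: it still looks plausible from some camera angles but is wrong in general.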

# Objective

- Related #4276.

- Part of the splitting process of #3503.

## Solution

- Move `Size` to `bevy_ui`.

## Reasons

- `Size` is only needed in `bevy_ui` (because it needs to use `Val` instead of `f32`), but it's also used as a worse `Vec2` replacement in other areas.

- `Vec2` is more powerful than `Size` so it should be used whenever possible.

- Discussion in #3503.

## Changelog

### Changed

- The `Size` type got moved from `bevy_math` to `bevy_ui`.

## Migration Guide