# Objective

The `LICENSE` file in the root directory was removed in #4966. This breaks the license shield link in the README.

## Solution

I changed the link to point at the license section of the README on the main repo page instead. I think this is better than a 404, but I am unsure whether it's the best solution, so feedback is appreciated.

# Objective

`bevy::render::texture::ImageSettings` was added to prelude in #5566, so these `use` statements are unnecessary and the examples can be made a bit more concise.

## Solution

Remove `use bevy::render::texture::ImageSettings`

# Objective

- I wanted to have controls independent from keyboard layout and found that bevy doesn't have a proper implementation for that

## Solution

- I created a `ScanCode` enum with two hundred scan codes and updated `keyboard_input_system` to include and update `ResMut<Input<ScanCode>>`

- closes both https://github.com/bevyengine/bevy/issues/2052 and https://github.com/bevyengine/bevy/issues/862

Co-authored-by: Bleb1k <91003089+Bleb1k@users.noreply.github.com>

#4197 intended to remove all `pub` constructors of `Children` and `Parent` and it seems like this one was missed.

Co-authored-by: devil-ira <justthecooldude@gmail.com>

# Objective

- Fixes #5544

- Part of the splitting process of #3692.

## Solution

- Document everything in the `gamepad.rs` file.

- Add a doc example for mocking gamepad input.

---

## Changelog

- Added and updated the documentation inside of the `gamepad.rs` file.

# Objective

- Similar to #5512, the `View` struct definitions in the shaders in `bevy_sprite` and `bevy_ui` were out of sync with the Rust-side `ViewUniform`. Only `view_proj` was being used, and it is the first member. As those shaders are not customisable, it makes little difference in practice, unlike for `Mesh2d`.

## Solution

- Sync shader `View` struct definition in `bevy_sprite` and `bevy_ui` with the correct definition that matches `ViewUniform`

Co-authored-by: Alice Cecile <alice.i.cecile@gmail.com>

# Objective

Change the frame time diagnostic from seconds to milliseconds, because the value will always be less than one second and milliseconds are the common diagnostic display unit for game engines.

## Solution

- multiplied the existing value by 1000
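
A minimal sketch of that conversion, assuming the diagnostic starts from a frame delta expressed as a `Duration`. `frame_time_ms` is a hypothetical helper name used only for illustration, not part of Bevy's API:

```rust
use std::time::Duration;

/// Hypothetical helper (not Bevy API): converts a frame delta to
/// milliseconds by multiplying the value in seconds by 1000.
fn frame_time_ms(delta: Duration) -> f64 {
    delta.as_secs_f64() * 1000.0
}
```

A 16 ms frame is then reported as roughly `16.0` instead of `0.016`.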

---

## Changelog

Frametimes are now reported in milliseconds

Co-authored-by: Syama Mishra <38512086+SyamaMishra@users.noreply.github.com>

Co-authored-by: McSpidey <mcspidey@gmail.com>

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

In Bevy 0.8, the default filter mode was changed to linear (#4465). I believe this is a sensible default, but it's also very common to want to use point filtering (e.g. for pixel art games).

## Solution

I am proposing including `bevy_render::texture::ImageSettings` in the Bevy prelude so it is more ergonomic to change the filtering in such cases.

---

## Changelog

### Added

- Added `bevy_render::texture::ImageSettings` to prelude.

# Objective

Add `Reflect`/`FromReflect` impls for the `NonZero` integer types. I'm guessing these haven't been added yet because no one has needed them so far.

# Objective

Simplify the `WorldQuery` trait hierarchy as much as possible by putting it all in one trait. If/when GATs are stabilised this can be trivially migrated over to use them, although that's not why I made this PR. The actual reasons are:

- Moves all of the conceptually related unsafe code for a `WorldQuery` next to each other

- Removes now unnecessary traits simplifying the "type system magic" in bevy_ecs

---

## Changelog

All methods/functions/types/consts on `FetchState` and `Fetch` traits have been moved to the `WorldQuery` trait and the other traits removed. `WorldQueryGats` now only contains an `Item` and `Fetch` assoc type.

## Migration Guide

Implementors should move items in impls to the `WorldQuery`/`WorldQueryGats` traits and remove any `Fetch`/`FetchState` impls

Any use sites of items in the `Fetch`/`FetchState` traits should be updated to use the `WorldQuery` trait items instead

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

Fixes #5384 and maybe other issues around window closing/app not exiting

## Solution

There are three systems involved in exiting when closing a window:

- `close_when_requested` asking Winit to close the window in stage `Update`

- `exit_on_all_closed` exiting when no window remains opened in stage `Update`

- `change_window` removing windows that are closed in stage `PostUpdate`

This ordering meant that when closing a window, we had to run one more frame to actually exit. As there was no window, panics could occur in systems assuming there was a window. For a Bevy app using the low-power options, that means waiting for the timeout before actually exiting the app (60 seconds by default).

This PR changes the ordering so that `exit_on_all_closed` happens after `change_window` in the same frame, so there isn't an extra frame without a window.
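
The effect of the reordering can be sketched with a tiny simulation. This is only a model under stated assumptions: `AppState` and the three functions are simplified stand-ins named after the real systems, not Bevy code, and stages are reduced to a plain ordered list.

```rust
/// Simplified stand-in for the window state tracked by the app (not Bevy API).
#[derive(Default)]
struct AppState {
    open_windows: usize,
    close_requested: bool,
    pending_close: bool,
    should_exit: bool,
}

/// Stand-in: asks the backend to close the window.
fn close_when_requested(app: &mut AppState) {
    if app.close_requested {
        app.close_requested = false;
        app.pending_close = true;
    }
}

/// Stand-in: removes windows that have been closed.
fn change_window(app: &mut AppState) {
    if app.pending_close {
        app.pending_close = false;
        app.open_windows -= 1;
    }
}

/// Stand-in: exits once no window remains open.
fn exit_on_all_closed(app: &mut AppState) {
    if app.open_windows == 0 {
        app.should_exit = true;
    }
}

/// Runs frames with the given system order and returns how many frames
/// elapse between the close request and the app exiting.
fn frames_until_exit(systems: &[fn(&mut AppState)]) -> u32 {
    let mut app = AppState {
        open_windows: 1,
        close_requested: true,
        ..Default::default()
    };
    let mut frames = 0;
    while !app.should_exit {
        for system in systems {
            system(&mut app);
        }
        frames += 1;
    }
    frames
}
```

With the old order (`close_when_requested`, `exit_on_all_closed`, `change_window`) the model needs two frames to exit; with `exit_on_all_closed` moved after `change_window` it exits in the same frame.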

> In draft until #4761 is merged. See the relevant commits [here](a85fe94a18).

---

# Objective

Update enums across Bevy to use the new enum reflection and get rid of `#[reflect_value(...)]` usages.

## Solution

Find and replace all[^1] instances of `#[reflect_value(...)]` on enum types.

---

## Changelog

- Updated all[^1] reflected enums to implement `Enum` (i.e. they are no longer `ReflectRef::Value`)

## Migration Guide

Bevy-defined enums have been updated to implement `Enum` and are not considered value types (`ReflectRef::Value`) anymore. This means that their serialized representations will need to be updated. For example, given the Bevy enum:

```rust
pub enum ScalingMode {
    None,
    WindowSize,
    Auto { min_width: f32, min_height: f32 },
    FixedVertical(f32),
    FixedHorizontal(f32),
}
```

You will need to update the serialized versions accordingly.

```js
// OLD FORMAT
{
  "type": "bevy_render::camera::projection::ScalingMode",
  "value": FixedHorizontal(720),
},
// NEW FORMAT
{
  "type": "bevy_render::camera::projection::ScalingMode",
  "enum": {
    "variant": "FixedHorizontal",
    "tuple": [
      {
        "type": "f32",
        "value": 720,
      },
    ],
  },
},
```

This may also have other smaller implications (such as `Debug` representation), but serialization is probably the most prominent.

[^1]: All enums except `HandleId` as neither `Uuid` nor `AssetPathId` implement the reflection traits

# Objective

> This is a revival of #1347. Credit for the original PR should go to @Davier.

Currently, enums are treated as `ReflectRef::Value` types by `bevy_reflect`. Obviously, there needs to be a better representation for enums using the reflection API.

## Solution

Based on prior work from @Davier, an `Enum` trait has been added as well as the ability to automatically implement it via the `Reflect` derive macro. This allows enums to be expressed dynamically:

```rust
// `PartialEq` and `Debug` are needed for the `assert_eq!` below
#[derive(Reflect, PartialEq, Debug)]
enum Foo {
    A,
    B(usize),
    C { value: f32 },
}

let mut foo = Foo::B(123);
assert_eq!("B", foo.variant_name());
assert_eq!(1, foo.field_len());

let new_value = DynamicEnum::from(Foo::C { value: 1.23 });
foo.apply(&new_value);
assert_eq!(Foo::C { value: 1.23 }, foo);
```

### Features

#### Derive Macro

Use the `#[derive(Reflect)]` macro to automatically implement the `Enum` trait for enum definitions. Optionally, you can use `#[reflect(ignore)]` with both variants and variant fields, just like you can with structs. These ignored items will not be considered as part of the reflection and cannot be accessed via reflection.

```rust
#[derive(Reflect)]
enum TestEnum {
    A,
    // Uncomment to ignore all of `B`
    // #[reflect(ignore)]
    B(usize),
    C {
        // Uncomment to ignore only field `foo` of `C`
        // #[reflect(ignore)]
        foo: f32,
        bar: bool,
    },
}
```

#### Dynamic Enums

Enums may be created/represented dynamically via the `DynamicEnum` struct. The main purpose of this struct is to allow enums to be deserialized into a partial state and to allow dynamic patching. In order to ensure conversion from a `DynamicEnum` to a concrete enum type goes smoothly, be sure to add `FromReflect` to your derive macro.

```rust
let mut value = TestEnum::A;

// Create from a concrete instance
let dyn_enum = DynamicEnum::from(TestEnum::B(123));
value.apply(&dyn_enum);
assert_eq!(TestEnum::B(123), value);

// Create a purely dynamic instance
let dyn_enum = DynamicEnum::new("TestEnum", "A", ());
value.apply(&dyn_enum);
assert_eq!(TestEnum::A, value);
```

#### Variants

An enum value is always represented as one of its variants— never the enum in its entirety.

```rust
let value = TestEnum::A;
assert_eq!("A", value.variant_name());

// Since we are using the `A` variant, we cannot also be the `B` variant
assert_ne!("B", value.variant_name());
```

All variant types are representable within the `Enum` trait: unit, struct, and tuple.

You can get the current type like:

```rust
match value.variant_type() {
    VariantType::Unit => println!("A unit variant!"),
    VariantType::Struct => println!("A struct variant!"),
    VariantType::Tuple => println!("A tuple variant!"),
}
```

> Notice that they don't contain any values representing the fields. These are purely tags.

If a variant has them, you can access the fields as well:

```rust
let mut value = TestEnum::C {
    foo: 1.23,
    bar: false,
};

// Read/write specific fields
// (fields are `dyn Reflect`, so downcast before assigning)
*value
    .field_mut("bar")
    .unwrap()
    .downcast_mut::<bool>()
    .unwrap() = true;

// Iterate over the entire collection of fields
for field in value.iter_fields() {
    println!("{} = {:?}", field.name(), field.value());
}
```

#### Variant Swapping

It might seem odd to group all variant types under a single trait (why allow `iter_fields` on a unit variant?), but the reason this was done ~~is to easily allow *variant swapping*.~~ As I was recently drafting up the **Design Decisions** section, I discovered that other solutions could have been made to work with variant swapping. So while there are reasons to keep the all-in-one approach, variant swapping is _not_ one of them.

```rust
let mut value: Box<dyn Enum> = Box::new(TestEnum::A);
value.set(Box::new(TestEnum::B(123))).unwrap();
```

#### Serialization

Enums can be serialized and deserialized via reflection without needing to implement `Serialize` or `Deserialize` themselves (which can save thousands of lines of generated code). Below are the ways an enum can be serialized.

> Note, like the rest of reflection-based serialization, the order of the keys in these representations is important!

##### Unit

```json
{
  "type": "my_crate::TestEnum",
  "enum": {
    "variant": "A"
  }
}
```

##### Tuple

```json
{
  "type": "my_crate::TestEnum",
  "enum": {
    "variant": "B",
    "tuple": [
      {
        "type": "usize",
        "value": 123
      }
    ]
  }
}
```

<details>

<summary>Effects on Option</summary>

This ends up making `Option` look a little ugly:

```json
{
  "type": "core::option::Option<usize>",
  "enum": {
    "variant": "Some",
    "tuple": [
      {
        "type": "usize",
        "value": 123
      }
    ]
  }
}
```

</details>

##### Struct

```json
{
  "type": "my_crate::TestEnum",
  "enum": {
    "variant": "C",
    "struct": {
      "foo": {
        "type": "f32",
        "value": 1.23
      },
      "bar": {
        "type": "bool",
        "value": false
      }
    }
  }
}
```

## Design Decisions

<details>

<summary><strong>View Section</strong></summary>

This section is here to provide some context for why certain decisions were made for this PR, alternatives that could have been used instead, and what could be improved upon in the future.

### Variant Representation

One of the biggest decisions was how to represent variants. The current design uses an "all-in-one" design where unit, tuple, and struct variants are all simultaneously represented by the `Enum` trait. This is not the only way it could have been done, though.

#### Alternatives

##### 1. Variant Traits

One way of representing variants would be to define traits for each variant, implementing them whenever an enum featured at least one instance of them. This would allow us to define variants like:

```rust
pub trait Enum: Reflect {
    fn variant(&self) -> Variant;
}

pub enum Variant<'a> {
    Unit,
    Tuple(&'a dyn TupleVariant),
    Struct(&'a dyn StructVariant),
}

pub trait TupleVariant {
    fn field_len(&self) -> usize;
    // ...
}
```

And then do things like:

```rust
fn get_tuple_len(foo: &dyn Enum) -> usize {
    match foo.variant() {
        Variant::Tuple(tuple) => tuple.field_len(),
        _ => panic!("not a tuple variant!"),
    }
}
```

The reason this PR does not go with this approach is because of the fact that variants are not separate types. In other words, we cannot implement traits on specific variants— these cover the *entire* enum. This means we offer an easy footgun:

```rust
let foo: Option<i32> = None;
let my_enum = Box::new(foo) as Box<dyn TupleVariant>;
```

Here, `my_enum` contains `foo`, which is a unit variant. However, since we need to implement `TupleVariant` for `Option` as a whole, it's possible to perform such a cast. This is obviously wrong, but could easily go unnoticed. So unfortunately, this makes it not a good candidate for representing variants.

##### 2. Variant Structs

To get around the issue of traits necessarily needing to apply to both the enum and its variants, we could instead use structs that are created on a per-variant basis. This was also considered but was ultimately [removed](71d27ab3c6) due to concerns about allocations.

Each variant struct would probably look something like:

```rust
pub trait Enum: Reflect {
    // Takes `&mut self` because the returned variant holds mutable borrows
    fn variant_mut(&mut self) -> VariantMut<'_>;
}

pub enum VariantMut<'a> {
    Unit,
    Tuple(TupleVariantMut<'a>),
    Struct(StructVariantMut<'a>),
}

struct StructVariantMut<'a> {
    fields: Vec<&'a mut dyn Reflect>,
    field_indices: HashMap<Cow<'static, str>, usize>,
}
```

This allows us to isolate struct variants into their own defined struct and define methods specifically for their use. It also prevents users from casting to it since it's not a trait. However, this is not an optimal solution. Both `field_indices` and `fields` will require an allocation (remember, a `Box<[T]>` still requires a `Vec<T>` in order to be constructed). This *might* be a problem if called frequently enough.

##### 3. Generated Structs

The original design, implemented by @Davier, instead generates structs specific for each variant. So if we had a variant path like `Foo::Bar`, we'd generate a struct named `FooBarWrapper`. This would be newtyped around the original enum and forward tuple or struct methods to the enum with the chosen variant.

Because it involved using the `Tuple` and `Struct` traits (which are also both bound on `Reflect`), this meant a bit more code had to be generated. For a single struct variant with one field, the generated code amounted to ~110LoC. However, each new field added to that variant only added ~6 more LoC.

In order to work properly, the enum had to be transmuted to the generated struct:

```rust
fn variant(&self) -> crate::EnumVariant<'_> {
    match self {
        // Only the variant matters here; the fields are reached via the wrapper
        Foo::Bar { .. } => {
            let wrapper_ref = unsafe {
                std::mem::transmute::<&Self, &FooBarWrapper>(self)
            };
            crate::EnumVariant::Struct(wrapper_ref as &dyn crate::Struct)
        }
    }
}
```

This works because `FooBarWrapper` is defined as `repr(transparent)`.

Out of all the alternatives, this would probably be the one most likely to be used again in the future. The reasons this PR did not continue to use it were:

* To reduce generated code (which would hopefully speed up compile times)

* To avoid cluttering the code with generated structs not visible to the user

* To keep bevy_reflect simple and extensible (these generated structs act as proxies and might not play well with current or future systems)

* To avoid additional unsafe blocks

* My own misunderstanding of @Davier's code

That last point is obviously on me. I misjudged the code to be too unsafe and unable to handle variant swapping (which it probably could) when I was rebasing it. Looking over it again when writing up this whole section, I see that it was actually a pretty clever way of handling variant representation.

#### Benefits of All-in-One

As stated before, the current implementation uses an all-in-one approach. All variants are capable of containing fields as far as `Enum` is concerned. This provides a few benefits that the alternatives do not (reduced indirection, safer code, etc.).

The biggest benefit, though, is direct field access. Rather than forcing users to have to go through pattern matching, we grant direct access to the fields contained by the current variant. The reason we can do this is because all of the pattern matching happens internally. Getting the field at index `2` will automatically return `Some(...)` for the current variant if it has a field at that index or `None` if it doesn't (or can't).

This could be useful for scenarios where the variant has already been verified or just set/swapped (or even where the type of variant doesn't matter):

```rust
let dyn_enum: &mut dyn Enum = &mut Foo::Bar { value: 123 };

// We know it's the `Bar` variant
let field = dyn_enum.field("value").unwrap();
```

Reflection is not a type-safe abstraction— almost every return value is wrapped in `Option<...>`. There are plenty of places to check and recheck that a value is what Reflect says it is. Forcing users to have to go through `match` each time they want to access a field might just be an extra step among dozens of other verification processes.

Some might disagree, but ultimately, my view is that the benefit here is an improvement to the ergonomics and usability of reflected enums.

</details>

---

## Changelog

### Added

* Added `Enum` trait

* Added `Enum` impl to `Reflect` derive macro

* Added `DynamicEnum` struct

* Added `DynamicVariant`

* Added `EnumInfo`

* Added `VariantInfo`

* Added `StructVariantInfo`

* Added `TupleVariantInfo`

* Added `UnitVariantInfo`

* Added serialization/deserialization support for enums

* Added `EnumSerializer`

* Added `VariantType`

* Added `VariantFieldIter`

* Added `VariantField`

* Added `enum_partial_eq(...)`

* Added `enum_hash(...)`

### Changed

* `Option<T>` now implements `Enum`

* `bevy_window` now depends on `bevy_reflect`

* Implemented `Reflect` and `FromReflect` for `WindowId`

* Derive `FromReflect` on `PerspectiveProjection`

* Derive `FromReflect` on `OrthographicProjection`

* Derive `FromReflect` on `WindowOrigin`

* Derive `FromReflect` on `ScalingMode`

* Derive `FromReflect` on `DepthCalculation`

## Migration Guide

* Enums no longer need to be treated as values and usages of `#[reflect_value(...)]` can be removed or replaced by `#[reflect(...)]`

* Enums (including `Option<T>`) now take a different format when serializing. The format is described above, but this may cause issues for existing scenes that make use of enums.

---

Also shout out to @nicopap for helping clean up some of the code here! It's a big feature so help like this is really appreciated!

Co-authored-by: Gino Valente <gino.valente.code@gmail.com>

# Objective

Currently, actually using a `Local` in a system requires `T: FromWorld`, but that requirement is only expressed in the `SystemParam` machinery, which leads to a confusing error message when the user attempts to add an invalid system. Adding this bound to `Local` directly improves clarity on usage and semantics.

## Solution

- Add `T: FromWorld` bound to `Local`'s definition

## Migration Guide

- It might be possible for references to `Local`s without `T: FromWorld` to exist, but these should be exceedingly rare and probably dead code. In the event that one of these is encountered, the easiest solutions are to delete the code or wrap the inner `T` in an `Option` to allow it to be default constructed to `None`.
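
A self-contained sketch of the idea and of the `Option` workaround. `World`, `FromWorld`, and `Local` here are simplified stand-ins written for illustration, not the real Bevy definitions, and `NoDefault` is a hypothetical user type:

```rust
/// Stand-in for Bevy's `World` (illustration only).
#[derive(Default)]
struct World;

/// Stand-in for `FromWorld`: build a value with access to the world.
trait FromWorld {
    fn from_world(world: &mut World) -> Self;
}

/// Anything that is `Default` is trivially `FromWorld`.
impl<T: Default> FromWorld for T {
    fn from_world(_world: &mut World) -> Self {
        T::default()
    }
}

/// The bound sits on the type itself, so an invalid `Local<T>` is
/// rejected where it is written rather than inside system-param machinery.
struct Local<T: FromWorld>(T);

impl<T: FromWorld> Local<T> {
    fn new(world: &mut World) -> Self {
        Local(T::from_world(world))
    }
}

/// Hypothetical type with no `Default` (and so no `FromWorld`) impl.
struct NoDefault(u32);

// `Local<NoDefault>` would not compile. Wrapping in `Option` does,
// because `Option<T>` is `Default` for any `T` and starts as `None`.
type WrappedLocal = Local<Option<NoDefault>>;
```

In this sketch `Local<NoDefault>` fails at the point of use, while `WrappedLocal` is constructed with an inner `None`.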

# Objective

- Migrate changes from #3503.

## Solution

- Change `Size<T>` and `UiRect<T>` to `Size` and `UiRect` using `Val`.

- Implement `Sub`, `SubAssign`, `Mul`, `MulAssign`, `Div` and `DivAssign` for `Val`.

- Update tests for `Size`.
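
As a rough illustration of what such operator impls can look like, here is a sketch for `Mul` and `MulAssign` only. It assumes a simplified stand-in `Val` enum and an `f32` right-hand side, where unit-less variants pass through unchanged; the actual impls in the PR may differ:

```rust
use std::ops::{Mul, MulAssign};

/// Simplified stand-in for the UI `Val` type (illustration only).
#[derive(Debug, Clone, Copy, PartialEq)]
enum Val {
    Undefined,
    Auto,
    Px(f32),
    Percent(f32),
}

impl Mul<f32> for Val {
    type Output = Val;

    /// Scales the numeric variants; `Undefined` and `Auto` are unchanged.
    fn mul(self, rhs: f32) -> Val {
        match self {
            Val::Undefined => Val::Undefined,
            Val::Auto => Val::Auto,
            Val::Px(value) => Val::Px(value * rhs),
            Val::Percent(value) => Val::Percent(value * rhs),
        }
    }
}

impl MulAssign<f32> for Val {
    fn mul_assign(&mut self, rhs: f32) {
        *self = *self * rhs;
    }
}
```

`Sub`/`SubAssign` and `Div`/`DivAssign` would follow the same pattern.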

---

## Changelog

### Changed

- The generic `T` of `Size` and `UiRect` got removed and instead they both now always use `Val`.

## Migration Guide

- The generic `T` of `Size` and `UiRect` got removed and instead they both now always use `Val`. If you used a `Size<f32>` consider replacing it with a `Vec2` which is way more powerful.

Co-authored-by: KDecay <KDecayMusic@protonmail.com>

# Objective

The `View` struct in `mesh2d_view_types.wgsl` was missing a couple of fields present in `bevy::render::ViewUniform`, causing rendering issues for shaders using later fields.

## Solution

Solved by adding the fields in question

# Objective

- Fix / support KTX2 array / cubemap / cubemap array textures

- Fixes #4495. Supersedes #4514.

## Solution

- Add `Option<TextureViewDescriptor>` to `Image` to enable configuration of the `TextureViewDimension` of a texture.

- This allows users to set `D2Array`, `D3`, `Cube`, `CubeArray` or whatever they need

- Automatically configure this when loading KTX2

- Transcode all layers and faces instead of just one

- Use the UASTC block size of 128 bits, and the number of blocks in x/y for a given mip level in order to determine the offset of the layer and face within the KTX2 mip level data

- `wgpu` wants data ordered as layer 0 mip 0..n, layer 1 mip 0..n, etc. See https://docs.rs/wgpu/latest/wgpu/util/trait.DeviceExt.html#tymethod.create_texture_with_data

- Reorder the data KTX2 mip X layer Y face Z to `wgpu` layer Y face Z mip X order

- Add a `skybox` example to demonstrate / test loading cubemaps from PNG and KTX2, including ASTC 4x4, BC7, and ETC2 compression for support everywhere. Note that you need to enable the `ktx2,zstd` features to be able to load the compressed textures.
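
The reordering step described above (KTX2 mip / layer / face order into `wgpu`'s layer / face / mip order) can be sketched as follows. This is a simplified model, not the loader's code: `reorder_for_wgpu` is a hypothetical function, and it assumes every layer/face image within one mip level has the same byte length.

```rust
/// Hypothetical helper: reorders texture data from KTX2 order
/// (mip -> layer -> face) into layer -> face -> mip order.
///
/// `levels[m]` holds all bytes of mip level `m`. Assumes each layer/face
/// image within one level has the same byte length.
fn reorder_for_wgpu(levels: &[Vec<u8>], layer_count: usize, face_count: usize) -> Vec<u8> {
    let images_per_level = layer_count * face_count;
    let mut out = Vec::with_capacity(levels.iter().map(Vec::len).sum());
    for layer in 0..layer_count {
        for face in 0..face_count {
            for level in levels {
                // Byte length of one layer/face image at this mip level
                let image_len = level.len() / images_per_level;
                let start = (layer * face_count + face) * image_len;
                out.extend_from_slice(&level[start..start + image_len]);
            }
        }
    }
    out
}
```

For two layers, one face, and two mip levels, the input `[[L0M0, L1M0], [L0M1, L1M1]]` becomes `L0M0, L0M1, L1M0, L1M1`.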

---

## Changelog

- Fixed: KTX2 array / cubemap / cubemap array textures

- Fixed: Validation failure for compressed textures stored in KTX2 where the width/height are not a multiple of the block dimensions.

- Added: `Image` now has an `Option<TextureViewDescriptor>` field to enable configuration of the texture view. This is useful for configuring the `TextureViewDimension` when it is not just a plain 2D texture and the loader could/did not identify what it should be.

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

Replace `many_for_each_mut` with `iter_many_mut` using the same tricks to avoid aliased mutability that `iter_combinations_mut` uses.

<sub>I tried rebasing the draft PR I made for this before and it died. F</sub>

## Why

`many_for_each_mut` is worse for a few reasons:

1. The closure prevents the use of `continue` and `break`, and `return` behaves like a limited `continue`.

2. rustfmt will crumple it and double the indentation when the line gets too long.

```rust
query.many_for_each_mut(
    &entity_list,
    |(mut transform, velocity, mut component_c)| {
        // Double trouble.
    },
);
```

3. It is more surprising to have `many_for_each_mut` as a mutable counterpart to `iter_many` than `iter_many_mut`.

4. It required a separate unsafe fn; more unsafe code to maintain.

5. The `iter_many_mut` API matches the existing `iter_combinations_mut` API.
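
Point 1 can be illustrated without Bevy at all. The sketch below uses plain slice iterators as a stand-in for the query API (the function names are hypothetical), contrasting closure-style iteration with a loop:

```rust
/// Closure style: `return` only skips the current item (like `continue`),
/// and there is no way to stop the iteration early.
fn sum_with_closure(values: &mut [i32]) -> i32 {
    let mut sum = 0;
    values.iter_mut().for_each(|value| {
        if *value < 0 {
            return; // behaves like `continue`
        }
        *value *= 2;
        sum += *value;
    });
    sum
}

/// Loop style: ordinary `continue` and `break` are available.
fn sum_until_zero(values: &mut [i32]) -> i32 {
    let mut sum = 0;
    for value in values.iter_mut() {
        if *value < 0 {
            continue;
        }
        if *value == 0 {
            break; // stop early, impossible in the closure version
        }
        *value *= 2;
        sum += *value;
    }
    sum
}
```

Given `[1, -1, 0, 3]`, the closure version has to visit every element, while the loop version stops at the `0`.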

Co-authored-by: devil-ira <justthecooldude@gmail.com>

# Objective

Sadly, #4944 introduces a serious exponential despawn behavior, which cannot be included in 0.8. [Handling AABBs properly is a controversial topic](https://github.com/bevyengine/bevy/pull/5423#issuecomment-1199995825) and one that deserves more time than the day we have left before release.

## Solution

This reverts commit c2b332f98a.

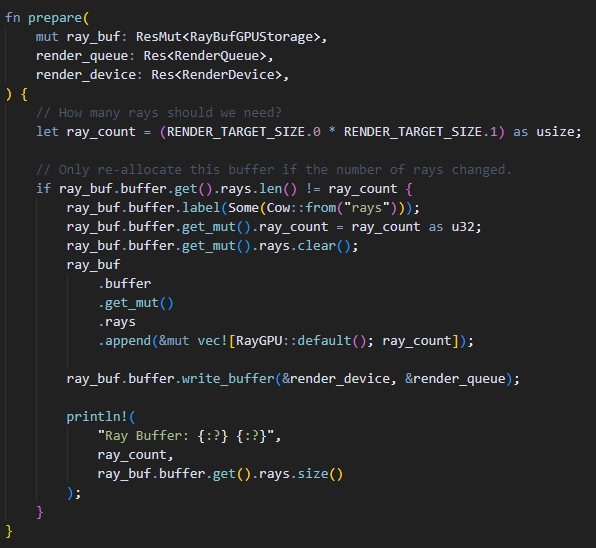

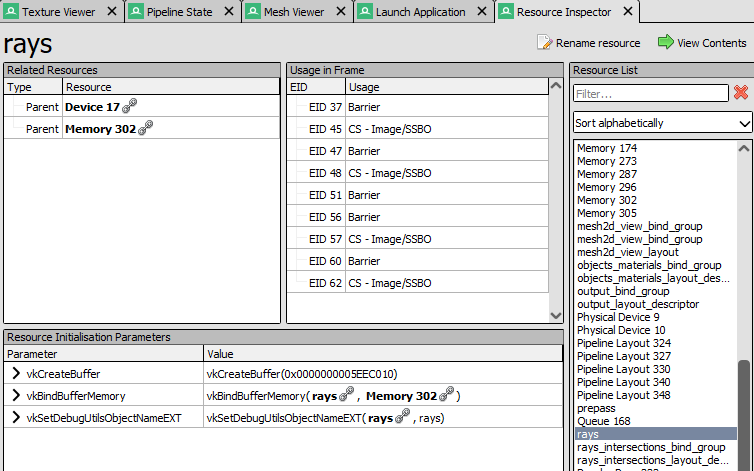

# Objective

- Expose the wgpu debug label on storage buffer types.

## Solution

🐄

- Add an optional `Cow<'static, str>` label and pass it to the `label` field of `create_buffer_with_data`

- This pattern is already used by Bevy for debug tags on bind group and layout descriptors.

---

Example Usage:

A buffer is given a label using the label function. Alternatively a buffer may be labeled when it is created if the default() convention is not used.

Here is the buffer appearing with the correct name in RenderDoc. Previously the buffer would have an anonymous name such as "Buffer223":

Co-authored-by: rebelroad-reinhart <reinhart@rebelroad.gg>

# Objective

- Improve performance when rendering text

## Solution

- While playing with example `many_buttons`, I noticed a lot of time was spent converting colours

- Investigating, the biggest culprit seems to be text colour. Each glyph in a text is an individual UI node for rendering, with a copy of the colour. Making the conversion to linear RGBA only once per text section reduces the number of conversions done when rendering.

- This improves FPS for example `many_buttons` from ~33 to ~42

- I did the same change for text 2d
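
A sketch of the "convert once per section" idea, outside of Bevy. `Section` and `glyph_colors` are hypothetical names, and only the RGB channels are modelled; the transfer function is the standard sRGB-to-linear formula:

```rust
/// Standard sRGB-to-linear transfer function for one channel in `[0, 1]`.
fn srgb_to_linear(c: f32) -> f32 {
    if c <= 0.04045 {
        c / 12.92
    } else {
        ((c + 0.055) / 1.055).powf(2.4)
    }
}

/// Hypothetical text section: one colour shared by all of its glyphs.
struct Section {
    srgb: [f32; 3],
    glyph_count: usize,
}

/// Converts the colour once per section and copies the result to each glyph.
/// Returns the per-glyph colours and the number of conversions performed.
fn glyph_colors(sections: &[Section]) -> (Vec<[f32; 3]>, usize) {
    let mut colors = Vec::new();
    let mut conversions = 0;
    for section in sections {
        let linear = section.srgb.map(srgb_to_linear);
        conversions += 1;
        colors.extend(std::iter::repeat(linear).take(section.glyph_count));
    }
    (colors, conversions)
}
```

The number of conversions now scales with the number of sections rather than the number of glyphs.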

# Objective

I found this small ux hiccup when writing the 0.8 blog post:

```rust

image.sampler = ImageSampler::Descriptor(ImageSampler::nearest_descriptor());

```

Not good!

## Solution

```rust

image.sampler = ImageSampler::nearest();

```

(there are Good Reasons to keep around the nearest_descriptor() constructor and I think it belongs on this type)

# Objective

`ReadOnlyWorldQuery` should have required `Self::ReadOnly = Self` so that calling `.iter()` on a read-only query is equivalent to calling `iter_mut()`.

## Solution

Add `ReadOnly = Self` to the definition of `ReadOnlyWorldQuery`
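
A stripped-down sketch of the constraint. The traits below keep only the `ReadOnly` associated type (the real traits are `unsafe` and have many more items), and `ReadPosition`/`WritePosition` are hypothetical query types:

```rust
/// Simplified stand-in: only the `ReadOnly` associated type is modelled.
trait WorldQuery {
    type ReadOnly: ReadOnlyWorldQuery;
}

/// The supertrait bound forces `Self::ReadOnly = Self` for read-only queries.
trait ReadOnlyWorldQuery: WorldQuery<ReadOnly = Self> {}

/// Hypothetical read-only and mutable query types.
struct ReadPosition;
struct WritePosition;

impl WorldQuery for WritePosition {
    type ReadOnly = ReadPosition;
}

impl WorldQuery for ReadPosition {
    // Any other type here would make the impl below fail to compile.
    type ReadOnly = ReadPosition;
}

impl ReadOnlyWorldQuery for ReadPosition {}

/// Name of the read-only counterpart of `Q`, for demonstration.
fn read_only_name<Q: WorldQuery>() -> &'static str {
    std::any::type_name::<Q::ReadOnly>()
}
```

Both `WritePosition` and `ReadPosition` resolve to the same read-only type, and an impl that breaks `ReadOnly = Self` is rejected by the compiler.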

---

## Changelog

ReadOnlyWorldQuery's `ReadOnly` assoc type is now always equal to `Self`

## Migration Guide

Make `Self::ReadOnly = Self` hold

# Objective

- Fixes #5463

- set ANDROID_NDK_ROOT

- GitHub recently updated their ubuntu container, removing some of the android environment variables: ca5d04c7da

- `cargo-apk` is not reading the new environment variable: 9a8be258a9/ndk-build/src/ndk.rs (L33-L38)

- this also means CI will now use the latest android NDK, I don't know if that's an issue

# Objective

fix an error in shadow map indexing that occurs when point lights without shadows are used in conjunction with spotlights with shadows

## Solution

calculate point_light_count correctly

# Objective

Enable treating components and resources equally, which can simplify the implementation of some systems where only the change detection feature is relevant and not the kind of object (resource or component).

## Solution

Implement `From<ResMut<T>>` and `From<NonSendMut<T>>` for `Mut`. Since the 3 structs are similar, and only differ by their system param role, the conversion is trivial.
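
To show why the conversion is trivial, here is a sketch with heavily simplified stand-ins: the real types track change ticks, modelled here as a single `changed` flag, and `reset_score` is a hypothetical function that only cares about change detection:

```rust
/// Simplified change-tracking state shared by the wrappers (illustration only).
struct Ticks<'a> {
    changed: &'a mut bool,
}

/// Stand-in for a mutable resource borrow.
struct ResMut<'a, T> {
    value: &'a mut T,
    ticks: Ticks<'a>,
}

/// Stand-in for a mutable component borrow.
struct Mut<'a, T> {
    value: &'a mut T,
    ticks: Ticks<'a>,
}

/// The fields are identical, so the conversion just moves them across.
impl<'a, T> From<ResMut<'a, T>> for Mut<'a, T> {
    fn from(other: ResMut<'a, T>) -> Self {
        Mut {
            value: other.value,
            ticks: other.ticks,
        }
    }
}

impl<'a, T> Mut<'a, T> {
    /// Writes a new value and flags it as changed.
    fn set(&mut self, value: T) {
        *self.value = value;
        *self.ticks.changed = true;
    }
}

/// Hypothetical function that works for components and, after conversion,
/// for resources as well.
fn reset_score(mut score: Mut<'_, u32>) {
    score.set(0);
}
```

A resource borrow can then be passed as `reset_score(res.into())`.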

---

## Changelog

Added - `From<ResMut>` and `From<NonSendMut>` for `Mut<T>`.

# Objective

- Even though it's marked as optional, it is no longer possible to not depend on `bevy_render` as it's a dependency of `bevy_scene`

## Solution

- Make `bevy_scene` optional

- For the minimalist among us, also make `bevy_asset` optional

# Objective

Add a section to the example's README on how to reduce generated wasm executable size.

Add a `wasm-release` profile to bevy's `Cargo.toml` in order to use it when building bevy-website.

Notes:

- We do not recommend `strip = "symbols"` since it breaks bindgen

- see https://github.com/bevyengine/bevy-website/pull/402

# Objective

- Fix some typos

## Solution

For the first time in my life, I made a pull request to OSS.

Am I right?

Co-authored-by: eiei114 <60887155+eiei114@users.noreply.github.com>

# Objective

- `#![warn(missing_docs)]` was added to bevy_asset in #3536

- A method was not documented when targeting wasm

## Solution

- Add documentation for it

# Objective

Some generic types like `Option<T>`, `Vec<T>` and `HashMap<K, V>` implement `Reflect` only when their generic types `T`/`K`/`V` implement `Serialize + for<'de> Deserialize<'de>`.

This is so that in their `GetTypeRegistration` impl they can insert the `ReflectSerialize` and `ReflectDeserialize` type data structs.

This has the annoying side effect that if your struct contains an `Option<NonSerdeStruct>` you won't be able to derive `Reflect` (https://github.com/bevyengine/bevy/issues/4054).

## Solution

- remove the `Serialize + Deserialize` bounds on wrapper types

- this means that `ReflectSerialize` and `ReflectDeserialize` will no longer be inserted even for `.register::<Option<DoesImplSerde>>()`

- add `register_type_data<T, D>` shorthand for `registry.get_mut(T).insert(D::from_type<T>())`

- require users to register their specific generic types **and the serde types** separately like

```rust
.register_type::<Option<String>>()
.register_type_data::<Option<String>, ReflectSerialize>()
.register_type_data::<Option<String>, ReflectDeserialize>()
```

I believe this is the best we can do for extensibility and convenience without specialization.

## Changelog

- `.register_type` for generic types like `Option<T>`, `Vec<T>`, `HashMap<K, V>` will no longer insert `ReflectSerialize` and `ReflectDeserialize` type data. Instead you need to register it separately for concrete generic types like so:

```rust
.register_type::<Option<String>>()
.register_type_data::<Option<String>, ReflectSerialize>()
.register_type_data::<Option<String>, ReflectDeserialize>()
```

TODO: more docs and tweaks to the scene example to demonstrate registering generic types.

# Objective

Bevy needs a way to benchmark UI rendering code. This PR adds a stress test that spawns a lot of buttons.

## Solution

- Add the `many_buttons` stress test.

---

## Changelog

- Add the `many_buttons` stress test.

# Objective

- wgpu 0.13 has validation to ensure that the width and height specified for a texture are both multiples of the respective block width and block height. This means validation fails for compressed textures with say a 4x4 block size, but non-modulo-4 image width/height.

## Solution

- Using `Extent3d`'s `physical_size()` method in the `dds` loader. It takes a `TextureFormat` argument and ensures the resolution is correct.
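
The rounding involved can be sketched as below. This is not `wgpu`'s implementation: the real method takes a `TextureFormat`, whereas this hypothetical free function takes the block dimensions directly.

```rust
/// Hypothetical helper: rounds a texture extent up to whole compression
/// blocks, e.g. 4x4 blocks for the BC formats.
fn physical_size(width: u32, height: u32, block_width: u32, block_height: u32) -> (u32, u32) {
    // Round `size` up to the next multiple of `block`
    let round_up = |size: u32, block: u32| ((size + block - 1) / block) * block;
    (
        round_up(width, block_width),
        round_up(height, block_height),
    )
}
```

A 10x6 image with a 4x4 block size therefore gets a physical size of 12x8, which satisfies the validation.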

---

## Changelog

- Fixes: Validation failure for compressed textures stored in `dds` where the width/height are not a multiple of the block dimensions.

# Objective

The Bevy PBR shader doesn't handle normal maps at all if a mesh doesn't have baked tangents. This is a pitfall (that I fell into) and needs to be documented.

## Solution

Document the behavior. (Also document a few other `StandardMaterial` fields.)

## Changelog

* Add documentation to `emissive`, `normal_map_texture` and `occlusion_texture` fields of `StandardMaterial`.

# Objective

I've found there is a duplicated line, probably left after some copy paste.

## Solution

- removed it

---

Co-authored-by: adsick <vadimgangsta73@gmail.com>

# Objective

UI nodes can be hidden by setting their `Visibility` property. Since #5310 was merged, this is now ergonomic to use, as visibility is now inherited.

However, UI nodes still receive (and store) interactions when hidden, resulting in surprising hidden state (and an inability to otherwise disable UI nodes).

## Solution

Fixes #5360.

I've updated the `ui_focus_system` to accomplish this in a minimally intrusive way, and updated the docs to match.

**NOTE:** I have not added automated tests to verify this behavior, as we do not currently have a good testing paradigm for `bevy_ui`. I'm not thrilled with that by any means, but I'm not sure fixing it is within scope.

## Paths not taken

### Separate `Disabled` component

This is a much larger and more controversial change, and not well-scoped to UI.

Furthermore, it is extremely rare that you want hidden UI elements to function: the most common cases are for things like changing tabs, collapsing elements or so on.

Splitting this behavior would be more complex, and substantially violate user expectations.

### A separate limbo world

Mentioned in the linked issue. Super cool, but all of the problems of the `Disabled` component solution with a whole new RFC-worth of complexity.

### Using change detection to reduce the amount of redundant work

Adds a lot of complexity for questionable performance gains. Likely involves a complete refactor of the entire system.

We simply don't have the tests or benchmarks here to justify this.

## Changelog

- UI nodes are now always in an `Interaction::None` state while they are hidden (via the `ComputedVisibility` component).
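
A simplified model of the new behavior, assuming a flat list of nodes and a single cursor/mouse state. `Node` and `update_interactions` are hypothetical; the real `ui_focus_system` works on ECS queries and handles more cases:

```rust
/// Stand-in for the UI `Interaction` component (illustration only).
#[derive(Debug, Clone, Copy, PartialEq)]
enum Interaction {
    Clicked,
    Hovered,
    None,
}

/// Hypothetical flattened UI node.
struct Node {
    visible: bool,
    contains_cursor: bool,
    interaction: Interaction,
}

/// Hidden nodes are forced to `Interaction::None` before any hit testing.
fn update_interactions(nodes: &mut [Node], mouse_pressed: bool) {
    for node in nodes.iter_mut() {
        node.interaction = if !node.visible {
            Interaction::None
        } else if node.contains_cursor {
            if mouse_pressed {
                Interaction::Clicked
            } else {
                Interaction::Hovered
            }
        } else {
            Interaction::None
        };
    }
}
```

A hidden node under the cursor stays at `Interaction::None` even while the mouse button is pressed.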

# Objective

- Fixes #5293

- UI nodes with a rotation that made the top left corner lower than the top right corner (z rotations greater than π/4) were culled

## Solution

- Do not cull nodes with a rotation, but don't do proper culling in this case

As a reminder, changing rotation and scale of UI nodes is not recommended as it won't impact layout. This is a quick fix but doesn't properly handle rotation and scale in clipping/culling. This would need a lot more work as mentioned here: c2b332f98a/crates/bevy_ui/src/render/mod.rs (L404-L405)