2021-12-14 03:58:23 +00:00

pub mod wireframe;

2020-04-25 00:46:54 +00:00

2021-12-14 03:58:23 +00:00

mod alpha;

mod bundle;

2020-08-09 23:13:04 +00:00

mod light;

mod material;

2021-12-25 21:45:43 +00:00

mod pbr_material;

2023-01-19 22:11:13 +00:00

mod prepass;

2021-12-14 03:58:23 +00:00

mod render;

2020-08-09 23:13:04 +00:00

2021-12-14 03:58:23 +00:00

pub use alpha::*;

2023-01-19 22:11:13 +00:00

use bevy_utils::default;

2021-12-14 03:58:23 +00:00

pub use bundle::*;

2020-08-09 23:13:04 +00:00

pub use light::*;

pub use material::*;

2021-12-25 21:45:43 +00:00

pub use pbr_material::*;

2023-01-19 22:11:13 +00:00

pub use prepass::*;

2021-12-14 03:58:23 +00:00

pub use render::*;

2020-08-09 23:13:04 +00:00

2022-04-25 14:32:56 +00:00

use bevy_window::ModifiesWindows;

2020-07-17 02:27:19 +00:00

pub mod prelude {

2022-11-02 20:40:45 +00:00

#[doc(hidden)]

2021-05-14 20:37:34 +00:00

pub use crate::{

2021-12-14 03:58:23 +00:00

alpha::AlphaMode,

2022-07-08 19:57:43 +00:00

bundle::{

DirectionalLightBundle, MaterialMeshBundle, PbrBundle, PointLightBundle,

SpotLightBundle,

},

light::{AmbientLight, DirectionalLight, PointLight, SpotLight},

2021-12-25 21:45:43 +00:00

material::{Material, MaterialPlugin},

pbr_material::StandardMaterial,

2021-05-14 20:37:34 +00:00

};

2020-07-17 02:27:19 +00:00

}

2020-04-25 00:46:54 +00:00

2021-12-14 03:58:23 +00:00

pub mod draw_3d_graph {

pub mod node {

/// Label for the shadow pass node.

pub const SHADOW_PASS: &str = "shadow_pass";

}

}

2020-07-17 01:47:51 +00:00

use bevy_app::prelude::*;

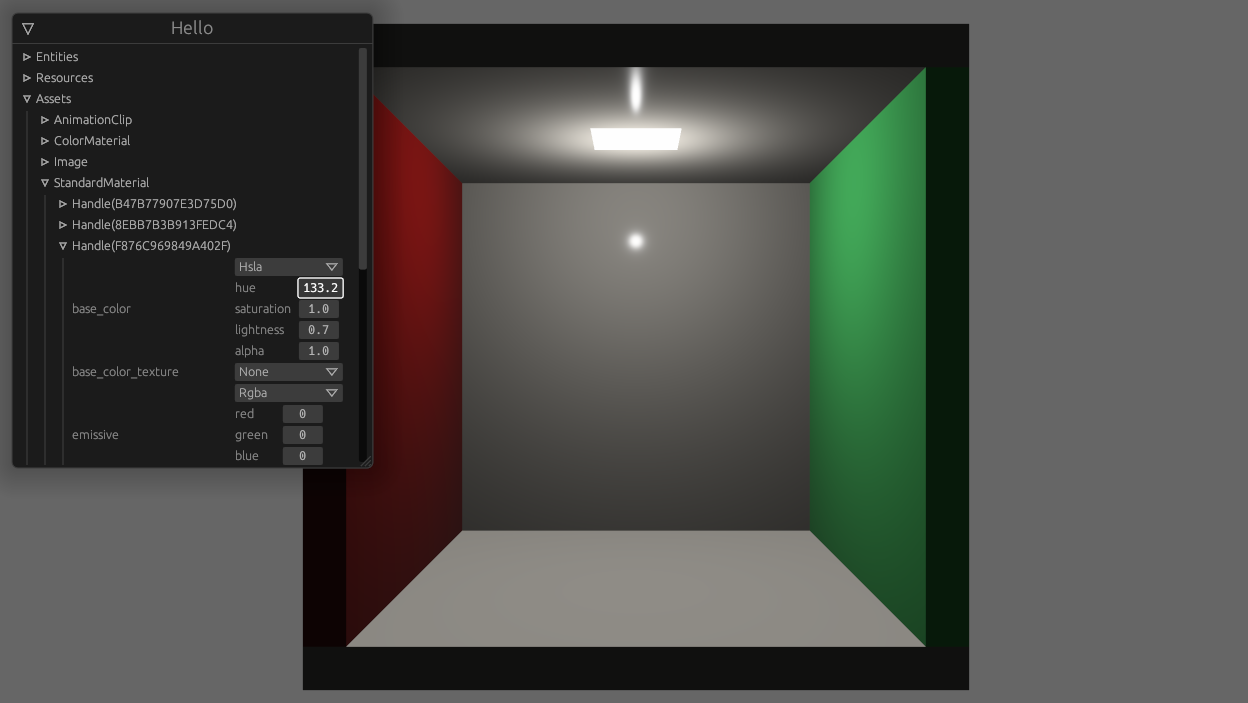

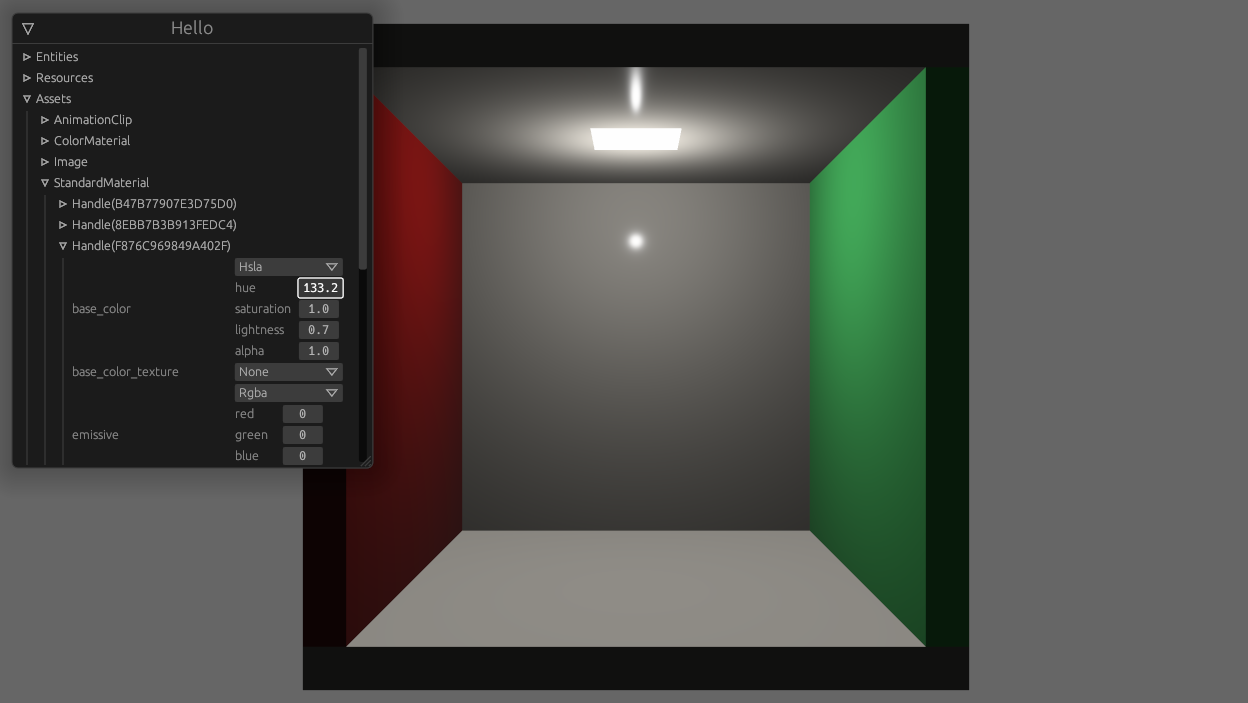

add `ReflectAsset` and `ReflectHandle` (#5923)

# Objective

Enable the workflow shown in the screenshots above.

Concretely, I need to:

- list all handle ids for an asset type

- fetch the asset as `dyn Reflect`, given a `HandleUntyped`

- when encountering a `Handle<T>`, find out what asset type that handle refers to (`T`'s type id) and turn the handle into a `HandleUntyped`

## Solution

- add `ReflectAsset` type containing function pointers for working with assets

```rust

pub struct ReflectAsset {

type_uuid: Uuid,

assets_resource_type_id: TypeId, // TypeId of the `Assets<T>` resource

get: fn(&World, HandleUntyped) -> Option<&dyn Reflect>,

get_mut: fn(&mut World, HandleUntyped) -> Option<&mut dyn Reflect>,

get_unchecked_mut: unsafe fn(&World, HandleUntyped) -> Option<&mut dyn Reflect>,

add: fn(&mut World, &dyn Reflect) -> HandleUntyped,

set: fn(&mut World, HandleUntyped, &dyn Reflect) -> HandleUntyped,

len: fn(&World) -> usize,

ids: for<'w> fn(&'w World) -> Box<dyn Iterator<Item = HandleId> + 'w>,

remove: fn(&mut World, HandleUntyped) -> Option<Box<dyn Reflect>>,

}

```

- add `ReflectHandle` type relating the handle back to the asset type and providing a way to create a `HandleUntyped`

```rust

pub struct ReflectHandle {

type_uuid: Uuid,

asset_type_id: TypeId,

downcast_handle_untyped: fn(&dyn Any) -> Option<HandleUntyped>,

}

```

- add the corresponding `FromType` impls

- add a function `app.register_asset_reflect` which is supposed to be called after `.add_asset` and registers `ReflectAsset` and `ReflectHandle` in the type registry

---

## Changelog

- add `ReflectAsset` and `ReflectHandle` types, which allow code to use reflection to manipulate arbitrary assets without knowing their types at compile time
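
The `ReflectAsset` description above comes down to a table of plain function pointers. Each table is generated for one concrete asset type but exposed through type-erased signatures. The following std-only Rust sketch shows that pattern under a few assumptions. `Storage<T>` is a hypothetical stand-in for `Assets<T>`, `&dyn Any` stands in for both `&World` and `&dyn Reflect`, and `ReflectStore` and `from_type` are illustrative names, not Bevy API.

```rust
use std::any::{Any, TypeId};
use std::collections::HashMap;

/// Hypothetical stand-in for `Assets<T>`: assets stored by integer id.
pub struct Storage<T> {
    items: HashMap<u64, T>,
}

impl<T> Storage<T> {
    pub fn new() -> Self {
        Storage { items: HashMap::new() }
    }

    pub fn insert(&mut self, id: u64, value: T) {
        self.items.insert(id, value);
    }
}

/// Type-erased function-pointer table, similar in spirit to `ReflectAsset`.
/// Every entry takes `&dyn Any`, so callers never need to name `T`.
pub struct ReflectStore {
    pub storage_type_id: TypeId,
    pub get: fn(&dyn Any, u64) -> Option<&dyn Any>,
    pub len: fn(&dyn Any) -> usize,
    pub ids: fn(&dyn Any) -> Vec<u64>,
}

impl ReflectStore {
    /// Builds the table for a concrete `T`, as a `FromType<T>` impl would.
    /// The closures capture nothing, so they coerce to plain `fn` pointers.
    pub fn from_type<T: Any>() -> Self {
        ReflectStore {
            storage_type_id: TypeId::of::<Storage<T>>(),
            get: |storage, id| {
                let storage = storage.downcast_ref::<Storage<T>>()?;
                storage.items.get(&id).map(|v| v as &dyn Any)
            },
            len: |storage| {
                storage
                    .downcast_ref::<Storage<T>>()
                    .map_or(0, |s| s.items.len())
            },
            ids: |storage| {
                let mut ids: Vec<u64> = storage
                    .downcast_ref::<Storage<T>>()
                    .map(|s| s.items.keys().copied().collect())
                    .unwrap_or_default();
                ids.sort_unstable(); // deterministic order for callers
                ids
            },
        }
    }
}
```

Each field is a non-capturing `fn` pointer, so the table has no generic parameter. Code that only holds a `TypeId`, for example one obtained from a registry lookup, can still call into it.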

2022-10-28 20:42:33 +00:00

use bevy_asset::{load_internal_asset, AddAsset, Assets, Handle, HandleUntyped};

2021-12-14 03:58:23 +00:00

use bevy_ecs::prelude::*;

use bevy_reflect::TypeUuid;

use bevy_render::{

Camera Driven Rendering (#4745)

This adds "high level camera driven rendering" to Bevy. The goal is to give users more control over what gets rendered (and where) without needing to deal with render logic. This will make scenarios like "render to texture", "multiple windows", "split screen", "2d on 3d", "3d on 2d", "pass layering", and more significantly easier.

Here is an [example of a 2d render sandwiched between two 3d renders (each from a different perspective)](https://gist.github.com/cart/4fe56874b2e53bc5594a182fc76f4915):

Users can now spawn a camera, point it at a RenderTarget (a texture or a window), and it will "just work".

Rendering to a second window is as simple as spawning a second camera and assigning it to a specific window id:

```rust

// main camera (main window)

commands.spawn_bundle(Camera2dBundle::default());

// second camera (other window)

commands.spawn_bundle(Camera2dBundle {

camera: Camera {

target: RenderTarget::Window(window_id),

..default()

},

..default()

});

```

Rendering to a texture is as simple as pointing the camera at a texture:

```rust

commands.spawn_bundle(Camera2dBundle {

camera: Camera {

target: RenderTarget::Texture(image_handle),

..default()

},

..default()

});

```

Cameras now have a "render priority", which controls the order they are drawn in. If you want to use a camera's output texture as a texture in the main pass, just set the priority to a number lower than the main pass camera (which defaults to `0`).

```rust

// main pass camera with a default priority of 0

commands.spawn_bundle(Camera2dBundle::default());

commands.spawn_bundle(Camera2dBundle {

camera: Camera {

target: RenderTarget::Texture(image_handle.clone()),

priority: -1,

..default()

},

..default()

});

commands.spawn_bundle(SpriteBundle {

texture: image_handle,

..default()

});

```

Priority can also be used to layer cameras on top of each other for the same RenderTarget. This is what "2d on top of 3d" looks like in the new system:

```rust

commands.spawn_bundle(Camera3dBundle::default());

commands.spawn_bundle(Camera2dBundle {

camera: Camera {

// this will render 2d entities "on top" of the default 3d camera's render

priority: 1,

..default()

},

..default()

});

```

There is no longer the concept of a global "active camera". Resources like `ActiveCamera<Camera2d>` and `ActiveCamera<Camera3d>` have been replaced with the camera-specific `Camera::is_active` field. This does put the onus on users to manage which cameras should be active.

Cameras are now assigned a single render graph as an "entry point", which is configured on each camera entity using the new `CameraRenderGraph` component. The old `PerspectiveCameraBundle` and `OrthographicCameraBundle` (generic on camera marker components like Camera2d and Camera3d) have been replaced by `Camera3dBundle` and `Camera2dBundle`, which set 3d and 2d default values for the `CameraRenderGraph` and projections.

```rust

// old 3d perspective camera

commands.spawn_bundle(PerspectiveCameraBundle::default());

// new 3d perspective camera

commands.spawn_bundle(Camera3dBundle::default());

```

```rust

// old 2d orthographic camera

commands.spawn_bundle(OrthographicCameraBundle::new_2d());

// new 2d orthographic camera

commands.spawn_bundle(Camera2dBundle::default());

```

```rust

// old 3d orthographic camera

commands.spawn_bundle(OrthographicCameraBundle::new_3d());

// new 3d orthographic camera

commands.spawn_bundle(Camera3dBundle {

projection: OrthographicProjection {

scale: 3.0,

scaling_mode: ScalingMode::FixedVertical,

..default()

}.into(),

..default()

});

```

Note that `Camera3dBundle` now uses a new `Projection` enum instead of hard-coding the projection into the type. There are a number of motivators for this change: the render graph is now a part of the bundle, the way "generic bundles" work in the Rust type system prevents nice `..default()` syntax, and changing projections at runtime is much easier with an enum (e.g. for editor scenarios). I'm open to discussing this choice, but I'm relatively certain we will all come to the same conclusion here. Camera2dBundle and Camera3dBundle are much clearer than being generic on marker components / using non-default constructors.

If you want to run a custom render graph on a camera, just set the `CameraRenderGraph` component:

```rust

commands.spawn_bundle(Camera3dBundle {

camera_render_graph: CameraRenderGraph::new(some_render_graph_name),

..default()

});

```

Just note that if the graph requires data from specific components to work (such as `Camera3d` config, which is provided in the `Camera3dBundle`), make sure the relevant components have been added.

Speaking of using components to configure graphs / passes, there are a number of new configuration options:

```rust

commands.spawn_bundle(Camera3dBundle {

camera_3d: Camera3d {

// overrides the default global clear color

clear_color: ClearColorConfig::Custom(Color::RED),

..default()

},

..default()

});

commands.spawn_bundle(Camera3dBundle {

camera_3d: Camera3d {

// disables clearing

clear_color: ClearColorConfig::None,

..default()

},

..default()

});

```

Expect to see more of the "graph configuration Components on Cameras" pattern in the future.
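
The `ClearColorConfig` examples above follow an override pattern. A per-camera setting either defers to the global clear color, replaces it, or disables clearing. Below is a minimal Rust sketch of that resolution step. It assumes a toy `[f32; 4]` color and adds a `Default` variant for the "use the global color" case; the snippets above only show `Custom` and `None`. `resolve_clear_color` is a hypothetical helper, not part of Bevy.

```rust
/// Toy RGBA color; stands in for Bevy's `Color` in this sketch.
pub type Color = [f32; 4];

/// Per-camera clear setting shaped like `ClearColorConfig`.
/// The `Default` variant is an assumption of this sketch.
#[derive(Clone, Copy, Debug, PartialEq, Default)]
pub enum ClearColorConfig {
    /// Fall back to the global clear color.
    #[default]
    Default,
    /// Override the global clear color for this camera only.
    Custom(Color),
    /// Do not clear; draw on top of whatever the target already holds.
    None,
}

/// Returns the color this camera's pass should clear to, or `None` when the
/// pass should keep the target's existing contents instead of clearing.
pub fn resolve_clear_color(config: ClearColorConfig, global: Color) -> Option<Color> {
    match config {
        ClearColorConfig::Default => Some(global),
        ClearColorConfig::Custom(color) => Some(color),
        ClearColorConfig::None => None,
    }
}
```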

By popular demand, UI no longer requires a dedicated camera. `UiCameraBundle` has been removed. `Camera2dBundle` and `Camera3dBundle` now both default to rendering UI as part of their own render graphs. To disable UI rendering for a camera, insert a `CameraUi` component with `is_enabled: false`:

```rust

commands

.spawn_bundle(Camera3dBundle::default())

.insert(CameraUi {

is_enabled: false,

..default()

});

```

## Other Changes

* The separate clear pass has been removed. We should revisit this for things like sky rendering, but I think this PR should "keep it simple" until we're ready to properly support that (for code complexity and performance reasons). We can come up with the right design for a modular clear pass in a follow-up PR.

* I reorganized bevy_core_pipeline into Core2dPlugin and Core3dPlugin (and core_2d / core_3d modules). Everything is pretty much the same as before, just logically separate. I've moved relevant types (like Camera2d, Camera3d, Camera3dBundle, Camera2dBundle) into their relevant modules, which is what motivated this reorganization.

* I adapted the `scene_viewer` example (which relied on the ActiveCameras behavior) to the new system. I also refactored bits and pieces to be a bit simpler.

* All of the examples have been ported to the new camera approach. `render_to_texture` and `multiple_windows` are now _much_ simpler. I removed `two_passes` because it is less relevant with the new approach. If someone wants to add a new "layered custom pass with CameraRenderGraph" example, that might fill a similar niche. But I don't feel much pressure to add that in this PR.

* Cameras now have `target_logical_size` and `target_physical_size` fields, which makes finding the size of a camera's render target _much_ simpler. As a result, the `Assets<Image>` and `Windows` parameters were removed from `Camera::world_to_screen`, making that operation much more ergonomic.

* Render order ambiguities between cameras with the same target and the same priority now produce a warning. This accomplishes two goals:

1. Now that there is no "global" active camera, by default spawning two cameras will result in two renders (one covering the other). This would be a silent performance killer that would be hard to detect after the fact. By detecting ambiguities, we can provide a helpful warning when this occurs.

2. Render order ambiguities could result in unexpected / unpredictable render results. Resolving them makes sense.
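
The priority rules and the ambiguity warning described above can be modeled in a few lines. This std-only sketch assumes a hypothetical `CameraInfo` record and `Target` enum. It draws active cameras from lowest to highest priority. It also reports every (target, priority) pair shared by more than one active camera, which is the situation the warning is meant to catch. It illustrates the rules as stated; it is not Bevy's implementation.

```rust
use std::collections::HashMap;

/// Hypothetical render target: a window id or a texture id.
#[derive(Clone, Copy, Debug, PartialEq, Eq, Hash, PartialOrd, Ord)]
pub enum Target {
    Window(u32),
    Texture(u32),
}

#[derive(Clone, Debug)]
pub struct CameraInfo {
    pub name: &'static str,
    pub target: Target,
    pub priority: isize,
    pub is_active: bool,
}

/// Returns active camera names in draw order (lowest priority first) and every
/// (target, priority) pair that more than one active camera shares.
pub fn order_cameras(cameras: &[CameraInfo]) -> (Vec<&'static str>, Vec<(Target, isize)>) {
    let mut active: Vec<&CameraInfo> = cameras.iter().filter(|c| c.is_active).collect();
    // Stable sort: ties keep input order here, but nothing guarantees a real
    // renderer would do the same, which is why ties are reported below.
    active.sort_by_key(|c| c.priority);

    let mut counts: HashMap<(Target, isize), usize> = HashMap::new();
    for cam in &active {
        *counts.entry((cam.target, cam.priority)).or_insert(0) += 1;
    }
    let mut ambiguities: Vec<(Target, isize)> = counts
        .into_iter()
        .filter(|&(_, n)| n > 1)
        .map(|(key, _)| key)
        .collect();
    ambiguities.sort(); // deterministic report order

    (active.iter().map(|c| c.name).collect(), ambiguities)
}
```

With this model, a render-to-texture camera at priority `-1` is drawn before the main camera at `0`, and a UI overlay at `1` is drawn after both. Two active cameras on the same window at the same priority show up as an ambiguity.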

## Follow Up Work

* Per-Camera viewports, which will make it possible to render to a smaller area inside of a RenderTarget (great for something like splitscreen)

* Camera-specific MSAA config (should use the same "overriding" pattern used for ClearColor)

* Graph Based Camera Ordering: priorities are simple, but they make complicated ordering constraints harder to express. We should consider adopting a "graph based" camera ordering model with "before" and "after" relationships to other cameras (or build it "on top" of the priority system).

* Consider allowing graphs to run subgraphs from any nesting level (aka a global namespace for graphs). Right now the 2d and 3d graphs each need their own UI subgraph, which feels "fine" in the short term. But being able to share subgraphs between other subgraphs seems valuable.

* Consider splitting `bevy_core_pipeline` into `bevy_core_2d` and `bevy_core_3d` packages. There's a shared "clear color" dependency here, which would need a new home.

2022-06-02 00:12:17 +00:00

camera::CameraUpdateSystem,

2022-05-30 18:36:03 +00:00

extract_resource::ExtractResourcePlugin,

2021-12-14 23:04:26 +00:00

prelude::Color,

2021-12-14 03:58:23 +00:00

render_graph::RenderGraph,

render_phase::{sort_phase_system, AddRenderCommand, DrawFunctions},

Mesh vertex buffer layouts (#3959)

This PR makes a number of changes to how meshes and vertex attributes are handled, with the goal of enabling easy and flexible custom vertex attributes:

* Reworks the `Mesh` type to use the newly added `VertexAttribute` internally

* `VertexAttribute` defines the name, a unique `VertexAttributeId`, and a `VertexFormat`

* `VertexAttributeId` is used to produce consistent sort orders for vertex buffer generation, replacing the more expensive and often surprising "name based sorting"

* Meshes can be used to generate a `MeshVertexBufferLayout`, which defines the layout of the gpu buffer produced by the mesh. `MeshVertexBufferLayouts` can then be used to generate actual `VertexBufferLayouts` according to the requirements of a specific pipeline. This decoupling of "mesh layout" vs "pipeline vertex buffer layout" is what enables custom attributes. We don't need to standardize _mesh layouts_ or contort meshes to meet the needs of a specific pipeline. As long as the mesh has what the pipeline needs, it will work transparently.

* Mesh-based pipelines now specialize on `&MeshVertexBufferLayout` via the new `SpecializedMeshPipeline` trait (which behaves like `SpecializedPipeline`, but adds `&MeshVertexBufferLayout`). The integrity of the pipeline cache is maintained because the `MeshVertexBufferLayout` is treated as part of the key (which is fully abstracted from implementers of the trait ... no need to add any additional info to the specialization key).

* Hashing `MeshVertexBufferLayout` is too expensive to do for every entity, every frame. To make this scalable, I added a generalized "pre-hashing" solution to `bevy_utils`: `Hashed<T>` keys and `PreHashMap<K, V>` (which uses `Hashed<T>` internally). Why didn't I just do the quick-and-dirty in-place "pre-compute hash and use that u64 as a key in a hashmap" that we've done in the past? Because it's wrong! Hashes by themselves aren't enough because two different values can produce the same hash. Re-hashing a hash is even worse! I decided to build a generalized solution because this pattern has come up in the past and we've chosen to do the wrong thing. Now we can do the right thing! This did unfortunately require pulling in `hashbrown` and using that in `bevy_utils`, because avoiding re-hashes requires the `raw_entry_mut` API, which isn't stabilized yet (and may never be ... `entry_ref` is favored now, but also isn't available yet). If std's HashMap ever provides the tools we need, we can move back to that. Note that adding `hashbrown` doesn't increase our dependency count because it was already in our tree. I will probably break these changes out into their own PR.

* Specializing on `MeshVertexBufferLayout` has one non-obvious behavior: it can produce identical pipelines for two different MeshVertexBufferLayouts. To optimize the number of active pipelines / reduce re-binds while drawing, I de-duplicate pipelines post-specialization using the final `VertexBufferLayout` as the key. For example, consider a pipeline that needs the layout `(position, normal)` and is specialized using two meshes: `(position, normal, uv)` and `(position, normal, other_vec2)`. If both of these meshes result in `(position, normal)` specializations, we can use the same pipeline! Now we do. Cool!
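
The distinction drawn above is to cache the hash but never use the bare hash as the key. That can be sketched with only the standard library. The PR uses `hashbrown`'s `raw_entry_mut`; this version instead pairs each value with its precomputed hash and gives `std::collections::HashMap` a pass-through hasher. The map reuses the cached hash, while equality still compares the full value, so two values that collide on hash stay distinct keys. The type names echo the PR, but the code is an illustrative assumption, not the `bevy_utils` implementation.

```rust
use std::collections::hash_map::DefaultHasher;
use std::collections::HashMap;
use std::hash::{BuildHasherDefault, Hash, Hasher};

/// A value paired with its hash, computed once at construction.
pub struct Hashed<T> {
    hash: u64,
    value: T,
}

impl<T: Hash> Hashed<T> {
    pub fn new(value: T) -> Self {
        let mut hasher = DefaultHasher::new();
        value.hash(&mut hasher);
        Hashed { hash: hasher.finish(), value }
    }

    pub fn value(&self) -> &T {
        &self.value
    }
}

impl<T> Hash for Hashed<T> {
    // Feed only the cached hash to the map's hasher; `value` is not re-hashed.
    fn hash<H: Hasher>(&self, state: &mut H) {
        state.write_u64(self.hash);
    }
}

impl<T: PartialEq> PartialEq for Hashed<T> {
    // Cheap hash comparison first, then the full value: different values can
    // share a hash, so the hash alone must never decide equality.
    fn eq(&self, other: &Self) -> bool {
        self.hash == other.hash && self.value == other.value
    }
}

impl<T: Eq> Eq for Hashed<T> {}

/// Hasher that passes a single precomputed u64 straight through.
#[derive(Default)]
pub struct PassHasher(u64);

impl Hasher for PassHasher {
    fn finish(&self) -> u64 {
        self.0
    }

    fn write(&mut self, _bytes: &[u8]) {
        panic!("PassHasher only accepts precomputed u64 hashes");
    }

    fn write_u64(&mut self, n: u64) {
        self.0 = n;
    }
}

/// Map keyed by pre-hashed values: inserts and lookups reuse the cached hash.
pub type PreHashMap<K, V> = HashMap<Hashed<K>, V, BuildHasherDefault<PassHasher>>;
```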

To briefly illustrate, this is what the relevant section of `MeshPipeline`'s specialization code looks like now:

```rust

impl SpecializedMeshPipeline for MeshPipeline {

type Key = MeshPipelineKey;

fn specialize(

&self,

key: Self::Key,

layout: &MeshVertexBufferLayout,

) -> RenderPipelineDescriptor {

let mut vertex_attributes = vec![

Mesh::ATTRIBUTE_POSITION.at_shader_location(0),

Mesh::ATTRIBUTE_NORMAL.at_shader_location(1),

Mesh::ATTRIBUTE_UV_0.at_shader_location(2),

];

let mut shader_defs = Vec::new();

if layout.contains(Mesh::ATTRIBUTE_TANGENT) {

shader_defs.push(String::from("VERTEX_TANGENTS"));

vertex_attributes.push(Mesh::ATTRIBUTE_TANGENT.at_shader_location(3));

}

let vertex_buffer_layout = layout

.get_layout(&vertex_attributes)

.expect("Mesh is missing a vertex attribute");

// ... the rest of the RenderPipelineDescriptor construction is omitted in this excerpt

}

}

```

Notice that this is _much_ simpler than it was before. And now any mesh with any layout can be used with this pipeline, provided it has vertex positions, normals, and UVs. We even got to remove `HAS_TANGENTS` from MeshPipelineKey and `has_tangents` from `GpuMesh`, because that information is redundant with `MeshVertexBufferLayout`.
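
The specialize-then-deduplicate flow described above can be sketched without Bevy, using a hypothetical model:

- A mesh layout is a list of attributes sorted by a stable id.
- `get_layout` selects the attributes a pipeline asks for and fails on the first missing one, much like the `.expect(...)` above.
- A cache keyed by the resulting layout hands out pipeline ids.

All names here are illustrative. The model also ignores byte offsets and strides, which a real vertex buffer layout carries as well.

```rust
use std::collections::HashMap;

/// Hypothetical vertex attribute: a stable id, a name, and a format label.
#[derive(Clone, Copy, Debug, PartialEq, Eq, Hash)]
pub struct Attribute {
    pub id: u32,
    pub name: &'static str,
    pub format: &'static str,
}

/// What a mesh provides (a toy stand-in for `MeshVertexBufferLayout`).
pub struct MeshLayout {
    pub attributes: Vec<Attribute>,
}

/// Attributes as a pipeline consumes them: (attribute, shader location).
pub type PipelineLayout = Vec<(Attribute, u32)>;

impl MeshLayout {
    pub fn new(mut attributes: Vec<Attribute>) -> Self {
        // Sort by id so every mesh produces layouts in a consistent order.
        attributes.sort_by_key(|a| a.id);
        MeshLayout { attributes }
    }

    pub fn contains(&self, attr: Attribute) -> bool {
        self.attributes.iter().any(|a| a.id == attr.id)
    }

    /// Picks the requested attributes; errors on the first missing one.
    pub fn get_layout(&self, wanted: &[(Attribute, u32)]) -> Result<PipelineLayout, String> {
        wanted
            .iter()
            .map(|&(attr, location)| {
                if self.contains(attr) {
                    Ok((attr, location))
                } else {
                    Err(format!("Mesh is missing vertex attribute {}", attr.name))
                }
            })
            .collect()
    }
}

/// Caches pipelines by their *final* layout, so meshes that reduce to the
/// same layout share one pipeline id.
#[derive(Default)]
pub struct PipelineCache {
    by_layout: HashMap<PipelineLayout, usize>,
}

impl PipelineCache {
    pub fn specialize(&mut self, mesh: &MeshLayout, wanted: &[(Attribute, u32)]) -> Result<usize, String> {
        let layout = mesh.get_layout(wanted)?;
        let next_id = self.by_layout.len();
        Ok(*self.by_layout.entry(layout).or_insert(next_id))
    }
}
```

Suppose a pipeline requests `(position, normal)`. Then meshes laid out as `(position, normal, uv)` and `(position, normal, other_vec2)` both map to the same cache entry, which mirrors the de-duplication example in the list above.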

This is still a draft because I still need to:

* Add more docs

* Experiment with adding error handling to mesh pipeline specialization (which would print errors at runtime when a mesh is missing a vertex attribute required by a pipeline). If it doesn't tank perf, we'll keep it.

* Consider breaking out the PreHash / hashbrown changes into a separate PR.

* Add an example illustrating this change

* Verify that the "mesh-specialized pipeline de-duplication code" works properly

Please don't yell at me for not doing these things yet :) Just trying to get this in people's hands ASAP.

Alternative to #3120

Fixes #3030

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

2022-02-23 23:21:13 +00:00

render_resource::{Shader, SpecializedMeshPipelines},

2021-12-14 03:58:23 +00:00

view::VisibilitySystems,

RenderApp, RenderStage,

};

use bevy_transform::TransformSystem;

2020-04-25 00:46:54 +00:00

Split mesh shader files (#4867)

# Objective

- Split PBR and 2D mesh shaders into types and bindings to prepare the shaders to be more reusable.

- See #3969 for details. I'm doing this in multiple steps to make review easier.

---

## Changelog

- Changed: 2D and PBR mesh shaders are now split into types and bindings; the following shader imports are available: `bevy_pbr::mesh_view_types`, `bevy_pbr::mesh_view_bindings`, `bevy_pbr::mesh_types`, `bevy_pbr::mesh_bindings`, `bevy_sprite::mesh2d_view_types`, `bevy_sprite::mesh2d_view_bindings`, `bevy_sprite::mesh2d_types`, `bevy_sprite::mesh2d_bindings`

## Migration Guide

- In shaders for 3D meshes:

- `#import bevy_pbr::mesh_view_bind_group` -> `#import bevy_pbr::mesh_view_bindings`

- `#import bevy_pbr::mesh_struct` -> `#import bevy_pbr::mesh_types`

- NOTE: If you are using the mesh bind group at bind group index 2, you can remove those binding statements in your shader and just use `#import bevy_pbr::mesh_bindings` which itself imports the mesh types needed for the bindings.

- In shaders for 2D meshes:

- `#import bevy_sprite::mesh2d_view_bind_group` -> `#import bevy_sprite::mesh2d_view_bindings`

- `#import bevy_sprite::mesh2d_struct` -> `#import bevy_sprite::mesh2d_types`

- NOTE: If you are using the mesh2d bind group at bind group index 2, you can remove those binding statements in your shader and just use `#import bevy_sprite::mesh2d_bindings` which itself imports the mesh2d types needed for the bindings.
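
The import renames in the migration guide above are mechanical, so a small text pass can apply them. This Rust sketch is a hypothetical helper, not a Bevy tool. It rewrites only `#import` lines whose path exactly matches one of the four old names. It does not apply the optional `mesh_bindings`/`mesh2d_bindings` simplification from the NOTE entries. It also strips leading whitespace from the lines it rewrites and rejoins lines with `\n`.

```rust
/// Old -> new import paths, taken from the migration guide above.
const RENAMES: &[(&str, &str)] = &[
    ("bevy_pbr::mesh_view_bind_group", "bevy_pbr::mesh_view_bindings"),
    ("bevy_pbr::mesh_struct", "bevy_pbr::mesh_types"),
    ("bevy_sprite::mesh2d_view_bind_group", "bevy_sprite::mesh2d_view_bindings"),
    ("bevy_sprite::mesh2d_struct", "bevy_sprite::mesh2d_types"),
];

/// Rewrites `#import` lines whose path exactly matches an old name; all other
/// lines, including unrelated imports, pass through unchanged.
pub fn migrate_imports(shader: &str) -> String {
    shader
        .lines()
        .map(|line| {
            if let Some(path) = line.trim().strip_prefix("#import") {
                let path = path.trim();
                if let Some(&(_, new)) = RENAMES.iter().find(|(old, _)| *old == path) {
                    return format!("#import {new}");
                }
            }
            line.to_string()
        })
        .collect::<Vec<_>>()
        .join("\n")
}
```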

2022-05-31 23:23:25 +00:00

pub const PBR_TYPES_SHADER_HANDLE: HandleUntyped =

HandleUntyped::weak_from_u64(Shader::TYPE_UUID, 1708015359337029744);

pub const PBR_BINDINGS_SHADER_HANDLE: HandleUntyped =

HandleUntyped::weak_from_u64(Shader::TYPE_UUID, 5635987986427308186);

Separate out PBR lighting, shadows, clustered forward, and utils from pbr.wgsl (#4938)

# Objective

- Builds on top of #4901

- Separate out PBR lighting, shadows, clustered forward, and utils from `pbr.wgsl` as part of making the PBR code more reusable and extensible.

- See #3969 for details.

## Solution

- Add `bevy_pbr::utils`, `bevy_pbr::clustered_forward`, `bevy_pbr::lighting`, `bevy_pbr::shadows` shader imports exposing many shader functions for external use

- Split `PI`, `saturate()`, `hsv2rgb()`, and `random1D()` into `bevy_pbr::utils`

- Split clustered-forward-specific functions into `bevy_pbr::clustered_forward`, including moving the debug visualization code into a `cluster_debug_visualization()` function in that import

- Split PBR lighting functions into `bevy_pbr::lighting`

- Split shadow functions into `bevy_pbr::shadows`

---

## Changelog

- Added: `bevy_pbr::utils`, `bevy_pbr::clustered_forward`, `bevy_pbr::lighting`, `bevy_pbr::shadows` shader imports exposing many shader functions for external use

- Split `PI`, `saturate()`, `hsv2rgb()`, and `random1D()` into `bevy_pbr::utils`

- Split clustered-forward-specific functions into `bevy_pbr::clustered_forward`, including moving the debug visualization code into a `cluster_debug_visualization()` function in that import

- Split PBR lighting functions into `bevy_pbr::lighting`

- Split shadow functions into `bevy_pbr::shadows`
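
The WGSL bodies of the `bevy_pbr::utils` helpers are not shown here. For reference, here is a CPU-side Rust sketch of what functions with these names conventionally compute: `saturate` clamps to `[0, 1]`, and `hsv2rgb` uses a branchless formulation common in shader code. It is an assumption that Bevy's versions match these definitions exactly.

```rust
/// Clamps to [0, 1], the conventional meaning of `saturate` in shader code.
pub fn saturate(x: f32) -> f32 {
    x.clamp(0.0, 1.0)
}

/// Branchless HSV -> RGB conversion as commonly written in shaders.
/// `hue`, `saturation`, and `value` are all expected in [0, 1].
pub fn hsv2rgb(hue: f32, saturation: f32, value: f32) -> [f32; 3] {
    let offsets = [0.0_f32, 4.0, 2.0];
    let mut rgb = [0.0_f32; 3];
    for (channel, offset) in rgb.iter_mut().zip(offsets) {
        // Triangle wave over hue; the three channels are 120 degrees apart.
        let k = (hue * 6.0 + offset).rem_euclid(6.0);
        let pure = saturate((k - 3.0).abs() - 1.0);
        // Blend from white (s = 0) to the pure hue (s = 1), then scale by value.
        *channel = value * (1.0 + (pure - 1.0) * saturation);
    }
    rgb
}
```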

2022-06-14 00:58:30 +00:00

pub const UTILS_HANDLE: HandleUntyped =

HandleUntyped::weak_from_u64(Shader::TYPE_UUID, 1900548483293416725);

pub const CLUSTERED_FORWARD_HANDLE: HandleUntyped =

HandleUntyped::weak_from_u64(Shader::TYPE_UUID, 166852093121196815);

pub const PBR_LIGHTING_HANDLE: HandleUntyped =

HandleUntyped::weak_from_u64(Shader::TYPE_UUID, 14170772752254856967);

pub const SHADOWS_HANDLE: HandleUntyped =

HandleUntyped::weak_from_u64(Shader::TYPE_UUID, 11350275143789590502);

2021-12-14 03:58:23 +00:00

pub const PBR_SHADER_HANDLE: HandleUntyped =

HandleUntyped::weak_from_u64(Shader::TYPE_UUID, 4805239651767701046);

2023-01-19 22:11:13 +00:00

pub const PBR_PREPASS_SHADER_HANDLE: HandleUntyped =

HandleUntyped::weak_from_u64(Shader::TYPE_UUID, 9407115064344201137);

Callable PBR functions (#4939)

# Objective

- Builds on top of #4938

- Make clustered-forward PBR lighting/shadows functionality callable

- See #3969 for details

## Solution

- Add `PbrInput` struct type containing a `StandardMaterial`, occlusion, world_position, world_normal, and frag_coord

- Split functionality to calculate the unit view vector, and normal-mapped normal into `bevy_pbr::pbr_functions`

- Split high-level shading flow into `pbr(in: PbrInput, N: vec3<f32>, V: vec3<f32>, is_orthographic: bool)` function in `bevy_pbr::pbr_functions`

- Rework `pbr.wgsl` fragment stage entry point to make use of the new functions

- This has been benchmarked on an M1 Max using `many_cubes -- sphere`. `main` had a median frame time of 15.88ms and this PR 15.99ms, a 0.69% frame time increase, which is within noise in my opinion.

---

## Changelog

- Added: PBR shading code is now callable. Import `bevy_pbr::pbr_functions` and its dependencies, create a `PbrInput`, calculate the unit view and normal-mapped normal vectors and whether the projection is orthographic, and call `pbr()`!
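
One input that `pbr()` expects is the unit view vector `V`. As the changelog notes, computing it depends on whether the projection is orthographic. Below is a minimal Rust sketch of the usual rule, assuming a hypothetical `Vec3` type and a `camera_back` parameter (a unit vector from the scene toward the camera). With perspective projection, `V` points from the fragment to the camera position. With orthographic projection all view rays are parallel, so every fragment uses the same direction. This is the standard formulation, not a transcription of `bevy_pbr::pbr_functions`.

```rust
#[derive(Clone, Copy, Debug, PartialEq)]
pub struct Vec3 {
    pub x: f32,
    pub y: f32,
    pub z: f32,
}

impl Vec3 {
    pub const fn new(x: f32, y: f32, z: f32) -> Self {
        Vec3 { x, y, z }
    }

    pub fn minus(self, o: Vec3) -> Vec3 {
        Vec3::new(self.x - o.x, self.y - o.y, self.z - o.z)
    }

    pub fn length(self) -> f32 {
        (self.x * self.x + self.y * self.y + self.z * self.z).sqrt()
    }

    pub fn normalize(self) -> Vec3 {
        let len = self.length();
        Vec3::new(self.x / len, self.y / len, self.z / len)
    }
}

/// Unit vector from the shaded point toward the viewer.
pub fn view_vector(world_position: Vec3, camera_position: Vec3, camera_back: Vec3, is_orthographic: bool) -> Vec3 {
    if is_orthographic {
        // Parallel rays: the same direction for every fragment.
        camera_back.normalize()
    } else {
        // Perspective: the direction depends on where the fragment is.
        camera_position.minus(world_position).normalize()
    }
}
```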

2022-06-21 20:50:06 +00:00

pub const PBR_FUNCTIONS_HANDLE: HandleUntyped =

HandleUntyped::weak_from_u64(Shader::TYPE_UUID, 16550102964439850292);

2021-12-14 03:58:23 +00:00

pub const SHADOW_SHADER_HANDLE: HandleUntyped =

HandleUntyped::weak_from_u64(Shader::TYPE_UUID, 1836745567947005696);
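
Each constant above is a weak handle built from a fixed `u64`, and `load_internal_asset!` (called in `build` further down) associates that handle with a WGSL file. The sketch below shows why the ids must be distinct. It assumes a hypothetical `InternalShaders` registry, not Bevy's asset storage. In a plain id-to-source map, a second shader registered under an existing id would silently replace the first. This version therefore rejects a different path for an id that is already taken, while still allowing the same path to be reloaded.

```rust
use std::collections::HashMap;

/// Hypothetical registry keyed by the fixed `u64` ids used in handle constants.
#[derive(Default)]
pub struct InternalShaders {
    by_id: HashMap<u64, (&'static str, String)>,
}

impl InternalShaders {
    /// Registers `source` under `id`; reloading the same path replaces the
    /// source, but a different path for an existing id is an error.
    pub fn load(&mut self, id: u64, path: &'static str, source: &str) -> Result<(), String> {
        if let Some((existing, _)) = self.by_id.get(&id) {
            if *existing != path {
                return Err(format!("id {id} already used by {existing}"));
            }
        }
        self.by_id.insert(id, (path, source.to_string()));
        Ok(())
    }

    pub fn source(&self, id: u64) -> Option<&str> {
        self.by_id.get(&id).map(|(_, src)| src.as_str())
    }
}
```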

/// Sets up the entire PBR infrastructure of bevy.

2023-01-19 22:11:13 +00:00

pub struct PbrPlugin {

/// Controls if the prepass is enabled for the StandardMaterial.

/// For more information about what a prepass is, see the [`bevy_core_pipeline::prepass`] docs.

pub prepass_enabled: bool,

}

impl Default for PbrPlugin {

fn default() -> Self {

Self {

prepass_enabled: true,

}

}

}
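
`PbrPlugin` is a plain configuration struct. `Default` turns the prepass on, and `build` forwards `prepass_enabled` into `MaterialPlugin::<StandardMaterial>` while leaving that plugin's other fields at their defaults via `..default()`. Here is a std-only Rust sketch of that forwarding. `MaterialPluginConfig` and its `extra_flag` field are hypothetical stand-ins for the material plugin's settings.

```rust
/// Same shape as the `PbrPlugin` shown above.
#[derive(Debug, Clone, Copy, PartialEq)]
pub struct PbrPlugin {
    pub prepass_enabled: bool,
}

impl Default for PbrPlugin {
    fn default() -> Self {
        Self { prepass_enabled: true }
    }
}

/// Hypothetical stand-in for the material plugin's settings; `extra_flag`
/// represents whatever other fields keep their default values.
#[derive(Debug, Clone, Copy, PartialEq, Default)]
pub struct MaterialPluginConfig {
    pub prepass_enabled: bool,
    pub extra_flag: bool,
}

impl PbrPlugin {
    /// Forwards the PBR-level switch and defaults everything else,
    /// mirroring the `..default()` call in `build`.
    pub fn material_config(&self) -> MaterialPluginConfig {
        MaterialPluginConfig {
            prepass_enabled: self.prepass_enabled,
            ..Default::default()
        }
    }
}
```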

2020-04-25 00:46:54 +00:00

2020-08-08 03:22:17 +00:00

impl Plugin for PbrPlugin {

2021-07-27 20:21:06 +00:00

fn build(&self, app: &mut App) {

2022-05-31 23:23:25 +00:00

load_internal_asset!(

app,

PBR_TYPES_SHADER_HANDLE,

"render/pbr_types.wgsl",

Shader::from_wgsl

);

load_internal_asset!(

app,

PBR_BINDINGS_SHADER_HANDLE,

"render/pbr_bindings.wgsl",

Shader::from_wgsl

);

2022-06-14 00:58:30 +00:00

load_internal_asset!(app, UTILS_HANDLE, "render/utils.wgsl", Shader::from_wgsl);

load_internal_asset!(

app,

CLUSTERED_FORWARD_HANDLE,

"render/clustered_forward.wgsl",

Shader::from_wgsl

);

load_internal_asset!(

app,

PBR_LIGHTING_HANDLE,

"render/pbr_lighting.wgsl",

Shader::from_wgsl

);

load_internal_asset!(

app,

SHADOWS_HANDLE,

"render/shadows.wgsl",

Shader::from_wgsl

);

2022-06-21 20:50:06 +00:00

load_internal_asset!(

app,

PBR_FUNCTIONS_HANDLE,

"render/pbr_functions.wgsl",

Shader::from_wgsl

);

2022-02-18 22:56:57 +00:00

load_internal_asset!(app, PBR_SHADER_HANDLE, "render/pbr.wgsl", Shader::from_wgsl);

load_internal_asset!(

app,

2021-12-14 03:58:23 +00:00

SHADOW_SHADER_HANDLE,

2022-02-18 22:56:57 +00:00

"render/depth.wgsl",

Shader::from_wgsl

2021-12-14 03:58:23 +00:00

);

2023-01-19 22:11:13 +00:00

load_internal_asset!(

app,

PBR_PREPASS_SHADER_HANDLE,

"render/pbr_prepass.wgsl",

Shader::from_wgsl

);

2021-12-14 03:58:23 +00:00

2022-01-03 07:59:25 +00:00

app.register_type::<CubemapVisibleEntities>()

.register_type::<DirectionalLight>()

.register_type::<PointLight>()

2022-07-08 19:57:43 +00:00

.register_type::<SpotLight>()

|

add `ReflectAsset` and `ReflectHandle` (#5923)

# Objective

^ enable this

Concretely, I need to

- list all handle ids for an asset type

- fetch the asset as `dyn Reflect`, given a `HandleUntyped`

- when encountering a `Handle<T>`, find out what asset type that handle refers to (`T`'s type id) and turn the handle into a `HandleUntyped`

## Solution

- add `ReflectAsset` type containing function pointers for working with assets

```rust
pub struct ReflectAsset {
    type_uuid: Uuid,
    assets_resource_type_id: TypeId, // TypeId of the `Assets<T>` resource
    get: fn(&World, HandleUntyped) -> Option<&dyn Reflect>,
    get_mut: fn(&mut World, HandleUntyped) -> Option<&mut dyn Reflect>,
    get_unchecked_mut: unsafe fn(&World, HandleUntyped) -> Option<&mut dyn Reflect>,
    add: fn(&mut World, &dyn Reflect) -> HandleUntyped,
    set: fn(&mut World, HandleUntyped, &dyn Reflect) -> HandleUntyped,
    len: fn(&World) -> usize,
    ids: for<'w> fn(&'w World) -> Box<dyn Iterator<Item = HandleId> + 'w>,
    remove: fn(&mut World, HandleUntyped) -> Option<Box<dyn Reflect>>,
}
```

- add `ReflectHandle` type relating the handle back to the asset type and providing a way to create a `HandleUntyped`

```rust
pub struct ReflectHandle {
    type_uuid: Uuid,
    asset_type_id: TypeId,
    downcast_handle_untyped: fn(&dyn Any) -> Option<HandleUntyped>,
}
```

- add the corresponding `FromType` impls

- add an `app.register_asset_reflect::<T>()` method, meant to be called after `.add_asset::<T>()`, which registers `ReflectAsset` and `ReflectHandle` in the type registry
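The core of `ReflectAsset` is a table of function pointers, monomorphized per asset type and looked up by `TypeId`. Below is a minimal std-only sketch of that pattern, built on assumptions. `World`, `Assets`, `ReflectAssetLike`, `register`, and `total_assets` are toy stand-ins, not Bevy's types. `u64` ids replace `HandleUntyped`, and `dyn Any` replaces `dyn Reflect`.

```rust
use std::any::{Any, TypeId};
use std::collections::HashMap;

/// Toy stand-in for `Assets<T>`: asset values keyed by a numeric id
/// (the id plays the role of `HandleUntyped` in this sketch).
struct Assets<T> {
    items: HashMap<u64, T>,
}

/// Toy world: type-erased resources keyed by their `TypeId`.
#[derive(Default)]
struct World {
    resources: HashMap<TypeId, Box<dyn Any>>,
}

impl World {
    fn insert_assets<T: 'static>(&mut self, assets: Assets<T>) {
        self.resources.insert(TypeId::of::<Assets<T>>(), Box::new(assets));
    }

    fn assets<T: 'static>(&self) -> Option<&Assets<T>> {
        self.resources.get(&TypeId::of::<Assets<T>>())?.downcast_ref()
    }
}

/// Hypothetical function-pointer table in the spirit of `ReflectAsset`,
/// reduced to two operations.
#[derive(Clone, Copy)]
struct ReflectAssetLike {
    assets_resource_type_id: TypeId,
    get: fn(&World, u64) -> Option<&dyn Any>,
    len: fn(&World) -> usize,
}

impl ReflectAssetLike {
    /// Plays the role of a `FromType<T>` impl: the non-capturing closures are
    /// monomorphized for `T` and stored as plain `fn` pointers.
    fn of<T: 'static>() -> Self {
        ReflectAssetLike {
            assets_resource_type_id: TypeId::of::<Assets<T>>(),
            get: |world, id| world.assets::<T>()?.items.get(&id).map(|a| a as &dyn Any),
            len: |world| world.assets::<T>().map_or(0, |a| a.items.len()),
        }
    }
}

/// Registers `T` in a registry keyed by the `TypeId` of `Assets<T>`.
fn register<T: 'static>(registry: &mut HashMap<TypeId, ReflectAssetLike>) {
    let reflect = ReflectAssetLike::of::<T>();
    registry.insert(reflect.assets_resource_type_id, reflect);
}

/// Counts assets of every registered type without naming any concrete type.
fn total_assets(world: &World, registry: &HashMap<TypeId, ReflectAssetLike>) -> usize {
    registry.values().map(|reflect| (reflect.len)(world)).sum()
}
```

Code that only holds the registry can count or fetch assets of any registered type. This is the "without knowing their types at compile time" property described in the changelog.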

---

## Changelog

- add `ReflectAsset` and `ReflectHandle` types, which allow code to use reflection to manipulate arbitrary assets without knowing their types at compile time

2022-10-28 20:42:33 +00:00

.register_asset_reflect::<StandardMaterial>()

2022-07-04 13:04:20 +00:00

.register_type::<AmbientLight>()
.register_type::<DirectionalLightShadowMap>()

2022-11-07 19:44:17 +00:00

.register_type::<ClusterConfig>()
.register_type::<ClusterZConfig>()
.register_type::<ClusterFarZMode>()

2022-07-04 13:04:20 +00:00

.register_type::<PointLightShadowMap>()

2022-11-04 01:34:12 +00:00

.add_plugin(MeshRenderPlugin)

2023-01-19 22:11:13 +00:00

.add_plugin(MaterialPlugin::<StandardMaterial> {
prepass_enabled: self.prepass_enabled,
..default()
})

2021-12-14 03:58:23 +00:00

.init_resource::<AmbientLight>()

2022-03-24 00:20:27 +00:00

.init_resource::<GlobalVisiblePointLights>()

2021-12-14 03:58:23 +00:00

.init_resource::<DirectionalLightShadowMap>()
.init_resource::<PointLightShadowMap>()

2022-05-30 18:36:03 +00:00

.add_plugin(ExtractResourcePlugin::<AmbientLight>::default())

2021-12-14 03:58:23 +00:00

.add_system_to_stage(
CoreStage::PostUpdate,
// NOTE: Clusters need to have been added before update_clusters is run so
// add as an exclusive system
add_clusters

Exclusive Systems Now Implement `System`. Flexible Exclusive System Params (#6083)

# Objective

The [Stageless RFC](https://github.com/bevyengine/rfcs/pull/45) involves allowing exclusive systems to be referenced and ordered relative to parallel systems. We've agreed that unifying systems under `System` is the right move.

This is an alternative to #4166 (see rationale in the comments I left there). Note that this builds on the learnings established there (and borrows some patterns).

## Solution

This unifies parallel and exclusive systems under the shared `System` trait, removing the old `ExclusiveSystem` trait / impls. This is accomplished by adding a new `ExclusiveFunctionSystem` impl similar to `FunctionSystem`. It is backed by `ExclusiveSystemParam`, which is similar to `SystemParam`. There is also a new, flattened `SystemContainer` API (which cuts out a lot of trait and type complexity).

This means you can remove all cases of `exclusive_system()`:

```rust
// before
app.add_system(some_system.exclusive_system());

// after
app.add_system(some_system);
```

I've also implemented `ExclusiveSystemParam` for `&mut QueryState` and `&mut SystemState`, which makes this possible in exclusive systems:

```rust
fn some_exclusive_system(
    world: &mut World,
    transforms: &mut QueryState<&Transform>,
    state: &mut SystemState<(Res<Time>, Query<&Player>)>,
) {
    for transform in transforms.iter(world) {
        println!("{transform:?}");
    }
    let (time, players) = state.get(world);
    for player in players.iter() {
        println!("{player:?}");
    }
}
```

Note that "exclusive function systems" assume `&mut World` is present (and the first param). I think this is a fair assumption, given that the presence of `&mut World` is what defines the need for an exclusive system.
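The unification can be pictured as one trait that every system implements. Exclusive systems receive `&mut World` as their first argument, plus state that is cached across runs. This std-only toy is a sketch under assumptions. `World`, `System`, `FunctionSystem`, `ExclusiveFunctionSystem`, and `run_schedule` here are simplified stand-ins, not Bevy's real definitions.

```rust
/// Toy world (hypothetical): just a list of x positions.
struct World {
    positions: Vec<f32>,
}

/// One trait that every schedulable system implements.
trait System {
    fn run(&mut self, world: &mut World);
}

/// A "parallel" system: only needs shared access to the world.
struct FunctionSystem<F: FnMut(&World)>(F);

impl<F: FnMut(&World)> System for FunctionSystem<F> {
    fn run(&mut self, world: &mut World) {
        (self.0)(&*world);
    }
}

/// An "exclusive" system: receives `&mut World` first, plus state `S` that
/// persists between runs (the role `&mut QueryState` / `&mut SystemState`
/// play as exclusive system params).
struct ExclusiveFunctionSystem<S, F: FnMut(&mut World, &mut S)> {
    state: S,
    func: F,
}

impl<S, F: FnMut(&mut World, &mut S)> System for ExclusiveFunctionSystem<S, F> {
    fn run(&mut self, world: &mut World) {
        (self.func)(world, &mut self.state);
    }
}

/// Runs every system once, in order, regardless of which kind it is.
fn run_schedule(schedule: &mut [Box<dyn System>], world: &mut World) {
    for system in schedule.iter_mut() {
        system.run(world);
    }
}
```

Because both kinds live behind `Box<dyn System>`, they can be stored and ordered in a single list. That is the property the stageless work needs.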

I added some targeted `'static` constraints on `SystemParam`, which removed the need for this:

```rust
fn some_exclusive_system(state: &mut SystemState<(Res<'static, Time>, Query<&'static Player>)>) {}
```

## Related

- #2923

- #3001

- #3946

## Changelog

- `ExclusiveSystem` trait (and implementations) has been removed in favor of sharing the `System` trait.

- `ExclusiveFunctionSystem` and `ExclusiveSystemParam` were added, enabling flexible exclusive function systems

- `&mut SystemState` and `&mut QueryState` now implement `ExclusiveSystemParam`

- Exclusive and parallel System configuration is now done via a unified `SystemDescriptor`, `IntoSystemDescriptor`, and `SystemContainer` api.

## Migration Guide

Calling `.exclusive_system()` is no longer required (or supported) for converting exclusive system functions to exclusive systems:

```rust
// Old (0.8)
app.add_system(some_exclusive_system.exclusive_system());

// New (0.9)
app.add_system(some_exclusive_system);
```

Converting "normal" parallel systems to exclusive systems is done by calling the exclusive ordering apis:

```rust
// Old (0.8)
app.add_system(some_system.exclusive_system().at_end());

// New (0.9)
app.add_system(some_system.at_end());
```

Query state in exclusive systems can now be cached via ExclusiveSystemParams, which should be preferred for clarity and performance reasons:

```rust
// Old (0.8)
fn some_system(world: &mut World) {
    let mut transforms = world.query::<&Transform>();
    for transform in transforms.iter(world) {
    }
}

// New (0.9)
fn some_system(world: &mut World, transforms: &mut QueryState<&Transform>) {
    for transform in transforms.iter(world) {
    }
}
```

2022-09-26 23:57:07 +00:00

.at_start()

2021-12-14 03:58:23 +00:00

.label(SimulationLightSystems::AddClusters),
)
.add_system_to_stage(
CoreStage::PostUpdate,
assign_lights_to_clusters
.label(SimulationLightSystems::AssignLightsToClusters)

2022-04-25 14:32:56 +00:00

.after(TransformSystem::TransformPropagate)

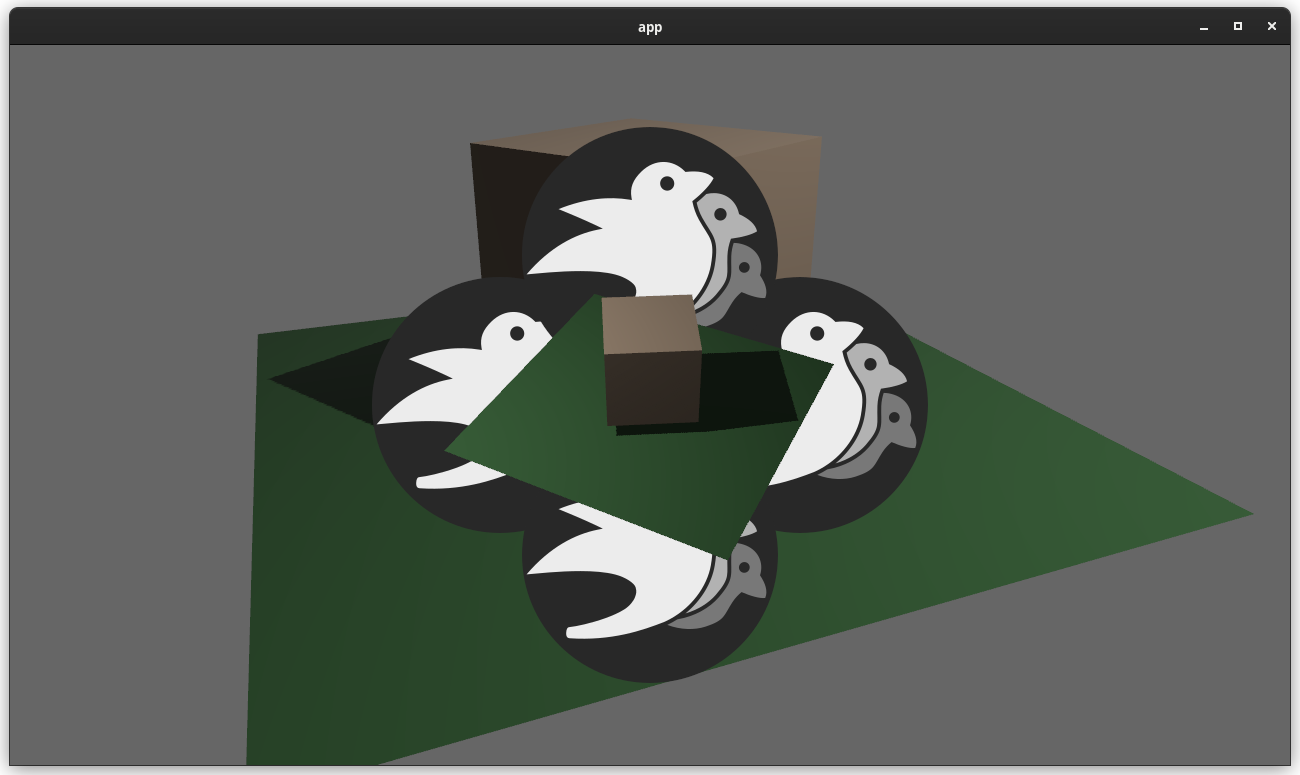

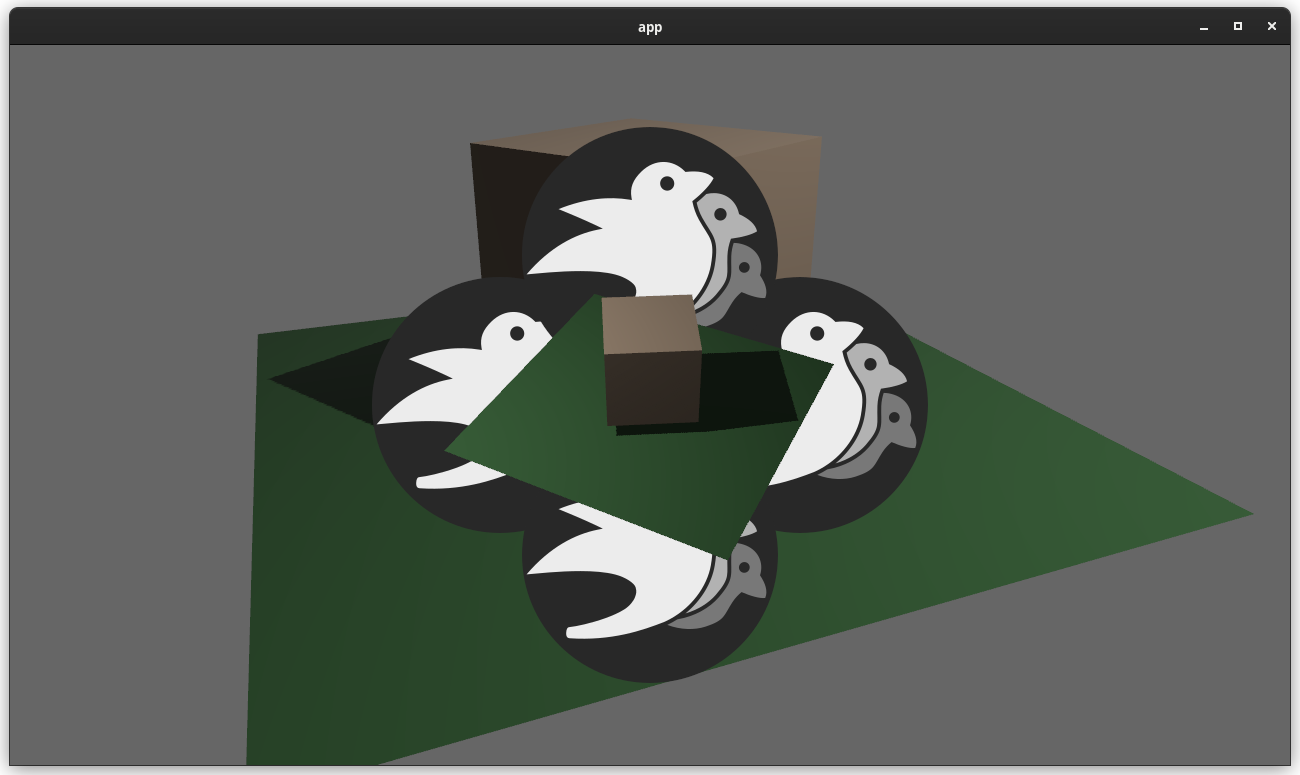

Visibility Inheritance, universal ComputedVisibility and RenderLayers support (#5310)

# Objective

Fixes #4907. Fixes #838. Fixes #5089.

Supersedes #5146. Supersedes #2087. Supersedes #865. Supersedes #5114.

Visibility is currently entirely local. Set a parent entity to be invisible, and the children are still visible. This makes it hard for users to hide entire hierarchies of entities.

Additionally, the semantics of `Visibility` vs `ComputedVisibility` are inconsistent across entity types. 3D meshes use `ComputedVisibility` as the "definitive" visibility component, with `Visibility` being just one data source. Sprites just use `Visibility`, which means they can't feed off of `ComputedVisibility` data, such as culling information, RenderLayers, and (added in this pr) visibility inheritance information.

## Solution

Splits `ComputedVisibility::is_visible` into `ComputedVisibility::is_visible_in_view` and `ComputedVisibility::is_visible_in_hierarchy`. For each visible entity, `is_visible_in_hierarchy` is computed by propagating visibility down the hierarchy. The `ComputedVisibility::is_visible()` function combines these two booleans into the canonical "is this entity visible" check.

Additionally, all entities that have `Visibility` now also have `ComputedVisibility`. Sprites, Lights, and UI entities now use `ComputedVisibility` when appropriate.

This means that in addition to visibility inheritance, everything using Visibility now also supports RenderLayers. Notably, Sprites (and other 2d objects) now support `RenderLayers` and work properly across multiple views.
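The hierarchy half of the split can be sketched as a depth-first walk. At each entity, the walk ANDs the entity's local flag with its parent's result. `is_visible()` then ANDs that result with the per-view one. The std-only sketch below is an illustration under stated assumptions. `Node`, `Computed`, and `Layers` are hypothetical stand-ins for `Visibility`, `ComputedVisibility`, and `RenderLayers`. It also assumes a single view and leaves out frustum culling.

```rust
/// Simplified stand-in for a `RenderLayers` bitmask: one bit per layer (assumption).
#[derive(Clone, Copy)]
struct Layers(u32);

impl Layers {
    fn intersects(self, other: Layers) -> bool {
        (self.0 & other.0) != 0
    }
}

/// One entity in a toy hierarchy.
struct Node {
    visible: bool, // local `Visibility`
    layers: Layers,
    children: Vec<usize>,
}

/// Toy counterpart of `ComputedVisibility`, split into its two halves.
#[derive(Clone, Copy, Debug, Default, PartialEq)]
struct Computed {
    in_hierarchy: bool,
    in_view: bool,
}

impl Computed {
    /// Visible only if visible in the hierarchy and in the view.
    fn is_visible(self) -> bool {
        self.in_hierarchy && self.in_view
    }
}

/// Walks the tree from `root`; an entity is visible in the hierarchy only if
/// it and all of its ancestors are locally visible.
fn propagate(nodes: &[Node], root: usize, view_layers: Layers, out: &mut [Computed]) {
    let mut stack = vec![(root, true)];
    while let Some((index, parent_visible)) = stack.pop() {
        let node = &nodes[index];
        let in_hierarchy = parent_visible && node.visible;
        out[index] = Computed {
            in_hierarchy,
            // No frustum culling here: only the layer check decides view visibility.
            in_view: node.layers.intersects(view_layers),
        };
        for &child in &node.children {
            stack.push((child, in_hierarchy));
        }
    }
}
```

A child of a hidden parent ends up with `in_view == true` but `is_visible() == false`. This is the case that was previously impossible to express with purely local visibility.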

Also note that this does increase the amount of work done per sprite. Bevymark with 100,000 sprites on `main` runs in `0.017612` seconds and this PR runs in `0.01902` seconds, about 8% slower. That is certainly a gap, but I believe the api consistency and extra functionality this buys us is worth it. See [this thread](https://github.com/bevyengine/bevy/pull/5146#issuecomment-1182783452) for more info. Note that #5146 in combination with #5114 _are_ a viable alternative to this PR and _would_ perform better, but that comes at the cost of api inconsistencies and doing visibility calculations in the "wrong" place. The current visibility system does have potential for performance improvements. I would prefer to evolve that one system as a whole rather than doing custom hacks / different behaviors for each feature slice.

Here is a "split screen" example where the left camera uses RenderLayers to filter out the blue sprite.

Note that this builds directly on #5146 and that @james7132 deserves the credit for the baseline visibility inheritance work. This pr moves the inherited visibility field into `ComputedVisibility`, then does the additional work of porting everything to `ComputedVisibility`. See my [comments here](https://github.com/bevyengine/bevy/pull/5146#issuecomment-1182783452) for rationale.

## Follow up work

* Now that lights use ComputedVisibility, VisibleEntities now includes "visible lights" in the entity list. Functionally this is not a problem, as we use queries to filter the list down in the desired context. But we should consider splitting this out into a separate `VisibleLights` collection for both clarity and performance reasons. And _maybe_ even consider scoping `VisibleEntities` down to `VisibleMeshes`?

* Investigate alternative sprite rendering impls (in combination with visibility system tweaks) that avoid re-generating a per-view fixedbitset of visible entities every frame, then checking each ExtractedEntity. This is where most of the performance overhead lives. Ex: we could generate ExtractedEntities per-view using the VisibleEntities list, avoiding the need for the bitset.

* Should ComputedVisibility use bitflags under the hood? This would cut down on the size of the component, potentially speed up the `is_visible()` function, and allow us to cheaply expand ComputedVisibility with more data (ex: split out local visibility and parent visibility, add more culling classes, etc).

---

## Changelog

* ComputedVisibility now takes hierarchy visibility into account.

* 2D, UI and Light entities now use the ComputedVisibility component.

## Migration Guide

If you were previously reading `Visibility::is_visible` as the "actual visibility" for sprites or lights, use `ComputedVisibility::is_visible()` instead:

```rust
// before (0.7)
fn system(query: Query<&Visibility>) {
    for visibility in query.iter() {
        if visibility.is_visible {
            info!("found visible entity");
        }
    }
}

// after (0.8)
fn system(query: Query<&ComputedVisibility>) {
    for visibility in query.iter() {
        if visibility.is_visible() {
            info!("found visible entity");
        }
    }
}
```

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

2022-07-15 23:24:42 +00:00

.after(VisibilitySystems::CheckVisibility)

Camera Driven Rendering (#4745)

This adds "high level camera driven rendering" to Bevy. The goal is to give users more control over what gets rendered (and where) without needing to deal with render logic. This will make scenarios like "render to texture", "multiple windows", "split screen", "2d on 3d", "3d on 2d", "pass layering", and more significantly easier.

Here is an [example of a 2d render sandwiched between two 3d renders (each from a different perspective)](https://gist.github.com/cart/4fe56874b2e53bc5594a182fc76f4915):

Users can now spawn a camera, point it at a RenderTarget (a texture or a window), and it will "just work".

Rendering to a second window is as simple as spawning a second camera and assigning it to a specific window id:

```rust
// main camera (main window)
commands.spawn_bundle(Camera2dBundle::default());

// second camera (other window)
commands.spawn_bundle(Camera2dBundle {
    camera: Camera {
        target: RenderTarget::Window(window_id),
        ..default()
    },
    ..default()
});
```

Rendering to a texture is as simple as pointing the camera at a texture:

```rust
commands.spawn_bundle(Camera2dBundle {
    camera: Camera {
        target: RenderTarget::Texture(image_handle),
        ..default()
    },
    ..default()
});
```

Cameras now have a "render priority", which controls the order they are drawn in. If you want to use a camera's output texture as a texture in the main pass, just set the priority to a number lower than the main pass camera (which defaults to `0`).

```rust
// main pass camera with a default priority of 0
commands.spawn_bundle(Camera2dBundle::default());

commands.spawn_bundle(Camera2dBundle {
    camera: Camera {
        target: RenderTarget::Texture(image_handle.clone()),
        priority: -1,
        ..default()
    },
    ..default()
});

commands.spawn_bundle(SpriteBundle {
    texture: image_handle,
    ..default()
});
```

Priority can also be used to layer two cameras on top of each other for the same RenderTarget. This is what "2d on top of 3d" looks like in the new system:

```rust
commands.spawn_bundle(Camera3dBundle::default());

commands.spawn_bundle(Camera2dBundle {
    camera: Camera {
        // this will render 2d entities "on top" of the default 3d camera's render
        priority: 1,
        ..default()
    },
    ..default()
});
```

There is no longer the concept of a global "active camera". Resources like `ActiveCamera<Camera2d>` and `ActiveCamera<Camera3d>` have been replaced with the camera-specific `Camera::is_active` field. This does put the onus on users to manage which cameras should be active.

Cameras are now assigned a single render graph as an "entry point", which is configured on each camera entity using the new `CameraRenderGraph` component. The old `PerspectiveCameraBundle` and `OrthographicCameraBundle` (generic on camera marker components like Camera2d and Camera3d) have been replaced by `Camera3dBundle` and `Camera2dBundle`, which set 3d and 2d default values for the `CameraRenderGraph` and projections.

```rust
// old 3d perspective camera
commands.spawn_bundle(PerspectiveCameraBundle::default());

// new 3d perspective camera
commands.spawn_bundle(Camera3dBundle::default());
```

```rust
// old 2d orthographic camera
commands.spawn_bundle(OrthographicCameraBundle::new_2d());

// new 2d orthographic camera
commands.spawn_bundle(Camera2dBundle::default());
```

```rust
// old 3d orthographic camera
commands.spawn_bundle(OrthographicCameraBundle::new_3d());

// new 3d orthographic camera
commands.spawn_bundle(Camera3dBundle {
    projection: OrthographicProjection {
        scale: 3.0,
        scaling_mode: ScalingMode::FixedVertical,
        ..default()
    }
    .into(),
    ..default()
});
```

Note that `Camera3dBundle` now uses a new `Projection` enum instead of hard-coding the projection into the type. There are a number of motivators for this change: the render graph is now a part of the bundle, the way "generic bundles" work in Rust's type system prevents the nice `..default()` syntax, and changing projections at runtime is much easier with an enum (e.g. for editor scenarios). I'm open to discussing this choice, but I'm relatively certain we will all come to the same conclusion here. `Camera2dBundle` and `Camera3dBundle` are much clearer than being generic on marker components or using non-default constructors.
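The enum is what lets one non-generic bundle type accept `..default()` and `OrthographicProjection { .. }.into()`. Switching projections then becomes a plain field assignment. Below is a std-only sketch of that shape. All field names, default values, and the `CameraBundleLike` struct are hypothetical and may differ from Bevy's real definitions.

```rust
/// Hypothetical perspective parameters (field names and defaults are illustrative).
#[derive(Clone, Debug, PartialEq)]
struct PerspectiveProjection {
    fov: f32,
    far: f32,
}

impl Default for PerspectiveProjection {
    fn default() -> Self {
        PerspectiveProjection { fov: std::f32::consts::FRAC_PI_4, far: 1000.0 }
    }
}

/// Hypothetical orthographic parameters.
#[derive(Clone, Debug, PartialEq)]
struct OrthographicProjection {
    scale: f32,
    far: f32,
}

impl Default for OrthographicProjection {
    fn default() -> Self {
        OrthographicProjection { scale: 1.0, far: 1000.0 }
    }
}

/// One field type covers both projections, so it can be swapped at runtime.
#[derive(Clone, Debug, PartialEq)]
enum Projection {
    Perspective(PerspectiveProjection),
    Orthographic(OrthographicProjection),
}

impl Default for Projection {
    fn default() -> Self {
        Projection::Perspective(PerspectiveProjection::default())
    }
}

/// Enables `OrthographicProjection { .. }.into()` inside a struct literal.
impl From<OrthographicProjection> for Projection {
    fn from(projection: OrthographicProjection) -> Self {
        Projection::Orthographic(projection)
    }
}

impl Projection {
    fn far(&self) -> f32 {
        match self {
            Projection::Perspective(p) => p.far,
            Projection::Orthographic(p) => p.far,
        }
    }
}

/// A non-generic, bundle-like struct can derive `Default` and use `..Default::default()`.
#[derive(Default)]
struct CameraBundleLike {
    projection: Projection,
    priority: isize,
}
```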

If you want to run a custom render graph on a camera, just set the `CameraRenderGraph` component:

```rust
commands.spawn_bundle(Camera3dBundle {
    camera_render_graph: CameraRenderGraph::new(some_render_graph_name),
    ..default()
});
```

Just note that if the graph requires data from specific components to work (such as `Camera3d` config, which is provided in the `Camera3dBundle`), make sure the relevant components have been added.

Speaking of using components to configure graphs / passes, there are a number of new configuration options:

```rust
commands.spawn_bundle(Camera3dBundle {
    camera_3d: Camera3d {
        // overrides the default global clear color
        clear_color: ClearColorConfig::Custom(Color::RED),
        ..default()
    },
    ..default()
});

commands.spawn_bundle(Camera3dBundle {
    camera_3d: Camera3d {
        // disables clearing
        clear_color: ClearColorConfig::None,
        ..default()
    },
    ..default()
});
```

Expect to see more of the "graph configuration Components on Cameras" pattern in the future.
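The per-camera override pattern amounts to a three-way resolution against the global default. Below is a minimal std-only sketch. It assumes a `Default` variant that defers to the global clear color. The `Color` struct and the `resolve_clear_color` function are hypothetical.

```rust
/// Minimal color type for the sketch.
#[derive(Clone, Copy, Debug, PartialEq)]
struct Color {
    r: f32,
    g: f32,
    b: f32,
}

/// Per-camera clear behavior: the two variants used above, plus a `Default`
/// variant that defers to the global setting (assumption).
#[derive(Clone, Copy, Debug, Default, PartialEq)]
enum ClearColorConfig {
    #[default]
    Default,
    Custom(Color),
    None,
}

/// Returns the color this camera's pass should clear to, or `None` to skip clearing.
fn resolve_clear_color(config: ClearColorConfig, global: Color) -> Option<Color> {
    match config {
        ClearColorConfig::Default => Some(global),
        ClearColorConfig::Custom(color) => Some(color),
        ClearColorConfig::None => None,
    }
}
```

The same shape would carry over to other per-camera settings that fall back to a global resource.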

By popular demand, UI no longer requires a dedicated camera. `UiCameraBundle` has been removed. `Camera2dBundle` and `Camera3dBundle` now both default to rendering UI as part of their own render graphs. To disable UI rendering for a camera, insert a `CameraUi` component with `is_enabled: false`:

```rust
commands
    .spawn_bundle(Camera3dBundle::default())
    .insert(CameraUi {
        is_enabled: false,
        ..default()
    });
```

## Other Changes

* The separate clear pass has been removed. We should revisit this for things like sky rendering, but I think this PR should "keep it simple" until we're ready to properly support that (for code complexity and performance reasons). We can come up with the right design for a modular clear pass in a followup pr.

* I reorganized bevy_core_pipeline into Core2dPlugin and Core3dPlugin (and core_2d / core_3d modules). Everything is pretty much the same as before, just logically separate. I've moved relevant types (like Camera2d, Camera3d, Camera3dBundle, Camera2dBundle) into their relevant modules, which is what motivated this reorganization.

* I adapted the `scene_viewer` example (which relied on the ActiveCameras behavior) to the new system. I also refactored bits and pieces to be a bit simpler.

* All of the examples have been ported to the new camera approach. `render_to_texture` and `multiple_windows` are now _much_ simpler. I removed `two_passes` because it is less relevant with the new approach. If someone wants to add a new "layered custom pass with CameraRenderGraph" example, that might fill a similar niche. But I don't feel much pressure to add that in this pr.

* Cameras now have `target_logical_size` and `target_physical_size` fields, which makes finding the size of a camera's render target _much_ simpler. As a result, the `Assets<Image>` and `Windows` parameters were removed from `Camera::world_to_screen`, making that operation much more ergonomic.

* Render order ambiguities between cameras with the same target and the same priority now produce a warning. This accomplishes two goals:

1. Now that there is no "global" active camera, by default spawning two cameras will result in two renders (one covering the other). This would be a silent performance killer that would be hard to detect after the fact. By detecting ambiguities, we can provide a helpful warning when this occurs.

2. Render order ambiguities could result in unexpected / unpredictable render results. Resolving them makes sense.
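Priority ordering and the ambiguity warning can both be expressed as a stable sort plus a count per `(target, priority)` pair. Below is a std-only sketch under assumptions. `Target`, `CameraInfo`, and `order_cameras` are hypothetical, and Bevy's real implementation may detect ambiguities differently.

```rust
use std::collections::HashMap;

/// Hypothetical render target identifier (window id or texture id).
#[derive(Clone, Copy, Debug, PartialEq, Eq, Hash)]
enum Target {
    Window(u32),
    Texture(u32),
}

#[derive(Clone, Copy, Debug)]
struct CameraInfo {
    name: &'static str,
    target: Target,
    priority: isize,
}

/// Sorts cameras so lower priorities render first (stable, so ties keep their
/// original order) and returns every (target, priority) pair that more than
/// one camera claims, i.e. the cases that would trigger a warning.
fn order_cameras(cameras: &mut [CameraInfo]) -> Vec<(Target, isize)> {
    cameras.sort_by_key(|camera| camera.priority);
    let mut counts: HashMap<(Target, isize), usize> = HashMap::new();
    for camera in cameras.iter() {
        *counts.entry((camera.target, camera.priority)).or_insert(0) += 1;
    }
    let mut ambiguities: Vec<(Target, isize)> = counts
        .into_iter()
        .filter(|&(_, count)| count > 1)
        .map(|(key, _)| key)
        .collect();
    ambiguities.sort_by_key(|&(_, priority)| priority);
    ambiguities
}
```

A camera rendering to a texture at priority `-1` sorts ahead of the main pass at `0`. Two cameras that share a window and a priority are reported as ambiguous.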

## Follow Up Work

* Per-Camera viewports, which will make it possible to render to a smaller area inside of a RenderTarget (great for something like split screen)

* Camera-specific MSAA config (should use the same "overriding" pattern used for ClearColor)

* Graph Based Camera Ordering: priorities are simple, but they make complicated ordering constraints harder to express. We should consider adopting a "graph based" camera ordering model with "before" and "after" relationships to other cameras (or build it "on top" of the priority system).

* Consider allowing graphs to run subgraphs from any nest level (aka a global namespace for graphs). Right now the 2d and 3d graphs each need their own UI subgraph, which feels "fine" in the short term. But being able to share subgraphs between other subgraphs seems valuable.

* Consider splitting `bevy_core_pipeline` into `bevy_core_2d` and `bevy_core_3d` packages. There's a shared "clear color" dependency here, which would need a new home.

2022-06-02 00:12:17 +00:00

.after(CameraUpdateSystem)

2022-04-25 14:32:56 +00:00

.after(ModifiesWindows),

2021-12-14 03:58:23 +00:00

)
.add_system_to_stage(
CoreStage::PostUpdate,
update_directional_light_frusta

2022-07-08 19:57:43 +00:00

.label(SimulationLightSystems::UpdateLightFrusta)

2022-07-15 23:24:42 +00:00

// This must run after CheckVisibility because it relies on ComputedVisibility::is_visible()
.after(VisibilitySystems::CheckVisibility)

2022-10-27 12:56:03 +00:00

.after(TransformSystem::TransformPropagate)
// We assume that no entity will be both a directional light and a spot light,

2023-01-06 00:43:30 +00:00

// so these systems will run independently of one another.

2022-10-27 12:56:03 +00:00

// FIXME: Add an archetype invariant for this https://github.com/bevyengine/bevy/issues/1481.
.ambiguous_with(update_spot_light_frusta),

2021-12-14 03:58:23 +00:00

)

2020-05-26 04:57:48 +00:00

.add_system_to_stage(

2021-02-18 21:20:37 +00:00

CoreStage::PostUpdate,

2021-12-14 03:58:23 +00:00

update_point_light_frusta

2022-07-08 19:57:43 +00:00

.label(SimulationLightSystems::UpdateLightFrusta)
.after(TransformSystem::TransformPropagate)
.after(SimulationLightSystems::AssignLightsToClusters),
)
.add_system_to_stage(
CoreStage::PostUpdate,
update_spot_light_frusta
.label(SimulationLightSystems::UpdateLightFrusta)

2021-12-14 03:58:23 +00:00

.after(TransformSystem::TransformPropagate)
.after(SimulationLightSystems::AssignLightsToClusters),

2020-11-15 19:34:55 +00:00

)

2021-12-14 03:58:23 +00:00

.add_system_to_stage(
CoreStage::PostUpdate,
check_light_mesh_visibility
.label(SimulationLightSystems::CheckLightVisibility)
.after(TransformSystem::TransformPropagate)

2022-07-08 19:57:43 +00:00

.after(SimulationLightSystems::UpdateLightFrusta)

2021-12-14 03:58:23 +00:00

// NOTE: This MUST be scheduled AFTER the core renderer visibility check
// because that resets entity ComputedVisibility for the first view
// which would override any results from this otherwise
.after(VisibilitySystems::CheckVisibility),
);

2021-12-14 23:04:26 +00:00

app.world

2022-02-27 22:37:18 +00:00

.resource_mut::<Assets<StandardMaterial>>()

2021-12-14 23:04:26 +00:00

.set_untracked(

2021-12-25 21:45:43 +00:00

Handle::<StandardMaterial>::default(),

2021-12-14 23:04:26 +00:00

StandardMaterial {
base_color: Color::rgb(1.0, 0.0, 0.5),
unlit: true,
..Default::default()
},
);

2022-01-08 10:39:43 +00:00

let render_app = match app.get_sub_app_mut(RenderApp) {
Ok(render_app) => render_app,
Err(_) => return,
};

2021-12-14 03:58:23 +00:00

render_app
.add_system_to_stage(
RenderStage::Extract,
render::extract_clusters.label(RenderLightSystems::ExtractClusters),
)
.add_system_to_stage(
RenderStage::Extract,
render::extract_lights.label(RenderLightSystems::ExtractLights),
)
.add_system_to_stage(
RenderStage::Prepare,
// this is added as an exclusive system because it contributes new views. it must run (and have Commands applied)
// _before_ the `prepare_views()` system is run. ideally this becomes a normal system when "stageless" features come out
render::prepare_lights

Exclusive Systems Now Implement `System`. Flexible Exclusive System Params (#6083)

# Objective

The [Stageless RFC](https://github.com/bevyengine/rfcs/pull/45) involves allowing exclusive systems to be referenced and ordered relative to parallel systems. We've agreed that unifying systems under `System` is the right move.

This is an alternative to #4166 (see rationale in the comments I left there). Note that this builds on the learnings established there (and borrows some patterns).

## Solution

This unifies parallel and exclusive systems under the shared `System` trait, removing the old `ExclusiveSystem` trait / impls. This is accomplished by adding a new `ExclusiveFunctionSystem` impl similar to `FunctionSystem`. It is backed by `ExclusiveSystemParam`, which is similar to `SystemParam`. There is a new flattened out SystemContainer api (which cuts out a lot of trait and type complexity).

This means you can remove all cases of `exclusive_system()`:

```rust

// before

commands.add_system(some_system.exclusive_system());

// after

commands.add_system(some_system);

```

I've also implemented `ExclusiveSystemParam` for `&mut QueryState` and `&mut SystemState`, which makes this possible in exclusive systems:

```rust

fn some_exclusive_system(

world: &mut World,

transforms: &mut QueryState<&Transform>,

state: &mut SystemState<(Res<Time>, Query<&Player>)>,

) {

for transform in transforms.iter(world) {

println!("{transform:?}");

}

let (time, players) = state.get(world);

for player in players.iter() {

println!("{player:?}");

}

}

```

Note that "exclusive function systems" assume `&mut World` is present (and the first param). I think this is a fair assumption, given that the presence of `&mut World` is what defines the need for an exclusive system.

I added some targeted SystemParam `static` constraints, which removed the need for this:

``` rust

fn some_exclusive_system(state: &mut SystemState<(Res<'static, Time>, Query<&'static Player>)>) {}

```

## Related

- #2923

- #3001

- #3946

## Changelog

- `ExclusiveSystem` trait (and implementations) has been removed in favor of sharing the `System` trait.

- `ExclusiveFunctionSystem` and `ExclusiveSystemParam` were added, enabling flexible exclusive function systems

- `&mut SystemState` and `&mut QueryState` now implement `ExclusiveSystemParam`

- Exclusive and parallel System configuration is now done via a unified `SystemDescriptor`, `IntoSystemDescriptor`, and `SystemContainer` api.

## Migration Guide

Calling `.exclusive_system()` is no longer required (or supported) for converting exclusive system functions to exclusive systems:

```rust

// Old (0.8)

app.add_system(some_exclusive_system.exclusive_system());

// New (0.9)

app.add_system(some_exclusive_system);

```

Converting "normal" parallel systems to exclusive systems is done by calling the exclusive ordering apis:

```rust

// Old (0.8)

app.add_system(some_system.exclusive_system().at_end());

// New (0.9)

app.add_system(some_system.at_end());

```

Query state in exclusive systems can now be cached via `ExclusiveSystemParam`s, which should be preferred for both clarity and performance:

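```rust
// Old (0.8)
fn some_system(world: &mut World) {
    // The query state is rebuilt every time the system runs.
    let mut transforms = world.query::<&Transform>();
    for transform in transforms.iter(world) {
        // use `transform` here
    }
}

// New (0.9)
fn some_system(world: &mut World, transforms: &mut QueryState<&Transform>) {
    // The query state is cached between runs.
    for transform in transforms.iter(world) {
        // use `transform` here
    }
}
```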
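The performance argument can be shown with a toy model in which building a query state is a counted operation. `World`, `ToyQueryState`, `build_query`, `old_style`, and `new_style` are hypothetical names used only in this sketch. They mirror the shape of the Old/New migration example rather than Bevy's real `World::query` or `QueryState` API.

```rust
// Hypothetical toy World: `build_query` stands in for the setup work
// that creating a fresh query state costs.
struct World {
    transforms: Vec<f32>,
    query_builds: u32,
}

// Toy stand-in for a query state (not Bevy's `QueryState`).
struct ToyQueryState;

impl World {
    fn build_query(&mut self) -> ToyQueryState {
        self.query_builds += 1; // count every (re)build
        ToyQueryState
    }
}

impl ToyQueryState {
    fn iter<'w>(&self, world: &'w World) -> std::slice::Iter<'w, f32> {
        world.transforms.iter()
    }
}

// Old (0.8) shape: the query state is rebuilt on every run.
fn old_style(world: &mut World) -> f32 {
    let query = world.build_query();
    query.iter(world).sum()
}

// New (0.9) shape: the state is owned outside the function and reused.
fn new_style(world: &mut World, query: &mut ToyQueryState) -> f32 {
    query.iter(world).sum()
}
```

Over four runs, `old_style` builds the state four times, while `new_style` relies on a single state built up front. In this toy model the setup cost is therefore paid once instead of on every run.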

2022-09-26 23:57:07 +00:00

.at_start()

2021-12-14 03:58:23 +00:00

.label(RenderLightSystems::PrepareLights),
)
.add_system_to_stage(
RenderStage::Prepare,

2022-04-07 16:16:35 +00:00

// NOTE: This needs to run after prepare_lights. As prepare_lights is an exclusive system,
// just adding it to the non-exclusive systems in the Prepare stage means it runs after
// prepare_lights.
render::prepare_clusters.label(RenderLightSystems::PrepareClusters),

2021-12-14 03:58:23 +00:00

)
.add_system_to_stage(
RenderStage::Queue,
render::queue_shadows.label(RenderLightSystems::QueueShadows),
)
.add_system_to_stage(RenderStage::Queue, render::queue_shadow_view_bind_group)
.add_system_to_stage(RenderStage::PhaseSort, sort_phase_system::<Shadow>)
.init_resource::<ShadowPipeline>()
.init_resource::<DrawFunctions<Shadow>>()
.init_resource::<LightMeta>()
.init_resource::<GlobalLightMeta>()

Mesh vertex buffer layouts (#3959)

This PR makes a number of changes to how meshes and vertex attributes are handled, with the goal of enabling easy and flexible custom vertex attributes:

* Reworks the `Mesh` type to use the newly added `VertexAttribute` internally

* `VertexAttribute` defines the name, a unique `VertexAttributeId`, and a `VertexFormat`

* `VertexAttributeId` is used to produce consistent sort orders for vertex buffer generation, replacing the more expensive and often surprising "name-based sorting"

* Meshes can be used to generate a `MeshVertexBufferLayout`, which defines the layout of the GPU buffer produced by the mesh. `MeshVertexBufferLayouts` can then be used to generate actual `VertexBufferLayouts` according to the requirements of a specific pipeline. This decoupling of "mesh layout" vs "pipeline vertex buffer layout" is what enables custom attributes. We don't need to standardize _mesh layouts_ or contort meshes to meet the needs of a specific pipeline. As long as the mesh has what the pipeline needs, it will work transparently.