I created this manually as GitHub didn't want to run CI for the

workflow-generated PR. I'm guessing we didn't hit this in previous

releases because we used bors.

Co-authored-by: Bevy Auto Releaser <41898282+github-actions[bot]@users.noreply.github.com>

This release I'm experimenting with a new changelog format that doesn't

sap hours of extra time to put together. Basically:

1. Sort by "development area" instead of added / changed / fixed. These

distinctions are often unclear anyway and we have no automated way of

assigning them currently. "Development area" sorting feels equally

useful (if not more useful). Our changelog generator also already does

"development area" categorization so this is efficient.

2. Sort by "importance" and trim out irrelevant changes. This is

laborious, but I already do this as part of my blog post construction

methodology, so it isn't "extra" work.

# Objective

Fixes #8911, #7712

## Solution

Rounding was added to Taffy which fixed issue #7712.

The implementation uses the f32 `round` method which rounds ties

(fractional part is a half) away from zero. Issue #8911 occurs when a

node's min and max bounds on either axis are "ties" and zero is between

them. Then the bounds are rounded away from each other, and the node

grows by a pixel. This alone shouldn't cause the node to expand

continuously, but I think there is some interaction with the way Taffy

recomputes a layout from its cached data that I didn't identify.

This PR fixes #8911 by first disabling Taffy's internal rounding and

using an alternative rounding function that rounds ties up.

Then, instead of rounding the values of the internal layout tree as

Taffy's built-in rounding does, we leave those values unmodified and

only the values stored in the components are rounded. This requires

walking the tree for the UI node geometry update rather than iterating

through a query.

Because the component values are regenerated each update, that should

mean that UI updates are idempotent (ish) now and make the growing node

behaviour seen in issue #8911 impossible.
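To make the tie-rounding problem concrete, here is a minimal standalone sketch. It is an illustration under assumptions, not necessarily the code in this PR: the body of `round_ties_up` is a guess at how such a helper could look, and `rounded_extent` is a hypothetical helper added only for the comparison.

```rust
/// Rounds to the nearest integer, sending ties toward positive infinity.
/// Sketch only: the helper added by the PR may be written differently.
fn round_ties_up(value: f32) -> f32 {
    if value.fract() != -0.5 {
        // Not a negative tie: `round` already gives the wanted result,
        // because positive ties round away from zero, which is upward.
        value.round()
    } else {
        // Negative tie such as -0.5 or -1.5: round toward positive infinity.
        value.ceil()
    }
}

/// Hypothetical helper: the size of a node after rounding both of its
/// bounds on one axis with the given rounding function.
fn rounded_extent(min: f32, max: f32, round_fn: fn(f32) -> f32) -> f32 {
    round_fn(max) - round_fn(min)
}
```

For a node with bounds -0.5 and 0.5, `rounded_extent(-0.5, 0.5, f32::round)` is 2.0 because the bounds move away from each other, while `rounded_extent(-0.5, 0.5, round_ties_up)` stays at 1.0.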

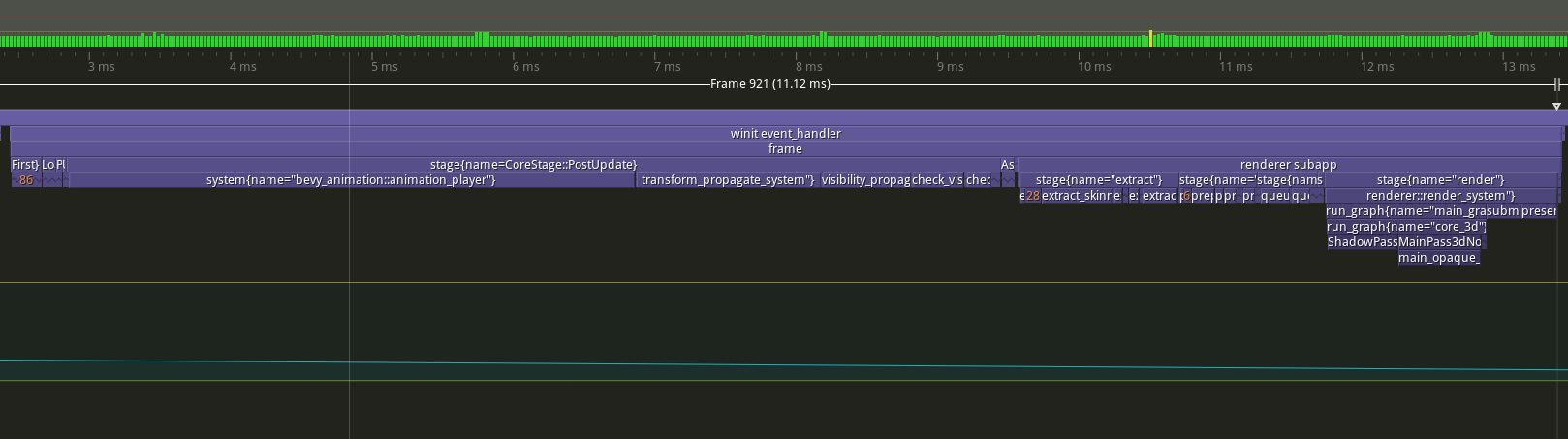

I expected a performance regression, but it's an improvement on main:

```

cargo run --profile stress-test --features trace_tracy --example many_buttons

```

<img width="461" alt="ui-rounding-fix-compare"

src="https://github.com/bevyengine/bevy/assets/27962798/914bfd50-e18a-4642-b262-fafa69005432">

I guess it makes sense to do the rounding together with the node size

and position updates.

---

## Changelog

`bevy_ui::layout`:

* Taffy's built-in rounding is disabled and rounding is now performed by

`ui_layout_system`.

* Instead of rounding the values of the internal layout tree as Taffy's

built-in rounding does, we leave those values unmodified and only the

values stored in the components are rounded. This requires walking the

tree for the UI node geometry update rather than iterating through a

query. Because the component values are regenerated each update, that

should mean that UI updates are idempotent now and make the growing node

behaviour seen in issue #8911 impossible.

* Added two helper functions `round_ties_up` and

`round_layout_coordinates`.

---------

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

This pull request is mutually exclusive with #9066.

# Objective

Complete the initialization of the plugin in `ScheduleRunnerPlugin`.

## Solution

Wait for asynchronous tasks to complete, then `App::finish` and

`App::cleanup` in the runner function.

# Objective

Fixes #6689.

## Solution

Add `single-threaded` as an optional non-default feature to `bevy_ecs`

and `bevy_tasks` that:

- disables the `ParallelExecutor` as a default runner
- disables the multi-threaded `TaskPool`
- internally replaces `QueryParIter::for_each` calls with
`Query::for_each`.

Removed the `Mutex` and `Arc` usage in the single-threaded task pool.
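As a rough illustration of the idea (not Bevy's implementation), the sketch below picks between a parallel and a sequential code path based on a Cargo feature. It assumes a feature named `multi-threaded`, uses plain scoped threads from the standard library instead of a task pool, and all function names are hypothetical.

```rust
use std::thread;

/// Sequential path: what a build without the feature would always use.
fn sum_sequential(values: &[u64]) -> u64 {
    values.iter().sum()
}

/// Parallel path: splits the slice into chunks and sums each chunk on a
/// scoped thread, then adds up the partial results.
fn sum_parallel(values: &[u64], chunk_size: usize) -> u64 {
    thread::scope(|scope| {
        let handles: Vec<_> = values
            .chunks(chunk_size.max(1))
            .map(|chunk| scope.spawn(move || chunk.iter().sum::<u64>()))
            .collect();
        handles
            .into_iter()
            .map(|handle| handle.join().expect("worker thread panicked"))
            .sum::<u64>()
    })
}

/// Chooses the path at compile time. `cfg!` evaluates to `false` when the
/// (assumed) `multi-threaded` feature is not enabled for the crate.
fn sum(values: &[u64]) -> u64 {
    if cfg!(feature = "multi-threaded") {
        sum_parallel(values, 1024)
    } else {
        sum_sequential(values)
    }
}
```

Both paths return the same result; only the scheduling differs, which is why callers do not need to change when the feature is toggled.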

## Future Work/TODO

Create type aliases for `Mutex`, `Arc` that change to single-threaded

equivalents where possible.

---

## Changelog

Added: Optional default feature `multi-threaded` that enables
multithreaded parallelism in the engine. Disabling it disables all
multithreading in exchange for higher single-threaded performance. Does
nothing on WASM targets.

---------

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

- accesskit_unix is not optional anymore

## Solution

- Enable `async-io` feature of `accesskit_winit` only when

`accesskit_unix` is enabled

# Objective

Improve the `bevy_audio` API to make it more user-friendly and

ECS-idiomatic. This PR is a first-pass at addressing some of the most

obvious (to me) problems. In the interest of keeping the scope small,

further improvements can be done in future PRs.

The current `bevy_audio` API is very clunky to work with, due to how it

(ab)uses bevy assets to represent audio sinks.

The user needs to write a lot of boilerplate (accessing

`Res<Assets<AudioSink>>`) and deal with a lot of cognitive overhead

(worry about strong vs. weak handles, etc.) in order to control audio

playback.

Audio playback is initiated via a centralized `Audio` resource, which

makes it difficult to keep track of many different sounds playing in a

typical game.

Further, everything carries a generic type parameter for the sound

source type, making it difficult to mix custom sound sources (such as

procedurally generated audio or unofficial formats) with regular audio

assets.

Let's fix these issues.

## Solution

Refactor `bevy_audio` to a more idiomatic ECS API. Remove the `Audio`

resource. Do everything via entities and components instead.

Audio playback data is now stored in components:

- `PlaybackSettings`, `SpatialSettings`, `Handle<AudioSource>` are now

components. The user inserts them to tell Bevy to play a sound and

configure the initial playback parameters.

- `AudioSink`, `SpatialAudioSink` are now components instead of special

magical "asset" types. They are inserted by Bevy when it actually begins

playing the sound, and can be queried for by the user in order to

control the sound during playback.

Bundles: `AudioBundle` and `SpatialAudioBundle` are available to make it

easy for users to play sounds. Spawn an entity with one of these bundles

(or insert them to a complex entity alongside other stuff) to play a

sound.

Each entity represents a sound to be played.

There is also a new "auto-despawn" feature (activated using

`PlaybackSettings`), which, if enabled, tells Bevy to despawn entities

when the sink playback finishes. This allows for "fire-and-forget" sound

playback. Users can simply

spawn entities whenever they want to play sounds and not have to worry

about leaking memory.

## Unsolved Questions

I think the current design is *fine*. I'd be happy for it to be merged.

It has some possibly-surprising usability pitfalls, but I think it is

still much better than the old `bevy_audio`. Here are some discussion

questions for things that we could further improve. I'm undecided on

these questions, which is why I didn't implement them. We should decide

which of these should be addressed in this PR, and what should be left

for future PRs. Or if they should be addressed at all.

### What happens when sounds start playing?

Currently, the audio sink components are inserted and the bundle

components are kept. Should Bevy remove the bundle components? Something

else?

The current design allows an entity to be reused for playing the same

sound with the same parameters repeatedly. This is a niche use case I'd

like to be supported, but if we have to give it up for a simpler design,

I'd be fine with that.

### What happens if users remove any of the components themselves?

As described above, currently, entities can be reused. Removing the

audio sink causes it to be "detached" (I kept the old `Drop` impl), so

the sound keeps playing. However, if the audio bundle components are not

removed, Bevy will detect this entity as a "queued" sound entity again

(has the bundle components, without a sink component), just like before

playing the sound the first time, and start playing the sound again.

This behavior might be surprising? Should we do something different?

### Should mutations to `PlaybackSettings` be applied to the audio sink?

We currently do not do that. `PlaybackSettings` is just for the initial

settings when the sound starts playing. This is clearly documented.

Do we want to keep this behavior, or do we want to allow users to use

`PlaybackSettings` instead of `AudioSink`/`SpatialAudioSink` to control

sounds during playback too?

I think I prefer for them to be kept separate. It is not a bad mental

model once you understand it, and it is documented.

### Should `AudioSink` and `SpatialAudioSink` be unified into a single component type?

They provide a similar API (via the `AudioSinkPlayback` trait) and it

might be annoying for users to have to deal with both of them. The

unification could be done using an enum that is matched on internally by

the methods. Spatial audio has extra features, so this might make it

harder to access. I think we shouldn't.

### Automatic synchronization of spatial sound properties from Transforms?

Should Bevy automatically apply changes to Transforms to spatial audio

entities? How do we distinguish between listener and emitter? Which one

does the transform represent? Where should the other one come from?

Alternatively, leave this problem for now, and address it in a future

PR. Or do nothing, and let users deal with it, as shown in the

`spatial_audio_2d` and `spatial_audio_3d` examples.

---

## Changelog

Added:

- `AudioBundle`/`SpatialAudioBundle`, add them to entities to play

sounds.

Removed:

- The `Audio` resource.

- `AudioOutput` is no longer `pub`.

Changed:

- `AudioSink`, `SpatialAudioSink` are now components instead of assets.

## Migration Guide

// TODO: write a more detailed migration guide, after the "unsolved

questions" are answered and this PR is finalized.

Before:

```rust

/// Need to store handles somewhere

#[derive(Resource)]

struct MyMusic {

sink: Handle<AudioSink>,

}

fn play_music(

asset_server: Res<AssetServer>,

audio: Res<Audio>,

audio_sinks: Res<Assets<AudioSink>>,

mut commands: Commands,

) {

let weak_handle = audio.play_with_settings(

asset_server.load("music.ogg"),

PlaybackSettings::LOOP.with_volume(0.5),

);

// upgrade to strong handle and store it

commands.insert_resource(MyMusic {

sink: audio_sinks.get_handle(weak_handle),

});

}

fn toggle_pause_music(

audio_sinks: Res<Assets<AudioSink>>,

mymusic: Option<Res<MyMusic>>,

) {

if let Some(mymusic) = &mymusic {

if let Some(sink) = audio_sinks.get(&mymusic.sink) {

sink.toggle();

}

}

}

```

Now:

```rust

/// Marker component for our music entity

#[derive(Component)]

struct MyMusic;

fn play_music(

mut commands: Commands,

asset_server: Res<AssetServer>,

) {

commands.spawn((

AudioBundle::from_audio_source(asset_server.load("music.ogg"))

.with_settings(PlaybackSettings::LOOP.with_volume(0.5)),

MyMusic,

));

}

fn toggle_pause_music(

// `AudioSink` will be inserted by Bevy when the audio starts playing

query_music: Query<&AudioSink, With<MyMusic>>,

) {

if let Ok(sink) = query_music.get_single() {

sink.toggle();

}

}

```

# Objective

`accesskit` and `accesskit_winit` need to be upgraded.

## Solution

Upgrade `accesskit` and `accesskit_winit`.

---

## Changelog

### Changed

* Upgrade accesskit to v0.11.

* Upgrade accesskit_winit to v0.14.

# Objective

Currently, `DynamicScene`s extract all components listed in the given

(or the world's) type registry. This acts as a quasi-filter of sorts.

However, it can be troublesome to use effectively and lacks decent

control.

For example, say you need to serialize only the following component over

the network:

```rust

#[derive(Reflect, Component, Default)]

#[reflect(Component)]

struct NPC {

name: Option<String>

}

```

To do this, you'd need to:

1. Create a new `AppTypeRegistry`

2. Register `NPC`

3. Register `Option<String>`

If we skip Step 3, then the entire scene might fail to serialize as

`Option<String>` requires registration.

Not only is this annoying and easy to forget, but it can leave users

with an impossible task: serializing a third-party type that contains

private types.

Generally, the third-party crate will register their private types

within a plugin so the user doesn't need to do it themselves. However,

this means we are now unable to serialize _just_ that type— we're forced

to allow everything!

## Solution

Add the `SceneFilter` enum for filtering components to extract.

This filter can be used to optionally allow or deny entire sets of

components/resources. With the `DynamicSceneBuilder`, users have more

control over how their `DynamicScene`s are built.

To only serialize a subset of components, use the `allow` method:

```rust

let scene = builder

.allow::<ComponentA>()

.allow::<ComponentB>()

.extract_entity(entity)

.build();

```

To serialize everything _but_ a subset of components, use the `deny`

method:

```rust

let scene = builder

.deny::<ComponentA>()

.deny::<ComponentB>()

.extract_entity(entity)

.build();

```

Or create a custom filter:

```rust

let components = HashSet::from([type_id]);

let filter = SceneFilter::Allowlist(components);

// let filter = SceneFilter::Denylist(components);

let scene = builder

.with_filter(Some(filter))

.extract_entity(entity)

.build();

```

Similar operations exist for resources:

<details>

<summary>View Resource Methods</summary>

To only serialize a subset of resources, use the `allow_resource`

method:

```rust

let scene = builder

.allow_resource::<ResourceA>()

.extract_resources()

.build();

```

To serialize everything _but_ a subset of resources, use the

`deny_resource` method:

```rust

let scene = builder

.deny_resource::<ResourceA>()

.extract_resources()

.build();

```

Or create a custom filter:

```rust

let resources = HashSet::from([type_id]);

let filter = SceneFilter::Allowlist(resources);

// let filter = SceneFilter::Denylist(resources);

let scene = builder

.with_resource_filter(Some(filter))

.extract_resources()

.build();

```

</details>

### Open Questions

- [x] ~~`allow` and `deny` are mutually exclusive. Currently, they

overwrite each other. Should this instead be a panic?~~ Took @soqb's

suggestion and made it so that the opposing method simply removes that

type from the list.

- [x] ~~`DynamicSceneBuilder` extracts entity data as soon as

`extract_entity`/`extract_entities` is called. Should this behavior

instead be moved to the `build` method to prevent ordering mixups (e.g.

`.allow::<Foo>().extract_entity(entity)` vs

`.extract_entity(entity).allow::<Foo>()`)? The tradeoff would be

iterating over the given entities twice: once at extraction and again at

build.~~ Based on the feedback from @Testare it sounds like it might be

better to just keep the current functionality (if anything we can open a

separate PR that adds deferred methods for extraction, so the

choice/performance hit is up to the user).

- [ ] An alternative might be to remove the filter from

`DynamicSceneBuilder` and have it as a separate parameter to the

extraction methods (either in the existing ones or as added

`extract_entity_with_filter`-type methods). Is this preferable?

- [x] ~~Should we include constructors that include common types to

allow/deny? For example, a `SceneFilter::standard_allowlist` that

includes things like `Parent` and `Children`?~~ Consensus suggests we

should. I may split this out into a followup PR, though.

- [x] ~~Should we add the ability to remove types from the filter

regardless of whether an allowlist or denylist (e.g.

`filter.remove::<Foo>()`)?~~ See the first list item

- [x] ~~Should `SceneFilter` be an enum? Would it make more sense as a

struct that contains an `is_denylist` boolean?~~ With the added

`SceneFilter::None` state (replacing the need to wrap in an `Option` or

rely on an empty `Denylist`), it seems an enum is better suited now

- [x] ~~Bikeshed: Do we like the naming convention? Should we instead

use `include`/`exclude` terminology?~~ Sounds like we're sticking with

`allow`/`deny`!

- [x] ~~Does this feature need a new example? Do we simply include it in

the existing one (maybe even as a comment?)? Should this be done in a

followup PR instead?~~ Example will be added in a followup PR
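The allow/deny behaviour settled on above can be modelled with a small standalone sketch. `Filter` is a simplified, hypothetical stand-in and not Bevy's `SceneFilter`; the variant and method names here are assumptions chosen for illustration.

```rust
use std::any::TypeId;
use std::collections::HashSet;

/// Simplified stand-in for the filter described above (not Bevy's type).
#[derive(Debug)]
enum Filter {
    /// No filter configured: every type is allowed.
    Unset,
    /// Only the listed types are allowed.
    Allowlist(HashSet<TypeId>),
    /// Every type except the listed ones is allowed.
    Denylist(HashSet<TypeId>),
}

impl Filter {
    /// Allows `T`: inserts it into an allowlist or removes it from a denylist.
    fn allow<T: 'static>(self) -> Self {
        let id = TypeId::of::<T>();
        match self {
            Filter::Unset => Filter::Allowlist(HashSet::from([id])),
            Filter::Allowlist(mut set) => {
                set.insert(id);
                Filter::Allowlist(set)
            }
            Filter::Denylist(mut set) => {
                set.remove(&id);
                Filter::Denylist(set)
            }
        }
    }

    /// Denies `T`: inserts it into a denylist or removes it from an allowlist.
    fn deny<T: 'static>(self) -> Self {
        let id = TypeId::of::<T>();
        match self {
            Filter::Unset => Filter::Denylist(HashSet::from([id])),
            Filter::Denylist(mut set) => {
                set.insert(id);
                Filter::Denylist(set)
            }
            Filter::Allowlist(mut set) => {
                set.remove(&id);
                Filter::Allowlist(set)
            }
        }
    }

    /// Returns whether `T` passes the filter.
    fn is_allowed<T: 'static>(&self) -> bool {
        let id = TypeId::of::<T>();
        match self {
            Filter::Unset => true,
            Filter::Allowlist(set) => set.contains(&id),
            Filter::Denylist(set) => !set.contains(&id),
        }
    }
}
```

In this model the first call decides the kind of list, and the opposing method only removes the type from it. For example, `Filter::Unset.allow::<u8>().deny::<u8>()` ends up as an empty allowlist, so nothing passes.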

### Followup Tasks

- [ ] Add a dedicated `SceneFilter` example

- [ ] Possibly add default types to the filter (e.g. deny things like

`ComputedVisibility`, allow `Parent`, etc)

---

## Changelog

- Added the `SceneFilter` enum for filtering components and resources

when building a `DynamicScene`

- Added methods:

- `DynamicSceneBuilder::with_filter`

- `DynamicSceneBuilder::allow`

- `DynamicSceneBuilder::deny`

- `DynamicSceneBuilder::allow_all`

- `DynamicSceneBuilder::deny_all`

- `DynamicSceneBuilder::with_resource_filter`

- `DynamicSceneBuilder::allow_resource`

- `DynamicSceneBuilder::deny_resource`

- `DynamicSceneBuilder::allow_all_resources`

- `DynamicSceneBuilder::deny_all_resources`

- Removed methods:

- `DynamicSceneBuilder::from_world_with_type_registry`

- `DynamicScene::from_scene` and `DynamicScene::from_world` no longer

require an `AppTypeRegistry` reference

## Migration Guide

- `DynamicScene::from_scene` and `DynamicScene::from_world` no longer

require an `AppTypeRegistry` reference:

```rust

// OLD

let registry = world.resource::<AppTypeRegistry>();

let dynamic_scene = DynamicScene::from_world(&world, registry);

// let dynamic_scene = DynamicScene::from_scene(&scene, registry);

// NEW

let dynamic_scene = DynamicScene::from_world(&world);

// let dynamic_scene = DynamicScene::from_scene(&scene);

```

- Removed `DynamicSceneBuilder::from_world_with_type_registry`. Now the

registry is automatically taken from the given world:

```rust

// OLD

let registry = world.resource::<AppTypeRegistry>();

let builder = DynamicSceneBuilder::from_world_with_type_registry(&world, registry);

// NEW

let builder = DynamicSceneBuilder::from_world(&world);

```

# Objective

After the UI layout is computed, when the coordinates are converted back
from physical coordinates to logical coordinates, the `UiScale` is

ignored. This results in a confusing situation where we have two

different systems of logical coordinates.

Example:

```rust

use bevy::prelude::*;

fn main() {

App::new()

.add_plugins(DefaultPlugins)

.add_systems(Startup, setup)

.add_systems(Update, update)

.run();

}

fn setup(mut commands: Commands, mut ui_scale: ResMut<UiScale>) {

ui_scale.scale = 4.;

commands.spawn(Camera2dBundle::default());

commands.spawn(NodeBundle {

style: Style {

align_items: AlignItems::Center,

justify_content: JustifyContent::Center,

width: Val::Percent(100.),

..Default::default()

},

..Default::default()

})

.with_children(|builder| {

builder.spawn(NodeBundle {

style: Style {

width: Val::Px(100.),

height: Val::Px(100.),

..Default::default()

},

background_color: Color::MAROON.into(),

..Default::default()

}).with_children(|builder| {

builder.spawn(TextBundle::from_section("", TextStyle::default()));

});

});

}

fn update(

mut text_query: Query<(&mut Text, &Parent)>,

node_query: Query<Ref<Node>>,

) {

for (mut text, parent) in text_query.iter_mut() {

let node = node_query.get(parent.get()).unwrap();

if node.is_changed() {

text.sections[0].value = format!("size: {}", node.size());

}

}

}

```

result:

We asked for a 100x100 UI node but the Node's size is multiplied by the

value of `UiScale` to give a logical size of 400x400.

## Solution

Divide the output physical coordinates by `UiScale` in

`ui_layout_system` and multiply the logical viewport size by `UiScale`

when creating the projection matrix for the UI's `ExtractedView` in

`extract_default_ui_camera_view`.

---

## Changelog

* The UI layout's physical coordinates are divided by both the window

scale factor and `UiScale` when converting them back to logical

coordinates. The logical size of UI nodes now matches the values given

to their size constraints.

* Multiply the logical viewport size by `UiScale` before creating the

projection matrix for the UI's `ExtractedView` in

`extract_default_ui_camera_view`.

* In `ui_focus_system` the cursor position returned from `Window` is

divided by `UiScale`.

* Added a scale factor parameter to `Node::physical_size` and

`Node::physical_rect`.

* The example `viewport_debug` now uses a `UiScale` of 2. to ensure that

viewport coordinates are working correctly with a non-unit `UiScale`.

## Migration Guide

Physical UI coordinates are now divided by both the `UiScale` and the

window's scale factor to compute the logical sizes and positions of UI

nodes.

This ensures that UI Node size and position values, held by the `Node`

and `GlobalTransform` components, conform to the same logical coordinate

system as the style constraints from which they are derived,

irrespective of the current `scale_factor` and `UiScale`.
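The arithmetic can be sketched as follows. This is a simplified model that assumes layout input is scaled by the product of the window scale factor and `UiScale`. The function names are hypothetical and are not part of `bevy_ui`.

```rust
/// Converts a logical length to physical pixels (assumed layout input).
fn logical_to_physical(logical: f32, window_scale_factor: f32, ui_scale: f32) -> f32 {
    logical * window_scale_factor * ui_scale
}

/// Converts a physical length back to logical units by dividing by both
/// the window scale factor and the UI scale.
fn physical_to_logical(physical: f32, window_scale_factor: f32, ui_scale: f32) -> f32 {
    physical / (window_scale_factor * ui_scale)
}
```

With a window scale factor of 1 and a `UiScale` of 4, a node constrained to 100 logical units is laid out at 400 physical pixels and now converts back to 100, where previously only the window scale factor was divided out and the reported size was 400.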

---------

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

The current mobile example produces an APK of 1.5 GB.

- Running the example on a real device takes significant time (around

one minute just to copy the file over USB to my phone).

- Default virtual devices in Android Studio run out of space after the

first install. This can of course be solved/configured, but it causes

unnecessary friction.

- One impression could be that Bevy produces bloated APKs. 1.5 GB is
even double the size of debug builds for desktop examples.

## Solution

- Strip the debug symbols of the shared libraries before they are copied

to the APK

APK size after this change: 200 MB

Copy time on my machine: ~8s

## Considered alternative

APKs built in release mode are only 50 MB in size, but they require setting up
signing for the profile and take longer to compile.

# Objective

- Fixes #8630.

## Solution

Since a camera's view and projection matrices are modified during

`PostUpdate` in `camera_system` and `propagate_transforms`, it is fine

to move `update_previous_view_projections` from `Update` to `PreUpdate`.

Doing so adds consistency with `update_mesh_previous_global_transforms`

and allows systems in `Update` to use `PreviousViewProjection` correctly

without explicit ordering.

# Objective

I'm creating an iOS game and had to find a way to persist game state

when the application is terminated. This required listening to the

[`applicationWillTerminate()`

method](https://developer.apple.com/documentation/uikit/uiapplicationdelegate/1623111-applicationwillterminate),

but I cannot do so myself anymore since `winit` already set up a

delegate to listen for it, and there can be only one delegate.

So I had to move up the stack and try to respond to one of the events

from `winit` instead. It appears `winit` fires two events that could

serve my purpose: `WindowEvent::Destroyed` and `Event::LoopDestroyed`.

It seemed to me the former might be slightly more generally useful, and

I also found a past discussion that suggested it would be appropriate

for Bevy to have a `WindowDestroyed` event:

https://github.com/bevyengine/bevy/pull/5589#discussion_r942811021

## Solution

- I've added the `WindowDestroyed` event, which fires when `winit` fires

`WindowEvent::Destroyed`.

---

## Changelog

### Added

- Introduced a new `WindowDestroyed` event type. It is used to indicate

a window has been destroyed by the windowing system.

# Objective

bevy_render currently has a dependency on a random older version of

once_cell which is not used anywhere.

## Solution

Remove the dependency

## Changelog

N/A

## Migration Guide

N/A

# Objective

- Remove need to call `.get()` on two ticks to compare them for

equality.

## Solution

- Derive `Eq` and `PartialEq`.

---

## Changelog

> `Tick` now implements `Eq` and `PartialEq`
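A minimal sketch of what the derive buys, using a simplified stand-in type (not Bevy's actual `Tick` definition; the field and constructor are assumptions):

```rust
/// Simplified stand-in for a change-detection tick.
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
struct Tick {
    tick: u32,
}

impl Tick {
    fn new(tick: u32) -> Self {
        Self { tick }
    }

    /// Returns the raw tick value.
    fn get(self) -> u32 {
        self.tick
    }
}
```

With the derives in place, `Tick::new(3) == Tick::new(3)` compiles directly, where previously the comparison had to go through `a.get() == b.get()`.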

# Objective

- Fixes #8984

## Solution

- Address compilation errors

I admit: I did sneak in an unrelated mini-refactor of the
`measurement.rs` module. It seemed to me that directly importing `taffy`
types helped reduce a lot of boilerplate, so I did it.

# Objective

The bounding box colors from bevy_gizmo are randomized between app

runs. This can get confusing for users.

## Solution

Use a fixed seed with `RandomState::with_seeds` rather than initializing
an `AHash`.

The random number was chosen so that the first few colors are clearly

distinct.

According to the `RandomState::hash_one` documentation, it's also

faster.
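The effect of a fixed seed can be illustrated with the standard library alone. This sketch swaps in `std`'s `DefaultHasher` (which starts from fixed keys when created with `DefaultHasher::new()`) in place of `ahash`'s `RandomState::with_seeds`, and `hue_for_entity` is a hypothetical helper, not the function used by the PR.

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::{Hash, Hasher};

/// Maps an entity id to a hue in degrees, in the range [0, 360).
/// Because the hasher is not randomly seeded, the same id yields the
/// same hue on every run of the same build.
fn hue_for_entity(entity_id: u64) -> f32 {
    let mut hasher = DefaultHasher::new();
    entity_id.hash(&mut hasher);
    (hasher.finish() % 360) as f32
}
```

A randomly seeded hasher, such as the one behind `std::collections::hash_map::RandomState::new()`, would give a different hue for the same id on each run, which is the behaviour the fix removes.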

---

## Changelog

* bevy_gizmo: Keep a consistent color for AABBs of identical entities

between runs

# Objective

Since 10f5c92, shadows were broken for models with morph targets.

When #5703 was merged, the morph target code in `render/mesh.wgsl` was

correctly updated to use the new import syntax. However, similar code

exists in `prepass/prepass.wgsl`, but it was never updated. (The reason
the code is duplicated is that the `Vertex` struct is different for both
files.)

## Solution

Update the code, so that shadows render correctly with morph targets.

# Objective

Fixes https://github.com/bevyengine/bevy/issues/8925

## Solution

~~Clamp the bad values.~~

Normalize the prepass normals when we get them in the `prepass_normal()`

function.

## More Info

The issue is that NdotV is sometimes very slightly greater than 1 (maybe
FP rounding issues?), which caused `F_Schlick()` to return NaNs in
`pow(1.0 - NdotV, 5.0)` (the call stack looked like `pbr()` ->
`directional_light()` -> `Fd_Burley()` -> `F_Schlick()`).

# Objective

Since 10f5c92, parallax mapping was broken.

When #5703 was merged, the change from `in.uv` to `uv` in the pbr shader

was reverted. So the shader would use the wrong coordinate to sample the

various textures.

## Solution

We revert to using the correct uv.

# Objective

The setup code in `animated_fox` uses a `done` boolean to avoid running

the `play` logic repetitively.

It is a common pattern, but it only works with exactly one fox, and

misses an even more common pattern.

When a user modifies the code to try it with several foxes, they are

confused as to why it doesn't work (#8996).

## Solution

The more common pattern is to use `Added<AnimationPlayer>` as a query

filter.

This both reduces complexity and naturally extends the setup code to

handle several foxes, added at any time.

# Objective

Followup bugfix for #5703. Without this we get the following error when

CAS (Contrast Adaptive Sharpening) is enabled:

```

2023-06-29T01:31:23.829331Z ERROR bevy_render::render_resource::pipeline_cache: failed to process shader:

error: unknown type: 'FullscreenVertexOutput'

┌─ crates/bevy_core_pipeline/src/contrast_adaptive_sharpening/robust_contrast_adaptive_sharpening.wgsl:63:17

│

63 │ fn fragment(in: FullscreenVertexOutput) -> @location(0) vec4<f32> {

│ ^^^^^^^^^^^^^^^^^^^^^^ unknown type

│

= unknown type: 'FullscreenVertexOutput'

```

@robtfm I wouldn't expect this to fail. I was under the impression the

`#import bevy_core_pipeline::fullscreen_vertex_shader` would pull

"everything" from that file into this one?

# Objective

- This fixes a crash when loading shaders when running on an Adreno GPU

and using WebGL mode.

- Fixes #8506
- Fixes #8047

## Solution

- The `apply_fog` function in the shader `pbr_functions.wgsl` fails when
it tries to access values that are null on Adreno chipsets using WebGL.
These chipsets are commonly found in Android handheld devices.

---------

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

Co-authored-by: François <mockersf@gmail.com>

# Objective

**This implementation is based on

https://github.com/bevyengine/rfcs/pull/59.**

---

Resolves #4597

Full details and motivation can be found in the RFC, but here's a brief

summary.

`FromReflect` is a very powerful and important trait within the

reflection API. It allows Dynamic types (e.g., `DynamicList`, etc.) to

be formed into Real ones (e.g., `Vec<i32>`, etc.).

This mainly comes into play concerning deserialization, where the

reflection deserializers both return a `Box<dyn Reflect>` that almost

always contains one of these Dynamic representations of a Real type. To

convert this to our Real type, we need to use `FromReflect`.

It also sneaks up in other ways. For example, it's a required bound for

`T` in `Vec<T>` so that `Vec<T>` as a whole can be made `FromReflect`.

It's also required by all fields of an enum as it's used as part of the

`Reflect::apply` implementation.

So in other words, much like `GetTypeRegistration` and `Typed`, it is

very much a core reflection trait.

The problem is that it is not currently treated like a core trait and is

not automatically derived alongside `Reflect`. This makes using it a bit

cumbersome and easy to forget.

## Solution

Automatically derive `FromReflect` when deriving `Reflect`.

Users can then choose to opt-out if needed using the

`#[reflect(from_reflect = false)]` attribute.

```rust

#[derive(Reflect)]

struct Foo;

#[derive(Reflect)]

#[reflect(from_reflect = false)]

struct Bar;

fn test<T: FromReflect>(value: T) {}

test(Foo); // <-- OK

test(Bar); // <-- Compile error! `Bar` does not implement trait `FromReflect`

```

#### `ReflectFromReflect`

This PR also automatically adds the `ReflectFromReflect` (introduced in

#6245) registration to the derived `GetTypeRegistration` impl— if the

type hasn't opted out of `FromReflect` of course.

<details>

<summary><h4>Improved Deserialization</h4></summary>

> **Warning**

> This section includes changes that have since been descoped from this

PR. They will likely be implemented again in a followup PR. I am mainly

leaving these details in for archival purposes, as well as for reference

when implementing this logic again.

And since we can do all the above, we might as well improve

deserialization. We can now choose to deserialize into a Dynamic type or

automatically convert it using `FromReflect` under the hood.

`[Un]TypedReflectDeserializer::new` will now perform the conversion and

return the `Box`'d Real type.

`[Un]TypedReflectDeserializer::new_dynamic` will work like what we have

now and simply return the `Box`'d Dynamic type.

```rust

// Returns the Real type
let reflect_deserializer = UntypedReflectDeserializer::new(&registry);
let mut deserializer = ron::de::Deserializer::from_str(input)?;
let output: SomeStruct = reflect_deserializer.deserialize(&mut deserializer)?.take()?;

// Returns the Dynamic type
let reflect_deserializer = UntypedReflectDeserializer::new_dynamic(&registry);
let mut deserializer = ron::de::Deserializer::from_str(input)?;
let output: DynamicStruct = reflect_deserializer.deserialize(&mut deserializer)?.take()?;

```

</details>

---

## Changelog

* `FromReflect` is now automatically derived within the `Reflect` derive

macro

* This includes auto-registering `ReflectFromReflect` in the derived

`GetTypeRegistration` impl

* ~~Renamed `TypedReflectDeserializer::new` and

`UntypedReflectDeserializer::new` to

`TypedReflectDeserializer::new_dynamic` and

`UntypedReflectDeserializer::new_dynamic`, respectively~~ **Descoped**

* ~~Changed `TypedReflectDeserializer::new` and

`UntypedReflectDeserializer::new` to automatically convert the

deserialized output using `FromReflect`~~ **Descoped**

## Migration Guide

* `FromReflect` is now automatically derived within the `Reflect` derive

macro. Items with both derives will need to remove the `FromReflect`

one.

```rust

// OLD

#[derive(Reflect, FromReflect)]

struct Foo;

// NEW

#[derive(Reflect)]

struct Foo;

```

If using a manual implementation of `FromReflect` and the `Reflect`

derive, users will need to opt-out of the automatic implementation.

```rust

// OLD

#[derive(Reflect)]

struct Foo;

impl FromReflect for Foo {/* ... */}

// NEW

#[derive(Reflect)]

#[reflect(from_reflect = false)]

struct Foo;

impl FromReflect for Foo {/* ... */}

```

<details>

<summary><h4>Removed Migrations</h4></summary>

> **Warning**

> This section includes changes that have since been descoped from this

PR. They will likely be implemented again in a followup PR. I am mainly

leaving these details in for archival purposes, as well as for reference

when implementing this logic again.

* The reflect deserializers now perform a `FromReflect` conversion

internally. The expected output of `TypedReflectDeserializer::new` and

`UntypedReflectDeserializer::new` is no longer a Dynamic (e.g.,

`DynamicList`), but its Real counterpart (e.g., `Vec<i32>`).

```rust

let reflect_deserializer = UntypedReflectDeserializer::new_dynamic(&registry);

let mut deserializer = ron::de::Deserializer::from_str(input)?;

// OLD

let output: DynamicStruct = reflect_deserializer.deserialize(&mut deserializer)?.take()?;

// NEW

let output: SomeStruct = reflect_deserializer.deserialize(&mut deserializer)?.take()?;

```

Alternatively, if this behavior isn't desired, use the

`TypedReflectDeserializer::new_dynamic` and

`UntypedReflectDeserializer::new_dynamic` methods instead:

```rust

// OLD

let reflect_deserializer = UntypedReflectDeserializer::new(&registry);

// NEW

let reflect_deserializer = UntypedReflectDeserializer::new_dynamic(&registry);

```

</details>

---------

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

Title. This is necessary in order to update

[`bevy-trait-query`](https://crates.io/crates/bevy-trait-query) to Bevy

0.11.

---

## Changelog

Added the unsafe function `UnsafeWorldCell::storages`, which provides

unchecked access to the internal data stores of a `World`.

Added `GizmoConfig::render_layers`, which will ensure Gizmos are only

rendered on cameras that can see those `RenderLayers`.

---------

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

Relax unnecessary type restrictions on `App.runner` function.

## Solution

Changed the type of `App.runner` from `Fn(App)` to `FnOnce(App)`.
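
To illustrate why the relaxed bound matters, here is a minimal sketch. The `App` and `Connection` types below are hypothetical stand-ins (not Bevy's real `App`), and the runner returns a `String` only so the result is easy to inspect. The closure consumes a captured value, so it implements `FnOnce` but not `Fn`; it would be rejected if the field required `Fn(App)`.

```rust
/// Hypothetical resource that can only be consumed once (it is not `Clone`).
struct Connection(String);

impl Connection {
    fn close(self) -> String {
        format!("closed {}", self.0)
    }
}

/// Hypothetical, stripped-down stand-in for an app type; not Bevy's `App`.
struct App {
    name: String,
    // `FnOnce` is enough because the runner is only ever called once.
    runner: Option<Box<dyn FnOnce(App) -> String>>,
}

impl App {
    fn new(name: &str) -> Self {
        App { name: name.to_string(), runner: None }
    }

    fn set_runner(&mut self, f: impl FnOnce(App) -> String + 'static) -> &mut Self {
        self.runner = Some(Box::new(f));
        self
    }

    fn run(mut self) -> String {
        // Take the runner out of the app, then hand the app to it by value.
        let runner = self.runner.take().expect("no runner set");
        runner(self)
    }
}

fn run_with_owned_resource() -> String {
    let conn = Connection("db".to_string());
    let mut app = App::new("demo");
    // `conn.close()` moves `conn` out of the closure, so this closure is
    // `FnOnce` only and could not be stored behind a `dyn Fn(App)` bound.
    app.set_runner(move |app: App| format!("{} ran, {}", app.name, conn.close()));
    app.run()
}
```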

# Objective

#5703 caused the normal prepass to fail as the prepass uses

`pbr_functions::apply_normal_mapping`, which uses

`mesh_view_bindings::view` to determine mip bias, which conflicts with

`prepass_bindings::view`.

## Solution

pass the mip bias to the `apply_normal_mapping` function explicitly.

# Objective

Currently `App::edit_schedule` takes in `impl FnMut(&mut Schedule)`, but

it calls the function only once. The intention was probably to have it

take `FnOnce` instead.

## Solution

- Relax the parameter to take `FnOnce` instead of `FnMut`

# Objective

Fixes #8967

## Solution

I think this example was just missed in #5703. I made the same sort of

changes to `fallback_image` that were made in other examples in that PR.

# Objective

- There was a deadlock discovered in the implementation of

`bevy_reflect::utility::GenericTypeCell`, when called on a recursive

type, e.g. `Vec<Vec<VariableCurve>>`

## Solution

- Drop the lock before calling the initialisation function, and then

pick it up again afterwards.
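
The pattern described above can be sketched with only the standard library. `TypeCache` is a hypothetical stand-in and is not the actual `GenericTypeCell` implementation; it only shows the idea of releasing the lock before running an initializer that may re-enter the cache for a nested type.

```rust
use std::any::TypeId;
use std::collections::HashMap;
use std::sync::RwLock;

/// Hypothetical per-type cache; not Bevy's `GenericTypeCell`.
struct TypeCache {
    entries: RwLock<HashMap<TypeId, &'static str>>,
}

impl TypeCache {
    fn new() -> Self {
        TypeCache { entries: RwLock::new(HashMap::new()) }
    }

    fn get_or_insert<T: 'static>(&self, init: impl FnOnce() -> String) -> &'static str {
        let id = TypeId::of::<T>();

        // The read guard is a temporary and is dropped at the end of this statement.
        let cached = self.entries.read().unwrap().get(&id).copied();
        if let Some(found) = cached {
            return found;
        }

        // No lock is held here, so `init` may call `get_or_insert` again
        // (for example for an inner generic type) without deadlocking.
        let value: &'static str = Box::leak(init().into_boxed_str());

        // Re-acquire the lock only to store the result. If another caller
        // stored a value in the meantime, that first value is kept.
        let mut map = self.entries.write().unwrap();
        let stored = *map.entry(id).or_insert(value);
        stored
    }
}

/// Initializing the outer type re-enters the cache for the inner type.
fn nested_lookup() -> &'static str {
    let cache = TypeCache::new();
    cache.get_or_insert::<Vec<Vec<u8>>>(|| {
        let inner = cache.get_or_insert::<Vec<u8>>(|| "Vec<u8>".to_string());
        format!("Vec<{inner}>")
    })
}
```

Had the write lock been held while `init` ran, the nested call in `nested_lookup` would block or panic on the same `RwLock`.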

## Additional Context

- [Discussed on

Discord](https://discord.com/channels/691052431525675048/1002362493634629796/1122706835284185108)

# Objective

operate on naga IR directly to improve handling of shader modules.

- give codespan reporting into imported modules

- allow glsl to be used from wgsl and vice-versa

the ultimate objective is to make it possible to

- provide user hooks for core shader functions (to modify light

behaviour within the standard pbr pipeline, for example)

- make automatic binding slot allocation possible

but ... since this is already big, adds some value and (i think) is at

feature parity with the existing code, i wanted to push this now.

## Solution

i made a crate called naga_oil (https://github.com/robtfm/naga_oil -

unpublished for now, could be part of bevy) which manages modules by

- building each module independently to naga IR

- creating "header" files for each supported language, which are used to

build dependent modules/shaders

- make final shaders by combining the shader IR with the IR for imported

modules

then integrated this into bevy, replacing some of the existing shader

processing stuff. also reworked examples to reflect this.

## Migration Guide

shaders that don't use `#import` directives should work without changes.

the most notable user-facing difference is that imported

functions/variables/etc need to be qualified at point of use, and

there's no "leakage" of visible stuff into your shader scope from the

imports of your imports, so if you used things imported by your imports,

you now need to import them directly and qualify them.

the current strategy of including/'spreading' `mesh_vertex_output`

directly into a struct doesn't work any more, so these need to be

modified as per the examples (e.g. color_material.wgsl, or many others).

mesh data is assumed to be in bindgroup 2 by default; if mesh data is

bound into bindgroup 1 instead then the shader def `MESH_BINDGROUP_1`

needs to be added to the pipeline shader_defs.

# Objective

Currently when `UntypedReflectDeserializerVisitor` deserializes a

`Box<dyn Reflect>` it only considers the first entry of the map,

silently ignoring any additional entries. For example the following RON

data:

```json

{

"f32": 1.23,

"u32": 1,

}

```

is successfully deserialized as a `f32`, completely ignoring the `"u32":

1` part.

## Solution

`UntypedReflectDeserializerVisitor` was changed to check if any other

key could be deserialized, and in that case returns an error.
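
As a rough illustration of the check (not the actual visitor code, which works on a serde `MapAccess`), the sketch below uses a hypothetical `single_entry` helper over a plain slice of key/value pairs. It accepts exactly one entry and reports an error as soon as a second key is present.

```rust
/// Hypothetical helper: accept exactly one `type name -> value` entry.
fn single_entry<'a>(entries: &[(&'a str, f64)]) -> Result<(&'a str, f64), String> {
    let mut iter = entries.iter();

    // The first entry is the one that gets deserialized.
    let first = *iter
        .next()
        .ok_or_else(|| "expected one entry, found none".to_string())?;

    // Any further key is an error instead of being silently ignored.
    if let Some((extra_key, _)) = iter.next() {
        return Err(format!("unexpected additional entry `{extra_key}`"));
    }

    Ok(first)
}
```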

---

## Changelog

`UntypedReflectDeserializer` now errors on malformed inputs instead of

silently discarding additional data.

## Migration Guide

If you were deserializing `Box<dyn Reflect>` values with multiple

entries (i.e. entries other than `"type": { /* fields */ }`) you should

remove them or deserialization will fail.

# Objective

`World::entity`, `World::entity_mut` and `Commands::entity` should be

marked with `track_caller` to display where (in user code) the call with

the invalid `Entity` was made. `Commands::entity` already has the

attribute, but it does nothing due to the call to `unwrap_or_else`.

## Solution

- Apply the `track_caller` attribute to the `World::entity_mut` and

`World::entity`.

- Remove the call to `unwrap_or_else` which makes the `track_caller`

attribute useless (because `unwrap_or_else` is not `track_caller`

itself). To avoid eager evaluation of the panicking branch, it is never

inlined.
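
A small standard-library sketch of the behaviour described above. The function names (`direct`, `via_closure`, `panic_missing`, `get`) are made up for illustration and are not Bevy's. It shows that a closure passed to `unwrap_or_else` does not inherit `#[track_caller]`, and one way to keep the caller location by matching explicitly and panicking from a cold, never-inlined helper.

```rust
use std::panic::Location;

/// Reports the location of whoever called `direct`.
#[track_caller]
fn direct() -> &'static Location<'static> {
    Location::caller()
}

/// The closure is not `#[track_caller]`, so the reported location is the
/// `Location::caller()` call inside the closure, not the caller of `via_closure`.
#[track_caller]
fn via_closure() -> &'static Location<'static> {
    None.unwrap_or_else(|| Location::caller())
}

/// Panicking helper kept out of line so the happy path stays small.
#[cold]
#[inline(never)]
#[track_caller]
fn panic_missing(id: usize) -> ! {
    panic!("entity {id} does not exist");
}

/// Explicit `match` instead of `unwrap_or_else`: the panic location
/// propagates through `panic_missing` up to the caller of `get`.
#[track_caller]
fn get(slots: &[Option<&'static str>], id: usize) -> &'static str {
    match slots.get(id).copied().flatten() {
        Some(name) => name,
        None => panic_missing(id),
    }
}
```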

---------

Co-authored-by: Giacomo Stevanato <giaco.stevanato@gmail.com>

# Objective

`color_from_entity` uses the poor man's hash to get a fixed random color

for an entity.

While the poor man's hash is succinct, it has a tendency to clump. As a

result, bevy_gizmos has a tendency to re-use very similar colors for

different entities.

This is bad: we want dissimilar colors that span the whole range

of possible hues. This way, each bevy_gizmos aabb gizmo is easy to

identify.

## Solution

AHash is a nice and fast hash that just so happens to be available to

use, so we use it.
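
The idea can be sketched as follows. To keep the example free of external crates, the standard library's `DefaultHasher` is swapped in for AHash, and `hue_from_entity_bits` is a hypothetical name; the real `color_from_entity` may map the hash to a hue differently.

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::{Hash, Hasher};

/// Maps an entity's raw bits to a hue in whole degrees, in `[0, 360)`.
/// `DefaultHasher` is a stand-in for AHash in this sketch.
fn hue_from_entity_bits(bits: u64) -> f32 {
    let mut hasher = DefaultHasher::new();
    bits.hash(&mut hasher);
    let hash = hasher.finish();
    // Reduce in integer space so the result stays exactly within range.
    (hash % 360) as f32
}
```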

# Objective

In Bevy 0.10.1 and before, the only way to enable text wrapping was to set

a local `Val::Px` width constraint on the text node itself.

`Val::Percent` constraints and constraints on the text node's ancestors

did nothing.

#7779 fixed those problems. But, perversely, displaying unwrapped text is

really difficult now, and requires users to nest each `TextBundle` in a

`NodeBundle` and apply `min_width` and `max_width` constraints. Some

constructions may even need more than one layer of nesting. I've seen

several people already who have really struggled with this when porting

their projects to main in advance of 0.11.

## Solution

Add a `NoWrap` variant to the `BreakLineOn` enum.

If `NoWrap` is set, ignore any constraints on the width for the text and

call `TextPipeline::queue_text` with a width bound of `f32::INFINITY`.
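
A minimal sketch of that rule, using a local enum that mirrors the variant names of `BreakLineOn` so the example compiles without Bevy. The `width_bound` function is hypothetical and only shows how the width passed on to text layout could be chosen.

```rust
/// Local mirror of the enum's variants, defined here for illustration only.
#[derive(Clone, Copy, Debug, PartialEq)]
enum BreakLineOn {
    WordBoundary,
    AnyCharacter,
    NoWrap,
}

/// Picks the width bound to hand to text layout (hypothetical helper).
fn width_bound(linebreak: BreakLineOn, available_width: f32) -> f32 {
    match linebreak {
        // Width constraints are ignored, so the text never wraps.
        BreakLineOn::NoWrap => f32::INFINITY,
        // Otherwise the text wraps within the available width.
        BreakLineOn::WordBoundary | BreakLineOn::AnyCharacter => available_width,
    }
}
```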

---

## Changelog

* Added a `NoWrap` variant to the `BreakLineOn` enum.

* If `NoWrap` is set, any constraints on the width for the text are

ignored and `TextPipeline::queue_text` is called with a width bound of

`f32::INFINITY`.

* Changed the `size` field of `FixedMeasure` to `pub`. This shouldn't

have been private, it was always intended to have `pub` visibility.

* Added a `with_no_wrap` method to `TextBundle`.

## Migration Guide

`bevy_text::text::BreakLineOn` has a new variant `NoWrap` that disables

text wrapping for the `Text`.

Text wrapping can also be disabled using the `with_no_wrap` method of

`TextBundle`.

# Objective

- Fix this error to be able to run UI examples in WebGPU

```

1 error(s) generated while compiling the shader:

:31:18 error: integral user-defined vertex outputs must have a flat interpolation attribute

@location(3) mode: u32,

^^^^

:36:1 note: while analyzing entry point 'vertex'

fn vertex(

^^

```

It was introduced in #8793

## Solution

- Add `@interpolate(flat)` to the `mode` field

`Style` flattened `size`, `min_size` and `max_size` to its root struct,

causing compilation errors.

I uncommented the code to avoid further silent errors not caught by CI,

but hid the view to keep the same behaviour.