# Objective

In simple cases we might want to derive the `ExtractComponent` trait.

This adds symmetry to the existing `ExtractResource` derive.

## Solution

Add an implementation of `#[derive(ExtractComponent)]`.

The implementation is adapted from the existing `ExtractResource` derive macro.

Additionally, there is an attribute called `extract_component_filter`. This allows specifying a query filter type used when extracting.

If not specified, no filter (equal to `()`) is used.

So:

```rust

#[derive(Component, Clone, ExtractComponent)]

#[extract_component_filter(With<Fuel>)]

pub struct Car {

pub wheels: usize,

}

```

would expand to (a bit cleaned up here):

```rust

impl ExtractComponent for Car

{

type Query = &'static Self;

type Filter = With<Fuel>;

type Out = Self;

fn extract_component(item: QueryItem<'_, Self::Query>) -> Option<Self::Out> {

Some(item.clone())

}

}

```

---

## Changelog

- Added the ability to `#[derive(ExtractComponent)]` with an optional filter.

# Objective

- Fixes#4592

## Solution

- Implement `SrgbColorSpace` for `u8` via `f32`

- Convert KTX2 R8 and R8G8 non-linear sRGB to wgpu `R8Unorm` and `Rg8Unorm` as non-linear sRGB are not supported by wgpu for these formats

- Convert KTX2 R8G8B8 formats to `Rgba8Unorm` and `Rgba8UnormSrgb` by adding an alpha channel as the Rgb variants don't exist in wgpu

---

## Changelog

- Added: Support for KTX2 `R8_SRGB`, `R8_UNORM`, `R8G8_SRGB`, `R8G8_UNORM`, `R8G8B8_SRGB`, `R8G8B8_UNORM` formats by converting to supported wgpu formats as appropriate

# Objective

Add a `FromReflect` derive to the `Aabb` type, like all other math types, so we can reflect `Vec<Aabb>`.

## Solution

Just add it :)

---

## Changelog

### Added

- Implemented `FromReflect` for `Aabb`.

# Objective

Update Bevy to wgpu 0.15.

## Changelog

- Update to wgpu 0.15, wgpu-hal 0.15.1, and naga 0.11

- Users can now use the [DirectX Shader Compiler](https://github.com/microsoft/DirectXShaderCompiler) (DXC) on Windows with DX12 for faster shader compilation and ShaderModel 6.0+ support (requires `dxcompiler.dll` and `dxil.dll`, which are included in DXC downloads from [here](https://github.com/microsoft/DirectXShaderCompiler/releases/latest))

## Migration Guide

### WGSL Top-Level `let` is now `const`

All top level constants are now declared with `const`, catching up with the wgsl spec.

`let` is no longer allowed at the global scope, only within functions.

```diff

-let SOME_CONSTANT = 12.0;

+const SOME_CONSTANT = 12.0;

```

#### `TextureDescriptor` and `SurfaceConfiguration` now requires a `view_formats` field

The new `view_formats` field in the `TextureDescriptor` is used to specify a list of formats the texture can be re-interpreted to in a texture view. Currently only changing srgb-ness is allowed (ex. `Rgba8Unorm` <=> `Rgba8UnormSrgb`). You should set `view_formats` to `&[]` (empty) unless you have a specific reason not to.

#### The DirectX Shader Compiler (DXC) is now supported on DX12

DXC is now the default shader compiler when using the DX12 backend. DXC is Microsoft's replacement for their legacy FXC compiler, and is faster, less buggy, and allows for modern shader features to be used (ShaderModel 6.0+). DXC requires `dxcompiler.dll` and `dxil.dll` to be available, otherwise it will log a warning and fall back to FXC.

You can get `dxcompiler.dll` and `dxil.dll` by downloading the latest release from [Microsoft's DirectXShaderCompiler github repo](https://github.com/microsoft/DirectXShaderCompiler/releases/latest) and copying them into your project's root directory. These must be included when you distribute your Bevy game/app/etc if you plan on supporting the DX12 backend and are using DXC.

`WgpuSettings` now has a `dx12_shader_compiler` field which can be used to choose between either FXC or DXC (if you pass None for the paths for DXC, it will check for the .dlls in the working directory).

# Objective

## Use Case

A render node which calls `post_process_write()` on a `ViewTarget` multiple times during a single run of the node means both main textures of this view target is accessed.

If the source texture (which alternate between main textures **a** and **b**) is accessed in a shader during those iterations it means that those textures have to be bound using bind groups.

Preparing bind groups for both main textures ahead of time is desired, which means having access to the _other_ main texture is needed.

## Solution

Add a method on `ViewTarget` for accessing the other main texture.

---

## Changelog

### Added

- `main_texture_other` API on `ViewTarget`

# Objective

I found several words in code and docs are incorrect. This should be fixed.

## Solution

- Fix several minor typos

Co-authored-by: Chris Ohk <utilforever@gmail.com>

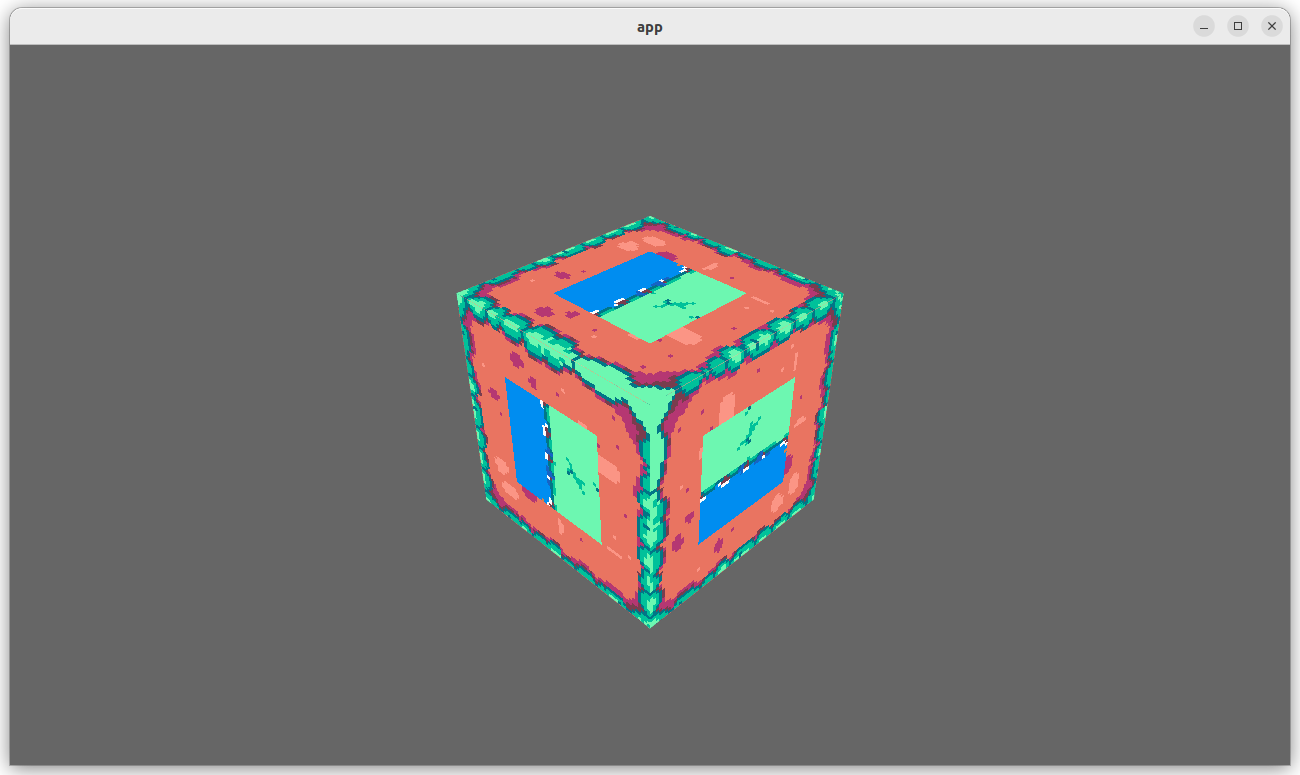

# Objective

Fixes#6952

## Solution

- Request WGPU capabilities `SAMPLED_TEXTURE_AND_STORAGE_BUFFER_ARRAY_NON_UNIFORM_INDEXING`, `SAMPLER_NON_UNIFORM_INDEXING` and `UNIFORM_BUFFER_AND_STORAGE_TEXTURE_ARRAY_NON_UNIFORM_INDEXING` when corresponding features are enabled.

- Add an example (`shaders/texture_binding_array`) illustrating (and testing) the use of non-uniform indexed textures and samplers.

## Changelog

- Added new capabilities for shader validation.

- Added example `shaders/texture_binding_array`.

Co-authored-by: Robert Swain <robert.swain@gmail.com>

# Objective

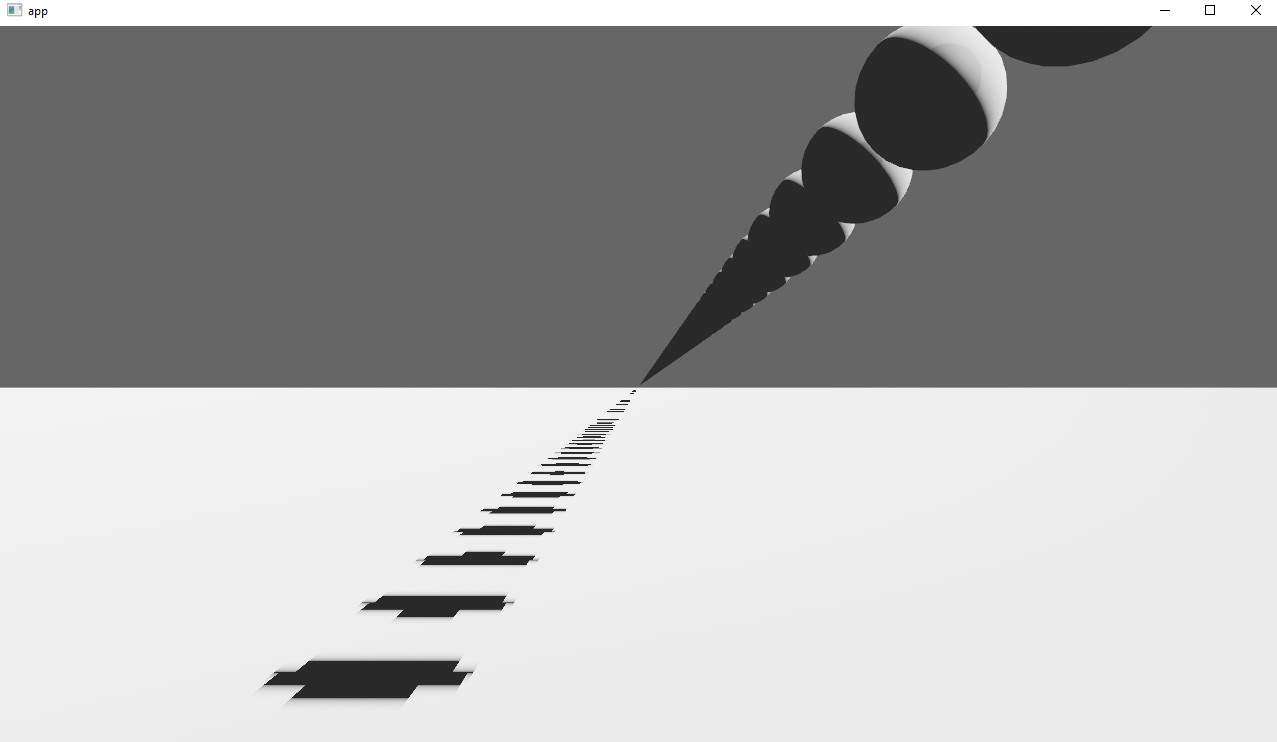

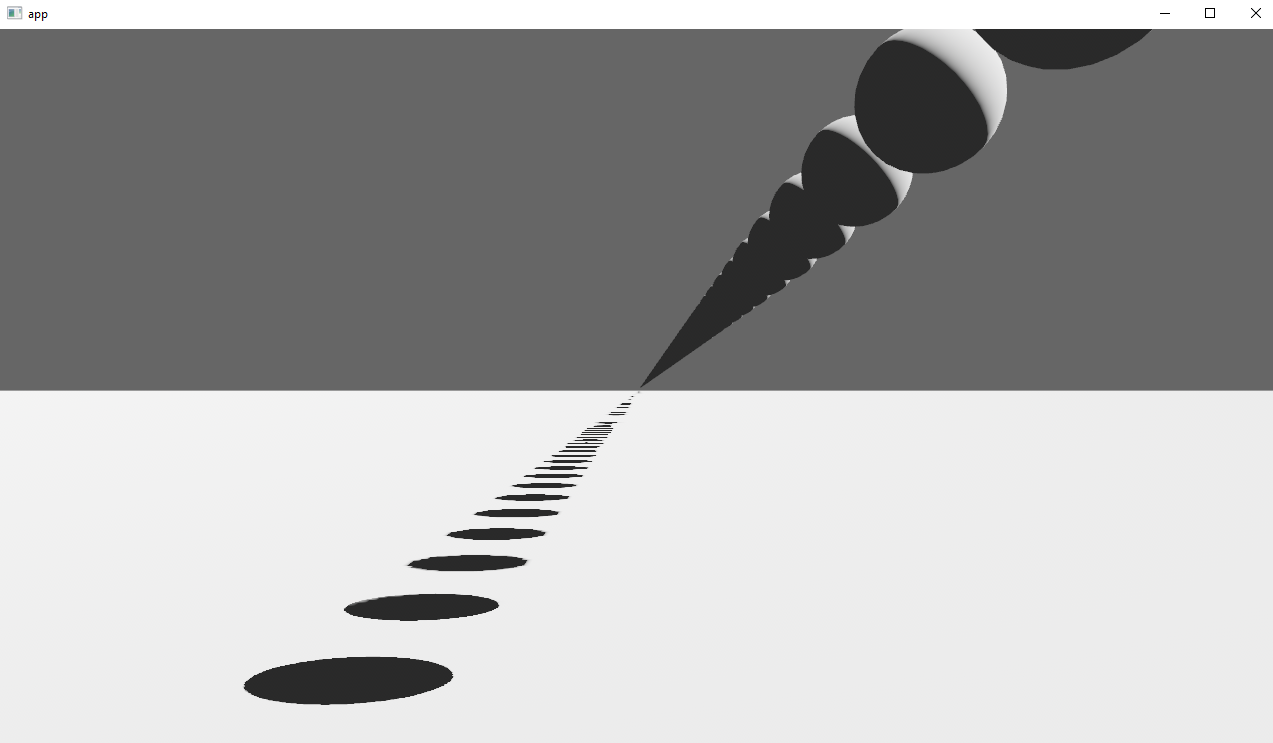

Implements cascaded shadow maps for directional lights, which produces better quality shadows without needing excessively large shadow maps.

Fixes#3629

Before

After

## Solution

Rather than rendering a single shadow map for directional light, the view frustum is divided into a series of cascades, each of which gets its own shadow map. The correct cascade is then sampled for shadow determination.

---

## Changelog

Directional lights now use cascaded shadow maps for improved shadow quality.

## Migration Guide

You no longer have to manually specify a `shadow_projection` for a directional light, and these settings should be removed. If customization of how cascaded shadow maps work is desired, modify the `CascadeShadowConfig` component instead.

# Objective

Fixes#7286. Both `App::add_sub_app` and `App::insert_sub_app` are rather redundant. Before 0.10 is shipped, one of them should be removed.

## Solution

Remove `App::add_sub_app` to prefer `App::insert_sub_app`.

Also hid away `SubApp::extract` since that can be a footgun if someone mutates it for whatever reason. Willing to revert this change if there are objections.

Perhaps we should make `SubApp: Deref<Target=App>`? Might change if we decide to move `!Send` resources into it.

---

## Changelog

Added: `SubApp::new`

Removed: `App::add_sub_app`

## Migration Guide

`App::add_sub_app` has been removed in favor of `App::insert_sub_app`. Use `SubApp::new` and insert it via `App::add_sub_app`

Old:

```rust

let mut sub_app = App::new()

// Build subapp here

app.add_sub_app(MySubAppLabel, sub_app);

```

New:

```rust

let mut sub_app = App::new()

// Build subapp here

app.insert_sub_app(MySubAppLabel, SubApp::new(sub_app, extract_fn));

```

# Objective

`RenderContext`, the core abstraction for running the render graph, currently only supports recording one `CommandBuffer` across the entire render graph. This means the entire buffer must be recorded sequentially, usually via the render graph itself. This prevents parallelization and forces users to only encode their commands in the render graph.

## Solution

Allow `RenderContext` to store a `Vec<CommandBuffer>` that it progressively appends to. By default, the context will not have a command encoder, but will create one as soon as either `begin_tracked_render_pass` or the `command_encoder` accesor is first called. `RenderContext::add_command_buffer` allows users to interrupt the current command encoder, flush it to the vec, append a user-provided `CommandBuffer` and reset the command encoder to start a new buffer. Users or the render graph will call `RenderContext::finish` to retrieve the series of buffers for submitting to the queue.

This allows users to encode their own `CommandBuffer`s outside of the render graph, potentially in different threads, and store them in components or resources.

Ideally, in the future, the core pipeline passes can run in `RenderStage::Render` systems and end up saving the completed command buffers to either `Commands` or a field in `RenderPhase`.

## Alternatives

The alternative is to use to use wgpu's `RenderBundle`s, which can achieve similar results; however it's not universally available (no OpenGL, WebGL, and DX11).

---

## Changelog

Added: `RenderContext::new`

Added: `RenderContext::add_command_buffer`

Added: `RenderContext::finish`

Changed: `RenderContext::render_device` is now private. Use the accessor `RenderContext::render_device()` instead.

Changed: `RenderContext::command_encoder` is now private. Use the accessor `RenderContext::command_encoder()` instead.

Changed: `RenderContext` now supports adding external `CommandBuffer`s for inclusion into the render graphs. These buffers can be encoded outside of the render graph (i.e. in a system).

## Migration Guide

`RenderContext`'s fields are now private. Use the accessors on `RenderContext` instead, and construct it with `RenderContext::new`.

# Objective

Fixes#6931

Continues #6954 by squashing `Msaa` to a flat enum

Helps out #7215

# Solution

```

pub enum Msaa {

Off = 1,

#[default]

Sample4 = 4,

}

```

# Changelog

- Modified

- `Msaa` is now enum

- Defaults to 4 samples

- Uses `.samples()` method to get the sample number as `u32`

# Migration Guide

```

let multi = Msaa { samples: 4 }

// is now

let multi = Msaa::Sample4

multi.samples

// is now

multi.samples()

```

Co-authored-by: Sjael <jakeobrien44@gmail.com>

After #6503, bevy_render uses the `send_blocking` method introduced in async-channel 1.7, but depended only on ^1.4.

I saw this after pulling main without running cargo update.

# Objective

- Fix minimum dependency version of async-channel

## Solution

- Bump async-channel version constraint to ^1.8, which is currently the latest version.

NOTE: Both bevy_ecs and bevy_tasks also depend on async-channel but they didn't use any newer features.

# Objective

Fixes#3184. Fixes#6640. Fixes#4798. Using `Query::par_for_each(_mut)` currently requires a `batch_size` parameter, which affects how it chunks up large archetypes and tables into smaller chunks to run in parallel. Tuning this value is difficult, as the performance characteristics entirely depends on the state of the `World` it's being run on. Typically, users will just use a flat constant and just tune it by hand until it performs well in some benchmarks. However, this is both error prone and risks overfitting the tuning on that benchmark.

This PR proposes a naive automatic batch-size computation based on the current state of the `World`.

## Background

`Query::par_for_each(_mut)` schedules a new Task for every archetype or table that it matches. Archetypes/tables larger than the batch size are chunked into smaller tasks. Assuming every entity matched by the query has an identical workload, this makes the worst case scenario involve using a batch size equal to the size of the largest matched archetype or table. Conversely, a batch size of `max {archetype, table} size / thread count * COUNT_PER_THREAD` is likely the sweetspot where the overhead of scheduling tasks is minimized, at least not without grouping small archetypes/tables together.

There is also likely a strict minimum batch size below which the overhead of scheduling these tasks is heavier than running the entire thing single-threaded.

## Solution

- [x] Remove the `batch_size` from `Query(State)::par_for_each` and friends.

- [x] Add a check to compute `batch_size = max {archeytpe/table} size / thread count * COUNT_PER_THREAD`

- [x] ~~Panic if thread count is 0.~~ Defer to `for_each` if the thread count is 1 or less.

- [x] Early return if there is no matched table/archetype.

- [x] Add override option for users have queries that strongly violate the initial assumption that all iterated entities have an equal workload.

---

## Changelog

Changed: `Query::par_for_each(_mut)` has been changed to `Query::par_iter(_mut)` and will now automatically try to produce a batch size for callers based on the current `World` state.

## Migration Guide

The `batch_size` parameter for `Query(State)::par_for_each(_mut)` has been removed. These calls will automatically compute a batch size for you. Remove these parameters from all calls to these functions.

Before:

```rust

fn parallel_system(query: Query<&MyComponent>) {

query.par_for_each(32, |comp| {

...

});

}

```

After:

```rust

fn parallel_system(query: Query<&MyComponent>) {

query.par_iter().for_each(|comp| {

...

});

}

```

Co-authored-by: Arnav Choubey <56453634+x-52@users.noreply.github.com>

Co-authored-by: Robert Swain <robert.swain@gmail.com>

Co-authored-by: François <mockersf@gmail.com>

Co-authored-by: Corey Farwell <coreyf@rwell.org>

Co-authored-by: Aevyrie <aevyrie@gmail.com>

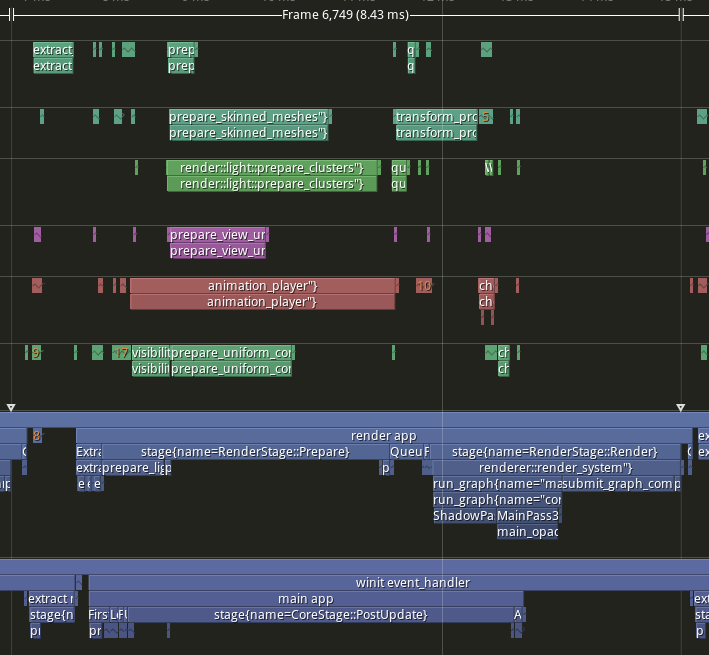

# Objective

- Implement pipelined rendering

- Fixes#5082

- Fixes#4718

## User Facing Description

Bevy now implements piplelined rendering! Pipelined rendering allows the app logic and rendering logic to run on different threads leading to large gains in performance.

*tracy capture of many_foxes example*

To use pipelined rendering, you just need to add the `PipelinedRenderingPlugin`. If you're using `DefaultPlugins` then it will automatically be added for you on all platforms except wasm. Bevy does not currently support multithreading on wasm which is needed for this feature to work. If you aren't using `DefaultPlugins` you can add the plugin manually.

```rust

use bevy::prelude::*;

use bevy::render::pipelined_rendering::PipelinedRenderingPlugin;

fn main() {

App::new()

// whatever other plugins you need

.add_plugin(RenderPlugin)

// needs to be added after RenderPlugin

.add_plugin(PipelinedRenderingPlugin)

.run();

}

```

If for some reason pipelined rendering needs to be removed. You can also disable the plugin the normal way.

```rust

use bevy::prelude::*;

use bevy::render::pipelined_rendering::PipelinedRenderingPlugin;

fn main() {

App::new.add_plugins(DefaultPlugins.build().disable::<PipelinedRenderingPlugin>());

}

```

### A setup function was added to plugins

A optional plugin lifecycle function was added to the `Plugin trait`. This function is called after all plugins have been built, but before the app runner is called. This allows for some final setup to be done. In the case of pipelined rendering, the function removes the sub app from the main app and sends it to the render thread.

```rust

struct MyPlugin;

impl Plugin for MyPlugin {

fn build(&self, app: &mut App) {

}

// optional function

fn setup(&self, app: &mut App) {

// do some final setup before runner is called

}

}

```

### A Stage for Frame Pacing

In the `RenderExtractApp` there is a stage labelled `BeforeIoAfterRenderStart` that systems can be added to. The specific use case for this stage is for a frame pacing system that can delay the start of main app processing in render bound apps to reduce input latency i.e. "frame pacing". This is not currently built into bevy, but exists as `bevy`

```text

|-------------------------------------------------------------------|

| | BeforeIoAfterRenderStart | winit events | main schedule |

| extract |---------------------------------------------------------|

| | extract commands | rendering schedule |

|-------------------------------------------------------------------|

```

### Small API additions

* `Schedule::remove_stage`

* `App::insert_sub_app`

* `App::remove_sub_app`

* `TaskPool::scope_with_executor`

## Problems and Solutions

### Moving render app to another thread

Most of the hard bits for this were done with the render redo. This PR just sends the render app back and forth through channels which seems to work ok. I originally experimented with using a scope to run the render task. It was cuter, but that approach didn't allow render to start before i/o processing. So I switched to using channels. There is much complexity in the coordination that needs to be done, but it's worth it. By moving rendering during i/o processing the frame times should be much more consistent in render bound apps. See https://github.com/bevyengine/bevy/issues/4691.

### Unsoundness with Sending World with NonSend resources

Dropping !Send things on threads other than the thread they were spawned on is considered unsound. The render world doesn't have any nonsend resources. So if we tell the users to "pretty please don't spawn nonsend resource on the render world", we can avoid this problem.

More seriously there is this https://github.com/bevyengine/bevy/pull/6534 pr, which patches the unsoundness by aborting the app if a nonsend resource is dropped on the wrong thread. ~~That PR should probably be merged before this one.~~ For a longer term solution we have this discussion going https://github.com/bevyengine/bevy/discussions/6552.

### NonSend Systems in render world

The render world doesn't have any !Send resources, but it does have a non send system. While Window is Send, winit does have some API's that can only be accessed on the main thread. `prepare_windows` in the render schedule thus needs to be scheduled on the main thread. Currently we run nonsend systems by running them on the thread the TaskPool::scope runs on. When we move render to another thread this no longer works.

To fix this, a new `scope_with_executor` method was added that takes a optional `TheadExecutor` that can only be ticked on the thread it was initialized on. The render world then holds a `MainThreadExecutor` resource which can be passed to the scope in the parallel executor that it uses to spawn it's non send systems on.

### Scopes executors between render and main should not share tasks

Since the render world and the app world share the `ComputeTaskPool`. Because `scope` has executors for the ComputeTaskPool a system from the main world could run on the render thread or a render system could run on the main thread. This can cause performance problems because it can delay a stage from finishing. See https://github.com/bevyengine/bevy/pull/6503#issuecomment-1309791442 for more details.

To avoid this problem, `TaskPool::scope` has been changed to not tick the ComputeTaskPool when it's used by the parallel executor. In the future when we move closer to the 1 thread to 1 logical core model we may want to overprovide threads, because the render and main app threads don't do much when executing the schedule.

## Performance

My machine is Windows 11, AMD Ryzen 5600x, RX 6600

### Examples

#### This PR with pipelining vs Main

> Note that these were run on an older version of main and the performance profile has probably changed due to optimizations

Seeing a perf gain from 29% on many lights to 7% on many sprites.

<html>

<body>

<!--StartFragment--><google-sheets-html-origin>

| percent | | | Diff | | | Main | | | PR | |

-- | -- | -- | -- | -- | -- | -- | -- | -- | -- | -- | -- | --

tracy frame time | mean | median | sigma | mean | median | sigma | mean | median | sigma | mean | median | sigma

many foxes | 27.01% | 27.34% | -47.09% | 1.58 | 1.55 | -1.78 | 5.85 | 5.67 | 3.78 | 4.27 | 4.12 | 5.56

many lights | 29.35% | 29.94% | -10.84% | 3.02 | 3.03 | -0.57 | 10.29 | 10.12 | 5.26 | 7.27 | 7.09 | 5.83

many animated sprites | 13.97% | 15.69% | 14.20% | 3.79 | 4.17 | 1.41 | 27.12 | 26.57 | 9.93 | 23.33 | 22.4 | 8.52

3d scene | 25.79% | 26.78% | 7.46% | 0.49 | 0.49 | 0.15 | 1.9 | 1.83 | 2.01 | 1.41 | 1.34 | 1.86

many cubes | 11.97% | 11.28% | 14.51% | 1.93 | 1.78 | 1.31 | 16.13 | 15.78 | 9.03 | 14.2 | 14 | 7.72

many sprites | 7.14% | 9.42% | -85.42% | 1.72 | 2.23 | -6.15 | 24.09 | 23.68 | 7.2 | 22.37 | 21.45 | 13.35

<!--EndFragment-->

</body>

</html>

#### This PR with pipelining disabled vs Main

Mostly regressions here. I don't think this should be a problem as users that are disabling pipelined rendering are probably running single threaded and not using the parallel executor. The regression is probably mostly due to the switch to use `async_executor::run` instead of `try_tick` and also having one less thread to run systems on. I'll do a writeup on why switching to `run` causes regressions, so we can try to eventually fix it. Using try_tick causes issues when pipeline rendering is enable as seen [here](https://github.com/bevyengine/bevy/pull/6503#issuecomment-1380803518)

<html>

<body>

<!--StartFragment--><google-sheets-html-origin>

| percent | | | Diff | | | Main | | | PR no pipelining | |

-- | -- | -- | -- | -- | -- | -- | -- | -- | -- | -- | -- | --

tracy frame time | mean | median | sigma | mean | median | sigma | mean | median | sigma | mean | median | sigma

many foxes | -3.72% | -4.42% | -1.07% | -0.21 | -0.24 | -0.04 | 5.64 | 5.43 | 3.74 | 5.85 | 5.67 | 3.78

many lights | 0.29% | -0.30% | 4.75% | 0.03 | -0.03 | 0.25 | 10.29 | 10.12 | 5.26 | 10.26 | 10.15 | 5.01

many animated sprites | 0.22% | 1.81% | -2.72% | 0.06 | 0.48 | -0.27 | 27.12 | 26.57 | 9.93 | 27.06 | 26.09 | 10.2

3d scene | -15.79% | -14.75% | -31.34% | -0.3 | -0.27 | -0.63 | 1.9 | 1.83 | 2.01 | 2.2 | 2.1 | 2.64

many cubes | -2.85% | -3.30% | 0.00% | -0.46 | -0.52 | 0 | 16.13 | 15.78 | 9.03 | 16.59 | 16.3 | 9.03

many sprites | 2.49% | 2.41% | 0.69% | 0.6 | 0.57 | 0.05 | 24.09 | 23.68 | 7.2 | 23.49 | 23.11 | 7.15

<!--EndFragment-->

</body>

</html>

### Benchmarks

Mostly the same except empty_systems has got a touch slower. The maybe_pipelining+1 column has the compute task pool with an extra thread over default added. This is because pipelining loses one thread over main to execute systems on, since the main thread no longer runs normal systems.

<details>

<summary>Click Me</summary>

```text

group main maybe-pipelining+1

----- ------------------------- ------------------

busy_systems/01x_entities_03_systems 1.07 30.7±1.32µs ? ?/sec 1.00 28.6±1.35µs ? ?/sec

busy_systems/01x_entities_06_systems 1.10 52.1±1.10µs ? ?/sec 1.00 47.2±1.08µs ? ?/sec

busy_systems/01x_entities_09_systems 1.00 74.6±1.36µs ? ?/sec 1.00 75.0±1.93µs ? ?/sec

busy_systems/01x_entities_12_systems 1.03 100.6±6.68µs ? ?/sec 1.00 98.0±1.46µs ? ?/sec

busy_systems/01x_entities_15_systems 1.11 128.5±3.53µs ? ?/sec 1.00 115.5±1.02µs ? ?/sec

busy_systems/02x_entities_03_systems 1.16 50.4±2.56µs ? ?/sec 1.00 43.5±3.00µs ? ?/sec

busy_systems/02x_entities_06_systems 1.00 87.1±1.27µs ? ?/sec 1.05 91.5±7.15µs ? ?/sec

busy_systems/02x_entities_09_systems 1.04 139.9±6.37µs ? ?/sec 1.00 134.0±1.06µs ? ?/sec

busy_systems/02x_entities_12_systems 1.05 179.2±3.47µs ? ?/sec 1.00 170.1±3.17µs ? ?/sec

busy_systems/02x_entities_15_systems 1.01 219.6±3.75µs ? ?/sec 1.00 218.1±2.55µs ? ?/sec

busy_systems/03x_entities_03_systems 1.10 70.6±2.33µs ? ?/sec 1.00 64.3±0.69µs ? ?/sec

busy_systems/03x_entities_06_systems 1.02 130.2±3.11µs ? ?/sec 1.00 128.0±1.34µs ? ?/sec

busy_systems/03x_entities_09_systems 1.00 195.0±10.11µs ? ?/sec 1.00 194.8±1.41µs ? ?/sec

busy_systems/03x_entities_12_systems 1.01 261.7±4.05µs ? ?/sec 1.00 259.8±4.11µs ? ?/sec

busy_systems/03x_entities_15_systems 1.00 318.0±3.04µs ? ?/sec 1.06 338.3±20.25µs ? ?/sec

busy_systems/04x_entities_03_systems 1.00 82.9±0.63µs ? ?/sec 1.02 84.3±0.63µs ? ?/sec

busy_systems/04x_entities_06_systems 1.01 181.7±3.65µs ? ?/sec 1.00 179.8±1.76µs ? ?/sec

busy_systems/04x_entities_09_systems 1.04 265.0±4.68µs ? ?/sec 1.00 255.3±1.98µs ? ?/sec

busy_systems/04x_entities_12_systems 1.00 335.9±3.00µs ? ?/sec 1.05 352.6±15.84µs ? ?/sec

busy_systems/04x_entities_15_systems 1.00 418.6±10.26µs ? ?/sec 1.08 450.2±39.58µs ? ?/sec

busy_systems/05x_entities_03_systems 1.07 114.3±0.95µs ? ?/sec 1.00 106.9±1.52µs ? ?/sec

busy_systems/05x_entities_06_systems 1.08 229.8±2.90µs ? ?/sec 1.00 212.3±4.18µs ? ?/sec

busy_systems/05x_entities_09_systems 1.03 329.3±1.99µs ? ?/sec 1.00 319.2±2.43µs ? ?/sec

busy_systems/05x_entities_12_systems 1.06 454.7±6.77µs ? ?/sec 1.00 430.1±3.58µs ? ?/sec

busy_systems/05x_entities_15_systems 1.03 554.6±6.15µs ? ?/sec 1.00 538.4±23.87µs ? ?/sec

contrived/01x_entities_03_systems 1.00 14.0±0.15µs ? ?/sec 1.08 15.1±0.21µs ? ?/sec

contrived/01x_entities_06_systems 1.04 28.5±0.37µs ? ?/sec 1.00 27.4±0.44µs ? ?/sec

contrived/01x_entities_09_systems 1.00 41.5±4.38µs ? ?/sec 1.02 42.2±2.24µs ? ?/sec

contrived/01x_entities_12_systems 1.06 55.9±1.49µs ? ?/sec 1.00 52.6±1.36µs ? ?/sec

contrived/01x_entities_15_systems 1.02 68.0±2.00µs ? ?/sec 1.00 66.5±0.78µs ? ?/sec

contrived/02x_entities_03_systems 1.03 25.2±0.38µs ? ?/sec 1.00 24.6±0.52µs ? ?/sec

contrived/02x_entities_06_systems 1.00 46.3±0.49µs ? ?/sec 1.04 48.1±4.13µs ? ?/sec

contrived/02x_entities_09_systems 1.02 70.4±0.99µs ? ?/sec 1.00 68.8±1.04µs ? ?/sec

contrived/02x_entities_12_systems 1.06 96.8±1.49µs ? ?/sec 1.00 91.5±0.93µs ? ?/sec

contrived/02x_entities_15_systems 1.02 116.2±0.95µs ? ?/sec 1.00 114.2±1.42µs ? ?/sec

contrived/03x_entities_03_systems 1.00 33.2±0.38µs ? ?/sec 1.01 33.6±0.45µs ? ?/sec

contrived/03x_entities_06_systems 1.00 62.4±0.73µs ? ?/sec 1.01 63.3±1.05µs ? ?/sec

contrived/03x_entities_09_systems 1.02 96.4±0.85µs ? ?/sec 1.00 94.8±3.02µs ? ?/sec

contrived/03x_entities_12_systems 1.01 126.3±4.67µs ? ?/sec 1.00 125.6±2.27µs ? ?/sec

contrived/03x_entities_15_systems 1.03 160.2±9.37µs ? ?/sec 1.00 156.0±1.53µs ? ?/sec

contrived/04x_entities_03_systems 1.02 41.4±3.39µs ? ?/sec 1.00 40.5±0.52µs ? ?/sec

contrived/04x_entities_06_systems 1.00 78.9±1.61µs ? ?/sec 1.02 80.3±1.06µs ? ?/sec

contrived/04x_entities_09_systems 1.02 121.8±3.97µs ? ?/sec 1.00 119.2±1.46µs ? ?/sec

contrived/04x_entities_12_systems 1.00 157.8±1.48µs ? ?/sec 1.01 160.1±1.72µs ? ?/sec

contrived/04x_entities_15_systems 1.00 197.9±1.47µs ? ?/sec 1.08 214.2±34.61µs ? ?/sec

contrived/05x_entities_03_systems 1.00 49.1±0.33µs ? ?/sec 1.01 49.7±0.75µs ? ?/sec

contrived/05x_entities_06_systems 1.00 95.0±0.93µs ? ?/sec 1.00 94.6±0.94µs ? ?/sec

contrived/05x_entities_09_systems 1.01 143.2±1.68µs ? ?/sec 1.00 142.2±2.00µs ? ?/sec

contrived/05x_entities_12_systems 1.00 191.8±2.03µs ? ?/sec 1.01 192.7±7.88µs ? ?/sec

contrived/05x_entities_15_systems 1.02 239.7±3.71µs ? ?/sec 1.00 235.8±4.11µs ? ?/sec

empty_systems/000_systems 1.01 47.8±0.67ns ? ?/sec 1.00 47.5±2.02ns ? ?/sec

empty_systems/001_systems 1.00 1743.2±126.14ns ? ?/sec 1.01 1761.1±70.10ns ? ?/sec

empty_systems/002_systems 1.01 2.2±0.04µs ? ?/sec 1.00 2.2±0.02µs ? ?/sec

empty_systems/003_systems 1.02 2.7±0.09µs ? ?/sec 1.00 2.7±0.16µs ? ?/sec

empty_systems/004_systems 1.00 3.1±0.11µs ? ?/sec 1.00 3.1±0.24µs ? ?/sec

empty_systems/005_systems 1.00 3.5±0.05µs ? ?/sec 1.11 3.9±0.70µs ? ?/sec

empty_systems/010_systems 1.00 5.5±0.12µs ? ?/sec 1.03 5.7±0.17µs ? ?/sec

empty_systems/015_systems 1.00 7.9±0.19µs ? ?/sec 1.06 8.4±0.16µs ? ?/sec

empty_systems/020_systems 1.00 10.4±1.25µs ? ?/sec 1.02 10.6±0.18µs ? ?/sec

empty_systems/025_systems 1.00 12.4±0.39µs ? ?/sec 1.14 14.1±1.07µs ? ?/sec

empty_systems/030_systems 1.00 15.1±0.39µs ? ?/sec 1.05 15.8±0.62µs ? ?/sec

empty_systems/035_systems 1.00 16.9±0.47µs ? ?/sec 1.07 18.0±0.37µs ? ?/sec

empty_systems/040_systems 1.00 19.3±0.41µs ? ?/sec 1.05 20.3±0.39µs ? ?/sec

empty_systems/045_systems 1.00 22.4±1.67µs ? ?/sec 1.02 22.9±0.51µs ? ?/sec

empty_systems/050_systems 1.00 24.4±1.67µs ? ?/sec 1.01 24.7±0.40µs ? ?/sec

empty_systems/055_systems 1.05 28.6±5.27µs ? ?/sec 1.00 27.2±0.70µs ? ?/sec

empty_systems/060_systems 1.02 29.9±1.64µs ? ?/sec 1.00 29.3±0.66µs ? ?/sec

empty_systems/065_systems 1.02 32.7±3.15µs ? ?/sec 1.00 32.1±0.98µs ? ?/sec

empty_systems/070_systems 1.00 33.0±1.42µs ? ?/sec 1.03 34.1±1.44µs ? ?/sec

empty_systems/075_systems 1.00 34.8±0.89µs ? ?/sec 1.04 36.2±0.70µs ? ?/sec

empty_systems/080_systems 1.00 37.0±1.82µs ? ?/sec 1.05 38.7±1.37µs ? ?/sec

empty_systems/085_systems 1.00 38.7±0.76µs ? ?/sec 1.05 40.8±0.83µs ? ?/sec

empty_systems/090_systems 1.00 41.5±1.09µs ? ?/sec 1.04 43.2±0.82µs ? ?/sec

empty_systems/095_systems 1.00 43.6±1.10µs ? ?/sec 1.04 45.2±0.99µs ? ?/sec

empty_systems/100_systems 1.00 46.7±2.27µs ? ?/sec 1.03 48.1±1.25µs ? ?/sec

```

</details>

## Migration Guide

### App `runner` and SubApp `extract` functions are now required to be Send

This was changed to enable pipelined rendering. If this breaks your use case please report it as these new bounds might be able to be relaxed.

## ToDo

* [x] redo benchmarking

* [x] reinvestigate the perf of the try_tick -> run change for task pool scope

# Objective

- Add a configurable prepass

- A depth prepass is useful for various shader effects and to reduce overdraw. It can be expansive depending on the scene so it's important to be able to disable it if you don't need any effects that uses it or don't suffer from excessive overdraw.

- The goal is to eventually use it for things like TAA, Ambient Occlusion, SSR and various other techniques that can benefit from having a prepass.

## Solution

The prepass node is inserted before the main pass. It runs for each `Camera3d` with a prepass component (`DepthPrepass`, `NormalPrepass`). The presence of one of those components is used to determine which textures are generated in the prepass. When any prepass is enabled, the depth buffer generated will be used by the main pass to reduce overdraw.

The prepass runs for each `Material` created with the `MaterialPlugin::prepass_enabled` option set to `true`. You can overload the shader used by the prepass by using `Material::prepass_vertex_shader()` and/or `Material::prepass_fragment_shader()`. It will also use the `Material::specialize()` for more advanced use cases. It is enabled by default on all materials.

The prepass works on opaque materials and materials using an alpha mask. Transparent materials are ignored.

The `StandardMaterial` overloads the prepass fragment shader to support alpha mask and normal maps.

---

## Changelog

- Add a new `PrepassNode` that runs before the main pass

- Add a `PrepassPlugin` to extract/prepare/queue the necessary data

- Add a `DepthPrepass` and `NormalPrepass` component to control which textures will be created by the prepass and available in later passes.

- Add a new `prepass_enabled` flag to the `MaterialPlugin` that will control if a material uses the prepass or not.

- Add a new `prepass_enabled` flag to the `PbrPlugin` to control if the StandardMaterial uses the prepass. Currently defaults to false.

- Add `Material::prepass_vertex_shader()` and `Material::prepass_fragment_shader()` to control the prepass from the `Material`

## Notes

In bevy's sample 3d scene, the performance is actually worse when enabling the prepass, but on more complex scenes the performance is generally better. I would like more testing on this, but @DGriffin91 has reported a very noticeable improvements in some scenes.

The prepass is also used by @JMS55 for TAA and GTAO

discord thread: <https://discord.com/channels/691052431525675048/1011624228627419187>

This PR was built on top of the work of multiple people

Co-Authored-By: @superdump

Co-Authored-By: @robtfm

Co-Authored-By: @JMS55

Co-authored-by: Charles <IceSentry@users.noreply.github.com>

Co-authored-by: JMS55 <47158642+JMS55@users.noreply.github.com>

# Objective

Fix https://github.com/bevyengine/bevy/issues/4530

- Make it easier to open/close/modify windows by setting them up as `Entity`s with a `Window` component.

- Make multiple windows very simple to set up. (just add a `Window` component to an entity and it should open)

## Solution

- Move all properties of window descriptor to ~components~ a component.

- Replace `WindowId` with `Entity`.

- ~Use change detection for components to update backend rather than events/commands. (The `CursorMoved`/`WindowResized`/... events are kept for user convenience.~

Check each field individually to see what we need to update, events are still kept for user convenience.

---

## Changelog

- `WindowDescriptor` renamed to `Window`.

- Width/height consolidated into a `WindowResolution` component.

- Requesting maximization/minimization is done on the [`Window::state`] field.

- `WindowId` is now `Entity`.

## Migration Guide

- Replace `WindowDescriptor` with `Window`.

- Change `width` and `height` fields in a `WindowResolution`, either by doing

```rust

WindowResolution::new(width, height) // Explicitly

// or using From<_> for tuples for convenience

(1920., 1080.).into()

```

- Replace any `WindowCommand` code to just modify the `Window`'s fields directly and creating/closing windows is now by spawning/despawning an entity with a `Window` component like so:

```rust

let window = commands.spawn(Window { ... }).id(); // open window

commands.entity(window).despawn(); // close window

```

## Unresolved

- ~How do we tell when a window is minimized by a user?~

~Currently using the `Resize(0, 0)` as an indicator of minimization.~

No longer attempting to tell given how finnicky this was across platforms, now the user can only request that a window be maximized/minimized.

## Future work

- Move `exit_on_close` functionality out from windowing and into app(?)

- https://github.com/bevyengine/bevy/issues/5621

- https://github.com/bevyengine/bevy/issues/7099

- https://github.com/bevyengine/bevy/issues/7098

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

Speed up the render phase of rendering. An extension of #6885.

`SystemState::get` increments the `World`'s change tick atomically every time it's called. This is notably more expensive than a unsynchronized increment, even without contention. It also updates the archetypes, even when there has been nothing to update when it's called repeatedly.

## Solution

Piggyback off of #6885. Split `SystemState::validate_world_and_update_archetypes` into `SystemState::validate_world` and `SystemState::update_archetypes`, and make the later `pub`. Then create safe variants of `SystemState::get_unchecked_manual` that still validate the `World` but do not update archetypes and do not increment the change tick using `World::read_change_tick` and `World::change_tick`. Update `RenderCommandState` to call `SystemState::update_archetypes` in `Draw::prepare` and `SystemState::get_manual` in `Draw::draw`.

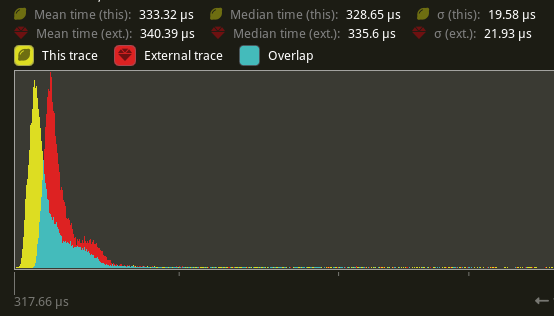

## Performance

There's a slight perf benefit (~2%) for `main_opaque_pass_3d` on `many_foxes` (340.39 us -> 333.32 us)

## Alternatives

We can change `SystemState::get` to not increment the `World`'s change tick. Though this would still put updating the archetypes and an atomic read on the hot-path.

---

## Changelog

Added: `SystemState::get_manual`

Added: `SystemState::get_manual_mut`

Added: `SystemState::update_archetypes`

# Objective

- Allow rendering queue systems to use a `Res<PipelineCache>` even for queueing up new rendering pipelines. This is part of unblocking parallel execution queue systems.

## Solution

- Make `PipelineCache` internally mutable w.r.t to queueing new pipelines. Pipelines are no longer immediately updated into the cache state, but rather queued into a Vec. The Vec of pending new pipelines is then later processed at the same time we actually create the queued pipelines on the GPU device.

---

## Changelog

`PipelineCache` no longer requires mutable access in order to queue render / compute pipelines.

## Migration Guide

* Most usages of `resource_mut::<PipelineCache>` and `ResMut<PipelineCache>` can be changed to `resource::<PipelineCache>` and `Res<PipelineCache>` as long as they don't use any methods requiring mutability - the only public method requiring it is `process_queue`.

# Objective

The documentation of the bevy_render crate is still pretty incomplete.

This PR follows up on #6885 and improves the documentation of the `render_phase` module.

This module contains one of our most important rendering abstractions and the current documentation is pretty confusing. This PR tries to clarify what all of these pieces are for and how they work together to form bevy`s modular rendering logic.

## Solution

### Code Reformating

- I have moved the `rangefinder` into the `render_phase` module since it is only used there.

- I have moved the `PhaseItem` (and the `BatchedPhaseItem`) from `render_phase::draw` over to `render_phase::mod`. This does not change the public-facing API since they are reexported anyway, but this change makes the relation between `RenderPhase` and `PhaseItem` clear and easier to discover.

### Documentation

- revised all documentation in the `render_phase` module

- added a module-level explanation of how `RenderPhase`s, `RenderPass`es, `PhaseItem`s, `Draw` functions, and `RenderCommands` relate to each other and how they are used

---

## Changelog

- The `rangefinder` module has been moved into the `render_phase` module.

## Migration Guide

- The `rangefinder` module has been moved into the `render_phase` module.

```rust

//old

use bevy::render::rangefinder::*;

// new

use bevy::render::render_phase::rangefinder::*;

```

# Objective

- There is a warning when building in release:

```

warning: unused import: `bevy_ecs::system::Local`

--> crates/bevy_render/src/extract_resource.rs:5:5

|

5 | use bevy_ecs::system::Local;

| ^^^^^^^^^^^^^^^^^^^^^^^

|

= note: `#[warn(unused_imports)]` on by default

```

- It's used 59751d6e33/crates/bevy_render/src/extract_resource.rs (L47)

- Fix it

## Solution

- Gate the import

- repeat of #5320

As mentioned in https://github.com/bevyengine/bevy/pull/6530. It allows to not create a new constant and simply having it to show up in the documentation when someone is looking for "transparent" (case insensitive) in rustdoc search.

cc @alice-i-cecile

# Objective

- Fixes#7066

## Solution

- Split the ChangeDetection trait into ChangeDetection and ChangeDetectionMut

- Added Ref as equivalent to &T with change detection

---

## Changelog

- Support for Ref which allow inspecting change detection flags in an immutable way

## Migration Guide

- While bevy prelude includes both ChangeDetection and ChangeDetectionMut any code explicitly referencing ChangeDetection might need to be updated to ChangeDetectionMut or both. Specifically any reading logic requires ChangeDetection while writes requires ChangeDetectionMut.

use bevy_ecs::change_detection::DetectChanges -> use bevy_ecs::change_detection::{DetectChanges, DetectChangesMut}

- Previously Res had methods to access change detection `is_changed` and `is_added` those methods have been moved to the `DetectChanges` trait. If you are including bevy prelude you will have access to these types otherwise you will need to `use bevy_ecs::change_detection::DetectChanges` to continue using them.

# Objective

Fixes#3310. Fixes#6282. Fixes#6278. Fixes#3666.

## Solution

Split out `!Send` resources into `NonSendResources`. Add a `origin_thread_id` to all `!Send` Resources, check it on dropping `NonSendResourceData`, if there's a mismatch, panic. Moved all of the checks that `MainThreadValidator` would do into `NonSendResources` instead.

All `!Send` resources now individually track which thread they were inserted from. This is validated against for every access, mutation, and drop that could be done against the value.

A regression test using an altered version of the example from #3310 has been added.

This is a stopgap solution for the current status quo. A full solution may involve fully removing `!Send` resources/components from `World`, which will likely require a much more thorough design on how to handle the existing in-engine and ecosystem use cases.

This PR also introduces another breaking change:

```rust

use bevy_ecs::prelude::*;

#[derive(Resource)]

struct Resource(u32);

fn main() {

let mut world = World::new();

world.insert_resource(Resource(1));

world.insert_non_send_resource(Resource(2));

let res = world.get_resource_mut::<Resource>().unwrap();

assert_eq!(res.0, 2);

}

```

This code will run correctly on 0.9.1 but not with this PR, since NonSend resources and normal resources have become actual distinct concepts storage wise.

## Changelog

Changed: Fix soundness bug with `World: Send`. Dropping a `World` that contains a `!Send` resource on the wrong thread will now panic.

## Migration Guide

Normal resources and `NonSend` resources no longer share the same backing storage. If `R: Resource`, then `NonSend<R>` and `Res<R>` will return different instances from each other. If you are using both `Res<T>` and `NonSend<T>` (or their mutable variants), to fetch the same resources, it's strongly advised to use `Res<T>`.

# Objective

Speed up the render phase for rendering.

## Solution

- Follow up #6988 and make the internals of atomic IDs `NonZeroU32`. This niches the `Option`s of the IDs in draw state, which reduces the size and branching behavior when evaluating for equality.

- Require `&RenderDevice` to get the device's `Limits` when initializing a `TrackedRenderPass` to preallocate the bind groups and vertex buffer state in `DrawState`, this removes the branch on needing to resize those `Vec`s.

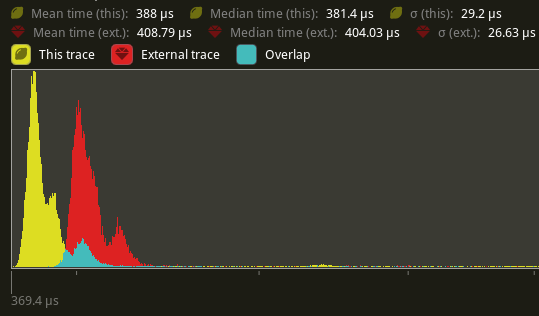

## Performance

This produces a similar speed up akin to that of #6885. This shows an approximate 6% speed up in `main_opaque_pass_3d` on `many_foxes` (408.79 us -> 388us). This should be orthogonal to the gains seen there.

---

## Changelog

Added: `RenderContext::begin_tracked_render_pass`.

Changed: `TrackedRenderPass` now requires a `&RenderDevice` on construction.

Removed: `bevy_render::render_phase::DrawState`. It was not usable in any form outside of `bevy_render`.

## Migration Guide

TODO

# Objective

- This pulls out some of the changes to Plugin setup and sub apps from #6503 to make that PR easier to review.

- Separate the extract stage from running the sub app's schedule to allow for them to be run on separate threads in the future

- Fixes#6990

## Solution

- add a run method to `SubApp` that runs the schedule

- change the name of `sub_app_runner` to extract to make it clear that this function is only for extracting data between the main app and the sub app

- remove the extract stage from the sub app schedule so it can be run separately. This is done by adding a `setup` method to the `Plugin` trait that runs after all plugin build methods run. This is required to allow the extract stage to be removed from the schedule after all the plugins have added their systems to the stage. We will also need the setup method for pipelined rendering to setup the render thread. See e3267965e1/crates/bevy_render/src/pipelined_rendering.rs (L57-L98)

## Changelog

- Separate SubApp Extract stage from running the sub app schedule.

## Migration Guide

### SubApp `runner` has conceptually been changed to an `extract` function.

The `runner` no longer is in charge of running the sub app schedule. It's only concern is now moving data between the main world and the sub app. The `sub_app.app.schedule` is now run for you after the provided function is called.

```rust

// before

fn main() {

let sub_app = App::empty();

sub_app.add_stage(MyStage, SystemStage::parallel());

App::new().add_sub_app(MySubApp, sub_app, move |main_world, sub_app| {

extract(app_world, render_app);

render_app.app.schedule.run();

});

}

// after

fn main() {

let sub_app = App::empty();

sub_app.add_stage(MyStage, SystemStage::parallel());

App::new().add_sub_app(MySubApp, sub_app, move |main_world, sub_app| {

extract(app_world, render_app);

// schedule is automatically called for you after extract is run

});

}

```

# Objective

- Storage buffers are useful and not currently supported by the `AsBindGroup` derive which means you need to expand the macro if you need a storage buffer

## Solution

- Add a new `#[storage]` attribute to the derive `AsBindGroup` macro.

- Support and optional `read_only` parameter that defaults to false when not present.

- Support visibility parameters like the texture and sampler attributes.

---

## Changelog

- Add a new `#[storage(index)]` attribute to the derive `AsBindGroup` macro.

Co-authored-by: IceSentry <IceSentry@users.noreply.github.com>

# Objective

- Avoid slower than necessary first frame after spawning many entities due to them not having `Aabb`s and so being marked visible

- Avoids unnecessarily large system and VRAM allocations as a consequence

## Solution

- I noticed when debugging the `many_cubes` stress test in Xcode that the `MeshUniform` binding was much larger than it needed to be. I realised that this was because initially, all mesh entities are marked as being visible because they don't have `Aabb`s because `calculate_bounds` is being run in `PostUpdate` and there are no system commands applications before executing the visibility check systems that need the `Aabb`s. The solution then is to run the `calculate_bounds` system just before the previous system commands are applied which is at the end of the `Update` stage.

Spiritual successor to #5205.

Actual successor to #6865.

# Objective

Currently, system params are defined using three traits: `SystemParam`, `ReadOnlySystemParam`, `SystemParamState`. The behavior for each param is specified by the `SystemParamState` trait, while `SystemParam` simply defers to the state.

Splitting the traits in this way makes it easier to implement within macros, but it increases the cognitive load. Worst of all, this approach requires each `MySystemParam` to have a public `MySystemParamState` type associated with it.

## Solution

* Merge the trait `SystemParamState` into `SystemParam`.

* Remove all trivial `SystemParam` state types.

* `OptionNonSendMutState<T>`: you will not be missed.

---

- [x] Fix/resolve the remaining test failure.

## Changelog

* Removed the trait `SystemParamState`, merging its functionality into `SystemParam`.

## Migration Guide

**Note**: this should replace the migration guide for #6865.

This is relative to Bevy 0.9, not main.

The traits `SystemParamState` and `SystemParamFetch` have been removed, and their functionality has been transferred to `SystemParam`.

```rust

// Before (0.9)

impl SystemParam for MyParam<'_, '_> {

type State = MyParamState;

}

unsafe impl SystemParamState for MyParamState {

fn init(world: &mut World, system_meta: &mut SystemMeta) -> Self { ... }

}

unsafe impl<'w, 's> SystemParamFetch<'w, 's> for MyParamState {

type Item = MyParam<'w, 's>;

fn get_param(&mut self, ...) -> Self::Item;

}

unsafe impl ReadOnlySystemParamFetch for MyParamState { }

// After (0.10)

unsafe impl SystemParam for MyParam<'_, '_> {

type State = MyParamState;

type Item<'w, 's> = MyParam<'w, 's>;

fn init_state(world: &mut World, system_meta: &mut SystemMeta) -> Self::State { ... }

fn get_param<'w, 's>(state: &mut Self::State, ...) -> Self::Item<'w, 's>;

}

unsafe impl ReadOnlySystemParam for MyParam<'_, '_> { }

```

The trait `ReadOnlySystemParamFetch` has been replaced with `ReadOnlySystemParam`.

```rust

// Before

unsafe impl ReadOnlySystemParamFetch for MyParamState {}

// After

unsafe impl ReadOnlySystemParam for MyParam<'_, '_> {}

```

# Objective

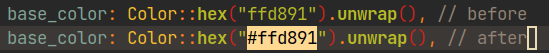

- When using `Color::hex` for the first time, I was confused by the fact that I can't specify colors using #, which is much more familiar.

- In the code editor (if there is support) there is a preview of the color, which is very convenient.

## Solution

- Allow you to enter colors like `#ff33f2` and use the `.strip_prefix` method to delete the `#` character.

# Objective

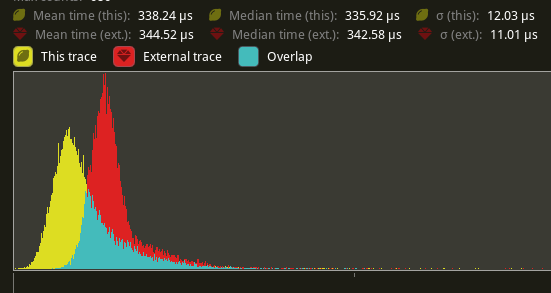

Speed up the render phase of rendering. Simplify the trait structure for render commands.

## Solution

- Merge `EntityPhaseItem` into `PhaseItem` (`EntityPhaseItem::entity` -> `PhaseItem::entity`)

- Merge `EntityRenderCommand` into `RenderCommand`.

- Add two associated types to `RenderCommand`: `RenderCommand::ViewWorldQuery` and `RenderCommand::WorldQuery`.

- Use the new associated types to construct two `QueryStates`s for `RenderCommandState`.

- Hoist any `SQuery<T>` fetches in `EntityRenderCommand`s into the aformentioned two queries. Batch fetch them all at once.

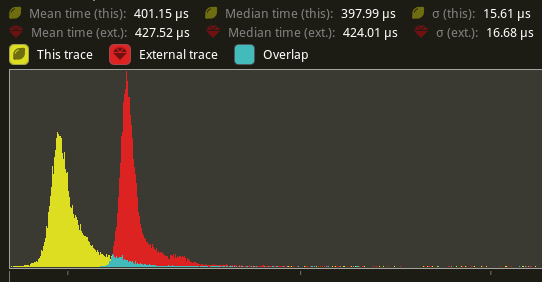

## Performance

`main_opaque_pass_3d` is slightly faster on `many_foxes` (427.52us -> 401.15us)

The shadow pass node is also slightly faster (344.52 -> 338.24us)

## Future Work

- Can we hoist the view level queries out of the core loop?

---

## Changelog

Added: `PhaseItem::entity`

Added: `RenderCommand::ViewWorldQuery` associated type.

Added: `RenderCommand::ItemorldQuery` associated type.

Added: `Draw<T>::prepare` optional trait function.

Removed: `EntityPhaseItem` trait

## Migration Guide

TODO

# Objective

- The recently merged PR #7013 does not allow multiple `RenderPhase`s to share the same `RenderPass`.

- Due to the introduced overhead we want to minimize the number of `RenderPass`es recorded during each frame.

## Solution

- Take a constructed `TrackedRenderPass` instead of a `RenderPassDiscriptor` as a parameter to the `RenderPhase::render` method.

---

## Changelog

To enable multiple `RenderPhases` to share the same `TrackedRenderPass`,

the `RenderPhase::render` signature has changed.

```rust

pub fn render<'w>(

&self,

render_pass: &mut TrackedRenderPass<'w>,

world: &'w World,

view: Entity)

```

Co-authored-by: Kurt Kühnert <51823519+kurtkuehnert@users.noreply.github.com>

# Objective

`TEXTURE_ADAPTER_SPECIFIC_FORMAT_FEATURES` was already included in `adapter.features()` on non-wasm target, and since it is the default value for `WgpuSettings.features`, the subsequent code will also combine into this feature:

b6066c30b6/crates/bevy_render/src/renderer/mod.rs (L155-L156)

# Objective

All `RenderPhases` follow the same render procedure.

The same code is duplicated multiple times across the codebase.

## Solution

I simply extracted this code into a method on the `RenderPhase`.

This avoids code duplication and makes setting up new `RenderPhases` easier.

---

## Changelog

### Changed

You can now set up the rendering code of a `RenderPhase` directly using the `RenderPhase::render` method, instead of implementing it manually in your render graph node.

# Objective

The documentation for camera priority is very confusing at the moment, it requires a bit of "double negative" kind of thinking.

# Solution

Flipping the wording on the documentation to reflect more common usecases like having an overlay camera and also renaming it to "order", since priority implies that it will override the other camera rather than have both run.

Consolidation of all the feedback about #6271 as well as the addition of an "unconditionally visible" mode.

# Objective

The current implementation of the `Visibility` struct simply wraps a boolean.. which seems like an odd pattern when rust has such nice enums that allow for more expression using pattern-matching.

Additionally as it stands Bevy only has two settings for visibility of an entity:

- "unconditionally hidden" `Visibility { is_visible: false }`,

- "inherit visibility from parent" `Visibility { is_visible: true }`

where a root level entity set to "inherit" is visible.

Note that given the behaviour, the current naming of the inner field is a little deceptive or unclear.

Using an enum for `Visibility` opens the door for adding an extra behaviour mode. This PR adds a new "unconditionally visible" mode, which causes an entity to be visible even if its Parent entity is hidden. There should not really be any performance cost to the addition of this new mode.

--

The recently added `toggle` method is removed in this PR, as its semantics could be confusing with 3 variants.

## Solution

Change the Visibility component into

```rust

enum Visibility {

Hidden, // unconditionally hidden

Visible, // unconditionally visible

Inherited, // inherit visibility from parent

}

```

---

## Changelog

### Changed

`Visibility` is now an enum

## Migration Guide

- evaluation of the `visibility.is_visible` field should now check for `visibility == Visibility::Inherited`.

- setting the `visibility.is_visible` field should now directly set the value: `*visibility = Visibility::Inherited`.

- usage of `Visibility::VISIBLE` or `Visibility::INVISIBLE` should now use `Visibility::Inherited` or `Visibility::Hidden` respectively.

- `ComputedVisibility::INVISIBLE` and `SpatialBundle::VISIBLE_IDENTITY` have been renamed to `ComputedVisibility::HIDDEN` and `SpatialBundle::INHERITED_IDENTITY` respectively.

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

- alternative to #2895

- as mentioned in #2535 the uuid based ids in the render module should be replaced with atomic-counted ones

## Solution

- instead of generating a random UUID for each render resource, this implementation increases an atomic counter

- this might be replaced by the ids of wgpu if they expose them directly in the future

- I have not benchmarked this solution yet, but this should be slightly faster in theory.

- Bevymark does not seem to be affected much by this change, which is to be expected.

- Nothing of our API has changed, other than that the IDs have lost their IMO rather insignificant documentation.

- Maybe the documentation could be added back into the macro, but this would complicate the code.

# Objective

The `WgpuSettings` resource is only used during plugin build. Move it into the `RenderPlugin` struct.

Changing these settings requires re-initializing the render context, which is currently not supported.

If it is supported in the future it should probably be more explicit than changing a field on a resource, maybe something similar to the `CreateWindow` event.

## Migration Guide

```rust

// Before (0.9)

App::new()

.insert_resource(WgpuSettings { .. })

.add_plugins(DefaultPlugins)

// After (0.10)

App::new()

.add_plugins(DefaultPlugins.set(RenderPlugin {

wgpu_settings: WgpuSettings { .. },

}))

```

Co-authored-by: devil-ira <justthecooldude@gmail.com>

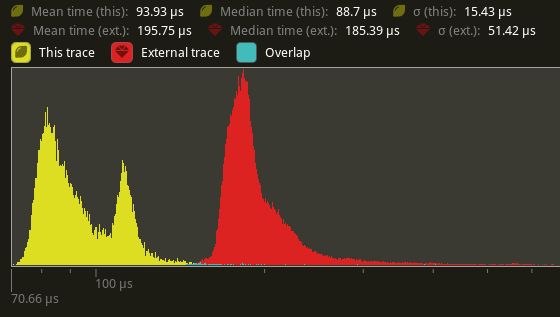

# Objective

Following #4402, extract systems run on the render world instead of the main world, and allow retained state operations on it's resources. We're currently extracting to `ExtractedJoints` and then copying it twice during Prepare. Once into `SkinnedMeshJoints` and again into the actual GPU buffer.

This makes #4902 obsolete.

## Solution

Cut out the middle copy and directly extract joints into `SkinnedMeshJoints` and remove `ExtractedJoints` entirely.

This also removes the per-frame allocation that is being made to send `ExtractedJoints` into the render world.

## Performance

On my local machine, this halves the time for `prepare_skinned _meshes` on `many_foxes` (195.75us -> 93.93us on average).

---

## Changelog

Added: `BufferVec::truncate`

Added: `BufferVec::extend`

Changed: `SkinnedMeshJoints::build` now takes a `&mut BufferVec` instead of a `&mut Vec` as a parameter.

Removed: `ExtractedJoints`.

## Migration Guide

`ExtractedJoints` has been removed. Read the bound bones from `SkinnedMeshJoints` instead.

# Objective

`AsBindGroup` can't be used as a trait object because of the constraint `Sized` and because of the associated function.

This is a problem for [`bevy_atmosphere`](https://github.com/JonahPlusPlus/bevy_atmosphere) because it needs to use a trait that depends on `AsBindGroup` as a trait object, for switching out different shaders at runtime. The current solution it employs is reimplementing the trait and derive macro into that trait, instead of constraining to `AsBindGroup`.

## Solution

Remove the `Sized` constraint from `AsBindGroup` and add the constraint `where Self: Sized` to the associated function `bind_group_layout`. Also change `PreparedBindGroup<T: AsBindGroup>` to `PreparedBindGroup<T>` and use it as `PreparedBindGroup<Self::Data>` instead of `PreparedBindGroup<Self>`.

This weakens the constraints, but increases the flexibility of `AsBindGroup`.

I'm not entirely sure why the `Sized` constraint was there, because it worked fine without it (maybe @cart wasn't aware of use cases for `AsBindGroup` as a trait object or this was just leftover from legacy code?).

---

## Changelog

- `AsBindGroup` can be used as a trait object.