# Objective

`add_system(system)` without any `.in_set` configuration should land in `CoreSet::Update`.

We check that the sets are empty, but for systems there is always the `SystemTypeset`.

## Solution

- instead of `is_empty()`, check that the only set it the `SystemTypeSet`

# Objective

The naming of the two plugin groups `DefaultPlugins` and `MinimalPlugins` suggests that one is a super-set of the other but this is not the case. Instead, the two plugin groups are intended for very different purposes.

Closes: https://github.com/bevyengine/bevy/issues/7173

## Solution

This merge request adds doc. comments that compensate for this and try save the user from confusion.

1. `DefaultPlugins` and `MinimalPlugins` intentions are described.

2. A strong emphasis on embracing `DefaultPlugins` as a whole but controlling what it contains with *Cargo* *features* is added – this is because the ordering in `DefaultPlugins` appears to be important so preventing users with "minimalist" foibles (That's Me!) from recreating the code seems worthwhile.

3. Notes are added explaining the confusing fact that `MinimalPlugins` contains `ScheduleRunnerPlugin` (which is very "important"-sounding) but `DefaultPlugins` does not.

# Objective

Help users understand how to write code that runs when the app is exiting.

See:

- #7067 (Partial resolution)

## Solution

Added documentation to `AppExit` class that mentions using the `Drop` trait for code that needs to run on program exit, as well as linking to the caveat about `App::run()` not being guaranteed to return.

# Objective

`bevy_ecs/system_param.rs` contains many seemingly-arbitrary struct definitions which serve as compile tests.

## Solution

Add a comment to each one, linking the issue or PR that motivated its addition.

# Objective

Shadows are broken on many_foxes on AMD GPUs. This seems to be due to rounding or floating point precision issues combined with the absolute unit of a plane that it's currently using.

Related: https://github.com/bevyengine/bevy/issues/6542

I'm not sure if we want to close that issue, as there's still the underlying issue of shadows breaking on overly large planes.

## Solution

Make the plane smaller.

# Objective

> ℹ️ **This is an adoption of #4081 by @james7132**

Fixes#4080.

Provide a way to pre-parse reflection paths so as to avoid having to parse at each call to `GetPath::path` (or similar method).

## Solution

Adds the `ParsedPath` struct (named `FieldPath` in the original PR) that parses and caches the sequence of accesses to a reflected element. This is functionally similar to the `GetPath` trait, but removes the need to parse an unchanged path more than once.

### Additional Changes

Included in this PR from the original is cleaner code as well as the introduction of a new pathing operation: field access by index. This allows struct and struct variant fields to be accessed in a more performant (albeit more fragile) way if needed. This operation is faster due to not having to perform string matching. As an example, if we wanted the third field on a struct, we'd write `#2`—where `#` denotes indexed access and `2` denotes the desired field index.

This PR also contains improved documentation for `GetPath` and friends, including renaming some of the methods to be more clear to the end-user with a reduced risk of getting them mixed up.

### Future Work

There are a few things that could be done as a separate PR (order doesn't matter— they could be followup PRs or done in parallel). These are:

- [x] ~~Add support for `Tuple`. Currently, we hint that they work but they do not.~~ See #7324

- [ ] Cleanup `ReflectPathError`. I think it would be nicer to give `ReflectPathError` two variants: `ReflectPathError::ParseError` and `ReflectPathError::AccessError`, with all current variants placed within one of those two. It's not obvious when one might expect to receive one type of error over the other, so we can help by explicitly categorizing them.

---

## Changelog

- Cleaned up `GetPath` logic

- Added `ParsedPath` for cached reflection paths

- Added new reflection path syntax: struct field access by index (example syntax: `foo#1`)

- Renamed methods on `GetPath`:

- `path` -> `reflect_path`

- `path_mut` -> `reflect_path_mut`

- `get_path` -> `path`

- `get_path_mut` -> `path_mut`

## Migration Guide

`GetPath` methods have been renamed according to the following:

- `path` -> `reflect_path`

- `path_mut` -> `reflect_path_mut`

- `get_path` -> `path`

- `get_path_mut` -> `path_mut`

Co-authored-by: Gino Valente <gino.valente.code@gmail.com>

# Objective

On wasm, bevy applications currently prevent any of the normal browser hotkeys from working normally (Ctrl+R, F12, F5, Ctrl+F5, tab, etc.).

Some of those events you may want to override, perhaps you can hold the tab key for showing in-game stats?

However, if you want to make a well-behaved game, you probably don't want to needlessly prevent that behavior unless you have a good reason.

Secondary motivation: Also, consider the workaround presented here to get audio working: https://developer.chrome.com/blog/web-audio-autoplay/#moving-forward ; It won't work (for keydown events) if we stop event propagation.

## Solution

- Winit has a field that allows it to not stop event propagation, expose it on the window settings to allow the user to choose the desired behavior. Default to `true` for backwards compatibility.

---

## Changelog

- Added `Window::prevent_default_event_handling` . This allows bevy apps to not override default browser behavior on hotkeys like F5, F12, Ctrl+R etc.

# Problemo

Some code in #5911 and #5454 does not compile with dynamic linking enabled.

The code is behind a feature gate to prevent dynamically linked builds from breaking, but it's not quite set up correctly.

## Solution

Forward the `dynamic` feature flag to the `bevy_diagnostic` crate and gate the code behind it.

Co-authored-by: devil-ira <justthecooldude@gmail.com>

# Objective

Fixes#5675. Replace `toml` with `toml_edit`

## Solution

Replace `toml` with `toml_edit`. This conveniently also removes the `serde` dependency from `bevy_macro_utils`, which may speed up cold compilation by removing the serde bottleneck from most of the macro crates in the engine.

# Objective

Speed up `prepare_uinodes`. The color `[f32; 4]` is being computed separately for every vertex in the UI, even though the color is the same for all 6 verticies.

## Solution

Avoid recomputing the color and cache it for all 6 verticies.

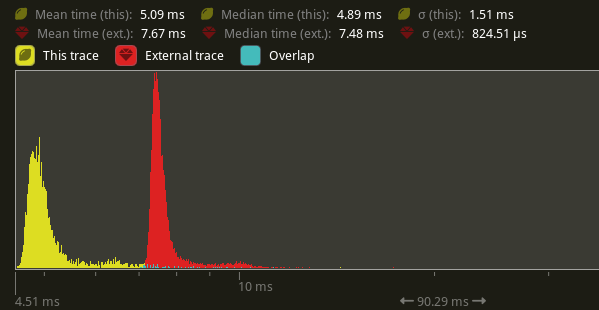

## Performance

On `many_buttons`, this shaved off 33% of the time in `prepare_uinodes` (7.67ms -> 5.09ms) on my local machine.

# Objective

`RenderContext`, the core abstraction for running the render graph, currently only supports recording one `CommandBuffer` across the entire render graph. This means the entire buffer must be recorded sequentially, usually via the render graph itself. This prevents parallelization and forces users to only encode their commands in the render graph.

## Solution

Allow `RenderContext` to store a `Vec<CommandBuffer>` that it progressively appends to. By default, the context will not have a command encoder, but will create one as soon as either `begin_tracked_render_pass` or the `command_encoder` accesor is first called. `RenderContext::add_command_buffer` allows users to interrupt the current command encoder, flush it to the vec, append a user-provided `CommandBuffer` and reset the command encoder to start a new buffer. Users or the render graph will call `RenderContext::finish` to retrieve the series of buffers for submitting to the queue.

This allows users to encode their own `CommandBuffer`s outside of the render graph, potentially in different threads, and store them in components or resources.

Ideally, in the future, the core pipeline passes can run in `RenderStage::Render` systems and end up saving the completed command buffers to either `Commands` or a field in `RenderPhase`.

## Alternatives

The alternative is to use to use wgpu's `RenderBundle`s, which can achieve similar results; however it's not universally available (no OpenGL, WebGL, and DX11).

---

## Changelog

Added: `RenderContext::new`

Added: `RenderContext::add_command_buffer`

Added: `RenderContext::finish`

Changed: `RenderContext::render_device` is now private. Use the accessor `RenderContext::render_device()` instead.

Changed: `RenderContext::command_encoder` is now private. Use the accessor `RenderContext::command_encoder()` instead.

Changed: `RenderContext` now supports adding external `CommandBuffer`s for inclusion into the render graphs. These buffers can be encoded outside of the render graph (i.e. in a system).

## Migration Guide

`RenderContext`'s fields are now private. Use the accessors on `RenderContext` instead, and construct it with `RenderContext::new`.

# Objective

- This PR adds support for blend modes to the PBR `StandardMaterial`.

<img width="1392" alt="Screenshot 2022-11-18 at 20 00 56" src="https://user-images.githubusercontent.com/418473/202820627-0636219a-a1e5-437a-b08b-b08c6856bf9c.png">

<img width="1392" alt="Screenshot 2022-11-18 at 20 01 01" src="https://user-images.githubusercontent.com/418473/202820615-c8d43301-9a57-49c4-bd21-4ae343c3e9ec.png">

## Solution

- The existing `AlphaMode` enum is extended, adding three more modes: `AlphaMode::Premultiplied`, `AlphaMode::Add` and `AlphaMode::Multiply`;

- All new modes are rendered in the existing `Transparent3d` phase;

- The existing mesh flags for alpha mode are reorganized for a more compact/efficient representation, and new values are added;

- `MeshPipelineKey::TRANSPARENT_MAIN_PASS` is refactored into `MeshPipelineKey::BLEND_BITS`.

- `AlphaMode::Opaque` and `AlphaMode::Mask(f32)` share a single opaque pipeline key: `MeshPipelineKey::BLEND_OPAQUE`;

- `Blend`, `Premultiplied` and `Add` share a single premultiplied alpha pipeline key, `MeshPipelineKey::BLEND_PREMULTIPLIED_ALPHA`. In the shader, color values are premultiplied accordingly (or not) depending on the blend mode to produce the three different results after PBR/tone mapping/dithering;

- `Multiply` uses its own independent pipeline key, `MeshPipelineKey::BLEND_MULTIPLY`;

- Example and documentation are provided.

---

## Changelog

### Added

- Added support for additive and multiplicative blend modes in the PBR `StandardMaterial`, via `AlphaMode::Add` and `AlphaMode::Multiply`;

- Added support for premultiplied alpha in the PBR `StandardMaterial`, via `AlphaMode::Premultiplied`;

Current info is not up to date as we are now using a train release model and frequently end up with PRs and issues in the milestone that are not resolved before release. As the release milestone is now mostly used for prioritizing what work gets done I updated this section to be about prioritizing PRs/issues instead of preparing releases.

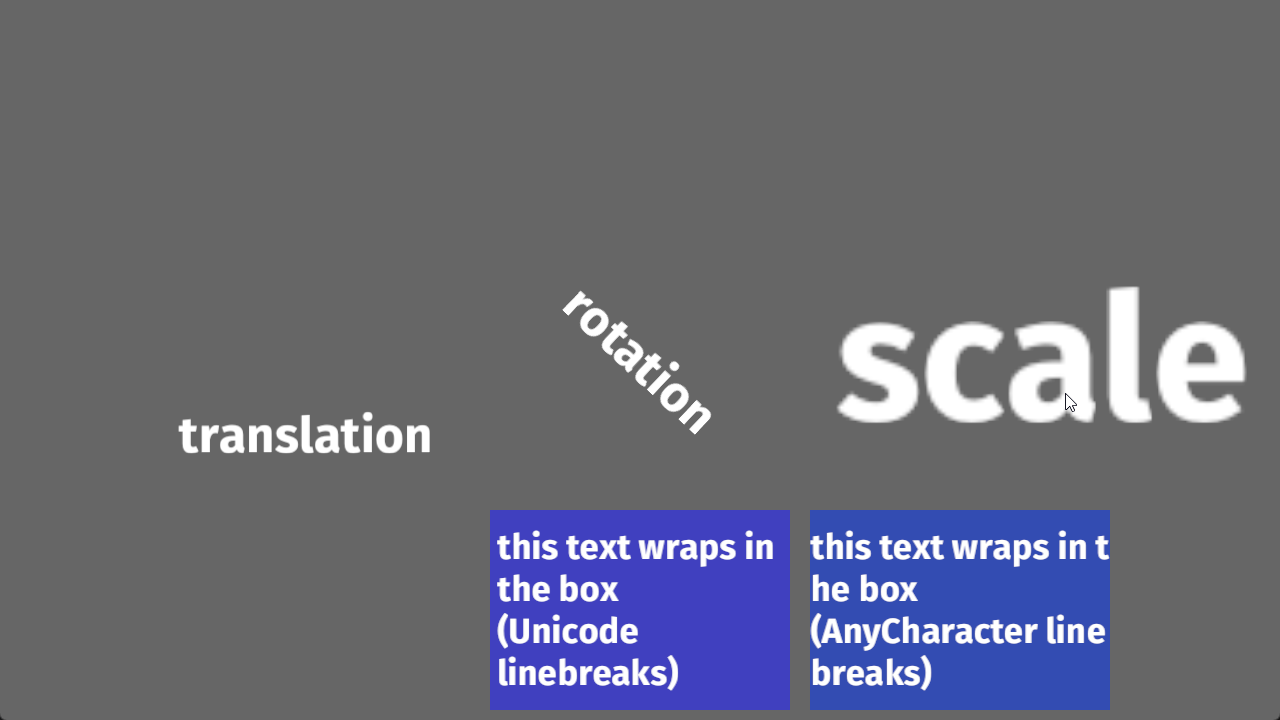

# Objective

Currently, Text always uses the default linebreaking behaviour in glyph_brush_layout `BuiltInLineBreaker::Unicode` which breaks lines at word boundaries. However, glyph_brush_layout also supports breaking lines at any character by setting the linebreaker to `BuiltInLineBreaker::AnyChar`. Having text wrap character-by-character instead of at word boundaries is desirable in some cases - consider that consoles/terminals usually wrap this way.

As a side note, the default Unicode linebreaker does not seem to handle emergency cases, where there is no word boundary on a line to break at. In that case, the text runs out of bounds. Issue #1867 shows an example of this.

## Solution

Basically just copies how TextAlignment is exposed, but for a new enum TextLineBreakBehaviour.

This PR exposes glyph_brush_layout's two simple linebreaking options (Unicode, AnyChar) to users of Text via the enum TextLineBreakBehaviour (which just translates those 2 aforementioned options), plus a method 'with_linebreak_behaviour' on Text and TextBundle.

## Changelog

Added `Text::with_linebreak_behaviour`

Added `TextBundle::with_linebreak_behaviour`

`TextPipeline::queue_text` and `GlyphBrush::compute_glyphs` now need a TextLineBreakBehaviour argument, in order to pass through the new field.

Modified the `text2d` example to show both linebreaking behaviours.

## Example

Here's what the modified example looks like

# Objective

- Fixes#7294

## Solution

- Do not trigger change detection when setting the cursor position from winit

When moving the cursor continuously, Winit sends events:

- CursorMoved(0)

- CursorMoved(1)

- => start of Bevy schedule execution

- CursorMoved(2)

- CursorMoved(3)

- <= End of Bevy schedule execution

if Bevy schedule runs after the event 1, events 2 and 3 would happen during the execution but would be read only on the next system run. During the execution, the system would detect a change on cursor position, and send back an order to winit to move it back to 1, so event 2 and 3 would be ignored. By bypassing change detection when setting the cursor from winit event, it doesn't trigger sending back that change to winit out of order.

# Objective

- The changes to the MeshPipeline done for the prepass broke the shader_instancing example. The issue is that the view_layout changes based on if MSAA is enabled or not, but the example hardcoded the view_layout.

## Solution

- Don't overwrite the bind_group_layout of the descriptor since the MeshPipeline already takes care of this in the specialize function.

Closes https://github.com/bevyengine/bevy/issues/7285

# Objective

- `Components::resource_id` doesn't exist. Like `Components::component_id` but for resources.

## Solution

- Created `Components::resource_id` and added some docs.

---

## Changelog

- Added `Components::resource_id`.

- Changed `World::init_resource` to return the generated `ComponentId`.

- Changed `World::init_non_send_resource` to return the generated `ComponentId`.

# Objective

- Fixes#7288

- Do not expose access directly to cursor position as it is the physical position, ignoring scale

## Solution

- Make cursor position private

- Expose getter/setter on the window to have access to the scale

# Objective

Fixes#6931

Continues #6954 by squashing `Msaa` to a flat enum

Helps out #7215

# Solution

```

pub enum Msaa {

Off = 1,

#[default]

Sample4 = 4,

}

```

# Changelog

- Modified

- `Msaa` is now enum

- Defaults to 4 samples

- Uses `.samples()` method to get the sample number as `u32`

# Migration Guide

```

let multi = Msaa { samples: 4 }

// is now

let multi = Msaa::Sample4

multi.samples

// is now

multi.samples()

```

Co-authored-by: Sjael <jakeobrien44@gmail.com>

After #6503, bevy_render uses the `send_blocking` method introduced in async-channel 1.7, but depended only on ^1.4.

I saw this after pulling main without running cargo update.

# Objective

- Fix minimum dependency version of async-channel

## Solution

- Bump async-channel version constraint to ^1.8, which is currently the latest version.

NOTE: Both bevy_ecs and bevy_tasks also depend on async-channel but they didn't use any newer features.

# Objective

Fixes#3184. Fixes#6640. Fixes#4798. Using `Query::par_for_each(_mut)` currently requires a `batch_size` parameter, which affects how it chunks up large archetypes and tables into smaller chunks to run in parallel. Tuning this value is difficult, as the performance characteristics entirely depends on the state of the `World` it's being run on. Typically, users will just use a flat constant and just tune it by hand until it performs well in some benchmarks. However, this is both error prone and risks overfitting the tuning on that benchmark.

This PR proposes a naive automatic batch-size computation based on the current state of the `World`.

## Background

`Query::par_for_each(_mut)` schedules a new Task for every archetype or table that it matches. Archetypes/tables larger than the batch size are chunked into smaller tasks. Assuming every entity matched by the query has an identical workload, this makes the worst case scenario involve using a batch size equal to the size of the largest matched archetype or table. Conversely, a batch size of `max {archetype, table} size / thread count * COUNT_PER_THREAD` is likely the sweetspot where the overhead of scheduling tasks is minimized, at least not without grouping small archetypes/tables together.

There is also likely a strict minimum batch size below which the overhead of scheduling these tasks is heavier than running the entire thing single-threaded.

## Solution

- [x] Remove the `batch_size` from `Query(State)::par_for_each` and friends.

- [x] Add a check to compute `batch_size = max {archeytpe/table} size / thread count * COUNT_PER_THREAD`

- [x] ~~Panic if thread count is 0.~~ Defer to `for_each` if the thread count is 1 or less.

- [x] Early return if there is no matched table/archetype.

- [x] Add override option for users have queries that strongly violate the initial assumption that all iterated entities have an equal workload.

---

## Changelog

Changed: `Query::par_for_each(_mut)` has been changed to `Query::par_iter(_mut)` and will now automatically try to produce a batch size for callers based on the current `World` state.

## Migration Guide

The `batch_size` parameter for `Query(State)::par_for_each(_mut)` has been removed. These calls will automatically compute a batch size for you. Remove these parameters from all calls to these functions.

Before:

```rust

fn parallel_system(query: Query<&MyComponent>) {

query.par_for_each(32, |comp| {

...

});

}

```

After:

```rust

fn parallel_system(query: Query<&MyComponent>) {

query.par_iter().for_each(|comp| {

...

});

}

```

Co-authored-by: Arnav Choubey <56453634+x-52@users.noreply.github.com>

Co-authored-by: Robert Swain <robert.swain@gmail.com>

Co-authored-by: François <mockersf@gmail.com>

Co-authored-by: Corey Farwell <coreyf@rwell.org>

Co-authored-by: Aevyrie <aevyrie@gmail.com>

## Problem

`extract_uinodes` checks if an image is loaded for nodes without images

## Solution

Move the image loading skip check so that it is only performed for nodes with a `UiImage` component.

# Objective

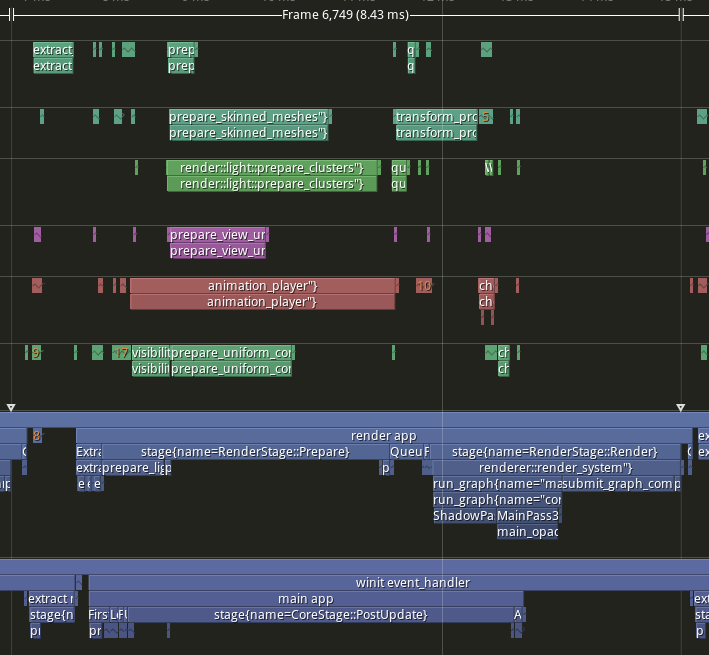

- Implement pipelined rendering

- Fixes#5082

- Fixes#4718

## User Facing Description

Bevy now implements piplelined rendering! Pipelined rendering allows the app logic and rendering logic to run on different threads leading to large gains in performance.

*tracy capture of many_foxes example*

To use pipelined rendering, you just need to add the `PipelinedRenderingPlugin`. If you're using `DefaultPlugins` then it will automatically be added for you on all platforms except wasm. Bevy does not currently support multithreading on wasm which is needed for this feature to work. If you aren't using `DefaultPlugins` you can add the plugin manually.

```rust

use bevy::prelude::*;

use bevy::render::pipelined_rendering::PipelinedRenderingPlugin;

fn main() {

App::new()

// whatever other plugins you need

.add_plugin(RenderPlugin)

// needs to be added after RenderPlugin

.add_plugin(PipelinedRenderingPlugin)

.run();

}

```

If for some reason pipelined rendering needs to be removed. You can also disable the plugin the normal way.

```rust

use bevy::prelude::*;

use bevy::render::pipelined_rendering::PipelinedRenderingPlugin;

fn main() {

App::new.add_plugins(DefaultPlugins.build().disable::<PipelinedRenderingPlugin>());

}

```

### A setup function was added to plugins

A optional plugin lifecycle function was added to the `Plugin trait`. This function is called after all plugins have been built, but before the app runner is called. This allows for some final setup to be done. In the case of pipelined rendering, the function removes the sub app from the main app and sends it to the render thread.

```rust

struct MyPlugin;

impl Plugin for MyPlugin {

fn build(&self, app: &mut App) {

}

// optional function

fn setup(&self, app: &mut App) {

// do some final setup before runner is called

}

}

```

### A Stage for Frame Pacing

In the `RenderExtractApp` there is a stage labelled `BeforeIoAfterRenderStart` that systems can be added to. The specific use case for this stage is for a frame pacing system that can delay the start of main app processing in render bound apps to reduce input latency i.e. "frame pacing". This is not currently built into bevy, but exists as `bevy`

```text

|-------------------------------------------------------------------|

| | BeforeIoAfterRenderStart | winit events | main schedule |

| extract |---------------------------------------------------------|

| | extract commands | rendering schedule |

|-------------------------------------------------------------------|

```

### Small API additions

* `Schedule::remove_stage`

* `App::insert_sub_app`

* `App::remove_sub_app`

* `TaskPool::scope_with_executor`

## Problems and Solutions

### Moving render app to another thread

Most of the hard bits for this were done with the render redo. This PR just sends the render app back and forth through channels which seems to work ok. I originally experimented with using a scope to run the render task. It was cuter, but that approach didn't allow render to start before i/o processing. So I switched to using channels. There is much complexity in the coordination that needs to be done, but it's worth it. By moving rendering during i/o processing the frame times should be much more consistent in render bound apps. See https://github.com/bevyengine/bevy/issues/4691.

### Unsoundness with Sending World with NonSend resources

Dropping !Send things on threads other than the thread they were spawned on is considered unsound. The render world doesn't have any nonsend resources. So if we tell the users to "pretty please don't spawn nonsend resource on the render world", we can avoid this problem.

More seriously there is this https://github.com/bevyengine/bevy/pull/6534 pr, which patches the unsoundness by aborting the app if a nonsend resource is dropped on the wrong thread. ~~That PR should probably be merged before this one.~~ For a longer term solution we have this discussion going https://github.com/bevyengine/bevy/discussions/6552.

### NonSend Systems in render world

The render world doesn't have any !Send resources, but it does have a non send system. While Window is Send, winit does have some API's that can only be accessed on the main thread. `prepare_windows` in the render schedule thus needs to be scheduled on the main thread. Currently we run nonsend systems by running them on the thread the TaskPool::scope runs on. When we move render to another thread this no longer works.

To fix this, a new `scope_with_executor` method was added that takes a optional `TheadExecutor` that can only be ticked on the thread it was initialized on. The render world then holds a `MainThreadExecutor` resource which can be passed to the scope in the parallel executor that it uses to spawn it's non send systems on.

### Scopes executors between render and main should not share tasks

Since the render world and the app world share the `ComputeTaskPool`. Because `scope` has executors for the ComputeTaskPool a system from the main world could run on the render thread or a render system could run on the main thread. This can cause performance problems because it can delay a stage from finishing. See https://github.com/bevyengine/bevy/pull/6503#issuecomment-1309791442 for more details.

To avoid this problem, `TaskPool::scope` has been changed to not tick the ComputeTaskPool when it's used by the parallel executor. In the future when we move closer to the 1 thread to 1 logical core model we may want to overprovide threads, because the render and main app threads don't do much when executing the schedule.

## Performance

My machine is Windows 11, AMD Ryzen 5600x, RX 6600

### Examples

#### This PR with pipelining vs Main

> Note that these were run on an older version of main and the performance profile has probably changed due to optimizations

Seeing a perf gain from 29% on many lights to 7% on many sprites.

<html>

<body>

<!--StartFragment--><google-sheets-html-origin>

| percent | | | Diff | | | Main | | | PR | |

-- | -- | -- | -- | -- | -- | -- | -- | -- | -- | -- | -- | --

tracy frame time | mean | median | sigma | mean | median | sigma | mean | median | sigma | mean | median | sigma

many foxes | 27.01% | 27.34% | -47.09% | 1.58 | 1.55 | -1.78 | 5.85 | 5.67 | 3.78 | 4.27 | 4.12 | 5.56

many lights | 29.35% | 29.94% | -10.84% | 3.02 | 3.03 | -0.57 | 10.29 | 10.12 | 5.26 | 7.27 | 7.09 | 5.83

many animated sprites | 13.97% | 15.69% | 14.20% | 3.79 | 4.17 | 1.41 | 27.12 | 26.57 | 9.93 | 23.33 | 22.4 | 8.52

3d scene | 25.79% | 26.78% | 7.46% | 0.49 | 0.49 | 0.15 | 1.9 | 1.83 | 2.01 | 1.41 | 1.34 | 1.86

many cubes | 11.97% | 11.28% | 14.51% | 1.93 | 1.78 | 1.31 | 16.13 | 15.78 | 9.03 | 14.2 | 14 | 7.72

many sprites | 7.14% | 9.42% | -85.42% | 1.72 | 2.23 | -6.15 | 24.09 | 23.68 | 7.2 | 22.37 | 21.45 | 13.35

<!--EndFragment-->

</body>

</html>

#### This PR with pipelining disabled vs Main

Mostly regressions here. I don't think this should be a problem as users that are disabling pipelined rendering are probably running single threaded and not using the parallel executor. The regression is probably mostly due to the switch to use `async_executor::run` instead of `try_tick` and also having one less thread to run systems on. I'll do a writeup on why switching to `run` causes regressions, so we can try to eventually fix it. Using try_tick causes issues when pipeline rendering is enable as seen [here](https://github.com/bevyengine/bevy/pull/6503#issuecomment-1380803518)

<html>

<body>

<!--StartFragment--><google-sheets-html-origin>

| percent | | | Diff | | | Main | | | PR no pipelining | |

-- | -- | -- | -- | -- | -- | -- | -- | -- | -- | -- | -- | --

tracy frame time | mean | median | sigma | mean | median | sigma | mean | median | sigma | mean | median | sigma

many foxes | -3.72% | -4.42% | -1.07% | -0.21 | -0.24 | -0.04 | 5.64 | 5.43 | 3.74 | 5.85 | 5.67 | 3.78

many lights | 0.29% | -0.30% | 4.75% | 0.03 | -0.03 | 0.25 | 10.29 | 10.12 | 5.26 | 10.26 | 10.15 | 5.01

many animated sprites | 0.22% | 1.81% | -2.72% | 0.06 | 0.48 | -0.27 | 27.12 | 26.57 | 9.93 | 27.06 | 26.09 | 10.2

3d scene | -15.79% | -14.75% | -31.34% | -0.3 | -0.27 | -0.63 | 1.9 | 1.83 | 2.01 | 2.2 | 2.1 | 2.64

many cubes | -2.85% | -3.30% | 0.00% | -0.46 | -0.52 | 0 | 16.13 | 15.78 | 9.03 | 16.59 | 16.3 | 9.03

many sprites | 2.49% | 2.41% | 0.69% | 0.6 | 0.57 | 0.05 | 24.09 | 23.68 | 7.2 | 23.49 | 23.11 | 7.15

<!--EndFragment-->

</body>

</html>

### Benchmarks

Mostly the same except empty_systems has got a touch slower. The maybe_pipelining+1 column has the compute task pool with an extra thread over default added. This is because pipelining loses one thread over main to execute systems on, since the main thread no longer runs normal systems.

<details>

<summary>Click Me</summary>

```text

group main maybe-pipelining+1

----- ------------------------- ------------------

busy_systems/01x_entities_03_systems 1.07 30.7±1.32µs ? ?/sec 1.00 28.6±1.35µs ? ?/sec

busy_systems/01x_entities_06_systems 1.10 52.1±1.10µs ? ?/sec 1.00 47.2±1.08µs ? ?/sec

busy_systems/01x_entities_09_systems 1.00 74.6±1.36µs ? ?/sec 1.00 75.0±1.93µs ? ?/sec

busy_systems/01x_entities_12_systems 1.03 100.6±6.68µs ? ?/sec 1.00 98.0±1.46µs ? ?/sec

busy_systems/01x_entities_15_systems 1.11 128.5±3.53µs ? ?/sec 1.00 115.5±1.02µs ? ?/sec

busy_systems/02x_entities_03_systems 1.16 50.4±2.56µs ? ?/sec 1.00 43.5±3.00µs ? ?/sec

busy_systems/02x_entities_06_systems 1.00 87.1±1.27µs ? ?/sec 1.05 91.5±7.15µs ? ?/sec

busy_systems/02x_entities_09_systems 1.04 139.9±6.37µs ? ?/sec 1.00 134.0±1.06µs ? ?/sec

busy_systems/02x_entities_12_systems 1.05 179.2±3.47µs ? ?/sec 1.00 170.1±3.17µs ? ?/sec

busy_systems/02x_entities_15_systems 1.01 219.6±3.75µs ? ?/sec 1.00 218.1±2.55µs ? ?/sec

busy_systems/03x_entities_03_systems 1.10 70.6±2.33µs ? ?/sec 1.00 64.3±0.69µs ? ?/sec

busy_systems/03x_entities_06_systems 1.02 130.2±3.11µs ? ?/sec 1.00 128.0±1.34µs ? ?/sec

busy_systems/03x_entities_09_systems 1.00 195.0±10.11µs ? ?/sec 1.00 194.8±1.41µs ? ?/sec

busy_systems/03x_entities_12_systems 1.01 261.7±4.05µs ? ?/sec 1.00 259.8±4.11µs ? ?/sec

busy_systems/03x_entities_15_systems 1.00 318.0±3.04µs ? ?/sec 1.06 338.3±20.25µs ? ?/sec

busy_systems/04x_entities_03_systems 1.00 82.9±0.63µs ? ?/sec 1.02 84.3±0.63µs ? ?/sec

busy_systems/04x_entities_06_systems 1.01 181.7±3.65µs ? ?/sec 1.00 179.8±1.76µs ? ?/sec

busy_systems/04x_entities_09_systems 1.04 265.0±4.68µs ? ?/sec 1.00 255.3±1.98µs ? ?/sec

busy_systems/04x_entities_12_systems 1.00 335.9±3.00µs ? ?/sec 1.05 352.6±15.84µs ? ?/sec

busy_systems/04x_entities_15_systems 1.00 418.6±10.26µs ? ?/sec 1.08 450.2±39.58µs ? ?/sec

busy_systems/05x_entities_03_systems 1.07 114.3±0.95µs ? ?/sec 1.00 106.9±1.52µs ? ?/sec

busy_systems/05x_entities_06_systems 1.08 229.8±2.90µs ? ?/sec 1.00 212.3±4.18µs ? ?/sec

busy_systems/05x_entities_09_systems 1.03 329.3±1.99µs ? ?/sec 1.00 319.2±2.43µs ? ?/sec

busy_systems/05x_entities_12_systems 1.06 454.7±6.77µs ? ?/sec 1.00 430.1±3.58µs ? ?/sec

busy_systems/05x_entities_15_systems 1.03 554.6±6.15µs ? ?/sec 1.00 538.4±23.87µs ? ?/sec

contrived/01x_entities_03_systems 1.00 14.0±0.15µs ? ?/sec 1.08 15.1±0.21µs ? ?/sec

contrived/01x_entities_06_systems 1.04 28.5±0.37µs ? ?/sec 1.00 27.4±0.44µs ? ?/sec

contrived/01x_entities_09_systems 1.00 41.5±4.38µs ? ?/sec 1.02 42.2±2.24µs ? ?/sec

contrived/01x_entities_12_systems 1.06 55.9±1.49µs ? ?/sec 1.00 52.6±1.36µs ? ?/sec

contrived/01x_entities_15_systems 1.02 68.0±2.00µs ? ?/sec 1.00 66.5±0.78µs ? ?/sec

contrived/02x_entities_03_systems 1.03 25.2±0.38µs ? ?/sec 1.00 24.6±0.52µs ? ?/sec

contrived/02x_entities_06_systems 1.00 46.3±0.49µs ? ?/sec 1.04 48.1±4.13µs ? ?/sec

contrived/02x_entities_09_systems 1.02 70.4±0.99µs ? ?/sec 1.00 68.8±1.04µs ? ?/sec

contrived/02x_entities_12_systems 1.06 96.8±1.49µs ? ?/sec 1.00 91.5±0.93µs ? ?/sec

contrived/02x_entities_15_systems 1.02 116.2±0.95µs ? ?/sec 1.00 114.2±1.42µs ? ?/sec

contrived/03x_entities_03_systems 1.00 33.2±0.38µs ? ?/sec 1.01 33.6±0.45µs ? ?/sec

contrived/03x_entities_06_systems 1.00 62.4±0.73µs ? ?/sec 1.01 63.3±1.05µs ? ?/sec

contrived/03x_entities_09_systems 1.02 96.4±0.85µs ? ?/sec 1.00 94.8±3.02µs ? ?/sec

contrived/03x_entities_12_systems 1.01 126.3±4.67µs ? ?/sec 1.00 125.6±2.27µs ? ?/sec

contrived/03x_entities_15_systems 1.03 160.2±9.37µs ? ?/sec 1.00 156.0±1.53µs ? ?/sec

contrived/04x_entities_03_systems 1.02 41.4±3.39µs ? ?/sec 1.00 40.5±0.52µs ? ?/sec

contrived/04x_entities_06_systems 1.00 78.9±1.61µs ? ?/sec 1.02 80.3±1.06µs ? ?/sec

contrived/04x_entities_09_systems 1.02 121.8±3.97µs ? ?/sec 1.00 119.2±1.46µs ? ?/sec

contrived/04x_entities_12_systems 1.00 157.8±1.48µs ? ?/sec 1.01 160.1±1.72µs ? ?/sec

contrived/04x_entities_15_systems 1.00 197.9±1.47µs ? ?/sec 1.08 214.2±34.61µs ? ?/sec

contrived/05x_entities_03_systems 1.00 49.1±0.33µs ? ?/sec 1.01 49.7±0.75µs ? ?/sec

contrived/05x_entities_06_systems 1.00 95.0±0.93µs ? ?/sec 1.00 94.6±0.94µs ? ?/sec

contrived/05x_entities_09_systems 1.01 143.2±1.68µs ? ?/sec 1.00 142.2±2.00µs ? ?/sec

contrived/05x_entities_12_systems 1.00 191.8±2.03µs ? ?/sec 1.01 192.7±7.88µs ? ?/sec

contrived/05x_entities_15_systems 1.02 239.7±3.71µs ? ?/sec 1.00 235.8±4.11µs ? ?/sec

empty_systems/000_systems 1.01 47.8±0.67ns ? ?/sec 1.00 47.5±2.02ns ? ?/sec

empty_systems/001_systems 1.00 1743.2±126.14ns ? ?/sec 1.01 1761.1±70.10ns ? ?/sec

empty_systems/002_systems 1.01 2.2±0.04µs ? ?/sec 1.00 2.2±0.02µs ? ?/sec

empty_systems/003_systems 1.02 2.7±0.09µs ? ?/sec 1.00 2.7±0.16µs ? ?/sec

empty_systems/004_systems 1.00 3.1±0.11µs ? ?/sec 1.00 3.1±0.24µs ? ?/sec

empty_systems/005_systems 1.00 3.5±0.05µs ? ?/sec 1.11 3.9±0.70µs ? ?/sec

empty_systems/010_systems 1.00 5.5±0.12µs ? ?/sec 1.03 5.7±0.17µs ? ?/sec

empty_systems/015_systems 1.00 7.9±0.19µs ? ?/sec 1.06 8.4±0.16µs ? ?/sec

empty_systems/020_systems 1.00 10.4±1.25µs ? ?/sec 1.02 10.6±0.18µs ? ?/sec

empty_systems/025_systems 1.00 12.4±0.39µs ? ?/sec 1.14 14.1±1.07µs ? ?/sec

empty_systems/030_systems 1.00 15.1±0.39µs ? ?/sec 1.05 15.8±0.62µs ? ?/sec

empty_systems/035_systems 1.00 16.9±0.47µs ? ?/sec 1.07 18.0±0.37µs ? ?/sec

empty_systems/040_systems 1.00 19.3±0.41µs ? ?/sec 1.05 20.3±0.39µs ? ?/sec

empty_systems/045_systems 1.00 22.4±1.67µs ? ?/sec 1.02 22.9±0.51µs ? ?/sec

empty_systems/050_systems 1.00 24.4±1.67µs ? ?/sec 1.01 24.7±0.40µs ? ?/sec

empty_systems/055_systems 1.05 28.6±5.27µs ? ?/sec 1.00 27.2±0.70µs ? ?/sec

empty_systems/060_systems 1.02 29.9±1.64µs ? ?/sec 1.00 29.3±0.66µs ? ?/sec

empty_systems/065_systems 1.02 32.7±3.15µs ? ?/sec 1.00 32.1±0.98µs ? ?/sec

empty_systems/070_systems 1.00 33.0±1.42µs ? ?/sec 1.03 34.1±1.44µs ? ?/sec

empty_systems/075_systems 1.00 34.8±0.89µs ? ?/sec 1.04 36.2±0.70µs ? ?/sec

empty_systems/080_systems 1.00 37.0±1.82µs ? ?/sec 1.05 38.7±1.37µs ? ?/sec

empty_systems/085_systems 1.00 38.7±0.76µs ? ?/sec 1.05 40.8±0.83µs ? ?/sec

empty_systems/090_systems 1.00 41.5±1.09µs ? ?/sec 1.04 43.2±0.82µs ? ?/sec

empty_systems/095_systems 1.00 43.6±1.10µs ? ?/sec 1.04 45.2±0.99µs ? ?/sec

empty_systems/100_systems 1.00 46.7±2.27µs ? ?/sec 1.03 48.1±1.25µs ? ?/sec

```

</details>

## Migration Guide

### App `runner` and SubApp `extract` functions are now required to be Send

This was changed to enable pipelined rendering. If this breaks your use case please report it as these new bounds might be able to be relaxed.

## ToDo

* [x] redo benchmarking

* [x] reinvestigate the perf of the try_tick -> run change for task pool scope

# Objective

- Add a configurable prepass

- A depth prepass is useful for various shader effects and to reduce overdraw. It can be expansive depending on the scene so it's important to be able to disable it if you don't need any effects that uses it or don't suffer from excessive overdraw.

- The goal is to eventually use it for things like TAA, Ambient Occlusion, SSR and various other techniques that can benefit from having a prepass.

## Solution

The prepass node is inserted before the main pass. It runs for each `Camera3d` with a prepass component (`DepthPrepass`, `NormalPrepass`). The presence of one of those components is used to determine which textures are generated in the prepass. When any prepass is enabled, the depth buffer generated will be used by the main pass to reduce overdraw.

The prepass runs for each `Material` created with the `MaterialPlugin::prepass_enabled` option set to `true`. You can overload the shader used by the prepass by using `Material::prepass_vertex_shader()` and/or `Material::prepass_fragment_shader()`. It will also use the `Material::specialize()` for more advanced use cases. It is enabled by default on all materials.

The prepass works on opaque materials and materials using an alpha mask. Transparent materials are ignored.

The `StandardMaterial` overloads the prepass fragment shader to support alpha mask and normal maps.

---

## Changelog

- Add a new `PrepassNode` that runs before the main pass

- Add a `PrepassPlugin` to extract/prepare/queue the necessary data

- Add a `DepthPrepass` and `NormalPrepass` component to control which textures will be created by the prepass and available in later passes.

- Add a new `prepass_enabled` flag to the `MaterialPlugin` that will control if a material uses the prepass or not.

- Add a new `prepass_enabled` flag to the `PbrPlugin` to control if the StandardMaterial uses the prepass. Currently defaults to false.

- Add `Material::prepass_vertex_shader()` and `Material::prepass_fragment_shader()` to control the prepass from the `Material`

## Notes

In bevy's sample 3d scene, the performance is actually worse when enabling the prepass, but on more complex scenes the performance is generally better. I would like more testing on this, but @DGriffin91 has reported a very noticeable improvements in some scenes.

The prepass is also used by @JMS55 for TAA and GTAO

discord thread: <https://discord.com/channels/691052431525675048/1011624228627419187>

This PR was built on top of the work of multiple people

Co-Authored-By: @superdump

Co-Authored-By: @robtfm

Co-Authored-By: @JMS55

Co-authored-by: Charles <IceSentry@users.noreply.github.com>

Co-authored-by: JMS55 <47158642+JMS55@users.noreply.github.com>

# Objective

- Safety comments for the `CommandQueue` type are quite sparse and very imprecise. Sometimes, they are right for the wrong reasons or use circular reasoning.

## Solution

- Document previously-implicit safety invariants.

- Rewrite safety comments to actually reflect the specific invariants of each operation.

- Use `OwningPtr` instead of raw pointers, to encode an invariant in the type system instead of via comments.

- Use typed pointer methods when possible to increase reliability.

---

## Changelog

+ Added the function `OwningPtr::read_unaligned`.

# Objective

Fix https://github.com/bevyengine/bevy/issues/4530

- Make it easier to open/close/modify windows by setting them up as `Entity`s with a `Window` component.

- Make multiple windows very simple to set up. (just add a `Window` component to an entity and it should open)

## Solution

- Move all properties of window descriptor to ~components~ a component.

- Replace `WindowId` with `Entity`.

- ~Use change detection for components to update backend rather than events/commands. (The `CursorMoved`/`WindowResized`/... events are kept for user convenience.~

Check each field individually to see what we need to update, events are still kept for user convenience.

---

## Changelog

- `WindowDescriptor` renamed to `Window`.

- Width/height consolidated into a `WindowResolution` component.

- Requesting maximization/minimization is done on the [`Window::state`] field.

- `WindowId` is now `Entity`.

## Migration Guide

- Replace `WindowDescriptor` with `Window`.

- Change `width` and `height` fields in a `WindowResolution`, either by doing

```rust

WindowResolution::new(width, height) // Explicitly

// or using From<_> for tuples for convenience

(1920., 1080.).into()

```

- Replace any `WindowCommand` code to just modify the `Window`'s fields directly and creating/closing windows is now by spawning/despawning an entity with a `Window` component like so:

```rust

let window = commands.spawn(Window { ... }).id(); // open window

commands.entity(window).despawn(); // close window

```

## Unresolved

- ~How do we tell when a window is minimized by a user?~

~Currently using the `Resize(0, 0)` as an indicator of minimization.~

No longer attempting to tell given how finnicky this was across platforms, now the user can only request that a window be maximized/minimized.

## Future work

- Move `exit_on_close` functionality out from windowing and into app(?)

- https://github.com/bevyengine/bevy/issues/5621

- https://github.com/bevyengine/bevy/issues/7099

- https://github.com/bevyengine/bevy/issues/7098

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

See:

- https://github.com/bevyengine/bevy/issues/7067#issuecomment-1381982285

- (This does not fully close that issue in my opinion.)

- https://discord.com/channels/691052431525675048/1063454009769340989

## Solution

This merge request adds documentation:

1. Alert users to the fact that `App::run()` might never return and code placed after it might never be executed.

2. Makes `winit::WinitSettings::return_from_run` discoverable.

3. Better explains why `winit::WinitSettings::return_from_run` is discouraged and better links to up-stream docs. on that topic.

4. Adds notes to the `app/return_after_run.rs` example which otherwise promotes a feature that carries caveats.

Furthermore, w.r.t `winit::WinitSettings::return_from_run`:

- Broken links to `winit` docs are fixed.

- Links now point to BOTH `EventLoop::run()` and `EventLoopExtRunReturn::run_return()` which are the salient up-stream pages and make more sense, taken together.

- Collateral damage: "Supported platforms" heading; disambiguation of "run" → `App::run()`; links.

## Future Work

I deliberately structured the "`run()` might not return" section under `App::run()` to allow for alternative patterns (e.g. `AppExit` event, `WindowClosed` event) to be listed or mentioned, beneath it, in the future.

# Objective

- Fixes#7260

## Solution

- #6649 used `init_non_send_resource` for `AudioOutput`, but this is before #6436 was merged.

- Use `init_resource` instead.

# Objective

Repeated calls to `init_non_send_resource` currently overwrite the old value because the wrong storage is being checked.

## Solution

Use the correct storage. Add some tests.

## Notes

Without the fix, the new test fails with

```

thread 'world::tests::init_non_send_resource_does_not_overwrite' panicked at 'assertion failed: `(left == right)`

left: `1`,

right: `0`', crates/bevy_ecs/src/world/mod.rs:2267:9

note: run with `RUST_BACKTRACE=1` environment variable to display a backtrace

test world::tests::init_non_send_resource_does_not_overwrite ... FAILED

```

This was introduced by #7174 and it seems like a fairly straightforward oopsie.

# Objective

I was reading through the bevy_ecs code, trying to understand how everything works.

I was getting a bit confused when reading the doc comment for the `new_archetype` function; it looks like it doesn't create a new archetype but instead updates some internal state in the SystemParam to facility QueryIteration.

(I still couldn't find where a new archetype was actually created)

## Solution

- Adding a doc comment with a more correct explanation.

If it's deemed correct, I can also update the doc-comment for the other `new_archetype` calls

# Objective

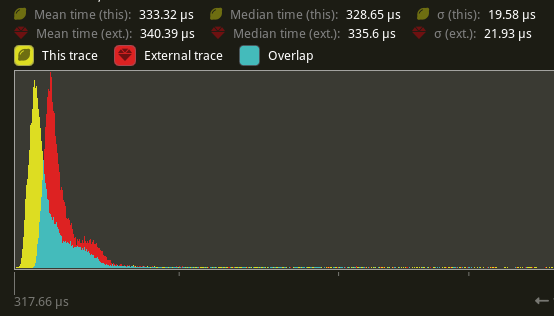

Speed up the render phase of rendering. An extension of #6885.

`SystemState::get` increments the `World`'s change tick atomically every time it's called. This is notably more expensive than a unsynchronized increment, even without contention. It also updates the archetypes, even when there has been nothing to update when it's called repeatedly.

## Solution

Piggyback off of #6885. Split `SystemState::validate_world_and_update_archetypes` into `SystemState::validate_world` and `SystemState::update_archetypes`, and make the later `pub`. Then create safe variants of `SystemState::get_unchecked_manual` that still validate the `World` but do not update archetypes and do not increment the change tick using `World::read_change_tick` and `World::change_tick`. Update `RenderCommandState` to call `SystemState::update_archetypes` in `Draw::prepare` and `SystemState::get_manual` in `Draw::draw`.

## Performance

There's a slight perf benefit (~2%) for `main_opaque_pass_3d` on `many_foxes` (340.39 us -> 333.32 us)

## Alternatives

We can change `SystemState::get` to not increment the `World`'s change tick. Though this would still put updating the archetypes and an atomic read on the hot-path.

---

## Changelog

Added: `SystemState::get_manual`

Added: `SystemState::get_manual_mut`

Added: `SystemState::update_archetypes`

# Objective

Remove the `VerticalAlign` enum.

Text's alignment field should only affect the text's internal text alignment, not its position. The only way to control a `TextBundle`'s position and bounds should be through the manipulation of the constraints in the `Style` components of the nodes in the Bevy UI's layout tree.

`Text2dBundle` should have a separate `Anchor` component that sets its position relative to its transform.

Related issues: #676, #1490, #5502, #5513, #5834, #6717, #6724, #6741, #6748

## Changelog

* Changed `TextAlignment` into an enum with `Left`, `Center`, and `Right` variants.

* Removed the `HorizontalAlign` and `VerticalAlign` types.

* Added an `Anchor` component to `Text2dBundle`

* Added `Component` derive to `Anchor`

* Use `f32::INFINITY` instead of `f32::MAX` to represent unbounded text in Text2dBounds

## Migration Guide

The `alignment` field of `Text` now only affects the text's internal alignment.

### Change `TextAlignment` to TextAlignment` which is now an enum. Replace:

* `TextAlignment::TOP_LEFT`, `TextAlignment::CENTER_LEFT`, `TextAlignment::BOTTOM_LEFT` with `TextAlignment::Left`

* `TextAlignment::TOP_CENTER`, `TextAlignment::CENTER_LEFT`, `TextAlignment::BOTTOM_CENTER` with `TextAlignment::Center`

* `TextAlignment::TOP_RIGHT`, `TextAlignment::CENTER_RIGHT`, `TextAlignment::BOTTOM_RIGHT` with `TextAlignment::Right`

### Changes for `Text2dBundle`

`Text2dBundle` has a new field 'text_anchor' that takes an `Anchor` component that controls its position relative to its transform.

# Objective

- Enabling the `debug_asset_server` feature doesn't compile when using it with `load_internal_binary_asset!()`. The issue is because it assumes the loader takes an `&'static str` as a parameter, but binary assets loader expect `&'static [u8]`.

## Solution

- Add a generic type for the loader and use a different type in `load_internal_asset` and `load_internal_binary_asset`

# Objective

- Fixes#6361

- Fixes#6362

- Fixes#6364

## Solution

- Added an example for creating a custom `Decodable` type

- Clarified the documentation on `Decodable`

- Added an `AddAudioSource` trait and implemented it for `App`

Co-authored-by: dis-da-moe <84386186+dis-da-moe@users.noreply.github.com>

# Objective

Fix#4647. If any child is changed, or even reordered, `Changed<Children>` is true, which causes transform propagation to propagate changes to all siblings of a changed child, even if they don't need to be.

## Solution

As `Parent` and `Children` are updated in tandem in hierarchy commands after #4800. `Changed<Parent>` is true on the child when `Changed<Children>` is true on the parent. However, unlike checking children, checking `Changed<Parent>` is only localized to the current entity and will not force propagation to the siblings.

Also took the opportunity to change propagation to use `Query::iter_many` instead of repeated `Query::get` calls. Should cut a bit of the overhead out of propagation. This means we won't panic when there isn't a `Parent` on the child, just skip over it.

The tests from #4608 still pass, so the change detection here still works just fine under this approach.

The current section does not talk about `D-Complex` and lists things like "adds unsafe code" as a reason to mark a PR `S-Controversial`. This is not how `D-Complex` and `S-Controversial` are being used at the moment.

This PR lists what classifies a PR as `D-Complex` and what classifies a PR as `S-Controversial`. It also links to some PRs with each combination of labels to help give an idea for what this means in practice.

cc #7211 which is doing a similar thing

# Objective

fix bloom when used on a camera with a viewport specified

## Solution

- pass viewport into the prefilter shader, and use it to read from the correct section of the original rendered screen

- don't apply viewport for the intermediate bloom passes, only for the final blend output

# Objective

- Fixes#3158

## Solution

- clear columns

My implementation of `clear_resources` do not remove the components itself but it clears the columns that keeps the resource data. I'm not sure if the issue meant to clear all resources, even the components and component ids (which I'm not sure if it's possible)

Co-authored-by: 2ne1ugly <47616772+2ne1ugly@users.noreply.github.com>

# Objective

The trait `ReadOnlySystemParam` is not implemented for `Option<NonSend<>>`, even though it should be.

Follow-up to #7243. This fixes another mistake made in #6919.

## Solution

Add the missing impl.