2023-10-12 22:10:38 +00:00


use bevy_core_pipeline::core_3d::{Transparent3d, CORE_3D_DEPTH_FORMAT};


2024-02-12 15:02:24 +00:00


use bevy_ecs::entity::EntityHashMap;


2023-03-02 22:44:10 +00:00


use bevy_ecs::prelude::*;


2023-01-25 12:35:39 +00:00


use bevy_math::{Mat4, UVec3, UVec4, Vec2, Vec3, Vec3Swizzles, Vec4, Vec4Swizzles};


2021-12-14 03:58:23 +00:00


use bevy_render::{


2023-01-25 12:35:39 +00:00


camera::Camera,


2021-06-02 02:59:17 +00:00


color::Color,


2023-03-02 08:21:21 +00:00


mesh::Mesh,


2024-01-22 15:28:33 +00:00


primitives::{CascadesFrusta, CubemapFrusta, Frustum},


2021-06-26 22:35:07 +00:00


render_asset::RenderAssets,


Make render graph slots optional for most cases (#8109)

# Objective

- Currently, the render graph slots are only used to pass the

view_entity around. This introduces significant boilerplate for very

little value. Instead of using slots for this, make the view_entity part

of the `RenderGraphContext`. This also means we won't need to have

`IN_VIEW` on every node and we'll be able to use the default impl of

`Node::input()`.

## Solution

- Add `view_entity: Option<Entity>` to the `RenderGraphContext`

- Update all nodes to use this instead of entity slot input

---

## Changelog

- Add optional `view_entity` to `RenderGraphContext`

## Migration Guide

You can now get the view_entity directly from the `RenderGraphContext`.

When implementing the Node:

```rust

// 0.10

struct FooNode;

impl FooNode {

const IN_VIEW: &'static str = "view";

}

impl Node for FooNode {

fn input(&self) -> Vec<SlotInfo> {

vec![SlotInfo::new(Self::IN_VIEW, SlotType::Entity)]

}

fn run(

&self,

graph: &mut RenderGraphContext,

// ...

) -> Result<(), NodeRunError> {

let view_entity = graph.get_input_entity(Self::IN_VIEW)?;

// ...

Ok(())

}

}

// 0.11

struct FooNode;

impl Node for FooNode {

fn run(

&self,

graph: &mut RenderGraphContext,

// ...

) -> Result<(), NodeRunError> {

let view_entity = graph.view_entity();

// ...

Ok(())

}

}

```

When adding the node to the graph, you don't need to specify a slot_edge

for the view_entity.

```rust

// 0.10

let mut graph = RenderGraph::default();

graph.add_node(FooNode::NAME, node);

let input_node_id = graph.set_input(vec![SlotInfo::new(

graph::input::VIEW_ENTITY,

SlotType::Entity,

)]);

graph.add_slot_edge(

input_node_id,

graph::input::VIEW_ENTITY,

FooNode::NAME,

FooNode::IN_VIEW,

);

// add_node_edge ...

// 0.11

let mut graph = RenderGraph::default();

graph.add_node(FooNode::NAME, node);

// add_node_edge ...

```

## Notes

This PR paired with #8007 will help reduce a lot of annoying boilerplate

with the render nodes. Depending on which one gets merged first, it will

require a bit of clean up work to make both compatible.

I tagged this as a breaking change, because using the old system to get

the view_entity will break things because it's not a node input slot

anymore.

## Notes for reviewers

A lot of the diffs are just removing the slots in every node and graph

creation. The important part is mostly in the

graph_runner/CameraDriverNode.

2023-03-21 20:11:13 +00:00


render_graph::{Node, NodeRunError, RenderGraphContext},


2023-10-25 08:40:55 +00:00


render_phase::*,


Migrate to encase from crevice (#4339)

# Objective

- Unify buffer APIs

- Also see #4272

## Solution

- Replace vendored `crevice` with `encase`

---

## Changelog

Changed `StorageBuffer`

Added `DynamicStorageBuffer`

Replaced `UniformVec` with `UniformBuffer`

Replaced `DynamicUniformVec` with `DynamicUniformBuffer`

## Migration Guide

### `StorageBuffer`

removed `set_body()`, `values()`, `values_mut()`, `clear()`, `push()`, `append()`

added `set()`, `get()`, `get_mut()`

### `UniformVec` -> `UniformBuffer`

renamed `uniform_buffer()` to `buffer()`

removed `len()`, `is_empty()`, `capacity()`, `push()`, `reserve()`, `clear()`, `values()`

added `set()`, `get()`

### `DynamicUniformVec` -> `DynamicUniformBuffer`

renamed `uniform_buffer()` to `buffer()`

removed `capacity()`, `reserve()`

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

2022-05-18 21:09:21 +00:00


render_resource::*,


Modular Rendering (#2831)

This changes how render logic is composed to make it much more modular. Previously, all extraction logic was centralized for a given "type" of rendered thing. For example, we extracted meshes into a vector of ExtractedMesh, which contained the mesh and material asset handles, the transform, etc. We looked up bindings for "drawn things" using their index in the `Vec<ExtractedMesh>`. This worked fine for built in rendering, but made it hard to reuse logic for "custom" rendering. It also prevented us from reusing things like "extracted transforms" across contexts.

To make rendering more modular, I made a number of changes:

* Entities now drive rendering:

* We extract "render components" from "app components" and store them _on_ entities. No more centralized uber lists! We now have true "ECS-driven rendering"

* To make this perform well, I implemented #2673 in upstream Bevy for fast batch insertions into specific entities. This was merged into the `pipelined-rendering` branch here: #2815

* Reworked the `Draw` abstraction:

* Generic `PhaseItems`: each draw phase can define its own type of "rendered thing", which can define its own "sort key"

* Ported the 2d, 3d, and shadow phases to the new PhaseItem impl (currently Transparent2d, Transparent3d, and Shadow PhaseItems)

* `Draw` trait and `DrawFunctions` are now generic on PhaseItem

* Modular / Ergonomic `DrawFunctions` via `RenderCommands`

* RenderCommand is a trait that runs an ECS query and produces one or more RenderPass calls. Types implementing this trait can be composed to create a final DrawFunction. For example the DrawPbr DrawFunction is created from the following DrawCommand tuple. Const generics are used to set specific bind group locations:

```rust

pub type DrawPbr = (

SetPbrPipeline,

SetMeshViewBindGroup<0>,

SetStandardMaterialBindGroup<1>,

SetTransformBindGroup<2>,

DrawMesh,

);

```

* The new `custom_shader_pipelined` example illustrates how the commands above can be reused to create a custom draw function:

```rust

type DrawCustom = (

SetCustomMaterialPipeline,

SetMeshViewBindGroup<0>,

SetTransformBindGroup<2>,

DrawMesh,

);

```

* ExtractComponentPlugin and UniformComponentPlugin:

* Simple, standardized ways to easily extract individual components and write them to GPU buffers

* Ported PBR and Sprite rendering to the new primitives above.

* Removed staging buffer from UniformVec in favor of direct Queue usage

* Makes UniformVec much easier to use and more ergonomic. Completely removes the need for custom render graph nodes in these contexts (see the PbrNode and view Node removals and the much simpler call patterns in the relevant Prepare systems).

* Added a many_cubes_pipelined example to benchmark baseline 3d rendering performance and ensure there were no major regressions during this port. Avoiding regressions was challenging given that the old approach of extracting into centralized vectors is basically the "optimal" approach. However, thanks to various ECS optimizations and render logic rephrasing, we pretty much break even on this benchmark!

* Lifetimeless SystemParams: this will be a bit divisive, but as we continue to embrace "trait driven systems" (ex: ExtractComponentPlugin, UniformComponentPlugin, DrawCommand), the ergonomics of `(Query<'static, 'static, (&'static A, &'static B)>, Res<'static, C>)` were getting very hard to bear. As a compromise, I added "static type aliases" for the relevant SystemParams. The previous example can now be expressed like this: `(SQuery<(Read<A>, Read<B>)>, SRes<C>)`. If anyone has better ideas / conflicting opinions, please let me know!

* RunSystem trait: a way to define Systems via a trait with a SystemParam associated type. This is used to implement the various plugins mentioned above. I also added SystemParamItem and QueryItem type aliases to make "trait style" ecs interactions nicer on the eyes (and fingers).

* RenderAsset retrying: ensures that render assets are only created when they are "ready" and allows us to create bind groups directly inside render assets (which significantly simplified the StandardMaterial code). I think ultimately we should swap this out on "asset dependency" events to wait for dependencies to load, but this will require significant asset system changes.

* Updated some built in shaders to account for missing MeshUniform fields

2021-09-23 06:16:11 +00:00


renderer::{RenderContext, RenderDevice, RenderQueue},


2021-06-02 02:59:17 +00:00


texture::*,


light renderlayers (#10742)

# Objective

add `RenderLayers` awareness to lights. lights default to

`RenderLayers::layer(0)`, and must intersect the camera entity's

`RenderLayers` in order to affect the camera's output.

note that lights already use renderlayers to filter meshes for shadow

casting. this adds filtering lights per view based on intersection of

camera layers and light layers.

fixes #3462

## Solution

PointLights and SpotLights are assigned to individual views in

`assign_lights_to_clusters`, so we simply cull the lights which don't

match the view layers in that function.

DirectionalLights are global, so we

- add the light layers to the `DirectionalLight` struct

- add the view layers to the `ViewUniform` struct

- check for intersection before processing the light in

`apply_pbr_lighting`

potential issue: when mesh/light layers are smaller than the view layers

weird results can occur. e.g:

camera = layers 1+2

light = layers 1

mesh = layers 2

the mesh does not cast shadows wrt the light as (1 & 2) == 0.

the light affects the view as (1+2 & 1) != 0.

the view renders the mesh as (1+2 & 2) != 0.

so the mesh is rendered and lit, but does not cast a shadow.

this could be fixed (so that the light would not affect the mesh in that

view) by adding the light layers to the point and spot light structs,

but i think the setup is pretty unusual, and space is at a premium in

those structs (adding 4 bytes more would reduce the webgl point+spot

light max count to 240 from 256).

I think typical usage is for cameras to have a single layer, and

meshes/lights to maybe have multiple layers to render to e.g. minimaps

as well as primary views.

if there is a good use case for the above setup and we should support

it, please let me know.
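
The camera/light/mesh case above can be checked with a small bitmask sketch. `Layers` is a hypothetical stand-in written for this illustration, not the actual `RenderLayers` type, and it assumes layers are stored as bits of a `u32`:

```rust
/// Hypothetical bitmask stand-in for a set of render layers (not Bevy's `RenderLayers`).
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub struct Layers(pub u32);

impl Layers {
    /// A set containing only layer `n` (`n` must be below 32).
    pub fn layer(n: u8) -> Self {
        Layers(1u32 << (n as u32))
    }

    /// This set plus layer `n` (`n` must be below 32).
    pub fn with(self, n: u8) -> Self {
        Layers(self.0 | (1u32 << (n as u32)))
    }

    /// True when the two sets share at least one layer.
    pub fn intersects(self, other: Layers) -> bool {
        self.0 & other.0 != 0
    }
}
```

With `camera = Layers::layer(1).with(2)`, `light = Layers::layer(1)` and `mesh = Layers::layer(2)`, the camera intersects both the light and the mesh, but the light and the mesh do not intersect each other. That is the "rendered and lit, but no shadow" combination described above.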

---

## Migration Guide

Lights no longer affect all `RenderLayers` by default, now like cameras

and meshes they default to `RenderLayers::layer(0)`. To recover the

previous behaviour and have all lights affect all views, add a

`RenderLayers::all()` component to the light entity.

2023-12-12 19:45:37 +00:00


view::{ExtractedView, RenderLayers, ViewVisibility, VisibleEntities},


Make `RenderStage::Extract` run on the render world (#4402)

# Objective

- Currently, the `Extract` `RenderStage` is executed on the main world, with the render world available as a resource.

- However, when needing access to resources in the render world (e.g. to mutate them), the only way to do so was to get exclusive access to the whole `RenderWorld` resource.

- This meant that effectively only one extract which wrote to resources could run at a time.

- We didn't previously make `Extract`ing writing to the world a non-happy path, even though we want to discourage that.

## Solution

- Move the extract stage to run on the render world.

- Add the main world as a `MainWorld` resource.

- Add an `Extract` `SystemParam` as a convenience to access a (read only) `SystemParam` in the main world during `Extract`.

## Future work

It should be possible to avoid needing to use `get_or_spawn` for the render commands, since now the `Commands`' `Entities` matches up with the world being executed on.

We need to determine how this interacts with https://github.com/bevyengine/bevy/pull/3519

It's theoretically possible to remove the need for the `value` method on `Extract`. However, that requires slightly changing the `SystemParam` interface, which would make it more complicated. That would probably mess up the `SystemState` api too.

## Todo

I still need to add doc comments to `Extract`.

---

## Changelog

### Changed

- The `Extract` `RenderStage` now runs on the render world (instead of the main world as before).

You must use the `Extract` `SystemParam` to access the main world during the extract phase.

Resources on the render world can now be accessed using `ResMut` during extract.

### Removed

- `Commands::spawn_and_forget`. Use `Commands::get_or_spawn(e).insert_bundle(bundle)` instead

## Migration Guide

The `Extract` `RenderStage` now runs on the render world (instead of the main world as before).

You must use the `Extract` `SystemParam` to access the main world during the extract phase. `Extract` takes a single type parameter, which is any system parameter (such as `Res`, `Query` etc.). It will extract this from the main world, and returns the result of this extraction when `value` is called on it.

For example, if previously your extract system looked like:

```rust

fn extract_clouds(mut commands: Commands, clouds: Query<Entity, With<Cloud>>) {

for cloud in clouds.iter() {

commands.get_or_spawn(cloud).insert(Cloud);

}

}

```

the new version would be:

```rust

fn extract_clouds(mut commands: Commands, mut clouds: Extract<Query<Entity, With<Cloud>>>) {

for cloud in clouds.value().iter() {

commands.get_or_spawn(cloud).insert(Cloud);

}

}

```

The diff is:

```diff

--- a/src/clouds.rs

+++ b/src/clouds.rs

@@ -1,5 +1,5 @@

-fn extract_clouds(mut commands: Commands, clouds: Query<Entity, With<Cloud>>) {

- for cloud in clouds.iter() {

+fn extract_clouds(mut commands: Commands, mut clouds: Extract<Query<Entity, With<Cloud>>>) {

+ for cloud in clouds.value().iter() {

commands.get_or_spawn(cloud).insert(Cloud);

}

}

```

You can now also access resources from the render world using the normal system parameters during `Extract`:

```rust

fn extract_assets(mut render_assets: ResMut<MyAssets>, source_assets: Extract<Res<MyAssets>>) {

*render_assets = source_assets.clone();

}

```

Please note that all existing extract systems need to be updated to match this new style; even if they currently compile they will not run as expected. A warning will be emitted on a best-effort basis if this is not met.

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

2022-07-08 23:56:33 +00:00


Extract,


2021-06-02 02:59:17 +00:00


};


2022-07-16 00:51:12 +00:00


use bevy_transform::{components::GlobalTransform, prelude::Transform};


Multithreaded render command encoding (#9172)

# Objective

- Encoding many GPU commands (such as in a renderpass with many draws,

such as the main opaque pass) onto a `wgpu::CommandEncoder` is very

expensive, and takes a long time.

- To improve performance, we want to perform the command encoding for

these heavy passes in parallel.

## Solution

- `RenderContext` can now queue up "command buffer generation tasks"

which are closures that will generate a command buffer when called.

- When finalizing the render context to produce the final list of

command buffers, these tasks are run in parallel on the

`ComputeTaskPool` to produce their corresponding command buffers.

- The general idea is that the node graph will run in serial, but in a

node, instead of doing rendering work, you can add tasks to do render

work in parallel with other nodes' tasks that get run at the end of the

graph execution.
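
As a rough illustration of the queue-then-finalize idea above, here is a std-only sketch. `Context`, `add_task` and `finish` are hypothetical names made up for this example, a `Vec<String>` stands in for a real command buffer, and plain OS threads replace the `ComputeTaskPool`:

```rust
use std::thread;

/// Hypothetical stand-in for an encoded GPU command buffer.
pub type CommandBuffer = Vec<String>;

/// Work queued by nodes while the graph runs serially.
enum Queued {
    /// A buffer that was encoded immediately ("serial" work).
    Ready(CommandBuffer),
    /// A closure that encodes a buffer when called.
    Task(Box<dyn FnOnce() -> CommandBuffer + Send>),
}

/// Queue entry after finalization has started.
enum Pending {
    Done(CommandBuffer),
    Running(thread::JoinHandle<CommandBuffer>),
}

/// Hypothetical, simplified render context (not Bevy's `RenderContext`).
#[derive(Default)]
pub struct Context {
    queue: Vec<Queued>,
}

impl Context {
    /// Queue an already-encoded buffer.
    pub fn add_command_buffer(&mut self, buffer: CommandBuffer) {
        self.queue.push(Queued::Ready(buffer));
    }

    /// Queue a task that generates a buffer during finalization.
    pub fn add_task(&mut self, task: impl FnOnce() -> CommandBuffer + Send + 'static) {
        self.queue.push(Queued::Task(Box::new(task)));
    }

    /// Run all queued tasks in parallel and return the buffers in queue order.
    pub fn finish(self) -> Vec<CommandBuffer> {
        // Start every task first so they can run concurrently.
        let pending: Vec<Pending> = self
            .queue
            .into_iter()
            .map(|queued| match queued {
                Queued::Ready(buffer) => Pending::Done(buffer),
                Queued::Task(task) => Pending::Running(thread::spawn(task)),
            })
            .collect();
        // Join in queue order so the final list matches the order nodes ran in.
        pending
            .into_iter()
            .map(|entry| match entry {
                Pending::Done(buffer) => buffer,
                Pending::Running(handle) => handle.join().expect("encoding task panicked"),
            })
            .collect()
    }
}
```

Nodes would call `add_task` instead of encoding directly. Nothing runs in parallel until `finish` is called, which mirrors the constraint that serial work completes before the parallel tasks start.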

## Nodes Parallelized

- `MainOpaquePass3dNode`

- `PrepassNode`

- `DeferredGBufferPrepassNode`

- `ShadowPassNode` (One task per view)

## Future Work

- For large numbers of draw calls, it might be worth further subdividing

passes into 2+ tasks.

- Extend this to UI, 2d, transparent, and transmissive nodes?

- Needs testing - small command buffers are inefficient - it may be

worth reverting to the serial command encoder usage for render phases

with few items.

- All "serial" (traditional) rendering work must finish before parallel

rendering tasks (the new stuff) can start to run.

- There is still only one submission to the graphics queue at the end of

the graph execution. There is still no ability to submit work earlier.

## Performance Improvement

Thanks to @Elabajaba for testing on Bistro.

TLDR: Without shadow mapping, this PR has no impact. _With_ shadow

mapping, this PR gives **~40 more fps** than main.

---

## Changelog

- `MainOpaquePass3dNode`, `PrepassNode`, `DeferredGBufferPrepassNode`,

and each shadow map within `ShadowPassNode` are now encoded in parallel,

giving _greatly_ increased CPU performance, mainly when shadow mapping

is enabled.

- Does not work on WASM or AMD+Windows+Vulkan.

- Added `RenderContext::add_command_buffer_generation_task()`.

- `RenderContext::new()` now takes adapter info

- Some render graph and Node related types and methods now have

additional lifetime constraints.

## Migration Guide

- `RenderContext::new()` now takes adapter info

- Some render graph and Node related types and methods now have

additional lifetime constraints.

---------

Co-authored-by: Elabajaba <Elabajaba@users.noreply.github.com>

Co-authored-by: François <mockersf@gmail.com>

2024-02-09 07:35:35 +00:00


#[cfg(feature = "trace")]


use bevy_utils::tracing::info_span;


Mesh vertex buffer layouts (#3959)

This PR makes a number of changes to how meshes and vertex attributes are handled, with the goal of enabling easy and flexible custom vertex attributes:

* Reworks the `Mesh` type to use the newly added `VertexAttribute` internally

* `VertexAttribute` defines the name, a unique `VertexAttributeId`, and a `VertexFormat`

* `VertexAttributeId` is used to produce consistent sort orders for vertex buffer generation, replacing the more expensive and often surprising "name based sorting"

* Meshes can be used to generate a `MeshVertexBufferLayout`, which defines the layout of the gpu buffer produced by the mesh. `MeshVertexBufferLayouts` can then be used to generate actual `VertexBufferLayouts` according to the requirements of a specific pipeline. This decoupling of "mesh layout" vs "pipeline vertex buffer layout" is what enables custom attributes. We don't need to standardize _mesh layouts_ or contort meshes to meet the needs of a specific pipeline. As long as the mesh has what the pipeline needs, it will work transparently.

* Mesh-based pipelines now specialize on `&MeshVertexBufferLayout` via the new `SpecializedMeshPipeline` trait (which behaves like `SpecializedPipeline`, but adds `&MeshVertexBufferLayout`). The integrity of the pipeline cache is maintained because the `MeshVertexBufferLayout` is treated as part of the key (which is fully abstracted from implementers of the trait ... no need to add any additional info to the specialization key).

* Hashing `MeshVertexBufferLayout` is too expensive to do for every entity, every frame. To make this scalable, I added a generalized "pre-hashing" solution to `bevy_utils`: `Hashed<T>` keys and `PreHashMap<K, V>` (which uses `Hashed<T>` internally). Why didn't I just do the quick and dirty in-place "pre-compute hash and use that u64 as a key in a hashmap" that we've done in the past? Because it's wrong! Hashes by themselves aren't enough because two different values can produce the same hash. Re-hashing a hash is even worse! I decided to build a generalized solution because this pattern has come up in the past and we've chosen to do the wrong thing. Now we can do the right thing! This did unfortunately require pulling in `hashbrown` and using that in `bevy_utils`, because avoiding re-hashes requires the `raw_entry_mut` api, which isn't stabilized yet (and may never be ... `entry_ref` has favor now, but also isn't available yet). If std's HashMap ever provides the tools we need, we can move back to that. Note that adding `hashbrown` doesn't increase our dependency count because it was already in our tree. I will probably break these changes out into their own PR.

* Specializing on `MeshVertexBufferLayout` has one non-obvious behavior: it can produce identical pipelines for two different MeshVertexBufferLayouts. To optimize the number of active pipelines / reduce re-binds while drawing, I de-duplicate pipelines post-specialization using the final `VertexBufferLayout` as the key. For example, consider a pipeline that needs the layout `(position, normal)` and is specialized using two meshes: `(position, normal, uv)` and `(position, normal, other_vec2)`. If both of these meshes result in `(position, normal)` specializations, we can use the same pipeline! Now we do. Cool!
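
The pre-hashing idea from the list above can be sketched with std alone. This is not the `bevy_utils` implementation: the names mirror the ones mentioned above, but the bodies are assumptions, and a pass-through hasher on std's `HashMap` is swapped in for the `hashbrown` `raw_entry_mut` approach:

```rust
use std::collections::hash_map::DefaultHasher;
use std::collections::HashMap;
use std::hash::{BuildHasherDefault, Hash, Hasher};

/// A value stored together with its hash, which is computed once up front.
#[derive(Debug, Clone)]
pub struct Hashed<T> {
    hash: u64,
    value: T,
}

impl<T: Hash> Hashed<T> {
    /// Hash `value` once and remember the result.
    pub fn new(value: T) -> Self {
        let mut hasher = DefaultHasher::new();
        value.hash(&mut hasher);
        Self { hash: hasher.finish(), value }
    }

    /// The hash computed in `new`.
    pub fn precomputed_hash(&self) -> u64 {
        self.hash
    }
}

impl<T> Hash for Hashed<T> {
    /// Feed only the stored u64 to the hasher; the value is not walked again.
    fn hash<H: Hasher>(&self, state: &mut H) {
        state.write_u64(self.hash);
    }
}

impl<T: PartialEq> PartialEq for Hashed<T> {
    /// Compare hashes first (cheap), then the values, so that two different
    /// values that happen to share a hash are still treated as distinct keys.
    fn eq(&self, other: &Self) -> bool {
        self.hash == other.hash && self.value == other.value
    }
}

impl<T: Eq> Eq for Hashed<T> {}

/// Hasher that returns the single u64 it was given, avoiding a re-hash of the hash.
#[derive(Default)]
pub struct PassHasher {
    hash: u64,
}

impl Hasher for PassHasher {
    fn write(&mut self, _bytes: &[u8]) {
        panic!("PassHasher only supports write_u64");
    }

    fn write_u64(&mut self, i: u64) {
        self.hash = i;
    }

    fn finish(&self) -> u64 {
        self.hash
    }
}

/// Map keyed by pre-hashed values.
pub type PreHashMap<K, V> = HashMap<Hashed<K>, V, BuildHasherDefault<PassHasher>>;
```

A layout would be wrapped in `Hashed::new` once when it is created, and every later lookup reuses that hash while equality still falls back to the full value.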

To briefly illustrate, this is what the relevant section of `MeshPipeline`'s specialization code looks like now:

```rust

impl SpecializedMeshPipeline for MeshPipeline {

type Key = MeshPipelineKey;

fn specialize(

&self,

key: Self::Key,

layout: &MeshVertexBufferLayout,

) -> RenderPipelineDescriptor {

let mut vertex_attributes = vec![

Mesh::ATTRIBUTE_POSITION.at_shader_location(0),

Mesh::ATTRIBUTE_NORMAL.at_shader_location(1),

Mesh::ATTRIBUTE_UV_0.at_shader_location(2),

];

let mut shader_defs = Vec::new();

if layout.contains(Mesh::ATTRIBUTE_TANGENT) {

shader_defs.push(String::from("VERTEX_TANGENTS"));

vertex_attributes.push(Mesh::ATTRIBUTE_TANGENT.at_shader_location(3));

}

let vertex_buffer_layout = layout

.get_layout(&vertex_attributes)

.expect("Mesh is missing a vertex attribute");

```

Notice that this is _much_ simpler than it was before. And now any mesh with any layout can be used with this pipeline, provided it has vertex positions, normals, and uvs. We even got to remove `HAS_TANGENTS` from MeshPipelineKey and `has_tangents` from `GpuMesh`, because that information is redundant with `MeshVertexBufferLayout`.

This is still a draft because I still need to:

* Add more docs

* Experiment with adding error handling to mesh pipeline specialization (which would print errors at runtime when a mesh is missing a vertex attribute required by a pipeline). If it doesn't tank perf, we'll keep it.

* Consider breaking out the PreHash / hashbrown changes into a separate PR.

* Add an example illustrating this change

* Verify that the "mesh-specialized pipeline de-duplication code" works properly

Please don't yell at me for not doing these things yet :) Just trying to get this in peoples' hands asap.

Alternative to #3120

Fixes #3030

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

2022-02-23 23:21:13 +00:00


use bevy_utils::{


Automatic batching/instancing of draw commands (#9685)

# Objective

- Implement the foundations of automatic batching/instancing of draw

commands as the next step from #89

- NOTE: More performance improvements will come when more data is

managed and bound in ways that do not require rebinding such as mesh,

material, and texture data.

## Solution

- The core idea for batching of draw commands is to check whether any of

the information that has to be passed when encoding a draw command

changes between two things that are being drawn according to the sorted

render phase order. These should be things like the pipeline, bind

groups and their dynamic offsets, index/vertex buffers, and so on.

- The following assumptions have been made:

- Only entities with prepared assets (pipelines, materials, meshes) are

queued to phases

- View bindings are constant across a phase for a given draw function as

phases are per-view

- `batch_and_prepare_render_phase` is the only system that performs this

batching and has sole responsibility for preparing the per-object data.

As such the mesh binding and dynamic offsets are assumed to only vary as

a result of the `batch_and_prepare_render_phase` system, e.g. due to

having to split data across separate uniform bindings within the same

buffer due to the maximum uniform buffer binding size.

- Implement `GpuArrayBuffer` for `Mesh2dUniform` to store Mesh2dUniform

in arrays in GPU buffers rather than each one being at a dynamic offset

in a uniform buffer. This is the same optimisation that was made for 3D

not long ago.

- Change batch size for a range in `PhaseItem`, adding API for getting

or mutating the range. This is more flexible than a size as the length

of the range can be used in place of the size, but the start and end can

be otherwise whatever is needed.

- Add an optional mesh bind group dynamic offset to `PhaseItem`. This

avoids having to do a massive table move just to insert

`GpuArrayBufferIndex` components.
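
To make the core idea concrete, here is a minimal sketch of merging adjacent items in a sorted phase. `BatchKey` and `batch_adjacent` are hypothetical names for this illustration, not the code in `batch_and_prepare_render_phase`, and the key fields are a simplified guess at "the information that has to be passed when encoding a draw command":

```rust
use std::ops::Range;

/// Hypothetical per-item data that must be identical for two draws to merge.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub struct BatchKey {
    pub pipeline: u32,
    pub material_bind_group: u32,
    pub mesh: u32,
}

/// Walk the items in their sorted phase order and merge each run of adjacent
/// items with equal keys into one batch covering a range of instance indices.
pub fn batch_adjacent(keys: &[BatchKey]) -> Vec<(BatchKey, Range<u32>)> {
    let mut batches: Vec<(BatchKey, Range<u32>)> = Vec::new();
    for (index, key) in keys.iter().enumerate() {
        let index = index as u32;
        if let Some((last_key, range)) = batches.last_mut() {
            if *last_key == *key {
                // Same state as the previous item: extend the current batch.
                range.end = index + 1;
                continue;
            }
        }
        // Something changed (or this is the first item): start a new batch.
        batches.push((*key, index..index + 1));
    }
    batches
}
```

Only neighbours are compared, so for keys `[a, a, b, a]` this yields three batches rather than two. Two items with the same key that are not adjacent in the sort order are not merged.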

## Benchmarks

All tests have been run on an M1 Max on AC power. `bevymark` and

`many_cubes` were modified to use 1920x1080 with a scale factor of 1. I

run a script that runs a separate Tracy capture process, and then runs

the bevy example with `--features bevy_ci_testing,trace_tracy` and

`CI_TESTING_CONFIG=../benchmark.ron` with the contents of

`../benchmark.ron`:

```rust

(

exit_after: Some(1500)

)

```

...in order to run each test for 1500 frames.

The recent changes to `many_cubes` and `bevymark` added reproducible

random number generation so that with the same settings, the same rng

will occur. They also added benchmark modes that use a fixed delta time

for animations. Combined this means that the same frames should be

rendered both on main and on the branch.

The graphs compare main (yellow) to this PR (red).

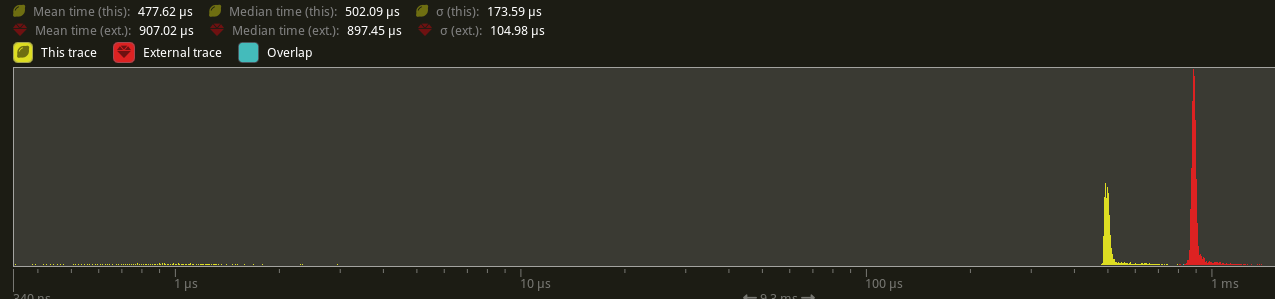

### 3D Mesh `many_cubes --benchmark`

<img width="1411" alt="Screenshot 2023-09-03 at 23 42 10"

src="https://github.com/bevyengine/bevy/assets/302146/2088716a-c918-486c-8129-090b26fd2bc4">

The mesh and material are the same for all instances. This is basically

the best case for the initial batching implementation as it results in 1

draw for the ~11.7k visible meshes. It gives a ~30% reduction in median

frame time.

The 1000th frame is identical using the flip tool:

```

Mean: 0.000000

Weighted median: 0.000000

1st weighted quartile: 0.000000

3rd weighted quartile: 0.000000

Min: 0.000000

Max: 0.000000

Evaluation time: 0.4615 seconds

```

### 3D Mesh `many_cubes --benchmark --material-texture-count 10`

<img width="1404" alt="Screenshot 2023-09-03 at 23 45 18"

src="https://github.com/bevyengine/bevy/assets/302146/5ee9c447-5bd2-45c6-9706-ac5ff8916daf">

This run uses 10 different materials by varying their textures. The

materials are randomly selected, and there is no sorting by material

bind group for opaque 3D so any batching is 'random'. The PR produces a

~5% reduction in median frame time. If we were to sort the opaque phase

by the material bind group, then this should be a lot faster. This

produces about 10.5k draws for the 11.7k visible entities. This makes

sense as randomly selecting from 10 materials gives a chance that two

adjacent entities randomly select the same material and can be batched.

The 1000th frame is identical in flip:

```

Mean: 0.000000

Weighted median: 0.000000

1st weighted quartile: 0.000000

3rd weighted quartile: 0.000000

Min: 0.000000

Max: 0.000000

Evaluation time: 0.4537 seconds

```

### 3D Mesh `many_cubes --benchmark --vary-per-instance`

<img width="1394" alt="Screenshot 2023-09-03 at 23 48 44"

src="https://github.com/bevyengine/bevy/assets/302146/f02a816b-a444-4c18-a96a-63b5436f3b7f">

This run varies the material data per instance by randomly-generating

its colour. This is the worst case for batching and that it performs

about the same as `main` is a good thing as it demonstrates that the

batching has minimal overhead when dealing with ~11k visible mesh

entities.

The 1000th frame is identical according to flip:

```

Mean: 0.000000

Weighted median: 0.000000

1st weighted quartile: 0.000000

3rd weighted quartile: 0.000000

Min: 0.000000

Max: 0.000000

Evaluation time: 0.4568 seconds

```

### 2D Mesh `bevymark --benchmark --waves 160 --per-wave 1000 --mode mesh2d`

<img width="1412" alt="Screenshot 2023-09-03 at 23 59 56"

src="https://github.com/bevyengine/bevy/assets/302146/cb02ae07-237b-4646-ae9f-fda4dafcbad4">

This spawns 160 waves of 1000 quad meshes that are shaded with

ColorMaterial. Each wave has a different material so 160 waves currently

should result in 160 batches. This results in a 50% reduction in median

frame time.

Capturing a screenshot of the 1000th frame main vs PR gives:

```

Mean: 0.001222

Weighted median: 0.750432

1st weighted quartile: 0.453494

3rd weighted quartile: 0.969758

Min: 0.000000

Max: 0.990296

Evaluation time: 0.4255 seconds

```

So they seem to produce the same results. I also double-checked the

number of draws. `main` does 160000 draws, and the PR does 160, as

expected.

### 2D Mesh `bevymark --benchmark --waves 160 --per-wave 1000 --mode mesh2d --material-texture-count 10`

<img width="1392" alt="Screenshot 2023-09-04 at 00 09 22"

src="https://github.com/bevyengine/bevy/assets/302146/4358da2e-ce32-4134-82df-3ab74c40849c">

This generates 10 textures and generates materials for each of those and

then selects one material per wave. The median frame time is reduced by

50%. Similar to the plain run above, this produces 160 draws on the PR

and 160000 on `main` and the 1000th frame is identical (ignoring the fps

counter text overlay).

```

Mean: 0.002877

Weighted median: 0.964980

1st weighted quartile: 0.668871

3rd weighted quartile: 0.982749

Min: 0.000000

Max: 0.992377

Evaluation time: 0.4301 seconds

```

### 2D Mesh `bevymark --benchmark --waves 160 --per-wave 1000 --mode mesh2d --vary-per-instance`

<img width="1396" alt="Screenshot 2023-09-04 at 00 13 53"

src="https://github.com/bevyengine/bevy/assets/302146/b2198b18-3439-47ad-919a-cdabe190facb">

This creates unique materials per instance by randomly-generating the

material's colour. This is the worst case for 2D batching. Somehow, this

PR manages a 7% reduction in median frame time. Both main and this PR

issue 160000 draws.

The 1000th frame is the same:

```

Mean: 0.001214

Weighted median: 0.937499

1st weighted quartile: 0.635467

3rd weighted quartile: 0.979085

Min: 0.000000

Max: 0.988971

Evaluation time: 0.4462 seconds

```

### 2D Sprite `bevymark --benchmark --waves 160 --per-wave 1000 --mode sprite`

<img width="1396" alt="Screenshot 2023-09-04 at 12 21 12"

src="https://github.com/bevyengine/bevy/assets/302146/8b31e915-d6be-4cac-abf5-c6a4da9c3d43">

This just spawns 160 waves of 1000 sprites. There should be and is no

notable difference between main and the PR.

### 2D Sprite `bevymark --benchmark --waves 160 --per-wave 1000 --mode sprite --material-texture-count 10`

<img width="1389" alt="Screenshot 2023-09-04 at 12 36 08"

src="https://github.com/bevyengine/bevy/assets/302146/45fe8d6d-c901-4062-a349-3693dd044413">

This spawns the sprites selecting a texture at random per instance from

the 10 generated textures. This has no significant change vs main and

shouldn't.

### 2D Sprite `bevymark --benchmark --waves 160 --per-wave 1000 --mode sprite --vary-per-instance`

<img width="1401" alt="Screenshot 2023-09-04 at 12 29 52"

src="https://github.com/bevyengine/bevy/assets/302146/762c5c60-352e-471f-8dbe-bbf10e24ebd6">

This sets the sprite colour as being unique per instance. This can still

all be drawn using one batch. There should be no difference but the PR

produces median frame times that are 4% higher. Investigation showed no

clear sources of cost, rather a mix of give and take that should not

happen. It seems like noise in the results.

### Summary

| Benchmark | % change in median frame time |
| ------------- | ------------- |
| many_cubes | 🟩 -30% |
| many_cubes 10 materials | 🟩 -5% |
| many_cubes unique materials | 🟩 ~0% |
| bevymark mesh2d | 🟩 -50% |
| bevymark mesh2d 10 materials | 🟩 -50% |
| bevymark mesh2d unique materials | 🟩 -7% |
| bevymark sprite | 🟥 2% |
| bevymark sprite 10 materials | 🟥 0.6% |
| bevymark sprite unique materials | 🟥 4.1% |

---

## Changelog

- Added: 2D and 3D mesh entities that share the same mesh and material
  (same textures, same data) are now batched into the same draw command
  for better performance.

---------

Co-authored-by: robtfm <50659922+robtfm@users.noreply.github.com>

Co-authored-by: Nicola Papale <nico@nicopap.ch>

2023-09-21 22:12:34 +00:00

|

|

|

nonmax::NonMaxU32,

|

Mesh vertex buffer layouts (#3959)

This PR makes a number of changes to how meshes and vertex attributes are handled, with the goal of enabling easy and flexible custom vertex attributes:

* Reworks the `Mesh` type to use the newly added `VertexAttribute` internally

* `VertexAttribute` defines the name, a unique `VertexAttributeId`, and a `VertexFormat`

* `VertexAttributeId` is used to produce consistent sort orders for vertex buffer generation, replacing the more expensive and often surprising "name based sorting"

* Meshes can be used to generate a `MeshVertexBufferLayout`, which defines the layout of the gpu buffer produced by the mesh. `MeshVertexBufferLayouts` can then be used to generate actual `VertexBufferLayouts` according to the requirements of a specific pipeline. This decoupling of "mesh layout" vs "pipeline vertex buffer layout" is what enables custom attributes. We don't need to standardize _mesh layouts_ or contort meshes to meet the needs of a specific pipeline. As long as the mesh has what the pipeline needs, it will work transparently.

* Mesh-based pipelines now specialize on `&MeshVertexBufferLayout` via the new `SpecializedMeshPipeline` trait (which behaves like `SpecializedPipeline`, but adds `&MeshVertexBufferLayout`). The integrity of the pipeline cache is maintained because the `MeshVertexBufferLayout` is treated as part of the key (which is fully abstracted from implementers of the trait ... no need to add any additional info to the specialization key).

* Hashing `MeshVertexBufferLayout` is too expensive to do for every entity, every frame. To make this scalable, I added a generalized "pre-hashing" solution to `bevy_utils`: `Hashed<T>` keys and `PreHashMap<K, V>` (which uses `Hashed<T>` internally). Why didn't I just do the quick and dirty in-place "pre-compute hash and use that u64 as a key in a hashmap" that we've done in the past? Because it's wrong! Hashes by themselves aren't enough because two different values can produce the same hash. Re-hashing a hash is even worse! I decided to build a generalized solution because this pattern has come up in the past and we've chosen to do the wrong thing. Now we can do the right thing! This did unfortunately require pulling in `hashbrown` and using that in `bevy_utils`, because avoiding re-hashes requires the `raw_entry_mut` api, which isn't stabilized yet (and may never be ... `entry_ref` has favor now, but also isn't available yet). If std's HashMap ever provides the tools we need, we can move back to that. Note that adding `hashbrown` doesn't increase our dependency count because it was already in our tree. I will probably break these changes out into their own PR.

* Specializing on `MeshVertexBufferLayout` has one non-obvious behavior: it can produce identical pipelines for two different MeshVertexBufferLayouts. To optimize the number of active pipelines / reduce re-binds while drawing, I de-duplicate pipelines post-specialization using the final `VertexBufferLayout` as the key. For example, consider a pipeline that needs the layout `(position, normal)` and is specialized using two meshes: `(position, normal, uv)` and `(position, normal, other_vec2)`. If both of these meshes result in `(position, normal)` specializations, we can use the same pipeline! Now we do. Cool!
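The pre-hashing idea from the list above can be sketched with the standard library alone. This is a minimal illustration, not the `bevy_utils` implementation: `PreHashed` is a hypothetical name, and a plain `std::collections::HashMap` stands in for `PreHashMap`. The key stores the value next to a hash computed once, feeds only that `u64` to the map's hasher, and still compares the full value on equality, so two different values with colliding hashes are never confused.

```rust
use std::collections::hash_map::DefaultHasher;
use std::collections::HashMap;
use std::hash::{Hash, Hasher};

/// Hypothetical stand-in for the `Hashed<T>` idea: a value stored alongside
/// a hash that is computed exactly once, at construction time.
#[derive(Debug, Clone)]
pub struct PreHashed<T> {
    hash: u64,
    value: T,
}

impl<T: Hash> PreHashed<T> {
    pub fn new(value: T) -> Self {
        // `DefaultHasher::new()` uses fixed keys, so equal values always
        // produce equal pre-computed hashes.
        let mut hasher = DefaultHasher::new();
        value.hash(&mut hasher);
        Self { hash: hasher.finish(), value }
    }

    pub fn value(&self) -> &T {
        &self.value
    }
}

impl<T> Hash for PreHashed<T> {
    fn hash<H: Hasher>(&self, state: &mut H) {
        // Only the cached u64 is fed to the map's hasher; the (possibly
        // expensive) value is not walked again.
        state.write_u64(self.hash);
    }
}

impl<T: PartialEq> PartialEq for PreHashed<T> {
    fn eq(&self, other: &Self) -> bool {
        // Cheap hash comparison first, then the full value comparison that
        // guards against hash collisions.
        self.hash == other.hash && self.value == other.value
    }
}

impl<T: Eq> Eq for PreHashed<T> {}
```

Note that a std `HashMap` still runs its own hasher over the cached `u64`. That is the re-hash the message says can only be avoided with `hashbrown`'s `raw_entry_mut`, so this sketch shows the correctness half of the design rather than the full optimisation.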

To briefly illustrate, this is what the relevant section of `MeshPipeline`'s specialization code looks like now:

```rust
impl SpecializedMeshPipeline for MeshPipeline {
    type Key = MeshPipelineKey;

    fn specialize(
        &self,
        key: Self::Key,
        layout: &MeshVertexBufferLayout,
    ) -> RenderPipelineDescriptor {
        let mut vertex_attributes = vec![
            Mesh::ATTRIBUTE_POSITION.at_shader_location(0),
            Mesh::ATTRIBUTE_NORMAL.at_shader_location(1),
            Mesh::ATTRIBUTE_UV_0.at_shader_location(2),
        ];

        let mut shader_defs = Vec::new();
        if layout.contains(Mesh::ATTRIBUTE_TANGENT) {
            shader_defs.push(String::from("VERTEX_TANGENTS"));
            vertex_attributes.push(Mesh::ATTRIBUTE_TANGENT.at_shader_location(3));
        }

        let vertex_buffer_layout = layout
            .get_layout(&vertex_attributes)
            .expect("Mesh is missing a vertex attribute");
```

Notice that this is _much_ simpler than it was before. And now any mesh with any layout can be used with this pipeline, provided it has vertex positions, normals, and uvs. We even got to remove `HAS_TANGENTS` from MeshPipelineKey and `has_tangents` from `GpuMesh`, because that information is redundant with `MeshVertexBufferLayout`.

This is still a draft because I still need to:

* Add more docs

* Experiment with adding error handling to mesh pipeline specialization (which would print errors at runtime when a mesh is missing a vertex attribute required by a pipeline). If it doesn't tank perf, we'll keep it.

* Consider breaking out the PreHash / hashbrown changes into a separate PR.

* Add an example illustrating this change

* Verify that the "mesh-specialized pipeline de-duplication code" works properly

Please don't yell at me for not doing these things yet :) Just trying to get this in people's hands asap.

Alternative to #3120

Fixes #3030

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

2022-02-23 23:21:13 +00:00

|

|

|

tracing::{error, warn},

|

|

|

|

|

};

|

Automatic batching/instancing of draw commands (#9685)

# Objective

- Implement the foundations of automatic batching/instancing of draw

commands as the next step from #89

- NOTE: More performance improvements will come when more data is

managed and bound in ways that do not require rebinding such as mesh,

material, and texture data.

## Solution

- The core idea for batching of draw commands is to check whether any of

the information that has to be passed when encoding a draw command

changes between two things that are being drawn according to the sorted

render phase order. These should be things like the pipeline, bind

groups and their dynamic offsets, index/vertex buffers, and so on.

- The following assumptions have been made:

- Only entities with prepared assets (pipelines, materials, meshes) are

queued to phases

- View bindings are constant across a phase for a given draw function as

phases are per-view

- `batch_and_prepare_render_phase` is the only system that performs this

batching and has sole responsibility for preparing the per-object data.

As such the mesh binding and dynamic offsets are assumed to only vary as

a result of the `batch_and_prepare_render_phase` system, e.g. due to

having to split data across separate uniform bindings within the same

buffer due to the maximum uniform buffer binding size.

- Implement `GpuArrayBuffer` for `Mesh2dUniform` to store Mesh2dUniform

in arrays in GPU buffers rather than each one being at a dynamic offset

in a uniform buffer. This is the same optimisation that was made for 3D

not long ago.

- Change batch size for a range in `PhaseItem`, adding API for getting

or mutating the range. This is more flexible than a size as the length

of the range can be used in place of the size, but the start and end can

be otherwise whatever is needed.

- Add an optional mesh bind group dynamic offset to `PhaseItem`. This

avoids having to do a massive table move just to insert

`GpuArrayBufferIndex` components.

## Benchmarks

All tests have been run on an M1 Max on AC power. `bevymark` and

`many_cubes` were modified to use 1920x1080 with a scale factor of 1. I

run a script that runs a separate Tracy capture process, and then runs

the bevy example with `--features bevy_ci_testing,trace_tracy` and

`CI_TESTING_CONFIG=../benchmark.ron` with the contents of

`../benchmark.ron`:

```rust

(

exit_after: Some(1500)

)

```

...in order to run each test for 1500 frames.

The recent changes to `many_cubes` and `bevymark` added reproducible

random number generation so that with the same settings, the same rng

will occur. They also added benchmark modes that use a fixed delta time

for animations. Combined this means that the same frames should be

rendered both on main and on the branch.

The graphs compare main (yellow) to this PR (red).

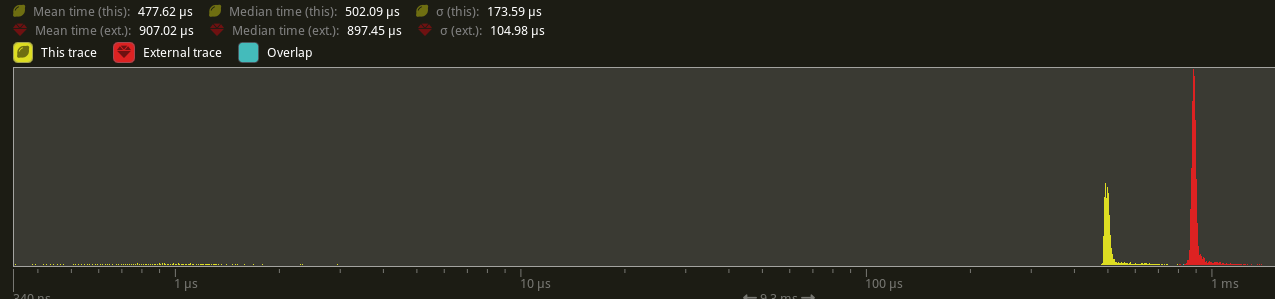

### 3D Mesh `many_cubes --benchmark`

<img width="1411" alt="Screenshot 2023-09-03 at 23 42 10"

src="https://github.com/bevyengine/bevy/assets/302146/2088716a-c918-486c-8129-090b26fd2bc4">

The mesh and material are the same for all instances. This is basically

the best case for the initial batching implementation as it results in 1

draw for the ~11.7k visible meshes. It gives a ~30% reduction in median

frame time.

The 1000th frame is identical using the flip tool:

```

Mean: 0.000000

Weighted median: 0.000000

1st weighted quartile: 0.000000

3rd weighted quartile: 0.000000

Min: 0.000000

Max: 0.000000

Evaluation time: 0.4615 seconds

```

### 3D Mesh `many_cubes --benchmark --material-texture-count 10`

<img width="1404" alt="Screenshot 2023-09-03 at 23 45 18"

src="https://github.com/bevyengine/bevy/assets/302146/5ee9c447-5bd2-45c6-9706-ac5ff8916daf">

This run uses 10 different materials by varying their textures. The

materials are randomly selected, and there is no sorting by material

bind group for opaque 3D so any batching is 'random'. The PR produces a

~5% reduction in median frame time. If we were to sort the opaque phase

by the material bind group, then this should be a lot faster. This

produces about 10.5k draws for the 11.7k visible entities. This makes

sense as randomly selecting from 10 materials gives a chance that two

adjacent entities randomly select the same material and can be batched.

The 1000th frame is identical in flip:

```

Mean: 0.000000

Weighted median: 0.000000

1st weighted quartile: 0.000000

3rd weighted quartile: 0.000000

Min: 0.000000

Max: 0.000000

Evaluation time: 0.4537 seconds

```

### 3D Mesh `many_cubes --benchmark --vary-per-instance`

<img width="1394" alt="Screenshot 2023-09-03 at 23 48 44"

src="https://github.com/bevyengine/bevy/assets/302146/f02a816b-a444-4c18-a96a-63b5436f3b7f">

This run varies the material data per instance by randomly-generating

its colour. This is the worst case for batching and that it performs

about the same as `main` is a good thing as it demonstrates that the

batching has minimal overhead when dealing with ~11k visible mesh

entities.

The 1000th frame is identical according to flip:

```

Mean: 0.000000

Weighted median: 0.000000

1st weighted quartile: 0.000000

3rd weighted quartile: 0.000000

Min: 0.000000

Max: 0.000000

Evaluation time: 0.4568 seconds

```

### 2D Mesh `bevymark --benchmark --waves 160 --per-wave 1000 --mode

mesh2d`

<img width="1412" alt="Screenshot 2023-09-03 at 23 59 56"

src="https://github.com/bevyengine/bevy/assets/302146/cb02ae07-237b-4646-ae9f-fda4dafcbad4">

This spawns 160 waves of 1000 quad meshes that are shaded with

ColorMaterial. Each wave has a different material so 160 waves currently

should result in 160 batches. This results in a 50% reduction in median

frame time.

Capturing a screenshot of the 1000th frame main vs PR gives:

```

Mean: 0.001222

Weighted median: 0.750432

1st weighted quartile: 0.453494

3rd weighted quartile: 0.969758

Min: 0.000000

Max: 0.990296

Evaluation time: 0.4255 seconds

```

So they seem to produce the same results. I also double-checked the

number of draws. `main` does 160000 draws, and the PR does 160, as

expected.

### 2D Mesh `bevymark --benchmark --waves 160 --per-wave 1000 --mode

mesh2d --material-texture-count 10`

<img width="1392" alt="Screenshot 2023-09-04 at 00 09 22"

src="https://github.com/bevyengine/bevy/assets/302146/4358da2e-ce32-4134-82df-3ab74c40849c">

This generates 10 textures and generates materials for each of those and

then selects one material per wave. The median frame time is reduced by

50%. Similar to the plain run above, this produces 160 draws on the PR

and 160000 on `main` and the 1000th frame is identical (ignoring the fps

counter text overlay).

```

Mean: 0.002877

Weighted median: 0.964980

1st weighted quartile: 0.668871

3rd weighted quartile: 0.982749

Min: 0.000000

Max: 0.992377

Evaluation time: 0.4301 seconds

```

### 2D Mesh `bevymark --benchmark --waves 160 --per-wave 1000 --mode

mesh2d --vary-per-instance`

<img width="1396" alt="Screenshot 2023-09-04 at 00 13 53"

src="https://github.com/bevyengine/bevy/assets/302146/b2198b18-3439-47ad-919a-cdabe190facb">

This creates unique materials per instance by randomly-generating the

material's colour. This is the worst case for 2D batching. Somehow, this

PR manages a 7% reduction in median frame time. Both main and this PR

issue 160000 draws.

The 1000th frame is the same:

```

Mean: 0.001214

Weighted median: 0.937499

1st weighted quartile: 0.635467

3rd weighted quartile: 0.979085

Min: 0.000000

Max: 0.988971

Evaluation time: 0.4462 seconds

```

### 2D Sprite `bevymark --benchmark --waves 160 --per-wave 1000 --mode

sprite`

<img width="1396" alt="Screenshot 2023-09-04 at 12 21 12"

src="https://github.com/bevyengine/bevy/assets/302146/8b31e915-d6be-4cac-abf5-c6a4da9c3d43">

This just spawns 160 waves of 1000 sprites. There should be and is no

notable difference between main and the PR.

### 2D Sprite `bevymark --benchmark --waves 160 --per-wave 1000 --mode

sprite --material-texture-count 10`

<img width="1389" alt="Screenshot 2023-09-04 at 12 36 08"

src="https://github.com/bevyengine/bevy/assets/302146/45fe8d6d-c901-4062-a349-3693dd044413">

This spawns the sprites selecting a texture at random per instance from

the 10 generated textures. This has no significant change vs main and

shouldn't.

### 2D Sprite `bevymark --benchmark --waves 160 --per-wave 1000 --mode

sprite --vary-per-instance`

<img width="1401" alt="Screenshot 2023-09-04 at 12 29 52"

src="https://github.com/bevyengine/bevy/assets/302146/762c5c60-352e-471f-8dbe-bbf10e24ebd6">

This sets the sprite colour as being unique per instance. This can still

all be drawn using one batch. There should be no difference but the PR

produces median frame times that are 4% higher. Investigation showed no

clear sources of cost, rather a mix of give and take that should not

happen. It seems like noise in the results.

### Summary

| Benchmark | % change in median frame time |

| ------------- | ------------- |

| many_cubes | 🟩 -30% |

| many_cubes 10 materials | 🟩 -5% |

| many_cubes unique materials | 🟩 ~0% |

| bevymark mesh2d | 🟩 -50% |

| bevymark mesh2d 10 materials | 🟩 -50% |

| bevymark mesh2d unique materials | 🟩 -7% |

| bevymark sprite | 🟥 2% |

| bevymark sprite 10 materials | 🟥 0.6% |

| bevymark sprite unique materials | 🟥 4.1% |

---

## Changelog

- Added: 2D and 3D mesh entities that share the same mesh and material

(same textures, same data) are now batched into the same draw command

for better performance.

---------

Co-authored-by: robtfm <50659922+robtfm@users.noreply.github.com>

Co-authored-by: Nicola Papale <nico@nicopap.ch>

2023-09-21 22:12:34 +00:00

|

|

|

use std::{hash::Hash, num::NonZeroU64, ops::Range};

|

2021-06-02 02:59:17 +00:00

|

|

|

|

2023-10-25 08:40:55 +00:00

|

|

|

use crate::*;

|

|

|

|

|

|

2021-11-22 23:16:36 +00:00

|

|

|

#[derive(Component)]

|

2021-06-02 02:59:17 +00:00

|

|

|

pub struct ExtractedPointLight {

|

|

|

|

|

color: Color,

|

bevy_pbr2: Improve lighting units and documentation (#2704)

# Objective

A question was raised on Discord about the units of the `PointLight` `intensity` member.

After digging around in the bevy_pbr2 source code and [Google Filament documentation](https://google.github.io/filament/Filament.html#mjx-eqn-pointLightLuminousPower) I discovered that the intention by Filament was that the 'intensity' value for point lights would be in lumens. This makes a lot of sense as these are quite relatable units given basically all light bulbs I've seen sold over the past years are rated in lumens as people move away from thinking about how bright a bulb is relative to a non-halogen incandescent bulb.

However, it seems that the derivation of the conversion between luminous power (lumens, denoted `Φ` in the Filament formulae) and luminous intensity (lumens per steradian, `I` in the Filament formulae) was missed and I can see why as it is tucked right under equation 58 at the link above. As such, while the formula states that for a point light, `I = Φ / 4 π` we have been using `intensity` as if it were luminous intensity `I`.

Before this PR, the intensity field is luminous intensity in lumens per steradian. After this PR, the intensity field is luminous power in lumens, [as suggested by Filament](https://google.github.io/filament/Filament.html#table_lighttypesunits) (unfortunately the link jumps to the table's caption so scroll up to see the actual table).

I appreciate that it may be confusing to call this an intensity, but I think this is intended as more of a non-scientific, human-relatable general term with a bit of hand waving so that most light types can just have an intensity field and for most of them it works in the same way or at least with some relatable value. I'm inclined to think this is reasonable rather than throwing terms like luminous power, luminous intensity, blah at users.

## Solution

- Documented the `PointLight` `intensity` member as 'luminous power' in units of lumens.

- Added a table of examples relating from various types of household lighting to lumen values.

- Added in the mapping from luminous power to luminous intensity when premultiplying the intensity into the colour before it is made into a graphics uniform.

- Updated the documentation in `pbr.wgsl` to clarify the earlier confusion about the missing `/ 4 π`.

- Bumped the intensity of the point lights in `3d_scene_pipelined` to 1600 lumens.
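The conversion described above, `I = Φ / 4 π`, can be written as a small helper. This is a sketch only; the function name is hypothetical and is not taken from the Bevy source.

```rust
use std::f32::consts::PI;

/// Luminous intensity `I` (lumens per steradian) of a point light that emits
/// `luminous_power_lumens` (Φ) uniformly in all directions: I = Φ / (4π).
/// The name is illustrative, not a Bevy API.
pub fn luminous_intensity(luminous_power_lumens: f32) -> f32 {
    luminous_power_lumens / (4.0 * PI)
}
```

For the 1600 lumen lights mentioned in the last bullet, this works out to roughly 127 lumens per steradian.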

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

2021-08-23 23:48:11 +00:00

|

|

|

/// luminous intensity in lumens per steradian

|

2021-06-02 02:59:17 +00:00

|

|

|

intensity: f32,

|

|

|

|

|

range: f32,

|

|

|

|

|

radius: f32,

|

|

|

|

|

transform: GlobalTransform,

|

2021-11-19 21:16:58 +00:00

|

|

|

shadows_enabled: bool,

|

2021-07-16 22:41:56 +00:00

|

|

|

shadow_depth_bias: f32,

|

|

|

|

|

shadow_normal_bias: f32,

|

2022-07-08 19:57:43 +00:00

|

|

|

spot_light_angles: Option<(f32, f32)>,

|

2021-07-08 02:49:33 +00:00

|

|

|

}

|

|

|

|

|

|

2023-01-25 12:35:39 +00:00

|

|

|

#[derive(Component, Debug)]

|

2021-07-08 02:49:33 +00:00

|

|

|

pub struct ExtractedDirectionalLight {

|

|

|

|

|

color: Color,

|

|

|

|

|

illuminance: f32,

|

Take DirectionalLight's GlobalTransform into account when calculating shadow map volume (not just direction) (#6384)

# Objective

This PR fixes #5789, by enabling movable (and scalable) directional light shadow volumes.

## Solution

This PR changes `ExtractedDirectionalLight` to hold a copy of the `DirectionalLight` entity's `GlobalTransform`, instead of just a `direction` vector. This allows the shadow map volume (as defined by the light's `shadow_projection` field) to be transformed honoring translation _and_ scale transforms, and not just rotation.

It also augments the texel size calculation (used to determine the `shadow_normal_bias`) so that it now takes into account the upper bound of the x/y/z scale of the `GlobalTransform`.

This change makes the directional light extraction code more consistent with point and spot lights (that already use `transform`), and allows easily moving and scaling the shadow volume along with a player entity based on camera distance/angle, immediately enabling more real world use cases until we have a more sophisticated adaptive implementation, such as the one described in #3629.

**Note:** While it was previously possible to update the projection achieving a similar effect, depending on the light direction and distance to the origin, the fact that the shadow map camera was always positioned at the origin with a hardcoded `Vec3::Y` up value meant you would get sub-optimal or inconsistent/incorrect results.

---

## Changelog

### Changed

- `DirectionalLight` shadow volumes now honor translation and scale transforms

## Migration Guide

- If your directional lights were positioned at the origin and not scaled (the default, most common scenario) no changes are needed on your part; it just works as before;

- If you previously had a system for dynamically updating directional light shadow projections, you might now be able to simplify your code by updating the directional light entity's transform instead;

- In the unlikely scenario that a scene with directional lights that previously rendered shadows correctly has missing shadows, make sure your directional lights are positioned at (0, 0, 0) and are not scaled to a size that's too large or too small.

2022-11-04 20:12:26 +00:00

|

|

|

transform: GlobalTransform,

|

2021-11-19 21:16:58 +00:00

|

|

|

shadows_enabled: bool,

|

2021-07-16 22:41:56 +00:00

|

|

|

shadow_depth_bias: f32,

|

|

|

|

|

shadow_normal_bias: f32,

|

2023-01-25 12:35:39 +00:00

|

|

|

cascade_shadow_config: CascadeShadowConfig,

|

2024-02-12 15:02:24 +00:00

|

|

|

cascades: EntityHashMap<Vec<Cascade>>,

|

|

|

|

|

frusta: EntityHashMap<Vec<Frustum>>,

|

light renderlayers (#10742)

# Objective

add `RenderLayers` awareness to lights. lights default to

`RenderLayers::layer(0)`, and must intersect the camera entity's

`RenderLayers` in order to affect the camera's output.

note that lights already use renderlayers to filter meshes for shadow

casting. this adds filtering lights per view based on intersection of

camera layers and light layers.

fixes #3462

## Solution

PointLights and SpotLights are assigned to individual views in

`assign_lights_to_clusters`, so we simply cull the lights which don't

match the view layers in that function.

DirectionalLights are global, so we

- add the light layers to the `DirectionalLight` struct

- add the view layers to the `ViewUniform` struct

- check for intersection before processing the light in

`apply_pbr_lighting`

potential issue: when mesh/light layers are smaller than the view layers

weird results can occur. e.g:

camera = layers 1+2

light = layers 1

mesh = layers 2

the mesh does not cast shadows wrt the light as (1 & 2) == 0.

the light affects the view as (1+2 & 1) != 0.

the view renders the mesh as (1+2 & 2) != 0.

so the mesh is rendered and lit, but does not cast a shadow.

this could be fixed (so that the light would not affect the mesh in that

view) by adding the light layers to the point and spot light structs,

but i think the setup is pretty unusual, and space is at a premium in

those structs (adding 4 bytes more would reduce the webgl point+spot

light max count to 240 from 256).

I think typical usage is for cameras to have a single layer, and

meshes/lights to maybe have multiple layers to render to e.g. minimaps

as well as primary views.

if there is a good use case for the above setup and we should support

it, please let me know.
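The camera/light/mesh case above can be reproduced with plain bitmasks. This sketch uses a hypothetical `LayerMask` type rather than Bevy's `RenderLayers`, and assumes that bit `n` being set means "on layer `n`" and that two masks interact when their bitwise AND is non-zero.

```rust
/// Hypothetical bitmask of render layers: bit `n` set means "on layer `n`".
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
pub struct LayerMask(pub u32);

impl LayerMask {
    /// A mask containing only layer `n`.
    pub const fn layer(n: u32) -> Self {
        LayerMask(1 << n)
    }

    /// This mask with layer `n` added.
    pub const fn with(self, n: u32) -> Self {
        LayerMask(self.0 | (1 << n))
    }

    /// True when the two masks share at least one layer.
    pub const fn intersects(self, other: Self) -> bool {
        self.0 & other.0 != 0
    }
}

/// The three independent checks from the example in the text.
pub struct SceneOutcome {
    pub light_affects_view: bool,
    pub view_renders_mesh: bool,
    pub mesh_casts_shadow: bool,
}

pub fn evaluate(camera: LayerMask, light: LayerMask, mesh: LayerMask) -> SceneOutcome {
    SceneOutcome {
        light_affects_view: camera.intersects(light),
        view_renders_mesh: camera.intersects(mesh),
        mesh_casts_shadow: light.intersects(mesh),
    }
}
```

With `camera = LayerMask::layer(1).with(2)`, `light = LayerMask::layer(1)` and `mesh = LayerMask::layer(2)`, the first two checks pass and the third fails: the mesh is rendered and lit but casts no shadow.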

---

## Migration Guide

Lights no longer affect all `RenderLayers` by default, now like cameras

and meshes they default to `RenderLayers::layer(0)`. To recover the

previous behaviour and have all lights affect all views, add a

`RenderLayers::all()` component to the light entity.

2023-12-12 19:45:37 +00:00

|

|

|

render_layers: RenderLayers,

|

2021-06-02 02:59:17 +00:00

|

|

|

}

|

|

|

|

|

|

Migrate to encase from crevice (#4339)

# Objective

- Unify buffer APIs

- Also see #4272

## Solution

- Replace vendored `crevice` with `encase`

---

## Changelog

Changed `StorageBuffer`

Added `DynamicStorageBuffer`

Replaced `UniformVec` with `UniformBuffer`

Replaced `DynamicUniformVec` with `DynamicUniformBuffer`

## Migration Guide

### `StorageBuffer`

removed `set_body()`, `values()`, `values_mut()`, `clear()`, `push()`, `append()`

added `set()`, `get()`, `get_mut()`

### `UniformVec` -> `UniformBuffer`

renamed `uniform_buffer()` to `buffer()`

removed `len()`, `is_empty()`, `capacity()`, `push()`, `reserve()`, `clear()`, `values()`

added `set()`, `get()`

### `DynamicUniformVec` -> `DynamicUniformBuffer`

renamed `uniform_buffer()` to `buffer()`

removed `capacity()`, `reserve()`

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

2022-05-18 21:09:21 +00:00

|

|

|

#[derive(Copy, Clone, ShaderType, Default, Debug)]

|

2021-07-08 02:49:33 +00:00

|

|

|

pub struct GpuPointLight {

|

2022-07-08 19:57:43 +00:00

|

|

|

// For point lights: the lower-right 2x2 values of the projection matrix [2][2] [2][3] [3][2] [3][3]

|

|

|

|

|

// For spot lights: 2 components of the direction (x,z), spot_scale and spot_offset

|

|

|

|

|

light_custom_data: Vec4,

|

Clustered forward rendering (#3153)

# Objective

Implement clustered-forward rendering.

## Solution

~~FIXME - in the interest of keeping the merge train moving, I'm submitting this PR now before the description is ready. I want to add in some comments into the code with references for the various bits and pieces and I want to describe some of the key decisions I made here. I'll do that as soon as I can.~~ Anyone reviewing is welcome to add review comments where you want to know more about how something or other works.

* The summary of the technique is that the view frustum is divided into a grid of sub-volumes called clusters, point lights are tested against each of the clusters to see if they would affect that volume within the scene and if so, added to a list of lights affecting that cluster. Then when shading a fragment which is a point on the surface of a mesh within the scene, the point is mapped to a cluster and only the lights affecting that cluster are used in lighting calculations. This brings huge performance and scalability benefits as most of the time lights are placed so that there are not that many that overlap each other in terms of their sphere of influence, but there may be many distinct point lights visible in the scene. Doing all the lighting calculations for all visible lights in the scene for every pixel on the screen quickly becomes a performance limitation. Clustered forward rendering allows us to make an approximate list of lights that affect each pixel, indeed each surface in the scene (as it works along the view z axis too, unlike tiled/forward+).

* WebGL2 is a platform we want to support and it does not support storage buffers. Uniform buffer bindings are limited to a maximum of 16384 bytes per binding. I used bit shifting and masking to pack the cluster light lists and various indices into a uniform buffer and the 16kB limit is very likely the first bottleneck in scaling the number of lights in a scene at the moment if the lights can affect many clusters due to their range or proximity to the camera (there are a lot of clusters close to the camera, which is an area for improvement). We could store the information in textures instead of uniform buffers to remove this bottleneck though I don’t know if there are performance implications to reading from textures instead of uniform buffers.

* Because of the uniform buffer binding size limitations we can support a maximum of 256 lights with the current size of the PointLight struct

* The z-slicing method (i.e. the mapping from view space z to a depth slice which defines the near and far planes of a cluster) is using the Doom 2016 method. I need to add comments with references to this. It’s an exponential function that simplifies well for the purposes of optimising the fragment shader. xy grid divisions are regular in screen space.

* Some optimisation work was done on the allocation of lights to clusters, which involves intersection tests, and for this number of clusters and lights the system has insignificant cost using a fairly naïve algorithm. I think for more lights / finer-grained clusters we could use a BVH, but at some point it would be just much better to use compute shaders and storage buffers.

* Something else to note is that it is absolutely infeasible to use plain cube map point light shadow mapping for many lights. It does not scale in terms of performance nor memory usage. There are some interesting methods I saw discussed in reference material that I will add a link to which render and update shadow maps piece-wise, but they also need compute shaders to work well. Basically for now you need to sacrifice point light shadows for all but a handful of point lights if you don’t want to kill performance. I set the limit to 10 but that’s just what we had from before where 10 was the maximum number of point lights before this PR.

* I added a couple of debug visualisations behind a shader def that were useful for seeing performance impact of light distribution - I should make the debug mode configurable without modifying the shader code. One mode shows the number of lights affecting each cluster by tinting toward red for few lights or green for many lights (maxes out at 16, but not sure that’s a reasonable max). The other shows which cluster the surface at a fragment belongs to by tinting it with a randomish colour. This can help to understand deeper performance issues due to screen space tiles spanning multiple clusters in depth with divergent shader execution times.
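The exponential z-slicing mentioned in the list above can be sketched as follows. This is the commonly cited form of that mapping, written under the assumptions that `view_z` is a positive distance along the view axis, that `0 < near < far`, and that `slice_count >= 1`. The function name is hypothetical, and the constants used in the actual shader are not claimed here.

```rust
/// Map a positive view-space depth to a depth-slice index using exponential
/// slicing: slice = floor(ln(z) * scale + bias), where
///   scale = N / ln(far / near)
///   bias  = -N * ln(near) / ln(far / near)
/// Both `scale` and `bias` depend only on the camera and could be computed
/// once per view, leaving one logarithm and one multiply-add per fragment.
pub fn depth_to_slice(view_z: f32, near: f32, far: f32, slice_count: u32) -> u32 {
    let log_ratio = (far / near).ln();
    let scale = slice_count as f32 / log_ratio;
    let bias = -(slice_count as f32) * near.ln() / log_ratio;
    let slice = (view_z.ln() * scale + bias).floor();
    // Depths outside [near, far) are clamped into the valid slice range.
    slice.clamp(0.0, (slice_count - 1) as f32) as u32
}
```

With `near = 1`, `far = 16` and four slices, the slice boundaries fall at depths 1, 2, 4, 8 and 16, so slices close to the camera are thin and distant ones are thick.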

Also, there are more things that could be done as improvements, and I will document those somewhere (I'm not sure where will be the best place... in a todo alongside the code, a GitHub issue, somewhere else?) but I think it works well enough and brings significant performance and scalability benefits that it's worth integrating already now and then iterating on.

* Calculate the light’s effective range based on its intensity and physical falloff and either just use this, or take the minimum of the user-supplied range and this. This would avoid unnecessary lighting calculations for clusters that cannot be affected. This would need to take into account HDR tone mapping as in my not-fully-understanding-the-details understanding, the threshold is relative to how bright the scene is.

* Improve the z-slicing to use a larger first slice.

* More gracefully handle the cluster light list uniform buffer binding size limitations by prioritising which lights are included (some heuristic for most significant like closest to the camera, brightest, affecting the most pixels, …)

* Switch to using a texture instead of uniform buffer

* Figure out the / a better story for shadows

I will also probably add an example that demonstrates some of the issues:

* What situations exhaust the space available in the uniform buffers

* Light range too large making lights affect many clusters and so exhausting the space for the lists of lights that affect clusters

* Light range set to be too small producing visible artifacts where clusters the light would physically affect are not affected by the light

* Perhaps some performance issues

* How many lights can be closely packed or affect large portions of the view before performance drops?
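The 256-light ceiling mentioned earlier follows from the 16384-byte uniform binding limit. A small sketch of the arithmetic, assuming each light record occupies 64 bytes (three 16-byte vectors plus four 4-byte scalars); that size is an assumption about the struct layout and is not stated in the text above:

```rust
/// Maximum uniform buffer binding size quoted in the text, in bytes.
pub const MAX_UNIFORM_BINDING_BYTES: usize = 16384;

/// Number of whole light records that fit in a single uniform binding.
/// `bytes_per_light` is supplied by the caller; the 64-byte figure used in
/// the surrounding text is an assumption about the GPU struct layout.
pub const fn max_lights(bytes_per_light: usize) -> usize {
    MAX_UNIFORM_BINDING_BYTES / bytes_per_light
}
```

`max_lights(64)` gives 256, so any growth in the per-light record directly lowers the number of lights a single binding can hold.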

2021-12-09 03:08:54 +00:00

|

|

|

color_inverse_square_range: Vec4,

|

|

|

|

|

position_radius: Vec4,

|

2021-11-19 21:16:58 +00:00

|

|

|

flags: u32,

|

2021-07-16 22:41:56 +00:00

|

|

|

shadow_depth_bias: f32,

|

|

|

|

|

shadow_normal_bias: f32,

|

2022-07-08 19:57:43 +00:00

|

|

|

spot_light_tan_angle: f32,

|

2021-07-08 02:49:33 +00:00

|

|

|

}

|

|

|

|

|

|

Migrate to encase from crevice (#4339)

# Objective

- Unify buffer APIs

- Also see #4272

## Solution

- Replace vendored `crevice` with `encase`

---

## Changelog

Changed `StorageBuffer`

Added `DynamicStorageBuffer`

Replaced `UniformVec` with `UniformBuffer`

Replaced `DynamicUniformVec` with `DynamicUniformBuffer`

## Migration Guide

### `StorageBuffer`

removed `set_body()`, `values()`, `values_mut()`, `clear()`, `push()`, `append()`

added `set()`, `get()`, `get_mut()`

### `UniformVec` -> `UniformBuffer`

renamed `uniform_buffer()` to `buffer()`

removed `len()`, `is_empty()`, `capacity()`, `push()`, `reserve()`, `clear()`, `values()`

added `set()`, `get()`

### `DynamicUniformVec` -> `DynamicUniformBuffer`

renamed `uniform_buffer()` to `buffer()`

removed `capacity()`, `reserve()`

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

2022-05-18 21:09:21 +00:00

|

|

|

#[derive(ShaderType)]

|

|

|

|

|

pub struct GpuPointLightsUniform {

|

|

|

|

|

data: Box<[GpuPointLight; MAX_UNIFORM_BUFFER_POINT_LIGHTS]>,

|

|

|

|

|

}

|

|

|

|

|

|

|

|

|

|

impl Default for GpuPointLightsUniform {

|

|

|

|

|

fn default() -> Self {

|

|

|

|

|

Self {

|

|

|

|

|

data: Box::new([GpuPointLight::default(); MAX_UNIFORM_BUFFER_POINT_LIGHTS]),

|

|

|

|

|

}

|

|

|

|

|

}

|

|

|

|

|

}

|

|

|

|

|

|

|

|

|

|

#[derive(ShaderType, Default)]

|

|

|

|

|

pub struct GpuPointLightsStorage {

|

|

|

|

|

#[size(runtime)]

|

|

|

|

|

data: Vec<GpuPointLight>,

|

|

|

|

|

}

|

|

|

|

|

|

2022-04-07 16:16:35 +00:00

|

|

|

pub enum GpuPointLights {

|

2022-05-18 21:09:21 +00:00

|

|

|

Uniform(UniformBuffer<GpuPointLightsUniform>),

|

|

|

|

|

Storage(StorageBuffer<GpuPointLightsStorage>),

|

2022-04-07 16:16:35 +00:00

|

|

|

}

|

|

|

|

|

|

|

|

|

|

impl GpuPointLights {

|

|

|

|

|

fn new(buffer_binding_type: BufferBindingType) -> Self {

|

|

|

|

|

match buffer_binding_type {

|

|

|

|

|

BufferBindingType::Storage { .. } => Self::storage(),

|

|

|

|

|

BufferBindingType::Uniform => Self::uniform(),

|

|

|

|

|

}

|

|

|

|

|

}

|

|

|

|

|

|

|

|

|

|

fn uniform() -> Self {

|

2022-05-18 21:09:21 +00:00

|

|

|

Self::Uniform(UniformBuffer::default())

|

2022-04-07 16:16:35 +00:00

|

|

|

}

|

|

|

|

|

|

|

|

|

|

fn storage() -> Self {

|

2022-05-18 21:09:21 +00:00

|

|

|

Self::Storage(StorageBuffer::default())

|

2022-04-07 16:16:35 +00:00

|

|

|

}

|

|

|

|

|

|

2022-05-18 21:09:21 +00:00

|

|

|

fn set(&mut self, mut lights: Vec<GpuPointLight>) {

|

2022-04-07 16:16:35 +00:00

|

|

|

match self {

|

Migrate to encase from crevice (#4339)

# Objective

- Unify buffer APIs

- Also see #4272

## Solution

- Replace vendored `crevice` with `encase`

---

## Changelog

Changed `StorageBuffer`

Added `DynamicStorageBuffer`

Replaced `UniformVec` with `UniformBuffer`

Replaced `DynamicUniformVec` with `DynamicUniformBuffer`

## Migration Guide

### `StorageBuffer`

removed `set_body()`, `values()`, `values_mut()`, `clear()`, `push()`, `append()`

added `set()`, `get()`, `get_mut()`

### `UniformVec` -> `UniformBuffer`

renamed `uniform_buffer()` to `buffer()`