# Description

Makes IR the default evaluator, in preparation to remove the non-IR

evaluator in a future release.

# User-Facing Changes

* Remove `NU_USE_IR` option

* Add `NU_DISABLE_IR` option

* IR is enabled unless `NU_DISABLE_IR` is set

# After Submitting

- [ ] release notes

# Description

Fixes a bug in the IR for `try` to match that of the regular evaluator

(continuing from #13515):

```nushell

# without IR:

try { ^false } catch { 'caught' } # == 'caught'

# with IR:

try { ^false } catch { 'caught' } # error, non-zero exit code

```

In this PR, both now evaluate to `caught`. For the implementation, I had

to add another instruction, and feel free to suggest better

alternatives. In the future, it might be possible to get rid of this

extra instruction.

# User-Facing Changes

Bug fix, `try { ^false } catch { 'caught' }` now works in IR.

# Description

This PR makes it so that non-zero exit codes and termination by signal

are treated as a normal `ShellError`. Currently, these are silent

errors. That is, if an external command fails, then its code block is

aborted, but the parent block can sometimes continue execution. E.g.,

see #8569 and this example:

```nushell

[1 2] | each { ^false }

```

Before this would give:

```

╭───┬──╮

│ 0 │ │

│ 1 │ │

╰───┴──╯

```

Now, this shows an error:

```

Error: nu::shell::eval_block_with_input

× Eval block failed with pipeline input

╭─[entry #1:1:2]

1 │ [1 2] | each { ^false }

· ┬

· ╰── source value

╰────

Error: nu::shell::non_zero_exit_code

× External command had a non-zero exit code

╭─[entry #1:1:17]

1 │ [1 2] | each { ^false }

· ──┬──

· ╰── exited with code 1

╰────

```

This PR fixes #12874, fixes #5960, fixes #10856, and fixes #5347. This

PR also partially addresses #10633 and #10624 (only the last command of

a pipeline is currently checked). It looks like #8569 is already fixed,

but this PR will make sure it is definitely fixed (fixes #8569).

# User-Facing Changes

- Non-zero exit codes and termination by signal now cause an error to be

thrown.

- The error record value passed to a `catch` block may now have an

`exit_code` column containing the integer exit code if the error was due

to an external command.

- Adds new config values, `display_errors.exit_code` and

`display_errors.termination_signal`, which determine whether an error

message should be printed in the respective error cases. For

non-interactive sessions, these are set to `true`, and for interactive

sessions `display_errors.exit_code` is false (via the default config).
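
To make the new semantics concrete, here is a minimal sketch in plain Rust of treating a non-zero exit code or a termination signal as an ordinary error that aborts the enclosing loop. The names `Exit`, `ExternalError`, `check_exit`, and `each_checked` are hypothetical and only illustrate the idea; the real `ShellError` variants carry spans and more context.

```rust
/// Hypothetical stand-in for the two error cases described above.
#[derive(Debug, PartialEq)]
enum ExternalError {
    NonZeroExitCode(i32),
    TerminatedBySignal(i32),
}

/// Hypothetical summary of how an external command finished.
#[derive(Debug, Clone, Copy)]
enum Exit {
    Code(i32),
    Signal(i32),
}

/// Anything other than exit code 0 becomes a regular error.
fn check_exit(exit: Exit) -> Result<(), ExternalError> {
    match exit {
        Exit::Code(0) => Ok(()),
        Exit::Code(code) => Err(ExternalError::NonZeroExitCode(code)),
        Exit::Signal(signal) => Err(ExternalError::TerminatedBySignal(signal)),
    }
}

/// Process each item in turn and stop at the first failure, similar in
/// spirit to `[1 2] | each { ^false }` now reporting an error instead of
/// silently producing empty rows.
fn each_checked(exits: &[Exit]) -> Result<usize, ExternalError> {
    let mut completed = 0;
    for exit in exits {
        check_exit(*exit)?;
        completed += 1;
    }
    Ok(completed)
}
```

Because the failure is a normal `Err`, the caller decides whether to propagate it or handle it, which is what a `catch` block does with the `exit_code` column.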

# Tests

Added a few tests.

# After Submitting

- Update docs and book.

- Future work:

- Error if other external commands besides the last in a pipeline exit

with a non-zero exit code. Then, deprecate `do -c` since this will be

the default behavior everywhere.

- Add a better mechanism for exit codes and deprecate

`$env.LAST_EXIT_CODE` (it's buggy).

# Description

After merging #13788, I get an error message which says that

`use_grid_icons` is invalid.

I think it's good to report the specific error, and guide the user to delete

it.

# Description

After looking at #13751 I noticed that the config setting

`use_grid_icons` seems out of place. So, I removed it from the config

and made it a parameter to the `grid` command.

# Description

This PR fixes #13732. However, I don't think it's a proper fix.

1. It doesn't really show what the problem is.

2. It kind of side-steps the error entirely.

I do think the change in span.rs may be valid because I don't think

span.end should ever be 0. In the example in #13732 the span end was

always 0 and so that made contains_span() return true, which seems like

a false positive.

The `checked_sub()` in ide.rs kind of just stops it from failing

out loud.
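
For readers who want to see the two pieces side by side, here is a small self-contained sketch. The `Span` type, both `contains_span_*` methods, and `offset_before` are hypothetical simplifications, and the guarded check is only my assumption about the shape of the change, not a copy of span.rs or ide.rs.

```rust
/// Hypothetical, simplified span; the real type lives in `nu-protocol`.
#[derive(Debug, Clone, Copy, PartialEq)]
struct Span {
    start: usize,
    end: usize,
}

impl Span {
    /// Naive containment: an all-zero span passes for any `self` that
    /// starts at 0, which is the false positive described above.
    fn contains_span_naive(&self, other: Span) -> bool {
        self.start <= other.start && other.end <= self.end
    }

    /// Assumed guard: a span ending at 0 never counts as contained.
    fn contains_span_guarded(&self, other: Span) -> bool {
        other.end != 0 && self.contains_span_naive(other)
    }
}

/// `checked_sub` yields `None` on underflow instead of panicking in debug
/// builds, so the caller has to decide what a missing offset means.
fn offset_before(position: usize, offset: usize) -> Option<usize> {
    position.checked_sub(offset)
}
```

The `None` from `offset_before` is exactly the "quiet" failure mentioned above: nothing crashes, but the underlying reason for the bad offset is still not reported.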

I'll leave it to smarter folks than me to land this if they think it's

worthy.

Discovered by @cptpiepmatz that #13749 broke the standalone check for

`nu-protocol`

Explicit use of the feature as workspace root also disables all features

for `serde`. Alternatively we could reconsider this there.

# Description

Cleans up and refactors the config code using the `IntoValue` macro.

Shoutout to @cptpiepmatz for making the macro!

# User-Facing Changes

Should be none.

# After Submitting

Somehow refactor the reverse transformation.

Closes #13687

Closes #13686

# Description

Light refactoring of `send_request` in `client.rs`. In the end there are

more lines but now the logic is more concise and facilitates adding new

conditions in the future. Unit tests ran fine and I tested a few cases

manually.

Cool project btw, I'll be using nushell from now on.

# Description

This PR allows the helper attribute `nu_value(rename = "...")` to be

used on struct fields and enum variants. This allows renaming keys and

variants just like [`#[serde(rename =

"name")]`](https://serde.rs/field-attrs.html#rename). This has no

separate variants for `IntoValue` or `FromValue`; both need to use the

same name (but I think this shouldn't be an issue for now).

# User-Facing Changes

Users of the derive macros `IntoValue` and `FromValue` may now use

`#[nu_value(rename = "...")]` to rename single fields, but no already

existing code will break.

# Description

This changes the behavior of `tee` to be more transparent when given a

value that isn't a list or range. Previously, anything that wasn't a

byte stream would be converted to a list stream using the iterator

implementation, which led to some surprising results. Instead, now, if

the value is a string or binary, it will be treated the same way a byte

stream is, and the output of `tee` is a byte stream instead of the

original value. This is done so that we can synchronize with the other

thread on collect, and potentially capture any error produced by the

closure.

For values that can't be converted to streams, the closure is just run

with a clone of the value instead on another thread. Because we can't

wait for the other thread, there is no way to send an error back to the

original thread, so instead it's just written to stderr using

`report_error_new()`.
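
The "synchronize on collect" idea for byte streams can be sketched with only the standard library. This is an illustration under my own assumptions, not the actual `tee` implementation: `tee_bytes` and `reject_empty` are hypothetical names, chunks are plain `Vec<u8>`, and errors are plain strings.

```rust
use std::sync::mpsc::{channel, Receiver};
use std::thread;

/// Pass every chunk through unchanged while also sending a copy to `side`,
/// which runs on another thread. Joining at the end lets an error from the
/// side closure reach the caller.
fn tee_bytes<F>(chunks: Vec<Vec<u8>>, side: F) -> Result<Vec<u8>, String>
where
    F: FnOnce(Receiver<Vec<u8>>) -> Result<(), String> + Send + 'static,
{
    let (tx, rx) = channel();
    let handle = thread::spawn(move || side(rx));

    let mut collected = Vec::new();
    for chunk in chunks {
        collected.extend_from_slice(&chunk);
        // Ignore send errors: the side closure may have stopped reading.
        let _ = tx.send(chunk);
    }
    // Closing the channel ends the side closure's receive loop.
    drop(tx);

    // Wait for the other thread and surface its error, if any.
    handle
        .join()
        .map_err(|_| "side thread panicked".to_string())??;
    Ok(collected)
}

/// Example side closure: fails as soon as it sees an empty chunk.
fn reject_empty(rx: Receiver<Vec<u8>>) -> Result<(), String> {
    for chunk in rx {
        if chunk.is_empty() {
            return Err("empty chunk".to_string());
        }
    }
    Ok(())
}
```

For a value that cannot be streamed there is no collect step to join on, which is why that case can only report the error on stderr.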

There are a couple of follow up edge cases I see where byte streams

aren't necessarily treated exactly the same way strings are, but this

should mostly be a good experience.

Fixes #13489.

# User-Facing Changes

Breaking change.

- `tee` now outputs and sends string/binary stream for string/binary

input.

- `tee` now outputs and sends the original value for any other input

other than lists/ranges.

# Tests + Formatting

Added for new behavior.

# After Submitting

- [ ] release notes: breaking change, command change

Removing the `std` feature as well would drop some dependencies tied to

`rust_decimal` from the `Cargo.lock` but unclear to me what the actual

impact on compile times is.

We may want to consider dropping the `byte-unit` dependency altogether

as we have a significant fraction of our own logic to support the byte

units with 1024 and 1000 prefixes. Not sure which fraction is covered by

us or the dependency.

# Description

Implements `IntoValue` for `&str` and `DateTime` as well as other

nushell types like `Record` and `Closure`. Also allows `HashMap`s with

keys besides `String` to implement `IntoValue`.

# Description

`cargo` somewhat recently gained the capability to store `lints`

settings for the crate and workspace, that can override the defaults

from `rustc` and `clippy` lints. This means we can enforce some lints

without having to actively pass them to clippy via `cargo clippy -- -W

...`. So users just forking the repo have an easier time following the

same requirements as our CI.

## Limitation

An exception that remains is that those lints apply to both the primary

code base and the tests. Thus we can't include e.g. `unwrap_used`

without generating noise in the tests. Here the setup in the CI remains

the most helpful.

## Included lints

- Add `clippy::unchecked_duration_subtraction` (added by #12549)

# User-Facing Changes

Running `cargo clippy --workspace` should be closer to the CI. This has

benefits for editor configured runs of clippy and saves you from having

to use `toolkit` to be close to CI in more cases.

<!--

if this PR closes one or more issues, you can automatically link the PR

with

them by using one of the [*linking

keywords*](https://docs.github.com/en/issues/tracking-your-work-with-issues/linking-a-pull-request-to-an-issue#linking-a-pull-request-to-an-issue-using-a-keyword),

e.g.

- this PR should close #xxxx

- fixes #xxxx

you can also mention related issues, PRs or discussions!

-->

# Description

<!--

Thank you for improving Nushell. Please, check our [contributing

guide](../CONTRIBUTING.md) and talk to the core team before making major

changes.

Description of your pull request goes here. **Provide examples and/or

screenshots** if your changes affect the user experience.

-->

@sholderbach mentioned that I introduced `convert_case` as a dependency

while we already had `heck` for case conversion. So in this PR I replaced

the use of `convert_case` with `heck`. Mostly I rebuilt the `convert_case`

API with `heck` to work with it as I like the API of `convert_case` more

than `heck`.

# User-Facing Changes

<!-- List of all changes that impact the user experience here. This

helps us keep track of breaking changes. -->

Nothing changed, the use of `convert_case` wasn't exposed anywhere and

all case conversions are still available.

# Tests + Formatting

<!--

Don't forget to add tests that cover your changes.

Make sure you've run and fixed any issues with these commands:

- `cargo fmt --all -- --check` to check standard code formatting (`cargo

fmt --all` applies these changes)

- `cargo clippy --workspace -- -D warnings -D clippy::unwrap_used` to

check that you're using the standard code style

- `cargo test --workspace` to check that all tests pass (on Windows make

sure to [enable developer

mode](https://learn.microsoft.com/en-us/windows/apps/get-started/developer-mode-features-and-debugging))

- `cargo run -- -c "use toolkit.nu; toolkit test stdlib"` to run the

tests for the standard library

> **Note**

> from `nushell` you can also use the `toolkit` as follows

> ```bash

> use toolkit.nu # or use an `env_change` hook to activate it

automatically

> toolkit check pr

> ```

-->

No new tests required but my tests in `test_derive` captured some errors

I made while developing this change, (hurray, tests work 🎉)

- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- 🟢 `toolkit test`

- 🟢 `toolkit test stdlib`

# After Submitting

<!-- If your PR had any user-facing changes, update [the

documentation](https://github.com/nushell/nushell.github.io) after the

PR is merged, if necessary. This will help us keep the docs up to date.

-->

# Description

Using derived `IntoValue` and `FromValue` implementations on structs

with named fields currently produces `Value::Record`s where each key is

the field name of the Rust struct. For records like the `$nu` constant, that

won't work as this record uses `kebab-case` for its keys. To accommodate

this, I upgraded the `#[nu_value(rename_all = "...")]` helper attribute

to also work on structs with named fields which will rename the keys via

the same case conversion as the enums already have.

# User-Facing Changes

Users of these macros may choose different key styles for their

`Value` representation.

# Tests + Formatting

I added the same test suite as enums already have and updated the traits

documentation with more examples that also pass the doc test.

# After Submitting

I played around with the `$nu` constant but got stuck at the point that

these keys are kebab-cased. With this, I can play around more with it.

# Description

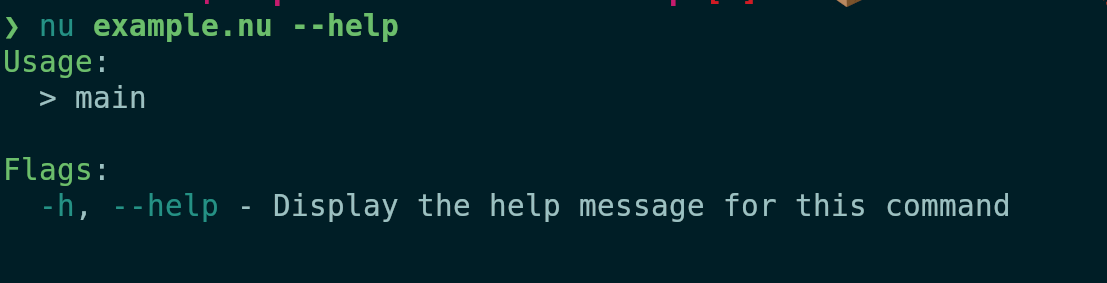

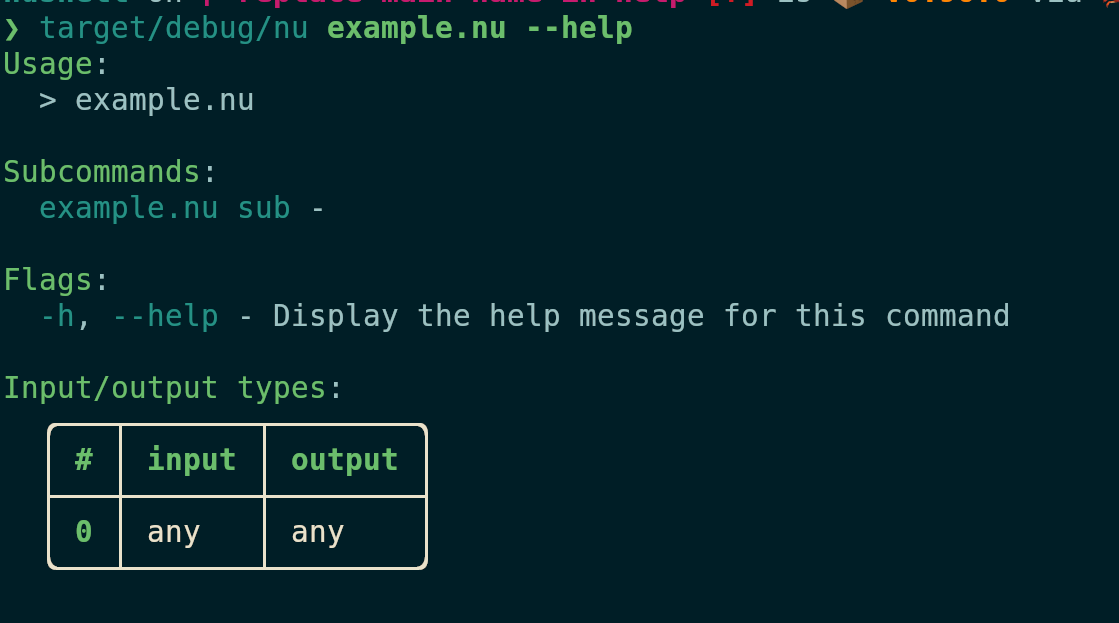

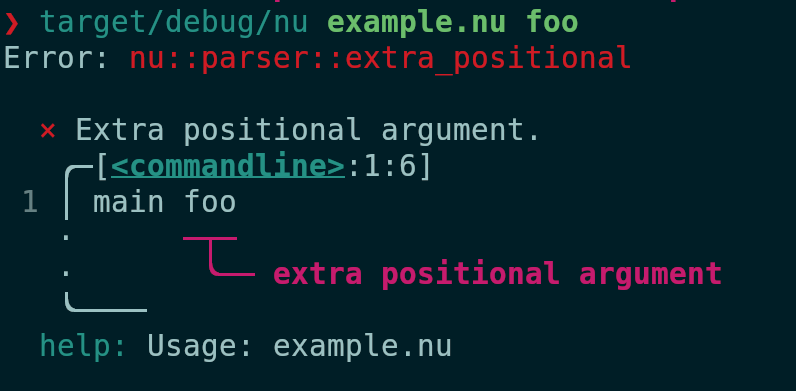

Currently the parser and the documentation generation use the signature

of the command, which means that it doesn't pick up on the changed name

of the `main` block, and therefore shows the name of the command as

"main" and doesn't find the subcommands. This PR changes the

aforementioned places to use the block signature to fix these issues.

This closes #13397. Incidentally it also causes input/output types to be

shown in the help, which is kinda pointless for scripts since they don't

operate on structured data but maybe not worth the effort to remove.

# User-Facing Changes

```

# example.nu

export def main [] { help main }

export def 'main sub' [] { print 'sub' }

```

Before:

After:

# Tests

Tests are still missing for the subcommands and the input/output types

---------

Co-authored-by: Stefan Holderbach <sholderbach@users.noreply.github.com>

# Description

In this PR I expanded the helper attribute `#[nu_value]` on

`#[derive(FromValue)]`. It now allows the usage of `#[nu_value(type_name

= "...")]` to set a type name for the `FromValue::expected_type`

implementation. Currently it only uses the default implementation but

I'd like to change that without having to manually implement the entire

trait on my own.

# User-Facing Changes

Users that derive `FromValue` may now change the name of the expected

type.

# Tests + Formatting

I added some tests that check if this feature works and updated the

documentation about the derive macro.

- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- 🟢 `toolkit test`

- 🟢 `toolkit test stdlib`

# After Submitting

# Description

I was working with byte collections like `Vec<u8>` and

[`bytes::Bytes`](https://docs.rs/bytes/1.7.1/bytes/struct.Bytes.html),

neither of which can currently be used directly in a struct that

derives `IntoValue` and `FromValue` at the same time. The `Vec<u8>` will

convert itself into a `Value::List` but expects a `Value::String` or

`Value::Binary` to load from. I now also implemented that it can load

from `Value::List` just like the other `Vec<uX>` versions. For further

work with byte collections the type `bytes::Bytes` is widely used,

therefore I added an implementation for it. `bytes` is already part of

the dependency graph as many crates (more than 5000 on crates.io) use

it.
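
As a rough illustration of the loading side, the sketch below accepts binary, string, and list input for a byte vector. The `Value` enum here is a hypothetical, heavily reduced stand-in for `nu_protocol::Value`, and `bytes_from_value` is not the real `FromValue` implementation; it only shows the three accepted shapes.

```rust
use std::convert::TryFrom;

/// Hypothetical, reduced stand-in for `nu_protocol::Value`.
#[derive(Debug, Clone, PartialEq)]
enum Value {
    Int(i64),
    String(String),
    Binary(Vec<u8>),
    List(Vec<Value>),
}

/// Load a byte vector from binary, string, or a list of small ints.
fn bytes_from_value(value: &Value) -> Result<Vec<u8>, String> {
    match value {
        Value::Binary(bytes) => Ok(bytes.clone()),
        Value::String(text) => Ok(text.as_bytes().to_vec()),
        Value::List(items) => items
            .iter()
            .map(|item| match item {
                // Each list element must be an int in the range 0..=255.
                Value::Int(n) => {
                    u8::try_from(*n).map_err(|_| format!("{} does not fit in a byte", n))
                }
                other => Err(format!("expected int, got {:?}", other)),
            })
            .collect(),
        other => Err(format!("cannot convert {:?} to bytes", other)),
    }
}
```

With the list case accepted, a value produced by the list-based `IntoValue` direction can be read back again, which is what makes deriving both traits on the same struct workable.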

# User-Facing Changes

Users of `nu-protocol` as a library, e.g. plugin developers, can now use

byte collections more easily in their data structures and derive

`IntoValue` and `FromValue` for them.

# Tests + Formatting

I added a few tests that check that these byte collections are correctly

translated to and from `Value`. They live in `test_derive.rs` as part of

the `ByteContainer` and I also explicitly tested that `FromValue` for

`Vec<u8>` works as expected.

- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- 🟢 `toolkit test`

- 🟢 `toolkit test stdlib`

# After Submitting

Maybe it should be explored if `Value::Binary` should use `bytes::Bytes`

instead of `Vec<u8>`.

# Description

The meaning of the word usage is specific to describing how a command

function is *used* and not a synonym for general description. Usage can

be used to describe the SYNOPSIS or EXAMPLES sections of a man page

where the permitted argument combinations are shown or example *uses*

are given.

Let's not confuse people and call it what it is: a description.

Our `help` command already creates its own *Usage* section based on the

available arguments and doesn't refer to the description with usage.

# User-Facing Changes

`help commands` and `scope commands` will now use `description` or

`extra_description`

`usage` -> `description`

`extra_usage` -> `extra_description`

Breaking change in the plugin protocol:

In the signature record communicated with the engine.

`usage` -> `description`

`extra_usage` -> `extra_description`

The same rename also takes place for the methods on

`SimplePluginCommand` and `PluginCommand`

# Tests + Formatting

- Updated plugin protocol specific changes

# After Submitting

- [ ] update plugin protocol doc

# Description

Fixes #11267

Shifting by a `shift >= num_bits` is undefined in the underlying

operation. Previously we also had an overflow on negative shifts for the

operators `bit-shl` and `bit-shr`

Furthermore I found a severe bug in the implementation of shifting of

`binary` data with the commands `bits shl` and `bits shr`, this

categorically produced incorrect results with shifts that were not

`shift % 4 == 0`. `bits shr` also was able to produce outputs with

different size to the input if the shift was exceeding the length of the

input data by more than a byte.
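
Both problems can be illustrated with a short standard-library sketch. `bit_shl` and `shl_bytes` are hypothetical helper names, and the exact boundary at which a shift becomes an error is my assumption here; the code only demonstrates a checked integer shift and a byte-wise shift that handles shift amounts that are not byte-aligned while keeping the output length equal to the input length.

```rust
use std::convert::TryFrom;

/// Shift an integer left, rejecting negative or too-large shift amounts
/// instead of relying on undefined or wrapping behavior.
fn bit_shl(value: i64, shift: i64) -> Result<i64, String> {
    let bits = u32::try_from(shift).map_err(|_| format!("invalid shift amount: {}", shift))?;
    value
        .checked_shl(bits)
        .ok_or_else(|| format!("shift of {} bits is out of range for a 64-bit int", bits))
}

/// Shift a big-endian byte string left by `bits`, filling with zeros.
fn shl_bytes(input: &[u8], bits: usize) -> Result<Vec<u8>, String> {
    if bits >= input.len() * 8 {
        return Err(format!("cannot shift {} bytes by {} bits", input.len(), bits));
    }
    let byte_shift = bits / 8;
    let bit_shift = (bits % 8) as u32;
    let mut out = vec![0u8; input.len()];
    for i in 0..input.len() - byte_shift {
        let src = i + byte_shift;
        // High part comes from the source byte, low part from its neighbor.
        let high = input[src] << bit_shift;
        let low = if bit_shift > 0 && src + 1 < input.len() {
            input[src + 1] >> (8 - bit_shift)
        } else {
            0
        };
        out[i] = high | low;
    }
    Ok(out)
}
```

Splitting the shift into a whole-byte part and a remaining bit part is what keeps results correct for amounts such as 3 or 5 bits.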

# User-Facing Changes

It is now an error trying to shift by more than the available bits with:

- `bit-shl` operator

- `bit-shr` operator

- command `bits shl`

- command `bits shr`

# Tests + Formatting

Added testing for all relevant cases

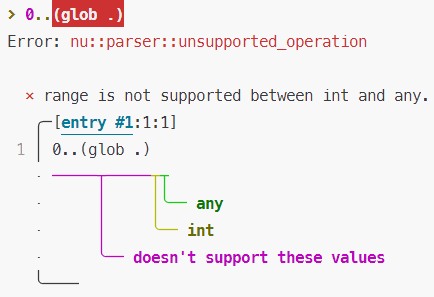

# Description

As part of fixing https://github.com/nushell/nushell/issues/13586, this

PR checks the types of the operands when creating a range. Stuff like

`0..(glob .)` will be rejected at parse time. Additionally, `0..$x` will

be treated as a range and rejected if `x` is not defined, rather than

being treated as a string. A separate PR will need to be made to

reject streams at runtime, so that stuff like `0..(open /dev/random)`

doesn't hang.

Internally, this PR adds a `ParseError::UnsupportedOperationTernary`

variant, for when you have a range like `1..2..(glob .)`.

# User-Facing Changes

Users will now receive an error if any of the operands in the ranges

they construct have types that aren't compatible with `Type::Number`.

Additionally, if a piece of code looks like a range but some parse error

is encountered while parsing it, that piece of code will still be

treated as a range and the user will be shown the parse error. This

means that a piece of code like `0..$x` will be treated as a range no

matter what. Previously, if `x` weren't defined, the expression would've been

treated as a string `"0..$x"`. I feel like it makes the language less

complicated if we make it less context-sensitive.

Here's an example of the error you get:

```

> 0..(glob .)

Error: nu::parser::unsupported_operation

× range is not supported between int and any.

╭─[entry #1:1:1]

1 │ 0..(glob .)

· ─────┬─────┬┬

· │ │╰── any

· │ ╰── int

· ╰── doesn't support these values

╰────

```

And as an image:

Note: I made the operands themselves (above, `(glob .)`) be garbage,

rather than the `..` operator itself. This doesn't match the behavior of

the math operators (if you do `1 + "foo"`, `+` gets highlighted red).

This is because with ranges, the range operators aren't `Expression`s

themselves, so they can't be turned into garbage. I felt like here, it

makes more sense to highlight the individual operand anyway.

# Description

Something I meant to add a long time ago. We currently don't have a

convenient way to print raw binary data intentionally. You can pipe it

through `cat` to turn it into an unknown stream, or write it to a file

and read it again, but we can't really just e.g. generate msgpack and

write it to stdout without this. For example:

```nushell

[abc def] | to msgpack | print --raw

```

This is useful for nushell scripts that will be piped into something

else. It also means that `nu_plugin_nu_example` probably doesn't need to

do this anymore, but I haven't adjusted it yet:

```nushell

def tell_nushell_encoding [] {

print -n "\u{0004}json"

}

```

This happens to work because 0x04 is a valid UTF-8 character, but it

wouldn't be possible if it were something above 0x80.
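
The UTF-8 point can be checked with a few lines of standard-library Rust. `byte_as_text` and `print_raw` are hypothetical helpers used only for this illustration; they are not part of the `print` implementation.

```rust
use std::io::{self, Write};

/// A lone byte can travel inside a string only if it is valid UTF-8 on
/// its own, which holds for ASCII bytes but not for bytes like 0x84.
fn byte_as_text(byte: u8) -> Option<String> {
    String::from_utf8(vec![byte]).ok()
}

/// Write bytes unchanged to any writer (stdout in a real program).
fn print_raw<W: Write>(out: &mut W, bytes: &[u8]) -> io::Result<()> {
    out.write_all(bytes)?;
    out.flush()
}
```

Writing through a byte-oriented sink sidesteps the string round trip entirely, which is the gap `print --raw` fills for binary values.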

`--raw` also formats other things without `table`, I figured the two

things kind of go together. The output is kind of like `to text`.

Debatable whether that should share the same flag, but it was easier

that way and seemed reasonable.

# User-Facing Changes

- `print` new flag: `--raw`

# Tests + Formatting

Added tests.

# After Submitting

- [ ] release notes (command modified)

This PR will close #13501

# Description

This PR expands on [the relay of signals to running plugin

processes](https://github.com/nushell/nushell/pull/13181). The Ctrlc

relay has been generalized to SignalAction::Interrupt and when

reset_signal is called on the main EngineState, a SignalAction::Reset is

now relayed to running plugins.

# User-Facing Changes

The signal handler closure now takes a `signals::SignalAction`, while

previously it took no arguments. The handler will now be called on both

interrupt and reset. The method to register a handler on the plugin side

is now called `register_signal_handler` instead of

`register_ctrlc_handler`

[example](https://github.com/nushell/nushell/pull/13510/files#diff-3e04dff88fd0780a49778a3d1eede092ec729a1264b4ef07ca0d2baa859dad05L38).

This will only affect plugin authors who have started making use of

https://github.com/nushell/nushell/pull/13181, which isn't currently

part of an official release.

The change will also require all of a user's plugins to be recompiled so

that they don't error when a signal is received on the

PluginInterface.
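
A minimal sketch of what a handler taking an action might look like, using only the standard library. `SignalAction` mirrors the name used above, but the `Handlers` registry and `tracking_handler` are hypothetical and do not reproduce the plugin API.

```rust
use std::sync::atomic::{AtomicBool, Ordering};
use std::sync::Arc;

/// The two actions named in this PR.
#[derive(Debug, Clone, Copy, PartialEq)]
enum SignalAction {
    Interrupt,
    Reset,
}

type Handler = Box<dyn Fn(SignalAction) + Send + Sync>;

/// Hypothetical registry that relays each action to every handler.
#[derive(Default)]
struct Handlers {
    handlers: Vec<Handler>,
}

impl Handlers {
    fn register(&mut self, handler: Handler) {
        self.handlers.push(handler);
    }

    fn relay(&self, action: SignalAction) {
        for handler in &self.handlers {
            handler(action);
        }
    }
}

/// Handler that keeps a local "interrupted" flag in sync with the engine:
/// set on interrupt, cleared again on reset.
fn tracking_handler(flag: Arc<AtomicBool>) -> Handler {
    Box::new(move |action: SignalAction| match action {
        SignalAction::Interrupt => flag.store(true, Ordering::Relaxed),
        SignalAction::Reset => flag.store(false, Ordering::Relaxed),
    })
}
```

Receiving the reset as well is what lets a long-lived plugin start its next call with a clear interrupt status.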

# Testing

```

: example ctrlc

interrupt status: false

waiting for interrupt signal...

^Cinterrupt status: true

peace.

Error: × Operation interrupted

╭─[display_output hook:1:1]

1 │ if (term size).columns >= 100 { table -e } else { table }

· ─┬

· ╰── This operation was interrupted

╰────

: example ctrlc

interrupt status: false <-- NOTE status is false

waiting for interrupt signal...

^Cinterrupt status: true

peace.

Error: × Operation interrupted

╭─[display_output hook:1:1]

1 │ if (term size).columns >= 100 { table -e } else { table }

· ─┬

· ╰── This operation was interrupted

╰────

```

# Description

Part 4 of replacing std::path types with nu_path types added in

https://github.com/nushell/nushell/pull/13115. This PR migrates various

tests throughout the code base.

In some `if let`s we ran the `SharedCow::to_mut` for the test and to get

access to a mutable reference in the happy path. Internally

`Arc::make_mut` has to read atomics and if necessary clone.

For else branches, where we still want to modify the record we

previously called this again (not just in Rust, confirmed in the asm).

This would have introduced a `call` instruction and its cost (even if it

would be guaranteed to take the short path in `Arc::make_mut`).

Lifting it gets rid of this.
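
The lifted form can be sketched with a plain `Arc<HashMap<..>>` standing in for the shared record. `upsert` is a hypothetical function for illustration; the point is only that the mutable reference is obtained once, before the branch.

```rust
use std::collections::HashMap;
use std::sync::Arc;

/// Update `key` if present, insert it otherwise. `Arc::make_mut` is called
/// once up front, so neither branch has to repeat the atomic checks.
fn upsert(record: &mut Arc<HashMap<String, i64>>, key: &str, value: i64) {
    // Clones the map only if another `Arc` still points at it.
    let map = Arc::make_mut(record);
    if let Some(slot) = map.get_mut(key) {
        *slot = value;
    } else {
        map.insert(key.to_string(), value);
    }
}
```

Other holders of the same `Arc` keep seeing the old contents, since the first mutation of a shared value works on a private copy.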

# Description

Part 3 of replacing `std::path` types with `nu_path` types added in

#13115. This PR targets the paths listed in `$nu`. That is, the home,

config, data, and cache directories.

- **Doccomment style fixes**

- **Forgotten stuff in `nu-pretty-hex`**

- **Don't `for` around an `Option`**

- and more

I think the suggestions here are a net positive, some of the suggestions

moved into #13498 feel somewhat arbitrary, I also raised

https://github.com/rust-lang/rust-clippy/issues/13188 as the nightly

`byte_char_slices` would require either a global allow or otherwise a

ton of granular allows or possibly confusing bytestring literals.

- **Suggested default impl for the new `*Stack`s**

- **Change a hashmap to make clippy happy**

- **Clone from fix**

- **Fix conditional unused in test**

- then **Bump rust toolchain**

# Description

This PR adds a new method to `EngineInterface`: `register_ctrlc_handler`

which takes a closure to run when the plugin's driving engine receives a

ctrlc-signal. It also adds a mirror of the `signals` attribute from the

main shell `EngineState`.

This is an example of how a plugin which makes a long poll http request

can end the request on ctrlc:

https://github.com/cablehead/nu_plugin_http/blob/main/src/commands/request.rs#L68-L77

To facilitate the feature, a new attribute has been added to

`EngineState`: `ctrlc_handlers`. This is a Vec of closures that will be

run when the engine's process receives a ctrlc signal.

When plugins are added to an `engine_state` during a `merge_delta`, the

engine passes the ctrlc_handlers to the plugin's

`.configure_ctrlc_handler` method, which gives the plugin a chance to

register a handler that sends a ctrlc packet through the

`PluginInterface`, if an instance of the plugin is currently running.

On the plugin side: `EngineInterface` also has a ctrlc_handlers Vec of

closures. Plugin calls can use `register_ctrlc_handler` to register a

closure that will be called in the plugin process when the

PluginInput::Ctrlc command is received.
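
The "Vec of closures" idea on either side can be sketched as follows. `CtrlcHandlers`, `register`, `run`, and `counting_handler` are hypothetical simplifications written for this illustration; the real types may differ in how handlers are stored and removed.

```rust
use std::sync::atomic::{AtomicUsize, Ordering};
use std::sync::{Arc, Mutex};

type CtrlcHandler = Box<dyn Fn() + Send + Sync>;

/// Cheaply cloneable list of closures to run when ctrl-c is received.
#[derive(Clone, Default)]
struct CtrlcHandlers {
    inner: Arc<Mutex<Vec<CtrlcHandler>>>,
}

impl CtrlcHandlers {
    /// Add a handler; every clone of this struct sees it.
    fn register(&self, handler: CtrlcHandler) {
        self.inner
            .lock()
            .expect("handler list poisoned")
            .push(handler);
    }

    /// Run all handlers and report how many were called.
    fn run(&self) -> usize {
        let handlers = self.inner.lock().expect("handler list poisoned");
        for handler in handlers.iter() {
            handler();
        }
        handlers.len()
    }
}

/// Example handler that counts how often it was triggered.
fn counting_handler(counter: Arc<AtomicUsize>) -> CtrlcHandler {
    Box::new(move || {
        counter.fetch_add(1, Ordering::SeqCst);
    })
}
```

Sharing the list behind an `Arc` means the code that receives the signal and the code that registers handlers do not need references to each other.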

For future reference these are some alternate places that were

investigated for tying the ctrlc trigger to transmitting a Ctrlc packet

through the `PluginInterface`:

- Directly from `src/signals.rs`: the handler there would need a

reference to the Vec<Arc<RegisteredPlugins>>, which would require us to

wrap the plugins in a Mutex, which we don't want to do.

- have `PersistentPlugin.get_plugin` pass down the engine's

CtrlcHandlers to .get and then to .spawn (if the plugin isn't already

running). Once we have CtrlcHandlers in spawn, we can register a handler

to write directly to PluginInterface. We don't want to double down on

passing engine_state to spawn this way though, as it's unpredictable

because it would depend on whether the plugin has already been spawned

or not.

- pass `ctrlc_handlers` to PersistentPlugin::new so it can store it on

itself so it's available to spawn.

- in `PersistentPlugin.spawn`, create a handler that sends to a clone of

the GC event loop's tx. this has the same issues with regards to how to

get CtrlcHandlers to the spawn method, and is more complicated than a

handler that writes directly to PluginInterface

# User-Facing Changes

No breaking changes

---------

Co-authored-by: Ian Manske <ian.manske@pm.me>

# Description

Seems like I developed a bit of a bad habit of trying to link

```rust

/// [`.foo()`]

```

in docstrings, and this just doesn't work automatically; you have to do

```rust

/// [`.foo()`](Self::foo)

```

if you want it to actually link. I think I found and replaced all of

these.

# User-Facing Changes

Just docs.

# Description

Fixes #13441.

I must have forgotten that `Expr::Range` can contain other expressions,

so I wasn't searching for `$in` to replace within it. Easy fix.
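
To show the kind of traversal that was missing, here is a toy sketch. The `Expr` enum and `replace_in` are hypothetical and far smaller than the real AST; the point is only that the range arm has to recurse into its sub-expressions like any other container.

```rust
/// Hypothetical, tiny expression tree.
#[derive(Debug, Clone, PartialEq)]
enum Expr {
    Int(i64),
    /// Stands in for the `$in` variable.
    In,
    Var(String),
    List(Vec<Expr>),
    Range {
        from: Option<Box<Expr>>,
        next: Option<Box<Expr>>,
        to: Option<Box<Expr>>,
    },
}

/// Replace every `$in` with a named variable, returning whether any
/// replacement happened.
fn replace_in(expr: &mut Expr, new_var: &str) -> bool {
    match expr {
        Expr::In => {
            *expr = Expr::Var(new_var.to_string());
            true
        }
        Expr::Int(_) | Expr::Var(_) => false,
        Expr::List(items) => {
            let mut found = false;
            for item in items.iter_mut() {
                found |= replace_in(item, new_var);
            }
            found
        }
        // Forgetting this arm is the kind of omission described above.
        Expr::Range { from, next, to } => {
            let mut found = false;
            for part in [from, next, to] {
                if let Some(inner) = part {
                    found |= replace_in(inner, new_var);
                }
            }
            found
        }
    }
}
```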

# User-Facing Changes

Bug fix, ranges like `6 | 3..$in` work as expected now.

# Tests + Formatting

Added regression test.

# Description

This corrects the parsing of unknown arguments provided to known

externals to behave exactly like external arguments passed to normal

external calls.

I've done this by adding a `SyntaxShape::ExternalArgument` which

triggers the same parsing rules.

Because I didn't like how the highlighting looked, I modified the

flattener to emit `ExternalArg` flat shapes for arguments that have that

syntax shape and are plain strings/globs. This is the same behavior that

external calls have.

Aside from passing the tests, I've also checked manually that the

completer seems to work adequately. I can confirm that specified

positional arguments get completion according to their specified type

(including custom completions), and then anything remaining gets

filepath style completion, as you'd expect from an external command.

Thanks to @OJarrisonn for originally finding this issue.

# User-Facing Changes

- Unknown args are now parsed according to their specified syntax shape,

rather than `Any`. This may be a breaking change, though I think it's

extremely unlikely in practice.

- The unspecified arguments of known externals are now highlighted /

flattened identically to normal external arguments, which makes it more

clear how they're being interpreted, and should help the completer

function properly.

- Known externals now have an implicit rest arg named `args` if none is

specified, with a syntax shape of `ExternalArgument`.

# Tests + Formatting

Tests added for the new behaviour. Some old tests had to be corrected to

match.

- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- 🟢 `toolkit test`

- 🟢 `toolkit test stdlib`

# After Submitting

- [ ] release notes (bugfix, and debatable whether it's a breaking

change)

# Description

Trying to help @amtoine out here - the spans calculated by `span()` and

`argument_span()` on `ast::Call` are suspicious and do not properly

guard against an `end` that might be before `start`. Just using

`Span::merge()` / `merge_many()` instead to try to make the behaviour as

simple and consistent as possible. Hopefully then even if the arguments

have some crazy spans, we don't have spans that are just totally

invalid.
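
To make the failure mode concrete, here is a minimal sketch with a simplified stand-in `Span` type. It is not the real `nu_protocol::Span`; `naive_call_span` and the `Option` return of `merge_many` are assumptions made for illustration. Taking the head's `start` and the last argument's `end` can produce `end < start` when an argument has an odd span, while a min/max merge cannot, provided each input span is itself valid.

```rust
/// Simplified stand-in for a source span (byte offsets).
/// Hypothetical type for illustration, not the real nu_protocol one.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub struct Span {
    pub start: usize,
    pub end: usize,
}

impl Span {
    pub fn new(start: usize, end: usize) -> Self {
        Span { start, end }
    }

    /// Smallest span covering both inputs. Using min/max means the result
    /// has `start <= end` whenever both inputs do, regardless of their order.
    pub fn merge(self, other: Span) -> Span {
        Span {
            start: self.start.min(other.start),
            end: self.end.max(other.end),
        }
    }

    /// Folds `merge` over a sequence of spans.
    /// Returning `None` for an empty sequence is an assumption of this sketch.
    pub fn merge_many(spans: impl IntoIterator<Item = Span>) -> Option<Span> {
        spans.into_iter().reduce(Span::merge)
    }
}

/// Naive approach: start of the head, end of the last argument.
/// If the last argument's span lies before the head, `end < start`.
pub fn naive_call_span(head: Span, args: &[Span]) -> Span {
    let end = args.last().map(|s| s.end).unwrap_or(head.end);
    Span::new(head.start, end)
}
```

With a head at `10..14` and a single argument at `2..5`, the naive version yields `10..5`, whereas merging yields `2..14`.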

# Tests + Formatting

I did check that everything passes with this.

fixes https://github.com/nushell/nushell/issues/13378

# Description

This PR tries to improve usage of system APIs to determine the location

of vendored autoload files.

# User-Facing Changes

The paths listed in #13180 and #13217 are changing. This has not been

part of a release yet, so arguably the user facing changes are only to

unreleased features anyway.

# Tests + Formatting

Haven't done, but if someone wants to help me here, I'm open to doing

it. I just don't know how to properly test this.

# After Submitting

# Description

Adds functionality to the plugin interface to support calling internal

commands from plugins. For example, using `view ir --json`:

```rust

let closure: Value = call.req(0)?;

let Some(decl_id) = engine.find_decl("view ir")? else {

return Err(LabeledError::new("`view ir` not found"));

};

let ir_json = engine.call_decl(

decl_id,

EvaluatedCall::new(call.head)

.with_named("json".into_spanned(call.head), Value::bool(true, call.head))

.with_positional(closure),

PipelineData::Empty,

true,

false,

)?.into_value()?.into_string()?;

let ir = serde_json::from_str(&ir_json);

// ...

```

# User-Facing Changes

Plugin developers can now use `EngineInterface::find_decl()` and

`call_decl()` to call internal commands, which could be handy for

formatters like `to csv` or `to nuon`, or for reflection commands that

help gain insight into the engine.

# Tests + Formatting

- 🟢 `toolkit fmt`

- 🟢 `toolkit clippy`

- 🟢 `toolkit test`

- 🟢 `toolkit test stdlib`

# After Submitting

- [ ] release notes

- [ ] update plugin protocol documentation: `FindDecl`, `CallDecl`

engine calls; `Identifier` engine call response

# Description

This grew quite a bit beyond its original scope, but I've tried to make

`$in` a bit more consistent and easier to work with.

Instead of the parser generating calls to `collect` and creating

closures, this adds `Expr::Collect` which just evaluates in the same

scope and doesn't require any closure.

When `$in` is detected in an expression, it is replaced with a new

variable (also called `$in`) and wrapped in `Expr::Collect`. During

eval, this expression is evaluated directly, with the input and with

that new variable set to the collected value.

Other than being faster and less prone to gotchas, it also makes it

possible to typecheck the output of an expression containing `$in`,

which is nice. This is a breaking change though, because of the lack of

the closure and because now typechecking will actually happen. Also, I

haven't attempted to typecheck the input yet.

The IR generated now just looks like this:

```gas

collect %in

clone %tmp, %in

store-variable $in, %tmp

# %out <- ...expression... <- %in

drop-variable $in

```

(where `$in` is the local variable created for this collection, and not

`IN_VARIABLE_ID`)

which is a lot better than having to create a closure and call `collect

--keep-env`, dealing with all of the capture gathering and allocation

that entails. Ideally we can also detect whether that input is actually

needed, so maybe we don't have to clone, but I haven't tried to do that

yet. Theoretically now that the variable is a unique one every time, it

should be possible to give it a type - I just don't know how to

determine that yet.
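
The collect-then-bind sequence described above can be sketched as a toy evaluator. Everything here (`Stack`, `eval_collect`, the `Vec<i64>` value type) is hypothetical and far simpler than the real engine. It only mirrors the order of the IR steps: collect the input once, store a clone in a fresh variable, evaluate the body with both available, then drop the variable.

```rust
use std::collections::HashMap;

/// Toy value type; the real engine's values are much richer.
pub type Value = Vec<i64>;

/// Hypothetical variable store keyed by variable id.
#[derive(Default)]
pub struct Stack {
    vars: HashMap<usize, Value>,
}

impl Stack {
    /// Looks up a variable by id, if it is currently set.
    pub fn get(&self, var_id: usize) -> Option<&Value> {
        self.vars.get(&var_id)
    }
}

/// Evaluates `body` in the same scope, with the streamed `input` collected
/// once and bound to `var_id` for the duration of the call.
pub fn eval_collect<I, F>(stack: &mut Stack, var_id: usize, input: I, body: F) -> Value
where
    I: Iterator<Item = i64>,
    F: FnOnce(&Stack, Value) -> Value,
{
    // collect %in
    let collected: Value = input.collect();
    // clone %tmp, %in ; store-variable $in, %tmp
    stack.vars.insert(var_id, collected.clone());
    // %out <- ...expression... <- %in
    let out = body(stack, collected);
    // drop-variable $in
    stack.vars.remove(&var_id);
    out
}
```

Because the variable holds an already collected value, the body can read it any number of times, and no closure or capture gathering is involved.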

On top of that, I've also reworked how `$in` works in pipeline-initial

position. Previously, it was a little bit inconsistent. For example,

this worked:

```nushell

> 3 | do { let x = $in; let y = $in; print $x $y }

3

3

```

However, this caused a runtime variable-not-found error on the second

`$in`:

```nushell

> def foo [] { let x = $in; let y = $in; print $x $y }; 3 | foo

Error: nu:🐚:variable_not_found

× Variable not found

╭─[entry #115:1:35]

1 │ def foo [] { let x = $in; let y = $in; print $x $y }; 3 | foo

· ─┬─

· ╰── variable not found

╰────

```

I've fixed this by making the first element `$in` detection *always*

happen at the block level, so if you use `$in` in pipeline-initial

position anywhere in a block, it will collect with an implicit

subexpression around the whole thing, and you can then use that `$in`

more than once. In doing this I also rewrote `parse_pipeline()` and

hopefully it's a bit more straightforward and possibly more efficient

too now.

Finally, I've tried to make `let` and `mut` a lot more straightforward

with how they handle the rest of the pipeline, and using a redirection

with `let`/`mut` now does what you'd expect if you assume that they

consume the whole pipeline - the redirection is just processed as

normal. These both work now:

```nushell

let x = ^foo err> err.txt

let y = ^foo out+err>| str length

```

It was previously possible to accomplish this with a subexpression, but

it just seemed like a weird gotcha that you couldn't do it. Intuitively,

`let` and `mut` just seem to take the whole line.

- closes #13137

# User-Facing Changes

- `$in` will behave more consistently with blocks and closures, since

the entire block is now just wrapped to handle it if it appears in the

first pipeline element

- `$in` no longer creates a closure, so what can be done within an

expression containing `$in` is less restrictive

- Expressions containing `$in` are now type checked, rather than just

resulting in `any`. However, `$in` itself is still `any`, so this isn't

quite perfect yet

- Redirections are now allowed in `let` and `mut` and behave pretty much

how you'd expect

# Tests + Formatting

Added tests to cover the new behaviour.

# After Submitting

- [ ] release notes (definitely breaking change)

# Description

The name of the `group` command is a little unclear/ambiguous.

Every time I look at it, I think of `group-by`. I think `chunks` more

clearly conveys what the `group` command does. Namely, it divides the

input list into chunks of a certain size. For example,

[`slice::chunks`](https://doc.rust-lang.org/std/primitive.slice.html#method.chunks)

has the same name. So, this PR adds a new `chunks` command to replace

the now deprecated `group` command.

The `chunks` command is a refactored version of `group`. As such, there

is a small performance improvement:

```nushell

# $data is a very large list

> bench { $data | chunks 2 } --rounds 30 | get mean

474ms 921µs 190ns

# deprecation warning was disabled here for fairness

> bench { $data | group 2 } --rounds 30 | get mean

592ms 702µs 440ns

> bench { $data | chunks 200 } --rounds 30 | get mean

374ms 188µs 318ns

> bench { $data | group 200 } --rounds 30 | get mean

481ms 264µs 869ns

> bench { $data | chunks 1 } --rounds 30 | get mean

642ms 574µs 42ns

> bench { $data | group 1 } --rounds 30 | get mean

981ms 602µs 513ns

```

# User-Facing Changes

- `group` command has been deprecated in favor of new `chunks` command.

- `chunks` errors when given a chunk size of `0` whereas `group` returns

chunks with one element.
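
For readers more familiar with the Rust method the name is borrowed from, here is a rough sketch of the behaviour described above, built on `slice::chunks`. The helper `chunks_of` and its `String` error are hypothetical and are not how the nushell command is implemented. Note that `slice::chunks` itself panics on a size of `0`, so the sketch checks for that first and returns an error instead.

```rust
/// Splits `items` into consecutive chunks of at most `size` elements.
/// The final chunk may be shorter if the length is not a multiple of `size`.
///
/// Hypothetical helper: a size of 0 is reported as an error, mirroring the
/// behaviour described for the `chunks` command.
pub fn chunks_of<T: Clone>(items: &[T], size: usize) -> Result<Vec<Vec<T>>, String> {
    if size == 0 {
        // `slice::chunks` would panic here, so reject the size up front.
        return Err("chunk size must be greater than zero".to_string());
    }
    Ok(items.chunks(size).map(|chunk| chunk.to_vec()).collect())
}
```

For example, `chunks_of(&[1, 2, 3, 4, 5], 2)` yields `[[1, 2], [3, 4], [5]]`, and `chunks_of(&[1, 2, 3], 0)` yields an error.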

# Tests + Formatting

Added tests for `chunks`, since `group` did not have any tests.

# After Submitting

Update book if necessary.