
9200 - Pentesting Elasticsearch

{% hint style="success" %}

Learn & practice AWS Hacking: HackTricks Training AWS Red Team Expert (ARTE)

HackTricks Training AWS Red Team Expert (ARTE)

Learn & practice GCP Hacking:  HackTricks Training GCP Red Team Expert (GRTE)

HackTricks Training GCP Red Team Expert (GRTE)

Support HackTricks

- Check the subscription plans!

- Join the 💬 Discord group or the telegram group or follow us on Twitter 🐦 @hacktricks_live.

- Share hacking tricks by submitting PRs to the HackTricks and HackTricks Cloud github repos.

Basic information

Elasticsearch is a distributed, open source search and analytics engine for all types of data. It is known for its speed, scalability, and simple REST APIs. Built on Apache Lucene, it was first released in 2010 by Elasticsearch N.V. (now known as Elastic). Elasticsearch is the core component of the Elastic Stack, a collection of open source tools for data ingestion, enrichment, storage, analysis, and visualization. This stack, commonly referred to as the ELK Stack, also includes Logstash and Kibana, and now has lightweight data shipping agents called Beats.

What is an Elasticsearch index?

An Elasticsearch index is a collection of related documents stored as JSON. Each document consists of keys and their corresponding values (strings, numbers, booleans, dates, arrays, geolocations, etc.).

Elasticsearch uses an efficient data structure called an inverted index to facilitate fast full-text searches. This index lists every unique word in the documents and identifies the documents in which each word appears.

During the indexing process, Elasticsearch stores the documents and constructs the inverted index, allowing for near real-time searching. The index API is used to add or update JSON documents within a specific index.

Default port: 9200/tcp

Manual Enumeration

Banner

The protocol used to access Elasticsearch is HTTP. When you access it via HTTP you will find some interesting information: http://10.10.10.115:9200/

If a request to / does not return the cluster banner (node name, cluster name and version), see the following section.
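
As a minimal sketch of what to look for in that banner, the snippet below parses the JSON returned by / and keeps the fields useful for fingerprinting. The function name `summarize_banner` and the sample values are assumptions made for illustration; they are not taken from a real target.

```python
import json


def summarize_banner(body: str) -> dict:
    """Extract the fingerprinting fields from the JSON banner returned by GET /."""
    data = json.loads(body)
    version = data.get("version", {})
    return {
        "node": data.get("name"),
        "cluster": data.get("cluster_name"),
        "version": version.get("number"),
        "lucene": version.get("lucene_version"),
    }


# Illustrative banner only: the values below are made up for this example.
SAMPLE_BANNER = """
{
  "name": "node-1",
  "cluster_name": "elasticsearch",
  "version": {"number": "6.4.2", "lucene_version": "7.4.0"},
  "tagline": "You Know, for Search"
}
"""

if __name__ == "__main__":
    print(summarize_banner(SAMPLE_BANNER))
```

The version number is usually the most valuable field, since it tells you which default settings and known issues apply to the instance.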

Authentication

By default, Elasticsearch versions before 8.0 do not have authentication enabled, so you can access everything inside the database without any credentials. Since 8.0, security is enabled by default on new installations.

You can verify that authentication is disabled with a request to:

curl -X GET "ELASTICSEARCH-SERVER:9200/_xpack/security/user"

{"error":{"root_cause":[{"type":"exception","reason":"Security must be explicitly enabled when using a [basic] license. Enable security by setting [xpack.security.enabled] to [true] in the elasticsearch.yml file and restart the node."}],"type":"exception","reason":"Security must be explicitly enabled when using a [basic] license. Enable security by setting [xpack.security.enabled] to [true] in the elasticsearch.yml file and restart the node."},"status":500}

However, if you send a request to / and receive a response like the following one:

{"error":{"root_cause":[{"type":"security_exception","reason":"missing authentication credentials for REST request [/]","header":{"WWW-Authenticate":"Basic realm=\"security\" charset=\"UTF-8\""}}],"type":"security_exception","reason":"missing authentication credentials for REST request [/]","header":{"WWW-Authenticate":"Basic realm=\"security\" charset=\"UTF-8\""}},"status":401}

This means that authentication is configured and you need valid credentials to obtain any information from Elasticsearch. You can then try to brute-force it (it uses HTTP basic auth, so any tool that brute-forces HTTP basic auth can be used).
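
The two cases above can be told apart programmatically. The following is a rough sketch, assuming you already have the HTTP status code and response body of a GET to /; the function name `auth_state` and its return labels are hypothetical.

```python
import json


def auth_state(status: int, body: str) -> str:
    """Classify a response to GET / as 'open', 'auth_required' or 'unknown'."""
    try:
        data = json.loads(body)
    except ValueError:
        # Not JSON at all, so probably not an Elasticsearch REST endpoint.
        return "unknown"
    if not isinstance(data, dict):
        return "unknown"
    error = data.get("error")
    if (
        status == 401
        and isinstance(error, dict)
        and error.get("type") == "security_exception"
    ):
        return "auth_required"
    if status == 200 and "cluster_name" in data:
        return "open"
    return "unknown"


if __name__ == "__main__":
    body = '{"error":{"type":"security_exception","reason":"missing authentication credentials for REST request [/]"},"status":401}'
    print(auth_state(401, body))
```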

Here is a list of default usernames: elastic (superuser), remote_monitoring_user, beats_system, logstash_system, kibana, kibana_system, apm_system, _anonymous_. Older versions of Elasticsearch use the default password changeme for the elastic user.

curl -X GET http://user:password@IP:9200/
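
Since the service uses HTTP basic auth, the credentials in the curl command above end up in an `Authorization` header. A minimal sketch of how that header is built is shown below; `basic_auth_header` and `DEFAULT_USERS` are names chosen for this example, and the username list simply mirrors the default users listed above.

```python
import base64

# Built-in usernames listed in this section.
DEFAULT_USERS = [
    "elastic",
    "remote_monitoring_user",
    "beats_system",
    "logstash_system",
    "kibana",
    "kibana_system",
    "apm_system",
]


def basic_auth_header(user: str, password: str) -> dict:
    """Return the Authorization header for HTTP basic auth."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return {"Authorization": f"Basic {token}"}


if __name__ == "__main__":
    # Print the header for each default user with the old default password.
    for user in DEFAULT_USERS:
        print(user, basic_auth_header(user, "changeme"))
```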

Basic User Enumeration

#List all roles on the system:

curl -X GET "ELASTICSEARCH-SERVER:9200/_security/role"

#List all users on the system:

curl -X GET "ELASTICSEARCH-SERVER:9200/_security/user"

#Get more information about the rights of a user:

curl -X GET "ELASTICSEARCH-SERVER:9200/_security/user/<USERNAME>"

Elastic Info

Here are some endpoints that you can access via GET to obtain information about Elasticsearch:

| /_cat | /_cluster | /_security |
|---|---|---|
| /_cat/segments | /_cluster/allocation/explain | /_security/user |
| /_cat/shards | /_cluster/settings | /_security/privilege |
| /_cat/repositories | /_cluster/health | /_security/role_mapping |
| /_cat/recovery | /_cluster/state | /_security/role |
| /_cat/plugins | /_cluster/stats | /_security/api_key |
| /_cat/pending_tasks | /_cluster/pending_tasks | |
| /_cat/nodes | /_nodes | |
| /_cat/tasks | /_nodes/usage | |
| /_cat/templates | /_nodes/hot_threads | |
| /_cat/thread_pool | /_nodes/stats | |
| /_cat/ml/trained_models | /_tasks | |
| /_cat/transforms/_all | /_remote/info | |
| /_cat/aliases | | |
| /_cat/allocation | | |
| /_cat/ml/anomaly_detectors | | |
| /_cat/count | | |
| /_cat/ml/data_frame/analytics | | |
| /_cat/ml/datafeeds | | |
| /_cat/fielddata | | |
| /_cat/health | | |
| /_cat/indices | | |
| /_cat/master | | |
| /_cat/nodeattrs | | |

These endpoints were taken from the documentation where you can find more.

Also, if you access /_cat the response will contain the /_cat/* endpoints supported by the instance.

In /_security/user (if auth enabled) you can see which user has role superuser.
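
As a sketch of how to spot superusers in the /_security/user response, the snippet below assumes the response is a JSON object keyed by username, where each entry carries a `roles` list. The function name `superusers` and the sample data are illustrative assumptions.

```python
import json


def superusers(body: str) -> list:
    """Return the usernames whose roles include 'superuser', sorted by name."""
    users = json.loads(body)
    return sorted(
        name for name, info in users.items() if "superuser" in info.get("roles", [])
    )


# Illustrative response only: these entries are made up for this example.
SAMPLE_USERS = """
{
  "elastic": {"username": "elastic", "roles": ["superuser"], "enabled": true},
  "kibana": {"username": "kibana", "roles": ["kibana_system"], "enabled": true}
}
"""

if __name__ == "__main__":
    print(superusers(SAMPLE_USERS))
```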

Indices

You can gather all the indices accessing http://10.10.10.115:9200/_cat/indices?v

health status index uuid pri rep docs.count docs.deleted store.size pri.store.size

green open .kibana 6tjAYZrgQ5CwwR0g6VOoRg 1 0 1 0 4kb 4kb

yellow open quotes ZG2D1IqkQNiNZmi2HRImnQ 5 1 253 0 262.7kb 262.7kb

yellow open bank eSVpNfCfREyYoVigNWcrMw 5 1 1000 0 483.2kb 483.2kb

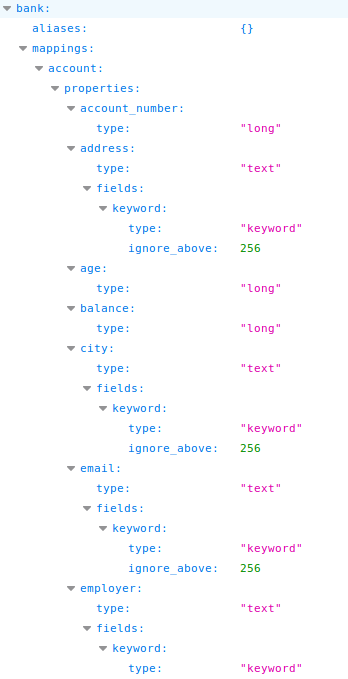
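
The listing above is plain whitespace-separated text, so it can be turned into records with very little code. This is a minimal sketch; `parse_cat_table` is a hypothetical helper, and the sample text is the output shown above.

```python
def parse_cat_table(text: str) -> list:
    """Parse the output of a /_cat endpoint requested with ?v into a list of dicts."""
    lines = [line for line in text.splitlines() if line.strip()]
    if not lines:
        return []
    header = lines[0].split()
    # Each data row is split on whitespace and paired with the header columns.
    return [dict(zip(header, line.split())) for line in lines[1:]]


SAMPLE_INDICES = """
health status index uuid pri rep docs.count docs.deleted store.size pri.store.size
green open .kibana 6tjAYZrgQ5CwwR0g6VOoRg 1 0 1 0 4kb 4kb
yellow open quotes ZG2D1IqkQNiNZmi2HRImnQ 5 1 253 0 262.7kb 262.7kb
yellow open bank eSVpNfCfREyYoVigNWcrMw 5 1 1000 0 483.2kb 483.2kb
"""

if __name__ == "__main__":
    for row in parse_cat_table(SAMPLE_INDICES):
        print(row["index"], row["docs.count"])
```

Splitting on whitespace misaligns rows that have empty columns (closed indices, for instance), so requesting `/_cat/indices?format=json` and parsing the JSON is the more robust option when it is available.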

To obtain information about the kind of data saved inside an index, you can access http://host:9200/<index>, for example in this case http://10.10.10.115:9200/bank

Dump index

If you want to dump all the contents of an index you can access: http://host:9200/<index>/_search?pretty=true like http://10.10.10.115:9200/bank/_search?pretty=true

Take a moment to compare the contents of each document (entry) inside the bank index with the fields of this index that we saw in the previous section.

At this point you may notice a field called "total" inside "hits" indicating that 1000 documents were found inside this index, but only 10 were retrieved. This is because there is a default limit of 10 documents.

Now that you know this index contains 1000 documents, you can dump all of them by indicating the number of entries you want in the size parameter: http://10.10.10.115:9200/bank/_search?pretty=true&size=1000

Note: If you specify a size larger than the number of documents, all the entries will be dumped anyway. For example, you could use size=9999, and it would be unusual for an index to contain more entries than that (but you should check the total). By default, Elasticsearch rejects searches where from + size exceeds 10,000 (the index.max_result_window setting).
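
A short sketch of the two steps described above: building the dump URL with a size parameter, and reading the total from a search response. The helper names `search_url` and `total_hits` are assumptions for this example. The total is handled both as a plain integer and as an object with a `value` field, since Elasticsearch 7 and later return the latter.

```python
import json
from urllib.parse import urlencode


def search_url(base: str, index: str = "", size: int = 10) -> str:
    """Build a _search URL for one index, or for all indices if index is empty."""
    path = f"/{index}/_search" if index else "/_search"
    return base.rstrip("/") + path + "?" + urlencode({"pretty": "true", "size": size})


def total_hits(body: str) -> int:
    """Read hits.total from a search response (integer or {'value': n} form)."""
    total = json.loads(body)["hits"]["total"]
    return total["value"] if isinstance(total, dict) else total


if __name__ == "__main__":
    print(search_url("http://10.10.10.115:9200", "bank", 1000))
```

A typical flow is to request the default 10 results first, read the total with `total_hits`, and then repeat the request with `size` set to that total.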

Dump all

To dump everything, go to the same path as before but without indicating any index: http://host:9200/_search?pretty=true like http://10.10.10.115:9200/_search?pretty=true

Remember that in this case the default limit of 10 results will be applied. You can use the size parameter to dump a bigger amount of results. Read the previous section for more information.

Search

If you are looking for some information you can do a raw search on all the indices going to http://host:9200/_search?pretty=true&q=<search_term> like in http://10.10.10.115:9200/_search?pretty=true&q=Rockwell

If you want just to search on an index you can just specify it on the path: http://host:9200/<index>/_search?pretty=true&q=<search_term>

Note that the q parameter used to search content accepts Lucene query string syntax, which supports regular expressions.
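
Search terms that contain spaces or regular expression characters need URL encoding before they go into the q parameter. The sketch below shows one way to do that; `query_url` is a hypothetical helper, and wrapping a pattern in forward slashes follows the Lucene query string convention for regular expressions.

```python
from urllib.parse import urlencode


def query_url(base: str, term: str, index: str = "") -> str:
    """Build a _search URL with a URL-encoded q parameter."""
    path = f"/{index}/_search" if index else "/_search"
    return base.rstrip("/") + path + "?" + urlencode({"pretty": "true", "q": term})


if __name__ == "__main__":
    # Plain term searched across all indices.
    print(query_url("http://10.10.10.115:9200", "Rockwell"))
    # Regular expression searched inside a single index.
    print(query_url("http://10.10.10.115:9200", "/Rock.*/", "bank"))
```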

You can also use something like https://github.com/misalabs/horuz to fuzz an elasticsearch service.

Write permissions

You can check your write permissions trying to create a new document inside a new index running something like the following:

curl -X POST '10.10.10.115:9200/bookindex/books' -H 'Content-Type: application/json' -d '
{
  "bookId" : "A00-3",
  "author" : "Sankaran",
  "publisher" : "Mcgrahill",
  "name" : "how to get a job"
}'

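That command will create a new index called bookindex with a document of type books that has the attributes "bookId", "author", "publisher" and "name". Mapping types were removed in Elasticsearch 8.0, so on recent versions use _doc in place of books in the path.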

The new index now appears in the list returned by /_cat/indices, and its automatically created properties are visible at http://host:9200/bookindex.
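
The same write test can be sketched in Python. The snippet below only builds the request and does not send it; `build_index_request` is a hypothetical helper, and its default `doc_type` of `_doc` is an assumption that suits recent Elasticsearch versions (pass `books` to reproduce the curl command above).

```python
import json
import urllib.request


def build_index_request(base: str, index: str, document: dict, doc_type: str = "_doc"):
    """Build (but do not send) a POST request that indexes one JSON document."""
    url = f"{base.rstrip('/')}/{index}/{doc_type}"
    data = json.dumps(document).encode("utf-8")
    return urllib.request.Request(
        url,
        data=data,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Same document as in the curl example above.
BOOK = {
    "bookId": "A00-3",
    "author": "Sankaran",
    "publisher": "Mcgrahill",
    "name": "how to get a job",
}

if __name__ == "__main__":
    req = build_index_request("http://10.10.10.115:9200", "bookindex", BOOK)
    print(req.get_method(), req.full_url)
```

To actually send it, pass the request to `urllib.request.urlopen(req, timeout=5)`; a 201 response indicates the document was created and therefore that you have write permissions.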

Automatic Enumeration

Some tools will obtain some of the data presented before:

msf > use auxiliary/scanner/elasticsearch/indices_enum

{% embed url="https://github.com/theMiddleBlue/nmap-elasticsearch-nse" %}

Shodan

port:9200 elasticsearch
