
LFI2RCE via Eternal waiting

☁️ HackTricks Cloud ☁️ -🐦 Twitter 🐦 - 🎙️ Twitch 🎙️ - 🎥 Youtube 🎥

- Do you work in a cybersecurity company? Do you want to see your company advertised in HackTricks? or do you want to have access to the latest version of the PEASS or download HackTricks in PDF? Check the SUBSCRIPTION PLANS!

- Discover The PEASS Family, our collection of exclusive NFTs

- Get the official PEASS & HackTricks swag

- Join the 💬 Discord group or the telegram group or follow me on Twitter 🐦@carlospolopm.

- Share your hacking tricks by submitting PRs to the hacktricks repo and hacktricks-cloud repo.

Basic Information

By default, when a file is uploaded to PHP (even if the script isn't expecting one), PHP creates a temporary file in /tmp with a name matching php[a-zA-Z0-9]{6}, although I have seen some Docker images where the generated names don't contain digits.

In a local file inclusion, if you manage to include that uploaded file, you will get RCE.

Note that by default PHP only allows uploading 20 files in a single request (set in /etc/php/<version>/apache2/php.ini):

; Maximum number of files that can be uploaded via a single request

max_file_uploads = 20

Also, the number of potential filenames is 62*62*62*62*62*62 = 56800235584
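The figure comes from six random positions, each drawn from a 62-character alphabet. A minimal sketch of that arithmetic, which also covers the letters-only case seen in some Docker images (`keyspace` is a hypothetical helper name used for illustration, not part of PHP):

```python
import string

SUFFIX_LEN = 6  # random characters that follow the "php" prefix

def keyspace(alphabet: str, length: int = SUFFIX_LEN) -> int:
    """Number of distinct suffixes of `length` characters over `alphabet`."""
    return len(set(alphabet)) ** length

ALNUM = string.ascii_letters + string.digits  # [a-zA-Z0-9], 62 symbols
LETTERS = string.ascii_letters                # [a-zA-Z], 52 symbols

print(keyspace(ALNUM))    # 56800235584
print(keyspace(LETTERS))  # 19770609664
```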

Other techniques

Other techniques rely on attacking PHP protocols (not possible if you only control the last part of the path), disclosing the path of the file, abusing expected files, or making PHP suffer a segmentation fault so that uploaded temporary files aren't deleted.

This technique is very similar to the last one, but without needing to find a zero-day.

Eternal wait technique

In this technique we only need to control a relative path. If we manage to upload files and make the LFI never end, we will have "enough time" to brute-force the temporary file names and find any one of the uploaded files.

Pros of this technique:

- You just need to control a relative path inside an include

- Doesn't require nginx or an unexpected level of access to log files

- Doesn't require a 0 day to cause a segmentation fault

- Doesn't require a path disclosure

The main problems of this technique are:

- Requires specific file(s) to be present (there might be more suitable files)

- The insane amount of potential file names: 56800235584

- If the server isn't using digits the total potential amount is: 19770609664

- By default only 20 files can be uploaded in a single request.

- The max number of parallel workers of the server in use.

- This limit, combined with the previous ones, can make the attack take too long

- Timeout for a PHP request. Ideally this should be eternal, or it should kill the PHP process without deleting the uploaded temp files. If not, this will also be a pain

So, how can you make a PHP include never end? Just by including the file /sys/kernel/security/apparmor/revision (not available in Docker containers unfortunately...).

Try it by running:

php -a # open php cli

include("/sys/kernel/security/apparmor/revision");

Apache2

By default, Apache supports 150 concurrent connections. Following https://ubiq.co/tech-blog/increase-max-connections-apache/ it's possible to raise this number up to 8000. Follow this to use PHP with that module: https://www.digitalocean.com/community/tutorials/how-to-configure-apache-http-with-mpm-event-and-php-fpm-on-ubuntu-18-04.

By default (as far as I can see in my tests), a PHP process can run forever.

Let's do some maths:

- We can use 149 connections to generate 149 * 20 = 2980 temp files with our webshell.

- Then, use the last connection to brute-force potential files.

- At a speed of 10 requests/s the times are:

- 56800235584 / 2980 / 10 / 3600 ~= 530 hours (50% chance in 265h)

- (without digits) 19770609664 / 2980 / 10 / 3600 ~= 185h (50% chance in 93h)

{% hint style="warning" %} Note that in the previous example we are completely DoSing other clients! {% endhint %}

If the Apache server is tuned and we could abuse 4000 connections (halfway to the max number), we could create 3999*20 = 79980 files, and the time would be reduced to around 19.7h, or 6.9h without digits (50% chance in 10h and 3.5h respectively).
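The Apache estimates all apply the same back-of-the-envelope formula: name space divided by planted files, divided by request rate. A rough sketch that reproduces them, assuming one connection is reserved for brute-forcing and the rest each hold 20 files (`estimate_hours` is a hypothetical helper, not something from the original write-up):

```python
KEYSPACE_ALNUM = 62 ** 6    # php[a-zA-Z0-9]{6}
KEYSPACE_LETTERS = 52 ** 6  # images whose temp names contain no digits
FILES_PER_REQUEST = 20      # default max_file_uploads

def estimate_hours(keyspace: int, connections: int, req_per_s: float) -> float:
    """Hours to try keyspace/files names at req_per_s guesses per second."""
    files = (connections - 1) * FILES_PER_REQUEST  # one connection does the guessing
    return keyspace / files / req_per_s / 3600

for conns in (150, 4000):
    for label, ks in (("with digits", KEYSPACE_ALNUM), ("without digits", KEYSPACE_LETTERS)):
        hours = estimate_hours(ks, conns, req_per_s=10)
        print(f"{conns} connections, {label}: ~{hours:.1f}h")
```

Changing `req_per_s` or `connections` shows quickly which limit dominates on a given target.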

PHP-FPM

If, instead of using the regular PHP module for Apache to run PHP scripts, the web page is using PHP-FPM (this improves the efficiency of the web page, so it's common to find it), there is something else that can be done to improve the technique.

PHP-FPM allows configuring the parameter request_terminate_timeout in /etc/php/<php-version>/fpm/pool.d/www.conf.

This parameter sets the maximum number of seconds a request may take before PHP terminates it (infinite by default, but 30s if the parameter is uncommented). Once a request has been processed for that many seconds, it is killed. This means that if the request was uploading temporary files, those files won't be deleted, because PHP processing was stopped. Therefore, if you can make a request last that long, you can generate thousands of temporary files that won't be deleted, which speeds up finding them and reduces the probability of DoSing the platform by consuming all connections.

So, to avoid a DoS, let's suppose that an attacker uses only 100 connections at the same time and the max processing time enforced by PHP-FPM (request_terminate_timeout) is 30s. Then the number of temp files that can be generated per second is 100*20/30 = 66.67.

Then, to generate 10000 files an attacker would need: 10000/66.67 = 150s (to generate 100000 files the time would be 25min).
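The generation-rate arithmetic can be sketched as follows, assuming every connection plants 20 files and is freed again only when the 30s timeout kills the request (the function names are illustrative, not taken from any tool):

```python
def files_per_second(connections: int = 100, files_per_request: int = 20,
                     timeout_s: float = 30) -> float:
    """Temp files left behind per second once every connection is cycling."""
    return connections * files_per_request / timeout_s

def seconds_to_generate(target_files: int, connections: int = 100,
                        files_per_request: int = 20, timeout_s: float = 30) -> float:
    """Seconds needed to leave `target_files` undeleted temp files on disk."""
    return target_files * timeout_s / (connections * files_per_request)

print(round(files_per_second(), 2))       # 66.67
print(seconds_to_generate(10_000))        # 150.0
print(seconds_to_generate(100_000) / 60)  # 25.0
```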

Then, the attacker could use those 100 connections to perform the brute-force search. Supposing a speed of 300 req/s, the time needed to exploit this is the following:

- 56800235584 / 10000 / 300 / 3600 ~= 5.25 hours (50% chance in 2.63h)

- (with 100000 files) 56800235584 / 100000 / 300 / 3600 ~= 0.525 hours (50% chance in 0.263h)
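The "50% chance" figures treat the hit probability as growing linearly with time. As a cross-check under one explicit model (an assumption of this sketch, not something stated in the original: every temp file name is independent and uniform, and the attacker never repeats a guess), the probability can be computed directly. The helper names are hypothetical:

```python
import math

KEYSPACE = 62 ** 6  # php[a-zA-Z0-9]{6}

def hit_probability(files: int, guesses: int, keyspace: int = KEYSPACE) -> float:
    """P(at least one planted file is among `guesses` distinct candidate names)."""
    tried = min(guesses / keyspace, 1.0)  # fraction of the name space covered
    return 1.0 - (1.0 - tried) ** files

def median_hours(files: int, req_per_s: float, keyspace: int = KEYSPACE) -> float:
    """Approximate time at which the hit probability reaches 50%."""
    return math.log(2) * keyspace / files / req_per_s / 3600

full_sweep = KEYSPACE // 10_000  # number of guesses behind the 5.25h figure
print(round(hit_probability(10_000, full_sweep // 2), 2))  # 0.39
print(round(hit_probability(10_000, full_sweep), 2))       # 0.63
print(round(median_hours(10_000, 300), 2))
```

Under this model the halfway point gives roughly a 39% chance and the 50% mark falls at about 0.69 of the full figure, so the numbers above are best read as order-of-magnitude estimates.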

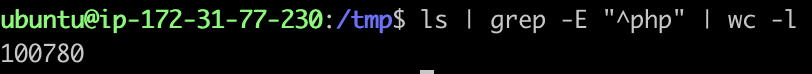

Yes, it's possible to generate 100000 temporary files in an EC2 medium size instance:

{% hint style="warning" %} Note that in order to trigger the timeout it would be enough to include the vulnerable LFI page itself, so that it enters an eternal include loop. {% endhint %}

Nginx

It looks like, by default, Nginx supports 512 parallel connections (and this number can be increased).
