# Objective

- Closes #4464

## Solution

- Specify default mag and min filter types for `Image` instead of using `wgpu`'s defaults.

---

## Changelog

### Changed

- Default `Image` filtering changed from `Nearest` to `Linear`.

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

- Fixes #4946

- fix running 3d in wasm

## Solution

- Since #4867, the imports are split differently, and this shader def was not always set correctly depending on the shader used

- add it when needed

# Objective

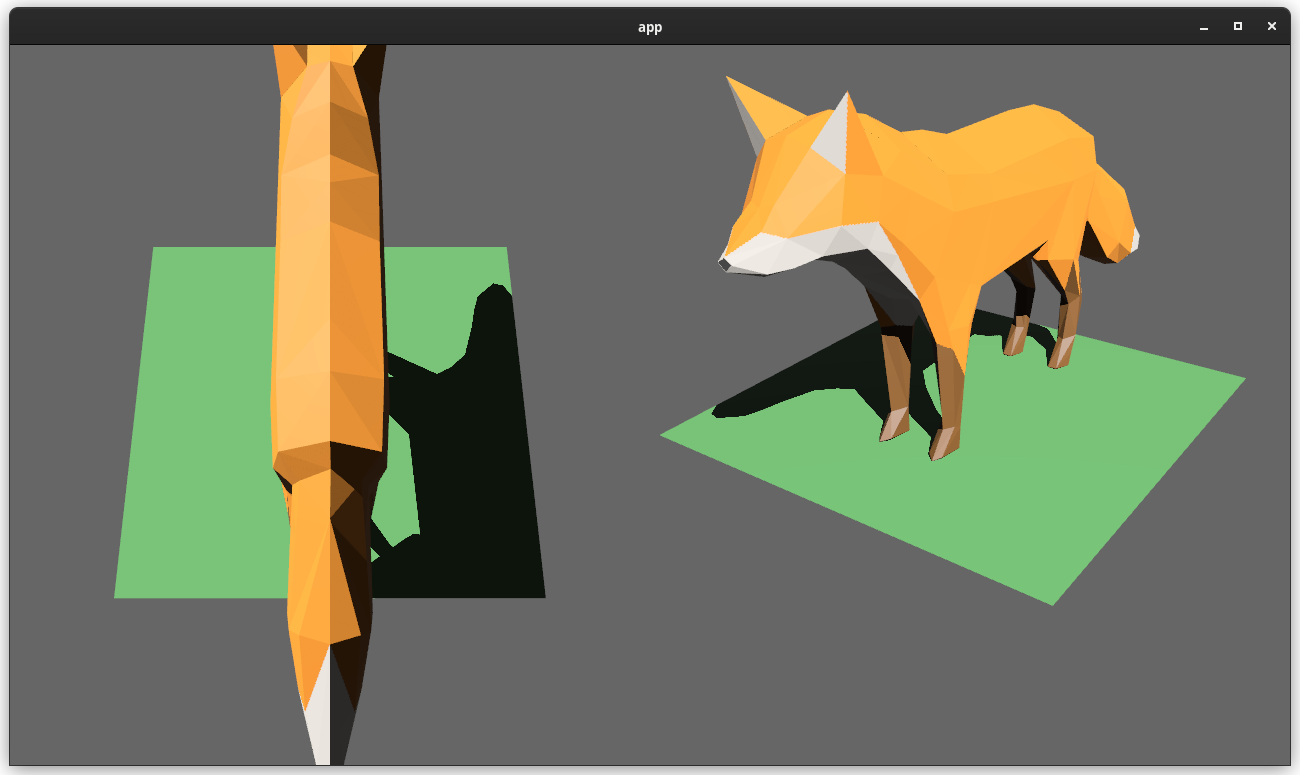

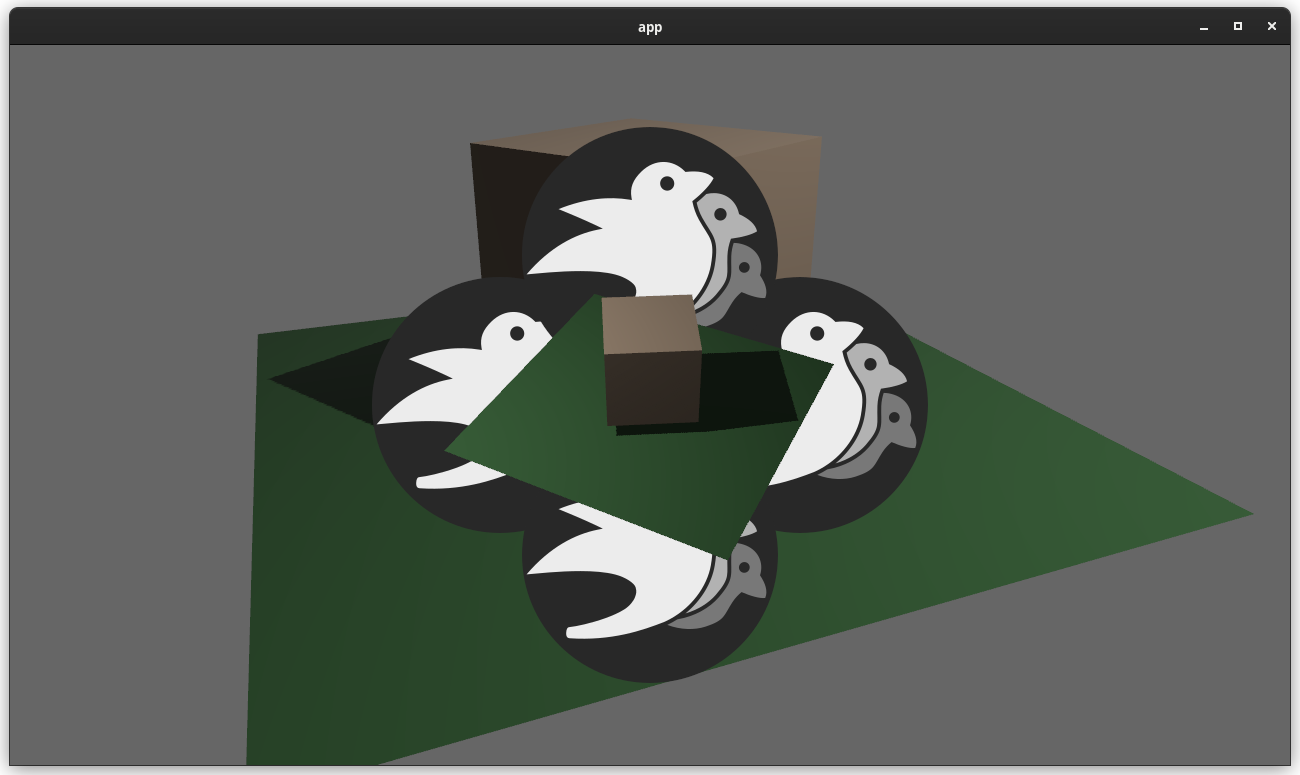

Users should be able to render cameras to specific areas of a render target, which enables scenarios like split screen, minimaps, etc.

Builds on the new Camera Driven Rendering added here: #4745

Fixes: #202

Alternative to #1389 and #3626 (which are incompatible with the new Camera Driven Rendering)

## Solution

Cameras can now configure an optional "viewport", which defines a rectangle within their render target to draw to. If a `Viewport` is defined, the camera's `CameraProjection`, `View`, and visibility calculations will use the viewport configuration instead of the full render target.

```rust
// This camera will render to the first half of the primary window (on the left side).
commands.spawn_bundle(Camera3dBundle {
    camera: Camera {
        viewport: Some(Viewport {
            physical_position: UVec2::new(0, 0),
            physical_size: UVec2::new(window.physical_width() / 2, window.physical_height()),
            depth: 0.0..1.0,
        }),
        ..default()
    },
    ..default()
});
```

To account for this, the `Camera` component has received a few adjustments:

* `Camera` now has some new getter functions:

  * `logical_viewport_size`, `physical_viewport_size`, `logical_target_size`, `physical_target_size`, `projection_matrix`

* All computed camera values are now private and live on the `ComputedCameraValues` field (logical/physical width/height, the projection matrix). They are now exposed on `Camera` via getters/setters. This wasn't _needed_ for viewports, but it was long overdue.
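As a rough illustration of the split-screen case and of the physical/logical distinction behind the new getters, here is a minimal sketch using only the standard library. `ViewportRect`, `split_screen`, and `logical_size` are hypothetical names, not Bevy API, and the sketch assumes the usual convention that a logical size is the physical size divided by the window scale factor.

```rust
/// Hypothetical stand-in for a viewport rectangle in physical pixels (not a Bevy type).
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub struct ViewportRect {
    pub position: (u32, u32),
    pub size: (u32, u32),
}

/// Split a render target of `width` x `height` physical pixels into a left
/// and a right viewport. The right half absorbs the odd pixel, if any.
pub fn split_screen(width: u32, height: u32) -> [ViewportRect; 2] {
    let left_width = width / 2;
    [
        ViewportRect { position: (0, 0), size: (left_width, height) },
        ViewportRect { position: (left_width, 0), size: (width - left_width, height) },
    ]
}

/// Convert a physical size to logical units.
/// Assumption: logical = physical / scale_factor.
pub fn logical_size(physical: (u32, u32), scale_factor: f64) -> (f32, f32) {
    (
        (physical.0 as f64 / scale_factor) as f32,
        (physical.1 as f64 / scale_factor) as f32,
    )
}
```

Each rectangle would map onto a viewport's `physical_position` and `physical_size`, one per camera.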

---

## Changelog

### Added

* `Camera` components now have a `viewport` field, which can be set to draw to a portion of a render target instead of the full target.

* `Camera` component has some new functions: `logical_viewport_size`, `physical_viewport_size`, `logical_target_size`, `physical_target_size`, and `projection_matrix`

* Added a new split_screen example illustrating how to render two cameras to the same scene

## Migration Guide

`Camera::projection_matrix` is no longer a public field. Use the new `Camera::projection_matrix()` method instead:

```rust
// Bevy 0.7
let projection = camera.projection_matrix;

// Bevy 0.8
let projection = camera.projection_matrix();
```

This adds "high level camera driven rendering" to Bevy. The goal is to give users more control over what gets rendered (and where) without needing to deal with render logic. This will make scenarios like "render to texture", "multiple windows", "split screen", "2d on 3d", "3d on 2d", "pass layering", and more significantly easier.

Here is an [example of a 2d render sandwiched between two 3d renders (each from a different perspective)](https://gist.github.com/cart/4fe56874b2e53bc5594a182fc76f4915):

Users can now spawn a camera, point it at a RenderTarget (a texture or a window), and it will "just work".

Rendering to a second window is as simple as spawning a second camera and assigning it to a specific window id:

```rust
// main camera (main window)
commands.spawn_bundle(Camera2dBundle::default());

// second camera (other window)
commands.spawn_bundle(Camera2dBundle {
    camera: Camera {
        target: RenderTarget::Window(window_id),
        ..default()
    },
    ..default()
});
```

Rendering to a texture is as simple as pointing the camera at a texture:

```rust
commands.spawn_bundle(Camera2dBundle {
    camera: Camera {
        target: RenderTarget::Texture(image_handle),
        ..default()
    },
    ..default()
});
```

Cameras now have a "render priority", which controls the order they are drawn in. If you want to use a camera's output texture as a texture in the main pass, just set the priority to a number lower than the main pass camera (which defaults to `0`).

```rust
// main pass camera with a default priority of 0
commands.spawn_bundle(Camera2dBundle::default());

// renders to the texture before the main pass camera runs
commands.spawn_bundle(Camera2dBundle {
    camera: Camera {
        target: RenderTarget::Texture(image_handle.clone()),
        priority: -1,
        ..default()
    },
    ..default()
});

// displays the texture in the main pass
commands.spawn_bundle(SpriteBundle {
    texture: image_handle,
    ..default()
});
```

Priority can also be used to layer two cameras on top of each other for the same RenderTarget. This is what "2d on top of 3d" looks like in the new system:

```rust
commands.spawn_bundle(Camera3dBundle::default());

commands.spawn_bundle(Camera2dBundle {
    camera: Camera {
        // this will render 2d entities "on top" of the default 3d camera's render
        priority: 1,
        ..default()
    },
    ..default()
});
```

There is no longer the concept of a global "active camera". Resources like `ActiveCamera<Camera2d>` and `ActiveCamera<Camera3d>` have been replaced with the camera-specific `Camera::is_active` field. This does put the onus on users to manage which cameras should be active.

Cameras are now assigned a single render graph as an "entry point", which is configured on each camera entity using the new `CameraRenderGraph` component. The old `PerspectiveCameraBundle` and `OrthographicCameraBundle` (generic on camera marker components like Camera2d and Camera3d) have been replaced by `Camera3dBundle` and `Camera2dBundle`, which set 3d and 2d default values for the `CameraRenderGraph` and projections.

```rust
// old 3d perspective camera
commands.spawn_bundle(PerspectiveCameraBundle::default());

// new 3d perspective camera
commands.spawn_bundle(Camera3dBundle::default());
```

```rust
// old 2d orthographic camera
commands.spawn_bundle(OrthographicCameraBundle::new_2d());

// new 2d orthographic camera
commands.spawn_bundle(Camera2dBundle::default());
```

```rust
// old 3d orthographic camera
commands.spawn_bundle(OrthographicCameraBundle::new_3d());

// new 3d orthographic camera
commands.spawn_bundle(Camera3dBundle {
    projection: OrthographicProjection {
        scale: 3.0,
        scaling_mode: ScalingMode::FixedVertical,
        ..default()
    }.into(),
    ..default()
});
```

Note that `Camera3dBundle` now uses a new `Projection` enum instead of hard coding the projection into the type. There are a number of motivators for this change: the render graph is now a part of the bundle, the way "generic bundles" work in the Rust type system prevents nice `..default()` syntax, and changing projections at runtime is much easier with an enum (e.g. for editor scenarios). I'm open to discussing this choice, but I'm relatively certain we will all come to the same conclusion here. `Camera2dBundle` and `Camera3dBundle` are much clearer than being generic on marker components / using non-default constructors.

If you want to run a custom render graph on a camera, just set the `CameraRenderGraph` component:

```rust
commands.spawn_bundle(Camera3dBundle {
    camera_render_graph: CameraRenderGraph::new(some_render_graph_name),
    ..default()
})
```

Just note that if the graph requires data from specific components to work (such as `Camera3d` config, which is provided in the `Camera3dBundle`), make sure the relevant components have been added.

Speaking of using components to configure graphs / passes, there are a number of new configuration options:

```rust
commands.spawn_bundle(Camera3dBundle {
    camera_3d: Camera3d {
        // overrides the default global clear color
        clear_color: ClearColorConfig::Custom(Color::RED),
        ..default()
    },
    ..default()
});

commands.spawn_bundle(Camera3dBundle {
    camera_3d: Camera3d {
        // disables clearing
        clear_color: ClearColorConfig::None,
        ..default()
    },
    ..default()
});
```

Expect to see more of the "graph configuration Components on Cameras" pattern in the future.

By popular demand, UI no longer requires a dedicated camera. `UiCameraBundle` has been removed. `Camera2dBundle` and `Camera3dBundle` now both default to rendering UI as part of their own render graphs. To disable UI rendering for a camera, disable it using the CameraUi component:

```rust
commands
    .spawn_bundle(Camera3dBundle::default())
    .insert(CameraUi {
        is_enabled: false,
        ..default()
    })
```

## Other Changes

* The separate clear pass has been removed. We should revisit this for things like sky rendering, but I think this PR should "keep it simple" until we're ready to properly support that (for code complexity and performance reasons). We can come up with the right design for a modular clear pass in a followup pr.

* I reorganized bevy_core_pipeline into Core2dPlugin and Core3dPlugin (and core_2d / core_3d modules). Everything is pretty much the same as before, just logically separate. I've moved relevant types (like Camera2d, Camera3d, Camera3dBundle, Camera2dBundle) into their relevant modules, which is what motivated this reorganization.

* I adapted the `scene_viewer` example (which relied on the ActiveCameras behavior) to the new system. I also refactored bits and pieces to be a bit simpler.

* All of the examples have been ported to the new camera approach. `render_to_texture` and `multiple_windows` are now _much_ simpler. I removed `two_passes` because it is less relevant with the new approach. If someone wants to add a new "layered custom pass with CameraRenderGraph" example, that might fill a similar niche. But I don't feel much pressure to add that in this pr.

* Cameras now have `target_logical_size` and `target_physical_size` fields, which makes finding the size of a camera's render target _much_ simpler. As a result, the `Assets<Image>` and `Windows` parameters were removed from `Camera::world_to_screen`, making that operation much more ergonomic.

* Render order ambiguities between cameras with the same target and the same priority now produce a warning. This accomplishes two goals:

1. Now that there is no "global" active camera, by default spawning two cameras will result in two renders (one covering the other). This would be a silent performance killer that would be hard to detect after the fact. By detecting ambiguities, we can provide a helpful warning when this occurs.

2. Render order ambiguities could result in unexpected / unpredictable render results. Resolving them makes sense.
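To make the ordering and ambiguity rule concrete, here is a minimal standalone sketch. `Target`, `CameraInfo`, and `order_and_ambiguities` are hypothetical names for illustration only; the real check lives inside Bevy's render code and may differ in detail.

```rust
use std::collections::HashMap;

/// Hypothetical stand-in for a render target (not the Bevy `RenderTarget` type).
#[derive(Debug, Clone, PartialEq, Eq, Hash)]
pub enum Target {
    Window(u32),
    Texture(u32),
}

/// Hypothetical per-camera data relevant to ordering.
#[derive(Debug, Clone)]
pub struct CameraInfo {
    pub name: &'static str,
    pub target: Target,
    pub priority: isize,
    pub is_active: bool,
}

/// Returns the names of the active cameras in render order (lowest priority
/// first) and every (target, priority) pair shared by more than one camera.
pub fn order_and_ambiguities(
    cameras: &[CameraInfo],
) -> (Vec<&'static str>, Vec<(Target, isize)>) {
    let mut active: Vec<&CameraInfo> = cameras.iter().filter(|c| c.is_active).collect();
    // Stable sort: cameras with equal priority keep their spawn order here,
    // but that order is exactly what the warning says not to rely on.
    active.sort_by_key(|c| c.priority);

    let mut counts: HashMap<(Target, isize), usize> = HashMap::new();
    for camera in &active {
        *counts.entry((camera.target.clone(), camera.priority)).or_insert(0) += 1;
    }
    let mut ambiguous: Vec<(Target, isize)> = counts
        .into_iter()
        .filter(|(_, count)| *count > 1)
        .map(|(key, _)| key)
        .collect();
    ambiguous.sort_by_key(|(_, priority)| *priority);

    (active.iter().map(|c| c.name).collect(), ambiguous)
}
```

A non-empty second element corresponds to the case where a warning would be emitted.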

## Follow Up Work

* Per-Camera viewports, which will make it possible to render to a smaller area inside of a RenderTarget (great for something like splitscreen)

* Camera-specific MSAA config (should use the same "overriding" pattern used for ClearColor)

* Graph Based Camera Ordering: priorities are simple, but they make complicated ordering constraints harder to express. We should consider adopting a "graph based" camera ordering model with "before" and "after" relationships to other cameras (or build it "on top" of the priority system).

* Consider allowing graphs to run subgraphs from any nest level (aka a global namespace for graphs). Right now the 2d and 3d graphs each need their own UI subgraph, which feels "fine" in the short term. But being able to share subgraphs between other subgraphs seems valuable.

* Consider splitting `bevy_core_pipeline` into `bevy_core_2d` and `bevy_core_3d` packages. There's a shared "clear color" dependency here, which would need a new home.

# Objective

- Split PBR and 2D mesh shaders into types and bindings to prepare the shaders to be more reusable.

- See #3969 for details. I'm doing this in multiple steps to make review easier.

---

## Changelog

- Changed: 2D and PBR mesh shaders are now split into types and bindings, the following shader imports are available: `bevy_pbr::mesh_view_types`, `bevy_pbr::mesh_view_bindings`, `bevy_pbr::mesh_types`, `bevy_pbr::mesh_bindings`, `bevy_sprite::mesh2d_view_types`, `bevy_sprite::mesh2d_view_bindings`, `bevy_sprite::mesh2d_types`, `bevy_sprite::mesh2d_bindings`

## Migration Guide

- In shaders for 3D meshes:

  - `#import bevy_pbr::mesh_view_bind_group` -> `#import bevy_pbr::mesh_view_bindings`

  - `#import bevy_pbr::mesh_struct` -> `#import bevy_pbr::mesh_types`

    - NOTE: If you are using the mesh bind group at bind group index 2, you can remove those binding statements in your shader and just use `#import bevy_pbr::mesh_bindings` which itself imports the mesh types needed for the bindings.

- In shaders for 2D meshes:

  - `#import bevy_sprite::mesh2d_view_bind_group` -> `#import bevy_sprite::mesh2d_view_bindings`

  - `#import bevy_sprite::mesh2d_struct` -> `#import bevy_sprite::mesh2d_types`

    - NOTE: If you are using the mesh2d bind group at bind group index 2, you can remove those binding statements in your shader and just use `#import bevy_sprite::mesh2d_bindings` which itself imports the mesh2d types needed for the bindings.

# Objective

Models can be produced that do not have vertex tangents but do have normal map textures. The tangents can be generated. There is a way that the vertex tangents can be generated to be exactly invertible to avoid introducing error when recreating the normals in the fragment shader.

## Solution

- After attempts to get https://github.com/gltf-rs/mikktspace to integrate simple glam changes and version bumps, and with releases of that crate taking weeks or not being made at all (no offense intended to the authors/maintainers, bevy just has its own timelines and needs to take care of), it was decided to fork that repository. The following steps were taken:

  - mikktspace was forked to https://github.com/bevyengine/mikktspace in order to preserve the repository's history in case the original is ever taken down

  - The README in that repo was edited to add a note stating from where the repository was forked and explaining why

  - The repo was locked for changes as its only purpose is historical

  - The repo was integrated into the bevy repo using `git subtree add --prefix crates/bevy_mikktspace git@github.com:bevyengine/mikktspace.git master`

- In `bevy_mikktspace`:

  - The travis configuration was removed

  - `cargo fmt` was run

  - The `Cargo.toml` was conformed to bevy's (just adding bevy to the keywords, changing the homepage and repository, changing the version to 0.7.0-dev - importantly the license is exactly the same)

  - Remove the features, remove `nalgebra` entirely, only use `glam`, suppress clippy.

    - This was necessary because our CI runs clippy with `--all-features` and the `nalgebra` and `glam` features are mutually exclusive, plus I don't want to modify this highly numerically-sensitive code just to appease clippy and diverge even more from upstream.

- Rebase https://github.com/bevyengine/bevy/pull/1795

  - @jakobhellermann said it was fine to copy and paste but it ended up being almost exactly the same with just a couple of adjustments when validating correctness so I decided to actually rebase it and then build on top of it.

- Use the exact same fragment shader code to ensure correct normal mapping.

- Tested with both https://github.com/KhronosGroup/glTF-Sample-Models/tree/master/2.0/NormalTangentMirrorTest which has vertex tangents and https://github.com/KhronosGroup/glTF-Sample-Models/tree/master/2.0/NormalTangentTest which requires vertex tangent generation

Co-authored-by: alteous <alteous@outlook.com>

# Objective

allow meshes with equal z-depth to be rendered in a chosen order / avoid z-fighting

## Solution

add a depth_bias to SpecializedMaterial that is added to the mesh depth used for render-ordering.
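A minimal sketch of the idea, assuming the sort key is simply `distance + depth_bias`. `PhaseItem` and `sort_by_biased_depth` are hypothetical names, and whether a given phase sorts ascending or descending is not stated here, so the sketch just sorts ascending by the biased key.

```rust
/// Hypothetical stand-in for a queued mesh (not a Bevy type).
#[derive(Debug, Clone, Copy, PartialEq)]
pub struct PhaseItem {
    pub id: u32,
    /// Depth of the mesh as seen from the view.
    pub distance: f32,
    /// Material-provided offset added to `distance` for ordering only.
    pub depth_bias: f32,
}

/// Sort items by `distance + depth_bias`, ascending.
/// `f32::total_cmp` gives a total order, so NaN cannot panic the sort.
pub fn sort_by_biased_depth(items: &mut [PhaseItem]) {
    items.sort_by(|a, b| (a.distance + a.depth_bias).total_cmp(&(b.distance + b.depth_bias)));
}
```

Two meshes at the same depth then render in a deterministic order chosen by their biases instead of z-fighting.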

# Objective

- Add an `ExtractResourcePlugin` for convenience and consistency

## Solution

- Add an `ExtractResourcePlugin` similar to `ExtractComponentPlugin` but for ECS `Resource`s. The system that is executed simply clones the main world resource into a render world resource, if and only if the main world resource was either added or changed since the last execution of the system.

- Add an `ExtractResource` trait with a `fn extract_resource(res: &Self) -> Self` function. This is used by the `ExtractResourcePlugin` to extract the resource

- Add a derive macro for `ExtractResource` on a `Resource` with the `Clone` trait, that simply returns `res.clone()`

- Use `ExtractResourcePlugin` wherever both possible and appropriate
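The shape of this can be sketched without Bevy. The trait signature below follows the one given above; `Tracked` and `extract_if_changed` are hypothetical stand-ins for ECS change detection and the plugin's system, so treat this as an illustration rather than the actual implementation.

```rust
/// Mirrors the trait described above: build the render world value from the
/// main world value.
pub trait ExtractResource: Sized {
    fn extract_resource(res: &Self) -> Self;
}

/// Example resource. The hand-written impl does what the derive is described
/// as doing: it returns `res.clone()`.
#[derive(Debug, Clone, PartialEq)]
pub struct Settings {
    pub exposure: f32,
}

impl ExtractResource for Settings {
    fn extract_resource(res: &Self) -> Self {
        res.clone()
    }
}

/// Hypothetical stand-in for change detection on a main world resource.
pub struct Tracked<R> {
    pub value: R,
    pub changed: bool,
}

/// Copy the resource into the render world only if it was added or changed
/// since the last run. Returns whether an extraction happened.
pub fn extract_if_changed<R: ExtractResource>(
    main: &mut Tracked<R>,
    render: &mut Option<R>,
) -> bool {
    if !main.changed {
        return false;
    }
    *render = Some(R::extract_resource(&main.value));
    main.changed = false;
    true
}
```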

# Objective

- Fixes #4456

## Solution

- Removed the `near` and `far` fields from the camera and the views.

---

## Changelog

- Removed the `near` and `far` fields from the camera and the views.

- Removed the `ClusterFarZMode::CameraFarPlane` far z mode.

## Migration Guide

- Cameras no longer accept near and far values during initialization

- `ClusterFarZMode::Constant` should be used with the far value instead of `ClusterFarZMode::CameraFarPlane`

# Objective

- noticed a few Vec3 and Vec2 that could be const

## Solution

- Declared them as const

- It seems to make a tiny improvement in the `many_lights` example, but given that the change is not complex at all it could still be worth it

# Objective

Add support for vertex colors

## Solution

This change is modeled after how vertex tangents are handled, so the shader is conditionally compiled with vertex color support if the mesh has the corresponding attribute set.

Vertex colors are multiplied by the base color. I'm not sure if this is the best for all cases, but may be useful for modifying vertex colors without creating a new mesh.

I chose `VertexFormat::Float32x4`, but I'd prefer 16-bit floats if/when support is added.
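For reference, the multiplication described above is component-wise over RGBA. A minimal sketch, with `modulate` as a hypothetical helper name (the real work happens in the shader):

```rust
/// Multiply a vertex color by the material's base color, component-wise.
/// Both colors are RGBA, matching a `Float32x4` attribute.
pub fn modulate(vertex_color: [f32; 4], base_color: [f32; 4]) -> [f32; 4] {
    [
        vertex_color[0] * base_color[0],
        vertex_color[1] * base_color[1],
        vertex_color[2] * base_color[2],
        vertex_color[3] * base_color[3],
    ]
}
```

With a white base color the vertex colors pass through unchanged, which is why tinting via the base color works without rebuilding the mesh.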

## Changelog

### Added

- Vertex colors can be specified using the `Mesh::ATTRIBUTE_COLOR` mesh attribute.

# Objective

- When spawning a sprite the alpha is used for transparency, but when using the `Color::into()` implementation to spawn a `StandardMaterial`, the alpha is ignored.

- Pretty much every time I want to make something transparent I start with a `Color::rgb().into()` and I'm always surprised that it doesn't work when changing it to `Color::rgba().into()`

- It's possible there's an issue with this approach I am not thinking of, but I'm not sure what's the point of setting an alpha value without the goal of making a color transparent.

## Solution

- Set the alpha_mode to AlphaMode::Blend when the alpha is not the default value.
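A minimal sketch of that conversion, using toy types rather than Bevy's `Color` and `StandardMaterial`. It assumes "not the default value" means an alpha below `1.0`; `Rgba`, `Alpha`, and `ToyMaterial` are hypothetical names.

```rust
/// Toy color type (not Bevy's `Color`).
#[derive(Debug, Clone, Copy, PartialEq)]
pub struct Rgba {
    pub r: f32,
    pub g: f32,
    pub b: f32,
    pub a: f32,
}

/// Toy alpha mode (not Bevy's `AlphaMode`).
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum Alpha {
    Opaque,
    Blend,
}

/// Toy material (not Bevy's `StandardMaterial`).
#[derive(Debug, Clone, Copy, PartialEq)]
pub struct ToyMaterial {
    pub base_color: Rgba,
    pub alpha_mode: Alpha,
}

impl From<Rgba> for ToyMaterial {
    fn from(color: Rgba) -> Self {
        // Assumption: the default alpha is 1.0, so anything lower asks for blending.
        let alpha_mode = if color.a < 1.0 { Alpha::Blend } else { Alpha::Opaque };
        ToyMaterial { base_color: color, alpha_mode }
    }
}
```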

---

## Migration Guide

This is not a breaking change, but it can easily be migrated to reduce boilerplate

```rust
commands.spawn_bundle(PbrBundle {
    mesh: meshes.add(shape::Cube::default().into()),
    material: materials.add(StandardMaterial {
        base_color: Color::rgba(1.0, 0.0, 0.0, 0.75),
        alpha_mode: AlphaMode::Blend,
        ..default()
    }),
    ..default()
});

// becomes

commands.spawn_bundle(PbrBundle {
    mesh: meshes.add(shape::Cube::default().into()),
    material: materials.add(Color::rgba(1.0, 0.0, 0.0, 0.75).into()),
    ..default()
});
```

Co-authored-by: Charles <IceSentry@users.noreply.github.com>

### Problem

It currently isn't possible to construct the default value of a reflected type. Because of that, it isn't possible to use `add_component` of `ReflectComponent` to add a new component to an entity because you can't know what the initial value should be.

### Solution

1. add `ReflectDefault` type

```rust
#[derive(Clone)]
pub struct ReflectDefault {
    default: fn() -> Box<dyn Reflect>,
}

impl ReflectDefault {
    pub fn default(&self) -> Box<dyn Reflect> {
        (self.default)()
    }
}

impl<T: Reflect + Default> FromType<T> for ReflectDefault {
    fn from_type() -> Self {
        ReflectDefault {
            default: || Box::new(T::default()),
        }
    }
}
```

2. add `#[reflect(Default)]` to all component types that implement `Default` and are user facing (so not `ComputedSize`, `CubemapVisibleEntities` etc.)

This makes it possible to add the default value of a component to an entity without any compile-time information:

```rust
fn main() {
    let mut app = App::new();
    app.register_type::<Camera>();

    let type_registry = app.world.get_resource::<TypeRegistry>().unwrap();
    let type_registry = type_registry.read();

    let camera_registration = type_registry.get(std::any::TypeId::of::<Camera>()).unwrap();
    let reflect_default = camera_registration.data::<ReflectDefault>().unwrap();
    let reflect_component = camera_registration
        .data::<ReflectComponent>()
        .unwrap()
        .clone();

    let default = reflect_default.default();

    drop(type_registry);

    let entity = app.world.spawn().id();
    reflect_component.add_component(&mut app.world, entity, &*default);

    let camera = app.world.entity(entity).get::<Camera>().unwrap();
    dbg!(&camera);
}
```

### Open questions

- should we have `ReflectDefault` or `ReflectFromWorld` or both?

# Objective

- While optimising many_cubes, I noticed that all material handles are extracted regardless of whether the entity to which the handle belongs is visible or not. As such >100k handles are extracted when only <20k are visible.

## Solution

- Only extract material handles of visible entities.

- This improves `many_cubes -- sphere` from ~42fps to ~48fps. It reduces not only the extraction time but also system commands time. `Handle<StandardMaterial>` extraction and its system commands went from 0.522ms and 3.710ms respectively to 0.267ms and 0.227ms, an 88% reduction for this system in this case. It's very view dependent but...

# Objective

- Creating and executing render passes has GPU overhead. If there are no phase items in the render phase to draw, then this overhead should not be incurred as it has no benefit.

## Solution

- Check whether there are any phase items to draw, and if there are none, neither construct nor execute the render pass
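The check amounts to an early return before any pass is created. A minimal sketch with toy types; `ToyPhase` and `run_pass` are hypothetical names, and the counter stands in for the cost of beginning a real render pass.

```rust
/// Toy render phase holding the items queued for one view.
pub struct ToyPhase<T> {
    pub items: Vec<T>,
}

/// "Runs" a pass for the phase and returns how many items were drawn.
/// `passes_created` counts how often a pass was actually begun.
pub fn run_pass<T>(phase: &ToyPhase<T>, passes_created: &mut u32) -> usize {
    // Nothing to draw: skip pass creation entirely.
    if phase.items.is_empty() {
        return 0;
    }
    *passes_created += 1;
    phase.items.len()
}
```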

---

## Changelog

- Changed: Do not create nor execute empty render passes

# Objective

- Meshes are queued in opaque phase instead of transparent phase when drawing wireframes.

- There is a name mismatch.

## Solution

- Rename `transparent_phase` to `opaque_phase` in `wireframe.rs`.

# Objective

Reduce the catch-all grab-bag of functionality in bevy_core by moving FloatOrd to bevy_utils.

A step in addressing #2931 and splitting bevy_core into more specific locations.

## Solution

Move FloatOrd into bevy_utils. Fix the compile errors.

As a result, bevy_core_pipeline, bevy_pbr, bevy_sprite, bevy_text, and bevy_ui no longer depend on bevy_core (they were only using it for `FloatOrd` previously).

# Objective

- Related #4276.

- Part of the splitting process of #3503.

## Solution

- Move `Size` to `bevy_ui`.

## Reasons

- `Size` is only needed in `bevy_ui` (because it needs to use `Val` instead of `f32`), but it's also used as a worse `Vec2` replacement in other areas.

- `Vec2` is more powerful than `Size` so it should be used whenever possible.

- Discussion in #3503.

## Changelog

### Changed

- The `Size` type got moved from `bevy_math` to `bevy_ui`.

## Migration Guide

- The `Size` type got moved from `bevy::math` to `bevy::ui`. To migrate you just have to import `bevy::ui::Size` instead of `bevy::math::Size` or use the `bevy::prelude` instead.

Co-authored-by: KDecay <KDecayMusic@protonmail.com>

# Objective

- Fix `ClusterConfig::None`

- This fix is from @robtfm but they didn't have time to submit it, so I am.

## Solution

- Always clear clusters and skip processing when `ClusterConfig::None`

- Conditionally remove `VisiblePointLights` from the view if it is present

# Objective

- https://github.com/bevyengine/bevy/pull/4098 still hasn't fixed minimisation on Windows.

- `Clusters.lights` is assumed to have the number of items given by the product of `Clusters.dimensions`'s axes.

## Solution

- Make that true in `clear`.
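A minimal sketch of the invariant, with a toy `ToyClusters` type (hypothetical, and much simpler than the real `Clusters`): after `clear`, `lights` has exactly one empty entry per cluster.

```rust
/// Toy version of the cluster storage (not the Bevy `Clusters` type).
pub struct ToyClusters {
    /// Number of clusters along x, y, and z.
    pub dimensions: [u32; 3],
    /// One list of light indices per cluster.
    pub lights: Vec<Vec<u32>>,
}

impl ToyClusters {
    /// Empty every per-cluster list and make sure there is exactly
    /// `x * y * z` of them, so indexing by cluster is always in bounds.
    pub fn clear(&mut self) {
        let count: usize = self.dimensions.iter().map(|&d| d as usize).product();
        for cluster_lights in self.lights.iter_mut() {
            // Keeps each inner allocation for reuse.
            cluster_lights.clear();
        }
        self.lights.resize_with(count, Vec::new);
    }
}
```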

# Objective

- Fixes #4234

- Fixes #4473

- Built on top of #3989

- Improve performance of `assign_lights_to_clusters`

## Solution

- Remove the OBB-based cluster light assignment algorithm and calculation of view space AABBs

- Implement the 'iterative sphere refinement' algorithm used in Just Cause 3 by Emil Persson as documented in the Siggraph 2015 Practical Clustered Shading talk by Persson, on pages 42-44 http://newq.net/dl/pub/s2015_practical.pdf

- Adapt to also support orthographic projections

- Add `many_lights -- orthographic` for testing many lights using an orthographic projection

## Results

- `assign_lights_to_clusters` in `many_lights` before this PR on an M1 Max over 1500 frames had a median execution time of 1.71ms. With this PR it is 1.51ms, a reduction of 0.2ms or 11.7% for this system.

---

## Changelog

- Changed: Improved cluster light assignment performance

Co-authored-by: robtfm <50659922+robtfm@users.noreply.github.com>

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

- While animating 501 https://github.com/KhronosGroup/glTF-Sample-Models/tree/master/2.0/BrainStem, I noticed things were getting a little slow

- Looking in tracy, the system `extract_skinned_meshes` is taking a lot of time, with a mean duration of 15.17ms

## Solution

- ~~Use `Vec` instead of a `SmallVec`~~

- ~~Don't use a temporary variable~~

- Compute the affine matrix as an `Affine3A` instead

- Remove the `temp` vec

| |mean|
|---|---|
|base|15.17ms|
|~~vec~~|~~9.31ms~~|
|~~no temp variable~~|~~11.31ms~~|
|removing the temp vector|8.43ms|
|affine|13.21ms|
|all together|7.23ms|

# Objective

- Make use of storage buffers, where they are available, for clustered forward bindings to support far more point lights in a scene

- Fixes #3605

- Based on top of #4079

This branch on an M1 Max can keep 60fps with about 2150 point lights of radius 1m in the Sponza scene where I've been testing. The bottleneck is mostly assigning lights to clusters which grows faster than linearly (I think 1000 lights was about 1.5ms and 5000 was 7.5ms). I have seen papers and presentations leveraging compute shaders that can get this up to over 1 million. That said, I think any further optimisations should probably be done in a separate PR.

## Solution

- Add `RenderDevice` to the `Material` and `SpecializedMaterial` trait `::key()` functions to allow setting flags on the keys depending on feature/limit availability

- Make `GpuPointLights` and `ViewClusterBuffers` into enums containing `UniformVec` and `StorageBuffer` variants. Implement the necessary API on them to make usage the same for both cases, and the only difference is at initialisation time.

- Appropriate shader defs in the shader code to handle the two cases
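The enum-with-a-shared-API approach can be sketched as follows. `ToyLightBuffer` and `MAX_UNIFORM_LIGHTS` are hypothetical (the real types wrap GPU buffers, and the cap of 256 is an assumption for illustration); the `>= 3` check mirrors the heuristic discussed in the decisions section.

```rust
/// Hypothetical cap on lights that fit in a fixed-size uniform buffer.
pub const MAX_UNIFORM_LIGHTS: usize = 256;

/// Toy stand-in for an enum such as `GpuPointLights`: same API for both
/// variants, the difference is decided once at construction time.
pub enum ToyLightBuffer {
    Uniform(Vec<u32>),
    Storage(Vec<u32>),
}

impl ToyLightBuffer {
    /// Pick the variant from the device limit.
    pub fn new(max_storage_buffers_per_shader_stage: u32) -> Self {
        if max_storage_buffers_per_shader_stage >= 3 {
            ToyLightBuffer::Storage(Vec::new())
        } else {
            ToyLightBuffer::Uniform(Vec::with_capacity(MAX_UNIFORM_LIGHTS))
        }
    }

    /// Add a light. Returns `false` if the uniform variant is already full.
    pub fn push(&mut self, light: u32) -> bool {
        match self {
            ToyLightBuffer::Uniform(lights) => {
                if lights.len() >= MAX_UNIFORM_LIGHTS {
                    return false;
                }
                lights.push(light);
                true
            }
            ToyLightBuffer::Storage(lights) => {
                lights.push(light);
                true
            }
        }
    }

    /// Number of lights currently stored, regardless of variant.
    pub fn len(&self) -> usize {
        match self {
            ToyLightBuffer::Uniform(lights) | ToyLightBuffer::Storage(lights) => lights.len(),
        }
    }
}
```

Callers use `push` and `len` the same way in both cases; only the capacity differs.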

## Context on some decisions / open questions

- I'm using `max_storage_buffers_per_shader_stage >= 3` as a check to see if storage buffers are supported. I was thinking about diving into 'binding resource management' but it feels like we don't have enough use cases to understand the problem yet, and it is mostly a separate concern to this PR, so I think it should be handled separately.

- Should `ViewClusterBuffers` and `ViewClusterBindings` be merged, duplicating the count variables into the enum variants?

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

Animation with shadows crashes with:

```
thread 'main' panicked at 'wgpu error: Validation Error

Caused by:
    In Device::create_render_pipeline
      note: label = `shadow_pipeline`
    error matching VERTEX shader requirements against the pipeline
    shader global ResourceBinding { group: 1, binding: 1 } is not available in the layout pipeline layout
    visibility flags don't include the shader stage
```

Animation with wireframe crashes with:

```
thread 'main' panicked at 'wgpu error: Validation Error

Caused by:
    In Device::create_render_pipeline
      note: label = `opaque_mesh_pipeline`
    error matching VERTEX shader requirements against the pipeline
    shader global ResourceBinding { group: 2, binding: 0 } is not available in the layout pipeline layout
    binding is missing from the pipeline layout
```

## Solution

- Fix the bindings

# Objective

Add a system parameter `ParamSet` to be used as container for conflicting parameters.

## Solution

Added two methods to the SystemParamState trait, which gives the access used by the parameter. Did the implementation. Added some convenience methods to FilteredAccessSet. Changed `get_conflicts` to return every conflicting component instead of breaking on the first conflicting `FilteredAccess`.

Co-authored-by: bilsen <40690317+bilsen@users.noreply.github.com>

# Objective

Load skeletal weights and indices from GLTF files. Animate meshes.

## Solution

- Load skeletal weights and indices from GLTF files.

- Added `SkinnedMesh` component and `SkinnedMeshInverseBindPose` asset

- Added `extract_skinned_meshes` to extract joint matrices.

- Added queue phase systems for enqueuing the buffer writes.

Some notes:

- This ports part of #2359 to the current main.

- This generates new `BufferVec`s and bind groups every frame. The expectation here is that the number of `Query::get` calls during extract is probably going to be the stronger bottleneck, with up to 256 calls per skinned mesh. Until that is optimized, caching buffers and bind groups is probably a non-concern.

- Unfortunately, due to the uniform size requirements, this means a 16KB buffer is allocated for every skinned mesh every frame. There's probably a few ways to get around this, but most of them require either compute shaders or storage buffers, which are both incompatible with WebGL2.
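The 16KB figure is consistent with 256 joints each storing one 4x4 `f32` matrix. That breakdown is an inference from the numbers in these notes, not something stated explicitly, so the constants below are assumptions:

```rust
/// Assumed maximum number of joints per skinned mesh.
pub const MAX_JOINTS: usize = 256;

/// Bytes in one 4x4 matrix of `f32` (16 floats of 4 bytes each).
pub const MAT4_BYTES: usize = 16 * std::mem::size_of::<f32>();

/// Bytes in the uniform buffer for one skinned mesh.
pub const JOINT_BUFFER_BYTES: usize = MAX_JOINTS * MAT4_BYTES;

/// Total bytes written per frame for `skinned_meshes` meshes.
pub fn per_frame_bytes(skinned_meshes: usize) -> usize {
    skinned_meshes * JOINT_BUFFER_BYTES
}
```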

Co-authored-by: james7132 <contact@jamessliu.com>

Co-authored-by: François <mockersf@gmail.com>

Co-authored-by: James Liu <contact@jamessliu.com>

* Refactor assign_lights_to_clusters to always clear + update clusters, even if the screen size isn't available yet / is zero. This fixes #4167. We still avoid the "expensive" per-light work when the screen size isn't available yet. I also consolidated some logic to eliminate some redundancies.

* Removed _a ton_ of (potentially very large) per-frame reallocations

* Removed `Res<VisiblePointLights>` (a vec) in favor of `Res<GlobalVisiblePointLights>` (a hashmap). We were allocating a new hashmap every frame, then collecting it into a vec every frame, then in another system _re-generating the hashmap_. It is always used like a hashmap, so we might as well embrace that. We now reuse the same hashmap every frame and don't use any intermediate collections.

* We were re-allocating Clusters aabb and light vectors every frame by re-constructing Clusters every frame. We now re-use the existing collections.

* Reuse per-camera VisiblePointLight vecs when possible instead of allocating them every frame. We now only insert VisiblePointLights if the component doesn't exist yet.
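The reuse pattern relies on `clear` keeping a collection's allocation. A minimal sketch with a toy `ToyVisibleLights` type (hypothetical; the real resource holds entities rather than `u32` ids):

```rust
use std::collections::HashMap;

/// Toy stand-in for a per-frame set of visible lights keyed by id.
pub struct ToyVisibleLights {
    map: HashMap<u32, f32>,
}

impl ToyVisibleLights {
    pub fn new() -> Self {
        ToyVisibleLights { map: HashMap::new() }
    }

    /// Refill the map for this frame. `HashMap::clear` removes the entries
    /// but keeps the allocated memory, so steady-state frames do not reallocate.
    pub fn rebuild(&mut self, lights: &[(u32, f32)]) {
        self.map.clear();
        self.map.extend(lights.iter().copied());
    }

    pub fn len(&self) -> usize {
        self.map.len()
    }

    pub fn capacity(&self) -> usize {
        self.map.capacity()
    }
}
```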

# Objective

- Fixes #3970

- To support Bevy's shader abstraction (shader defs, shader imports and hot shader reloading) for compute shaders, I have followed cart's advice and changed the `PipelineCache` to accommodate both compute and render pipelines.

## Solution

- renamed `RenderPipelineCache` to `PipelineCache`

- Cached Pipelines are now represented by an enum (render, compute)

- split the `SpecializedPipelines` into `SpecializedRenderPipelines` and `SpecializedComputePipelines`

- updated the game of life example

## Open Questions

- should `SpecializedRenderPipelines` and `SpecializedComputePipelines` be merged and how would we do that?

- should the `get_render_pipeline` and `get_compute_pipeline` methods be merged?

- is pipeline specialization for different entry points a good pattern

Co-authored-by: Kurt Kühnert <51823519+Ku95@users.noreply.github.com>

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

- Reduce time spent in the `check_visibility` system

## Solution

- Use `Vec3A` for all bounding volume types to leverage SIMD optimisations and to avoid repeated runtime conversions from `Vec3` to `Vec3A`

- Inline all bounding volume intersection methods

- Add on-the-fly calculated `Aabb` -> `Sphere` and do `Sphere`-`Frustum` intersection tests before `Aabb`-`Frustum` tests. This is faster for `many_cubes` but could be slower in other cases where the sphere test gives a false-positive that the `Aabb` test discards. Also, I tested precalculating the `Sphere`s and inserting them alongside the `Aabb` but this was slower.

- Do not test meshes against the far plane. Apparently games don't do this anymore with infinite projections, and it's one fewer plane to test against. I made it optional and still do the test for culling lights but that is up for discussion.

- These collectively reduce `check_visibility` execution time in `many_cubes -- sphere` from 2.76ms to 1.48ms and increase frame rate from ~42fps to ~44fps
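The sphere-before-`Aabb` ordering can be illustrated with a small, self-contained sketch. It uses plain `[f32; 3]` arrays rather than `Vec3A`, assumes normalized plane normals pointing into the frustum, and all type and function names are hypothetical rather than the ones in Bevy. Passing only five planes would correspond to skipping the far plane.

```rust
/// Half-space `dot(normal, p) + d >= 0`; `normal` is assumed to be unit length.
#[derive(Clone, Copy)]
pub struct Plane {
    pub normal: [f32; 3],
    pub d: f32,
}

pub struct Aabb {
    pub center: [f32; 3],
    pub half_extents: [f32; 3],
}

#[inline]
fn dot(a: [f32; 3], b: [f32; 3]) -> f32 {
    a[0] * b[0] + a[1] * b[1] + a[2] * b[2]
}

impl Plane {
    #[inline]
    fn signed_distance(&self, p: [f32; 3]) -> f32 {
        dot(self.normal, p) + self.d
    }
}

/// Cheap test: one dot product per plane.
#[inline]
pub fn sphere_intersects(planes: &[Plane], center: [f32; 3], radius: f32) -> bool {
    planes.iter().all(|p| p.signed_distance(center) >= -radius)
}

/// Tighter test: projects the half extents onto each plane normal.
#[inline]
pub fn aabb_intersects(planes: &[Plane], aabb: &Aabb) -> bool {
    planes.iter().all(|p| {
        let n = p.normal;
        let h = aabb.half_extents;
        let r = h[0] * n[0].abs() + h[1] * n[1].abs() + h[2] * n[2].abs();
        p.signed_distance(aabb.center) >= -r
    })
}

/// Bounding sphere computed on the fly; the AABB test only runs if the sphere passes.
pub fn is_visible(planes: &[Plane], aabb: &Aabb) -> bool {
    let radius = dot(aabb.half_extents, aabb.half_extents).sqrt();
    sphere_intersects(planes, aabb.center, radius) && aabb_intersects(planes, aabb)
}
```

Because the sphere encloses the box, a sphere rejection is always safe; the false-positive case mentioned above is when the sphere passes and the `Aabb` test still has to run and reject.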

# Objective

- Support compressed textures including 'universal' formats (ETC1S, UASTC) and transcoding of them to

- Support `.dds`, `.ktx2`, and `.basis` files

## Solution

- Fixes https://github.com/bevyengine/bevy/issues/3608. Look there for more details.

- Note that the functionality is all enabled through non-default features. If it is desirable to enable some by default, I can do that.

- The `basis-universal` crate, used for `.basis` file support and for transcoding, is built on bindings against a C++ library. It's not feasible to rewrite it in Rust in a short amount of time. There are no Rust alternatives of which I am aware, and it's specialised code. As far as I can tell it doesn't currently support the wasm target, but I don't know for sure. However, it is possible to build the upstream C++ library with emscripten, so there is perhaps a way to add support for web too with some shenanigans.

- There's no support for transcoding from BasisLZ/ETC1S in KTX2 files as it was quite non-trivial to implement and didn't feel important given people could use `.basis` files for ETC1S.

# Objective

Fix cluster tilesize and tilecount calculations.

Fixes https://github.com/bevyengine/bevy/issues/4127 & https://github.com/bevyengine/bevy/issues/3596

## Solution

- calculate tilesize as the smallest integers such that `dimensions.xy()` tiles will cover the screen

- calculate final dimensions as the smallest integers such that final dimensions * tilesize will cover the screen
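Both steps are ceiling divisions. A minimal sketch, assuming integer pixel sizes and a requested tile count per axis; the function names are hypothetical and this is not the code from the PR:

```rust
/// Ceiling division for positive `b`.
fn ceil_div(a: u32, b: u32) -> u32 {
    (a + b - 1) / b
}

/// Returns `(tile_size, tile_count)` per axis.
///
/// `tile_size` is the smallest integer size such that `requested` tiles cover
/// the screen; `tile_count` is the smallest integer count such that
/// `tile_count * tile_size` covers the screen.
pub fn tile_size_and_count(screen: (u32, u32), requested: (u32, u32)) -> ((u32, u32), (u32, u32)) {
    // Guard against zero so the divisions below are always defined.
    let requested = (requested.0.max(1), requested.1.max(1));
    let tile_size = (
        ceil_div(screen.0, requested.0).max(1),
        ceil_div(screen.1, requested.1).max(1),
    );
    let tile_count = (
        ceil_div(screen.0, tile_size.0),
        ceil_div(screen.1, tile_size.1),
    );
    (tile_size, tile_count)
}
```

On a 10x10 screen with 16x9 requested tiles this gives a tile size of 1x2 and a final count of 10x5, so small screens end up with fewer tiles than requested instead of tiles of size zero.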

There is more cleanup that could be done in these functions. A future PR will likely remove the tilesize completely, so this is just a minimal change set to fix the current bug at small screen sizes.

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

- provide some customisation for default cluster setup

- avoid "cluster index lists is full" in all cases (using a strategy outlined by @superdump)

## Solution

Add ClusterConfig enum (which can be inserted into a view at any time) to allow specifying cluster setup with variants:

- None (do not do any light assignment - for views which do not require light info, e.g. minimaps etc)

- Single (one cluster)

- XYZ (explicit cluster counts in each dimension)

- FixedZ (most similar to current - specify Z-slices and total, then x and y counts are dynamically determined to give approximately square clusters based on current aspect ratio)

Defaults to `FixedZ { total: 4096, z: 24 }`, which is similar to the current setup.

Per frame, estimate the number of indices that would be required for the current config and decrease the cluster counts / increase the cluster sizes in the x and y dimensions if the index list would be too small.
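A rough sketch of the enum and of how `FixedZ` might derive x and y counts from the aspect ratio. The variant names and the default come from the description above; the field layout of `XYZ`, the `dimensions` method, and the exact rounding are assumptions for illustration, and the per-frame index estimate is not modelled here.

```rust
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum ClusterConfig {
    /// No light assignment at all.
    None,
    /// One cluster covering the whole view.
    Single,
    /// Explicit cluster counts in each dimension (field names assumed).
    XYZ { x: u32, y: u32, z: u32 },
    /// Fixed number of Z slices and a total cluster budget.
    FixedZ { total: u32, z: u32 },
}

impl Default for ClusterConfig {
    fn default() -> Self {
        ClusterConfig::FixedZ { total: 4096, z: 24 }
    }
}

impl ClusterConfig {
    /// Hypothetical helper: cluster counts `(x, y, z)` for a view of the given size.
    pub fn dimensions(&self, width: u32, height: u32) -> (u32, u32, u32) {
        match *self {
            ClusterConfig::None => (0, 0, 0),
            ClusterConfig::Single => (1, 1, 1),
            ClusterConfig::XYZ { x, y, z } => (x, y, z),
            ClusterConfig::FixedZ { total, z } => {
                let z = z.max(1);
                // Budget of clusters available in each Z slice.
                let per_slice = (total / z).max(1) as f32;
                let aspect = width.max(1) as f32 / height.max(1) as f32;
                // Choose x / y close to the aspect ratio so clusters are roughly
                // square, rounding down to stay within the budget.
                let y = (per_slice / aspect).sqrt().floor().max(1.0);
                let x = (per_slice / y).floor().max(1.0);
                (x as u32, y as u32, z)
            }
        }
    }
}
```

With the default config and a 1280x720 view this sketch yields 18x9x24 clusters, which stays under the 4096 total.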

notes:

- I didn't put `ClusterConfig` in the camera bundles, to avoid introducing a dependency from bevy_render to bevy_pbr. The `ClusterConfig` enum comes with a pbr-centric impl block, so I didn't want to move that into bevy_render either.

- ~Might want to add None variant to cluster config for views that don't care about lights?~

- Not well tested for orthographic

- ~there's a cluster_muck branch on my repo which includes some diagnostics / a modified lighting example which may be useful for tyre-kicking~ (outdated, i will bring it up to date if required)

anecdotal timings:

FPS on the lighting demo is negligibly better (~5%), maybe due to a small optimisation constraining the light aabb to be in front of the camera

FPS on the lighting demo with 100 extra lights added is ~33% faster, and also renders correctly as the cluster index count is no longer exceeded

# Objective

Currently, all directional and point lights have their viewing frusta recalculated every frame, even if they have not moved or been disabled/enabled.

## Solution

The relevant systems now make use of change detection to only update those lights whose viewing frusta may have changed.

This makes it possible for materials to configure front or back face culling, or disable culling.

Initially I looked at specializing the Mesh, which currently controls this state, but conceptually it seems more appropriate to control this at the material level, not the mesh level.

_Just for reference, this also seems to be consistent with Unity, where materials/shaders can configure the culling mode between front/back/off, as opposed to configuring any culling state when importing a mesh._

After some archaeology, trying to understand how this might relate to the existing `double_sided` option, it was determined that `double_sided` is a more high-level lighting option, originally from Filament, that will cause the normals for back faces to be flipped.

For the sake of avoiding complexity while keeping control, this currently keeps the options orthogonal and adds some clarifying documentation for `double_sided`. This won't affect any existing apps, since there hasn't been a way to disable backface culling up until now, so the option was essentially redundant.

`double_sided` support could potentially be updated to imply disabling of backface culling.

For reference https://github.com/bevyengine/bevy/pull/3734/commits also looks at exposing cull mode control. I think the main difference here is that this patch handles RenderPipelineDescriptor specialization directly within the StandardMaterial implementation instead of communicating info back to the Mesh via the `queue_material_meshes` system.

Because material.rs builds up the final `RenderPipelineDescriptor` by first calling `specialize` for the `MeshPipeline` and then `specialize` for the material, we have a natural place to override anything in the descriptor that was first configured for the mesh state.
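The ordering described above can be sketched with simplified stand-in types. `Face`, `PrimitiveState` and the descriptor are loosely modelled on their wgpu counterparts but are defined locally here; `MaterialKey`, `specialize_material` and `build_descriptor` are hypothetical names, not the actual Bevy API.

```rust
// Simplified, locally defined stand-ins; not the real wgpu/Bevy types.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum Face {
    Front,
    Back,
}

#[derive(Debug, Clone, PartialEq)]
pub struct PrimitiveState {
    /// `None` disables culling entirely.
    pub cull_mode: Option<Face>,
}

#[derive(Debug, Clone, PartialEq)]
pub struct RenderPipelineDescriptor {
    pub primitive: PrimitiveState,
}

pub struct MeshPipeline;

impl MeshPipeline {
    /// Mesh-level specialization runs first and picks a default (assumed: back-face culling).
    pub fn specialize(&self) -> RenderPipelineDescriptor {
        RenderPipelineDescriptor {
            primitive: PrimitiveState { cull_mode: Some(Face::Back) },
        }
    }
}

/// Hypothetical per-material key carrying the requested cull mode.
pub struct MaterialKey {
    pub cull_mode: Option<Face>,
}

/// Material-level specialization runs second and may override the mesh defaults.
pub fn specialize_material(descriptor: &mut RenderPipelineDescriptor, key: &MaterialKey) {
    descriptor.primitive.cull_mode = key.cull_mode;
}

/// Mesh first, then material, mirroring the order described in the text.
pub fn build_descriptor(mesh: &MeshPipeline, key: &MaterialKey) -> RenderPipelineDescriptor {
    let mut descriptor = mesh.specialize();
    specialize_material(&mut descriptor, key);
    descriptor
}
```

Since the material step runs last, no information has to flow back to the mesh to change the cull mode.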

# Objective

Add Visibility for lights

## Solution

- add `Visibility` to `PointLightBundle` and `DirectionalLightBundle`

- filter the lights that are used by `Visibility.is_visible`

note: includes changes from #3916 due to overlap, will be cleaner after that is merged