# Objective

Post processing effects cannot read and write to the same texture. Currently they must own their own intermediate texture and redundantly copy from that back to the main texture. This is very inefficient.

Additionally, working with ViewTarget is more complicated than it needs to be, especially when working with HDR textures.

## Solution

`ViewTarget` now stores two copies of the "main texture". It uses an atomic value to track which is currently the "main texture" (this interior mutability is necessary to accommodate read-only RenderGraph execution).

`ViewTarget` now has a `post_process_write` method, which will return a source and destination texture. Each call to this method will flip between the two copies of the "main texture".

```rust
let post_process = view_target.post_process_write();
let source_texture = post_process.source;
let destination_texture = post_process.destination;
```

The caller _must_ read from the source texture and write to the destination texture, as it is assumed that the destination texture will become the new "main texture".
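
The flip described above can be modelled without any rendering code. The following is a minimal sketch, not Bevy's implementation: `TextureId`, `PingPongTarget`, and `PostProcessWrite` are hypothetical stand-ins, and only the method names `post_process_write` and `main_texture` are taken from the description.

```rust
use std::sync::atomic::{AtomicUsize, Ordering};

/// Hypothetical stand-in for a GPU texture handle.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub struct TextureId(pub u32);

/// Source/destination pair handed out for one post-processing pass.
#[derive(Debug, PartialEq, Eq)]
pub struct PostProcessWrite {
    pub source: TextureId,
    pub destination: TextureId,
}

/// Two copies of the "main texture" plus an atomic index of the active one.
pub struct PingPongTarget {
    textures: [TextureId; 2],
    active: AtomicUsize,
}

impl PingPongTarget {
    pub fn new(a: TextureId, b: TextureId) -> Self {
        Self {
            textures: [a, b],
            active: AtomicUsize::new(0),
        }
    }

    /// The texture currently considered "main".
    pub fn main_texture(&self) -> TextureId {
        self.textures[self.active.load(Ordering::SeqCst)]
    }

    /// Flips the active index and returns (old main, new main).
    /// Takes `&self`: the flip relies on interior mutability.
    pub fn post_process_write(&self) -> PostProcessWrite {
        // `fetch_xor(1)` toggles between 0 and 1 and returns the previous value.
        let old = self.active.fetch_xor(1, Ordering::SeqCst);
        PostProcessWrite {
            source: self.textures[old],
            destination: self.textures[old ^ 1],
        }
    }
}
```

In this model, a caller that requests a write but never fills the destination leaves `main_texture()` pointing at stale data, which is why the read-source / write-destination contract matters.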

For simplicity / understandability `ViewTarget` is now a flat type. "hdr-ness" is a property of the `TextureFormat`. The internals are fully private in the interest of providing simple / consistent apis. Developers can now easily access the main texture by calling `view_target.main_texture()`.

HDR ViewTargets no longer have an "ldr texture" with `TextureFormat::bevy_default`. They _only_ have their two "hdr" textures. This simplifies the mental model. All we have is the "currently active hdr texture" and the "other hdr texture", which we flip between for post processing effects.

The tonemapping node has been rephrased to use this "post processing pattern". The blit pass has been removed, and it now only runs a pass when HDR is enabled. Notably, both the input and output texture are assumed to be HDR. This means that tonemapping behaves just like any other "post processing effect". It could theoretically be moved anywhere in the "effect chain" and continue to work.

In general, I think these changes will make the lives of people making post processing effects much easier. And they better position us to start building higher level / more structured "post processing effect stacks".

---

## Changelog

- `ViewTarget` now stores two copies of the "main texture". Calling `ViewTarget::post_process_write` will flip between copies of the main texture.

# Objective

Bevy still has many instances of using single-tuples `(T,)` to create a bundle. Due to #2975, this is no longer necessary.

## Solution

Search for regex `\(.+\s*,\)`. This should have found every instance.

# Objective

- fix new clippy lints before they get stable and break CI

## Solution

- run `clippy --fix` to auto-fix machine-applicable lints

- silence `clippy::should_implement_trait` for `fn HandleId::default<T: Asset>`

## Changes

- always prefer `format!("{inline}")` over `format!("{}", not_inline)`

- prefer `Box::default` (or `Box::<T>::default` if necessary) over `Box::new(T::default())`
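
For illustration, the two rewrites look roughly like this in plain Rust. The function names are hypothetical and exist only for this sketch; the "before" forms are kept as comments.

```rust
/// Inline format arguments: the variable name goes directly inside the braces.
pub fn describe(label: &str, count: usize) -> String {
    // Before: format!("{} has {} items", label, count)
    format!("{label} has {count} items")
}

/// `Box::default()` instead of `Box::new(T::default())`.
pub fn empty_names() -> Box<Vec<String>> {
    // Before: Box::new(Vec::default())
    Box::default()
}

/// The turbofish form, for when the target type cannot be inferred.
pub fn boxed_zero() -> Box<u32> {
    Box::<u32>::default()
}
```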

# Objective

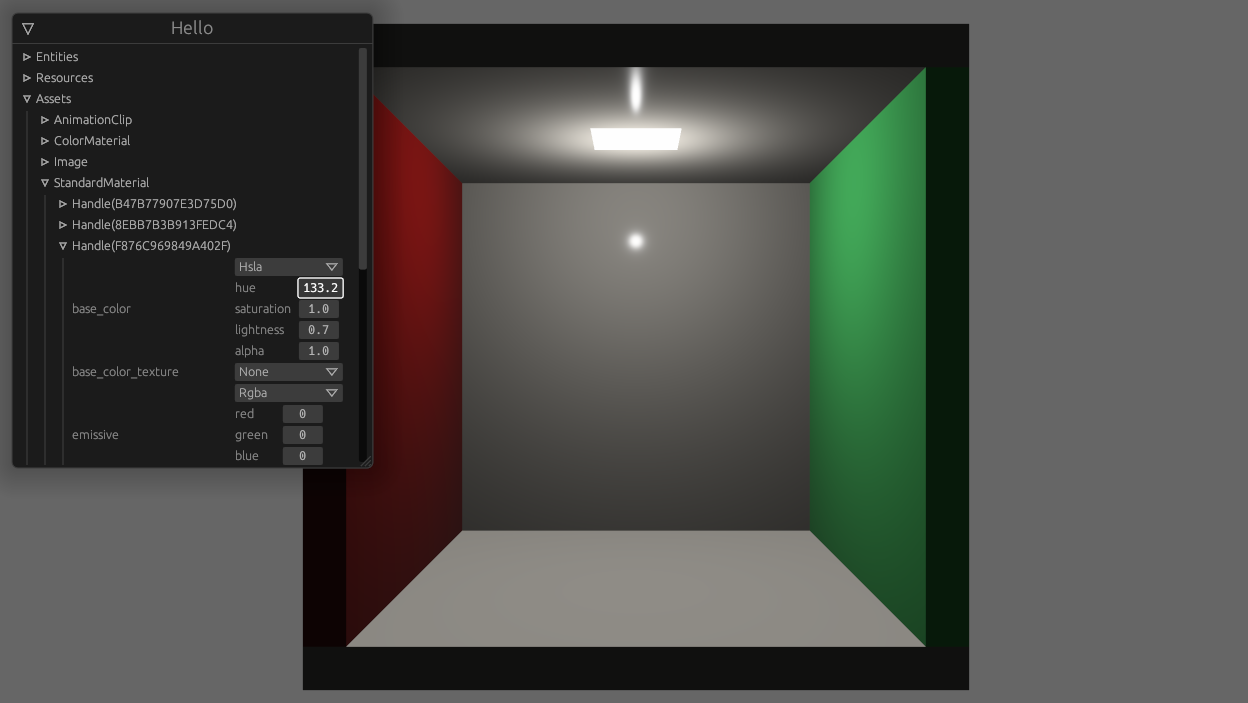

^ enable this

Concretely, I need to

- list all handle ids for an asset type

- fetch the asset as `dyn Reflect`, given a `HandleUntyped`

- when encountering a `Handle<T>`, find out what asset type that handle refers to (`T`'s type id) and turn the handle into a `HandleUntyped`

## Solution

- add `ReflectAsset` type containing function pointers for working with assets

```rust
pub struct ReflectAsset {
    type_uuid: Uuid,
    assets_resource_type_id: TypeId, // TypeId of the `Assets<T>` resource

    get: fn(&World, HandleUntyped) -> Option<&dyn Reflect>,
    get_mut: fn(&mut World, HandleUntyped) -> Option<&mut dyn Reflect>,
    get_unchecked_mut: unsafe fn(&World, HandleUntyped) -> Option<&mut dyn Reflect>,
    add: fn(&mut World, &dyn Reflect) -> HandleUntyped,
    set: fn(&mut World, HandleUntyped, &dyn Reflect) -> HandleUntyped,
    len: fn(&World) -> usize,
    ids: for<'w> fn(&'w World) -> Box<dyn Iterator<Item = HandleId> + 'w>,
    remove: fn(&mut World, HandleUntyped) -> Option<Box<dyn Reflect>>,
}
```

- add `ReflectHandle` type relating the handle back to the asset type and providing a way to create a `HandleUntyped`

```rust
pub struct ReflectHandle {
    type_uuid: Uuid,
    asset_type_id: TypeId,
    downcast_handle_untyped: fn(&dyn Any) -> Option<HandleUntyped>,
}
```

- add the corresponding `FromType` impls

- add a function `app.register_asset_reflect` which is supposed to be called after `.add_asset` and registers `ReflectAsset` and `ReflectHandle` in the type registry
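
The general technique behind a struct of function pointers like `ReflectAsset` can be sketched with the standard library alone. This is an illustration under stated assumptions, not the PR's code: `Store<T>` is a hypothetical stand-in for `Assets<T>`, `ErasedStoreFns` for `ReflectAsset`, and `Debug` takes the place of `Reflect`.

```rust
use std::any::Any;
use std::collections::HashMap;
use std::fmt::Debug;

/// Hypothetical typed storage, loosely analogous to `Assets<T>`.
pub struct Store<T> {
    items: HashMap<u64, T>,
}

impl<T> Store<T> {
    pub fn new() -> Self {
        Self { items: HashMap::new() }
    }

    pub fn insert(&mut self, id: u64, item: T) {
        self.items.insert(id, item);
    }
}

/// Function pointers captured for one concrete `T`.
/// They can be called later without naming `T` at the call site.
pub struct ErasedStoreFns {
    pub len: fn(&dyn Any) -> Option<usize>,
    pub get: fn(&dyn Any, u64) -> Option<&dyn Debug>,
}

impl ErasedStoreFns {
    /// Builds the table for `T`. The closures capture nothing,
    /// so they coerce to plain function pointers.
    pub fn from_type<T: Debug + 'static>() -> Self {
        Self {
            len: |store| store.downcast_ref::<Store<T>>().map(|s| s.items.len()),
            get: |store, id| {
                // Returns `None` if the erased value is not a `Store<T>`.
                let store = store.downcast_ref::<Store<T>>()?;
                store.items.get(&id).map(|item| item as &dyn Debug)
            },
        }
    }
}
```

A registry keyed by `TypeId` could then hold one `ErasedStoreFns` per asset type, which is roughly the role the type registry plays for `ReflectAsset`.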

---

## Changelog

- add `ReflectAsset` and `ReflectHandle` types, which allow code to use reflection to manipulate arbitrary assets without knowing their types at compile time

fixes https://github.com/bevyengine/bevy/issues/5944

Uses the second solution:

> 2. keep track of the old viewport in the computed_state, and if camera.viewport != camera.computed_state.old_viewport, then update the projection. This is more reliable, but needs to store two UVec2s more in the camera (probably not a big deal).
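
A minimal sketch of that comparison, using simplified hypothetical types (tuples instead of `UVec2`, and a counter in place of the real projection update):

```rust
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub struct Viewport {
    pub position: (u32, u32),
    pub size: (u32, u32),
}

#[derive(Debug, Default)]
pub struct ComputedState {
    /// Viewport seen the last time the projection was updated.
    old_viewport: Option<Viewport>,
    /// Stand-in for the real work: counts projection updates.
    pub projection_updates: u32,
}

#[derive(Debug, Default)]
pub struct Camera {
    pub viewport: Option<Viewport>,
    pub computed_state: ComputedState,
}

impl Camera {
    /// Recomputes the projection only when the viewport differs from the
    /// one recorded in the computed state. Returns `true` if it updated.
    pub fn update_projection_if_needed(&mut self) -> bool {
        if self.viewport == self.computed_state.old_viewport {
            return false;
        }
        self.computed_state.old_viewport = self.viewport;
        self.computed_state.projection_updates += 1;
        true
    }
}
```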

# Objective

Bevy's internal plugins have lots of execution-order ambiguities, which makes the ambiguity detection tool very noisy for our users.

## Solution

Silence every last ambiguity that can currently be resolved.

Each time an ambiguity is silenced, it is accompanied by a comment describing why it is correct. This description should be based on the public API of the respective systems. Thus, I have added documentation to some systems describing how they use some resources.

# Future work

Some ambiguities remain, due to issues out of scope for this PR.

* The ambiguity checker does not respect `Without<>` filters, leading to false positives.

* Ambiguities between `bevy_ui` and `bevy_animation` cannot be resolved, since neither crate knows that the other exists. We will need a general solution to this problem.

# Objective

Currently, Bevy only supports rendering to the current "surface texture format". This means that "render to texture" scenarios must use the exact format the primary window's surface uses, or Bevy will crash. This is even harder than it used to be now that we detect preferred surface formats at runtime instead of using hard coded BevyDefault values.

## Solution

1. Look up and store each window surface's texture format alongside other extracted window information

2. Specialize the upscaling pass on the current `RenderTarget`'s texture format, now that we can cheaply correlate render targets to their current texture format

3. Remove the old `SurfaceTextureFormat` and `AvailableTextureFormats`: these are now redundant with the information stored on each extracted window, and probably should not have been globals in the first place (as in theory each surface could have a different format).

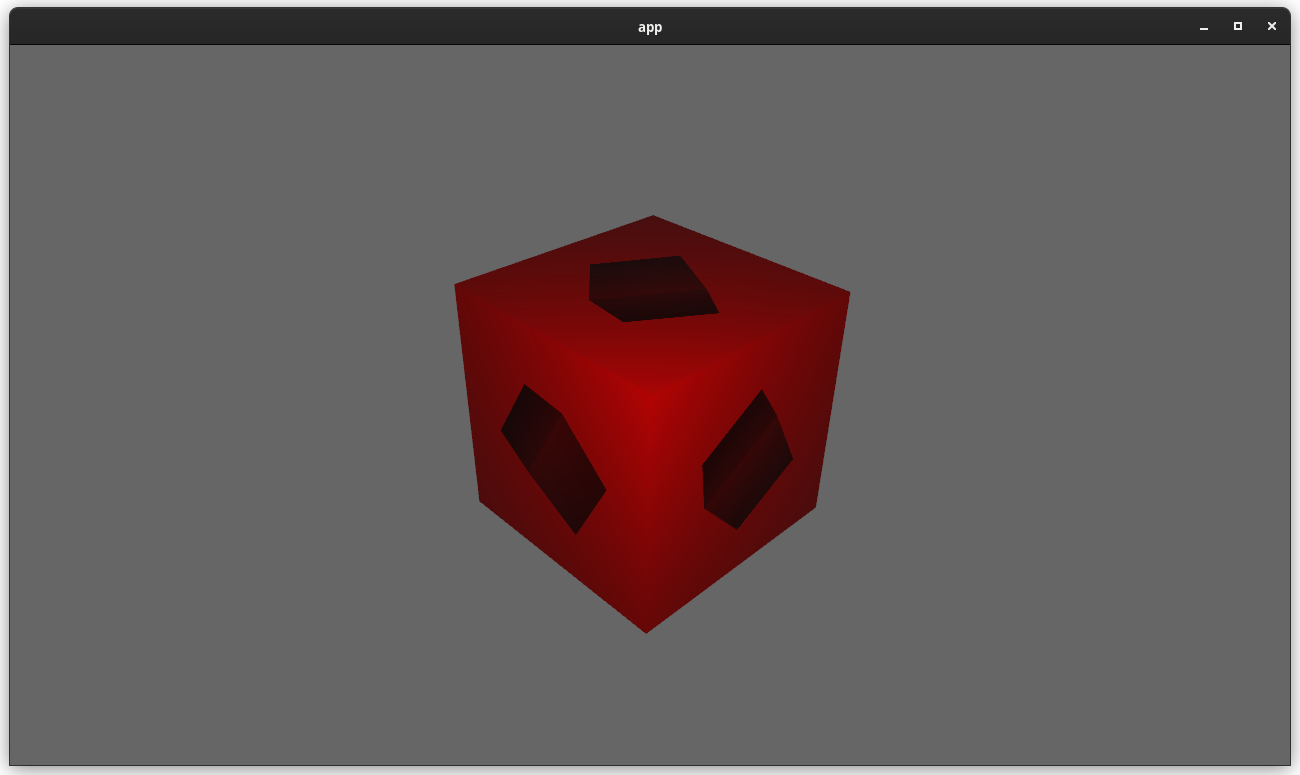

This means you can now use any texture format you want when rendering to a texture! For example, changing the `render_to_texture` example to use `R16Float` now doesn't crash / properly only stores the red component:

Attempt to make features like bloom https://github.com/bevyengine/bevy/pull/2876 easier to implement.

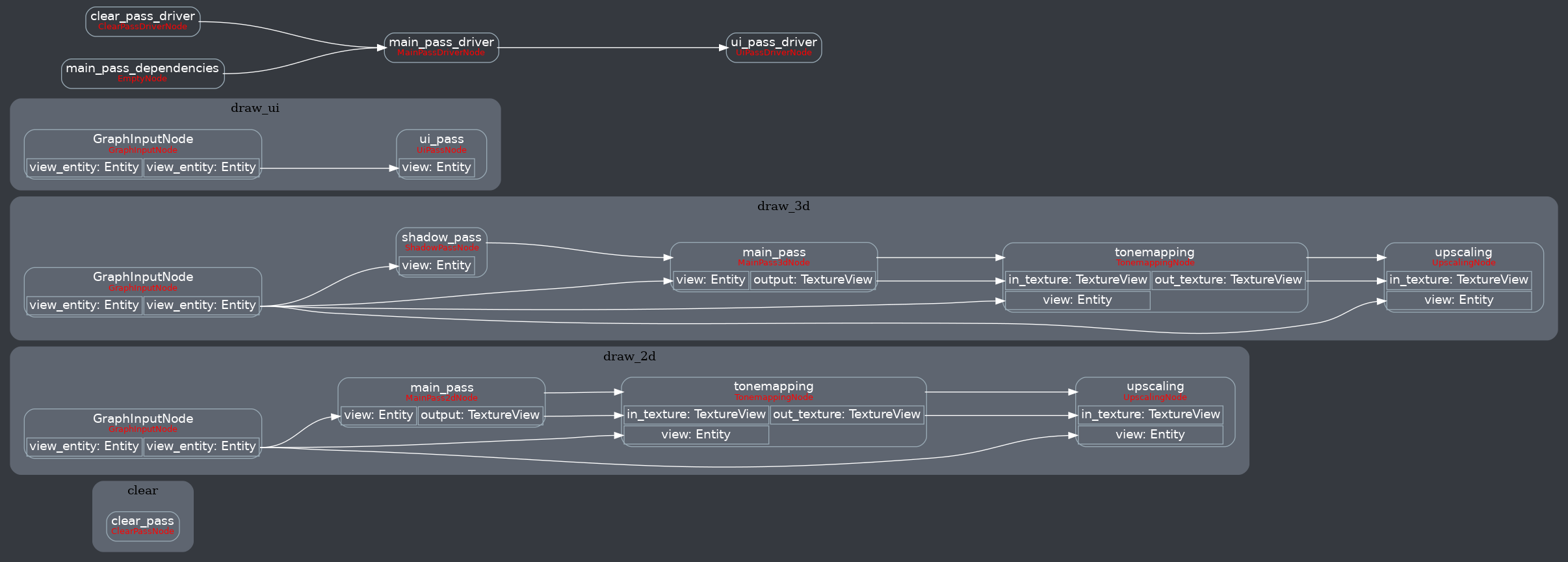

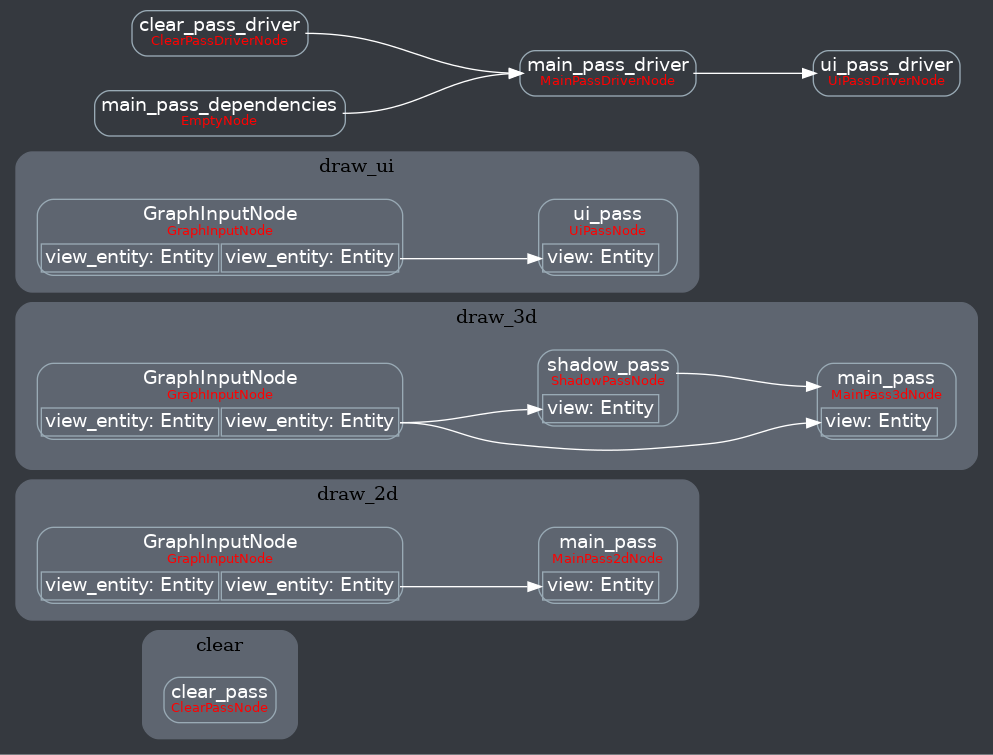

**This PR:**

- Moves the tonemapping from `pbr.wgsl` into a separate pass

- also adds a separate upscaling pass after the tonemapping which writes to the swap chain (enables resolution-independent rendering and post-processing after tonemapping)

- adds a `hdr` bool to the camera which controls whether the pbr and sprite shaders render into a `Rgba16Float` texture

**Open questions:**

- ~should the 2d graph work the same as the 3d one?~ it is the same now

- ~The current solution is a bit inflexible because while you can add a post processing pass that writes to e.g. the `hdr_texture`, you can't write to a separate `user_postprocess_texture` while reading the `hdr_texture` and tell the tone mapping pass to read from the `user_postprocess_texture` instead. If the tonemapping and upscaling render graph nodes were to take in a `TextureView` instead of the view entity this would almost work, but the bind groups for their respective input textures are already created in the `Queue` render stage in the hardcoded order.~ solved by creating bind groups in render node


Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

- Proactively change code to comply with warnings generated by `cargo clippy` on the beta Rust toolchain.

## Solution

- Changed code as recommended by clippy after running `rustup update`, `rustup default beta`, and `cargo run -p ci -- clippy`.

- Tested using `beta` and `stable`. No clippy warnings in either after changes made.

---

## Changelog

- Warnings fixed were: `clippy::explicit-auto-deref` (present in 11 files), `clippy::needless-borrow` (present in 2 files), and `clippy::only-used-in-recursion` (only 1 file).

# Objective

- Build on #6336 for more plugin configurations

## Solution

- `LogSettings`, `ImageSettings` and `DefaultTaskPoolOptions` are now plugin settings rather than resources

---

## Changelog

- `LogSettings` plugin settings have been moved to `LogPlugin`, `ImageSettings` to `ImagePlugin` and `DefaultTaskPoolOptions` to `CorePlugin`

## Migration Guide

The `LogSettings` settings have been moved from a resource to `LogPlugin` configuration:

```rust
// Old (Bevy 0.8)
app
    .insert_resource(LogSettings {
        level: Level::DEBUG,
        filter: "wgpu=error,bevy_render=info,bevy_ecs=trace".to_string(),
    })
    .add_plugins(DefaultPlugins)

// New (Bevy 0.9)
app.add_plugins(DefaultPlugins.set(LogPlugin {
    level: Level::DEBUG,
    filter: "wgpu=error,bevy_render=info,bevy_ecs=trace".to_string(),
}))
```

The `ImageSettings` settings have been moved from a resource to `ImagePlugin` configuration:

```rust
// Old (Bevy 0.8)
app
    .insert_resource(ImageSettings::default_nearest())
    .add_plugins(DefaultPlugins)

// New (Bevy 0.9)
app.add_plugins(DefaultPlugins.set(ImagePlugin::default_nearest()))
```

The `DefaultTaskPoolOptions` settings have been moved from a resource to `CorePlugin::task_pool_options`:

```rust
// Old (Bevy 0.8)
app
    .insert_resource(DefaultTaskPoolOptions::with_num_threads(4))
    .add_plugins(DefaultPlugins)

// New (Bevy 0.9)
app.add_plugins(DefaultPlugins.set(CorePlugin {
    task_pool_options: TaskPoolOptions::with_num_threads(4),
}))
```

# Objective

- Improve #3953

## Solution

- The very specific circumstances under which the render world is reset meant that the flush_as_invalid function could be replaced with one that had a noop as its init method.

- This removes a double-writing issue leading to greatly increased performance.

Running the reproduction code in the linked issue, this change nearly doubles the framerate.

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

- Avoids creating a `SurfaceConfiguration` for every window in every frame for the `prepare_windows` system

- As such also avoid calling `get_supported_formats` for every window in every frame

## Solution

- Construct `SurfaceConfiguration` lazily in `prepare_windows`

---

This also changes the error message for failed initial surface configuration from "Failed to acquire next swapchain texture" to "Error configuring surface".
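
The idea of lazy construction can be sketched as follows. This is a simplified model, not the `prepare_windows` code: `SurfaceConfig` and `Surfaces` are hypothetical, and the assumption that a configuration is only needed for a new window or a changed size is made for the sake of the example.

```rust
use std::collections::HashMap;

#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub struct SurfaceConfig {
    pub width: u32,
    pub height: u32,
}

#[derive(Default)]
pub struct Surfaces {
    configured: HashMap<u32, SurfaceConfig>,
    /// Counts how often the (pretend-expensive) configuration was built.
    pub configs_built: u32,
}

impl Surfaces {
    /// Called once per window per frame.
    pub fn prepare_window(&mut self, window_id: u32, width: u32, height: u32) {
        // Cheap check first: is the existing configuration still valid?
        let up_to_date = self
            .configured
            .get(&window_id)
            .map_or(false, |c| c.width == width && c.height == height);
        if up_to_date {
            return;
        }
        // Only now pay for building the configuration.
        self.configs_built += 1;
        self.configured
            .insert(window_id, SurfaceConfig { width, height });
    }
}
```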

# Objective

- Make `Time` API more consistent.

- Support time accel/decel/pause.

## Solution

This is just the `Time` half of #3002. I was told that part isn't controversial.

- Give the "delta time" and "total elapsed time" methods `f32`, `f64`, and `Duration` variants with consistent naming.

- Implement accelerating / decelerating the passage of time.

- Implement stopping time.

---

## Changelog

- Changed `time_since_startup` to `elapsed` because `time.time_*` is just silly.

- Added `relative_speed` and `set_relative_speed` methods.

- Added `is_paused`, `pause`, and `unpause` methods. (I'd prefer `resume`, but `unpause` matches `Timer` API.)

- Added `raw_*` variants of the "delta time" and "total elapsed time" methods.

- Added `first_update` method because there's a non-zero duration between startup and the first update.

## Migration Guide

- `time.time_since_startup()` -> `time.elapsed()`

- `time.seconds_since_startup()` -> `time.elapsed_seconds_f64()`

- `time.seconds_since_startup_wrapped_f32()` -> `time.elapsed_seconds_wrapped()`

If you aren't sure which to use, most systems should continue to use "scaled" time (e.g. `time.delta_seconds()`). The realtime "unscaled" time measurements (e.g. `time.raw_delta_seconds()`) are mostly for debugging and profiling.
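
To make the scaled/raw distinction concrete, here is a standalone model of a clock with relative speed and pausing. It is a sketch, not the `Time` implementation: `Clock` and `advance` are hypothetical, and the remaining method names merely mirror the changelog.

```rust
use std::time::Duration;

/// Standalone model of a clock with scaled and raw (unscaled) time.
#[derive(Debug)]
pub struct Clock {
    relative_speed: f64,
    paused: bool,
    delta: Duration,
    raw_delta: Duration,
    elapsed: Duration,
    raw_elapsed: Duration,
}

impl Clock {
    pub fn new() -> Self {
        Self {
            relative_speed: 1.0,
            paused: false,
            delta: Duration::ZERO,
            raw_delta: Duration::ZERO,
            elapsed: Duration::ZERO,
            raw_elapsed: Duration::ZERO,
        }
    }

    /// Advances the clock by one frame of real time.
    pub fn advance(&mut self, raw_delta: Duration) {
        self.raw_delta = raw_delta;
        self.raw_elapsed += raw_delta;
        // Scaled time stands still while paused; raw time keeps running.
        self.delta = if self.paused {
            Duration::ZERO
        } else {
            raw_delta.mul_f64(self.relative_speed)
        };
        self.elapsed += self.delta;
    }

    pub fn set_relative_speed(&mut self, speed: f64) {
        assert!(speed >= 0.0, "relative speed must be non-negative");
        self.relative_speed = speed;
    }

    pub fn relative_speed(&self) -> f64 { self.relative_speed }
    pub fn pause(&mut self) { self.paused = true; }
    pub fn unpause(&mut self) { self.paused = false; }
    pub fn is_paused(&self) -> bool { self.paused }
    pub fn delta(&self) -> Duration { self.delta }
    pub fn raw_delta(&self) -> Duration { self.raw_delta }
    pub fn elapsed(&self) -> Duration { self.elapsed }
    pub fn raw_elapsed(&self) -> Duration { self.raw_elapsed }
}
```

Gameplay code would read `delta()` and `elapsed()`, so it slows down, speeds up, or freezes with the clock, while a profiler would read the `raw_*` values.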

# Objective

The `RenderLayers` type is never registered, making it unavailable for reflection.

## Solution

Register it in `CameraPlugin`, the same plugin that registers the related `Visibility*` types.

# Objective

- Update `wgpu` to 0.14.0, `naga` to `0.10.0`, `winit` to 0.27.4, `raw-window-handle` to 0.5.0, `ndk` to 0.7.

## Solution

---

## Changelog

### Changed

- Changed `RawWindowHandleWrapper` to `RawHandleWrapper` which wraps both `RawWindowHandle` and `RawDisplayHandle`, which satisfies the `impl HasRawWindowHandle and HasRawDisplayHandle` that `wgpu` 0.14.0 requires.

- Changed `bevy_window::WindowDescriptor`'s `cursor_locked` to `cursor_grab_mode`, change its type from `bool` to `bevy_window::CursorGrabMode`.

## Migration Guide

- Adjust usage of `bevy_window::WindowDescriptor`'s `cursor_locked` to `cursor_grab_mode`, and adjust its type from `bool` to `bevy_window::CursorGrabMode`.

# Objective

Make toggling the visibility of an entity slightly more convenient.

## Solution

Add a mutating `toggle` method to the `Visibility` component

```rust
fn my_system(mut query: Query<&mut Visibility, With<SomeMarker>>) {
    let mut visibility = query.single_mut();

    // before:
    visibility.is_visible = !visibility.is_visible;

    // after:
    visibility.toggle();
}
```

## Changelog

### Added

- Added a mutating `toggle` method to the `Visibility` component

# Objective

- Make it possible to write tests that don't require a raw window handle.

- Fixes https://github.com/bevyengine/bevy/issues/6106.

## Solution

- Make the interface and type changes. Avoid accessing `None`.

---

## Changelog

- Converted `raw_window_handle` field in both `Window` and `ExtractedWindow` to `Option<RawWindowHandleWrapper>`.

- Revised accessor function `Window::raw_window_handle()` to return `Option<RawWindowHandleWrapper>`.

- Skip conditions in loops that would require a raw window handle (to create a `Surface`, for example).

## Migration Guide

`Window::raw_window_handle()` now returns `Option<RawWindowHandleWrapper>`.

Co-authored-by: targrub <62773321+targrub@users.noreply.github.com>

As suggested in #6104, it would be nice to link directly to `linux_dependencies.md` file in the panic message when running on Linux. And when not compiling for Linux, we fall back to the old message.

Signed-off-by: Lena Milizé <me@lvmn.org>

# Objective

Resolves #6104.

## Solution

Add link to `linux_dependencies.md` when compiling for Linux, and fall back to the old one when not.

…

# Objective

- Fixes Camera not being serializable due to missing registrations in core functionality.

- Fixes #6169

## Solution

- Updated `bevy_render`'s `CameraPlugin` with a registration for `Option<Viewport>`, and `bevy_core`'s `CorePlugin` with registrations of the `ReflectSerialize` and `ReflectDeserialize` type data for `Range<f32>`, following the solution in #6169

Co-authored-by: Noah <noahshomette@gmail.com>

# Objective

There is no Srgb support on some GPU and display protocols with `winit` (for example, Nvidia's GPUs with Wayland). Thus `TextureFormat::bevy_default()` which returns `Rgba8UnormSrgb` or `Bgra8UnormSrgb` will cause panics on such platforms. This patch will resolve this problem. Fix https://github.com/bevyengine/bevy/issues/3897.

## Solution

Make `initialize_renderer` expose `wgpu::Adapter` and `first_available_texture_format`, use the `first_available_texture_format` by default.

## Changelog

* Fixed https://github.com/bevyengine/bevy/issues/3897.

# Objective

- Reflecting `Default` is required for scripts to create `Reflect` types at runtime with no static type information.

- Reflecting `Default` on `Handle<T>` and `ComputedVisibility` should allow scripts from `bevy_mod_js_scripting` to actually spawn sprites from scratch, without needing any hand-holding from the host-game.

## Solution

- Derive `ReflectDefault` for `Handle<T>` and `ComputedVisibility`.

---

## Changelog


- The `Default` trait is now reflected for `Handle<T>` and `ComputedVisibility`

# Objective

Add a method for getting a world space ray from a viewport position.

Opted to add a `Ray` type to `bevy_math` instead of returning a tuple of `Vec3`'s as this is clearer and easier to document

The docs on `viewport_to_world` are okay, but I'm not super happy with them.

## Changelog

* Add `Camera::viewport_to_world`

* Add `Camera::ndc_to_world`

* Add `Ray` to `bevy_math`

* Some doc tweaks

Co-authored-by: devil-ira <justthecooldude@gmail.com>

# Objective

- Currently, errors aren't logged as soon as they are found, they are logged only on the next frame. This means your shader could have an unreported error that could have been reported on the first frame.

## Solution

- Log errors as soon as they are found instead of waiting until the next frame

## Notes

I discovered this issue because I was simply unwrapping the `Result` from `PipelineCache::get_render_pipeline()`, which caused it to fail without any explanation. Admittedly, this was a bit of a user error, as I shouldn't have unwrapped that, but it seems a bit strange to wait until the next time the pipeline is processed to log the error instead of just logging it as soon as possible, since we already have all the info necessary.

# Objective

The [Stageless RFC](https://github.com/bevyengine/rfcs/pull/45) involves allowing exclusive systems to be referenced and ordered relative to parallel systems. We've agreed that unifying systems under `System` is the right move.

This is an alternative to #4166 (see rationale in the comments I left there). Note that this builds on the learnings established there (and borrows some patterns).

## Solution

This unifies parallel and exclusive systems under the shared `System` trait, removing the old `ExclusiveSystem` trait / impls. This is accomplished by adding a new `ExclusiveFunctionSystem` impl similar to `FunctionSystem`. It is backed by `ExclusiveSystemParam`, which is similar to `SystemParam`. There is a new flattened out SystemContainer api (which cuts out a lot of trait and type complexity).

This means you can remove all cases of `exclusive_system()`:

```rust
// before
app.add_system(some_system.exclusive_system());

// after
app.add_system(some_system);
```

I've also implemented `ExclusiveSystemParam` for `&mut QueryState` and `&mut SystemState`, which makes this possible in exclusive systems:

```rust
fn some_exclusive_system(
    world: &mut World,
    transforms: &mut QueryState<&Transform>,
    state: &mut SystemState<(Res<Time>, Query<&Player>)>,
) {
    for transform in transforms.iter(world) {
        println!("{transform:?}");
    }

    let (time, players) = state.get(world);
    for player in players.iter() {
        println!("{player:?}");
    }
}
```

Note that "exclusive function systems" assume `&mut World` is present (and the first param). I think this is a fair assumption, given that the presence of `&mut World` is what defines the need for an exclusive system.

I added some targeted SystemParam `static` constraints, which removed the need for this:

```rust
fn some_exclusive_system(state: &mut SystemState<(Res<'static, Time>, Query<&'static Player>)>) {}
```

## Related

- #2923

- #3001

- #3946

## Changelog

- `ExclusiveSystem` trait (and implementations) has been removed in favor of sharing the `System` trait.

- `ExclusiveFunctionSystem` and `ExclusiveSystemParam` were added, enabling flexible exclusive function systems

- `&mut SystemState` and `&mut QueryState` now implement `ExclusiveSystemParam`

- Exclusive and parallel System configuration is now done via a unified `SystemDescriptor`, `IntoSystemDescriptor`, and `SystemContainer` api.

## Migration Guide

Calling `.exclusive_system()` is no longer required (or supported) for converting exclusive system functions to exclusive systems:

```rust
// Old (0.8)
app.add_system(some_exclusive_system.exclusive_system());

// New (0.9)
app.add_system(some_exclusive_system);
```

Converting "normal" parallel systems to exclusive systems is done by calling the exclusive ordering apis:

```rust
// Old (0.8)
app.add_system(some_system.exclusive_system().at_end());

// New (0.9)
app.add_system(some_system.at_end());
```

Query state in exclusive systems can now be cached via ExclusiveSystemParams, which should be preferred for clarity and performance reasons:

```rust
// Old (0.8)
fn some_system(world: &mut World) {
    let mut transforms = world.query::<&Transform>();
    for transform in transforms.iter(world) {}
}

// New (0.9)
fn some_system(world: &mut World, transforms: &mut QueryState<&Transform>) {
    for transform in transforms.iter(world) {}
}
```

# Objective

Now that we can consolidate Bundles and Components under a single insert (thanks to #2975 and #6039), almost 100% of world spawns now look like `world.spawn().insert((Some, Tuple, Here))`. Spawning an entity without any components is an extremely uncommon pattern, so it makes sense to give spawn the "first class" ergonomic api. This consolidated api should be made consistent across all spawn apis (such as World and Commands).

## Solution

All `spawn` apis (`World::spawn`, `Commands::spawn`, `ChildBuilder::spawn`, and `WorldChildBuilder::spawn`) now accept a bundle as input:

```rust
// before:
commands
    .spawn()
    .insert((A, B, C));

world
    .spawn()
    .insert((A, B, C));

// after
commands.spawn((A, B, C));
world.spawn((A, B, C));
```

All existing instances of `spawn_bundle` have been deprecated in favor of the new `spawn` api. A new `spawn_empty` has been added, replacing the old `spawn` api.

By allowing `world.spawn(some_bundle)` to replace `world.spawn().insert(some_bundle)`, this opened the door to removing the initial entity allocation in the "empty" archetype / table done in `spawn()` (and subsequent move to the actual archetype in `.insert(some_bundle)`).

This improves spawn performance by over 10%:

To take this measurement, I added a new `world_spawn` benchmark.

Unfortunately, optimizing `Commands::spawn` is slightly less trivial, as Commands expose the Entity id of spawned entities prior to actually spawning. Doing the optimization would (naively) require assurances that the `spawn(some_bundle)` command is applied before all other commands involving the entity (which would not necessarily be true, if memory serves). Optimizing `Commands::spawn` this way does feel possible, but it will require careful thought (and maybe some additional checks), which deserves its own PR. For now, it has the same performance characteristics of the current `Commands::spawn_bundle` on main.

**Note that 99% of this PR is simple renames and refactors. The only code that needs careful scrutiny is the new `World::spawn()` impl, which is relatively straightforward, but it has some new unsafe code (which re-uses the battle-tested `BundleSpawner` code path).**

---

## Changelog

- All `spawn` apis (`World::spawn`, `Commands::spawn`, `ChildBuilder::spawn`, and `WorldChildBuilder::spawn`) now accept a bundle as input

- All instances of `spawn_bundle` have been deprecated in favor of the new `spawn` api

- World and Commands now have `spawn_empty()`, which is equivalent to the old `spawn()` behavior.

## Migration Guide

```rust
// Old (0.8):
commands
    .spawn()
    .insert_bundle((A, B, C));
// New (0.9)
commands.spawn((A, B, C));

// Old (0.8):
commands.spawn_bundle((A, B, C));
// New (0.9)
commands.spawn((A, B, C));

// Old (0.8):
let entity = commands.spawn().id();
// New (0.9)
let entity = commands.spawn_empty().id();

// Old (0.8)
let entity = world.spawn().id();
// New (0.9)
let entity = world.spawn_empty().id();
```

# Objective

- Reconfigure surface after present mode changes. It seems that this is not done currently at runtime. It's pretty common for games to change such graphical settings at runtime.

- Fixes present mode issue in #5111

## Solution

- Exactly like resolution change gets tracked when extracting window, do the same for present mode.

Additionally, I added present mode (vsync) toggling to window settings example.

# Objective

Take advantage of the "impl Bundle for Component" changes in #2975 / add the follow up changes discussed there.

## Solution

- Change `insert` and `remove` to accept a Bundle instead of a Component (for both Commands and World)

- Deprecate `insert_bundle`, `remove_bundle`, and `remove_bundle_intersection`

- Add `remove_intersection`

---

## Changelog

- `insert` and `remove` now accept a Bundle instead of a Component (for both Commands and World)

- `insert_bundle` and `remove_bundle` are deprecated

## Migration Guide

Replace `insert_bundle` with `insert`:

```rust
// Old (0.8)
commands.spawn().insert_bundle(SomeBundle::default());

// New (0.9)
commands.spawn().insert(SomeBundle::default());
```

Replace `remove_bundle` with `remove`:

```rust
// Old (0.8)
commands.entity(some_entity).remove_bundle::<SomeBundle>();

// New (0.9)
commands.entity(some_entity).remove::<SomeBundle>();
```

Replace `remove_bundle_intersection` with `remove_intersection`:

```rust
// Old (0.8)
world.entity_mut(some_entity).remove_bundle_intersection::<SomeBundle>();

// New (0.9)
world.entity_mut(some_entity).remove_intersection::<SomeBundle>();
```

Consider consolidating as many operations as possible to improve ergonomics and cut down on archetype moves:

```rust
// Old (0.8)
commands.spawn()
    .insert_bundle(SomeBundle::default())
    .insert(SomeComponent);

// New (0.9) - Option 1
commands.spawn().insert((
    SomeBundle::default(),
    SomeComponent,
));

// New (0.9) - Option 2
commands.spawn_bundle((
    SomeBundle::default(),
    SomeComponent,
));
```

## Next Steps

Consider changing `spawn` to accept a bundle and deprecate `spawn_bundle`.

# Objective

Implement `IntoIterator` for `&Extract<P>` if the system parameter it wraps implements `IntoIterator`.

Enables the use of `IntoIterator` with an extracted query.
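
The shape of such an impl can be shown with a simplified wrapper. This is a sketch only: the `Extract<P>` below is a hypothetical single-field stand-in (the real type carries lifetimes and wraps a system parameter), but the forwarding `where` clause is the technique being described.

```rust
/// Simplified stand-in for a wrapper around some inner value `P`.
pub struct Extract<P> {
    item: P,
}

impl<P> Extract<P> {
    pub fn new(item: P) -> Self {
        Self { item }
    }
}

/// `&Extract<P>` is iterable whenever `&P` is iterable,
/// and yields exactly what iterating `&P` would yield.
impl<'a, P> IntoIterator for &'a Extract<P>
where
    &'a P: IntoIterator,
{
    type Item = <&'a P as IntoIterator>::Item;
    type IntoIter = <&'a P as IntoIterator>::IntoIter;

    fn into_iter(self) -> Self::IntoIter {
        (&self.item).into_iter()
    }
}
```

With this in place, `for x in &extract { ... }` works directly, without reaching through the wrapper first.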

Co-authored-by: devil-ira <justthecooldude@gmail.com>

# Objective

A common pitfall since 0.8 is the requirement on `ComputedVisibility` being present on all ancestors of an entity that itself has `ComputedVisibility`, without which, the entity becomes invisible.

I myself hit the issue and got very confused, and saw a few people hit it as well, so it makes sense to provide a hint of what to do when such a situation is encountered.

- Fixes #5849

- Closes #5616

- Closes #2277

- Closes #5081

## Solution

We now check that all entities with both a `Parent` and a `ComputedVisibility` component have parents that themselves have a `ComputedVisibility` component.

Note that the warning is only printed once.

We also add a similar warning to `GlobalTransform`.

This only emits a warning, because sometimes it could be an intended behavior.
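
The check and the "only once" behavior can be sketched independently of the ECS. This is an illustrative model under stated assumptions: `Node`, `find_inconsistent`, and `WarnOnce` are hypothetical names, and a plain `HashMap` stands in for the entity hierarchy.

```rust
use std::collections::HashMap;
use std::sync::atomic::{AtomicBool, Ordering};

/// Hypothetical hierarchy node: an optional parent id and a flag saying
/// whether the component of interest is present.
pub struct Node {
    pub parent: Option<u32>,
    pub has_component: bool,
}

/// Returns the sorted ids of nodes that have the component while their
/// parent exists and lacks it.
pub fn find_inconsistent(nodes: &HashMap<u32, Node>) -> Vec<u32> {
    let mut bad: Vec<u32> = nodes
        .iter()
        .filter(|(_, node)| node.has_component)
        .filter_map(|(id, node)| {
            let parent = nodes.get(&node.parent?)?;
            if parent.has_component { None } else { Some(*id) }
        })
        .collect();
    bad.sort_unstable();
    bad
}

/// Emits a message the first time it is asked to, and stays silent afterwards.
pub struct WarnOnce {
    warned: AtomicBool,
}

impl WarnOnce {
    pub const fn new() -> Self {
        Self { warned: AtomicBool::new(false) }
    }

    /// Returns `true` if the warning was actually printed.
    pub fn warn(&self, message: &str) -> bool {
        if self.warned.swap(true, Ordering::Relaxed) {
            return false;
        }
        eprintln!("warning: {message}");
        true
    }
}
```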

Alternatives:

- Do nothing and keep repeating to newcomers how to avoid recurring pitfalls

- Make the transform and visibility propagation tolerant to missing components (#5616)

- Probably archetype invariants, though the current draft would not allow detecting that kind of error

---

## Changelog

- Add a warning when encountering dubious component hierarchy structure

Co-authored-by: Nicola Papale <nicopap@users.noreply.github.com>

# Objective

fixes #5946

## Solution

adjust cluster index calculation for viewport origin.

from reading point 2 of the rasterization algorithm description in https://gpuweb.github.io/gpuweb/#rasterization, it looks like framebuffer space (and so `@builtin(position)`) is not meant to be adjusted for viewport origin, so we need to subtract that to get the right cluster index.

- add viewport origin to rust `ExtractedView` and wgsl `View` structs

- subtract from frag coord for cluster index calculation
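
The arithmetic is easy to check in isolation. The function below is a simplified sketch, not the shader code: it is hypothetical, covers only the XY tile index (no depth slicing), and assumes clusters divide the viewport evenly.

```rust
/// Maps a framebuffer-space fragment coordinate to an XY cluster index.
///
/// The fragment coordinate is relative to the framebuffer origin, so the
/// viewport origin is subtracted first to get a viewport-local coordinate.
pub fn cluster_xy(
    frag_coord: (f32, f32),
    viewport_origin: (f32, f32),
    viewport_size: (f32, f32),
    cluster_dims: (u32, u32),
) -> (u32, u32) {
    let local_x = frag_coord.0 - viewport_origin.0;
    let local_y = frag_coord.1 - viewport_origin.1;

    // Scale the local coordinate into cluster units and truncate.
    let x = (local_x / viewport_size.0 * cluster_dims.0 as f32).floor() as u32;
    let y = (local_y / viewport_size.1 * cluster_dims.1 as f32).floor() as u32;

    // Clamp so coordinates on the far edge stay inside the grid.
    (
        x.min(cluster_dims.0.saturating_sub(1)),
        y.min(cluster_dims.1.saturating_sub(1)),
    )
}
```

For a viewport at `(100, 50)` with size `200x100` and a `4x2` grid, the fragment at `(299.5, 149.5)` lands in the last cluster; without the subtraction, the same fragment would compute an index outside the grid.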

# Objective

Currently some TextureFormats are not supported by the Image type.

The `TextureFormat::Rg16Unorm` format is useful for storing minmax heightmaps.

Similar to #5249 I now additionally require image to support the dual channel variant.

## Solution

Added `TextureFormat::Rg16Unorm` support to Image.

Additionally this PR derives `Resource` for `SpecializedComputePipelines`, because for some reason this was missing.

All other special pipelines do derive `Resource` already.

Co-authored-by: Kurt Kühnert <51823519+Ku95@users.noreply.github.com>

## Solution

Exposes the image <-> "texture" conversion functions as methods on `Image`.

## Extra

I'm wondering if `image_texture_conversion.rs` should be renamed to `image_conversion.rs`. That, or the file could be deleted altogether in favour of putting the code alongside the rest of the `Image` impl. It's kind of weird to refer to the `Image` as a texture.

Also `Image::convert` is a public method so I didn't want to edit its signature, but it might be nice to have the function consume the image instead of just passing a reference to it because it would eliminate a clone.

## Changelog

> Rename `image_to_texture` to `Image::from_dynamic`

> Rename `texture_to_image` to `Image::try_into_dynamic`

> `Image::try_into_dynamic` now returns a `Result` (this is to make it easier for users who didn't read that only a few conversions are supported to figure it out.)
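
Why a `Result` helps here can be illustrated with a small fallible conversion. Everything below is hypothetical: the enum variants and the set of "supported" conversions are invented for the sketch and do not describe which formats `Image::try_into_dynamic` actually handles.

```rust
use std::fmt;

/// Hypothetical subset of texture formats.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum PixelFormat {
    R8Unorm,
    Rgba8UnormSrgb,
    Rg16Unorm,
}

/// Hypothetical target layouts of the conversion.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum DynamicLayout {
    Luma8,
    Rgba8,
}

/// Error naming the format that could not be converted.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub struct UnsupportedFormat(pub PixelFormat);

impl fmt::Display for UnsupportedFormat {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        write!(f, "conversion from {:?} is not supported", self.0)
    }
}

impl std::error::Error for UnsupportedFormat {}

/// Converts when a mapping exists; otherwise reports which format failed,
/// so callers find out without having read the documentation first.
pub fn try_into_dynamic(format: PixelFormat) -> Result<DynamicLayout, UnsupportedFormat> {
    match format {
        PixelFormat::R8Unorm => Ok(DynamicLayout::Luma8),
        PixelFormat::Rgba8UnormSrgb => Ok(DynamicLayout::Rgba8),
        other => Err(UnsupportedFormat(other)),
    }
}
```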

# Objective

Document most of the public items of the `bevy_render::camera` module and its sub-modules.

## Solution

Add docs to most public items. Follow-up from #3447.

# Objective

Document `PipelineCache` and a few other related types.

## Solution

Add documenting comments to `PipelineCache` and a few other related types in the same file.

# Objective

- In WASM, creating a pipeline can easily take 2 seconds, freezing the game while doing so

- Preloading pipelines can be done during a "loading" state, but it is not trivial to know which pipeline to preload, or when it's done

## Solution

- Add a log with shaders being loaded and their shader defs

- add a function on `PipelineCache` to return the number of ready pipelines
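
A loading screen could use such a count roughly as follows. This is a sketch with hypothetical names (`PipelineState`, `ready_count`, `loading_finished`); it does not reproduce the `PipelineCache` API, only the idea of counting ready entries against an expected total.

```rust
/// Hypothetical per-pipeline state.
#[derive(Debug)]
pub enum PipelineState {
    Queued,
    Ready,
    Failed(String),
}

/// Number of pipelines that have finished compiling successfully.
pub fn ready_count(states: &[PipelineState]) -> usize {
    states
        .iter()
        .filter(|state| matches!(state, PipelineState::Ready))
        .count()
}

/// A "loading" state can end once the expected number of pipelines is ready.
pub fn loading_finished(states: &[PipelineState], expected: usize) -> bool {
    ready_count(states) >= expected
}
```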

# Objective

Since `identity` is a const fn that takes no arguments it seems logical to make it an associated constant.

This is also more in line with types from glam (eg. `Quat::IDENTITY`).

## Migration Guide

The method `identity()` on `Transform`, `GlobalTransform` and `TransformBundle` has been deprecated.

Use the associated constant `IDENTITY` instead.
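
The pattern looks like this on a small stand-in type. `Transform2d` is hypothetical and exists only for the sketch; the point is the associated constant plus a deprecated function that forwards to it.

```rust
/// Hypothetical, simplified transform type.
#[derive(Debug, Clone, Copy, PartialEq)]
pub struct Transform2d {
    pub translation: [f32; 2],
    pub scale: f32,
}

impl Transform2d {
    /// The identity transform as an associated constant.
    pub const IDENTITY: Self = Self {
        translation: [0.0, 0.0],
        scale: 1.0,
    };

    /// Old spelling, kept for compatibility and forwarding to the constant.
    #[deprecated(note = "use `Transform2d::IDENTITY` instead")]
    pub const fn identity() -> Self {
        Self::IDENTITY
    }
}

/// Constants also work with struct update syntax.
pub fn moved_right(by: f32) -> Transform2d {
    Transform2d {
        translation: [by, 0.0],
        ..Transform2d::IDENTITY
    }
}
```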

Co-authored-by: devil-ira <justthecooldude@gmail.com>

# Objective

- While generating https://github.com/jakobhellermann/bevy_reflect_ts_type_export/blob/main/generated/types.ts, I noticed that some types that implement `Reflect` did not register themselves

- `Viewport` isn't reflect but can be (there's a TODO)

## Solution

- register all reflected types

- derive `Reflect` for `Viewport`

## Changelog

- more types are now registered in the type registry

- remove `Serialize`, `Deserialize` impls from `Viewport`

I also decided to remove the `Serialize, Deserialize` from the `Viewport`, since they were (AFAIK) only used for reflection, which now is done without serde. So this is technically a breaking change for people who relied on that impl directly.

Personally I don't think that every bevy type should implement `Serialize, Deserialize`, as that would lead to a ton of code generation that mostly isn't necessary because we can do the same with `Reflect`, but if this is deemed controversial I can remove it from this PR.

## Migration Guide

- `KeyCode` now implements `Reflect` not as `reflect_value`, but with proper struct reflection. The `Serialize` and `Deserialize` impls were removed, now that they are no longer required for scene serialization.

# Objective

Fix a nasty system ordering bug between `update_frusta` and `camera_system` that led to incorrect frusta, causing excessive culling and extremely hard-to-debug visual glitches

## Solution

- add explicit system ordering