# Objective

It is difficult to inspect the generated assembly of benchmark systems using a tool such as `cargo-asm`.

## Solution

Mark the related functions as `#[inline(never)]`. This way, you can pass the module name as an argument to `cargo-asm` to get the generated assembly for the given function.
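As a minimal sketch of the idea (the function names `sum_positions` and `run_benchmark_iteration` are hypothetical and not taken from Bevy's benchmarks), the attribute asks the compiler to keep the function as its own symbol instead of merging it into the caller:

```rust
/// Hypothetical stand-in for the body of a benchmark system.
/// `#[inline(never)]` asks the compiler not to inline this function into its
/// callers, so it remains a distinct symbol that can be looked up by path.
#[inline(never)]
pub fn sum_positions(values: &[f32]) -> f32 {
    values.iter().sum()
}

/// Hypothetical caller. Without the attribute above, the optimizer would be
/// free to merge `sum_positions` into this function.
pub fn run_benchmark_iteration(values: &[f32]) -> f32 {
    // `black_box` keeps the optimizer from discarding the computation.
    std::hint::black_box(sum_positions(std::hint::black_box(values)))
}
```

The attribute is a hint rather than a hard guarantee, so it is still worth checking the emitted assembly.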

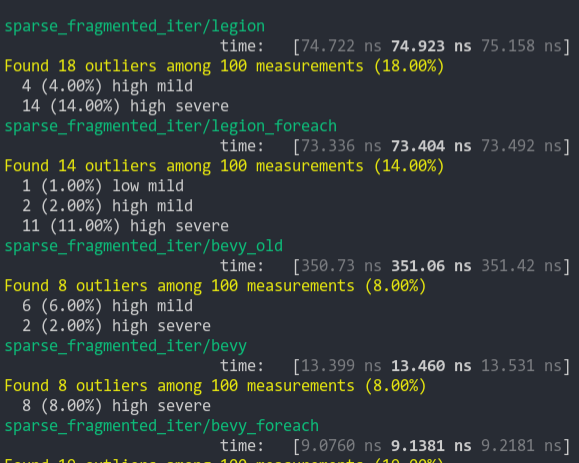

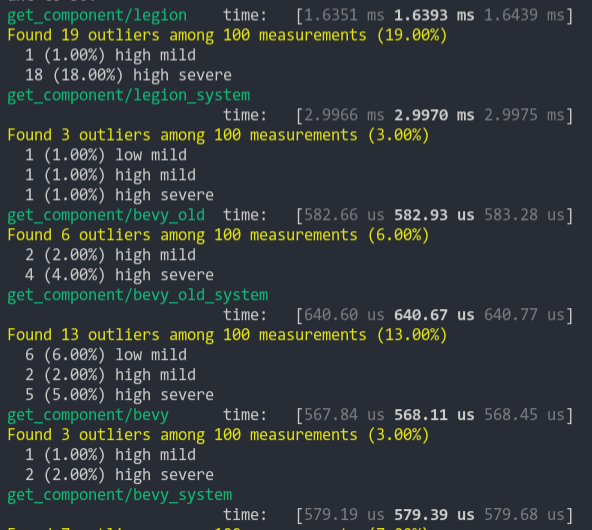

As a side effect, this may also make the benchmarks a bit more predictable and useful, since it prevents inlining in places where no inlining could possibly take place in Bevy.

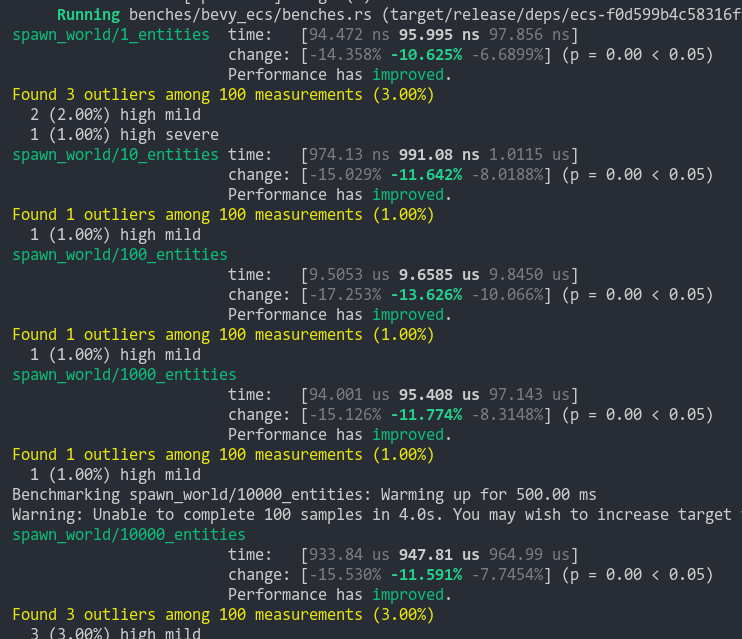

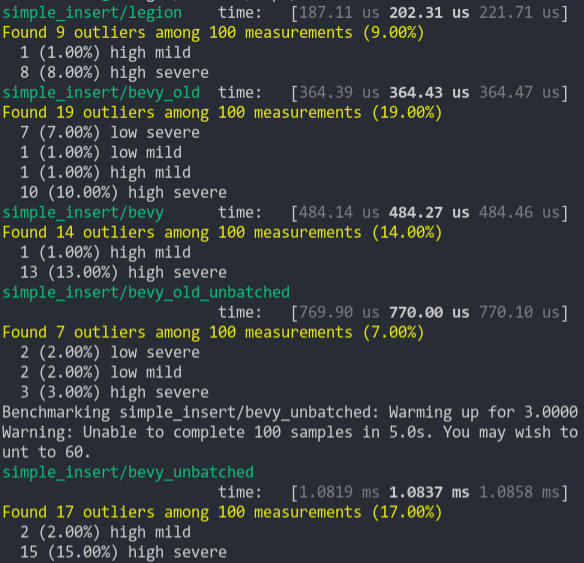

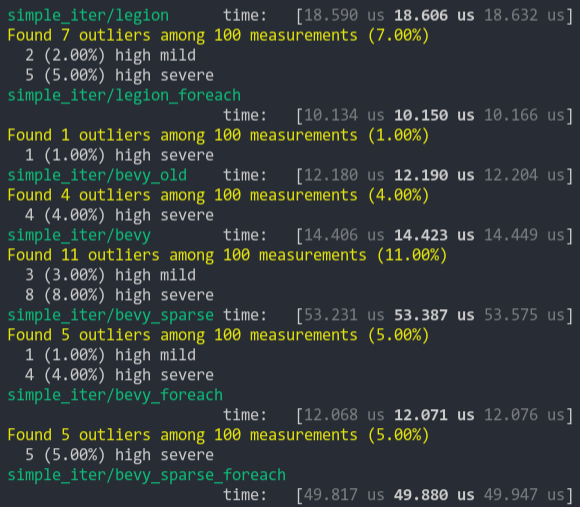

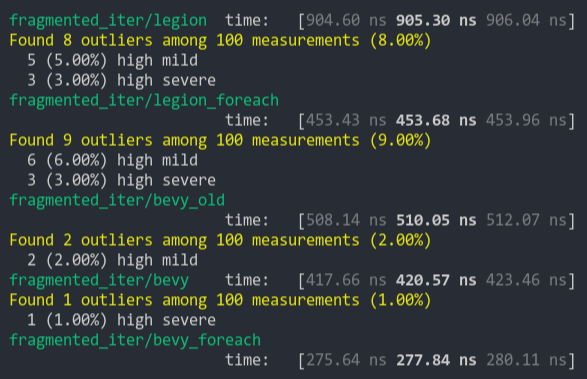

### Measurements

Following the recommendations in
<https://easyperf.net/blog/2019/08/02/Perf-measurement-environment-on-Linux>, I:

1. Put my CPU in "AMD ECO" mode, which surprisingly is the equivalent of disabling turboboost, giving more consistent performance
2. Disabled all hyperthreading cores using `echo 0 > /sys/devices/system/cpu/cpu{11,12…}/online`
3. Set the scaling governor to `performance`
4. Manually disabled AMD boost with `echo 0 > /sys/devices/system/cpu/cpufreq/boost`
5. Set the nice level of the criterion benchmark using `cargo bench … & sudo renice -n -5 -p $! ; fg`
6. Did not run any program other than the benchmarks (apart from system daemons and the X11 server)

With this setup, running the same benchmarks multiple times on `main` gives me a lot of "regression" and "improvement" messages, which is absurd given that no code changed.

On this branch, spurious performance changes are still detected, but much less frequently.

Of course, this only applies to the `iter_simple` and `iter_frag` benchmarks.

# Objective

`no_archetype` benchmark group results were very noisy.

## Solution

Use the `SingleThreaded` executor.

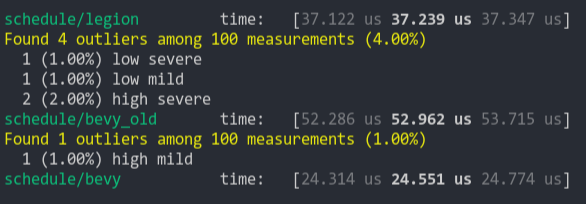

On my machine, this makes the `no_archetype` bench group 20 to 30 times faster, meaning that most of the runtime was spent in the multithreaded scheduler. In other words, the benchmark was not testing system archetype updates, but the overhead of multithreaded scheduling.

With this change, the benchmark results are more meaningful.

The `add_archetypes` function is also simplified.

# Objective

- Move the schedule name into `Schedule` so that it can be used for errors and tracing in `Schedule` methods
- Fixes #9510

## Solution

- Move the label onto `Schedule` and adjust APIs on `World` and `Schedule` so that they no longer take an explicit label where it makes sense.
- Add the name to errors and tracing.
- `Schedule::new` now takes a label, so either add the label or use `Schedule::default`, which uses a default label. `default` is mostly used in doc examples and tests.

---

## Changelog

- Move the label onto `Schedule` to improve error messages and logging for schedules.

## Migration Guide

`Schedule::new` and `App::add_schedule`

```rust
// old
let schedule = Schedule::new();
app.add_schedule(MyLabel, schedule);

// new
let schedule = Schedule::new(MyLabel);
app.add_schedule(schedule);
```

If you aren't using a label and are using the `Schedule` struct directly, you can use the default constructor.

```rust
// old
let schedule = Schedule::new();
schedule.run(world);

// new
let schedule = Schedule::default();
schedule.run(world);
```

`Schedules::insert`

```rust
// old
let schedule = Schedule::new();
schedules.insert(MyLabel, schedule);

// new
let schedule = Schedule::new(MyLabel);
schedules.insert(schedule);
```

`World::add_schedule`

```rust
// old
let schedule = Schedule::new();
world.add_schedule(MyLabel, schedule);

// new
let schedule = Schedule::new(MyLabel);
world.add_schedule(schedule);
```

# Objective

A Bezier curve is a curve defined by two or more control points. In the simplest form, it's just a line. The (arguably) most common type of Bezier curve is a cubic Bezier, defined by four control points. These are often used in animation, etc. Bevy has a Bezier curve struct called `Bezier`. However, this is technically a misnomer as it only represents cubic Bezier curves.

## Solution

This PR changes the struct name to `CubicBezier` to more accurately reflect the struct's usage. Since it's exposed in Bevy's prelude, it can potentially collide with other `Bezier` implementations. While that might instead be an argument for removing it from the prelude, there's also something to be said for adding a more general `Bezier` into Bevy, in which case we'd likely want to use the name `Bezier`. As a final motivator, not only is the struct located in `cubic_splines.rs`, there are also several other spline-related structs which follow the `CubicXxx` naming convention where applicable. For example, `CubicSegment` represents a cubic Bezier curve (with coefficients pre-baked).

---

## Migration Guide

- Change all `Bezier` references to `CubicBezier`

# Objective

We want to measure performance on path reflection parsing.

## Solution

Benchmark path-based reflection:

- Add a benchmark for `ParsedPath::parse`

It's fairly noisy, which is why I added the 3% threshold.

Ideally we would fix the noisiness though. Supposedly I'm seeding the RNG correctly, so there shouldn't be much observable variance. Maybe someone can help spot the issue.

# Objective

The `QueryParIter::for_each_mut` function is required when doing parallel iteration with mutable queries.

This results in an unfortunate stutter: `query.par_iter_mut().for_each_mut()` ('mut' is repeated).

## Solution

- Make `for_each` compatible with mutable queries, and deprecate `for_each_mut`. In order to prevent `for_each` from being called multiple times in parallel, we take ownership of the `QueryParIter`.
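To illustrate why taking ownership rules out repeated calls, here is a minimal sketch. `ParIter` is a hypothetical, simplified stand-in for `QueryParIter`: it iterates sequentially and only models the ownership rule, not Bevy's actual implementation.

```rust
/// Hypothetical, simplified stand-in for `QueryParIter`; it only models ownership.
pub struct ParIter<'a> {
    items: &'a mut [i32],
}

impl<'a> ParIter<'a> {
    pub fn new(items: &'a mut [i32]) -> Self {
        Self { items }
    }

    /// Takes `self` by value: the iterator is consumed by the call, so a second
    /// `for_each` on the same value is rejected at compile time.
    pub fn for_each(self, mut f: impl FnMut(&mut i32)) {
        for item in self.items.iter_mut() {
            f(item);
        }
    }
}

/// Doubles every element through the consuming `for_each`.
pub fn double_all(items: &mut [i32]) {
    let par_iter = ParIter::new(items);
    par_iter.for_each(|x| *x *= 2);
    // par_iter.for_each(|x| *x += 1); // error[E0382]: use of moved value: `par_iter`
}
```

Because the value is moved into the call, the borrow checker enforces the "only once" rule without any runtime check.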

---

## Changelog

- `QueryParIter::for_each` is now compatible with mutable queries. `for_each_mut` has been deprecated as it is now redundant.

## Migration Guide

The method `QueryParIter::for_each_mut` has been deprecated and is no longer functional. Use `for_each` instead, which now supports mutable queries.

```rust
// Before:
query.par_iter_mut().for_each_mut(|x| ...);

// After:
query.par_iter_mut().for_each(|x| ...);
```

The method `QueryParIter::for_each` now takes ownership of the `QueryParIter`, rather than taking a shared reference.

```rust
// Before:
let par_iter = my_query.par_iter().batching_strategy(my_batching_strategy);
par_iter.for_each(|x| {
    // ...Do stuff with x...
    par_iter.for_each(|y| {
        // ...Do nested stuff with y...
    });
});

// After:
my_query.par_iter().batching_strategy(my_batching_strategy).for_each(|x| {
    // ...Do stuff with x...
    my_query.par_iter().batching_strategy(my_batching_strategy).for_each(|y| {
        // ...Do nested stuff with y...
    });
});
```

# Objective

Follow-up to #6404 and #8292.

Mutating the world through a shared reference is surprising, and it makes the meaning of `&World` unclear: sometimes it gives read-only access to the entire world, and sometimes it gives interior mutable access to only part of it.

This is an up-to-date version of #6972.

## Solution

Use `UnsafeWorldCell` for all interior mutability. Now, `&World` *always* gives you read-only access to the entire world.

---

## Changelog

TODO - do we still care about changelogs?

## Migration Guide

Mutating any world data using `&World` is now considered unsound; the type `UnsafeWorldCell` must be used to achieve interior mutability. The following methods now accept `UnsafeWorldCell` instead of `&World`:

- `QueryState`: `get_unchecked`, `iter_unchecked`, `iter_combinations_unchecked`, `for_each_unchecked`, `get_single_unchecked`, `get_single_unchecked_manual`.
- `SystemState`: `get_unchecked_manual`

```rust
let mut world = World::new();
let mut query = world.query::<&mut T>();

// Before:
let t1 = query.get_unchecked(&world, entity_1);
let t2 = query.get_unchecked(&world, entity_2);

// After:
let world_cell = world.as_unsafe_world_cell();
let t1 = query.get_unchecked(world_cell, entity_1);
let t2 = query.get_unchecked(world_cell, entity_2);
```

The methods `QueryState::validate_world` and `SystemState::matches_world` now take a `WorldId` instead of `&World`:

```rust
// Before:
query_state.validate_world(&world);

// After:
query_state.validate_world(world.id());
```

The methods `QueryState::update_archetypes` and `SystemState::update_archetypes` now take `UnsafeWorldCell` instead of `&World`:

```rust
// Before:
query_state.update_archetypes(&world);

// After:
query_state.update_archetypes(world.as_unsafe_world_cell_readonly());
```

# Objective

- Fixes #8811.

## Solution

- Rename the `write` method to `apply` in the `Command` trait definition.
- Rename it in the other implementations of the `Command` trait throughout Bevy's codebase.

---

## Changelog

- Changed: `Command::write` has been changed to `Command::apply`
- Changed: `EntityCommand::write` has been changed to `EntityCommand::apply`

## Migration Guide

- `Command::write` implementations need to be changed to implement `Command::apply` instead. This is a mere name change, with no further actions needed.
- `EntityCommand::write` implementations need to be changed to implement `EntityCommand::apply` instead. This is a mere name change, with no further actions needed.

---------

Co-authored-by: Alice Cecile <alice.i.cecile@gmail.com>

# Objective

Be consistent with `Resource`s and `Component`s and have `Event` types be more self-documenting.

Although not susceptible to accidentally using a function instead of a value due to `Event`s only being initialized by their type, much of the same reasoning for removing the blanket impl on `Resource` also applies here.

* Not immediately obvious if a type is intended to be an event
* Prevent invisible conflicts if the same third-party or primitive types are used as events
* Allows for further extensions (e.g. opt-in warning for missed events)

## Solution

Remove the blanket impl for the `Event` trait. Add a derive macro for it.

---

## Changelog

- `Event` is no longer implemented for all applicable types. Add the `#[derive(Event)]` macro for events.

## Migration Guide

* Add the `#[derive(Event)]` macro for events. Third-party types used as events should be wrapped in a newtype.
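The opt-in behavior and the newtype pattern can be sketched without Bevy as follows. The `Event` trait below is a hypothetical, simplified marker trait (not Bevy's definition), the manual `impl` lines stand in for `#[derive(Event)]`, and `String` plays the role of a third-party type:

```rust
/// Hypothetical, simplified opt-in marker trait. There is no
/// `impl<T> Event for T`, so each event type must opt in explicitly.
pub trait Event: Send + Sync + 'static {}

/// A first-party event type opts in directly
/// (with Bevy this would be `#[derive(Event)]`).
pub struct PlayerDied {
    pub player_id: u32,
}
impl Event for PlayerDied {}

/// Newtype around a type we do not own; `String` stands in for a third-party type.
pub struct ChatMessage(pub String);
impl Event for ChatMessage {}

/// Only accepts types that opted in to `Event`.
pub fn send_event<E: Event>(queue: &mut Vec<E>, event: E) {
    queue.push(event);
}
```

With this shape, passing a bare `String` to `send_event` fails to compile, which makes it visible at the type level what is intended to be an event.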

# Objective

Fix #7833.

Safety comments in the multi-threaded executor don't really talk about system world accesses, which makes it unclear if the code is actually valid.

## Solution

Update the `System` trait to use `UnsafeWorldCell`. This type's API is written in a way that makes it much easier to cleanly maintain safety invariants. Use this type throughout the multi-threaded executor, with a liberal use of safety comments.

---

## Migration Guide

The `System` trait now uses `UnsafeWorldCell` instead of `&World`. This type provides a robust API for interior mutable world access.

- The method `run_unsafe` uses this type to manage world mutations across multiple threads.
- The method `update_archetype_component_access` uses this type to ensure that only world metadata can be used.

```rust
let mut system = IntoSystem::into_system(my_system);
system.initialize(&mut world);

// Before:
system.update_archetype_component_access(&world);
unsafe { system.run_unsafe(&world) }

// After:
system.update_archetype_component_access(world.as_unsafe_world_cell_readonly());
unsafe { system.run_unsafe(world.as_unsafe_world_cell()) }
```

---------

Co-authored-by: James Liu <contact@jamessliu.com>

# Objective

> This PR is based on discussion from #6601

The Dynamic types (e.g. `DynamicStruct`, `DynamicList`, etc.) act as both:

1. Dynamic containers which may hold any arbitrary data
2. Proxy types which may represent any other type

Currently, the only way we can represent the proxy-ness of a Dynamic is by giving it a name.

```rust
// This is just a dynamic container
let mut data = DynamicStruct::default();

// This is a "proxy"
data.set_name(std::any::type_name::<Foo>());
```

This type name is the only way we check that the given Dynamic is a proxy of some other type. When we need to "assert the type" of a `dyn Reflect`, we call `Reflect::type_name` on it. However, because we're only using a string to denote the type, we run into a few gotchas and limitations.

For example, hashing a Dynamic proxy may work differently than the type it proxies:

```rust
#[derive(Reflect, Hash)]
#[reflect(Hash)]
struct Foo(i32);

let concrete = Foo(123);
let dynamic = concrete.clone_dynamic();

let concrete_hash = concrete.reflect_hash();
let dynamic_hash = dynamic.reflect_hash();

// The hashes are not equal because `concrete` uses its own `Hash` impl
// while `dynamic` uses a reflection-based hashing algorithm
assert_ne!(concrete_hash, dynamic_hash);
```

Because the Dynamic proxy only knows about the name of the type, it's unaware of any other information about it. This means it also differs on `Reflect::reflect_partial_eq`, and may include ignored or skipped fields in places the concrete type wouldn't.

## Solution

Rather than having Dynamics pass along just the type name of proxied types, we can instead have them pass around the `TypeInfo`.

Now all Dynamic types contain an `Option<&'static TypeInfo>` rather than a `String`:

```diff
 pub struct DynamicTupleStruct {
-    type_name: String,
+    represented_type: Option<&'static TypeInfo>,
     fields: Vec<Box<dyn Reflect>>,
 }
```

By changing `Reflect::get_type_info` to `Reflect::represented_type_info`, hopefully we make this behavior a little clearer. And to account for `None` values on these dynamic types, `Reflect::represented_type_info` now returns `Option<&'static TypeInfo>`.

```rust
let mut dyn_tuple_struct = DynamicTupleStruct::default();

// Not proxying any specific type
assert!(dyn_tuple_struct.represented_type_info().is_none());

let type_info = <Foo as Typed>::type_info();
dyn_tuple_struct.set_represented_type(Some(type_info));
// Alternatively:
// let dyn_tuple_struct = foo.clone_dynamic();

// Now we're proxying `Foo`
assert!(dyn_tuple_struct.represented_type_info().is_some());
```

This means that we can have full access to all the static type information for the proxied type. Future work would include transitioning more static type information (trait impls, attributes, etc.) over to the `TypeInfo` so it can actually be utilized by Dynamic proxies.

### Alternatives & Rationale

> **Note**
> These alternatives were written when this PR was first made using a `Proxy` trait. This trait has since been removed.

<details>

<summary>View</summary>

#### Alternative: The `Proxy<T>` Approach

I had considered adding something like a `Proxy<T>` type where `T` would be the Dynamic and would contain the proxied type information.

This was nice in that it allows us to explicitly determine whether something is a proxy or not at a type level. `Proxy<DynamicStruct>` proxies a struct. Makes sense.

The reason I didn't go with this approach is because (1) tuples, (2) complexity, and (3) `PartialReflect`.

The `DynamicTuple` struct allows us to represent tuples at runtime. It also allows us to do something you normally can't with tuples: add new fields. Because of this, adding a field immediately invalidates the proxy (e.g. our info for `(i32, i32)` doesn't apply to `(i32, i32, NewField)`). By going with this PR's approach, we can just remove the type info on `DynamicTuple` when that happens. However, with the `Proxy<T>` approach, it becomes difficult to represent this behavior: we'd have to completely control how we access data for `T` for each `T`.

Secondly, it introduces some added complexities (aside from the manual impls for each `T`). Does `Proxy<T>` impl `Reflect`? Likely yes, if we want to represent it as `dyn Reflect`. What `TypeInfo` do we give it? How would we forward reflection methods to the inner type (remember, we don't have specialization)? How do we separate this from Dynamic types? And finally, how do we do all this in a way that's both logical and intuitive for users?

Lastly, introducing a `Proxy` trait rather than a `Proxy<T>` struct is actually more in line with the [Unique Reflect RFC](https://github.com/bevyengine/rfcs/pull/56). In a way, the `Proxy` trait is really one part of the `PartialReflect` trait introduced in that RFC (it's technically not in that RFC but it fits well with it), where the `PartialReflect` serves as a way for proxies to work _like_ concrete types without having full access to everything a concrete `Reflect` type can do. This would help bridge the gap between the current state of the crate and the implementation of that RFC.

All that said, this is still a viable solution. If the community believes this is the better path forward, then we can do that instead. These were just my reasons for not initially going with it in this PR.

#### Alternative: The Type Registry Approach

The `Proxy` trait is great and all, but how does it solve the original problem? Well, it doesn't, at least not yet!

The goal would be to start moving information from the derive macro and its attributes to the generated `TypeInfo` since these are known statically and shouldn't change. For example, adding `ignored: bool` to `[Un]NamedField` or a list of impls.

However, there is another way of storing this information. This is, of course, one of the uses of the `TypeRegistry`. If we're worried about Dynamic proxies not aligning with their concrete counterparts, we could move more type information to the registry and require its usage.

For example, we could replace `Reflect::reflect_hash(&self)` with `Reflect::reflect_hash(&self, registry: &TypeRegistry)`.

That's not the _worst_ thing in the world, but it is an ergonomics loss.

Additionally, other attributes may have their own requirements, further restricting what's possible without the registry. The `Reflect::apply` method would then require the registry as well. Why? Because the `map_apply` function used for the `Reflect::apply` impls on `Map` types depends on `Map::insert_boxed`, which (at least for `DynamicMap`) requires `Reflect::reflect_hash`. The same would apply when adding support for reflection-based diffing, which will require `Reflect::reflect_partial_eq`.

Again, this is a totally viable alternative. I just chose not to go with it for the reasons above. If we want to go with it, then we can close this PR and we can pursue this alternative instead.

#### Downsides

Just to highlight a quick potential downside (likely needs more investigation): retrieving the `TypeInfo` requires acquiring a lock on the `GenericTypeInfoCell` used by the `Typed` impls for generic types (non-generic types use a `OnceBox`, which should be faster). I am not sure how much of a performance hit that is and will need to run some benchmarks to compare against.

</details>

### Open Questions

1. Should we use `Cow<'static, TypeInfo>` instead? I think that might be easier for modding? Perhaps, in that case, we need to update `Typed::type_info` and friends as well?
2. Are the alternatives better than the approach this PR takes? Are there other alternatives?

---

## Changelog

### Changed

- `Reflect::get_type_info` has been renamed to `Reflect::represented_type_info`
  - This method now returns `Option<&'static TypeInfo>` rather than just `&'static TypeInfo`

### Added

- Added `Reflect::is_dynamic` method to indicate when a type is dynamic

- Added a `set_represented_type` method on all dynamic types

### Removed

- Removed `TypeInfo::Dynamic` (use `Reflect::is_dynamic` instead)

- Removed `Typed` impls for all dynamic types

## Migration Guide

- The Dynamic types no longer take a string type name. Instead, they require a static reference to `TypeInfo`:

  ```rust
  #[derive(Reflect)]
  struct MyTupleStruct(f32, f32);

  let mut dyn_tuple_struct = DynamicTupleStruct::default();
  dyn_tuple_struct.insert(1.23_f32);
  dyn_tuple_struct.insert(3.21_f32);

  // BEFORE:
  let type_name = std::any::type_name::<MyTupleStruct>();
  dyn_tuple_struct.set_name(type_name);

  // AFTER:
  let type_info = <MyTupleStruct as Typed>::type_info();
  dyn_tuple_struct.set_represented_type(Some(type_info));
  ```

- `Reflect::get_type_info` has been renamed to `Reflect::represented_type_info` and now also returns an `Option<&'static TypeInfo>` (instead of just `&'static TypeInfo`):

  ```rust
  // BEFORE:
  let info: &'static TypeInfo = value.get_type_info();

  // AFTER:
  let info: &'static TypeInfo = value.represented_type_info().unwrap();
  ```

- `TypeInfo::Dynamic` and `DynamicInfo` have been removed. Use `Reflect::is_dynamic` instead:

  ```rust
  // BEFORE:
  if matches!(value.get_type_info(), TypeInfo::Dynamic(_)) {
      // ...
  }

  // AFTER:
  if value.is_dynamic() {
      // ...
  }
  ```

---------

Co-authored-by: radiish <cb.setho@gmail.com>

# Objective

There aren't any reflection bench tests for `Struct::clone_dynamic` or `Reflect::get_type_info`.

## Solution

Add benches for `Struct::clone_dynamic` and `Reflect::get_type_info`.

# Objective

Fix #7731. Add basic `Event` sending and iteration benchmarks to `bevy_ecs`'s benchmark suite.

## Solution

Add said benchmarks, scaling from 100 to 50,000 events.

I'm not sure whether to randomize the events going in; the current implementation might be too easy for the compiler to optimize.

---------

Co-authored-by: JoJoJet <21144246+JoJoJet@users.noreply.github.com>

# Objective

- Update `glam` to the latest version.

## Solution

- Update `glam` to version `0.23`.

Since the breaking change in `glam` only affects the `scalar-math` feature, this should cause no issues.

# Objective

- Make cubic splines more flexible and more performant
- Remove the existing spline implementation that is generic over many degrees
  - This is a potential performance footgun and adds type complexity for negligible gain.
- Add implementations of:
  - Bezier splines
  - Cardinal splines (inc. Catmull-Rom)
  - B-Splines
  - Hermite splines

https://user-images.githubusercontent.com/2632925/221780519-495d1b20-ab46-45b4-92a3-32c46da66034.mp4

https://user-images.githubusercontent.com/2632925/221780524-2b154016-699f-404f-9c18-02092f589b04.mp4

https://user-images.githubusercontent.com/2632925/221780525-f934f99d-9ad4-4999-bae2-75d675f5644f.mp4

## Solution

- Implements the concept that splines are curve generators (e.g. https://youtu.be/jvPPXbo87ds?t=3488) via the `CubicGenerator` trait.

- Common splines are bespoke data types that implement this trait. This gives us flexibility to add custom spline-specific methods on these types, while ultimately all generating a `CubicCurve`.

- All splines generate `CubicCurve`s, which are a chain of precomputed polynomial coefficients. This means that all splines have the same evaluation cost, as the calculations for determining position, velocity, and acceleration are all identical. In addition, `CubicCurve`s are simply a list of `CubicSegment`s, which are evaluated from t=0 to t=1. This also means cubic splines of different types can be chained together, as ultimately they all are simply a collection of `CubicSegment`s.

- Because easing is an operation on a single segment of a Bezier curve, we can simply implement easing on `Beziers` that use the `Vec2` type for points. Higher level crates such as `bevy_ui` can wrap this in a more ergonomic interface as needed.
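The "precomputed polynomial coefficients" idea can be sketched in one dimension as follows. `Segment` and `curve_position` are hypothetical names for illustration, using `f32` instead of vector types; this is not the actual `CubicSegment` or `CubicCurve` implementation:

```rust
/// Hypothetical 1D stand-in for a cubic segment:
/// p(t) = c0 + c1*t + c2*t^2 + c3*t^3, with t in [0, 1].
#[derive(Debug, Clone, Copy, PartialEq)]
pub struct Segment {
    pub coeff: [f32; 4],
}

impl Segment {
    /// Precomputes the polynomial coefficients from four cubic Bezier control points.
    pub fn from_bezier(p: [f32; 4]) -> Self {
        Self {
            coeff: [
                p[0],
                3.0 * (p[1] - p[0]),
                3.0 * (p[0] - 2.0 * p[1] + p[2]),
                p[3] - p[0] + 3.0 * (p[1] - p[2]),
            ],
        }
    }

    /// Position, evaluated with Horner's rule.
    pub fn position(&self, t: f32) -> f32 {
        let [c0, c1, c2, c3] = self.coeff;
        c0 + t * (c1 + t * (c2 + t * c3))
    }

    /// First derivative of the polynomial.
    pub fn velocity(&self, t: f32) -> f32 {
        let [_, c1, c2, c3] = self.coeff;
        c1 + t * (2.0 * c2 + t * 3.0 * c3)
    }

    /// Second derivative of the polynomial.
    pub fn acceleration(&self, t: f32) -> f32 {
        let [_, _, c2, c3] = self.coeff;
        2.0 * c2 + 6.0 * c3 * t
    }
}

/// A curve is a chain of segments; `t` in `[i, i + 1]` maps onto segment `i`.
/// Assumes `segments` is non-empty.
pub fn curve_position(segments: &[Segment], t: f32) -> f32 {
    let last = segments.len() - 1;
    let index = (t.floor().max(0.0) as usize).min(last);
    segments[index].position(t - index as f32)
}
```

Once the coefficients are stored, evaluation no longer depends on which kind of spline produced them, which is why segments from different generators can share one evaluation path.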

### Performance

Measured on a desktop i5 8600K (6-year-old CPU):

- easing: 2.7x faster (19ns)

- cubic vec2 position sample: 1.5x faster (1.8ns)

- cubic vec3 position sample: 1.5x faster (2.6ns)

- cubic vec3a position sample: 1.9x faster (1.4ns)

On a laptop i7 11800H:

- easing: 16ns

- cubic vec2 position sample: 1.6ns

- cubic vec3 position sample: 2.3ns

- cubic vec3a position sample: 1.2ns

---

## Changelog

- Added a generic cubic curve trait, and implementation for Cardinal splines (including Catmull-Rom), B-Splines, Beziers, and Hermite Splines. 2D cubic curve segments also implement easing functionality for animation.

# Objective

Base sets, added in #7466, are a special type of system set. Systems can only be added to base sets via `in_base_set`, while non-base sets can only be added via `in_set`. Unfortunately this is currently guarded by a runtime panic, which presents an unfortunate toe-stub when the wrong method is used. The delayed response between writing code and encountering the error (possibly hours) makes the distinction between base sets and other sets much more difficult to learn.

## Solution

Add the marker traits `BaseSystemSet` and `FreeSystemSet`. `in_base_set` and `in_set` now respectively accept these traits, which moves the runtime panic to a compile time error.
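A minimal sketch of how marker traits turn the runtime check into a compile-time one. The trait names follow the description above, but the definitions are simplified assumptions, and `Update`, `Physics`, and `SystemConfig` are hypothetical types for illustration:

```rust
/// Hypothetical, simplified versions of the traits described above.
pub trait SystemSet {
    fn name(&self) -> &'static str;
}
pub trait BaseSystemSet: SystemSet {}
pub trait FreeSystemSet: SystemSet {}

/// A base set.
pub struct Update;
impl SystemSet for Update {
    fn name(&self) -> &'static str {
        "Update"
    }
}
impl BaseSystemSet for Update {}

/// A free (non-base) set.
pub struct Physics;
impl SystemSet for Physics {
    fn name(&self) -> &'static str {
        "Physics"
    }
}
impl FreeSystemSet for Physics {}

/// Hypothetical record of the sets a system was added to.
#[derive(Default)]
pub struct SystemConfig {
    pub base_set: Option<&'static str>,
    pub sets: Vec<&'static str>,
}

impl SystemConfig {
    /// Only accepts base sets.
    pub fn in_base_set(mut self, set: impl BaseSystemSet) -> Self {
        self.base_set = Some(set.name());
        self
    }

    /// Only accepts free sets.
    pub fn in_set(mut self, set: impl FreeSystemSet) -> Self {
        self.sets.push(set.name());
        self
    }
}
```

In this sketch, `SystemConfig::default().in_set(Update)` is rejected by the compiler because `Update` does not implement `FreeSystemSet`, so the mistake surfaces while writing the code rather than when the app runs.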

---

## Changelog

+ Added the marker trait `BaseSystemSet`, which is distinguished from a `FreeSystemSet`. These are both subtraits of `SystemSet`.

## Migration Guide

None if merged with 0.10

# Objective

- Adds foundational math for Bezier curves, useful for UI/2D/3D animation and smooth paths.

https://user-images.githubusercontent.com/2632925/218883143-e138f994-1795-40da-8c59-21d779666991.mp4

## Solution

- Adds the generic `Bezier` type, and a `Point` trait. The `Point` trait allows us to use control points of any dimension, as long as they support vector math. I've implemented it for `f32`(1D), `Vec2`(2D), and `Vec3`/`Vec3A`(3D).

- Adds `CubicBezierEasing` on top of `Bezier` with the addition of an implementation of cubic Bezier easing, which is a foundational tool for UI animation.
  - This involves solving for $t$ in the parametric Bezier function $B(t)$ using the Newton-Raphson method to find a value with error $\leq$ 1e-7, capped at 8 iterations.

- Added type aliases for common Bezier curves: `CubicBezier2d`, `CubicBezier3d`, `QuadraticBezier2d`, and `QuadraticBezier3d`. These types use `Vec3A` to represent control points, as this was found to have an 80-90% speedup over using `Vec3`.

- Benchmarking shows quadratic/cubic Bezier evaluations $B(t)$ take \~1.8/2.4ns respectively. Easing, which requires an iterative solve, takes \~50ns for cubic Beziers.
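The iterative solve for easing can be sketched as follows. This is a standalone illustration under stated assumptions, not the PR's implementation: the end points are fixed at (0, 0) and (1, 1), the function names are hypothetical, and the initial guess `t = x` and the near-zero slope guard are choices made for this sketch. The tolerance and iteration cap mirror the values quoted above.

```rust
/// Evaluates a 1D cubic Bezier whose end points are fixed at 0 and 1.
pub fn bezier(p1: f32, p2: f32, t: f32) -> f32 {
    let u = 1.0 - t;
    3.0 * u * u * t * p1 + 3.0 * u * t * t * p2 + t * t * t
}

/// Derivative of `bezier` with respect to `t`.
pub fn bezier_derivative(p1: f32, p2: f32, t: f32) -> f32 {
    let u = 1.0 - t;
    3.0 * u * u * p1 + 6.0 * u * t * (p2 - p1) + 3.0 * t * t * (1.0 - p2)
}

/// Eases `x` through the curve with control points (x1, y1) and (x2, y2):
/// solve B_x(t) = x for `t` with Newton-Raphson, then return B_y(t).
pub fn ease(x1: f32, y1: f32, x2: f32, y2: f32, x: f32) -> f32 {
    const MAX_ERROR: f32 = 1e-7;
    const MAX_ITERATIONS: usize = 8;

    let x = x.clamp(0.0, 1.0);
    let mut t = x; // initial guess (an assumption of this sketch)
    for _ in 0..MAX_ITERATIONS {
        let error = bezier(x1, x2, t) - x;
        if error.abs() <= MAX_ERROR {
            break;
        }
        let slope = bezier_derivative(x1, x2, t);
        if slope.abs() < f32::EPSILON {
            break; // avoid dividing by a near-zero slope
        }
        // Newton-Raphson step, kept inside the valid parameter range.
        t = (t - error / slope).clamp(0.0, 1.0);
    }
    bezier(y1, y2, t)
}
```

For example, `ease(0.25, 0.1, 0.25, 1.0, x)` uses the control points of the CSS `ease` timing function; control points that lie on the diagonal give an approximately linear mapping.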

---

## Changelog

- Added `CubicBezier2d`, `CubicBezier3d`, `QuadraticBezier2d`, and `QuadraticBezier3d` types with methods for sampling position, velocity, and acceleration. The generic `Bezier` type is also available, and generic over any degree of Bezier curve.

- Added `CubicBezierEasing`, with additional methods to allow for smooth easing animations.

# Objective

We have a few old system labels that are now system sets but are still named or documented as labels. Documentation also generally mentioned system labels in some places.

## Solution

- Clean up naming and documentation regarding system sets

## Migration Guide

`PrepareAssetLabel` is now called `PrepareAssetSet`

Huge thanks to @maniwani, @devil-ira, @hymm, @cart, @superdump and @jakobhellermann for the help with this PR.

# Objective

- Followup #6587.

- Minimal integration for the Stageless Scheduling RFC: https://github.com/bevyengine/rfcs/pull/45

## Solution

- [x] Remove old scheduling module

- [x] Migrate new methods to no longer use extension methods

- [x] Fix compiler errors

- [x] Fix benchmarks

- [x] Fix examples

- [x] Fix docs

- [x] Fix tests

## Changelog

### Added

- a large number of methods on `App` to work with schedules ergonomically

- the `CoreSchedule` enum

- `App::add_extract_system` via the `RenderingAppExtension` trait extension method

- the private `prepare_view_uniforms` system now has a public system set for scheduling purposes, called `ViewSet::PrepareUniforms`

### Removed

- stages, and all code that mentions stages

- states have been dramatically simplified, and no longer use a stack

- `RunCriteriaLabel`

- `AsSystemLabel` trait

- `on_hierarchy_reports_enabled` run criteria (now just uses an ad hoc resource checking run condition)

- systems in `RenderSet/Stage::Extract` no longer warn when they do not read data from the main world

- `transform_propagate_system_set`: this was a nonstandard pattern that didn't actually provide enough control. The systems are already `pub`: the docs have been updated to ensure that the third-party usage is clear.

### Changed

- `System::default_labels` is now `System::default_system_sets`.

- `App::add_default_labels` is now `App::add_default_sets`

- `CoreStage` and `StartupStage` enums are now `CoreSet` and `StartupSet`

- `App::add_system_set` was renamed to `App::add_systems`

- The `StartupSchedule` label is now defined as part of the `CoreSchedule` enum

- `.label(SystemLabel)` is now referred to as `.in_set(SystemSet)`

- `SystemLabel` trait was replaced by `SystemSet`

- `SystemTypeIdLabel<T>` was replaced by `SystemSetType<T>`

- The `ReportHierarchyIssue` resource now has a public constructor (`new`), and implements `PartialEq`

- Fixed time steps now use a schedule (`CoreSchedule::FixedTimeStep`) rather than a run criteria.

- Adding rendering extraction systems now panics rather than silently failing if no subapp with the `RenderApp` label is found.

- the `calculate_bounds` system, with the `CalculateBounds` label, is now in `CoreSet::Update`, rather than in `CoreSet::PostUpdate` before commands are applied.

- `SceneSpawnerSystem` now runs under `CoreSet::Update`, rather than `CoreStage::PreUpdate.at_end()`.

- `bevy_pbr::add_clusters` is no longer an exclusive system

- the top level `bevy_ecs::schedule` module was replaced with `bevy_ecs::scheduling`

- `tick_global_task_pools_on_main_thread` is no longer run as an exclusive system. Instead, it has been replaced by `tick_global_task_pools`, which uses a `NonSend` resource to force running on the main thread.

## Migration Guide

- Calls to `.label(MyLabel)` should be replaced with `.in_set(MySet)`

- Stages have been removed. Replace these with system sets, and then add command flushes using the `apply_system_buffers` exclusive system where needed.

- The `CoreStage`, `StartupStage`, `RenderStage` and `AssetStage` enums have been replaced with `CoreSet`, `StartupSet`, `RenderSet` and `AssetSet`. The same scheduling guarantees have been preserved.

- Systems are no longer added to `CoreSet::Update` by default. Add systems manually if this behavior is needed, although you should consider adding your game logic systems to `CoreSchedule::FixedTimestep` instead for more reliable framerate-independent behavior.

- Similarly, startup systems are no longer part of `StartupSet::Startup` by default. In most cases, this won't matter to you.

- For example, `add_system_to_stage(CoreStage::PostUpdate, my_system)` should be replaced with `add_system(my_system.in_set(CoreSet::PostUpdate))`

- When testing systems or otherwise running them in a headless fashion, simply construct and run a schedule using `Schedule::new()` and `World::run_schedule` rather than constructing stages

- Run criteria have been renamed to run conditions. These can now be combined with each other and with states.

- Looping run criteria and state stacks have been removed. Use an exclusive system that runs a schedule if you need this level of control over system control flow.

- For app-level control flow over which schedules get run when (such as for rollback networking), create your own schedule and insert it under the `CoreSchedule::Outer` label.

- Fixed timesteps are now evaluated in a schedule, rather than controlled via run criteria. The `run_fixed_timestep` system runs this schedule between `CoreSet::First` and `CoreSet::PreUpdate` by default.

- Command flush points introduced by `AssetStage` have been removed. If you were relying on these, add them back manually.

- Adding extract systems is now typically done directly on the main app. Make sure the `RenderingAppExtension` trait is in scope, then call `app.add_extract_system(my_system)`.

- The `calculate_bounds` system, with the `CalculateBounds` label, is now in `CoreSet::Update`, rather than in `CoreSet::PostUpdate` before commands are applied. You may need to order your movement systems to occur before this system in order to avoid system order ambiguities in culling behavior.

- The `RenderLabel` `AppLabel` was renamed to `RenderApp` for clarity.

- `App::add_state` now takes 0 arguments: the starting state is set based on the `Default` impl.

- Instead of creating `SystemSet` containers for systems that run in stages, simply use `.on_enter::<State::Variant>()` or its `on_exit` or `on_update` siblings.

- `SystemLabel` derives should be replaced with `SystemSet`. You will also need to add the `Debug`, `PartialEq`, `Eq`, and `Hash` traits to satisfy the new trait bounds.

- `with_run_criteria` has been renamed to `run_if`. Run criteria have been renamed to run conditions for clarity, and should now simply return a bool.

- States have been dramatically simplified: there is no longer a "state stack". To queue a transition to the next state, call `NextState::set`.
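
The queued-transition model for states described in the last item can be sketched without any engine code. This is a minimal illustration only: `GameState`, the `NextState` stand-in, and `apply_state_transition` are hypothetical names written for this example, not Bevy's actual types or signatures.

```rust
/// Hypothetical game states; `Menu` is the starting state via `Default`.
#[derive(Clone, Copy, Debug, Default, PartialEq, Eq)]
enum GameState {
    #[default]
    Menu,
    Playing,
}

/// Simplified stand-in for a "next state" resource: at most one queued transition.
#[derive(Debug, Default)]
struct NextState<S>(Option<S>);

impl<S> NextState<S> {
    /// Queue a transition; a later call overwrites an earlier one.
    fn set(&mut self, state: S) {
        self.0 = Some(state);
    }
}

/// Applies the queued transition, if any.
/// Returns `true` when a queued transition was applied.
fn apply_state_transition<S>(current: &mut S, next: &mut NextState<S>) -> bool {
    match next.0.take() {
        Some(state) => {
            *current = state;
            true
        }
        None => false,
    }
}
```

Because there is only a single optional slot rather than a stack, a transition is either pending or not, which is the simplification the list describes.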

## TODO

- [x] remove dead methods on App and World

- [x] add `App::add_system_to_schedule` and `App::add_systems_to_schedule`

- [x] avoid adding the default system set at inappropriate times

- [x] remove any accidental cycles in the default plugins schedule

- [x] migrate benchmarks

- [x] expose explicit labels for the built-in command flush points

- [x] migrate engine code

- [x] remove all mentions of stages from the docs

- [x] verify docs for States

- [x] fix uses of exclusive systems that use .end / .at_start / .before_commands

- [x] migrate RenderStage and AssetStage

- [x] migrate examples

- [x] ensure that transform propagation is exported in a sufficiently public way (the systems are already pub)

- [x] ensure that on_enter schedules are run at least once before the main app

- [x] re-enable opt-in to execution order ambiguities

- [x] revert change to `update_bounds` to ensure it runs in `PostUpdate`

- [x] test all examples

- [x] unbreak directional lights

- [x] unbreak shadows (see 3d_scene, 3d_shape, lighting, transparency_3d examples)

- [x] game menu example shows loading screen and menu simultaneously

- [x] display settings menu is a blank screen

- [x] `without_winit` example panics

- [x] ensure all tests pass

- [x] SubApp doc test fails

- [x] runs_spawn_local tasks fails

- [x] [Fix panic_when_hierachy_cycle test hanging](https://github.com/alice-i-cecile/bevy/pull/120)

## Points of Difficulty and Controversy

**Reviewers, please give feedback on these and look closely**

1. Default sets, from the RFC, have been removed. These added a tremendous amount of implicit complexity and result in hard to debug scheduling errors. They're going to be tackled in the form of "base sets" by @cart in a followup.

2. The outer schedule controls which schedule is run when `App::update` is called.

3. I implemented `Label` for `Box<dyn Label>` for our label types. This enables us to store schedule labels in concrete form, and then later run them. I ran into the same set of problems when working with one-shot systems. We've previously investigated this pattern in depth, and it does not appear to lead to extra indirection with nested boxes.

4. `SubApp::update` simply runs the default schedule once. This sucks, but this whole API is incomplete and this was the minimal changeset.

5. `time_system` and `tick_global_task_pools_on_main_thread` no longer use exclusive systems to attempt to force scheduling order

6. Implementation strategy for fixed timesteps

7. `AssetStage` was migrated to `AssetSet` without reintroducing command flush points. These did not appear to be used, and it's nice to remove these bottlenecks.

8. Migration of `bevy_render/lib.rs` and pipelined rendering. The logic here is unusually tricky, as we have complex scheduling requirements.

## Future Work (ideally before 0.10)

- Rename schedule_v3 module to schedule or scheduling

- Add a derive macro to states, and likely a `EnumIter` trait of some form

- Figure out what exactly to do with the "systems added should basically work by default" problem

- Improve ergonomics for working with fixed timesteps and states

- Polish FixedTime API to match Time

- Rebase and merge #7415

- Resolve all internal ambiguities (blocked on better tools, especially #7442)

- Add "base sets" to replace the removed default sets.

# Objective

Fixes #3184. Fixes #6640. Fixes #4798.

Using `Query::par_for_each(_mut)` currently requires a `batch_size` parameter, which affects how it chunks up large archetypes and tables into smaller chunks to run in parallel. Tuning this value is difficult, as the performance characteristics depend entirely on the state of the `World` it's being run on. Typically, users will just pick a flat constant and tune it by hand until it performs well in some benchmarks. However, this is both error prone and risks overfitting the tuning to that benchmark.

This PR proposes a naive automatic batch-size computation based on the current state of the `World`.

## Background

`Query::par_for_each(_mut)` schedules a new Task for every archetype or table that it matches. Archetypes/tables larger than the batch size are chunked into smaller tasks. Assuming every entity matched by the query has an identical workload, this makes the worst case scenario involve using a batch size equal to the size of the largest matched archetype or table. Conversely, a batch size of `max {archetype, table} size / thread count * COUNT_PER_THREAD` is likely the sweet spot where the overhead of scheduling tasks is minimized, at least without grouping small archetypes/tables together.

There is also likely a strict minimum batch size below which the overhead of scheduling these tasks is heavier than running the entire thing single-threaded.

## Solution

- [x] Remove the `batch_size` from `Query(State)::par_for_each` and friends.

- [x] Add a check to compute `batch_size = max {archetype/table} size / thread count * COUNT_PER_THREAD`

- [x] ~~Panic if thread count is 0.~~ Defer to `for_each` if the thread count is 1 or less.

- [x] Early return if there is no matched table/archetype.

- [x] Add an override option for users who have queries that strongly violate the initial assumption that all iterated entities have an equal workload.
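
The checklist above can be sketched as a small standalone function. This is a hedged illustration, not the PR's implementation: `compute_batch_size` and `BATCHES_PER_THREAD` are hypothetical names, the constant's value is arbitrary, and the formula is read here as dividing the largest table size by `thread count * batches per thread`.

```rust
/// Hypothetical tuning constant: how many batches each thread should receive.
const BATCHES_PER_THREAD: usize = 4;

/// Picks a batch size from the sizes of the matched tables/archetypes.
///
/// Returns `None` when parallel iteration is pointless and the caller should
/// fall back to plain sequential iteration:
/// - there is at most one thread,
/// - nothing was matched, or
/// - every matched table is empty.
fn compute_batch_size(table_sizes: &[usize], thread_count: usize) -> Option<usize> {
    if thread_count <= 1 {
        return None;
    }
    // `max()` is `None` for an empty slice, which doubles as the early return.
    let max_size = table_sizes.iter().copied().max()?;
    if max_size == 0 {
        return None;
    }
    // Never produce a batch size of zero for small tables.
    Some((max_size / (thread_count * BATCHES_PER_THREAD)).max(1))
}
```

With 4 threads and a largest table of 1000 entities this yields batches of 62 entities, so each thread gets roughly four batches to balance uneven progress.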

---

## Changelog

Changed: `Query::par_for_each(_mut)` has been changed to `Query::par_iter(_mut)` and will now automatically try to produce a batch size for callers based on the current `World` state.

## Migration Guide

The `batch_size` parameter for `Query(State)::par_for_each(_mut)` has been removed. These calls will automatically compute a batch size for you. Remove these parameters from all calls to these functions.

Before:

```rust
fn parallel_system(query: Query<&MyComponent>) {
    query.par_for_each(32, |comp| {
        // ...
    });
}
```

After:

```rust
fn parallel_system(query: Query<&MyComponent>) {
    query.par_iter().for_each(|comp| {
        // ...
    });
}
```

Co-authored-by: Arnav Choubey <56453634+x-52@users.noreply.github.com>

Co-authored-by: Robert Swain <robert.swain@gmail.com>

Co-authored-by: François <mockersf@gmail.com>

Co-authored-by: Corey Farwell <coreyf@rwell.org>

Co-authored-by: Aevyrie <aevyrie@gmail.com>

# Objective

- https://github.com/bevyengine/bevy/pull/3505 was marked `S-Adopt-Me`; this PR continues that work.

## Solution

- Run `cargo clippy --workspace --all-targets --all-features -- -Aclippy::type_complexity -Wclippy::doc_markdown -Wclippy::redundant_else -Wclippy::match_same_arms -Wclippy::semicolon_if_nothing_returned -Wclippy::explicit_iter_loop -Wclippy::map_flatten -Dwarnings` under the benches dir.

- Fix the reported issues according to the suggestions.

# Objective

* Add benchmarks for `Query::get_many`.

* Speed up `Query::get_many`.

## Solution

Previously, `get_many` and `get_many_mut` used the method `array::map`, which tends to optimize very poorly. This PR replaces uses of that method with loops.
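
As a rough illustration of the loop-based shape, here is a standalone sketch that fills a fixed-size array with a plain loop and bails out early on a failed lookup. It is not Bevy's code: `get_many` here is a hypothetical free function over a slice, and it uses a zero-initialized output array for simplicity.

```rust
/// Looks up `N` indices in `values`, returning `None` if any index is out of bounds.
///
/// The output array is filled with an explicit loop rather than `array::map`,
/// and the `?` operator exits as soon as one lookup fails.
fn get_many<const N: usize>(values: &[u32], indices: [usize; N]) -> Option<[u32; N]> {
    let mut out = [0u32; N];
    for (slot, index) in out.iter_mut().zip(indices) {
        *slot = *values.get(index)?;
    }
    Some(out)
}
```

Whether a loop beats `array::map` depends on the compiler version and the element type, so a change like this is worth confirming with benchmarks such as the ones below.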

## Benchmarks

| Benchmark name                       | Execution time | Change from this PR |
|--------------------------------------|----------------|---------------------|
| query_get_many_2/50000_calls_table   | 1.3732 ms      | -24.967%            |
| query_get_many_2/50000_calls_sparse  | 1.3826 ms      | -24.572%            |
| query_get_many_5/50000_calls_table   | 2.6833 ms      | -30.681%            |
| query_get_many_5/50000_calls_sparse  | 2.9936 ms      | -30.672%            |
| query_get_many_10/50000_calls_table  | 5.7771 ms      | -36.950%            |
| query_get_many_10/50000_calls_sparse | 7.4345 ms      | -36.987%            |

# Objective

Bevy still has many instances of using single-tuples `(T,)` to create a bundle. Due to #2975, this is no longer necessary.

## Solution

Search for regex `\(.+\s*,\)`. This should have found every instance.

# Objective

Speed up queries that are fragmented over many empty archetypes and tables.

## Solution

Add an early-out that checks whether the table or archetype is empty before iterating over it. This adds an extra branch for every archetype matched, but skips setting the archetype/table to the underlying state and any iteration over it.
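
A minimal sketch of the early-out, using a hypothetical `Table` type with a plain `Vec` of rows instead of real ECS storage. The `visited` counter exists only to make the skipped work observable.

```rust
/// Hypothetical stand-in for a table or archetype: just a list of rows.
struct Table {
    rows: Vec<u32>,
}

/// Sums all rows, skipping empty tables before doing any per-table setup.
/// Returns the sum and the number of tables that were actually visited.
fn sum_non_empty(tables: &[Table]) -> (u32, usize) {
    let mut sum = 0u32;
    let mut visited = 0usize;
    for table in tables {
        // Early-out: one extra branch per table, but no setup or iteration
        // cost for tables that contain nothing.
        if table.rows.is_empty() {
            continue;
        }
        visited += 1;
        sum += table.rows.iter().sum::<u32>();
    }
    (sum, visited)
}
```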

This may not be worth it for the default `Query::iter` and maybe even the `Query::for_each` implementations, but this definitely avoids scheduling unnecessary tasks in the `Query::par_for_each` case.

Ideally, `matched_archetypes` should only contain archetypes where there's actually work to do, but this would add an `O(n)` flat cost to every call to `update_archetypes` that scales with the number of matched archetypes.

TODO: Benchmark

# Objective

The [Stageless RFC](https://github.com/bevyengine/rfcs/pull/45) involves allowing exclusive systems to be referenced and ordered relative to parallel systems. We've agreed that unifying systems under `System` is the right move.

This is an alternative to #4166 (see rationale in the comments I left there). Note that this builds on the learnings established there (and borrows some patterns).

## Solution

This unifies parallel and exclusive systems under the shared `System` trait, removing the old `ExclusiveSystem` trait / impls. This is accomplished by adding a new `ExclusiveFunctionSystem` impl similar to `FunctionSystem`. It is backed by `ExclusiveSystemParam`, which is similar to `SystemParam`. There is a new flattened out SystemContainer api (which cuts out a lot of trait and type complexity).

This means you can remove all cases of `exclusive_system()`:

```rust
// before
app.add_system(some_system.exclusive_system());

// after
app.add_system(some_system);
```

I've also implemented `ExclusiveSystemParam` for `&mut QueryState` and `&mut SystemState`, which makes this possible in exclusive systems:

```rust
fn some_exclusive_system(
    world: &mut World,
    transforms: &mut QueryState<&Transform>,
    state: &mut SystemState<(Res<Time>, Query<&Player>)>,
) {
    for transform in transforms.iter(world) {
        println!("{transform:?}");
    }
    let (time, players) = state.get(world);
    for player in players.iter() {
        println!("{player:?}");
    }
}
```

Note that "exclusive function systems" assume `&mut World` is present (and the first param). I think this is a fair assumption, given that the presence of `&mut World` is what defines the need for an exclusive system.

I added some targeted SystemParam `'static` constraints, which removed the need for this:

```rust
fn some_exclusive_system(state: &mut SystemState<(Res<'static, Time>, Query<&'static Player>)>) {}
```

## Related

- #2923

- #3001

- #3946

## Changelog

- `ExclusiveSystem` trait (and implementations) has been removed in favor of sharing the `System` trait.

- `ExclusiveFunctionSystem` and `ExclusiveSystemParam` were added, enabling flexible exclusive function systems

- `&mut SystemState` and `&mut QueryState` now implement `ExclusiveSystemParam`

- Exclusive and parallel System configuration is now done via a unified `SystemDescriptor`, `IntoSystemDescriptor`, and `SystemContainer` api.

## Migration Guide

Calling `.exclusive_system()` is no longer required (or supported) for converting exclusive system functions to exclusive systems:

```rust
// Old (0.8)
app.add_system(some_exclusive_system.exclusive_system());

// New (0.9)
app.add_system(some_exclusive_system);
```

Converting "normal" parallel systems to exclusive systems is done by calling the exclusive ordering apis:

```rust
// Old (0.8)
app.add_system(some_system.exclusive_system().at_end());

// New (0.9)
app.add_system(some_system.at_end());
```

Query state in exclusive systems can now be cached via ExclusiveSystemParams, which should be preferred for clarity and performance reasons:

```rust
// Old (0.8)
fn some_system(world: &mut World) {
    let mut transforms = world.query::<&Transform>();
    for transform in transforms.iter(world) {
    }
}

// New (0.9)
fn some_system(world: &mut World, transforms: &mut QueryState<&Transform>) {
    for transform in transforms.iter(world) {
    }
}
```

# Objective

Now that we can consolidate Bundles and Components under a single insert (thanks to #2975 and #6039), almost 100% of world spawns now look like `world.spawn().insert((Some, Tuple, Here))`. Spawning an entity without any components is an extremely uncommon pattern, so it makes sense to give spawn the "first class" ergonomic api. This consolidated api should be made consistent across all spawn apis (such as World and Commands).

## Solution

All `spawn` apis (`World::spawn`, `Commands::spawn`, `ChildBuilder::spawn`, and `WorldChildBuilder::spawn`) now accept a bundle as input:

```rust
// before:
commands
    .spawn()
    .insert((A, B, C));

world
    .spawn()
    .insert((A, B, C));

// after
commands.spawn((A, B, C));

world.spawn((A, B, C));
```

All existing instances of `spawn_bundle` have been deprecated in favor of the new `spawn` api. A new `spawn_empty` has been added, replacing the old `spawn` api.

By allowing `world.spawn(some_bundle)` to replace `world.spawn().insert(some_bundle)`, this opened the door to removing the initial entity allocation in the "empty" archetype / table done in `spawn()` (and subsequent move to the actual archetype in `.insert(some_bundle)`).

This improves spawn performance by over 10%.

To take this measurement, I added a new `world_spawn` benchmark.

Unfortunately, optimizing `Commands::spawn` is slightly less trivial, as Commands expose the Entity id of spawned entities prior to actually spawning. Doing the optimization would (naively) require assurances that the `spawn(some_bundle)` command is applied before all other commands involving the entity (which would not necessarily be true, if memory serves). Optimizing `Commands::spawn` this way does feel possible, but it will require careful thought (and maybe some additional checks), which deserves its own PR. For now, it has the same performance characteristics of the current `Commands::spawn_bundle` on main.

**Note that 99% of this PR is simple renames and refactors. The only code that needs careful scrutiny is the new `World::spawn()` impl, which is relatively straightforward, but it has some new unsafe code (which re-uses the battle-tested `BundleSpawner` code path).**

---

## Changelog

- All `spawn` apis (`World::spawn`, `Commands::spawn`, `ChildBuilder::spawn`, and `WorldChildBuilder::spawn`) now accept a bundle as input

- All instances of `spawn_bundle` have been deprecated in favor of the new `spawn` api

- World and Commands now have `spawn_empty()`, which is equivalent to the old `spawn()` behavior.

## Migration Guide

```rust
// Old (0.8):
commands
    .spawn()
    .insert_bundle((A, B, C));

// New (0.9)
commands.spawn((A, B, C));

// Old (0.8):
commands.spawn_bundle((A, B, C));

// New (0.9)
commands.spawn((A, B, C));

// Old (0.8):
let entity = commands.spawn().id();

// New (0.9)
let entity = commands.spawn_empty().id();

// Old (0.8)
let entity = world.spawn().id();

// New (0.9)
let entity = world.spawn_empty().id();
```

# Objective

Take advantage of the "impl Bundle for Component" changes in #2975 / add the follow up changes discussed there.

## Solution

- Change `insert` and `remove` to accept a Bundle instead of a Component (for both Commands and World)

- Deprecate `insert_bundle`, `remove_bundle`, and `remove_bundle_intersection`

- Add `remove_intersection`

---

## Changelog

- `insert` and `remove` now accept a Bundle instead of a Component (for both Commands and World)

- `insert_bundle` and `remove_bundle` are deprecated

## Migration Guide

Replace `insert_bundle` with `insert`:

```rust
// Old (0.8)
commands.spawn().insert_bundle(SomeBundle::default());

// New (0.9)
commands.spawn().insert(SomeBundle::default());
```

Replace `remove_bundle` with `remove`:

```rust
// Old (0.8)
commands.entity(some_entity).remove_bundle::<SomeBundle>();

// New (0.9)
commands.entity(some_entity).remove::<SomeBundle>();
```

Replace `remove_bundle_intersection` with `remove_intersection`:

```rust
// Old (0.8)
world.entity_mut(some_entity).remove_bundle_intersection::<SomeBundle>();

// New (0.9)
world.entity_mut(some_entity).remove_intersection::<SomeBundle>();
```

Consider consolidating as many operations as possible to improve ergonomics and cut down on archetype moves:

```rust
// Old (0.8)
commands.spawn()
    .insert_bundle(SomeBundle::default())
    .insert(SomeComponent);

// New (0.9) - Option 1
commands.spawn().insert((
    SomeBundle::default(),
    SomeComponent,
));

// New (0.9) - Option 2
commands.spawn_bundle((
    SomeBundle::default(),
    SomeComponent,
));
```

## Next Steps

Consider changing `spawn` to accept a bundle and deprecate `spawn_bundle`.

*This PR description is an edited copy of #5007, written by @alice-i-cecile.*

# Objective

Follow-up to https://github.com/bevyengine/bevy/pull/2254. The `Resource` trait currently has a blanket implementation for all types that meet its bounds.

While ergonomic, this results in several drawbacks:

* it is possible to make confusing, silent mistakes such as inserting a function pointer (Foo) rather than a value (Foo::Bar) as a resource

* it is challenging to discover if a type is intended to be used as a resource

* we cannot later add customization options (see the [RFC](https://github.com/bevyengine/rfcs/blob/main/rfcs/27-derive-component.md) for the equivalent choice for Component).

* dependencies can use the same Rust type as a resource in invisibly conflicting ways

* raw Rust types used as resources cannot preserve privacy appropriately, as anyone able to access that type can read and write to internal values

* we cannot capture a definitive list of possible resources to display to users in an editor

## Notes to reviewers

* Review this commit-by-commit; there's effectively no back-tracking and there's a lot of churn in some of these commits.

*ira: My commits are not as well organized :')*

* I've relaxed the bound on Local to Send + Sync + 'static: I don't think these concerns apply there, so this can keep things simple. Storing e.g. a u32 in a Local is fine, because there's a variable name attached explaining what it does.

* I think this is a bad place for the Resource trait to live, but I've left it in place to make reviewing easier. IMO that's best tackled with https://github.com/bevyengine/bevy/issues/4981.

## Changelog

`Resource` is no longer automatically implemented for all matching types. Instead, use the new `#[derive(Resource)]` macro.

## Migration Guide

Add `#[derive(Resource)]` to all types you are using as a resource.

If you are using a third party type as a resource, wrap it in a tuple struct to bypass orphan rules. Consider deriving `Deref` and `DerefMut` to improve ergonomics.
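
The newtype advice can be sketched in plain Rust. This is only an illustration under stated assumptions: the `Resource` trait below is a local stand-in for an opt-in marker trait, `FrameBudget` and `insert_resource` are hypothetical names, `std::time::Duration` plays the role of the third-party type, and `Deref`/`DerefMut` are implemented by hand where a derive would normally be used.

```rust
use std::ops::{Deref, DerefMut};
use std::time::Duration;

/// Stand-in for an opt-in marker trait (what a `#[derive(Resource)]` would implement).
trait Resource: Send + Sync + 'static {}

/// Newtype around a foreign type. Because the wrapper is defined locally,
/// implementing traits for it does not run into the orphan rules.
struct FrameBudget(Duration);

impl Resource for FrameBudget {}

impl Deref for FrameBudget {
    type Target = Duration;
    fn deref(&self) -> &Duration {
        &self.0
    }
}

impl DerefMut for FrameBudget {
    fn deref_mut(&mut self) -> &mut Duration {
        &mut self.0
    }
}

/// Only types that opted in via `Resource` are accepted.
fn insert_resource<R: Resource>(resource: R) -> R {
    resource
}
```

With `Deref` in place, callers can use the wrapped type's methods directly, for example `budget.as_millis()`, without reaching through `.0`.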

`ClearColor` no longer implements `Component`. Using `ClearColor` as a component in 0.8 did nothing.

Use the `ClearColorConfig` in the `Camera3d` and `Camera2d` components instead.

Co-authored-by: Alice <alice.i.cecile@gmail.com>

Co-authored-by: Alice Cecile <alice.i.cecile@gmail.com>

Co-authored-by: devil-ira <justthecooldude@gmail.com>

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

- Closes #4954

- Reduce the complexity of the `{System, App, *}Label` APIs.

## Solution

For the sake of brevity I will only refer to `SystemLabel`, but everything applies to all of the other label types as well.

- Add `SystemLabelId`, a lightweight, `Copy` struct.

- Convert custom types into `SystemLabelId` using the trait `SystemLabel`.
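
To illustrate the idea of a lightweight, `Copy` label id, here is a standalone sketch. The names `LabelId` and `Label`, and the choice of fields (a `TypeId` plus a static name), are assumptions made for this example and are not taken from the PR's implementation.

```rust
use std::any::TypeId;

/// Hypothetical lightweight id: cheap to copy, compare, and hash, no boxing.
#[derive(Clone, Copy, Debug, PartialEq, Eq, Hash)]
struct LabelId {
    type_id: TypeId,
    name: &'static str,
}

/// Hypothetical label trait: custom types are converted into a `LabelId`.
trait Label: 'static {
    fn name(&self) -> &'static str;

    fn as_label(&self) -> LabelId
    where
        Self: Sized,
    {
        LabelId {
            type_id: TypeId::of::<Self>(),
            name: self.name(),
        }
    }
}

enum Physics {
    Step,
    Sync,
}

impl Label for Physics {
    fn name(&self) -> &'static str {
        match self {
            Physics::Step => "Step",
            Physics::Sync => "Sync",
        }
    }
}

/// A unit struct whose name collides with `Physics::Step` on purpose.
struct Step;

impl Label for Step {
    fn name(&self) -> &'static str {
        "Step"
    }
}
```

Including the `TypeId` keeps `Physics::Step` and the unit struct `Step` distinct even though their names match.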

## Changelog

- String literals implement `SystemLabel` for now, but this should be changed with #4409.

## Migration Guide

- Any previous use of `Box<dyn SystemLabel>` should be replaced with `SystemLabelId`.

- `AsSystemLabel` trait has been modified.

- No more output generics.

- Method `as_system_label` now returns `SystemLabelId`, removing an unnecessary level of indirection.

- If you *need* a label that is determined at runtime, you can use `Box::leak`. Not recommended.

## Questions for later

* Should we generate a `Debug` impl along with `#[derive(*Label)]`?

* Should we rename `as_str()`?

* Should we remove the extra derives (such as `Hash`) from builtin `*Label` types?

* Should we automatically derive types like `Clone, Copy, PartialEq, Eq`?

* More-ergonomic comparisons between `Label` and `LabelId`.

* Move `Dyn{Eq, Hash,Clone}` somewhere else.

* Some API to make interning dynamic labels easier.

* Optimize string representation

* Empty string for unit structs -- no debug info but faster comparisons

* Don't show enum types -- same tradeoffs as above.

Remove unnecessary calls to `iter()`/`iter_mut()`.

Mainly updates the use of queries in our code, docs, and examples.

```rust
// From
for _ in list.iter() {}
for _ in list.iter_mut() {}

// To
for _ in &list {}
for _ in &mut list {}
```

We already enable the pedantic lint [clippy::explicit_iter_loop](https://rust-lang.github.io/rust-clippy/stable/) inside of Bevy. However, this only warns for a few known types from the standard library.

## Note for reviewers

As you can see the additions and deletions are exactly equal.

Maybe give it a quick skim to check I didn't sneak in a crypto miner, but you don't have to torture yourself by reading every line.

I already experienced enough pain making this PR :)

Co-authored-by: devil-ira <justthecooldude@gmail.com>

## Objective

Fixes: #5110

## Solution

- Moved benches into separate modules according to the part of ECS they are testing.

- Made it so all ECS benches are included in one `benches.rs` so they don’t need to be added separately in `Cargo.toml`.

- Renamed a bunch of files to have more coherent names.

- Merged `schedule.rs` and `system_schedule.rs` into one file.

Removed `const_vec2`/`const_vec3` and replaced them with the equivalent `.from_array`.

# Objective

Fixes #5112

## Solution

- `encase` needs to update to `glam` as well. See teoxoy/encase#4 on progress on that.

- `hexasphere` also needs to be updated, see OptimisticPeach/hexasphere#12.

# Objective

As a part of evaluating #4800, at the behest of @cart, it was noted that the ECS microbenchmarks all focus on singular component queries, whereas in reality most systems will have wider queries with multiple components in each.

## Solution

Use const generics to add wider variants of existing benchmarks.
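
One way const generics can produce many distinct component types from a single definition is sketched below. This is an assumption about the general technique rather than a copy of the benchmark code: `Data`, `sum_wide`, and `distinct_component_types` are hypothetical names.

```rust
use std::any::TypeId;

/// One definition, many types: `Data<0>`, `Data<1>`, ... are all distinct.
#[derive(Clone, Copy, Debug, PartialEq)]
struct Data<const X: usize>(f32);

/// A "wide" access pattern touching three different component types per row.
fn sum_wide(rows: &[(Data<0>, Data<1>, Data<2>)]) -> f32 {
    rows.iter().map(|(a, b, c)| a.0 + b.0 + c.0).sum()
}

/// Each const parameter value yields its own `TypeId`.
fn distinct_component_types() -> bool {
    TypeId::of::<Data<0>>() != TypeId::of::<Data<1>>()
}
```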

# Objective

- Add benchmarks to test the performance of `Schedule`'s system dependency resolution.

## Solution

- Do a series of benchmarks while increasing the number of systems in the schedule to see how the run-time scales.

- Split the benchmarks into a group with no dependencies, and a group with many dependencies.

Right now, a direct reference to the target TaskPool is required to launch tasks on the pools, despite the three newtyped pools (AsyncComputeTaskPool, ComputeTaskPool, and IoTaskPool) effectively acting as global instances. The need to pass a TaskPool reference adds notable friction to spawning subtasks within existing tasks. Possible use cases for this may include chaining tasks within the same pool like spawning separate send/receive I/O tasks after waiting on a network connection to be established, or allowing cross-pool dependent tasks like starting dependent multi-frame computations following a long I/O load.

Other task execution runtimes provide static access to spawning tasks (i.e. `tokio::spawn`), which is notably easier to use than the reference passing required by `bevy_tasks` right now.

This PR does the following:

* Adds `*TaskPool::init`, which initializes a `OnceCell`-backed global with the provided TaskPool and fails if the pool has already been initialized.

* Adds `*TaskPool::get` which fetches the initialized global pool of the respective type or panics. This generally should not be an issue in normal Bevy use, as the pools are initialized before they are accessed.

* Updated default task pool initialization to either pull the global handles and save them as resources, or if they are already initialized, pull a cloned global handle as the resource.
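
The `init`/`get` pattern above can be sketched with the standard library alone. This example swaps in `std::sync::OnceLock` for the `OnceCell` mentioned in the text, and the `TaskPool` struct, its `threads` field, and the error type are placeholders invented for the illustration.

```rust
use std::sync::OnceLock;

/// Placeholder for a real task pool; only records a thread count.
struct TaskPool {
    threads: usize,
}

/// Newtype acting as a process-wide global instance.
struct ComputeTaskPool(TaskPool);

static COMPUTE_TASK_POOL: OnceLock<ComputeTaskPool> = OnceLock::new();

impl ComputeTaskPool {
    /// Installs the global pool. Fails if a pool was already installed.
    fn init(pool: TaskPool) -> Result<&'static Self, &'static str> {
        COMPUTE_TASK_POOL
            .set(ComputeTaskPool(pool))
            .map_err(|_| "ComputeTaskPool is already initialized")?;
        Ok(Self::get())
    }

    /// Fetches the global pool, panicking if `init` was never called.
    fn get() -> &'static Self {
        COMPUTE_TASK_POOL
            .get()
            .expect("ComputeTaskPool has not been initialized")
    }
}
```

Any code can then call `ComputeTaskPool::get()` without threading a reference through its call chain, which is the friction the text describes.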

This should make it notably easier to build more complex task hierarchies for dependent tasks. It should also make writing bevy-adjacent, but not strictly bevy-only plugin crates easier, as the global pools ensure it's all running on the same threads.

One alternative considered is keeping a thread-local reference to the pool for all threads in each pool to enable the same `tokio::spawn` interface. This would spawn tasks on the same pool that a task is currently running in. However, this could become a footgun when long-running blocking tasks end up on `ComputeTaskPool`.

# Objective

Fixes #3183. Requiring a `&TaskPool` parameter is sort of meaningless if the only correct choice is to use the one provided by `Res<ComputeTaskPool>` all the time.

## Solution

Have `QueryState` save a clone of the `ComputeTaskPool` which is used for all `par_for_each` functions.

~~Adds a small overhead of the internal `Arc` clone as a part of the startup, but the ergonomics win should be well worth this hardly noticeable overhead.~~

Updated the docs to note that it will panic if the task pool is not present as a resource.

# Future Work

If https://github.com/bevyengine/rfcs/pull/54 is approved, we can replace these resource lookups with a static function call instead to get the `ComputeTaskPool`.

---

## Changelog

Removed: The `task_pool` parameter of `Query(State)::par_for_each(_mut)`. These calls will use the `World`'s `ComputeTaskPool` resource instead.

## Migration Guide

The `task_pool` parameter for `Query(State)::par_for_each(_mut)` has been removed. Remove these parameters from all calls to these functions.

Before:

```rust
fn parallel_system(
    task_pool: Res<ComputeTaskPool>,
    query: Query<&MyComponent>,
) {
    query.par_for_each(&task_pool, 32, |comp| {
        // ...
    });
}
```

After:

```rust
fn parallel_system(query: Query<&MyComponent>) {
    query.par_for_each(32, |comp| {
        // ...
    });
}
```

If using `Query(State)` outside of a system run by the scheduler, you may need to manually configure and initialize a `ComputeTaskPool` as a resource in the `World`.

# Objective

Partially addresses #3594.

## Solution

This adds basic benchmarks for `List`, `Map`, and `Struct` implementors, both concrete (`Vec`, `HashMap`, and defined struct types) and dynamic (`DynamicList`, `DynamicMap` and `DynamicStruct`).

A few insights from the benchmarks (all measurements are local on my machine):

- Applying a list with many elements to a list with no elements is slower than applying to a list of the same length:

- 3-4x slower when applying to a `Vec`

- 5-6x slower when applying to a `DynamicList`

I suspect this could be improved by `reserve()`ing the correct length up front, but haven't tested.

- Applying a `DynamicMap` to another `Map` is linear in the number of elements, but applying a `HashMap` seems to be at least quadratic. No intuition on this one.

- Applying like structs (concrete -> concrete, `DynamicStruct` -> `DynamicStruct`) seems to be faster than applying unlike structs.

# Objective

- The code in `events.rs` was a bit messy. There was lots of duplication between `EventReader` and `ManualEventReader`, and the state management code is not needed.

## Solution

- Clean it up.

## Future work

Should we remove the type parameter from `ManualEventReader`?

It doesn't have any meaning outside of its source `Events`. But there's no real reason why it needs to have a type parameter - it's just plain data. I didn't remove it yet to keep the type safety in some of the users of it (primarily related to `&mut World` usage)

# Objective

- Add benches for run criteria. This is in anticipation of run criteria being redone in stageless.

## Solution

- Benches run criteria that don't access anything to test overhead

- Test run criteria that use a query

- Test run criteria that use a resource

# Objective

- Make it possible to use `System`s outside of the scheduler/executor without having to define logic to track new archetypes and call `System::add_archetype()` for each.

## Solution

- Replace `System::add_archetype(&Archetype)` with `System::update_archetypes(&World)`, making systems responsible for tracking their own most recent archetype generation the way that `SystemState` already does.

This has minimal (or simplifying) effect on most of the code with the exception of `FunctionSystem`, which must now track the latest `ArchetypeGeneration` it saw instead of relying on the executor to do it.
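
The bookkeeping this moves into the system can be sketched as follows. The `World` and `TrackingSystem` types are simplified stand-ins written for this example: archetypes are just names in a `Vec`, and the "generation" is the number of archetypes already seen.

```rust
/// Simplified world: archetypes are only ever appended.
struct World {
    archetypes: Vec<&'static str>,
}

/// A system that remembers how many archetypes it has already processed.
struct TrackingSystem {
    archetype_generation: usize,
    matched: Vec<&'static str>,
}

impl TrackingSystem {
    fn new() -> Self {
        Self {
            archetype_generation: 0,
            matched: Vec::new(),
        }
    }

    /// Processes only the archetypes added since the last call,
    /// then records the new generation.
    fn update_archetypes(&mut self, world: &World) {
        for name in &world.archetypes[self.archetype_generation..] {
            self.matched.push(*name);
        }
        self.archetype_generation = world.archetypes.len();
    }
}
```

Because the system owns its generation counter, a caller outside the scheduler only has to invoke `update_archetypes(&world)` before running it.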

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

- Benchmarks are good.

- Licensing situation appears to be [cleared up](https://github.com/bevyengine/bevy/pull/4225#issuecomment-1078710209).

## Solution

- Add the benchmark suite back in

- Suggested PR title: "Revert "Revert "Add cart's fork of ecs_bench_suite (#4225)" (#4252)"

Co-authored-by: Daniel McNab <36049421+DJMcNab@users.noreply.github.com>

This reverts commit 08ef2f0a28.

# Objective

- #4225 was merged without considering the licensing considerations.

- It merges in code taken from https://github.com/cart/ecs_bench_suite/tree/bevy-benches/src/bevy.

- We can safely assume that we do have a license to cart's contributions. However, these build upon 377e96e69a, for which we have no license.

- This has been verified by looking in the Cargo.toml, the root folder and the readme, none of which mention a license. Additionally, the string "license" [doesn't appear](https://github.com/rust-gamedev/ecs_bench_suite/search?q=license) in the repository.

- This means the code is all rights reserved.

- (The author of these commits also hasn't commented in #2373, though even if they had, it would be legally *dubious* to rely on that to license any code they ever wrote)

- (Note that the latest commit on the head at https://github.com/rust-gamedev/ecs_bench_suite hasn't had a license added either.)

- We are currently incorrectly claiming to be able to give an MIT/Apache 2.0 license to this code.

## Solution

- Revert it

# Objective

Better benchmarking for ECS. Fixes #2062.

## Solution

Port @cart's fork of ecs_bench_suite to the official bench suite for bevy_ecs, replace cgmath with glam, update to latest bevy.

# Objective

- The addition was being optimised out in the `for_each` loop, but not the `for` loop

- Previously this meant that the `for_each` loop looked 3 times as fast - it's actually only 2 times as fast

- Effectively, the addition takes one unit of time, the `for_each` takes one unit of time, and the `for` loop version takes two units of time.

## Solution

- `black_box` the count in each loop

Note that this does not fix `for_each` being faster than `for`, unfortunately.
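
A hedged sketch of the fix, using `std::hint::black_box` on plain slices rather than ECS queries. The function names are hypothetical, and the real benchmarks may use a different `black_box` (for example the one re-exported by the benchmarking harness).

```rust
use std::hint::black_box;

/// `for` loop variant: the running count passes through `black_box`
/// so the optimizer cannot fold the additions away.
fn count_with_for(values: &[u32]) -> u32 {
    let mut count = 0u32;
    for value in values {
        count = black_box(count + *value);
    }
    count
}

/// `for_each` variant with the same treatment, so both loops pay for the addition.
fn count_with_for_each(values: &[u32]) -> u32 {
    let mut count = 0u32;
    values.iter().for_each(|value| {
        count = black_box(count + *value);
    });
    count
}
```

Applying `black_box` identically in both variants keeps the comparison fair: any remaining difference comes from the iteration strategy, not from one loop having its body optimized out.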

This PR is part of the issue #3492.

# Objective

- Add and update the bevy_tasks documentation to achieve a 100% documentation coverage (sans `prelude` module)

- Add the `#![warn(missing_docs)]` lint to keep the documentation coverage for the future.

## Solution

- Add and update the bevy_tasks documentation.

- Add the `#![warn(missing_docs)]` lint.

- Added doctests to the previously missing docs wherever one was appropriate.

# Objective

- Document that the error codes will be rendered on the bevy website (see bevyengine/bevy-website#216)

- Some Cargo.toml files did not include the license or a description field

## Solution

- Readme for the errors crate

- Mark internal/development crates with `publish = false`

- Add missing license/descriptions to some crates

- [x] merge bevyengine/bevy-website#216

# Objective

During work on #3009 I found that not all jobs use `actions-rs`, and therefore a previous version of Rust is used for them. So while compilation and other steps can pass, the markup check and the Android build may fail with compilation errors.

## Solution

This PR adds `actions-rs` for any job running cargo, and updates the edition to 2021.

This implements the most minimal variant of #1843 - a derive for a marker trait. This is a prerequisite to more complicated features like statically defined storage type or opt-out component reflection.

In order to make a component struct's purpose explicit and avoid misuse, it must be annotated with `#[derive(Component)]` (manual impl is discouraged for compatibility). Right now this is just a marker trait, but in the future it might be expanded. Making this change early allows us to make further changes later without breaking backward compatibility for derive macro users.

This already prevents a lot of issues, like using bundles in `insert` calls. Primitive types are no longer valid components as well. This can be easily worked around by adding newtype wrappers and deriving `Component` for them.

One funny example of prevented bad code (from our own tests) is when a newtype struct or enum variant is used. Previously, it was possible to write `insert(Newtype)` instead of `insert(Newtype(value))`. That code compiled, because function pointers (in this case the newtype struct constructor) implement `Send + Sync + 'static`, so we allowed them to be used as components. This is no longer the case and such invalid code will trigger a compile error.
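The constructor pitfall can be reproduced in plain Rust without Bevy. In the sketch below, `insert_old`, `insert_new` and the hand-written `Component` trait are hypothetical stand-ins (the real trait is implemented through `#[derive(Component)]`); each returns the size of the value it received to make clear what was actually passed.

```rust
use std::mem::size_of;

struct Newtype(u32);

// Stand-in for the old behaviour: anything `Send + Sync + 'static` is accepted.
fn insert_old<T: Send + Sync + 'static>(_component: T) -> usize {
    size_of::<T>()
}

// Stand-in for the new behaviour: only explicitly marked types are accepted.
trait Component: Send + Sync + 'static {}
impl Component for Newtype {}

fn insert_new<T: Component>(_component: T) -> usize {
    size_of::<T>()
}

fn demo() -> (usize, usize) {
    // Compiles, but passes the zero-sized constructor function, not a value.
    let old = insert_old(Newtype);
    // Only a real `Newtype` value satisfies the marker trait bound.
    let new = insert_new(Newtype(7));
    // insert_new(Newtype); // would fail: the constructor is not a `Component`
    (old, new)
}
```

Here `demo` returns `(0, 4)`: the old bound happily stores a zero-sized function item, while the marker trait forces the caller to pass the 4-byte value.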

Co-authored-by: = <=>

Co-authored-by: TheRawMeatball <therawmeatball@gmail.com>

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

- Fixes #2674

- Check that benches build

## Solution

- Adds a job that runs `cargo check --benches`

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

This upstreams the code changes used by the new renderer to enable cross-app Entity reuse:

* Spawning at specific entities

  * `get_or_spawn`: spawns an entity if it doesn't already exist and returns an `EntityMut`

  * `insert_or_spawn_batch`: the batched equivalent to `world.get_or_spawn(entity).insert_bundle(bundle)`

* Clearing entities and storages

* Allocating Entities with "invalid" archetypes. These entities cannot be queried / are treated as "non existent". They serve as "reserved" entities that won't show up when calling `spawn()`. They must be "specifically spawned at" using apis like `get_or_spawn(entity)`.

In combination, these changes enable the "render world" to clear entities / storages each frame and reserve all "app world entities". These can then be spawned during the "render extract step".
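The clear-then-respawn flow can be pictured with a toy model. Everything in this sketch is hypothetical (`ToyWorld`, its `HashMap` storage, `extract_frame`) and ignores archetypes, generations and reservation entirely; it only illustrates how "get or spawn at a specific id" lets a second world reuse the first world's entity ids after being cleared each frame.

```rust
use std::collections::HashMap;

// Toy world: entity id -> list of component names. Not Bevy's storage.
#[derive(Default)]
struct ToyWorld {
    entities: HashMap<u32, Vec<&'static str>>,
}

impl ToyWorld {
    // Returns the entity with this exact id, spawning it first if needed.
    fn get_or_spawn(&mut self, id: u32) -> &mut Vec<&'static str> {
        self.entities.entry(id).or_default()
    }

    // Drops every entity, as the render world does each frame.
    fn clear_entities(&mut self) {
        self.entities.clear();
    }
}

// Hypothetical "extract" step: clear, then respawn at the app world's ids.
fn extract_frame(render_world: &mut ToyWorld, app_entities: &[(u32, &'static str)]) {
    render_world.clear_entities();
    for &(id, component) in app_entities {
        render_world.get_or_spawn(id).push(component);
    }
}
```

Because the ids are supplied by the caller rather than allocated by the toy world, the same id refers to the same logical entity in both worlds on every frame.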

This refactors "spawn" and "insert" code in a way that I think is a massive improvement to legibility and re-usability. It also yields marginal performance wins by reducing some duplicate lookups (less than a percentage point improvement on insertion benchmarks). There is also some potential for future unsafe reduction (by making BatchSpawner and BatchInserter generic). But for now I want to cut down generic usage to a minimum to encourage smaller binaries and faster compiles.

This is currently a draft because it needs more tests (although this code has already had some real-world testing on my custom-shaders branch).

I also fixed the benchmarks (which currently don't compile!) / added new ones to illustrate batching wins.

After these changes, Bevy ECS is basically ready to accommodate the new renderer. I think the biggest missing piece at this point is "sub apps".

# Objective

- Remove all the `.system()` possible.

- Check for remaining missing cases.

## Solution

- Remove all `.system()`, fix compile errors

- 32 calls to `.system()` remain, mostly internals; the few others should be removed after #2446

# Objective

While looking at the code of `World`, I noticed that two basic functions (`get` and `get_mut`), which are simple and probably called a lot, are not marked `#[inline]`.

## Solution

- Add benchmark to check impact

- Add `#[inline]`

```
group                                          this pr                        main
-----                                          ----                           ----
world_entity/50000_entities                    1.00  115.9±11.90µs ? ?/sec    1.71  198.5±29.54µs ? ?/sec
world_get/50000_entities_SparseSet             1.00  409.9±46.96µs ? ?/sec    1.18  483.5±36.41µs ? ?/sec
world_get/50000_entities_Table                 1.00  391.3±29.83µs ? ?/sec    1.16  455.6±57.85µs ? ?/sec
world_query_for_each/50000_entities_SparseSet  1.02  121.3±18.36µs ? ?/sec    1.00  119.4±13.88µs ? ?/sec
world_query_for_each/50000_entities_Table      1.03  13.8±0.96µs   ? ?/sec    1.00  13.3±0.54µs   ? ?/sec
world_query_get/50000_entities_SparseSet       1.00  666.9±54.36µs ? ?/sec    1.03  687.1±57.77µs ? ?/sec
world_query_get/50000_entities_Table           1.01  584.4±55.12µs ? ?/sec    1.00  576.3±36.13µs ? ?/sec
world_query_iter/50000_entities_SparseSet      1.01  169.7±19.50µs ? ?/sec    1.00  168.6±32.56µs ? ?/sec
world_query_iter/50000_entities_Table          1.00  26.2±1.38µs   ? ?/sec    1.91  50.0±4.40µs   ? ?/sec
```

I didn't add benchmarks for the mutable path but I don't see how it could hurt to make it inline too...
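For illustration, the change has roughly the following shape. `ToyWorld` and its `Vec`-backed storage are hypothetical; only the placement of the attribute on small accessor methods mirrors the PR. For non-generic functions, `#[inline]` mainly matters because it makes the body available for inlining in other crates, and it remains a hint rather than a guarantee.

```rust
// Hypothetical miniature world: one optional value per entity index.
struct ToyWorld {
    values: Vec<Option<u32>>,
}

impl ToyWorld {
    // Small, hot accessor: a good candidate for the `#[inline]` hint.
    #[inline]
    fn get(&self, index: usize) -> Option<&u32> {
        self.values.get(index)?.as_ref()
    }

    // The mutable path gets the same hint.
    #[inline]
    fn get_mut(&mut self, index: usize) -> Option<&mut u32> {
        self.values.get_mut(index)?.as_mut()
    }
}
```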

# Objective

- Currently `Commands` are quite slow due to the need to allocate for each command and wrap it in a `Box<dyn Command>`.

- For example:

```rust
fn my_system(mut cmds: Commands) {
    cmds.spawn().insert(42).insert(3.14);
}
```

will have 3 separate `Box<dyn Command>` that need to be allocated and run.

## Solution

- Utilize a specialized data structure named `CommandQueueInner`.

- The purpose of `CommandQueueInner` is to hold a collection of commands in contiguous memory.

- This allows us to store each `Command` type contiguously in memory and quickly iterate through them and apply the `Command::write` trait function to each element.
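One way such a structure could work is sketched below. This is a simplified illustration under stated assumptions, not the actual `CommandQueueInner`: `ToyCommandQueue`, `CommandMeta`, `apply_erased` and the `Vec<i32>` stand-in for the world are all hypothetical. Commands are copied byte-for-byte into one shared buffer, and a per-command function pointer remembers how to read each one back out and run it, so no command needs its own `Box`.

```rust
use std::mem::{size_of, ManuallyDrop, MaybeUninit};

// Toy stand-in for the world the commands mutate.
type ToyWorld = Vec<i32>;

trait Command: 'static {
    fn write(self, world: &mut ToyWorld);
}

// Where a command's bytes start, and how to run it once read back.
struct CommandMeta {
    offset: usize,
    apply: unsafe fn(*mut MaybeUninit<u8>, &mut ToyWorld),
}

// SAFETY contract: `ptr` must point at the bytes of a valid, not yet consumed `C`.
unsafe fn apply_erased<C: Command>(ptr: *mut MaybeUninit<u8>, world: &mut ToyWorld) {
    // The buffer gives no alignment guarantee, hence the unaligned read.
    let command: C = unsafe { ptr.cast::<C>().read_unaligned() };
    command.write(world);
}

#[derive(Default)]
struct ToyCommandQueue {
    bytes: Vec<MaybeUninit<u8>>,
    metas: Vec<CommandMeta>,
}

impl ToyCommandQueue {
    fn push<C: Command>(&mut self, command: C) {
        let size = size_of::<C>();
        let offset = self.bytes.len();
        self.bytes.reserve(size);
        // Ownership moves into the byte buffer, so skip the destructor here.
        let command = ManuallyDrop::new(command);
        // SAFETY: `reserve` made room for `size` bytes past `offset`.
        unsafe {
            std::ptr::copy_nonoverlapping(
                (&*command as *const C).cast::<MaybeUninit<u8>>(),
                self.bytes.as_mut_ptr().add(offset),
                size,
            );
            self.bytes.set_len(offset + size);
        }
        self.metas.push(CommandMeta { offset, apply: apply_erased::<C> });
    }

    // Runs every queued command in order. This sketch leaks commands that
    // are never applied; a real implementation would handle that case.
    fn apply(&mut self, world: &mut ToyWorld) {
        let metas = std::mem::take(&mut self.metas);
        let base = self.bytes.as_mut_ptr();
        for meta in metas {
            // SAFETY: each offset was recorded by `push` for a matching `C`
            // and is consumed exactly once because `metas` was taken.
            unsafe { (meta.apply)(base.add(meta.offset), world) };
        }
        self.bytes.clear();
    }
}

struct Push(i32);
impl Command for Push {
    fn write(self, world: &mut ToyWorld) {
        world.push(self.0);
    }
}

struct PushMany(Vec<i32>);
impl Command for PushMany {
    fn write(self, world: &mut ToyWorld) {
        world.extend(self.0);
    }
}
```

Queuing `Push(1)`, `PushMany(vec![2, 3])` and `Push(4)` and then calling `apply` leaves the toy world as `[1, 2, 3, 4]`, with the queue itself performing at most a few buffer reallocations instead of one heap allocation per command.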

# Objective

- Currently the `Commands` and `CommandQueue` have no performance testing.

- As `Commands` are quite expensive due to the `Box<dyn Command>` allocated for each command, there should be perf tests for implementations that attempt to improve the performance.

## Solution

- Add some benchmarking for `Commands` and `CommandQueue`.

# Bevy ECS V2

This is a rewrite of Bevy ECS (basically everything but the new executor/schedule, which are already awesome). The overall goal was to improve the performance and versatility of Bevy ECS. Here is a quick bulleted list of changes before we dive into the details:

* Complete World rewrite

* Multiple component storage types:

  * Tables: fast cache friendly iteration, slower add/removes (previously called Archetypes)

  * Sparse Sets: fast add/remove, slower iteration

* Stateful Queries (caches query results for faster iteration. fragmented iteration is _fast_ now)

* Stateful System Params (caches expensive operations. inspired by @DJMcNab's work in #1364)

* Configurable System Params (users can set configuration when they construct their systems. once again inspired by @DJMcNab's work)

* Archetypes are now "just metadata", component storage is separate

* Archetype Graph (for faster archetype changes)

* Component Metadata

  * Configure component storage type

  * Retrieve information about component size/type/name/layout/send-ness/etc

  * Components are uniquely identified by a densely packed ComponentId

  * TypeIds are now totally optional (which should make implementing scripting easier)

* Super fast "for_each" query iterators

* Merged Resources into World. Resources are now just a special type of component

* EntityRef/EntityMut builder apis (more efficient and more ergonomic)

* Fast bitset-backed `Access<T>` replaces old hashmap-based approach everywhere

* Query conflicts are determined by component access instead of archetype component access (to avoid random failures at runtime)

  * With/Without are still taken into account for conflicts, so this should still be comfy to use

* Much simpler `IntoSystem` impl

* Significantly reduced the amount of hashing throughout the ecs in favor of Sparse Sets (indexed by densely packed ArchetypeId, ComponentId, BundleId, and TableId)

* Safety Improvements

  * Entity reservation uses a normal world reference instead of unsafe transmute

  * QuerySets no longer transmute lifetimes

  * Made traits "unsafe" where relevant

  * More thorough safety docs

* WorldCell

  * Exposes safe mutable access to multiple resources at a time in a World

* Replaced "catch all" `System::update_archetypes(world: &World)` with `System::new_archetype(archetype: &Archetype)`

* Simpler Bundle implementation

* Replaced slow "remove_bundle_one_by_one" used as fallback for Commands::remove_bundle with fast "remove_bundle_intersection"

* Removed `Mut<T>` query impl. it is better to only support one way: `&mut T`

* Removed with() from `Flags<T>` in favor of `Option<Flags<T>>`, which allows querying for flags to be "filtered" by default

* Components now have is_send property (currently only resources support non-send)

* More granular module organization

* New `RemovedComponents<T>` SystemParam that replaces `query.removed::<T>()`

* `world.resource_scope()` for mutable access to resources and world at the same time

* WorldQuery and QueryFilter traits unified. FilterFetch trait added to enable "short circuit" filtering. Auto impled for cases that don't need it

* Significantly slimmed down SystemState in favor of individual SystemParam state

* System Commands changed from `commands: &mut Commands` back to `mut commands: Commands` (to allow Commands to have a World reference)

Fixes #1320

## `World` Rewrite