# Objective

Fixes https://github.com/bevyengine/bevy/issues/3499

## Solution

Uses a `HashMap` from `RenderTarget` to sampled textures when preparing `ViewTarget`s to ensure that two passes with the same render target get sampled to the same texture.
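A minimal sketch of the idea, assuming hypothetical `RenderTarget` and `TextureId` stand-ins rather than the real Bevy types: the map is consulted first, so a second pass that names the same target gets the texture that was already allocated.

```rust
use std::collections::HashMap;

// Hypothetical stand-ins for illustration; these are not Bevy's real types.
#[derive(Clone, Copy, PartialEq, Eq, Hash, Debug)]
enum RenderTarget {
    Window(u32),
    Image(u32),
}

#[derive(Clone, Copy, PartialEq, Eq, Debug)]
struct TextureId(usize);

/// Hands out exactly one sampled texture per distinct render target.
#[derive(Default)]
struct SampledTextures {
    by_target: HashMap<RenderTarget, TextureId>,
    allocated: usize,
}

impl SampledTextures {
    fn get_or_create(&mut self, target: RenderTarget) -> TextureId {
        let allocated = &mut self.allocated;
        // Only allocate when this target has not been seen before.
        *self.by_target.entry(target).or_insert_with(|| {
            let id = TextureId(*allocated);
            *allocated += 1;
            id
        })
    }
}

fn main() {
    let mut textures = SampledTextures::default();
    let first_pass = textures.get_or_create(RenderTarget::Window(0));
    let second_pass = textures.get_or_create(RenderTarget::Window(0));
    let other = textures.get_or_create(RenderTarget::Image(7));
    assert_eq!(first_pass, second_pass);
    assert_ne!(first_pass, other);
    println!("{:?} {:?} {:?}", first_pass, second_pass, other);
}
```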

This builds on and depends on https://github.com/bevyengine/bevy/pull/3412, so this will be a draft PR until #3412 is merged. All changes for this PR are in the last commit.

# Objective

- Several examples are useful for qualitative tests of Bevy's performance

- By contrast, these are less useful as learning material: they are often relatively complex, have large amounts of setup, and are performance-optimized.

## Solution

- Move bevymark, many_sprites and many_cubes into the new stress_tests example folder

- Move contributors into the games folder: unlike the remaining examples in the 2d folder, it is not focused on demonstrating a clear feature.

# Objective

- Make use of storage buffers, where they are available, for clustered forward bindings to support far more point lights in a scene

- Fixes #3605

- Based on top of #4079

This branch on an M1 Max can keep 60fps with about 2150 point lights of radius 1m in the Sponza scene where I've been testing. The bottleneck is mostly assigning lights to clusters which grows faster than linearly (I think 1000 lights was about 1.5ms and 5000 was 7.5ms). I have seen papers and presentations leveraging compute shaders that can get this up to over 1 million. That said, I think any further optimisations should probably be done in a separate PR.

## Solution

- Add `RenderDevice` to the `Material` and `SpecializedMaterial` trait `::key()` functions to allow setting flags on the keys depending on feature/limit availability

- Make `GpuPointLights` and `ViewClusterBuffers` into enums containing `UniformVec` and `StorageBuffer` variants. Implement the necessary API on them to make usage the same for both cases, and the only difference is at initialisation time.

- Add appropriate shader defs in the shader code to handle the two cases

## Context on some decisions / open questions

- I'm using `max_storage_buffers_per_shader_stage >= 3` as a check to see if storage buffers are supported. I was thinking about diving into 'binding resource management' but it feels like we don't have enough use cases to understand the problem yet, and it is mostly a separate concern to this PR, so I think it should be handled separately.

- Should `ViewClusterBuffers` and `ViewClusterBindings` be merged, duplicating the count variables into the enum variants?
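The enum approach and the storage-buffer check described above can be sketched roughly as follows. This is a simplified model, not the PR's code: plain `Vec`s stand in for GPU buffers, and `LightBuffer` and `MAX_UNIFORM_LIGHTS` are hypothetical names chosen for illustration.

```rust
// Hypothetical model: plain Vecs stand in for the GPU-side buffers.
const MAX_UNIFORM_LIGHTS: usize = 256; // assumed cap, for illustration only

#[derive(Debug)]
enum LightBuffer {
    /// Fixed-capacity fallback for devices without storage buffers.
    Uniform(Vec<f32>),
    /// Growable buffer used when storage buffers are available.
    Storage(Vec<f32>),
}

impl LightBuffer {
    /// The variant is chosen once, at initialisation time.
    fn new(max_storage_buffers_per_shader_stage: u32) -> Self {
        if max_storage_buffers_per_shader_stage >= 3 {
            LightBuffer::Storage(Vec::new())
        } else {
            LightBuffer::Uniform(Vec::with_capacity(MAX_UNIFORM_LIGHTS))
        }
    }

    /// Same call for both variants; returns false if the light had to be dropped.
    fn push(&mut self, radius: f32) -> bool {
        match self {
            LightBuffer::Uniform(v) if v.len() >= MAX_UNIFORM_LIGHTS => false,
            LightBuffer::Uniform(v) | LightBuffer::Storage(v) => {
                v.push(radius);
                true
            }
        }
    }

    fn len(&self) -> usize {
        match self {
            LightBuffer::Uniform(v) | LightBuffer::Storage(v) => v.len(),
        }
    }
}

fn main() {
    let mut fallback = LightBuffer::new(0);
    let mut storage = LightBuffer::new(8);
    for _ in 0..300 {
        fallback.push(1.0);
        storage.push(1.0);
    }
    println!("uniform: {}, storage: {}", fallback.len(), storage.len());
}
```

Callers only see `push` and `len`, so the difference between the two cases stays confined to `new`.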

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

Load skeletal weights and indices from GLTF files. Animate meshes.

## Solution

- Load skeletal weights and indices from GLTF files.

- Added `SkinnedMesh` component and `SkinnedMeshInverseBindPose` asset

- Added `extract_skinned_meshes` to extract joint matrices.

- Added queue phase systems for enqueuing the buffer writes.

Some notes:

- This ports part of #2359 to the current main.

- This generates new `BufferVec`s and bind groups every frame. The expectation here is that the number of `Query::get` calls during extract is probably going to be the stronger bottleneck, with up to 256 calls per skinned mesh. Until that is optimized, caching buffers and bind groups is probably a non-concern.

- Unfortunately, due to the uniform size requirements, this means a 16KB buffer is allocated for every skinned mesh every frame. There's probably a few ways to get around this, but most of them require either compute shaders or storage buffers, which are both incompatible with WebGL2.

Co-authored-by: james7132 <contact@jamessliu.com>

Co-authored-by: François <mockersf@gmail.com>

Co-authored-by: James Liu <contact@jamessliu.com>

## Objective

There recently was a discussion on Discord about a possible test case for stress-testing transform hierarchies.

## Solution

Create a test case for stress testing transform propagation.

*Edit:* I have scrapped my previous example and built something more functional and less focused on visuals.

There are three test setups:

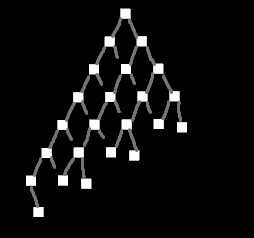

- `TestCase::Tree` recursively creates a tree with a specified depth and branch width

- `TestCase::NonUniformTree` is the same as `Tree` but omits nodes in a way that makes the tree "lean" towards one side

- `TestCase::Humanoids` creates one or more separate hierarchies based on the structure of common humanoid rigs

  - this can insert both `active` and `inactive` instances of the human rig

It's possible to parameterize which parts of the hierarchy get updated (transform change) and which remain unchanged. This is based on @james7132's suggestion:

There's a probability to decide which entities should remain static. On top of that these changes can be limited to a certain range in the hierarchy (min_depth..max_depth).
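As a rough sketch of that selection rule: `UpdateFilter` and the small `Lcg` generator below are hypothetical helpers, and the sketch assumes `probability` is the chance that an entity inside the depth range gets updated (the inverse reading, a chance of staying static, would simply flip the comparison).

```rust
use std::ops::Range;

/// Tiny deterministic generator (an LCG) so the sketch needs no external crates.
struct Lcg(u64);

impl Lcg {
    /// Returns a value in [0, 1).
    fn next_f32(&mut self) -> f32 {
        self.0 = self
            .0
            .wrapping_mul(6364136223846793005)
            .wrapping_add(1442695040888963407);
        // Keep the top 24 bits, which an f32 can represent exactly.
        ((self.0 >> 40) as f32) / ((1u64 << 24) as f32)
    }
}

/// Hypothetical filter: which entities have their transform changed each frame.
struct UpdateFilter {
    /// Only depths in `min_depth..max_depth` are eligible for updates.
    depth: Range<u32>,
    /// Assumed meaning: chance that an eligible entity is updated.
    probability: f32,
}

impl UpdateFilter {
    fn is_updated(&self, depth: u32, rng: &mut Lcg) -> bool {
        self.depth.contains(&depth) && rng.next_f32() < self.probability
    }
}

fn main() {
    let filter = UpdateFilter { depth: 2..5, probability: 0.5 };
    let mut rng = Lcg(42);
    for depth in 0..6 {
        let updated = (0..1000).filter(|_| filter.is_updated(depth, &mut rng)).count();
        println!("depth {}: {} of 1000 entities updated", depth, updated);
    }
}
```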

# Objective

- Allow quick and easy testing of scenes

## Solution

- Add a `scene-viewer` tool based on `load_gltf`.

- Run it with e.g. `cargo run --release --example scene_viewer --features jpeg -- ../some/path/assets/models/Sponza/glTF/Sponza.gltf#Scene0`

- Configure the asset path as pointing to the repo root for convenience (paths specified relative to current working directory)

- Copy over the camera controller from the `shadow_biases` example

- Support toggling the light animation

- Support toggling shadows

- Support adjusting the directional light shadow projection (cascaded shadow maps will remove the need for this later)

I don't want to do too much on it up-front. Rather we can add features over time as we need them.

# Add Transform Examples

- Adding examples for moving/rotating entities (with its own section) to resolve #2400

I've stumbled upon this project and been fiddling around a little. Saw the issue and thought I might just add some examples for the proposed transformations.

Would you mind checking whether I got the gist right and suggesting anything I can improve?

# Objective

- Reduce power usage for games when not focused.

- Reduce power usage to ~0 when a desktop application is minimized (opt-in).

- Reduce power usage when focused by only updating on a `winit` event or when the user sends a redraw request (opt-in).

https://user-images.githubusercontent.com/2632925/156904387-ec47d7de-7f06-4c6f-8aaf-1e952c1153a2.mp4

Note resource usage in the Task Manager in the above video.

## Solution

- Added a type `UpdateMode` that allows users to specify how the winit event loop is updated, without exposing winit types.

- Added two fields to `WinitConfig`, both with the `UpdateMode` type. One configures how the application updates when focused, and the other configures how the application behaves when it is not focused. Users can modify this resource manually to set the type of event loop control flow they want.

- For convenience, two functions were added to `WinitConfig`, that provide reasonable presets: `game()` (default) and `desktop_app()`.

- The `game()` preset, which is used by default, is unchanged from current behavior with one exception: when the app is out of focus the app updates at a minimum of 10fps, or every time a winit event is received. This has a huge positive impact on power use and responsiveness on my machine, which will otherwise continue running the app at many hundreds of fps when out of focus or minimized.

- The `desktop_app()` preset is fully reactive, only updating when user input (winit event) is supplied or a `RequestRedraw` event is sent. When the app is out of focus, it only updates on `Window` events - i.e. any winit event that directly interacts with the window. What this means in practice is that the app uses *zero* resources when minimized or not interacted with, but still updates fluidly when the app is out of focus and the user mouses over the application.

- Added a `RequestRedraw` event so users can force an update even if there are no events. This is useful in an application when you want to, say, run an animation even when the user isn't providing input.

- Added an example `low_power` to demonstrate these changes
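To make the two presets concrete, here is a simplified, self-contained model of the decision they describe. It is not the PR's implementation: the variant names, the `max_wait` field, the `Event` enum, and `should_update` are all assumptions made for illustration, and `Config` merely stands in for `WinitConfig`.

```rust
use std::time::Duration;

/// Hypothetical model of an update mode; names and fields are assumptions.
#[derive(Debug, Clone, Copy, PartialEq)]
enum UpdateMode {
    /// Update every iteration of the event loop.
    Continuous,
    /// Update on any event, a redraw request, or after `max_wait`.
    Reactive { max_wait: Duration },
    /// Update only on window events, a redraw request, or after `max_wait`.
    ReactiveLowPower { max_wait: Duration },
}

/// Coarse stand-in for winit events: ones that touch the window, and the rest.
#[derive(Debug, Clone, Copy)]
enum Event {
    Window,
    Device,
}

/// Stand-in for `WinitConfig`: one mode while focused, one while unfocused.
#[derive(Debug, Clone, Copy)]
struct Config {
    focused: UpdateMode,
    unfocused: UpdateMode,
}

impl Config {
    fn game() -> Self {
        Config {
            focused: UpdateMode::Continuous,
            // At least 10 updates per second when out of focus.
            unfocused: UpdateMode::Reactive { max_wait: Duration::from_millis(100) },
        }
    }

    fn desktop_app() -> Self {
        Config {
            focused: UpdateMode::Reactive { max_wait: Duration::MAX },
            unfocused: UpdateMode::ReactiveLowPower { max_wait: Duration::MAX },
        }
    }

    /// "Focused" means at least one window is focused.
    fn mode(&self, any_window_focused: bool) -> UpdateMode {
        if any_window_focused { self.focused } else { self.unfocused }
    }
}

fn should_update(
    mode: UpdateMode,
    event: Option<Event>,
    redraw_requested: bool,
    since_last_update: Duration,
) -> bool {
    match mode {
        UpdateMode::Continuous => true,
        UpdateMode::Reactive { max_wait } => {
            event.is_some() || redraw_requested || since_last_update >= max_wait
        }
        UpdateMode::ReactiveLowPower { max_wait } => {
            matches!(event, Some(Event::Window))
                || redraw_requested
                || since_last_update >= max_wait
        }
    }
}

fn main() {
    let app = Config::desktop_app();
    // Unfocused desktop app: mouse motion alone does not wake it, a window event does.
    println!("{}", should_update(app.mode(false), Some(Event::Device), false, Duration::ZERO));
    println!("{}", should_update(app.mode(false), Some(Event::Window), false, Duration::ZERO));
}
```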

## Usage

Configuring the event loop:

```rs
use bevy::winit::WinitConfig;
// ...
.insert_resource(WinitConfig::desktop_app()) // preset
// or
.insert_resource(WinitConfig::game()) // preset
// or
.insert_resource(WinitConfig { .. }) // manual
```

Requesting a redraw:

```rs
use bevy::window::RequestRedraw;
// ...
fn request_redraw(mut event: EventWriter<RequestRedraw>) {
    event.send(RequestRedraw);
}
```

## Other details

- Because we have a single event loop for multiple windows, every time I've mentioned "focused" above, I more precisely mean, "if at least one bevy window is focused".

- Due to a platform bug in winit (https://github.com/rust-windowing/winit/issues/1619), we can't simply use `Window::request_redraw()`. As a workaround, this PR will temporarily set the window mode to `Poll` when a redraw is requested. This is then reset to the user's `WinitConfig` setting on the next frame.

# Objective

- Add ways to control how audio is played

## Solution

- playing a sound will return a (weak) handle to an asset that can be used to control playback

- if the asset is dropped, it will detach the sink (same behaviour as now)

# Objective

Will fix #3377 and #3254

## Solution

Use an enum to represent either a `WindowId` or `Handle<Image>` in place of `Camera::window`.

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

- Closes #786

- Closes #2252

- Closes #2588

This PR implements a derive macro that allows users to define their queries as structs with named fields.

## Example

```rust
#[derive(WorldQuery)]
#[world_query(derive(Debug))]
struct NumQuery<'w, T: Component, P: Component> {
    entity: Entity,
    u: UNumQuery<'w>,
    generic: GenericQuery<'w, T, P>,
}

#[derive(WorldQuery)]
#[world_query(derive(Debug))]
struct UNumQuery<'w> {
    u_16: &'w u16,
    u_32_opt: Option<&'w u32>,
}

#[derive(WorldQuery)]
#[world_query(derive(Debug))]
struct GenericQuery<'w, T: Component, P: Component> {
    generic: (&'w T, &'w P),
}

#[derive(WorldQuery)]
#[world_query(filter)]
struct NumQueryFilter<T: Component, P: Component> {
    _u_16: With<u16>,
    _u_32: With<u32>,
    _or: Or<(With<i16>, Changed<u16>, Added<u32>)>,
    _generic_tuple: (With<T>, With<P>),
    _without: Without<Option<u16>>,
    _tp: PhantomData<(T, P)>,
}

fn print_nums_readonly(query: Query<NumQuery<u64, i64>, NumQueryFilter<u64, i64>>) {
    for num in query.iter() {
        println!("{:#?}", num);
    }
}

#[derive(WorldQuery)]
#[world_query(mutable, derive(Debug))]
struct MutNumQuery<'w, T: Component, P: Component> {
    i_16: &'w mut i16,
    i_32_opt: Option<&'w mut i32>,
}

fn print_nums(mut query: Query<MutNumQuery, NumQueryFilter<u64, i64>>) {
    for num in query.iter_mut() {
        println!("{:#?}", num);
    }
}
```

## TODOs:

- [x] Add support for `&T` and `&mut T`

  - [x] Test

- [x] Add support for optional types

  - [x] Test

- [x] Add support for `Entity`

  - [x] Test

- [x] Add support for nested `WorldQuery`

  - [x] Test

- [x] Add support for tuples

  - [x] Test

- [x] Add support for generics

  - [x] Test

- [x] Add support for query filters

  - [x] Test

- [x] Add support for `PhantomData`

  - [x] Test

- [x] Refactor `read_world_query_field_type_info`

- [x] Properly document `readonly` attribute for nested queries and the static assertions that guarantee safety

- [x] Test that we never implement `ReadOnlyFetch` for types that need mutable access

- [x] Test that we insert static assertions for nested `WorldQuery` that a user marked as readonly

This PR makes a number of changes to how meshes and vertex attributes are handled, with the goal of enabling easy and flexible custom vertex attributes:

* Reworks the `Mesh` type to use the newly added `VertexAttribute` internally

  * `VertexAttribute` defines the name, a unique `VertexAttributeId`, and a `VertexFormat`

  * `VertexAttributeId` is used to produce consistent sort orders for vertex buffer generation, replacing the more expensive and often surprising "name based sorting"

* Meshes can be used to generate a `MeshVertexBufferLayout`, which defines the layout of the gpu buffer produced by the mesh. `MeshVertexBufferLayouts` can then be used to generate actual `VertexBufferLayouts` according to the requirements of a specific pipeline. This decoupling of "mesh layout" vs "pipeline vertex buffer layout" is what enables custom attributes. We don't need to standardize _mesh layouts_ or contort meshes to meet the needs of a specific pipeline. As long as the mesh has what the pipeline needs, it will work transparently.

* Mesh-based pipelines now specialize on `&MeshVertexBufferLayout` via the new `SpecializedMeshPipeline` trait (which behaves like `SpecializedPipeline`, but adds `&MeshVertexBufferLayout`). The integrity of the pipeline cache is maintained because the `MeshVertexBufferLayout` is treated as part of the key (which is fully abstracted from implementers of the trait ... no need to add any additional info to the specialization key).

* Hashing `MeshVertexBufferLayout` is too expensive to do for every entity, every frame. To make this scalable, I added a generalized "pre-hashing" solution to `bevy_utils`: `Hashed<T>` keys and `PreHashMap<K, V>` (which uses `Hashed<T>` internally). Why didn't I just do the quick and dirty in-place "pre-compute hash and use that u64 as a key in a hashmap" that we've done in the past? Because it's wrong! Hashes by themselves aren't enough because two different values can produce the same hash. Re-hashing a hash is even worse! I decided to build a generalized solution because this pattern has come up in the past and we've chosen to do the wrong thing. Now we can do the right thing! This did unfortunately require pulling in `hashbrown` and using that in `bevy_utils`, because avoiding re-hashes requires the `raw_entry_mut` api, which isn't stabilized yet (and may never be ... `entry_ref` has favor now, but also isn't available yet). If std's HashMap ever provides the tools we need, we can move back to that. Note that adding `hashbrown` doesn't increase our dependency count because it was already in our tree. I will probably break these changes out into their own PR.

* Specializing on `MeshVertexBufferLayout` has one non-obvious behavior: it can produce identical pipelines for two different MeshVertexBufferLayouts. To optimize the number of active pipelines / reduce re-binds while drawing, I de-duplicate pipelines post-specialization using the final `VertexBufferLayout` as the key. For example, consider a pipeline that needs the layout `(position, normal)` and is specialized using two meshes: `(position, normal, uv)` and `(position, normal, other_vec2)`. If both of these meshes result in `(position, normal)` specializations, we can use the same pipeline! Now we do. Cool!
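The pre-hashing point above (a stored hash alone is not a safe key) can be illustrated with a small std-only sketch. It is not the `bevy_utils` code: instead of hashbrown's `raw_entry_mut`, it swaps in a pass-through hasher on `std::collections::HashMap`, and the `PassThrough` type and the field layout of `Hashed<T>` are assumptions.

```rust
use std::collections::hash_map::DefaultHasher;
use std::collections::HashMap;
use std::hash::{BuildHasherDefault, Hash, Hasher};

/// A value paired with its precomputed hash. Equality still compares the value,
/// so two different values with colliding hashes remain distinct keys.
#[derive(Debug, Clone, PartialEq, Eq)]
struct Hashed<T> {
    hash: u64,
    value: T,
}

impl<T: Hash> Hashed<T> {
    /// Pays the full hashing cost once, up front.
    fn new(value: T) -> Self {
        let mut hasher = DefaultHasher::new();
        value.hash(&mut hasher);
        Hashed { hash: hasher.finish(), value }
    }
}

impl<T> Hash for Hashed<T> {
    /// Feeds only the stored u64 to the hasher; the value is never re-hashed.
    fn hash<H: Hasher>(&self, state: &mut H) {
        state.write_u64(self.hash);
    }
}

/// Hasher that returns the u64 it was handed, so the hash is not hashed again.
#[derive(Default)]
struct PassThrough(u64);

impl Hasher for PassThrough {
    fn write(&mut self, _bytes: &[u8]) {
        unreachable!("Hashed<T> only ever calls write_u64");
    }
    fn write_u64(&mut self, n: u64) {
        self.0 = n;
    }
    fn finish(&self) -> u64 {
        self.0
    }
}

type PreHashMap<K, V> = HashMap<Hashed<K>, V, BuildHasherDefault<PassThrough>>;

fn main() {
    let mut map: PreHashMap<&str, u32> = PreHashMap::default();
    // Force a collision by hand: same hash, different values.
    let a = Hashed { hash: 1, value: "a" };
    let b = Hashed { hash: 1, value: "b" };
    map.insert(a.clone(), 10);
    map.insert(b.clone(), 20);
    // Both entries survive because equality looks at the value, not just the hash.
    println!("{} entries: a={}, b={}", map.len(), map[&a], map[&b]);

    let key = Hashed::new("layout");
    map.insert(key.clone(), 30);
    println!("layout={}", map[&key]);
}
```

A map keyed on the bare `u64` would have silently overwritten the first entry in the forced-collision case.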

To briefly illustrate, this is what the relevant section of `MeshPipeline`'s specialization code looks like now:

```rust
impl SpecializedMeshPipeline for MeshPipeline {
    type Key = MeshPipelineKey;

    fn specialize(
        &self,
        key: Self::Key,
        layout: &MeshVertexBufferLayout,
    ) -> RenderPipelineDescriptor {
        let mut vertex_attributes = vec![
            Mesh::ATTRIBUTE_POSITION.at_shader_location(0),
            Mesh::ATTRIBUTE_NORMAL.at_shader_location(1),
            Mesh::ATTRIBUTE_UV_0.at_shader_location(2),
        ];

        let mut shader_defs = Vec::new();
        if layout.contains(Mesh::ATTRIBUTE_TANGENT) {
            shader_defs.push(String::from("VERTEX_TANGENTS"));
            vertex_attributes.push(Mesh::ATTRIBUTE_TANGENT.at_shader_location(3));
        }

        let vertex_buffer_layout = layout
            .get_layout(&vertex_attributes)
            .expect("Mesh is missing a vertex attribute");
        // ...
```

Notice that this is _much_ simpler than it was before. And now any mesh with any layout can be used with this pipeline, provided it has vertex positions, normals, and uvs. We even got to remove `HAS_TANGENTS` from MeshPipelineKey and `has_tangents` from `GpuMesh`, because that information is redundant with `MeshVertexBufferLayout`.
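To illustrate the "mesh layout" versus "pipeline vertex buffer layout" split, and why two different meshes can end up sharing one pipeline, here is a simplified model. The types below (`MeshLayout`, `MeshAttribute`, `AttributeId`, and this `VertexBufferLayout`) are hypothetical stand-ins with an assumed interleaved layout, not the real Bevy or wgpu types.

```rust
use std::collections::HashSet;

#[derive(Debug, Clone, Copy, PartialEq, Eq, Hash)]
struct AttributeId(u32);

const POSITION: AttributeId = AttributeId(0);
const NORMAL: AttributeId = AttributeId(1);
const UV: AttributeId = AttributeId(2);
const OTHER_VEC2: AttributeId = AttributeId(3);

#[derive(Debug, Clone, Copy)]
struct MeshAttribute {
    id: AttributeId,
    size: u64, // bytes per vertex
}

/// What a pipeline actually binds: a stride plus (offset, shader_location) pairs.
#[derive(Debug, Clone, PartialEq, Eq, Hash)]
struct VertexBufferLayout {
    stride: u64,
    attributes: Vec<(u64, u32)>,
}

/// Everything the mesh stores, in interleaved buffer order.
struct MeshLayout {
    attributes: Vec<MeshAttribute>,
}

impl MeshLayout {
    /// Picks out only the attributes a pipeline asks for; None if one is missing.
    fn get_layout(&self, wanted: &[(AttributeId, u32)]) -> Option<VertexBufferLayout> {
        let stride: u64 = self.attributes.iter().map(|a| a.size).sum();
        let mut attributes = Vec::with_capacity(wanted.len());
        for &(id, shader_location) in wanted {
            let mut offset = 0u64;
            let mut found = false;
            for attribute in &self.attributes {
                if attribute.id == id {
                    found = true;
                    break;
                }
                offset += attribute.size;
            }
            if !found {
                return None;
            }
            attributes.push((offset, shader_location));
        }
        Some(VertexBufferLayout { stride, attributes })
    }
}

fn mesh(ids: &[(AttributeId, u64)]) -> MeshLayout {
    MeshLayout {
        attributes: ids.iter().map(|&(id, size)| MeshAttribute { id, size }).collect(),
    }
}

fn main() {
    let with_uv = mesh(&[(POSITION, 12), (NORMAL, 12), (UV, 8)]);
    let with_other = mesh(&[(POSITION, 12), (NORMAL, 12), (OTHER_VEC2, 8)]);
    let wanted = [(POSITION, 0), (NORMAL, 1)];

    // De-duplicate on the final layout: both meshes collapse to one entry.
    let mut unique = HashSet::new();
    unique.insert(with_uv.get_layout(&wanted).unwrap());
    unique.insert(with_other.get_layout(&wanted).unwrap());
    println!("distinct pipeline layouts: {}", unique.len());
}
```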

This is still a draft because I still need to:

* Add more docs

* Experiment with adding error handling to mesh pipeline specialization (which would print errors at runtime when a mesh is missing a vertex attribute required by a pipeline). If it doesn't tank perf, we'll keep it.

* Consider breaking out the PreHash / hashbrown changes into a separate PR.

* Add an example illustrating this change

* Verify that the "mesh-specialized pipeline de-duplication code" works properly

Please don't yell at me for not doing these things yet :) Just trying to get this in people's hands asap.

Alternative to #3120

Fixes #3030

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

My attempt at fixing #2142. My very first attempt at contributing to Bevy so more than open to any feedback.

I borrowed heavily from the [Bevy Cheatbook page](https://bevy-cheatbook.github.io/patterns/generic-systems.html?highlight=generic#generic-systems).

## Solution

Fairly straightforward example using a clean up system to delete entities that are coupled with app state after exiting that state.

Co-authored-by: B-Janson <brandon@canva.com>

Add two examples on how to communicate with a task that is running either in another thread or in a thread from `AsyncComputeTaskPool`.

Loosely based on https://github.com/bevyengine/bevy/discussions/1150
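A std-only sketch of the channel pattern this kind of example relies on. It uses `std::thread` and `std::sync::mpsc` directly rather than `AsyncComputeTaskPool`, and `spawn_worker` is a hypothetical helper:

```rust
use std::sync::mpsc;
use std::thread;

/// Spawns a worker thread and returns the receiving end of its result channel.
fn spawn_worker(inputs: Vec<u32>) -> mpsc::Receiver<u32> {
    let (sender, receiver) = mpsc::channel();
    thread::spawn(move || {
        for n in inputs {
            // `send` only fails if the receiver was dropped; stop working then.
            if sender.send(n * n).is_err() {
                break;
            }
        }
        // Dropping `sender` here closes the channel.
    });
    receiver
}

fn main() {
    let receiver = spawn_worker(vec![1, 2, 3]);
    // A per-frame system would poll with `try_recv()` so it never blocks;
    // this sketch simply drains the channel until the worker finishes.
    let results: Vec<u32> = receiver.iter().collect();
    println!("{:?}", results);
}
```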

## Objective

There is no Bevy example whose sole purpose is to show how to transform a sprite. This PR adds an example of how to use transform.translate to move a sprite up and down. The last pull request had issues that I couldn't fix, so I created a new one.

### Solution

I created the `move_sprite` example.

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

Some new bevy users are unfamiliar with quaternions and have trouble working with rotations in 2D.

There has been an [issue](https://github.com/bitshifter/glam-rs/issues/226) raised with glam to add helpers to better support these users. For now, however, I feel it would be better to provide examples of how to do this in Bevy as a starting point for new users.

## Solution

I've added a 2d_rotation example which demonstrates 3 different rotation examples to try to help get people started:

- Rotating and translating a player ship based on keyboard input

- An enemy ship type that rotates to face the player ship immediately

- An enemy ship type that rotates to face the player at a fixed angular velocity
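The last case, turning toward a target at a limited angular velocity, can be sketched with plain angles instead of quaternions. This is an illustration of the math only, not the example's code; `wrap_angle` and `rotate_towards` are hypothetical helpers and headings are in radians.

```rust
use std::f32::consts::PI;

/// Wraps an angle into the range (-PI, PI].
fn wrap_angle(angle: f32) -> f32 {
    let mut a = angle % (2.0 * PI);
    if a > PI {
        a -= 2.0 * PI;
    } else if a <= -PI {
        a += 2.0 * PI;
    }
    a
}

/// Returns the new heading after turning toward `to` by at most `max_speed * dt`.
/// Assumes `max_speed` and `dt` are non-negative.
fn rotate_towards(heading: f32, from: (f32, f32), to: (f32, f32), max_speed: f32, dt: f32) -> f32 {
    // Heading that would point straight at the target.
    let desired = (to.1 - from.1).atan2(to.0 - from.0);
    // Signed shortest rotation from the current heading to the desired one.
    let diff = wrap_angle(desired - heading);
    let max_step = max_speed * dt;
    wrap_angle(heading + diff.clamp(-max_step, max_step))
}

fn main() {
    // Enemy at the origin facing +x, player straight above it.
    let mut heading = 0.0;
    for frame in 0..4 {
        heading = rotate_towards(heading, (0.0, 0.0), (0.0, 1.0), 1.0, 0.5);
        println!("frame {}: heading = {:.3} rad", frame, heading);
    }
}
```

Setting `max_speed` very high reproduces the "face the player immediately" variant, since the clamp then never limits the step.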

I also have a standalone version of this example here https://github.com/bitshifter/bevy-2d-rotation-example but I think it would be more discoverable if it's included with Bevy.

# Objective

- There are wasm-specific examples, which is misleading now that wasm works by default

- I saw a few people on discord trying to work through those examples that are very limited

## Solution

- Remove them and update the instructions

Adds an example using UI for something more related to a game than the current UI examples.

Example with a game menu:

* new game - will display settings for 5 seconds before returning to menu

* preferences - can modify the settings, with two sub menus

* quit - will quit the game

I wanted a more complex UI example before starting the UI rewrite to have ground for comparison

Co-authored-by: François <8672791+mockersf@users.noreply.github.com>

# Objective

In this PR I added the ability to opt out of graphical backends. Closes #3155.

## Solution

I turned backends into `Option` ~~and removed panicking sub app API to force users handle the error (was suggested by `@cart`)~~.

# Objective

The current 2d rendering is specialized to render sprites, we need a generic way to render 2d items, using meshes and materials like we have for 3d.

## Solution

I cloned a good part of `bevy_pbr` into `bevy_sprite/src/mesh2d`, removed lighting and pbr itself, adapted it to 2d rendering, added a `ColorMaterial`, and modified the sprite rendering to break batches around 2d meshes.

~~The PR is a bit crude; I tried to change as little as I could in both the parts copied from 3d and the current sprite rendering to make reviewing easier. In the future, I expect we could make the sprite rendering a normal 2d material, cleanly integrated with the rest.~~ _edit: see <https://github.com/bevyengine/bevy/pull/3460#issuecomment-1003605194>_

## Remaining work

- ~~don't require mesh normals~~ _out of scope_

- ~~add an example~~ _done_

- support 2d meshes & materials in the UI?

- bikeshed names (I didn't think hard about naming, please check if it's fine)

## Remaining questions

- ~~should we add a depth buffer to 2d now that there are 2d meshes?~~ _let's revisit that when we have an opaque render phase_

- ~~should we add MSAA support to the sprites, or remove it from the 2d meshes?~~ _I added MSAA to sprites since it's really needed for 2d meshes_

- ~~how to customize vertex attributes?~~ _#3120_

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

- Restore the multiple windows example, which was viciously murdered in #3175.

- cart asked me to

## Solution

- Rework the example to work on pipelined-rendering, based on the work from #2898

# Objective

Every time I come back to Bevy I face the same issue: how do I draw a rectangle again? How did that work? So I go to https://github.com/bevyengine/bevy/tree/main/examples in the hope of finding literally the simplest possible example that draws something on the screen without any dependency such as an image. I don't want to have to add some image first, I just quickly want to get something on the screen with `main.rs` alone so that I can continue building on from that point on. Such an example is particularly helpful for a quick start for smaller projects that don't even need any assets such as images (this is my case currently).

Currently every single example of https://github.com/bevyengine/bevy/tree/main/examples#2d-rendering (which is the first section after hello world that beginners will look for for very minimalistic and quick examples) depends on at least an asset or is too complex. This PR solves this.

It also serves as a great comparison for a beginner to realize what Bevy is really like and how different it is from what they may expect Bevy to be. For example for someone coming from [LÖVE](https://love2d.org/), they will have something like this in their head when they think of drawing a rectangle:

```lua
function love.draw()
    love.graphics.setColor(0.25, 0.25, 0.75);
    love.graphics.rectangle("fill", 0, 0, 50, 50);
end
```

This, of course, differs quite a lot from what you do in Bevy. I imagine there will be people that just want to see something as simple as this in comparison to have a better understanding for the amount of differences.

## Solution

Add a dead simple example drawing a blue 50x50 rectangle in the center with no more and no less than needed.

# Objective

This PR fixes a crash when winit is enabled when there is a camera in the world. Part of #3155

## Solution

In this PR, I removed two unwraps and added an example for regression testing.

This makes the [New Bevy Renderer](#2535) the default (and only) renderer. The new renderer isn't _quite_ ready for the final release yet, but I want as many people as possible to start testing it so we can identify bugs and address feedback prior to release.

The examples are all ported over and operational with a few exceptions:

* I removed a good portion of the examples in the `shader` folder. We still have some work to do in order to make these examples possible / ergonomic / worthwhile: #3120 and "high level shader material plugins" are the big ones. This is a temporary measure.

* Temporarily removed the multiple_windows example: doing this properly in the new renderer will require the upcoming "render targets" changes. Same goes for the render_to_texture example.

* Removed z_sort_debug: entity visibility sort info is no longer available in app logic. We could do this on the "render app" side, but I don't consider it a priority.

# Objective

Port bevy_ui to pipelined-rendering (see #2535 )

## Solution

I did some changes during the port:

- [X] separate color from the texture asset (as suggested [here](https://discord.com/channels/691052431525675048/743663924229963868/874353914525413406))

- [X] ~give the vertex shader a per-instance buffer instead of per-vertex buffer~ (incompatible with batching)

Remaining features to implement to reach parity with the old renderer:

- [x] textures

- [X] TextBundle

I'd also like to add these features, but they need some design discussion:

- [x] batching

- [ ] separate opaque and transparent phases

- [ ] multiple windows

- [ ] texture atlases

- [ ] (maybe) clipping

Apologies, I had to recreate this PR because of a branching issue.

Old PR: https://github.com/bevyengine/bevy/pull/3033

# Objective

Fixes #3032

Allow users to create a transparent window

## Solution

I've allowed the transparent bool to be passed to the winit window builder

# Objective

- Remove `cargo-lipo` as [it's deprecated](https://github.com/TimNN/cargo-lipo#maintenance-status) and doesn't work on new Apple processors

- Fix CI that will fail as soon as GitHub updates the worker used by Bevy to macOS 11

## Solution

- Replace `cargo-lipo` with building with the correct target

- Setup the correct path to libraries by using `xcrun --show-sdk-path`

- Also try and fix path to cmake in case it's not found but available through homebrew

Add an example that demonstrates the difference between no MSAA and MSAA 4x. This is also useful for testing panics when resizing the window using MSAA. This is on top of #3042 .

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

## New Features

This adds the following to the new renderer:

* **Shader Assets**

  * Shaders are assets again! Users no longer need to call `include_str!` for their shaders

  * Shader hot-reloading

* **Shader Defs / Shader Preprocessing**

  * Shaders now support `#ifdef NAME`, `#ifndef NAME`, and `#endif` preprocessor directives

* **Bevy RenderPipelineDescriptor and RenderPipelineCache**

  * Bevy now provides its own `RenderPipelineDescriptor` and the wgpu version is now exported as `RawRenderPipelineDescriptor`. This allows users to define pipelines with `Handle<Shader>` instead of needing to manually compile and reference `ShaderModules`, enables passing in shader defs to configure the shader preprocessor, makes hot reloading possible (because the descriptor can be owned and used to create new pipelines when a shader changes), and opens the doors to pipeline specialization.

  * The `RenderPipelineCache` now handles compiling and re-compiling Bevy RenderPipelineDescriptors. It has internal PipelineLayout and ShaderModule caches. Users receive a `CachedPipelineId`, which can be used to look up the actual `&RenderPipeline` during rendering.

* **Pipeline Specialization**

  * This enables defining per-entity-configurable pipelines that specialize on arbitrary custom keys. In practice this will involve specializing based on things like MSAA values, Shader Defs, Bind Group existence, and Vertex Layouts.

  * Adds a `SpecializedPipeline` trait and `SpecializedPipelines<MyPipeline>` resource. This is a simple layer that generates Bevy RenderPipelineDescriptors based on a custom key defined for the pipeline.

  * Specialized pipelines are also hot-reloadable.

  * This was the result of experimentation with two different approaches:

    1. **"generic immediate mode multi-key hash pipeline specialization"**

       * breaks up the pipeline into multiple "identities" (the core pipeline definition, shader defs, mesh layout, bind group layout). each of these identities has its own key. looking up / compiling a specific version of a pipeline requires composing all of these keys together

       * the benefit of this approach is that it works for all pipelines / the pipeline is fully identified by the keys. the multiple keys allow pre-hashing parts of the pipeline identity where possible (ex: pre compute the mesh identity for all meshes)

       * the downside is that any per-entity data that informs the values of these keys could require expensive re-hashes. computing each key for each sprite tanked bevymark performance (sprites don't actually need this level of specialization yet ... but things like pbr and future sprite scenarios might).

       * this is the approach rafx used last time i checked

    2. **"custom key specialization"**

       * Pipelines by default are not specialized

       * Pipelines that need specialization implement a SpecializedPipeline trait with a custom key associated type

       * This allows specialization keys to encode exactly the amount of information required (instead of needing to be a combined hash of the entire pipeline). Generally this should fit in a small number of bytes. Per-entity specialization barely registers anymore on things like bevymark. It also makes things like "shader defs" way cheaper to hash because we can use context specific bitflags instead of strings.

       * Despite the extra trait, it actually generally makes pipeline definitions + lookups simpler: managing multiple keys (and making the appropriate calls to manage these keys) was way more complicated.

  * I opted for custom key specialization. It performs better generally and in my opinion is better UX. Fortunately the way this is implemented also allows for custom caches as this all builds on a common abstraction: the RenderPipelineCache. The built in custom key trait is just a simple / pre-defined way to interact with the cache
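A rough, self-contained sketch of custom key specialization as described above. The trait and resource names mirror the ones mentioned in the text, but the signatures, the `PipelineDescriptor` fields, and the bitflag-style `MeshKey` are assumptions made for illustration, and a `Vec` stands in for the pipeline cache.

```rust
use std::collections::HashMap;
use std::hash::Hash;

/// Simplified stand-in for a pipeline descriptor.
#[derive(Debug, Clone, PartialEq)]
struct PipelineDescriptor {
    label: String,
    shader_defs: Vec<String>,
    sample_count: u32,
}

/// A pipeline that knows how to build a descriptor from a small custom key.
trait SpecializedPipeline {
    type Key: Clone + Eq + Hash;
    fn specialize(&self, key: Self::Key) -> PipelineDescriptor;
}

#[derive(Debug, Clone, Copy, PartialEq, Eq)]
struct CachedPipelineId(usize);

/// Remembers which key already produced which cached pipeline.
struct SpecializedPipelines<P: SpecializedPipeline> {
    ids: HashMap<P::Key, CachedPipelineId>,
    descriptors: Vec<PipelineDescriptor>, // stand-in for the pipeline cache
}

impl<P: SpecializedPipeline> SpecializedPipelines<P> {
    fn new() -> Self {
        Self { ids: HashMap::new(), descriptors: Vec::new() }
    }

    fn specialize(&mut self, pipeline: &P, key: P::Key) -> CachedPipelineId {
        let descriptors = &mut self.descriptors;
        // Hashing the small key is the only per-entity cost on a cache hit.
        *self.ids.entry(key.clone()).or_insert_with(|| {
            descriptors.push(pipeline.specialize(key));
            CachedPipelineId(descriptors.len() - 1)
        })
    }
}

/// Bitflag-style key: cheap to hash compared with a list of strings.
#[derive(Debug, Clone, Copy, PartialEq, Eq, Hash)]
struct MeshKey(u32);

impl MeshKey {
    const VERTEX_TANGENTS: u32 = 1 << 0;
    const MSAA_4X: u32 = 1 << 1;
}

struct MeshPipeline;

impl SpecializedPipeline for MeshPipeline {
    type Key = MeshKey;

    fn specialize(&self, key: MeshKey) -> PipelineDescriptor {
        let mut shader_defs = Vec::new();
        if (key.0 & MeshKey::VERTEX_TANGENTS) != 0 {
            shader_defs.push("VERTEX_TANGENTS".to_string());
        }
        let sample_count = if (key.0 & MeshKey::MSAA_4X) != 0 { 4 } else { 1 };
        PipelineDescriptor { label: "mesh".to_string(), shader_defs, sample_count }
    }
}

fn main() {
    let pipeline = MeshPipeline;
    let mut pipelines = SpecializedPipelines::<MeshPipeline>::new();
    let plain = pipelines.specialize(&pipeline, MeshKey(0));
    let msaa = pipelines.specialize(&pipeline, MeshKey(MeshKey::MSAA_4X));
    let plain_again = pipelines.specialize(&pipeline, MeshKey(0));
    println!("{:?} {:?} {:?}", plain, msaa, plain_again);
}
```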

## Callouts

* The SpecializedPipeline trait makes it easy to inherit pipeline configuration in custom pipelines. The changes to `custom_shader_pipelined` and the new `shader_defs_pipelined` example illustrate how much simpler it is to define custom pipelines based on the PbrPipeline.

* The shader preprocessor is currently pretty naive (it just uses regexes to process each line). Ultimately we might want to build a more custom parser for more performance + better error handling, but for now I'm happy to optimize for "easy to implement and understand".
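For a sense of what a line-by-line preprocessor of this kind involves, here is a small std-only sketch. It is not the PR's implementation: it matches directive prefixes with plain string operations instead of regexes, and the `preprocess` function and its error messages are hypothetical.

```rust
use std::collections::HashSet;

/// Keeps or drops lines based on `#ifdef` / `#ifndef` / `#endif` directives.
fn preprocess(source: &str, defs: &HashSet<&str>) -> Result<String, String> {
    // One flag per open block: is the code at this nesting level active?
    // The outermost scope is always active.
    let mut scopes = vec![true];
    let mut output = String::new();

    for line in source.lines() {
        let trimmed = line.trim();
        if let Some(name) = trimmed.strip_prefix("#ifdef") {
            let active = *scopes.last().unwrap() && defs.contains(name.trim());
            scopes.push(active);
        } else if let Some(name) = trimmed.strip_prefix("#ifndef") {
            let active = *scopes.last().unwrap() && !defs.contains(name.trim());
            scopes.push(active);
        } else if trimmed.starts_with("#endif") {
            if scopes.len() == 1 {
                return Err("#endif without a matching #ifdef/#ifndef".to_string());
            }
            scopes.pop();
        } else if *scopes.last().unwrap() {
            output.push_str(line);
            output.push('\n');
        }
    }

    if scopes.len() != 1 {
        return Err("missing #endif".to_string());
    }
    Ok(output)
}

fn main() {
    let shader = "a\n#ifdef FOO\nb\n#endif\n#ifndef FOO\nc\n#endif\nd";
    let with_foo: HashSet<&str> = ["FOO"].into_iter().collect();
    let without_foo: HashSet<&str> = HashSet::new();
    print!("{}", preprocess(shader, &with_foo).unwrap());
    println!("---");
    print!("{}", preprocess(shader, &without_foo).unwrap());
}
```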

## Next Steps

* Port compute pipelines to the new system

* Add more preprocessor directives (else, elif, import)

* More flexible vertex attribute specialization / enable cheaply specializing on specific mesh vertex layouts

This changes how render logic is composed to make it much more modular. Previously, all extraction logic was centralized for a given "type" of rendered thing. For example, we extracted meshes into a vector of ExtractedMesh, which contained the mesh and material asset handles, the transform, etc. We looked up bindings for "drawn things" using their index in the `Vec<ExtractedMesh>`. This worked fine for built in rendering, but made it hard to reuse logic for "custom" rendering. It also prevented us from reusing things like "extracted transforms" across contexts.

To make rendering more modular, I made a number of changes:

* Entities now drive rendering:

  * We extract "render components" from "app components" and store them _on_ entities. No more centralized uber lists! We now have true "ECS-driven rendering"

  * To make this perform well, I implemented #2673 in upstream Bevy for fast batch insertions into specific entities. This was merged into the `pipelined-rendering` branch here: #2815

* Reworked the `Draw` abstraction:

  * Generic `PhaseItems`: each draw phase can define its own type of "rendered thing", which can define its own "sort key"

  * Ported the 2d, 3d, and shadow phases to the new PhaseItem impl (currently Transparent2d, Transparent3d, and Shadow PhaseItems)

  * `Draw` trait and `DrawFunctions` are now generic on PhaseItem

  * Modular / Ergonomic `DrawFunctions` via `RenderCommands`

    * RenderCommand is a trait that runs an ECS query and produces one or more RenderPass calls. Types implementing this trait can be composed to create a final DrawFunction. For example the DrawPbr DrawFunction is created from the following DrawCommand tuple. Const generics are used to set specific bind group locations:

```rust
pub type DrawPbr = (
    SetPbrPipeline,
    SetMeshViewBindGroup<0>,
    SetStandardMaterialBindGroup<1>,
    SetTransformBindGroup<2>,
    DrawMesh,
);
```

* The new `custom_shader_pipelined` example illustrates how the commands above can be reused to create a custom draw function:

```rust
type DrawCustom = (
    SetCustomMaterialPipeline,
    SetMeshViewBindGroup<0>,
    SetTransformBindGroup<2>,
    DrawMesh,
);
```

* ExtractComponentPlugin and UniformComponentPlugin:

* Simple, standardized ways to easily extract individual components and write them to GPU buffers

* Ported PBR and Sprite rendering to the new primitives above.

* Removed staging buffer from UniformVec in favor of direct Queue usage

* Makes UniformVec much easier to use and more ergonomic. Completely removes the need for custom render graph nodes in these contexts (see the PbrNode and view Node removals and the much simpler call patterns in the relevant Prepare systems).

* Added a many_cubes_pipelined example to benchmark baseline 3d rendering performance and ensure there were no major regressions during this port. Avoiding regressions was challenging given that the old approach of extracting into centralized vectors is basically the "optimal" approach. However, thanks to various ECS optimizations and render logic rephrasing, we pretty much break even on this benchmark!

* Lifetimeless SystemParams: this will be a bit divisive, but as we continue to embrace "trait driven systems" (ex: ExtractComponentPlugin, UniformComponentPlugin, RenderCommand), the ergonomics of `(Query<'static, 'static, (&'static A, &'static B)>, Res<'static, C>)` were getting very hard to bear. As a compromise, I added "static type aliases" for the relevant SystemParams. The previous example can now be expressed like this: `(SQuery<(Read<A>, Read<B>)>, SRes<C>)`. If anyone has better ideas / conflicting opinions, please let me know!

* RunSystem trait: a way to define Systems via a trait with a SystemParam associated type. This is used to implement the various plugins mentioned above. I also added SystemParamItem and QueryItem type aliases to make "trait style" ECS interactions nicer on the eyes (and fingers).

* RenderAsset retrying: ensures that render assets are only created when they are "ready" and allows us to create bind groups directly inside render assets (which significantly simplified the StandardMaterial code). I think ultimately we should swap this out on "asset dependency" events to wait for dependencies to load, but this will require significant asset system changes.

* Updated some built in shaders to account for missing MeshUniform fields
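
The tuple-based composition of render commands described above can be sketched without any Bevy dependency. This is a simplified assumption of how such a design might look, not the actual trait: the real `RenderCommand` also runs an ECS query, which is left out here, and `RecordingPass`, `SetPipeline`, and `SetBindGroup` are hypothetical stand-ins.

```rust
/// Stand-in for a render pass: it only records the calls made on it.
#[derive(Default)]
struct RecordingPass {
    calls: Vec<String>,
}

/// Hypothetical, heavily simplified render command (no ECS query).
trait RenderCommand {
    fn render(pass: &mut RecordingPass);
}

struct SetPipeline;
impl RenderCommand for SetPipeline {
    fn render(pass: &mut RecordingPass) {
        pass.calls.push("set_pipeline".to_string());
    }
}

/// The const generic `I` selects the bind group slot at the type level.
struct SetBindGroup<const I: usize>;
impl<const I: usize> RenderCommand for SetBindGroup<I> {
    fn render(pass: &mut RecordingPass) {
        pass.calls.push(format!("set_bind_group({})", I));
    }
}

struct DrawMesh;
impl RenderCommand for DrawMesh {
    fn render(pass: &mut RecordingPass) {
        pass.calls.push("draw".to_string());
    }
}

// A tuple of commands is itself a command that runs each element in order.
macro_rules! impl_render_command_for_tuple {
    ($($name:ident),*) => {
        impl<$($name: RenderCommand),*> RenderCommand for ($($name,)*) {
            fn render(pass: &mut RecordingPass) {
                $($name::render(pass);)*
            }
        }
    };
}
impl_render_command_for_tuple!(A, B, C);
impl_render_command_for_tuple!(A, B, C, D);

/// Reuses the shared commands, skipping bind group slot 1.
type DrawCustom = (SetPipeline, SetBindGroup<0>, SetBindGroup<2>, DrawMesh);
```

Calling `<DrawCustom as RenderCommand>::render(&mut pass)` runs the four commands in tuple order. A custom draw function is then just a different tuple built from the same pieces.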

This updates the `pipelined-rendering` branch to use the latest `bevy_ecs` from `main`. This accomplishes a couple of goals:

1. prepares for upcoming `custom-shaders` branch changes, which were what drove many of the recent bevy_ecs changes on `main`

2. prepares for the soon-to-happen merge of `pipelined-rendering` into `main`. By including bevy_ecs changes now, we make that merge simpler / easier to review.

I split this up into 3 commits:

1. **add upstream bevy_ecs**: please don't bother reviewing this content. It has already received thorough review on `main` and is a literal copy/paste of the relevant folders (the old folders were deleted so the directories are literally exactly the same as `main`).

2. **support manual buffer application in stages**: this is used to enable the Extract step. We've already reviewed this once on the `pipelined-rendering` branch, but it's worth looking at one more time in the new context of (1).

3. **support manual archetype updates in QueryState**: same situation as (2).

# Objective

Forward perspective projections have poor floating point precision distribution over the depth range. Reverse projections fare much better, and with a reverse projection an infinite far plane is not a problem, so no finite far plane is needed. The infinite reverse perspective projection has become the industry standard. The renderer rework is a great time to migrate to it.

## Solution

All perspective projections, including point lights, have been moved to using `glam::Mat4::perspective_infinite_reverse_rh()` and so have no far plane. As various depth textures are shared between orthographic and perspective projections, a quirk of this PR is that the near and far planes of the orthographic projection are swapped when the Mat4 is computed. This has no impact on 2D/3D orthographic projection usage, and provides consistency in shaders, texture clear values, etc. throughout the codebase.
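
To make the depth mapping concrete, here is a small sketch of the depth value each kind of projection produces after the perspective divide. It assumes a right-handed projection that writes `z_near` into clip-space z and the view distance into clip-space w, which is the form an infinite reverse perspective matrix takes. The function names are hypothetical, and the forward variant assumes a `[0, 1]` depth range.

```rust
/// Depth after the perspective divide for an infinite reverse projection.
/// `distance` is the positive distance in front of the camera.
fn reverse_infinite_depth(distance: f32, z_near: f32) -> f32 {
    // clip.z = z_near and clip.w = distance, so depth = z_near / distance.
    // The near plane maps to 1.0 and depth approaches 0.0 at infinity.
    z_near / distance
}

/// Depth for a conventional forward projection with depth in [0, 1].
/// The near plane maps to 0.0 and the far plane maps to 1.0.
fn forward_depth(distance: f32, z_near: f32, z_far: f32) -> f32 {
    z_far * (distance - z_near) / ((z_far - z_near) * distance)
}
```

With the reverse mapping, distant geometry lands near 0.0, where floating point values are most densely spaced, which is why precision holds up without a far plane. It also means a depth texture shared with this projection would be cleared to 0.0 rather than 1.0.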

## Known issues

For some reason, when looking along -Z, all geometry is black. The camera can be translated up/down / strafed left/right and geometry will still be black. Moving forward/backward or rotating the camera away from looking exactly along -Z causes everything to work as expected.

I have tried to debug this issue, but on both macOS and Windows I get crashes when doing pixel debugging. If anyone could reproduce this and debug it I would be very grateful. Otherwise I will have to try to debug it further without pixel debugging, though the projections and such all looked fine to me.

# Objective

Allow marking meshes as not casting / receiving shadows.

## Solution

- Added `NotShadowCaster` and `NotShadowReceiver` zero-sized type components.

- Extract these components into `bool`s in `ExtractedMesh`

- Only generate `DrawShadowMesh` `Drawable`s for meshes _without_ `NotShadowCaster`

- Add a `u32` bit `flags` member to `MeshUniform` with one flag indicating whether the mesh is a shadow receiver

- If a mesh does _not_ have the `NotShadowReceiver` component, then it is a shadow receiver, and so the bit in the `MeshUniform` is set, otherwise it is not set.

- Added an example illustrating the functionality.
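
The flag logic in the steps above can be sketched as follows. The constant and function names are hypothetical, and which bit position the PR actually uses is an assumption; only the rule "no `NotShadowReceiver` means the receiver bit is set" comes from the description.

```rust
/// Hypothetical flag bit: set when the mesh receives shadows.
const MESH_FLAGS_SHADOW_RECEIVER_BIT: u32 = 1 << 0;

/// Builds the `flags` word from the presence of the `NotShadowReceiver` marker.
fn mesh_flags(has_not_shadow_receiver: bool) -> u32 {
    if has_not_shadow_receiver {
        0
    } else {
        MESH_FLAGS_SHADOW_RECEIVER_BIT
    }
}

/// Mirrors the check a shader would perform on the flags word.
fn is_shadow_receiver(flags: u32) -> bool {
    (flags & MESH_FLAGS_SHADOW_RECEIVER_BIT) != 0
}

/// Shadow draws are generated only for meshes without `NotShadowCaster`.
fn should_draw_shadow(has_not_shadow_caster: bool) -> bool {
    !has_not_shadow_caster
}
```

Because both markers are opt-out, a mesh with neither component casts and receives shadows by default.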

NOTE: I wanted to have the default state of a mesh as being a shadow caster and shadow receiver, hence the `Not*` components. However, I am on the fence about this. I don't want to have a negative performance impact, nor have people wondering why their custom meshes don't have shadows because they forgot to add `ShadowCaster` and `ShadowReceiver` components, but I also really don't like the double negatives the `Not*` approach incurs. What do you think?

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

- Allow the user to set the clear color when using the pipelined renderer

## Solution

- Add a `ClearColor` resource that can be added to the world to configure the clear color

## Remaining Issues

Currently the `ClearColor` resource is cloned from the app world to the render world every frame. There are two ways I can think of around this:

1. Figure out why `app_world.is_resource_changed::<ClearColor>()` always returns `true` in the `extract` step and fix it so that we are only updating the resource when it changes

2. Require users to add the `ClearColor` resource to the render sub-app instead of the parent app. This is currently sub-optimal until we have labeled sub-apps, and probably a helper function on `App` such as `app.with_sub_app(RenderApp, |app| { ... })`. Even if we had that, I think it would be more than we want the user to have to think about. I don't think they should have to know about the render sub-app.

I think the first option is the best, but I could really use some help figuring out the nuance of why `is_resource_changed` is always returning true in that context.
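
For reference, the intent of the first option (copy the resource only when it has changed) can be sketched with a plain changed flag. This is a deliberate simplification and not how Bevy's change detection works internally; `Tracked` and its methods are hypothetical names used only for this example.

```rust
/// Hypothetical wrapper that remembers whether its value was written
/// since the last extraction.
struct Tracked<T> {
    value: T,
    changed: bool,
}

impl<T: Clone> Tracked<T> {
    /// A newly added value counts as changed so the first extract copies it.
    fn new(value: T) -> Self {
        Self { value, changed: true }
    }

    /// Any write marks the value as changed.
    fn set(&mut self, value: T) {
        self.value = value;
        self.changed = true;
    }

    /// Returns a clone only if the value changed, then clears the flag.
    fn extract_if_changed(&mut self) -> Option<T> {
        if self.changed {
            self.changed = false;
            Some(self.value.clone())
        } else {
            None
        }
    }
}
```

With this shape, an extract step that runs every frame would clone the clear color once after each write and do nothing on the frames in between.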

# Objective

Restore the functionality of sprite atlases in the new renderer.

### **Note:** This PR relies on #2555

## Solution

Mostly just a copy-paste of the existing sprite atlas implementation; however, I unified the rendering between sprites and atlases.

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

Port bevy_gltf to the pipelined-rendering branch.

## Solution

crates/bevy_gltf has been copied and pasted into pipelined/bevy_gltf2 and modifications were made to work with the pipelined-rendering branch. Notably vertex tangents and vertex colours are not supported.

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

Noticed a warning when running tests:

```
> cargo test --workspace
warning: output filename collision.
The example target `change_detection` in package `bevy_ecs v0.5.0 (/bevy/crates/bevy_ecs)` has the same output filename as the example target `change_detection` in package `bevy v0.5.0 (/bevy)`.
Colliding filename is: /bevy/target/debug/examples/change_detection
The targets should have unique names.
Consider changing their names to be unique or compiling them separately.
This may become a hard error in the future; see <https://github.com/rust-lang/cargo/issues/6313>.
warning: output filename collision.
The example target `change_detection` in package `bevy_ecs v0.5.0 (/bevy/crates/bevy_ecs)` has the same output filename as the example target `change_detection` in package `bevy v0.5.0 (/bevy)`.
Colliding filename is: /bevy/target/debug/examples/change_detection.dSYM
The targets should have unique names.
Consider changing their names to be unique or compiling them separately.
This may become a hard error in the future; see <https://github.com/rust-lang/cargo/issues/6313>.
```

## Solution

I renamed example `change_detection` to `component_change_detection`

Adds a GitHub Action that checks all local (non-`http://`, non-`https://`) links in all Markdown files of the repository for liveness.

Fails if a file is not found.
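
The check itself is done by the action, but the underlying idea (collect link targets, skip `http://` and `https://` ones, report targets that do not exist) can be sketched in a few lines. This is an illustrative assumption, not how markdown-link-check is implemented: all function names are hypothetical, only inline `[text](target)` links are handled, and the existence check is passed in as a closure so the sketch stays independent of the file system.

```rust
/// Returns the targets of inline Markdown links (`[text](target)`).
fn link_targets(markdown: &str) -> Vec<&str> {
    let mut targets = Vec::new();
    let mut rest = markdown;
    while let Some(start) = rest.find("](") {
        let after = &rest[start + 2..];
        match after.find(')') {
            Some(end) => {
                targets.push(&after[..end]);
                rest = &after[end + 1..];
            }
            // Unterminated link: stop scanning.
            None => break,
        }
    }
    targets
}

/// A link is treated as local when it is not an http(s) URL.
fn is_local(target: &str) -> bool {
    !(target.starts_with("http://") || target.starts_with("https://"))
}

/// Returns local link targets for which `exists` reports false.
fn dead_local_links<'a>(markdown: &'a str, exists: impl Fn(&str) -> bool) -> Vec<&'a str> {
    link_targets(markdown)
        .into_iter()
        .filter(|target| is_local(*target) && !exists(*target))
        .collect()
}
```

In a real check, `exists` would resolve the target relative to the Markdown file and query the file system, and a non-empty result would fail the run.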

# Goal

This should help maintain the quality of the documentation.

# Impact

Takes ~24 seconds currently and found 3 dead links (pull requests already created).

# Dependent PRs

* #2064

* #2065

* #2066

# Info

See [markdown-link-check](https://github.com/marketplace/actions/markdown-link-check).

# Example output

```
FILE: ./docs/profiling.md
1 links checked.
FILE: ./docs/plugins_guidelines.md
37 links checked.
FILE: ./docs/linters.md
[✖] ../.github/linters/markdown-lint.yml → Status: 400 [Error: ENOENT: no such file or directory, access '/github/workspace/.github/linters/markdown-lint.yml'] {
  errno: -2,
  code: 'ENOENT',
  syscall: 'access',
  path: '/github/workspace/.github/linters/markdown-lint.yml'
}
```

# Improvements

* Can also be used to check external links, but fails because of:

  * Too many requests (429) responses:

    ```
    FILE: ./CHANGELOG.md
    [✖] https://github.com/bevyengine/bevy/pull/1762 → Status: 429
    ```

  * crates.io links respond 404

    ```
    FILE: ./README.md
    [✖] https://crates.io/crates/bevy → Status: 404
    ```