builds on top of #4780

# Objective

`Reflect` and `Serialize` are currently very tied together because `Reflect` has a `fn serialize(&self) -> Option<Serializable<'_>>` method. Because of that, we can either implement `Reflect` for types like `Option<T>` with `T: Serialize` and have `fn serialize` be implemented, or without the bound but having `fn serialize` return `None`.

By separating `ReflectSerialize` into a separate type (like how it already is for `ReflectDeserialize`, `ReflectDefault`), we could separately `.register::<Option<T>>()` and `.register_data::<Option<T>, ReflectSerialize>()` only if the type `T: Serialize`.

This PR does not change the registration but allows it to be changed in a future PR.

## Solution

- add the type

```rust
struct ReflectSerialize { .. }

impl<T: Reflect + Serialize> FromType<T> for ReflectSerialize { .. }
```

- remove `#[reflect(Serialize)]` special casing.

- when serializing reflect value types, look for `ReflectSerialize` in the `TypeRegistry` instead of calling `value.serialize()`
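The lookup described in the last bullet can be sketched without any Bevy or serde dependency. Everything below is a simplified stand-in: `ToText` plays the role of `Serialize`, and this `TypeRegistry` and `ReflectSerialize` are hypothetical miniatures, not the real types. The point is only that serialization support becomes per-type data that may or may not be registered.

```rust
use std::any::{Any, TypeId};
use std::collections::HashMap;

/// Stand-in for a serialization trait (assumption: the real code uses serde).
trait ToText {
    fn to_text(&self) -> String;
}

impl ToText for i32 {
    fn to_text(&self) -> String {
        self.to_string()
    }
}

/// Type data that knows how to serialize one concrete type behind `&dyn Any`.
#[derive(Clone, Copy)]
struct ReflectSerialize {
    serialize: fn(&dyn Any) -> Option<String>,
}

/// Monomorphized helper stored as a plain function pointer.
fn serialize_as<T: Any + ToText>(value: &dyn Any) -> Option<String> {
    value.downcast_ref::<T>().map(ToText::to_text)
}

impl ReflectSerialize {
    /// Only available for types that implement the serialization trait.
    fn from_type<T: Any + ToText>() -> Self {
        ReflectSerialize { serialize: serialize_as::<T> }
    }
}

/// Minimal registry: serialize data is optional per type.
#[derive(Default)]
struct TypeRegistry {
    serialize_data: HashMap<TypeId, ReflectSerialize>,
}

impl TypeRegistry {
    fn register_serialize<T: Any + ToText>(&mut self) {
        self.serialize_data
            .insert(TypeId::of::<T>(), ReflectSerialize::from_type::<T>());
    }

    /// Looks up the serialize data by the value's runtime type instead of
    /// calling a method on the value itself.
    fn serialize(&self, value: &dyn Any) -> Option<String> {
        let data = self.serialize_data.get(&value.type_id())?;
        (data.serialize)(value)
    }
}
```

A type that was never registered with serialize data simply yields `None`, which mirrors the "register only if `T: Serialize`" idea from the objective.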

# Objective

Fix #4958

There were 4 issues:

- this is not true in WASM and on macOS: f28b921209/examples/3d/split_screen.rs (L90)

  - ~~I made sure the system was running at least once~~

  - I'm sending the event on window creation

- in webgl, setting a viewport has impacts on other render passes

  - only in webgl and when there is a custom viewport, I added a render pass without a custom viewport

- shaderdef NO_ARRAY_TEXTURES_SUPPORT was not used by the 2d pipeline

  - webgl feature was used but not declared in bevy_sprite, I added it to the Cargo.toml

- shaderdef NO_STORAGE_BUFFERS_SUPPORT was not used by the 2d pipeline

  - I added it based on the BufferBindingType

The last commit changes the two last fixes to add the shaderdefs in the shader cache directly instead of needing to do it in each pipeline
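As a rough illustration of why moving the shaderdefs into the shader cache helps, here is a dependency-free sketch. The `ShaderCache` struct, its fields, and `effective_shader_defs` are hypothetical names for this example only; the conditions used (webgl implies no array textures, a uniform binding type implies no storage buffers) are assumptions drawn from the description above, not the actual implementation.

```rust
/// Stand-in for the binding type used to decide on storage buffer support.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum BufferBindingType {
    Uniform,
    Storage,
}

/// Hypothetical, simplified shader cache that knows about platform limits.
struct ShaderCache {
    webgl: bool,
    buffer_binding_type: BufferBindingType,
}

impl ShaderCache {
    /// Every pipeline passes only its own defs; the platform defs are
    /// appended here once instead of in each pipeline.
    fn effective_shader_defs(&self, pipeline_defs: &[&str]) -> Vec<String> {
        let mut defs: Vec<String> = pipeline_defs.iter().map(|d| d.to_string()).collect();
        if self.webgl {
            defs.push("NO_ARRAY_TEXTURES_SUPPORT".to_string());
        }
        if self.buffer_binding_type == BufferBindingType::Uniform {
            defs.push("NO_STORAGE_BUFFERS_SUPPORT".to_string());
        }
        defs
    }
}
```

With this shape, a new pipeline cannot forget a platform shaderdef, which is the class of bug the third and fourth fixes addressed.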

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

Users should be able to configure depth load operations on cameras. Currently every camera clears depth when it is rendered. But sometimes later passes need to rely on depth from previous passes.

## Solution

This adds the `Camera3d::depth_load_op` field with a new `Camera3dDepthLoadOp` value. This is a custom type because Camera3d uses "reverse-z depth" and this helps us record and document that in a discoverable way. It also gives us more control over reflection + other trait impls, whereas `LoadOp` is owned by the `wgpu` crate.

```rust
commands.spawn_bundle(Camera3dBundle {
    camera_3d: Camera3d {
        depth_load_op: Camera3dDepthLoadOp::Load,
        ..default()
    },
    ..default()
});
```

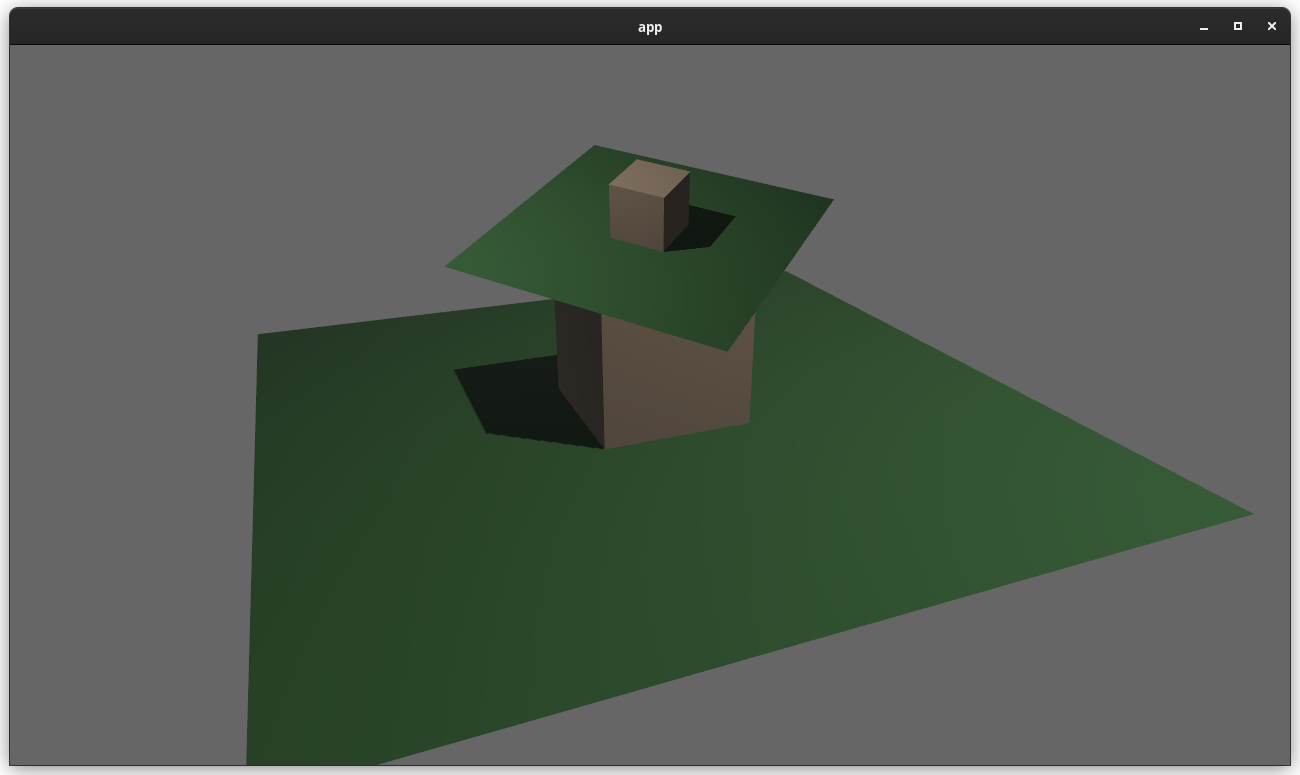

### two_passes example with the "second pass" camera configured to the default (clear depth to 0.0)

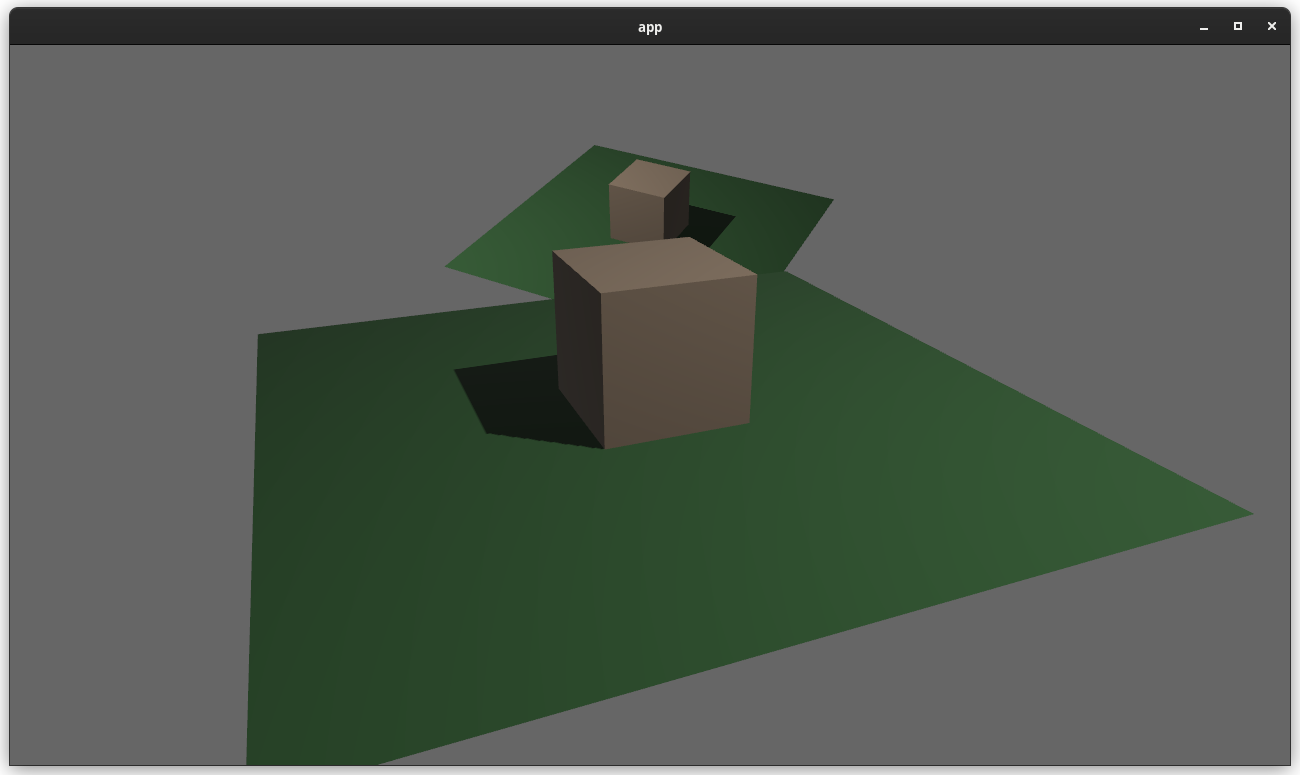

### two_passes example with the "second pass" camera configured to "load" the depth

---

## Changelog

### Added

* `Camera3d` now has a `depth_load_op` field, which can configure the Camera's main 3d pass depth loading behavior.
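To make the "custom type instead of `LoadOp`" reasoning concrete, here is a minimal sketch of how such a wrapper could convert into the render API's load operation. The local `LoadOp` enum is a stand-in for the `wgpu` type so the example has no dependencies, and the variant layout of `Camera3dDepthLoadOp` is an assumption based on the description (a clear value of `0.0` by default, or load).

```rust
/// Stand-in for the render API's depth load operation.
#[derive(Debug, Clone, Copy, PartialEq)]
enum LoadOp {
    Clear(f32),
    Load,
}

/// Camera-level depth configuration (assumed shape).
#[derive(Debug, Clone, Copy, PartialEq)]
enum Camera3dDepthLoadOp {
    /// Clear depth to the given value. With reverse-z, 0.0 is the far plane.
    Clear(f32),
    /// Keep the depth written by a previous pass.
    Load,
}

impl Default for Camera3dDepthLoadOp {
    fn default() -> Self {
        // Reverse-z: clearing to 0.0 means "everything is at the far plane".
        Camera3dDepthLoadOp::Clear(0.0)
    }
}

impl From<Camera3dDepthLoadOp> for LoadOp {
    fn from(op: Camera3dDepthLoadOp) -> Self {
        match op {
            Camera3dDepthLoadOp::Clear(value) => LoadOp::Clear(value),
            Camera3dDepthLoadOp::Load => LoadOp::Load,
        }
    }
}
```

Owning the type is what allows the reverse-z note to live in the doc comment and the `Default` impl, which would not be possible on a foreign type.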

# Objective

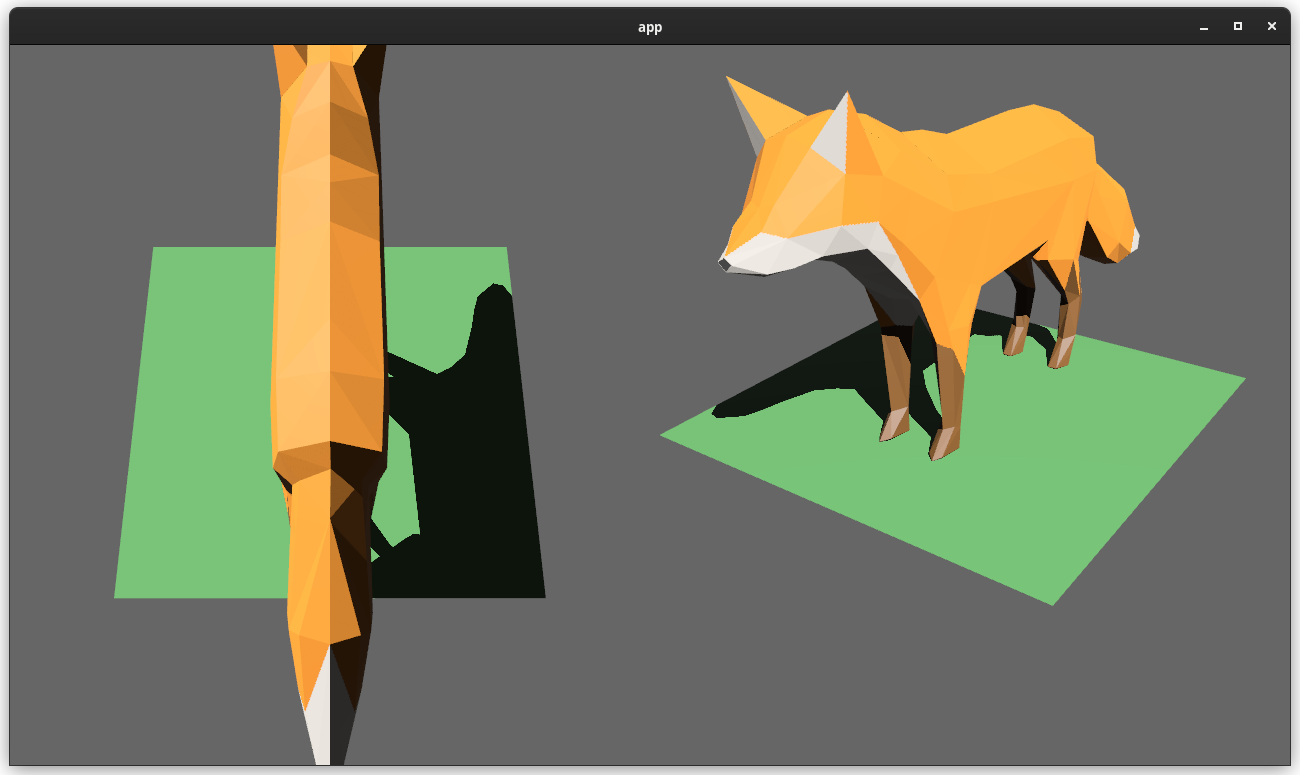

Users should be able to render cameras to specific areas of a render target, which enables scenarios like split screen, minimaps, etc.

Builds on the new Camera Driven Rendering added here: #4745

Fixes: #202

Alternative to #1389 and #3626 (which are incompatible with the new Camera Driven Rendering)

## Solution

Cameras can now configure an optional "viewport", which defines a rectangle within their render target to draw to. If a `Viewport` is defined, the camera's `CameraProjection`, `View`, and visibility calculations will use the viewport configuration instead of the full render target.

```rust
// This camera will render to the first half of the primary window (on the left side).
commands.spawn_bundle(Camera3dBundle {
    camera: Camera {
        viewport: Some(Viewport {
            physical_position: UVec2::new(0, 0),
            physical_size: UVec2::new(window.physical_width() / 2, window.physical_height()),
            depth: 0.0..1.0,
        }),
        ..default()
    },
    ..default()
});
```

To account for this, the `Camera` component has received a few adjustments:

* `Camera` now has some new getter functions:

  * `logical_viewport_size`, `physical_viewport_size`, `logical_target_size`, `physical_target_size`, `projection_matrix`

* All computed camera values are now private and live on the `ComputedCameraValues` field (logical/physical width/height, the projection matrix). They are now exposed on `Camera` via getters/setters. This wasn't _needed_ for viewports, but it was long overdue.
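For the split screen case, the viewport rectangles are plain integer arithmetic on the physical window size. The sketch below uses a local `Viewport` struct with tuple fields as a stand-in (the real one uses `UVec2` and also carries a depth range), and `split_screen_viewports` is a hypothetical helper, not part of this PR.

```rust
/// Simplified stand-in for a camera viewport, in physical pixels.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
struct Viewport {
    physical_position: (u32, u32),
    physical_size: (u32, u32),
}

/// Splits a window of the given physical size into a left and right viewport.
fn split_screen_viewports(width: u32, height: u32) -> (Viewport, Viewport) {
    let left_width = width / 2;
    let left = Viewport {
        physical_position: (0, 0),
        physical_size: (left_width, height),
    };
    // The right half takes the remainder so odd widths leave no uncovered column.
    let right = Viewport {
        physical_position: (left_width, 0),
        physical_size: (width - left_width, height),
    };
    (left, right)
}
```

Because the window can be resized, these rectangles would need to be recomputed whenever the physical size changes.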

---

## Changelog

### Added

* `Camera` components now have a `viewport` field, which can be set to draw to a portion of a render target instead of the full target.

* `Camera` component has some new functions: `logical_viewport_size`, `physical_viewport_size`, `logical_target_size`, `physical_target_size`, and `projection_matrix`

* Added a new split_screen example illustrating how to render two cameras to the same scene

## Migration Guide

`Camera::projection_matrix` is no longer a public field. Use the new `Camera::projection_matrix()` method instead:

```rust
// Bevy 0.7
let projection = camera.projection_matrix;

// Bevy 0.8
let projection = camera.projection_matrix();
```

This adds "high level camera driven rendering" to Bevy. The goal is to give users more control over what gets rendered (and where) without needing to deal with render logic. This will make scenarios like "render to texture", "multiple windows", "split screen", "2d on 3d", "3d on 2d", "pass layering", and more significantly easier.

Here is an [example of a 2d render sandwiched between two 3d renders (each from a different perspective)](https://gist.github.com/cart/4fe56874b2e53bc5594a182fc76f4915):

Users can now spawn a camera, point it at a RenderTarget (a texture or a window), and it will "just work".

Rendering to a second window is as simple as spawning a second camera and assigning it to a specific window id:

```rust
// main camera (main window)
commands.spawn_bundle(Camera2dBundle::default());

// second camera (other window)
commands.spawn_bundle(Camera2dBundle {
    camera: Camera {
        target: RenderTarget::Window(window_id),
        ..default()
    },
    ..default()
});
```

Rendering to a texture is as simple as pointing the camera at a texture:

```rust
commands.spawn_bundle(Camera2dBundle {
    camera: Camera {
        target: RenderTarget::Texture(image_handle),
        ..default()
    },
    ..default()
});
```

Cameras now have a "render priority", which controls the order they are drawn in. If you want to use a camera's output texture as a texture in the main pass, just set the priority to a number lower than the main pass camera (which defaults to `0`).

```rust
// main pass camera with a default priority of 0
commands.spawn_bundle(Camera2dBundle::default());

// renders to the texture before the main pass camera runs
commands.spawn_bundle(Camera2dBundle {
    camera: Camera {
        target: RenderTarget::Texture(image_handle.clone()),
        priority: -1,
        ..default()
    },
    ..default()
});

// displays the texture in the main pass
commands.spawn_bundle(SpriteBundle {
    texture: image_handle,
    ..default()
});
```

Priority can also be used to layer cameras on top of each other for the same RenderTarget. This is what "2d on top of 3d" looks like in the new system:

```rust
commands.spawn_bundle(Camera3dBundle::default());

commands.spawn_bundle(Camera2dBundle {
    camera: Camera {
        // this will render 2d entities "on top" of the default 3d camera's render
        priority: 1,
        ..default()
    },
    ..default()
});
```

There is no longer the concept of a global "active camera". Resources like `ActiveCamera<Camera2d>` and `ActiveCamera<Camera3d>` have been replaced with the camera-specific `Camera::is_active` field. This does put the onus on users to manage which cameras should be active.

Cameras are now assigned a single render graph as an "entry point", which is configured on each camera entity using the new `CameraRenderGraph` component. The old `PerspectiveCameraBundle` and `OrthographicCameraBundle` (generic on camera marker components like Camera2d and Camera3d) have been replaced by `Camera3dBundle` and `Camera2dBundle`, which set 3d and 2d default values for the `CameraRenderGraph` and projections.

```rust
// old 3d perspective camera
commands.spawn_bundle(PerspectiveCameraBundle::default());

// new 3d perspective camera
commands.spawn_bundle(Camera3dBundle::default());
```

```rust
// old 2d orthographic camera
commands.spawn_bundle(OrthographicCameraBundle::new_2d());

// new 2d orthographic camera
commands.spawn_bundle(Camera2dBundle::default());
```

```rust
// old 3d orthographic camera
commands.spawn_bundle(OrthographicCameraBundle::new_3d());

// new 3d orthographic camera
commands.spawn_bundle(Camera3dBundle {
    projection: OrthographicProjection {
        scale: 3.0,
        scaling_mode: ScalingMode::FixedVertical,
        ..default()
    }.into(),
    ..default()
});
```

Note that `Camera3dBundle` now uses a new `Projection` enum instead of hard coding the projection into the type. There are a number of motivators for this change: the render graph is now a part of the bundle, the way "generic bundles" work in the rust type system prevents nice `..default()` syntax, and changing projections at runtime is much easier with an enum (ex for editor scenarios). I'm open to discussing this choice, but I'm relatively certain we will all come to the same conclusion here. Camera2dBundle and Camera3dBundle are much clearer than being generic on marker components / using non-default constructors.
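The enum-based approach can be sketched in isolation. The types below are heavily simplified stand-ins (one field each) and `switch_to_orthographic` is a hypothetical helper; the perspective default is an assumption for this example. It shows why `.into()` works in the bundle and why swapping projections at runtime becomes a simple assignment.

```rust
#[derive(Debug, Clone, PartialEq)]
struct PerspectiveProjection {
    fov: f32,
}

#[derive(Debug, Clone, PartialEq)]
struct OrthographicProjection {
    scale: f32,
}

/// One component type that can hold either projection.
#[derive(Debug, Clone, PartialEq)]
enum Projection {
    Perspective(PerspectiveProjection),
    Orthographic(OrthographicProjection),
}

impl From<PerspectiveProjection> for Projection {
    fn from(p: PerspectiveProjection) -> Self {
        Projection::Perspective(p)
    }
}

impl From<OrthographicProjection> for Projection {
    fn from(p: OrthographicProjection) -> Self {
        Projection::Orthographic(p)
    }
}

impl Default for Projection {
    fn default() -> Self {
        // Assumed default for this sketch: a perspective projection.
        Projection::Perspective(PerspectiveProjection {
            fov: std::f32::consts::FRAC_PI_4,
        })
    }
}

/// Non-generic bundle: `..Default::default()` works without type parameters.
#[derive(Debug, Default)]
struct Camera3dBundle {
    projection: Projection,
}

/// Changing the projection at runtime is just replacing the enum value.
fn switch_to_orthographic(bundle: &mut Camera3dBundle, scale: f32) {
    bundle.projection = OrthographicProjection { scale }.into();
}
```

With a generic bundle, the same switch would require removing one component type and inserting another.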

If you want to run a custom render graph on a camera, just set the `CameraRenderGraph` component:

```rust
commands.spawn_bundle(Camera3dBundle {
    camera_render_graph: CameraRenderGraph::new(some_render_graph_name),
    ..default()
});
```

Just note that if the graph requires data from specific components to work (such as `Camera3d` config, which is provided in the `Camera3dBundle`), make sure the relevant components have been added.

Speaking of using components to configure graphs / passes, there are a number of new configuration options:

```rust
commands.spawn_bundle(Camera3dBundle {
    camera_3d: Camera3d {
        // overrides the default global clear color
        clear_color: ClearColorConfig::Custom(Color::RED),
        ..default()
    },
    ..default()
});

commands.spawn_bundle(Camera3dBundle {
    camera_3d: Camera3d {
        // disables clearing
        clear_color: ClearColorConfig::None,
        ..default()
    },
    ..default()
});
```

Expect to see more of the "graph configuration Components on Cameras" pattern in the future.

By popular demand, UI no longer requires a dedicated camera. `UiCameraBundle` has been removed. `Camera2dBundle` and `Camera3dBundle` now both default to rendering UI as part of their own render graphs. To disable UI rendering for a camera, use the `CameraUi` component:

```rust
commands
    .spawn_bundle(Camera3dBundle::default())
    .insert(CameraUi {
        is_enabled: false,
        ..default()
    });
```

## Other Changes

* The separate clear pass has been removed. We should revisit this for things like sky rendering, but I think this PR should "keep it simple" until we're ready to properly support that (for code complexity and performance reasons). We can come up with the right design for a modular clear pass in a followup pr.

* I reorganized bevy_core_pipeline into Core2dPlugin and Core3dPlugin (and core_2d / core_3d modules). Everything is pretty much the same as before, just logically separate. I've moved relevant types (like Camera2d, Camera3d, Camera3dBundle, Camera2dBundle) into their relevant modules, which is what motivated this reorganization.

* I adapted the `scene_viewer` example (which relied on the ActiveCameras behavior) to the new system. I also refactored bits and pieces to be a bit simpler.

* All of the examples have been ported to the new camera approach. `render_to_texture` and `multiple_windows` are now _much_ simpler. I removed `two_passes` because it is less relevant with the new approach. If someone wants to add a new "layered custom pass with CameraRenderGraph" example, that might fill a similar niche. But I don't feel much pressure to add that in this pr.

* Cameras now have `target_logical_size` and `target_physical_size` fields, which makes finding the size of a camera's render target _much_ simpler. As a result, the `Assets<Image>` and `Windows` parameters were removed from `Camera::world_to_screen`, making that operation much more ergonomic.

* Render order ambiguities between cameras with the same target and the same priority now produce a warning. This accomplishes two goals:

1. Now that there is no "global" active camera, by default spawning two cameras will result in two renders (one covering the other). This would be a silent performance killer that would be hard to detect after the fact. By detecting ambiguities, we can provide a helpful warning when this occurs.

2. Render order ambiguities could result in unexpected / unpredictable render results. Resolving them makes sense.
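The priority ordering and the ambiguity check can be modelled with plain data. This is a sketch under assumptions: the `Camera` struct here is a reduced stand-in, ids are bare integers, `isize` is assumed for the priority type, and `render_order` and `ambiguities` are hypothetical function names rather than the functions in this PR.

```rust
use std::collections::HashMap;

#[derive(Debug, Clone, Copy, PartialEq, Eq, Hash, PartialOrd, Ord)]
enum RenderTarget {
    Window(u32),
    Texture(u32),
}

#[derive(Debug, Clone)]
struct Camera {
    name: &'static str,
    target: RenderTarget,
    priority: isize,
    is_active: bool,
}

/// Active cameras sorted by ascending priority (lower priority renders first).
fn render_order(cameras: &[Camera]) -> Vec<&'static str> {
    let mut active: Vec<&Camera> = cameras.iter().filter(|c| c.is_active).collect();
    // Stable sort: cameras with equal priority keep their input order, which
    // is exactly the situation the warning is meant to flag.
    active.sort_by_key(|c| c.priority);
    active.iter().map(|c| c.name).collect()
}

/// Every (target, priority) pair shared by more than one active camera.
fn ambiguities(cameras: &[Camera]) -> Vec<(RenderTarget, isize)> {
    let mut counts: HashMap<(RenderTarget, isize), usize> = HashMap::new();
    for camera in cameras.iter().filter(|c| c.is_active) {
        *counts.entry((camera.target, camera.priority)).or_insert(0) += 1;
    }
    let mut found: Vec<(RenderTarget, isize)> = counts
        .into_iter()
        .filter(|(_, count)| *count > 1)
        .map(|(key, _)| key)
        .collect();
    found.sort();
    found
}
```

Inactive cameras are ignored in both functions, so deactivating one of two conflicting cameras is enough to resolve an ambiguity.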

## Follow Up Work

* Per-Camera viewports, which will make it possible to render to a smaller area inside of a RenderTarget (great for something like splitscreen)

* Camera-specific MSAA config (should use the same "overriding" pattern used for ClearColor)

* Graph Based Camera Ordering: priorities are simple, but they make complicated ordering constraints harder to express. We should consider adopting a "graph based" camera ordering model with "before" and "after" relationships to other cameras (or build it "on top" of the priority system).

* Consider allowing graphs to run subgraphs from any nest level (aka a global namespace for graphs). Right now the 2d and 3d graphs each need their own UI subgraph, which feels "fine" in the short term. But being able to share subgraphs between other subgraphs seems valuable.

* Consider splitting `bevy_core_pipeline` into `bevy_core_2d` and `bevy_core_3d` packages. There's a shared "clear color" dependency here, which would need a new home.

# Objective

- Add an `ExtractResourcePlugin` for convenience and consistency

## Solution

- Add an `ExtractResourcePlugin` similar to `ExtractComponentPlugin` but for ECS `Resource`s. The system that is executed simply clones the main world resource into a render world resource, if and only if the main world resource was either added or changed since the last execution of the system.

- Add an `ExtractResource` trait with a `fn extract_resource(res: &Self) -> Self` function. This is used by the `ExtractResourcePlugin` to extract the resource

- Add a derive macro for `ExtractResource` on a `Resource` with the `Clone` trait, that simply returns `res.clone()`

- Use `ExtractResourcePlugin` wherever both possible and appropriate
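The extraction behavior described above can be sketched without the ECS. The trait signature follows the one given in the Solution; everything else is an assumption for illustration: `Tracked` and its boolean flag stand in for real change detection, and `extract_if_changed` and `Brightness` are hypothetical names.

```rust
/// Trait as described above: build the render world copy from the main world value.
trait ExtractResource: Sized {
    fn extract_resource(res: &Self) -> Self;
}

/// Stand-in for a main world resource with change detection.
struct Tracked<R> {
    value: R,
    changed: bool,
}

/// Copies the resource into the render world only if it was added or changed.
/// Returns whether an extraction happened.
fn extract_if_changed<R: ExtractResource>(main: &mut Tracked<R>, render: &mut Option<R>) -> bool {
    if !main.changed {
        return false;
    }
    *render = Some(R::extract_resource(&main.value));
    // Simplification: the sketch resets a flag, where an ECS would compare ticks.
    main.changed = false;
    true
}

/// Hypothetical resource; this impl is what a derive on a `Clone` type would produce.
#[derive(Debug, Clone, PartialEq)]
struct Brightness(u32);

impl ExtractResource for Brightness {
    fn extract_resource(res: &Self) -> Self {
        res.clone()
    }
}
```

The change check is what keeps unchanged resources from being cloned every frame.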

# Objective

- Creating and executing render passes has GPU overhead. If there are no phase items in the render phase to draw, then this overhead should not be incurred as it has no benefit.

## Solution

- Check whether there are any phase items to draw, and if there are none, do not construct or execute the render pass

---

## Changelog

- Changed: Do not create nor execute empty render passes
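The early-out is small enough to show as a sketch. `RenderPhase` here is a bare `Vec` wrapper and `Encoder` only counts calls; both are stand-ins for this example, and `run_pass` is a hypothetical name for a node's run logic.

```rust
/// Simplified render phase: just the list of items to draw.
struct RenderPhase<I> {
    items: Vec<I>,
}

/// Counts the work that would have been submitted to the GPU.
#[derive(Debug, Default, PartialEq, Eq)]
struct Encoder {
    passes_begun: usize,
    draw_calls: usize,
}

/// Begins a pass and draws each item, unless there is nothing to draw.
fn run_pass<I>(phase: &RenderPhase<I>, encoder: &mut Encoder) {
    if phase.items.is_empty() {
        // No items: skip pass creation entirely to avoid the GPU overhead.
        return;
    }
    encoder.passes_begun += 1;
    encoder.draw_calls += phase.items.len();
}
```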

# Objective

Reduce the catch-all grab-bag of functionality in bevy_core by moving FloatOrd to bevy_utils.

A step in addressing #2931 and splitting bevy_core into more specific locations.

## Solution

Move FloatOrd into bevy_utils. Fix the compile errors.

As a result, bevy_core_pipeline, bevy_pbr, bevy_sprite, bevy_text, and bevy_ui no longer depend on bevy_core (they were only using it for `FloatOrd` previously).
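For readers unfamiliar with why so many rendering crates needed `FloatOrd`: `f32` does not implement `Ord`, so it cannot be used directly as a sort key. The sketch below is one way to write such a wrapper using the standard library's `f32::total_cmp`; it is an illustration, not the implementation being moved, and `sort_by_depth` is a hypothetical helper.

```rust
use std::cmp::Ordering;

/// Wrapper that gives `f32` a total order so it can be used as a sort key.
#[derive(Debug, Clone, Copy)]
struct FloatOrd(f32);

impl Ord for FloatOrd {
    fn cmp(&self, other: &Self) -> Ordering {
        // `total_cmp` orders every value, including NaN, consistently.
        self.0.total_cmp(&other.0)
    }
}

impl PartialOrd for FloatOrd {
    fn partial_cmp(&self, other: &Self) -> Option<Ordering> {
        Some(self.cmp(other))
    }
}

impl PartialEq for FloatOrd {
    fn eq(&self, other: &Self) -> bool {
        self.cmp(other) == Ordering::Equal
    }
}

impl Eq for FloatOrd {}

/// Sorts depths ascending, as a render phase might sort its items.
fn sort_by_depth(depths: &[f32]) -> Vec<f32> {
    let mut sorted = depths.to_vec();
    sorted.sort_by_key(|depth| FloatOrd(*depth));
    sorted
}
```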

# Objective

Fixes https://github.com/bevyengine/bevy/issues/3499

## Solution

Uses a `HashMap` from `RenderTarget` to sampled textures when preparing `ViewTarget`s to ensure that two passes with the same render target get sampled to the same texture.

This builds on and depends on https://github.com/bevyengine/bevy/pull/3412, so this will be a draft PR until #3412 is merged. All changes for this PR are in the last commit.
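The sharing logic in the Solution amounts to "allocate once per render target". A minimal sketch follows, with all names hypothetical: `RenderTarget` uses bare integer ids, `TextureId` stands in for a GPU texture, and `TextureAllocator` only hands out increasing ids.

```rust
use std::collections::HashMap;

#[derive(Debug, Clone, Copy, PartialEq, Eq, Hash)]
enum RenderTarget {
    Window(u32),
    Image(u32),
}

/// Stand-in for a sampled (multisampled) GPU texture.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
struct TextureId(usize);

#[derive(Default)]
struct TextureAllocator {
    next: usize,
}

impl TextureAllocator {
    fn create(&mut self) -> TextureId {
        let id = TextureId(self.next);
        self.next += 1;
        id
    }
}

/// Returns the sampled texture for each view. Views with the same render
/// target receive the same texture instead of allocating a new one.
fn prepare_view_targets(
    views: &[RenderTarget],
    allocator: &mut TextureAllocator,
) -> Vec<TextureId> {
    let mut sampled: HashMap<RenderTarget, TextureId> = HashMap::new();
    let mut result = Vec::with_capacity(views.len());
    for target in views {
        let texture = *sampled
            .entry(*target)
            .or_insert_with(|| allocator.create());
        result.push(texture);
    }
    result
}
```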

# Objective

- Fixes #3970

- To support Bevy's shader abstraction (shader defs, shader imports, and hot shader reloading) for compute shaders, I have followed cart's advice and changed the `PipelineCache` to accommodate both compute and render pipelines.

## Solution

- renamed `RenderPipelineCache` to `PipelineCache`

- Cached Pipelines are now represented by an enum (render, compute)

- split the `SpecializedPipelines` into `SpecializedRenderPipelines` and `SpecializedComputePipelines`

- updated the game of life example
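A reduced model of the "enum (render, compute)" idea is sketched below. It is intentionally simpler than the real cache: pipelines are just labels, there is a single shared id type (an assumption for brevity), and nothing is compiled asynchronously.

```rust
#[derive(Debug, Clone, PartialEq)]
struct RenderPipeline {
    label: String,
}

#[derive(Debug, Clone, PartialEq)]
struct ComputePipeline {
    label: String,
}

/// A cached pipeline is one of the two kinds.
#[derive(Debug, Clone, PartialEq)]
enum Pipeline {
    Render(RenderPipeline),
    Compute(ComputePipeline),
}

#[derive(Debug, Clone, Copy, PartialEq, Eq)]
struct CachedPipelineId(usize);

#[derive(Default)]
struct PipelineCache {
    pipelines: Vec<Pipeline>,
}

impl PipelineCache {
    fn queue(&mut self, pipeline: Pipeline) -> CachedPipelineId {
        self.pipelines.push(pipeline);
        CachedPipelineId(self.pipelines.len() - 1)
    }

    /// Returns the pipeline only if the id refers to a render pipeline.
    fn get_render_pipeline(&self, id: CachedPipelineId) -> Option<&RenderPipeline> {
        match self.pipelines.get(id.0)? {
            Pipeline::Render(pipeline) => Some(pipeline),
            Pipeline::Compute(_) => None,
        }
    }

    /// Returns the pipeline only if the id refers to a compute pipeline.
    fn get_compute_pipeline(&self, id: CachedPipelineId) -> Option<&ComputePipeline> {
        match self.pipelines.get(id.0)? {
            Pipeline::Compute(pipeline) => Some(pipeline),
            Pipeline::Render(_) => None,
        }
    }
}
```

The two getters differ only in the variant they match, which is the duplication behind the open question about merging them.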

## Open Questions

- should `SpecializedRenderPipelines` and `SpecializedComputePipelines` be merged and how would we do that?

- should the `get_render_pipeline` and `get_compute_pipeline` methods be merged?

- is pipeline specialization for different entry points a good pattern?

Co-authored-by: Kurt Kühnert <51823519+Ku95@users.noreply.github.com>

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

- Make visible how much time is spent building the Opaque3d, AlphaMask3d, and Transparent3d passes

## Solution

- Add a `trace` feature to `bevy_core_pipeline`

- Add tracy spans around the three passes

- I didn't do this for shadows, sprites, etc as they are only one pass in the node. Perhaps it should be split into 3 nodes to allow insertion of other nodes between...?

**Problem**

- whenever you want more than one of the builtin cameras (for example multiple windows, split screen, portals), you need to add a render graph node that executes the correct sub graph, extract the camera into the render world and add the correct `RenderPhase<T>` components

- querying for the 3d camera is annoying because you need to compare the camera's name to e.g. `CameraPlugin::CAMERA_3D`

**Solution**

- Introduce the marker types `Camera3d`, `Camera2d` and `CameraUi`

-> `Query<&mut Transform, With<Camera3d>>` works

- `PerspectiveCameraBundle::new_3d()` and `PerspectiveCameraBundle::<Camera3d>::default()` contain the `Camera3d` marker

- `OrthographicCameraBundle::new_3d()` has `Camera3d`, `OrthographicCameraBundle::new_2d()` has `Camera2d`

- remove `ActiveCameras`, `ExtractedCameraNames`

- run 2d, 3d and ui passes for every camera of their respective marker

-> no custom setup for multiple windows example needed

**Open questions**

- do we need a replacement for `ActiveCameras`? What about a component `ActiveCamera { is_active: bool }` similar to `Visibility`?

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

- In the large majority of cases, users were calling `.unwrap()` immediately after `.get_resource`.

- Attempting to add more helpful error messages here resulted in endless manual boilerplate (see #3899 and the linked PRs).

## Solution

- Add an infallible variant named `.resource` and so on.

- Use these infallible variants over `.get_resource().unwrap()` across the code base.

## Notes

I did not provide equivalent methods on `WorldCell`, in favor of removing it entirely in #3939.

## Migration Guide

Infallible variants of `.get_resource` have been added that implicitly panic, rather than needing to be unwrapped.

Replace `world.get_resource::<Foo>().unwrap()` with `world.resource::<Foo>()`.
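The relationship between the fallible and infallible accessors can be shown with a toy `World`. This is a sketch, not the ECS implementation: resources are stored as `Box<dyn Any>` keyed by `TypeId`, and the panic message text is an assumption.

```rust
use std::any::{type_name, Any, TypeId};
use std::collections::HashMap;

/// Toy world that stores at most one value per resource type.
#[derive(Default)]
struct World {
    resources: HashMap<TypeId, Box<dyn Any>>,
}

impl World {
    fn insert_resource<R: Any>(&mut self, resource: R) {
        self.resources.insert(TypeId::of::<R>(), Box::new(resource));
    }

    /// Fallible accessor: the caller decides what to do when it is missing.
    fn get_resource<R: Any>(&self) -> Option<&R> {
        self.resources.get(&TypeId::of::<R>())?.downcast_ref::<R>()
    }

    /// Infallible accessor: panics with a message naming the missing type,
    /// so call sites no longer need their own `.unwrap()`.
    fn resource<R: Any>(&self) -> &R {
        self.get_resource::<R>().unwrap_or_else(|| {
            panic!("Requested resource {} does not exist", type_name::<R>())
        })
    }
}
```

Centralizing the panic is what makes the better error message cheap: it is written once instead of at every call site.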

## Impact

- `.unwrap` search results before: 1084

- `.unwrap` search results after: 942

- internal `unwrap_or_else` calls added: 4

- trivial unwrap calls removed from tests and code: 146

- uses of the new `try_get_resource` API: 11

- percentage of the time the unwrapping API was used internally: 93%

# Objective

Will fix #3377 and #3254

## Solution

Use an enum to represent either a `WindowId` or `Handle<Image>` in place of `Camera::window`.

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

What it says on the tin.

This has got more to do with making `clippy` slightly more *quiet* than it does with changing anything that might greatly impact readability or performance.

That said, deriving `Default` for a couple of structs is a nice easy win.
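As an illustration of the kind of change involved (the struct names here are hypothetical, not taken from the diff), a manual `Default` impl that only fills in each field's own default can be replaced by a derive:

```rust
/// Before: a hand-written impl that clippy flags as derivable.
#[derive(Debug, PartialEq)]
struct ManualSettings {
    width: u32,
    title: String,
    fullscreen: bool,
}

impl Default for ManualSettings {
    fn default() -> Self {
        ManualSettings {
            width: 0,
            title: String::new(),
            fullscreen: false,
        }
    }
}

/// After: the derive produces the same values with less code.
#[derive(Debug, Default, PartialEq)]
struct DerivedSettings {
    width: u32,
    title: String,
    fullscreen: bool,
}
```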

# Objective

- Update the `ClearColor` resource docs as described in #3837 so new users (like me) understand it better

## Solution

- Update the docs to use what @alice-i-cecile described in #3837

I took this one up because I got confused by it this weekend. I didn't understand why the "background" was being set by a `ClearColor` resource.

# Objective

In this PR I added the ability to opt out of graphical backends. Closes #3155.

## Solution

I turned backends into `Option` ~~and removed panicking sub app API to force users handle the error (was suggested by `@cart`)~~.

# Objective

The current 2d rendering is specialized to render sprites. We need a generic way to render 2d items, using meshes and materials like we have for 3d.

## Solution

I cloned a good part of `bevy_pbr` into `bevy_sprite/src/mesh2d`, removed lighting and pbr itself, adapted it to 2d rendering, added a `ColorMaterial`, and modified the sprite rendering to break batches around 2d meshes.

~~The PR is a bit crude; I tried to change as little as I could in both the parts copied from 3d and the current sprite rendering to make reviewing easier. In the future, I expect we could make the sprite rendering a normal 2d material, cleanly integrated with the rest.~~ _edit: see <https://github.com/bevyengine/bevy/pull/3460#issuecomment-1003605194>_

## Remaining work

- ~~don't require mesh normals~~ _out of scope_

- ~~add an example~~ _done_

- support 2d meshes & materials in the UI?

- bikeshed names (I didn't think hard about naming, please check if it's fine)

## Remaining questions

- ~~should we add a depth buffer to 2d now that there are 2d meshes?~~ _let's revisit that when we have an opaque render phase_

- ~~should we add MSAA support to the sprites, or remove it from the 2d meshes?~~ _I added MSAA to sprites since it's really needed for 2d meshes_

- ~~how to customize vertex attributes?~~ _#3120_

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

Currently the `ClearPassNode` is not exported, due to an additional `use ...;` in the core pipeline's `lib.rs`. This seems unintentional, as there already is a public glob import in the file.

This just removes the explicit use. If it actually was intentional to keep the node internal, let me know.

# Objective

This PR fixes a crash when winit is enabled when there is a camera in the world. Part of #3155

## Solution

In this PR, I removed two unwraps and added an example for regression testing.

This makes the [New Bevy Renderer](#2535) the default (and only) renderer. The new renderer isn't _quite_ ready for the final release yet, but I want as many people as possible to start testing it so we can identify bugs and address feedback prior to release.

The examples are all ported over and operational with a few exceptions:

* I removed a good portion of the examples in the `shader` folder. We still have some work to do in order to make these examples possible / ergonomic / worthwhile: #3120 and "high level shader material plugins" are the big ones. This is a temporary measure.

* Temporarily removed the multiple_windows example: doing this properly in the new renderer will require the upcoming "render targets" changes. Same goes for the render_to_texture example.

* Removed z_sort_debug: entity visibility sort info is no longer available in app logic. We could do this on the "render app" side, but I don't consider it a priority.