2021-08-25 05:57:57 +00:00

|

|

|

use crate::{

|

Clustered forward rendering (#3153)

# Objective

Implement clustered-forward rendering.

## Solution

~~FIXME - in the interest of keeping the merge train moving, I'm submitting this PR now before the description is ready. I want to add in some comments into the code with references for the various bits and pieces and I want to describe some of the key decisions I made here. I'll do that as soon as I can.~~ Anyone reviewing is welcome to add review comments where you want to know more about how something or other works.

* The summary of the technique is that the view frustum is divided into a grid of sub-volumes called clusters, point lights are tested against each of the clusters to see if they would affect that volume within the scene and if so, added to a list of lights affecting that cluster. Then when shading a fragment which is a point on the surface of a mesh within the scene, the point is mapped to a cluster and only the lights affecting that clusters are used in lighting calculations. This brings huge performance and scalability benefits as most of the time lights are placed so that there are not that many that overlap each other in terms of their sphere of influence, but there may be many distinct point lights visible in the scene. Doing all the lighting calculations for all visible lights in the scene for every pixel on the screen quickly becomes a performance limitation. Clustered forward rendering allows us to make an approximate list of lights that affect each pixel, indeed each surface in the scene (as it works along the view z axis too, unlike tiled/forward+).

* WebGL2 is a platform we want to support and it does not support storage buffers. Uniform buffer bindings are limited to a maximum of 16384 bytes per binding. I used bit shifting and masking to pack the cluster light lists and various indices into a uniform buffer and the 16kB limit is very likely the first bottleneck in scaling the number of lights in a scene at the moment if the lights can affect many clusters due to their range or proximity to the camera (there are a lot of clusters close to the camera, which is an area for improvement). We could store the information in textures instead of uniform buffers to remove this bottleneck though I don’t know if there are performance implications to reading from textures instead if uniform buffers.

* Because of the uniform buffer binding size limitations we can support a maximum of 256 lights with the current size of the PointLight struct

* The z-slicing method (i.e. the mapping from view space z to a depth slice which defines the near and far planes of a cluster) is using the Doom 2016 method. I need to add comments with references to this. It’s an exponential function that simplifies well for the purposes of optimising the fragment shader. xy grid divisions are regular in screen space.

* Some optimisation work was done on the allocation of lights to clusters, which involves intersection tests, and for this number of clusters and lights the system has insignificant cost using a fairly naïve algorithm. I think for more lights / finer-grained clusters we could use a BVH, but at some point it would be just much better to use compute shaders and storage buffers.

* Something else to note is that it is absolutely infeasible to use plain cube map point light shadow mapping for many lights. It does not scale in terms of performance nor memory usage. There are some interesting methods I saw discussed in reference material that I will add a link to which render and update shadow maps piece-wise, but they also need compute shaders to work well. Basically for now you need to sacrifice point light shadows for all but a handful of point lights if you don’t want to kill performance. I set the limit to 10 but that’s just what we had from before where 10 was the maximum number of point lights before this PR.

* I added a couple of debug visualisations behind a shader def that were useful for seeing performance impact of light distribution - I should make the debug mode configurable without modifying the shader code. One mode shows the number of lights affecting each cluster by tinting toward red for few lights or green for many lights (maxes out at 16, but not sure that’s a reasonable max). The other shows which cluster the surface at a fragment belongs to by tinting it with a randomish colour. This can help to understand deeper performance issues due to screen space tiles spanning multiple clusters in depth with divergent shader execution times.

Also, there are more things that could be done as improvements, and I will document those somewhere (I'm not sure where will be the best place... in a todo alongside the code, a GitHub issue, somewhere else?) but I think it works well enough and brings significant performance and scalability benefits that it's worth integrating already now and then iterating on.

* Calculate the light’s effective range based on its intensity and physical falloff and either just use this, or take the minimum of the user-supplied range and this. This would avoid unnecessary lighting calculations for clusters that cannot be affected. This would need to take into account HDR tone mapping as in my not-fully-understanding-the-details understanding, the threshold is relative to how bright the scene is.

* Improve the z-slicing to use a larger first slice.

* More gracefully handle the cluster light list uniform buffer binding size limitations by prioritising which lights are included (some heuristic for most significant like closest to the camera, brightest, affecting the most pixels, …)

* Switch to using a texture instead of uniform buffer

* Figure out the / a better story for shadows

I will also probably add an example that demonstrates some of the issues:

* What situations exhaust the space available in the uniform buffers

* Light range too large making lights affect many clusters and so exhausting the space for the lists of lights that affect clusters

* Light range set to be too small producing visible artifacts where clusters the light would physically affect are not affected by the light

* Perhaps some performance issues

* How many lights can be closely packed or affect large portions of the view before performance drops?

2021-12-09 03:08:54 +00:00

|

|

|

AmbientLight, Clusters, CubemapVisibleEntities, DirectionalLight, DirectionalLightShadowMap,

|

|

|

|

|

DrawMesh, MeshPipeline, NotShadowCaster, PointLight, PointLightShadowMap, SetMeshBindGroup,

|

|

|

|

|

VisiblePointLights, SHADOW_SHADER_HANDLE,

|

Modular Rendering (#2831)

This changes how render logic is composed to make it much more modular. Previously, all extraction logic was centralized for a given "type" of rendered thing. For example, we extracted meshes into a vector of ExtractedMesh, which contained the mesh and material asset handles, the transform, etc. We looked up bindings for "drawn things" using their index in the `Vec<ExtractedMesh>`. This worked fine for built in rendering, but made it hard to reuse logic for "custom" rendering. It also prevented us from reusing things like "extracted transforms" across contexts.

To make rendering more modular, I made a number of changes:

* Entities now drive rendering:

* We extract "render components" from "app components" and store them _on_ entities. No more centralized uber lists! We now have true "ECS-driven rendering"

* To make this perform well, I implemented #2673 in upstream Bevy for fast batch insertions into specific entities. This was merged into the `pipelined-rendering` branch here: #2815

* Reworked the `Draw` abstraction:

* Generic `PhaseItems`: each draw phase can define its own type of "rendered thing", which can define its own "sort key"

* Ported the 2d, 3d, and shadow phases to the new PhaseItem impl (currently Transparent2d, Transparent3d, and Shadow PhaseItems)

* `Draw` trait and and `DrawFunctions` are now generic on PhaseItem

* Modular / Ergonomic `DrawFunctions` via `RenderCommands`

* RenderCommand is a trait that runs an ECS query and produces one or more RenderPass calls. Types implementing this trait can be composed to create a final DrawFunction. For example the DrawPbr DrawFunction is created from the following DrawCommand tuple. Const generics are used to set specific bind group locations:

```rust

pub type DrawPbr = (

SetPbrPipeline,

SetMeshViewBindGroup<0>,

SetStandardMaterialBindGroup<1>,

SetTransformBindGroup<2>,

DrawMesh,

);

```

* The new `custom_shader_pipelined` example illustrates how the commands above can be reused to create a custom draw function:

```rust

type DrawCustom = (

SetCustomMaterialPipeline,

SetMeshViewBindGroup<0>,

SetTransformBindGroup<2>,

DrawMesh,

);

```

* ExtractComponentPlugin and UniformComponentPlugin:

* Simple, standardized ways to easily extract individual components and write them to GPU buffers

* Ported PBR and Sprite rendering to the new primitives above.

* Removed staging buffer from UniformVec in favor of direct Queue usage

* Makes UniformVec much easier to use and more ergonomic. Completely removes the need for custom render graph nodes in these contexts (see the PbrNode and view Node removals and the much simpler call patterns in the relevant Prepare systems).

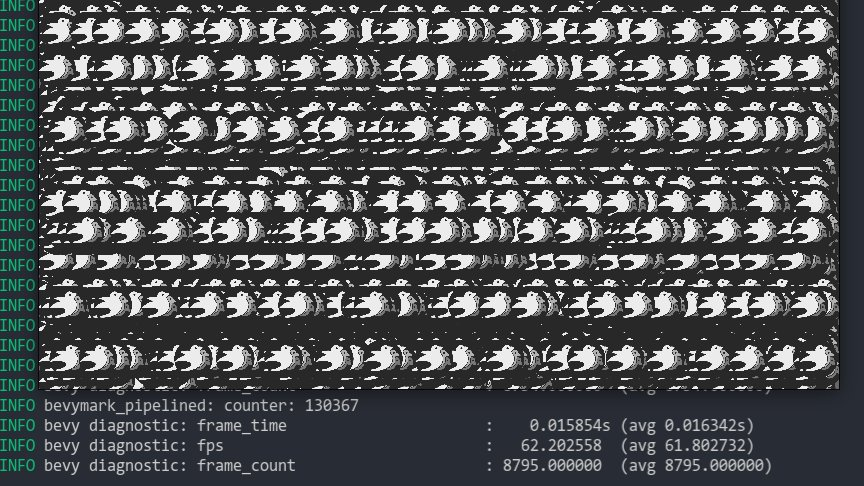

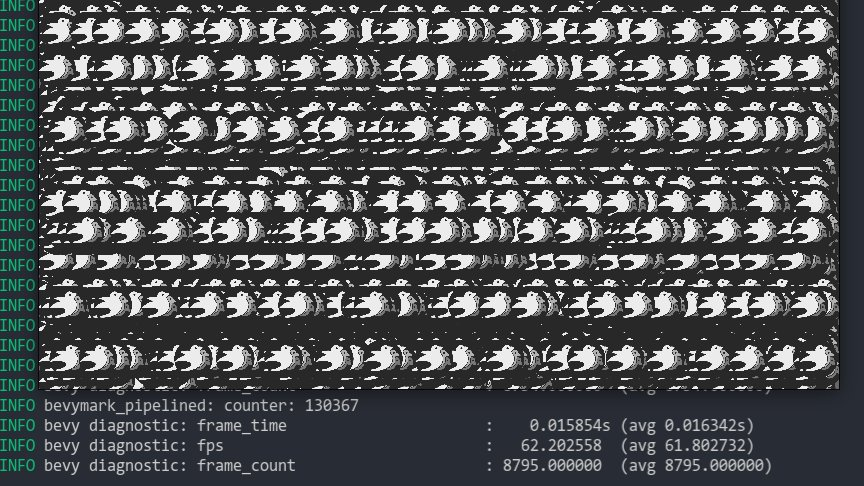

* Added a many_cubes_pipelined example to benchmark baseline 3d rendering performance and ensure there were no major regressions during this port. Avoiding regressions was challenging given that the old approach of extracting into centralized vectors is basically the "optimal" approach. However thanks to a various ECS optimizations and render logic rephrasing, we pretty much break even on this benchmark!

* Lifetimeless SystemParams: this will be a bit divisive, but as we continue to embrace "trait driven systems" (ex: ExtractComponentPlugin, UniformComponentPlugin, DrawCommand), the ergonomics of `(Query<'static, 'static, (&'static A, &'static B, &'static)>, Res<'static, C>)` were getting very hard to bear. As a compromise, I added "static type aliases" for the relevant SystemParams. The previous example can now be expressed like this: `(SQuery<(Read<A>, Read<B>)>, SRes<C>)`. If anyone has better ideas / conflicting opinions, please let me know!

* RunSystem trait: a way to define Systems via a trait with a SystemParam associated type. This is used to implement the various plugins mentioned above. I also added SystemParamItem and QueryItem type aliases to make "trait stye" ecs interactions nicer on the eyes (and fingers).

* RenderAsset retrying: ensures that render assets are only created when they are "ready" and allows us to create bind groups directly inside render assets (which significantly simplified the StandardMaterial code). I think ultimately we should swap this out on "asset dependency" events to wait for dependencies to load, but this will require significant asset system changes.

* Updated some built in shaders to account for missing MeshUniform fields

2021-09-23 06:16:11 +00:00

|

|

|

};

|

|

|

|

|

use bevy_asset::Handle;

|

|

|

|

|

use bevy_core::FloatOrd;

|

|

|

|

|

use bevy_core_pipeline::Transparent3d;

|

|

|

|

|

use bevy_ecs::{

|

|

|

|

|

prelude::*,

|

2021-11-12 22:27:17 +00:00

|

|

|

system::{lifetimeless::*, SystemParamItem},

|

2021-08-25 05:57:57 +00:00

|

|

|

};

|

2021-12-14 23:42:35 +00:00

|

|

|

use bevy_math::{const_vec3, Mat4, UVec3, UVec4, Vec2, Vec3, Vec4, Vec4Swizzles};

|

2021-12-14 03:58:23 +00:00

|

|

|

use bevy_render::{

|

Clustered forward rendering (#3153)

# Objective

Implement clustered-forward rendering.

## Solution

~~FIXME - in the interest of keeping the merge train moving, I'm submitting this PR now before the description is ready. I want to add in some comments into the code with references for the various bits and pieces and I want to describe some of the key decisions I made here. I'll do that as soon as I can.~~ Anyone reviewing is welcome to add review comments where you want to know more about how something or other works.

* The summary of the technique is that the view frustum is divided into a grid of sub-volumes called clusters, point lights are tested against each of the clusters to see if they would affect that volume within the scene and if so, added to a list of lights affecting that cluster. Then when shading a fragment which is a point on the surface of a mesh within the scene, the point is mapped to a cluster and only the lights affecting that clusters are used in lighting calculations. This brings huge performance and scalability benefits as most of the time lights are placed so that there are not that many that overlap each other in terms of their sphere of influence, but there may be many distinct point lights visible in the scene. Doing all the lighting calculations for all visible lights in the scene for every pixel on the screen quickly becomes a performance limitation. Clustered forward rendering allows us to make an approximate list of lights that affect each pixel, indeed each surface in the scene (as it works along the view z axis too, unlike tiled/forward+).

* WebGL2 is a platform we want to support and it does not support storage buffers. Uniform buffer bindings are limited to a maximum of 16384 bytes per binding. I used bit shifting and masking to pack the cluster light lists and various indices into a uniform buffer and the 16kB limit is very likely the first bottleneck in scaling the number of lights in a scene at the moment if the lights can affect many clusters due to their range or proximity to the camera (there are a lot of clusters close to the camera, which is an area for improvement). We could store the information in textures instead of uniform buffers to remove this bottleneck though I don’t know if there are performance implications to reading from textures instead if uniform buffers.

* Because of the uniform buffer binding size limitations we can support a maximum of 256 lights with the current size of the PointLight struct

* The z-slicing method (i.e. the mapping from view space z to a depth slice which defines the near and far planes of a cluster) is using the Doom 2016 method. I need to add comments with references to this. It’s an exponential function that simplifies well for the purposes of optimising the fragment shader. xy grid divisions are regular in screen space.

* Some optimisation work was done on the allocation of lights to clusters, which involves intersection tests, and for this number of clusters and lights the system has insignificant cost using a fairly naïve algorithm. I think for more lights / finer-grained clusters we could use a BVH, but at some point it would be just much better to use compute shaders and storage buffers.

* Something else to note is that it is absolutely infeasible to use plain cube map point light shadow mapping for many lights. It does not scale in terms of performance nor memory usage. There are some interesting methods I saw discussed in reference material that I will add a link to which render and update shadow maps piece-wise, but they also need compute shaders to work well. Basically for now you need to sacrifice point light shadows for all but a handful of point lights if you don’t want to kill performance. I set the limit to 10 but that’s just what we had from before where 10 was the maximum number of point lights before this PR.

* I added a couple of debug visualisations behind a shader def that were useful for seeing performance impact of light distribution - I should make the debug mode configurable without modifying the shader code. One mode shows the number of lights affecting each cluster by tinting toward red for few lights or green for many lights (maxes out at 16, but not sure that’s a reasonable max). The other shows which cluster the surface at a fragment belongs to by tinting it with a randomish colour. This can help to understand deeper performance issues due to screen space tiles spanning multiple clusters in depth with divergent shader execution times.

Also, there are more things that could be done as improvements, and I will document those somewhere (I'm not sure where will be the best place... in a todo alongside the code, a GitHub issue, somewhere else?) but I think it works well enough and brings significant performance and scalability benefits that it's worth integrating already now and then iterating on.

* Calculate the light’s effective range based on its intensity and physical falloff and either just use this, or take the minimum of the user-supplied range and this. This would avoid unnecessary lighting calculations for clusters that cannot be affected. This would need to take into account HDR tone mapping as in my not-fully-understanding-the-details understanding, the threshold is relative to how bright the scene is.

* Improve the z-slicing to use a larger first slice.

* More gracefully handle the cluster light list uniform buffer binding size limitations by prioritising which lights are included (some heuristic for most significant like closest to the camera, brightest, affecting the most pixels, …)

* Switch to using a texture instead of uniform buffer

* Figure out the / a better story for shadows

I will also probably add an example that demonstrates some of the issues:

* What situations exhaust the space available in the uniform buffers

* Light range too large making lights affect many clusters and so exhausting the space for the lists of lights that affect clusters

* Light range set to be too small producing visible artifacts where clusters the light would physically affect are not affected by the light

* Perhaps some performance issues

* How many lights can be closely packed or affect large portions of the view before performance drops?

2021-12-09 03:08:54 +00:00

|

|

|

camera::{Camera, CameraProjection},

|

2021-06-02 02:59:17 +00:00

|

|

|

color::Color,

|

2021-06-26 22:35:07 +00:00

|

|

|

mesh::Mesh,

|

2022-01-04 20:08:12 +00:00

|

|

|

options::WgpuOptions,

|

2021-06-26 22:35:07 +00:00

|

|

|

render_asset::RenderAssets,

|

2021-06-02 02:59:17 +00:00

|

|

|

render_graph::{Node, NodeRunError, RenderGraphContext, SlotInfo, SlotType},

|

Modular Rendering (#2831)

This changes how render logic is composed to make it much more modular. Previously, all extraction logic was centralized for a given "type" of rendered thing. For example, we extracted meshes into a vector of ExtractedMesh, which contained the mesh and material asset handles, the transform, etc. We looked up bindings for "drawn things" using their index in the `Vec<ExtractedMesh>`. This worked fine for built in rendering, but made it hard to reuse logic for "custom" rendering. It also prevented us from reusing things like "extracted transforms" across contexts.

To make rendering more modular, I made a number of changes:

* Entities now drive rendering:

* We extract "render components" from "app components" and store them _on_ entities. No more centralized uber lists! We now have true "ECS-driven rendering"

* To make this perform well, I implemented #2673 in upstream Bevy for fast batch insertions into specific entities. This was merged into the `pipelined-rendering` branch here: #2815

* Reworked the `Draw` abstraction:

* Generic `PhaseItems`: each draw phase can define its own type of "rendered thing", which can define its own "sort key"

* Ported the 2d, 3d, and shadow phases to the new PhaseItem impl (currently Transparent2d, Transparent3d, and Shadow PhaseItems)

* `Draw` trait and and `DrawFunctions` are now generic on PhaseItem

* Modular / Ergonomic `DrawFunctions` via `RenderCommands`

* RenderCommand is a trait that runs an ECS query and produces one or more RenderPass calls. Types implementing this trait can be composed to create a final DrawFunction. For example the DrawPbr DrawFunction is created from the following DrawCommand tuple. Const generics are used to set specific bind group locations:

```rust

pub type DrawPbr = (

SetPbrPipeline,

SetMeshViewBindGroup<0>,

SetStandardMaterialBindGroup<1>,

SetTransformBindGroup<2>,

DrawMesh,

);

```

* The new `custom_shader_pipelined` example illustrates how the commands above can be reused to create a custom draw function:

```rust

type DrawCustom = (

SetCustomMaterialPipeline,

SetMeshViewBindGroup<0>,

SetTransformBindGroup<2>,

DrawMesh,

);

```

* ExtractComponentPlugin and UniformComponentPlugin:

* Simple, standardized ways to easily extract individual components and write them to GPU buffers

* Ported PBR and Sprite rendering to the new primitives above.

* Removed staging buffer from UniformVec in favor of direct Queue usage

* Makes UniformVec much easier to use and more ergonomic. Completely removes the need for custom render graph nodes in these contexts (see the PbrNode and view Node removals and the much simpler call patterns in the relevant Prepare systems).

* Added a many_cubes_pipelined example to benchmark baseline 3d rendering performance and ensure there were no major regressions during this port. Avoiding regressions was challenging given that the old approach of extracting into centralized vectors is basically the "optimal" approach. However thanks to a various ECS optimizations and render logic rephrasing, we pretty much break even on this benchmark!

* Lifetimeless SystemParams: this will be a bit divisive, but as we continue to embrace "trait driven systems" (ex: ExtractComponentPlugin, UniformComponentPlugin, DrawCommand), the ergonomics of `(Query<'static, 'static, (&'static A, &'static B, &'static)>, Res<'static, C>)` were getting very hard to bear. As a compromise, I added "static type aliases" for the relevant SystemParams. The previous example can now be expressed like this: `(SQuery<(Read<A>, Read<B>)>, SRes<C>)`. If anyone has better ideas / conflicting opinions, please let me know!

* RunSystem trait: a way to define Systems via a trait with a SystemParam associated type. This is used to implement the various plugins mentioned above. I also added SystemParamItem and QueryItem type aliases to make "trait stye" ecs interactions nicer on the eyes (and fingers).

* RenderAsset retrying: ensures that render assets are only created when they are "ready" and allows us to create bind groups directly inside render assets (which significantly simplified the StandardMaterial code). I think ultimately we should swap this out on "asset dependency" events to wait for dependencies to load, but this will require significant asset system changes.

* Updated some built in shaders to account for missing MeshUniform fields

2021-09-23 06:16:11 +00:00

|

|

|

render_phase::{

|

2021-11-12 22:27:17 +00:00

|

|

|

CachedPipelinePhaseItem, DrawFunctionId, DrawFunctions, EntityPhaseItem,

|

Shader Imports. Decouple Mesh logic from PBR (#3137)

## Shader Imports

This adds "whole file" shader imports. These come in two flavors:

### Asset Path Imports

```rust

// /assets/shaders/custom.wgsl

#import "shaders/custom_material.wgsl"

[[stage(fragment)]]

fn fragment() -> [[location(0)]] vec4<f32> {

return get_color();

}

```

```rust

// /assets/shaders/custom_material.wgsl

[[block]]

struct CustomMaterial {

color: vec4<f32>;

};

[[group(1), binding(0)]]

var<uniform> material: CustomMaterial;

```

### Custom Path Imports

Enables defining custom import paths. These are intended to be used by crates to export shader functionality:

```rust

// bevy_pbr2/src/render/pbr.wgsl

#import bevy_pbr::mesh_view_bind_group

#import bevy_pbr::mesh_bind_group

[[block]]

struct StandardMaterial {

base_color: vec4<f32>;

emissive: vec4<f32>;

perceptual_roughness: f32;

metallic: f32;

reflectance: f32;

flags: u32;

};

/* rest of PBR fragment shader here */

```

```rust

impl Plugin for MeshRenderPlugin {

fn build(&self, app: &mut bevy_app::App) {

let mut shaders = app.world.get_resource_mut::<Assets<Shader>>().unwrap();

shaders.set_untracked(

MESH_BIND_GROUP_HANDLE,

Shader::from_wgsl(include_str!("mesh_bind_group.wgsl"))

.with_import_path("bevy_pbr::mesh_bind_group"),

);

shaders.set_untracked(

MESH_VIEW_BIND_GROUP_HANDLE,

Shader::from_wgsl(include_str!("mesh_view_bind_group.wgsl"))

.with_import_path("bevy_pbr::mesh_view_bind_group"),

);

```

By convention these should use rust-style module paths that start with the crate name. Ultimately we might enforce this convention.

Note that this feature implements _run time_ import resolution. Ultimately we should move the import logic into an asset preprocessor once Bevy gets support for that.

## Decouple Mesh Logic from PBR Logic via MeshRenderPlugin

This breaks out mesh rendering code from PBR material code, which improves the legibility of the code, decouples mesh logic from PBR logic, and opens the door for a future `MaterialPlugin<T: Material>` that handles all of the pipeline setup for arbitrary shader materials.

## Removed `RenderAsset<Shader>` in favor of extracting shaders into RenderPipelineCache

This simplifies the shader import implementation and removes the need to pass around `RenderAssets<Shader>`.

## RenderCommands are now fallible

This allows us to cleanly handle pipelines+shaders not being ready yet. We can abort a render command early in these cases, preventing bevy from trying to bind group / do draw calls for pipelines that couldn't be bound. This could also be used in the future for things like "components not existing on entities yet".

# Next Steps

* Investigate using Naga for "partial typed imports" (ex: `#import bevy_pbr::material::StandardMaterial`, which would import only the StandardMaterial struct)

* Implement `MaterialPlugin<T: Material>` for low-boilerplate custom material shaders

* Move shader import logic into the asset preprocessor once bevy gets support for that.

Fixes #3132

2021-11-18 03:45:02 +00:00

|

|

|

EntityRenderCommand, PhaseItem, RenderCommandResult, RenderPhase, SetItemPipeline,

|

|

|

|

|

TrackedRenderPass,

|

Modular Rendering (#2831)

This changes how render logic is composed to make it much more modular. Previously, all extraction logic was centralized for a given "type" of rendered thing. For example, we extracted meshes into a vector of ExtractedMesh, which contained the mesh and material asset handles, the transform, etc. We looked up bindings for "drawn things" using their index in the `Vec<ExtractedMesh>`. This worked fine for built in rendering, but made it hard to reuse logic for "custom" rendering. It also prevented us from reusing things like "extracted transforms" across contexts.

To make rendering more modular, I made a number of changes:

* Entities now drive rendering:

* We extract "render components" from "app components" and store them _on_ entities. No more centralized uber lists! We now have true "ECS-driven rendering"

* To make this perform well, I implemented #2673 in upstream Bevy for fast batch insertions into specific entities. This was merged into the `pipelined-rendering` branch here: #2815

* Reworked the `Draw` abstraction:

* Generic `PhaseItems`: each draw phase can define its own type of "rendered thing", which can define its own "sort key"

* Ported the 2d, 3d, and shadow phases to the new PhaseItem impl (currently Transparent2d, Transparent3d, and Shadow PhaseItems)

* `Draw` trait and and `DrawFunctions` are now generic on PhaseItem

* Modular / Ergonomic `DrawFunctions` via `RenderCommands`

* RenderCommand is a trait that runs an ECS query and produces one or more RenderPass calls. Types implementing this trait can be composed to create a final DrawFunction. For example the DrawPbr DrawFunction is created from the following DrawCommand tuple. Const generics are used to set specific bind group locations:

```rust

pub type DrawPbr = (

SetPbrPipeline,

SetMeshViewBindGroup<0>,

SetStandardMaterialBindGroup<1>,

SetTransformBindGroup<2>,

DrawMesh,

);

```

* The new `custom_shader_pipelined` example illustrates how the commands above can be reused to create a custom draw function:

```rust

type DrawCustom = (

SetCustomMaterialPipeline,

SetMeshViewBindGroup<0>,

SetTransformBindGroup<2>,

DrawMesh,

);

```

* ExtractComponentPlugin and UniformComponentPlugin:

* Simple, standardized ways to easily extract individual components and write them to GPU buffers

* Ported PBR and Sprite rendering to the new primitives above.

* Removed staging buffer from UniformVec in favor of direct Queue usage

* Makes UniformVec much easier to use and more ergonomic. Completely removes the need for custom render graph nodes in these contexts (see the PbrNode and view Node removals and the much simpler call patterns in the relevant Prepare systems).

* Added a many_cubes_pipelined example to benchmark baseline 3d rendering performance and ensure there were no major regressions during this port. Avoiding regressions was challenging given that the old approach of extracting into centralized vectors is basically the "optimal" approach. However thanks to a various ECS optimizations and render logic rephrasing, we pretty much break even on this benchmark!

* Lifetimeless SystemParams: this will be a bit divisive, but as we continue to embrace "trait driven systems" (ex: ExtractComponentPlugin, UniformComponentPlugin, DrawCommand), the ergonomics of `(Query<'static, 'static, (&'static A, &'static B, &'static)>, Res<'static, C>)` were getting very hard to bear. As a compromise, I added "static type aliases" for the relevant SystemParams. The previous example can now be expressed like this: `(SQuery<(Read<A>, Read<B>)>, SRes<C>)`. If anyone has better ideas / conflicting opinions, please let me know!

* RunSystem trait: a way to define Systems via a trait with a SystemParam associated type. This is used to implement the various plugins mentioned above. I also added SystemParamItem and QueryItem type aliases to make "trait stye" ecs interactions nicer on the eyes (and fingers).

* RenderAsset retrying: ensures that render assets are only created when they are "ready" and allows us to create bind groups directly inside render assets (which significantly simplified the StandardMaterial code). I think ultimately we should swap this out on "asset dependency" events to wait for dependencies to load, but this will require significant asset system changes.

* Updated some built in shaders to account for missing MeshUniform fields

2021-09-23 06:16:11 +00:00

|

|

|

},

|

2021-12-23 22:49:12 +00:00

|

|

|

render_resource::{std140::AsStd140, *},

|

Modular Rendering (#2831)

This changes how render logic is composed to make it much more modular. Previously, all extraction logic was centralized for a given "type" of rendered thing. For example, we extracted meshes into a vector of ExtractedMesh, which contained the mesh and material asset handles, the transform, etc. We looked up bindings for "drawn things" using their index in the `Vec<ExtractedMesh>`. This worked fine for built in rendering, but made it hard to reuse logic for "custom" rendering. It also prevented us from reusing things like "extracted transforms" across contexts.

To make rendering more modular, I made a number of changes:

* Entities now drive rendering:

* We extract "render components" from "app components" and store them _on_ entities. No more centralized uber lists! We now have true "ECS-driven rendering"

* To make this perform well, I implemented #2673 in upstream Bevy for fast batch insertions into specific entities. This was merged into the `pipelined-rendering` branch here: #2815

* Reworked the `Draw` abstraction:

* Generic `PhaseItems`: each draw phase can define its own type of "rendered thing", which can define its own "sort key"

* Ported the 2d, 3d, and shadow phases to the new PhaseItem impl (currently Transparent2d, Transparent3d, and Shadow PhaseItems)

* `Draw` trait and and `DrawFunctions` are now generic on PhaseItem

* Modular / Ergonomic `DrawFunctions` via `RenderCommands`

* RenderCommand is a trait that runs an ECS query and produces one or more RenderPass calls. Types implementing this trait can be composed to create a final DrawFunction. For example the DrawPbr DrawFunction is created from the following DrawCommand tuple. Const generics are used to set specific bind group locations:

```rust

pub type DrawPbr = (

SetPbrPipeline,

SetMeshViewBindGroup<0>,

SetStandardMaterialBindGroup<1>,

SetTransformBindGroup<2>,

DrawMesh,

);

```

* The new `custom_shader_pipelined` example illustrates how the commands above can be reused to create a custom draw function:

```rust

type DrawCustom = (

SetCustomMaterialPipeline,

SetMeshViewBindGroup<0>,

SetTransformBindGroup<2>,

DrawMesh,

);

```

* ExtractComponentPlugin and UniformComponentPlugin:

* Simple, standardized ways to easily extract individual components and write them to GPU buffers

* Ported PBR and Sprite rendering to the new primitives above.

* Removed staging buffer from UniformVec in favor of direct Queue usage

* Makes UniformVec much easier to use and more ergonomic. Completely removes the need for custom render graph nodes in these contexts (see the PbrNode and view Node removals and the much simpler call patterns in the relevant Prepare systems).

* Added a many_cubes_pipelined example to benchmark baseline 3d rendering performance and ensure there were no major regressions during this port. Avoiding regressions was challenging given that the old approach of extracting into centralized vectors is basically the "optimal" approach. However thanks to a various ECS optimizations and render logic rephrasing, we pretty much break even on this benchmark!

* Lifetimeless SystemParams: this will be a bit divisive, but as we continue to embrace "trait driven systems" (ex: ExtractComponentPlugin, UniformComponentPlugin, DrawCommand), the ergonomics of `(Query<'static, 'static, (&'static A, &'static B, &'static)>, Res<'static, C>)` were getting very hard to bear. As a compromise, I added "static type aliases" for the relevant SystemParams. The previous example can now be expressed like this: `(SQuery<(Read<A>, Read<B>)>, SRes<C>)`. If anyone has better ideas / conflicting opinions, please let me know!

* RunSystem trait: a way to define Systems via a trait with a SystemParam associated type. This is used to implement the various plugins mentioned above. I also added SystemParamItem and QueryItem type aliases to make "trait stye" ecs interactions nicer on the eyes (and fingers).

* RenderAsset retrying: ensures that render assets are only created when they are "ready" and allows us to create bind groups directly inside render assets (which significantly simplified the StandardMaterial code). I think ultimately we should swap this out on "asset dependency" events to wait for dependencies to load, but this will require significant asset system changes.

* Updated some built in shaders to account for missing MeshUniform fields

2021-09-23 06:16:11 +00:00

|

|

|

renderer::{RenderContext, RenderDevice, RenderQueue},

|

2021-06-02 02:59:17 +00:00

|

|

|

texture::*,

|

Clustered forward rendering (#3153)

# Objective

Implement clustered-forward rendering.

## Solution

~~FIXME - in the interest of keeping the merge train moving, I'm submitting this PR now before the description is ready. I want to add in some comments into the code with references for the various bits and pieces and I want to describe some of the key decisions I made here. I'll do that as soon as I can.~~ Anyone reviewing is welcome to add review comments where you want to know more about how something or other works.

* The summary of the technique is that the view frustum is divided into a grid of sub-volumes called clusters, point lights are tested against each of the clusters to see if they would affect that volume within the scene and if so, added to a list of lights affecting that cluster. Then when shading a fragment which is a point on the surface of a mesh within the scene, the point is mapped to a cluster and only the lights affecting that clusters are used in lighting calculations. This brings huge performance and scalability benefits as most of the time lights are placed so that there are not that many that overlap each other in terms of their sphere of influence, but there may be many distinct point lights visible in the scene. Doing all the lighting calculations for all visible lights in the scene for every pixel on the screen quickly becomes a performance limitation. Clustered forward rendering allows us to make an approximate list of lights that affect each pixel, indeed each surface in the scene (as it works along the view z axis too, unlike tiled/forward+).

* WebGL2 is a platform we want to support and it does not support storage buffers. Uniform buffer bindings are limited to a maximum of 16384 bytes per binding. I used bit shifting and masking to pack the cluster light lists and various indices into a uniform buffer and the 16kB limit is very likely the first bottleneck in scaling the number of lights in a scene at the moment if the lights can affect many clusters due to their range or proximity to the camera (there are a lot of clusters close to the camera, which is an area for improvement). We could store the information in textures instead of uniform buffers to remove this bottleneck though I don’t know if there are performance implications to reading from textures instead if uniform buffers.

* Because of the uniform buffer binding size limitations we can support a maximum of 256 lights with the current size of the PointLight struct

* The z-slicing method (i.e. the mapping from view space z to a depth slice which defines the near and far planes of a cluster) is using the Doom 2016 method. I need to add comments with references to this. It’s an exponential function that simplifies well for the purposes of optimising the fragment shader. xy grid divisions are regular in screen space.

* Some optimisation work was done on the allocation of lights to clusters, which involves intersection tests, and for this number of clusters and lights the system has insignificant cost using a fairly naïve algorithm. I think for more lights / finer-grained clusters we could use a BVH, but at some point it would be just much better to use compute shaders and storage buffers.

* Something else to note is that it is absolutely infeasible to use plain cube map point light shadow mapping for many lights. It does not scale in terms of performance nor memory usage. There are some interesting methods I saw discussed in reference material that I will add a link to which render and update shadow maps piece-wise, but they also need compute shaders to work well. Basically for now you need to sacrifice point light shadows for all but a handful of point lights if you don’t want to kill performance. I set the limit to 10 but that’s just what we had from before where 10 was the maximum number of point lights before this PR.

* I added a couple of debug visualisations behind a shader def that were useful for seeing performance impact of light distribution - I should make the debug mode configurable without modifying the shader code. One mode shows the number of lights affecting each cluster by tinting toward red for few lights or green for many lights (maxes out at 16, but not sure that’s a reasonable max). The other shows which cluster the surface at a fragment belongs to by tinting it with a randomish colour. This can help to understand deeper performance issues due to screen space tiles spanning multiple clusters in depth with divergent shader execution times.

Also, there are more things that could be done as improvements, and I will document those somewhere (I'm not sure where will be the best place... in a todo alongside the code, a GitHub issue, somewhere else?) but I think it works well enough and brings significant performance and scalability benefits that it's worth integrating already now and then iterating on.

* Calculate the light’s effective range based on its intensity and physical falloff and either just use this, or take the minimum of the user-supplied range and this. This would avoid unnecessary lighting calculations for clusters that cannot be affected. This would need to take into account HDR tone mapping as in my not-fully-understanding-the-details understanding, the threshold is relative to how bright the scene is.

* Improve the z-slicing to use a larger first slice.

* More gracefully handle the cluster light list uniform buffer binding size limitations by prioritising which lights are included (some heuristic for most significant like closest to the camera, brightest, affecting the most pixels, …)

* Switch to using a texture instead of uniform buffer

* Figure out the / a better story for shadows

I will also probably add an example that demonstrates some of the issues:

* What situations exhaust the space available in the uniform buffers

* Light range too large making lights affect many clusters and so exhausting the space for the lists of lights that affect clusters

* Light range set to be too small producing visible artifacts where clusters the light would physically affect are not affected by the light

* Perhaps some performance issues

* How many lights can be closely packed or affect large portions of the view before performance drops?

2021-12-09 03:08:54 +00:00

|

|

|

view::{ExtractedView, ViewUniform, ViewUniformOffset, ViewUniforms, VisibleEntities},

|

2021-06-02 02:59:17 +00:00

|

|

|

};

|

|

|

|

|

use bevy_transform::components::GlobalTransform;

|

Clustered forward rendering (#3153)

# Objective

Implement clustered-forward rendering.

## Solution

~~FIXME - in the interest of keeping the merge train moving, I'm submitting this PR now before the description is ready. I want to add in some comments into the code with references for the various bits and pieces and I want to describe some of the key decisions I made here. I'll do that as soon as I can.~~ Anyone reviewing is welcome to add review comments where you want to know more about how something or other works.

* The summary of the technique is that the view frustum is divided into a grid of sub-volumes called clusters, point lights are tested against each of the clusters to see if they would affect that volume within the scene and if so, added to a list of lights affecting that cluster. Then when shading a fragment which is a point on the surface of a mesh within the scene, the point is mapped to a cluster and only the lights affecting that clusters are used in lighting calculations. This brings huge performance and scalability benefits as most of the time lights are placed so that there are not that many that overlap each other in terms of their sphere of influence, but there may be many distinct point lights visible in the scene. Doing all the lighting calculations for all visible lights in the scene for every pixel on the screen quickly becomes a performance limitation. Clustered forward rendering allows us to make an approximate list of lights that affect each pixel, indeed each surface in the scene (as it works along the view z axis too, unlike tiled/forward+).

* WebGL2 is a platform we want to support and it does not support storage buffers. Uniform buffer bindings are limited to a maximum of 16384 bytes per binding. I used bit shifting and masking to pack the cluster light lists and various indices into a uniform buffer and the 16kB limit is very likely the first bottleneck in scaling the number of lights in a scene at the moment if the lights can affect many clusters due to their range or proximity to the camera (there are a lot of clusters close to the camera, which is an area for improvement). We could store the information in textures instead of uniform buffers to remove this bottleneck though I don’t know if there are performance implications to reading from textures instead if uniform buffers.

* Because of the uniform buffer binding size limitations we can support a maximum of 256 lights with the current size of the PointLight struct

* The z-slicing method (i.e. the mapping from view space z to a depth slice which defines the near and far planes of a cluster) is using the Doom 2016 method. I need to add comments with references to this. It’s an exponential function that simplifies well for the purposes of optimising the fragment shader. xy grid divisions are regular in screen space.

* Some optimisation work was done on the allocation of lights to clusters, which involves intersection tests, and for this number of clusters and lights the system has insignificant cost using a fairly naïve algorithm. I think for more lights / finer-grained clusters we could use a BVH, but at some point it would be just much better to use compute shaders and storage buffers.

* Something else to note is that it is absolutely infeasible to use plain cube map point light shadow mapping for many lights. It does not scale in terms of performance nor memory usage. There are some interesting methods I saw discussed in reference material that I will add a link to which render and update shadow maps piece-wise, but they also need compute shaders to work well. Basically for now you need to sacrifice point light shadows for all but a handful of point lights if you don’t want to kill performance. I set the limit to 10 but that’s just what we had from before where 10 was the maximum number of point lights before this PR.

* I added a couple of debug visualisations behind a shader def that were useful for seeing performance impact of light distribution - I should make the debug mode configurable without modifying the shader code. One mode shows the number of lights affecting each cluster by tinting toward red for few lights or green for many lights (maxes out at 16, but not sure that’s a reasonable max). The other shows which cluster the surface at a fragment belongs to by tinting it with a randomish colour. This can help to understand deeper performance issues due to screen space tiles spanning multiple clusters in depth with divergent shader execution times.

Also, there are more things that could be done as improvements, and I will document those somewhere (I'm not sure where will be the best place... in a todo alongside the code, a GitHub issue, somewhere else?) but I think it works well enough and brings significant performance and scalability benefits that it's worth integrating already now and then iterating on.

* Calculate the light’s effective range based on its intensity and physical falloff and either just use this, or take the minimum of the user-supplied range and this. This would avoid unnecessary lighting calculations for clusters that cannot be affected. This would need to take into account HDR tone mapping as in my not-fully-understanding-the-details understanding, the threshold is relative to how bright the scene is.

* Improve the z-slicing to use a larger first slice.

* More gracefully handle the cluster light list uniform buffer binding size limitations by prioritising which lights are included (some heuristic for most significant like closest to the camera, brightest, affecting the most pixels, …)

* Switch to using a texture instead of uniform buffer

* Figure out the / a better story for shadows

I will also probably add an example that demonstrates some of the issues:

* What situations exhaust the space available in the uniform buffers

* Light range too large making lights affect many clusters and so exhausting the space for the lists of lights that affect clusters

* Light range set to be too small producing visible artifacts where clusters the light would physically affect are not affected by the light

* Perhaps some performance issues

* How many lights can be closely packed or affect large portions of the view before performance drops?

2021-12-09 03:08:54 +00:00

|

|

|

use bevy_utils::{tracing::warn, HashMap};

|

2021-06-02 02:59:17 +00:00

|

|

|

use std::num::NonZeroU32;

|

|

|

|

|

|

Frustum culling (#2861)

# Objective

Implement frustum culling for much better performance on more complex scenes. With the Amazon Lumberyard Bistro scene, I was getting roughly 15fps without frustum culling and 60+fps with frustum culling on a MacBook Pro 16 with i9 9980HK 8c/16t CPU and Radeon Pro 5500M.

macOS does weird things with vsync so even though vsync was off, it really looked like sometimes other applications or the desktop window compositor were interfering, but the difference could be even more as I even saw up to 90+fps sometimes.

## Solution

- Until the https://github.com/bevyengine/rfcs/pull/12 RFC is completed, I wanted to implement at least some of the bounding volume functionality we needed to be able to unblock a bunch of rendering features and optimisations such as frustum culling, fitting the directional light orthographic projection to the relevant meshes in the view, clustered forward rendering, etc.

- I have added `Aabb`, `Frustum`, and `Sphere` types with only the necessary intersection tests for the algorithms used. I also added `CubemapFrusta` which contains a `[Frustum; 6]` and can be used by cube maps such as environment maps, and point light shadow maps.

- I did do a bit of benchmarking and optimisation on the intersection tests. I compared the [rafx parallel-comparison bitmask approach](https://github.com/aclysma/rafx/blob/c91bd5fcfdfa3f4d1b43507c32d84b94ffdf1b2e/rafx-visibility/src/geometry/frustum.rs#L64-L92) with a naïve loop that has an early-out in case of a bounding volume being outside of any one of the `Frustum` planes and found them to be very similar, so I chose the simpler and more readable option. I also compared using Vec3 and Vec3A and it turned out that promoting Vec3s to Vec3A improved performance of the culling significantly due to Vec3A operations using SIMD optimisations where Vec3 uses plain scalar operations.

- When loading glTF models, the vertex attribute accessors generally store the minimum and maximum values, which allows for adding AABBs to meshes loaded from glTF for free.

- For meshes without an AABB (`PbrBundle` deliberately does not have an AABB by default), a system is executed that scans over the vertex positions to find the minimum and maximum values along each axis. This is used to construct the AABB.

- The `Frustum::intersects_obb` and `Sphere::insersects_obb` algorithm is from Foundations of Game Engine Development 2: Rendering by Eric Lengyel. There is no OBB type, yet, rather an AABB and the model matrix are passed in as arguments. This calculates a 'relative radius' of the AABB with respect to the plane normal (the plane normal in the Sphere case being something I came up with as the direction pointing from the centre of the sphere to the centre of the AABB) such that it can then do a sphere-sphere intersection test in practice.

- `RenderLayers` were copied over from the current renderer.

- `VisibleEntities` was copied over from the current renderer and a `CubemapVisibleEntities` was added to support `PointLight`s for now. `VisibleEntities` are added to views (cameras and lights) and contain a `Vec<Entity>` that is populated by culling/visibility systems that run in PostUpdate of the app world, and are iterated over in the render world for, for example, queuing up meshes to be drawn by lights for shadow maps and the main pass for cameras.

- `Visibility` and `ComputedVisibility` components were added. The `Visibility` component is user-facing so that, for example, the entity can be marked as not visible in an editor. `ComputedVisibility` on the other hand is the result of the culling/visibility systems and takes `Visibility` into account. So if an entity is marked as not being visible in its `Visibility` component, that will skip culling/visibility intersection tests and just mark the `ComputedVisibility` as false.

- The `ComputedVisibility` is used to decide which meshes to extract.

- I had to add a way to get the far plane from the `CameraProjection` in order to define an explicit far frustum plane for culling. This should perhaps be optional as it is not always desired and in that case, testing 5 planes instead of 6 is a performance win.

I think that's about all. I discussed some of the design with @cart on Discord already so hopefully it's not too far from being mergeable. It works well at least. 😄

2021-11-07 21:45:52 +00:00

|

|

|

#[derive(Debug, Hash, PartialEq, Eq, Clone, SystemLabel)]

|

|

|

|

|

pub enum RenderLightSystems {

|

Clustered forward rendering (#3153)

# Objective

Implement clustered-forward rendering.

## Solution

~~FIXME - in the interest of keeping the merge train moving, I'm submitting this PR now before the description is ready. I want to add in some comments into the code with references for the various bits and pieces and I want to describe some of the key decisions I made here. I'll do that as soon as I can.~~ Anyone reviewing is welcome to add review comments where you want to know more about how something or other works.

* The summary of the technique is that the view frustum is divided into a grid of sub-volumes called clusters, point lights are tested against each of the clusters to see if they would affect that volume within the scene and if so, added to a list of lights affecting that cluster. Then when shading a fragment which is a point on the surface of a mesh within the scene, the point is mapped to a cluster and only the lights affecting that clusters are used in lighting calculations. This brings huge performance and scalability benefits as most of the time lights are placed so that there are not that many that overlap each other in terms of their sphere of influence, but there may be many distinct point lights visible in the scene. Doing all the lighting calculations for all visible lights in the scene for every pixel on the screen quickly becomes a performance limitation. Clustered forward rendering allows us to make an approximate list of lights that affect each pixel, indeed each surface in the scene (as it works along the view z axis too, unlike tiled/forward+).

* WebGL2 is a platform we want to support and it does not support storage buffers. Uniform buffer bindings are limited to a maximum of 16384 bytes per binding. I used bit shifting and masking to pack the cluster light lists and various indices into a uniform buffer and the 16kB limit is very likely the first bottleneck in scaling the number of lights in a scene at the moment if the lights can affect many clusters due to their range or proximity to the camera (there are a lot of clusters close to the camera, which is an area for improvement). We could store the information in textures instead of uniform buffers to remove this bottleneck though I don’t know if there are performance implications to reading from textures instead if uniform buffers.

* Because of the uniform buffer binding size limitations we can support a maximum of 256 lights with the current size of the PointLight struct

* The z-slicing method (i.e. the mapping from view space z to a depth slice which defines the near and far planes of a cluster) is using the Doom 2016 method. I need to add comments with references to this. It’s an exponential function that simplifies well for the purposes of optimising the fragment shader. xy grid divisions are regular in screen space.

* Some optimisation work was done on the allocation of lights to clusters, which involves intersection tests, and for this number of clusters and lights the system has insignificant cost using a fairly naïve algorithm. I think for more lights / finer-grained clusters we could use a BVH, but at some point it would be just much better to use compute shaders and storage buffers.

* Something else to note is that it is absolutely infeasible to use plain cube map point light shadow mapping for many lights. It does not scale in terms of performance nor memory usage. There are some interesting methods I saw discussed in reference material that I will add a link to which render and update shadow maps piece-wise, but they also need compute shaders to work well. Basically for now you need to sacrifice point light shadows for all but a handful of point lights if you don’t want to kill performance. I set the limit to 10 but that’s just what we had from before where 10 was the maximum number of point lights before this PR.

* I added a couple of debug visualisations behind a shader def that were useful for seeing performance impact of light distribution - I should make the debug mode configurable without modifying the shader code. One mode shows the number of lights affecting each cluster by tinting toward red for few lights or green for many lights (maxes out at 16, but not sure that’s a reasonable max). The other shows which cluster the surface at a fragment belongs to by tinting it with a randomish colour. This can help to understand deeper performance issues due to screen space tiles spanning multiple clusters in depth with divergent shader execution times.

Also, there are more things that could be done as improvements, and I will document those somewhere (I'm not sure where will be the best place... in a todo alongside the code, a GitHub issue, somewhere else?) but I think it works well enough and brings significant performance and scalability benefits that it's worth integrating already now and then iterating on.

* Calculate the light’s effective range based on its intensity and physical falloff and either just use this, or take the minimum of the user-supplied range and this. This would avoid unnecessary lighting calculations for clusters that cannot be affected. This would need to take into account HDR tone mapping as in my not-fully-understanding-the-details understanding, the threshold is relative to how bright the scene is.

* Improve the z-slicing to use a larger first slice.

* More gracefully handle the cluster light list uniform buffer binding size limitations by prioritising which lights are included (some heuristic for most significant like closest to the camera, brightest, affecting the most pixels, …)

* Switch to using a texture instead of uniform buffer

* Figure out the / a better story for shadows

I will also probably add an example that demonstrates some of the issues:

* What situations exhaust the space available in the uniform buffers

* Light range too large making lights affect many clusters and so exhausting the space for the lists of lights that affect clusters

* Light range set to be too small producing visible artifacts where clusters the light would physically affect are not affected by the light

* Perhaps some performance issues

* How many lights can be closely packed or affect large portions of the view before performance drops?

2021-12-09 03:08:54 +00:00

|

|

|

ExtractClusters,

|

Frustum culling (#2861)

# Objective

Implement frustum culling for much better performance on more complex scenes. With the Amazon Lumberyard Bistro scene, I was getting roughly 15fps without frustum culling and 60+fps with frustum culling on a MacBook Pro 16 with i9 9980HK 8c/16t CPU and Radeon Pro 5500M.

macOS does weird things with vsync so even though vsync was off, it really looked like sometimes other applications or the desktop window compositor were interfering, but the difference could be even more as I even saw up to 90+fps sometimes.

## Solution

- Until the https://github.com/bevyengine/rfcs/pull/12 RFC is completed, I wanted to implement at least some of the bounding volume functionality we needed to be able to unblock a bunch of rendering features and optimisations such as frustum culling, fitting the directional light orthographic projection to the relevant meshes in the view, clustered forward rendering, etc.

- I have added `Aabb`, `Frustum`, and `Sphere` types with only the necessary intersection tests for the algorithms used. I also added `CubemapFrusta` which contains a `[Frustum; 6]` and can be used by cube maps such as environment maps, and point light shadow maps.

- I did do a bit of benchmarking and optimisation on the intersection tests. I compared the [rafx parallel-comparison bitmask approach](https://github.com/aclysma/rafx/blob/c91bd5fcfdfa3f4d1b43507c32d84b94ffdf1b2e/rafx-visibility/src/geometry/frustum.rs#L64-L92) with a naïve loop that has an early-out in case of a bounding volume being outside of any one of the `Frustum` planes and found them to be very similar, so I chose the simpler and more readable option. I also compared using Vec3 and Vec3A and it turned out that promoting Vec3s to Vec3A improved performance of the culling significantly due to Vec3A operations using SIMD optimisations where Vec3 uses plain scalar operations.

- When loading glTF models, the vertex attribute accessors generally store the minimum and maximum values, which allows for adding AABBs to meshes loaded from glTF for free.

- For meshes without an AABB (`PbrBundle` deliberately does not have an AABB by default), a system is executed that scans over the vertex positions to find the minimum and maximum values along each axis. This is used to construct the AABB.

- The `Frustum::intersects_obb` and `Sphere::insersects_obb` algorithm is from Foundations of Game Engine Development 2: Rendering by Eric Lengyel. There is no OBB type, yet, rather an AABB and the model matrix are passed in as arguments. This calculates a 'relative radius' of the AABB with respect to the plane normal (the plane normal in the Sphere case being something I came up with as the direction pointing from the centre of the sphere to the centre of the AABB) such that it can then do a sphere-sphere intersection test in practice.

- `RenderLayers` were copied over from the current renderer.

- `VisibleEntities` was copied over from the current renderer and a `CubemapVisibleEntities` was added to support `PointLight`s for now. `VisibleEntities` are added to views (cameras and lights) and contain a `Vec<Entity>` that is populated by culling/visibility systems that run in PostUpdate of the app world, and are iterated over in the render world for, for example, queuing up meshes to be drawn by lights for shadow maps and the main pass for cameras.

- `Visibility` and `ComputedVisibility` components were added. The `Visibility` component is user-facing so that, for example, the entity can be marked as not visible in an editor. `ComputedVisibility` on the other hand is the result of the culling/visibility systems and takes `Visibility` into account. So if an entity is marked as not being visible in its `Visibility` component, that will skip culling/visibility intersection tests and just mark the `ComputedVisibility` as false.

- The `ComputedVisibility` is used to decide which meshes to extract.

- I had to add a way to get the far plane from the `CameraProjection` in order to define an explicit far frustum plane for culling. This should perhaps be optional as it is not always desired and in that case, testing 5 planes instead of 6 is a performance win.

I think that's about all. I discussed some of the design with @cart on Discord already so hopefully it's not too far from being mergeable. It works well at least. 😄

2021-11-07 21:45:52 +00:00

|

|

|

ExtractLights,

|

Clustered forward rendering (#3153)

# Objective

Implement clustered-forward rendering.

## Solution

~~FIXME - in the interest of keeping the merge train moving, I'm submitting this PR now before the description is ready. I want to add in some comments into the code with references for the various bits and pieces and I want to describe some of the key decisions I made here. I'll do that as soon as I can.~~ Anyone reviewing is welcome to add review comments where you want to know more about how something or other works.

* The summary of the technique is that the view frustum is divided into a grid of sub-volumes called clusters, point lights are tested against each of the clusters to see if they would affect that volume within the scene and if so, added to a list of lights affecting that cluster. Then when shading a fragment which is a point on the surface of a mesh within the scene, the point is mapped to a cluster and only the lights affecting that clusters are used in lighting calculations. This brings huge performance and scalability benefits as most of the time lights are placed so that there are not that many that overlap each other in terms of their sphere of influence, but there may be many distinct point lights visible in the scene. Doing all the lighting calculations for all visible lights in the scene for every pixel on the screen quickly becomes a performance limitation. Clustered forward rendering allows us to make an approximate list of lights that affect each pixel, indeed each surface in the scene (as it works along the view z axis too, unlike tiled/forward+).

* WebGL2 is a platform we want to support and it does not support storage buffers. Uniform buffer bindings are limited to a maximum of 16384 bytes per binding. I used bit shifting and masking to pack the cluster light lists and various indices into a uniform buffer and the 16kB limit is very likely the first bottleneck in scaling the number of lights in a scene at the moment if the lights can affect many clusters due to their range or proximity to the camera (there are a lot of clusters close to the camera, which is an area for improvement). We could store the information in textures instead of uniform buffers to remove this bottleneck though I don’t know if there are performance implications to reading from textures instead if uniform buffers.

* Because of the uniform buffer binding size limitations we can support a maximum of 256 lights with the current size of the PointLight struct

* The z-slicing method (i.e. the mapping from view space z to a depth slice which defines the near and far planes of a cluster) is using the Doom 2016 method. I need to add comments with references to this. It’s an exponential function that simplifies well for the purposes of optimising the fragment shader. xy grid divisions are regular in screen space.

* Some optimisation work was done on the allocation of lights to clusters, which involves intersection tests, and for this number of clusters and lights the system has insignificant cost using a fairly naïve algorithm. I think for more lights / finer-grained clusters we could use a BVH, but at some point it would be just much better to use compute shaders and storage buffers.

* Something else to note is that it is absolutely infeasible to use plain cube map point light shadow mapping for many lights. It does not scale in terms of performance nor memory usage. There are some interesting methods I saw discussed in reference material that I will add a link to which render and update shadow maps piece-wise, but they also need compute shaders to work well. Basically for now you need to sacrifice point light shadows for all but a handful of point lights if you don’t want to kill performance. I set the limit to 10 but that’s just what we had from before where 10 was the maximum number of point lights before this PR.

* I added a couple of debug visualisations behind a shader def that were useful for seeing performance impact of light distribution - I should make the debug mode configurable without modifying the shader code. One mode shows the number of lights affecting each cluster by tinting toward red for few lights or green for many lights (maxes out at 16, but not sure that’s a reasonable max). The other shows which cluster the surface at a fragment belongs to by tinting it with a randomish colour. This can help to understand deeper performance issues due to screen space tiles spanning multiple clusters in depth with divergent shader execution times.

Also, there are more things that could be done as improvements, and I will document those somewhere (I'm not sure where will be the best place... in a todo alongside the code, a GitHub issue, somewhere else?) but I think it works well enough and brings significant performance and scalability benefits that it's worth integrating already now and then iterating on.

* Calculate the light’s effective range based on its intensity and physical falloff and either just use this, or take the minimum of the user-supplied range and this. This would avoid unnecessary lighting calculations for clusters that cannot be affected. This would need to take into account HDR tone mapping as in my not-fully-understanding-the-details understanding, the threshold is relative to how bright the scene is.

* Improve the z-slicing to use a larger first slice.

* More gracefully handle the cluster light list uniform buffer binding size limitations by prioritising which lights are included (some heuristic for most significant like closest to the camera, brightest, affecting the most pixels, …)

* Switch to using a texture instead of uniform buffer

* Figure out the / a better story for shadows

I will also probably add an example that demonstrates some of the issues:

* What situations exhaust the space available in the uniform buffers

* Light range too large making lights affect many clusters and so exhausting the space for the lists of lights that affect clusters

* Light range set to be too small producing visible artifacts where clusters the light would physically affect are not affected by the light

* Perhaps some performance issues

* How many lights can be closely packed or affect large portions of the view before performance drops?

2021-12-09 03:08:54 +00:00

|

|

|

PrepareClusters,

|

Frustum culling (#2861)

# Objective

Implement frustum culling for much better performance on more complex scenes. With the Amazon Lumberyard Bistro scene, I was getting roughly 15fps without frustum culling and 60+fps with frustum culling on a MacBook Pro 16 with i9 9980HK 8c/16t CPU and Radeon Pro 5500M.

macOS does weird things with vsync so even though vsync was off, it really looked like sometimes other applications or the desktop window compositor were interfering, but the difference could be even more as I even saw up to 90+fps sometimes.

## Solution

- Until the https://github.com/bevyengine/rfcs/pull/12 RFC is completed, I wanted to implement at least some of the bounding volume functionality we needed to be able to unblock a bunch of rendering features and optimisations such as frustum culling, fitting the directional light orthographic projection to the relevant meshes in the view, clustered forward rendering, etc.

- I have added `Aabb`, `Frustum`, and `Sphere` types with only the necessary intersection tests for the algorithms used. I also added `CubemapFrusta` which contains a `[Frustum; 6]` and can be used by cube maps such as environment maps, and point light shadow maps.

- I did do a bit of benchmarking and optimisation on the intersection tests. I compared the [rafx parallel-comparison bitmask approach](https://github.com/aclysma/rafx/blob/c91bd5fcfdfa3f4d1b43507c32d84b94ffdf1b2e/rafx-visibility/src/geometry/frustum.rs#L64-L92) with a naïve loop that has an early-out in case of a bounding volume being outside of any one of the `Frustum` planes and found them to be very similar, so I chose the simpler and more readable option. I also compared using Vec3 and Vec3A and it turned out that promoting Vec3s to Vec3A improved performance of the culling significantly due to Vec3A operations using SIMD optimisations where Vec3 uses plain scalar operations.

- When loading glTF models, the vertex attribute accessors generally store the minimum and maximum values, which allows for adding AABBs to meshes loaded from glTF for free.

- For meshes without an AABB (`PbrBundle` deliberately does not have an AABB by default), a system is executed that scans over the vertex positions to find the minimum and maximum values along each axis. This is used to construct the AABB.

- The `Frustum::intersects_obb` and `Sphere::insersects_obb` algorithm is from Foundations of Game Engine Development 2: Rendering by Eric Lengyel. There is no OBB type, yet, rather an AABB and the model matrix are passed in as arguments. This calculates a 'relative radius' of the AABB with respect to the plane normal (the plane normal in the Sphere case being something I came up with as the direction pointing from the centre of the sphere to the centre of the AABB) such that it can then do a sphere-sphere intersection test in practice.

- `RenderLayers` were copied over from the current renderer.

- `VisibleEntities` was copied over from the current renderer and a `CubemapVisibleEntities` was added to support `PointLight`s for now. `VisibleEntities` are added to views (cameras and lights) and contain a `Vec<Entity>` that is populated by culling/visibility systems that run in PostUpdate of the app world, and are iterated over in the render world for, for example, queuing up meshes to be drawn by lights for shadow maps and the main pass for cameras.

- `Visibility` and `ComputedVisibility` components were added. The `Visibility` component is user-facing so that, for example, the entity can be marked as not visible in an editor. `ComputedVisibility` on the other hand is the result of the culling/visibility systems and takes `Visibility` into account. So if an entity is marked as not being visible in its `Visibility` component, that will skip culling/visibility intersection tests and just mark the `ComputedVisibility` as false.

- The `ComputedVisibility` is used to decide which meshes to extract.

- I had to add a way to get the far plane from the `CameraProjection` in order to define an explicit far frustum plane for culling. This should perhaps be optional as it is not always desired and in that case, testing 5 planes instead of 6 is a performance win.

I think that's about all. I discussed some of the design with @cart on Discord already so hopefully it's not too far from being mergeable. It works well at least. 😄

2021-11-07 21:45:52 +00:00

|

|

|

PrepareLights,

|

|

|

|

|

QueueShadows,

|

|

|

|

|

}

|

|

|

|

|

|

2021-06-27 23:10:23 +00:00

|

|

|

pub struct ExtractedAmbientLight {

|

|

|

|

|

color: Color,

|

|

|

|

|

brightness: f32,

|

|

|

|

|

}

|

|

|

|

|

|

2021-11-22 23:16:36 +00:00

|

|

|

#[derive(Component)]

|

2021-06-02 02:59:17 +00:00

|

|

|

pub struct ExtractedPointLight {

|

|

|

|

|

color: Color,

|

bevy_pbr2: Improve lighting units and documentation (#2704)

# Objective

A question was raised on Discord about the units of the `PointLight` `intensity` member.

After digging around in the bevy_pbr2 source code and [Google Filament documentation](https://google.github.io/filament/Filament.html#mjx-eqn-pointLightLuminousPower) I discovered that the intention by Filament was that the 'intensity' value for point lights would be in lumens. This makes a lot of sense as these are quite relatable units given basically all light bulbs I've seen sold over the past years are rated in lumens as people move away from thinking about how bright a bulb is relative to a non-halogen incandescent bulb.

However, it seems that the derivation of the conversion between luminous power (lumens, denoted `Φ` in the Filament formulae) and luminous intensity (lumens per steradian, `I` in the Filament formulae) was missed and I can see why as it is tucked right under equation 58 at the link above. As such, while the formula states that for a point light, `I = Φ / 4 π` we have been using `intensity` as if it were luminous intensity `I`.

Before this PR, the intensity field is luminous intensity in lumens per steradian. After this PR, the intensity field is luminous power in lumens, [as suggested by Filament](https://google.github.io/filament/Filament.html#table_lighttypesunits) (unfortunately the link jumps to the table's caption so scroll up to see the actual table).