While reading through the code base, I stumbled across a piece of code that I found hard to read despite its simple purpose. This is my attempt at making the code easier to understand for future readers.

I won't be offended if this is too minor and not worth your time.

TokenMap -> SpanMap rewrite

Opening early so I can have an overview over the full diff more easily, still very unfinished and lots of work to be done.

The gist of what this PR does is move away from assigning IDs to tokens in arguments and expansions and instead gives the subtrees the text ranges they are sourced from (made relative to some item for incrementality). This means we now only have a single map per expension, opposed to map for expansion and arguments.

A few of the things that are not done yet (in arbitrary order):

- [x] generally clean up the current mess

- [x] proc-macros, have been completely ignored so far

- [x] syntax fixups, has been commented out for the time being needs to be rewritten on top of some marker SyntaxContextId

- [x] macro invocation syntax contexts are not properly passed around yet, so $crate hygiene does not work in all cases (but most)

- [x] builtin macros do not set spans properly, $crate basically does not work with them rn (which we use)

~~- [ ] remove all uses of dummy spans (or if that does not work, change the dummy entries for dummy spans so that tests will not silently pass due to havin a file id for the dummy file)~~

- [x] de-queryfy `macro_expand`, the sole caller of it is `parse_macro_expansion`, and both of these are lru-cached with the same limit so having it be a query is pointless

- [x] docs and more docs

- [x] fix eager macro spans and other stuff

- [x] simplify include! handling

- [x] Figure out how to undo the sudden `()` expression wrapping in expansions / alternatively prioritize getting invisible delimiters working again

- [x] Simplify InFile stuff and HirFIleId extensions

~~- [ ] span crate containing all the file ids, span stuff, ast ids. Then remove the dependency injection generics from tt and mbe~~

Fixes https://github.com/rust-lang/rust-analyzer/issues/10300

Fixes https://github.com/rust-lang/rust-analyzer/issues/15685

internal: simplify the removal of dulicate workspaces.

### Summary:

Refactoring the duplicate removal process for `workspaces` in `fetch_workspaces`.

### Changes Made:

Replaced `[].iter().enumerate().skip(...).filter_map(...)` with a more concise `[i+1..].positions(...)` provided by `itertools`, which enhances clarity without changing functionality

### Impact:

This change aims to enhance the duplicate removal process for `workspaces`. This change has been tested on my machine.

Please review and provide feedback. Thanks!

ensure renames happen after edit

This is a bugfix for an issue I fould while working on helix. Rust-analyzer currently always sends any filesystem edits (rename/file creation) before any other edits. When renaming a file that is also being edited that would mean that the edit would be discarded and therefore an incomplete/incorrect refactor (or even cause the creation of a new file in helix altough that is probably a pub on our side).

Example:

* create a module: `mod foo` containing a `pub sturct Bar;`

* reexport the struct uneder a different name in the `foo` module using a *fully qualified path*: `pub use crate::foo::Bar as Bar2`.

* rename the `foo` module to `foo2` using rust-analyzer

* obsereve that the path is not correctly updated (rust-analyer first sends a rename `foo.rs` to `foo2.rs` and then edits `foo.rs` after)

This PR fixes that issue by simply executing all rename operations after all edit operations (while still executing file creation operations first). I also added a testcase similar to the example above.

Relevent excerpt from the LSP standard:

> Since version 3.13.0 a workspace edit can contain resource operations (create, delete or rename files and folders) as well. If resource operations are present clients need to execute the operations in the order in which they are provided. So a workspace edit for example can consist of the following two changes: (1) create file a.txt and (2) a text document edit which insert text into file a.txt. An invalid sequence (e.g. (1) delete file a.txt and (2) insert text into file a.txt) will cause failure of the operation. How the client recovers from the failure is described by the client capability: workspace.workspaceEdit.failureHandling

internal: port anymap

## Description

- The anymap crate has been ported. During this process, unnecessary features for rust-analyzer have been removed.

- From the tests that were checking the existing licenses, the anymap license (`BlueOak-1.0.0 OR MIT OR Apache-2.0`) has been removed.

## Requests

- While porting the code this time, I have tried to respect the original author's intentions and have kept the comments/codes as much as possible. Please don't hesitate to tell me if you think the comments/codes also need to be appropriately modified.

- If there are any necessary changes regarding the licensing or anything else, please let me know so I can fix them.

## Issue

https://github.com/rust-lang/rust-analyzer/issues/15500

Add dedicated field for `target_dir` in the configurations for Cargo

and Flycheck. Also change the directory to be a `PathBuf` as opposed to

a `String` to be more appropriate to the operating system.

Adds a Rust Analyzer configuration option to set a custom

target directory for builds. This is a workaround for Rust Analyzer

blocking debug builds while running `cargo check`. This change

should close#6007

fix: ensure `rustfmt` runs when configured with `./`

(Hopefully) resolves https://github.com/rust-lang/rust-analyzer/issues/15595. This change kinda approaches canonicalization—which I am not a fan of—but only in service of making `./`-configured commands run correctly.

Longer-term, I feel like this code should be removed once `rustfmt` supports recursive searches of configuration files or interpolation of values like `${workspace_folder}` lands in rust-analyzer.

## Testing

I cloned `rustc`, setup rust-analyzer as suggested in the [`rustc` dev guide](https://rustc-dev-guide.rust-lang.org/building/suggested.html#configuring-rust-analyzer-for-rustc), saved and formatted files in `src/tools/miri` and `compiler`, and saw `rustfmt` (seemingly) correctly.

extend check.overrideCommand and buildScripts.overrideCommand docs

Extend check.overrideCommand and buildScripts.overrideCommand docs regarding invocation strategy and location.

However something still seems a bit odd -- the docs for `invocationStrategy`/`invocationLocation` talk about "workspaces", but the setting that controls which workspaces are considered is called `linkedProjects`. Is a project the same as a workspace here or is there some subtle difference?

VSCode behaves strangely, allowing to navigate into label location, but

not allowing to apply hint's text edit, after hint is resolved.

See https://github.com/microsoft/vscode/issues/193124 for details.

For now, stub hint resolution for VSCode specifically.

Switch to in-tree rustc dependencies with a cfg flag

We can use this flag to detect and prevent breakages in rustc CI. (see #14846 and #15569)

~The `IN_RUSTC_REPOSITORY` is just a placeholder. Is there any existing cfg flag that rustc CI sets?~

Resolve inlay hint data

Part of https://github.com/rust-lang/rust-analyzer/issues/13962

Support https://microsoft.github.io/language-server-protocol/specifications/lsp/3.17/specification/#inlayHint_resolve better, by omitting all inlay hint fields specified in the client hint resolve capabilities.

Current list of all capabilities possible to resolve later:

```

"textEdits"

"tooltip"

"label.tooltip"

"label.location"

"label.command"

```

and every one specified in the client capabilities is now resolved by r-a, being omitted in the initial response.

--------------

When editing `inlay_hints.rs` file around line `457` with no resolve capabilities, I get

<details>

<summary>resolved json, 10803 characters</summary>

```json

{"jsonrpc":"2.0","id":55,"result":[{"position":{"line":477,"character":1},"label":[{"value":"fn inlay_hints","location":{"uri":"file:///Users/someonetoignore/work/rust-analyzer/crates/ide/src/inlay_hints.rs","range":{"start":{"line":445,"character":14},"end":{"line":445,"character":25}}}}],"paddingLeft":true,"paddingRight":false,"data":{"file_id":0}},{"position":{"line":451,"character":10},"label":[{"value":": "},{"value":"ProfileSpan","location":{"uri":"file:///Users/someonetoignore/work/rust-analyzer/crates/profile/src/hprof.rs","range":{"start":{"line":85,"character":11},"end":{"line":85,"character":22}}}},{"value":""}],"kind":1,"paddingLeft":false,"paddingRight":false,"data":{"file_id":0}},{"position":{"line":451,"character":27},"label":[{"value":"label:","location":{"uri":"file:///Users/someonetoignore/work/rust-analyzer/crates/profile/src/hprof.rs","range":{"start":{"line":60,"character":12},"end":{"line":60,"character":17}}}}],"kind":2,"paddingLeft":false,"paddingRight":true,"data":{"file_id":0}},{"position":{"line":452,"character":12},"label":[{"value":": "},{"value":"Semantics","location":{"uri":"file:///Users/someonetoignore/work/rust-analyzer/crates/hir/src/semantics.rs","range":{"start":{"line":108,"character":11},"end":{"line":108,"character":20}}}},{"value":"<'_, "},{"value":"RootDatabase","location":{"uri":"file:///Users/someonetoignore/work/rust-analyzer/crates/ide-db/src/lib.rs","range":{"start":{"line":75,"character":11},"end":{"line":75,"character":23}}}},{"value":">"}],"kind":1,"paddingLeft":false,"paddingRight":false,"data":{"file_id":0}},{"position":{"line":453,"character":12},"label":[{"value":": "},{"value":"SourceFile","location":{"uri":"file:///Users/someonetoignore/work/rust-analyzer/crates/syntax/src/ast/generated/nodes.rs","range":{"start":{"line":223,"character":11},"end":{"line":223,"character":21}}}},{"value":""}],"kind":1,"paddingLeft":false,"paddingRight":false,"data":{"file_id":0}},{"position":{"line":454,"character":12},"label":[{"value":": &"},{"value":"SyntaxNode","location":{"uri":"file:///Users/someonetoignore/.cargo/registry/src/index.crates.io-6f17d22bba15001f/rowan-0.15.11/src/api.rs","range":{"start":{"line":15,"character":11},"end":{"line":15,"character":21}}}},{"value":"<"},{"value":"RustLanguage","location":{"uri":"file:///Users/someonetoignore/work/rust-analyzer/crates/syntax/src/syntax_node.rs","range":{"start":{"line":15,"character":9},"end":{"line":15,"character":21}}}},{"value":">"}],"kind":1,"paddingLeft":false,"paddingRight":false,"data":{"file_id":0}},{"position":{"line":456,"character":12},"label":": i32","kind":1,"paddingLeft":false,"paddingRight":false,"data":{"file_id":0}},{"position":{"line":458,"character":15},"label":[{"value":": "},{"value":"Vec","location":{"uri":"file:///Users/someonetoignore/.rustup/toolchains/stable-aarch64-apple-darwin/lib/rustlib/src/rust/library/alloc/src/vec/mod.rs","range":{"start":{"line":395,"character":11},"end":{"line":395,"character":14}}}},{"value":"<"},{"value":"InlayHint","location":{"uri":"file:///Users/someonetoignore/work/rust-analyzer/crates/ide/src/inlay_hints.rs","range":{"start":{"line":149,"character":11},"end":{"line":149,"character":20}}}},{"value":">"}],"kind":1,"paddingLeft":false,"paddingRight":false,"data":{"file_id":0}},{"position":{"line":460,"character":21},"label":[{"value":": "},{"value":"SemanticsScope","location":{"uri":"file:///Users/someonetoignore/work/rust-analyzer/crates/hir/src/semantics.rs","range":{"start":{"line":1651,"character":11},"end":{"line":1651,"character":25}}}},{"value":"<'_>"}],"kind":1,"paddingLeft":false,"paddingRight":false,"data":{"file_id":0}},{"position":{"line":460,"character":36},"label":[{"value":"node:","location":{"uri":"file:///Users/someonetoignore/work/rust-analyzer/crates/hir/src/semantics.rs","range":{"start":{"line":482,"character":24},"end":{"line":482,"character":28}}}}],"kind":2,"paddingLeft":false,"paddingRight":true,"data":{"file_id":0}},{"position":{"line":461,"character":23},"label":[{"value":": "},{"value":"FamousDefs","location":{"uri":"file:///Users/someonetoignore/work/rust-analyzer/crates/ide-db/src/famous_defs.rs","range":{"start":{"line":20,"character":11},"end":{"line":20,"character":21}}}},{"value":"<'_, '_>"}],"kind":1,"paddingLeft":false,"paddingRight":false,"data":{"file_id":0}},{"position":{"line":463,"character":17},"label":[{"value":": impl FnMut("},{"value":"SyntaxNode","location":{"uri":"file:///Users/someonetoignore/.cargo/registry/src/index.crates.io-6f17d22bba15001f/rowan-0.15.11/src/api.rs","range":{"start":{"line":15,"character":11},"end":{"line":15,"character":21}}}},{"value":"<"},{"value":"RustLanguage","location":{"uri":"file:///Users/someonetoignore/work/rust-analyzer/crates/syntax/src/syntax_node.rs","range":{"start":{"line":15,"character":9},"end":{"line":15,"character":21}}}},{"value":">)"}],"kind":1,"paddingLeft":false,"paddingRight":false,"data":{"file_id":0}},{"position":{"line":463,"character":25},"label":[{"value":": "},{"value":"SyntaxNode","location":{"uri":"file:///Users/someonetoignore/.cargo/registry/src/index.crates.io-6f17d22bba15001f/rowan-0.15.11/src/api.rs","range":{"start":{"line":15,"character":11},"end":{"line":15,"character":21}}}},{"value":"<"},{"value":"RustLanguage","location":{"uri":"file:///Users/someonetoignore/work/rust-analyzer/crates/syntax/src/syntax_node.rs","range":{"start":{"line":15,"character":9},"end":{"line":15,"character":21}}}},{"value":">"}],"kind":1,"paddingLeft":false,"paddingRight":false,"data":{"file_id":0}},{"position":{"line":463,"character":33},"label":[{"value":"hints:","location":{"uri":"file:///Users/someonetoignore/work/rust-analyzer/crates/ide/src/inlay_hints.rs","range":{"start":{"line":480,"character":4},"end":{"line":480,"character":9}}}}],"kind":2,"paddingLeft":false,"paddingRight":true,"data":{"file_id":0}},{"position":{"line":465,"character":22},"label":[{"value":": "},{"value":"TextRange","location":{"uri":"file:///Users/someonetoignore/.cargo/registry/src/index.crates.io-6f17d22bba15001f/text-size-1.1.0/src/range.rs","range":{"start":{"line":14,"character":11},"end":{"line":14,"character":20}}}},{"value":""}],"kind":1,"paddingLeft":false,"paddingRight":false,"data":{"file_id":0}},{"position":{"line":467,"character":35},"label":[{"value":": "},{"value":"SyntaxNode","location":{"uri":"file:///Users/someonetoignore/.cargo/registry/src/index.crates.io-6f17d22bba15001f/rowan-0.15.11/src/api.rs","range":{"start":{"line":15,"character":11},"end":{"line":15,"character":21}}}},{"value":"<"},{"value":"RustLanguage","location":{"uri":"file:///Users/someonetoignore/work/rust-analyzer/crates/syntax/src/syntax_node.rs","range":{"start":{"line":15,"character":9},"end":{"line":15,"character":21}}}},{"value":">"}],"kind":1,"paddingLeft":false,"paddingRight":false,"data":{"file_id":0}},{"position":{"line":469,"character":92},"label":[{"value":"impl "},{"value":"Iterator","location":{"uri":"file:///Users/someonetoignore/.rustup/toolchains/stable-aarch64-apple-darwin/lib/rustlib/src/rust/library/core/src/iter/traits/iterator.rs","range":{"start":{"line":72,"character":10},"end":{"line":72,"character":18}}}},{"value":"<"},{"value":"Item","location":{"uri":"file:///Users/someonetoignore/.rustup/toolchains/stable-aarch64-apple-darwin/lib/rustlib/src/rust/library/core/src/iter/traits/iterator.rs","range":{"start":{"line":76,"character":9},"end":{"line":76,"character":13}}}},{"value":" = "},{"value":"SyntaxNode","location":{"uri":"file:///Users/someonetoignore/.cargo/registry/src/index.crates.io-6f17d22bba15001f/rowan-0.15.11/src/api.rs","range":{"start":{"line":15,"character":11},"end":{"line":15,"character":21}}}},{"value":"<"},{"value":"RustLanguage","location":{"uri":"file:///Users/someonetoignore/work/rust-analyzer/crates/syntax/src/syntax_node.rs","range":{"start":{"line":15,"character":9},"end":{"line":15,"character":21}}}},{"value":">>"}],"kind":1,"paddingLeft":true,"paddingRight":false,"data":{"file_id":0}},{"position":{"line":468,"character":34},"label":[{"value":"impl "},{"value":"Iterator","location":{"uri":"file:///Users/someonetoignore/.rustup/toolchains/stable-aarch64-apple-darwin/lib/rustlib/src/rust/library/core/src/iter/traits/iterator.rs","range":{"start":{"line":72,"character":10},"end":{"line":72,"character":18}}}},{"value":"<"},{"value":"Item","location":{"uri":"file:///Users/someonetoignore/.rustup/toolchains/stable-aarch64-apple-darwin/lib/rustlib/src/rust/library/core/src/iter/traits/iterator.rs","range":{"start":{"line":76,"character":9},"end":{"line":76,"character":13}}}},{"value":" = "},{"value":"SyntaxNode","location":{"uri":"file:///Users/someonetoignore/.cargo/registry/src/index.crates.io-6f17d22bba15001f/rowan-0.15.11/src/api.rs","range":{"start":{"line":15,"character":11},"end":{"line":15,"character":21}}}},{"value":"<"},{"value":"RustLanguage","location":{"uri":"file:///Users/someonetoignore/work/rust-analyzer/crates/syntax/src/syntax_node.rs","range":{"start":{"line":15,"character":9},"end":{"line":15,"character":21}}}},{"value":">>"},{"value":""}],"kind":1,"paddingLeft":true,"paddingRight":false,"data":{"file_id":0}},{"position":{"line":467,"character":41},"label":[{"value":""},{"value":"SyntaxNode","location":{"uri":"file:///Users/someonetoignore/.cargo/registry/src/index.crates.io-6f17d22bba15001f/rowan-0.15.11/src/api.rs","range":{"start":{"line":15,"character":11},"end":{"line":15,"character":21}}}},{"value":"<"},{"value":"RustLanguage","location":{"uri":"file:///Users/someonetoignore/work/rust-analyzer/crates/syntax/src/syntax_node.rs","range":{"start":{"line":15,"character":9},"end":{"line":15,"character":21}}}},{"value":">"}],"kind":1,"paddingLeft":true,"paddingRight":false,"data":{"file_id":0}},{"position":{"line":469,"character":40},"label":" -> bool","kind":1,"paddingLeft":false,"paddingRight":false,"data":{"file_id":0}},{"position":{"line":469,"character":39},"label":[{"value":": &"},{"value":"SyntaxNode","location":{"uri":"file:///Users/someonetoignore/.cargo/registry/src/index.crates.io-6f17d22bba15001f/rowan-0.15.11/src/api.rs","range":{"start":{"line":15,"character":11},"end":{"line":15,"character":21}}}},{"value":"<"},{"value":"RustLanguage","location":{"uri":"file:///Users/someonetoignore/work/rust-analyzer/crates/syntax/src/syntax_node.rs","range":{"start":{"line":15,"character":9},"end":{"line":15,"character":21}}}},{"value":">"}],"kind":1,"paddingLeft":false,"paddingRight":false,"data":{"file_id":0}}]}

```

</details>

for the visible editor range alone, pretty much repeated on every consequent edit.

With this patch and all inlay hint resolve capabilities enabled, for the same example I observe quite a footprint reduction:

<details>

<summary>unresolved json, 4142 characters</summary>

```json

{"jsonrpc":"2.0","id":49,"result":[{"position":{"line":477,"character":1},"label":[{"value":"fn inlay_hints"}],"paddingLeft":true,"paddingRight":false,"data":{"file_id":0}},{"position":{"line":451,"character":10},"label":[{"value":": "},{"value":"ProfileSpan"},{"value":""}],"kind":1,"paddingLeft":false,"paddingRight":false,"data":{"file_id":0}},{"position":{"line":451,"character":27},"label":[{"value":"label:"}],"kind":2,"paddingLeft":false,"paddingRight":true,"data":{"file_id":0}},{"position":{"line":452,"character":12},"label":[{"value":": "},{"value":"Semantics"},{"value":"<'_, "},{"value":"RootDatabase"},{"value":">"}],"kind":1,"paddingLeft":false,"paddingRight":false,"data":{"file_id":0}},{"position":{"line":453,"character":12},"label":[{"value":": "},{"value":"SourceFile"},{"value":""}],"kind":1,"paddingLeft":false,"paddingRight":false,"data":{"file_id":0}},{"position":{"line":454,"character":12},"label":[{"value":": &"},{"value":"SyntaxNode"},{"value":"<"},{"value":"RustLanguage"},{"value":">"}],"kind":1,"paddingLeft":false,"paddingRight":false,"data":{"file_id":0}},{"position":{"line":456,"character":12},"label":": i32","kind":1,"paddingLeft":false,"paddingRight":false,"data":{"file_id":0}},{"position":{"line":458,"character":15},"label":[{"value":": "},{"value":"Vec"},{"value":"<"},{"value":"InlayHint"},{"value":">"}],"kind":1,"paddingLeft":false,"paddingRight":false,"data":{"file_id":0}},{"position":{"line":460,"character":21},"label":[{"value":": "},{"value":"SemanticsScope"},{"value":"<'_>"}],"kind":1,"paddingLeft":false,"paddingRight":false,"data":{"file_id":0}},{"position":{"line":460,"character":36},"label":[{"value":"node:"}],"kind":2,"paddingLeft":false,"paddingRight":true,"data":{"file_id":0}},{"position":{"line":461,"character":23},"label":[{"value":": "},{"value":"FamousDefs"},{"value":"<'_, '_>"}],"kind":1,"paddingLeft":false,"paddingRight":false,"data":{"file_id":0}},{"position":{"line":463,"character":17},"label":[{"value":": impl FnMut("},{"value":"SyntaxNode"},{"value":"<"},{"value":"RustLanguage"},{"value":">)"}],"kind":1,"paddingLeft":false,"paddingRight":false,"data":{"file_id":0}},{"position":{"line":463,"character":25},"label":[{"value":": "},{"value":"SyntaxNode"},{"value":"<"},{"value":"RustLanguage"},{"value":">"}],"kind":1,"paddingLeft":false,"paddingRight":false,"data":{"file_id":0}},{"position":{"line":463,"character":33},"label":[{"value":"hints:"}],"kind":2,"paddingLeft":false,"paddingRight":true,"data":{"file_id":0}},{"position":{"line":465,"character":22},"label":[{"value":": "},{"value":"TextRange"},{"value":""}],"kind":1,"paddingLeft":false,"paddingRight":false,"data":{"file_id":0}},{"position":{"line":467,"character":35},"label":[{"value":": "},{"value":"SyntaxNode"},{"value":"<"},{"value":"RustLanguage"},{"value":">"}],"kind":1,"paddingLeft":false,"paddingRight":false,"data":{"file_id":0}},{"position":{"line":469,"character":92},"label":[{"value":"impl "},{"value":"Iterator"},{"value":"<"},{"value":"Item"},{"value":" = "},{"value":"SyntaxNode"},{"value":"<"},{"value":"RustLanguage"},{"value":">>"}],"kind":1,"paddingLeft":true,"paddingRight":false,"data":{"file_id":0}},{"position":{"line":468,"character":34},"label":[{"value":"impl "},{"value":"Iterator"},{"value":"<"},{"value":"Item"},{"value":" = "},{"value":"SyntaxNode"},{"value":"<"},{"value":"RustLanguage"},{"value":">>"},{"value":""}],"kind":1,"paddingLeft":true,"paddingRight":false,"data":{"file_id":0}},{"position":{"line":467,"character":41},"label":[{"value":""},{"value":"SyntaxNode"},{"value":"<"},{"value":"RustLanguage"},{"value":">"}],"kind":1,"paddingLeft":true,"paddingRight":false,"data":{"file_id":0}},{"position":{"line":469,"character":40},"label":" -> bool","kind":1,"paddingLeft":false,"paddingRight":false,"data":{"file_id":0}},{"position":{"line":469,"character":39},"label":[{"value":": &"},{"value":"SyntaxNode"},{"value":"<"},{"value":"RustLanguage"},{"value":">"}],"kind":1,"paddingLeft":false,"paddingRight":false,"data":{"file_id":0}}]}

```

</details>

with all unresolved parts needing only for navigation, hover or applying the hint edit — dynamic parts that are made after mouse hover or similar events, that resolve the hint data.

Do not send inlay hint refresh requests on file edits

See https://github.com/rust-lang/rust-analyzer/issues/13369#issuecomment-1695306870

Editor itself is able to invalidate hints after edits, and /refresh was sent after editor reports changes to the language server. This forces the editor to either query & invalidate the hints twice after every edit, or wait for /refresh to come before querying the hints.

Both options are rather useless, so instead, send a request on server startup only: client editors do not know when the server actually starts up, this will help to query the initial hints after editor was open and the server was still starting up.

On type format '(', by adding closing ')' automatically

If I understand right, `()` can surround pretty much the same `{}` can, so add another on type formatting pair for convenience: sometimes it's not that pleasant to write parenthesis in `Some(2).map(|i| (i, i+1))` cases and I would prefer r-a to do that for me.

One note: currently, b06503b6ec/crates/rust-analyzer/src/handlers/request.rs (L357) fires always.

Should we remove the assertion entirely now, since apparently things work in release despite that check?

Editor itself is able to invalidate hints after edits, and /refresh was

sent after editor reports changes to the language server.

This forces the editor to either query & invalidate the hints twice

after every edit, or wait for /refresh to come before querying the

hints.

Both options are rather useless, so instead, send a request on server

startup only: client editors do not know when the server actually starts

up, this will help to query the initial hints after editor was open and

the server was still starting up.

Previously this was hard coded to "0.1". The SCIP protocol allows this

to be an arbitrary string:

```

message ToolInfo {

// Name of the indexer that produced this index.

string name = 1;

// Version of the indexer that produced this index.

string version = 2;

// Command-line arguments that were used to invoke this indexer.

repeated string arguments = 3;

}

```

so use the same string reported by `rust-analyzer --version`.

SCIP requires symbols to be unique, but multiple functions may have a

parameter with the same name. Qualify parameters according to the

containing function.

internal: Defer structured snippet rendering to allow escaping snippet bits

Since we know exactly where snippets are, we can transparently escape snippet bits to the exact text edits that need it, and not have to do it for anything other text edits.

Also will eventually fix#11006 once all assists are migrated. This comes as a side-effect of text edits that don't have snippets get marked as having no insert formatting at all.

Structured snippets precisely track which text edits need to be marked

as snippet text edits, but the cases where structured snippets aren't

used but snippets are still present are for simple single text-edit

changes, so it's perfectly fine to mark all one of them as being a

snippet text edit

Map our diagnostics to rustc and clippy's ones

And control their severity by lint attributes `#[allow]`, `#[deny]` and ... .

It doesn't work with proc macros and I would like to fix that before merge but I don't know how to do it.

internal: Format let-else

As nightly finally got support for it I went ahead and formatted r-a with the latest nightly, then with the latest stable (in case other stuff changed)

Split out project loading capabilities from rust-analyzer crate

External tools currently depend on the entire lsp infra for no good reason so let's lift that out so those tools have something better to depend on

Use anonymous lifetime where possible

Because anonymous lifetimes are *super* cool.

More seriously, I believe anonymous lifetimes, especially those in impl headers, reduce cognitive load to a certain extent because they usually signify that they are not relevant in the signature of the methods within (or that we can apply the usual lifetime elision rules even if they are relevant).

internal: add `library` fixture meta

Currently, there is no way to specify `CrateOrigin` of a file fixture ([this] might be a bug?). This PR adds `library` meta to explicitly specify the fixture to be `CrateOrigin::Library` and also makes sure crates that belong to a library source root are set `CrateOrigin::Library`.

(`library` isn't really the best name. It essentially means that the crate is outside workspace but `non_workspace_member` feels a bit too long. Suggestions for the better name would be appreciated)

Additionally:

- documents the fixture meta syntax as thoroughly as possible

- refactors relevant code

[this]: 4b06d3c595/crates/base-db/src/fixture.rs (L450)

internal: remove spurious regex dependency

- replace tokio's env-filter with a smaller&simpler targets filter

- reshuffle logging infra a bit to make sure there's only a single place where we read environmental variables

- use anyhow::Result in rust-analyzer binary

- replace tokio's env-filter with a smaller&simpler targets filter

- reshuffle logging infra a bit to make sure there's only a single place

where we read environmental variables

- use anyhow::Result in rust-analyzer binary

Lower const params with a bad id

cc #7434

This PR adds an `InTypeConstId` which is a `DefWithBodyId` and lower const generic parameters into bodies using it, and evaluate them with the mir interpreter. I think this is the last unimplemented const generic feature relative to rustc stable.

But there is a problem: The id used in the `InTypeConstId` is the raw `FileAstId`, which changes frequently. So these ids and their bodies will be invalidated very frequently, which is bad for incremental analysis.

Due this problem, I disabled lowering for local crates (in library crate the id is stable since files won't be changed). This might be overreacting (const generic expressions are usually small, maybe it would be better enabled with bad performance than disabled) but it makes motivation for doing it in the correct way, and it splits the potential panic and breakages that usually comes with const generic PRs in two steps.

Other than the id, I think (at least I hope) other parts are in the right direction.

Properly format documentation for `SignatureHelpRequest`s

Properly formats function documentation instead of returning it raw when responding to `SignatureHelpRequest`s.

I added a test in `crates/rust-analyzer/tests/slow-tests/main.rs` -- not sure if this is the best location given the relevant code is in `crates/rust-analyzer` or if it's possible to test in a less heavyweight manner.

Closes#14958

Add span to group.

This appears to fix#14959, but I've never contributed to rust-analyzer before and there were some things that confused me:

- I had to add the `fn byte_range` method to get it to build. This was added to rust in [April](https://github.com/rust-lang/rust/pull/109002), so I don't understand why it wasn't needed until now

- When testing, I ran into the fact that rust recently updated its `METADATA_VERSION`, so I had to test this with nightly-2023-05-20. But then I noticed that rust has its own copy of `rust-analyzer`, and the metadata version bump has already been [handled there](60e95e76d0). So I guess I don't really understand the relationship between the code there and the code here.

Prioritize threads affected by user typing

To this end I’ve introduced a new custom thread pool type which can spawn threads using each QoS class. This way we can run latency-sensitive requests under one QoS class and everything else under another QoS class. The implementation is very similar to that of the `threadpool` crate (which is currently used by rust-analyzer) but with unused functionality stripped out.

I’ll have to rebase on master once #14859 is merged but I think everything else is alright :D

This code replaces the thread pool implementation we were using

previously (from the `threadpool` crate). By making the thread pool

aware of QoS, each job spawned on the thread pool can have a different

QoS class.

This commit also replaces every QoS class used previously with Default

as a temporary measure so that each usage can be chosen deliberately.

Specify thread types using Quality of Service API

<details>

<summary>Some background (in case you haven’t heard of QoS before)</summary>

Heterogenous multi-core CPUs are increasingly found in laptops and desktops (e.g. Alder Lake, Snapdragon 8cx Gen 3, M1). To maximize efficiency on this kind of hardware, it is important to provide the operating system with more information so threads can be scheduled on different core types appropriately.

The approach that XNU (the kernel of macOS, iOS, etc) and Windows have taken is to provide a high-level semantic API – quality of service, or QoS – which informs the OS of the program’s intent. For instance, you might specify that a thread is running a render loop for a game. This makes the OS provide this thread with as large a share of the system’s resources as possible. Specifying a thread is running an unimportant background task, on the other hand, is cause for it to be scheduled exclusively on high-efficiency cores instead of high-performance cores.

QoS APIs allows for easy configuration of many different parameters at once; for instance, setting QoS on XNU affects scheduling, timer latency, I/O priorities, and of course what core type the thread in question should run on. I don’t know any details on how QoS works on Windows, but I would guess it’s similar.

Hypothetically, taking advantage of these APIs would improve power consumption, thermals, battery life if applicable, etc.

</details>

# Relevance to rust-analyzer

From what I can tell the philosophy behind both the XNU and Windows QoS APIs is that _user interfaces should never stutter under any circumstances._ You can see this in the array of QoS classes which are available: the highest QoS class in both APIs is one intended explicitly for UI render loops.

Imagine rust-analyzer is performing CPU-intensive background work – maybe you just invoked Find Usages on `usize` or opened a large project – in this scenario the editor’s render loop should absolutely get higher priority than rust-analyzer, no matter what. You could view it in terms of “realtime-ness”: flight control software is hard realtime, audio software is soft realtime, GUIs are softer realtime, and rust-analyzer is not realtime at all. Of course, maximizing responsiveness is important, but respecting the rest of the system is more important.

# Implementation

I’ve tried my best to unify thread creation in `stdx`, where the new API I’ve introduced _requires_ specifying a QoS class. Different points along the performance/efficiency curve can make a great difference; the M1’s e-cores use around three times less power than the p-cores, so putting in this effort is worthwhile IMO.

It’s worth mentioning that Linux does not [yet](https://youtu.be/RfgPWpTwTQo) have a QoS API. Maybe translating QoS into regular thread priorities would be acceptable? From what I can tell the only scheduling-related code in rust-analyzer is Windows-specific, so ignoring QoS entirely on Linux shouldn’t cause any new issues. Also, I haven’t implemented support for the Windows QoS APIs because I don’t have a Windows machine to test on, and because I’m completely unfamiliar with Windows APIs :)

I noticed that rust-analyzer handles some requests on the main thread (using `.on_sync()`) and others on a threadpool (using `.on()`). I think it would make sense to run the main thread at the User Initiated QoS and the threadpool at Utility, but only if all requests that are caused by typing use `.on_sync()` and all that don’t use `.on()`. I don’t understand how the `.on_sync()`/`.on()` split that’s currently present was chosen, so I’ve let this code be for the moment. Let me know if changing this to what I proposed makes any sense.

To avoid having to change everything back in case I’ve misunderstood something, I’ve left all threads at the Utility QoS for now. Of course, this isn’t what I hope the code will look like in the end, but I figured I have to start somewhere :P

# References

<ul>

<li><a href="https://developer.apple.com/library/archive/documentation/Performance/Conceptual/power_efficiency_guidelines_osx/PrioritizeWorkAtTheTaskLevel.html">Apple documentation related to QoS</a></li>

<li><a href="67e155c940/include/pthread/qos.h">pthread API for setting QoS on XNU</a></li>

<li><a href="https://learn.microsoft.com/en-us/windows/win32/procthread/quality-of-service">Windows’s QoS classes</a></li>

<li>

<details>

<summary>Full documentation of XNU QoS classes. This documentation is only available as a huge not-very-readable comment in a header file, so I’ve reformatted it and put it here for reference.</summary>

<ul>

<li><p><strong><code>QOS_CLASS_USER_INTERACTIVE</code>: A QOS class which indicates work performed by this thread is interactive with the user.</strong></p><p>Such work is requested to run at high priority relative to other work on the system. Specifying this QOS class is a request to run with nearly all available system CPU and I/O bandwidth even under contention. This is not an energy-efficient QOS class to use for large tasks. The use of this QOS class should be limited to critical interaction with the user such as handling events on the main event loop, view drawing, animation, etc.</p></li>

<li><p><strong><code>QOS_CLASS_USER_INITIATED</code>: A QOS class which indicates work performed by this thread was initiated by the user and that the user is likely waiting for the results.</strong></p><p>Such work is requested to run at a priority below critical user-interactive work, but relatively higher than other work on the system. This is not an energy-efficient QOS class to use for large tasks. Its use should be limited to operations of short enough duration that the user is unlikely to switch tasks while waiting for the results. Typical user-initiated work will have progress indicated by the display of placeholder content or modal user interface.</p></li>

<li><p><strong><code>QOS_CLASS_DEFAULT</code>: A default QOS class used by the system in cases where more specific QOS class information is not available.</strong></p><p>Such work is requested to run at a priority below critical user-interactive and user-initiated work, but relatively higher than utility and background tasks. Threads created by <code>pthread_create()</code> without an attribute specifying a QOS class will default to <code>QOS_CLASS_DEFAULT</code>. This QOS class value is not intended to be used as a work classification, it should only be set when propagating or restoring QOS class values provided by the system.</p></li>

<li><p><strong><code>QOS_CLASS_UTILITY</code>: A QOS class which indicates work performed by this thread may or may not be initiated by the user and that the user is unlikely to be immediately waiting for the results.</strong></p><p>Such work is requested to run at a priority below critical user-interactive and user-initiated work, but relatively higher than low-level system maintenance tasks. The use of this QOS class indicates the work should be run in an energy and thermally-efficient manner. The progress of utility work may or may not be indicated to the user, but the effect of such work is user-visible.</p></li>

<li><p><strong><code>QOS_CLASS_BACKGROUND</code>: A QOS class which indicates work performed by this thread was not initiated by the user and that the user may be unaware of the results.</strong></p><p>Such work is requested to run at a priority below other work. The use of this QOS class indicates the work should be run in the most energy and thermally-efficient manner.</p></li>

<li><p><strong><code>QOS_CLASS_UNSPECIFIED</code>: A QOS class value which indicates the absence or removal of QOS class information.</strong></p><p>As an API return value, may indicate that threads or pthread attributes were configured with legacy API incompatible or in conflict with the QOS class system.</p></li>

</ul>

</details>

</li>

</ul>

feat: Highlight closure captures when cursor is on pipe or move keyword

This runs into the same issue on vscode as exit points for `->`, where highlights are only triggered on identifiers, https://github.com/rust-lang/rust-analyzer/issues/9395

Though putting the cursor on `move` should at least work.

internal: Warn when loading sysroot fails to find the core library

Should help a bit more with user experience, before we only logged this now we show it in the status

Closes https://github.com/rust-lang/rust-analyzer/issues/11606

Drop support for non-syroot proc macro ABIs

This makes some bigger changes to how we handle the proc-macro-srv things, for one it is now an empty crate if built without the `sysroot-abi` feature, this simplifies some things dropping the need to put the feature cfg in various places. The cli wrapper now actually depends on the server, instead of being part of the server that is just exported, that way we can have a true dummy server that just errors on each request if no sysroot support was specified.

internal: Add config to specifiy lru capacities for all queries

Might help figuring out what queries should be limited by LRU by default, as currently we only limit `parse`, `parse_macro_expansion` and `macro_expand`.

fix: allow new, subsequent `rust-project.json`-based workspaces to get proc macro expansion

As detailed in https://github.com/rust-lang/rust-analyzer/issues/14417#issuecomment-1485336174, `rust-project.json` workspaces added after the initial `rust-project.json`-based workspace was already indexed by rust-analyzer would not receive procedural macro expansion despite `config.expand_proc_macros` returning true. To fix this issue:

1. I changed `reload.rs` to check which workspaces are newly added.

2. Spawned new procedural macro expansion servers based on the _new_ workspaces.

1. This is to prevent spawning duplicate procedural macro expansion servers for already existing workspaces. While the overall memory usage of duplicate procedural macro servers is minimal, this is more about the _principle_ of not leaking processes 😅.

3. Launched procedural macro expansion if any workspaces are `rust-project.json`-based _or_ `same_workspaces` is true. `same_workspaces` being true (and reachable) indicates that that build scripts have finished building (in Cargo-based projects), while the build scripts in `rust-project.json`-based projects have _already been built_ by the build system that produced the `rust-project.json`.

I couldn't really think of structuring this code in a better way without engaging with https://github.com/rust-lang/rust-analyzer/issues/7444.

Remove client side proc-macro version check

The server already verifies versions due to ABI picking now so there shouldn't be a need for the client side check anymore

fix: Do not retry inlay hint requests

Should close https://github.com/rust-lang/rust-analyzer/issues/13372, retrying the way its currently implemented is not ideal as we do not adjust offsets in the requests, but doing that is a major PITA, so this should at least work around one of the more annoying issues stemming from it.

Add Cargo-style project discovery for Buck and Bazel Users

This feature requires the user to add a command that generates a `rust-project.json` from a set of files. Project discovery can be invoked in two ways:

1. At extension activation time, which includes the generated `rust-project.json` as part of the linkedProjects argument in `InitializeParams`.

2. Through a new command titled "rust-analyzer: Add current file to workspace", which makes use of a new, rust-analyzer-specific LSP request that adds the workspace without erasing any existing workspaces. Note that there is no mechanism to _remove_ workspaces other than "quit the rust-analyzer server".

Few notes:

- I think that the command-running functionality _could_ merit being placed into its own extension (and expose it via extension contribution points) to provide build-system idiomatic progress reporting and status handling, but I haven't (yet) made an extension that does this nor does Buck expose this sort of functionality.

- This approach would _just work_ for Bazel. I'll try and get the tool that's responsible for Buck integration open-sourced soon.

- On the testing side of things, I've used this in around my employer's Buck-powered monorepo and it's a nice experience. That being said, I can't think of an open-source repository where this can be tested in public, so you might need to trust me on this one.

I'd love to get feedback on:

- Naming of LSP extensions/new commands. I'm not too pleased with how "rust-analyzer: Add current file to workspace" is named, in that it's creating a _new_ workspace. I think that this command being added should be gated on `rust-analyzer.discoverProjectCommand` on being set, so I can add this in sequent commits.

- My Typescript. It's not particularly good.

- Suggestions on handling folders with _both_ Cargo and non-Cargo build systems and if I make activation a bit better.

(I previously tried to add this functionality entirely within rust-analyzer-the-LSP server itself, but matklad was right—an extension side approach is much, much easier.)

This feature requires the user to add a command that generates a

`rust-project.json` from a set of files. Project discovery can be invoked

in two ways:

1. At extension activation time, which includes the generated

`rust-project.json` as part of the linkedProjects argument in

InitializeParams

2. Through a new command titled "Add current file to workspace", which

makes use of a new, rust-analyzer specific LSP request that adds

the workspace without erasing any existing workspaces.

I think that the command-running functionality _could_ merit being

placed into its own extension (and expose it via extension contribution

points), if only provide build-system idiomatic progress reporting and

status handling, but I haven't (yet) made an extension that does this.

Handle trait alias definitions

Part of #2773

This PR adds a bunch of structs and enum variants for trait aliases. Trait aliases should be handled as an independent item because they are semantically distinct from traits.

I basically started by adding `TraitAlias{Id, Loc}` to `hir_def::item_tree` and iterated adding necessary stuffs until compiler stopped complaining what's missing. Let me know if there's still anything I need to add.

I'm opening up this PR for early review and stuff. I'm planning to add tests for IDE functionalities in this PR, but not type-related support, for which I put FIXME notes.

Beginning of MIR

This pull request introduces the initial implementation of MIR lowering and interpreting in Rust Analyzer.

The implementation of MIR has potential to bring several benefits:

- Executing a unit test without compiling it: This is my main goal. It can be useful for quickly testing code changes and print-debugging unit tests without the need for a full compilation (ideally in almost zero time, similar to languages like python and js). There is a probability that it goes nowhere, it might become slower than rustc, or it might need some unreasonable amount of memory, or we may fail to support a common pattern/function that make it unusable for most of the codes.

- Constant evaluation: MIR allows for easier and more correct constant evaluation, on par with rustc. If r-a wants to fully support the type system, it needs full const eval, which means arbitrary code execution, which needs MIR or something similar.

- Supporting more diagnostics: MIR can be used to detect errors, most famously borrow checker and lifetime errors, but also mutability errors and uninitialized variables, which can be difficult/impossible to detect in HIR.

- Lowering closures: With MIR we can find out closure capture modes, which is useful in detecting if a closure implements the `FnMut` or `Fn` traits, and calculating its size and data layout.

But the current PR implements no diagnostics and doesn't support closures. About const eval, I removed the old const eval code and it now uses the mir interpreter. Everything that is supported in stable rustc is either implemented or is super easy to implement. About interpreting unit tests, I added an experimental config, disabled by default, that shows a `pass` or `fail` on hover of unit tests (ideally it should be a button similar to `Run test` button, but I didn't figured out how to add them). Currently, no real world test works, due to missing features including closures, heap allocation, `dyn Trait` and ... so at this point it is only useful for me selecting what to implement next.

The implementation of MIR is based on the design of rustc, the data structures are almost copy paste (so it should be easy to migrate it to a possible future stable-mir), but the lowering and interpreting code is from me.

Rename `checkOnSave` settings to `check`

Now that flychecks can be triggered without saving the setting name doesn't make that much sense anymore. This PR renames it to just `check`, but keeps `checkOnSave` as the enabling setting.

feat: add the ability to limit the number of threads launched by `main_loop`

## Motivation

`main_loop` defaults to launch as many threads as cpus in one machine. When developing on multi-core remote servers on multiple projects, this will lead to thousands of idle threads being created. This is very annoying when one wants check whether his program under developing is running correctly via `htop`.

<img width="756" alt="image" src="https://user-images.githubusercontent.com/41831480/206656419-fa3f0dd2-e554-4f36-be1b-29d54739930c.png">

## Contribution

This patch introduce the configuration option `rust-analyzer.numThreads` to set the desired thread number used by the main thread pool.

This should have no effects on the performance as not all threads are actually used.

<img width="1325" alt="image" src="https://user-images.githubusercontent.com/41831480/206656834-fe625c4c-b993-4771-8a82-7427c297fd41.png">

## Demonstration

The following is a snippet of `lunarvim` configuration using my own build.

```lua

vim.list_extend(lvim.lsp.automatic_configuration.skipped_servers, { "rust_analyzer" })

require("lvim.lsp.manager").setup("rust_analyzer", {

cmd = { "env", "RA_LOG=debug", "RA_LOG_FILE=/tmp/ra-test.log",

"/home/jlhu/Projects/rust-analyzer/target/debug/rust-analyzer",

},

init_options = {

numThreads = 4,

},

settings = {

cachePriming = {

numThreads = 8,

},

},

})

```

## Limitations

The `numThreads` can only be modified via `initializationOptions` in early initialisation because everything has to wait until the thread pool starts including the dynamic settings modification support.

The `numThreads` also does not reflect the end results of how many threads is actually created, because I have not yet tracked down everything that spawns threads.

fix a bunch of clippy lints

fixes a bunch of clippy lints for fun and profit

i'm aware of this repo's position on clippy. The changes are split into separate commits so they can be reviewed separately

feat: Package Windows release artifacts as ZIP and add symbols file

Closes#13872Closes#7747

CC #10371

This allows us to ship a format that's easier to handle on Windows. As a bonus, we can also include the PDB, to get useful stack traces. Unfortunately, it adds a couple of dependencies to `xtask`, increasing the debug build times from 1.28 to 1.58 s (release from 1.60s to 2.20s) on my system.

This makes code more readale and concise,

moving all format arguments like `format!("{}", foo)`

into the more compact `format!("{foo}")` form.

The change was automatically created with, so there are far less change

of an accidental typo.

```

cargo clippy --fix -- -A clippy::all -W clippy::uninlined_format_args

```

Seems like these can be safely fixed. With one, I was particularly

surprised -- `Some(pats) => &**pats,` in body.rs?

```

cargo clippy --fix -- -A clippy::all -D clippy::explicit_auto_deref

```

I am not certain if this will improve performance,

but it seems having a .clone() without any need should be removed.

This was done with clippy, and manually reviewed:

```

cargo clippy --fix -- -A clippy::all -D clippy::redundant_clone

```

feat: Add an option to hide adjustment hints outside of `unsafe` blocks and functions

As the title suggests: this PR adds an option (namely `rust-analyzer.inlayHints.expressionAdjustmentHints.hideOutsideUnsafe`) that allows to hide adjustment hints outside of `unsafe` blocks and functions:

Requested by `@BoxyUwU` <3

Don't show runnable code lenses in libraries outside of the workspace

Addresses #13664. For now I'm just disabling runnable code lenses since the ones that display the number of references and implementations do work correctly with external code.

Also made a tiny TypeScript change to use the typed `sendNotification` overload.

Mega-sync from `rust-lang/rust`

This essentially implements `@oli-obk's` suggestion here https://github.com/rust-lang/rust-analyzer/pull/13459#issuecomment-1297285607, with `@eddyb's` help.

This PR is equivalent to 14 syncs (back and forth) between `rust-lang/rust` and `rust-lang/rust-analyzer`.

Working from this list (from bottom to top):

```

(x) a2a1d9954⬆️ rust-analyzer

(x) 79923c382⬆️ rust-analyzer

(x) c60b1f641⬆️ rust-analyzer

(x) 8807fc4cc⬆️ rust-analyzer

(x) a99a48e78⬆️ rust-analyzer

(x) 4f55ebbd4⬆️ rust-analyzer

(x) f5fde4df4⬆️ rust-analyzer

(x) 459bbb422⬆️ rust-analyzer

(x) 65e1dc4d9⬆️ rust-analyzer

(x) 3e358a682⬆️ rust-analyzer

(x) 31519bb39⬆️ rust-analyzer

(x) 8231fee46⬆️ rust-analyzer

(x) 22c8c9c40⬆️ rust-analyzer

(x) 9d2cb42a4⬆️ rust-analyzer

```

(This listed was assembled by doing a `git subtree push`, which made a branch, and looking at the new commits in that branch, picking only those that were `⬆️ rust-analyzer` commits)

We used the following commands to simulate merges in both directions:

```shell

TO_MERGE=22c8c9c40 # taken from the list above, bottom to top

git merge --no-edit --no-ff $TO_MERGE

git merge --no-edit --no-ff $(git -C ../rust log --pretty=format:'%cN | %s | %ad => %P' | rg -m1 -F "$(git show --no-patch --pretty=format:%ad $TO_MERGE)" | tee /dev/stderr | rg '.* => \S+ (\S+)$' --replace '$1')

```

We encountered no merge conflicts that Git wasn't able to solve by doing it this way.

Here's what the commit graph looks like (as shown in the Git Lens VSCode extension):

<img width="1345" alt="image" src="https://user-images.githubusercontent.com/7998310/203984523-7c1a690a-8224-416c-8015-ed6e49667066.png">

This PR closes#13459

## Does this unbreak `rust->ra` syncs?

Yes, here's how we tried:

In `rust-analyzer`:

* check out `subtree-fix` (this PR's branch)

* make a new branch off of it: `git checkout -b subtree-fix-merge-test`

* simulate this PR getting merged with `git merge master`

In `rust`:

* pull latest master

* make a new branch: `git checkout -b test-change`

* mess with rust-analyzer (I added a comment to `src/tools/rust-analyzer/Cargo.toml`)

* commit

* run `git subtree push -P src/tools/rust-analyzer ra-local final-sync` (this follows the [Clippy sync guide](https://doc.rust-lang.org/nightly/clippy/development/infrastructure/sync.html))

This created a `final-sync` branch in `rust-analyzer`.

In `rust-analyzer`:

* `git merge --no-ff final-sync` (this follows the [Clippy sync guide](https://doc.rust-lang.org/nightly/clippy/development/infrastructure/sync.html))

Now `git log` in `rust-analyzer` shows this:

```

commit 460128387e46ddfc2b95921b2d7f6e913a3d2b9f (HEAD -> subtree-fix-merge-test)

Merge: 0513fc02a 9ce6a734f

Author: Amos Wenger <amoswenger@gmail.com>

Date: Fri Nov 25 13:28:24 2022 +0100

Merge branch 'final-sync' into subtree-fix-merge-test

commit 0513fc02a08ea9de952983624bd0a00e98044b36

Merge: 38c98d1ff6918009fe

Author: Amos Wenger <amoswenger@gmail.com>

Date: Fri Nov 25 13:28:02 2022 +0100

Merge branch 'master' into subtree-fix-merge-test

commit 9ce6a734f37ef8e53689f1c6f427a9efafe846bd (final-sync)

Author: Amos Wenger <amoswenger@gmail.com>

Date: Fri Nov 25 13:26:26 2022 +0100

Mess with rust-analyzer just for fun

```

And `git diff 0513fc02a08ea9de952983624bd0a00e98044b36` shows this:

```patch

diff --git a/Cargo.toml b/Cargo.toml

index 286ef1e7d..c9e24cd19 100644

--- a/Cargo.toml

+++ b/Cargo.toml

`@@` -32,3 +32,5 `@@` debug = 0

# ungrammar = { path = "../ungrammar" }

# salsa = { path = "../salsa" }

+

+# lol, hi

```

## Does this unbreak `ra->rust` syncs?

Yes, here's how we tried.

From `rust`:

* `git checkout -b sync-from-ra`

* `git subtree pull -P src/tools/rust-analyzer ra-local subtree-fix-merge-test` (this is adapted from the [Clippy sync guide](https://doc.rust-lang.org/nightly/clippy/development/infrastructure/sync.html#performing-the-sync-from-clippy-to-rust-langrust), you would normally use `ra-upstream master` but we're simulating things here)

A commit editor pops up, there was no merge conflicts.

## How do we prevent this from happening again?

Like `@bjorn3` said in https://github.com/rust-lang/rust-analyzer/pull/13459#issuecomment-1293587848

> Whenever syncing from rust-analyzer -> rust you have to immediately sync the merge commit from rust -> rust-analyzer to prevent merge conflicts in the future.

But if we get it wrong again, at least now we have a not-so-painful way to fix it.

Fix: Handle empty `checkOnSave/target` values

This fixes a regression introduced by #13290, in which failing to set `checkOnSave/target` (or `checkOnSave/targets`) would lead to an invalid config.

[Fixes#13660]

Support multiple targets for checkOnSave (in conjunction with cargo 1.64.0+)

This PR adds support for the ability to pass multiple `--target` flags when using

`cargo` 1.64.0+.

## Questions

I needed to change the type of two configurations options, but I did not plurialize the names to

avoid too much churn, should I ?

## Zulip thread

https://rust-lang.zulipchat.com/#narrow/stream/185405-t-compiler.2Frust-analyzer/topic/Issue.2013282.20.28supporting.20multiple.20targets.20with.201.2E64.2B.29

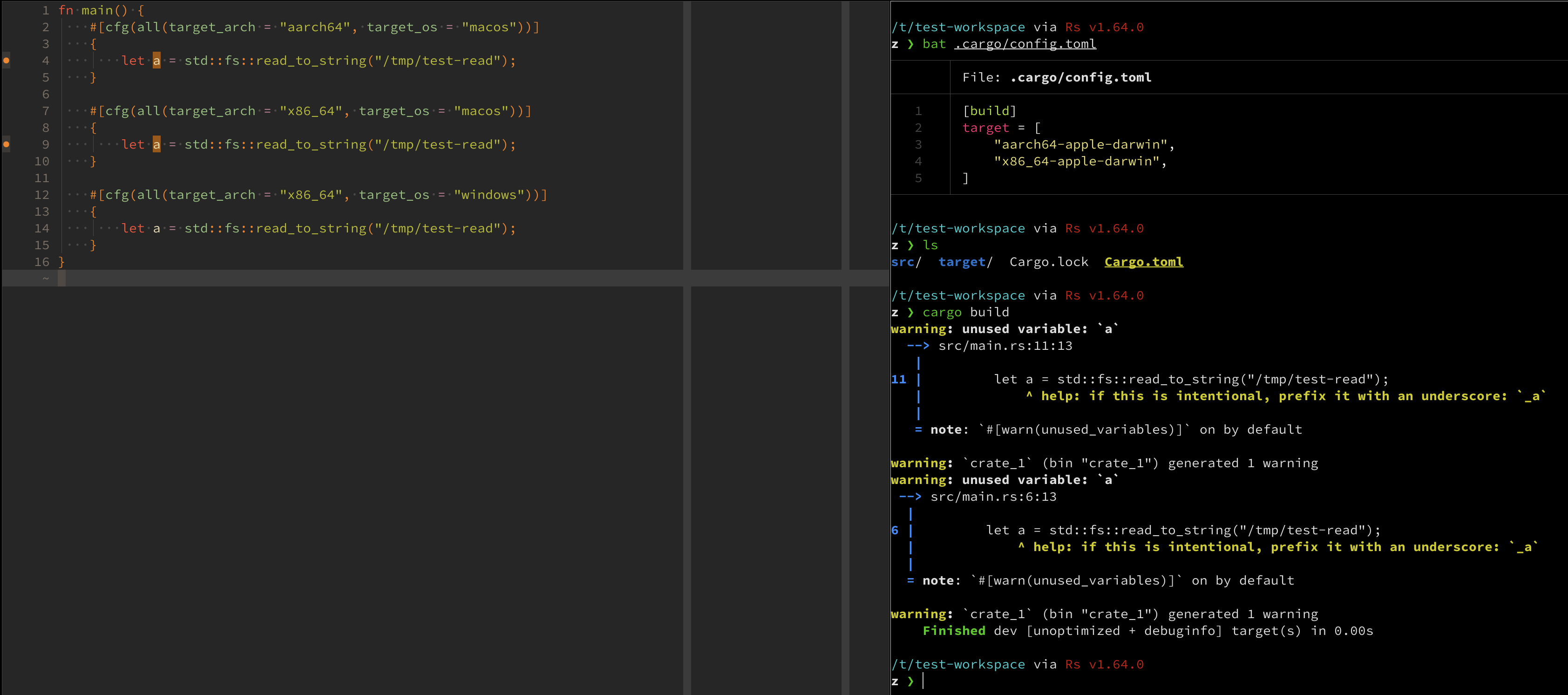

## Example

To see it working, on a macOS machine:

```sh

$ cd /tmp

$ cargo new cargo-multiple-targets-support-ra-test

$ cd !$

$ mkdir .cargo

$ echo '

[build]

target = [

"aarch64-apple-darwin",

"x86_64-apple-darwin",

]

' > .cargo/config.toml

$ echo '

fn main() {

#[cfg(all(target_arch = "aarch64", target_os = "macos"))]

{

let a = std::fs::read_to_string("/tmp/test-read");

}

#[cfg(all(target_arch = "x86_64", target_os = "macos"))]

{

let a = std::fs::read_to_string("/tmp/test-read");

}

#[cfg(all(target_arch = "x86_64", target_os = "windows"))]

{

let a = std::fs::read_to_string("/tmp/test-read");

}

}

' > src/main.rs

# launch your favorite editor with the version of RA from this PR

#

# You should see warnings under the first two `let a = ...` but not the third

```

## Screen

Helps with #13282

scip: Generate symbols for local crates.

Consider something like:

```

// a.rs

pub struct Foo { .. } // Foo is "local 1"

fn something() {

crate:🅱️:Bar::new() // Bar is "local 1", but of "b.rs"

}

// b.rs

pub struct Bar { .. } // "local 1"

```

Without this there's no way to disambiguate whether "local 1" references "Bar" or "Foo".

feat: add config for inserting must_use in `generate_enum_as_method`

Should fix#13312

Didn't add a test because I was not sure on how to add test for a specific configuration option, tried to look for the usages for other `AssistConfig` variants but couldn't find any in `tests`. If there is a way to test this, do point me towards it.

I tried to extract the formatting string as a common `template_string` and only have if-else for that, but it didn't compile :(

Also it seems these tests are failing:

```

test config::tests::generate_config_documentation ... FAILED

test config::tests::generate_package_json_config ... FAILED

```

Can you also point me to how to correct these 😅 ( I guess there is some command to automatically generate these? )