Mirror of https://github.com/rust-lang/rust-analyzer (synced 2025-01-12 05:08:52 +00:00)

Merge #10067

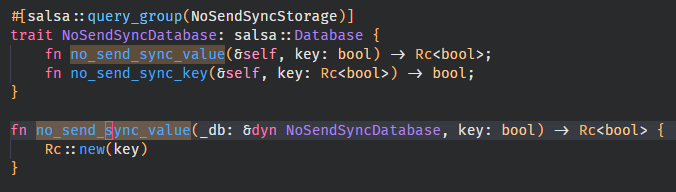

10067: Downmap tokens to all token descendants instead of just the first r=Veykril a=Veykril

With this we can now resolve usages of identifiers inside (proc-)macros even if they are used for different purposes multiple times inside the expansion. In the example here, the cursor is on the `no_send_sync_value` function, and we still highlight the identifier in the attribute invocation correctly because we now resolve its usages in there. Previously we only saw the first usage of the identifier, which is a definition only, so we bailed and didn't highlight it.

Note that most IDE features now have to be switched over to this explicitly, as pretty much everything expects a single node/token as the result of descending.

Co-authored-by: Lukas Wirth <lukastw97@gmail.com>

Commit 7c7a41c5e9

9 changed files with 198 additions and 105 deletions
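A toy model of the change described in the commit message, assuming a simplified representation rather than rust-analyzer's real types: `ExpandedToken`, `descend_first`, `descend_all` and `foo_bar_expansion` are hypothetical names. Each token of a macro expansion records which input token it was copied from. For `foo!(bar)`, using the `foo` macro from the tests in this commit, the input `bar` ends up as the function name, the return type and the struct name, so stopping at the first copy only ever finds a definition.

```rust
/// A token in a toy macro expansion, tagged with the id of the input token it
/// was copied from (`None` for tokens produced by the macro itself).
/// Hypothetical type, not part of rust-analyzer.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub struct ExpandedToken {
    pub text: &'static str,
    pub origin: Option<u32>,
}

/// Old behaviour: stop at the first expansion token that came from `input`.
pub fn descend_first(expansion: &[ExpandedToken], input: u32) -> Option<usize> {
    expansion.iter().position(|t| t.origin == Some(input))
}

/// New behaviour: return every expansion token that came from `input`.
pub fn descend_all(expansion: &[ExpandedToken], input: u32) -> Vec<usize> {
    expansion
        .iter()
        .enumerate()
        .filter(|(_, t)| t.origin == Some(input))
        .map(|(i, _)| i)
        .collect()
}

/// Toy expansion of `foo!(bar)`: `fn bar() -> bar { loop {} } struct bar;`,
/// where input token 0 is `bar`.
pub fn foo_bar_expansion() -> Vec<ExpandedToken> {
    let m = |text: &'static str| ExpandedToken { text, origin: None };
    let bar = ExpandedToken { text: "bar", origin: Some(0) };
    vec![
        m("fn"), bar, m("("), m(")"), m("->"), bar,
        m("{"), m("loop"), m("{"), m("}"), m("}"),
        m("struct"), bar, m(";"),
    ]
}
```

With this expansion, `descend_first` only reaches index 1 (the `fn` name), while `descend_all` also returns the return-type position 5 and the struct name at 12.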

@@ -2,7 +2,7 @@

 mod source_to_def;

-use std::{cell::RefCell, fmt, iter::successors};
+use std::{cell::RefCell, fmt};

 use base_db::{FileId, FileRange};
 use hir_def::{

@@ -14,6 +14,7 @@ use hir_expand::{name::AsName, ExpansionInfo};
 use hir_ty::{associated_type_shorthand_candidates, Interner};
 use itertools::Itertools;
 use rustc_hash::{FxHashMap, FxHashSet};
+use smallvec::{smallvec, SmallVec};
 use syntax::{
     algo::find_node_at_offset,
     ast::{self, GenericParamsOwner, LoopBodyOwner},

@@ -165,7 +166,13 @@ impl<'db, DB: HirDatabase> Semantics<'db, DB> {
         self.imp.speculative_expand(actual_macro_call, speculative_args, token_to_map)
     }

+    // FIXME: Rename to descend_into_macros_single
     pub fn descend_into_macros(&self, token: SyntaxToken) -> SyntaxToken {
+        self.imp.descend_into_macros(token).pop().unwrap()
+    }
+
+    // FIXME: Rename to descend_into_macros
+    pub fn descend_into_macros_many(&self, token: SyntaxToken) -> SmallVec<[SyntaxToken; 1]> {
         self.imp.descend_into_macros(token)
     }


@@ -174,7 +181,7 @@ impl<'db, DB: HirDatabase> Semantics<'db, DB> {
         node: &SyntaxNode,
         offset: TextSize,
     ) -> Option<N> {
-        self.imp.descend_node_at_offset(node, offset).find_map(N::cast)
+        self.imp.descend_node_at_offset(node, offset).flatten().find_map(N::cast)
     }

     pub fn hir_file_for(&self, syntax_node: &SyntaxNode) -> HirFileId {

@@ -228,7 +235,17 @@ impl<'db, DB: HirDatabase> Semantics<'db, DB> {
             return Some(it);
         }

-        self.imp.descend_node_at_offset(node, offset).find_map(N::cast)
+        self.imp.descend_node_at_offset(node, offset).flatten().find_map(N::cast)
     }

+    /// Find an AstNode by offset inside SyntaxNode, if it is inside *MacroCall*,
+    /// descend it and find again
+    pub fn find_nodes_at_offset_with_descend<'slf, N: AstNode + 'slf>(
+        &'slf self,
+        node: &SyntaxNode,
+        offset: TextSize,
+    ) -> impl Iterator<Item = N> + 'slf {
+        self.imp.descend_node_at_offset(node, offset).filter_map(|mut it| it.find_map(N::cast))
+    }
+
     pub fn resolve_lifetime_param(&self, lifetime: &ast::Lifetime) -> Option<LifetimeParam> {
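`find_node_at_offset_with_descend` flattens everything and returns the first match overall, while the new `find_nodes_at_offset_with_descend` returns up to one match per descended token: for each token it walks that token's ancestors and stops at the first one that casts to `N`. A sketch of that shape, using plain strings as stand-ins for syntax-node kinds; `first_match_per_token` and `as_name_like` are hypothetical helpers, not rust-analyzer APIs.

```rust
/// For each token, given as its ancestor chain (innermost first), keep the
/// first ancestor accepted by `cast`; tokens without a match are skipped.
/// Hypothetical helper mirroring `filter_map(|mut it| it.find_map(N::cast))`.
pub fn first_match_per_token<T, N, I, J>(tokens: I, cast: impl Fn(T) -> Option<N>) -> Vec<N>
where
    I: IntoIterator<Item = J>,
    J: IntoIterator<Item = T>,
{
    tokens
        .into_iter()
        // `find_map` stops pulling from the inner iterator at the first hit,
        // so ancestors above a matching node are never visited.
        .filter_map(|inner| inner.into_iter().find_map(&cast))
        .collect()
}

/// Toy cast: accept only `NAME` and `NAME_REF` kinds.
pub fn as_name_like(kind: &str) -> Option<String> {
    matches!(kind, "NAME" | "NAME_REF").then(|| kind.to_string())
}
```

Returning one result per token, rather than one overall, is what lets callers such as `FindUsages` see a name both as a definition and as a usage when a macro expands it twice.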

@@ -440,17 +457,20 @@ impl<'db> SemanticsImpl<'db> {
         )
     }

-    fn descend_into_macros(&self, token: SyntaxToken) -> SyntaxToken {
+    fn descend_into_macros(&self, token: SyntaxToken) -> SmallVec<[SyntaxToken; 1]> {
         let _p = profile::span("descend_into_macros");
         let parent = match token.parent() {
             Some(it) => it,
-            None => return token,
+            None => return smallvec![token],
         };
         let sa = self.analyze(&parent);
-
-        let token = successors(Some(InFile::new(sa.file_id, token)), |token| {
+        let mut queue = vec![InFile::new(sa.file_id, token)];
+        let mut cache = self.expansion_info_cache.borrow_mut();
+        let mut res = smallvec![];
+        while let Some(token) = queue.pop() {
             self.db.unwind_if_cancelled();

+            let was_not_remapped = (|| {
                 for node in token.value.ancestors() {
                     match_ast! {
                         match node {

@@ -468,59 +488,62 @@ impl<'db> SemanticsImpl<'db> {
                                     return None;
                                 }
                                 let file_id = sa.expand(self.db, token.with_value(&macro_call))?;
-                            let token = self
-                                .expansion_info_cache
-                                .borrow_mut()
+                                let tokens = cache
                                     .entry(file_id)
                                     .or_insert_with(|| file_id.expansion_info(self.db.upcast()))
                                     .as_ref()?
                                     .map_token_down(self.db.upcast(), None, token.as_ref())?;

+                                let len = queue.len();
+                                queue.extend(tokens.inspect(|token| {
                                     if let Some(parent) = token.value.parent() {
                                         self.cache(find_root(&parent), token.file_id);
                                     }
-
-                            return Some(token);
+                                }));
+                                return (queue.len() != len).then(|| ());
                             },
                             ast::Item(item) => {
                                 if let Some(call_id) = self.with_ctx(|ctx| ctx.item_to_macro_call(token.with_value(item.clone()))) {
                                     let file_id = call_id.as_file();
-                                let token = self
-                                    .expansion_info_cache
-                                    .borrow_mut()
+                                    let tokens = cache
                                         .entry(file_id)
                                         .or_insert_with(|| file_id.expansion_info(self.db.upcast()))
                                         .as_ref()?
                                         .map_token_down(self.db.upcast(), Some(item), token.as_ref())?;

+                                    let len = queue.len();
+                                    queue.extend(tokens.inspect(|token| {
                                         if let Some(parent) = token.value.parent() {
                                             self.cache(find_root(&parent), token.file_id);
                                         }
-
-                                return Some(token);
+                                    }));
+                                    return (queue.len() != len).then(|| ());
                                 }
                             },
                             _ => {}
                         }
                     }
                 }
-
                 None
-        })
-        .last()
-        .unwrap();
-        token.value
+            })().is_none();
+            if was_not_remapped {
+                res.push(token.value)
+            }
+        }
+        res
     }

+    // Note this return type is deliberate as [`find_nodes_at_offset_with_descend`] wants to stop
+    // traversing the inner iterator when it finds a node.
     fn descend_node_at_offset(
         &self,
         node: &SyntaxNode,
         offset: TextSize,
-    ) -> impl Iterator<Item = SyntaxNode> + '_ {
+    ) -> impl Iterator<Item = impl Iterator<Item = SyntaxNode> + '_> + '_ {
         // Handle macro token cases
         node.token_at_offset(offset)
-            .map(|token| self.descend_into_macros(token))
-            .map(|it| self.token_ancestors_with_macros(it))
+            .map(move |token| self.descend_into_macros(token))
+            .map(|it| it.into_iter().map(move |it| self.token_ancestors_with_macros(it)))
             .flatten()
     }

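The rewritten `descend_into_macros` swaps the `successors(..).last()` chain, which can follow only one token at a time, for a work queue: a token that maps into an expansion is replaced by every token it maps to, and only tokens that are not remapped any further end up in the result. A minimal model of that loop, assuming a hypothetical `down` table from a token id to the ids it maps to in the next expansion (standing in for `ExpansionInfo::map_token_down`); caching, cancellation and real syntax trees are left out, and `descend_through_expansions` is an illustrative name.

```rust
use std::collections::HashMap;

/// Collect every token reachable from `start` by repeatedly mapping tokens
/// down into macro expansions. A token is reported only if it is not remapped
/// any further, mirroring the `was_not_remapped` flag in the diff.
/// Assumes `down` contains no cycles (real expansions are finite).
pub fn descend_through_expansions(start: u32, down: &HashMap<u32, Vec<u32>>) -> Vec<u32> {
    let mut queue = vec![start];
    let mut res = Vec::new();
    while let Some(token) = queue.pop() {
        match down.get(&token) {
            // Remapped: continue with every mapped token, not just the first.
            Some(mapped) if !mapped.is_empty() => queue.extend(mapped.iter().copied()),
            // Not inside a macro call, or nothing mapped: keep the token itself.
            _ => res.push(token),
        }
    }
    res
}
```

As in the diff, the queue is used as a stack, so results are not returned in source order; a mapping that yields no tokens counts as "not remapped", which matches the `queue.len() != len` check.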

@@ -163,7 +163,7 @@ pub fn expand_speculative(
         mbe::token_tree_to_syntax_node(&speculative_expansion.value, fragment_kind).ok()?;

     let token_id = macro_def.map_id_down(token_id);
-    let range = tmap_2.range_by_token(token_id, token_to_map.kind())?;
+    let range = tmap_2.first_range_by_token(token_id, token_to_map.kind())?;
     let token = node.syntax_node().covering_element(range).into_token()?;
     Some((node.syntax_node(), token))
 }

@@ -171,7 +171,7 @@ impl HygieneInfo {
             },
         };

-        let range = token_map.range_by_token(token_id, SyntaxKind::IDENT)?;
+        let range = token_map.first_range_by_token(token_id, SyntaxKind::IDENT)?;
         Some((tt.with_value(range + tt.value), origin))
     }
 }

@@ -368,7 +368,7 @@ impl ExpansionInfo {
         db: &dyn db::AstDatabase,
         item: Option<ast::Item>,
         token: InFile<&SyntaxToken>,
-    ) -> Option<InFile<SyntaxToken>> {
+    ) -> Option<impl Iterator<Item = InFile<SyntaxToken>> + '_> {
         assert_eq!(token.file_id, self.arg.file_id);
         let token_id = if let Some(item) = item {
             let call_id = match self.expanded.file_id.0 {

@@ -411,11 +411,12 @@ impl ExpansionInfo {
             }
         };

-        let range = self.exp_map.range_by_token(token_id, token.value.kind())?;
+        let tokens = self
+            .exp_map
+            .ranges_by_token(token_id, token.value.kind())
+            .flat_map(move |range| self.expanded.value.covering_element(range).into_token());

-        let token = self.expanded.value.covering_element(range).into_token()?;
-
-        Some(self.expanded.with_value(token))
+        Some(tokens.map(move |token| self.expanded.with_value(token)))
     }

     pub fn map_token_up(

@@ -453,7 +454,7 @@ impl ExpansionInfo {
             },
         };

-        let range = token_map.range_by_token(token_id, token.value.kind())?;
+        let range = token_map.first_range_by_token(token_id, token.value.kind())?;
         let token =
             tt.value.covering_element(range + tt.value.text_range().start()).into_token()?;
         Some((tt.with_value(token), origin))

@@ -6,6 +6,7 @@ use ide_db::{
     search::{FileReference, ReferenceAccess, SearchScope},
     RootDatabase,
 };
+use rustc_hash::FxHashSet;
 use syntax::{
     ast::{self, LoopBodyOwner},
     match_ast, AstNode, SyntaxNode, SyntaxToken, TextRange, TextSize, T,

@@ -13,6 +14,7 @@ use syntax::{

 use crate::{display::TryToNav, references, NavigationTarget};

+#[derive(PartialEq, Eq, Hash)]
 pub struct HighlightedRange {
     pub range: TextRange,
     pub access: Option<ReferenceAccess>,

@@ -70,7 +72,7 @@ fn highlight_references(
     syntax: &SyntaxNode,
     FilePosition { offset, file_id }: FilePosition,
 ) -> Option<Vec<HighlightedRange>> {
-    let defs = find_defs(sema, syntax, offset)?;
+    let defs = find_defs(sema, syntax, offset);
     let usages = defs
         .iter()
         .flat_map(|&d| {

@@ -99,7 +101,12 @@ fn highlight_references(
             })
         });

-    Some(declarations.chain(usages).collect())
+    let res: FxHashSet<_> = declarations.chain(usages).collect();
+    if res.is_empty() {
+        None
+    } else {
+        Some(res.into_iter().collect())
+    }
 }

 fn highlight_exit_points(
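Because descent can now reach the same definition or range through several tokens, `highlight_references` collects into a hash set before returning, which is why `HighlightedRange`, `Definition` and `ReferenceAccess` gain `Eq`/`Hash` derives in this commit (one test also drops a duplicated `//^^^^^ read` annotation). A sketch of that tail with simplified stand-in types, using `std::collections::HashSet` in place of `FxHashSet`; `Access`, `Highlight` and `dedup_highlights` are hypothetical names.

```rust
use std::collections::HashSet;
use std::ops::Range;

/// Stand-in for `ReferenceAccess`; `Eq` and `Hash` are needed for set membership.
#[derive(Debug, Clone, Copy, PartialEq, Eq, Hash)]
pub enum Access {
    Read,
    Write,
}

/// Stand-in for `HighlightedRange`.
#[derive(Debug, Clone, PartialEq, Eq, Hash)]
pub struct Highlight {
    pub range: Range<u32>,
    pub access: Option<Access>,
}

/// Deduplicate highlights and return `None` when nothing was found,
/// following the shape of the new tail of `highlight_references`.
pub fn dedup_highlights(
    declarations: impl Iterator<Item = Highlight>,
    usages: impl Iterator<Item = Highlight>,
) -> Option<Vec<Highlight>> {
    let res: HashSet<Highlight> = declarations.chain(usages).collect();
    if res.is_empty() {
        None
    } else {
        Some(res.into_iter().collect())
    }
}
```

As with `FxHashSet`, the order of the returned ranges is unspecified, so a caller that needs a stable order would have to sort them.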

@@ -270,29 +277,40 @@ fn find_defs(
     sema: &Semantics<RootDatabase>,
     syntax: &SyntaxNode,
     offset: TextSize,
-) -> Option<Vec<Definition>> {
-    let defs = match sema.find_node_at_offset_with_descend(syntax, offset)? {
-        ast::NameLike::NameRef(name_ref) => match NameRefClass::classify(sema, &name_ref)? {
+) -> FxHashSet<Definition> {
+    sema.find_nodes_at_offset_with_descend(syntax, offset)
+        .flat_map(|name_like| {
+            Some(match name_like {
+                ast::NameLike::NameRef(name_ref) => {
+                    match NameRefClass::classify(sema, &name_ref)? {
                         NameRefClass::Definition(def) => vec![def],
                         NameRefClass::FieldShorthand { local_ref, field_ref } => {
                             vec![Definition::Local(local_ref), Definition::Field(field_ref)]
                         }
-        },
+                    }
+                }
                 ast::NameLike::Name(name) => match NameClass::classify(sema, &name)? {
                     NameClass::Definition(it) | NameClass::ConstReference(it) => vec![it],
                     NameClass::PatFieldShorthand { local_def, field_ref } => {
                         vec![Definition::Local(local_def), Definition::Field(field_ref)]
                     }
                 },
-        ast::NameLike::Lifetime(lifetime) => NameRefClass::classify_lifetime(sema, &lifetime)
+                ast::NameLike::Lifetime(lifetime) => {
+                    NameRefClass::classify_lifetime(sema, &lifetime)
                         .and_then(|class| match class {
                             NameRefClass::Definition(it) => Some(it),
                             _ => None,
                         })
-            .or_else(|| NameClass::classify_lifetime(sema, &lifetime).and_then(NameClass::defined))
-            .map(|it| vec![it])?,
-    };
-    Some(defs)
+                        .or_else(|| {
+                            NameClass::classify_lifetime(sema, &lifetime)
+                                .and_then(NameClass::defined)
+                        })
+                        .map(|it| vec![it])?
+                }
+            })
+        })
+        .flatten()
+        .collect()
 }

 #[cfg(test)]
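`find_defs` now classifies every name-like node that the descent yields. Its closure returns `Option<Vec<Definition>>`: `flat_map` drops the `None`s (an `Option` iterates over zero or one items), `flatten` unpacks each `Vec`, and collecting into a set merges duplicates. The same pipeline over strings, with a hypothetical `classify` standing in for `NameClass`/`NameRefClass` and an illustrative `collect_defs` in place of the real function:

```rust
use std::collections::HashSet;

/// Hypothetical classifier: a name may resolve to nothing, one definition, or
/// two (the latter mimics the field-shorthand case `Struct { field }`).
pub fn classify(name: &str) -> Option<Vec<String>> {
    match name {
        "unknown" => None,
        "field" => Some(vec!["local:field".to_string(), "field:field".to_string()]),
        other => Some(vec![format!("item:{other}")]),
    }
}

/// Classify every candidate name and merge the definitions into one set.
pub fn collect_defs<'a>(names: impl Iterator<Item = &'a str>) -> HashSet<String> {
    names
        .flat_map(classify) // Option<Vec<_>> -> Vec<_>, skipping `None`
        .flatten() // Vec<_> -> individual definitions
        .collect()
}
```

Returning a set instead of `Option<Vec<_>>` also means a name that cannot be classified no longer aborts the whole lookup with `?`; it simply contributes nothing.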

@@ -392,6 +410,45 @@ fn foo() {
         );
     }

+    #[test]
+    fn test_multi_macro_usage() {
+        check(
+            r#"
+macro_rules! foo {
+    ($ident:ident) => {
+        fn $ident() -> $ident { loop {} }
+        struct $ident;
+    }
+}
+
+foo!(bar$0);
+  // ^^^
+fn foo() {
+    let bar: bar = bar();
+          // ^^^
+                // ^^^
+}
+"#,
+        );
+        check(
+            r#"
+macro_rules! foo {
+    ($ident:ident) => {
+        fn $ident() -> $ident { loop {} }
+        struct $ident;
+    }
+}
+
+foo!(bar);
+  // ^^^
+fn foo() {
+    let bar: bar$0 = bar();
+          // ^^^
+}
+"#,
+        );
+    }
+
     #[test]
     fn test_hl_yield_points() {
         check(

@@ -813,7 +870,6 @@ fn function(field: u32) {
           //^^^^^
     Struct { field$0 }
            //^^^^^ read
-           //^^^^^ read
 }
 "#,
         );

@@ -17,7 +17,7 @@ use syntax::{
 use crate::RootDatabase;

 // FIXME: a more precise name would probably be `Symbol`?
-#[derive(Debug, PartialEq, Eq, Copy, Clone)]
+#[derive(Debug, PartialEq, Eq, Copy, Clone, Hash)]
 pub enum Definition {
     Macro(MacroDef),
     Field(Field),

@@ -61,7 +61,7 @@ pub struct FileReference {
     pub access: Option<ReferenceAccess>,
 }

-#[derive(Debug, Copy, Clone, PartialEq)]
+#[derive(Debug, Copy, Clone, PartialEq, Eq, Hash)]
 pub enum ReferenceAccess {
     Read,
     Write,

@@ -393,7 +393,7 @@ impl<'a> FindUsages<'a> {
                     continue;
                 }

-                if let Some(name) = sema.find_node_at_offset_with_descend(&tree, offset) {
+                for name in sema.find_nodes_at_offset_with_descend(&tree, offset) {
                     if match name {
                         ast::NameLike::NameRef(name_ref) => self.found_name_ref(&name_ref, sink),
                         ast::NameLike::Name(name) => self.found_name(&name, sink),

@@ -410,9 +410,7 @@ impl<'a> FindUsages<'a> {
                     continue;
                 }

-                if let Some(ast::NameLike::NameRef(name_ref)) =
-                    sema.find_node_at_offset_with_descend(&tree, offset)
-                {
+                for name_ref in sema.find_nodes_at_offset_with_descend(&tree, offset) {
                     if self.found_self_ty_name_ref(self_ty, &name_ref, sink) {
                         return;
                     }

@@ -58,8 +58,9 @@ macro_rules! foobar {
     let (node, token_map) = token_tree_to_syntax_node(&expanded, FragmentKind::Items).unwrap();
     let content = node.syntax_node().to_string();

-    let get_text =
-        |id, kind| -> String { content[token_map.range_by_token(id, kind).unwrap()].to_string() };
+    let get_text = |id, kind| -> String {
+        content[token_map.first_range_by_token(id, kind).unwrap()].to_string()
+    };

     assert_eq!(expanded.token_trees.len(), 4);
     // {($e:ident) => { fn $e() {} }}

@@ -46,9 +46,23 @@ impl TokenMap {
         Some(token_id)
     }

-    pub fn range_by_token(&self, token_id: tt::TokenId, kind: SyntaxKind) -> Option<TextRange> {
-        let &(_, range) = self.entries.iter().find(|(tid, _)| *tid == token_id)?;
-        range.by_kind(kind)
+    pub fn ranges_by_token(
+        &self,
+        token_id: tt::TokenId,
+        kind: SyntaxKind,
+    ) -> impl Iterator<Item = TextRange> + '_ {
+        self.entries
+            .iter()
+            .filter(move |&&(tid, _)| tid == token_id)
+            .filter_map(move |(_, range)| range.by_kind(kind))
     }

+    pub fn first_range_by_token(
+        &self,
+        token_id: tt::TokenId,
+        kind: SyntaxKind,
+    ) -> Option<TextRange> {
+        self.ranges_by_token(token_id, kind).next()
+    }
+
     pub(crate) fn shrink_to_fit(&mut self) {
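After this change a `TokenMap` lookup can report every range a token id was expanded to (`ranges_by_token`), while `first_range_by_token` keeps a single-result lookup for callers that still expect one token. A simplified model, assuming plain `u32` ids and `Range<u32>` offsets instead of `tt::TokenId` and `TextRange`, and leaving out the per-`SyntaxKind` selection done by `by_kind`; `ToyTokenMap` is a hypothetical name.

```rust
use std::ops::Range;

/// Simplified stand-in for `mbe::TokenMap`: a flat list of
/// (token id, range in the expanded text) entries.
#[derive(Debug, Default)]
pub struct ToyTokenMap {
    entries: Vec<(u32, Range<u32>)>,
}

impl ToyTokenMap {
    pub fn insert(&mut self, token_id: u32, range: Range<u32>) {
        self.entries.push((token_id, range));
    }

    /// Every range produced from `token_id`, in insertion order.
    pub fn ranges_by_token(&self, token_id: u32) -> impl Iterator<Item = Range<u32>> + '_ {
        self.entries
            .iter()
            .filter(move |(tid, _)| *tid == token_id)
            .map(|(_, range)| range.clone())
    }

    /// Only the first such range, i.e. the single-result lookup.
    pub fn first_range_by_token(&self, token_id: u32) -> Option<Range<u32>> {
        self.ranges_by_token(token_id).next()
    }
}
```

For the `foo!(bar)` expansion `fn bar() -> bar { loop {} } struct bar;`, token 0 (`bar`) maps to three ranges; a first-only lookup sees just the one in the function name.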
