A recent addition must have removed the `create_default_context` function from `nu_command`, which breaks the benchmarks 😮

## before this PR

```bash
cargo check --all-targets --workspace
```

gives a bunch of

```bash
error[E0425]: cannot find function `create_default_context` in crate `nu_command`
  --> benches/benchmarks.rs:93:48
   |
93 |     let mut engine_state = nu_command::create_default_context();
   |                                        ^^^^^^^^^^^^^^^^^^^^^^ not found in `nu_command`
   |
help: consider importing this function
   |
1  | use nu_cmd_lang::create_default_context;
   |
help: if you import `create_default_context`, refer to it directly
   |
93 -     let mut engine_state = nu_command::create_default_context();
93 +     let mut engine_state = create_default_context();
   |
```

and `cargo bench` does not run...

## with this PR

```bash
cargo check --all-targets --workspace
```

is now happy, and the benchmarks run again with `cargo bench`
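For illustration, here is one plausible shape of the fix, following the compiler's own `help:` suggestion. This is only a sketch: the `nu_cmd_lang` module and `EngineState` struct below are hypothetical stand-ins so the snippet compiles on its own, and `setup_engine_state` is an invented wrapper around the failing line. The actual change in this PR may differ.

```rust
// Hypothetical stand-in for the real `nu_cmd_lang` crate, so that this
// sketch compiles without any Nushell dependencies.
mod nu_cmd_lang {
    /// Placeholder for the real engine state type (assumption).
    #[derive(Debug, Default, PartialEq)]
    pub struct EngineState {
        pub num_commands: usize,
    }

    /// Stand-in for the function the compiler suggests importing.
    pub fn create_default_context() -> EngineState {
        EngineState::default()
    }
}

// The import suggested by the compiler's help text.
use nu_cmd_lang::create_default_context;

/// Mirrors the failing line in `benches/benchmarks.rs`, with the stale
/// `nu_command::` prefix dropped in favour of the imported function.
pub fn setup_engine_state() -> nu_cmd_lang::EngineState {
    create_default_context()
}
```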

---------

Co-authored-by: sholderbach <sholderbach@users.noreply.github.com>

# Description

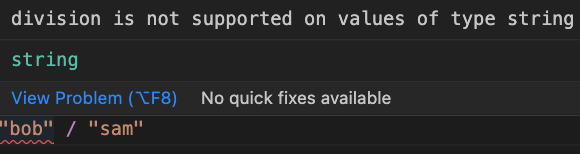

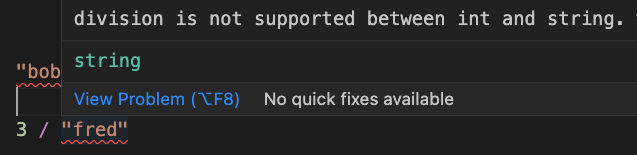

This improves the operation mismatch error in a few ways:

* We now detect if the left-hand side of the operation is at fault, and show a simpler error/error message if it is
* Removed the unhelpful hint
* Updated the error text to make it clear what types are causing the issue
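The left-hand-side check described above can be sketched roughly as follows. This is a minimal illustration and not the parser's actual code: `Type`, `Operator`, `OpError`, `lhs_supports`, and `check_binary_op` are all hypothetical names, and the type rules are heavily simplified. The idea is to first ask whether the left-hand type can be used with the operator at all, and only then report a mismatch that names both types.

```rust
/// Hypothetical, simplified value types (not Nushell's real `Type` enum).
#[derive(Debug, Clone, Copy, PartialEq)]
pub enum Type {
    Int,
    Float,
    String,
    Bool,
}

/// Hypothetical operator set, reduced to two cases for illustration.
#[derive(Debug, Clone, Copy, PartialEq)]
pub enum Operator {
    Plus,
    Multiply,
}

#[derive(Debug, PartialEq)]
pub enum OpError {
    /// The left-hand side cannot be used with this operator at all,
    /// so the simpler error only needs to mention the left-hand type.
    UnsupportedLhs { op: Operator, lhs: Type },
    /// The left-hand side is acceptable, but the two types do not combine;
    /// the error names both types.
    Mismatch { op: Operator, lhs: Type, rhs: Type },
}

/// Returns true if `lhs` is usable with `op` for at least one right-hand type.
fn lhs_supports(op: Operator, lhs: Type) -> bool {
    match op {
        Operator::Plus => matches!(lhs, Type::Int | Type::Float | Type::String),
        Operator::Multiply => matches!(lhs, Type::Int | Type::Float),
    }
}

/// Type-checks a binary operation, blaming the left-hand side first.
pub fn check_binary_op(op: Operator, lhs: Type, rhs: Type) -> Result<Type, OpError> {
    if !lhs_supports(op, lhs) {
        return Err(OpError::UnsupportedLhs { op, lhs });
    }
    match (op, lhs, rhs) {
        (_, Type::Int, Type::Int) => Ok(Type::Int),
        (_, Type::Int | Type::Float, Type::Int | Type::Float) => Ok(Type::Float),
        (Operator::Plus, Type::String, Type::String) => Ok(Type::String),
        _ => Err(OpError::Mismatch { op, lhs, rhs }),
    }
}
```

With these simplified rules, a boolean on the left of `Plus` yields `UnsupportedLhs`, while an integer on the left and a string on the right yields `Mismatch` carrying both types.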

# User-Facing Changes

Error texts and spans will change

# Tests + Formatting

Don't forget to add tests that cover your changes.

Make sure you've run and fixed any issues with these commands:

- `cargo fmt --all -- --check` to check standard code formatting (`cargo fmt --all` applies these changes)
- `cargo clippy --workspace -- -D warnings -D clippy::unwrap_used -A clippy::needless_collect` to check that you're using the standard code style
- `cargo test --workspace` to check that all tests pass
- `cargo run -- crates/nu-utils/standard_library/tests.nu` to run the tests for the standard library

> **Note**
> from `nushell` you can also use the `toolkit` as follows
> ```bash
> use toolkit.nu  # or use an `env_change` hook to activate it automatically
> toolkit check pr
> ```

# After Submitting

If your PR had any user-facing changes, update [the documentation](https://github.com/nushell/nushell.github.io) after the PR is merged, if necessary. This will help us keep the docs up to date.

# Description

Broken after #7415

We currently don't try to build the benchmarks in CI, so this slipped through the cracks.

# User-Facing Changes

None

# Tests + Formatting

A compile check for the benchmarks is currently missing, but work towards adding one is underway.

I've been using the new Criterion benchmarks and I noticed that they take a _long_ time to build before the benchmark can run. Turns out `cargo build` was building 3 separate benchmarking binaries with most of Nu's functionality in each one.

As a simple temporary fix, I've moved all the benchmarks into a single file so that we only build 1 binary.

### Future work

Would be nice to split the unrelated benchmarks out into modules, but when I did that a separate binary still got built for each one. I suspect Criterion's macros are doing something funny with module or file names. I've left a FIXME in the code to investigate this further.