use miette::IntoDiagnostic;
use nu_cli::NuCompleter;
use nu_parser::{flatten_block, parse, FlatShape};
use nu_protocol::{
    engine::{EngineState, Stack, StateWorkingSet},
    eval_const::create_nu_constant,
    report_error, DeclId, ShellError, Span, Value, VarId, NU_VARIABLE_ID,
};
use reedline::Completer;

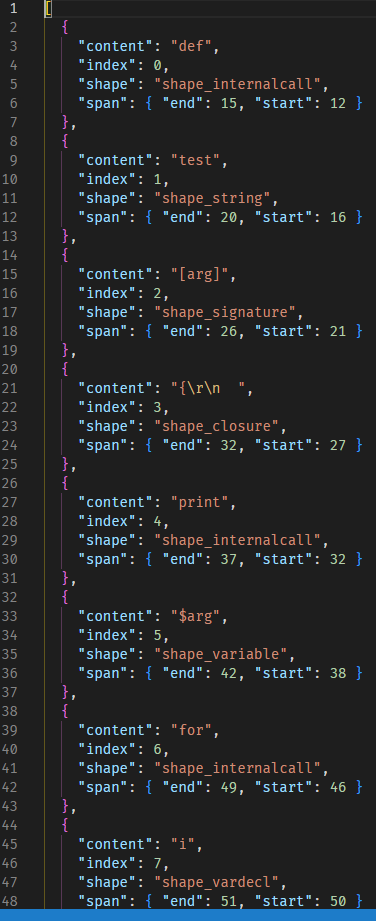

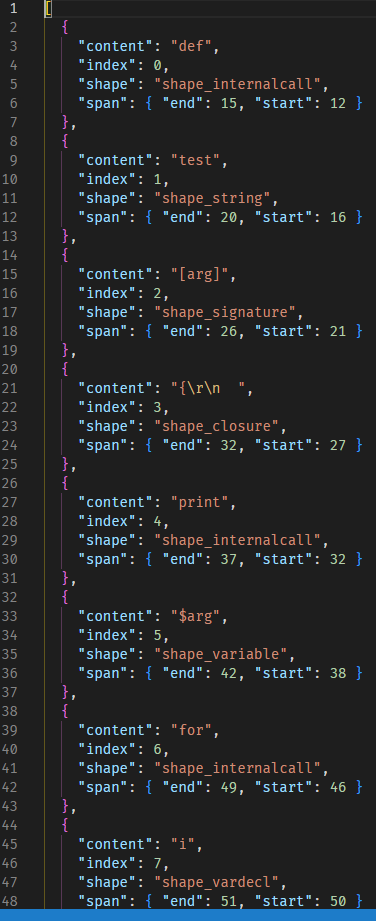

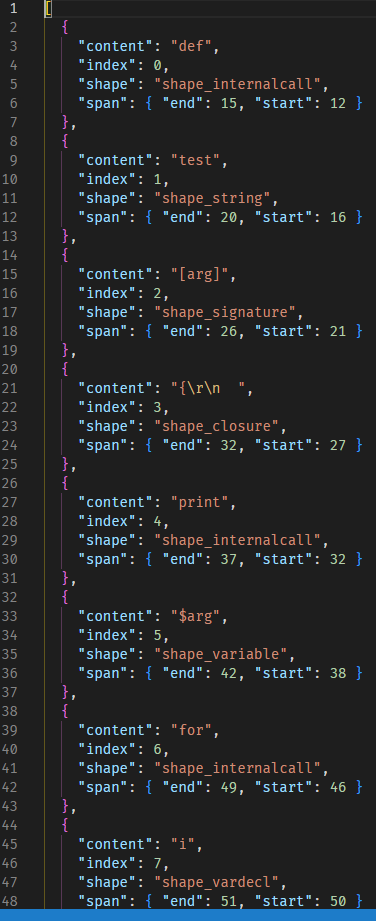

add `--ide-ast` for a simplistic ast for editors (#8995)

# Description

This is WIP. This generates a simplistic AST so that we can call it from the vscode side for semantic token highlighting. The output is a minified version of this screenshot.

The script

```
def test [arg] {
  print $arg
  for i in (seq 1 10) {
    echo $i
  }
}
```

The simplistic AST

```json
[{"content":"def","index":0,"shape":"shape_internalcall","span":{"end":15,"start":12}},{"content":"test","index":1,"shape":"shape_string","span":{"end":20,"start":16}},{"content":"[arg]","index":2,"shape":"shape_signature","span":{"end":26,"start":21}},{"content":"{\r\n ","index":3,"shape":"shape_closure","span":{"end":32,"start":27}},{"content":"print","index":4,"shape":"shape_internalcall","span":{"end":37,"start":32}},{"content":"$arg","index":5,"shape":"shape_variable","span":{"end":42,"start":38}},{"content":"for","index":6,"shape":"shape_internalcall","span":{"end":49,"start":46}},{"content":"i","index":7,"shape":"shape_vardecl","span":{"end":51,"start":50}},{"content":"in","index":8,"shape":"shape_keyword","span":{"end":54,"start":52}},{"content":"(","index":9,"shape":"shape_block","span":{"end":56,"start":55}},{"content":"seq","index":10,"shape":"shape_internalcall","span":{"end":59,"start":56}},{"content":"1","index":11,"shape":"shape_int","span":{"end":61,"start":60}},{"content":"10","index":12,"shape":"shape_int","span":{"end":64,"start":62}},{"content":")","index":13,"shape":"shape_block","span":{"end":65,"start":64}},{"content":"{\r\n ","index":14,"shape":"shape_block","span":{"end":73,"start":66}},{"content":"echo","index":15,"shape":"shape_internalcall","span":{"end":77,"start":73}},{"content":"$i","index":16,"shape":"shape_variable","span":{"end":80,"start":78}},{"content":"\r\n }","index":17,"shape":"shape_block","span":{"end":85,"start":80}},{"content":"\r\n}","index":18,"shape":"shape_closure","span":{"end":88,"start":85}}]
```

# User-Facing Changes

<!-- List of all changes that impact the user experience here. This helps us keep track of breaking changes. -->

# Tests + Formatting

<!--
Don't forget to add tests that cover your changes.

Make sure you've run and fixed any issues with these commands:

- `cargo fmt --all -- --check` to check standard code formatting (`cargo fmt --all` applies these changes)
- `cargo clippy --workspace -- -D warnings -D clippy::unwrap_used -A clippy::needless_collect` to check that you're using the standard code style
- `cargo test --workspace` to check that all tests pass
- `cargo run -- crates/nu-std/tests/run.nu` to run the tests for the standard library

> **Note**
> from `nushell` you can also use the `toolkit` as follows
> ```bash
> use toolkit.nu # or use an `env_change` hook to activate it automatically
> toolkit check pr
> ```
-->

# After Submitting

<!-- If your PR had any user-facing changes, update [the documentation](https://github.com/nushell/nushell.github.io) after the PR is merged, if necessary. This will help us keep the docs up to date. -->
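The flattened output above pairs each token's source text with its position in the list, its shape name, and its byte span. Below is a minimal, std-only sketch of producing JSON in that shape, with keys in the same order as the sample. `Token`, `escape_json`, and `simplistic_ast` are hypothetical names. Spans here are file-relative byte offsets. The real command works on nushell's flattened `(Span, FlatShape)` pairs and may serialize differently (for example through `serde_json`).

```rust
// Hypothetical stand-in for one flattened (Span, FlatShape) pair;
// the shape name is passed in as a plain string.
struct Token {
    start: usize,
    end: usize,
    shape: &'static str,
}

/// Escapes a string for use inside a JSON string literal.
fn escape_json(s: &str) -> String {
    let mut out = String::with_capacity(s.len());
    for c in s.chars() {
        match c {
            '"' => out.push_str("\\\""),
            '\\' => out.push_str("\\\\"),
            '\n' => out.push_str("\\n"),
            '\r' => out.push_str("\\r"),
            '\t' => out.push_str("\\t"),
            c if (c as u32) < 0x20 => out.push_str(&format!("\\u{:04x}", c as u32)),
            c => out.push(c),
        }
    }
    out
}

/// Renders tokens as a minified JSON array of
/// {"content", "index", "shape", "span": {"end", "start"}} objects.
fn simplistic_ast(source: &str, tokens: &[Token]) -> String {
    let items: Vec<String> = tokens
        .iter()
        .enumerate()
        .map(|(index, t)| {
            format!(
                "{{\"content\":\"{}\",\"index\":{},\"shape\":\"{}\",\"span\":{{\"end\":{},\"start\":{}}}}}",
                escape_json(&source[t.start..t.end]),
                index,
                t.shape,
                t.end,
                t.start
            )
        })
        .collect();
    format!("[{}]", items.join(","))
}

fn main() {
    let source = "print $arg";
    let tokens = [
        Token { start: 0, end: 5, shape: "shape_internalcall" },
        Token { start: 6, end: 10, shape: "shape_variable" },
    ];
    println!("{}", simplistic_ast(source, &tokens));
}
```

Escaping matters because token contents such as `{\r\n ` contain control characters that must not appear raw inside a JSON string.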

use serde_json::{json, Value as JsonValue};
use std::sync::Arc;

#[derive(Debug)]
enum Id {
    Variable(VarId),
    Declaration(DeclId),
    Value(FlatShape),
}

fn find_id(
    working_set: &mut StateWorkingSet,
    file_path: &str,
    file: &[u8],
    location: &Value,
) -> Option<(Id, usize, Span)> {
    let file_id = working_set.add_file(file_path.to_string(), file);
    let offset = working_set.get_span_for_file(file_id).start;
    let block = parse(working_set, Some(file_path), file, false);
    let flattened = flatten_block(working_set, &block);

    if let Ok(location) = location.as_i64() {
        let location = location as usize + offset;
        for item in flattened {
            if location >= item.0.start && location < item.0.end {
                match &item.1 {
                    FlatShape::Variable(var_id) | FlatShape::VarDecl(var_id) => {
                        return Some((Id::Variable(*var_id), offset, item.0));
                    }
                    FlatShape::InternalCall(decl_id) => {
                        return Some((Id::Declaration(*decl_id), offset, item.0));
                    }
                    _ => return Some((Id::Value(item.1), offset, item.0)),
                }
            }
        }
        None
    } else {
        None
    }
}
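`find_id` converts the editor's file-relative byte offset into the working set's global coordinates by adding the file's start offset. It then returns the first flattened item whose half-open span `[start, end)` contains that position. Here is the lookup on its own, as a hedged sketch. `Span` is a hypothetical stand-in, not `nu_protocol::Span`, and `find_at` is an illustrative name.

```rust
// Hypothetical stand-in for nu_protocol::Span (byte offsets, end exclusive).
#[derive(Debug, Clone, Copy, PartialEq)]
struct Span {
    start: usize,
    end: usize,
}

/// Returns the first flattened item whose span contains the cursor.
/// `cursor` is file-relative; `file_offset` is where the file starts
/// in the working set's global span space.
fn find_at<T>(flattened: &[(Span, T)], cursor: usize, file_offset: usize) -> Option<&(Span, T)> {
    let global = cursor + file_offset;
    flattened
        .iter()
        .find(|(span, _)| global >= span.start && global < span.end)
}

fn main() {
    let flattened = [
        (Span { start: 100, end: 103 }, "def"),
        (Span { start: 104, end: 108 }, "test"),
    ];
    // Cursor on the "e" of "def" (file-relative offset 1).
    if let Some((span, shape)) = find_at(&flattened, 1, 100) {
        println!("{shape} at {}..{}", span.start - 100, span.end - 100);
    }
}
```

Because the end is exclusive, a cursor sitting on the whitespace between two tokens matches nothing.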

fn read_in_file<'a>(
    engine_state: &'a mut EngineState,
    file_path: &str,
) -> (Vec<u8>, StateWorkingSet<'a>) {
    let file = std::fs::read(file_path)
        .into_diagnostic()
        .unwrap_or_else(|e| {
            let working_set = StateWorkingSet::new(engine_state);
            report_error(
                &working_set,
                &ShellError::FileNotFoundCustom {
                    msg: format!("Could not read file '{}': {:?}", file_path, e.to_string()),
                    span: Span::unknown(),
                },
            );
            std::process::exit(1);
        });

    engine_state.start_in_file(Some(file_path));

    let working_set = StateWorkingSet::new(engine_state);

    (file, working_set)
}

pub fn check(engine_state: &mut EngineState, file_path: &str, max_errors: &Value) {
    let cwd = std::env::current_dir().expect("Could not get current working directory.");
    engine_state.add_env_var("PWD".into(), Value::test_string(cwd.to_string_lossy()));
    let working_set = StateWorkingSet::new(engine_state);

    let nu_const = match create_nu_constant(engine_state, Span::unknown()) {
        Ok(nu_const) => nu_const,
        Err(err) => {
            report_error(&working_set, &err);
            std::process::exit(1);
        }
    };
    engine_state.set_variable_const_val(NU_VARIABLE_ID, nu_const);

    let mut working_set = StateWorkingSet::new(engine_state);
    let file = std::fs::read(file_path);

    let max_errors = if let Ok(max_errors) = max_errors.as_i64() {
        max_errors as usize
    } else {
        100
    };

    if let Ok(contents) = file {
        let offset = working_set.next_span_start();
        let block = parse(&mut working_set, Some(file_path), &contents, false);

        for (idx, err) in working_set.parse_errors.iter().enumerate() {
            if idx >= max_errors {
                // eprintln!("Too many errors, stopping here. idx: {idx} max_errors: {max_errors}");
                break;
            }
            let mut span = err.span();
            span.start -= offset;
            span.end -= offset;

            let msg = err.to_string();

            println!(
                "{}",
                json!({
                    "type": "diagnostic",
                    "severity": "Error",
                    "message": msg,
                    "span": {
                        "start": span.start,
                        "end": span.end
                    }
                })
            );
        }

        let flattened = flatten_block(&working_set, &block);

        for flat in flattened {
            if let FlatShape::VarDecl(var_id) = flat.1 {
                let var = working_set.get_variable(var_id);
                println!(
                    "{}",
                    json!({
                        "type": "hint",
                        "typename": var.ty.to_string(),
                        "position": {
                            "start": flat.0.start - offset,
                            "end": flat.0.end - offset
                        }
                    })
                );
            }
        }
    }
}
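`check` reports at most `max_errors` parse errors, falling back to 100 when the value is not an integer. It also shifts every span back by the file's start offset, so the editor receives file-relative positions. Below is a std-only sketch of just that bookkeeping. Errors are modeled as hypothetical `(message, start, end)` tuples, and `diagnostics` is an illustrative name, not a nushell function.

```rust
/// Hypothetical sketch: `errors` are (message, global_start, global_end).
/// Returns at most `max_errors` diagnostics with file-relative spans.
fn diagnostics(
    errors: &[(&str, usize, usize)],
    offset: usize,
    max_errors: Option<i64>,
) -> Vec<(String, usize, usize)> {
    // Mirrors the `as usize` cast and the default of 100 used by `check`.
    let limit = max_errors.map(|m| m as usize).unwrap_or(100);
    errors
        .iter()
        .take(limit)
        .map(|&(msg, start, end)| (msg.to_string(), start - offset, end - offset))
        .collect()
}

fn main() {
    let errors = [("unexpected token", 112, 115), ("missing brace", 140, 141)];
    for (msg, start, end) in diagnostics(&errors, 100, Some(1)) {
        println!("{msg}: {start}..{end}");
    }
}
```

Using `take(limit)` is equivalent to the `idx >= max_errors` break in the loop above.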

pub fn goto_def(engine_state: &mut EngineState, file_path: &str, location: &Value) {
    let cwd = std::env::current_dir().expect("Could not get current working directory.");
    engine_state.add_env_var("PWD".into(), Value::test_string(cwd.to_string_lossy()));

    let (file, mut working_set) = read_in_file(engine_state, file_path);

    match find_id(&mut working_set, file_path, &file, location) {
        Some((Id::Declaration(decl_id), ..)) => {
            let result = working_set.get_decl(decl_id);
            if let Some(block_id) = result.get_block_id() {
                let block = working_set.get_block(block_id);
                if let Some(span) = &block.span {
                    for file in working_set.files() {
                        if span.start >= file.1 && span.start < file.2 {
                            println!(
                                "{}",
                                json!(
                                    {

Make EngineState clone cheaper with Arc on all of the heavy objects (#12229)

# Description

This makes many of the larger objects in `EngineState` into `Arc`, and uses `Arc::make_mut` to do clone-on-write if the reference is not unique. This is generally very cheap, giving us the best of both worlds: allowing us to mutate without cloning if we have an exclusive reference, and cloning if we don't.

This started as more of a curiosity for me after remembering that `Arc::make_mut` exists and can make using `Arc` for mostly immutable data that sometimes needs to be changed very convenient, and also after hearing someone complain about memory usage on Discord - this is a somewhat significant win for that.

The exact objects that were wrapped in `Arc`:

- `files`, `file_contents` - the strings and byte buffers
- `decls` - the whole `Vec`, but mostly to avoid lots of individual `malloc()` calls on Clone rather than for memory usage
- `blocks` - the blocks themselves, rather than the outer Vec
- `modules` - the modules themselves, rather than the outer Vec
- `env_vars`, `previous_env_vars` - the entire maps
- `config`

The changes required were relatively minimal, but this is a breaking API change. In particular, blocks are added as Arcs, to allow the parser cache functionality to work.

With my normal nu config, running on Linux, this saves me about 15 MiB of process memory usage when running interactively (65 MiB → 50 MiB).

This also makes quick command executions cheaper, particularly since every REPL loop now involves a clone of the engine state so that we can recover from a panic. It also reduces memory usage where engine state needs to be cloned and sent to another thread or kept within an iterator.

# User-Facing Changes

Shouldn't be any, since it's all internal stuff, but it does change some public interfaces, so it's a breaking change.
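The clone-on-write behavior described above comes from `Arc::make_mut`. It hands back `&mut T` directly when the `Arc` is the only strong reference, and it clones the inner value first when the value is shared. The following small std-only illustration shows both cases. `Decls` and `add_decl` are hypothetical stand-ins, not the real `EngineState` field or API.

```rust
use std::sync::Arc;

// Hypothetical stand-in for one of EngineState's heavy fields.
#[derive(Clone, Debug, PartialEq)]
struct Decls(Vec<String>);

fn add_decl(decls: &mut Arc<Decls>, name: &str) {
    // make_mut clones the inner Decls only if another Arc still points at it.
    Arc::make_mut(decls).0.push(name.to_string());
}

fn main() {
    let mut state = Arc::new(Decls(vec!["ls".to_string()]));

    // Cloning the Arc is cheap: it only bumps the reference count.
    let snapshot = Arc::clone(&state);

    // Shared, so this write clones first; the snapshot is untouched.
    add_decl(&mut state, "cd");
    assert_eq!(snapshot.0, vec!["ls"]);
    assert_eq!(state.0, vec!["ls", "cd"]);

    // Once unique again, make_mut mutates in place without cloning.
    drop(snapshot);
    let before = Arc::as_ptr(&state);
    add_decl(&mut state, "pwd");
    assert_eq!(Arc::as_ptr(&state), before);
}
```

The cost of a deep copy is only paid when a write actually happens while the data is shared, which is what makes cloning the whole engine state on each REPL iteration affordable.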

                                        "file": &**file.0,
                                        "start": span.start - file.1,
                                        "end": span.end - file.1
                                    }
                                )
                            );
                            return;
                        }
                    }
                }
            }
        }
        Some((Id::Variable(var_id), ..)) => {
            let var = working_set.get_variable(var_id);
            for file in working_set.files() {
                if var.declaration_span.start >= file.1 && var.declaration_span.start < file.2 {
                    println!(
                        "{}",
                        json!(
                            {


                                "file": &**file.0,
                                "start": var.declaration_span.start - file.1,
                                "end": var.declaration_span.end - file.1
                            }
                        )
                    );
                    return;
                }
            }
        }
        _ => {}
    }

    println!("{{}}");
}
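`goto_def` resolves a definition's global span to a file by scanning `working_set.files()` for the range that contains the span's start. It then reports offsets relative to that file, or prints `{}` when nothing matches. Below is the same scan as a sketch over hypothetical `(name, start, end)` tuples. `locate` is an illustrative name, not part of nushell.

```rust
/// Hypothetical sketch: `files` are (name, global_start, global_end) ranges.
/// Returns the file containing `start` plus file-relative offsets.
fn locate<'a>(
    files: &[(&'a str, usize, usize)],
    start: usize,
    end: usize,
) -> Option<(&'a str, usize, usize)> {
    files
        .iter()
        .find(|&&(_, file_start, file_end)| start >= file_start && start < file_end)
        .map(|&(name, file_start, _)| (name, start - file_start, end - file_start))
}

fn main() {
    let files = [("std.nu", 0, 500), ("script.nu", 500, 620)];
    match locate(&files, 512, 530) {
        Some((file, start, end)) => {
            println!("{{\"file\": \"{file}\", \"start\": {start}, \"end\": {end}}}")
        }
        None => println!("{{}}"),
    }
}
```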

pub fn hover(engine_state: &mut EngineState, file_path: &str, location: &Value) {
    let cwd = std::env::current_dir().expect("Could not get current working directory.");
    engine_state.add_env_var("PWD".into(), Value::test_string(cwd.to_string_lossy()));

    let (file, mut working_set) = read_in_file(engine_state, file_path);

    match find_id(&mut working_set, file_path, &file, location) {
        Some((Id::Declaration(decl_id), offset, span)) => {
            let decl = working_set.get_decl(decl_id);

            //let mut description = "```\n### Signature\n```\n".to_string();
            let mut description = "```\n".to_string();

            // first description
            description.push_str(&format!("{}\n", decl.usage()));

            // additional description
            if !decl.extra_usage().is_empty() {
                description.push_str(&format!("\n{}\n", decl.extra_usage()));
            }

            // Usage
            description.push_str("### Usage\n```\n");
            let signature = decl.signature();
            description.push_str(&format!(" {}", signature.name));
            if !signature.named.is_empty() {
                description.push_str(" {flags}")
            }
            for required_arg in &signature.required_positional {
                description.push_str(&format!(" <{}>", required_arg.name));
            }
            for optional_arg in &signature.optional_positional {
                description.push_str(&format!(" <{}?>", optional_arg.name));
            }
            if let Some(arg) = &signature.rest_positional {
                description.push_str(&format!(" <...{}>", arg.name));
            }

            description.push_str("\n```\n");

            // Flags
            if !signature.named.is_empty() {
                description.push_str("\n### Flags\n\n");

                let mut first = true;
                for named in &signature.named {
                    if !first {
                        description.push_str("\\\n");
                    } else {
                        first = false;
                    }
                    description.push_str(" ");
                    if let Some(short_flag) = &named.short {
                        description.push_str(&format!("`-{}`", short_flag));
                    }

                    if !named.long.is_empty() {
                        if named.short.is_some() {
                            description.push_str(", ")
                        }
                        description.push_str(&format!("`--{}`", named.long));
                    }

                    if let Some(arg) = &named.arg {
                        description.push_str(&format!(" `<{}>`", arg.to_type()))
                    }

                    if !named.desc.is_empty() {
                        description.push_str(&format!(" - {}", named.desc));
                    }
                }
                description.push('\n');
            }

            // Parameters
            if !signature.required_positional.is_empty()
                || !signature.optional_positional.is_empty()
                || signature.rest_positional.is_some()
            {
                description.push_str("\n### Parameters\n\n");
                let mut first = true;
                for required_arg in &signature.required_positional {
                    if !first {
                        description.push_str("\\\n");
                    } else {
                        first = false;
                    }

                    description.push_str(&format!(
                        " `{}: {}`",
                        required_arg.name,
                        required_arg.shape.to_type()
                    ));
                    if !required_arg.desc.is_empty() {
                        description.push_str(&format!(" - {}", required_arg.desc));
                    }
                    description.push('\n');
                }
                for optional_arg in &signature.optional_positional {
                    if !first {
                        description.push_str("\\\n");
                    } else {
                        first = false;
                    }

                    description.push_str(&format!(
                        " `{}: {}`",
                        optional_arg.name,
                        optional_arg.shape.to_type()
                    ));
                    if !optional_arg.desc.is_empty() {
                        description.push_str(&format!(" - {}", optional_arg.desc));
                    }
                    description.push('\n');
                }
                if let Some(arg) = &signature.rest_positional {
                    if !first {
                        description.push_str("\\\n");
                    }

                    description.push_str(&format!(" `...{}: {}`", arg.name, arg.shape.to_type()));
                    if !arg.desc.is_empty() {
                        description.push_str(&format!(" - {}", arg.desc));
                    }
                    description.push('\n');
                }

                description.push('\n');
            }

            // Input/output types
            if !signature.input_output_types.is_empty() {
                description.push_str("\n### Input/output types\n");

                description.push_str("\n```\n");
                for input_output in &signature.input_output_types {
                    description.push_str(&format!(" {} | {}\n", input_output.0, input_output.1));
                }
                description.push_str("\n```\n");
            }

            // Examples
            if !decl.examples().is_empty() {
                description.push_str("### Example(s)\n```\n");

                for example in decl.examples() {
                    description.push_str(&format!(
                        "```\n {}\n```\n {}\n\n",
                        example.description, example.example
                    ));
                }
            }

            println!(
                "{}",
                json!({
                    "hover": description,
                    "span": {
                        "start": span.start - offset,
                        "end": span.end - offset
                    }
                })
            );
        }

        Some((Id::Variable(var_id), offset, span)) => {
            let var = working_set.get_variable(var_id);

            println!(
                "{}",
                json!({
                    "hover": format!("{}{}", if var.mutable { "mutable " } else { "" }, var.ty),
                    "span": {
                        "start": span.start - offset,
                        "end": span.end - offset
                    }
                })
            );
        }
        Some((Id::Value(shape), offset, span)) => {
            // Every value shape produces the same JSON payload; only the label differs.
            let label = match shape {
                FlatShape::Binary => "binary",
                FlatShape::Bool => "bool",
                FlatShape::DateTime => "datetime",
                FlatShape::External => "external",
                FlatShape::ExternalArg => "external arg",
                FlatShape::Flag => "flag",
                FlatShape::Block => "block",
                FlatShape::Directory => "directory",
                FlatShape::Filepath => "file path",
                FlatShape::Float => "float",
                FlatShape::GlobPattern => "glob pattern",
                FlatShape::Int => "int",
                FlatShape::Keyword => "keyword",
                FlatShape::List => "list",
                FlatShape::MatchPattern => "match-pattern",
                FlatShape::Nothing => "nothing",
                FlatShape::Range => "range",
                FlatShape::Record => "record",
                FlatShape::String => "string",
                FlatShape::StringInterpolation => "string interpolation",
                FlatShape::Table => "table",
                // Other shapes have no hover text, so nothing is printed.
                _ => return,
            };

            println!(
                "{}",
                json!({
                    "hover": label,
                    "span": {
                        "start": span.start - offset,
                        "end": span.end - offset
                    }
                })
            );
        }
        _ => {}
    }
}
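The `### Usage` line in the hover text lists the command name, then a `{flags}` placeholder when named flags exist, then required, optional (`?`), and rest (`...`) positionals. Below is a reduced sketch of that assembly. It uses a hypothetical `Sig` struct in place of nushell's `Signature`, which carries shapes, descriptions, and more.

```rust
// Hypothetical, simplified stand-in for nushell's Signature.
struct Sig<'a> {
    name: &'a str,
    has_flags: bool,
    required: Vec<&'a str>,
    optional: Vec<&'a str>,
    rest: Option<&'a str>,
}

/// Builds the one-line usage text in the same order as the hover code.
fn usage_line(sig: &Sig) -> String {
    let mut line = format!(" {}", sig.name);
    if sig.has_flags {
        line.push_str(" {flags}");
    }
    for arg in &sig.required {
        line.push_str(&format!(" <{}>", arg));
    }
    for arg in &sig.optional {
        line.push_str(&format!(" <{}?>", arg));
    }
    if let Some(rest) = sig.rest {
        line.push_str(&format!(" <...{}>", rest));
    }
    line
}

fn main() {
    let sig = Sig {
        name: "greet",
        has_flags: true,
        required: vec!["name"],
        optional: vec!["greeting"],
        rest: Some("extras"),
    };
    println!("{}", usage_line(&sig));
}
```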

pub fn complete(engine_reference: Arc<EngineState>, file_path: &str, location: &Value) {
    let stack = Stack::new();
    let mut completer = NuCompleter::new(engine_reference, stack);

    let file = std::fs::read(file_path)
        .into_diagnostic()
        .unwrap_or_else(|_| {
            std::process::exit(1);
        });

    if let Ok(location) = location.as_i64() {
        let results = completer.complete(
            &String::from_utf8_lossy(&file)[..location as usize],
            location as usize,
        );

        // Serialize through serde_json so that quotes and backslashes in a
        // completion value are escaped and the output stays valid JSON.
        let completions: Vec<String> = results.into_iter().map(|result| result.value).collect();
        println!("{}", json!({ "completions": completions }));
    }
}
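`complete` passes the completer the file text up to the cursor via `[..location as usize]`. That slice panics if `location` is past the end of the text or falls inside a multi-byte UTF-8 character. The following defensive variant is offered as an assumption, not as the current behavior. `prefix_at` is a hypothetical helper.

```rust
/// Hypothetical helper: the text before `location`, clamped to the end of
/// the input and moved back to the nearest UTF-8 character boundary so the
/// slice can never panic.
fn prefix_at(source: &str, location: usize) -> &str {
    let mut end = location.min(source.len());
    // Index 0 is always a char boundary, so this loop terminates.
    while !source.is_char_boundary(end) {
        end -= 1;
    }
    &source[..end]
}

fn main() {
    let source = "ls -la | where size > 1kb";
    println!("{:?}", prefix_at(source, 5));
    println!("{:?}", prefix_at(source, 999));
}
```

Moving back rather than forward keeps the prefix strictly at or before the cursor, which is what a completer expects.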



pub fn ast(engine_state: &mut EngineState, file_path: &str) {



    let cwd = std::env::current_dir().expect("Could not get current working directory.");
    engine_state.add_env_var("PWD".into(), Value::test_string(cwd.to_string_lossy()));

    let mut working_set = StateWorkingSet::new(engine_state);
    let file = std::fs::read(file_path);

    if let Ok(contents) = file {
        let offset = working_set.next_span_start();
        let parsed_block = parse(&mut working_set, Some(file_path), &contents, false);

        let flat = flatten_block(&working_set, &parsed_block);
        let mut json_val: JsonValue = json!([]);
        for (span, shape) in flat {
            let content = String::from_utf8_lossy(working_set.get_span_contents(span)).to_string();

            let json = json!(
                {
                    "type": "ast",
                    "span": {
                        "start": span.start - offset,
                        "end": span.end - offset,
                    },
                    "shape": shape.to_string(),
                    "content": content // may not be necessary, but helpful for debugging
                }
            );
            json_merge(&mut json_val, &json);
        }
        if let Ok(json_str) = serde_json::to_string(&json_val) {
            println!("{json_str}");
        } else {
            println!("{{}}");
        };
    }
}
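In `ast`, `offset` is read from `working_set.next_span_start()` before the file is parsed, so subtracting it from each flattened span presumably turns positions in the working set's shared span space into byte offsets relative to the start of the file. The std-only sketch below models just that rebasing step. `Span`, `Token` and `rebase` are hypothetical names, not nushell's API, and the sketch assumes spans are half-open `[start, end)` byte ranges that never begin before `offset`.

```rust
// Hypothetical stand-in for a parser span: half-open byte range [start, end)
// in a global buffer that may hold other sources before this file.
#[derive(Debug, Clone, Copy, PartialEq)]
struct Span {
    start: usize,
    end: usize,
}

// One output record, roughly mirroring the JSON object built in `ast`.
#[derive(Debug, PartialEq)]
struct Token {
    start: usize, // byte offset relative to the start of this file
    end: usize,
    shape: String,
    content: String,
}

/// Rebase global spans to file-local offsets by subtracting `offset`,
/// the global position at which this file's contents begin.
/// Assumes every span satisfies offset <= start <= end <= offset + contents.len().
fn rebase(contents: &str, offset: usize, flat: &[(Span, &str)]) -> Vec<Token> {
    flat.iter()
        .map(|(span, shape)| {
            let start = span.start - offset;
            let end = span.end - offset;
            Token {
                start,
                end,
                shape: shape.to_string(),
                // Lossy decoding, like `String::from_utf8_lossy` in `ast`.
                content: String::from_utf8_lossy(&contents.as_bytes()[start..end]).to_string(),
            }
        })
        .collect()
}

fn main() {
    // Pretend 12 bytes from other sources precede this file in the global buffer.
    let offset = 12;
    let contents = "def test [arg] { }";
    let flat = [
        (Span { start: 12, end: 15 }, "shape_internalcall"),
        (Span { start: 16, end: 20 }, "shape_string"),
        (Span { start: 21, end: 26 }, "shape_signature"),
    ];
    for t in rebase(contents, offset, &flat) {
        // Prints: shape_internalcall 0..3 "def", shape_string 4..8 "test",
        // shape_signature 9..14 "[arg]" (one per line)
        println!("{} {}..{} {:?}", t.shape, t.start, t.end, t.content);
    }
}
```

Slicing `contents` with the rebased span recovers exactly the text stored in the `content` field, so a client that already holds the file could derive it itself; that is presumably why the code comments that the field may not be necessary.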

fn json_merge(a: &mut JsonValue, b: &JsonValue) {
    match (a, b) {
        (JsonValue::Object(ref mut a), JsonValue::Object(b)) => {
            for (k, v) in b {
                json_merge(a.entry(k).or_insert(JsonValue::Null), v);
            }
        }
        (JsonValue::Array(ref mut a), JsonValue::Array(b)) => {
            a.extend(b.clone());
        }
        (JsonValue::Array(ref mut a), JsonValue::Object(b)) => {
            a.extend([JsonValue::Object(b.clone())]);
        }
        (a, b) => {
            *a = b.clone();
        }
    }
}
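`ast` calls `json_merge` with an empty array as the accumulator and one object per flattened token, so in practice only the array-plus-object arm runs, and it appends. The std-only sketch below reproduces all four match arms on a hypothetical `Json` enum that stands in for `serde_json::Value`, so the merge rules can be checked in isolation; `Json` and `merge` are illustrative names, not part of nushell or serde_json.

```rust
use std::collections::BTreeMap;

// Hypothetical, minimal stand-in for serde_json::Value.
#[derive(Debug, Clone, PartialEq)]
enum Json {
    Null,
    Num(i64),
    Str(String),
    Array(Vec<Json>),
    Object(BTreeMap<String, Json>),
}

/// Same rules as `json_merge`:
/// - object + object: merge keys recursively (missing keys start as Null)
/// - array + array: concatenate
/// - array + object: append the object
/// - anything else: overwrite `a` with a clone of `b`
fn merge(a: &mut Json, b: &Json) {
    match (a, b) {
        (Json::Object(a), Json::Object(b)) => {
            for (k, v) in b {
                merge(a.entry(k.clone()).or_insert(Json::Null), v);
            }
        }
        (Json::Array(a), Json::Array(b)) => a.extend(b.iter().cloned()),
        (Json::Array(a), Json::Object(b)) => a.push(Json::Object(b.clone())),
        (a, b) => *a = b.clone(),
    }
}

fn main() {
    // Mirrors the loop in `ast`: start from [] and merge one object per token.
    let mut acc = Json::Array(vec![]);
    for (shape, start) in [("shape_internalcall", 0), ("shape_string", 4)] {
        let mut obj = BTreeMap::new();
        obj.insert("shape".to_string(), Json::Str(shape.to_string()));
        obj.insert("start".to_string(), Json::Num(start));
        merge(&mut acc, &Json::Object(obj));
    }
    // Both objects end up appended to the array, in insertion order.
    println!("{acc:?}");
}
```

For a single element, `a.extend([x])` and `a.push(x)` are equivalent, which is why the sketch uses `push` in that arm. serde_json's `Map` is backed by a `BTreeMap` unless its `preserve_order` feature is enabled, so in that default configuration the serialized keys come out in alphabetical order; the sketch uses `BTreeMap` to match.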
