A bucket is typically considered “public” if any user can list its contents, and “private” if its contents can only be listed or written by certain S3 users. The key point is this: _**a public bucket will list all of its files and directories to any user that asks.**_

A public bucket is not a risk created by Amazon but a misconfiguration made by the owner of the bucket. Although a file might be listed in a bucket, that does not necessarily mean it can be downloaded, because buckets and objects have their own access control lists (ACLs). Amazon provides information on managing access controls for buckets [here](http://docs.aws.amazon.com/AmazonS3/latest/dev/UsingAuthAccess.html) and also publishes a best practices document on [public access considerations around S3 buckets](http://aws.amazon.com/articles/5050). The default configuration of an S3 bucket is private.

**Learn about AWS S3 misconfiguration here:** [**http://flaws.cloud**](http://flaws.cloud) **and** [**http://flaws2.cloud/**](http://flaws2.cloud) **(most of the information here has been taken from those resources)**

You can get your credentials here: [https://console.aws.amazon.com/iam/home?#/security\_credential](https://console.aws.amazon.com/iam/home?#/security\_credential). You need an AWS account for this; a free tier account can be created here: [https://aws.amazon.com/s/dm/optimization/server-side-test/free-tier/free\_np/](https://aws.amazon.com/s/dm/optimization/server-side-test/free-tier/free\_np/)

If you try to access a bucket but specify another region in the domain name (for example, the bucket is at `bucket.s3.amazonaws.com` but you try to access `bucket.s3-website-us-west-2.amazonaws.com`), you will be redirected to the correct location.

To test the openness of the bucket a user can just enter the URL in their web browser. A private bucket will respond with "Access Denied". A public bucket will list the first 1,000 objects that have been stored.
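The same check can be scripted instead of done in a browser. The following is a minimal sketch, assuming the bucket URL returns the usual S3 XML body: a `ListBucketResult` document for a listable bucket, or an `Error` document carrying a code such as `AccessDenied` for a private one. The function names `fetch_bucket_body` and `classify_bucket_response` are made up for this illustration.

```python
import urllib.error
import urllib.request
import xml.etree.ElementTree as ET


def fetch_bucket_body(url):
    """Return the raw response body for a bucket URL, even on HTTP errors."""
    try:
        with urllib.request.urlopen(url, timeout=10) as resp:
            return resp.read()
    except urllib.error.HTTPError as err:
        # S3 sends its XML error document in the body of 4xx responses.
        return err.read()


def _local(tag):
    """Drop a namespace prefix such as '{http://s3.amazonaws.com/doc/2006-03-01/}'."""
    return tag.rsplit("}", 1)[-1]


def classify_bucket_response(body):
    """Return (status, keys) for the XML body sent back for a bucket URL.

    status is 'public' for a ListBucketResult, the S3 error code
    (for example 'AccessDenied') for an Error document, else 'unknown'.
    """
    root = ET.fromstring(body)
    name = _local(root.tag)
    if name == "ListBucketResult":
        keys = [el.text for el in root.iter() if _local(el.tag) == "Key"]
        return "public", keys
    if name == "Error":
        for el in root:
            if _local(el.tag) == "Code":
                return el.text, []
    return "unknown", []
```

A call such as `classify_bucket_response(fetch_bucket_body("http://<BUCKETNAME>.s3.amazonaws.com/"))` would then return either the listed keys or the error code. Only the first page of results is inspected here, which matches the 1,000-object limit mentioned above.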

If the bucket doesn't have a domain name, when trying to enumerate it, **only put the bucket name** and not the whole AWS S3 domain. Example: `s3://<BUCKETNAME>`
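As an illustration of reducing a hostname to the bare bucket name, here is a small sketch. The helper `to_s3_uri` and its regular expression are assumptions made for this example; the pattern only covers hostnames shaped like `<bucket>.s3...amazonaws.com` and leaves anything else untouched.

```python
import re

# Matches hosts such as bucket.s3.amazonaws.com or
# bucket.s3-website-us-west-2.amazonaws.com (illustrative pattern only).
_S3_HOST = re.compile(
    r"^(?P<bucket>.+?)\.s3[.-][a-z0-9.-]*amazonaws\.com$", re.IGNORECASE
)


def to_s3_uri(target):
    """Turn a bucket name or an S3 hostname into an s3://<BUCKETNAME> URI."""
    host = target.strip()
    match = _S3_HOST.match(host)
    bucket = match.group("bucket") if match else host
    return f"s3://{bucket}"
```

For instance, `to_s3_uri("bucket.s3-website-us-west-2.amazonaws.com")` yields `s3://bucket`, while a name that is not an S3 hostname is passed through as the bucket name.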

## Enumerating an AWS User

If you find some private AWS keys, you can create a profile using those:
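One way to create such a profile is to add a section to the AWS shared credentials file (normally `~/.aws/credentials`). The sketch below is a minimal illustration, not the original author's method: the function name `add_aws_profile`, the profile name and the key values are placeholders, and the path is passed in explicitly so nothing is written until you call it.

```python
import configparser
from pathlib import Path


def add_aws_profile(credentials_path, profile, access_key_id,
                    secret_access_key, session_token=None):
    """Add or overwrite a named profile in an AWS shared credentials file."""
    path = Path(credentials_path)
    # RawConfigParser avoids '%' interpolation inside secret values.
    config = configparser.RawConfigParser()
    if path.exists():
        config.read(path)
    if not config.has_section(profile):
        config.add_section(profile)
    config.set(profile, "aws_access_key_id", access_key_id)
    config.set(profile, "aws_secret_access_key", secret_access_key)
    if session_token:
        # Only temporary credentials come with a session token.
        config.set(profile, "aws_session_token", session_token)
    path.parent.mkdir(parents=True, exist_ok=True)
    with path.open("w") as handle:
        config.write(handle)
    return path


# Example call (placeholder values, not real keys):
# add_aws_profile(Path.home() / ".aws" / "credentials", "found-keys",
#                 "AKIAIOSFODNN7EXAMPLE",
#                 "wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY")
```

Once the profile exists, it can be selected with the AWS CLI `--profile` option, for example `aws --profile found-keys sts get-caller-identity` to see which user the keys belong to.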

And finally call the function by accessing its URL (notice that the ID, name and function name appear in the URL): [https://s33ppypa75.execute-api.us-west-2.amazonaws.com/Prod/level6](https://s33ppypa75.execute-api.us-west-2.amazonaws.com/Prod/level6)
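To make the structure of that URL explicit, the sketch below assembles it from its parts. It assumes the usual API Gateway invoke URL layout, and it reads the URL above as REST API ID `s33ppypa75`, region `us-west-2`, stage name `Prod` and resource `level6`. The helper name `build_invoke_url` is made up for this example.

```python
def build_invoke_url(rest_api_id, region, stage, resource):
    """Assemble an API Gateway invoke URL from its components."""
    # Strip a leading slash so callers may pass 'level6' or '/level6'.
    path = resource.lstrip("/")
    return f"https://{rest_api_id}.execute-api.{region}.amazonaws.com/{stage}/{path}"


url = build_invoke_url("s33ppypa75", "us-west-2", "Prod", "level6")
```

With these values, `url` is the same address as the link above.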

Notice that AWS allows you to make snapshots of EC2 instances and databases (RDS). The main purpose of this is to make backups, but people sometimes use snapshots to regain access to their own EC2 instances when they forget the passwords.

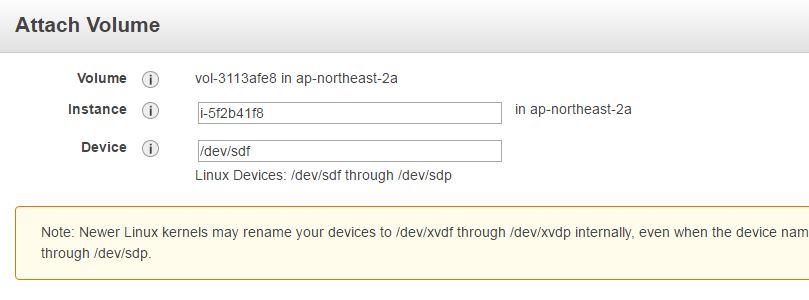

**Step 2:** Select the created volume, right click and select the “attach volume” option.

**Step 3:** Select the instance from the instance text box.

**Step 4:** Now, log in to your EC2 instance and list the available disks using the following command.

If you want to read about how you can exploit metadata in AWS, [you should read this page](../../../pentesting-web/ssrf-server-side-request-forgery/#abusing-ssrf-in-aws-environment).