The documentation for `ShouldRun` doesn't completely explain what each of the variants you can return does. For instance, it isn't very clear that looping systems aren't executed again until after all the systems in a stage have had a chance to run.

This PR adds to the documentation for `ShouldRun`, and hopefully clarifies what is happening during a stage's execution when run criteria are checked and systems are being executed.

Some panic messages for systems include the system name, but there's a few panic messages which do not. This PR adds the system name for the remaining panic messages.

This is a continuation of the work done in #1864.

Related: #1846

This shrinks breakout from 316k to 310k when using `--feature dynamic`.

I haven't run the ecs benchmark to test performance as my laptop is too noisy for reliable benchmarking.

We discussed with @alice-i-cecile privately on iterators and agreed that making a custom ordered iterator over query makes no sense since materialization is required anyway and it's better to reuse existing components or code. Therefore, just adding an example to the documentation as requested.

Fixes#1470.

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

This includes a lot of single line comments where either saying more wasn't helpful or due to me not knowing enough about things yet to be able to go more indepth. Proofreading is very much welcome.

Fixes#1846

Got scared of the other "Requested resource does not exist" error at line 395 in `system_param.rs`, under `impl<'a, T: Component> SystemParamFetch<'a> for ResMutState<T> {`. Someone with better knowledge of the code might be able to go in and improve that one.

Fixes#1809. It makes it also possible to use `derive` for `SystemParam` inside ECS and avoid manual implementation. An alternative solution to macro changes is to use `use crate as bevy_ecs;` in `event.rs`.

fixes#1772

1st commit: the limit was at 11 as the macro was not using a range including the upper end. I changed that as it feels the purpose of the macro is clearer that way.

2nd commit: as suggested in the `// TODO`, I added a `Config` trait to go to 16 elements tuples. This means that if someone has a custom system parameter with a config that is not a tuple or an `Option`, they will have to implement `Config` for it instead of the standard `Default`.

I think [collection, thing_removed_from_collection] is a more natural order than [thing_removed_from_collection, collection]. Just a small tweak that I think we should include in 0.5.

Fixes#1753.

The problem was introduced while reworking the logic around stages' own criteria. Before #1675 they used to be stored and processed inline with the systems' criteria, and systems without criteria used that of their stage. After, criteria-less systems think they should run, always. This PR more or less restores previous behavior; a less cludge solution can wait until after 0.5 - ideally, until stageless.

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

This is intended to help protect users against #1671. It doesn't resolve the issue, but I think its a good stop-gap solution for 0.5. A "full" fix would be very involved (and maybe not worth the added complexity).

Removing the checks on this line https://github.com/bevyengine/bevy/blob/main/crates/bevy_sprite/src/frustum_culling.rs#L64 and running the "many_sprites" example revealed two corner case bugs in bevy_ecs. The first, a simple and honest missed line introduced in #1471. The other, an insidious monster that has been there since the ECS v2 rewrite, just waiting for the time to strike:

1. #1471 accidentally removed the "insert" line for sparse set components with the "mutated" bundle state. Re-adding it fixes the problem. I did a slight refactor here to make the implementation simpler and remove a branch.

2. The other issue is nastier. ECS v2 added an "archetype graph". When determining what components were added/mutated during an archetype change, we read the FromBundle edge (which encodes this state) on the "new" archetype. The problem is that unlike "add edges" which are guaranteed to be unique for a given ("graph node", "bundle id") pair, FromBundle edges are not necessarily unique:

```rust

// OLD_ARCHETYPE -> NEW_ARCHETYPE

// [] -> [usize]

e.insert(2usize);

// [usize] -> [usize, i32]

e.insert(1i32);

// [usize, i32] -> [usize, i32]

e.insert(1i32);

// [usize, i32] -> [usize]

e.remove::<i32>();

// [usize] -> [usize, i32]

e.insert(1i32);

```

Note that the second `e.insert(1i32)` command has a different "archetype graph edge" than the first, but they both lead to the same "new archetype".

The fix here is simple: just remove FromBundle edges because they are broken and store the information in the "add edges", which are guaranteed to be unique.

FromBundle edges were added to cut down on the number of archetype accesses / make the archetype access patterns nicer. But benching this change resulted in no significant perf changes and the addition of get_2_mut() for archetypes resolves the access pattern issue.

In the current impl, next clears out the entire stack and replaces it with a new state. This PR moves this functionality into a replace method, and changes the behavior of next to only change the top state.

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

I'm opening this prematurely; consider this an RFC that predates RFCs and therefore not super-RFC-like.

This PR does two "big" things: decouple run criteria from system sets, reimagine system sets as weapons of mass system description.

### What it lets us do:

* Reuse run criteria within a stage.

* Pipe output of one run criteria as input to another.

* Assign labels, dependencies, run criteria, and ambiguity sets to many systems at the same time.

### Things already done:

* Decoupled run criteria from system sets.

* Mass system description superpowers to `SystemSet`.

* Implemented `RunCriteriaDescriptor`.

* Removed `VirtualSystemSet`.

* Centralized all run criteria of `SystemStage`.

* Extended system descriptors with per-system run criteria.

* `.before()` and `.after()` for run criteria.

* Explicit order between state driver and related run criteria. Fixes#1672.

* Opt-in run criteria deduplication; default behavior is to panic.

* Labels (not exposed) for state run criteria; state run criteria are deduplicated.

### API issues that need discussion:

* [`FixedTimestep::step(1.0).label("my label")`](eaccf857cd/crates/bevy_ecs/src/schedule/run_criteria.rs (L120-L122)) and [`FixedTimestep::step(1.0).with_label("my label")`](eaccf857cd/crates/bevy_core/src/time/fixed_timestep.rs (L86-L89)) are both valid but do very different things.

---

I will try to maintain this post up-to-date as things change. Do check the diffs in "edited" thingy from time to time.

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

Resolves#1253#1562

This makes the Commands apis consistent with World apis. This moves to a "type state" pattern (like World) where the "current entity" is stored in an `EntityCommands` builder.

In general this tends to cuts down on indentation and line count. It comes at the cost of needing to type `commands` more and adding more semicolons to terminate expressions.

I also added `spawn_bundle` to Commands because this is a common enough operation that I think its worth providing a shorthand.

Updates the requirements on [fixedbitset](https://github.com/bluss/fixedbitset) to permit the latest version.

<details>

<summary>Commits</summary>

<ul>

<li>See full diff in <a href="https://github.com/bluss/fixedbitset/commits">compare view</a></li>

</ul>

</details>

<br />

Dependabot will resolve any conflicts with this PR as long as you don't alter it yourself. You can also trigger a rebase manually by commenting `@dependabot rebase`.

[//]: # (dependabot-automerge-start)

[//]: # (dependabot-automerge-end)

---

<details>

<summary>Dependabot commands and options</summary>

<br />

You can trigger Dependabot actions by commenting on this PR:

- `@dependabot rebase` will rebase this PR

- `@dependabot recreate` will recreate this PR, overwriting any edits that have been made to it

- `@dependabot merge` will merge this PR after your CI passes on it

- `@dependabot squash and merge` will squash and merge this PR after your CI passes on it

- `@dependabot cancel merge` will cancel a previously requested merge and block automerging

- `@dependabot reopen` will reopen this PR if it is closed

- `@dependabot close` will close this PR and stop Dependabot recreating it. You can achieve the same result by closing it manually

- `@dependabot ignore this major version` will close this PR and stop Dependabot creating any more for this major version (unless you reopen the PR or upgrade to it yourself)

- `@dependabot ignore this minor version` will close this PR and stop Dependabot creating any more for this minor version (unless you reopen the PR or upgrade to it yourself)

- `@dependabot ignore this dependency` will close this PR and stop Dependabot creating any more for this dependency (unless you reopen the PR or upgrade to it yourself)

</details>

Fixes#1692

Alternative to #1696

This ensures that the capacity actually grows in increments of grow_amount, and also ensures that Table capacity is always <= column and entity vec capacity.

Debug logs that describe the new logic (running the example in #1692)

[out.txt](https://github.com/bevyengine/bevy/files/6173808/out.txt)

# Problem Definition

The current change tracking (via flags for both components and resources) fails to detect changes made by systems that are scheduled to run earlier in the frame than they are.

This issue is discussed at length in [#68](https://github.com/bevyengine/bevy/issues/68) and [#54](https://github.com/bevyengine/bevy/issues/54).

This is very much a draft PR, and contributions are welcome and needed.

# Criteria

1. Each change is detected at least once, no matter the ordering.

2. Each change is detected at most once, no matter the ordering.

3. Changes should be detected the same frame that they are made.

4. Competitive ergonomics. Ideally does not require opting-in.

5. Low CPU overhead of computation.

6. Memory efficient. This must not increase over time, except where the number of entities / resources does.

7. Changes should not be lost for systems that don't run.

8. A frame needs to act as a pure function. Given the same set of entities / components it needs to produce the same end state without side-effects.

**Exact** change-tracking proposals satisfy criteria 1 and 2.

**Conservative** change-tracking proposals satisfy criteria 1 but not 2.

**Flaky** change tracking proposals satisfy criteria 2 but not 1.

# Code Base Navigation

There are three types of flags:

- `Added`: A piece of data was added to an entity / `Resources`.

- `Mutated`: A piece of data was able to be modified, because its `DerefMut` was accessed

- `Changed`: The bitwise OR of `Added` and `Changed`

The special behavior of `ChangedRes`, with respect to the scheduler is being removed in [#1313](https://github.com/bevyengine/bevy/pull/1313) and does not need to be reproduced.

`ChangedRes` and friends can be found in "bevy_ecs/core/resources/resource_query.rs".

The `Flags` trait for Components can be found in "bevy_ecs/core/query.rs".

`ComponentFlags` are stored in "bevy_ecs/core/archetypes.rs", defined on line 446.

# Proposals

**Proposal 5 was selected for implementation.**

## Proposal 0: No Change Detection

The baseline, where computations are performed on everything regardless of whether it changed.

**Type:** Conservative

**Pros:**

- already implemented

- will never miss events

- no overhead

**Cons:**

- tons of repeated work

- doesn't allow users to avoid repeating work (or monitoring for other changes)

## Proposal 1: Earlier-This-Tick Change Detection

The current approach as of Bevy 0.4. Flags are set, and then flushed at the end of each frame.

**Type:** Flaky

**Pros:**

- already implemented

- simple to understand

- low memory overhead (2 bits per component)

- low time overhead (clear every flag once per frame)

**Cons:**

- misses systems based on ordering

- systems that don't run every frame miss changes

- duplicates detection when looping

- can lead to unresolvable circular dependencies

## Proposal 2: Two-Tick Change Detection

Flags persist for two frames, using a double-buffer system identical to that used in events.

A change is observed if it is found in either the current frame's list of changes or the previous frame's.

**Type:** Conservative

**Pros:**

- easy to understand

- easy to implement

- low memory overhead (4 bits per component)

- low time overhead (bit mask and shift every flag once per frame)

**Cons:**

- can result in a great deal of duplicated work

- systems that don't run every frame miss changes

- duplicates detection when looping

## Proposal 3: Last-Tick Change Detection

Flags persist for two frames, using a double-buffer system identical to that used in events.

A change is observed if it is found in the previous frame's list of changes.

**Type:** Exact

**Pros:**

- exact

- easy to understand

- easy to implement

- low memory overhead (4 bits per component)

- low time overhead (bit mask and shift every flag once per frame)

**Cons:**

- change detection is always delayed, possibly causing painful chained delays

- systems that don't run every frame miss changes

- duplicates detection when looping

## Proposal 4: Flag-Doubling Change Detection

Combine Proposal 2 and Proposal 3. Differentiate between `JustChanged` (current behavior) and `Changed` (Proposal 3).

Pack this data into the flags according to [this implementation proposal](https://github.com/bevyengine/bevy/issues/68#issuecomment-769174804).

**Type:** Flaky + Exact

**Pros:**

- allows users to acc

- easy to implement

- low memory overhead (4 bits per component)

- low time overhead (bit mask and shift every flag once per frame)

**Cons:**

- users must specify the type of change detection required

- still quite fragile to system ordering effects when using the flaky `JustChanged` form

- cannot get immediate + exact results

- systems that don't run every frame miss changes

- duplicates detection when looping

## [SELECTED] Proposal 5: Generation-Counter Change Detection

A global counter is increased after each system is run. Each component saves the time of last mutation, and each system saves the time of last execution. Mutation is detected when the component's counter is greater than the system's counter. Discussed [here](https://github.com/bevyengine/bevy/issues/68#issuecomment-769174804). How to handle addition detection is unsolved; the current proposal is to use the highest bit of the counter as in proposal 1.

**Type:** Exact (for mutations), flaky (for additions)

**Pros:**

- low time overhead (set component counter on access, set system counter after execution)

- robust to systems that don't run every frame

- robust to systems that loop

**Cons:**

- moderately complex implementation

- must be modified as systems are inserted dynamically

- medium memory overhead (4 bytes per component + system)

- unsolved addition detection

## Proposal 6: System-Data Change Detection

For each system, track which system's changes it has seen. This approach is only worth fully designing and implementing if Proposal 5 fails in some way.

**Type:** Exact

**Pros:**

- exact

- conceptually simple

**Cons:**

- requires storing data on each system

- implementation is complex

- must be modified as systems are inserted dynamically

## Proposal 7: Total-Order Change Detection

Discussed [here](https://github.com/bevyengine/bevy/issues/68#issuecomment-754326523). This proposal is somewhat complicated by the new scheduler, but I believe it should still be conceptually feasible. This approach is only worth fully designing and implementing if Proposal 5 fails in some way.

**Type:** Exact

**Pros:**

- exact

- efficient data storage relative to other exact proposals

**Cons:**

- requires access to the scheduler

- complex implementation and difficulty grokking

- must be modified as systems are inserted dynamically

# Tests

- We will need to verify properties 1, 2, 3, 7 and 8. Priority: 1 > 2 = 3 > 8 > 7

- Ideally we can use identical user-facing syntax for all proposals, allowing us to re-use the same syntax for each.

- When writing tests, we need to carefully specify order using explicit dependencies.

- These tests will need to be duplicated for both components and resources.

- We need to be sure to handle cases where ambiguous system orders exist.

`changing_system` is always the system that makes the changes, and `detecting_system` always detects the changes.

The component / resource changed will be simple boolean wrapper structs.

## Basic Added / Mutated / Changed

2 x 3 design:

- Resources vs. Components

- Added vs. Changed vs. Mutated

- `changing_system` runs before `detecting_system`

- verify at the end of tick 2

## At Least Once

2 x 3 design:

- Resources vs. Components

- Added vs. Changed vs. Mutated

- `changing_system` runs after `detecting_system`

- verify at the end of tick 2

## At Most Once

2 x 3 design:

- Resources vs. Components

- Added vs. Changed vs. Mutated

- `changing_system` runs once before `detecting_system`

- increment a counter based on the number of changes detected

- verify at the end of tick 2

## Fast Detection

2 x 3 design:

- Resources vs. Components

- Added vs. Changed vs. Mutated

- `changing_system` runs before `detecting_system`

- verify at the end of tick 1

## Ambiguous System Ordering Robustness

2 x 3 x 2 design:

- Resources vs. Components

- Added vs. Changed vs. Mutated

- `changing_system` runs [before/after] `detecting_system` in tick 1

- `changing_system` runs [after/before] `detecting_system` in tick 2

## System Pausing

2 x 3 design:

- Resources vs. Components

- Added vs. Changed vs. Mutated

- `changing_system` runs in tick 1, then is disabled by run criteria

- `detecting_system` is disabled by run criteria until it is run once during tick 3

- verify at the end of tick 3

## Addition Causes Mutation

2 design:

- Resources vs. Components

- `adding_system_1` adds a component / resource

- `adding system_2` adds the same component / resource

- verify the `Mutated` flag at the end of the tick

- verify the `Added` flag at the end of the tick

First check tests for: https://github.com/bevyengine/bevy/issues/333

Second check tests for: https://github.com/bevyengine/bevy/issues/1443

## Changes Made By Commands

- `adding_system` runs in Update in tick 1, and sends a command to add a component

- `detecting_system` runs in Update in tick 1 and 2, after `adding_system`

- We can't detect the changes in tick 1, since they haven't been processed yet

- If we were to track these changes as being emitted by `adding_system`, we can't detect the changes in tick 2 either, since `detecting_system` has already run once after `adding_system` :(

# Benchmarks

See: [general advice](https://github.com/bevyengine/bevy/blob/master/docs/profiling.md), [Criterion crate](https://github.com/bheisler/criterion.rs)

There are several critical parameters to vary:

1. entity count (1 to 10^9)

2. fraction of entities that are changed (0% to 100%)

3. cost to perform work on changed entities, i.e. workload (1 ns to 1s)

1 and 2 should be varied between benchmark runs. 3 can be added on computationally.

We want to measure:

- memory cost

- run time

We should collect these measurements across several frames (100?) to reduce bootup effects and accurately measure the mean, variance and drift.

Entity-component change detection is much more important to benchmark than resource change detection, due to the orders of magnitude higher number of pieces of data.

No change detection at all should be included in benchmarks as a second control for cases where missing changes is unacceptable.

## Graphs

1. y: performance, x: log_10(entity count), color: proposal, facet: performance metric. Set cost to perform work to 0.

2. y: run time, x: cost to perform work, color: proposal, facet: fraction changed. Set number of entities to 10^6

3. y: memory, x: frames, color: proposal

# Conclusions

1. Is the theoretical categorization of the proposals correct according to our tests?

2. How does the performance of the proposals compare without any load?

3. How does the performance of the proposals compare with realistic loads?

4. At what workload does more exact change tracking become worth the (presumably) higher overhead?

5. When does adding change-detection to save on work become worthwhile?

6. Is there enough divergence in performance between the best solutions in each class to ship more than one change-tracking solution?

# Implementation Plan

1. Write a test suite.

2. Verify that tests fail for existing approach.

3. Write a benchmark suite.

4. Get performance numbers for existing approach.

5. Implement, test and benchmark various solutions using a Git branch per proposal.

6. Create a draft PR with all solutions and present results to team.

7. Select a solution and replace existing change detection.

Co-authored-by: Brice DAVIER <bricedavier@gmail.com>

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

An alternative to StateStages that uses SystemSets. Also includes pop and push operations since this was originally developed for my personal project which needed them.

Fixes all warnings from `cargo doc --all`.

Those related to code blocks were introduced in #1612, but re-formatting using the experimental features in `rustfmt.toml` doesn't seem to reintroduce them.

These are largely targeted at beginners, as `Entity`, `Component` and `System` are the most obvious terms to search when first getting introduced to Bevy.

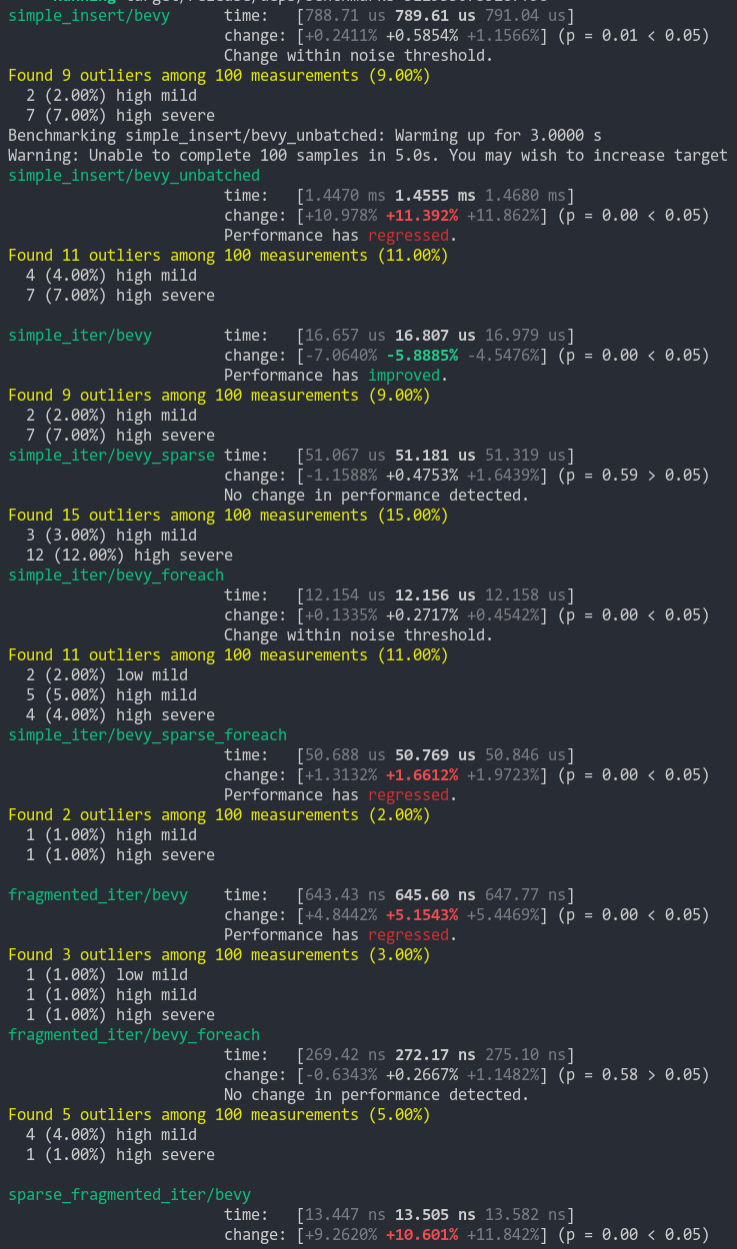

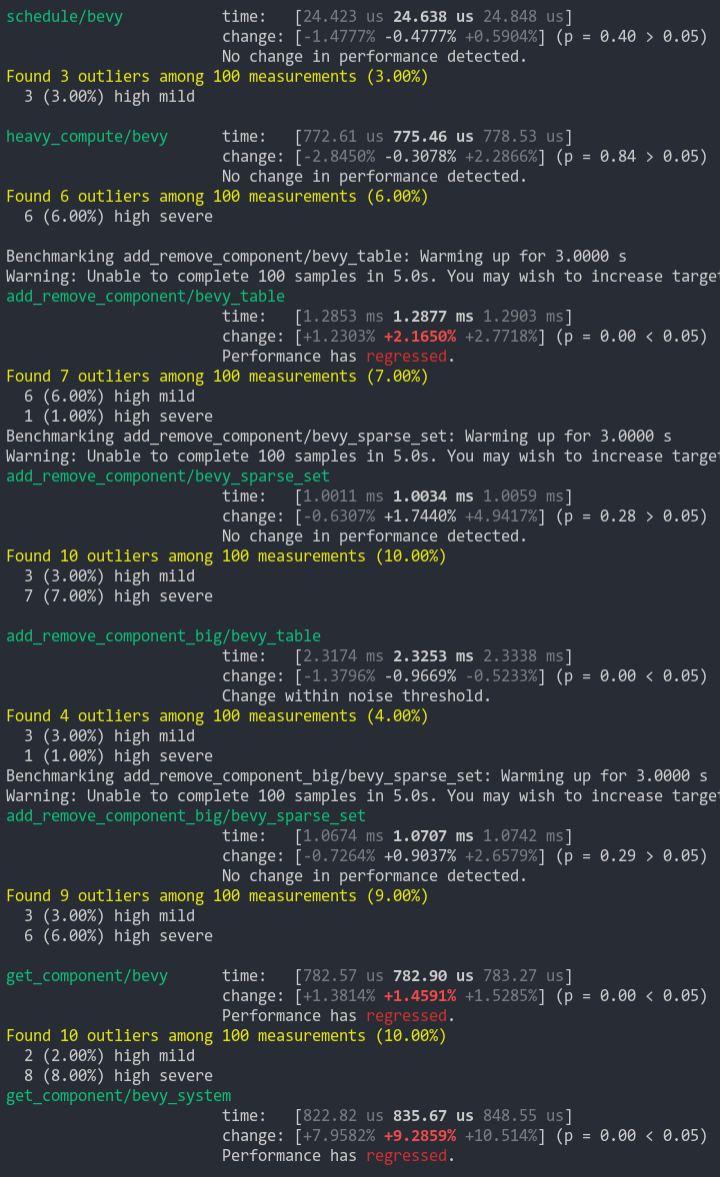

Removes `get_unchecked` and `get_unchecked_mut` from `Tables` and `Archetypes` collections in favor of safe Index implementations. This fixes a safety error in `Archetypes::get_id_or_insert()` (which previously relied on TableId being valid to be safe ... the alternative was to make that method unsafe too). It also cuts down on a lot of unsafe and makes the code easier to look at. I'm not sure what changed since the last benchmark, but these numbers are more favorable than my last tests of similar changes. I didn't include the Components collection as those severely killed perf last time I tried. But this does inspire me to try again (just in a separate pr)!

Note that the `simple_insert/bevy_unbatched` benchmark fluctuates a lot on both branches (this was also true for prior versions of bevy). It seems like the allocator has more variance for many small allocations. And `sparse_frag_iter/bevy` operates on such a small scale that 10% fluctuations are common.

Some benches do take a small hit here, but I personally think its worth it.

This also fixes a safety error in Query::for_each_mut, which needed to mutably borrow Query (aaahh!).