# Objective

- A more intuitive distinction between the two. `remove_intersection` is verbose and unclear.

- `EntityMut::remove` and `Commands::remove` should match.

## Solution

- What the title says.

---

## Migration Guide

Before

```rust
fn clear_children(parent: Entity, world: &mut World) {
    if let Some(children) = world.entity_mut(parent).remove::<Children>() {
        for &child in &children.0 {
            world.entity_mut(child).remove_intersection::<Parent>();
        }
    }
}
```

After

```rust
fn clear_children(parent: Entity, world: &mut World) {
    if let Some(children) = world.entity_mut(parent).take::<Children>() {
        for &child in &children.0 {
            world.entity_mut(child).remove::<Parent>();
        }
    }
}
```

# Objective

One pattern to increase parallelism is deferred mutation: instead of directly mutating the world (and preventing other systems from running at the same time), you queue up operations to be applied to the world at the end of the stage. The most common example of this pattern uses the `Commands` SystemParam.

In order to avoid the overhead associated with commands, some power users may want to add their own deferred mutation behavior. To do this, you must implement the unsafe trait `SystemParam`, which interfaces with engine internals in a way that we'd like users to be able to avoid.
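The deferred mutation pattern described above can be modeled without the engine. The following is a minimal sketch using only the standard library; `World`, `Buffer`, and `spawn_system` are hypothetical stand-ins for illustration, not Bevy types.

```rust
// Toy model of deferred mutation. These types are illustrative stand-ins,
// not the engine's `World` or command queue.
#[derive(Default)]
struct World {
    entities: Vec<String>,
}

/// A per-system queue of pending operations.
#[derive(Default)]
struct Buffer {
    pending: Vec<String>,
}

impl Buffer {
    /// Applies every queued operation. This is the only step that needs
    /// exclusive access to the world.
    fn apply(&mut self, world: &mut World) {
        world.entities.extend(self.pending.drain(..));
    }
}

/// Only reads the world and writes to its own buffer, so it could run
/// alongside other systems that also only read the world.
fn spawn_system(world: &World, buffer: &mut Buffer) {
    let next = format!("entity-{}", world.entities.len() + buffer.pending.len());
    buffer.pending.push(next);
}

fn main() {
    let mut world = World::default();
    let mut buffer = Buffer::default();

    spawn_system(&world, &mut buffer);
    spawn_system(&world, &mut buffer);
    // Nothing has been applied yet.
    assert!(world.entities.is_empty());

    // The sync point: queued operations are applied with exclusive access.
    buffer.apply(&mut world);
    assert_eq!(world.entities, ["entity-0", "entity-1"]);
}
```

The trade-off shown here is that changes become visible only after `apply` runs, in exchange for the system needing just shared access to the world.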

## Solution

Add the `Deferred<T>` primitive `SystemParam`, which encapsulates the deferred mutation pattern.

This can be combined with other types of `SystemParam` to safely and ergonomically create powerful custom types.

Essentially, this is just a variant of `Local<T>` which can run code at the end of the stage.

This type is used in the engine to derive `Commands` and `ParallelCommands`, which removes a bunch of unsafe boilerplate.

### Example

```rust
use bevy_ecs::system::{Deferred, SystemBuffer};

/// Sends events with a delay, but may run in parallel with other event writers.
#[derive(SystemParam)]
pub struct BufferedEventWriter<'s, E: Event> {
    queue: Deferred<'s, EventQueue<E>>,
}

struct EventQueue<E>(Vec<E>);

impl<'s, E: Event> BufferedEventWriter<'s, E> {
    /// Queues up an event to be sent at the end of this stage.
    pub fn send(&mut self, event: E) {
        self.queue.0.push(event);
    }
}

// The `SystemBuffer` trait controls how [`Deferred`] gets applied at the end of the stage.
impl<E: Event> SystemBuffer for EventQueue<E> {
    fn apply(&mut self, world: &mut World) {
        let mut events = world.resource_mut::<Events<E>>();
        for e in self.0.drain(..) {
            events.send(e);
        }
    }
}
```

---

## Changelog

+ Added the `SystemParam` type `Deferred<T>`, which can be used to defer `World` mutations. Powered by the new trait `SystemBuffer`.

Huge thanks to @maniwani, @devil-ira, @hymm, @cart, @superdump and @jakobhellermann for the help with this PR.

# Objective

- Follow-up to #6587.

- Minimal integration for the Stageless Scheduling RFC: https://github.com/bevyengine/rfcs/pull/45

## Solution

- [x] Remove old scheduling module

- [x] Migrate new methods to no longer use extension methods

- [x] Fix compiler errors

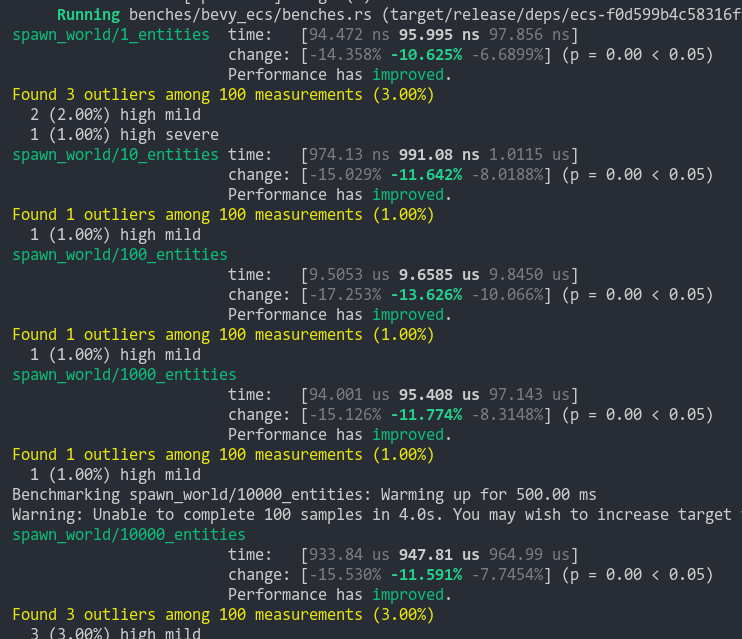

- [x] Fix benchmarks

- [x] Fix examples

- [x] Fix docs

- [x] Fix tests

## Changelog

### Added

- a large number of methods on `App` to work with schedules ergonomically

- the `CoreSchedule` enum

- `App::add_extract_system` via the `RenderingAppExtension` trait extension method

- the private `prepare_view_uniforms` system now has a public system set for scheduling purposes, called `ViewSet::PrepareUniforms`

### Removed

- stages, and all code that mentions stages

- states have been dramatically simplified, and no longer use a stack

- `RunCriteriaLabel`

- `AsSystemLabel` trait

- `on_hierarchy_reports_enabled` run criteria (now just uses an ad hoc resource checking run condition)

- systems in `RenderSet/Stage::Extract` no longer warn when they do not read data from the main world

- `transform_propagate_system_set`: this was a nonstandard pattern that didn't actually provide enough control. The systems are already `pub`: the docs have been updated to ensure that the third-party usage is clear.

### Changed

- `System::default_labels` is now `System::default_system_sets`.

- `App::add_default_labels` is now `App::add_default_sets`

- `CoreStage` and `StartupStage` enums are now `CoreSet` and `StartupSet`

- `App::add_system_set` was renamed to `App::add_systems`

- The `StartupSchedule` label is now defined as part of the `CoreSchedule` enum

- `.label(SystemLabel)` is now referred to as `.in_set(SystemSet)`

- `SystemLabel` trait was replaced by `SystemSet`

- `SystemTypeIdLabel<T>` was replaced by `SystemSetType<T>`

- The `ReportHierarchyIssue` resource now has a public constructor (`new`), and implements `PartialEq`

- Fixed time steps now use a schedule (`CoreSchedule::FixedTimeStep`) rather than a run criteria.

- Adding rendering extraction systems now panics rather than silently failing if no subapp with the `RenderApp` label is found.

- the `calculate_bounds` system, with the `CalculateBounds` label, is now in `CoreSet::Update`, rather than in `CoreSet::PostUpdate` before commands are applied.

- `SceneSpawnerSystem` now runs under `CoreSet::Update`, rather than `CoreStage::PreUpdate.at_end()`.

- `bevy_pbr::add_clusters` is no longer an exclusive system

- the top level `bevy_ecs::schedule` module was replaced with `bevy_ecs::scheduling`

- `tick_global_task_pools_on_main_thread` is no longer run as an exclusive system. Instead, it has been replaced by `tick_global_task_pools`, which uses a `NonSend` resource to force running on the main thread.

## Migration Guide

- Calls to `.label(MyLabel)` should be replaced with `.in_set(MySet)`

- Stages have been removed. Replace these with system sets, and then add command flushes using the `apply_system_buffers` exclusive system where needed.

- The `CoreStage`, `StartupStage`, `RenderStage` and `AssetStage` enums have been replaced with `CoreSet`, `StartupSet`, `RenderSet` and `AssetSet`. The same scheduling guarantees have been preserved.

- Systems are no longer added to `CoreSet::Update` by default. Add systems manually if this behavior is needed, although you should consider adding your game logic systems to `CoreSchedule::FixedTimestep` instead for more reliable framerate-independent behavior.

- Similarly, startup systems are no longer part of `StartupSet::Startup` by default. In most cases, this won't matter to you.

- For example, `add_system_to_stage(CoreStage::PostUpdate, my_system)` should be replaced with `add_system(my_system.in_set(CoreSet::PostUpdate))`.

- When testing systems or otherwise running them in a headless fashion, simply construct and run a schedule using `Schedule::new()` and `World::run_schedule` rather than constructing stages

- Run criteria have been renamed to run conditions. These can now be combined with each other and with states.

- Looping run criteria and state stacks have been removed. Use an exclusive system that runs a schedule if you need this level of control over system control flow.

- For app-level control flow over which schedules get run when (such as for rollback networking), create your own schedule and insert it under the `CoreSchedule::Outer` label.

- Fixed timesteps are now evaluated in a schedule, rather than controlled via run criteria. The `run_fixed_timestep` system runs this schedule between `CoreSet::First` and `CoreSet::PreUpdate` by default.

- Command flush points introduced by `AssetStage` have been removed. If you were relying on these, add them back manually.

- Adding extract systems is now typically done directly on the main app. Make sure the `RenderingAppExtension` trait is in scope, then call `app.add_extract_system(my_system)`.

- the `calculate_bounds` system, with the `CalculateBounds` label, is now in `CoreSet::Update`, rather than in `CoreSet::PostUpdate` before commands are applied. You may need to order your movement systems to occur before this system in order to avoid system order ambiguities in culling behavior.

- the `RenderLabel` `AppLabel` was renamed to `RenderApp` for clarity

- `App::add_state` now takes 0 arguments: the starting state is set based on the `Default` impl.

- Instead of creating `SystemSet` containers for systems that run in stages, simply use `.on_enter::<State::Variant>()` or its `on_exit` or `on_update` siblings.

- `SystemLabel` derives should be replaced with `SystemSet`. You will also need to add the `Debug`, `PartialEq`, `Eq`, and `Hash` traits to satisfy the new trait bounds.

- `with_run_criteria` has been renamed to `run_if`. Run criteria have been renamed to run conditions for clarity, and should now simply return a bool.

- States have been dramatically simplified: there is no longer a "state stack". To queue a transition to the next state, call `NextState::set`

## TODO

- [x] remove dead methods on App and World

- [x] add `App::add_system_to_schedule` and `App::add_systems_to_schedule`

- [x] avoid adding the default system set at inappropriate times

- [x] remove any accidental cycles in the default plugins schedule

- [x] migrate benchmarks

- [x] expose explicit labels for the built-in command flush points

- [x] migrate engine code

- [x] remove all mentions of stages from the docs

- [x] verify docs for States

- [x] fix uses of exclusive systems that use .end / .at_start / .before_commands

- [x] migrate RenderStage and AssetStage

- [x] migrate examples

- [x] ensure that transform propagation is exported in a sufficiently public way (the systems are already pub)

- [x] ensure that on_enter schedules are run at least once before the main app

- [x] re-enable opt-in to execution order ambiguities

- [x] revert change to `update_bounds` to ensure it runs in `PostUpdate`

- [x] test all examples

- [x] unbreak directional lights

- [x] unbreak shadows (see 3d_scene, 3d_shape, lighting, transparency_3d examples)

- [x] game menu example shows loading screen and menu simultaneously

- [x] display settings menu is a blank screen

- [x] `without_winit` example panics

- [x] ensure all tests pass

- [x] SubApp doc test fails

- [x] runs_spawn_local tasks fails

- [x] [Fix panic_when_hierachy_cycle test hanging](https://github.com/alice-i-cecile/bevy/pull/120)

## Points of Difficulty and Controversy

**Reviewers, please give feedback on these and look closely**

1. Default sets, from the RFC, have been removed. These added a tremendous amount of implicit complexity and result in hard to debug scheduling errors. They're going to be tackled in the form of "base sets" by @cart in a followup.

2. The outer schedule controls which schedule is run when `App::update` is called.

3. I implemented `Label` for `Box<dyn Label>` for our label types. This enables us to store schedule labels in concrete form, and then later run them. I ran into the same set of problems when working with one-shot systems. We've previously investigated this pattern in depth, and it does not appear to lead to extra indirection with nested boxes.

4. `SubApp::update` simply runs the default schedule once. This sucks, but this whole API is incomplete and this was the minimal changeset.

5. `time_system` and `tick_global_task_pools_on_main_thread` no longer use exclusive systems to attempt to force scheduling order

6. Implementation strategy for fixed timesteps

7. `AssetStage` was migrated to `AssetSet` without reintroducing command flush points. These did not appear to be used, and it's nice to remove these bottlenecks.

8. Migration of `bevy_render/lib.rs` and pipelined rendering. The logic here is unusually tricky, as we have complex scheduling requirements.

## Future Work (ideally before 0.10)

- Rename schedule_v3 module to schedule or scheduling

- Add a derive macro to states, and likely a `EnumIter` trait of some form

- Figure out what exactly to do with the "systems added should basically work by default" problem

- Improve ergonomics for working with fixed timesteps and states

- Polish FixedTime API to match Time

- Rebase and merge #7415

- Resolve all internal ambiguities (blocked on better tools, especially #7442)

- Add "base sets" to replace the removed default sets.

# Objective

I found several misspelled words in code and docs. These should be fixed.

## Solution

- Fix several minor typos

Co-authored-by: Chris Ohk <utilforever@gmail.com>

# Objective

Fixes #3184. Fixes #6640. Fixes #4798.

Using `Query::par_for_each(_mut)` currently requires a `batch_size` parameter, which affects how it chunks up large archetypes and tables into smaller chunks to run in parallel. Tuning this value is difficult, as the performance characteristics depend entirely on the state of the `World` it's being run on. Typically, users will just use a flat constant and tune it by hand until it performs well in some benchmarks. However, this is both error-prone and risks overfitting the tuning to that benchmark.

This PR proposes a naive automatic batch-size computation based on the current state of the `World`.

## Background

`Query::par_for_each(_mut)` schedules a new Task for every archetype or table that it matches. Archetypes/tables larger than the batch size are chunked into smaller tasks. Assuming every entity matched by the query has an identical workload, the worst case is a batch size equal to the size of the largest matched archetype or table. Conversely, a batch size of `max {archetype, table} size / thread count * COUNT_PER_THREAD` is likely the sweet spot where the overhead of scheduling tasks is minimized, at least without grouping small archetypes/tables together.

There is also likely a strict minimum batch size below which the overhead of scheduling these tasks is heavier than running the entire thing single-threaded.

## Solution

- [x] Remove the `batch_size` from `Query(State)::par_for_each` and friends.

- [x] Add a check to compute `batch_size = max {archetype/table} size / thread count * COUNT_PER_THREAD`

- [x] ~~Panic if thread count is 0.~~ Defer to `for_each` if the thread count is 1 or less.

- [x] Early return if there is no matched table/archetype.

- [x] Add an override option for users who have queries that strongly violate the initial assumption that all iterated entities have an equal workload.
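The checklist above can be sketched as a small standalone function. This is an illustrative sketch, not the PR's implementation: it reads the formula as "largest size divided by the total number of desired batches (`thread count * COUNT_PER_THREAD`)", and both the value of `COUNT_PER_THREAD` and the name `auto_batch_size` are assumptions made for the example.

```rust
/// Hypothetical tuning constant: desired number of batches per thread.
const COUNT_PER_THREAD: usize = 4;

/// Returns `None` when the caller should fall back to sequential iteration
/// (nothing matched, or at most one thread), otherwise a batch size >= 1.
fn auto_batch_size(table_sizes: &[usize], thread_count: usize) -> Option<usize> {
    // Early return if there is no matched table/archetype.
    let max_size = table_sizes.iter().copied().max()?;

    // Defer to the sequential path if the thread count is 1 or less.
    if thread_count <= 1 {
        return None;
    }

    // Assumed reading of the formula: split the largest table into
    // `thread_count * COUNT_PER_THREAD` batches, never going below 1.
    let batches = thread_count * COUNT_PER_THREAD;
    Some((max_size / batches).max(1))
}

fn main() {
    assert_eq!(auto_batch_size(&[], 8), None);
    assert_eq!(auto_batch_size(&[1024, 64], 1), None);
    assert_eq!(auto_batch_size(&[1024, 64], 8), Some(32));
    assert_eq!(auto_batch_size(&[10], 8), Some(1));
}
```

Because the size is derived from the largest matched table, smaller tables end up as a single batch each, which matches the "no grouping of small archetypes/tables" caveat in the Background section.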

---

## Changelog

Changed: `Query::par_for_each(_mut)` has been changed to `Query::par_iter(_mut)` and will now automatically try to produce a batch size for callers based on the current `World` state.

## Migration Guide

The `batch_size` parameter for `Query(State)::par_for_each(_mut)` has been removed. These calls will automatically compute a batch size for you. Remove these parameters from all calls to these functions.

Before:

```rust
fn parallel_system(query: Query<&MyComponent>) {
    query.par_for_each(32, |comp| {
        ...
    });
}
```

After:

```rust
fn parallel_system(query: Query<&MyComponent>) {
    query.par_iter().for_each(|comp| {
        ...
    });
}
```

Co-authored-by: Arnav Choubey <56453634+x-52@users.noreply.github.com>

Co-authored-by: Robert Swain <robert.swain@gmail.com>

Co-authored-by: François <mockersf@gmail.com>

Co-authored-by: Corey Farwell <coreyf@rwell.org>

Co-authored-by: Aevyrie <aevyrie@gmail.com>

# Objective

- Safety comments for the `CommandQueue` type are quite sparse and very imprecise. Sometimes, they are right for the wrong reasons or use circular reasoning.

## Solution

- Document previously-implicit safety invariants.

- Rewrite safety comments to actually reflect the specific invariants of each operation.

- Use `OwningPtr` instead of raw pointers, to encode an invariant in the type system instead of via comments.

- Use typed pointer methods when possible to increase reliability.

---

## Changelog

+ Added the function `OwningPtr::read_unaligned`.

Spiritual successor to #5205.

Actual successor to #6865.

# Objective

Currently, system params are defined using three traits: `SystemParam`, `ReadOnlySystemParam`, `SystemParamState`. The behavior for each param is specified by the `SystemParamState` trait, while `SystemParam` simply defers to the state.

Splitting the traits in this way makes it easier to implement within macros, but it increases the cognitive load. Worst of all, this approach requires each `MySystemParam` to have a public `MySystemParamState` type associated with it.

## Solution

* Merge the trait `SystemParamState` into `SystemParam`.

* Remove all trivial `SystemParam` state types.

* `OptionNonSendMutState<T>`: you will not be missed.

---

- [x] Fix/resolve the remaining test failure.

## Changelog

* Removed the trait `SystemParamState`, merging its functionality into `SystemParam`.

## Migration Guide

**Note**: this should replace the migration guide for #6865.

This is relative to Bevy 0.9, not main.

The traits `SystemParamState` and `SystemParamFetch` have been removed, and their functionality has been transferred to `SystemParam`.

```rust
// Before (0.9)
impl SystemParam for MyParam<'_, '_> {
    type State = MyParamState;
}
unsafe impl SystemParamState for MyParamState {
    fn init(world: &mut World, system_meta: &mut SystemMeta) -> Self { ... }
}
unsafe impl<'w, 's> SystemParamFetch<'w, 's> for MyParamState {
    type Item = MyParam<'w, 's>;
    fn get_param(&mut self, ...) -> Self::Item;
}
unsafe impl ReadOnlySystemParamFetch for MyParamState { }

// After (0.10)
unsafe impl SystemParam for MyParam<'_, '_> {
    type State = MyParamState;
    type Item<'w, 's> = MyParam<'w, 's>;
    fn init_state(world: &mut World, system_meta: &mut SystemMeta) -> Self::State { ... }
    fn get_param<'w, 's>(state: &mut Self::State, ...) -> Self::Item<'w, 's>;
}
unsafe impl ReadOnlySystemParam for MyParam<'_, '_> { }
```

The trait `ReadOnlySystemParamFetch` has been replaced with `ReadOnlySystemParam`.

```rust
// Before
unsafe impl ReadOnlySystemParamFetch for MyParamState {}

// After
unsafe impl ReadOnlySystemParam for MyParam<'_, '_> {}
```

# Objective

Resolves #6156.

The most common type of command is one that runs for a single entity. Built-in commands like this can be ergonomically added to the command queue using the `EntityCommands` struct. However, adding custom entity commands to the queue is quite cumbersome. You must first spawn an entity, store its ID in a local, then construct a command using that ID and add it to the queue. This prevents method chaining, which is the main benefit of using `EntityCommands`.

### Example (before)

```rust
struct MyCustomCommand(Entity);

impl Command for MyCustomCommand { ... }

let id = commands.spawn((...)).id();
commands.add(MyCustomCommand(id));
```

## Solution

Add the `EntityCommand` trait, which allows directly adding per-entity commands to the `EntityCommands` struct.

### Example (after)

```rust
struct MyCustomCommand;

impl EntityCommand for MyCustomCommand { ... }

commands.spawn((...)).add(MyCustomCommand);
```
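To make the chaining benefit concrete, here is a self-contained toy model of the idea using only the standard library. Every type in it (`World`, `Commands`, `EntityCommands`, `SetName`) is a simplified stand-in written for this sketch, and the `write(self, id, world)` method shape is an assumption rather than a statement of the engine's exact signature.

```rust
// Toy model: simplified stand-ins, not the engine's types.
type Entity = usize;

#[derive(Default)]
struct World {
    names: Vec<Option<String>>,
}

/// Counterpart of a `Command` that runs for a single entity.
trait EntityCommand: 'static {
    fn write(self, id: Entity, world: &mut World);
}

#[derive(Default)]
struct Commands {
    queue: Vec<Box<dyn FnOnce(&mut World)>>,
    next_id: Entity,
}

struct EntityCommands<'a> {
    id: Entity,
    commands: &'a mut Commands,
}

impl Commands {
    /// Reserves an id and queues the actual spawn.
    fn spawn(&mut self) -> EntityCommands<'_> {
        let id = self.next_id;
        self.next_id += 1;
        self.queue
            .push(Box::new(|world: &mut World| world.names.push(None)));
        EntityCommands { id, commands: self }
    }

    /// Applies all queued commands in order.
    fn apply(&mut self, world: &mut World) {
        for command in self.queue.drain(..) {
            command(world);
        }
    }
}

impl<'a> EntityCommands<'a> {
    /// Queues `command` for this entity and returns `self` for chaining.
    fn add<C: EntityCommand>(&mut self, command: C) -> &mut Self {
        let id = self.id;
        self.commands
            .queue
            .push(Box::new(move |world: &mut World| command.write(id, world)));
        self
    }
}

/// A custom per-entity command: no `Entity` field needed.
struct SetName(&'static str);

impl EntityCommand for SetName {
    fn write(self, id: Entity, world: &mut World) {
        world.names[id] = Some(self.0.to_string());
    }
}

fn main() {
    let mut world = World::default();
    let mut commands = Commands::default();

    // The entity id is supplied by `EntityCommands`, so calls chain.
    commands.spawn().add(SetName("player")).add(SetName("hero"));
    commands.apply(&mut world);

    assert_eq!(world.names, [Some("hero".to_string())]);
}
```

The key design point is that the command type no longer stores the entity id itself; the id is passed in when the command is written.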

---

## Changelog

- Added the trait `EntityCommand`. This is a counterpart of `Command` for types that execute code for a single entity.

## Future Work

If we feel it's necessary, we can simplify built-in commands (such as `Despawn`) to use this trait.

# Objective

Any closure with the signature `FnOnce(&mut World)` implicitly implements the trait `Command` due to a blanket implementation. However, this implementation unnecessarily has the `Sync` bound, which limits the types that can be used.

## Solution

Remove the bound.
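As a rough illustration of what dropping the bound permits, the sketch below models the blanket implementation with standard-library types only. `World`, `Command`, and `run_command` are stand-ins defined for this example; the `Send + 'static` bounds kept on the closure are an assumption about what remains after `Sync` is removed.

```rust
use std::cell::Cell;

// Stand-in types for illustration; not the engine's definitions.
struct World {
    counter: i32,
}

trait Command: Send + 'static {
    fn write(self, world: &mut World);
}

// Blanket implementation for closures, with no `Sync` bound.
impl<F> Command for F
where
    F: FnOnce(&mut World) + Send + 'static,
{
    fn write(self, world: &mut World) {
        self(world);
    }
}

fn run_command<C: Command>(command: C, world: &mut World) {
    command.write(world);
}

fn main() {
    let mut world = World { counter: 0 };

    // `Cell<i32>` is `Send` but not `Sync`, so a closure that owns one is
    // `Send + !Sync`. With a `Sync` bound this call would be rejected.
    let amount = Cell::new(5);
    run_command(
        move |world: &mut World| world.counter += amount.get(),
        &mut world,
    );

    assert_eq!(world.counter, 5);
}
```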

---

## Changelog

- `Command` closures no longer need to implement the marker trait `std::marker::Sync`.

# Objective

A separate `tracing` span for running a system's commands is created, even if the system doesn't have commands. This is adding extra measuring overhead (see #4892) where it's not needed.

## Solution

Move the span into `ParallelCommandState` and `CommandQueue`'s `SystemParamState::apply`. To get the right metadata for the span, an additional `&SystemMeta` parameter was added to `SystemParamState::apply`.

---

## Changelog

Added: `SystemMeta::name`

Changed: Systems without `Commands` and `ParallelCommands` will no longer show a "system_commands" span when profiling.

Changed: `SystemParamState::apply` now takes a `&SystemMeta` parameter in addition to the provided `&mut World`.

# Objective

* Implementing a custom `SystemParam` by hand requires implementing three traits -- four if it is read-only.

* The trait `SystemParamFetch<'w, 's>` is a workaround from before we had generic associated types, and is no longer necessary.

## Solution

* Combine the trait `SystemParamFetch` with `SystemParamState`.

* I decided to remove the `Fetch` name and keep the `State` name, since the former was consistently conflated with the latter.

* Replace the trait `ReadOnlySystemParamFetch` with `ReadOnlySystemParam`, which simplifies trait bounds in generic code.

---

## Changelog

- Removed the trait `SystemParamFetch`, moving its functionality to `SystemParamState`.

- Replaced the trait `ReadOnlySystemParamFetch` with `ReadOnlySystemParam`.

## Migration Guide

The trait `SystemParamFetch` has been removed, and its functionality has been transferred to `SystemParamState`.

```rust
// Before
impl SystemParamState for MyParamState {
    fn init(world: &mut World, system_meta: &mut SystemMeta) -> Self { ... }
}
impl<'w, 's> SystemParamFetch<'w, 's> for MyParamState {
    type Item = MyParam<'w, 's>;
    fn get_param(...) -> Self::Item;
}

// After
impl SystemParamState for MyParamState {
    type Item<'w, 's> = MyParam<'w, 's>; // Generic associated types!
    fn init(world: &mut World, system_meta: &mut SystemMeta) -> Self { ... }
    fn get_param<'w, 's>(...) -> Self::Item<'w, 's>;
}
```

The trait `ReadOnlySystemParamFetch` has been replaced with `ReadOnlySystemParam`.

```rust
// Before
unsafe impl ReadOnlySystemParamFetch for MyParamState {}

// After
unsafe impl<'w, 's> ReadOnlySystemParam for MyParam<'w, 's> {}
```

* Move the despawn debug log from `World::despawn` to `EntityMut::despawn`.

* Move the despawn non-existent warning log from `Commands::despawn` to `World::despawn`.

This should make logging consistent regardless of which of the three `despawn` methods is used.

Co-authored-by: devil-ira <justthecooldude@gmail.com>

# Objective

As explained by #5960, `Commands::get_or_spawn` may return a dangling `EntityCommands` that references a non-existent entity. As explained in [this comment], it may be undesirable to make the method return an `Option`.

- Addresses #5960

- Alternative to #5961

## Solution

This PR adds a doc comment to the method to inform the user that the returned `EntityCommands` is not guaranteed to be valid. It also adds panic doc comments on appropriate `EntityCommands` methods.

[this comment]: https://github.com/bevyengine/bevy/pull/5961#issuecomment-1259870849

# Objective

Now that we can consolidate Bundles and Components under a single insert (thanks to #2975 and #6039), almost 100% of world spawns now look like `world.spawn().insert((Some, Tuple, Here))`. Spawning an entity without any components is an extremely uncommon pattern, so it makes sense to give spawn the "first class" ergonomic api. This consolidated api should be made consistent across all spawn apis (such as World and Commands).

## Solution

All `spawn` apis (`World::spawn`, `Commands::spawn`, `ChildBuilder::spawn`, and `WorldChildBuilder::spawn`) now accept a bundle as input:

```rust
// before:
commands
    .spawn()
    .insert((A, B, C));

world
    .spawn()
    .insert((A, B, C));

// after
commands.spawn((A, B, C));
world.spawn((A, B, C));
```

All existing instances of `spawn_bundle` have been deprecated in favor of the new `spawn` api. A new `spawn_empty` has been added, replacing the old `spawn` api.

By allowing `world.spawn(some_bundle)` to replace `world.spawn().insert(some_bundle)`, this opened the door to removing the initial entity allocation in the "empty" archetype / table done in `spawn()` (and subsequent move to the actual archetype in `.insert(some_bundle)`).

This improves spawn performance by over 10%:

To take this measurement, I added a new `world_spawn` benchmark.

Unfortunately, optimizing `Commands::spawn` is slightly less trivial, as Commands expose the Entity id of spawned entities prior to actually spawning. Doing the optimization would (naively) require assurances that the `spawn(some_bundle)` command is applied before all other commands involving the entity (which would not necessarily be true, if memory serves). Optimizing `Commands::spawn` this way does feel possible, but it will require careful thought (and maybe some additional checks), which deserves its own PR. For now, it has the same performance characteristics of the current `Commands::spawn_bundle` on main.

**Note that 99% of this PR is simple renames and refactors. The only code that needs careful scrutiny is the new `World::spawn()` impl, which is relatively straightforward, but it has some new unsafe code (which re-uses the battle-tested `BundleSpawner` code path).**

---

## Changelog

- All `spawn` apis (`World::spawn`, `Commands::spawn`, `ChildBuilder::spawn`, and `WorldChildBuilder::spawn`) now accept a bundle as input

- All instances of `spawn_bundle` have been deprecated in favor of the new `spawn` api

- World and Commands now have `spawn_empty()`, which is equivalent to the old `spawn()` behavior.

## Migration Guide

```rust
// Old (0.8):
commands
    .spawn()
    .insert_bundle((A, B, C));
// New (0.9)
commands.spawn((A, B, C));

// Old (0.8):
commands.spawn_bundle((A, B, C));
// New (0.9)
commands.spawn((A, B, C));

// Old (0.8):
let entity = commands.spawn().id();
// New (0.9)
let entity = commands.spawn_empty().id();

// Old (0.8)
let entity = world.spawn().id();
// New (0.9)
let entity = world.spawn_empty().id();
```

# Objective

Take advantage of the "impl Bundle for Component" changes in #2975 / add the follow up changes discussed there.

## Solution

- Change `insert` and `remove` to accept a Bundle instead of a Component (for both Commands and World)

- Deprecate `insert_bundle`, `remove_bundle`, and `remove_bundle_intersection`

- Add `remove_intersection`

---

## Changelog

- `insert` and `remove` now accept a Bundle instead of a Component (for both Commands and World)

- `insert_bundle` and `remove_bundle` are deprecated

## Migration Guide

Replace `insert_bundle` with `insert`:

```rust
// Old (0.8)
commands.spawn().insert_bundle(SomeBundle::default());

// New (0.9)
commands.spawn().insert(SomeBundle::default());
```

Replace `remove_bundle` with `remove`:

```rust
// Old (0.8)
commands.entity(some_entity).remove_bundle::<SomeBundle>();

// New (0.9)
commands.entity(some_entity).remove::<SomeBundle>();
```

Replace `remove_bundle_intersection` with `remove_intersection`:

```rust
// Old (0.8)
world.entity_mut(some_entity).remove_bundle_intersection::<SomeBundle>();

// New (0.9)
world.entity_mut(some_entity).remove_intersection::<SomeBundle>();
```

Consider consolidating as many operations as possible to improve ergonomics and cut down on archetype moves:

```rust
// Old (0.8)
commands.spawn()
    .insert_bundle(SomeBundle::default())
    .insert(SomeComponent);

// New (0.9) - Option 1
commands.spawn().insert((
    SomeBundle::default(),
    SomeComponent,
));

// New (0.9) - Option 2
commands.spawn_bundle((
    SomeBundle::default(),
    SomeComponent,
));
```

## Next Steps

Consider changing `spawn` to accept a bundle and deprecate `spawn_bundle`.

# Objective

The doc comments for `Command` methods are a bit inconsistent on the format, they sometimes go out of scope, and most importantly they are wrong, in the sense that they claim to perform the action described by the command, while in reality, they just push a command to perform the action.

- Follow-up of #5938.

- Related to #5913.

## Solution

- Where applicable, only stated that a `Command` is pushed.

- Added a “See also” section for similar methods.

- Added a missing “Panics” section for `Commands::entity`.

- Removed a wrong comment about `Commands::get_or_spawn` returning `None` (It does not return an option).

- Removed polluting descriptions of other items.

- Misc formatting changes.

## Future possibilities

Since the `Command` implementors (`Spawn`, `InsertBundle`, `InitResource`, ...) are public, I thought that it might be appropriate to describe the action of the command there instead of the method, and to add a `method → command struct` link to fill the gap.

If that seems too far-fetched, we may opt to make them private, if possible, or `#[doc(hidden)]`.

# Objective

- Make people stop believing that commands are applied immediately (hopefully).

- Closes #5913.

- Alternative to #5930.

## Solution

I added the clause “to perform impactful changes to the `World`” to the first line to subliminally help the reader accept the fact that some operations cannot be performed immediately without messing up everything.

Then I explicitly said that applying a command requires exclusive `World` access, and finally I proceeded to show when these commands are automatically applied.

I also added a brief paragraph about how users can apply commands manually, if they want.

---

### Further possibilities

If you agree, we can also change the text of the method documentation (in a separate PR) to stress that methods enqueue an action instead of performing it immediately. For example, in `Commands::spawn`:

> Creates a new `Entity`

would be changed to something like:

> Issues a `Command` to spawn a new `Entity`

This may even have a greater effect, since when typing in an IDE, the docs of the method pop up and the programmer can read them on the fly.

# Objective

- Fixes #5850

## Solution

- As described in the issue, added a `get_entity` method on `Commands` that returns an `Option<EntityCommands>`

## Changelog

- Added the new method with a simple doc test

- I have re-used `get_entity` in `entity`, similarly to how `get_single` is used in `single` while additionally preserving the error message

- Add `#[inline]` to both functions

Entities with queued despawn commands will still return commands when `get_entity` is called, but this reflects the fact that the entity still exists until those commands are flushed.
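The behavior described above, including `entity` reusing `get_entity` while keeping its own panic message, can be sketched with a toy model. Everything here is a simplified stand-in written for illustration (the real `Commands` does not store a list of live entities itself), and the `flush` method and the panic text are assumptions.

```rust
// Toy model: simplified stand-ins, not the engine's types.
type Entity = u32;

#[derive(Default)]
struct Commands {
    alive: Vec<Entity>,
    pending_despawns: Vec<Entity>,
}

struct EntityCommands<'a> {
    entity: Entity,
    commands: &'a mut Commands,
}

impl EntityCommands<'_> {
    /// Queues a despawn; the entity stays alive until `flush`.
    fn despawn(&mut self) {
        self.commands.pending_despawns.push(self.entity);
    }
}

impl Commands {
    /// Fallible lookup: `None` if the entity does not currently exist.
    #[inline]
    fn get_entity(&mut self, entity: Entity) -> Option<EntityCommands<'_>> {
        if self.alive.contains(&entity) {
            Some(EntityCommands { entity, commands: self })
        } else {
            None
        }
    }

    /// Panicking lookup, built on `get_entity` with its own error message.
    #[inline]
    #[track_caller]
    fn entity(&mut self, entity: Entity) -> EntityCommands<'_> {
        match self.get_entity(entity) {
            Some(commands) => commands,
            None => panic!("entity {entity} does not exist"),
        }
    }

    /// Applies queued despawns.
    fn flush(&mut self) {
        let pending = std::mem::take(&mut self.pending_despawns);
        self.alive.retain(|e| !pending.contains(e));
    }
}

fn main() {
    let mut commands = Commands {
        alive: vec![1, 2],
        ..Default::default()
    };

    assert!(commands.get_entity(7).is_none());

    commands.entity(1).despawn();
    // The despawn is only queued, so the entity is still found.
    assert!(commands.get_entity(1).is_some());

    commands.flush();
    assert!(commands.get_entity(1).is_none());
}
```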

A potential `contains_entity` could also be added in this PR if desired, that would effectively be replacing Entities.contains but may be more discoverable if this is a common use case.

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

*This PR description is an edited copy of #5007, written by @alice-i-cecile.*

# Objective

Follow-up to https://github.com/bevyengine/bevy/pull/2254. The `Resource` trait currently has a blanket implementation for all types that meet its bounds.

While ergonomic, this results in several drawbacks:

* it is possible to make confusing, silent mistakes such as inserting a function pointer (Foo) rather than a value (Foo::Bar) as a resource

* it is challenging to discover if a type is intended to be used as a resource

* we cannot later add customization options (see the [RFC](https://github.com/bevyengine/rfcs/blob/main/rfcs/27-derive-component.md) for the equivalent choice for Component).

* dependencies can use the same Rust type as a resource in invisibly conflicting ways

* raw Rust types used as resources cannot preserve privacy appropriately, as anyone able to access that type can read and write to internal values

* we cannot capture a definitive list of possible resources to display to users in an editor
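The first drawback in the list above is easy to reproduce in plain Rust. The sketch below uses a hypothetical `Resources` map and a local `Resource` trait with a blanket implementation as stand-ins for the engine's storage; `Volume` is an example type invented for the demonstration.

```rust
use std::any::{Any, TypeId};
use std::collections::HashMap;

// Stand-in for a blanket implementation over all types meeting the bounds.
trait Resource: Send + Sync + 'static {}
impl<T: Send + Sync + 'static> Resource for T {}

struct Volume(u32);

// Hypothetical type-keyed storage, for illustration only.
#[derive(Default)]
struct Resources {
    map: HashMap<TypeId, Box<dyn Any>>,
}

impl Resources {
    fn insert<R: Resource>(&mut self, value: R) {
        self.map.insert(TypeId::of::<R>(), Box::new(value));
    }

    fn get<R: Resource>(&self) -> Option<&R> {
        self.map.get(&TypeId::of::<R>())?.downcast_ref::<R>()
    }
}

fn main() {
    let mut resources = Resources::default();

    // Mistake: `Volume` on its own is the tuple-struct constructor, a
    // function item that also satisfies `Send + Sync + 'static`, so this
    // compiles and silently stores a function instead of a value.
    resources.insert(Volume);
    assert!(resources.get::<Volume>().is_none());

    // Intended call.
    resources.insert(Volume(7));
    assert_eq!(resources.get::<Volume>().map(|v| v.0), Some(7));
}
```

With an opt-in trait instead of the blanket implementation, the first `insert` call would fail to compile because the constructor's function type never opted in.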

## Notes to reviewers

* Review this commit-by-commit; there's effectively no back-tracking and there's a lot of churn in some of these commits.

*ira: My commits are not as well organized :')*

* I've relaxed the bound on Local to Send + Sync + 'static: I don't think these concerns apply there, so this can keep things simple. Storing e.g. a u32 in a Local is fine, because there's a variable name attached explaining what it does.

* I think this is a bad place for the Resource trait to live, but I've left it in place to make reviewing easier. IMO that's best tackled with https://github.com/bevyengine/bevy/issues/4981.

## Changelog

`Resource` is no longer automatically implemented for all matching types. Instead, use the new `#[derive(Resource)]` macro.

## Migration Guide

Add `#[derive(Resource)]` to all types you are using as a resource.

If you are using a third party type as a resource, wrap it in a tuple struct to bypass orphan rules. Consider deriving `Deref` and `DerefMut` to improve ergonomics.
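The wrapping advice can be sketched without the engine. In this hedged example, `Resource` is a hypothetical marker trait standing in for Bevy's, `ThirdPartyConfig` stands in for a type from another crate, and the `Deref`/`DerefMut` impls are written by hand where a real project would likely use derive macros.

```rust
use std::ops::{Deref, DerefMut};

/// Hypothetical marker trait standing in for Bevy's `Resource`.
pub trait Resource: Send + Sync + 'static {}

/// Stand-in for a type defined in a third-party crate.
pub struct ThirdPartyConfig {
    pub volume: u8,
}

/// Local tuple struct: implementing the trait on our own type
/// is allowed by the orphan rules.
pub struct Config(pub ThirdPartyConfig);

impl Resource for Config {}

impl Deref for Config {
    type Target = ThirdPartyConfig;

    fn deref(&self) -> &Self::Target {
        &self.0
    }
}

impl DerefMut for Config {
    fn deref_mut(&mut self) -> &mut Self::Target {
        &mut self.0
    }
}

/// Only accepts types that explicitly opted in to being a resource.
pub fn requires_resource<R: Resource>(resource: R) -> R {
    resource
}
```

With `Deref` and `DerefMut` in place, fields of the wrapped type can be read and written through the wrapper directly, for example `config.volume += 1`.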

`ClearColor` no longer implements `Component`. Using `ClearColor` as a component in 0.8 did nothing.

Use the `ClearColorConfig` in the `Camera3d` and `Camera2d` components instead.

Co-authored-by: Alice <alice.i.cecile@gmail.com>

Co-authored-by: Alice Cecile <alice.i.cecile@gmail.com>

Co-authored-by: devil-ira <justthecooldude@gmail.com>

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

Improve documentation, informing users of the limitations in Bevy's idiomatic patterns and suggesting alternatives for when those limitations are encountered.

## Solution

* Add documentation to `Commands` informing the user of the option of writing one-shot commands with closures.

* Add documentation to `EventWriter` regarding the limitations of event types, and suggesting alternatives using commands.

Remove unnecessary calls to `iter()`/`iter_mut()`.

Mainly updates the use of queries in our code, docs, and examples.

```rust

// From

for _ in list.iter() {

for _ in list.iter_mut() {

// To

for _ in &list {

for _ in &mut list {

```

We already enable the pedantic lint [clippy::explicit_iter_loop](https://rust-lang.github.io/rust-clippy/stable/) inside of Bevy. However, this only warns for a few known types from the standard library.
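For `for _ in &list` to work on a custom type, the type needs `IntoIterator` implemented for its references. The sketch below shows the general shape with a hypothetical `List` container; it is an illustration of the language mechanism, not the implementation used for Bevy's queries.

```rust
/// Hypothetical container; stands in for any type exposing `iter()`/`iter_mut()`.
pub struct List {
    items: Vec<u32>,
}

impl List {
    pub fn new(items: Vec<u32>) -> Self {
        Self { items }
    }
}

// Enables `for item in &list`.
impl<'a> IntoIterator for &'a List {
    type Item = &'a u32;
    type IntoIter = std::slice::Iter<'a, u32>;

    fn into_iter(self) -> Self::IntoIter {
        self.items.iter()
    }
}

// Enables `for item in &mut list`.
impl<'a> IntoIterator for &'a mut List {
    type Item = &'a mut u32;
    type IntoIter = std::slice::IterMut<'a, u32>;

    fn into_iter(self) -> Self::IntoIter {
        self.items.iter_mut()
    }
}

/// Doubles every item in place, then sums the items.
pub fn double_and_sum(list: &mut List) -> u32 {
    for item in &mut *list {
        *item *= 2;
    }
    let mut sum = 0;
    for item in &*list {
        sum += *item;
    }
    sum
}
```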

## Note for reviewers

As you can see the additions and deletions are exactly equal.

Maybe give it a quick skim to check I didn't sneak in a crypto miner, but you don't have to torture yourself by reading every line.

I already experienced enough pain making this PR :)

Co-authored-by: devil-ira <justthecooldude@gmail.com>

# Objective

- Currently, the `Extract` `RenderStage` is executed on the main world, with the render world available as a resource.

- However, when needing access to resources in the render world (e.g. to mutate them), the only way to do so was to get exclusive access to the whole `RenderWorld` resource.

- This meant that effectively only one extract which wrote to resources could run at a time.

- Previously, writing to the world during `Extract` was not a non-happy path, even though we want to discourage it.

## Solution

- Move the extract stage to run on the render world.

- Add the main world as a `MainWorld` resource.

- Add an `Extract` `SystemParam` as a convenience to access a (read only) `SystemParam` in the main world during `Extract`.

## Future work

It should be possible to avoid needing to use `get_or_spawn` for the render commands, since now the `Commands`' `Entities` matches up with the world being executed on.

We need to determine how this interacts with https://github.com/bevyengine/bevy/pull/3519

It's theoretically possible to remove the need for the `value` method on `Extract`. However, that requires slightly changing the `SystemParam` interface, which would make it more complicated. That would probably mess up the `SystemState` api too.

## Todo

I still need to add doc comments to `Extract`.

---

## Changelog

### Changed

- The `Extract` `RenderStage` now runs on the render world (instead of the main world as before).

You must use the `Extract` `SystemParam` to access the main world during the extract phase.

Resources on the render world can now be accessed using `ResMut` during extract.

### Removed

- `Commands::spawn_and_forget`. Use `Commands::get_or_spawn(e).insert_bundle(bundle)` instead

## Migration Guide

The `Extract` `RenderStage` now runs on the render world (instead of the main world as before).

You must use the `Extract` `SystemParam` to access the main world during the extract phase. `Extract` takes a single type parameter, which is any system parameter (such as `Res`, `Query` etc.). It will extract this from the main world, and returns the result of this extraction when `value` is called on it.

For example, if previously your extract system looked like:

```rust

fn extract_clouds(mut commands: Commands, clouds: Query<Entity, With<Cloud>>) {

for cloud in clouds.iter() {

commands.get_or_spawn(cloud).insert(Cloud);

}

}

```

the new version would be:

```rust

fn extract_clouds(mut commands: Commands, mut clouds: Extract<Query<Entity, With<Cloud>>>) {

for cloud in clouds.value().iter() {

commands.get_or_spawn(cloud).insert(Cloud);

}

}

```

The diff is:

```diff

--- a/src/clouds.rs

+++ b/src/clouds.rs

@@ -1,5 +1,5 @@

-fn extract_clouds(mut commands: Commands, clouds: Query<Entity, With<Cloud>>) {

- for cloud in clouds.iter() {

+fn extract_clouds(mut commands: Commands, mut clouds: Extract<Query<Entity, With<Cloud>>>) {

+ for cloud in clouds.value().iter() {

commands.get_or_spawn(cloud).insert(Cloud);

}

}

```

You can now also access resources from the render world using the normal system parameters during `Extract`:

```rust

fn extract_assets(mut render_assets: ResMut<MyAssets>, source_assets: Extract<Res<MyAssets>>) {

*render_assets = source_assets.clone();

}

```

Please note that all existing extract systems need to be updated to match this new style; even if they currently compile they will not run as expected. A warning will be emitted on a best-effort basis if this is not met.

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

- Help users fix the issue where their app panics when executing a command on a despawned entity

## Solution

- Add an error code and a page describing how to debug the issue

# Objective

`SAFETY` comments are meant to be placed before `unsafe` blocks and should contain the reasoning of why in this case the usage of unsafe is okay. This is useful when reading the code because it makes it clear which assumptions are required for safety, and makes it easier to spot possible unsoundness holes. It also forces the code writer to think of something to write and maybe look at the safety contracts of any called unsafe methods again to double-check their correct usage.

There's a clippy lint called `undocumented_unsafe_blocks` which warns when an `unsafe` block is used without such a comment.

## Solution

- since clippy expects `SAFETY` instead of `SAFE`, rename those

- add `SAFETY` comments in more places

- for the last remaining 3 places, add an `#[allow()]` and `// TODO` since I wasn't comfortable enough with the code to justify their safety

- add ` #![warn(clippy::undocumented_unsafe_blocks)]` to `bevy_ecs`

### Note for reviewers

The first commit only renames `SAFE` to `SAFETY` so it doesn't need a thorough review.

cb042a416e..55cef2d6fa is the diff for all other changes.

### Safety comments where I'm not too familiar with the code

- 774012ece5/crates/bevy_ecs/src/entity/mod.rs (L540-L546)

- 774012ece5/crates/bevy_ecs/src/world/entity_ref.rs (L249-L252)

### Locations left undocumented with a `TODO` comment

- 5dde944a30/crates/bevy_ecs/src/schedule/executor_parallel.rs (L196-L199)

- 5dde944a30/crates/bevy_ecs/src/world/entity_ref.rs (L287-L289)

- 5dde944a30/crates/bevy_ecs/src/world/entity_ref.rs (L413-L415)

Co-authored-by: Jakob Hellermann <hellermann@sipgate.de>

# Objective

- Provide a way to see the components of an entity.

- Fixes #1467

## Solution

- Add `World::inspect_entity`. It accepts an `Entity` and returns a vector of `&ComponentInfo` that the entity has.

- Add `EntityCommands::log_components`. It logs the component names of the entity. (info level)

---

## Changelog

### Added

- Ability to inspect components of an entity through `World::inspect_entity` or `EntityCommands::log_components`

(follow-up to #4423)

# Objective

Currently, it isn't possible to easily fire commands from within par_for_each blocks. This PR allows for issuing commands from within parallel scopes.
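One way to picture issuing commands from parallel scopes is to give each worker its own buffer and merge the buffers once the workers finish. The sketch below uses `std::thread::scope` and a hypothetical `Command` enum; it is an assumption-laden illustration of the idea, not the mechanism this PR implements.

```rust
use std::thread;

/// Hypothetical command type recorded by the workers.
#[derive(Debug, PartialEq)]
pub enum Command {
    Despawn(u32),
}

/// Splits `ids` across worker threads. Each worker records commands into its
/// own local buffer, and the buffers are merged after all workers have joined.
pub fn despawn_even_ids(ids: &[u32], workers: usize) -> Vec<Command> {
    let workers = workers.max(1);
    // Round up so every id lands in some chunk; `chunks(0)` would panic.
    let chunk_size = ((ids.len() + workers - 1) / workers).max(1);

    thread::scope(|scope| {
        let handles: Vec<_> = ids
            .chunks(chunk_size)
            .map(|chunk| {
                scope.spawn(move || {
                    let mut local = Vec::new();
                    for &id in chunk {
                        if id % 2 == 0 {
                            local.push(Command::Despawn(id));
                        }
                    }
                    local
                })
            })
            .collect();

        // Joining in spawn order keeps the merged commands in input order.
        handles
            .into_iter()
            .flat_map(|handle| handle.join().expect("worker panicked"))
            .collect()
    })
}
```

No locking is needed while the workers run, because no buffer is shared between threads until the merge.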

# Objective

This PR aims to improve the soundness of `CommandQueue`. In particular it aims to:

- make it sound to store commands that contain padding or uninitialized bytes;

- avoid uses of commands after moving them in the queue's buffer (`std::mem::forget` is technically a use of its argument);

- remove useless checks: `self.bytes.as_mut_ptr().is_null()` is always `false` because even `Vec`s that haven't allocated use a dangling pointer. Moreover the same pointer was used to write the command, so it ought to be valid for reads if it was for writes.

## Solution

- To soundly store padding or uninitialized bytes `CommandQueue` was changed to contain a `Vec<MaybeUninit<u8>>` instead of `Vec<u8>`;

- To avoid uses of the command through `std::mem::forget`, `ManuallyDrop` was used.
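The two changes above can be illustrated with a small type-erased byte buffer. This is a simplified sketch under stated assumptions: `ByteQueue`, `push`, and `read` are hypothetical names, and the real `CommandQueue` also stores metadata for each command, which is omitted here.

```rust
use std::mem::{size_of, ManuallyDrop, MaybeUninit};

/// Hypothetical byte buffer; not Bevy's actual `CommandQueue`.
pub struct ByteQueue {
    // `MaybeUninit<u8>` may soundly hold padding or uninitialized bytes.
    bytes: Vec<MaybeUninit<u8>>,
}

impl ByteQueue {
    pub fn new() -> Self {
        Self { bytes: Vec::new() }
    }

    /// Moves `value` into the buffer and returns its byte offset.
    pub fn push<T>(&mut self, value: T) -> usize {
        // `ManuallyDrop` prevents the destructor from running without
        // "using" the value again after its bytes have been copied.
        let value = ManuallyDrop::new(value);
        let offset = self.bytes.len();
        let size = size_of::<T>();
        self.bytes.reserve(size);
        // SAFETY: `reserve` guarantees capacity for `size` more bytes, the
        // source is valid for `size` bytes, the regions cannot overlap, and
        // `MaybeUninit<u8>` needs no initialization before `set_len`.
        unsafe {
            std::ptr::copy_nonoverlapping(
                &*value as *const T as *const MaybeUninit<u8>,
                self.bytes.as_mut_ptr().add(offset),
                size,
            );
            self.bytes.set_len(offset + size);
        }
        offset
    }

    /// Moves a value back out of the buffer.
    ///
    /// # Safety
    /// A `T` must have been pushed at `offset`, and it must be read at most once.
    pub unsafe fn read<T>(&self, offset: usize) -> T {
        // SAFETY: the caller guarantees a valid `T` lives at `offset`;
        // `read_unaligned` is used because the buffer is only byte-aligned.
        unsafe { std::ptr::read_unaligned(self.bytes.as_ptr().add(offset) as *const T) }
    }
}
```

Note that values which are pushed but never read are leaked in this sketch, since the buffer has no record of their types.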

## Other observations

While writing this PR I noticed that `CommandQueue` doesn't seem to drop the commands that weren't applied. While this is a pretty niche case (you would have to be manually using `CommandQueue`/`std::mem::swap`ping one), I wonder if it should be documented anyway.

# Objective

- The current API docs of `Commands` are very short and very opaque to newcomers.

## Solution

- Try to explain what it is without requiring knowledge of other parts of `bevy_ecs` like `World` or `SystemParam`.

Co-authored-by: Charles <IceSentry@users.noreply.github.com>

Free at last!

# Objective

- Using `.system()` is no longer needed anywhere, and anyone using it will have already gotten a deprecation warning.

- https://github.com/bevyengine/bevy/pull/3302 was a super special case for `.system()`, since it was so prevalent. However, that's no reason.

- Despite it being deprecated, another couple of uses of it have already landed, including in the deprecating PR.

- These have all been because of doc examples having warnings not breaking CI - 🎟️?

## Solution

- Remove it.

- It's gone

---

## Changelog

- You can no longer use `.system()`

## Migration Guide

- You can no longer use `.system()`. It was deprecated in 0.7.0, and you should have followed the deprecation warning then. You can just remove the method call.

- Thanks to the @TheRawMeatball for producing

What it says on the tin.

This has got more to do with making `clippy` slightly more *quiet* than it does with changing anything that might greatly impact readability or performance.

That said, deriving `Default` for a couple of structs is a nice easy win.

# Objective

- Fixes #3078

- Fixes #1397

## Solution

- Implement Commands::init_resource.

- Also implement for World, for consistency and to simplify internal structure.

- While we're here, clean up some of the docs for Command and World resource modification.

This is my first contribution to this exciting project! Thanks so much for your wonderful work. If there is anything that I can improve about this PR, please let me know :)

# Objective

- Fixes #2899

- If a simple one-off command is needed to be added within a System, this simplifies that process so that we can simply do `commands.add(|world: &mut World| { /* code here */ })` instead of defining a custom type implementing `Command`.

## Solution

- This is achieved by `impl Command for F where F: FnOnce(&mut World) + Send + Sync + 'static` as just calling the function.

I am not sure if the bounds can be further relaxed but needed the whole `Send`, `Sync`, and `'static` to get it to compile.
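The blanket impl can be sketched in isolation. Here `World`, `Command`, and `Commands` are simplified hypothetical stand-ins (a `World` with a single counter, a queue of boxed closures), so only the shape of the `impl Command for F` line mirrors the description above.

```rust
/// Hypothetical world with a single piece of state.
#[derive(Default)]
pub struct World {
    pub counter: u32,
}

/// Simplified stand-in for the `Command` trait.
pub trait Command: Send + Sync + 'static {
    fn apply(self, world: &mut World);
}

// Any matching closure is a command: applying it just calls the function.
impl<F> Command for F
where
    F: FnOnce(&mut World) + Send + Sync + 'static,
{
    fn apply(self, world: &mut World) {
        self(world)
    }
}

/// Simplified stand-in for a deferred command queue.
#[derive(Default)]
pub struct Commands {
    queue: Vec<Box<dyn FnOnce(&mut World) + Send + Sync>>,
}

impl Commands {
    /// Queues a command; nothing touches the world yet.
    pub fn add<C: Command>(&mut self, command: C) {
        self.queue
            .push(Box::new(move |world: &mut World| command.apply(world)));
    }

    /// Applies all queued commands in insertion order.
    pub fn apply(&mut self, world: &mut World) {
        for command in self.queue.drain(..) {
            command(&mut *world);
        }
    }
}
```

In this sketch the `'static` bound is what allows the closure to be boxed and stored until the queue is applied, and `Send + Sync` carry over from the trait's own supertraits.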

# Objective

- Calling .id() has no purpose unless you use the Entity returned

- This is an easy source of confusion for beginners.

- This is easily missed during refactors.

## Solution

- Mark the appropriate methods as #[must_use]
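As a rough illustration of the attribute, the sketch below marks a hypothetical `id` method so that the compiler warns when the returned value is discarded. The types and the warning message are invented for this example.

```rust
/// Hypothetical entity id.
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
pub struct Entity(pub u32);

/// Hypothetical stand-in for `EntityCommands`.
pub struct EntityCommands {
    entity: Entity,
}

impl EntityCommands {
    pub fn new(entity: Entity) -> Self {
        Self { entity }
    }

    /// Returns the id of the entity these commands target.
    #[must_use = "omit the `.id()` call if you do not need the `Entity`"]
    pub fn id(&self) -> Entity {
        self.entity
    }
}

/// Uses the returned id, so no warning is emitted here.
pub fn spawn_and_remember() -> Entity {
    let commands = EntityCommands::new(Entity(5));
    // Writing `commands.id();` on its own line would trigger the
    // `unused_must_use` warning with the message above.
    commands.id()
}
```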

# Objective

- Remove the warning about accidentally inserting bundles with `EntityCommands::insert`. Since a component now needs to implement `Component`, the warning is unnecessary.

#3457 adds the `doc_markdown` clippy lint, which checks doc comments to make sure code identifiers are escaped with backticks. This causes a lot of lint errors, so this is one of a number of PR's that will fix those lint errors one crate at a time.

This PR fixes lints in the `bevy_ecs` crate.

This implements the most minimal variant of #1843 - a derive for marker trait. This is a prerequisite to more complicated features like statically defined storage type or opt-out component reflection.

In order to make component struct's purpose explicit and avoid misuse, it must be annotated with `#[derive(Component)]` (manual impl is discouraged for compatibility). Right now this is just a marker trait, but in the future it might be expanded. Making this change early allows us to make further changes later without breaking backward compatibility for derive macro users.

This already prevents a lot of issues, like using bundles in `insert` calls. Primitive types are no longer valid components as well. This can be easily worked around by adding newtype wrappers and deriving `Component` for them.

One funny example of prevented bad code (from our own tests) is when a newtype struct or enum variant is used. Previously, it was possible to write `insert(Newtype)` instead of `insert(Newtype(value))`. That code compiled, because function pointers (in this case newtype struct constructor) implement `Send + Sync + 'static`, so we allowed them to be used as components. This is no longer the case and such invalid code will trigger a compile error.
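The pitfall is easy to reproduce with plain Rust. In this sketch, `Component` is a hypothetical marker trait, and `insert_unchecked` and `insert` are invented functions that model the old blanket bound and the new explicit bound respectively.

```rust
/// Hypothetical marker trait standing in for `Component`.
pub trait Component: Send + Sync + 'static {}

/// A newtype component wrapping a primitive.
pub struct Health(pub u32);

impl Component for Health {}

/// Old-style bound: anything `Send + Sync + 'static` is accepted,
/// including the constructor function `Health` itself.
pub fn insert_unchecked<T: Send + Sync + 'static>(_value: T) -> usize {
    std::mem::size_of::<T>()
}

/// New-style bound: only types that opted in are accepted.
pub fn insert<T: Component>(_value: T) -> usize {
    std::mem::size_of::<T>()
}

/// Returns the sizes of what was actually passed in each case.
pub fn demo() -> (usize, usize) {
    // Compiles, but passes the zero-sized constructor function, not a value.
    let mistaken = insert_unchecked(Health);
    // `insert(Health)` would be rejected at compile time, because the
    // constructor function does not implement `Component`.
    let intended = insert(Health(10));
    (mistaken, intended)
}
```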

Co-authored-by: = <=>

Co-authored-by: TheRawMeatball <therawmeatball@gmail.com>

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

## Objective

The upcoming Bevy Book makes many references to the API documentation of bevy.

Most references belong to the first two chapters of the Bevy Book:

- bevyengine/bevy-website#176

- bevyengine/bevy-website#182

This PR attempts to improve the documentation of `bevy_ecs` and `bevy_app` in order to help readers of the Book who want to delve deeper into technical details.

## Solution

- Add crate-level and module-level documentation

- Document the most important items (basically those included in the preludes), with the following style, where applicable:

- **Summary.** Short description of the item.

- **Second paragraph.** Detailed description of the item, without going too much in the implementation.

- **Code example(s).**

- **Safety or panic notes.**

## Collaboration

Any kind of collaboration is welcome, especially corrections, wording, new ideas and guidelines on where the focus should be put in.

---

### Related issues

- Fixes #2246

# Objective

Sometimes, the unwraps in `entity_mut` could fail here, if the entity was despawned *before* this command was applied.

The simplest case involves two command buffers:

```rust

use bevy::prelude::*;

fn b(mut commands1: Commands, mut commands2: Commands) {

let id = commands2.spawn().insert_bundle(()).id();

commands1.entity(id).despawn();

}

fn main() {

App::build().add_system(b.system()).run();

}

```

However, a more complicated version arises in the case of ambiguity:

```rust

use std::time::Duration;

use bevy::{app::ScheduleRunnerPlugin, prelude::*};

use rand::Rng;

fn cleanup(mut e: ResMut<Option<Entity>>) {

*e = None;

}

fn sleep_randomly() {

let mut rng = rand::thread_rng();

std::thread::sleep(Duration::from_millis(rng.gen_range(0..50)));

}

fn spawn(mut commands: Commands, mut e: ResMut<Option<Entity>>) {

*e = Some(commands.spawn().insert_bundle(()).id());

}

fn despawn(mut commands: Commands, e: Res<Option<Entity>>) {

let mut rng = rand::thread_rng();

std::thread::sleep(Duration::from_millis(rng.gen_range(0..50)));

if let Some(e) = *e {

commands.entity(e).despawn();

}

}

fn main() {

App::build()

.add_system(cleanup.system().label("cleanup"))

.add_system(sleep_randomly.system().label("before_despawn"))

.add_system(despawn.system().after("cleanup").after("before_despawn"))

.add_system(sleep_randomly.system().label("before_spawn"))

.add_system(spawn.system().after("cleanup").after("before_spawn"))

.insert_resource(None::<Entity>)

.add_plugin(ScheduleRunnerPlugin::default())

.run();

}

```

In the cases where this example crashes, it's because `despawn` was ordered before `spawn` in the topological ordering of systems (which determines when buffers are applied). However, `despawn` actually ran *after* `spawn`, because these systems are ambiguous, so the jiggles in the sleeping time triggered a case where this works.

## Solution

- Give a better error message

This upstreams the code changes used by the new renderer to enable cross-app Entity reuse:

* Spawning at specific entities

* get_or_spawn: spawns an entity if it doesn't already exist and returns an EntityMut

* insert_or_spawn_batch: the batched equivalent to `world.get_or_spawn(entity).insert_bundle(bundle)`

* Clearing entities and storages

* Allocating Entities with "invalid" archetypes. These entities cannot be queried / are treated as "non existent". They serve as "reserved" entities that won't show up when calling `spawn()`. They must be "specifically spawned at" using apis like `get_or_spawn(entity)`.

In combination, these changes enable the "render world" to clear entities / storages each frame and reserve all "app world entities". These can then be spawned during the "render extract step".
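The "spawn at a specific entity" and "clear everything" operations can be pictured with a map keyed by entity id. This is a deliberately simplified sketch: `World` here is a hypothetical `HashMap`-backed type with component names as strings, with no archetypes or reserved-entity handling.

```rust
use std::collections::HashMap;

/// Hypothetical entity id shared between two worlds.
#[derive(Clone, Copy, Debug, PartialEq, Eq, Hash)]
pub struct Entity(pub u32);

/// Hypothetical world mapping each entity to its component names.
#[derive(Default)]
pub struct World {
    entities: HashMap<Entity, Vec<&'static str>>,
}

impl World {
    /// Returns the entity's components, spawning the entity at exactly
    /// this id if it does not exist yet.
    pub fn get_or_spawn(&mut self, entity: Entity) -> &mut Vec<&'static str> {
        self.entities.entry(entity).or_default()
    }

    pub fn contains(&self, entity: Entity) -> bool {
        self.entities.contains_key(&entity)
    }

    /// Removes every entity, as a render world might do each frame.
    pub fn clear_entities(&mut self) {
        self.entities.clear();
    }
}
```

Because the id is supplied by the caller, an entity in one world can be mirrored under the same id in another world and then discarded wholesale with `clear_entities`.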

This refactors "spawn" and "insert" code in a way that I think is a massive improvement to legibility and re-usability. It also yields marginal performance wins by reducing some duplicate lookups (less than a percentage point improvement on insertion benchmarks). There is also some potential for future unsafe reduction (by making BatchSpawner and BatchInserter generic). But for now I want to cut down generic usage to a minimum to encourage smaller binaries and faster compiles.

This is currently a draft because it needs more tests (although this code has already had some real-world testing on my custom-shaders branch).

I also fixed the benchmarks (which currently don't compile!) / added new ones to illustrate batching wins.

After these changes, Bevy ECS is basically ready to accommodate the new renderer. I think the biggest missing piece at this point is "sub apps".

# Objective

Enable using exact World lifetimes during read-only access. This is motivated by the new renderer's need to allow read-only world-only queries to outlive the query itself (but still be constrained by the world lifetime).

For example:

115b170d1f/pipelined/bevy_pbr2/src/render/mod.rs (L774)

## Solution

Split out SystemParam state and world lifetimes and pipe those lifetimes up to read-only Query ops (and add into_inner for Res). According to every safety test I've run so far (except one), this is safe (see the temporary safety test commit). Note that changing the mutable variants to the new lifetimes would allow aliased mutable pointers (try doing that to see how it affects the temporary safety tests).
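The difference between borrowing from `&self` and borrowing from the world can be shown with a toy query type. `World`, `Query`, and both accessor methods below are hypothetical and far simpler than the real types; the sketch only demonstrates the lifetime relationship.

```rust
/// Hypothetical world holding some values.
pub struct World {
    pub values: Vec<u32>,
}

/// Hypothetical read-only query borrowing the world for `'w`.
pub struct Query<'w> {
    world: &'w World,
}

impl<'w> Query<'w> {
    pub fn new(world: &'w World) -> Self {
        Self { world }
    }

    /// Result is tied to `&self`: it cannot outlive the query value.
    pub fn first_bound_to_query(&self) -> Option<&u32> {
        self.world.values.first()
    }

    /// Result is tied to the world lifetime `'w`: it may outlive the query.
    pub fn first(&self) -> Option<&'w u32> {
        self.world.values.first()
    }
}

/// Creates a temporary query and returns a reference that outlives it.
pub fn first_value(world: &World) -> Option<&u32> {
    let query = Query::new(world);
    // Swapping in `first_bound_to_query` here would fail to compile,
    // because `query` is dropped at the end of this function.
    query.first()
}
```

This only works for shared access. Handing out `&'w mut` references in the same way would allow two mutable references to the same data to exist at once, which is the aliasing concern raised above.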

The new state lifetime on SystemParam does make `#[derive(SystemParam)]` more cumbersome (the current impl requires PhantomData if you don't use both lifetimes). We can make this better by detecting whether or not a lifetime is used in the derive and adjusting accordingly, but that should probably be done in its own pr.

## Why is this a draft?

The new lifetimes break QuerySet safety in one very specific case (see the query_set system in system_safety_test). We need to solve this before we can use the lifetimes given.

This is due to the fact that QuerySet is just a wrapper over Query, which now relies on world lifetimes instead of `&self` lifetimes to prevent aliasing (but in systems, each Query has its own implied lifetime, not a centralized world lifetime). I believe the fix is to rewrite QuerySet to have its own World lifetime (and own the internal reference). This will complicate the impl a bit, but I think it is doable. I'm curious if anyone else has better ideas.

Personally, I think these new lifetimes need to happen. We've gotta have a way to directly tie read-only World queries to the World lifetime. The new renderer is the first place this has come up, but I doubt it will be the last. Worst case scenario we can come up with a second `WorldLifetimeQuery<Q, F = ()>` parameter to enable these read-only scenarios, but I'd rather not add another type to the type zoo.