This adds support for **Multiple Asset Sources**. You can now register a

named `AssetSource`, which you can load assets from like you normally

would:

```rust

let shader: Handle<Shader> = asset_server.load("custom_source://path/to/shader.wgsl");

```

Notice that `AssetPath` now supports `some_source://` syntax. The source

can now be accessed through the `asset_path.source()` accessor.

Asset source names _are not required_. If one is not specified, the

default asset source will be used:

```rust

let shader: Handle<Shader> = asset_server.load("path/to/shader.wgsl");

```

The behavior of the default asset source has not changed. For example, the

`assets` folder is still the default.

As referenced in #9714

## Why?

**Multiple Asset Sources** enables a number of often-asked-for

scenarios:

* **Loading some assets from other locations on disk**: you could create

a `config` asset source that reads from the OS-default config folder

(not implemented in this PR)

* **Loading some assets from a remote server**: you could register a new

`remote` asset source that reads some assets from a remote http server

(not implemented in this PR)

* **Improved "Binary Embedded" Assets**: we can use this system for

"embedded-in-binary assets", which allows us to replace the old

`load_internal_asset!` approach, which couldn't support asset

processing, didn't support hot-reloading _well_, and didn't make

embedded assets accessible to the `AssetServer` (implemented in this PR)

## Adding New Asset Sources

An `AssetSource` is "just" a collection of `AssetReader`, `AssetWriter`,

and `AssetWatcher` entries. You can configure new asset sources like

this:

```rust

app.register_asset_source(
    "other",
    AssetSource::build()
        .with_reader(|| Box::new(FileAssetReader::new("other")))
);

```

Note that `AssetSource` construction _must_ be repeatable, which is why

a closure is accepted.
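
To illustrate why a closure helps here, the following is a minimal std-only sketch. The `Reader` trait, `DirReader`, and `SourceBuilder` are hypothetical stand-ins, not Bevy's `AssetReader` or `AssetSourceBuilder`. The only point is that a stored factory closure can produce a fresh reader every time the source is built.

```rust
/// Hypothetical stand-in for a reader trait (not Bevy's `AssetReader`).
trait Reader {
    fn root(&self) -> &str;
}

/// Hypothetical reader rooted at a directory name.
struct DirReader {
    root: String,
}

impl Reader for DirReader {
    fn root(&self) -> &str {
        &self.root
    }
}

/// Hypothetical builder that stores a factory closure instead of a reader instance.
#[derive(Default)]
struct SourceBuilder {
    reader: Option<Box<dyn FnMut() -> Box<dyn Reader>>>,
}

impl SourceBuilder {
    /// Registers the closure used to construct a reader on demand.
    fn with_reader(mut self, f: impl FnMut() -> Box<dyn Reader> + 'static) -> Self {
        self.reader = Some(Box::new(f));
        self
    }

    /// Can be called any number of times; each call invokes the closure again.
    fn build_reader(&mut self) -> Option<Box<dyn Reader>> {
        self.reader.as_mut().map(|f| f())
    }
}
```

Calling `build_reader` twice on the same builder yields two independent readers, which would not be possible if the builder owned a single reader value.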

`AssetSourceBuilder` supports `with_reader`, `with_writer`,

`with_watcher`, `with_processed_reader`, `with_processed_writer`, and

`with_processed_watcher`.

Note that the "asset source" system replaces the old "asset providers"

system.

## Processing Multiple Sources

The `AssetProcessor` now supports multiple asset sources! Processed

assets can refer to assets in other sources and everything "just works".

Each `AssetSource` defines an unprocessed and processed `AssetReader` /

`AssetWriter`.

Currently this is all or nothing for a given `AssetSource`. A given

source is either processed or it is not. Later we might want to add

support for "lazy asset processing", where an `AssetSource` (such as a

remote server) can be configured to only process assets that are

directly referenced by local assets (in order to save local disk space

and avoid doing extra work).

## A new `AssetSource`: `embedded`

One of the big features motivating **Multiple Asset Sources** was

improving our "embedded-in-binary" asset loading. To prove out the

**Multiple Asset Sources** implementation, I chose to build a new

`embedded` `AssetSource`, which replaces the old `load_internal_asset!`

system.

The old `load_internal_asset!` approach had a number of issues:

* The `AssetServer` was not aware of (or capable of loading) internal

assets.

* Because internal assets weren't visible to the `AssetServer`, they

could not be processed (or used by assets that are processed). This

would prevent things like "preprocessing shaders that depend on built-in Bevy

shaders", which is something we desperately need to start doing.

* Each "internal asset" needed a UUID to be defined in-code to reference

it. This was very manual and toilsome.

The new `embedded` `AssetSource` enables the following pattern:

```rust

// Called in `crates/bevy_pbr/src/render/mesh.rs`

embedded_asset!(app, "mesh.wgsl");

// later in the app

let shader: Handle<Shader> = asset_server.load("embedded://bevy_pbr/render/mesh.wgsl");

```

Notice that this always treats the crate name as the "root path", and it

trims out the `src` path for brevity. This is generally predictable, but

if you need to debug you can use the new `embedded_path!` macro to get a

`PathBuf` that matches the one used by `embedded_asset`.
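
The path mapping described above can be sketched roughly as follows. This is not the implementation of `embedded_asset!` or `embedded_path!`; `embedded_path_sketch` is a hypothetical helper, and it assumes that the directory directly above `src` is named after the crate.

```rust
use std::path::{Component, Path, PathBuf};

/// Hypothetical helper (not Bevy's `embedded_path!`): derives an embedded
/// asset path from the source file that registers the asset.
/// Assumes the directory directly above `src` is named after the crate.
fn embedded_path_sketch(source_file: &str, asset: &str) -> Option<PathBuf> {
    // Collect the plain path components, skipping roots and prefixes.
    let parts: Vec<&str> = Path::new(source_file)
        .components()
        .filter_map(|c| match c {
            Component::Normal(s) => s.to_str(),
            _ => None,
        })
        .collect();
    // Locate the last `src` directory; the crate name precedes it.
    let src_index = parts.iter().rposition(|p| *p == "src")?;
    let crate_name = *parts.get(src_index.checked_sub(1)?)?;
    // The final component is the source file itself; it must sit below `src`.
    let file_index = parts.len() - 1;
    if src_index >= file_index {
        return None;
    }
    // Keep the directories between `src` and the file name, dropping `src`.
    let mut path = PathBuf::from(crate_name);
    path.extend(&parts[src_index + 1..file_index]);
    path.push(asset);
    Some(path)
}
```

Under these assumptions, a call made from `crates/bevy_pbr/src/render/mesh.rs` with the asset `mesh.wgsl` maps to `bevy_pbr/render/mesh.wgsl`, which matches the `embedded://` path used above.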

You can also reference embedded assets in arbitrary assets, such as WGSL

shaders:

```wgsl

#import "embedded://bevy_pbr/render/mesh.wgsl"

```

This also makes `embedded` assets go through the "normal" asset

lifecycle. They are only loaded when they are actually used!

We are also discussing implicitly converting asset paths to/from shader

modules, so in the future (not in this PR) you might be able to load it

like this:

```wgsl

#import bevy_pbr::render::mesh::Vertex

```

Compare that to the old system!

```rust

pub const MESH_SHADER_HANDLE: Handle<Shader> = Handle::weak_from_u128(3252377289100772450);

load_internal_asset!(app, MESH_SHADER_HANDLE, "mesh.wgsl", Shader::from_wgsl);

// The mesh shader is _only_ accessible via MESH_SHADER_HANDLE and _cannot_ be loaded via the AssetServer.

```

## Hot Reloading `embedded`

You can enable `embedded` hot reloading by enabling the

`embedded_watcher` cargo feature:

```

cargo run --features=embedded_watcher

```

## Improved Hot Reloading Workflow

First: the `filesystem_watcher` cargo feature has been renamed to

`file_watcher` for brevity (and to match the `FileAssetReader` naming

convention).

More importantly, hot asset reloading is no longer configured in-code by

default. If you enable any asset watcher feature (such as `file_watcher`

or `embedded_watcher`), asset watching will be automatically enabled.

This removes the need to _also_ enable hot reloading in your app code.

That means you can replace this:

```rust

app.add_plugins(DefaultPlugins.set(AssetPlugin::default().watch_for_changes()))

```

with this:

```rust

app.add_plugins(DefaultPlugins)

```

If you want to hot reload assets in your app during development, just

run your app like this:

```

cargo run --features=file_watcher

```

This means you can use the same code for development and deployment! To

deploy an app, just don't include the watcher feature:

```

cargo build --release

```

My intent is to move to this approach for pretty much all dev workflows.

In a future PR I would like to replace `AssetMode::ProcessedDev` with a

`runtime-processor` cargo feature. We could then group all common "dev"

cargo features under a single `dev` feature:

```sh

# this would enable file_watcher, embedded_watcher, runtime-processor, and more

cargo run --features=dev

```

## AssetMode

`AssetPlugin::Unprocessed`, `AssetPlugin::Processed`, and

`AssetPlugin::ProcessedDev` have been replaced with an `AssetMode` field

on `AssetPlugin`.

```rust

// before
app.add_plugins(DefaultPlugins.set(AssetPlugin::Processed { /* fields here */ }));

// after
app.add_plugins(DefaultPlugins.set(AssetPlugin { mode: AssetMode::Processed, ..default() }));

```

This aligns `AssetPlugin` with our other struct-like plugins. The old

"source" and "destination" `AssetProvider` fields in the enum variants

have been replaced by the "asset source" system. You no longer need to

configure the AssetPlugin to "point" to custom asset providers.

## AssetServerMode

To improve the implementation of **Multiple Asset Sources**,

`AssetServer` was made aware of whether or not it is using "processed"

or "unprocessed" assets. You can check that like this:

```rust

if asset_server.mode() == AssetServerMode::Processed {

/* do something */

}

```

Note that this refactor should also prepare the way for building "one to

many processed output files", as it makes the server aware of whether it

is loading from processed or unprocessed sources. Meaning we can store

and read processed and unprocessed assets differently!

## AssetPath can now refer to folders

The "file only" restriction has been removed from `AssetPath`. The

`AssetServer::load_folder` API now accepts an `AssetPath` instead of a

`Path`, meaning you can load folders from other asset sources!

## Improved AssetPath Parsing

AssetPath parsing was reworked to support sources, improve error

messages, and to enable parsing with a single pass over the string.

`AssetPath::new` was replaced by `AssetPath::parse` and

`AssetPath::try_parse`.
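
As a rough illustration of the source-aware syntax (not the actual `AssetPath::parse` code), the split between source name and path could look like this. `split_source` is a hypothetical helper, and it performs no validation of the source name or the path.

```rust
/// Hypothetical helper (not Bevy's `AssetPath::parse`): splits an asset path
/// string into an optional source name and the remaining path.
/// No validation is performed on either part.
fn split_source(input: &str) -> (Option<&str>, &str) {
    match input.split_once("://") {
        // `custom_source://path/to/file` names an explicit source.
        Some((source, path)) => (Some(source), path),
        // Without `://`, the default asset source is implied.
        None => (None, input),
    }
}
```

A path without a `://` separator yields `None` for the source, which corresponds to the default asset source.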

## AssetWatcher broken out from AssetReader

`AssetReader` is no longer responsible for constructing `AssetWatcher`.

This has been moved to `AssetSourceBuilder`.

## Duplicate Event Debouncing

Asset V2 already debounced duplicate filesystem events, but this was

_input_ events. Multiple input event types can produce the same _output_

`AssetSourceEvent`. Now that we have `embedded_watcher`, which does

expensive file io on events, it made sense to debounce output events

too, so I added that! This will also benefit the AssetProcessor by

preventing integrity checks for duplicate events (and helps keep the

noise down in trace logs).
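
The idea of debouncing on the output side can be sketched like this. `SourceEvent` is a hypothetical stand-in for `AssetSourceEvent`, and the sketch assumes debouncing means "drop repeats within one batch of events"; the real watcher may use a different strategy (for example, a time window).

```rust
use std::collections::HashSet;

/// Hypothetical stand-in for an output event (not Bevy's `AssetSourceEvent`).
#[derive(Debug, Clone, PartialEq, Eq, Hash)]
enum SourceEvent {
    Added(String),
    Modified(String),
    Removed(String),
}

/// Drops repeated events within one batch while keeping first-seen order.
fn debounce(events: Vec<SourceEvent>) -> Vec<SourceEvent> {
    let mut seen = HashSet::new();
    events
        .into_iter()
        // `insert` returns false when the event was already seen.
        .filter(|event| seen.insert(event.clone()))
        .collect()
}
```

With this approach, several input events that all map to the same "modified" output for one file trigger only a single downstream reload or integrity check.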

## Next Steps

* **Port Built-in Shaders**: Currently the primary (and essentially

only) user of `load_internal_asset` in Bevy's source code is "built-in

shaders". I chose not to do that in this PR for a few reasons:

1. We need to add the ability to pass shader defs in to shaders via meta

files. Some shaders (such as MESH_VIEW_TYPES) need to pass shader def

values in that are defined in code.

2. We need to revisit the current shader module naming system. I think

we _probably_ want to imply modules from source structure (at least by

default). Ideally in a way that can losslessly convert asset paths

to/from shader modules (to enable the asset system to resolve modules

using the asset server).

3. I want to keep this change set minimal / get this merged first.

* **Deprecate `load_internal_asset`**: we can't do that until we do (1)

and (2)

* **Relative Asset Paths**: This PR significantly increases the need for

relative asset paths (which was already pretty high). Currently when

loading dependencies, it is assumed to be an absolute path, which means

if in an `AssetLoader` you call `context.load("some/path/image.png")` it

will assume that is the "default" asset source, _even if the current

asset is in a different asset source_. This will cause breakage for

AssetLoaders that are not designed to add the current source to whatever

paths are being used. AssetLoaders should generally not need to be aware

of the name of their current asset source, or need to think about the

"current asset source" generally. We should build apis that support

relative asset paths and then encourage using relative paths as much as

possible (both via api design and docs). Relative paths are also

important because they will allow developers to move folders around

(even across providers) without reprocessing, provided there is no path

breakage.

# Objective

- When I've tested alternative async executors with bevy a common

problem is that they deadlock when we try to run nested scopes. i.e.

running a multithreaded schedule from inside another multithreaded

schedule. This adds a test to bevy_tasks for that so the issue can be

spotted earlier while developing.
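
For readers unfamiliar with the shape of the problem, here is a sketch of nested scopes using `std::thread::scope` as a stand-in. It does not use `bevy_tasks` or any alternative executor, and `nested_scopes` is a hypothetical helper; it only shows the pattern (a scope opened from inside a task of another scope) that the new test exercises.

```rust
use std::sync::atomic::{AtomicUsize, Ordering};
use std::thread;

/// Runs an outer scope whose tasks each open an inner scope.
/// Returns how many inner tasks ran; returning at all shows no deadlock.
fn nested_scopes(outer: usize, inner: usize) -> usize {
    let count = AtomicUsize::new(0);
    thread::scope(|outer_scope| {
        for _ in 0..outer {
            outer_scope.spawn(|| {
                // A second scope started from within a task of the first one.
                thread::scope(|inner_scope| {
                    for _ in 0..inner {
                        inner_scope.spawn(|| {
                            count.fetch_add(1, Ordering::Relaxed);
                        });
                    }
                });
            });
        }
    });
    count.load(Ordering::Relaxed)
}
```

With OS threads this always completes. An executor with a fixed set of worker threads can instead deadlock if every worker blocks waiting on an inner scope, which is the failure mode the test is meant to catch.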

## Changelog

- add a test for nested scopes.

# Objective

Fixes #9625

## Solution

Adds `async-io` as an optional dependency of `bevy_tasks`. When enabled,

this causes calls to `futures_lite::future::block_on` to be replaced

with calls to `async_io::block_on`.

---

## Changelog

- Added a new `async-io` feature to `bevy_tasks`. When enabled, this

causes `bevy_tasks` to use `async-io`'s implementation of `block_on`

instead of `futures-lite`'s implementation. You should enable this if

you use `async-io` in your application.

# Objective

`single_threaded_task_pool` emitted a warning:

```

warning: use of `default` to create a unit struct

--> crates/bevy_tasks/src/single_threaded_task_pool.rs:22:25

|

22 | Self(PhantomData::default())

| ^^^^^^^^^^^ help: remove this call to `default`

|

= help: for further information visit https://rust-lang.github.io/rust-clippy/master/index.html#default_constructed_unit_structs

= note: `#[warn(clippy::default_constructed_unit_structs)]` on by default

```

## Solution

fix the lint

# Objective

I found it very difficult to understand how bevy tasks work, and I

concluded that the documentation should be improved for beginners like

me.

## Solution

These changes to the documentation were written from my beginner's

perspective after

some extremely helpful explanations by nil on Discord.

I am not familiar enough with rustdoc yet; when looking at the source, I

found the documentation at the very top of `usages.rs` helpful, but I

don't know where they are rendered. They should probably be linked to

from the main `bevy_tasks` README.

---------

Co-authored-by: Alice Cecile <alice.i.cecile@gmail.com>

Co-authored-by: Mike <mike.hsu@gmail.com>

# Objective

- `bevy_tasks` emits warnings under certain conditions

When I run `cargo clippy -p bevy_tasks` the warning doesn't show up,

while if I run it with `cargo clippy -p bevy_asset` the warning shows

up.

## Solution

- Fix the warnings.

## Longer term solution

We should probably fix CI so that those warnings do not slip through.

But that's not the goal of this PR.

# Objective

Fixes #9113

## Solution

disable `multi-threaded` default feature

## Migration Guide

The `multi-threaded` feature in `bevy_ecs` and `bevy_tasks` is no longer

enabled by default. However, this remains a default feature for the

umbrella `bevy` crate. If you depend on `bevy_ecs` or `bevy_tasks`

directly, you should consider enabling this to allow systems to run in

parallel.

CI-capable version of #9086

---------

Co-authored-by: Bevy Auto Releaser <41898282+github-actions[bot]@users.noreply.github.com>

Co-authored-by: François <mockersf@gmail.com>

I created this manually as Github didn't want to run CI for the

workflow-generated PR. I'm guessing we didn't hit this in previous

releases because we used bors.

Co-authored-by: Bevy Auto Releaser <41898282+github-actions[bot]@users.noreply.github.com>

# Objective

Fixes #6689.

## Solution

Add `single-threaded` as an optional non-default feature to `bevy_ecs`

and `bevy_tasks` that:

- disables the `ParallelExecutor` as a default runner

- disables the multi-threaded `TaskPool`

- internally replaces `QueryParIter::for_each` calls with

`Query::for_each`.

Removed the `Mutex` and `Arc` usage in the single-threaded task pool.

## Future Work/TODO

Create type aliases for `Mutex`, `Arc` that change to single-threaded

equivalents where possible.

---

## Changelog

Added: Optional default feature `multi-threaded` that enables

multithreaded parallelism in the engine. Disabling it disables all

multithreading in exchange for higher single threaded performance. Does

nothing on WASM targets.

---------

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

Links in the api docs are nice. I noticed that there were several places

where structs / functions and other things were referenced in the docs,

but weren't linked. I added the links where possible / logical.

---------

Co-authored-by: Alice Cecile <alice.i.cecile@gmail.com>

Co-authored-by: François <mockersf@gmail.com>

# Objective

Fixes #8215 and #8152. When systems panic, it causes the main thread to

panic as well, which clutters the output.

## Solution

Resolves the panic in the multi-threaded scheduler. Also adds an extra

message that tells the user the system that panicked.

Using the example from the issue, here is what the messages now look

like:

```rust

use bevy::prelude::*;

fn main() {

App::new()

.add_plugins(DefaultPlugins)

.add_systems(Update, panicking_system)

.run();

}

fn panicking_system() {

panic!("oooh scary");

}

```

### Before

```

Compiling bevy_test v0.1.0 (E:\Projects\Rust\bevy_test)

Finished dev [unoptimized + debuginfo] target(s) in 2m 58s

Running `target\debug\bevy_test.exe`

2023-03-30T22:19:09.234932Z INFO bevy_diagnostic::system_information_diagnostics_plugin::internal: SystemInfo { os: "Windows 10 Pro", kernel: "19044", cpu: "AMD Ryzen 5 2600 Six-Core Processor", core_count: "6", memory: "15.9 GiB" }

thread 'Compute Task Pool (5)' panicked at 'oooh scary', src\main.rs:11:5

note: run with `RUST_BACKTRACE=1` environment variable to display a backtrace

thread 'Compute Task Pool (5)' panicked at 'A system has panicked so the executor cannot continue.: RecvError', E:\Projects\Rust\bevy\crates\bevy_ecs\src\schedule\executor\multi_threaded.rs:194:60

thread 'main' panicked at 'called `Option::unwrap()` on a `None` value', E:\Projects\Rust\bevy\crates\bevy_tasks\src\task_pool.rs:376:49

error: process didn't exit successfully: `target\debug\bevy_test.exe` (exit code: 101)

```

### After

```

Compiling bevy_test v0.1.0 (E:\Projects\Rust\bevy_test)

Finished dev [unoptimized + debuginfo] target(s) in 2.39s

Running `target\debug\bevy_test.exe`

2023-03-30T22:11:24.748513Z INFO bevy_diagnostic::system_information_diagnostics_plugin::internal: SystemInfo { os: "Windows 10 Pro", kernel: "19044", cpu: "AMD Ryzen 5 2600 Six-Core Processor", core_count: "6", memory: "15.9 GiB" }

thread 'Compute Task Pool (5)' panicked at 'oooh scary', src\main.rs:11:5

note: run with `RUST_BACKTRACE=1` environment variable to display a backtrace

Encountered a panic in system `bevy_test::panicking_system`!

Encountered a panic in system `bevy_app::main_schedule::Main::run_main`!

error: process didn't exit successfully: `target\debug\bevy_test.exe` (exit code: 101)

```
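
The reporting shown in the "After" output can be approximated with `std::panic::catch_unwind`. This is only a sketch and not the scheduler's actual code: `run_reporting_panics` is a hypothetical helper, and unlike the real executor (where the process still exits with a panic) it returns the message as an `Err` so that it is easy to inspect.

```rust
use std::panic::{catch_unwind, AssertUnwindSafe};

/// Hypothetical helper (not Bevy's executor code): runs `system`, and if it
/// panics, returns a message naming the system instead of unwinding further.
fn run_reporting_panics(name: &str, system: impl FnOnce()) -> Result<(), String> {
    // `AssertUnwindSafe` is used because this sketch does not reuse any
    // state that the panicking closure might have left half-modified.
    catch_unwind(AssertUnwindSafe(system))
        .map_err(|_| format!("Encountered a panic in system `{name}`!"))
}
```

The original panic message is still printed by the default panic hook; the helper only adds the name of the system that failed.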

---------

Co-authored-by: Alice Cecile <alice.i.cecile@gmail.com>

Co-authored-by: François <mockersf@gmail.com>

Fixes issue mentioned in PR #8285.

_Note: By mistake, this is currently dependent on #8285_

# Objective

Ensure consistency in the spelling of the documentation.

Exceptions:

`crates/bevy_mikktspace/src/generated.rs` - Has not been changed from

licence to license as it is part of a licensing agreement.

Maybe for further consistency,

https://github.com/bevyengine/bevy-website should also be given a look.

## Solution

### Changed the spelling of the current words (UK/CN/AU -> US):

cancelled -> canceled (Breaking API changes in #8285)

behaviour -> behavior (Breaking API changes in #8285)

neighbour -> neighbor

grey -> gray

recognise -> recognize

centre -> center

metres -> meters

colour -> color

### ~~Update [`engine_style_guide.md`]~~ Moved to #8324

---

## Changelog

Changed UK spellings in documentation to US

## Migration Guide

Non-breaking changes*

\* If merged after #8285

# Objective

The clippy lint `type_complexity` is known not to play well with bevy.

It frequently triggers when writing complex queries, and taking the

lint's advice of using a type alias almost always just obfuscates the

code with no benefit. Because of this, this lint is currently ignored in

CI, but unfortunately it still shows up when viewing bevy code in an

IDE.

As someone who's made a fair amount of pull requests to this repo, I

will say that this issue has been a consistent thorn in my side. Since

bevy code is filled with spurious, ignorable warnings, it can be very

difficult to spot the *real* warnings that must be fixed -- most of the

time I just ignore all warnings, only to later find out that one of them

was real after I'm done when CI runs.

## Solution

Suppress this lint in all bevy crates. This was previously attempted in

#7050, but the review process ended up making it more complicated than

it needs to be and landed on a subpar solution.

The discussion in https://github.com/rust-lang/rust-clippy/pull/10571

explores some better long-term solutions to this problem. Since there is

no timeline on when these solutions may land, we should resolve this

issue in the meantime by locally suppressing these lints.

### Unresolved issues

Currently, these lints are not suppressed in our examples, since that

would require suppressing the lint in every single source file. They are

still ignored in CI.

…or's ticker for one thread.

# Objective

- Fix debug_asset_server hang.

## Solution

- Reuse the thread_local executor for the `MainThreadExecutor` resource, so there will be only one `ThreadExecutor` for the main thread.

- If multiple `ThreadTicker`s come from the same executor, they conflict with each other, so only one of them is ticked.

# Objective

- While working on scope recently, I ran into a missing invariant for the transmutes in scope. The references passed into Scope are active for the rest of the scope function, but rust doesn't know this so it allows using the owned `spawned` and `scope` after `f` returns.

## Solution

- Update the safety comment

- Shadow the owned values so they can't be used.

Huge thanks to @maniwani, @devil-ira, @hymm, @cart, @superdump and @jakobhellermann for the help with this PR.

# Objective

- Followup #6587.

- Minimal integration for the Stageless Scheduling RFC: https://github.com/bevyengine/rfcs/pull/45

## Solution

- [x] Remove old scheduling module

- [x] Migrate new methods to no longer use extension methods

- [x] Fix compiler errors

- [x] Fix benchmarks

- [x] Fix examples

- [x] Fix docs

- [x] Fix tests

## Changelog

### Added

- a large number of methods on `App` to work with schedules ergonomically

- the `CoreSchedule` enum

- `App::add_extract_system` via the `RenderingAppExtension` trait extension method

- the private `prepare_view_uniforms` system now has a public system set for scheduling purposes, called `ViewSet::PrepareUniforms`

### Removed

- stages, and all code that mentions stages

- states have been dramatically simplified, and no longer use a stack

- `RunCriteriaLabel`

- `AsSystemLabel` trait

- `on_hierarchy_reports_enabled` run criteria (now just uses an ad hoc resource checking run condition)

- systems in `RenderSet/Stage::Extract` no longer warn when they do not read data from the main world

- `transform_propagate_system_set`: this was a nonstandard pattern that didn't actually provide enough control. The systems are already `pub`: the docs have been updated to ensure that the third-party usage is clear.

### Changed

- `System::default_labels` is now `System::default_system_sets`.

- `App::add_default_labels` is now `App::add_default_sets`

- `CoreStage` and `StartupStage` enums are now `CoreSet` and `StartupSet`

- `App::add_system_set` was renamed to `App::add_systems`

- The `StartupSchedule` label is now defined as part of the `CoreSchedule` enum

- `.label(SystemLabel)` is now referred to as `.in_set(SystemSet)`

- `SystemLabel` trait was replaced by `SystemSet`

- `SystemTypeIdLabel<T>` was replaced by `SystemSetType<T>`

- The `ReportHierarchyIssue` resource now has a public constructor (`new`), and implements `PartialEq`

- Fixed time steps now use a schedule (`CoreSchedule::FixedTimeStep`) rather than a run criteria.

- Adding rendering extraction systems now panics rather than silently failing if no subapp with the `RenderApp` label is found.

- the `calculate_bounds` system, with the `CalculateBounds` label, is now in `CoreSet::Update`, rather than in `CoreSet::PostUpdate` before commands are applied.

- `SceneSpawnerSystem` now runs under `CoreSet::Update`, rather than `CoreStage::PreUpdate.at_end()`.

- `bevy_pbr::add_clusters` is no longer an exclusive system

- the top level `bevy_ecs::schedule` module was replaced with `bevy_ecs::scheduling`

- `tick_global_task_pools_on_main_thread` is no longer run as an exclusive system. Instead, it has been replaced by `tick_global_task_pools`, which uses a `NonSend` resource to force running on the main thread.

## Migration Guide

- Calls to `.label(MyLabel)` should be replaced with `.in_set(MySet)`

- Stages have been removed. Replace these with system sets, and then add command flushes using the `apply_system_buffers` exclusive system where needed.

- The `CoreStage`, `StartupStage`, `RenderStage` and `AssetStage` enums have been replaced with `CoreSet`, `StartupSet`, `RenderSet` and `AssetSet`. The same scheduling guarantees have been preserved.

- Systems are no longer added to `CoreSet::Update` by default. Add systems manually if this behavior is needed, although you should consider adding your game logic systems to `CoreSchedule::FixedTimestep` instead for more reliable framerate-independent behavior.

- Similarly, startup systems are no longer part of `StartupSet::Startup` by default. In most cases, this won't matter to you.

- For example, `add_system_to_stage(CoreStage::PostUpdate, my_system)` should be replaced with

- `add_system(my_system.in_set(CoreSet::PostUpdate))`

- When testing systems or otherwise running them in a headless fashion, simply construct and run a schedule using `Schedule::new()` and `World::run_schedule` rather than constructing stages

- Run criteria have been renamed to run conditions. These can now be combined with each other and with states.

- Looping run criteria and state stacks have been removed. Use an exclusive system that runs a schedule if you need this level of control over system control flow.

- For app-level control flow over which schedules get run when (such as for rollback networking), create your own schedule and insert it under the `CoreSchedule::Outer` label.

- Fixed timesteps are now evaluated in a schedule, rather than controlled via run criteria. The `run_fixed_timestep` system runs this schedule between `CoreSet::First` and `CoreSet::PreUpdate` by default.

- Command flush points introduced by `AssetStage` have been removed. If you were relying on these, add them back manually.

- Adding extract systems is now typically done directly on the main app. Make sure the `RenderingAppExtension` trait is in scope, then call `app.add_extract_system(my_system)`.

- the `calculate_bounds` system, with the `CalculateBounds` label, is now in `CoreSet::Update`, rather than in `CoreSet::PostUpdate` before commands are applied. You may need to order your movement systems to occur before this system in order to avoid system order ambiguities in culling behavior.

- the `RenderLabel` `AppLabel` was renamed to `RenderApp` for clarity

- `App::add_state` now takes 0 arguments: the starting state is set based on the `Default` impl.

- Instead of creating `SystemSet` containers for systems that run in stages, simply use `.on_enter::<State::Variant>()` or its `on_exit` or `on_update` siblings.

- `SystemLabel` derives should be replaced with `SystemSet`. You will also need to add the `Debug`, `PartialEq`, `Eq`, and `Hash` traits to satisfy the new trait bounds.

- `with_run_criteria` has been renamed to `run_if`. Run criteria have been renamed to run conditions for clarity, and should now simply return a bool.

- States have been dramatically simplified: there is no longer a "state stack". To queue a transition to the next state, call `NextState::set`

## TODO

- [x] remove dead methods on App and World

- [x] add `App::add_system_to_schedule` and `App::add_systems_to_schedule`

- [x] avoid adding the default system set at inappropriate times

- [x] remove any accidental cycles in the default plugins schedule

- [x] migrate benchmarks

- [x] expose explicit labels for the built-in command flush points

- [x] migrate engine code

- [x] remove all mentions of stages from the docs

- [x] verify docs for States

- [x] fix uses of exclusive systems that use .end / .at_start / .before_commands

- [x] migrate RenderStage and AssetStage

- [x] migrate examples

- [x] ensure that transform propagation is exported in a sufficiently public way (the systems are already pub)

- [x] ensure that on_enter schedules are run at least once before the main app

- [x] re-enable opt-in to execution order ambiguities

- [x] revert change to `update_bounds` to ensure it runs in `PostUpdate`

- [x] test all examples

- [x] unbreak directional lights

- [x] unbreak shadows (see 3d_scene, 3d_shape, lighting, transparency_3d examples)

- [x] game menu example shows loading screen and menu simultaneously

- [x] display settings menu is a blank screen

- [x] `without_winit` example panics

- [x] ensure all tests pass

- [x] SubApp doc test fails

- [x] runs_spawn_local tasks fails

- [x] [Fix panic_when_hierachy_cycle test hanging](https://github.com/alice-i-cecile/bevy/pull/120)

## Points of Difficulty and Controversy

**Reviewers, please give feedback on these and look closely**

1. Default sets, from the RFC, have been removed. These added a tremendous amount of implicit complexity and result in hard to debug scheduling errors. They're going to be tackled in the form of "base sets" by @cart in a followup.

2. The outer schedule controls which schedule is run when `App::update` is called.

3. I implemented `Label` for `Box<dyn Label>` for our label types. This enables us to store schedule labels in concrete form, and then later run them. I ran into the same set of problems when working with one-shot systems. We've previously investigated this pattern in depth, and it does not appear to lead to extra indirection with nested boxes.

4. `SubApp::update` simply runs the default schedule once. This sucks, but this whole API is incomplete and this was the minimal changeset.

5. `time_system` and `tick_global_task_pools_on_main_thread` no longer use exclusive systems to attempt to force scheduling order

6. Implementation strategy for fixed timesteps

7. `AssetStage` was migrated to `AssetSet` without reintroducing command flush points. These did not appear to be used, and it's nice to remove these bottlenecks.

8. Migration of `bevy_render/lib.rs` and pipelined rendering. The logic here is unusually tricky, as we have complex scheduling requirements.

## Future Work (ideally before 0.10)

- Rename schedule_v3 module to schedule or scheduling

- Add a derive macro to states, and likely an `EnumIter` trait of some form

- Figure out what exactly to do with the "systems added should basically work by default" problem

- Improve ergonomics for working with fixed timesteps and states

- Polish FixedTime API to match Time

- Rebase and merge #7415

- Resolve all internal ambiguities (blocked on better tools, especially #7442)

- Add "base sets" to replace the removed default sets.

# Objective

- Currently exclusive systems and applying buffers run outside of the multithreaded executor and just call the functions on the thread the schedule is running on. Stageless changes this to run these using tasks in a scope. Specifically, it uses `spawn_on_scope` to run these. For the render thread this is incorrect, as calling `spawn_on_scope` there runs tasks on the main thread. It should instead run these on the render thread and only run nonsend systems on the main thread.

## Solution

- Add another executor to `Scope` for spawning tasks on the scope. `spawn_on_scope` now always runs the task on the thread the scope is running on. `spawn_on_external` spawns onto the external executor that is optionally passed in. If `None` is passed, `spawn_on_external` will spawn onto the scope executor.

- Eventually this new machinery will be able to be removed. This will happen once a fix for removing NonSend resources from the world lands. So this is a temporary fix to support stageless.

---

## Changelog

- add a spawn_on_external method to allow spawning on the scope's thread or an external thread

## Migration Guide

> No migration guide. The main thread executor was introduced in pipelined rendering which was merged for 0.10. spawn_on_scope now behaves the same way as on 0.9.

# Objective

I found several words in code and docs are incorrect. This should be fixed.

## Solution

- Fix several minor typos

Co-authored-by: Chris Ohk <utilforever@gmail.com>

# Objective

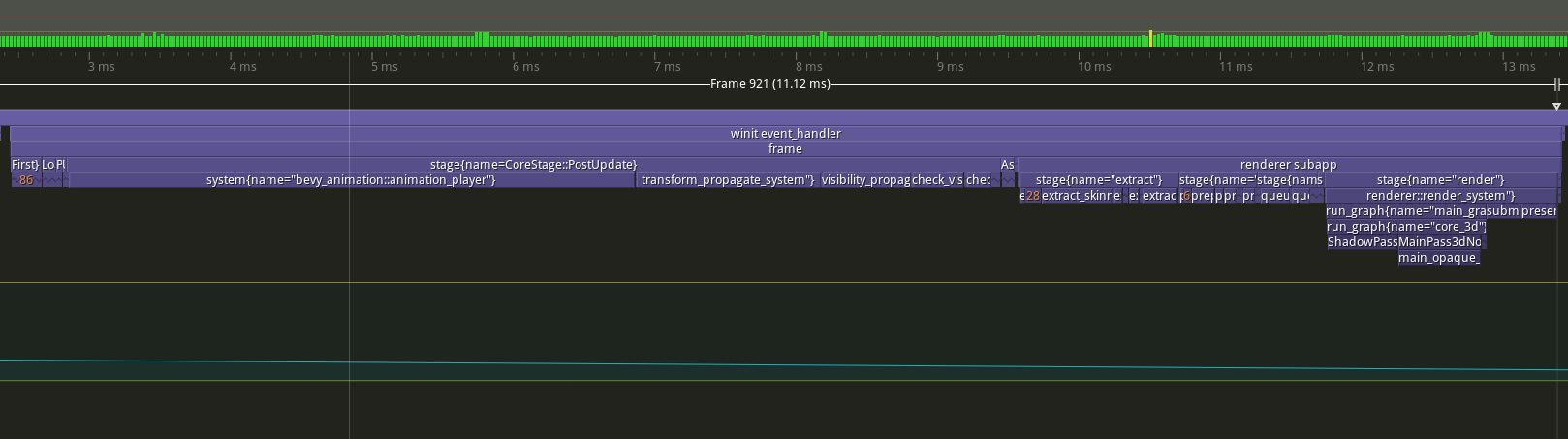

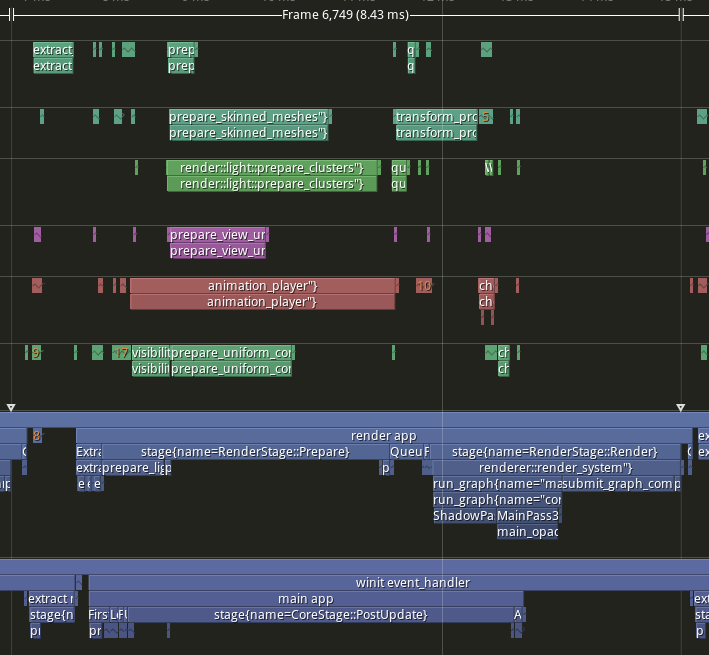

- Implement pipelined rendering

- Fixes #5082

- Fixes #4718

## User Facing Description

Bevy now implements pipelined rendering! Pipelined rendering allows the app logic and rendering logic to run on different threads, leading to large gains in performance.

*tracy capture of many_foxes example*

To use pipelined rendering, you just need to add the `PipelinedRenderingPlugin`. If you're using `DefaultPlugins` then it will automatically be added for you on all platforms except wasm. Bevy does not currently support multithreading on wasm which is needed for this feature to work. If you aren't using `DefaultPlugins` you can add the plugin manually.

```rust
use bevy::prelude::*;
use bevy::render::pipelined_rendering::PipelinedRenderingPlugin;

fn main() {
    App::new()
        // whatever other plugins you need
        .add_plugin(RenderPlugin)
        // needs to be added after RenderPlugin
        .add_plugin(PipelinedRenderingPlugin)
        .run();
}
```

If for some reason pipelined rendering needs to be removed, you can disable the plugin the normal way.

```rust
use bevy::prelude::*;
use bevy::render::pipelined_rendering::PipelinedRenderingPlugin;

fn main() {
    App::new()
        // `disable` removes the plugin from the default plugin group
        .add_plugins(DefaultPlugins.build().disable::<PipelinedRenderingPlugin>())
        .run();
}
```

### A setup function was added to plugins

An optional plugin lifecycle function was added to the `Plugin` trait. This function is called after all plugins have been built, but before the app runner is called. This allows for some final setup to be done. In the case of pipelined rendering, the function removes the sub app from the main app and sends it to the render thread.

```rust
struct MyPlugin;

impl Plugin for MyPlugin {
    fn build(&self, app: &mut App) {}

    // optional function
    fn setup(&self, app: &mut App) {
        // do some final setup before runner is called
    }
}
```

### A Stage for Frame Pacing

In the `RenderExtractApp` there is a stage labelled `BeforeIoAfterRenderStart` that systems can be added to. The specific use case for this stage is for a frame pacing system that can delay the start of main app processing in render bound apps to reduce input latency i.e. "frame pacing". This is not currently built into bevy, but exists as `bevy`

```text

|-------------------------------------------------------------------|

| | BeforeIoAfterRenderStart | winit events | main schedule |

| extract |---------------------------------------------------------|

| | extract commands | rendering schedule |

|-------------------------------------------------------------------|

```

### Small API additions

* `Schedule::remove_stage`

* `App::insert_sub_app`

* `App::remove_sub_app`

* `TaskPool::scope_with_executor`

## Problems and Solutions

### Moving render app to another thread

Most of the hard bits for this were done with the render redo. This PR just sends the render app back and forth through channels which seems to work ok. I originally experimented with using a scope to run the render task. It was cuter, but that approach didn't allow render to start before i/o processing. So I switched to using channels. There is much complexity in the coordination that needs to be done, but it's worth it. By moving rendering during i/o processing the frame times should be much more consistent in render bound apps. See https://github.com/bevyengine/bevy/issues/4691.
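The back-and-forth hand-off described above can be sketched with two standard-library channels. This is only an illustration under stated assumptions: `RenderApp` and `run_pipelined` are hypothetical names, and the real implementation moves a Bevy sub app rather than a counter.

```rust
use std::sync::mpsc;
use std::thread;

/// Hypothetical stand-in for the render sub app; not a Bevy type.
struct RenderApp {
    frames_rendered: u32,
}

/// Hands the render app to a render thread and takes it back once per frame.
/// Returns the number of frames the render thread processed.
fn run_pipelined(frames: u32) -> u32 {
    let (to_render_tx, to_render_rx) = mpsc::channel::<RenderApp>();
    let (to_main_tx, to_main_rx) = mpsc::channel::<RenderApp>();

    let render_thread = thread::spawn(move || {
        // The loop ends once the main thread drops its sender.
        while let Ok(mut app) = to_render_rx.recv() {
            app.frames_rendered += 1; // stands in for running the render schedule
            if to_main_tx.send(app).is_err() {
                break;
            }
        }
    });

    let mut app = RenderApp { frames_rendered: 0 };
    for _ in 0..frames {
        // Extraction would happen here, while the main thread owns the app.
        to_render_tx.send(app).expect("render thread is alive");
        // Main-world work for the next frame would overlap with rendering here.
        app = to_main_rx.recv().expect("render thread is alive");
    }

    drop(to_render_tx);
    render_thread.join().expect("render thread panicked");
    app.frames_rendered
}
```

Calling `run_pipelined(3)` moves the app across threads three times in each direction. Ownership transfer through the channel is what keeps only one thread touching the app at a time.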

### Unsoundness with Sending World with NonSend resources

Dropping !Send things on threads other than the thread they were spawned on is considered unsound. The render world doesn't have any nonsend resources. So if we tell the users to "pretty please don't spawn nonsend resource on the render world", we can avoid this problem.

More seriously there is this https://github.com/bevyengine/bevy/pull/6534 pr, which patches the unsoundness by aborting the app if a nonsend resource is dropped on the wrong thread. ~~That PR should probably be merged before this one.~~ For a longer term solution we have this discussion going https://github.com/bevyengine/bevy/discussions/6552.

### NonSend Systems in render world

The render world doesn't have any !Send resources, but it does have a non send system. While Window is Send, winit does have some APIs that can only be accessed on the main thread. `prepare_windows` in the render schedule thus needs to be scheduled on the main thread. Currently we run nonsend systems by running them on the thread that `TaskPool::scope` runs on. When we move render to another thread this no longer works.

To fix this, a new `scope_with_executor` method was added that takes an optional `ThreadExecutor` that can only be ticked on the thread it was initialized on. The render world then holds a `MainThreadExecutor` resource, which can be passed to the scope in the parallel executor and is used to spawn its non send systems.

### Scope executors between render and main should not share tasks

The render world and the app world share the `ComputeTaskPool`. Because `scope` has executors for the `ComputeTaskPool`, a system from the main world could run on the render thread or a render system could run on the main thread. This can cause performance problems because it can delay a stage from finishing. See https://github.com/bevyengine/bevy/pull/6503#issuecomment-1309791442 for more details.

To avoid this problem, `TaskPool::scope` has been changed to not tick the ComputeTaskPool when it's used by the parallel executor. In the future when we move closer to the 1 thread to 1 logical core model we may want to overprovide threads, because the render and main app threads don't do much when executing the schedule.

## Performance

My machine is Windows 11, AMD Ryzen 5600x, RX 6600

### Examples

#### This PR with pipelining vs Main

> Note that these were run on an older version of main and the performance profile has probably changed due to optimizations

Seeing a perf gain from 29% on many lights to 7% on many sprites.

| tracy frame time | percent mean | percent median | percent sigma | Diff mean | Diff median | Diff sigma | Main mean | Main median | Main sigma | PR mean | PR median | PR sigma |
| -- | -- | -- | -- | -- | -- | -- | -- | -- | -- | -- | -- | -- |
| many foxes | 27.01% | 27.34% | -47.09% | 1.58 | 1.55 | -1.78 | 5.85 | 5.67 | 3.78 | 4.27 | 4.12 | 5.56 |
| many lights | 29.35% | 29.94% | -10.84% | 3.02 | 3.03 | -0.57 | 10.29 | 10.12 | 5.26 | 7.27 | 7.09 | 5.83 |
| many animated sprites | 13.97% | 15.69% | 14.20% | 3.79 | 4.17 | 1.41 | 27.12 | 26.57 | 9.93 | 23.33 | 22.4 | 8.52 |
| 3d scene | 25.79% | 26.78% | 7.46% | 0.49 | 0.49 | 0.15 | 1.9 | 1.83 | 2.01 | 1.41 | 1.34 | 1.86 |
| many cubes | 11.97% | 11.28% | 14.51% | 1.93 | 1.78 | 1.31 | 16.13 | 15.78 | 9.03 | 14.2 | 14 | 7.72 |
| many sprites | 7.14% | 9.42% | -85.42% | 1.72 | 2.23 | -6.15 | 24.09 | 23.68 | 7.2 | 22.37 | 21.45 | 13.35 |

#### This PR with pipelining disabled vs Main

Mostly regressions here. I don't think this should be a problem, as users that are disabling pipelined rendering are probably running single threaded and not using the parallel executor. The regression is probably mostly due to the switch to use `async_executor::run` instead of `try_tick` and also having one less thread to run systems on. I'll do a writeup on why switching to `run` causes regressions, so we can try to eventually fix it. Using `try_tick` causes issues when pipelined rendering is enabled, as seen [here](https://github.com/bevyengine/bevy/pull/6503#issuecomment-1380803518).

| tracy frame time | percent mean | percent median | percent sigma | Diff mean | Diff median | Diff sigma | Main mean | Main median | Main sigma | PR no pipelining mean | PR no pipelining median | PR no pipelining sigma |
| -- | -- | -- | -- | -- | -- | -- | -- | -- | -- | -- | -- | -- |
| many foxes | -3.72% | -4.42% | -1.07% | -0.21 | -0.24 | -0.04 | 5.64 | 5.43 | 3.74 | 5.85 | 5.67 | 3.78 |
| many lights | 0.29% | -0.30% | 4.75% | 0.03 | -0.03 | 0.25 | 10.29 | 10.12 | 5.26 | 10.26 | 10.15 | 5.01 |
| many animated sprites | 0.22% | 1.81% | -2.72% | 0.06 | 0.48 | -0.27 | 27.12 | 26.57 | 9.93 | 27.06 | 26.09 | 10.2 |
| 3d scene | -15.79% | -14.75% | -31.34% | -0.3 | -0.27 | -0.63 | 1.9 | 1.83 | 2.01 | 2.2 | 2.1 | 2.64 |
| many cubes | -2.85% | -3.30% | 0.00% | -0.46 | -0.52 | 0 | 16.13 | 15.78 | 9.03 | 16.59 | 16.3 | 9.03 |
| many sprites | 2.49% | 2.41% | 0.69% | 0.6 | 0.57 | 0.05 | 24.09 | 23.68 | 7.2 | 23.49 | 23.11 | 7.15 |

### Benchmarks

Mostly the same, except empty_systems has gotten a touch slower. The maybe-pipelining+1 column has the compute task pool with one extra thread over the default. This is because pipelining loses one thread over main to execute systems on, since the main thread no longer runs normal systems.

<details>

<summary>Click Me</summary>

```text

group main maybe-pipelining+1

----- ------------------------- ------------------

busy_systems/01x_entities_03_systems 1.07 30.7±1.32µs ? ?/sec 1.00 28.6±1.35µs ? ?/sec

busy_systems/01x_entities_06_systems 1.10 52.1±1.10µs ? ?/sec 1.00 47.2±1.08µs ? ?/sec

busy_systems/01x_entities_09_systems 1.00 74.6±1.36µs ? ?/sec 1.00 75.0±1.93µs ? ?/sec

busy_systems/01x_entities_12_systems 1.03 100.6±6.68µs ? ?/sec 1.00 98.0±1.46µs ? ?/sec

busy_systems/01x_entities_15_systems 1.11 128.5±3.53µs ? ?/sec 1.00 115.5±1.02µs ? ?/sec

busy_systems/02x_entities_03_systems 1.16 50.4±2.56µs ? ?/sec 1.00 43.5±3.00µs ? ?/sec

busy_systems/02x_entities_06_systems 1.00 87.1±1.27µs ? ?/sec 1.05 91.5±7.15µs ? ?/sec

busy_systems/02x_entities_09_systems 1.04 139.9±6.37µs ? ?/sec 1.00 134.0±1.06µs ? ?/sec

busy_systems/02x_entities_12_systems 1.05 179.2±3.47µs ? ?/sec 1.00 170.1±3.17µs ? ?/sec

busy_systems/02x_entities_15_systems 1.01 219.6±3.75µs ? ?/sec 1.00 218.1±2.55µs ? ?/sec

busy_systems/03x_entities_03_systems 1.10 70.6±2.33µs ? ?/sec 1.00 64.3±0.69µs ? ?/sec

busy_systems/03x_entities_06_systems 1.02 130.2±3.11µs ? ?/sec 1.00 128.0±1.34µs ? ?/sec

busy_systems/03x_entities_09_systems 1.00 195.0±10.11µs ? ?/sec 1.00 194.8±1.41µs ? ?/sec

busy_systems/03x_entities_12_systems 1.01 261.7±4.05µs ? ?/sec 1.00 259.8±4.11µs ? ?/sec

busy_systems/03x_entities_15_systems 1.00 318.0±3.04µs ? ?/sec 1.06 338.3±20.25µs ? ?/sec

busy_systems/04x_entities_03_systems 1.00 82.9±0.63µs ? ?/sec 1.02 84.3±0.63µs ? ?/sec

busy_systems/04x_entities_06_systems 1.01 181.7±3.65µs ? ?/sec 1.00 179.8±1.76µs ? ?/sec

busy_systems/04x_entities_09_systems 1.04 265.0±4.68µs ? ?/sec 1.00 255.3±1.98µs ? ?/sec

busy_systems/04x_entities_12_systems 1.00 335.9±3.00µs ? ?/sec 1.05 352.6±15.84µs ? ?/sec

busy_systems/04x_entities_15_systems 1.00 418.6±10.26µs ? ?/sec 1.08 450.2±39.58µs ? ?/sec

busy_systems/05x_entities_03_systems 1.07 114.3±0.95µs ? ?/sec 1.00 106.9±1.52µs ? ?/sec

busy_systems/05x_entities_06_systems 1.08 229.8±2.90µs ? ?/sec 1.00 212.3±4.18µs ? ?/sec

busy_systems/05x_entities_09_systems 1.03 329.3±1.99µs ? ?/sec 1.00 319.2±2.43µs ? ?/sec

busy_systems/05x_entities_12_systems 1.06 454.7±6.77µs ? ?/sec 1.00 430.1±3.58µs ? ?/sec

busy_systems/05x_entities_15_systems 1.03 554.6±6.15µs ? ?/sec 1.00 538.4±23.87µs ? ?/sec

contrived/01x_entities_03_systems 1.00 14.0±0.15µs ? ?/sec 1.08 15.1±0.21µs ? ?/sec

contrived/01x_entities_06_systems 1.04 28.5±0.37µs ? ?/sec 1.00 27.4±0.44µs ? ?/sec

contrived/01x_entities_09_systems 1.00 41.5±4.38µs ? ?/sec 1.02 42.2±2.24µs ? ?/sec

contrived/01x_entities_12_systems 1.06 55.9±1.49µs ? ?/sec 1.00 52.6±1.36µs ? ?/sec

contrived/01x_entities_15_systems 1.02 68.0±2.00µs ? ?/sec 1.00 66.5±0.78µs ? ?/sec

contrived/02x_entities_03_systems 1.03 25.2±0.38µs ? ?/sec 1.00 24.6±0.52µs ? ?/sec

contrived/02x_entities_06_systems 1.00 46.3±0.49µs ? ?/sec 1.04 48.1±4.13µs ? ?/sec

contrived/02x_entities_09_systems 1.02 70.4±0.99µs ? ?/sec 1.00 68.8±1.04µs ? ?/sec

contrived/02x_entities_12_systems 1.06 96.8±1.49µs ? ?/sec 1.00 91.5±0.93µs ? ?/sec

contrived/02x_entities_15_systems 1.02 116.2±0.95µs ? ?/sec 1.00 114.2±1.42µs ? ?/sec

contrived/03x_entities_03_systems 1.00 33.2±0.38µs ? ?/sec 1.01 33.6±0.45µs ? ?/sec

contrived/03x_entities_06_systems 1.00 62.4±0.73µs ? ?/sec 1.01 63.3±1.05µs ? ?/sec

contrived/03x_entities_09_systems 1.02 96.4±0.85µs ? ?/sec 1.00 94.8±3.02µs ? ?/sec

contrived/03x_entities_12_systems 1.01 126.3±4.67µs ? ?/sec 1.00 125.6±2.27µs ? ?/sec

contrived/03x_entities_15_systems 1.03 160.2±9.37µs ? ?/sec 1.00 156.0±1.53µs ? ?/sec

contrived/04x_entities_03_systems 1.02 41.4±3.39µs ? ?/sec 1.00 40.5±0.52µs ? ?/sec

contrived/04x_entities_06_systems 1.00 78.9±1.61µs ? ?/sec 1.02 80.3±1.06µs ? ?/sec

contrived/04x_entities_09_systems 1.02 121.8±3.97µs ? ?/sec 1.00 119.2±1.46µs ? ?/sec

contrived/04x_entities_12_systems 1.00 157.8±1.48µs ? ?/sec 1.01 160.1±1.72µs ? ?/sec

contrived/04x_entities_15_systems 1.00 197.9±1.47µs ? ?/sec 1.08 214.2±34.61µs ? ?/sec

contrived/05x_entities_03_systems 1.00 49.1±0.33µs ? ?/sec 1.01 49.7±0.75µs ? ?/sec

contrived/05x_entities_06_systems 1.00 95.0±0.93µs ? ?/sec 1.00 94.6±0.94µs ? ?/sec

contrived/05x_entities_09_systems 1.01 143.2±1.68µs ? ?/sec 1.00 142.2±2.00µs ? ?/sec

contrived/05x_entities_12_systems 1.00 191.8±2.03µs ? ?/sec 1.01 192.7±7.88µs ? ?/sec

contrived/05x_entities_15_systems 1.02 239.7±3.71µs ? ?/sec 1.00 235.8±4.11µs ? ?/sec

empty_systems/000_systems 1.01 47.8±0.67ns ? ?/sec 1.00 47.5±2.02ns ? ?/sec

empty_systems/001_systems 1.00 1743.2±126.14ns ? ?/sec 1.01 1761.1±70.10ns ? ?/sec

empty_systems/002_systems 1.01 2.2±0.04µs ? ?/sec 1.00 2.2±0.02µs ? ?/sec

empty_systems/003_systems 1.02 2.7±0.09µs ? ?/sec 1.00 2.7±0.16µs ? ?/sec

empty_systems/004_systems 1.00 3.1±0.11µs ? ?/sec 1.00 3.1±0.24µs ? ?/sec

empty_systems/005_systems 1.00 3.5±0.05µs ? ?/sec 1.11 3.9±0.70µs ? ?/sec

empty_systems/010_systems 1.00 5.5±0.12µs ? ?/sec 1.03 5.7±0.17µs ? ?/sec

empty_systems/015_systems 1.00 7.9±0.19µs ? ?/sec 1.06 8.4±0.16µs ? ?/sec

empty_systems/020_systems 1.00 10.4±1.25µs ? ?/sec 1.02 10.6±0.18µs ? ?/sec

empty_systems/025_systems 1.00 12.4±0.39µs ? ?/sec 1.14 14.1±1.07µs ? ?/sec

empty_systems/030_systems 1.00 15.1±0.39µs ? ?/sec 1.05 15.8±0.62µs ? ?/sec

empty_systems/035_systems 1.00 16.9±0.47µs ? ?/sec 1.07 18.0±0.37µs ? ?/sec

empty_systems/040_systems 1.00 19.3±0.41µs ? ?/sec 1.05 20.3±0.39µs ? ?/sec

empty_systems/045_systems 1.00 22.4±1.67µs ? ?/sec 1.02 22.9±0.51µs ? ?/sec

empty_systems/050_systems 1.00 24.4±1.67µs ? ?/sec 1.01 24.7±0.40µs ? ?/sec

empty_systems/055_systems 1.05 28.6±5.27µs ? ?/sec 1.00 27.2±0.70µs ? ?/sec

empty_systems/060_systems 1.02 29.9±1.64µs ? ?/sec 1.00 29.3±0.66µs ? ?/sec

empty_systems/065_systems 1.02 32.7±3.15µs ? ?/sec 1.00 32.1±0.98µs ? ?/sec

empty_systems/070_systems 1.00 33.0±1.42µs ? ?/sec 1.03 34.1±1.44µs ? ?/sec

empty_systems/075_systems 1.00 34.8±0.89µs ? ?/sec 1.04 36.2±0.70µs ? ?/sec

empty_systems/080_systems 1.00 37.0±1.82µs ? ?/sec 1.05 38.7±1.37µs ? ?/sec

empty_systems/085_systems 1.00 38.7±0.76µs ? ?/sec 1.05 40.8±0.83µs ? ?/sec

empty_systems/090_systems 1.00 41.5±1.09µs ? ?/sec 1.04 43.2±0.82µs ? ?/sec

empty_systems/095_systems 1.00 43.6±1.10µs ? ?/sec 1.04 45.2±0.99µs ? ?/sec

empty_systems/100_systems 1.00 46.7±2.27µs ? ?/sec 1.03 48.1±1.25µs ? ?/sec

```

</details>

## Migration Guide

### App `runner` and SubApp `extract` functions are now required to be Send

This was changed to enable pipelined rendering. If this breaks your use case please report it as these new bounds might be able to be relaxed.

## ToDo

* [x] redo benchmarking

* [x] reinvestigate the perf of the try_tick -> run change for task pool scope

# Objective

- Spawn tasks from other threads onto an async executor, but limit those tasks to run on a specific thread.

- This is a continuation of trying to break up some of the changes in pipelined rendering.

- Eventually this will be used to allow `NonSend` systems to run on the main thread in pipelined rendering #6503 and also to solve #6552.

- For this specific PR this allows for us to store a thread executor in a thread local, rather than recreating a scope executor for every scope which should save on a little work.

## Solution

- We create an executor that does a runtime check for what thread it's on before creating a !Send ticker. The ticker is the only way for the executor to make progress.
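A minimal sketch of that idea, using only the standard library, might look like the following. It is not the actual `ThreadExecutor`: it queues plain closures instead of futures, and the names `Ticker`, `ticker`, `try_tick`, and `demo` are assumptions made for illustration.

```rust
use std::collections::VecDeque;
use std::marker::PhantomData;
use std::sync::atomic::{AtomicUsize, Ordering};
use std::sync::{Arc, Mutex};
use std::thread::{self, ThreadId};

type Job = Box<dyn FnOnce() + Send>;

/// Simplified stand-in that queues closures rather than futures.
struct ThreadExecutor {
    owner: ThreadId,
    queue: Mutex<VecDeque<Job>>,
}

/// The raw-pointer `PhantomData` makes the ticker `!Send` and `!Sync`,
/// so it cannot leave the thread that created it.
struct Ticker<'a> {
    executor: &'a ThreadExecutor,
    _not_send: PhantomData<*const ()>,
}

impl ThreadExecutor {
    fn new() -> Self {
        Self {
            owner: thread::current().id(),
            queue: Mutex::new(VecDeque::new()),
        }
    }

    /// Any thread may queue work.
    fn spawn(&self, job: impl FnOnce() + Send + 'static) {
        self.queue.lock().unwrap().push_back(Box::new(job));
    }

    /// Runtime check: only the owning thread receives a ticker.
    fn ticker(&self) -> Option<Ticker<'_>> {
        if thread::current().id() == self.owner {
            Some(Ticker { executor: self, _not_send: PhantomData })
        } else {
            None
        }
    }
}

impl Ticker<'_> {
    /// Runs one queued job. Returns `false` when the queue is empty.
    fn try_tick(&self) -> bool {
        // The lock guard is released at the end of this statement,
        // so the job itself runs without holding the lock.
        let job = self.executor.queue.lock().unwrap().pop_front();
        match job {
            Some(job) => {
                job();
                true
            }
            None => false,
        }
    }
}

/// Queues a job from another thread, then ticks it on the owning thread.
fn demo() -> (bool, usize) {
    let executor = Arc::new(ThreadExecutor::new());
    let counter = Arc::new(AtomicUsize::new(0));
    let other = {
        let executor = Arc::clone(&executor);
        let counter = Arc::clone(&counter);
        thread::spawn(move || {
            executor.spawn(move || {
                counter.fetch_add(1, Ordering::SeqCst);
            });
            let got_ticker = executor.ticker().is_some();
            got_ticker
        })
    };
    let other_got_ticker = other.join().unwrap();
    let ticker = executor.ticker().expect("called on the owning thread");
    while ticker.try_tick() {}
    (other_got_ticker, counter.load(Ordering::SeqCst))
}
```

In `demo`, the second thread can queue work but is refused a ticker, so the job only runs once the owning thread ticks the executor.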

---

## Changelog

- create a ThreadExecutor that can only be ticked on one thread.

# Objective

Fix #1991. Allow users to have a bit more control over the creation and finalization of the threads in `TaskPool`.

## Solution

Add new methods to `TaskPoolBuilder` that expose callbacks that are called to initialize and finalize each thread in the `TaskPool`.

Unlike the proposed solution in #1991, the callback is argument-less. If an identifier is needed, `std::thread::current` should provide that information easily.

Added a unit test to ensure that they're being called correctly.
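The shape of such callbacks can be sketched with plain `std::thread`. The builder below is hypothetical (`PoolBuilder`, `on_thread_spawn`, `on_thread_destroy`, and `run` are illustrative names, not the `TaskPoolBuilder` API), and its workers exit immediately instead of running a task loop.

```rust
use std::sync::atomic::{AtomicUsize, Ordering};
use std::sync::Arc;
use std::thread;

type Callback = Arc<dyn Fn() + Send + Sync>;

/// Hypothetical builder; it mirrors the idea, not Bevy's API.
#[derive(Default)]
struct PoolBuilder {
    num_threads: usize,
    on_spawn: Option<Callback>,
    on_destroy: Option<Callback>,
}

impl PoolBuilder {
    fn num_threads(mut self, n: usize) -> Self {
        self.num_threads = n;
        self
    }

    fn on_thread_spawn(mut self, f: impl Fn() + Send + Sync + 'static) -> Self {
        self.on_spawn = Some(Arc::new(f));
        self
    }

    fn on_thread_destroy(mut self, f: impl Fn() + Send + Sync + 'static) -> Self {
        self.on_destroy = Some(Arc::new(f));
        self
    }

    /// Spawns the workers, lets them finish, and joins them.
    fn run(self) {
        let handles: Vec<_> = (0..self.num_threads)
            .map(|i| {
                let on_spawn = self.on_spawn.clone();
                let on_destroy = self.on_destroy.clone();
                thread::Builder::new()
                    .name(format!("worker-{i}"))
                    .spawn(move || {
                        if let Some(f) = &on_spawn {
                            f();
                        }
                        // A real pool would run its task loop here.
                        if let Some(f) = &on_destroy {
                            f();
                        }
                    })
                    .expect("failed to spawn worker thread")
            })
            .collect();
        for handle in handles {
            handle.join().expect("worker thread panicked");
        }
    }
}

/// Counts how often each callback fires for a pool of `threads` workers.
fn count_callbacks(threads: usize) -> (usize, usize) {
    let spawned = Arc::new(AtomicUsize::new(0));
    let destroyed = Arc::new(AtomicUsize::new(0));
    let (s, d) = (Arc::clone(&spawned), Arc::clone(&destroyed));
    PoolBuilder::default()
        .num_threads(threads)
        .on_thread_spawn(move || {
            s.fetch_add(1, Ordering::SeqCst);
        })
        .on_thread_destroy(move || {
            d.fetch_add(1, Ordering::SeqCst);
        })
        .run();
    (spawned.load(Ordering::SeqCst), destroyed.load(Ordering::SeqCst))
}
```

Because the callbacks take no arguments, a callback that needs to know which worker it is running on can call `std::thread::current()` and inspect the thread's name or id.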

# Objective

- Fixes https://github.com/bevyengine/bevy/issues/6603

## Solution

- `Task`s will cancel when dropped, but wait until they return Pending before they actually get canceled. That means that if a task panics, the error can propagate to the scope and the scope can be dropped while scoped tasks on other threads are still running. This is a big problem, since scoped tasks can hold values with lifetimes that are dropped as the scope is dropped, leading to UB.

---

## Changelog

- changed `Scope` to use `FallibleTask` and await the cancellation of all remaining tasks when it's dropped.

# Objective

Fix #6453.

## Solution

Use the solution mentioned in the issue by catching the unwind and dropping the error. Wrap the `executor.try_tick` calls with `std::panic::catch_unwind`.

Ideally this would be moved outside of the hot loop, but the mut ref to the `spawned` future is not `UnwindSafe`.

This PR only addresses the bug; we can address the perf issues (should there be any) later.
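As a rough illustration of catching the unwind inside the loop, the sketch below runs a list of closures and discards any panic. It does not use `async_executor`; `run_all` and `demo` are hypothetical helpers, and `AssertUnwindSafe` is used because a closure that captures a mutable reference is not `UnwindSafe` on its own.

```rust
use std::panic::{self, AssertUnwindSafe};

/// Runs every job, discarding panics. Returns how many jobs completed.
fn run_all(jobs: Vec<Box<dyn FnMut()>>) -> usize {
    let mut completed = 0;
    for mut job in jobs {
        // The closure borrows `job` mutably, so it must be wrapped in
        // `AssertUnwindSafe` before it can be passed to `catch_unwind`.
        let result = panic::catch_unwind(AssertUnwindSafe(|| job()));
        if result.is_ok() {
            completed += 1;
        }
        // On `Err`, the panic payload is dropped and the loop keeps going.
    }
    completed
}

/// Three jobs, one of which panics.
fn demo() -> usize {
    let jobs: Vec<Box<dyn FnMut()>> = vec![
        Box::new(|| {}),
        Box::new(|| {
            panic!("boom");
        }),
        Box::new(|| {}),
    ];
    run_all(jobs)
}
```

The default panic hook still prints the panic message to stderr, so the failure remains visible even though the loop survives it.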

# Objective

Attempting to build `bevy_tasks` produces the following error:

```

error[E0599]: no method named `is_finished` found for struct `async_executor::Task` in the current scope

--> /[...]]/bevy/crates/bevy_tasks/src/task.rs:51:16

|

51 | self.0.is_finished()

| ^^^^^^^^^^^ method not found in `async_executor::Task<T>`

```

It looks like this was introduced along with `Task::is_finished`, which delegates to `async_task::Task::is_finished`. However, the latter was only introduced in `async-task` 4.2.0; `bevy_tasks` does not explicitly depend on `async-task` but on `async-executor` ^1.3.0, which in turn depends on `async-task` ^4.0.0.

## Solution

Add an explicit dependency on `async-task` ^4.2.0.

# Objective

Right now, the `TaskPool` implementation allows a panic to permanently kill a worker thread. This is currently non-recoverable without using a `std::panic::catch_unwind` in every scheduled task. This is poor ergonomics and even poorer developer experience. This is exacerbated by #2250 as these threads are global and cannot be replaced after initialization.

Removes the need for temporary fixes like #4998. Fixes #4996. Fixes #6081. Fixes #5285. Fixes #5054. Supersedes #2307.

## Solution

The current solution is to wrap `Executor::run` in `TaskPool` with a `catch_unwind`, and discarding the potential panic. This was taken straight from [smol](404c7bcc0a/src/spawn.rs (L44))'s current implementation. ~~However, this is not entirely ideal as:~~

- ~~the signaled to the awaiting task. We would need to change `Task<T>` to use `async_task::FallibleTask` internally, and even then it doesn't signal *why* it panicked, just that it did.~~ (See below).

- ~~no error is logged of any kind~~ (See below)

- ~~it's unclear if it drops other tasks in the executor~~ (it does not)

- ~~This allows the ECS parallel executor to keep chugging even though a system's task has been dropped. This inevitably leads to deadlock in the executor.~~ Assuming we don't catch the unwind in ParallelExecutor, this will naturally kill the main thread.

### Alternatives

A final solution likely will incorporate elements of any or all of the following.

#### ~~Log and Ignore~~

~~Log the panic, drop the task, keep chugging. This only addresses the discoverability of the panic. The process will continue to run, probably deadlocking the executor. tokio's detached tasks operate in this fashion.~~

Panics already do this by default, even when caught by `catch_unwind`.

#### ~~`catch_unwind` in `ParallelExecutor`~~

~~Add another layer catching system-level panics into the `ParallelExecutor`. How the executor continues when a core dependency of many systems fails to run is up for debate.~~

`async_task::Task` bubbles up panics already, this will transitively push panics all the way to the main thread.

#### ~~Emulate/Copy `tokio::JoinHandle` with `Task<T>`~~

~~`tokio::JoinHandle<T>` bubbles up the panic from the underlying task when awaited. This can be transitively applied across other APIs that also use `Task<T>` like `Query::par_for_each` and `TaskPool::scope`, bubbling up the panic until it's either caught or it reaches the main thread.~~

`async_task::Task` bubbles up panics already, this will transitively push panics all the way to the main thread.

#### Abort on Panic

The nuclear option. Log the error, abort the entire process on any thread in the task pool panicking. Definitely avoids any additional infrastructure for passing the panic around, and might actually lead to more efficient code as any unwinding is optimized out. However gives the developer zero options for dealing with the issue, a seemingly poor choice for debuggability, and prevents graceful shutdown of the process. Potentially an option for handling very low-level task management (a la #4740). Roughly takes the shape of:

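```rust
struct AbortOnPanic;

impl Drop for AbortOnPanic {
    fn drop(&mut self) {
        // Only reached if the guard is dropped while unwinding from a panic.
        std::process::abort();
    }
}

let guard = AbortOnPanic;
// Run task
// The task finished without panicking, so defuse the guard.
std::mem::forget(guard);
```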

---

## Changelog

Changed: `bevy_tasks::TaskPool`'s threads will no longer terminate permanently when a task scheduled onto them panics.

Changed: `bevy_tasks::Task` and `bevy_tasks::Scope` will propagate panics in the spawned tasks/scopes to the parent thread.

This reverts commit 53d387f340.

# Objective

Reverts #6448. This didn't have the intended effect: we're now getting bevy::prelude shown in the docs again.

Co-authored-by: Alejandro Pascual <alejandro.pascual.pozo@gmail.com>

# Objective

- Right now re-exports are completely hidden in prelude docs.

- Fixes #6433

## Solution

- We could show the re-exports without inlining their documentation.

# Objective

In some scenarios it can be useful to check if a task has been finished without polling it. I added a function called `is_finished` to check if a task has been finished.

## Solution

Since `async_task` supports it out of the box, it is just a simple wrapper function.
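The wrapper pattern can be illustrated by analogy with `std::thread::JoinHandle::is_finished`. The `Task` type below is a hypothetical newtype around a thread handle, not `bevy_tasks::Task`, and `demo` is an illustrative helper.

```rust
use std::sync::mpsc;
use std::thread::{self, JoinHandle};

/// Hypothetical newtype standing in for a task handle.
struct Task<T>(JoinHandle<T>);

impl<T> Task<T> {
    /// Thin wrapper: reports completion without blocking or consuming the task.
    fn is_finished(&self) -> bool {
        self.0.is_finished()
    }

    fn join(self) -> T {
        self.0.join().expect("task panicked")
    }
}

/// Checks the flag before and after the task is allowed to complete.
fn demo() -> (bool, i32) {
    let (gate_tx, gate_rx) = mpsc::channel::<()>();
    let task = Task(thread::spawn(move || {
        // Block until the caller opens the gate.
        gate_rx.recv().expect("gate sender dropped");
        42
    }));

    // The task is still waiting on the gate, so it cannot be finished yet.
    let finished_early = task.is_finished();

    gate_tx.send(()).expect("task is waiting on the gate");
    while !task.is_finished() {
        thread::yield_now();
    }
    (finished_early, task.join())
}
```

Because the check takes `&self`, the caller keeps the handle and can still collect the result afterwards.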

---

# Objective

- fix new clippy lints before they get stable and break CI

## Solution

- run `clippy --fix` to auto-fix machine-applicable lints

- silence `clippy::should_implement_trait` for `fn HandleId::default<T: Asset>`

## Changes

- always prefer `format!("{inline}")` over `format!("{}", not_inline)`

- prefer `Box::default` (or `Box::<T>::default` if necessary) over `Box::new(T::default())`
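The two rewrites look roughly like this on a small example. `Config`, `describe`, and `boxed_config` are hypothetical names used only to show the before and after forms.

```rust
/// Hypothetical type used only for illustration.
#[derive(Debug, Default, PartialEq)]
struct Config {
    retries: u32,
}

fn describe(name: &str, count: usize) -> String {
    // Before: format!("{} has {} items", name, count)
    format!("{name} has {count} items")
}

fn boxed_config() -> Box<Config> {
    // Before: Box::new(Config::default())
    Box::default()
}

fn boxed_config_explicit() -> Box<Config> {
    // The turbofish form, for places where the type cannot be inferred.
    Box::<Config>::default()
}
```

Inline format arguments only accept plain identifiers, so expressions such as field accesses still have to be passed as positional or named arguments.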

# Objective

- Proactive changing of code to comply with warnings generated by beta of rustlang version of cargo clippy.

## Solution

- Code changed as recommended by `rustup update`, `rustup default beta`, `cargo run -p ci -- clippy`.

- Tested using `beta` and `stable`. No clippy warnings in either after changes made.

---

## Changelog

- Warnings fixed were: `clippy::explicit-auto-deref` (present in 11 files), `clippy::needless-borrow` (present in 2 files), and `clippy::only-used-in-recursion` (only 1 file).

# Objective

- #4466 broke local tasks running.

- Fixes https://github.com/bevyengine/bevy/issues/6120

## Solution

- Add system for ticking local executors on main thread into bevy_core where the tasks pools are initialized.

- Add ticking local executors into thread executors

## Changelog

- tick all thread local executors in task pool.

## Notes

- ~~Not 100% sure about this PR. Ticking the local executor for the main thread in scope feels a little kludgy as it requires users of bevy_tasks to be calling scope periodically for those tasks to make progress.~~ took this out in favor of a system that ticks the local executors.

# Objective

Fixes https://github.com/bevyengine/bevy/issues/6306

## Solution

Change the failing assert and expand example to explain when ordering is deterministic or not.

Co-authored-by: Mike Hsu <mike.hsu@gmail.com>

# Objective

- Add ability to create nested spawns. This is needed for stageless. The current executor spawns tasks for each system early and runs the system by communicating through a channel. In stageless we want to spawn the task late, so that archetypes can be updated right before the task is run. The executor is run on a separate task, so this enables the scope to be passed to the spawned executor.

- Fixes #4301

## Solution

- Instantiate a single threaded executor on the scope and use that instead of the LocalExecutor. This allows the scope to be Send, but still able to spawn tasks onto the main thread the scope is run on. This works because, while systems can access nonsend data, the systems themselves are Send. Because of this change we lose the ability to spawn nonsend tasks on the scope, but I don't think this is being used anywhere. Users would still be able to use spawn_local on TaskPools.

- Steals the lifetime tricks that `std::thread::scope` uses to allow nested spawns, but disallow scope to be passed to tasks or threads not associated with the scope.

- Change the storage for the tasks to a `ConcurrentQueue`. This is to allow a &Scope to be passed for spawning instead of a &mut Scope. `ConcurrentQueue` was chosen because it was already in our dependency tree because `async_executor` depends on it.

- removed the optimizations for 0 and 1 spawned tasks. It did improve those cases, but made the cases of more than 1 task slower.
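For reference, this is how nested spawns look with the standard library's `std::thread::scope`, whose lifetime design the changes above borrow from. The sketch uses OS threads rather than tasks, and `nested_spawn_count` is a hypothetical helper.

```rust
use std::sync::atomic::{AtomicUsize, Ordering};
use std::thread;

/// Spawns a scoped thread that itself spawns another scoped thread.
fn nested_spawn_count() -> usize {
    let counter = AtomicUsize::new(0);
    // Borrow once up front so the `move` closures copy the reference
    // instead of trying to take ownership of the counter.
    let counter_ref = &counter;

    thread::scope(|scope| {
        scope.spawn(move || {
            counter_ref.fetch_add(1, Ordering::SeqCst);
            // `scope` is a shared reference, so it can be used again from
            // inside a thread that was spawned on the same scope.
            scope.spawn(move || {
                counter_ref.fetch_add(1, Ordering::SeqCst);
            });
        });
    });

    // Every scoped thread has been joined by the time `scope` returns.
    counter.load(Ordering::SeqCst)
}
```

The scope hands out `&Scope` rather than `&mut Scope`, which is what makes the inner `spawn` call possible. The same reasoning motivates storing tasks in a concurrent queue.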

---

## Changelog

Add ability to nest spawns

```rust
use bevy_tasks::TaskPool;

fn main() {
    let pool = TaskPool::new();
    pool.scope(|scope| {
        scope.spawn(async move {
            // calling scope.spawn from a spawned task was not possible before
            scope.spawn(async move {
                // do something
            });
        });
    });
}
```

## Migration Guide

If you were using explicit lifetimes and passing `Scope`, you'll need to specify two lifetimes now.

```rust

fn scoped_function<'scope>(scope: &mut Scope<'scope, ()>) {}

// should become

fn scoped_function<'scope>(scope: &Scope<'_, 'scope, ()>) {}

```

`scope.spawn_local` changed to `scope.spawn_on_scope`. This should cover cases where you needed to run tasks on the local thread, but does not cover spawning nonsend futures.

## TODO

* [x] think real hard about all the lifetimes

* [x] add doc about what 'env and 'scope mean.

* [x] manually check that the single threaded task pool still works

* [x] Get updated perf numbers

* [x] check and make sure all the transmutes are necessary

* [x] move commented out test into a compile fail test

* [x] look through the tests for scope on std and see if I should add any more tests

Co-authored-by: Michael Hsu <myhsu@benjaminelectric.com>

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

As of Rust 1.59, `std::thread::available_parallelism` has been stabilized. As of Rust 1.61, the API matches `num_cpus::get` by properly handling Linux's cgroups and other sandboxing mechanisms.

As bevy does not have an established MSRV, we can replace `num_cpus` in `bevy_tasks` and reduce our dependency tree by one dep.

## Solution

Replace `num_cpus` with `std::thread::available_parallelism`. Wrap it to have a fallback in the case it errors out and have it operate in the same manner as `num_cpus` did.

This however removes `physical_core_count` from the API, though we are currently not using it in any way in first-party crates.
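A wrapper with a fallback could look like the sketch below. The fallback value of 1 is an assumption chosen for illustration, and the function is a standalone stand-in rather than the exact `bevy_tasks` implementation.

```rust
use std::num::NonZeroUsize;
use std::thread;

/// Returns the parallelism reported by the standard library,
/// falling back to 1 if the query fails (the fallback is an assumption).
fn available_parallelism() -> usize {
    thread::available_parallelism()
        .map(NonZeroUsize::get)
        .unwrap_or(1)
}
```

The standard library call returns an `io::Result<NonZeroUsize>`, so the wrapper always yields a value of at least 1 and callers do not have to handle the error case themselves.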

---

## Changelog

Changed: `bevy_tasks::logical_core_count` -> `bevy_tasks::available_parallelism`.

Removed: `bevy_tasks::physical_core_count`.

## Migration Guide

`bevy_tasks::logical_core_count` and `bevy_tasks::physical_core_count` have been removed. `logical_core_count` has been replaced with `bevy_tasks::available_parallelism`, which works identically. If `bevy_tasks::physical_core_count` is required, the `num_cpus` crate can be used directly, as these two were just aliases for `num_cpus` APIs.