# Objective

Make core pipeline graphic nodes, including `BloomNode`, `FxaaNode`, `TonemappingNode` and `UpscalingNode` public.

This will allow users to construct their own render graphs with these built-in nodes.

## Solution

Make them public.

Also put node names into bevy's core namespace (`core_2d::graph::node`, `core_3d::graph::node`) which makes them consistent.

# Objective

- Closes #5262

- Fix color banding caused by quantization.

## Solution

- Adds dithering to the tonemapping node from #3425.

- This is inspired by Godot's default "debanding" shader: https://gist.github.com/belzecue/

- Unlike Godot:

- debanding happens after tonemapping. My understanding is that this is preferred, because we are running the debanding at the last moment before quantization (`[f32, f32, f32, f32]` -> `f32`). This ensures we aren't biasing the dithering strength by applying it in a different (linear) color space.

- This code instead uses and references the original source, Valve at GDC 2015

## Additional Notes

Real time rendering to standard dynamic range outputs is limited to 8 bits of depth per color channel. Internally we keep everything in full 32-bit precision (`vec4<f32>`) inside passes and 16-bit between passes until the image is ready to be displayed, at which point the GPU implicitly converts our `vec4<f32>` into a single 32bit value per pixel, with each channel (rgba) getting 8 of those 32 bits.

### The Problem

8 bits of color depth is simply not enough precision to make each step invisible - we only have 256 values per channel! Human vision can perceive steps in luma to about 14 bits of precision. When drawing a very slight gradient, the transition between steps becomes visible because with a gradient, neighboring pixels will all jump to the next "step" of precision at the same time.

### The Solution

One solution is to simply output in HDR - more bits of color data means the transition between bands will become smaller. However, not everyone has hardware that supports 10+ bit color depth. Additionally, 10 bit color doesn't even fully solve the issue, banding will result in coherent bands on shallow gradients, but the steps will be harder to perceive.

The solution in this PR adds noise to the signal before it is "quantized" or resampled from 32 to 8 bits. Done naively, it's easy to add unneeded noise to the image. To ensure dithering is correct and absolutely minimal, noise is added *within* one step of the output color depth. When converting from the 32bit to 8bit signal, the value is rounded to the nearest 8 bit value (0 - 255). Banding occurs around the transition from one value to the next, let's say from 50-51. Dithering will never add more than +/-0.5 of one 8-bit step of noise, so the pixels near this transition might round to 50 instead of 51 but will never round more than one step. This means that the output image won't have excess variance:

- in a gradient from 49 to 51, there will be a step between each band at 49, 50, and 51.

- Done correctly, the modified image of this gradient will never have adjacent pixels more than one step (0-255) from each other.

- I.e. when scanning across the gradient you should expect to see:

```
                  |-band-| |-band-| |-band-|
Baseline:         49 49 49 50 50 50 51 51 51
Dithered:         49 50 49 50 50 51 50 51 51
Dithered (wrong): 49 50 51 49 50 51 49 51 50
```

You can see from above how correct dithering "fuzzes" the transition between bands to reduce distinct steps in color, without adding excess noise.
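As a rough illustration of the "at most half a step of noise" rule, here is a minimal CPU-side sketch in Rust. It is not the shader from this PR: `dither_noise` is a made-up integer hash, and `quantize` / `quantize_dithered` are hypothetical helper names. The only property it tries to show is that a dithered pixel never lands more than one 8-bit step away from its undithered value.

```rust
/// Hypothetical per-pixel noise in [-0.5, 0.5), measured in 8-bit steps.
/// This integer hash is illustrative only; it is not the PR's shader code.
fn dither_noise(x: u32, y: u32) -> f32 {
    let hash = x.wrapping_mul(171).wrapping_add(y.wrapping_mul(231)) % 103;
    hash as f32 / 103.0 - 0.5
}

/// Round a normalized channel value (0.0..=1.0) to the nearest 8-bit value.
fn quantize(value: f32) -> u8 {
    (value.clamp(0.0, 1.0) * 255.0).round() as u8
}

/// Add at most half a step of noise, then quantize.
fn quantize_dithered(value: f32, x: u32, y: u32) -> u8 {
    quantize(value + dither_noise(x, y) / 255.0)
}
```

Because the noise is bounded by half a step, pixels near a band edge can flip to the neighboring value, but pixels in the middle of a band are left alone.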

### HDR

The previous section (and this PR) assumes the final output is to an 8-bit texture, however this is not always the case. When Bevy adds HDR support, the dithering code will need to take the per-channel depth into account instead of assuming it to be 0-255. Edit: I talked with Rob about this and it seems like the current solution is okay. We may need to revisit once we have actual HDR final image output.

---

## Changelog

### Added

- All pipelines now support deband dithering. This is enabled by default in 3D, and can be toggled in the `Tonemapping` component in camera bundles. Banding is a graphical artifact created when the rendered image is crunched from high precision (f32 per color channel) down to the final output (u8 per channel in SDR). This results in subtle gradients becoming blocky due to the reduced color precision. Deband dithering applies a small amount of noise to the signal before it is "crunched", which breaks up the hard edges of blocks (bands) of color. Note that this does not add excess noise to the image, as the amount of noise is less than a single step of a color channel - just enough to break up the transition between color blocks in a gradient.

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

- it would be useful to inspect these structs using reflection

## Solution

- derive and register reflect

- Note that `#[reflect(Component)]` requires `Default` (or `FromWorld`) until #6060, so I implemented `Default` for `Tonemapping` with `is_enabled: false`

# Objective

Bevy UI (and third party plugins) currently have no good way to position themselves after all post processing effects. They currently use the tonemapping node, but this is not adequate if there is anything after tonemapping (such as FXAA).

## Solution

Add a logical `END_MAIN_PASS_POST_PROCESSING` RenderGraph node that main pass post processing effects position themselves before, and things like UIs can position themselves after.

Co-authored-by: JMS55 <47158642+JMS55@users.noreply.github.com>

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

Co-authored-by: DGriffin91 <github@dgdigital.net>

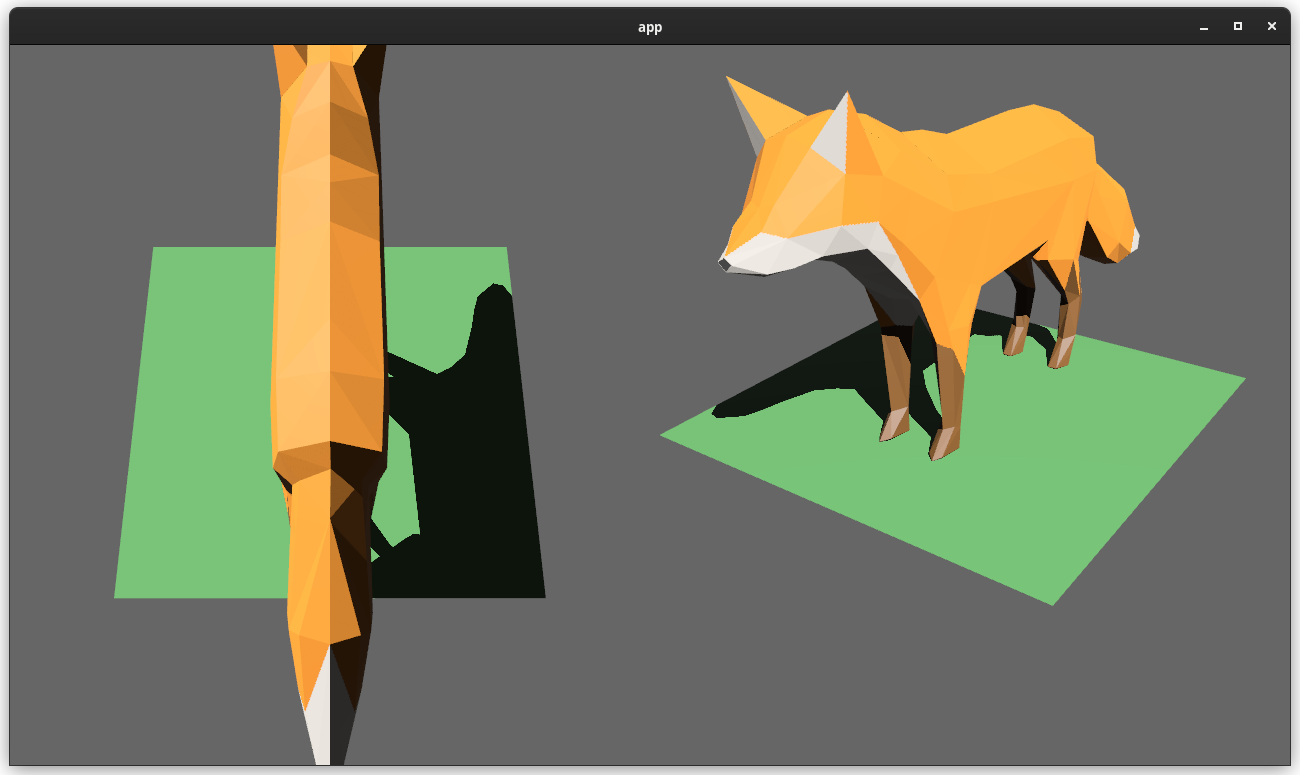

# Objective

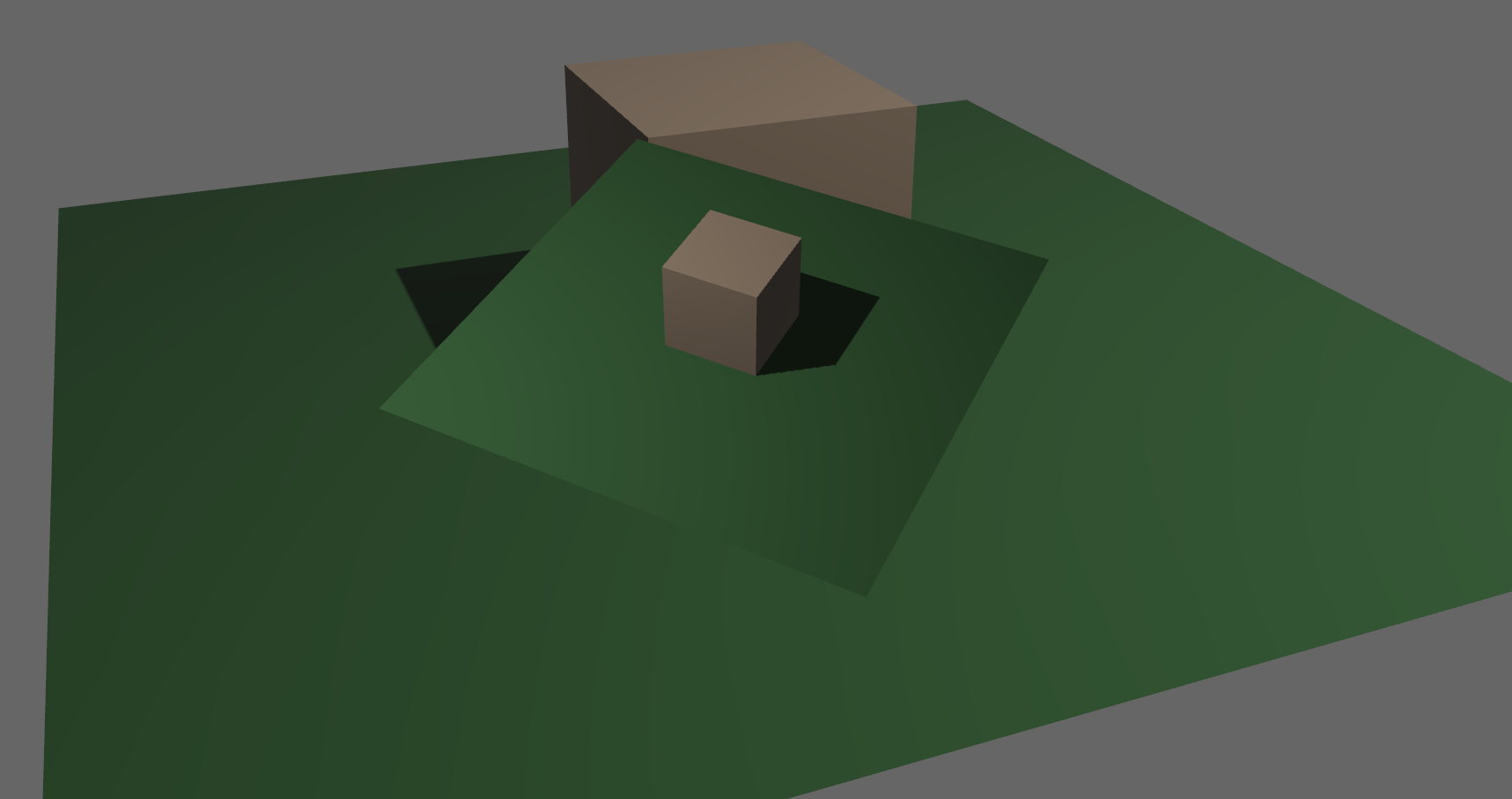

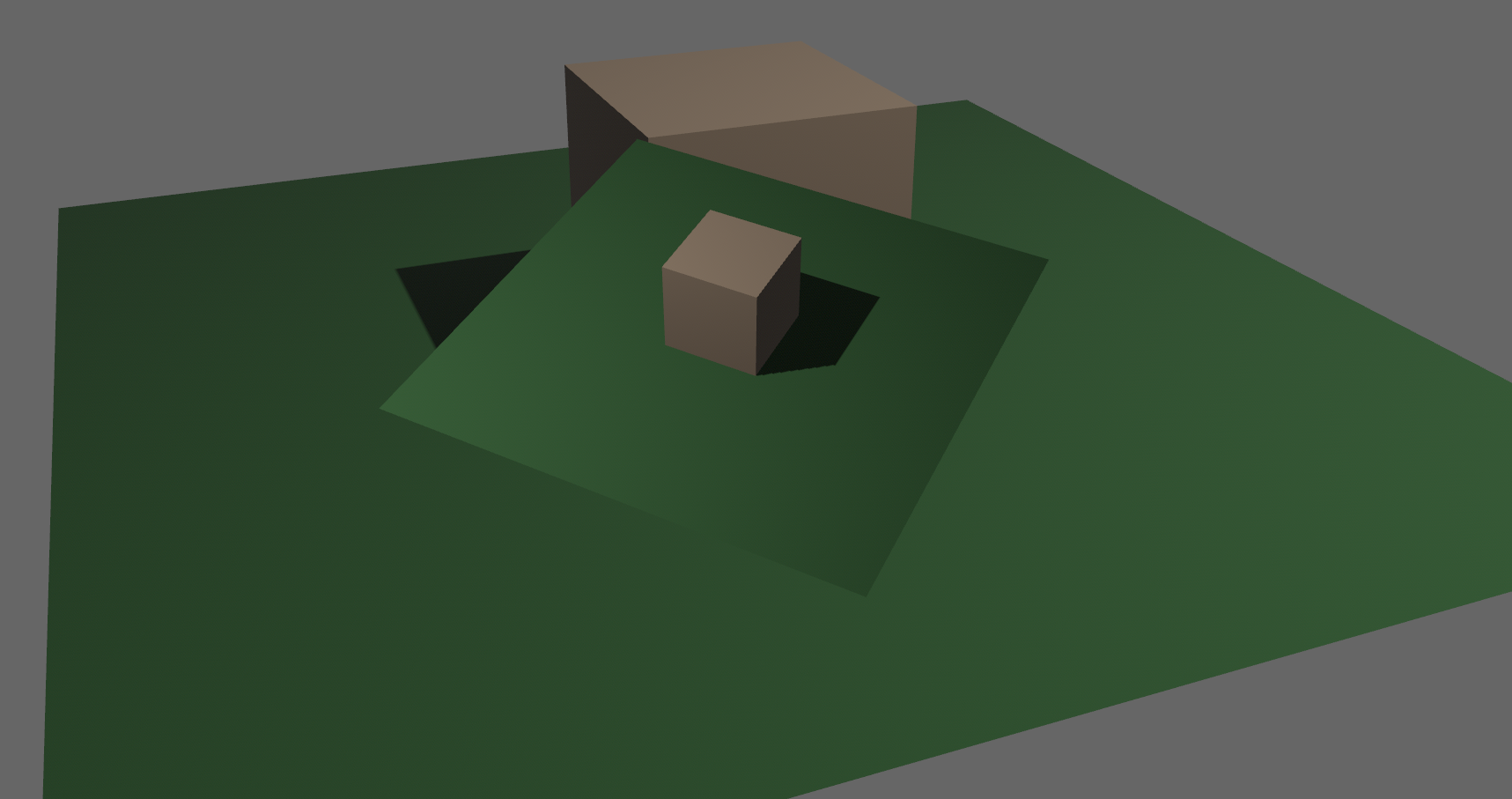

- Adds a bloom pass for HDR-enabled Camera3ds.

- Supersedes (and all credit due to!) https://github.com/bevyengine/bevy/pull/3430 and https://github.com/bevyengine/bevy/pull/2876

## Solution

- A threshold is applied to isolate emissive samples, and then a series of downscale and upscaling passes are applied and composited together.

- Bloom is applied to 2d or 3d Cameras with `hdr: true` and a `BloomSettings` component.

---

## Changelog

- Added a `core_pipeline::bloom::BloomSettings` component.

- Added `BloomNode` that runs between the main pass and tonemapping.

- Added a `BloomPlugin` that is loaded as part of CorePipelinePlugin.

- Added a bloom example project.

Co-authored-by: JMS55 <47158642+JMS55@users.noreply.github.com>

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

Co-authored-by: DGriffin91 <github@dgdigital.net>

# Objective

Replace the `WorldQueryGats` trait with actual generic associated types (GATs).

## Solution

Replace the `WorldQueryGats` trait with actual GATs.

---

## Changelog

- Replaced the `WorldQueryGats` trait with actual GATs

## Migration Guide

- Replace usage of `WorldQueryGats` associated types with the actual GATs on the `WorldQuery` trait

This reverts commit 53d387f340.

# Objective

Reverts #6448. This didn't have the intended effect: we're now getting bevy::prelude shown in the docs again.

Co-authored-by: Alejandro Pascual <alejandro.pascual.pozo@gmail.com>

# Objective

- Right now re-exports are completely hidden in prelude docs.

- Fixes #6433

## Solution

- We could show the re-exports without inlining their documentation.

# Objective

- Add post processing passes for FXAA (Fast Approximate Anti-Aliasing)

- Add example comparing MSAA and FXAA

## Solution

When the FXAA plugin is added, passes for FXAA are inserted between the main pass and the tonemapping pass. Supports using either HDR or LDR output from the main pass.

---

## Changelog

- Add a new FXAANode that runs after the main pass when the FXAA plugin is added.

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

Post processing effects cannot read and write to the same texture. Currently they must own their own intermediate texture and redundantly copy from that back to the main texture. This is very inefficient.

Additionally, working with ViewTarget is more complicated than it needs to be, especially when working with HDR textures.

## Solution

`ViewTarget` now stores two copies of the "main texture". It uses an atomic value to track which is currently the "main texture" (this interior mutability is necessary to accommodate read-only RenderGraph execution).

`ViewTarget` now has a `post_process_write` method, which will return a source and destination texture. Each call to this method will flip between the two copies of the "main texture".

```rust
let post_process = render_target.post_process_write();
let source_texture = post_process.source;
let destination_texture = post_process.destination;
```

The caller _must_ read from the source texture and write to the destination texture, as it is assumed that the destination texture will become the new "main texture".
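The flipping behavior can be sketched without any GPU types. The following is a simplified model, not the actual `ViewTarget` implementation: `DoubleBuffered` and its fields are hypothetical names, and the "textures" are any type `T`. It shows why an atomic index lets the flip happen behind a shared `&self` reference.

```rust
use std::sync::atomic::{AtomicUsize, Ordering};

/// Stand-in for `ViewTarget`: two copies of the "main texture" plus an
/// atomic index saying which copy is currently the main one.
struct DoubleBuffered<T> {
    textures: [T; 2],
    main_index: AtomicUsize,
}

struct PostProcessWrite<'a, T> {
    source: &'a T,
    destination: &'a T,
}

impl<T> DoubleBuffered<T> {
    fn new(a: T, b: T) -> Self {
        Self { textures: [a, b], main_index: AtomicUsize::new(0) }
    }

    fn main_texture(&self) -> &T {
        &self.textures[self.main_index.load(Ordering::SeqCst)]
    }

    /// Takes `&self`: the flip goes through the atomic, so read-only
    /// graph execution can still call it.
    fn post_process_write(&self) -> PostProcessWrite<'_, T> {
        // `fetch_xor(1)` toggles between 0 and 1 and returns the old index.
        let old = self.main_index.fetch_xor(1, Ordering::SeqCst);
        PostProcessWrite {
            source: &self.textures[old],
            destination: &self.textures[old ^ 1],
        }
    }
}
```

In this model each call hands back the old main copy as `source` and the other copy as `destination`, and the destination is immediately treated as the new main texture, which is why the caller has to actually write to it.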

For simplicity / understandability `ViewTarget` is now a flat type. "hdr-ness" is a property of the `TextureFormat`. The internals are fully private in the interest of providing simple / consistent apis. Developers can now easily access the main texture by calling `view_target.main_texture()`.

HDR ViewTargets no longer have an "ldr texture" with `TextureFormat::bevy_default`. They _only_ have their two "hdr" textures. This simplifies the mental model. All we have is the "currently active hdr texture" and the "other hdr texture", which we flip between for post processing effects.

The tonemapping node has been rephrased to use this "post processing pattern". The blit pass has been removed, and it now only runs a pass when HDR is enabled. Notably, both the input and output texture are assumed to be HDR. This means that tonemapping behaves just like any other "post processing effect". It could theoretically be moved anywhere in the "effect chain" and continue to work.

In general, I think these changes will make the lives of people making post processing effects much easier. And they better position us to start building higher level / more structured "post processing effect stacks".

---

## Changelog

- `ViewTarget` now stores two copies of the "main texture". Calling `ViewTarget::post_process_write` will flip between copies of the main texture.

# Objective

Currently, Bevy only supports rendering to the current "surface texture format". This means that "render to texture" scenarios must use the exact format the primary window's surface uses, or Bevy will crash. This is even harder than it used to be now that we detect preferred surface formats at runtime instead of using hard coded BevyDefault values.

## Solution

1. Look up and store each window surface's texture format alongside other extracted window information

2. Specialize the upscaling pass on the current `RenderTarget`'s texture format, now that we can cheaply correlate render targets to their current texture format

3. Remove the old `SurfaceTextureFormat` and `AvailableTextureFormats`: these are now redundant with the information stored on each extracted window, and probably should not have been globals in the first place (as in theory each surface could have a different format).

This means you can now use any texture format you want when rendering to a texture! For example, changing the `render_to_texture` example to use `R16Float` now doesn't crash / properly only stores the red component:

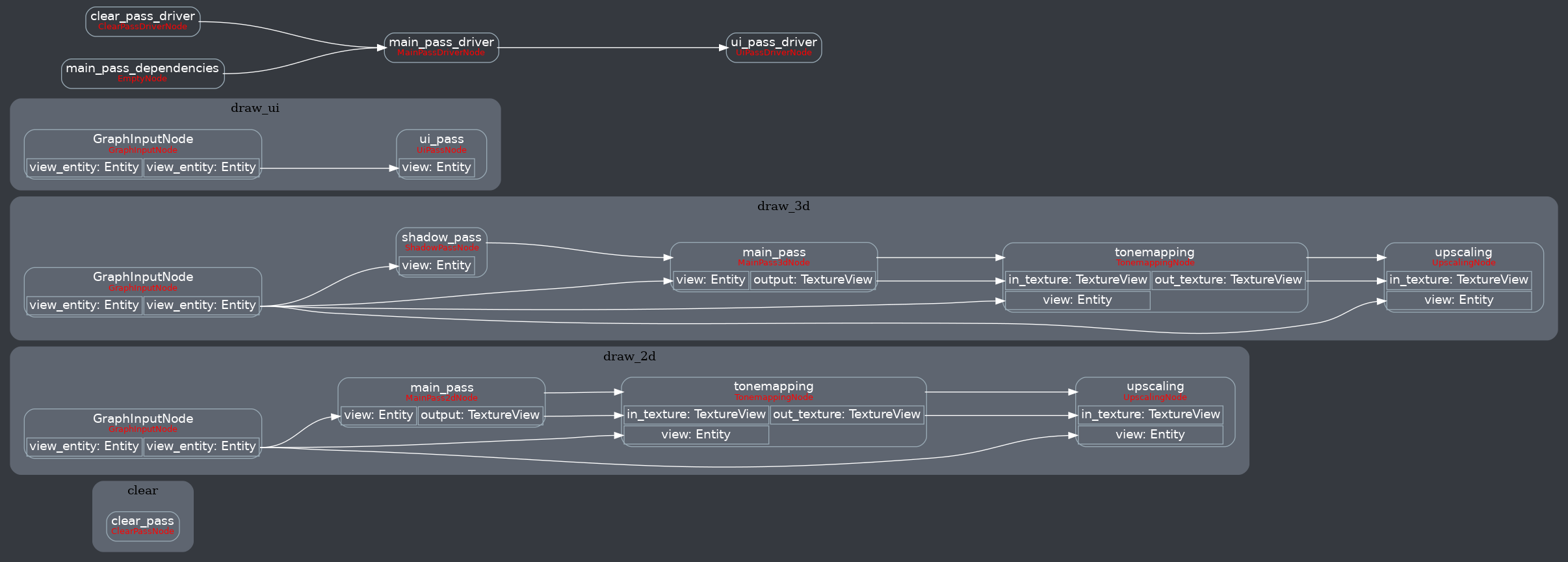

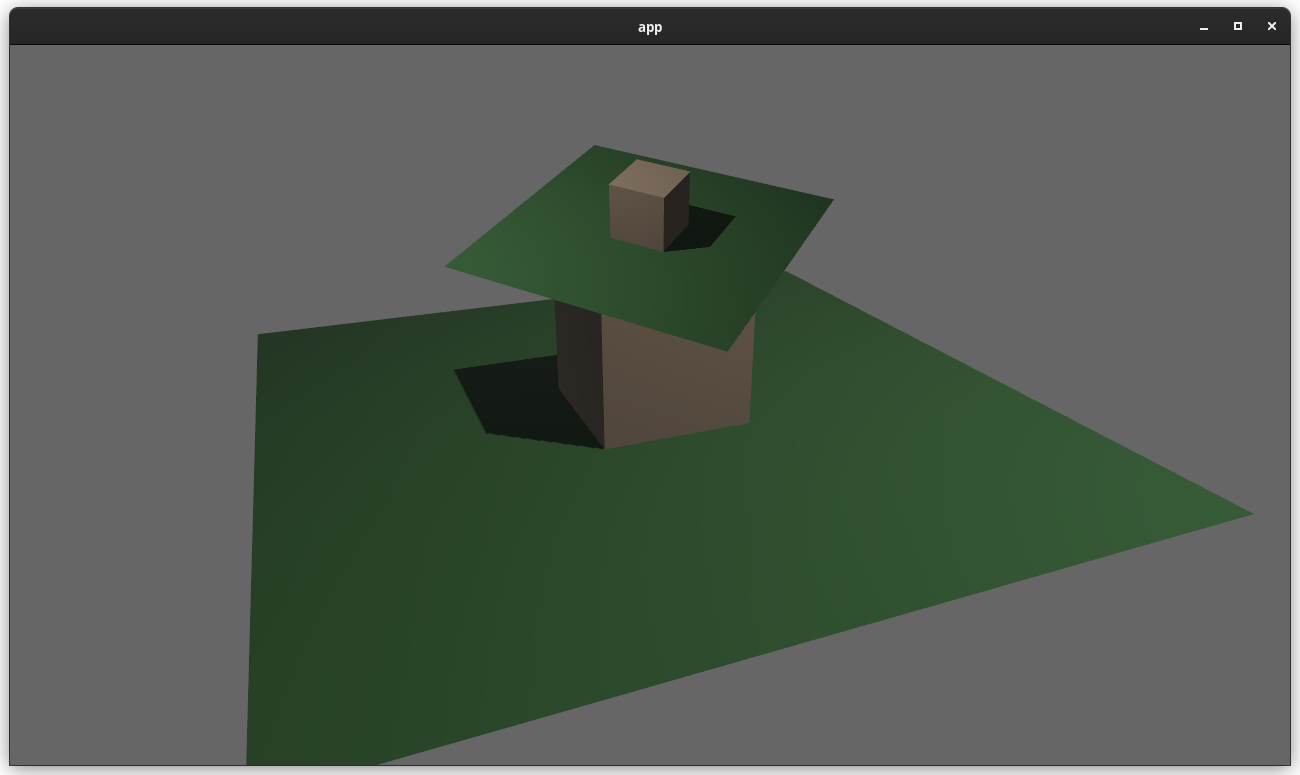

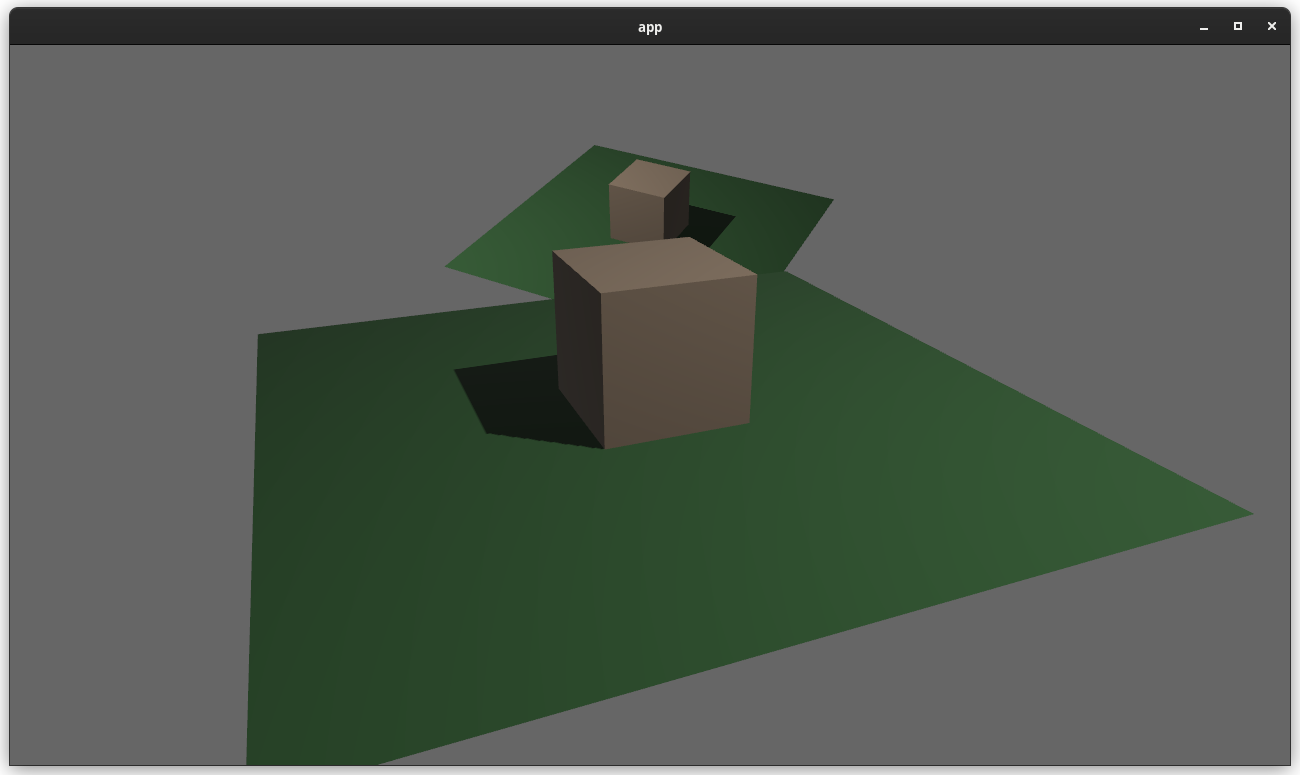

Attempt to make features like bloom https://github.com/bevyengine/bevy/pull/2876 easier to implement.

**This PR:**

- Moves the tonemapping from `pbr.wgsl` into a separate pass

- also add a separate upscaling pass after the tonemapping which writes to the swap chain (enables resolution-independent rendering and post-processing after tonemapping)

- adds a `hdr` bool to the camera which controls whether the pbr and sprite shaders render into a `Rgba16Float` texture

**Open questions:**

- ~should the 2d graph work the same as the 3d one?~ it is the same now

- ~The current solution is a bit inflexible because while you can add a post processing pass that writes to e.g. the `hdr_texture`, you can't write to a separate `user_postprocess_texture` while reading the `hdr_texture` and tell the tone mapping pass to read from the `user_postprocess_texture` instead. If the tonemapping and upscaling render graph nodes were to take in a `TextureView` instead of the view entity this would almost work, but the bind groups for their respective input textures are already created in the `Queue` render stage in the hardcoded order.~ solved by creating bind groups in render node

**New render graph:**

<details>

<summary>Before</summary>

</details>

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

Take advantage of the "impl Bundle for Component" changes in #2975 / add the follow up changes discussed there.

## Solution

- Change `insert` and `remove` to accept a Bundle instead of a Component (for both Commands and World)

- Deprecate `insert_bundle`, `remove_bundle`, and `remove_bundle_intersection`

- Add `remove_intersection`

---

## Changelog

- `insert` and `remove` now accept a Bundle instead of a Component (for both Commands and World)

- `insert_bundle` and `remove_bundle` are deprecated

## Migration Guide

Replace `insert_bundle` with `insert`:

```rust
// Old (0.8)
commands.spawn().insert_bundle(SomeBundle::default());

// New (0.9)
commands.spawn().insert(SomeBundle::default());
```

Replace `remove_bundle` with `remove`:

```rust
// Old (0.8)
commands.entity(some_entity).remove_bundle::<SomeBundle>();

// New (0.9)
commands.entity(some_entity).remove::<SomeBundle>();
```

Replace `remove_bundle_intersection` with `remove_intersection`:

```rust
// Old (0.8)
world.entity_mut(some_entity).remove_bundle_intersection::<SomeBundle>();

// New (0.9)
world.entity_mut(some_entity).remove_intersection::<SomeBundle>();
```

Consider consolidating as many operations as possible to improve ergonomics and cut down on archetype moves:

```rust
// Old (0.8)
commands.spawn()
    .insert_bundle(SomeBundle::default())
    .insert(SomeComponent);

// New (0.9) - Option 1
commands.spawn().insert((
    SomeBundle::default(),
    SomeComponent,
));

// New (0.9) - Option 2
commands.spawn_bundle((
    SomeBundle::default(),
    SomeComponent,
));
```

## Next Steps

Consider changing `spawn` to accept a bundle and deprecate `spawn_bundle`.

# Objective

Implement `IntoIterator` for `&Extract<P>` if the system parameter it wraps implements `IntoIterator`.

Enables the use of `IntoIterator` with an extracted query.
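The shape of such an impl can be sketched with a plain wrapper struct. This is only an illustration under simplifying assumptions: the `Extract` below is a stand-in with a single `item` field rather than the real system parameter, and `sum_all` is a hypothetical helper used to show the `for` loop.

```rust
/// Stand-in wrapper; the real `Extract` is a system parameter.
struct Extract<P> {
    item: P,
}

/// `&Extract<P>` is iterable whenever `&P` is iterable, and it yields
/// exactly the same items.
impl<'a, P> IntoIterator for &'a Extract<P>
where
    &'a P: IntoIterator,
{
    type Item = <&'a P as IntoIterator>::Item;
    type IntoIter = <&'a P as IntoIterator>::IntoIter;

    fn into_iter(self) -> Self::IntoIter {
        (&self.item).into_iter()
    }
}

/// With the impl above, a `for` loop works directly on the wrapper.
fn sum_all(values: &Extract<Vec<u32>>) -> u32 {
    let mut total = 0;
    for value in values {
        total += *value;
    }
    total
}
```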

Co-authored-by: devil-ira <justthecooldude@gmail.com>

# Objective

Without this we can inappropriately merge batches together without properly accounting for non-batch items between them, and the merged batch will then be sorted incorrectly later.

This change seems to reliably fix the issue I was seeing in #5919.

## Solution

Ensure the `batch_phase_system` runs after the `sort_phase_system`, so that batching can only look at actually adjacent phase items.
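To see why the ordering matters, here is a small model of the problem. It is a sketch with hypothetical types (`Item`, `batch_adjacent`, `sort_then_batch`), not the code of the two systems: batching merges neighbors that share a batch id, so it only gives the right answer if the neighbors are already in their final draw order.

```rust
use std::ops::Range;

#[derive(Debug, Clone, PartialEq)]
struct Item {
    sort_key: u32,
    /// Items sharing a batch id may be merged; `None` is a non-batch item.
    batch: Option<u32>,
}

/// Merge runs of adjacent items with the same batch id, returning each
/// run as a half-open index range.
fn batch_adjacent(items: &[Item]) -> Vec<Range<usize>> {
    let mut ranges: Vec<Range<usize>> = Vec::new();
    for (index, item) in items.iter().enumerate() {
        let can_merge = ranges.last().map_or(false, |last| {
            item.batch.is_some() && items[last.start].batch == item.batch
        });
        if can_merge {
            if let Some(last) = ranges.last_mut() {
                last.end = index + 1;
            }
        } else {
            ranges.push(index..index + 1);
        }
    }
    ranges
}

/// Sort first, so that batching only ever sees truly adjacent items.
fn sort_then_batch(items: &mut [Item]) -> Vec<Range<usize>> {
    items.sort_by_key(|item| item.sort_key);
    batch_adjacent(items)
}
```

If two batchable items sit next to each other before sorting but a non-batch item sorts between them, batching first would merge them into one range and lose the item in the middle; sorting first keeps them as separate batches.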

# Objective

- While generating https://github.com/jakobhellermann/bevy_reflect_ts_type_export/blob/main/generated/types.ts, I noticed that some types that implement `Reflect` did not register themselves

- `Viewport` isn't reflect but can be (there's a TODO)

## Solution

- register all reflected types

- derive `Reflect` for `Viewport`

## Changelog

- more types are now registered in the type registry

- remove `Serialize`, `Deserialize` impls from `Viewport`

I also decided to remove the `Serialize, Deserialize` from the `Viewport`, since they were (AFAIK) only used for reflection, which now is done without serde. So this is technically a breaking change for people who relied on that impl directly.

Personally I don't think that every bevy type should implement `Serialize, Deserialize`, as that would lead to a ton of code generation that mostly isn't necessary because we can do the same with `Reflect`, but if this is deemed controversial I can remove it from this PR.

## Migration Guide

- `KeyCode` now implements `Reflect` not as `reflect_value`, but with proper struct reflection. The `Serialize` and `Deserialize` impls were removed, now that they are no longer required for scene serialization.

# Objective

Remove unused `enum DepthCalculation` and its usages. This was used to compute visible entities in the [old renderer](db665b96c0/crates/bevy_render/src/camera/visible_entities.rs), but is now unused.

## Solution

`sed 's/DepthCalculation//g'`

---

## Changelog

### Changed

Removed `bevy_render::camera::DepthCalculation`.

## Migration Guide

Remove references to `bevy_render::camera::DepthCalculation`, such as `use bevy_render::camera::DepthCalculation`. Remove `depth_calculation` fields from Projections.

*This PR description is an edited copy of #5007, written by @alice-i-cecile.*

# Objective

Follow-up to https://github.com/bevyengine/bevy/pull/2254. The `Resource` trait currently has a blanket implementation for all types that meet its bounds.

While ergonomic, this results in several drawbacks:

* it is possible to make confusing, silent mistakes such as inserting a function pointer (Foo) rather than a value (Foo::Bar) as a resource

* it is challenging to discover if a type is intended to be used as a resource

* we cannot later add customization options (see the [RFC](https://github.com/bevyengine/rfcs/blob/main/rfcs/27-derive-component.md) for the equivalent choice for Component).

* dependencies can use the same Rust type as a resource in invisibly conflicting ways

* raw Rust types used as resources cannot preserve privacy appropriately, as anyone able to access that type can read and write to internal values

* we cannot capture a definitive list of possible resources to display to users in an editor

## Notes to reviewers

* Review this commit-by-commit; there's effectively no back-tracking and there's a lot of churn in some of these commits.

*ira: My commits are not as well organized :')*

* I've relaxed the bound on Local to Send + Sync + 'static: I don't think these concerns apply there, so this can keep things simple. Storing e.g. a u32 in a Local is fine, because there's a variable name attached explaining what it does.

* I think this is a bad place for the Resource trait to live, but I've left it in place to make reviewing easier. IMO that's best tackled with https://github.com/bevyengine/bevy/issues/4981.

## Changelog

`Resource` is no longer automatically implemented for all matching types. Instead, use the new `#[derive(Resource)]` macro.

## Migration Guide

Add `#[derive(Resource)]` to all types you are using as a resource.

If you are using a third party type as a resource, wrap it in a tuple struct to bypass orphan rules. Consider deriving `Deref` and `DerefMut` to improve ergonomics.
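The wrapping pattern looks roughly like the sketch below. To keep it compilable without Bevy, it declares a stand-in `Resource` trait and writes the `Deref` / `DerefMut` impls by hand; in a Bevy project you would put the derives on the tuple struct instead. `Scores` and `insert_resource` are hypothetical names, and `HashMap` merely plays the role of the third-party type.

```rust
use std::collections::HashMap;
use std::ops::{Deref, DerefMut};

/// Stand-in for Bevy's `Resource` trait so the sketch compiles on its own.
trait Resource: Send + Sync + 'static {}

/// `HashMap` plays the role of a type from another crate. The tuple
/// struct is a local type, so the trait can be implemented for it.
struct Scores(HashMap<String, u32>);

impl Resource for Scores {}

impl Deref for Scores {
    type Target = HashMap<String, u32>;

    fn deref(&self) -> &Self::Target {
        &self.0
    }
}

impl DerefMut for Scores {
    fn deref_mut(&mut self) -> &mut Self::Target {
        &mut self.0
    }
}

/// Only accepts types that explicitly opted in to being a resource.
fn insert_resource<R: Resource>(resource: R) -> R {
    resource
}
```

With `Deref` and `DerefMut` in place, the wrapper can be used almost as if it were the inner type, which is the ergonomic gain the guide refers to.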

`ClearColor` no longer implements `Component`. Using `ClearColor` as a component in 0.8 did nothing.

Use the `ClearColorConfig` in the `Camera3d` and `Camera2d` components instead.

Co-authored-by: Alice <alice.i.cecile@gmail.com>

Co-authored-by: Alice Cecile <alice.i.cecile@gmail.com>

Co-authored-by: devil-ira <justthecooldude@gmail.com>

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

> In draft until #4761 is merged. See the relevant commits [here](a85fe94a18).

---

# Objective

Update enums across Bevy to use the new enum reflection and get rid of `#[reflect_value(...)]` usages.

## Solution

Find and replace all[^1] instances of `#[reflect_value(...)]` on enum types.

---

## Changelog

- Updated all[^1] reflected enums to implement `Enum` (i.e. they are no longer `ReflectRef::Value`)

## Migration Guide

Bevy-defined enums have been updated to implement `Enum` and are not considered value types (`ReflectRef::Value`) anymore. This means that their serialized representations will need to be updated. For example, given the Bevy enum:

```rust
pub enum ScalingMode {
    None,
    WindowSize,
    Auto { min_width: f32, min_height: f32 },
    FixedVertical(f32),
    FixedHorizontal(f32),
}
```

You will need to update the serialized versions accordingly.

```js
// OLD FORMAT
{
  "type": "bevy_render::camera::projection::ScalingMode",
  "value": FixedHorizontal(720),
},
// NEW FORMAT
{
  "type": "bevy_render::camera::projection::ScalingMode",
  "enum": {
    "variant": "FixedHorizontal",
    "tuple": [
      {
        "type": "f32",
        "value": 720,
      },
    ],
  },
},
```

This may also have other smaller implications (such as `Debug` representation), but serialization is probably the most prominent.

[^1]: All enums except `HandleId` as neither `Uuid` nor `AssetPathId` implement the reflection traits

# Objective

- Added a bunch of backticks to things that should have them, like equations and abstract variable names.

- Changed all small x, y, and z to capitals X, Y, Z.

This might be more annoying than helpful; Feel free to refuse this PR.

Remove unnecessary calls to `iter()`/`iter_mut()`.

Mainly updates the use of queries in our code, docs, and examples.

```rust
// From
for _ in list.iter() {
for _ in list.iter_mut() {

// To
for _ in &list {
for _ in &mut list {
```

We already enable the pedantic lint [clippy::explicit_iter_loop](https://rust-lang.github.io/rust-clippy/stable/) inside of Bevy. However, this only warns for a few known types from the standard library.

## Note for reviewers

As you can see the additions and deletions are exactly equal.

Maybe give it a quick skim to check I didn't sneak in a crypto miner, but you don't have to torture yourself by reading every line.

I already experienced enough pain making this PR :)

Co-authored-by: devil-ira <justthecooldude@gmail.com>

# Objective

- Currently, the `Extract` `RenderStage` is executed on the main world, with the render world available as a resource.

- However, when needing access to resources in the render world (e.g. to mutate them), the only way to do so was to get exclusive access to the whole `RenderWorld` resource.

- This meant that effectively only one extract which wrote to resources could run at a time.

- Previously, nothing steered `Extract` systems away from writing to the world, even though we want to discourage that.

## Solution

- Move the extract stage to run on the render world.

- Add the main world as a `MainWorld` resource.

- Add an `Extract` `SystemParam` as a convenience to access a (read only) `SystemParam` in the main world during `Extract`.

## Future work

It should be possible to avoid needing to use `get_or_spawn` for the render commands, since now the `Commands`' `Entities` matches up with the world being executed on.

We need to determine how this interacts with https://github.com/bevyengine/bevy/pull/3519

It's theoretically possible to remove the need for the `value` method on `Extract`. However, that requires slightly changing the `SystemParam` interface, which would make it more complicated. That would probably mess up the `SystemState` api too.

## Todo

I still need to add doc comments to `Extract`.

---

## Changelog

### Changed

- The `Extract` `RenderStage` now runs on the render world (instead of the main world as before).

You must use the `Extract` `SystemParam` to access the main world during the extract phase.

Resources on the render world can now be accessed using `ResMut` during extract.

### Removed

- `Commands::spawn_and_forget`. Use `Commands::get_or_spawn(e).insert_bundle(bundle)` instead

## Migration Guide

The `Extract` `RenderStage` now runs on the render world (instead of the main world as before).

You must use the `Extract` `SystemParam` to access the main world during the extract phase. `Extract` takes a single type parameter, which is any system parameter (such as `Res`, `Query` etc.). It will extract this from the main world, and returns the result of this extraction when `value` is called on it.

For example, if previously your extract system looked like:

```rust
fn extract_clouds(mut commands: Commands, clouds: Query<Entity, With<Cloud>>) {
    for cloud in clouds.iter() {
        commands.get_or_spawn(cloud).insert(Cloud);
    }
}
```

the new version would be:

```rust
fn extract_clouds(mut commands: Commands, mut clouds: Extract<Query<Entity, With<Cloud>>>) {
    for cloud in clouds.value().iter() {
        commands.get_or_spawn(cloud).insert(Cloud);
    }
}
```

The diff is:

```diff
--- a/src/clouds.rs
+++ b/src/clouds.rs
@@ -1,5 +1,5 @@
-fn extract_clouds(mut commands: Commands, clouds: Query<Entity, With<Cloud>>) {
-    for cloud in clouds.iter() {
+fn extract_clouds(mut commands: Commands, mut clouds: Extract<Query<Entity, With<Cloud>>>) {
+    for cloud in clouds.value().iter() {
         commands.get_or_spawn(cloud).insert(Cloud);
     }
 }
```

You can now also access resources from the render world using the normal system parameters during `Extract`:

```rust
fn extract_assets(mut render_assets: ResMut<MyAssets>, source_assets: Extract<Res<MyAssets>>) {
    *render_assets = source_assets.clone();
}
```

Please note that all existing extract systems need to be updated to match this new style; even if they currently compile they will not run as expected. A warning will be emitted on a best-effort basis if this is not met.

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

Reduce the boilerplate code needed to make draw order sorting work correctly when queuing items through new common functionality. Also fix several instances in the bevy code-base (mostly examples) where this boilerplate appears to be incorrect.

## Solution

- Moved the logic for handling back-to-front vs front-to-back draw ordering into the PhaseItems by inverting the sort key ordering of Opaque3d and AlphaMask3d. This means that all the standard 3d rendering phases measure distance in the same way. Clients of these structs no longer need to know to negate the distance.

- Added a new utility struct, ViewRangefinder3d, which encapsulates the maths needed to calculate a "distance" from an ExtractedView and a mesh's transform matrix.

- Converted all the occurrences of the distance calculations in Bevy and its examples to use ViewRangefinder3d. Several of these occurrences appear to be buggy because they don't invert the view matrix or don't negate the distance where appropriate. This leads me to the view that Bevy should expose a facility to correctly perform this calculation.
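The kind of calculation being encapsulated can be sketched as follows. This is an assumption-laden illustration, not the actual `ViewRangefinder3d` code: `Rangefinder`, its `z_row` field, and the row-major `Mat4` alias are hypothetical, and the sketch only shows the general idea of taking the view-space Z of a mesh's translation using the inverted (world-to-view) camera matrix.

```rust
/// Row-major 4x4 matrix, used here instead of a math library type.
type Mat4 = [[f32; 4]; 4];

/// Hypothetical stand-in for `ViewRangefinder3d`: it keeps only the row of
/// the world-to-view matrix that produces the view-space Z coordinate.
struct Rangefinder {
    z_row: [f32; 4],
}

impl Rangefinder {
    /// `world_to_view` is the inverse of the camera's transform matrix.
    fn from_world_to_view(world_to_view: &Mat4) -> Self {
        Self { z_row: world_to_view[2] }
    }

    /// View-space Z of a mesh whose model matrix has the given translation.
    fn distance(&self, translation: [f32; 3]) -> f32 {
        let [x, y, z] = translation;
        self.z_row[0] * x + self.z_row[1] * y + self.z_row[2] * z + self.z_row[3]
    }
}
```

Keeping this in one helper means callers cannot forget to invert the view matrix, which is the class of bug described above.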

## Migration Guide

Code which creates Opaque3d, AlphaMask3d, or Transparent3d phase items _should_ use ViewRangefinder3d to calculate the distance value.

Code which manually calculated the distance for Opaque3d or AlphaMask3d phase items and correctly negated the z value will no longer depth sort correctly. However, incorrect depth sorting for these types will not impact the rendered output as sorting is only a performance optimisation when drawing with depth-testing enabled. Code which manually calculated the distance for Transparent3d phase items will continue to work as before.

# Objective

We don't have reflection for resources.

## Solution

Introduce reflection for resources.

Continues #3580 (by @Davier), related to #3576.

---

## Changelog

### Added

* Reflection on a resource type (by adding `ReflectResource`):

```rust
#[derive(Reflect)]
#[reflect(Resource)]
struct MyResource;
```

### Changed

* Rename `ReflectComponent::add_component` into `ReflectComponent::insert_component` for consistency.

## Migration Guide

* Rename `ReflectComponent::add_component` into `ReflectComponent::insert_component`.

# Objective

Fixes #5153

## Solution

Search for all enums and manually check if they have default impls that can use this new derive.

By my reckoning:

| enum | num |
|-|-|
| total | 159 |
| has default impl | 29 |
| default is unit variant | 23 |

# Objective

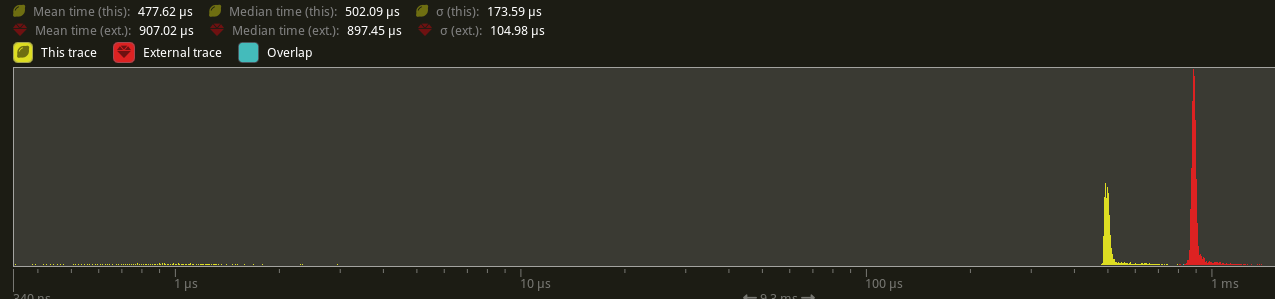

Partially addresses #4291.

Speed up the sort phase for unbatched render phases.

## Solution

Split out one of the optimizations in #4899 and allow implementors of `PhaseItem` to change what kind of sort is used when sorting the items in the phase. This currently includes Stable, Unstable, and Unsorted. Each of these corresponds to `Vec::sort_by_key`, `Vec::sort_unstable_by_key`, and no sorting at all. The default is `Unstable`. The last one can be used as a default if users introduce a preliminary depth prepass.

## Performance

This will not impact the performance of any batched phases, as it is still using a stable sort. 2D's only phase is unchanged. All 3D phases are unbatched currently, and will benefit from this change.

On `many_cubes`, where the primary phase is opaque, this change sees a speed up from 907.02us -> 477.62us, a 47.35% reduction.

## Future Work

There were prior discussions to add support for faster radix sorts in #4291, which in theory should run in `O(n)` instead of `O(n log(n))` time. [`voracious`](https://crates.io/crates/voracious_radix_sort) has been proposed, but it seems to be optimized for use cases with more than 30,000 items, which may be atypical for most systems.

Another optimization included in #4899 is to reduce the size of a few of the IDs commonly used in `PhaseItem` implementations to shrink the types to make swapping/sorting faster. Both `CachedPipelineId` and `DrawFunctionId` could be reduced to `u32` instead of `usize`.

Ideally, this should automatically change to use stable sorts when `BatchedPhaseItem` is implemented on the same phase item type, but this requires specialization, which may not land in stable Rust for a short while.

---

## Changelog

Added: `PhaseItem::sort`

## Migration Guide

RenderPhases now default to an unstable sort (via `slice::sort_unstable_by_key`). This can typically improve sort phase performance, but may produce incorrect batching results when implementing `BatchedPhaseItem`. To revert to the older stable sort, manually implement `PhaseItem::sort` to implement a stable sort (i.e. via `slice::sort_by_key`).
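A minimal sketch of that override, assuming a heavily simplified `PhaseItem` trait (the real trait has more required items, and `BatchedItem` is a hypothetical type):

```rust
/// Simplified stand-in for the `PhaseItem` trait; the real trait has more
/// required items.
trait PhaseItem: Sized {
    type SortKey: Ord;

    fn sort_key(&self) -> Self::SortKey;

    /// Default: unstable sort, which may reorder items with equal keys.
    fn sort(items: &mut [Self]) {
        items.sort_unstable_by_key(|item| item.sort_key());
    }
}

#[derive(Debug, Clone, Copy, PartialEq)]
struct BatchedItem {
    key: u32,
    id: u32,
}

impl PhaseItem for BatchedItem {
    type SortKey = u32;

    fn sort_key(&self) -> u32 {
        self.key
    }

    /// Opt back in to a stable sort so equal-key items keep their order.
    fn sort(items: &mut [Self]) {
        items.sort_by_key(|item| item.sort_key());
    }
}
```

The stable variant guarantees that items with equal sort keys stay in their original relative order, which is what batching relies on.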

Co-authored-by: Federico Rinaldi <gisquerin@gmail.com>

Co-authored-by: Robert Swain <robert.swain@gmail.com>

Co-authored-by: colepoirier <colepoirier@gmail.com>

builds on top of #4780

# Objective

`Reflect` and `Serialize` are currently very tied together because `Reflect` has a `fn serialize(&self) -> Option<Serializable<'_>>` method. Because of that, we can either implement `Reflect` for types like `Option<T>` with `T: Serialize` and have `fn serialize` be implemented, or without the bound but having `fn serialize` return `None`.

By separating `ReflectSerialize` into a separate type (like how it already is for `ReflectDeserialize`, `ReflectDefault`), we could separately `.register::<Option<T>>()` and `.register_data::<Option<T>, ReflectSerialize>()` only if the type `T: Serialize`.

This PR does not change the registration but allows it to be changed in a future PR.

## Solution

- add the type

```rust
struct ReflectSerialize { .. }

impl<T: Reflect + Serialize> FromType<T> for ReflectSerialize { .. }
```

- remove `#[reflect(Serialize)]` special casing.

- when serializing reflect value types, look for `ReflectSerialize` in the `TypeRegistry` instead of calling `value.serialize()`
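The lookup-in-registry idea can be modeled with the standard library alone. The sketch below is not `bevy_reflect`: `TypeRegistry`, `register_data`, and `serialize_as` are simplified or hypothetical, `std::any::Any` stands in for `Reflect`, and `ToString` stands in for `serde::Serialize`. It only shows how serialization becomes opt-in per registered type rather than a method on every reflected value.

```rust
use std::any::{Any, TypeId};
use std::collections::HashMap;

/// Stand-in for type data: a function that serializes a type-erased value.
/// `ToString` substitutes for `serde::Serialize` to keep this std-only.
struct ReflectSerialize {
    serialize: fn(&dyn Any) -> Option<String>,
}

trait FromType<T> {
    fn from_type() -> Self;
}

fn serialize_as<T: ToString + 'static>(value: &dyn Any) -> Option<String> {
    value.downcast_ref::<T>().map(|v| v.to_string())
}

impl<T: ToString + 'static> FromType<T> for ReflectSerialize {
    fn from_type() -> Self {
        ReflectSerialize { serialize: serialize_as::<T> }
    }
}

#[derive(Default)]
struct TypeRegistry {
    serializers: HashMap<TypeId, ReflectSerialize>,
}

impl TypeRegistry {
    /// Only compiles for types that can actually produce the type data.
    fn register_data<T: 'static>(&mut self)
    where
        ReflectSerialize: FromType<T>,
    {
        let data = <ReflectSerialize as FromType<T>>::from_type();
        self.serializers.insert(TypeId::of::<T>(), data);
    }

    /// Look the serializer up in the registry instead of asking the value.
    fn serialize(&self, value: &dyn Any) -> Option<String> {
        let data = self.serializers.get(&value.type_id())?;
        (data.serialize)(value)
    }
}
```

A type that was never registered simply yields `None` here, mirroring the case where no `ReflectSerialize` is found in the registry.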

# Objective

Fix #4958

There were 4 issues:

- this is not true in WASM and on macOS: f28b921209/examples/3d/split_screen.rs (L90)

- ~~I made sure the system was running at least once~~

- I'm sending the event on window creation

- in webgl, setting a viewport has impacts on other render passes

- only in webgl and when there is a custom viewport, I added a render pass without a custom viewport

- shaderdef NO_ARRAY_TEXTURES_SUPPORT was not used by the 2d pipeline

- webgl feature was used but not declared in bevy_sprite, I added it to the Cargo.toml

- shaderdef NO_STORAGE_BUFFERS_SUPPORT was not used by the 2d pipeline

- I added it based on the BufferBindingType

The last commit changes the two last fixes to add the shaderdefs in the shader cache directly instead of needing to do it in each pipeline

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

Users should be able to configure depth load operations on cameras. Currently every camera clears depth when it is rendered. But sometimes later passes need to rely on depth from previous passes.

## Solution

This adds the `Camera3d::depth_load_op` field with a new `Camera3dDepthLoadOp` value. This is a custom type because Camera3d uses "reverse-z depth" and this helps us record and document that in a discoverable way. It also gives us more control over reflection + other trait impls, whereas `LoadOp` is owned by the `wgpu` crate.

```rust
commands.spawn_bundle(Camera3dBundle {
    camera_3d: Camera3d {
        depth_load_op: Camera3dDepthLoadOp::Load,
        ..default()
    },
    ..default()
});
```
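
As a rough illustration of why a dedicated type helps, here is a std-only sketch of how such a camera-level setting could map onto a lower-level load operation. The `LoadOp` enum below is a simplified stand-in for the `wgpu` type, and the variant shapes are assumptions, not the exact definitions in this PR.

```rust
/// Simplified stand-in for the `wgpu` load operation on a depth attachment.
#[derive(Debug, Clone, Copy, PartialEq)]
enum LoadOp {
    Clear(f32),
    Load,
}

/// Sketch of a camera-level depth load setting (variant shapes are assumed).
#[derive(Debug, Clone, Copy, PartialEq)]
enum Camera3dDepthLoadOp {
    /// Clear depth to the given value. With reverse-z, 0.0 is the far plane.
    Clear(f32),
    /// Keep the depth written by earlier passes.
    Load,
}

impl Default for Camera3dDepthLoadOp {
    fn default() -> Self {
        // Reverse-z: clearing to 0.0 means "nothing has been drawn yet".
        Camera3dDepthLoadOp::Clear(0.0)
    }
}

impl From<Camera3dDepthLoadOp> for LoadOp {
    fn from(op: Camera3dDepthLoadOp) -> Self {
        match op {
            Camera3dDepthLoadOp::Clear(value) => LoadOp::Clear(value),
            Camera3dDepthLoadOp::Load => LoadOp::Load,
        }
    }
}
```

Owning the type means the reverse-z default can be documented on the variant itself, and trait impls can be added without touching the foreign type.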

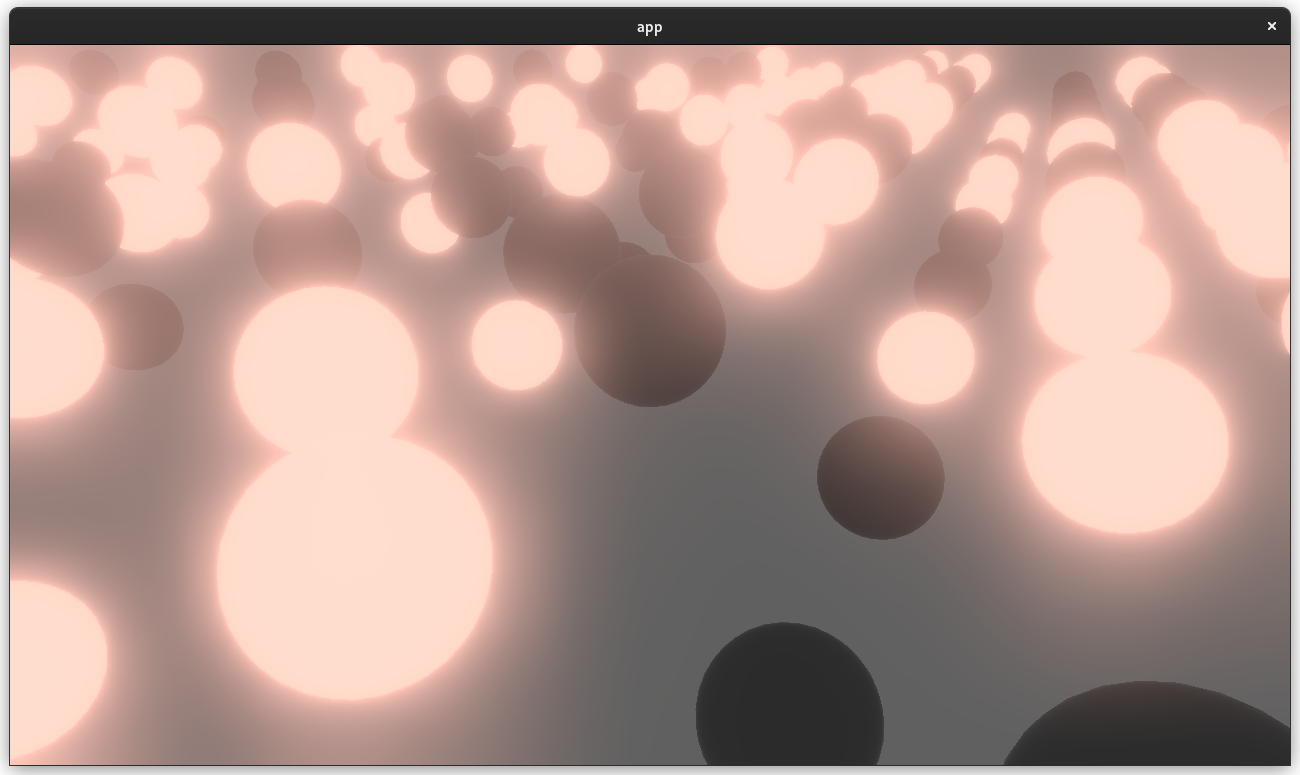

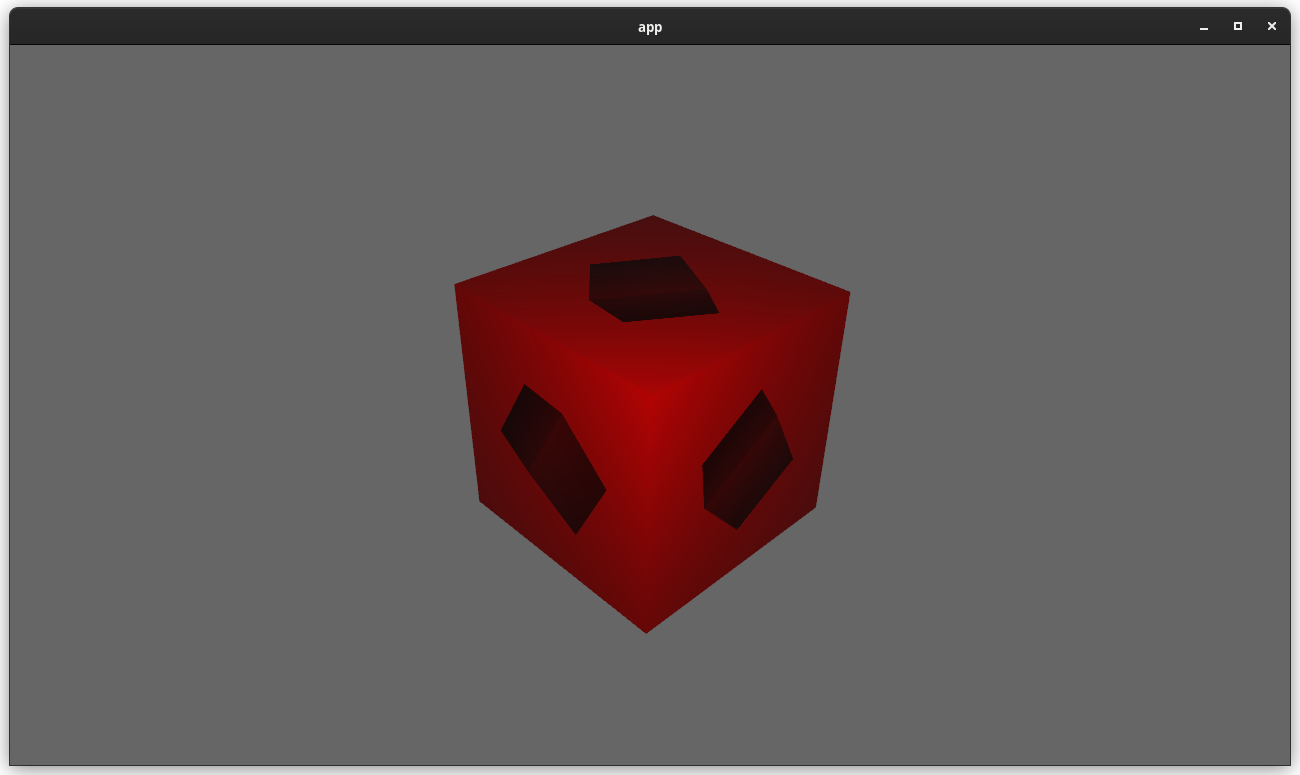

### two_passes example with the "second pass" camera configured to the default (clear depth to 0.0)

### two_passes example with the "second pass" camera configured to "load" the depth

---

## Changelog

### Added

* `Camera3d` now has a `depth_load_op` field, which can configure the Camera's main 3d pass depth loading behavior.

# Objective

Users should be able to render cameras to specific areas of a render target, which enables scenarios like split screen, minimaps, etc.

Builds on the new Camera Driven Rendering added here: #4745

Fixes: #202

Alternative to #1389 and #3626 (which are incompatible with the new Camera Driven Rendering)

## Solution

Cameras can now configure an optional "viewport", which defines a rectangle within their render target to draw to. If a `Viewport` is defined, the camera's `CameraProjection`, `View`, and visibility calculations will use the viewport configuration instead of the full render target.

```rust
// This camera will render to the first half of the primary window (on the left side).
commands.spawn_bundle(Camera3dBundle {
    camera: Camera {
        viewport: Some(Viewport {
            physical_position: UVec2::new(0, 0),
            physical_size: UVec2::new(window.physical_width() / 2, window.physical_height()),
            depth: 0.0..1.0,
        }),
        ..default()
    },
    ..default()
});
```

To account for this, the `Camera` component has received a few adjustments:

* `Camera` now has some new getter functions:

  * `logical_viewport_size`, `physical_viewport_size`, `logical_target_size`, `physical_target_size`, `projection_matrix`

* All computed camera values are now private and live on the `ComputedCameraValues` field (logical/physical width/height, the projection matrix). They are now exposed on `Camera` via getters/setters. This wasn't _needed_ for viewports, but it was long overdue.
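
For a sense of the arithmetic behind a split screen setup, here is a std-only sketch that divides a render target into two side-by-side viewports. `UVec2` and `Viewport` are simplified local stand-ins mirroring the fields shown above, and `split_screen` and `aspect_ratio` are hypothetical helpers, not Bevy functions.

```rust
use std::ops::Range;

/// Local stand-in for a 2D unsigned integer vector.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
struct UVec2 {
    x: u32,
    y: u32,
}

/// Local stand-in mirroring the viewport fields shown above.
#[derive(Debug, Clone, PartialEq)]
struct Viewport {
    physical_position: UVec2,
    physical_size: UVec2,
    depth: Range<f32>,
}

/// Splits a render target of the given physical size into left and right halves.
/// When the width is odd, the right half receives the extra pixel column.
fn split_screen(width: u32, height: u32) -> (Viewport, Viewport) {
    let left_width = width / 2;
    let left = Viewport {
        physical_position: UVec2 { x: 0, y: 0 },
        physical_size: UVec2 { x: left_width, y: height },
        depth: 0.0..1.0,
    };
    let right = Viewport {
        physical_position: UVec2 { x: left_width, y: 0 },
        physical_size: UVec2 { x: width - left_width, y: height },
        depth: 0.0..1.0,
    };
    (left, right)
}

/// Aspect ratio a projection would use when it follows the viewport
/// rather than the full render target.
fn aspect_ratio(viewport: &Viewport) -> f32 {
    viewport.physical_size.x as f32 / viewport.physical_size.y as f32
}
```

The aspect ratio comes from the viewport size, not the window size, which is why the projection has to consult the viewport configuration when one is set.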

---

## Changelog

### Added

* `Camera` components now have a `viewport` field, which can be set to draw to a portion of a render target instead of the full target.

* `Camera` component has some new functions: `logical_viewport_size`, `physical_viewport_size`, `logical_target_size`, `physical_target_size`, and `projection_matrix`

* Added a new split_screen example illustrating how to render two cameras to the same scene

## Migration Guide

`Camera::projection_matrix` is no longer a public field. Use the new `Camera::projection_matrix()` method instead:

```rust
// Bevy 0.7
let projection = camera.projection_matrix;

// Bevy 0.8
let projection = camera.projection_matrix();
```

This adds "high level camera driven rendering" to Bevy. The goal is to give users more control over what gets rendered (and where) without needing to deal with render logic. This will make scenarios like "render to texture", "multiple windows", "split screen", "2d on 3d", "3d on 2d", "pass layering", and more significantly easier.

Here is an [example of a 2d render sandwiched between two 3d renders (each from a different perspective)](https://gist.github.com/cart/4fe56874b2e53bc5594a182fc76f4915).

Users can now spawn a camera, point it at a RenderTarget (a texture or a window), and it will "just work".

Rendering to a second window is as simple as spawning a second camera and assigning it to a specific window id:

```rust
// main camera (main window)
commands.spawn_bundle(Camera2dBundle::default());

// second camera (other window)
commands.spawn_bundle(Camera2dBundle {
    camera: Camera {
        target: RenderTarget::Window(window_id),
        ..default()
    },
    ..default()
});
```

Rendering to a texture is as simple as pointing the camera at a texture:

```rust
commands.spawn_bundle(Camera2dBundle {
    camera: Camera {
        target: RenderTarget::Texture(image_handle),
        ..default()
    },
    ..default()
});
```

Cameras now have a "render priority", which controls the order they are drawn in. If you want to use a camera's output texture as a texture in the main pass, just set the priority to a number lower than the main pass camera (which defaults to `0`).

```rust
// main pass camera with a default priority of 0
commands.spawn_bundle(Camera2dBundle::default());

commands.spawn_bundle(Camera2dBundle {
    camera: Camera {
        target: RenderTarget::Texture(image_handle.clone()),
        priority: -1,
        ..default()
    },
    ..default()
});

commands.spawn_bundle(SpriteBundle {
    texture: image_handle,
    ..default()
});
```

Priority can also be used to layer cameras on top of each other for the same RenderTarget. This is what "2d on top of 3d" looks like in the new system:

```rust
commands.spawn_bundle(Camera3dBundle::default());

commands.spawn_bundle(Camera2dBundle {
    camera: Camera {
        // this will render 2d entities "on top" of the default 3d camera's render
        priority: 1,
        ..default()
    },
    ..default()
});
```

There is no longer the concept of a global "active camera". Resources like `ActiveCamera<Camera2d>` and `ActiveCamera<Camera3d>` have been replaced with the camera-specific `Camera::is_active` field. This does put the onus on users to manage which cameras should be active.

Cameras are now assigned a single render graph as an "entry point", which is configured on each camera entity using the new `CameraRenderGraph` component. The old `PerspectiveCameraBundle` and `OrthographicCameraBundle` (generic on camera marker components like Camera2d and Camera3d) have been replaced by `Camera3dBundle` and `Camera2dBundle`, which set 3d and 2d default values for the `CameraRenderGraph` and projections.

```rust
// old 3d perspective camera
commands.spawn_bundle(PerspectiveCameraBundle::default());

// new 3d perspective camera
commands.spawn_bundle(Camera3dBundle::default());
```

```rust
// old 2d orthographic camera
commands.spawn_bundle(OrthographicCameraBundle::new_2d());

// new 2d orthographic camera
commands.spawn_bundle(Camera2dBundle::default());
```

```rust
// old 3d orthographic camera
commands.spawn_bundle(OrthographicCameraBundle::new_3d());

// new 3d orthographic camera
commands.spawn_bundle(Camera3dBundle {
    projection: OrthographicProjection {
        scale: 3.0,
        scaling_mode: ScalingMode::FixedVertical,
        ..default()
    }.into(),
    ..default()
});
```

Note that `Camera3dBundle` now uses a new `Projection` enum instead of hard coding the projection into the type. There are a number of motivators for this change: the render graph is now a part of the bundle, the way "generic bundles" work in the rust type system prevents nice `..default()` syntax, and changing projections at runtime is much easier with an enum (e.g. for editor scenarios). I'm open to discussing this choice, but I'm relatively certain we will all come to the same conclusion here. Camera2dBundle and Camera3dBundle are much clearer than being generic on marker components / using non-default constructors.

If you want to run a custom render graph on a camera, just set the `CameraRenderGraph` component:

```rust
commands.spawn_bundle(Camera3dBundle {
    camera_render_graph: CameraRenderGraph::new(some_render_graph_name),
    ..default()
});
```

Just note that if the graph requires data from specific components to work (such as `Camera3d` config, which is provided in the `Camera3dBundle`), make sure the relevant components have been added.

Speaking of using components to configure graphs / passes, there are a number of new configuration options:

```rust
commands.spawn_bundle(Camera3dBundle {
    camera_3d: Camera3d {
        // overrides the default global clear color
        clear_color: ClearColorConfig::Custom(Color::RED),
        ..default()
    },
    ..default()
});

commands.spawn_bundle(Camera3dBundle {
    camera_3d: Camera3d {
        // disables clearing
        clear_color: ClearColorConfig::None,
        ..default()
    },
    ..default()
});
```

Expect to see more of the "graph configuration Components on Cameras" pattern in the future.

By popular demand, UI no longer requires a dedicated camera. `UiCameraBundle` has been removed. `Camera2dBundle` and `Camera3dBundle` now both default to rendering UI as part of their own render graphs. To disable UI rendering for a camera, disable it using the CameraUi component:

```rust
commands
    .spawn_bundle(Camera3dBundle::default())
    .insert(CameraUi {
        is_enabled: false,
        ..default()
    });
```

## Other Changes

* The separate clear pass has been removed. We should revisit this for things like sky rendering, but I think this PR should "keep it simple" until we're ready to properly support that (for code complexity and performance reasons). We can come up with the right design for a modular clear pass in a followup pr.

* I reorganized bevy_core_pipeline into Core2dPlugin and Core3dPlugin (and core_2d / core_3d modules). Everything is pretty much the same as before, just logically separate. I've moved relevant types (like Camera2d, Camera3d, Camera3dBundle, Camera2dBundle) into their relevant modules, which is what motivated this reorganization.

* I adapted the `scene_viewer` example (which relied on the ActiveCameras behavior) to the new system. I also refactored bits and pieces to be a bit simpler.

* All of the examples have been ported to the new camera approach. `render_to_texture` and `multiple_windows` are now _much_ simpler. I removed `two_passes` because it is less relevant with the new approach. If someone wants to add a new "layered custom pass with CameraRenderGraph" example, that might fill a similar niche. But I don't feel much pressure to add that in this pr.

* Cameras now have `target_logical_size` and `target_physical_size` fields, which makes finding the size of a camera's render target _much_ simpler. As a result, the `Assets<Image>` and `Windows` parameters were removed from `Camera::world_to_screen`, making that operation much more ergonomic.

* Render order ambiguities between cameras with the same target and the same priority now produce a warning. This accomplishes two goals:

1. Now that there is no "global" active camera, by default spawning two cameras will result in two renders (one covering the other). This would be a silent performance killer that would be hard to detect after the fact. By detecting ambiguities, we can provide a helpful warning when this occurs.

2. Render order ambiguities could result in unexpected / unpredictable render results. Resolving them makes sense.
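
The ordering and ambiguity rules can be sketched in plain Rust. This is an illustration under stated assumptions, not the PR's implementation: the `Camera` struct is reduced to the fields that matter here, targets are identified by plain integers, and `render_order` and `ambiguities` are hypothetical helper names.

```rust
use std::collections::HashMap;

/// Simplified render target identified by an integer id.
#[derive(Debug, Clone, PartialEq, Eq, Hash)]
enum RenderTarget {
    Window(u32),
    Texture(u32),
}

/// Reduced camera: only the fields relevant to ordering.
#[derive(Debug, Clone)]
struct Camera {
    name: &'static str,
    target: RenderTarget,
    priority: isize,
    is_active: bool,
}

/// Returns the names of active cameras in draw order (lowest priority first).
/// The sort is stable, so equal priorities keep their input order here;
/// a real renderer gives no such guarantee, hence the warning.
fn render_order(cameras: &[Camera]) -> Vec<&'static str> {
    let mut active: Vec<&Camera> = cameras.iter().filter(|c| c.is_active).collect();
    active.sort_by_key(|c| c.priority);
    active.iter().map(|c| c.name).collect()
}

/// Returns every (target, priority) pair shared by more than one active camera.
fn ambiguities(cameras: &[Camera]) -> Vec<(RenderTarget, isize)> {
    let mut counts: HashMap<(RenderTarget, isize), usize> = HashMap::new();
    for camera in cameras.iter().filter(|c| c.is_active) {
        *counts
            .entry((camera.target.clone(), camera.priority))
            .or_insert(0) += 1;
    }
    let mut result: Vec<(RenderTarget, isize)> = counts
        .into_iter()
        .filter(|(_, count)| *count > 1)
        .map(|(key, _)| key)
        .collect();
    result.sort_by_key(|(_, priority)| *priority);
    result
}
```

Two active cameras on the same window with the same priority show up in `ambiguities`, which is the situation the warning is meant to surface. Inactive cameras are ignored in both functions.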

## Follow Up Work

* Per-Camera viewports, which will make it possible to render to a smaller area inside of a RenderTarget (great for something like splitscreen)

* Camera-specific MSAA config (should use the same "overriding" pattern used for ClearColor)

* Graph Based Camera Ordering: priorities are simple, but they make complicated ordering constraints harder to express. We should consider adopting a "graph based" camera ordering model with "before" and "after" relationships to other cameras (or build it "on top" of the priority system).

* Consider allowing graphs to run subgraphs from any nest level (aka a global namespace for graphs). Right now the 2d and 3d graphs each need their own UI subgraph, which feels "fine" in the short term. But being able to share subgraphs between other subgraphs seems valuable.

* Consider splitting `bevy_core_pipeline` into `bevy_core_2d` and `bevy_core_3d` packages. There's a shared "clear color" dependency here, which would need a new home.

# Objective

- Add an `ExtractResourcePlugin` for convenience and consistency

## Solution

- Add an `ExtractResourcePlugin` similar to `ExtractComponentPlugin` but for ECS `Resource`s. The system that is executed simply clones the main world resource into a render world resource, if and only if the main world resource was either added or changed since the last execution of the system.

- Add an `ExtractResource` trait with a `fn extract_resource(res: &Self) -> Self` function. This is used by the `ExtractResourcePlugin` to extract the resource

- Add a derive macro for `ExtractResource` on a `Resource` with the `Clone` trait, that simply returns `res.clone()`

- Use `ExtractResourcePlugin` wherever both possible and appropriate
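
A std-only sketch of the extraction step may help. The trait signature follows the description above, but everything else is an assumption for illustration: `MainResource` with its boolean `changed` flag is a hypothetical simplification of ECS change detection, and `extract` stands in for the system the plugin would add.

```rust
/// Trait shape as described above.
trait ExtractResource: Sized {
    fn extract_resource(res: &Self) -> Self;
}

#[derive(Debug, Clone, PartialEq)]
struct ClearColor(f32, f32, f32);

// Roughly what a derive on a `Clone` resource would boil down to.
impl ExtractResource for ClearColor {
    fn extract_resource(res: &Self) -> Self {
        res.clone()
    }
}

/// Hypothetical main-world slot with a simplified change flag.
struct MainResource<R> {
    value: R,
    changed: bool,
}

/// Copies the resource into the render world only if it was added or changed
/// since the last run. Returns whether an extraction happened.
fn extract<R: ExtractResource>(main: &mut MainResource<R>, render: &mut Option<R>) -> bool {
    if !main.changed {
        return false;
    }
    *render = Some(R::extract_resource(&main.value));
    // Resetting the flag here models "since the last execution of the system".
    main.changed = false;
    true
}
```

Running `extract` twice in a row without touching the main-world value performs the clone only once.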

# Objective

- Creating and executing render passes has GPU overhead. If there are no phase items in the render phase to draw, then this overhead should not be incurred as it has no benefit.

## Solution

- Check whether there are any phase items to draw and, if there are none, neither construct nor execute the render pass
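
The early-out can be sketched as follows. `RenderPhase` and `Encoder` are hypothetical stand-ins that only count work, so the effect of the check is observable without a GPU.

```rust
/// Stand-in for a render phase: a list of items to draw.
struct RenderPhase {
    items: Vec<u32>,
}

/// Stand-in for a command encoder that counts the work it was asked to do.
#[derive(Default)]
struct Encoder {
    passes_begun: usize,
    draws: usize,
}

/// Runs a pass for the phase, skipping pass creation entirely when it is empty.
fn run_pass(phase: &RenderPhase, encoder: &mut Encoder) {
    if phase.items.is_empty() {
        // Nothing to draw: do not begin a render pass at all.
        return;
    }
    encoder.passes_begun += 1;
    encoder.draws += phase.items.len();
}
```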

---

## Changelog

- Changed: Do not create nor execute empty render passes

# Objective

Reduce the catch-all grab-bag of functionality in bevy_core by moving FloatOrd to bevy_utils.

A step in addressing #2931 and splitting bevy_core into more specific locations.

## Solution

Move FloatOrd into bevy_utils. Fix the compile errors.

As a result, bevy_core_pipeline, bevy_pbr, bevy_sprite, bevy_text, and bevy_ui no longer depend on bevy_core (they were only using it for `FloatOrd` previously).
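
For readers unfamiliar with the type, here is a minimal sketch of what a float wrapper with a total order looks like. It is built on `f32::total_cmp` from the standard library, which is an assumption made for brevity and not necessarily how Bevy's `FloatOrd` orders NaN or signed zeros. `sort_distances` is a hypothetical usage helper.

```rust
use std::cmp::Ordering;

/// Wrapper that gives `f32` a total order so it can be used as a sort key.
#[derive(Debug, Clone, Copy)]
struct FloatOrd(f32);

impl Ord for FloatOrd {
    fn cmp(&self, other: &Self) -> Ordering {
        // Assumption: use the IEEE 754 total order provided by std.
        self.0.total_cmp(&other.0)
    }
}

impl PartialOrd for FloatOrd {
    fn partial_cmp(&self, other: &Self) -> Option<Ordering> {
        Some(self.cmp(other))
    }
}

impl PartialEq for FloatOrd {
    fn eq(&self, other: &Self) -> bool {
        self.cmp(other) == Ordering::Equal
    }
}

impl Eq for FloatOrd {}

/// Sorts distances in ascending order, e.g. for ordering phase items.
fn sort_distances(distances: &mut [f32]) {
    distances.sort_by_key(|d| FloatOrd(*d));
}
```

Rendering crates need this kind of key to sort items by distance, which is why they previously pulled in `bevy_core` just for this one type.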

# Objective

Fixes https://github.com/bevyengine/bevy/issues/3499

## Solution

Uses a `HashMap` from `RenderTarget` to sampled textures when preparing `ViewTarget`s to ensure that two passes with the same render target get sampled to the same texture.
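
The deduplication idea can be sketched with the standard `HashMap` entry API. The types here are simplified stand-ins: targets and textures are identified by integers, and `TextureAllocator` and `prepare_view_targets` are hypothetical names used only for this illustration.

```rust
use std::collections::HashMap;

/// Simplified render target identified by an integer id.
#[derive(Debug, Clone, PartialEq, Eq, Hash)]
enum RenderTarget {
    Window(u32),
    Texture(u32),
}

/// Stand-in for a handle to a sampled (multisampled) texture.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
struct TextureId(usize);

/// Hands out fresh texture ids and counts how many were created.
#[derive(Default)]
struct TextureAllocator {
    next: usize,
}

impl TextureAllocator {
    fn create(&mut self) -> TextureId {
        let id = TextureId(self.next);
        self.next += 1;
        id
    }
}

/// Assigns a sampled texture to each view, reusing the texture when two views
/// share a render target.
fn prepare_view_targets(
    views: &[RenderTarget],
    allocator: &mut TextureAllocator,
) -> Vec<TextureId> {
    let mut sampled: HashMap<RenderTarget, TextureId> = HashMap::new();
    views
        .iter()
        .map(|target| {
            *sampled
                .entry(target.clone())
                .or_insert_with(|| allocator.create())
        })
        .collect()
}
```

Two views that point at the same window end up with the same `TextureId`, so only one texture is allocated for them.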

This builds on and depends on https://github.com/bevyengine/bevy/pull/3412, so this will be a draft PR until #3412 is merged. All changes for this PR are in the last commit.

# Objective

- Fixes #3970

- To support Bevy's shader abstraction (shader defs, shader imports and hot shader reloading) for compute shaders, I have followed cart's advice and changed the `PipelineCache` to accommodate both compute and render pipelines.

## Solution

- renamed `RenderPipelineCache` to `PipelineCache`

- Cached Pipelines are now represented by an enum (render, compute)

- split the `SpecializedPipelines` into `SpecializedRenderPipelines` and `SpecializedComputePipelines`

- updated the game of life example
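
A rough sketch of the enum-based cache follows. The pipeline structs are empty stand-ins for the GPU objects, and `queue` and `CachedPipelineId` are simplified assumptions. Only the two getter names come from this PR.

```rust
/// Stand-ins for the compiled GPU pipeline objects.
#[derive(Debug, Clone, PartialEq)]
struct RenderPipeline {
    label: String,
}

#[derive(Debug, Clone, PartialEq)]
struct ComputePipeline {
    label: String,
}

/// A cached pipeline is either a render or a compute pipeline.
enum Pipeline {
    Render(RenderPipeline),
    Compute(ComputePipeline),
}

/// Index into the cache (simplified).
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
struct CachedPipelineId(usize);

/// One cache for both kinds of pipeline.
#[derive(Default)]
struct PipelineCache {
    pipelines: Vec<Pipeline>,
}

impl PipelineCache {
    /// Stores a pipeline and returns its id.
    fn queue(&mut self, pipeline: Pipeline) -> CachedPipelineId {
        self.pipelines.push(pipeline);
        CachedPipelineId(self.pipelines.len() - 1)
    }

    /// Returns the pipeline only if the id refers to a render pipeline.
    fn get_render_pipeline(&self, id: CachedPipelineId) -> Option<&RenderPipeline> {
        match self.pipelines.get(id.0)? {
            Pipeline::Render(pipeline) => Some(pipeline),
            Pipeline::Compute(_) => None,
        }
    }

    /// Returns the pipeline only if the id refers to a compute pipeline.
    fn get_compute_pipeline(&self, id: CachedPipelineId) -> Option<&ComputePipeline> {
        match self.pipelines.get(id.0)? {
            Pipeline::Compute(pipeline) => Some(pipeline),
            Pipeline::Render(_) => None,
        }
    }
}
```

Keeping two typed getters means a caller never has to match on the enum, at the cost of some duplication.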

## Open Questions

- should `SpecializedRenderPipelines` and `SpecializedComputePipelines` be merged and how would we do that?

- should the `get_render_pipeline` and `get_compute_pipeline` methods be merged?

- is pipeline specialization for different entry points a good pattern?

Co-authored-by: Kurt Kühnert <51823519+Ku95@users.noreply.github.com>

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

# Objective

- Make visible how much time is spent building the Opaque3d, AlphaMask3d, and Transparent3d passes

## Solution

- Add a `trace` feature to `bevy_core_pipeline`

- Add tracy spans around the three passes

- I didn't do this for shadows, sprites, etc as they are only one pass in the node. Perhaps it should be split into 3 nodes to allow insertion of other nodes between...?

**Problem**

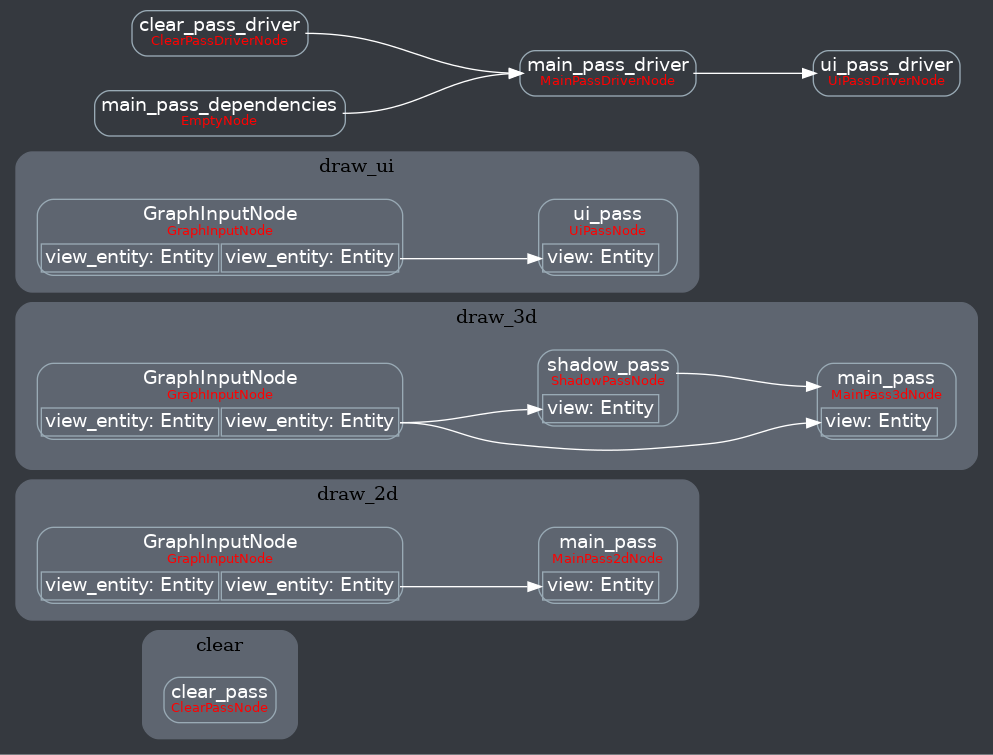

- whenever you want more than one of the builtin cameras (for example multiple windows, split screen, portals), you need to add a render graph node that executes the correct sub graph, extract the camera into the render world and add the correct `RenderPhase<T>` components

- querying for the 3d camera is annoying because you need to compare the camera's name to e.g. `CameraPlugin::CAMERA_3D`

**Solution**

- Introduce the marker types `Camera3d`, `Camera2d` and `CameraUi`

-> `Query<&mut Transform, With<Camera3d>>` works

- `PerspectiveCameraBundle::new_3d()` and `PerspectiveCameraBundle::<Camera3d>::default()` contain the `Camera3d` marker

- `OrthographicCameraBundle::new_3d()` has `Camera3d`, `OrthographicCameraBundle::new_2d()` has `Camera2d`

- remove `ActiveCameras`, `ExtractedCameraNames`

- run 2d, 3d and ui passes for every camera of their respective marker

-> no custom setup for multiple windows example needed

**Open questions**

- do we need a replacement for `ActiveCameras`? What about a component `ActiveCamera { is_active: bool }` similar to `Visibility`?

Co-authored-by: Carter Anderson <mcanders1@gmail.com>