# Objective

- Handle suspend / resume events on Android without exiting

## Solution

- On suspend: despawn the window, and set the control flow to wait for

events from the OS

- On resume: spawn a new window, and set the control flow to poll

In this video, you can see the Android example being suspended, stopping

receiving events, and working again after being resumed

https://github.com/bevyengine/bevy/assets/8672791/aaaf4b09-ee6a-4a0d-87ad-41f05def7945

Objective

---------

- Since #6742, It is not possible to build an `ArchetypeId` from a

`ArchetypeGeneration`

- This was useful to 3rd party crate extending the base bevy ECS

capabilities, such as [`bevy_ecs_dynamic`] and now

[`bevy_mod_dynamic_query`]

- Making `ArchetypeGeneration` opaque this way made it completely

useless, and removed the ability to limit archetype updates to a subset

of archetypes.

- Making the `index` method on `ArchetypeId` private prevented the use

of bitfields and other optimized data structure to store sets of

archetype ids. (without `transmute`)

This PR is not a simple reversal of the change. It exposes a different

API, rethought to keep the private stuff private and the public stuff

less error-prone.

- Add a `StartRange<ArchetypeGeneration>` `Index` implementation to

`Archetypes`

- Instead of converting the generation into an index, then creating a

ArchetypeId from that index, and indexing `Archetypes` with it, use

directly the old `ArchetypeGeneration` to get the range of new

archetypes.

From careful benchmarking, it seems to also be a performance improvement

(~0-5%) on add_archetypes.

---

Changelog

---------

- Added `impl Index<RangeFrom<ArchetypeGeneration>> for Archetypes` this

allows you to get a slice of newly added archetypes since the last

recorded generation.

- Added `ArchetypeId::index` and `ArchetypeId::new` methods. It should

enable 3rd party crates to use the `Archetypes` API in a meaningful way.

[`bevy_ecs_dynamic`]:

https://github.com/jakobhellermann/bevy_ecs_dynamic/tree/main

[`bevy_mod_dynamic_query`]:

https://github.com/nicopap/bevy_mod_dynamic_query/

---------

Co-authored-by: vero <email@atlasdostal.com>

# Objective

It is difficult to inspect the generated assembly of benchmark systems

using a tool such as `cargo-asm`

## Solution

Mark the related functions as `#[inline(never)]`. This way, you can pass

the module name as argument to `cargo-asm` to get the generated assembly

for the given function.

It may have as side effect to make benchmarks a bit more predictable and

useful too. As it prevents inlining where in bevy no inlining could

possibly take place.

### Measurements

Following the recommendations in

<https://easyperf.net/blog/2019/08/02/Perf-measurement-environment-on-Linux>,

I

1. Put my CPU in "AMD ECO" mode, which surprisingly is the equivalent of

disabling turboboost, giving more consistent performances

2. Disabled all hyperthreading cores using `echo 0 >

/sys/devices/system/cpu/cpu{11,12…}/online`

3. Set the scaling governor to `performance`

4. Manually disabled AMD boost with `echo 0 >

/sys/devices/system/cpu/cpufreq/boost`

5. Set the nice level of the criterion benchmark using `cargo bench … &

sudo renice -n -5 -p $! ; fg`

6. Not running any other program than the benchmarks (outside of system

daemons and the X11 server)

With this setup, running multiple times the same benchmarks on `main`

gives me a lot of "regression" and "improvement" messages, which is

absurd given that no code changed.

On this branch, there is still some spurious performance change

detection, but they are much less frequent.

This only accounts for `iter_simple` and `iter_frag` benchmarks of

course.

# Objective

We've done a lot of work to remove the pattern of a `&World` with

interior mutability (#6404, #8833). However, this pattern still persists

within `bevy_ecs` via the `unsafe_world` method.

## Solution

* Make `unsafe_world` private. Adjust any callsites to use

`UnsafeWorldCell` for interior mutability.

* Add `UnsafeWorldCell::removed_components`, since it is always safe to

access the removed components collection through `UnsafeWorldCell`.

## Future Work

Remove/hide `UnsafeWorldCell::world_metadata`, once we have provided

safe ways of accessing all world metadata.

---

## Changelog

+ Added `UnsafeWorldCell::removed_components`, which provides read-only

access to a world's collection of removed components.

# Objective

- Fixes#4610

## Solution

- Replaced all instances of `parking_lot` locks with equivalents from

`std::sync`. Acquiring locks within `std::sync` can fail, so

`.expect("Lock Poisoned")` statements were added where required.

## Comments

In [this

comment](https://github.com/bevyengine/bevy/issues/4610#issuecomment-1592407881),

the lack of deadlock detection was mentioned as a potential reason to

not make this change. From what I can gather, Bevy doesn't appear to be

using this functionality within the engine. Unless it was expected that

a Bevy consumer was expected to enable and use this functionality, it

appears to be a feature lost without consequence.

Unfortunately, `cpal` and `wgpu` both still rely on `parking_lot`,

leaving it in the dependency graph even after this change.

From my basic experimentation, this change doesn't appear to have any

performance impacts, positive or negative. I tested this using

`bevymark` with 50,000 entities and observed 20ms of frame-time before

and after the change. More extensive testing with larger/real projects

should probably be done.

# Objective

`Has<T>` was added to bevy_ecs, but we're still using the

`Option<With<T>>` pattern in multiple locations.

## Solution

Replace them with `Has<T>`.

# Objective

Add a new method so you can do `set_if_neq` with dereferencing

components: `as_deref_mut()`!

## Solution

Added an as_deref_mut method so that we can use `set_if_neq()` without

having to wrap up types for derefencable components

---------

Co-authored-by: Alice Cecile <alice.i.cecile@gmail.com>

Co-authored-by: Joseph <21144246+JoJoJet@users.noreply.github.com>

# Objective

- Fixes#9363

## Solution

Moved `fq_std` from `bevy_reflect_derive` to `bevy_macro_utils`. This

does make the `FQ*` types public where they were previously private,

which is a change to the public-facing API, but I don't believe a

breaking one. Additionally, I've done a basic QA pass over the

`bevy_macro_utils` crate, adding `deny(unsafe)`, `warn(missing_docs)`,

and documentation where required.

# Objective

Avert a panic when removing resources from Scenes.

### Reproduction Steps

```rust

let mut scene = Scene::new(World::default());

scene.world.init_resource::<Time>();

scene.world.remove_resource::<Time>();

scene.clone_with(&app.resource::<AppTypeRegistry>());

```

### Panic Message

```

thread 'Compute Task Pool (10)' panicked at 'Requested resource bevy_time::time::Time does not exist in the `World`.

Did you forget to add it using `app.insert_resource` / `app.init_resource`?

Resources are also implicitly added via `app.add_event`,

and can be added by plugins.', .../bevy/crates/bevy_ecs/src/reflect/resource.rs:203:52

```

## Solution

Check that the resource actually still exists before copying.

---

## Changelog

- resolved a panic caused by removing resources from scenes

# Objective

Finish documenting `bevy_scene`.

## Solution

Document the remaining items and add a crate-level `warn(missing_doc)`

attribute as for the other crates with completed documentation.

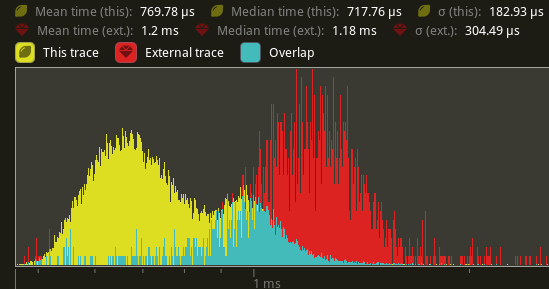

# Objective

`extract_meshes` can easily be one of the most expensive operations in

the blocking extract schedule for 3D apps. It also has no fundamentally

serialized parts and can easily be run across multiple threads. Let's

speed it up by parallelizing it!

## Solution

Use the `ThreadLocal<Cell<Vec<T>>>` approach utilized by #7348 in

conjunction with `Query::par_iter` to build a set of thread-local

queues, and collect them after going wide.

## Performance

Using `cargo run --profile stress-test --features trace_tracy --example

many_cubes`. Yellow is this PR. Red is main.

`extract_meshes`:

An average reduction from 1.2ms to 770us is seen, a 41.6% improvement.

Note: this is still not including #9950's changes, so this may actually

result in even faster speedups once that's merged in.

# Objective

- sometimes when bevy shuts down on certain machines the render thread

tries to send the time after the main world has been dropped.

- fixes an error mentioned in a reply in

https://github.com/bevyengine/bevy/issues/9543

---

## Changelog

- ignore disconnected errors from the time channel.

# Objective

The `States::variants` method was once used to construct `OnExit` and

`OnEnter` schedules for every possible value of a given `States` type.

[Since the switch to lazily initialized

schedules](https://github.com/bevyengine/bevy/pull/8028/files#diff-b2fba3a0c86e496085ce7f0e3f1de5960cb754c7d215ed0f087aa556e529f97f),

we no longer need to track every possible value.

This also opens the door to `States` types that aren't enums.

## Solution

- Remove the unused `States::variants` method and its associated type.

- Remove the enum-only restriction on derived States types.

---

## Changelog

- Removed `States::variants` and its associated type.

- Derived `States` can now be datatypes other than enums.

## Migration Guide

- `States::variants` no longer exists. If you relied on this function,

consider using a library that provides enum iterators.

# Objective

I was wondering whether to use `Timer::finished` or

`Timer::just_finished` for my repeating timer. This PR clarifies their

difference (or rather, lack thereof).

## Solution

More docs & examples.

# Objective

- There were a few typos in the project.

- This PR fixes these typos.

## Solution

- Fixing the typos.

Signed-off-by: SADIK KUZU <sadikkuzu@hotmail.com>

# Objective

In order to derive `Asset`s (v2), `TypePath` must also be implemented.

`TypePath` is not currently in the prelude, but given it is *required*

when deriving something that *is* in the prelude, I think it deserves to

be added.

## Solution

Add `TypePath` to `bevy_reflect::prelude`.

# Objective

Text bounds are computed by the layout algorithm using the text's

measurefunc so that text will only wrap after it's used the maximum

amount of available horizontal space.

When the layout size is returned the layout coordinates are rounded and

this sometimes results in the final size of the Node not matching the

size computed with the measurefunc. This means that the text may no

longer fit the horizontal available space and instead wrap onto a new

line. However, no glyphs will be generated for this new line because no

vertical space for the extra line was allocated.

fixes#9874

## Solution

Store both the rounded and unrounded node sizes in `Node`.

Rounding is used to eliminate pixel-wide gaps between nodes that should

be touching edge to edge, but this isn't necessary for text nodes as

they don't have solid edges.

## Changelog

* Added the `rounded_size: Vec2` field to `Node`.

* `text_system` uses the unrounded node size when computing a text

layout.

---------

Co-authored-by: Rob Parrett <robparrett@gmail.com>

# Objective

- run mobile tests on more devices / OS versions

## Solution

- Add more recent iOS devices / OS versions

- Add older Android devices / OS versions

You can check the results of a recent run on those devices here:

https://percy.io/dede4209/Bevy-Mobile-Example/builds/30355307

# Objective

Improve compatibility with macOS Sonoma and Xcode 15.0.

## Solution

- Adds the workaround by @ptxmac to ignore the invalid window sizes

provided by `winit` on macOS 14.0

- This still provides a slightly wrong content size when resizing (it

fails to account for the window title bar, so some content gets clipped

at the bottom) but it's _much better_ than crashing.

- Adds docs on how to work around the `bindgen` bug on Xcode 15.0.

## Related Issues:

- https://github.com/RustAudio/coreaudio-sys/issues/85

- https://github.com/rust-windowing/winit/issues/2876

---

## Changelog

- Added a workaround for a `winit`-related crash under macOS Sonoma

(14.0)

---------

Co-authored-by: Peter Kristensen <peter@ptx.dk>

# Objective

- Improve rendering performance, particularly by avoiding the large

system commands costs of using the ECS in the way that the render world

does.

## Solution

- Define `EntityHasher` that calculates a hash from the

`Entity.to_bits()` by `i | (i.wrapping_mul(0x517cc1b727220a95) << 32)`.

`0x517cc1b727220a95` is something like `u64::MAX / N` for N that gives a

value close to π and that works well for hashing. Thanks for @SkiFire13

for the suggestion and to @nicopap for alternative suggestions and

discussion. This approach comes from `rustc-hash` (a.k.a. `FxHasher`)

with some tweaks for the case of hashing an `Entity`. `FxHasher` and

`SeaHasher` were also tested but were significantly slower.

- Define `EntityHashMap` type that uses the `EntityHashser`

- Use `EntityHashMap<Entity, T>` for render world entity storage,

including:

- `RenderMaterialInstances` - contains the `AssetId<M>` of the material

associated with the entity. Also for 2D.

- `RenderMeshInstances` - contains mesh transforms, flags and properties

about mesh entities. Also for 2D.

- `SkinIndices` and `MorphIndices` - contains the skin and morph index

for an entity, respectively

- `ExtractedSprites`

- `ExtractedUiNodes`

## Benchmarks

All benchmarks have been conducted on an M1 Max connected to AC power.

The tests are run for 1500 frames. The 1000th frame is captured for

comparison to check for visual regressions. There were none.

### 2D Meshes

`bevymark --benchmark --waves 160 --per-wave 1000 --mode mesh2d`

#### `--ordered-z`

This test spawns the 2D meshes with z incrementing back to front, which

is the ideal arrangement allocation order as it matches the sorted

render order which means lookups have a high cache hit rate.

<img width="1112" alt="Screenshot 2023-09-27 at 07 50 45"

src="https://github.com/bevyengine/bevy/assets/302146/e140bc98-7091-4a3b-8ae1-ab75d16d2ccb">

-39.1% median frame time.

#### Random

This test spawns the 2D meshes with random z. This not only makes the

batching and transparent 2D pass lookups get a lot of cache misses, it

also currently means that the meshes are almost certain to not be

batchable.

<img width="1108" alt="Screenshot 2023-09-27 at 07 51 28"

src="https://github.com/bevyengine/bevy/assets/302146/29c2e813-645a-43ce-982a-55df4bf7d8c4">

-7.2% median frame time.

### 3D Meshes

`many_cubes --benchmark`

<img width="1112" alt="Screenshot 2023-09-27 at 07 51 57"

src="https://github.com/bevyengine/bevy/assets/302146/1a729673-3254-4e2a-9072-55e27c69f0fc">

-7.7% median frame time.

### Sprites

**NOTE: On `main` sprites are using `SparseSet<Entity, T>`!**

`bevymark --benchmark --waves 160 --per-wave 1000 --mode sprite`

#### `--ordered-z`

This test spawns the sprites with z incrementing back to front, which is

the ideal arrangement allocation order as it matches the sorted render

order which means lookups have a high cache hit rate.

<img width="1116" alt="Screenshot 2023-09-27 at 07 52 31"

src="https://github.com/bevyengine/bevy/assets/302146/bc8eab90-e375-4d31-b5cd-f55f6f59ab67">

+13.0% median frame time.

#### Random

This test spawns the sprites with random z. This makes the batching and

transparent 2D pass lookups get a lot of cache misses.

<img width="1109" alt="Screenshot 2023-09-27 at 07 53 01"

src="https://github.com/bevyengine/bevy/assets/302146/22073f5d-99a7-49b0-9584-d3ac3eac3033">

+0.6% median frame time.

### UI

**NOTE: On `main` UI is using `SparseSet<Entity, T>`!**

`many_buttons`

<img width="1111" alt="Screenshot 2023-09-27 at 07 53 26"

src="https://github.com/bevyengine/bevy/assets/302146/66afd56d-cbe4-49e7-8b64-2f28f6043d85">

+15.1% median frame time.

## Alternatives

- Cart originally suggested trying out `SparseSet<Entity, T>` and indeed

that is slightly faster under ideal conditions. However,

`PassHashMap<Entity, T>` has better worst case performance when data is

randomly distributed, rather than in sorted render order, and does not

have the worst case memory usage that `SparseSet`'s dense `Vec<usize>`

that maps from the `Entity` index to sparse index into `Vec<T>`. This

dense `Vec` has to be as large as the largest Entity index used with the

`SparseSet`.

- I also tested `PassHashMap<u32, T>`, intending to use `Entity.index()`

as the key, but this proved to sometimes be slower and mostly no

different.

- The only outstanding approach that has not been implemented and tested

is to _not_ clear the render world of its entities each frame. That has

its own problems, though they could perhaps be solved.

- Performance-wise, if the entities and their component data were not

cleared, then they would incur table moves on spawn, and should not

thereafter, rather just their component data would be overwritten.

Ideally we would have a neat way of either updating data in-place via

`&mut T` queries, or inserting components if not present. This would

likely be quite cumbersome to have to remember to do everywhere, but

perhaps it only needs to be done in the more performance-sensitive

systems.

- The main problem to solve however is that we want to both maintain a

mapping between main world entities and render world entities, be able

to run the render app and world in parallel with the main app and world

for pipelined rendering, and at the same time be able to spawn entities

in the render world in such a way that those Entity ids do not collide

with those spawned in the main world. This is potentially quite

solvable, but could well be a lot of ECS work to do it in a way that

makes sense.

---

## Changelog

- Changed: Component data for entities to be drawn are no longer stored

on entities in the render world. Instead, data is stored in a

`EntityHashMap<Entity, T>` in various resources. This brings significant

performance benefits due to the way the render app clears entities every

frame. Resources of most interest are `RenderMeshInstances` and

`RenderMaterialInstances`, and their 2D counterparts.

## Migration Guide

Previously the render app extracted mesh entities and their component

data from the main world and stored them as entities and components in

the render world. Now they are extracted into essentially

`EntityHashMap<Entity, T>` where `T` are structs containing an

appropriate group of data. This means that while extract set systems

will continue to run extract queries against the main world they will

store their data in hash maps. Also, systems in later sets will either

need to look up entities in the available resources such as

`RenderMeshInstances`, or maintain their own `EntityHashMap<Entity, T>`

for their own data.

Before:

```rust

fn queue_custom(

material_meshes: Query<(Entity, &MeshTransforms, &Handle<Mesh>), With<InstanceMaterialData>>,

) {

...

for (entity, mesh_transforms, mesh_handle) in &material_meshes {

...

}

}

```

After:

```rust

fn queue_custom(

render_mesh_instances: Res<RenderMeshInstances>,

instance_entities: Query<Entity, With<InstanceMaterialData>>,

) {

...

for entity in &instance_entities {

let Some(mesh_instance) = render_mesh_instances.get(&entity) else { continue; };

// The mesh handle in `AssetId<Mesh>` form, and the `MeshTransforms` can now

// be found in `mesh_instance` which is a `RenderMeshInstance`

...

}

}

```

---------

Co-authored-by: robtfm <50659922+robtfm@users.noreply.github.com>

# Objective

Complete the documentation for `bevy_window`.

## Solution

The `warn(missing_doc)` attribute was only applying to the `cursor`

module as it was declared as an inner attribute. I switched it to an

outer attribute and documented the remaining items.

# Objective

Fixes: #9898

## Solution

Make morph behave like other keyframes, lerping first between start and

end, and then between the current state and the result.

## Changelog

Fixed jerky morph targets

---------

Co-authored-by: Nicola Papale <nicopap@users.noreply.github.com>

Co-authored-by: CGMossa <cgmossa@gmail.com>

# Objective

The scetion for guides about flexbox has a link to grid and the section

for grid has a link to a guide about flexbox.

## Solution

Swapped links for flexbox and grid.

---

This is a duplicate of #9632, it was created since I forgot to make a

new branch when I first made this PR, so I was having trouble resolving

merge conflicts, meaning I had to rebuild my PR.

# Objective

- Allow other plugins to create the renderer resources. An example of

where this would be required is my [OpenXR

plugin](https://github.com/awtterpip/bevy_openxr)

## Solution

- Changed the bevy RenderPlugin to optionally take precreated render

resources instead of a configuration.

## Migration Guide

The `RenderPlugin` now takes a `RenderCreation` enum instead of

`WgpuSettings`. `RenderSettings::default()` returns

`RenderSettings::Automatic(WgpuSettings::default())`. `RenderSettings`

also implements `From<WgpuSettings>`.

```rust

// before

RenderPlugin {

wgpu_settings: WgpuSettings {

...

},

}

// now

RenderPlugin {

render_creation: RenderCreation::Automatic(WgpuSettings {

...

}),

}

// or

RenderPlugin {

render_creation: WgpuSettings {

...

}.into(),

}

```

---------

Co-authored-by: Malek <pocmalek@gmail.com>

Co-authored-by: Robert Swain <robert.swain@gmail.com>

# Objective

Some beginners spend time trying to manually set the position of a

`TextBundle`, without realizing that `Text2dBundle` exists.

## Solution

Mention `Text2dBundle` in the documentation of `TextBundle`.

---------

Co-authored-by: Rob Parrett <robparrett@gmail.com>

# Objective

Fixes#9625

## Solution

Adds `async-io` as an optional dependency of `bevy_tasks`. When enabled,

this causes calls to `futures_lite::future::block_on` to be replaced

with calls to `async_io::block_on`.

---

## Changelog

- Added a new `async-io` feature to `bevy_tasks`. When enabled, this

causes `bevy_tasks` to use `async-io`'s implemention of `block_on`

instead of `futures-lite`'s implementation. You should enable this if

you use `async-io` in your application.

# Objective

This is a minimally disruptive version of #8340. I attempted to update

it, but failed due to the scope of the changes added in #8204.

Fixes#8307. Partially addresses #4642. As seen in

https://github.com/bevyengine/bevy/issues/8284, we're actually copying

data twice in Prepare stage systems. Once into a CPU-side intermediate

scratch buffer, and once again into a mapped buffer. This is inefficient

and effectively doubles the time spent and memory allocated to run these

systems.

## Solution

Skip the scratch buffer entirely and use

`wgpu::Queue::write_buffer_with` to directly write data into mapped

buffers.

Separately, this also directly uses

`wgpu::Limits::min_uniform_buffer_offset_alignment` to set up the

alignment when writing to the buffers. Partially addressing the issue

raised in #4642.

Storage buffers and the abstractions built on top of

`DynamicUniformBuffer` will need to come in followup PRs.

This may not have a noticeable performance difference in this PR, as the

only first-party systems affected by this are view related, and likely

are not going to be particularly heavy.

---

## Changelog

Added: `DynamicUniformBuffer::get_writer`.

Added: `DynamicUniformBufferWriter`.

derive `Reflect` to `GlyphAtlasInfo`,`PositionedGlyph` and

`TextLayoutInfo`.

# Objective

- I need reflection gets all components of the `TextBundle` and

`clone_value` it

## Solution

- registry it

# Objective

mesh.rs is infamously large. We could split off unrelated code.

## Solution

Morph targets are very similar to skinning and have their own module. We

move skinned meshes to an independent module like morph targets and give

the systems similar names.

### Open questions

Should the skinning systems and structs stay public?

---

## Migration Guide

Renamed skinning systems, resources and components:

- extract_skinned_meshes -> extract_skins

- prepare_skinned_meshes -> prepare_skins

- SkinnedMeshUniform -> SkinUniform

- SkinnedMeshJoints -> SkinIndex

---------

Co-authored-by: François <mockersf@gmail.com>

Co-authored-by: vero <email@atlasdostal.com>

# Objective

Scheduling low cost systems has significant overhead due to task pool

contention and the extra machinery to schedule and run them. Event

update systems are the prime example of a low cost system, requiring a

guaranteed O(1) operation, and there are a *lot* of them.

## Solution

Add a run condition to every event system so they only run when there is

an event in either of it's two internal Vecs.

---

## Changelog

Changed: Event update systems will not run if there are no events to

process.

## Migration Guide

`Events<T>::update_system` has been split off from the the type and can

be found at `bevy_ecs::event::event_update_system`.

---------

Co-authored-by: IceSentry <IceSentry@users.noreply.github.com>

# Objective

- Fixes#9707

## Solution

- At large translations (a few thousand units), the precision of

calculating the ray direction from the fragment world position and

camera world position seems to break down. Sampling the cubemap only

needs the ray direction. As such we can use the view space fragment

position, normalise it, rotate it to world space, and use that.

---

## Changelog

- Fixed: Jittery skybox at large translations.

# Objective

- https://github.com/bevyengine/bevy/pull/7609 broke Android support

```

8721 8770 I event crates/bevy_winit/src/system.rs:55: Creating new window "App" (0v0)

8721 8769 I RustStdoutStderr: thread '<unnamed>' panicked at 'Cannot get the native window, it's null and will always be null before Event::Resumed and after Event::Suspended. Make sure you only call this function between those events.', winit-0.28.6/src/platform_impl/android/mod.rs:1058:13

```

## Solution

- Don't create windows on `StartCause::Init` as it's too early

# Objective

- Make it possible to write APIs that require a type or homogenous

storage for both `Children` & `Parent` that is agnostic to edge

direction.

## Solution

- Add a way to get the `Entity` from `Parent` as a slice.

---------

Co-authored-by: Alice Cecile <alice.i.cecile@gmail.com>

Co-authored-by: Joseph <21144246+JoJoJet@users.noreply.github.com>

# Objective

- Implement the foundations of automatic batching/instancing of draw

commands as the next step from #89

- NOTE: More performance improvements will come when more data is

managed and bound in ways that do not require rebinding such as mesh,

material, and texture data.

## Solution

- The core idea for batching of draw commands is to check whether any of

the information that has to be passed when encoding a draw command

changes between two things that are being drawn according to the sorted

render phase order. These should be things like the pipeline, bind

groups and their dynamic offsets, index/vertex buffers, and so on.

- The following assumptions have been made:

- Only entities with prepared assets (pipelines, materials, meshes) are

queued to phases

- View bindings are constant across a phase for a given draw function as

phases are per-view

- `batch_and_prepare_render_phase` is the only system that performs this

batching and has sole responsibility for preparing the per-object data.

As such the mesh binding and dynamic offsets are assumed to only vary as

a result of the `batch_and_prepare_render_phase` system, e.g. due to

having to split data across separate uniform bindings within the same

buffer due to the maximum uniform buffer binding size.

- Implement `GpuArrayBuffer` for `Mesh2dUniform` to store Mesh2dUniform

in arrays in GPU buffers rather than each one being at a dynamic offset

in a uniform buffer. This is the same optimisation that was made for 3D

not long ago.

- Change batch size for a range in `PhaseItem`, adding API for getting

or mutating the range. This is more flexible than a size as the length

of the range can be used in place of the size, but the start and end can

be otherwise whatever is needed.

- Add an optional mesh bind group dynamic offset to `PhaseItem`. This

avoids having to do a massive table move just to insert

`GpuArrayBufferIndex` components.

## Benchmarks

All tests have been run on an M1 Max on AC power. `bevymark` and

`many_cubes` were modified to use 1920x1080 with a scale factor of 1. I

run a script that runs a separate Tracy capture process, and then runs

the bevy example with `--features bevy_ci_testing,trace_tracy` and

`CI_TESTING_CONFIG=../benchmark.ron` with the contents of

`../benchmark.ron`:

```rust

(

exit_after: Some(1500)

)

```

...in order to run each test for 1500 frames.

The recent changes to `many_cubes` and `bevymark` added reproducible

random number generation so that with the same settings, the same rng

will occur. They also added benchmark modes that use a fixed delta time

for animations. Combined this means that the same frames should be

rendered both on main and on the branch.

The graphs compare main (yellow) to this PR (red).

### 3D Mesh `many_cubes --benchmark`

<img width="1411" alt="Screenshot 2023-09-03 at 23 42 10"

src="https://github.com/bevyengine/bevy/assets/302146/2088716a-c918-486c-8129-090b26fd2bc4">

The mesh and material are the same for all instances. This is basically

the best case for the initial batching implementation as it results in 1

draw for the ~11.7k visible meshes. It gives a ~30% reduction in median

frame time.

The 1000th frame is identical using the flip tool:

```

Mean: 0.000000

Weighted median: 0.000000

1st weighted quartile: 0.000000

3rd weighted quartile: 0.000000

Min: 0.000000

Max: 0.000000

Evaluation time: 0.4615 seconds

```

### 3D Mesh `many_cubes --benchmark --material-texture-count 10`

<img width="1404" alt="Screenshot 2023-09-03 at 23 45 18"

src="https://github.com/bevyengine/bevy/assets/302146/5ee9c447-5bd2-45c6-9706-ac5ff8916daf">

This run uses 10 different materials by varying their textures. The

materials are randomly selected, and there is no sorting by material

bind group for opaque 3D so any batching is 'random'. The PR produces a

~5% reduction in median frame time. If we were to sort the opaque phase

by the material bind group, then this should be a lot faster. This

produces about 10.5k draws for the 11.7k visible entities. This makes

sense as randomly selecting from 10 materials gives a chance that two

adjacent entities randomly select the same material and can be batched.

The 1000th frame is identical in flip:

```

Mean: 0.000000

Weighted median: 0.000000

1st weighted quartile: 0.000000

3rd weighted quartile: 0.000000

Min: 0.000000

Max: 0.000000

Evaluation time: 0.4537 seconds

```

### 3D Mesh `many_cubes --benchmark --vary-per-instance`

<img width="1394" alt="Screenshot 2023-09-03 at 23 48 44"

src="https://github.com/bevyengine/bevy/assets/302146/f02a816b-a444-4c18-a96a-63b5436f3b7f">

This run varies the material data per instance by randomly-generating

its colour. This is the worst case for batching and that it performs

about the same as `main` is a good thing as it demonstrates that the

batching has minimal overhead when dealing with ~11k visible mesh

entities.

The 1000th frame is identical according to flip:

```

Mean: 0.000000

Weighted median: 0.000000

1st weighted quartile: 0.000000

3rd weighted quartile: 0.000000

Min: 0.000000

Max: 0.000000

Evaluation time: 0.4568 seconds

```

### 2D Mesh `bevymark --benchmark --waves 160 --per-wave 1000 --mode

mesh2d`

<img width="1412" alt="Screenshot 2023-09-03 at 23 59 56"

src="https://github.com/bevyengine/bevy/assets/302146/cb02ae07-237b-4646-ae9f-fda4dafcbad4">

This spawns 160 waves of 1000 quad meshes that are shaded with

ColorMaterial. Each wave has a different material so 160 waves currently

should result in 160 batches. This results in a 50% reduction in median

frame time.

Capturing a screenshot of the 1000th frame main vs PR gives:

```

Mean: 0.001222

Weighted median: 0.750432

1st weighted quartile: 0.453494

3rd weighted quartile: 0.969758

Min: 0.000000

Max: 0.990296

Evaluation time: 0.4255 seconds

```

So they seem to produce the same results. I also double-checked the

number of draws. `main` does 160000 draws, and the PR does 160, as

expected.

### 2D Mesh `bevymark --benchmark --waves 160 --per-wave 1000 --mode

mesh2d --material-texture-count 10`

<img width="1392" alt="Screenshot 2023-09-04 at 00 09 22"

src="https://github.com/bevyengine/bevy/assets/302146/4358da2e-ce32-4134-82df-3ab74c40849c">

This generates 10 textures and generates materials for each of those and

then selects one material per wave. The median frame time is reduced by

50%. Similar to the plain run above, this produces 160 draws on the PR

and 160000 on `main` and the 1000th frame is identical (ignoring the fps

counter text overlay).

```

Mean: 0.002877

Weighted median: 0.964980

1st weighted quartile: 0.668871

3rd weighted quartile: 0.982749

Min: 0.000000

Max: 0.992377

Evaluation time: 0.4301 seconds

```

### 2D Mesh `bevymark --benchmark --waves 160 --per-wave 1000 --mode

mesh2d --vary-per-instance`

<img width="1396" alt="Screenshot 2023-09-04 at 00 13 53"

src="https://github.com/bevyengine/bevy/assets/302146/b2198b18-3439-47ad-919a-cdabe190facb">

This creates unique materials per instance by randomly-generating the

material's colour. This is the worst case for 2D batching. Somehow, this

PR manages a 7% reduction in median frame time. Both main and this PR

issue 160000 draws.

The 1000th frame is the same:

```

Mean: 0.001214

Weighted median: 0.937499

1st weighted quartile: 0.635467

3rd weighted quartile: 0.979085

Min: 0.000000

Max: 0.988971

Evaluation time: 0.4462 seconds

```

### 2D Sprite `bevymark --benchmark --waves 160 --per-wave 1000 --mode

sprite`

<img width="1396" alt="Screenshot 2023-09-04 at 12 21 12"

src="https://github.com/bevyengine/bevy/assets/302146/8b31e915-d6be-4cac-abf5-c6a4da9c3d43">

This just spawns 160 waves of 1000 sprites. There should be and is no

notable difference between main and the PR.

### 2D Sprite `bevymark --benchmark --waves 160 --per-wave 1000 --mode

sprite --material-texture-count 10`

<img width="1389" alt="Screenshot 2023-09-04 at 12 36 08"

src="https://github.com/bevyengine/bevy/assets/302146/45fe8d6d-c901-4062-a349-3693dd044413">

This spawns the sprites selecting a texture at random per instance from

the 10 generated textures. This has no significant change vs main and

shouldn't.

### 2D Sprite `bevymark --benchmark --waves 160 --per-wave 1000 --mode

sprite --vary-per-instance`

<img width="1401" alt="Screenshot 2023-09-04 at 12 29 52"

src="https://github.com/bevyengine/bevy/assets/302146/762c5c60-352e-471f-8dbe-bbf10e24ebd6">

This sets the sprite colour as being unique per instance. This can still

all be drawn using one batch. There should be no difference but the PR

produces median frame times that are 4% higher. Investigation showed no

clear sources of cost, rather a mix of give and take that should not

happen. It seems like noise in the results.

### Summary

| Benchmark | % change in median frame time |

| ------------- | ------------- |

| many_cubes | 🟩 -30% |

| many_cubes 10 materials | 🟩 -5% |

| many_cubes unique materials | 🟩 ~0% |

| bevymark mesh2d | 🟩 -50% |

| bevymark mesh2d 10 materials | 🟩 -50% |

| bevymark mesh2d unique materials | 🟩 -7% |

| bevymark sprite | 🟥 2% |

| bevymark sprite 10 materials | 🟥 0.6% |

| bevymark sprite unique materials | 🟥 4.1% |

---

## Changelog

- Added: 2D and 3D mesh entities that share the same mesh and material

(same textures, same data) are now batched into the same draw command

for better performance.

---------

Co-authored-by: robtfm <50659922+robtfm@users.noreply.github.com>

Co-authored-by: Nicola Papale <nico@nicopap.ch>

# Objective

Improve code-gen for `QueryState::validate_world` and

`SystemState::validate_world`.

## Solution

* Move panics into separate, non-inlined functions, to reduce the code

size of the outer methods.

* Mark the panicking functions with `#[cold]` to help the compiler

optimize for the happy path.

* Mark the functions with `#[track_caller]` to make debugging easier.

---------

Co-authored-by: James Liu <contact@jamessliu.com>

# Objective

Fix a performance regression in the "[bevy vs

pixi](https://github.com/SUPERCILEX/bevy-vs-pixi)" benchmark.

This benchmark seems to have a slightly pathological distribution of `z`

values -- Sprites are spawned with a random `z` value with a child

sprite at `f32::EPSILON` relative to the parent.

See discussion here:

https://github.com/bevyengine/bevy/issues/8100#issuecomment-1726978633

## Solution

Use `radsort` for sorting `Transparent2d` `PhaseItem`s.

Use random `z` values in bevymark to stress the phase sort. Add an

`--ordered-z` option to `bevymark` that uses the old behavior.

## Benchmarks

mac m1 max

| benchmark | fps before | fps after | diff |

| - | - | - | - |

| bevymark --waves 120 --per-wave 1000 --random-z | 42.16 | 47.06 | 🟩

+11.6% |

| bevymark --waves 120 --per-wave 1000 | 52.50 | 52.29 | 🟥 -0.4% |

| bevymark --waves 120 --per-wave 1000 --mode mesh2d --random-z | 9.64 |

10.24 | 🟩 +6.2% |

| bevymark --waves 120 --per-wave 1000 --mode mesh2d | 15.83 | 15.59 | 🟥

-1.5% |

| bevy-vs-pixi | 39.71 | 59.88 | 🟩 +50.1% |

## Discussion

It's possible that `TransparentUi` should also change. We could probably

use `slice::sort_unstable_by_key` with the current sort key though, as

its items are always sorted and unique. I'd prefer to follow up later to

look into that.

Here's a survey of sorts used by other `PhaseItem`s

#### slice::sort_by_key

`Transparent2d`, `TransparentUi`

#### radsort

`Opaque3d`, `AlphaMask3d`, `Transparent3d`, `Opaque3dPrepass`,

`AlphaMask3dPrepass`, `Shadow`

I also tried `slice::sort_unstable_by_key` with a compound sort key

including `Entity`, but it didn't seem as promising and I didn't test it

as thoroughly.

---------

Co-authored-by: Alice Cecile <alice.i.cecile@gmail.com>

Co-authored-by: Robert Swain <robert.swain@gmail.com>

# Objective

Some rendering system did heavy use of `if let`, and could be improved

by using `let else`.

## Solution

- Reduce rightward drift by using let-else over if-let

- Extract value-to-key mappings to their own functions so that the

system is less bloated, easier to understand

- Use a `let` binding instead of untupling in closure argument to reduce

indentation

## Note to reviewers

Enable the "no white space diff" for easier viewing.

In the "Files changed" view, click on the little cog right of the "Jump

to" text, on the row where the "Review changes" button is. then enable

the "Hide whitespace" checkbox and click reload.

# Objective

Rename the `num_font_atlases` method of `FontAtlasSet` to `len`.

All the function does is return the number of entries in its hashmap and

the unnatural naming only makes it harder to discover.

---

## Changelog

* Renamed the `num_font_atlases` method of `FontAtlasSet` to `len`.

## Migration Guide

The `num_font_atlases` method of `FontAtlasSet` has been renamed to

`len`.