Mirror of https://github.com/bevyengine/bevy, synced 2025-02-18 15:08:36 +00:00

Camera Driven Rendering (#4745)

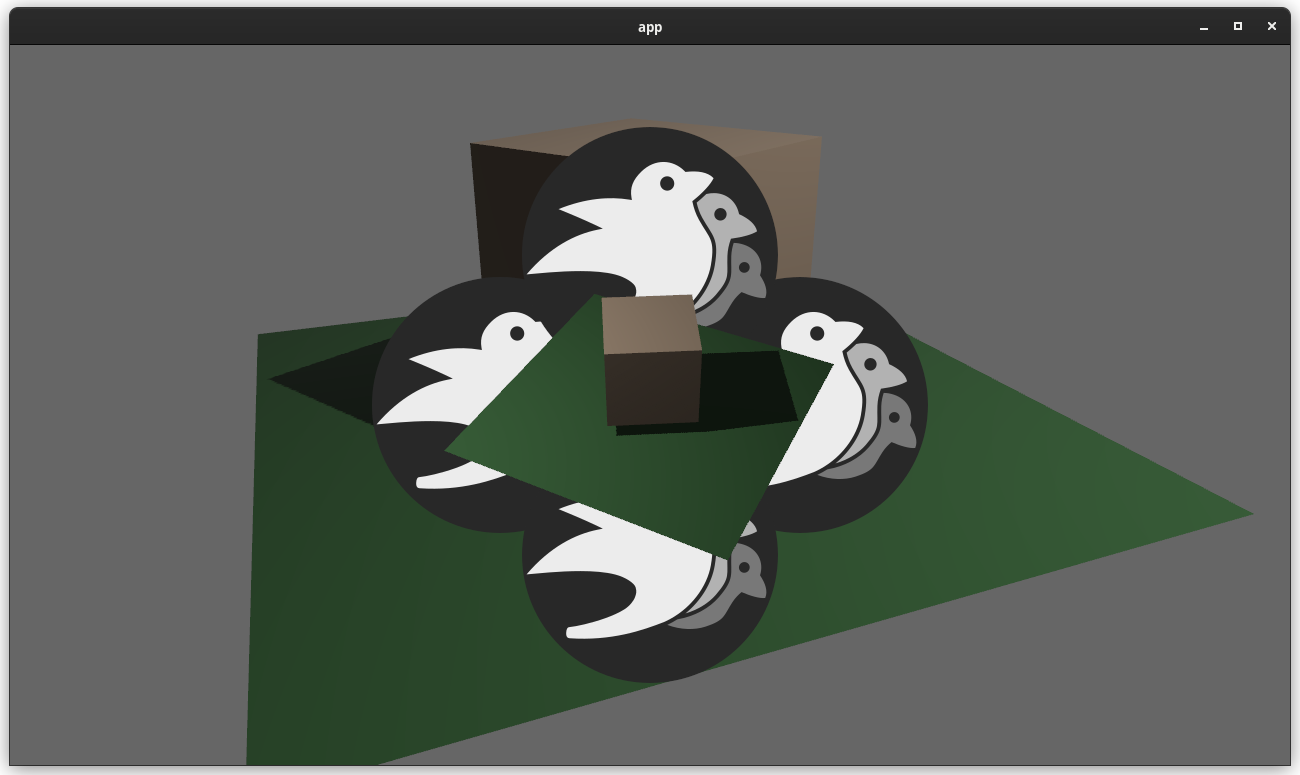

This adds "high level camera driven rendering" to Bevy. The goal is to give users more control over what gets rendered (and where) without needing to deal with render logic. This will make scenarios like "render to texture", "multiple windows", "split screen", "2d on 3d", "3d on 2d", "pass layering", and more significantly easier. Here is an [example of a 2d render sandwiched between two 3d renders (each from a different perspective)](https://gist.github.com/cart/4fe56874b2e53bc5594a182fc76f4915):  Users can now spawn a camera, point it at a RenderTarget (a texture or a window), and it will "just work". Rendering to a second window is as simple as spawning a second camera and assigning it to a specific window id: ```rust // main camera (main window) commands.spawn_bundle(Camera2dBundle::default()); // second camera (other window) commands.spawn_bundle(Camera2dBundle { camera: Camera { target: RenderTarget::Window(window_id), ..default() }, ..default() }); ``` Rendering to a texture is as simple as pointing the camera at a texture: ```rust commands.spawn_bundle(Camera2dBundle { camera: Camera { target: RenderTarget::Texture(image_handle), ..default() }, ..default() }); ``` Cameras now have a "render priority", which controls the order they are drawn in. If you want to use a camera's output texture as a texture in the main pass, just set the priority to a number lower than the main pass camera (which defaults to `0`). ```rust // main pass camera with a default priority of 0 commands.spawn_bundle(Camera2dBundle::default()); commands.spawn_bundle(Camera2dBundle { camera: Camera { target: RenderTarget::Texture(image_handle.clone()), priority: -1, ..default() }, ..default() }); commands.spawn_bundle(SpriteBundle { texture: image_handle, ..default() }) ``` Priority can also be used to layer to cameras on top of each other for the same RenderTarget. 
This is what "2d on top of 3d" looks like in the new system:

```rust
commands.spawn_bundle(Camera3dBundle::default());
commands.spawn_bundle(Camera2dBundle {
    camera: Camera {
        // this will render 2d entities "on top" of the default 3d camera's render
        priority: 1,
        ..default()
    },
    ..default()
});
```

There is no longer the concept of a global "active camera". Resources like `ActiveCamera<Camera2d>` and `ActiveCamera<Camera3d>` have been replaced with the camera-specific `Camera::is_active` field. This does put the onus on users to manage which cameras should be active.

Cameras are now assigned a single render graph as an "entry point", which is configured on each camera entity using the new `CameraRenderGraph` component. The old `PerspectiveCameraBundle` and `OrthographicCameraBundle` (generic on camera marker components like Camera2d and Camera3d) have been replaced by `Camera3dBundle` and `Camera2dBundle`, which set 3d and 2d default values for the `CameraRenderGraph` and projections.

```rust
// old 3d perspective camera
commands.spawn_bundle(PerspectiveCameraBundle::default());

// new 3d perspective camera
commands.spawn_bundle(Camera3dBundle::default());
```

```rust
// old 2d orthographic camera
commands.spawn_bundle(OrthographicCameraBundle::new_2d());

// new 2d orthographic camera
commands.spawn_bundle(Camera2dBundle::default());
```

```rust
// old 3d orthographic camera
commands.spawn_bundle(OrthographicCameraBundle::new_3d());

// new 3d orthographic camera
commands.spawn_bundle(Camera3dBundle {
    projection: OrthographicProjection {
        scale: 3.0,
        scaling_mode: ScalingMode::FixedVertical,
        ..default()
    }.into(),
    ..default()
});
```

Note that `Camera3dBundle` now uses a new `Projection` enum instead of hard coding the projection into the type.
There are a number of motivators for this change: the render graph is now a part of the bundle, the way "generic bundles" work in the Rust type system prevents nice `..default()` syntax, and changing projections at runtime is much easier with an enum (e.g. for editor scenarios). I'm open to discussing this choice, but I'm relatively certain we will all come to the same conclusion here. Camera2dBundle and Camera3dBundle are much clearer than being generic on marker components / using non-default constructors.

If you want to run a custom render graph on a camera, just set the `CameraRenderGraph` component:

```rust
commands.spawn_bundle(Camera3dBundle {
    camera_render_graph: CameraRenderGraph::new(some_render_graph_name),
    ..default()
});
```

Just note that if the graph requires data from specific components to work (such as `Camera3d` config, which is provided in the `Camera3dBundle`), make sure the relevant components have been added.

Speaking of using components to configure graphs / passes, there are a number of new configuration options:

```rust
commands.spawn_bundle(Camera3dBundle {
    camera_3d: Camera3d {
        // overrides the default global clear color
        clear_color: ClearColorConfig::Custom(Color::RED),
        ..default()
    },
    ..default()
});

commands.spawn_bundle(Camera3dBundle {
    camera_3d: Camera3d {
        // disables clearing
        clear_color: ClearColorConfig::None,
        ..default()
    },
    ..default()
});
```

Expect to see more of the "graph configuration Components on Cameras" pattern in the future.

By popular demand, UI no longer requires a dedicated camera. `UiCameraBundle` has been removed. `Camera2dBundle` and `Camera3dBundle` now both default to rendering UI as part of their own render graphs. To disable UI rendering for a camera, disable it using the CameraUi component:

```rust
commands
    .spawn_bundle(Camera3dBundle::default())
    .insert(CameraUi {
        is_enabled: false,
        ..default()
    });
```

## Other Changes

* The separate clear pass has been removed.
  We should revisit this for things like sky rendering, but I think this PR should "keep it simple" until we're ready to properly support that (for code complexity and performance reasons). We can come up with the right design for a modular clear pass in a followup pr.
* I reorganized bevy_core_pipeline into Core2dPlugin and Core3dPlugin (and core_2d / core_3d modules). Everything is pretty much the same as before, just logically separate. I've moved relevant types (like Camera2d, Camera3d, Camera3dBundle, Camera2dBundle) into their relevant modules, which is what motivated this reorganization.
* I adapted the `scene_viewer` example (which relied on the ActiveCameras behavior) to the new system. I also refactored bits and pieces to be a bit simpler.
* All of the examples have been ported to the new camera approach. `render_to_texture` and `multiple_windows` are now _much_ simpler. I removed `two_passes` because it is less relevant with the new approach. If someone wants to add a new "layered custom pass with CameraRenderGraph" example, that might fill a similar niche. But I don't feel much pressure to add that in this pr.
* Cameras now have `target_logical_size` and `target_physical_size` fields, which makes finding the size of a camera's render target _much_ simpler. As a result, the `Assets<Image>` and `Windows` parameters were removed from `Camera::world_to_screen`, making that operation much more ergonomic.
* Render order ambiguities between cameras with the same target and the same priority now produce a warning. This accomplishes two goals:
    1. Now that there is no "global" active camera, by default spawning two cameras will result in two renders (one covering the other). This would be a silent performance killer that would be hard to detect after the fact. By detecting ambiguities, we can provide a helpful warning when this occurs.
    2. Render order ambiguities could result in unexpected / unpredictable render results. Resolving them makes sense.
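
The priority ordering and the ambiguity warning described in this message can be modeled without any Bevy types. The following is a minimal, std-only sketch, not Bevy's actual implementation: `Target`, `CameraInfo`, `render_order`, and `ambiguities` are hypothetical names introduced purely for illustration.

```rust
use std::collections::HashMap;

/// Hypothetical stand-in for a render target (a window id or a texture id).
#[derive(Clone, Copy, Debug, PartialEq, Eq, Hash)]
pub enum Target {
    Window(u32),
    Texture(u32),
}

/// Hypothetical, simplified camera: only the fields that matter for ordering.
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
pub struct CameraInfo {
    pub id: u32,
    pub target: Target,
    pub priority: isize,
    pub is_active: bool,
}

/// Returns the ids of active cameras, lowest priority first (drawn first).
pub fn render_order(cameras: &[CameraInfo]) -> Vec<u32> {
    let mut active: Vec<&CameraInfo> = cameras.iter().filter(|c| c.is_active).collect();
    // Stable sort: cameras with equal priority keep their input order,
    // which is the situation the warning is meant to flag.
    active.sort_by_key(|c| c.priority);
    active.iter().map(|c| c.id).collect()
}

/// Returns every (target, priority) pair shared by more than one active camera.
pub fn ambiguities(cameras: &[CameraInfo]) -> Vec<(Target, isize)> {
    let mut counts: HashMap<(Target, isize), usize> = HashMap::new();
    for c in cameras.iter().filter(|c| c.is_active) {
        *counts.entry((c.target, c.priority)).or_insert(0) += 1;
    }
    let mut out: Vec<(Target, isize)> = counts
        .into_iter()
        .filter(|(_, n)| *n > 1)
        .map(|(key, _)| key)
        .collect();
    out.sort_by_key(|(_, priority)| *priority);
    out
}
```

In this model a camera rendering to a texture with priority `-1` comes before the main camera at priority `0`, and two active cameras that share both a target and a priority show up in `ambiguities`, which is where a warning would be emitted.
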

## Follow Up Work

* Per-Camera viewports, which will make it possible to render to a smaller area inside of a RenderTarget (great for something like splitscreen)
* Camera-specific MSAA config (should use the same "overriding" pattern used for ClearColor)
* Graph Based Camera Ordering: priorities are simple, but they make complicated ordering constraints harder to express. We should consider adopting a "graph based" camera ordering model with "before" and "after" relationships to other cameras (or build it "on top" of the priority system).
* Consider allowing graphs to run subgraphs from any nest level (aka a global namespace for graphs). Right now the 2d and 3d graphs each need their own UI subgraph, which feels "fine" in the short term. But being able to share subgraphs between other subgraphs seems valuable.
* Consider splitting `bevy_core_pipeline` into `bevy_core_2d` and `bevy_core_3d` packages. There's a shared "clear color" dependency here, which would need a new home.

parent f2b53de4aa
commit f487407e07

120 changed files with 1537 additions and 1742 deletions

@@ -19,7 +19,12 @@ trace = []
 # bevy
 bevy_app = { path = "../bevy_app", version = "0.8.0-dev" }
 bevy_asset = { path = "../bevy_asset", version = "0.8.0-dev" }
+bevy_derive = { path = "../bevy_derive", version = "0.8.0-dev" }
 bevy_ecs = { path = "../bevy_ecs", version = "0.8.0-dev" }
+bevy_reflect = { path = "../bevy_reflect", version = "0.8.0-dev" }
 bevy_render = { path = "../bevy_render", version = "0.8.0-dev" }
+bevy_transform = { path = "../bevy_transform", version = "0.8.0-dev" }
 bevy_utils = { path = "../bevy_utils", version = "0.8.0-dev" }

+serde = { version = "1", features = ["derive"] }
+

crates/bevy_core_pipeline/src/clear_color.rs (32 lines changed, normal file)
@@ -0,0 +1,32 @@
+use bevy_derive::{Deref, DerefMut};
+use bevy_ecs::prelude::*;
+use bevy_reflect::{Reflect, ReflectDeserialize};
+use bevy_render::{color::Color, extract_resource::ExtractResource};
+use serde::{Deserialize, Serialize};
+
+#[derive(Reflect, Serialize, Deserialize, Clone, Debug)]
+#[reflect_value(Serialize, Deserialize)]
+pub enum ClearColorConfig {
+    Default,
+    Custom(Color),
+    None,
+}
+
+impl Default for ClearColorConfig {
+    fn default() -> Self {
+        ClearColorConfig::Default
+    }
+}
+
+/// When used as a resource, sets the color that is used to clear the screen between frames.
+///
+/// This color appears as the "background" color for simple apps, when
+/// there are portions of the screen with nothing rendered.
+#[derive(Component, Clone, Debug, Deref, DerefMut, ExtractResource)]
+pub struct ClearColor(pub Color);
+
+impl Default for ClearColor {
+    fn default() -> Self {
+        Self(Color::rgb(0.4, 0.4, 0.4))
+    }
+}
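
The three `ClearColorConfig` variants map onto a per-camera choice between clearing the target and loading its existing contents; the main pass nodes changed elsewhere in this commit perform that match. The following std-only sketch illustrates the mapping under stated assumptions: `Rgba`, the simplified `LoadOp`, and `resolve_load_op` are hypothetical stand-ins, not the real `bevy_render` or wgpu types.

```rust
/// Hypothetical stand-in for `bevy_render::color::Color` (RGBA components).
#[derive(Clone, Copy, Debug, PartialEq)]
pub struct Rgba(pub f32, pub f32, pub f32, pub f32);

/// Mirrors the three variants of `ClearColorConfig` from this commit.
#[derive(Clone, Copy, Debug, PartialEq)]
pub enum ClearColorConfig {
    Default,
    Custom(Rgba),
    None,
}

/// Hypothetical, simplified load operation: clear to a color, or keep what is there.
#[derive(Clone, Copy, Debug, PartialEq)]
pub enum LoadOp {
    Clear(Rgba),
    Load,
}

/// Picks the load operation for one camera, given the global clear color.
pub fn resolve_load_op(config: ClearColorConfig, global: Rgba) -> LoadOp {
    match config {
        // Fall back to the global `ClearColor` resource value.
        ClearColorConfig::Default => LoadOp::Clear(global),
        // A per-camera color overrides the global one.
        ClearColorConfig::Custom(color) => LoadOp::Clear(color),
        // No clearing: keep whatever earlier cameras drew to the target.
        ClearColorConfig::None => LoadOp::Load,
    }
}
```

The `None` case is what allows a higher-priority camera to draw on top of an earlier camera's output instead of erasing it.
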

@@ -1,128 +0,0 @@
-use std::collections::HashSet;
-
-use crate::{ClearColor, RenderTargetClearColors};
-use bevy_ecs::prelude::*;
-use bevy_render::{
-    camera::{ExtractedCamera, RenderTarget},
-    prelude::Image,
-    render_asset::RenderAssets,
-    render_graph::{Node, NodeRunError, RenderGraphContext, SlotInfo},
-    render_resource::{
-        LoadOp, Operations, RenderPassColorAttachment, RenderPassDepthStencilAttachment,
-        RenderPassDescriptor,
-    },
-    renderer::RenderContext,
-    view::{ExtractedView, ExtractedWindows, ViewDepthTexture, ViewTarget},
-};
-
-pub struct ClearPassNode {
-    query: QueryState<
-        (
-            &'static ViewTarget,
-            Option<&'static ViewDepthTexture>,
-            Option<&'static ExtractedCamera>,
-        ),
-        With<ExtractedView>,
-    >,
-}
-
-impl ClearPassNode {
-    pub fn new(world: &mut World) -> Self {
-        Self {
-            query: QueryState::new(world),
-        }
-    }
-}
-
-impl Node for ClearPassNode {
-    fn input(&self) -> Vec<SlotInfo> {
-        vec![]
-    }
-
-    fn update(&mut self, world: &mut World) {
-        self.query.update_archetypes(world);
-    }
-
-    fn run(
-        &self,
-        _graph: &mut RenderGraphContext,
-        render_context: &mut RenderContext,
-        world: &World,
-    ) -> Result<(), NodeRunError> {
-        let mut cleared_targets = HashSet::new();
-        let clear_color = world.resource::<ClearColor>();
-        let render_target_clear_colors = world.resource::<RenderTargetClearColors>();
-
-        // This gets all ViewTargets and ViewDepthTextures and clears its attachments
-        // TODO: This has the potential to clear the same target multiple times, if there
-        // are multiple views drawing to the same target. This should be fixed when we make
-        // clearing happen on "render targets" instead of "views" (see the TODO below for more context).
-        for (target, depth, camera) in self.query.iter_manual(world) {
-            let mut color = &clear_color.0;
-            if let Some(camera) = camera {
-                cleared_targets.insert(&camera.target);
-                if let Some(target_color) = render_target_clear_colors.get(&camera.target) {
-                    color = target_color;
-                }
-            }
-            let pass_descriptor = RenderPassDescriptor {
-                label: Some("clear_pass"),
-                color_attachments: &[target.get_color_attachment(Operations {
-                    load: LoadOp::Clear((*color).into()),
-                    store: true,
-                })],
-                depth_stencil_attachment: depth.map(|depth| RenderPassDepthStencilAttachment {
-                    view: &depth.view,
-                    depth_ops: Some(Operations {
-                        load: LoadOp::Clear(0.0),
-                        store: true,
-                    }),
-                    stencil_ops: None,
-                }),
-            };
-
-            render_context
-                .command_encoder
-                .begin_render_pass(&pass_descriptor);
-        }
-
-        // TODO: This is a hack to ensure we don't call present() on frames without any work,
-        // which will cause panics. The real fix here is to clear "render targets" directly
-        // instead of "views". This should be removed once full RenderTargets are implemented.
-        let windows = world.resource::<ExtractedWindows>();
-        let images = world.resource::<RenderAssets<Image>>();
-        for target in render_target_clear_colors.colors.keys().cloned().chain(
-            windows
-                .values()
-                .map(|window| RenderTarget::Window(window.id)),
-        ) {
-            // skip windows that have already been cleared
-            if cleared_targets.contains(&target) {
-                continue;
-            }
-            let pass_descriptor = RenderPassDescriptor {
-                label: Some("clear_pass"),
-                color_attachments: &[RenderPassColorAttachment {
-                    view: target.get_texture_view(windows, images).unwrap(),
-                    resolve_target: None,
-                    ops: Operations {
-                        load: LoadOp::Clear(
-                            (*render_target_clear_colors
-                                .get(&target)
-                                .unwrap_or(&clear_color.0))
-                            .into(),
-                        ),
-                        store: true,
-                    },
-                }],
-                depth_stencil_attachment: None,
-            };
-
-            render_context
-                .command_encoder
-                .begin_render_pass(&pass_descriptor);
-        }
-
-        Ok(())
-    }
-}

@@ -1,20 +0,0 @@
-use bevy_ecs::world::World;
-use bevy_render::{
-    render_graph::{Node, NodeRunError, RenderGraphContext},
-    renderer::RenderContext,
-};
-
-pub struct ClearPassDriverNode;
-
-impl Node for ClearPassDriverNode {
-    fn run(
-        &self,
-        graph: &mut RenderGraphContext,
-        _render_context: &mut RenderContext,
-        _world: &World,
-    ) -> Result<(), NodeRunError> {
-        graph.run_sub_graph(crate::clear_graph::NAME, vec![])?;
-
-        Ok(())
-    }
-}

crates/bevy_core_pipeline/src/core_2d/camera_2d.rs (82 lines changed, normal file)
@@ -0,0 +1,82 @@
+use crate::clear_color::ClearColorConfig;
+use bevy_ecs::{prelude::*, query::QueryItem};
+use bevy_reflect::Reflect;
+use bevy_render::{
+    camera::{
+        Camera, CameraProjection, CameraRenderGraph, DepthCalculation, OrthographicProjection,
+    },
+    extract_component::ExtractComponent,
+    primitives::Frustum,
+    view::VisibleEntities,
+};
+use bevy_transform::prelude::{GlobalTransform, Transform};
+
+#[derive(Component, Default, Reflect, Clone)]
+#[reflect(Component)]
+pub struct Camera2d {
+    pub clear_color: ClearColorConfig,
+}
+
+impl ExtractComponent for Camera2d {
+    type Query = &'static Self;
+    type Filter = With<Camera>;
+
+    fn extract_component(item: QueryItem<Self::Query>) -> Self {
+        item.clone()
+    }
+}
+
+#[derive(Bundle)]
+pub struct Camera2dBundle {
+    pub camera: Camera,
+    pub camera_render_graph: CameraRenderGraph,
+    pub projection: OrthographicProjection,
+    pub visible_entities: VisibleEntities,
+    pub frustum: Frustum,
+    pub transform: Transform,
+    pub global_transform: GlobalTransform,
+    pub camera_2d: Camera2d,
+}
+
+impl Default for Camera2dBundle {
+    fn default() -> Self {
+        Self::new_with_far(1000.0)
+    }
+}
+
+impl Camera2dBundle {
+    /// Create an orthographic projection camera with a custom Z position.
+    ///
+    /// The camera is placed at `Z=far-0.1`, looking toward the world origin `(0,0,0)`.
+    /// Its orthographic projection extends from `0.0` to `-far` in camera view space,
+    /// corresponding to `Z=far-0.1` (closest to camera) to `Z=-0.1` (furthest away from
+    /// camera) in world space.
+    pub fn new_with_far(far: f32) -> Self {
+        // we want 0 to be "closest" and +far to be "farthest" in 2d, so we offset
+        // the camera's translation by far and use a right handed coordinate system
+        let projection = OrthographicProjection {
+            far,
+            depth_calculation: DepthCalculation::ZDifference,
+            ..Default::default()
+        };
+        let transform = Transform::from_xyz(0.0, 0.0, far - 0.1);
+        let view_projection =
+            projection.get_projection_matrix() * transform.compute_matrix().inverse();
+        let frustum = Frustum::from_view_projection(
+            &view_projection,
+            &transform.translation,
+            &transform.back(),
+            projection.far(),
+        );
+        Self {
+            camera_render_graph: CameraRenderGraph::new(crate::core_2d::graph::NAME),
+            projection,
+            visible_entities: VisibleEntities::default(),
+            frustum,
+            transform,
+            global_transform: Default::default(),
+            camera: Camera::default(),
+            camera_2d: Camera2d::default(),
+        }
+    }
+}
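
The doc comment on `new_with_far` states that the camera sits at `Z=far-0.1` and sees world-space Z values from `far-0.1` down to `-0.1`. The arithmetic behind that statement can be checked with a small std-only sketch; the helper names `camera_z`, `visible_z_range`, and `is_z_visible` are hypothetical and do not exist in Bevy.

```rust
/// Offset between `far` and the camera position, taken from
/// `Transform::from_xyz(0.0, 0.0, far - 0.1)` in `new_with_far`.
pub const CAMERA_Z_OFFSET: f32 = 0.1;

/// World-space Z of the camera for a given `far` value.
pub fn camera_z(far: f32) -> f32 {
    far - CAMERA_Z_OFFSET
}

/// World-space Z range `(closest, furthest)` covered by the projection:
/// from the camera position to `far` units in front of it (toward -Z).
pub fn visible_z_range(far: f32) -> (f32, f32) {
    let closest = camera_z(far);
    let furthest = closest - far;
    (closest, furthest)
}

/// Whether an entity at world-space `z` falls inside that range.
pub fn is_z_visible(z: f32, far: f32) -> bool {
    let (closest, furthest) = visible_z_range(far);
    z <= closest && z >= furthest
}
```

With the default `far` of `1000.0`, sprites placed at `z = 0.0` are inside the range, while anything at `z = 1000.0` or below `z = -0.1` is outside it.
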

@@ -1,4 +1,7 @@
-use crate::Transparent2d;
+use crate::{
+    clear_color::{ClearColor, ClearColorConfig},
+    core_2d::{camera_2d::Camera2d, Transparent2d},
+};
 use bevy_ecs::prelude::*;
 use bevy_render::{
     render_graph::{Node, NodeRunError, RenderGraphContext, SlotInfo, SlotType},

@@ -9,8 +12,14 @@ use bevy_render::{
 };

 pub struct MainPass2dNode {
-    query:
-        QueryState<(&'static RenderPhase<Transparent2d>, &'static ViewTarget), With<ExtractedView>>,
+    query: QueryState<
+        (
+            &'static RenderPhase<Transparent2d>,
+            &'static ViewTarget,
+            &'static Camera2d,
+        ),
+        With<ExtractedView>,
+    >,
 }

 impl MainPass2dNode {

@@ -18,7 +27,7 @@ impl MainPass2dNode {

     pub fn new(world: &mut World) -> Self {
         Self {
-            query: QueryState::new(world),
+            query: world.query_filtered(),
         }
     }
 }

@@ -39,20 +48,24 @@ impl Node for MainPass2dNode {
         world: &World,
     ) -> Result<(), NodeRunError> {
         let view_entity = graph.get_input_entity(Self::IN_VIEW)?;
-        // If there is no view entity, do not try to process the render phase for the view
-        let (transparent_phase, target) = match self.query.get_manual(world, view_entity) {
-            Ok(it) => it,
-            _ => return Ok(()),
-        };
-
-        if transparent_phase.items.is_empty() {
+        let (transparent_phase, target, camera_2d) =
+            if let Ok(result) = self.query.get_manual(world, view_entity) {
+                result
+            } else {
+                // no target
                 return Ok(());
-        }
+            };

         let pass_descriptor = RenderPassDescriptor {
             label: Some("main_pass_2d"),
             color_attachments: &[target.get_color_attachment(Operations {
-                load: LoadOp::Load,
+                load: match camera_2d.clear_color {
+                    ClearColorConfig::Default => {
+                        LoadOp::Clear(world.resource::<ClearColor>().0.into())
+                    }
+                    ClearColorConfig::Custom(color) => LoadOp::Clear(color.into()),
+                    ClearColorConfig::None => LoadOp::Load,
+                },
                 store: true,
             })],
             depth_stencil_attachment: None,

crates/bevy_core_pipeline/src/core_2d/mod.rs (130 lines changed, normal file)
@@ -0,0 +1,130 @@
+mod camera_2d;
+mod main_pass_2d_node;
+
+pub mod graph {
+    pub const NAME: &str = "core_2d";
+    pub mod input {
+        pub const VIEW_ENTITY: &str = "view_entity";
+    }
+    pub mod node {
+        pub const MAIN_PASS: &str = "main_pass";
+    }
+}
+
+pub use camera_2d::*;
+pub use main_pass_2d_node::*;
+
+use bevy_app::{App, Plugin};
+use bevy_ecs::prelude::*;
+use bevy_render::{
+    camera::Camera,
+    extract_component::ExtractComponentPlugin,
+    render_graph::{RenderGraph, SlotInfo, SlotType},
+    render_phase::{
+        batch_phase_system, sort_phase_system, BatchedPhaseItem, CachedRenderPipelinePhaseItem,
+        DrawFunctionId, DrawFunctions, EntityPhaseItem, PhaseItem, RenderPhase,
+    },
+    render_resource::CachedRenderPipelineId,
+    RenderApp, RenderStage,
+};
+use bevy_utils::FloatOrd;
+use std::ops::Range;
+
+pub struct Core2dPlugin;
+
+impl Plugin for Core2dPlugin {
+    fn build(&self, app: &mut App) {
+        app.register_type::<Camera2d>()
+            .add_plugin(ExtractComponentPlugin::<Camera2d>::default());
+
+        let render_app = match app.get_sub_app_mut(RenderApp) {
+            Ok(render_app) => render_app,
+            Err(_) => return,
+        };
+
+        render_app
+            .init_resource::<DrawFunctions<Transparent2d>>()
+            .add_system_to_stage(RenderStage::Extract, extract_core_2d_camera_phases)
+            .add_system_to_stage(RenderStage::PhaseSort, sort_phase_system::<Transparent2d>)
+            .add_system_to_stage(RenderStage::PhaseSort, batch_phase_system::<Transparent2d>);
+
+        let pass_node_2d = MainPass2dNode::new(&mut render_app.world);
+        let mut graph = render_app.world.resource_mut::<RenderGraph>();
+
+        let mut draw_2d_graph = RenderGraph::default();
+        draw_2d_graph.add_node(graph::node::MAIN_PASS, pass_node_2d);
+        let input_node_id = draw_2d_graph.set_input(vec![SlotInfo::new(
+            graph::input::VIEW_ENTITY,
+            SlotType::Entity,
+        )]);
+        draw_2d_graph
+            .add_slot_edge(
+                input_node_id,
+                graph::input::VIEW_ENTITY,
+                graph::node::MAIN_PASS,
+                MainPass2dNode::IN_VIEW,
+            )
+            .unwrap();
+        graph.add_sub_graph(graph::NAME, draw_2d_graph);
+    }
+}
+
+pub struct Transparent2d {
+    pub sort_key: FloatOrd,
+    pub entity: Entity,
+    pub pipeline: CachedRenderPipelineId,
+    pub draw_function: DrawFunctionId,
+    /// Range in the vertex buffer of this item
+    pub batch_range: Option<Range<u32>>,
+}
+
+impl PhaseItem for Transparent2d {
+    type SortKey = FloatOrd;
+
+    #[inline]
+    fn sort_key(&self) -> Self::SortKey {
+        self.sort_key
+    }
+
+    #[inline]
+    fn draw_function(&self) -> DrawFunctionId {
+        self.draw_function
+    }
+}
+
+impl EntityPhaseItem for Transparent2d {
+    #[inline]
+    fn entity(&self) -> Entity {
+        self.entity
+    }
+}
+
+impl CachedRenderPipelinePhaseItem for Transparent2d {
+    #[inline]
+    fn cached_pipeline(&self) -> CachedRenderPipelineId {
+        self.pipeline
+    }
+}
+
+impl BatchedPhaseItem for Transparent2d {
+    fn batch_range(&self) -> &Option<Range<u32>> {
+        &self.batch_range
+    }
+
+    fn batch_range_mut(&mut self) -> &mut Option<Range<u32>> {
+        &mut self.batch_range
+    }
+}
+
+pub fn extract_core_2d_camera_phases(
+    mut commands: Commands,
+    cameras_2d: Query<(Entity, &Camera), With<Camera2d>>,
+) {
+    for (entity, camera) in cameras_2d.iter() {
+        if camera.is_active {
+            commands
+                .get_or_spawn(entity)
+                .insert(RenderPhase::<Transparent2d>::default());
+        }
+    }
+}
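
`extract_core_2d_camera_phases` is where the per-camera `is_active` flag takes effect: only active cameras receive a `RenderPhase`, so inactive cameras have nothing to draw. A minimal std-only model of that filtering step is sketched below. `Entity`, `Camera`, `RenderPhase`, and `extract_camera_phases` here are hypothetical, simplified stand-ins rather than the real Bevy types.

```rust
use std::collections::HashMap;

/// Hypothetical stand-in for an ECS entity id.
pub type Entity = u32;

/// Hypothetical, simplified camera: only the flag the extraction step reads.
#[derive(Clone, Copy, Debug)]
pub struct Camera {
    pub is_active: bool,
}

/// Hypothetical stand-in for `RenderPhase<Transparent2d>`: a list of queued draw items.
#[derive(Debug, Default, PartialEq)]
pub struct RenderPhase {
    pub items: Vec<u32>,
}

/// Gives every active camera an empty phase; inactive cameras get none,
/// so later steps have nothing to draw for them.
pub fn extract_camera_phases(cameras: &[(Entity, Camera)]) -> HashMap<Entity, RenderPhase> {
    cameras
        .iter()
        .filter(|(_, camera)| camera.is_active)
        .map(|(entity, _)| (*entity, RenderPhase::default()))
        .collect()
}
```

Because the filter is applied per camera, there is no single global switch in this model: toggling `is_active` on each camera is how a user decides what renders.
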

crates/bevy_core_pipeline/src/core_3d/camera_3d.rs (53 lines changed, normal file)
@@ -0,0 +1,53 @@
+use crate::clear_color::ClearColorConfig;
+use bevy_ecs::{prelude::*, query::QueryItem};
+use bevy_reflect::Reflect;
+use bevy_render::{
+    camera::{Camera, CameraRenderGraph, Projection},
+    extract_component::ExtractComponent,
+    primitives::Frustum,
+    view::VisibleEntities,
+};
+use bevy_transform::prelude::{GlobalTransform, Transform};
+
+#[derive(Component, Default, Reflect, Clone)]
+#[reflect(Component)]
+pub struct Camera3d {
+    pub clear_color: ClearColorConfig,
+}
+
+impl ExtractComponent for Camera3d {
+    type Query = &'static Self;
+    type Filter = With<Camera>;
+
+    fn extract_component(item: QueryItem<Self::Query>) -> Self {
+        item.clone()
+    }
+}
+
+#[derive(Bundle)]
+pub struct Camera3dBundle {
+    pub camera: Camera,
+    pub camera_render_graph: CameraRenderGraph,
+    pub projection: Projection,
+    pub visible_entities: VisibleEntities,
+    pub frustum: Frustum,
+    pub transform: Transform,
+    pub global_transform: GlobalTransform,
+    pub camera_3d: Camera3d,
+}
+
+// NOTE: ideally Perspective and Orthographic defaults can share the same impl, but sadly it breaks rust's type inference
+impl Default for Camera3dBundle {
+    fn default() -> Self {
+        Self {
+            camera_render_graph: CameraRenderGraph::new(crate::core_3d::graph::NAME),
+            camera: Default::default(),
+            projection: Default::default(),
+            visible_entities: Default::default(),
+            frustum: Default::default(),
+            transform: Default::default(),
+            global_transform: Default::default(),
+            camera_3d: Default::default(),
+        }
+    }
+}
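
`Camera3dBundle` stores its projection as a `Projection` enum rather than baking the projection kind into the bundle's type, which is what makes `.into()` conversions and runtime switching possible. The std-only sketch below illustrates the pattern. The trimmed-down structs, the `toggle` helper, and the choice of perspective as the default are assumptions made for this example, not a copy of the `bevy_render` definitions.

```rust
/// Hypothetical, trimmed-down perspective settings.
#[derive(Clone, Copy, Debug, PartialEq)]
pub struct PerspectiveProjection {
    pub fov: f32,
}

/// Hypothetical, trimmed-down orthographic settings.
#[derive(Clone, Copy, Debug, PartialEq)]
pub struct OrthographicProjection {
    pub scale: f32,
}

/// One component type that can hold either kind of projection.
#[derive(Clone, Copy, Debug, PartialEq)]
pub enum Projection {
    Perspective(PerspectiveProjection),
    Orthographic(OrthographicProjection),
}

impl From<PerspectiveProjection> for Projection {
    fn from(p: PerspectiveProjection) -> Self {
        Projection::Perspective(p)
    }
}

impl From<OrthographicProjection> for Projection {
    fn from(p: OrthographicProjection) -> Self {
        Projection::Orthographic(p)
    }
}

impl Default for Projection {
    // Assumption for this sketch: perspective is the default for 3d cameras.
    fn default() -> Self {
        Projection::Perspective(PerspectiveProjection {
            fov: std::f32::consts::FRAC_PI_4,
        })
    }
}

/// Swaps the projection kind at runtime without changing the component's type.
pub fn toggle(projection: &mut Projection) {
    *projection = match *projection {
        Projection::Perspective(_) => OrthographicProjection { scale: 1.0 }.into(),
        Projection::Orthographic(_) => Projection::default(),
    };
}
```

With a generic bundle, switching between perspective and orthographic would mean replacing one component type with another; with the enum it is a plain assignment, which is the editor scenario the commit message mentions.
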

@@ -1,4 +1,7 @@
-use crate::{AlphaMask3d, Opaque3d, Transparent3d};
+use crate::{
+    clear_color::{ClearColor, ClearColorConfig},
+    core_3d::{AlphaMask3d, Camera3d, Opaque3d, Transparent3d},
+};
 use bevy_ecs::prelude::*;
 use bevy_render::{
     render_graph::{Node, NodeRunError, RenderGraphContext, SlotInfo, SlotType},

@@ -16,6 +19,7 @@ pub struct MainPass3dNode {
             &'static RenderPhase<Opaque3d>,
             &'static RenderPhase<AlphaMask3d>,
             &'static RenderPhase<Transparent3d>,
+            &'static Camera3d,
             &'static ViewTarget,
             &'static ViewDepthTexture,
         ),

@@ -28,7 +32,7 @@ impl MainPass3dNode {

     pub fn new(world: &mut World) -> Self {
         Self {
-            query: QueryState::new(world),
+            query: world.query_filtered(),
         }
     }
 }

@@ -49,13 +53,16 @@ impl Node for MainPass3dNode {
         world: &World,
     ) -> Result<(), NodeRunError> {
         let view_entity = graph.get_input_entity(Self::IN_VIEW)?;
-        let (opaque_phase, alpha_mask_phase, transparent_phase, target, depth) =
+        let (opaque_phase, alpha_mask_phase, transparent_phase, camera_3d, target, depth) =
             match self.query.get_manual(world, view_entity) {
                 Ok(query) => query,
-                Err(_) => return Ok(()), // No window
+                Err(_) => {
+                    return Ok(());
+                } // No window
             };

-        if !opaque_phase.items.is_empty() {
+        // Always run opaque pass to ensure screen is cleared
+        {
             // Run the opaque pass, sorted front-to-back
             // NOTE: Scoped to drop the mutable borrow of render_context
             #[cfg(feature = "trace")]

@@ -65,14 +72,21 @@ impl Node for MainPass3dNode {
                 // NOTE: The opaque pass loads the color
                 // buffer as well as writing to it.
                 color_attachments: &[target.get_color_attachment(Operations {
-                    load: LoadOp::Load,
+                    load: match camera_3d.clear_color {
+                        ClearColorConfig::Default => {
+                            LoadOp::Clear(world.resource::<ClearColor>().0.into())
+                        }
+                        ClearColorConfig::Custom(color) => LoadOp::Clear(color.into()),
+                        ClearColorConfig::None => LoadOp::Load,
+                    },
                     store: true,
                 })],
                 depth_stencil_attachment: Some(RenderPassDepthStencilAttachment {
                     view: &depth.view,
                     // NOTE: The opaque main pass loads the depth buffer and possibly overwrites it
                     depth_ops: Some(Operations {
-                        load: LoadOp::Load,
+                        // NOTE: 0.0 is the far plane due to bevy's use of reverse-z projections
+                        load: LoadOp::Clear(0.0),
                         store: true,
                     }),
                     stencil_ops: None,
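
The depth attachment is now cleared to `0.0`, which the code comment identifies as the far plane under reverse-z. Why that value works as "nothing drawn yet" can be shown with a one-pixel depth buffer. This is a std-only sketch that assumes a `Greater` depth comparison; `FAR_DEPTH`, `passes_depth_test`, and `draw` are hypothetical names, not Bevy or wgpu APIs.

```rust
/// Depth value the pass clears to; under reverse-z this is the far plane.
pub const FAR_DEPTH: f32 = 0.0;

/// Reverse-z depth test (assumed `Greater` comparison): a fragment wins
/// when its depth is larger, i.e. closer to the camera, than the stored one.
pub fn passes_depth_test(fragment_depth: f32, stored_depth: f32) -> bool {
    fragment_depth > stored_depth
}

/// Writes the fragment into a one-pixel "depth buffer" if it passes the test.
/// Returns `true` when the fragment was kept.
pub fn draw(depth_buffer: &mut f32, fragment_depth: f32) -> bool {
    if passes_depth_test(fragment_depth, *depth_buffer) {
        *depth_buffer = fragment_depth;
        true
    } else {
        false
    }
}
```

Starting from `FAR_DEPTH`, any fragment in front of the far plane passes, and later fragments are kept only if they are closer than what is already stored.
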

crates/bevy_core_pipeline/src/core_3d/mod.rs (250 lines changed, normal file)
@@ -0,0 +1,250 @@
+mod camera_3d;
+mod main_pass_3d_node;
+
+pub mod graph {
+    pub const NAME: &str = "core_3d";
+    pub mod input {
+        pub const VIEW_ENTITY: &str = "view_entity";
+    }
+    pub mod node {
+        pub const MAIN_PASS: &str = "main_pass";
+    }
+}
+
+pub use camera_3d::*;
+pub use main_pass_3d_node::*;
+
+use bevy_app::{App, Plugin};
+use bevy_ecs::prelude::*;
+use bevy_render::{
+    camera::{Camera, ExtractedCamera},
+    extract_component::ExtractComponentPlugin,
+    prelude::Msaa,
+    render_graph::{RenderGraph, SlotInfo, SlotType},
+    render_phase::{
+        sort_phase_system, CachedRenderPipelinePhaseItem, DrawFunctionId, DrawFunctions,
+        EntityPhaseItem, PhaseItem, RenderPhase,
+    },
+    render_resource::{
+        CachedRenderPipelineId, Extent3d, TextureDescriptor, TextureDimension, TextureFormat,
+        TextureUsages,
+    },
+    renderer::RenderDevice,
+    texture::TextureCache,
+    view::{ExtractedView, ViewDepthTexture},
+    RenderApp, RenderStage,
+};
+use bevy_utils::{FloatOrd, HashMap};
+
+pub struct Core3dPlugin;
+
+impl Plugin for Core3dPlugin {
+    fn build(&self, app: &mut App) {
+        app.register_type::<Camera3d>()
+            .add_plugin(ExtractComponentPlugin::<Camera3d>::default());
+
+        let render_app = match app.get_sub_app_mut(RenderApp) {
+            Ok(render_app) => render_app,
+            Err(_) => return,
+        };
+
+        render_app
+            .init_resource::<DrawFunctions<Opaque3d>>()
+            .init_resource::<DrawFunctions<AlphaMask3d>>()
+            .init_resource::<DrawFunctions<Transparent3d>>()
+            .add_system_to_stage(RenderStage::Extract, extract_core_3d_camera_phases)
+            .add_system_to_stage(RenderStage::Prepare, prepare_core_3d_views_system)
+            .add_system_to_stage(RenderStage::PhaseSort, sort_phase_system::<Opaque3d>)
+            .add_system_to_stage(RenderStage::PhaseSort, sort_phase_system::<AlphaMask3d>)
+            .add_system_to_stage(RenderStage::PhaseSort, sort_phase_system::<Transparent3d>);
+
+        let pass_node_3d = MainPass3dNode::new(&mut render_app.world);
+        let mut graph = render_app.world.resource_mut::<RenderGraph>();
+
+        let mut draw_3d_graph = RenderGraph::default();

|

||||

draw_3d_graph.add_node(graph::node::MAIN_PASS, pass_node_3d);

|

||||

let input_node_id = draw_3d_graph.set_input(vec![SlotInfo::new(

|

||||

graph::input::VIEW_ENTITY,

|

||||

SlotType::Entity,

|

||||

)]);

|

||||

draw_3d_graph

|

||||

.add_slot_edge(

|

||||

input_node_id,

|

||||

graph::input::VIEW_ENTITY,

|

||||

graph::node::MAIN_PASS,

|

||||

MainPass3dNode::IN_VIEW,

|

||||

)

|

||||

.unwrap();

|

||||

graph.add_sub_graph(graph::NAME, draw_3d_graph);

|

||||

}

|

||||

}

|

||||

|

||||

pub struct Opaque3d {

|

||||

pub distance: f32,

|

||||

pub pipeline: CachedRenderPipelineId,

|

||||

pub entity: Entity,

|

||||

pub draw_function: DrawFunctionId,

|

||||

}

|

||||

|

||||

impl PhaseItem for Opaque3d {

|

||||

type SortKey = FloatOrd;

|

||||

|

||||

#[inline]

|

||||

fn sort_key(&self) -> Self::SortKey {

|

||||

FloatOrd(self.distance)

|

||||

}

|

||||

|

||||

#[inline]

|

||||

fn draw_function(&self) -> DrawFunctionId {

|

||||

self.draw_function

|

||||

}

|

||||

}

|

||||

|

||||

impl EntityPhaseItem for Opaque3d {

|

||||

#[inline]

|

||||

fn entity(&self) -> Entity {

|

||||

self.entity

|

||||

}

|

||||

}

|

||||

|

||||

impl CachedRenderPipelinePhaseItem for Opaque3d {

|

||||

#[inline]

|

||||

fn cached_pipeline(&self) -> CachedRenderPipelineId {

|

||||

self.pipeline

|

||||

}

|

||||

}

|

||||

|

||||

pub struct AlphaMask3d {

|

||||

pub distance: f32,

|

||||

pub pipeline: CachedRenderPipelineId,

|

||||

pub entity: Entity,

|

||||

pub draw_function: DrawFunctionId,

|

||||

}

|

||||

|

||||

impl PhaseItem for AlphaMask3d {

|

||||

type SortKey = FloatOrd;

|

||||

|

||||

#[inline]

|

||||

fn sort_key(&self) -> Self::SortKey {

|

||||

FloatOrd(self.distance)

|

||||

}

|

||||

|

||||

#[inline]

|

||||

fn draw_function(&self) -> DrawFunctionId {

|

||||

self.draw_function

|

||||

}

|

||||

}

|

||||

|

||||

impl EntityPhaseItem for AlphaMask3d {

|

||||

#[inline]

|

||||

fn entity(&self) -> Entity {

|

||||

self.entity

|

||||

}

|

||||

}

|

||||

|

||||

impl CachedRenderPipelinePhaseItem for AlphaMask3d {

|

||||

#[inline]

|

||||

fn cached_pipeline(&self) -> CachedRenderPipelineId {

|

||||

self.pipeline

|

||||

}

|

||||

}

|

||||

|

||||

pub struct Transparent3d {

|

||||

pub distance: f32,

|

||||

pub pipeline: CachedRenderPipelineId,

|

||||

pub entity: Entity,

|

||||

pub draw_function: DrawFunctionId,

|

||||

}

|

||||

|

||||

impl PhaseItem for Transparent3d {

|

||||

type SortKey = FloatOrd;

|

||||

|

||||

#[inline]

|

||||

fn sort_key(&self) -> Self::SortKey {

|

||||

FloatOrd(self.distance)

|

||||

}

|

||||

|

||||

#[inline]

|

||||

fn draw_function(&self) -> DrawFunctionId {

|

||||

self.draw_function

|

||||

}

|

||||

}

|

||||

|

||||

impl EntityPhaseItem for Transparent3d {

|

||||

#[inline]

|

||||

fn entity(&self) -> Entity {

|

||||

self.entity

|

||||

}

|

||||

}

|

||||

|

||||

impl CachedRenderPipelinePhaseItem for Transparent3d {

|

||||

#[inline]

|

||||

fn cached_pipeline(&self) -> CachedRenderPipelineId {

|

||||

self.pipeline

|

||||

}

|

||||

}

|

||||

|

||||

pub fn extract_core_3d_camera_phases(

|

||||

mut commands: Commands,

|

||||

cameras_3d: Query<(Entity, &Camera), With<Camera3d>>,

|

||||

) {

|

||||

for (entity, camera) in cameras_3d.iter() {

|

||||

if camera.is_active {

|

||||

commands.get_or_spawn(entity).insert_bundle((

|

||||

RenderPhase::<Opaque3d>::default(),

|

||||

RenderPhase::<AlphaMask3d>::default(),

|

||||

RenderPhase::<Transparent3d>::default(),

|

||||

));

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

pub fn prepare_core_3d_views_system(

|

||||

mut commands: Commands,

|

||||

mut texture_cache: ResMut<TextureCache>,

|

||||

msaa: Res<Msaa>,

|

||||

render_device: Res<RenderDevice>,

|

||||

views_3d: Query<

|

||||

(Entity, &ExtractedView, Option<&ExtractedCamera>),

|

||||

(

|

||||

With<RenderPhase<Opaque3d>>,

|

||||

With<RenderPhase<AlphaMask3d>>,

|

||||

With<RenderPhase<Transparent3d>>,

|

||||

),

|

||||

>,

|

||||

) {

|

||||

let mut textures = HashMap::default();

|

||||

for (entity, view, camera) in views_3d.iter() {

|

||||

let mut get_cached_texture = || {

|

||||

texture_cache.get(

|

||||

&render_device,

|

||||

TextureDescriptor {

|

||||

label: Some("view_depth_texture"),

|

||||

size: Extent3d {

|

||||

depth_or_array_layers: 1,

|

||||

width: view.width as u32,

|

||||

height: view.height as u32,

|

||||

},

|

||||

mip_level_count: 1,

|

||||

sample_count: msaa.samples,

|

||||

dimension: TextureDimension::D2,

|

||||

format: TextureFormat::Depth32Float, /* PERF: vulkan docs recommend using 24

|

||||

* bit depth for better performance */

|

||||

usage: TextureUsages::RENDER_ATTACHMENT,

|

||||

},

|

||||

)

|

||||

};

|

||||

let cached_texture = if let Some(camera) = camera {

|

||||

textures

|

||||

.entry(camera.target.clone())

|

||||

.or_insert_with(get_cached_texture)

|

||||

.clone()

|

||||

} else {

|

||||

get_cached_texture()

|

||||

};

|

||||

commands.entity(entity).insert(ViewDepthTexture {

|

||||

texture: cached_texture.texture,

|

||||

view: cached_texture.default_view,

|

||||

});

|

||||

}

|

||||

}

|

||||

|

|

@ -1,419 +1,28 @@

|

|||

mod clear_pass;

|

||||

mod clear_pass_driver;

|

||||

mod main_pass_2d;

|

||||

mod main_pass_3d;

|

||||

mod main_pass_driver;

|

||||

pub mod clear_color;

|

||||

pub mod core_2d;

|

||||

pub mod core_3d;

|

||||

|

||||

pub mod prelude {

|

||||

#[doc(hidden)]

|

||||

pub use crate::ClearColor;

|

||||

}

|

||||

|

||||

use bevy_utils::HashMap;

|

||||

|

||||

pub use clear_pass::*;

|

||||

pub use clear_pass_driver::*;

|

||||

pub use main_pass_2d::*;

|

||||

pub use main_pass_3d::*;

|

||||

pub use main_pass_driver::*;

|

||||

|

||||

use std::ops::Range;

|

||||

|

||||

use bevy_app::{App, Plugin};

|

||||

use bevy_ecs::prelude::*;

|

||||

use bevy_render::{

|

||||

camera::{ActiveCamera, Camera2d, Camera3d, ExtractedCamera, RenderTarget},

|

||||

color::Color,

|

||||

extract_resource::{ExtractResource, ExtractResourcePlugin},

|

||||

render_graph::{EmptyNode, RenderGraph, SlotInfo, SlotType},

|

||||

render_phase::{

|

||||

batch_phase_system, sort_phase_system, BatchedPhaseItem, CachedRenderPipelinePhaseItem,

|

||||

DrawFunctionId, DrawFunctions, EntityPhaseItem, PhaseItem, RenderPhase,

|

||||

},

|

||||

render_resource::*,

|

||||

renderer::RenderDevice,

|

||||

texture::TextureCache,

|

||||

view::{ExtractedView, Msaa, ViewDepthTexture},

|

||||

RenderApp, RenderStage,

|

||||

pub use crate::{

|

||||

clear_color::ClearColor,

|

||||

core_2d::{Camera2d, Camera2dBundle},

|

||||

core_3d::{Camera3d, Camera3dBundle},

|

||||

};

|

||||

use bevy_utils::FloatOrd;

|

||||

|

||||

/// When used as a resource, sets the color that is used to clear the screen between frames.

|

||||

///

|

||||

/// This color appears as the "background" color for simple apps, when

|

||||

/// there are portions of the screen with nothing rendered.

|

||||

#[derive(Clone, Debug, ExtractResource)]

|

||||

pub struct ClearColor(pub Color);

|

||||

|

||||

impl Default for ClearColor {

|

||||

fn default() -> Self {

|

||||

Self(Color::rgb(0.4, 0.4, 0.4))

|

||||

}

|

||||

}

|

||||

|

||||

#[derive(Clone, Debug, Default, ExtractResource)]

|

||||

pub struct RenderTargetClearColors {

|

||||

colors: HashMap<RenderTarget, Color>,

|

||||

}

|

||||

|

||||

impl RenderTargetClearColors {

|

||||

pub fn get(&self, target: &RenderTarget) -> Option<&Color> {

|

||||

self.colors.get(target)

|

||||

}

|

||||

pub fn insert(&mut self, target: RenderTarget, color: Color) {

|

||||

self.colors.insert(target, color);

|

||||

}

|

||||

}

|

||||

|

||||

// Plugins that contribute to the RenderGraph should use the following label conventions:

|

||||

// 1. Graph modules should have a NAME, input module, and node module (where relevant)

|

||||

// 2. The "top level" graph is the plugin module root. Just add things like `pub mod node` directly under the plugin module

|

||||

// 3. "sub graph" modules should be nested beneath their parent graph module

|

||||

|

||||

pub mod node {

|

||||

pub const MAIN_PASS_DEPENDENCIES: &str = "main_pass_dependencies";

|

||||

pub const MAIN_PASS_DRIVER: &str = "main_pass_driver";

|

||||

pub const CLEAR_PASS_DRIVER: &str = "clear_pass_driver";

|

||||

}

|

||||

|

||||

pub mod draw_2d_graph {

|

||||

pub const NAME: &str = "draw_2d";

|

||||

pub mod input {

|

||||

pub const VIEW_ENTITY: &str = "view_entity";

|

||||

}

|

||||

pub mod node {

|

||||

pub const MAIN_PASS: &str = "main_pass";

|

||||

}

|

||||

}

|

||||

|

||||

pub mod draw_3d_graph {

|

||||

pub const NAME: &str = "draw_3d";

|

||||

pub mod input {

|

||||

pub const VIEW_ENTITY: &str = "view_entity";

|

||||

}

|

||||

pub mod node {

|

||||

pub const MAIN_PASS: &str = "main_pass";

|

||||

}

|

||||

}

|

||||

|

||||

pub mod clear_graph {

|

||||

pub const NAME: &str = "clear";

|

||||

pub mod node {

|

||||

pub const CLEAR_PASS: &str = "clear_pass";

|

||||

}

|

||||

}

|

||||

use crate::{clear_color::ClearColor, core_2d::Core2dPlugin, core_3d::Core3dPlugin};

|

||||

use bevy_app::{App, Plugin};

|

||||

use bevy_render::extract_resource::ExtractResourcePlugin;

|

||||

|

||||

#[derive(Default)]

|

||||

pub struct CorePipelinePlugin;

|

||||

|

||||

#[derive(Debug, Hash, PartialEq, Eq, Clone, SystemLabel)]

|

||||

pub enum CorePipelineRenderSystems {

|

||||

SortTransparent2d,

|

||||

}

|

||||

|

||||

impl Plugin for CorePipelinePlugin {

|

||||

fn build(&self, app: &mut App) {

|

||||

app.init_resource::<ClearColor>()

|

||||

.init_resource::<RenderTargetClearColors>()

|

||||

.add_plugin(ExtractResourcePlugin::<ClearColor>::default())

|

||||

.add_plugin(ExtractResourcePlugin::<RenderTargetClearColors>::default());

|

||||

|

||||

let render_app = match app.get_sub_app_mut(RenderApp) {

|

||||

Ok(render_app) => render_app,

|

||||

Err(_) => return,

|

||||

};

|

||||

|

||||

render_app

|

||||

.init_resource::<DrawFunctions<Transparent2d>>()

|

||||

.init_resource::<DrawFunctions<Opaque3d>>()

|

||||

.init_resource::<DrawFunctions<AlphaMask3d>>()

|

||||

.init_resource::<DrawFunctions<Transparent3d>>()

|

||||

.add_system_to_stage(RenderStage::Extract, extract_core_pipeline_camera_phases)

|

||||

.add_system_to_stage(RenderStage::Prepare, prepare_core_views_system)

|

||||

.add_system_to_stage(

|

||||

RenderStage::PhaseSort,

|

||||

sort_phase_system::<Transparent2d>

|

||||

.label(CorePipelineRenderSystems::SortTransparent2d),

|

||||

)

|

||||

.add_system_to_stage(

|

||||

RenderStage::PhaseSort,

|

||||

batch_phase_system::<Transparent2d>

|

||||

.after(CorePipelineRenderSystems::SortTransparent2d),

|

||||

)

|

||||

.add_system_to_stage(RenderStage::PhaseSort, sort_phase_system::<Opaque3d>)

|

||||

.add_system_to_stage(RenderStage::PhaseSort, sort_phase_system::<AlphaMask3d>)

|

||||

.add_system_to_stage(RenderStage::PhaseSort, sort_phase_system::<Transparent3d>);

|

||||

|

||||

let clear_pass_node = ClearPassNode::new(&mut render_app.world);

|

||||

let pass_node_2d = MainPass2dNode::new(&mut render_app.world);

|

||||

let pass_node_3d = MainPass3dNode::new(&mut render_app.world);

|

||||

let mut graph = render_app.world.resource_mut::<RenderGraph>();

|

||||

|

||||

let mut draw_2d_graph = RenderGraph::default();

|

||||

draw_2d_graph.add_node(draw_2d_graph::node::MAIN_PASS, pass_node_2d);

|

||||

let input_node_id = draw_2d_graph.set_input(vec![SlotInfo::new(

|

||||

draw_2d_graph::input::VIEW_ENTITY,

|

||||

SlotType::Entity,

|

||||

)]);

|

||||

draw_2d_graph

|

||||

.add_slot_edge(

|

||||

input_node_id,

|

||||

draw_2d_graph::input::VIEW_ENTITY,

|

||||

draw_2d_graph::node::MAIN_PASS,

|

||||

MainPass2dNode::IN_VIEW,

|

||||

)

|

||||

.unwrap();

|

||||

graph.add_sub_graph(draw_2d_graph::NAME, draw_2d_graph);

|

||||

|

||||

let mut draw_3d_graph = RenderGraph::default();

|

||||

draw_3d_graph.add_node(draw_3d_graph::node::MAIN_PASS, pass_node_3d);

|

||||

let input_node_id = draw_3d_graph.set_input(vec![SlotInfo::new(

|

||||

draw_3d_graph::input::VIEW_ENTITY,

|

||||

SlotType::Entity,

|

||||

)]);

|

||||

draw_3d_graph

|

||||

.add_slot_edge(

|

||||

input_node_id,

|

||||

draw_3d_graph::input::VIEW_ENTITY,

|

||||

draw_3d_graph::node::MAIN_PASS,

|

||||

MainPass3dNode::IN_VIEW,

|

||||

)

|

||||

.unwrap();

|

||||

graph.add_sub_graph(draw_3d_graph::NAME, draw_3d_graph);

|

||||

|

||||

let mut clear_graph = RenderGraph::default();

|

||||

clear_graph.add_node(clear_graph::node::CLEAR_PASS, clear_pass_node);

|

||||

graph.add_sub_graph(clear_graph::NAME, clear_graph);

|

||||

|

||||

graph.add_node(node::MAIN_PASS_DEPENDENCIES, EmptyNode);

|

||||

graph.add_node(node::MAIN_PASS_DRIVER, MainPassDriverNode);

|

||||

graph

|

||||

.add_node_edge(node::MAIN_PASS_DEPENDENCIES, node::MAIN_PASS_DRIVER)

|

||||

.unwrap();

|

||||

graph.add_node(node::CLEAR_PASS_DRIVER, ClearPassDriverNode);

|

||||

graph

|

||||

.add_node_edge(node::CLEAR_PASS_DRIVER, node::MAIN_PASS_DRIVER)

|

||||

.unwrap();

|

||||

}

|

||||

}

|

||||

|

||||

pub struct Transparent2d {

|

||||

pub sort_key: FloatOrd,

|

||||

pub entity: Entity,

|

||||

pub pipeline: CachedRenderPipelineId,

|

||||

pub draw_function: DrawFunctionId,

|

||||

/// Range in the vertex buffer of this item

|

||||

pub batch_range: Option<Range<u32>>,

|

||||

}

|

||||

|

||||

impl PhaseItem for Transparent2d {

|

||||

type SortKey = FloatOrd;

|

||||

|

||||

#[inline]

|

||||

fn sort_key(&self) -> Self::SortKey {

|

||||

self.sort_key

|

||||

}

|

||||

|

||||

#[inline]

|

||||

fn draw_function(&self) -> DrawFunctionId {

|

||||

self.draw_function

|

||||

}

|

||||

}

|

||||

|

||||

impl EntityPhaseItem for Transparent2d {

|

||||

#[inline]

|

||||

fn entity(&self) -> Entity {

|

||||

self.entity

|

||||

}

|

||||

}

|

||||

|

||||

impl CachedRenderPipelinePhaseItem for Transparent2d {

|

||||

#[inline]

|

||||

fn cached_pipeline(&self) -> CachedRenderPipelineId {

|

||||

self.pipeline

|

||||

}

|

||||

}

|

||||

|

||||

impl BatchedPhaseItem for Transparent2d {

|

||||

fn batch_range(&self) -> &Option<Range<u32>> {

|

||||

&self.batch_range

|

||||

}

|

||||

|

||||

fn batch_range_mut(&mut self) -> &mut Option<Range<u32>> {

|

||||

&mut self.batch_range

|

||||

}

|

||||

}

|

||||

|

||||

pub struct Opaque3d {

|

||||

pub distance: f32,

|

||||

pub pipeline: CachedRenderPipelineId,

|

||||

pub entity: Entity,

|

||||

pub draw_function: DrawFunctionId,

|

||||

}

|

||||

|

||||

impl PhaseItem for Opaque3d {

|

||||

type SortKey = FloatOrd;

|

||||

|

||||

#[inline]

|

||||

fn sort_key(&self) -> Self::SortKey {

|

||||

FloatOrd(self.distance)

|

||||

}

|

||||

|

||||

#[inline]

|

||||

fn draw_function(&self) -> DrawFunctionId {

|

||||

self.draw_function

|

||||

}

|

||||

}

|

||||

|

||||

impl EntityPhaseItem for Opaque3d {

|

||||

#[inline]

|

||||

fn entity(&self) -> Entity {

|

||||

self.entity

|

||||

}

|

||||

}

|

||||

|

||||

impl CachedRenderPipelinePhaseItem for Opaque3d {

|

||||

#[inline]

|

||||

fn cached_pipeline(&self) -> CachedRenderPipelineId {

|

||||

self.pipeline

|

||||

}

|

||||

}

|

||||

|

||||

pub struct AlphaMask3d {

|

||||

pub distance: f32,

|

||||

pub pipeline: CachedRenderPipelineId,

|

||||

pub entity: Entity,

|

||||

pub draw_function: DrawFunctionId,

|

||||

}

|

||||

|

||||

impl PhaseItem for AlphaMask3d {

|

||||

type SortKey = FloatOrd;

|

||||

|

||||

#[inline]

|

||||

fn sort_key(&self) -> Self::SortKey {

|

||||

FloatOrd(self.distance)

|

||||

}

|

||||

|

||||

#[inline]

|

||||

fn draw_function(&self) -> DrawFunctionId {

|

||||

self.draw_function

|

||||

}

|

||||

}

|

||||

|

||||

impl EntityPhaseItem for AlphaMask3d {

|

||||

#[inline]

|

||||

fn entity(&self) -> Entity {

|

||||

self.entity

|

||||

}

|

||||

}

|

||||

|

||||

impl CachedRenderPipelinePhaseItem for AlphaMask3d {

|

||||

#[inline]

|

||||

fn cached_pipeline(&self) -> CachedRenderPipelineId {

|

||||

self.pipeline

|

||||

}

|

||||

}

|

||||

|

||||

pub struct Transparent3d {

|

||||

pub distance: f32,

|

||||

pub pipeline: CachedRenderPipelineId,

|

||||

pub entity: Entity,

|

||||

pub draw_function: DrawFunctionId,

|

||||

}

|

||||

|

||||

impl PhaseItem for Transparent3d {

|

||||

type SortKey = FloatOrd;

|

||||

|

||||

#[inline]

|

||||

fn sort_key(&self) -> Self::SortKey {

|

||||

FloatOrd(self.distance)

|

||||

}

|

||||

|

||||

#[inline]

|

||||

fn draw_function(&self) -> DrawFunctionId {

|

||||

self.draw_function

|

||||

}

|

||||

}

|

||||

|

||||

impl EntityPhaseItem for Transparent3d {

|

||||

#[inline]

|

||||

fn entity(&self) -> Entity {

|

||||

self.entity

|

||||

}

|

||||

}

|

||||

|

||||

impl CachedRenderPipelinePhaseItem for Transparent3d {

|

||||

#[inline]

|

||||

fn cached_pipeline(&self) -> CachedRenderPipelineId {

|

||||

self.pipeline

|

||||

}

|

||||

}

|

||||

|

||||

pub fn extract_core_pipeline_camera_phases(

|

||||

mut commands: Commands,

|

||||

active_2d: Res<ActiveCamera<Camera2d>>,

|

||||

active_3d: Res<ActiveCamera<Camera3d>>,

|

||||

) {

|

||||

if let Some(entity) = active_2d.get() {

|

||||

commands

|

||||

.get_or_spawn(entity)

|

||||

.insert(RenderPhase::<Transparent2d>::default());

|

||||

}

|

||||

if let Some(entity) = active_3d.get() {

|

||||

commands.get_or_spawn(entity).insert_bundle((

|

||||

RenderPhase::<Opaque3d>::default(),

|

||||

RenderPhase::<AlphaMask3d>::default(),

|

||||

RenderPhase::<Transparent3d>::default(),

|

||||

));

|

||||

}

|

||||

}

|

||||

|

||||

pub fn prepare_core_views_system(

|

||||

mut commands: Commands,

|

||||

mut texture_cache: ResMut<TextureCache>,

|

||||

msaa: Res<Msaa>,

|

||||

render_device: Res<RenderDevice>,

|

||||

views_3d: Query<

|

||||

(Entity, &ExtractedView, Option<&ExtractedCamera>),

|

||||

(

|

||||

With<RenderPhase<Opaque3d>>,

|

||||

With<RenderPhase<AlphaMask3d>>,

|

||||

With<RenderPhase<Transparent3d>>,

|

||||

),

|

||||

>,

|

||||

) {

|

||||

let mut textures = HashMap::default();

|

||||

for (entity, view, camera) in views_3d.iter() {

|

||||

let mut get_cached_texture = || {

|

||||

texture_cache.get(

|

||||

&render_device,

|

||||

TextureDescriptor {

|

||||

label: Some("view_depth_texture"),

|

||||

size: Extent3d {

|

||||

depth_or_array_layers: 1,

|

||||

width: view.width as u32,

|

||||

height: view.height as u32,

|

||||

},

|

||||

mip_level_count: 1,

|

||||

sample_count: msaa.samples,

|

||||

dimension: TextureDimension::D2,

|

||||

format: TextureFormat::Depth32Float, /* PERF: vulkan docs recommend using 24

|

||||

* bit depth for better performance */

|

||||

usage: TextureUsages::RENDER_ATTACHMENT,

|

||||

},

|

||||

)

|

||||

};

|

||||

let cached_texture = if let Some(camera) = camera {

|

||||

textures

|

||||

.entry(camera.target.clone())

|

||||

.or_insert_with(get_cached_texture)

|

||||

.clone()

|

||||

} else {

|

||||

get_cached_texture()

|

||||

};

|

||||

commands.entity(entity).insert(ViewDepthTexture {

|

||||

texture: cached_texture.texture,

|

||||

view: cached_texture.default_view,

|

||||

});

|

||||

.add_plugin(Core2dPlugin)

|

||||

.add_plugin(Core3dPlugin);

|

||||

}

|

||||

}

|

||||

|

|

|

|||

|

|

@ -1,33 +0,0 @@

|

|||

use bevy_ecs::world::World;

|

||||

use bevy_render::{

|

||||

camera::{ActiveCamera, Camera2d, Camera3d},

|

||||

render_graph::{Node, NodeRunError, RenderGraphContext, SlotValue},

|

||||

renderer::RenderContext,

|

||||

};

|

||||

|

||||

pub struct MainPassDriverNode;

|

||||

|

||||

impl Node for MainPassDriverNode {

|

||||

fn run(

|

||||

&self,

|

||||

graph: &mut RenderGraphContext,

|

||||

_render_context: &mut RenderContext,

|

||||

world: &World,

|

||||

) -> Result<(), NodeRunError> {

|

||||

if let Some(camera_3d) = world.resource::<ActiveCamera<Camera3d>>().get() {

|

||||

graph.run_sub_graph(

|

||||

crate::draw_3d_graph::NAME,

|

||||

vec![SlotValue::Entity(camera_3d)],

|

||||

)?;

|

||||

}

|

||||

|

||||

if let Some(camera_2d) = world.resource::<ActiveCamera<Camera2d>>().get() {

|

||||

graph.run_sub_graph(

|

||||

crate::draw_2d_graph::NAME,

|

||||

vec![SlotValue::Entity(camera_2d)],

|

||||

)?;

|

||||

}

|

||||

|

||||

Ok(())

|

||||

}

|

||||

}

|

||||

|

|

@ -14,6 +14,7 @@ bevy_animation = { path = "../bevy_animation", version = "0.8.0-dev", optional =

|

|||

bevy_app = { path = "../bevy_app", version = "0.8.0-dev" }

|

||||

bevy_asset = { path = "../bevy_asset", version = "0.8.0-dev" }

|

||||

bevy_core = { path = "../bevy_core", version = "0.8.0-dev" }

|

||||

bevy_core_pipeline = { path = "../bevy_core_pipeline", version = "0.8.0-dev" }

|

||||

bevy_ecs = { path = "../bevy_ecs", version = "0.8.0-dev" }

|

||||

bevy_hierarchy = { path = "../bevy_hierarchy", version = "0.8.0-dev" }

|

||||

bevy_log = { path = "../bevy_log", version = "0.8.0-dev" }

|

||||

|

|

|

|||

|

|

@ -3,6 +3,7 @@ use bevy_asset::{

|

|||

AssetIoError, AssetLoader, AssetPath, BoxedFuture, Handle, LoadContext, LoadedAsset,

|

||||

};

|

||||

use bevy_core::Name;

|

||||

use bevy_core_pipeline::prelude::Camera3d;

|

||||

use bevy_ecs::{entity::Entity, prelude::FromWorld, world::World};

|

||||

use bevy_hierarchy::{BuildWorldChildren, WorldChildBuilder};

|

||||

use bevy_log::warn;

|

||||

|

|

@ -13,7 +14,7 @@ use bevy_pbr::{

|

|||

};

|

||||

use bevy_render::{

|

||||

camera::{

|

||||

Camera, Camera3d, CameraProjection, OrthographicProjection, PerspectiveProjection,

|

||||

Camera, CameraRenderGraph, OrthographicProjection, PerspectiveProjection, Projection,

|

||||

ScalingMode,

|

||||

},

|

||||

color::Color,

|

||||

|

|

@ -459,6 +460,7 @@ async fn load_gltf<'a, 'b>(

|

|||

|

||||

let mut scenes = vec![];

|

||||

let mut named_scenes = HashMap::default();

|

||||

let mut active_camera_found = false;

|

||||

for scene in gltf.scenes() {

|

||||