Mirror of https://github.com/bevyengine/bevy (synced 2024-12-18 17:13:10 +00:00)

Improve profiling instructions (#16299)

# Objective

- Make it easier to understand how to profile things.
- Talk about CPU vs GPU work for graphics.

## Solution

- Add a section on GPU profiling and CPU vs GPU work.
- Rearrange some sections so Tracy is the first backend mentioned.

## Issues

I did this as a very quick fix to clear some things up, but the overall guide still flows poorly and has too much extraneous info distracting from the use case of "I just want to figure out why my app is slow", where the advice should be "use Tracy, and if GPU bottlenecked, do this". Someone should do a full rewrite at some point.

I chose to omit talking about `RenderDiagnosticsPlugin`, but it's definitely worth a mention as a way to easily check CPU + GPU time for graphics work, although it's not hooked up in a lot of the engine, iirc only shadows and the main passes. Again, someone else should write about it in the future.

Similarly, it might've been useful to have a section describing how to use Nsight/RGP/IGA/Xcode for GPU profiling, as they're far from intuitive tools.

parent f87b9fe20c

commit 83c729162f

1 changed file with 64 additions and 36 deletions

|

|

@ -2,36 +2,59 @@

|

||||||

|

|

||||||

## Table of Contents

|

## Table of Contents

|

||||||

|

|

||||||

- [Runtime](#runtime)

|

- [CPU runtime](#cpu-runtime)

|

||||||

- [Chrome tracing format](#chrome-tracing-format)

|

- [Overview](#overview)

|

||||||

- [Tracy profiler](#tracy-profiler)

|

|

||||||

- [Adding your own spans](#adding-your-own-spans)

|

- [Adding your own spans](#adding-your-own-spans)

|

||||||

|

- [Tracy profiler](#tracy-profiler)

|

||||||

|

- [Chrome tracing format](#chrome-tracing-format)

|

||||||

- [Perf flame graph](#perf-flame-graph)

|

- [Perf flame graph](#perf-flame-graph)

|

||||||

|

- [GPU runtime](#gpu-runtime)

|

||||||

- [Compile time](#compile-time)

|

- [Compile time](#compile-time)

|

||||||

|

|

||||||

## Runtime

|

## CPU runtime

|

||||||

|

|

||||||

|

### Overview

|

||||||

|

|

||||||

Bevy has built-in [tracing](https://github.com/tokio-rs/tracing) spans to make it cheap and easy to profile Bevy ECS systems, render logic, engine internals, and user app code. Enable the `trace` cargo feature to enable Bevy's built-in spans.

|

Bevy has built-in [tracing](https://github.com/tokio-rs/tracing) spans to make it cheap and easy to profile Bevy ECS systems, render logic, engine internals, and user app code. Enable the `trace` cargo feature to enable Bevy's built-in spans.

|

||||||

|

|

||||||

If you also want to include `wgpu` tracing spans when profiling, they are emitted at the `tracing` `info` level so you will need to make sure they are not filtered out by the `LogSettings` resource's `filter` member which defaults to `wgpu=error`. You can do this by setting the `RUST_LOG=info` environment variable when running your application.

|

If you also want to include `wgpu` tracing spans when profiling, they are emitted at the `tracing` `info` level so you will need to make sure they are not filtered out by the `LogSettings` resource's `filter` member which defaults to `wgpu=error`. You can do this by setting the `RUST_LOG=info` environment variable when running your application.

|

||||||

|

|

||||||

You also need to select a `tracing` backend using one of the following cargo features.

|

You also need to select a `tracing` backend using one of the cargo features described in the below sections.

|

||||||

|

|

||||||

**⚠️ Note**: for users of [span](https://docs.rs/tracing/0.1.37/tracing/index.html) based profilers

|

> [!NOTE]

|

||||||

|

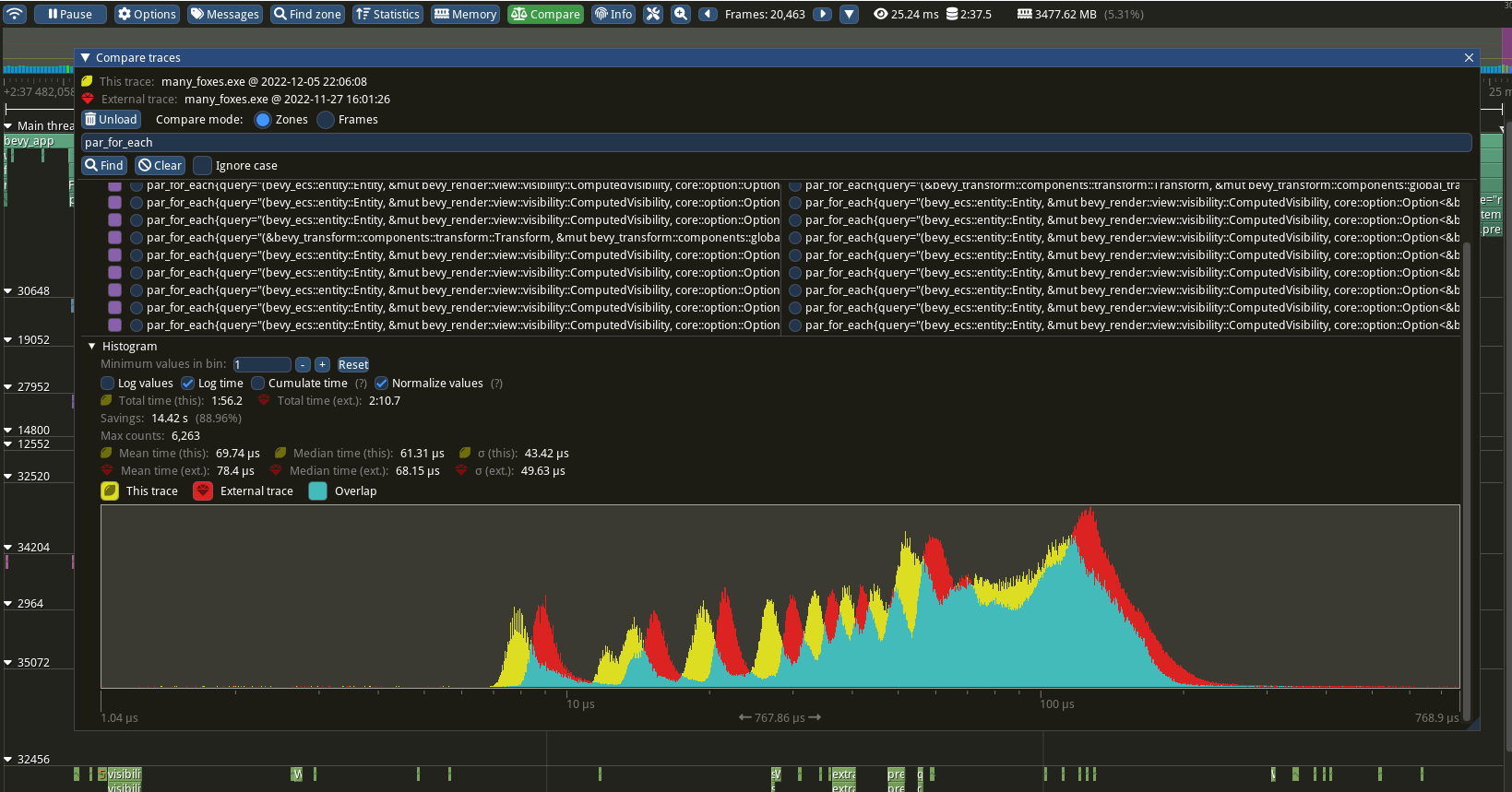

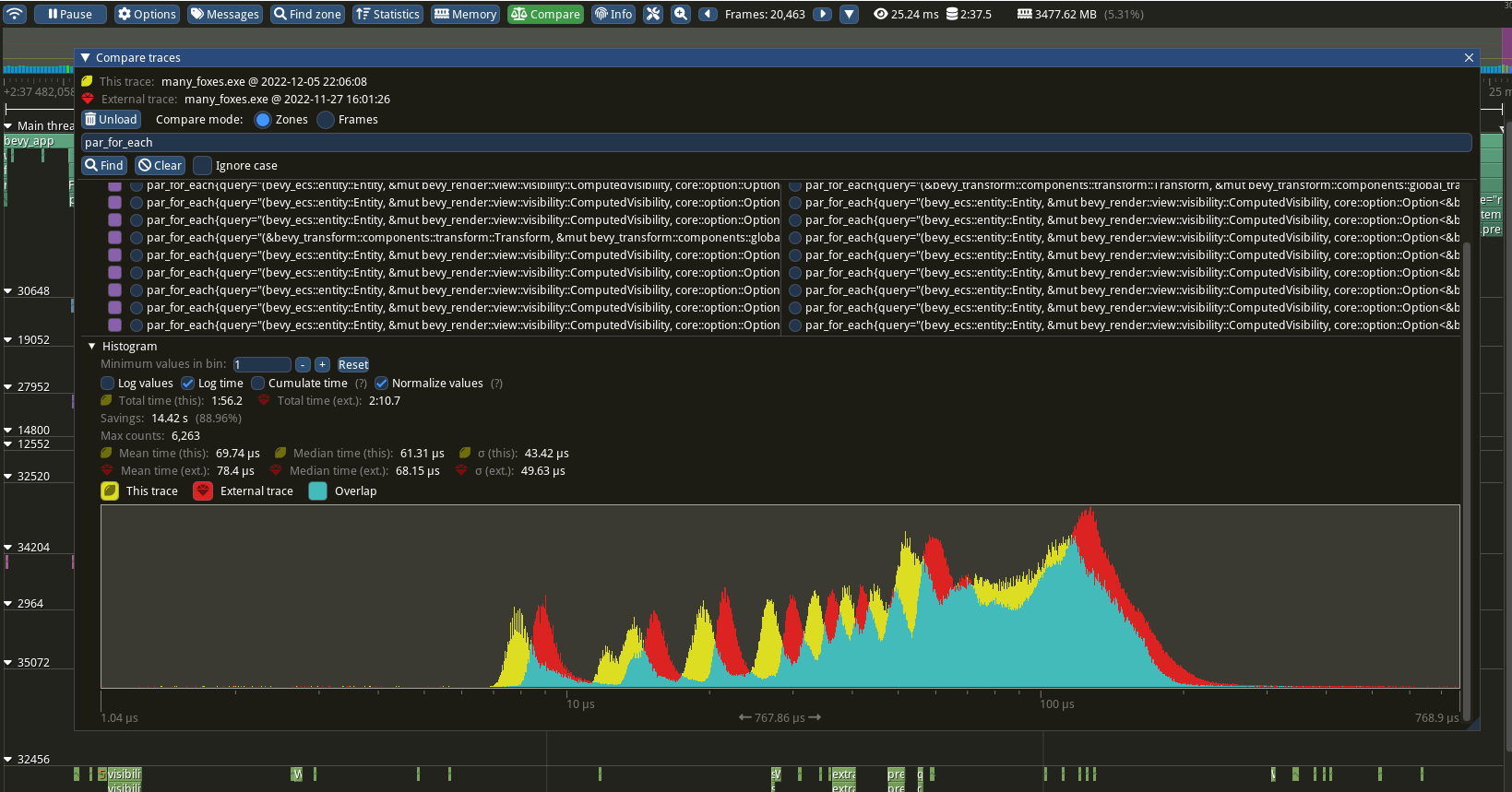

> When your app is bottlenecked by the GPU, you may encounter frames that have multiple prepare-set systems all taking an unusually long time to complete, and all finishing at about the same time.

|

||||||

When your app is bottlenecked by the GPU, you may encounter frames that have multiple prepare-set systems all taking an unusually long time to complete, and all finishing at about the same time.

|

>

|

||||||

|

> See the section on GPU profiling for determining what GPU work is the bottleneck.

|

||||||

Improvements are planned to resolve this issue, you can find more details in the docs for [`prepare_windows`](https://docs.rs/bevy/latest/bevy/render/view/fn.prepare_windows.html).

|

>

|

||||||

|

> You can find more details in the docs for [`prepare_windows`](https://docs.rs/bevy/latest/bevy/render/view/fn.prepare_windows.html).

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

### Chrome tracing format

|

### Adding your own spans

|

||||||

|

|

||||||

`cargo run --release --features bevy/trace_chrome`

|

Add spans to your app like this (these are in `bevy::prelude::*` and `bevy::log::*`, just like the normal logging macros).

|

||||||

|

|

||||||

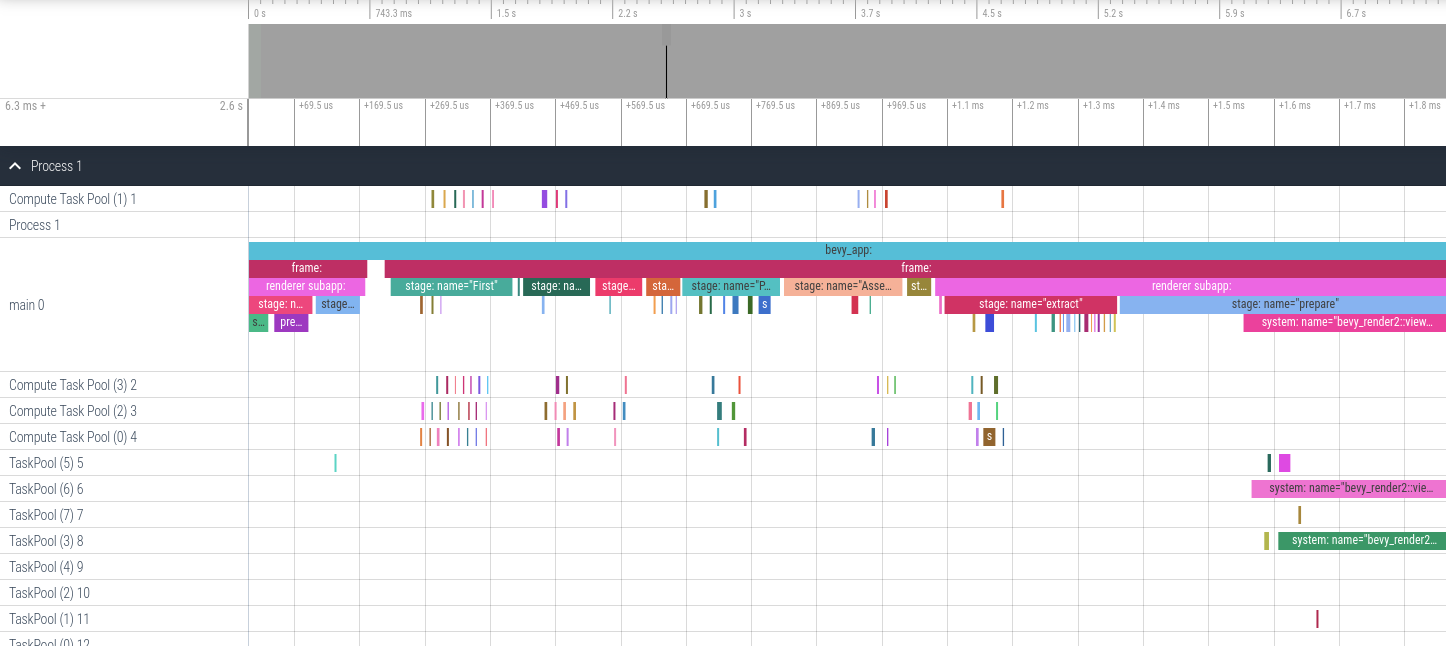

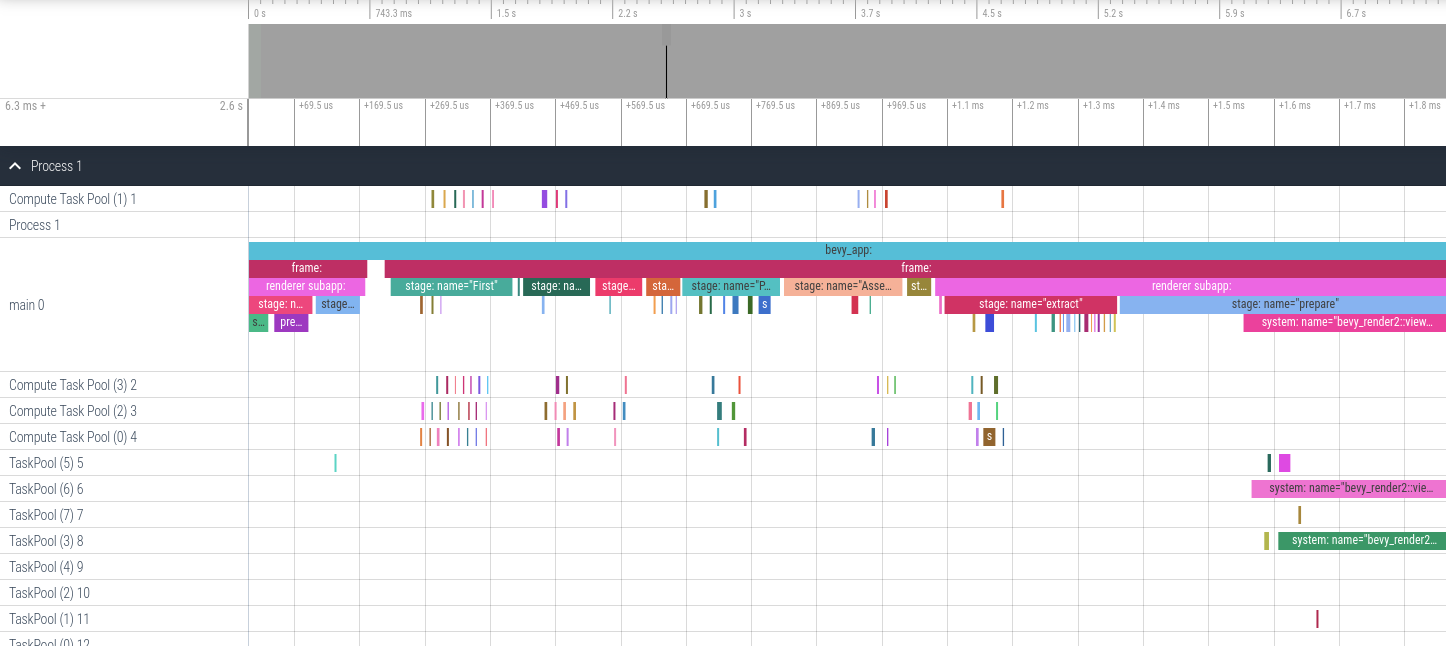

After running your app a `json` file in the "chrome tracing format" will be produced. You can open this file in your browser using <https://ui.perfetto.dev>. It will look something like this:

|

```rust

|

||||||

|

{

|

||||||

|

// creates a span and starts the timer

|

||||||

|

let my_span = info_span!("span_name", name = "span_name").entered();

|

||||||

|

do_something_here();

|

||||||

|

} // my_span is dropped here ... this stops the timer

|

||||||

|

|

||||||

|

|

||||||

|

// You can also "manually" enter the span if you need more control over when the timer starts

|

||||||

|

// Prefer the previous, simpler syntax unless you need the extra control.

|

||||||

|

let my_span = info_span!("span_name", name = "span_name");

|

||||||

|

{

|

||||||

|

// starts the span's timer

|

||||||

|

let guard = my_span.enter();

|

||||||

|

do_something_here();

|

||||||

|

} // guard is dropped here ... this stops the timer

|

||||||

|

```

|

||||||

|

|

||||||

|

Search for `info_span!` in this repo for some real-world examples.

|

||||||

|

|

||||||

|

For more details, check out the [tracing span docs](https://docs.rs/tracing/*/tracing/span/index.html).

|

||||||

|

|

||||||

### Tracy profiler

|

### Tracy profiler

|

||||||

|

|

||||||

|

|
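Reviewer note on the `Adding your own spans` snippet above: its comments rely on Rust's drop semantics, where the timer stops when the guard goes out of scope. The following is a minimal sketch of that mechanism using only the standard library. `ScopedTimer` and `record_order` are hypothetical names for illustration; they are not part of `tracing` or Bevy, and a real span guard reports to the active `tracing` subscriber rather than pushing into a `Vec`.

```rust
use std::cell::RefCell;
use std::time::{Duration, Instant};

/// Hypothetical stand-in for a span guard: timing starts when it is created
/// and the elapsed time is recorded when it is dropped.
struct ScopedTimer<'a> {
    name: &'static str,
    start: Instant,
    log: &'a RefCell<Vec<(&'static str, Duration)>>,
}

impl<'a> ScopedTimer<'a> {
    fn enter(name: &'static str, log: &'a RefCell<Vec<(&'static str, Duration)>>) -> Self {
        ScopedTimer { name, start: Instant::now(), log }
    }
}

impl Drop for ScopedTimer<'_> {
    fn drop(&mut self) {
        // Runs automatically at the end of the enclosing scope.
        self.log.borrow_mut().push((self.name, self.start.elapsed()));
    }
}

/// Runs two nested scopes and returns the timer names in the order they stopped.
fn record_order() -> Vec<&'static str> {
    let log = RefCell::new(Vec::new());
    {
        let _outer = ScopedTimer::enter("outer", &log);
        {
            let _inner = ScopedTimer::enter("inner", &log);
        } // `_inner` is dropped here, so the inner timer stops first
    } // `_outer` is dropped here
    log.into_inner().into_iter().map(|(name, _)| name).collect()
}

fn main() {
    println!("{:?}", record_order());
}
```

One practical consequence in Rust generally: binding a guard with `let _ = ...` drops it at the end of that statement, so the guard should be bound to a named variable (for example `_guard`) if it is meant to cover the rest of the scope.
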

@ -78,31 +101,13 @@ If you save more than one trace, you can compare the spans between both of them

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

### Adding your own spans

|

### Chrome tracing format

|

||||||

|

|

||||||

Add spans to your app like this (these are in `bevy::prelude::*` and `bevy::log::*`, just like the normal logging macros).

|

`cargo run --release --features bevy/trace_chrome`

|

||||||

|

|

||||||

```rust

|

After running your app a `json` file in the "chrome tracing format" will be produced. You can open this file in your browser using <https://ui.perfetto.dev>. It will look something like this:

|

||||||

{

|

|

||||||

// creates a span and starts the timer

|

|

||||||

let my_span = info_span!("span_name", name = "span_name").entered();

|

|

||||||

do_something_here();

|

|

||||||

} // my_span is dropped here ... this stops the timer

|

|

||||||

|

|

||||||

|

|

||||||

// You can also "manually" enter the span if you need more control over when the timer starts

|

|

||||||

// Prefer the previous, simpler syntax unless you need the extra control.

|

|

||||||

let my_span = info_span!("span_name", name = "span_name");

|

|

||||||

{

|

|

||||||

// starts the span's timer

|

|

||||||

let guard = my_span.enter();

|

|

||||||

do_something_here();

|

|

||||||

} // guard is dropped here ... this stops the timer

|

|

||||||

```

|

|

||||||

|

|

||||||

Search for `info_span!` in this repo for some real-world examples.

|

|

||||||

|

|

||||||

For more details, check out the [tracing span docs](https://docs.rs/tracing/*/tracing/span/index.html).

|

|

||||||

|

|

||||||

### `perf` Flame Graph

|

### `perf` Flame Graph

|

||||||

|

|

||||||

|

|

@ -116,6 +121,29 @@ Install [cargo-flamegraph](https://github.com/flamegraph-rs/flamegraph), [enable

|

||||||

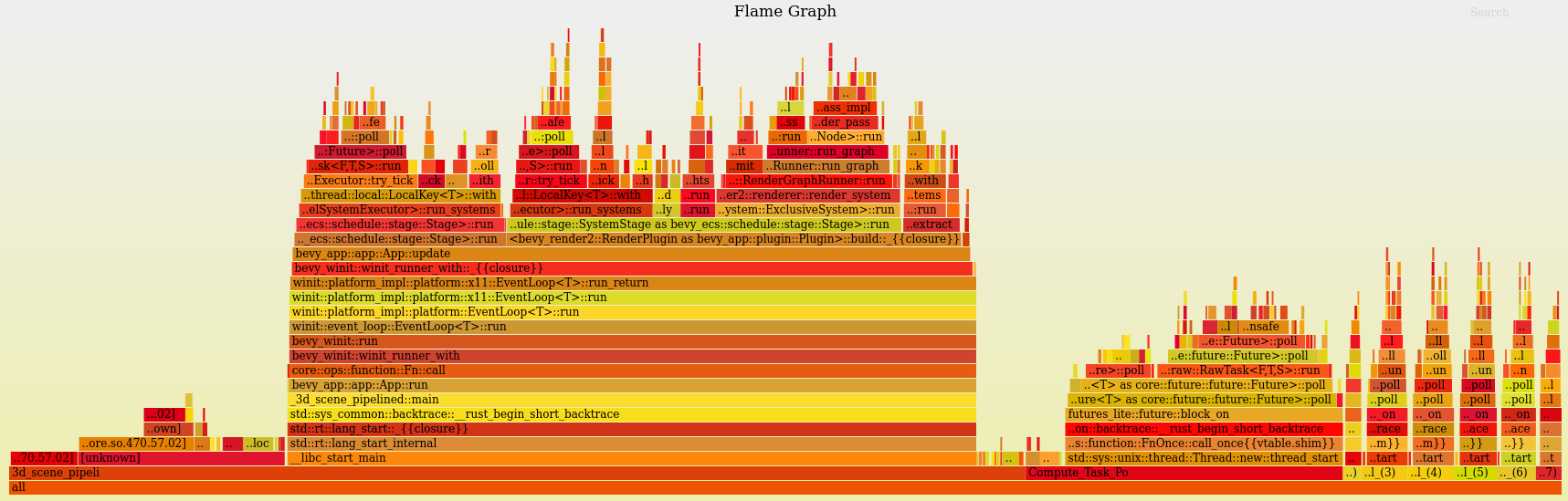

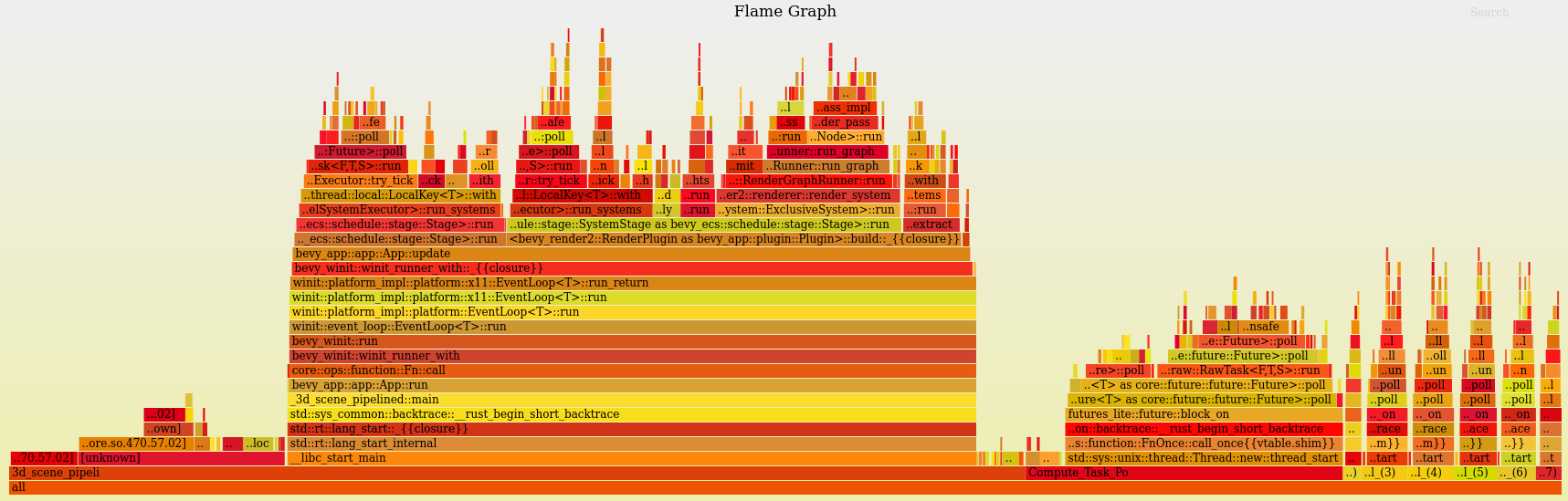

After closing your app, an interactive `svg` file will be produced:

|

After closing your app, an interactive `svg` file will be produced:

|

||||||

|

|

||||||

|

|

||||||

|

## GPU runtime

|

||||||

|

|

||||||

|

If CPU profiling has shown that GPU work is the bottleneck, it's time to profile the GPU.

|

||||||

|

|

||||||

|

For profiling GPU work, you should use the tool corresponding to your GPU's vendor:

|

||||||

|

|

||||||

|

- NVIDIA - [Nsight Graphics](https://developer.nvidia.com/nsight-graphics)

|

||||||

|

- AMD - [Radeon GPU Profiler](https://gpuopen.com/rgp)

|

||||||

|

- Intel - [Graphics Frame Analyzer](https://www.intel.com/content/www/us/en/developer/tools/graphics-performance-analyzers/graphics-frame-analyzer.html)

|

||||||

|

- Apple - [Xcode](https://developer.apple.com/documentation/xcode/optimizing-gpu-performance)

|

||||||

|

|

||||||

|

Note that while RenderDoc is a great debugging tool, it is _not_ a profiler, and should not be used for this purpose.

|

||||||

|

|

||||||

|

### Graphics work

|

||||||

|

|

||||||

|

Finally, a quick note on how GPU programming works. GPUs are essentially separate computers with their own compiler, scheduler, memory (for discrete GPUs), etc. You do not simply call functions to have the GPU perform work - instead, you communicate with them by sending data back and forth over the PCIe bus, via the GPU driver.

|

||||||

|

|

||||||

|

Specifically, you record a list of tasks (commands) for the GPU to perform into a CommandBuffer, and then submit that on a Queue to the GPU. At some point in the future, the GPU will receive the commands and execute them.

|

||||||

|

|

||||||

|

In terms of where your app is spending time doing graphics work, it might manifest as a CPU bottleneck (extracting to the render world, wgpu resource tracking, recording commands to a CommandBuffer, or GPU driver code), or it might manifest as a GPU bottleneck (the GPU actually running your commands).

|

||||||

|

|

||||||

|

Graphics related work is not all CPU work or all GPU work, but a mix of both, and you should find the bottleneck and profile using the appropriate tool for each case.

|

||||||

|

|

||||||

## Compile time

|

## Compile time

|

||||||

|

|

||||||

Append `--timings` to your app's cargo command (ex: `cargo build --timings`).

|

Append `--timings` to your app's cargo command (ex: `cargo build --timings`).

|

||||||

|

|

|

||||||
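Reviewer note on the `Graphics work` text above: the record-then-submit model it describes can be sketched as a toy in plain Rust. This is only an illustration under stated assumptions: the `Command`, `CommandBuffer`, and `Queue` types below are hypothetical stand-ins written for this note, not wgpu's API, and the "GPU" is just a worker thread fed by a standard-library channel.

```rust
use std::sync::mpsc;
use std::thread;

/// Toy command; a real GPU command would be a draw, dispatch, copy, and so on.
#[derive(Debug)]
enum Command {
    Clear,
    Draw { vertices: u32 },
}

/// Toy command buffer: recording only appends to a list on the CPU.
#[derive(Default)]
struct CommandBuffer {
    commands: Vec<Command>,
}

impl CommandBuffer {
    fn record(&mut self, command: Command) {
        self.commands.push(command);
    }
}

/// Toy queue: submitting hands the buffer to another thread (the "GPU").
struct Queue {
    sender: mpsc::Sender<CommandBuffer>,
}

impl Queue {
    fn submit(&self, buffer: CommandBuffer) {
        // Returns as soon as the buffer is queued, not when it has executed.
        self.sender.send(buffer).expect("toy GPU thread has exited");
    }
}

/// Records and submits one buffer, then waits for the toy GPU to finish and
/// returns the total number of vertices it "drew".
fn run_frame() -> u32 {
    let (sender, receiver) = mpsc::channel::<CommandBuffer>();

    // The "GPU" executes buffers at some later point, independently of the CPU.
    let gpu = thread::spawn(move || {
        let mut vertices_drawn = 0;
        for buffer in receiver {
            for command in buffer.commands {
                if let Command::Draw { vertices } = command {
                    vertices_drawn += vertices;
                }
            }
        }
        vertices_drawn
    });

    // CPU side: recording is ordinary CPU work and shows up in a CPU profiler.
    let queue = Queue { sender };
    let mut buffer = CommandBuffer::default();
    buffer.record(Command::Clear);
    buffer.record(Command::Draw { vertices: 3 });
    buffer.record(Command::Draw { vertices: 6 });
    queue.submit(buffer);

    drop(queue); // closes the channel so the toy GPU loop ends
    gpu.join().expect("toy GPU thread panicked")
}

fn main() {
    println!("vertices drawn: {}", run_frame());
}
```

In this toy, time spent in `record` and `submit` corresponds to the CPU-side cost the text mentions, while time spent inside the worker thread corresponds to the GPU actually running the commands, which is why the two need different profiling tools.
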
