Mirror of https://github.com/bevyengine/bevy (synced 2025-02-18 15:08:36 +00:00)

Add RenderTarget::TextureView (#8042)

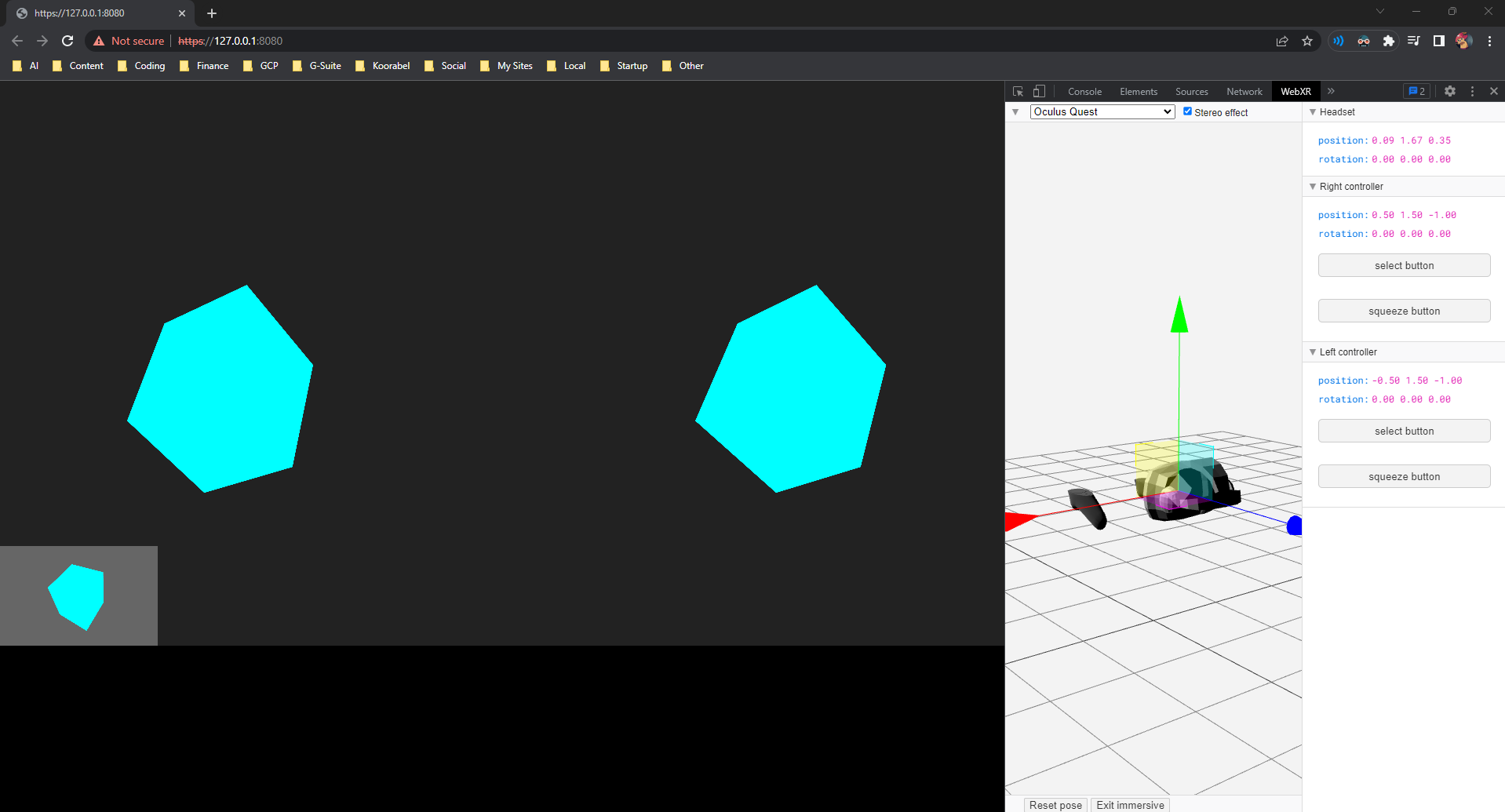

# Objective

We can currently set `camera.target` to either an `Image` or a `Window`. For OpenXR and WebXR we need to be able to render to a `TextureView`.

This partially addresses #115: with this addition, both internal and external XR crates can be built.

## Solution

A `TextureView` variant is added to the `RenderTarget` enum. It holds an id that is looked up in a `ManualTextureViews` resource, much like how `Assets<Image>` works.

I believe this approach was first used by @kcking in their [xr fork](eb39afd51b/crates/bevy_render/src/camera/camera.rs (L322)). The only change is that a `u32` is used to index the textures, because `FromReflect` does not support `uuid` and I don't know how to implement that.

---

## Changelog

### Added

Render: Added `RenderTarget::TextureView` as a `camera.target` option, enabling rendering directly to a `TextureView`.

## Migration Guide

Code that matches on the `RenderTarget` enum will need to handle the additional variant, e.g. in `match` statements.

---

## Comments

- The [wgpu work](c039a74884) done by @expenses allows us to create framebuffer texture views as of `wgpu v0.15, bevy 0.10`.
- I got the WebXR techniques from the [xr fork](https://github.com/dekuraan/xr-bevy) by @dekuraan.
- I have tested this with a WIP [external webxr crate](018e22bb06/crates/bevy_webxr/src/bevy_utils/xr_render.rs (L50)) on an Oculus Quest 2.

---------

Co-authored-by: Carter Anderson <mcanders1@gmail.com>
Co-authored-by: Paul Hansen <mail@paul.rs>
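The lookup described under Solution and the `match` change from the Migration Guide can be sketched with the standard library alone. This is a minimal illustration, not Bevy's code: the payloads of `Window` and `Image`, the `label` field, the fixed sizes, and the `target_size` function are all hypothetical stand-ins. Only the names `RenderTarget`, `ManualTextureViewHandle`, and `ManualTextureView` come from the commit.

```rust
use std::collections::HashMap;

/// Stand-in for the handle type: a plain `u32` id, as in the commit.
#[derive(Debug, Clone, Copy, PartialEq, Eq, Hash)]
pub struct ManualTextureViewHandle(pub u32);

/// Stand-in for a registered view. The real type stores a GPU `TextureView`;
/// `label` is a hypothetical placeholder for it.
#[derive(Debug, Clone, PartialEq)]
pub struct ManualTextureView {
    pub label: &'static str,
    pub size: (u32, u32),
}

/// Simplified render target. The `Window` and `Image` payloads are hypothetical.
#[derive(Debug, Clone, PartialEq)]
pub enum RenderTarget {
    Window(u32),
    Image(String),
    TextureView(ManualTextureViewHandle),
}

/// Resolve the size of a target (hypothetical helper).
/// An exhaustive `match` must now include the `TextureView` arm.
pub fn target_size(
    target: &RenderTarget,
    views: &HashMap<ManualTextureViewHandle, ManualTextureView>,
) -> Option<(u32, u32)> {
    match target {
        // Fixed sizes stand in for the real window and image lookups.
        RenderTarget::Window(_) => Some((1280, 720)),
        RenderTarget::Image(_) => Some((512, 512)),
        // New arm: resolve the id through the registry; `None` if it is not registered.
        RenderTarget::TextureView(handle) => views.get(handle).map(|view| view.size),
    }
}
```

Because the handle is only an id, a target that names an unregistered handle resolves to `None` rather than failing, which mirrors the `Option` return types used in the diff.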

parent 3959908b6b

commit 6ce4bf5181

4 changed files with 108 additions and 8 deletions

@@ -1,5 +1,6 @@
 use crate::{
     camera::CameraProjection,
+    camera::{ManualTextureViewHandle, ManualTextureViews},
     prelude::Image,
     render_asset::RenderAssets,
     render_resource::TextureView,

@@ -26,7 +27,6 @@ use bevy_utils::{HashMap, HashSet};
 use bevy_window::{
     NormalizedWindowRef, PrimaryWindow, Window, WindowCreated, WindowRef, WindowResized,
 };
-
 use std::{borrow::Cow, ops::Range};
 use wgpu::{BlendState, Extent3d, LoadOp, TextureFormat};
 

@@ -383,6 +383,9 @@ pub enum RenderTarget {
     Window(WindowRef),
     /// Image to which the camera's view is rendered.
     Image(Handle<Image>),
+    /// Texture View to which the camera's view is rendered.
+    /// Useful when the texture view needs to be created outside of Bevy, for example OpenXR.
+    TextureView(ManualTextureViewHandle),
 }
 
 /// Normalized version of the render target.

@@ -394,6 +397,9 @@ pub enum NormalizedRenderTarget {
     Window(NormalizedWindowRef),
     /// Image to which the camera's view is rendered.
     Image(Handle<Image>),
+    /// Texture View to which the camera's view is rendered.
+    /// Useful when the texture view needs to be created outside of Bevy, for example OpenXR.
+    TextureView(ManualTextureViewHandle),
 }
 
 impl Default for RenderTarget {

@@ -410,6 +416,7 @@ impl RenderTarget {
                 .normalize(primary_window)
                 .map(NormalizedRenderTarget::Window),
             RenderTarget::Image(handle) => Some(NormalizedRenderTarget::Image(handle.clone())),
+            RenderTarget::TextureView(id) => Some(NormalizedRenderTarget::TextureView(*id)),
         }
     }
 }

@@ -419,6 +426,7 @@ impl NormalizedRenderTarget {
         &self,
         windows: &'a ExtractedWindows,
         images: &'a RenderAssets<Image>,
+        manual_texture_views: &'a ManualTextureViews,
     ) -> Option<&'a TextureView> {
         match self {
             NormalizedRenderTarget::Window(window_ref) => windows

@@ -427,6 +435,9 @@ impl NormalizedRenderTarget {
             NormalizedRenderTarget::Image(image_handle) => {
                 images.get(image_handle).map(|image| &image.texture_view)
             }
+            NormalizedRenderTarget::TextureView(id) => {
+                manual_texture_views.get(id).map(|tex| &tex.texture_view)
+            }
         }
     }
 

@@ -435,6 +446,7 @@ impl NormalizedRenderTarget {
         &self,
         windows: &'a ExtractedWindows,
         images: &'a RenderAssets<Image>,
+        manual_texture_views: &'a ManualTextureViews,
     ) -> Option<TextureFormat> {
         match self {
             NormalizedRenderTarget::Window(window_ref) => windows

@@ -443,6 +455,9 @@ impl NormalizedRenderTarget {
             NormalizedRenderTarget::Image(image_handle) => {
                 images.get(image_handle).map(|image| image.texture_format)
             }
+            NormalizedRenderTarget::TextureView(id) => {
+                manual_texture_views.get(id).map(|tex| tex.format)
+            }
         }
     }
 

@@ -450,6 +465,7 @@ impl NormalizedRenderTarget {
         &self,
         resolutions: impl IntoIterator<Item = (Entity, &'a Window)>,
         images: &Assets<Image>,
+        manual_texture_views: &ManualTextureViews,
     ) -> Option<RenderTargetInfo> {
         match self {
             NormalizedRenderTarget::Window(window_ref) => resolutions

@@ -470,6 +486,12 @@ impl NormalizedRenderTarget {
                     scale_factor: 1.0,
                 })
             }
+            NormalizedRenderTarget::TextureView(id) => {
+                manual_texture_views.get(id).map(|tex| RenderTargetInfo {
+                    physical_size: tex.size,
+                    scale_factor: 1.0,
+                })
+            }
         }
     }
 

@@ -486,6 +508,7 @@ impl NormalizedRenderTarget {
             NormalizedRenderTarget::Image(image_handle) => {
                 changed_image_handles.contains(&image_handle)
             }
+            NormalizedRenderTarget::TextureView(_) => true,
         }
     }
 }

@@ -509,6 +532,7 @@ impl NormalizedRenderTarget {
 /// [`OrthographicProjection`]: crate::camera::OrthographicProjection
 /// [`PerspectiveProjection`]: crate::camera::PerspectiveProjection
 /// [`Projection`]: crate::camera::Projection
+#[allow(clippy::too_many_arguments)]
 pub fn camera_system<T: CameraProjection + Component>(
     mut window_resized_events: EventReader<WindowResized>,
     mut window_created_events: EventReader<WindowCreated>,

@@ -516,6 +540,7 @@ pub fn camera_system<T: CameraProjection + Component>(
     primary_window: Query<Entity, With<PrimaryWindow>>,
     windows: Query<(Entity, &Window)>,
     images: Res<Assets<Image>>,
+    manual_texture_views: Res<ManualTextureViews>,
     mut cameras: Query<(&mut Camera, &mut T)>,
 ) {
     let primary_window = primary_window.iter().next();

@@ -547,8 +572,11 @@ pub fn camera_system<T: CameraProjection + Component>(
                 || camera_projection.is_changed()
                 || camera.computed.old_viewport_size != viewport_size
             {
-                camera.computed.target_info =
-                    normalized_target.get_render_target_info(&windows, &images);
+                camera.computed.target_info = normalized_target.get_render_target_info(
+                    &windows,
+                    &images,
+                    &manual_texture_views,
+                );
                 if let Some(size) = camera.logical_viewport_size() {
                     camera_projection.update(size.x, size.y);
                     camera.computed.projection_matrix = camera_projection.get_projection_matrix();

64 crates/bevy_render/src/camera/manual_texture_view.rs Normal file

@@ -0,0 +1,64 @@
+use crate::extract_resource::ExtractResource;
+use crate::render_resource::TextureView;
+use crate::texture::BevyDefault;
+use bevy_ecs::system::Resource;
+use bevy_ecs::{prelude::Component, reflect::ReflectComponent};
+use bevy_math::UVec2;
+use bevy_reflect::prelude::*;
+use bevy_reflect::FromReflect;
+use bevy_utils::HashMap;
+use wgpu::TextureFormat;
+
+/// A unique id that corresponds to a specific [`ManualTextureView`] in the [`ManualTextureViews`] collection.
+#[derive(
+    Default,
+    Debug,
+    Clone,
+    Copy,
+    PartialEq,
+    Eq,
+    Hash,
+    PartialOrd,
+    Ord,
+    Component,
+    Reflect,
+    FromReflect,
+)]
+#[reflect(Component, Default)]
+pub struct ManualTextureViewHandle(pub u32);
+
+/// A manually managed [`TextureView`] for use as a [`crate::camera::RenderTarget`].
+#[derive(Debug, Clone, Component)]
+pub struct ManualTextureView {
+    pub texture_view: TextureView,
+    pub size: UVec2,
+    pub format: TextureFormat,
+}
+
+impl ManualTextureView {
+    pub fn with_default_format(texture_view: TextureView, size: UVec2) -> Self {
+        Self {
+            texture_view,
+            size,
+            format: TextureFormat::bevy_default(),
+        }
+    }
+}
+
+/// Stores manually managed [`ManualTextureView`]s for use as a [`crate::camera::RenderTarget`].
+#[derive(Default, Clone, Resource, ExtractResource)]
+pub struct ManualTextureViews(HashMap<ManualTextureViewHandle, ManualTextureView>);
+
+impl std::ops::Deref for ManualTextureViews {
+    type Target = HashMap<ManualTextureViewHandle, ManualTextureView>;
+
+    fn deref(&self) -> &Self::Target {
+        &self.0
+    }
+}
+
+impl std::ops::DerefMut for ManualTextureViews {
+    fn deref_mut(&mut self) -> &mut Self::Target {
+        &mut self.0
+    }
+}
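The `Deref`/`DerefMut` pair in the new file is what lets callers write `manual_texture_views.get(id)` directly on the resource. Below is a minimal standard-library sketch of that newtype pattern. The `Views` type, its `(u32, u32)` values, and the `register` and `lookup` helpers are hypothetical and only illustrate the mechanism; they are not part of the commit.

```rust
use std::collections::HashMap;
use std::ops::{Deref, DerefMut};

/// Hypothetical stand-in for the registry: ids map to sizes instead of GPU views.
#[derive(Default)]
pub struct Views(HashMap<u32, (u32, u32)>);

impl Deref for Views {
    type Target = HashMap<u32, (u32, u32)>;

    fn deref(&self) -> &Self::Target {
        &self.0
    }
}

impl DerefMut for Views {
    fn deref_mut(&mut self) -> &mut Self::Target {
        &mut self.0
    }
}

/// Register a view and return the previous entry for that id, if any.
pub fn register(views: &mut Views, id: u32, size: (u32, u32)) -> Option<(u32, u32)> {
    // `insert` is a `HashMap` method, reached through `DerefMut`.
    views.insert(id, size)
}

/// Look up a view by id.
pub fn lookup(views: &Views, id: u32) -> Option<(u32, u32)> {
    // `get` is a `HashMap` method, reached through `Deref`.
    views.get(&id).copied()
}
```

The trade-off of this design is that the wrapper exposes the whole `HashMap` API, so id allocation and removal are left to whoever owns the views.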

@@ -1,13 +1,18 @@
 #[allow(clippy::module_inception)]
 mod camera;
 mod camera_driver_node;
+mod manual_texture_view;
 mod projection;
 
 pub use camera::*;
 pub use camera_driver_node::*;
+pub use manual_texture_view::*;
 pub use projection::*;
 
-use crate::{render_graph::RenderGraph, ExtractSchedule, Render, RenderApp, RenderSet};
+use crate::{
+    extract_resource::ExtractResourcePlugin, render_graph::RenderGraph, ExtractSchedule, Render,
+    RenderApp, RenderSet,
+};
 use bevy_app::{App, Plugin};
 use bevy_ecs::schedule::IntoSystemConfigs;
 

@@ -22,9 +27,11 @@ impl Plugin for CameraPlugin {
             .register_type::<ScalingMode>()
             .register_type::<CameraRenderGraph>()
             .register_type::<RenderTarget>()
+            .init_resource::<ManualTextureViews>()
             .add_plugin(CameraProjectionPlugin::<Projection>::default())
             .add_plugin(CameraProjectionPlugin::<OrthographicProjection>::default())
-            .add_plugin(CameraProjectionPlugin::<PerspectiveProjection>::default());
+            .add_plugin(CameraProjectionPlugin::<PerspectiveProjection>::default())
+            .add_plugin(ExtractResourcePlugin::<ManualTextureViews>::default());
 
         if let Ok(render_app) = app.get_sub_app_mut(RenderApp) {
             render_app

@@ -6,7 +6,7 @@ pub use visibility::*;
 pub use window::*;
 
 use crate::{
-    camera::{ExtractedCamera, TemporalJitter},
+    camera::{ExtractedCamera, ManualTextureViews, TemporalJitter},
     extract_resource::{ExtractResource, ExtractResourcePlugin},
     prelude::{Image, Shader},
     render_asset::RenderAssets,

@@ -414,13 +414,14 @@ fn prepare_view_targets(
     render_device: Res<RenderDevice>,
     mut texture_cache: ResMut<TextureCache>,
     cameras: Query<(Entity, &ExtractedCamera, &ExtractedView)>,
+    manual_texture_views: Res<ManualTextureViews>,
 ) {
     let mut textures = HashMap::default();
     for (entity, camera, view) in cameras.iter() {
         if let (Some(target_size), Some(target)) = (camera.physical_target_size, &camera.target) {
             if let (Some(out_texture_view), Some(out_texture_format)) = (
-                target.get_texture_view(&windows, &images),
-                target.get_texture_format(&windows, &images),
+                target.get_texture_view(&windows, &images, &manual_texture_views),
+                target.get_texture_format(&windows, &images, &manual_texture_views),
             ) {
                 let size = Extent3d {
                     width: target_size.x,
