mirror of

https://github.com/bevyengine/bevy

synced 2025-02-18 15:08:36 +00:00

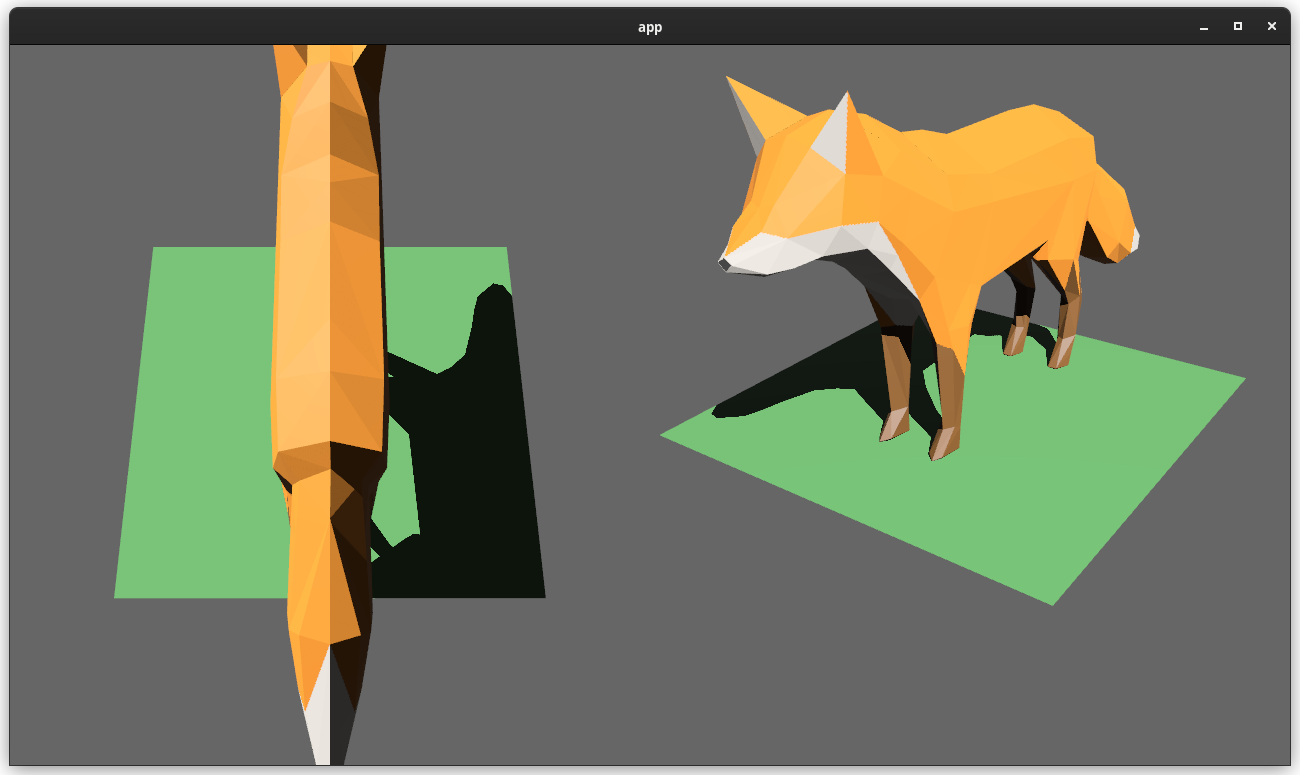

Camera Driven Viewports (#4898)

# Objective

Users should be able to render cameras to specific areas of a render target, which enables scenarios like split screen, minimaps, etc.

Builds on the new Camera Driven Rendering added here: #4745

Fixes: #202

Alternative to #1389 and #3626 (which are incompatible with the new Camera Driven Rendering)

## Solution

Cameras can now configure an optional "viewport", which defines a rectangle within their render target to draw to. If a `Viewport` is defined, the camera's `CameraProjection`, `View`, and visibility calculations will use the viewport configuration instead of the full render target.

```rust
// This camera will render to the first half of the primary window (on the left side).
commands.spawn_bundle(Camera3dBundle {
    camera: Camera {
        viewport: Some(Viewport {
            physical_position: UVec2::new(0, 0),
            physical_size: UVec2::new(window.physical_width() / 2, window.physical_height()),
            depth: 0.0..1.0,
        }),
        ..default()
    },
    ..default()
});
```

To account for this, the `Camera` component has received a few adjustments:

* `Camera` now has some new getter functions:
  * `logical_viewport_size`, `physical_viewport_size`, `logical_target_size`, `physical_target_size`, `projection_matrix`
* All computed camera values are now private and live on the `ComputedCameraValues` field (logical/physical width/height, the projection matrix). They are now exposed on `Camera` via getters/setters. This wasn't _needed_ for viewports, but it was long overdue.

---

## Changelog

### Added

* `Camera` components now have a `viewport` field, which can be set to draw to a portion of a render target instead of the full target.
* `Camera` component has some new functions: `logical_viewport_size`, `physical_viewport_size`, `logical_target_size`, `physical_target_size`, and `projection_matrix`
* Added a new split_screen example illustrating how to render two cameras to the same scene

## Migration Guide

`Camera::projection_matrix` is no longer a public field. Use the new `Camera::projection_matrix()` method instead:

```rust
// Bevy 0.7
let projection = camera.projection_matrix;

// Bevy 0.8
let projection = camera.projection_matrix();
```
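As a rough illustration of the split-screen use case described in the Solution section, the sketch below computes the two viewport rectangles for a window of a given physical size. It does not depend on Bevy: `UVec2` and `Viewport` here are local stand-ins that only mirror the field names from this commit, and `split_screen_viewports` is a hypothetical helper, not part of the PR.

```rust
use std::ops::Range;

/// Local stand-in for Bevy's `UVec2` (an assumption made so the sketch compiles on its own).
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub struct UVec2 {
    pub x: u32,
    pub y: u32,
}

/// Local stand-in mirroring the fields of the `Viewport` struct added in this commit.
#[derive(Debug, Clone, PartialEq)]
pub struct Viewport {
    /// Top-left corner of the rectangle, in physical pixels.
    pub physical_position: UVec2,
    /// Width and height of the rectangle, in physical pixels.
    pub physical_size: UVec2,
    /// Minimum and maximum depth to render, on a scale from 0.0 to 1.0.
    pub depth: Range<f32>,
}

/// Hypothetical helper: splits a window into a left and a right viewport.
pub fn split_screen_viewports(window_width: u32, window_height: u32) -> (Viewport, Viewport) {
    let left_width = window_width / 2;
    // Any odd leftover pixel column goes to the right half so both halves cover the window.
    let right_width = window_width - left_width;

    let left = Viewport {
        physical_position: UVec2 { x: 0, y: 0 },
        physical_size: UVec2 { x: left_width, y: window_height },
        depth: 0.0..1.0,
    };
    let right = Viewport {
        // The right half starts where the left half ends.
        physical_position: UVec2 { x: left_width, y: 0 },
        physical_size: UVec2 { x: right_width, y: window_height },
        depth: 0.0..1.0,
    };
    (left, right)
}
```

In an actual Bevy app, rectangles like these would presumably be assigned to each camera's `viewport` field and recomputed whenever the window is resized.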

parent 8e08e26c25

commit 5e2cfb2f19

11 changed files with 358 additions and 97 deletions

12

Cargo.toml

|

|

@ -176,6 +176,10 @@ path = "examples/2d/texture_atlas.rs"

|

|||

name = "3d_scene"

|

||||

path = "examples/3d/3d_scene.rs"

|

||||

|

||||

[[example]]

|

||||

name = "3d_shapes"

|

||||

path = "examples/3d/shapes.rs"

|

||||

|

||||

[[example]]

|

||||

name = "lighting"

|

||||

path = "examples/3d/lighting.rs"

|

||||

|

|

@ -208,10 +212,6 @@ path = "examples/3d/render_to_texture.rs"

|

|||

name = "shadow_biases"

|

||||

path = "examples/3d/shadow_biases.rs"

|

||||

|

||||

[[example]]

|

||||

name = "3d_shapes"

|

||||

path = "examples/3d/shapes.rs"

|

||||

|

||||

[[example]]

|

||||

name = "shadow_caster_receiver"

|

||||

path = "examples/3d/shadow_caster_receiver.rs"

|

||||

|

|

@ -220,6 +220,10 @@ path = "examples/3d/shadow_caster_receiver.rs"

|

|||

name = "spherical_area_lights"

|

||||

path = "examples/3d/spherical_area_lights.rs"

|

||||

|

||||

[[example]]

|

||||

name = "split_screen"

|

||||

path = "examples/3d/split_screen.rs"

|

||||

|

||||

[[example]]

|

||||

name = "texture"

|

||||

path = "examples/3d/texture.rs"

|

||||

|

|

|

|||

|

|

@ -4,6 +4,7 @@ use crate::{

|

|||

};

|

||||

use bevy_ecs::prelude::*;

|

||||

use bevy_render::{

|

||||

camera::ExtractedCamera,

|

||||

render_graph::{Node, NodeRunError, RenderGraphContext, SlotInfo, SlotType},

|

||||

render_phase::{DrawFunctions, RenderPhase, TrackedRenderPass},

|

||||

render_resource::{LoadOp, Operations, RenderPassDescriptor},

|

||||

|

|

@ -14,6 +15,7 @@ use bevy_render::{

|

|||

pub struct MainPass2dNode {

|

||||

query: QueryState<

|

||||

(

|

||||

&'static ExtractedCamera,

|

||||

&'static RenderPhase<Transparent2d>,

|

||||

&'static ViewTarget,

|

||||

&'static Camera2d,

|

||||

|

|

@ -48,7 +50,7 @@ impl Node for MainPass2dNode {

|

|||

world: &World,

|

||||

) -> Result<(), NodeRunError> {

|

||||

let view_entity = graph.get_input_entity(Self::IN_VIEW)?;

|

||||

let (transparent_phase, target, camera_2d) =

|

||||

let (camera, transparent_phase, target, camera_2d) =

|

||||

if let Ok(result) = self.query.get_manual(world, view_entity) {

|

||||

result

|

||||

} else {

|

||||

|

|

@ -79,6 +81,9 @@ impl Node for MainPass2dNode {

|

|||

|

||||

let mut draw_functions = draw_functions.write();

|

||||

let mut tracked_pass = TrackedRenderPass::new(render_pass);

|

||||

if let Some(viewport) = camera.viewport.as_ref() {

|

||||

tracked_pass.set_camera_viewport(viewport);

|

||||

}

|

||||

for item in &transparent_phase.items {

|

||||

let draw_function = draw_functions.get_mut(item.draw_function).unwrap();

|

||||

draw_function.draw(world, &mut tracked_pass, view_entity, item);

|

||||

|

|

|

|||

|

|

@ -4,6 +4,7 @@ use crate::{

|

|||

};

|

||||

use bevy_ecs::prelude::*;

|

||||

use bevy_render::{

|

||||

camera::ExtractedCamera,

|

||||

render_graph::{Node, NodeRunError, RenderGraphContext, SlotInfo, SlotType},

|

||||

render_phase::{DrawFunctions, RenderPhase, TrackedRenderPass},

|

||||

render_resource::{LoadOp, Operations, RenderPassDepthStencilAttachment, RenderPassDescriptor},

|

||||

|

|

@ -16,6 +17,7 @@ use bevy_utils::tracing::info_span;

|

|||

pub struct MainPass3dNode {

|

||||

query: QueryState<

|

||||

(

|

||||

&'static ExtractedCamera,

|

||||

&'static RenderPhase<Opaque3d>,

|

||||

&'static RenderPhase<AlphaMask3d>,

|

||||

&'static RenderPhase<Transparent3d>,

|

||||

|

|

@ -53,7 +55,7 @@ impl Node for MainPass3dNode {

|

|||

world: &World,

|

||||

) -> Result<(), NodeRunError> {

|

||||

let view_entity = graph.get_input_entity(Self::IN_VIEW)?;

|

||||

let (opaque_phase, alpha_mask_phase, transparent_phase, camera_3d, target, depth) =

|

||||

let (camera, opaque_phase, alpha_mask_phase, transparent_phase, camera_3d, target, depth) =

|

||||

match self.query.get_manual(world, view_entity) {

|

||||

Ok(query) => query,

|

||||

Err(_) => {

|

||||

|

|

@ -100,6 +102,9 @@ impl Node for MainPass3dNode {

|

|||

.begin_render_pass(&pass_descriptor);

|

||||

let mut draw_functions = draw_functions.write();

|

||||

let mut tracked_pass = TrackedRenderPass::new(render_pass);

|

||||

if let Some(viewport) = camera.viewport.as_ref() {

|

||||

tracked_pass.set_camera_viewport(viewport);

|

||||

}

|

||||

for item in &opaque_phase.items {

|

||||

let draw_function = draw_functions.get_mut(item.draw_function).unwrap();

|

||||

draw_function.draw(world, &mut tracked_pass, view_entity, item);

|

||||

|

|

@ -136,6 +141,9 @@ impl Node for MainPass3dNode {

|

|||

.begin_render_pass(&pass_descriptor);

|

||||

let mut draw_functions = draw_functions.write();

|

||||

let mut tracked_pass = TrackedRenderPass::new(render_pass);

|

||||

if let Some(viewport) = camera.viewport.as_ref() {

|

||||

tracked_pass.set_camera_viewport(viewport);

|

||||

}

|

||||

for item in &alpha_mask_phase.items {

|

||||

let draw_function = draw_functions.get_mut(item.draw_function).unwrap();

|

||||

draw_function.draw(world, &mut tracked_pass, view_entity, item);

|

||||

|

|

@ -177,6 +185,9 @@ impl Node for MainPass3dNode {

|

|||

.begin_render_pass(&pass_descriptor);

|

||||

let mut draw_functions = draw_functions.write();

|

||||

let mut tracked_pass = TrackedRenderPass::new(render_pass);

|

||||

if let Some(viewport) = camera.viewport.as_ref() {

|

||||

tracked_pass.set_camera_viewport(viewport);

|

||||

}

|

||||

for item in &transparent_phase.items {

|

||||

let draw_function = draw_functions.get_mut(item.draw_function).unwrap();

|

||||

draw_function.draw(world, &mut tracked_pass, view_entity, item);

|

||||

|

|

|

|||

|

|

@ -31,7 +31,7 @@ use bevy_render::{

|

|||

},

|

||||

renderer::RenderDevice,

|

||||

texture::TextureCache,

|

||||

view::{ExtractedView, ViewDepthTexture},

|

||||

view::ViewDepthTexture,

|

||||

RenderApp, RenderStage,

|

||||

};

|

||||

use bevy_utils::{FloatOrd, HashMap};

|

||||

|

|

@ -53,7 +53,7 @@ impl Plugin for Core3dPlugin {

|

|||

.init_resource::<DrawFunctions<AlphaMask3d>>()

|

||||

.init_resource::<DrawFunctions<Transparent3d>>()

|

||||

.add_system_to_stage(RenderStage::Extract, extract_core_3d_camera_phases)

|

||||

.add_system_to_stage(RenderStage::Prepare, prepare_core_3d_views_system)

|

||||

.add_system_to_stage(RenderStage::Prepare, prepare_core_3d_depth_textures)

|

||||

.add_system_to_stage(RenderStage::PhaseSort, sort_phase_system::<Opaque3d>)

|

||||

.add_system_to_stage(RenderStage::PhaseSort, sort_phase_system::<AlphaMask3d>)

|

||||

.add_system_to_stage(RenderStage::PhaseSort, sort_phase_system::<Transparent3d>);

|

||||

|

|

@ -199,13 +199,13 @@ pub fn extract_core_3d_camera_phases(

|

|||

}

|

||||

}

|

||||

|

||||

pub fn prepare_core_3d_views_system(

|

||||

pub fn prepare_core_3d_depth_textures(

|

||||

mut commands: Commands,

|

||||

mut texture_cache: ResMut<TextureCache>,

|

||||

msaa: Res<Msaa>,

|

||||

render_device: Res<RenderDevice>,

|

||||

views_3d: Query<

|

||||

(Entity, &ExtractedView, Option<&ExtractedCamera>),

|

||||

(Entity, &ExtractedCamera),

|

||||

(

|

||||

With<RenderPhase<Opaque3d>>,

|

||||

With<RenderPhase<AlphaMask3d>>,

|

||||

|

|

@ -214,37 +214,34 @@ pub fn prepare_core_3d_views_system(

|

|||

>,

|

||||

) {

|

||||

let mut textures = HashMap::default();

|

||||

for (entity, view, camera) in views_3d.iter() {

|

||||

let mut get_cached_texture = || {

|

||||

texture_cache.get(

|

||||

&render_device,

|

||||

TextureDescriptor {

|

||||

label: Some("view_depth_texture"),

|

||||

size: Extent3d {

|

||||

depth_or_array_layers: 1,

|

||||

width: view.width as u32,

|

||||

height: view.height as u32,

|

||||

},

|

||||

mip_level_count: 1,

|

||||

sample_count: msaa.samples,

|

||||

dimension: TextureDimension::D2,

|

||||

format: TextureFormat::Depth32Float, /* PERF: vulkan docs recommend using 24

|

||||

* bit depth for better performance */

|

||||

usage: TextureUsages::RENDER_ATTACHMENT,

|

||||

},

|

||||

)

|

||||

};

|

||||

let cached_texture = if let Some(camera) = camera {

|

||||

textures

|

||||

for (entity, camera) in views_3d.iter() {

|

||||

if let Some(physical_target_size) = camera.physical_target_size {

|

||||

let cached_texture = textures

|

||||

.entry(camera.target.clone())

|

||||

.or_insert_with(get_cached_texture)

|

||||

.clone()

|

||||

} else {

|

||||

get_cached_texture()

|

||||

};

|

||||

commands.entity(entity).insert(ViewDepthTexture {

|

||||

texture: cached_texture.texture,

|

||||

view: cached_texture.default_view,

|

||||

});

|

||||

.or_insert_with(|| {

|

||||

texture_cache.get(

|

||||

&render_device,

|

||||

TextureDescriptor {

|

||||

label: Some("view_depth_texture"),

|

||||

size: Extent3d {

|

||||

depth_or_array_layers: 1,

|

||||

width: physical_target_size.x,

|

||||

height: physical_target_size.y,

|

||||

},

|

||||

mip_level_count: 1,

|

||||

sample_count: msaa.samples,

|

||||

dimension: TextureDimension::D2,

|

||||

format: TextureFormat::Depth32Float, /* PERF: vulkan docs recommend using 24

|

||||

* bit depth for better performance */

|

||||

usage: TextureUsages::RENDER_ATTACHMENT,

|

||||

},

|

||||

)

|

||||

})

|

||||

.clone();

|

||||

commands.entity(entity).insert(ViewDepthTexture {

|

||||

texture: cached_texture.texture,

|

||||

view: cached_texture.default_view,

|

||||

});

|

||||

}

|

||||

}

|

||||

}

|

||||

|

|
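The reworked `prepare_core_3d_depth_textures` hunk above allocates the depth texture at the full render target size and looks it up by `camera.target`, so cameras that draw into different viewports of the same target end up sharing one depth texture. The sketch below models only that caching pattern with the standard library. `RenderTarget`, `DepthTexture`, `Camera`, and `prepare_depth_textures` are simplified stand-ins invented for illustration, and the allocation counter replaces the real `TextureCache`.

```rust
use std::collections::HashMap;

/// Simplified stand-in for a render target key (the real type wraps window ids and image handles).
#[derive(Debug, Clone, PartialEq, Eq, Hash)]
pub enum RenderTarget {
    Window(u32),
    Image(u32),
}

/// Stand-in for a cached depth texture; `id` lets the caller see which cameras share one.
#[derive(Debug, Clone, PartialEq, Eq)]
pub struct DepthTexture {
    pub id: usize,
    pub width: u32,
    pub height: u32,
}

/// Stand-in for the extracted camera data that the system reads.
pub struct Camera {
    pub target: RenderTarget,
    pub physical_target_size: Option<(u32, u32)>,
}

/// Assigns one depth texture per render target and reports how many were allocated.
pub fn prepare_depth_textures(cameras: &[Camera]) -> (Vec<Option<DepthTexture>>, usize) {
    let mut textures: HashMap<RenderTarget, DepthTexture> = HashMap::new();
    let mut allocations = 0usize;
    let mut assigned = Vec::with_capacity(cameras.len());

    for camera in cameras {
        let texture = match camera.physical_target_size {
            // Sized by the full target, not the viewport, so every viewport fits inside it.
            Some((width, height)) => Some(
                textures
                    .entry(camera.target.clone())
                    .or_insert_with(|| {
                        allocations += 1;
                        DepthTexture { id: allocations, width, height }
                    })
                    .clone(),
            ),
            // Cameras without a resolved target size get no depth texture.
            None => None,
        };
        assigned.push(texture);
    }
    (assigned, allocations)
}
```

With two cameras on the same window and a third on an image, this sketch allocates two textures rather than three.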

|

|||

|

|

@ -736,7 +736,7 @@ pub(crate) fn assign_lights_to_clusters(

|

|||

continue;

|

||||

}

|

||||

|

||||

let screen_size = if let Some(screen_size) = camera.physical_target_size {

|

||||

let screen_size = if let Some(screen_size) = camera.physical_viewport_size() {

|

||||

screen_size

|

||||

} else {

|

||||

clusters.clear();

|

||||

|

|

@ -747,7 +747,7 @@ pub(crate) fn assign_lights_to_clusters(

|

|||

|

||||

let view_transform = camera_transform.compute_matrix();

|

||||

let inverse_view_transform = view_transform.inverse();

|

||||

let is_orthographic = camera.projection_matrix.w_axis.w == 1.0;

|

||||

let is_orthographic = camera.projection_matrix().w_axis.w == 1.0;

|

||||

|

||||

let far_z = match config.far_z_mode() {

|

||||

ClusterFarZMode::MaxLightRange => {

|

||||

|

|

@ -772,7 +772,7 @@ pub(crate) fn assign_lights_to_clusters(

|

|||

// 3,2 = r * far and 2,2 = r where r = 1.0 / (far - near)

|

||||

// rearranging r = 1.0 / (far - near), r * (far - near) = 1.0, r * far - 1.0 = r * near, near = (r * far - 1.0) / r

|

||||

// = (3,2 - 1.0) / 2,2

|

||||

(camera.projection_matrix.w_axis.z - 1.0) / camera.projection_matrix.z_axis.z

|

||||

(camera.projection_matrix().w_axis.z - 1.0) / camera.projection_matrix().z_axis.z

|

||||

}

|

||||

(false, 1) => config.first_slice_depth().max(far_z),

|

||||

_ => config.first_slice_depth(),

|

||||

|

|

@ -804,7 +804,7 @@ pub(crate) fn assign_lights_to_clusters(

|

|||

// it can overestimate more significantly when light ranges are only partially in view

|

||||

let (light_aabb_min, light_aabb_max) = cluster_space_light_aabb(

|

||||

inverse_view_transform,

|

||||

camera.projection_matrix,

|

||||

camera.projection_matrix(),

|

||||

&light_sphere,

|

||||

);

|

||||

|

||||

|

|

@ -871,7 +871,7 @@ pub(crate) fn assign_lights_to_clusters(

|

|||

clusters.dimensions.x * clusters.dimensions.y * clusters.dimensions.z <= 4096

|

||||

);

|

||||

|

||||

let inverse_projection = camera.projection_matrix.inverse();

|

||||

let inverse_projection = camera.projection_matrix().inverse();

|

||||

|

||||

for lights in &mut clusters.lights {

|

||||

lights.entities.clear();

|

||||

|

|

@ -958,7 +958,7 @@ pub(crate) fn assign_lights_to_clusters(

|

|||

let (light_aabb_xy_ndc_z_view_min, light_aabb_xy_ndc_z_view_max) =

|

||||

cluster_space_light_aabb(

|

||||

inverse_view_transform,

|

||||

camera.projection_matrix,

|

||||

camera.projection_matrix(),

|

||||

&light_sphere,

|

||||

);

|

||||

|

||||

|

|

@ -991,7 +991,7 @@ pub(crate) fn assign_lights_to_clusters(

|

|||

radius: light_sphere.radius,

|

||||

};

|

||||

let light_center_clip =

|

||||

camera.projection_matrix * view_light_sphere.center.extend(1.0);

|

||||

camera.projection_matrix() * view_light_sphere.center.extend(1.0);

|

||||

let light_center_ndc = light_center_clip.xyz() / light_center_clip.w;

|

||||

let cluster_coordinates = ndc_position_to_cluster(

|

||||

clusters.dimensions,

|

||||

|

|

|

|||

|

|

@ -22,21 +22,72 @@ use bevy_transform::components::GlobalTransform;

|

|||

use bevy_utils::HashSet;

|

||||

use bevy_window::{WindowCreated, WindowId, WindowResized, Windows};

|

||||

use serde::{Deserialize, Serialize};

|

||||

use std::borrow::Cow;

|

||||

use std::{borrow::Cow, ops::Range};

|

||||

use wgpu::Extent3d;

|

||||

|

||||

/// Render viewport configuration for the [`Camera`] component.

|

||||

///

|

||||

/// The viewport defines the area on the render target to which the camera renders its image.

|

||||

/// You can overlay multiple cameras in a single window using viewports to create effects like

|

||||

/// split screen, minimaps, and character viewers.

|

||||

// TODO: remove reflect_value when possible

|

||||

#[derive(Reflect, Debug, Clone, Serialize, Deserialize)]

|

||||

#[reflect_value(Default, Serialize, Deserialize)]

|

||||

pub struct Viewport {

|

||||

/// The physical position to render this viewport to within the [`RenderTarget`] of this [`Camera`].

|

||||

/// (0,0) corresponds to the top-left corner

|

||||

pub physical_position: UVec2,

|

||||

/// The physical size of the viewport rectangle to render to within the [`RenderTarget`] of this [`Camera`].

|

||||

/// The origin of the rectangle is in the top-left corner.

|

||||

pub physical_size: UVec2,

|

||||

/// The minimum and maximum depth to render (on a scale from 0.0 to 1.0).

|

||||

pub depth: Range<f32>,

|

||||

}

|

||||

|

||||

impl Default for Viewport {

|

||||

fn default() -> Self {

|

||||

Self {

|

||||

physical_position: Default::default(),

|

||||

physical_size: Default::default(),

|

||||

depth: 0.0..1.0,

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

/// Information about the current [`RenderTarget`].

|

||||

#[derive(Default, Debug, Clone)]

|

||||

pub struct RenderTargetInfo {

|

||||

/// The physical size of this render target (ignores scale factor).

|

||||

pub physical_size: UVec2,

|

||||

/// The scale factor of this render target.

|

||||

pub scale_factor: f64,

|

||||

}

|

||||

|

||||

/// Holds internally computed [`Camera`] values.

|

||||

#[derive(Default, Debug, Clone)]

|

||||

pub struct ComputedCameraValues {

|

||||

projection_matrix: Mat4,

|

||||

target_info: Option<RenderTargetInfo>,

|

||||

}

|

||||

|

||||

#[derive(Component, Debug, Reflect, Clone)]

|

||||

#[reflect(Component)]

|

||||

pub struct Camera {

|

||||

pub projection_matrix: Mat4,

|

||||

pub logical_target_size: Option<Vec2>,

|

||||

pub physical_target_size: Option<UVec2>,

|

||||

/// If set, this camera will render to the given [`Viewport`] rectangle within the configured [`RenderTarget`].

|

||||

pub viewport: Option<Viewport>,

|

||||

/// Cameras with a lower priority will be rendered before cameras with a higher priority.

|

||||

pub priority: isize,

|

||||

/// If this is set to true, this camera will be rendered to its specified [`RenderTarget`]. If false, this

|

||||

/// camera will not be rendered.

|

||||

pub is_active: bool,

|

||||

/// The method used to calculate this camera's depth. This will be used for projections and visibility.

|

||||

pub depth_calculation: DepthCalculation,

|

||||

/// Computed values for this camera, such as the projection matrix and the render target size.

|

||||

#[reflect(ignore)]

|

||||

pub computed: ComputedCameraValues,

|

||||

/// The "target" that this camera will render to.

|

||||

#[reflect(ignore)]

|

||||

pub target: RenderTarget,

|

||||

#[reflect(ignore)]

|

||||

pub depth_calculation: DepthCalculation,

|

||||

}

|

||||

|

||||

impl Default for Camera {

|

||||

|

|

@ -44,9 +95,8 @@ impl Default for Camera {

|

|||

Self {

|

||||

is_active: true,

|

||||

priority: 0,

|

||||

projection_matrix: Default::default(),

|

||||

logical_target_size: Default::default(),

|

||||

physical_target_size: Default::default(),

|

||||

viewport: None,

|

||||

computed: Default::default(),

|

||||

target: Default::default(),

|

||||

depth_calculation: Default::default(),

|

||||

}

|

||||

|

|

@ -54,6 +104,63 @@ impl Default for Camera {

|

|||

}

|

||||

|

||||

impl Camera {

|

||||

/// The logical size of this camera's viewport. If the `viewport` field is set to [`Some`], this

|

||||

/// will be the size of that custom viewport. Otherwise it will default to the full logical size of

|

||||

/// the current [`RenderTarget`].

|

||||

/// For logic that requires the full logical size of the [`RenderTarget`], prefer [`Camera::logical_target_size`].

|

||||

#[inline]

|

||||

pub fn logical_viewport_size(&self) -> Option<Vec2> {

|

||||

let target_info = self.computed.target_info.as_ref()?;

|

||||

self.viewport

|

||||

.as_ref()

|

||||

.map(|v| {

|

||||

Vec2::new(

|

||||

(v.physical_size.x as f64 / target_info.scale_factor) as f32,

|

||||

(v.physical_size.y as f64 / target_info.scale_factor) as f32,

|

||||

)

|

||||

})

|

||||

.or_else(|| self.logical_target_size())

|

||||

}

|

||||

|

||||

/// The physical size of this camera's viewport. If the `viewport` field is set to [`Some`], this

|

||||

/// will be the size of that custom viewport. Otherwise it will default to the full physical size of

|

||||

/// the current [`RenderTarget`].

|

||||

/// For logic that requires the full physical size of the [`RenderTarget`], prefer [`Camera::physical_target_size`].

|

||||

#[inline]

|

||||

pub fn physical_viewport_size(&self) -> Option<UVec2> {

|

||||

self.viewport

|

||||

.as_ref()

|

||||

.map(|v| v.physical_size)

|

||||

.or_else(|| self.physical_target_size())

|

||||

}

|

||||

|

||||

/// The full logical size of this camera's [`RenderTarget`], ignoring custom `viewport` configuration.

|

||||

/// Note that if the `viewport` field is [`Some`], this will not represent the size of the rendered area.

|

||||

/// For logic that requires the size of the actually rendered area, prefer [`Camera::logical_viewport_size`].

|

||||

#[inline]

|

||||

pub fn logical_target_size(&self) -> Option<Vec2> {

|

||||

self.computed.target_info.as_ref().map(|t| {

|

||||

Vec2::new(

|

||||

(t.physical_size.x as f64 / t.scale_factor) as f32,

|

||||

(t.physical_size.y as f64 / t.scale_factor) as f32,

|

||||

)

|

||||

})

|

||||

}

|

||||

|

||||

/// The full physical size of this camera's [`RenderTarget`], ignoring custom `viewport` configuration.

|

||||

/// Note that if the `viewport` field is [`Some`], this will not represent the size of the rendered area.

|

||||

/// For logic that requires the size of the actually rendered area, prefer [`Camera::physical_viewport_size`].

|

||||

#[inline]

|

||||

pub fn physical_target_size(&self) -> Option<UVec2> {

|

||||

self.computed.target_info.as_ref().map(|t| t.physical_size)

|

||||

}

|

||||

|

||||

/// The projection matrix computed using this camera's [`CameraProjection`].

|

||||

#[inline]

|

||||

pub fn projection_matrix(&self) -> Mat4 {

|

||||

self.computed.projection_matrix

|

||||

}

|

||||

|

||||

/// Given a position in world space, use the camera to compute the viewport-space coordinates.

|

||||

///

|

||||

/// To get the coordinates in Normalized Device Coordinates, you should use

|

||||

|

|
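The getters added in the hunk above resolve sizes in two steps: a configured viewport wins, otherwise the full render target is used, and logical sizes are physical sizes divided by the target's scale factor. The following standalone sketch reproduces that fallback logic with local stand-in types. The flattened `viewport_physical_size` field and the `to_logical` helper are simplifications introduced here, not names from the commit.

```rust
/// Local stand-ins for Bevy's vector types, so the sketch compiles without dependencies.
#[derive(Debug, Clone, Copy, PartialEq)]
pub struct Vec2 {
    pub x: f32,
    pub y: f32,
}

#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub struct UVec2 {
    pub x: u32,
    pub y: u32,
}

/// Mirrors the `RenderTargetInfo` struct from the diff.
#[derive(Debug, Clone)]
pub struct RenderTargetInfo {
    pub physical_size: UVec2,
    pub scale_factor: f64,
}

/// Reduced camera: only the data the size getters need (a simplification of the real component).
#[derive(Debug, Clone, Default)]
pub struct Camera {
    pub viewport_physical_size: Option<UVec2>,
    pub target_info: Option<RenderTargetInfo>,
}

/// Hypothetical helper: converts a physical size to a logical size.
fn to_logical(physical: UVec2, scale_factor: f64) -> Vec2 {
    Vec2 {
        x: (physical.x as f64 / scale_factor) as f32,
        y: (physical.y as f64 / scale_factor) as f32,
    }
}

impl Camera {
    /// Full physical size of the render target, ignoring any viewport.
    pub fn physical_target_size(&self) -> Option<UVec2> {
        self.target_info.as_ref().map(|t| t.physical_size)
    }

    /// Full logical size of the render target, ignoring any viewport.
    pub fn logical_target_size(&self) -> Option<Vec2> {
        self.target_info
            .as_ref()
            .map(|t| to_logical(t.physical_size, t.scale_factor))
    }

    /// Viewport size if one is set, otherwise the full physical target size.
    pub fn physical_viewport_size(&self) -> Option<UVec2> {
        self.viewport_physical_size
            .or_else(|| self.physical_target_size())
    }

    /// Logical viewport size; `None` until the render target info is known.
    pub fn logical_viewport_size(&self) -> Option<Vec2> {
        let info = self.target_info.as_ref()?;
        self.viewport_physical_size
            .map(|size| to_logical(size, info.scale_factor))
            .or_else(|| self.logical_target_size())
    }
}
```

For example, on a 1600x1200 target with a scale factor of 2.0, a camera with an 800x1200 viewport reports a logical viewport size of 400x600, while a camera without a viewport reports 800x600.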

@ -63,7 +170,7 @@ impl Camera {

|

|||

camera_transform: &GlobalTransform,

|

||||

world_position: Vec3,

|

||||

) -> Option<Vec2> {

|

||||

let target_size = self.logical_target_size?;

|

||||

let target_size = self.logical_viewport_size()?;

|

||||

let ndc_space_coords = self.world_to_ndc(camera_transform, world_position)?;

|

||||

// NDC z-values outside of 0 < z < 1 are outside the camera frustum and are thus not in viewport-space

|

||||

if ndc_space_coords.z < 0.0 || ndc_space_coords.z > 1.0 {

|

||||

|

|

@ -86,7 +193,7 @@ impl Camera {

|

|||

) -> Option<Vec3> {

|

||||

// Build a transform to convert from world to NDC using camera data

|

||||

let world_to_ndc: Mat4 =

|

||||

self.projection_matrix * camera_transform.compute_matrix().inverse();

|

||||

self.computed.projection_matrix * camera_transform.compute_matrix().inverse();

|

||||

let ndc_space_coords: Vec3 = world_to_ndc.project_point3(world_position);

|

||||

|

||||

if !ndc_space_coords.is_nan() {

|

||||

|

|

@ -109,6 +216,8 @@ impl CameraRenderGraph {

|

|||

}

|

||||

}

|

||||

|

||||

/// The "target" that a [`Camera`] will render to. For example, this could be a [`Window`](bevy_window::Window)

|

||||

/// swapchain or an [`Image`].

|

||||

#[derive(Debug, Clone, Reflect, PartialEq, Eq, Hash, PartialOrd, Ord)]

|

||||

pub enum RenderTarget {

|

||||

/// Window to which the camera's view is rendered.

|

||||

|

|

@ -138,28 +247,29 @@ impl RenderTarget {

|

|||

}

|

||||

}

|

||||

}

|

||||

pub fn get_physical_size(&self, windows: &Windows, images: &Assets<Image>) -> Option<UVec2> {

|

||||

match self {

|

||||

RenderTarget::Window(window_id) => windows

|

||||

.get(*window_id)

|

||||

.map(|window| UVec2::new(window.physical_width(), window.physical_height())),

|

||||

RenderTarget::Image(image_handle) => images.get(image_handle).map(|image| {

|

||||

|

||||

pub fn get_render_target_info(

|

||||

&self,

|

||||

windows: &Windows,

|

||||

images: &Assets<Image>,

|

||||

) -> Option<RenderTargetInfo> {

|

||||

Some(match self {

|

||||

RenderTarget::Window(window_id) => {

|

||||

let window = windows.get(*window_id)?;

|

||||

RenderTargetInfo {

|

||||

physical_size: UVec2::new(window.physical_width(), window.physical_height()),

|

||||

scale_factor: window.scale_factor(),

|

||||

}

|

||||

}

|

||||

RenderTarget::Image(image_handle) => {

|

||||

let image = images.get(image_handle)?;

|

||||

let Extent3d { width, height, .. } = image.texture_descriptor.size;

|

||||

UVec2::new(width, height)

|

||||

}),

|

||||

}

|

||||

.filter(|size| size.x > 0 && size.y > 0)

|

||||

}

|

||||

pub fn get_logical_size(&self, windows: &Windows, images: &Assets<Image>) -> Option<Vec2> {

|

||||

match self {

|

||||

RenderTarget::Window(window_id) => windows

|

||||

.get(*window_id)

|

||||

.map(|window| Vec2::new(window.width(), window.height())),

|

||||

RenderTarget::Image(image_handle) => images.get(image_handle).map(|image| {

|

||||

let Extent3d { width, height, .. } = image.texture_descriptor.size;

|

||||

Vec2::new(width as f32, height as f32)

|

||||

}),

|

||||

}

|

||||

RenderTargetInfo {

|

||||

physical_size: UVec2::new(width, height),

|

||||

scale_factor: 1.0,

|

||||

}

|

||||

}

|

||||

})

|

||||

}

|

||||

// Check if this render target is contained in the given changed windows or images.

|

||||

fn is_changed(

|

||||

|

|

@ -243,11 +353,10 @@ pub fn camera_system<T: CameraProjection + Component>(

|

|||

|| added_cameras.contains(&entity)

|

||||

|| camera_projection.is_changed()

|

||||

{

|

||||

camera.logical_target_size = camera.target.get_logical_size(&windows, &images);

|

||||

camera.physical_target_size = camera.target.get_physical_size(&windows, &images);

|

||||

if let Some(size) = camera.logical_target_size {

|

||||

camera.computed.target_info = camera.target.get_render_target_info(&windows, &images);

|

||||

if let Some(size) = camera.logical_viewport_size() {

|

||||

camera_projection.update(size.x, size.y);

|

||||

camera.projection_matrix = camera_projection.get_projection_matrix();

|

||||

camera.computed.projection_matrix = camera_projection.get_projection_matrix();

|

||||

camera.depth_calculation = camera_projection.depth_calculation();

|

||||

}

|

||||

}

|

||||

|

|

@ -257,7 +366,9 @@ pub fn camera_system<T: CameraProjection + Component>(

|

|||

#[derive(Component, Debug)]

|

||||

pub struct ExtractedCamera {

|

||||

pub target: RenderTarget,

|

||||

pub physical_size: Option<UVec2>,

|

||||

pub physical_viewport_size: Option<UVec2>,

|

||||

pub physical_target_size: Option<UVec2>,

|

||||

pub viewport: Option<Viewport>,

|

||||

pub render_graph: Cow<'static, str>,

|

||||

pub priority: isize,

|

||||

}

|

||||

|

|

@ -276,19 +387,27 @@ pub fn extract_cameras(

|

|||

if !camera.is_active {

|

||||

continue;

|

||||

}

|

||||

if let Some(size) = camera.physical_target_size {

|

||||

if let (Some(viewport_size), Some(target_size)) = (

|

||||

camera.physical_viewport_size(),

|

||||

camera.physical_target_size(),

|

||||

) {

|

||||

if target_size.x == 0 || target_size.y == 0 {

|

||||

continue;

|

||||

}

|

||||

commands.get_or_spawn(entity).insert_bundle((

|

||||

ExtractedCamera {

|

||||

target: camera.target.clone(),

|

||||

physical_size: Some(size),

|

||||

viewport: camera.viewport.clone(),

|

||||

physical_viewport_size: Some(viewport_size),

|

||||

physical_target_size: Some(target_size),

|

||||

render_graph: camera_render_graph.0.clone(),

|

||||

priority: camera.priority,

|

||||

},

|

||||

ExtractedView {

|

||||

projection: camera.projection_matrix,

|

||||

projection: camera.projection_matrix(),

|

||||

transform: *transform,

|

||||

width: size.x,

|

||||

height: size.y,

|

||||

width: viewport_size.x,

|

||||

height: viewport_size.y,

|

||||

},

|

||||

visible_entities.clone(),

|

||||

));

|

||||

|

|

|

|||

|

|

@ -1,4 +1,5 @@

|

|||

use crate::{

|

||||

camera::Viewport,

|

||||

prelude::Color,

|

||||

render_resource::{

|

||||

BindGroup, BindGroupId, Buffer, BufferId, BufferSlice, RenderPipeline, RenderPipelineId,

|

||||

|

|

@ -336,6 +337,20 @@ impl<'a> TrackedRenderPass<'a> {

|

|||

.set_viewport(x, y, width, height, min_depth, max_depth);

|

||||

}

|

||||

|

||||

/// Set the rendering viewport to the given [`Camera`](crate::camera::Viewport) [`Viewport`].

|

||||

///

|

||||

/// Subsequent draw calls will be projected into that viewport.

|

||||

pub fn set_camera_viewport(&mut self, viewport: &Viewport) {

|

||||

self.set_viewport(

|

||||

viewport.physical_position.x as f32,

|

||||

viewport.physical_position.y as f32,

|

||||

viewport.physical_size.x as f32,

|

||||

viewport.physical_size.y as f32,

|

||||

viewport.depth.start,

|

||||

viewport.depth.end,

|

||||

);

|

||||

}

|

||||

|

||||

/// Insert a single debug marker.

|

||||

///

|

||||

/// This is a GPU debugging feature. This has no effect on the rendering itself.

|

||||

|

|

|

|||

|

|

@ -172,7 +172,7 @@ fn prepare_view_targets(

|

|||

) {

|

||||

let mut sampled_textures = HashMap::default();

|

||||

for (entity, camera) in cameras.iter() {

|

||||

if let Some(size) = camera.physical_size {

|

||||

if let Some(target_size) = camera.physical_target_size {

|

||||

if let Some(texture_view) = camera.target.get_texture_view(&windows, &images) {

|

||||

let sampled_target = if msaa.samples > 1 {

|

||||

let sampled_texture = sampled_textures

|

||||

|

|

@ -183,8 +183,8 @@ fn prepare_view_targets(

|

|||

TextureDescriptor {

|

||||

label: Some("sampled_color_attachment_texture"),

|

||||

size: Extent3d {

|

||||

width: size.x,

|

||||

height: size.y,

|

||||

width: target_size.x,

|

||||

height: target_size.y,

|

||||

depth_or_array_layers: 1,

|

||||

},

|

||||

mip_level_count: 1,

|

||||

|

|

|

|||

|

|

@ -237,9 +237,10 @@ pub fn extract_default_ui_camera_view<T: Component>(

|

|||

{

|

||||

continue;

|

||||

}

|

||||

if let (Some(logical_size), Some(physical_size)) =

|

||||

(camera.logical_target_size, camera.physical_target_size)

|

||||

{

|

||||

if let (Some(logical_size), Some(physical_size)) = (

|

||||

camera.logical_viewport_size(),

|

||||

camera.physical_viewport_size(),

|

||||

) {

|

||||

let mut projection = OrthographicProjection {

|

||||

far: UI_CAMERA_FAR,

|

||||

window_origin: WindowOrigin::BottomLeft,

|

||||

|

|

|

|||

108

examples/3d/split_screen.rs

Normal file

|

|

@@ -0,0 +1,108 @@
+//! Renders two cameras to the same window to accomplish "split screen".
+
+use bevy::{
+    core_pipeline::clear_color::ClearColorConfig,
+    prelude::*,
+    render::camera::Viewport,
+    window::{WindowId, WindowResized},
+};
+
+fn main() {
+    App::new()
+        .add_plugins(DefaultPlugins)
+        .add_startup_system(setup)
+        .add_system(set_camera_viewports)
+        .run();
+}
+
+/// set up a simple 3D scene
+fn setup(
+    mut commands: Commands,
+    asset_server: Res<AssetServer>,
+    mut meshes: ResMut<Assets<Mesh>>,
+    mut materials: ResMut<Assets<StandardMaterial>>,
+) {
+    // plane
+    commands.spawn_bundle(PbrBundle {
+        mesh: meshes.add(Mesh::from(shape::Plane { size: 100.0 })),
+        material: materials.add(Color::rgb(0.3, 0.5, 0.3).into()),
+        ..default()
+    });
+
+    commands.spawn_scene(asset_server.load("models/animated/Fox.glb#Scene0"));
+
+    // Light
+    commands.spawn_bundle(DirectionalLightBundle {
+        transform: Transform::from_rotation(Quat::from_euler(
+            EulerRot::ZYX,
+            0.0,
+            1.0,
+            -std::f32::consts::FRAC_PI_4,
+        )),
+        directional_light: DirectionalLight {
+            shadows_enabled: true,
+            ..default()
+        },
+        ..default()
+    });
+
+    // Left Camera
+    commands
+        .spawn_bundle(Camera3dBundle {
+            transform: Transform::from_xyz(0.0, 200.0, -100.0).looking_at(Vec3::ZERO, Vec3::Y),
+            ..default()
+        })
+        .insert(LeftCamera);
+
+    // Right Camera
+    commands
+        .spawn_bundle(Camera3dBundle {
+            transform: Transform::from_xyz(100.0, 100., 150.0).looking_at(Vec3::ZERO, Vec3::Y),
+            camera: Camera {
+                // Renders the right camera after the left camera, which has a default priority of 0
+                priority: 1,
+                ..default()
+            },
+            camera_3d: Camera3d {
+                // dont clear on the second camera because the first camera already cleared the window
+                clear_color: ClearColorConfig::None,
+            },
+            ..default()
+        })
+        .insert(RightCamera);
+}
+
+#[derive(Component)]
+struct LeftCamera;
+
+#[derive(Component)]
+struct RightCamera;
+
+fn set_camera_viewports(
+    windows: Res<Windows>,
+    mut resize_events: EventReader<WindowResized>,
+    mut left_camera: Query<&mut Camera, (With<LeftCamera>, Without<RightCamera>)>,
+    mut right_camera: Query<&mut Camera, With<RightCamera>>,
+) {
+    // We need to dynamically resize the camera's viewports whenever the window size changes
+    // so then each camera always takes up half the screen.
+    // A resize_event is sent when the window is first created, allowing us to reuse this system for initial setup.
+    for resize_event in resize_events.iter() {
+        if resize_event.id == WindowId::primary() {
+            let window = windows.primary();
+            let mut left_camera = left_camera.single_mut();
+            left_camera.viewport = Some(Viewport {
+                physical_position: UVec2::new(0, 0),
+                physical_size: UVec2::new(window.physical_width() / 2, window.physical_height()),
+                ..default()
+            });
+
+            let mut right_camera = right_camera.single_mut();
+            right_camera.viewport = Some(Viewport {
+                physical_position: UVec2::new(window.physical_width() / 2, 0),
+                physical_size: UVec2::new(window.physical_width() / 2, window.physical_height()),
+                ..default()
+            });
+        }
+    }
+}
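The half-window arithmetic in `set_camera_viewports` can be looked at on its own. The following is a minimal sketch in plain Rust, with no Bevy dependency; `ViewportRect` and `split_horizontal` are hypothetical names introduced only for illustration and are not part of Bevy or of this commit. The example above uses `physical_width() / 2` for both halves, so with an odd physical width the two viewports cover one pixel column less than the window. The sketch assumes you would rather give that remainder to the right half.

```rust
/// Hypothetical stand-in for the position/size pair of a viewport.
/// This is NOT a Bevy type; it only mirrors `physical_position` and `physical_size`.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
struct ViewportRect {
    x: u32,
    y: u32,
    width: u32,
    height: u32,
}

/// Splits a window of the given physical size into a left and a right rectangle.
/// The left half gets `width / 2` (integer division); the right half gets the rest,
/// so the two widths always sum to the full window width.
fn split_horizontal(physical_width: u32, physical_height: u32) -> (ViewportRect, ViewportRect) {
    let left_width = physical_width / 2;
    let left = ViewportRect {
        x: 0,
        y: 0,
        width: left_width,
        height: physical_height,
    };
    let right = ViewportRect {
        x: left_width,
        y: 0,
        width: physical_width - left_width,
        height: physical_height,
    };
    (left, right)
}

fn main() {
    // An odd width makes the remainder handling visible.
    let (left, right) = split_horizontal(1281, 720);
    println!("left:  {:?}", left);
    println!("right: {:?}", right);
    assert_eq!(left.width + right.width, 1281);
}
```

In a Bevy system these values would be fed into `Viewport { physical_position, physical_size, ..default() }` as in the example above. Whether the extra column matters is a judgment call, since the first camera has already cleared the whole window.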


@@ -103,6 +103,7 @@ Example | File | Description
 Example | File | Description
 --- | --- | ---
 `3d_scene` | [`3d/3d_scene.rs`](./3d/3d_scene.rs) | Simple 3D scene with basic shapes and lighting
+`3d_shapes` | [`3d/shapes.rs`](./3d/shapes.rs) | A scene showcasing the built-in 3D shapes
 `lighting` | [`3d/lighting.rs`](./3d/lighting.rs) | Illustrates various lighting options in a simple scene
 `load_gltf` | [`3d/load_gltf.rs`](./3d/load_gltf.rs) | Loads and renders a gltf file as a scene
 `msaa` | [`3d/msaa.rs`](./3d/msaa.rs) | Configures MSAA (Multi-Sample Anti-Aliasing) for smoother edges

@@ -113,12 +114,12 @@ Example | File | Description
 `shadow_caster_receiver` | [`3d/shadow_caster_receiver.rs`](./3d/shadow_caster_receiver.rs) | Demonstrates how to prevent meshes from casting/receiving shadows in a 3d scene
 `shadow_biases` | [`3d/shadow_biases.rs`](./3d/shadow_biases.rs) | Demonstrates how shadow biases affect shadows in a 3d scene
 `spherical_area_lights` | [`3d/spherical_area_lights.rs`](./3d/spherical_area_lights.rs) | Demonstrates how point light radius values affect light behavior.
+`split_screen` | [`3d/split_screen.rs`](./3d/split_screen.rs) | Demonstrates how to render two cameras to the same window to accomplish "split screen".
 `texture` | [`3d/texture.rs`](./3d/texture.rs) | Shows configuration of texture materials
 `two_passes` | [`3d/two_passes.rs`](./3d/two_passes.rs) | Renders two 3d passes to the same window from different perspectives.
 `update_gltf_scene` | [`3d/update_gltf_scene.rs`](./3d/update_gltf_scene.rs) | Update a scene from a gltf file, either by spawning the scene as a child of another entity, or by accessing the entities of the scene
 `vertex_colors` | [`3d/vertex_colors.rs`](./3d/vertex_colors.rs) | Shows the use of vertex colors
 `wireframe` | [`3d/wireframe.rs`](./3d/wireframe.rs) | Showcases wireframe rendering
-`3d_shapes` | [`3d/shapes.rs`](./3d/shapes.rs) | A scene showcasing the built-in 3D shapes
 
 ## Animation
 
