mirror of

https://github.com/bevyengine/bevy

synced 2025-02-18 15:08:36 +00:00

Revamp Bloom (#6677)

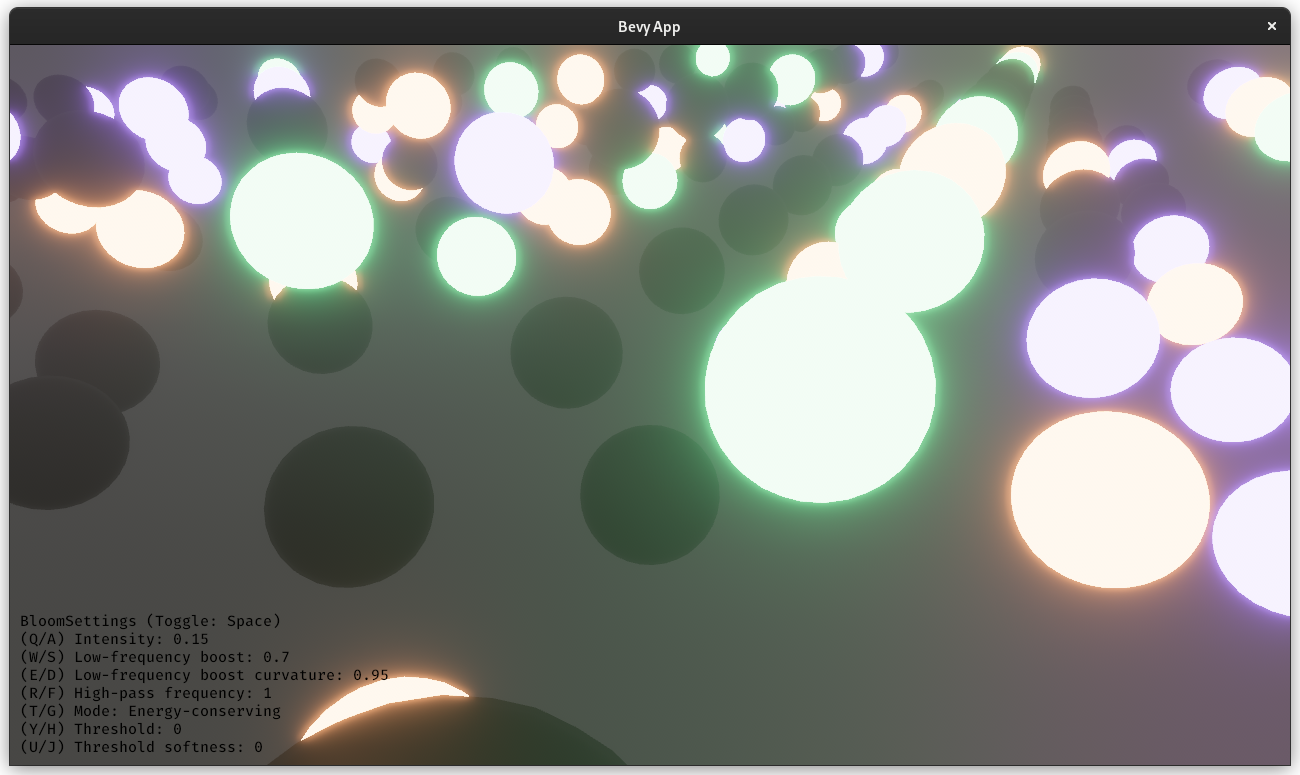

Huge credit to @StarLederer, who did almost all of the work on this. We're just reusing this PR to keep everything in one place.

# Objective

1. Make bloom more physically based.
2. Improve artistic control.
3. Allow bloom to be used as a screen blur.
4. Fix #6634.
5. Address #6655 (although the author makes incorrect conclusions).

## Solution

1. Set the default threshold to 0.
2. Lerp between bloom textures when `composite_mode: BloomCompositeMode::EnergyConserving`.
   1. Use [a parametric function](https://starlederer.github.io/bloom) to control blend levels for each bloom texture. In the future this can be controlled per-pixel for things like lens dirt.
3. Implement `BloomCompositeMode::Additive` for situations where the old-school look is desired.

## Changelog

* Bloom now looks different.
* Added `BloomSettings::lf_boost`, `BloomSettings::lf_boost_curvature`, `BloomSettings::high_pass_frequency` and `BloomSettings::composite_mode`.
* `BloomSettings::scale` removed.
* `BloomSettings::knee` renamed to `BloomPrefilterSettings::softness`.
* `BloomSettings::threshold` renamed to `BloomPrefilterSettings::threshold`.
* The bloom example has been renamed to bloom_3d and improved. A bloom_2d example was added.

## Migration Guide

* Replace uses of `BloomSettings::knee` and `BloomSettings::threshold` with the corresponding fields of `BloomSettings::prefilter_settings`, where `knee` is now `softness`.
* If a `BloomSettings` instance is defined without `..default()`, add `..default()` or define the missing fields manually.
* Adapt to bloom looking visually different (if needed).

Co-authored-by: Herman Lederer <germans.lederers@gmail.com>
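The difference between the two composite modes named in the Solution list can be sketched per colour channel. This is only an illustration under assumptions: the enum `CompositeMode`, the function `composite` and the scalar `blend` factor are hypothetical names, and the real shader blends whole mip textures rather than single values.

```rust
/// Hypothetical stand-in for the two composite modes named in the commit message.
#[derive(Clone, Copy, Debug, PartialEq)]
pub enum CompositeMode {
    EnergyConserving,
    Additive,
}

/// Blend one channel of the scene (`base`) with one channel of blurred bloom.
/// `blend` is an assumed scalar blend factor in [0, 1].
pub fn composite(base: f32, bloom: f32, blend: f32, mode: CompositeMode) -> f32 {
    match mode {
        // Linear interpolation: the weights (1 - blend) and blend sum to 1,
        // so a uniformly lit image keeps its brightness.
        CompositeMode::EnergyConserving => base * (1.0 - blend) + bloom * blend,
        // Classic look: bloom is added on top, so brightness can only grow.
        CompositeMode::Additive => base + bloom * blend,
    }
}
```

With `base == bloom`, the energy-conserving branch returns the input unchanged, while the additive branch brightens it; that is the sense in which the lerp is "energy conserving" in this sketch.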


parent

cbbf8ac575

commit

2a7000a738

12 changed files with 1409 additions and 772 deletions

20

Cargo.toml


@@ -50,7 +50,7 @@ default = [
   "x11",
   "filesystem_watcher",
   "android_shared_stdcxx",
-  "tonemapping_luts"
+  "tonemapping_luts",
 ]
 
 # Force dynamic linking, which improves iterative compile times
@@ -239,6 +239,16 @@ path = "examples/hello_world.rs"
 hidden = true
 
 # 2D Rendering
+[[example]]
+name = "bloom_2d"
+path = "examples/2d/bloom_2d.rs"
+
+[package.metadata.example.bloom_2d]
+name = "2D Bloom"
+description = "Illustrates bloom post-processing in 2d"
+category = "2D Rendering"
+wasm = false
+
 [[example]]
 name = "move_sprite"
 path = "examples/2d/move_sprite.rs"
@@ -451,11 +461,11 @@ category = "3D Rendering"
 wasm = true
 
 [[example]]
-name = "bloom"
-path = "examples/3d/bloom.rs"
+name = "bloom_3d"
+path = "examples/3d/bloom_3d.rs"
 
-[package.metadata.example.bloom]
-name = "Bloom"
+[package.metadata.example.bloom_3d]
+name = "3D Bloom"
 description = "Illustrates bloom configuration using HDR and emissive materials"
 category = "3D Rendering"
 wasm = false

@@ -1,138 +1,151 @@

|

|||

// Bloom works by creating an intermediate texture with a bunch of mip levels, each half the size of the previous.

|

||||

// You then downsample each mip (starting with the original texture) to the lower resolution mip under it, going in order.

|

||||

// You then upsample each mip (starting from the smallest mip) and blend with the higher resolution mip above it (ending on the original texture).

|

||||

//

|

||||

// References:

|

||||

// * [COD] - Next Generation Post Processing in Call of Duty - http://www.iryoku.com/next-generation-post-processing-in-call-of-duty-advanced-warfare

|

||||

// * [PBB] - Physically Based Bloom - https://learnopengl.com/Guest-Articles/2022/Phys.-Based-Bloom

|

||||

|

||||

#import bevy_core_pipeline::fullscreen_vertex_shader

|

||||

|

||||

struct BloomUniforms {

|

||||

threshold: f32,

|

||||

knee: f32,

|

||||

scale: f32,

|

||||

intensity: f32,

|

||||

threshold_precomputations: vec4<f32>,

|

||||

viewport: vec4<f32>,

|

||||

aspect: f32,

|

||||

};

|

||||

|

||||

@group(0) @binding(0)

|

||||

var original: texture_2d<f32>;

|

||||

var input_texture: texture_2d<f32>;

|

||||

@group(0) @binding(1)

|

||||

var original_sampler: sampler;

|

||||

var s: sampler;

|

||||

|

||||

@group(0) @binding(2)

|

||||

var<uniform> uniforms: BloomUniforms;

|

||||

@group(0) @binding(3)

|

||||

var up: texture_2d<f32>;

|

||||

|

||||

fn quadratic_threshold(color: vec4<f32>, threshold: f32, curve: vec3<f32>) -> vec4<f32> {

|

||||

let br = max(max(color.r, color.g), color.b);

|

||||

#ifdef FIRST_DOWNSAMPLE

|

||||

// https://catlikecoding.com/unity/tutorials/advanced-rendering/bloom/#3.4

|

||||

fn soft_threshold(color: vec3<f32>) -> vec3<f32> {

|

||||

let brightness = max(color.r, max(color.g, color.b));

|

||||

var softness = brightness - uniforms.threshold_precomputations.y;

|

||||

softness = clamp(softness, 0.0, uniforms.threshold_precomputations.z);

|

||||

softness = softness * softness * uniforms.threshold_precomputations.w;

|

||||

var contribution = max(brightness - uniforms.threshold_precomputations.x, softness);

|

||||

contribution /= max(brightness, 0.00001); // Prevent division by 0

|

||||

return color * contribution;

|

||||

}

|

||||

#endif
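The new `soft_threshold` shader function can be mirrored on the CPU to see what it does to a colour. The following is a sketch, not the crate's code: the layout of `threshold_precomputations` as `(threshold, threshold - knee, 2 * knee, 0.25 / (knee + 0.00001))` is an assumption taken from the Catlike Coding article linked in the shader, and both function names are hypothetical.

```rust
/// Assumed layout of the four precomputed values (after the Catlike Coding
/// article): x = threshold, y = threshold - knee, z = 2 * knee,
/// w = 0.25 / (knee + epsilon). `knee` must be >= 0.
pub fn threshold_precomputations(threshold: f32, knee: f32) -> [f32; 4] {
    [threshold, threshold - knee, 2.0 * knee, 0.25 / (knee + 0.00001)]
}

/// CPU mirror of the shader's `soft_threshold`, operating on an RGB triple.
pub fn soft_threshold(color: [f32; 3], pre: [f32; 4]) -> [f32; 3] {
    let brightness = color[0].max(color[1].max(color[2]));
    // Quadratic ramp inside the knee region around the threshold.
    let mut softness = (brightness - pre[1]).clamp(0.0, pre[2]);
    softness = softness * softness * pre[3];
    // Above the knee the hard threshold wins; inside it the soft curve wins.
    let mut contribution = (brightness - pre[0]).max(softness);
    contribution /= brightness.max(0.00001); // Prevent division by 0
    [
        color[0] * contribution,
        color[1] * contribution,
        color[2] * contribution,
    ]
}
```

Under these assumptions, a colour well below the knee is scaled to zero, and a colour well above it is scaled by `(brightness - threshold) / brightness`, which preserves its hue.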

|

||||

|

||||

var rq: f32 = clamp(br - curve.x, 0.0, curve.y);

|

||||

rq = curve.z * rq * rq;

|

||||

|

||||

return color * max(rq, br - threshold) / max(br, 0.0001);

|

||||

// luminance coefficients from Rec. 709.

|

||||

// https://en.wikipedia.org/wiki/Rec._709

|

||||

fn tonemapping_luminance(v: vec3<f32>) -> f32 {

|

||||

return dot(v, vec3<f32>(0.2126, 0.7152, 0.0722));

|

||||

}

|

||||

|

||||

// Samples original around the supplied uv using a filter.

|

||||

//

|

||||

// o o o

|

||||

// o o

|

||||

// o o o

|

||||

// o o

|

||||

// o o o

|

||||

//

|

||||

// This is used because it has a number of advantages that

|

||||

// outweigh the cost of 13 samples that basically boil down

|

||||

// to it looking better.

|

||||

//

|

||||

// These advantages are outlined in a youtube video by the Cherno:

|

||||

// https://www.youtube.com/watch?v=tI70-HIc5ro

|

||||

fn sample_13_tap(uv: vec2<f32>, scale: vec2<f32>) -> vec4<f32> {

|

||||

let a = textureSample(original, original_sampler, uv + vec2<f32>(-1.0, -1.0) * scale);

|

||||

let b = textureSample(original, original_sampler, uv + vec2<f32>(0.0, -1.0) * scale);

|

||||

let c = textureSample(original, original_sampler, uv + vec2<f32>(1.0, -1.0) * scale);

|

||||

let d = textureSample(original, original_sampler, uv + vec2<f32>(-0.5, -0.5) * scale);

|

||||

let e = textureSample(original, original_sampler, uv + vec2<f32>(0.5, -0.5) * scale);

|

||||

let f = textureSample(original, original_sampler, uv + vec2<f32>(-1.0, 0.0) * scale);

|

||||

let g = textureSample(original, original_sampler, uv + vec2<f32>(0.0, 0.0) * scale);

|

||||

let h = textureSample(original, original_sampler, uv + vec2<f32>(1.0, 0.0) * scale);

|

||||

let i = textureSample(original, original_sampler, uv + vec2<f32>(-0.5, 0.5) * scale);

|

||||

let j = textureSample(original, original_sampler, uv + vec2<f32>(0.5, 0.5) * scale);

|

||||

let k = textureSample(original, original_sampler, uv + vec2<f32>(-1.0, 1.0) * scale);

|

||||

let l = textureSample(original, original_sampler, uv + vec2<f32>(0.0, 1.0) * scale);

|

||||

let m = textureSample(original, original_sampler, uv + vec2<f32>(1.0, 1.0) * scale);

|

||||

|

||||

let div = (1.0 / 4.0) * vec2<f32>(0.5, 0.125);

|

||||

|

||||

var o: vec4<f32> = (d + e + i + j) * div.x;

|

||||

o = o + (a + b + g + f) * div.y;

|

||||

o = o + (b + c + h + g) * div.y;

|

||||

o = o + (f + g + l + k) * div.y;

|

||||

o = o + (g + h + m + l) * div.y;

|

||||

|

||||

return o;

|

||||

fn rgb_to_srgb_simple(color: vec3<f32>) -> vec3<f32> {

|

||||

return pow(color, vec3<f32>(1.0 / 2.2));

|

||||

}

|

||||

|

||||

// Samples original using a 3x3 tent filter.

|

||||

//

|

||||

// NOTE: Use a 2x2 filter for better perf, but 3x3 looks better.

|

||||

fn sample_original_3x3_tent(uv: vec2<f32>, scale: vec2<f32>) -> vec4<f32> {

|

||||

let d = vec4<f32>(1.0, 1.0, -1.0, 0.0);

|

||||

|

||||

var s: vec4<f32> = textureSample(original, original_sampler, uv - d.xy * scale);

|

||||

s = s + textureSample(original, original_sampler, uv - d.wy * scale) * 2.0;

|

||||

s = s + textureSample(original, original_sampler, uv - d.zy * scale);

|

||||

|

||||

s = s + textureSample(original, original_sampler, uv + d.zw * scale) * 2.0;

|

||||

s = s + textureSample(original, original_sampler, uv) * 4.0;

|

||||

s = s + textureSample(original, original_sampler, uv + d.xw * scale) * 2.0;

|

||||

|

||||

s = s + textureSample(original, original_sampler, uv + d.zy * scale);

|

||||

s = s + textureSample(original, original_sampler, uv + d.wy * scale) * 2.0;

|

||||

s = s + textureSample(original, original_sampler, uv + d.xy * scale);

|

||||

|

||||

return s / 16.0;

|

||||

// http://graphicrants.blogspot.com/2013/12/tone-mapping.html

|

||||

fn karis_average(color: vec3<f32>) -> f32 {

|

||||

// Luminance calculated by gamma-correcting linear RGB to non-linear sRGB using pow(color, 1.0 / 2.2)

|

||||

// and then calculating luminance based on Rec. 709 color primaries.

|

||||

let luma = tonemapping_luminance(rgb_to_srgb_simple(color)) / 4.0;

|

||||

return 1.0 / (1.0 + luma);

|

||||

}
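To get a feel for the Karis weight, the three helper functions above can be ported to plain Rust. This is a minimal sketch that copies the shader arithmetic onto `[f32; 3]` arrays; it is not part of the commit.

```rust
/// Rec. 709 luminance coefficients, as in the shader's `tonemapping_luminance`.
pub fn tonemapping_luminance(v: [f32; 3]) -> f32 {
    0.2126 * v[0] + 0.7152 * v[1] + 0.0722 * v[2]
}

/// Approximate linear-to-sRGB conversion with a plain 1/2.2 gamma.
pub fn rgb_to_srgb_simple(c: [f32; 3]) -> [f32; 3] {
    let g = 1.0 / 2.2;
    [c[0].powf(g), c[1].powf(g), c[2].powf(g)]
}

/// Weight that shrinks as the sample gets brighter, which damps fireflies.
pub fn karis_average(color: [f32; 3]) -> f32 {
    let luma = tonemapping_luminance(rgb_to_srgb_simple(color)) / 4.0;
    1.0 / (1.0 + luma)
}
```

Black receives a weight of 1, white a weight of 0.8, and very bright samples a weight that keeps falling, so a single hot pixel contributes less to its group than its raw value suggests.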

|

||||

|

||||

// [COD] slide 153

|

||||

fn sample_input_13_tap(uv: vec2<f32>) -> vec3<f32> {

|

||||

let a = textureSample(input_texture, s, uv, vec2<i32>(-2, 2)).rgb;

|

||||

let b = textureSample(input_texture, s, uv, vec2<i32>(0, 2)).rgb;

|

||||

let c = textureSample(input_texture, s, uv, vec2<i32>(2, 2)).rgb;

|

||||

let d = textureSample(input_texture, s, uv, vec2<i32>(-2, 0)).rgb;

|

||||

let e = textureSample(input_texture, s, uv).rgb;

|

||||

let f = textureSample(input_texture, s, uv, vec2<i32>(2, 0)).rgb;

|

||||

let g = textureSample(input_texture, s, uv, vec2<i32>(-2, -2)).rgb;

|

||||

let h = textureSample(input_texture, s, uv, vec2<i32>(0, -2)).rgb;

|

||||

let i = textureSample(input_texture, s, uv, vec2<i32>(2, -2)).rgb;

|

||||

let j = textureSample(input_texture, s, uv, vec2<i32>(-1, 1)).rgb;

|

||||

let k = textureSample(input_texture, s, uv, vec2<i32>(1, 1)).rgb;

|

||||

let l = textureSample(input_texture, s, uv, vec2<i32>(-1, -1)).rgb;

|

||||

let m = textureSample(input_texture, s, uv, vec2<i32>(1, -1)).rgb;

|

||||

|

||||

#ifdef FIRST_DOWNSAMPLE

|

||||

// [COD] slide 168

|

||||

//

|

||||

// The first downsample pass reads from the rendered frame which may exhibit

|

||||

// 'fireflies' (individual very bright pixels) that should not cause the bloom effect.

|

||||

//

|

||||

// The first downsample uses a firefly-reduction method proposed by Brian Karis

|

||||

// which takes a weighted-average of the samples to limit their luma range to [0, 1].

|

||||

// This implementation matches the LearnOpenGL article [PBB].

|

||||

var group0 = (a + b + d + e) * (0.125f / 4.0f);

|

||||

var group1 = (b + c + e + f) * (0.125f / 4.0f);

|

||||

var group2 = (d + e + g + h) * (0.125f / 4.0f);

|

||||

var group3 = (e + f + h + i) * (0.125f / 4.0f);

|

||||

var group4 = (j + k + l + m) * (0.5f / 4.0f);

|

||||

group0 *= karis_average(group0);

|

||||

group1 *= karis_average(group1);

|

||||

group2 *= karis_average(group2);

|

||||

group3 *= karis_average(group3);

|

||||

group4 *= karis_average(group4);

|

||||

return group0 + group1 + group2 + group3 + group4;

|

||||

#else

|

||||

var sample = (a + c + g + i) * 0.03125;

|

||||

sample += (b + d + f + h) * 0.0625;

|

||||

sample += (e + j + k + l + m) * 0.125;

|

||||

return sample;

|

||||

#endif

|

||||

}
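The weights in the `#else` branch of `sample_input_13_tap` sum to 1, so a uniform input passes through unchanged. The sketch below checks that on scalars; `combine_13_tap` is a hypothetical helper that takes the thirteen samples in the shader's `a` to `m` order.

```rust
/// Combine thirteen scalar samples (ordered a..m as in the shader) with the
/// weights of the non-first downsample pass.
pub fn combine_13_tap(s: [f32; 13]) -> f32 {
    let [a, b, c, d, e, f, g, h, i, j, k, l, m] = s;
    // Four outer corners.
    let mut sample = (a + c + g + i) * 0.03125;
    // Four outer edge midpoints.
    sample += (b + d + f + h) * 0.0625;
    // Centre plus the four inner taps.
    sample += (e + j + k + l + m) * 0.125;
    sample
}
```

The totals are 4 × 0.03125 + 4 × 0.0625 + 5 × 0.125 = 1.0, which is why the filter neither brightens nor darkens a flat region.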

|

||||

|

||||

// [COD] slide 162

|

||||

fn sample_input_3x3_tent(uv: vec2<f32>) -> vec3<f32> {

|

||||

// Radius. Empirically chosen by and tweaked from the LearnOpenGL article.

|

||||

let x = 0.004 / uniforms.aspect;

|

||||

let y = 0.004;

|

||||

|

||||

let a = textureSample(input_texture, s, vec2<f32>(uv.x - x, uv.y + y)).rgb;

|

||||

let b = textureSample(input_texture, s, vec2<f32>(uv.x, uv.y + y)).rgb;

|

||||

let c = textureSample(input_texture, s, vec2<f32>(uv.x + x, uv.y + y)).rgb;

|

||||

|

||||

let d = textureSample(input_texture, s, vec2<f32>(uv.x - x, uv.y)).rgb;

|

||||

let e = textureSample(input_texture, s, vec2<f32>(uv.x, uv.y)).rgb;

|

||||

let f = textureSample(input_texture, s, vec2<f32>(uv.x + x, uv.y)).rgb;

|

||||

|

||||

let g = textureSample(input_texture, s, vec2<f32>(uv.x - x, uv.y - y)).rgb;

|

||||

let h = textureSample(input_texture, s, vec2<f32>(uv.x, uv.y - y)).rgb;

|

||||

let i = textureSample(input_texture, s, vec2<f32>(uv.x + x, uv.y - y)).rgb;

|

||||

|

||||

var sample = e * 0.25;

|

||||

sample += (b + d + f + h) * 0.125;

|

||||

sample += (a + c + g + i) * 0.0625;

|

||||

|

||||

return sample;

|

||||

}
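The nine taps of `sample_input_3x3_tent` form the classic 1-2-1 tent kernel divided by 16, with the horizontal radius divided by the aspect ratio. A small sketch, assuming a hypothetical helper `tent_taps` that returns each UV offset with its weight:

```rust
/// UV offsets and weights of the 3x3 tent filter for a given aspect ratio.
/// The 0.004 radius is the constant used in the shader.
pub fn tent_taps(aspect: f32) -> [([f32; 2], f32); 9] {
    let x = 0.004 / aspect;
    let y = 0.004;
    [
        ([-x, y], 0.0625),
        ([0.0, y], 0.125),
        ([x, y], 0.0625),
        ([-x, 0.0], 0.125),
        ([0.0, 0.0], 0.25),
        ([x, 0.0], 0.125),
        ([-x, -y], 0.0625),
        ([0.0, -y], 0.125),
        ([x, -y], 0.0625),
    ]
}
```

Because the radius is expressed in UV space rather than texels, the blur covers the same fraction of the image at every mip level, which appears to be what replaces the removed `scale` uniform.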

|

||||

|

||||

#ifdef FIRST_DOWNSAMPLE

|

||||

@fragment

|

||||

fn downsample_prefilter(@location(0) output_uv: vec2<f32>) -> @location(0) vec4<f32> {

|

||||

fn downsample_first(@location(0) output_uv: vec2<f32>) -> @location(0) vec4<f32> {

|

||||

let sample_uv = uniforms.viewport.xy + output_uv * uniforms.viewport.zw;

|

||||

let texel_size = 1.0 / vec2<f32>(textureDimensions(original));

|

||||

var sample = sample_input_13_tap(sample_uv);

|

||||

// Lower bound of 0.0001 is to avoid propagating multiplying by 0.0 through the

|

||||

// downscaling and upscaling which would result in black boxes.

|

||||

// The upper bound is to prevent NaNs.

|

||||

sample = clamp(sample, vec3<f32>(0.0001), vec3<f32>(3.40282347E+38));

|

||||

|

||||

let scale = texel_size;

|

||||

#ifdef USE_THRESHOLD

|

||||

sample = soft_threshold(sample);

|

||||

#endif

|

||||

|

||||

let curve = vec3<f32>(

|

||||

uniforms.threshold - uniforms.knee,

|

||||

uniforms.knee * 2.0,

|

||||

0.25 / uniforms.knee,

|

||||

);

|

||||

|

||||

var o: vec4<f32> = sample_13_tap(sample_uv, scale);

|

||||

|

||||

o = quadratic_threshold(o, uniforms.threshold, curve);

|

||||

o = max(o, vec4<f32>(0.00001));

|

||||

|

||||

return o;

|

||||

return vec4<f32>(sample, 1.0);

|

||||

}

|

||||

#endif
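The first downsample pass remaps the fullscreen UV into the camera's viewport with `viewport.xy + output_uv * viewport.zw`. The sketch below reproduces that mapping on the CPU; the normalisation (origin and size divided by the target size) follows the removed `extract_component` code in this diff, while the function names are hypothetical.

```rust
/// Viewport origin and size as fractions of the render target, packed as
/// (origin.x, origin.y, size.x, size.y).
pub fn normalized_viewport(origin: [u32; 2], size: [u32; 2], target: [u32; 2]) -> [f32; 4] {
    [
        origin[0] as f32 / target[0] as f32,
        origin[1] as f32 / target[1] as f32,
        size[0] as f32 / target[0] as f32,
        size[1] as f32 / target[1] as f32,
    ]
}

/// Map a fullscreen UV in [0, 1] into the viewport's sub-rectangle.
pub fn sample_uv(viewport: [f32; 4], output_uv: [f32; 2]) -> [f32; 2] {
    [
        viewport[0] + output_uv[0] * viewport[2],
        viewport[1] + output_uv[1] * viewport[3],
    ]
}
```

For a 400×300 viewport at (100, 50) on an 800×600 target, UV (0, 0) maps to (0.125, 0.0833) and UV (1, 1) to (0.625, 0.5833), so only the camera's own region of the main texture is read.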

|

||||

|

||||

@fragment

|

||||

fn downsample(@location(0) uv: vec2<f32>) -> @location(0) vec4<f32> {

|

||||

let texel_size = 1.0 / vec2<f32>(textureDimensions(original));

|

||||

|

||||

let scale = texel_size;

|

||||

|

||||

return sample_13_tap(uv, scale);

|

||||

return vec4<f32>(sample_input_13_tap(uv), 1.0);

|

||||

}

|

||||

|

||||

@fragment

|

||||

fn upsample(@location(0) uv: vec2<f32>) -> @location(0) vec4<f32> {

|

||||

let texel_size = 1.0 / vec2<f32>(textureDimensions(original));

|

||||

|

||||

let upsample = sample_original_3x3_tent(uv, texel_size * uniforms.scale);

|

||||

var color: vec4<f32> = textureSample(up, original_sampler, uv);

|

||||

color = vec4<f32>(color.rgb + upsample.rgb, upsample.a);

|

||||

|

||||

return color;

|

||||

}

|

||||

|

||||

@fragment

|

||||

fn upsample_final(@location(0) uv: vec2<f32>) -> @location(0) vec4<f32> {

|

||||

let texel_size = 1.0 / vec2<f32>(textureDimensions(original));

|

||||

|

||||

let upsample = sample_original_3x3_tent(uv, texel_size * uniforms.scale);

|

||||

|

||||

return vec4<f32>(upsample.rgb * uniforms.intensity, upsample.a);

|

||||

return vec4<f32>(sample_input_3x3_tent(uv), 1.0);

|

||||

}

|

||||

|

|

|

|||

185

crates/bevy_core_pipeline/src/bloom/downsampling_pipeline.rs

Normal file


@@ -0,0 +1,185 @@
+use super::{BloomSettings, BLOOM_SHADER_HANDLE, BLOOM_TEXTURE_FORMAT};
+use crate::fullscreen_vertex_shader::fullscreen_shader_vertex_state;
+use bevy_ecs::{
+    prelude::{Component, Entity},
+    system::{Commands, Query, Res, ResMut, Resource},
+    world::{FromWorld, World},
+};
+use bevy_math::Vec4;
+use bevy_render::{render_resource::*, renderer::RenderDevice};
+
+#[derive(Component)]
+pub struct BloomDownsamplingPipelineIds {
+    pub main: CachedRenderPipelineId,
+    pub first: CachedRenderPipelineId,
+}
+
+#[derive(Resource)]
+pub struct BloomDownsamplingPipeline {
+    /// Layout with a texture, a sampler, and uniforms
+    pub bind_group_layout: BindGroupLayout,
+    pub sampler: Sampler,
+}
+
+#[derive(PartialEq, Eq, Hash, Clone)]
+pub struct BloomDownsamplingPipelineKeys {
+    prefilter: bool,
+    first_downsample: bool,
+}
+
+/// The uniform struct extracted from [`BloomSettings`] attached to a Camera.
+/// Will be available for use in the Bloom shader.
+#[derive(Component, ShaderType, Clone)]
+pub struct BloomUniforms {
+    // Precomputed values used when thresholding, see https://catlikecoding.com/unity/tutorials/advanced-rendering/bloom/#3.4
+    pub threshold_precomputations: Vec4,
+    pub viewport: Vec4,
+    pub aspect: f32,
+}
+
+impl FromWorld for BloomDownsamplingPipeline {
+    fn from_world(world: &mut World) -> Self {
+        let render_device = world.resource::<RenderDevice>();
+
+        // Input texture binding
+        let texture = BindGroupLayoutEntry {
+            binding: 0,
+            ty: BindingType::Texture {
+                sample_type: TextureSampleType::Float { filterable: true },
+                view_dimension: TextureViewDimension::D2,
+                multisampled: false,
+            },
+            visibility: ShaderStages::FRAGMENT,
+            count: None,
+        };
+
+        // Sampler binding
+        let sampler = BindGroupLayoutEntry {
+            binding: 1,
+            ty: BindingType::Sampler(SamplerBindingType::Filtering),
+            visibility: ShaderStages::FRAGMENT,
+            count: None,
+        };
+
+        // Downsampling settings binding
+        let settings = BindGroupLayoutEntry {
+            binding: 2,
+            ty: BindingType::Buffer {
+                ty: BufferBindingType::Uniform,
+                has_dynamic_offset: true,
+                min_binding_size: Some(BloomUniforms::min_size()),
+            },
+            visibility: ShaderStages::FRAGMENT,
+            count: None,
+        };
+
+        // Bind group layout
+        let bind_group_layout =
+            render_device.create_bind_group_layout(&BindGroupLayoutDescriptor {
+                label: Some("bloom_downsampling_bind_group_layout_with_settings"),
+                entries: &[texture, sampler, settings],
+            });
+
+        // Sampler
+        let sampler = render_device.create_sampler(&SamplerDescriptor {
+            min_filter: FilterMode::Linear,
+            mag_filter: FilterMode::Linear,
+            address_mode_u: AddressMode::ClampToEdge,
+            address_mode_v: AddressMode::ClampToEdge,
+            ..Default::default()
+        });
+
+        BloomDownsamplingPipeline {
+            bind_group_layout,
+            sampler,
+        }
+    }
+}
+
+impl SpecializedRenderPipeline for BloomDownsamplingPipeline {
+    type Key = BloomDownsamplingPipelineKeys;
+
+    fn specialize(&self, key: Self::Key) -> RenderPipelineDescriptor {
+        let layout = vec![self.bind_group_layout.clone()];
+
+        let entry_point = if key.first_downsample {
+            "downsample_first".into()
+        } else {
+            "downsample".into()
+        };
+
+        let mut shader_defs = vec![];
+
+        if key.first_downsample {
+            shader_defs.push("FIRST_DOWNSAMPLE".into());
+        }
+
+        if key.prefilter {
+            shader_defs.push("USE_THRESHOLD".into());
+        }
+
+        RenderPipelineDescriptor {
+            label: Some(
+                if key.first_downsample {
+                    "bloom_downsampling_pipeline_first"
+                } else {
+                    "bloom_downsampling_pipeline"
+                }
+                .into(),
+            ),
+            layout,
+            vertex: fullscreen_shader_vertex_state(),
+            fragment: Some(FragmentState {
+                shader: BLOOM_SHADER_HANDLE.typed::<Shader>(),
+                shader_defs,
+                entry_point,
+                targets: vec![Some(ColorTargetState {
+                    format: BLOOM_TEXTURE_FORMAT,
+                    blend: None,
+                    write_mask: ColorWrites::ALL,
+                })],
+            }),
+            primitive: PrimitiveState::default(),
+            depth_stencil: None,
+            multisample: MultisampleState::default(),
+            push_constant_ranges: Vec::new(),
+        }
+    }
+}
+
+pub fn prepare_downsampling_pipeline(
+    mut commands: Commands,
+    pipeline_cache: Res<PipelineCache>,
+    mut pipelines: ResMut<SpecializedRenderPipelines<BloomDownsamplingPipeline>>,
+    pipeline: Res<BloomDownsamplingPipeline>,
+    views: Query<(Entity, &BloomSettings)>,
+) {
+    for (entity, settings) in &views {
+        let prefilter = settings.prefilter_settings.threshold > 0.0;
+
+        let pipeline_id = pipelines.specialize(
+            &pipeline_cache,
+            &pipeline,
+            BloomDownsamplingPipelineKeys {
+                prefilter,
+                first_downsample: false,
+            },
+        );
+
+        let pipeline_first_id = pipelines.specialize(
+            &pipeline_cache,
+            &pipeline,
+            BloomDownsamplingPipelineKeys {
+                prefilter,
+                first_downsample: true,
+            },
+        );
+
+        commands
+            .entity(entity)
+            .insert(BloomDownsamplingPipelineIds {
+                first: pipeline_first_id,
+                main: pipeline_id,
+            });
+    }
+}
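The way `prepare_downsampling_pipeline` and `specialize` turn a threshold into two pipeline variants can be sketched without any Bevy types. Everything here is a simplified stand-in: `Key`, `keys_for_threshold`, `entry_point` and `shader_defs` are hypothetical names that only mirror the branching in the file above.

```rust
/// Simplified stand-in for `BloomDownsamplingPipelineKeys`.
#[derive(PartialEq, Eq, Hash, Clone, Copy, Debug)]
pub struct Key {
    pub prefilter: bool,
    pub first_downsample: bool,
}

/// Build the (main, first) key pair for one camera, as the prepare system does.
pub fn keys_for_threshold(threshold: f32) -> (Key, Key) {
    // Thresholding is only compiled in when the threshold is positive.
    let prefilter = threshold > 0.0;
    (
        Key { prefilter, first_downsample: false },
        Key { prefilter, first_downsample: true },
    )
}

/// Fragment entry point chosen for a key.
pub fn entry_point(key: Key) -> &'static str {
    if key.first_downsample {
        "downsample_first"
    } else {
        "downsample"
    }
}

/// Shader defs pushed for a key, in the same order as `specialize`.
pub fn shader_defs(key: Key) -> Vec<&'static str> {
    let mut defs = Vec::new();
    if key.first_downsample {
        defs.push("FIRST_DOWNSAMPLE");
    }
    if key.prefilter {
        defs.push("USE_THRESHOLD");
    }
    defs
}
```

With the new default threshold of 0, neither variant carries `USE_THRESHOLD`, so the soft-threshold branch is compiled out of the shader entirely.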

@@ -1,19 +1,27 @@

|

|||

use crate::{core_2d, core_3d, fullscreen_vertex_shader::fullscreen_shader_vertex_state};

|

||||

mod downsampling_pipeline;

|

||||

mod settings;

|

||||

mod upsampling_pipeline;

|

||||

|

||||

pub use settings::{BloomCompositeMode, BloomPrefilterSettings, BloomSettings};

|

||||

|

||||

use crate::{core_2d, core_3d};

|

||||

use bevy_app::{App, Plugin};

|

||||

use bevy_asset::{load_internal_asset, HandleUntyped};

|

||||

use bevy_ecs::{

|

||||

prelude::*,

|

||||

query::{QueryItem, QueryState},

|

||||

prelude::{Component, Entity},

|

||||

query::{QueryState, With},

|

||||

schedule::IntoSystemConfig,

|

||||

system::{Commands, Query, Res, ResMut},

|

||||

world::World,

|

||||

};

|

||||

use bevy_math::{UVec2, UVec4, Vec4};

|

||||

use bevy_reflect::{Reflect, TypeUuid};

|

||||

use bevy_math::UVec2;

|

||||

use bevy_reflect::TypeUuid;

|

||||

use bevy_render::{

|

||||

camera::ExtractedCamera,

|

||||

extract_component::{

|

||||

ComponentUniforms, DynamicUniformIndex, ExtractComponent, ExtractComponentPlugin,

|

||||

UniformComponentPlugin,

|

||||

ComponentUniforms, DynamicUniformIndex, ExtractComponentPlugin, UniformComponentPlugin,

|

||||

},

|

||||

prelude::Camera,

|

||||

prelude::Color,

|

||||

render_graph::{Node, NodeRunError, RenderGraph, RenderGraphContext, SlotInfo, SlotType},

|

||||

render_resource::*,

|

||||

renderer::{RenderContext, RenderDevice},

|

||||

|

|

@@ -23,12 +31,24 @@ use bevy_render::{

|

|||

};

|

||||

#[cfg(feature = "trace")]

|

||||

use bevy_utils::tracing::info_span;

|

||||

use bevy_utils::HashMap;

|

||||

use downsampling_pipeline::{

|

||||

prepare_downsampling_pipeline, BloomDownsamplingPipeline, BloomDownsamplingPipelineIds,

|

||||

BloomUniforms,

|

||||

};

|

||||

use std::num::NonZeroU32;

|

||||

use upsampling_pipeline::{

|

||||

prepare_upsampling_pipeline, BloomUpsamplingPipeline, UpsamplingPipelineIds,

|

||||

};

|

||||

|

||||

const BLOOM_SHADER_HANDLE: HandleUntyped =

|

||||

HandleUntyped::weak_from_u64(Shader::TYPE_UUID, 929599476923908);

|

||||

|

||||

const BLOOM_TEXTURE_FORMAT: TextureFormat = TextureFormat::Rg11b10Float;

|

||||

|

||||

// Maximum size of each dimension for the largest mipchain texture used in downscaling/upscaling.

|

||||

// 512 behaves well with the UV offset of 0.004 used in bloom.wgsl

|

||||

const MAX_MIP_DIMENSION: u32 = 512;
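The shader header describes the bloom texture as a chain of mip levels, each half the size of the previous one. The sketch below lists the sizes of such a chain. It assumes the caller has already capped the top level (for example to `MAX_MIP_DIMENSION`) and chosen the level count; how this commit derives either value is not shown in this excerpt, and `mip_chain` is a hypothetical helper.

```rust
/// Sizes of `levels` mip levels, starting at `width` x `height` and halving
/// each dimension per level (never going below 1 texel).
pub fn mip_chain(width: u32, height: u32, levels: u32) -> Vec<(u32, u32)> {
    let mut sizes = Vec::with_capacity(levels as usize);
    let (mut w, mut h) = (width.max(1), height.max(1));
    for _ in 0..levels {
        sizes.push((w, h));
        w = (w / 2).max(1);
        h = (h / 2).max(1);
    }
    sizes
}
```

For a 512×288 top level and four levels this yields 512×288, 256×144, 128×72 and 64×36; the downsample passes walk this list forwards and the upsample passes walk it backwards.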

|

||||

|

||||

pub struct BloomPlugin;

|

||||

|

||||

impl Plugin for BloomPlugin {

|

||||

|

|

@@ -36,8 +56,10 @@ impl Plugin for BloomPlugin {

|

|||

load_internal_asset!(app, BLOOM_SHADER_HANDLE, "bloom.wgsl", Shader::from_wgsl);

|

||||

|

||||

app.register_type::<BloomSettings>();

|

||||

app.register_type::<BloomPrefilterSettings>();

|

||||

app.register_type::<BloomCompositeMode>();

|

||||

app.add_plugin(ExtractComponentPlugin::<BloomSettings>::default());

|

||||

app.add_plugin(UniformComponentPlugin::<BloomUniform>::default());

|

||||

app.add_plugin(UniformComponentPlugin::<BloomUniforms>::default());

|

||||

|

||||

let render_app = match app.get_sub_app_mut(RenderApp) {

|

||||

Ok(render_app) => render_app,

|

||||

|

|

@@ -45,10 +67,16 @@ impl Plugin for BloomPlugin {

|

|||

};

|

||||

|

||||

render_app

|

||||

.init_resource::<BloomPipelines>()

|

||||

.init_resource::<BloomDownsamplingPipeline>()

|

||||

.init_resource::<BloomUpsamplingPipeline>()

|

||||

.init_resource::<SpecializedRenderPipelines<BloomDownsamplingPipeline>>()

|

||||

.init_resource::<SpecializedRenderPipelines<BloomUpsamplingPipeline>>()

|

||||

.add_system(prepare_bloom_textures.in_set(RenderSet::Prepare))

|

||||

.add_system(prepare_downsampling_pipeline.in_set(RenderSet::Prepare))

|

||||

.add_system(prepare_upsampling_pipeline.in_set(RenderSet::Prepare))

|

||||

.add_system(queue_bloom_bind_groups.in_set(RenderSet::Queue));

|

||||

|

||||

// Add bloom to the 3d render graph

|

||||

{

|

||||

let bloom_node = BloomNode::new(&mut render_app.world);

|

||||

let mut graph = render_app.world.resource_mut::<RenderGraph>();

|

||||

|

|

@@ -73,6 +101,7 @@ impl Plugin for BloomPlugin {

|

|||

);

|

||||

}

|

||||

|

||||

// Add bloom to the 2d render graph

|

||||

{

|

||||

let bloom_node = BloomNode::new(&mut render_app.world);

|

||||

let mut graph = render_app.world.resource_mut::<RenderGraph>();

|

||||

|

|

@@ -99,88 +128,16 @@ impl Plugin for BloomPlugin {

|

|||

}

|

||||

}

|

||||

|

||||

/// Applies a bloom effect to a HDR-enabled 2d or 3d camera.

|

||||

///

|

||||

/// Bloom causes bright objects to "glow", emitting a halo of light around them.

|

||||

///

|

||||

/// Often used in conjunction with `bevy_pbr::StandardMaterial::emissive`.

|

||||

///

|

||||

/// Note: This light is not "real" in the way directional or point lights are.

|

||||

///

|

||||

/// Bloom will not cast shadows or bend around other objects - it is purely a post-processing

|

||||

/// effect overlaid on top of the already-rendered scene.

|

||||

///

|

||||

/// See also <https://en.wikipedia.org/wiki/Bloom_(shader_effect)>.

|

||||

#[derive(Component, Reflect, Clone)]

|

||||

pub struct BloomSettings {

|

||||

/// Baseline of the threshold curve (default: 1.0).

|

||||

///

|

||||

/// RGB values under the threshold curve will not have bloom applied.

|

||||

pub threshold: f32,

|

||||

|

||||

/// Knee of the threshold curve (default: 0.1).

|

||||

pub knee: f32,

|

||||

|

||||

/// Scale used when upsampling (default: 1.0).

|

||||

pub scale: f32,

|

||||

|

||||

/// Intensity of the bloom effect (default: 0.3).

|

||||

pub intensity: f32,

|

||||

}

|

||||

|

||||

impl Default for BloomSettings {

|

||||

fn default() -> Self {

|

||||

Self {

|

||||

threshold: 1.0,

|

||||

knee: 0.1,

|

||||

scale: 1.0,

|

||||

intensity: 0.3,

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

impl ExtractComponent for BloomSettings {

|

||||

type Query = (&'static Self, &'static Camera);

|

||||

|

||||

type Filter = ();

|

||||

type Out = BloomUniform;

|

||||

|

||||

fn extract_component((settings, camera): QueryItem<'_, Self::Query>) -> Option<Self::Out> {

|

||||

if !(camera.is_active && camera.hdr) {

|

||||

return None;

|

||||

}

|

||||

|

||||

if let (Some((origin, _)), Some(size), Some(target_size)) = (

|

||||

camera.physical_viewport_rect(),

|

||||

camera.physical_viewport_size(),

|

||||

camera.physical_target_size(),

|

||||

) {

|

||||

let min_view = size.x.min(size.y) / 2;

|

||||

let mip_count = calculate_mip_count(min_view);

|

||||

let scale = (min_view / 2u32.pow(mip_count)) as f32 / 8.0;

|

||||

|

||||

Some(BloomUniform {

|

||||

threshold: settings.threshold,

|

||||

knee: settings.knee,

|

||||

scale: settings.scale * scale,

|

||||

intensity: settings.intensity,

|

||||

viewport: UVec4::new(origin.x, origin.y, size.x, size.y).as_vec4()

|

||||

/ UVec4::new(target_size.x, target_size.y, target_size.x, target_size.y)

|

||||

.as_vec4(),

|

||||

})

|

||||

} else {

|

||||

None

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

pub struct BloomNode {

|

||||

view_query: QueryState<(

|

||||

&'static ExtractedCamera,

|

||||

&'static ViewTarget,

|

||||

&'static BloomTextures,

|

||||

&'static BloomTexture,

|

||||

&'static BloomBindGroups,

|

||||

&'static DynamicUniformIndex<BloomUniform>,

|

||||

&'static DynamicUniformIndex<BloomUniforms>,

|

||||

&'static BloomSettings,

|

||||

&'static UpsamplingPipelineIds,

|

||||

&'static BloomDownsamplingPipelineIds,

|

||||

)>,

|

||||

}

|

||||

|

||||

|

|

@@ -203,6 +160,9 @@ impl Node for BloomNode {

|

|||

self.view_query.update_archetypes(world);

|

||||

}

|

||||

|

||||

// Atypically for a post-processing effect, we do not need to

|

||||

// use a secondary texture normally provided by view_target.post_process_write(),

|

||||

// instead we write into our own bloom texture and then directly back onto main.

|

||||

fn run(

|

||||

&self,

|

||||

graph: &mut RenderGraphContext,

|

||||

|

|

@@ -212,34 +172,67 @@ impl Node for BloomNode {

|

|||

#[cfg(feature = "trace")]

|

||||

let _bloom_span = info_span!("bloom").entered();

|

||||

|

||||

let pipelines = world.resource::<BloomPipelines>();

|

||||

let downsampling_pipeline_res = world.resource::<BloomDownsamplingPipeline>();

|

||||

let pipeline_cache = world.resource::<PipelineCache>();

|

||||

let uniforms = world.resource::<ComponentUniforms<BloomUniforms>>();

|

||||

let view_entity = graph.get_input_entity(Self::IN_VIEW)?;

|

||||

let (camera, view_target, textures, bind_groups, uniform_index) =

|

||||

match self.view_query.get_manual(world, view_entity) {

|

||||

Ok(result) => result,

|

||||

_ => return Ok(()),

|

||||

};

|

||||

let (

|

||||

downsampling_prefilter_pipeline,

|

||||

downsampling_pipeline,

|

||||

upsampling_pipeline,

|

||||

upsampling_final_pipeline,

|

||||

) = match (

|

||||

pipeline_cache.get_render_pipeline(pipelines.downsampling_prefilter_pipeline),

|

||||

pipeline_cache.get_render_pipeline(pipelines.downsampling_pipeline),

|

||||

pipeline_cache.get_render_pipeline(pipelines.upsampling_pipeline),

|

||||

pipeline_cache.get_render_pipeline(pipelines.upsampling_final_pipeline),

|

||||

) {

|

||||

(Some(p1), Some(p2), Some(p3), Some(p4)) => (p1, p2, p3, p4),

|

||||

_ => return Ok(()),

|

||||

};

|

||||

let Ok((

|

||||

camera,

|

||||

view_target,

|

||||

bloom_texture,

|

||||

bind_groups,

|

||||

uniform_index,

|

||||

bloom_settings,

|

||||

upsampling_pipeline_ids,

|

||||

downsampling_pipeline_ids,

|

||||

)) = self.view_query.get_manual(world, view_entity)

|

||||

else { return Ok(()) };

|

||||

|

||||

let (

|

||||

Some(uniforms),

|

||||

Some(downsampling_first_pipeline),

|

||||

Some(downsampling_pipeline),

|

||||

Some(upsampling_pipeline),

|

||||

Some(upsampling_final_pipeline),

|

||||

) = (

|

||||

uniforms.binding(),

|

||||

pipeline_cache.get_render_pipeline(downsampling_pipeline_ids.first),

|

||||

pipeline_cache.get_render_pipeline(downsampling_pipeline_ids.main),

|

||||

pipeline_cache.get_render_pipeline(upsampling_pipeline_ids.id_main),

|

||||

pipeline_cache.get_render_pipeline(upsampling_pipeline_ids.id_final),

|

||||

) else { return Ok(()) };

|

||||

|

||||

render_context.command_encoder().push_debug_group("bloom");

|

||||

|

||||

// First downsample pass

|

||||

{

|

||||

let view = &BloomTextures::texture_view(&textures.texture_a, 0);

|

||||

let mut prefilter_pass =

|

||||

let downsampling_first_bind_group =

|

||||

render_context

|

||||

.render_device()

|

||||

.create_bind_group(&BindGroupDescriptor {

|

||||

label: Some("bloom_downsampling_first_bind_group"),

|

||||

layout: &downsampling_pipeline_res.bind_group_layout,

|

||||

entries: &[

|

||||

BindGroupEntry {

|

||||

binding: 0,

|

||||

// Read from main texture directly

|

||||

resource: BindingResource::TextureView(view_target.main_texture()),

|

||||

},

|

||||

BindGroupEntry {

|

||||

binding: 1,

|

||||

resource: BindingResource::Sampler(&bind_groups.sampler),

|

||||

},

|

||||

BindGroupEntry {

|

||||

binding: 2,

|

||||

resource: uniforms.clone(),

|

||||

},

|

||||

],

|

||||

});

|

||||

|

||||

let view = &bloom_texture.view(0);

|

||||

let mut downsampling_first_pass =

|

||||

render_context.begin_tracked_render_pass(RenderPassDescriptor {

|

||||

label: Some("bloom_prefilter_pass"),

|

||||

label: Some("bloom_downsampling_first_pass"),

|

||||

color_attachments: &[Some(RenderPassColorAttachment {

|

||||

view,

|

||||

resolve_target: None,

|

||||

|

|

@ -247,17 +240,18 @@ impl Node for BloomNode {

|

|||

})],

|

||||

depth_stencil_attachment: None,

|

||||

});

|

||||

prefilter_pass.set_render_pipeline(downsampling_prefilter_pipeline);

|

||||

prefilter_pass.set_bind_group(

|

||||

downsampling_first_pass.set_render_pipeline(downsampling_first_pipeline);

|

||||

downsampling_first_pass.set_bind_group(

|

||||

0,

|

||||

&bind_groups.prefilter_bind_group,

|

||||

&downsampling_first_bind_group,

|

||||

&[uniform_index.index()],

|

||||

);

|

||||

prefilter_pass.draw(0..3, 0..1);

|

||||

downsampling_first_pass.draw(0..3, 0..1);

|

||||

}

|

||||

|

||||

for mip in 1..textures.mip_count {

|

||||

let view = &BloomTextures::texture_view(&textures.texture_a, mip);

|

||||

// Other downsample passes

|

||||

for mip in 1..bloom_texture.mip_count {

|

||||

let view = &bloom_texture.view(mip);

|

||||

let mut downsampling_pass =

|

||||

render_context.begin_tracked_render_pass(RenderPassDescriptor {

|

||||

label: Some("bloom_downsampling_pass"),

|

||||

|

|

@ -277,27 +271,40 @@ impl Node for BloomNode {

|

|||

downsampling_pass.draw(0..3, 0..1);

|

||||

}

|

||||

|

||||

for mip in (1..textures.mip_count).rev() {

|

||||

let view = &BloomTextures::texture_view(&textures.texture_b, mip - 1);

|

||||

// Upsample passes except the final one

|

||||

for mip in (1..bloom_texture.mip_count).rev() {

|

||||

let view = &bloom_texture.view(mip - 1);

|

||||

let mut upsampling_pass =

|

||||

render_context.begin_tracked_render_pass(RenderPassDescriptor {

|

||||

label: Some("bloom_upsampling_pass"),

|

||||

color_attachments: &[Some(RenderPassColorAttachment {

|

||||

view,

|

||||

resolve_target: None,

|

||||

ops: Operations::default(),

|

||||

ops: Operations {

|

||||

load: LoadOp::Load,

|

||||

store: true,

|

||||

},

|

||||

})],

|

||||

depth_stencil_attachment: None,

|

||||

});

|

||||

upsampling_pass.set_render_pipeline(upsampling_pipeline);

|

||||

upsampling_pass.set_bind_group(

|

||||

0,

|

||||

&bind_groups.upsampling_bind_groups[mip as usize - 1],

|

||||

&bind_groups.upsampling_bind_groups[(bloom_texture.mip_count - mip - 1) as usize],

|

||||

&[uniform_index.index()],

|

||||

);

|

||||

let blend = compute_blend_factor(

|

||||

bloom_settings,

|

||||

mip as f32,

|

||||

(bloom_texture.mip_count - 1) as f32,

|

||||

);

|

||||

upsampling_pass.set_blend_constant(Color::rgb_linear(blend, blend, blend));

|

||||

upsampling_pass.draw(0..3, 0..1);

|

||||

}

|

||||

|

||||

// Final upsample pass

|

||||

// This is very similar to the above upsampling passes with the only difference

|

||||

// being the pipeline (which itself is barely different) and the color attachment

|

||||

{

|

||||

let mut upsampling_final_pass =

|

||||

render_context.begin_tracked_render_pass(RenderPassDescriptor {

|

||||

|

|

@ -313,245 +320,36 @@ impl Node for BloomNode {

|

|||

upsampling_final_pass.set_render_pipeline(upsampling_final_pipeline);

|

||||

upsampling_final_pass.set_bind_group(

|

||||

0,

|

||||

&bind_groups.upsampling_final_bind_group,

|

||||

&bind_groups.upsampling_bind_groups[(bloom_texture.mip_count - 1) as usize],

|

||||

&[uniform_index.index()],

|

||||

);

|

||||

if let Some(viewport) = camera.viewport.as_ref() {

|

||||

upsampling_final_pass.set_camera_viewport(viewport);

|

||||

}

|

||||

let blend =

|

||||

compute_blend_factor(bloom_settings, 0.0, (bloom_texture.mip_count - 1) as f32);

|

||||

upsampling_final_pass.set_blend_constant(Color::rgb_linear(blend, blend, blend));

|

||||

upsampling_final_pass.draw(0..3, 0..1);

|

||||

}

|

||||

|

||||

render_context.command_encoder().pop_debug_group();

|

||||

|

||||

Ok(())

|

||||

}

|

||||

}

|

||||

|

||||

#[derive(Resource)]

|

||||

struct BloomPipelines {

|

||||

downsampling_prefilter_pipeline: CachedRenderPipelineId,

|

||||

downsampling_pipeline: CachedRenderPipelineId,

|

||||

upsampling_pipeline: CachedRenderPipelineId,

|

||||

upsampling_final_pipeline: CachedRenderPipelineId,

|

||||

sampler: Sampler,

|

||||

downsampling_bind_group_layout: BindGroupLayout,

|

||||

upsampling_bind_group_layout: BindGroupLayout,

|

||||

}

|

||||

|

||||

impl FromWorld for BloomPipelines {

|

||||

fn from_world(world: &mut World) -> Self {

|

||||

let render_device = world.resource::<RenderDevice>();

|

||||

|

||||

let sampler = render_device.create_sampler(&SamplerDescriptor {

|

||||

min_filter: FilterMode::Linear,

|

||||

mag_filter: FilterMode::Linear,

|

||||

address_mode_u: AddressMode::ClampToEdge,

|

||||

address_mode_v: AddressMode::ClampToEdge,

|

||||

..Default::default()

|

||||

});

|

||||

|

||||

let downsampling_bind_group_layout =

|

||||

render_device.create_bind_group_layout(&BindGroupLayoutDescriptor {

|

||||

label: Some("bloom_downsampling_bind_group_layout"),

|

||||

entries: &[

|

||||

// Upsampled input texture (downsampled for final upsample)

|

||||

BindGroupLayoutEntry {

|

||||

binding: 0,

|

||||

ty: BindingType::Texture {

|

||||

sample_type: TextureSampleType::Float { filterable: true },

|

||||

view_dimension: TextureViewDimension::D2,

|

||||

multisampled: false,

|

||||

},

|

||||

visibility: ShaderStages::FRAGMENT,

|

||||

count: None,

|

||||

},

|

||||

// Sampler

|

||||

BindGroupLayoutEntry {

|

||||

binding: 1,

|

||||

ty: BindingType::Sampler(SamplerBindingType::Filtering),

|

||||

visibility: ShaderStages::FRAGMENT,

|

||||

count: None,

|

||||

},

|

||||

// Bloom settings

|

||||

BindGroupLayoutEntry {

|

||||

binding: 2,

|

||||

ty: BindingType::Buffer {

|

||||

ty: BufferBindingType::Uniform,

|

||||

has_dynamic_offset: true,

|

||||

min_binding_size: Some(BloomUniform::min_size()),

|

||||

},

|

||||

visibility: ShaderStages::FRAGMENT,

|

||||

count: None,

|

||||

},

|

||||

],

|

||||

});

|

||||

|

||||

let upsampling_bind_group_layout =

|

||||

render_device.create_bind_group_layout(&BindGroupLayoutDescriptor {

|

||||

label: Some("bloom_upsampling_bind_group_layout"),

|

||||

entries: &[

|

||||

// Downsampled input texture

|

||||

BindGroupLayoutEntry {

|

||||

binding: 0,

|

||||

ty: BindingType::Texture {

|

||||

sample_type: TextureSampleType::Float { filterable: true },

|

||||

view_dimension: TextureViewDimension::D2,

|

||||

multisampled: false,

|

||||

},

|

||||

visibility: ShaderStages::FRAGMENT,

|

||||

count: None,

|

||||

},

|

||||

// Sampler

|

||||

BindGroupLayoutEntry {

|

||||

binding: 1,

|

||||

ty: BindingType::Sampler(SamplerBindingType::Filtering),

|

||||

visibility: ShaderStages::FRAGMENT,

|

||||

count: None,

|

||||

},

|

||||

// Bloom settings

|

||||

BindGroupLayoutEntry {

|

||||

binding: 2,

|

||||

ty: BindingType::Buffer {

|

||||

ty: BufferBindingType::Uniform,

|

||||

has_dynamic_offset: true,

|

||||

min_binding_size: Some(BloomUniform::min_size()),

|

||||

},

|

||||

visibility: ShaderStages::FRAGMENT,

|

||||

count: None,

|

||||

},

|

||||

// Upsampled input texture

|

||||

BindGroupLayoutEntry {

|

||||

binding: 3,

|

||||

ty: BindingType::Texture {

|

||||

sample_type: TextureSampleType::Float { filterable: true },

|

||||

view_dimension: TextureViewDimension::D2,

|

||||

multisampled: false,

|

||||

},

|

||||

visibility: ShaderStages::FRAGMENT,

|

||||

count: None,

|

||||

},

|

||||

],

|

||||

});

|

||||

|

||||

let pipeline_cache = world.resource::<PipelineCache>();

|

||||

|

||||

let downsampling_prefilter_pipeline =

|

||||

pipeline_cache.queue_render_pipeline(RenderPipelineDescriptor {

|

||||

label: Some("bloom_downsampling_prefilter_pipeline".into()),

|

||||

layout: vec![downsampling_bind_group_layout.clone()],

|

||||

vertex: fullscreen_shader_vertex_state(),

|

||||

fragment: Some(FragmentState {

|

||||

shader: BLOOM_SHADER_HANDLE.typed::<Shader>(),

|

||||

shader_defs: vec![],

|

||||

entry_point: "downsample_prefilter".into(),

|

||||

targets: vec![Some(ColorTargetState {

|

||||

format: ViewTarget::TEXTURE_FORMAT_HDR,

|

||||

blend: None,

|

||||

write_mask: ColorWrites::ALL,

|

||||

})],

|

||||

}),

|

||||

primitive: PrimitiveState::default(),

|

||||

depth_stencil: None,

|

||||

multisample: MultisampleState::default(),

|

||||

push_constant_ranges: Vec::new(),

|

||||

});

|

||||

|

||||

let downsampling_pipeline =

|

||||

pipeline_cache.queue_render_pipeline(RenderPipelineDescriptor {

|

||||

label: Some("bloom_downsampling_pipeline".into()),

|

||||

layout: vec![downsampling_bind_group_layout.clone()],

|

||||

vertex: fullscreen_shader_vertex_state(),

|

||||

fragment: Some(FragmentState {

|

||||

shader: BLOOM_SHADER_HANDLE.typed::<Shader>(),

|

||||

shader_defs: vec![],

|

||||

entry_point: "downsample".into(),

|

||||

targets: vec![Some(ColorTargetState {

|

||||

format: ViewTarget::TEXTURE_FORMAT_HDR,

|

||||

blend: None,

|

||||

write_mask: ColorWrites::ALL,

|

||||

})],

|

||||

}),

|

||||

primitive: PrimitiveState::default(),

|

||||

depth_stencil: None,

|

||||

multisample: MultisampleState::default(),

|

||||

push_constant_ranges: Vec::new(),

|

||||

});

|

||||

|

||||

let upsampling_pipeline = pipeline_cache.queue_render_pipeline(RenderPipelineDescriptor {

|

||||

label: Some("bloom_upsampling_pipeline".into()),

|

||||

layout: vec![upsampling_bind_group_layout.clone()],

|

||||

vertex: fullscreen_shader_vertex_state(),

|

||||

fragment: Some(FragmentState {

|

||||

shader: BLOOM_SHADER_HANDLE.typed::<Shader>(),

|

||||

shader_defs: vec![],

|

||||

entry_point: "upsample".into(),

|

||||

targets: vec![Some(ColorTargetState {

|

||||

format: ViewTarget::TEXTURE_FORMAT_HDR,

|

||||

blend: None,

|

||||

write_mask: ColorWrites::ALL,

|

||||

})],

|

||||

}),

|

||||

primitive: PrimitiveState::default(),

|

||||

depth_stencil: None,

|

||||

multisample: MultisampleState::default(),

|

||||

push_constant_ranges: Vec::new(),

|

||||

});

|

||||

|

||||

let upsampling_final_pipeline =

|

||||

pipeline_cache.queue_render_pipeline(RenderPipelineDescriptor {

|

||||

label: Some("bloom_upsampling_final_pipeline".into()),

|

||||

layout: vec![downsampling_bind_group_layout.clone()],

|

||||

vertex: fullscreen_shader_vertex_state(),

|

||||

fragment: Some(FragmentState {

|

||||

shader: BLOOM_SHADER_HANDLE.typed::<Shader>(),

|

||||

shader_defs: vec![],

|

||||

entry_point: "upsample_final".into(),

|

||||

targets: vec![Some(ColorTargetState {

|

||||

format: ViewTarget::TEXTURE_FORMAT_HDR,

|

||||

blend: Some(BlendState {

|

||||

color: BlendComponent {

|

||||

src_factor: BlendFactor::One,

|

||||

dst_factor: BlendFactor::One,

|

||||

operation: BlendOperation::Add,

|

||||

},

|

||||

alpha: BlendComponent {

|

||||

src_factor: BlendFactor::One,

|

||||

dst_factor: BlendFactor::One,

|

||||

operation: BlendOperation::Max,

|

||||

},

|

||||

}),

|

||||

write_mask: ColorWrites::ALL,

|

||||

})],

|

||||

}),

|

||||

primitive: PrimitiveState::default(),

|

||||

depth_stencil: None,

|

||||

multisample: MultisampleState::default(),

|

||||

push_constant_ranges: Vec::new(),

|

||||

});

|

||||

|

||||

BloomPipelines {

|

||||

downsampling_prefilter_pipeline,

|

||||

downsampling_pipeline,

|

||||

upsampling_pipeline,

|

||||

upsampling_final_pipeline,

|

||||

sampler,

|

||||

downsampling_bind_group_layout,

|

||||

upsampling_bind_group_layout,

|

||||

}

|

||||

}

|

||||

}

|

||||

|

||||

#[derive(Component)]

|

||||

struct BloomTextures {

|

||||

texture_a: CachedTexture,

|

||||

texture_b: CachedTexture,

|

||||

struct BloomTexture {

|

||||

// First mip is half the screen resolution, successive mips are half the previous

|

||||

texture: CachedTexture,

|

||||

mip_count: u32,

|

||||

}

|

||||

|

||||

impl BloomTextures {

|

||||

fn texture_view(texture: &CachedTexture, base_mip_level: u32) -> TextureView {

|

||||

texture.texture.create_view(&TextureViewDescriptor {

|

||||

impl BloomTexture {

|

||||

fn view(&self, base_mip_level: u32) -> TextureView {

|

||||

self.texture.texture.create_view(&TextureViewDescriptor {

|

||||

base_mip_level,

|

||||

mip_level_count: Some(unsafe { NonZeroU32::new_unchecked(1) }),

|

||||

mip_level_count: NonZeroU32::new(1),

|

||||

..Default::default()

|

||||

})

|

||||

}

|

||||

|

|

@ -561,203 +359,144 @@ fn prepare_bloom_textures(

|

|||

mut commands: Commands,

|

||||

mut texture_cache: ResMut<TextureCache>,

|

||||

render_device: Res<RenderDevice>,

|

||||

views: Query<(Entity, &ExtractedCamera), With<BloomUniform>>,

|

||||

views: Query<(Entity, &ExtractedCamera), With<BloomSettings>>,

|

||||

) {

|

||||

let mut texture_as = HashMap::default();

|

||||

let mut texture_bs = HashMap::default();

|

||||

for (entity, camera) in &views {

|

||||

if let Some(UVec2 {

|

||||

x: width,

|

||||

y: height,

|

||||

}) = camera.physical_viewport_size

|

||||

{

|

||||

let min_view = width.min(height) / 2;

|

||||

let mip_count = calculate_mip_count(min_view);

|

||||

// How many times we can halve the resolution minus one so we don't go unnecessarily low

|

||||

let mip_count = MAX_MIP_DIMENSION.ilog2().max(2) - 1;

|

||||

let mip_height_ratio = MAX_MIP_DIMENSION as f32 / height as f32;

|

||||

|

||||

let mut texture_descriptor = TextureDescriptor {

|

||||

label: None,

|

||||

let texture_descriptor = TextureDescriptor {

|

||||

label: Some("bloom_texture"),

|

||||

size: Extent3d {

|

||||

width: (width / 2).max(1),

|

||||

height: (height / 2).max(1),

|

||||

width: ((width as f32 * mip_height_ratio).round() as u32).max(1),

|

||||

height: ((height as f32 * mip_height_ratio).round() as u32).max(1),

|

||||

depth_or_array_layers: 1,

|

||||

},

|

||||

mip_level_count: mip_count,

|

||||

sample_count: 1,

|

||||

dimension: TextureDimension::D2,

|

||||

format: ViewTarget::TEXTURE_FORMAT_HDR,

|

||||

format: BLOOM_TEXTURE_FORMAT,

|

||||

usage: TextureUsages::RENDER_ATTACHMENT | TextureUsages::TEXTURE_BINDING,

|

||||

view_formats: &[],

|

||||

};

|

||||

|

||||

texture_descriptor.label = Some("bloom_texture_a");

|

||||

let texture_a = texture_as

|

||||

.entry(camera.target.clone())

|

||||

.or_insert_with(|| texture_cache.get(&render_device, texture_descriptor.clone()))

|

||||

.clone();

|

||||

|

||||

texture_descriptor.label = Some("bloom_texture_b");

|

||||

let texture_b = texture_bs

|

||||

.entry(camera.target.clone())

|

||||

.or_insert_with(|| texture_cache.get(&render_device, texture_descriptor))

|

||||

.clone();

|

||||

|

||||

commands.entity(entity).insert(BloomTextures {

|

||||

texture_a,

|

||||

texture_b,

|

||||

commands.entity(entity).insert(BloomTexture {

|

||||

texture: texture_cache.get(&render_device, texture_descriptor),

|

||||

mip_count,

|

||||

});

|

||||

}

|

||||

}

|

||||

}
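The new texture sizing in `prepare_bloom_textures` can be tried in isolation. The sketch below is illustrative only: the value of `MAX_MIP_DIMENSION` is assumed (the constant is defined outside this hunk), and `bloom_texture_layout` is a hypothetical helper name, not part of the commit. It repeats the arithmetic from the added lines: the mip count comes from the constant alone, and both dimensions are scaled by the ratio of the constant to the viewport height.

```rust
/// Assumed value for illustration; the real constant is defined elsewhere in the commit.
const MAX_MIP_DIMENSION: u32 = 512;

/// Hypothetical helper: returns (width, height, mip_count) for the bloom texture.
fn bloom_texture_layout(width: u32, height: u32) -> (u32, u32, u32) {
    // Number of halvings available, minus one so the smallest mip is not needlessly tiny.
    let mip_count = MAX_MIP_DIMENSION.ilog2().max(2) - 1;
    // Scale factor that maps the viewport height onto MAX_MIP_DIMENSION.
    let mip_height_ratio = MAX_MIP_DIMENSION as f32 / height as f32;
    let w = ((width as f32 * mip_height_ratio).round() as u32).max(1);
    let h = ((height as f32 * mip_height_ratio).round() as u32).max(1);
    (w, h, mip_count)
}

fn main() {
    let (w, h, mips) = bloom_texture_layout(1920, 1080);
    println!("{w}x{h}, {mips} mips");
}
```

Under the assumed constant, the bloom texture height no longer depends on the viewport height, so the mip count is the same for every view.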

|

||||

|

||||

/// The uniform struct extracted from [`BloomSettings`] attached to a [`Camera`].

|

||||

/// Will be available for use in the Bloom shader.

|

||||

#[doc(hidden)]

|

||||

#[derive(Component, ShaderType, Clone)]

|

||||

pub struct BloomUniform {

|

||||

threshold: f32,

|

||||

knee: f32,

|

||||

scale: f32,

|

||||

intensity: f32,

|

||||

viewport: Vec4,

|

||||

}

|

||||

|

||||

#[derive(Component)]

|

||||

struct BloomBindGroups {

|

||||

prefilter_bind_group: BindGroup,

|

||||

downsampling_bind_groups: Box<[BindGroup]>,

|

||||

upsampling_bind_groups: Box<[BindGroup]>,

|

||||

upsampling_final_bind_group: BindGroup,

|

||||

sampler: Sampler,

|

||||

}

|

||||

|

||||

fn queue_bloom_bind_groups(

|

||||

mut commands: Commands,

|

||||

render_device: Res<RenderDevice>,

|

||||

pipelines: Res<BloomPipelines>,

|

||||

uniforms: Res<ComponentUniforms<BloomUniform>>,

|

||||

views: Query<(Entity, &ViewTarget, &BloomTextures)>,

|

||||

downsampling_pipeline: Res<BloomDownsamplingPipeline>,

|

||||

upsampling_pipeline: Res<BloomUpsamplingPipeline>,

|

||||

views: Query<(Entity, &BloomTexture)>,

|

||||

uniforms: Res<ComponentUniforms<BloomUniforms>>,

|

||||

) {

|

||||

if let Some(uniforms) = uniforms.binding() {

|

||||

for (entity, view_target, textures) in &views {

|

||||

let prefilter_bind_group = render_device.create_bind_group(&BindGroupDescriptor {

|

||||

label: Some("bloom_prefilter_bind_group"),

|

||||

layout: &pipelines.downsampling_bind_group_layout,

|

||||

entries: &[

|

||||

BindGroupEntry {

|

||||

binding: 0,

|

||||

resource: BindingResource::TextureView(view_target.main_texture()),

|

||||

},

|

||||

BindGroupEntry {

|

||||

binding: 1,

|

||||

resource: BindingResource::Sampler(&pipelines.sampler),

|

||||

},

|

||||

BindGroupEntry {

|

||||

binding: 2,

|

||||

resource: uniforms.clone(),

|

||||

},

|

||||

],

|

||||

});

|

||||

let sampler = &downsampling_pipeline.sampler;

|

||||

|

||||

let bind_group_count = textures.mip_count as usize - 1;

|

||||

for (entity, bloom_texture) in &views {

|

||||

let bind_group_count = bloom_texture.mip_count as usize - 1;

|

||||

|

||||

let mut downsampling_bind_groups = Vec::with_capacity(bind_group_count);

|

||||

for mip in 1..textures.mip_count {

|

||||

let bind_group = render_device.create_bind_group(&BindGroupDescriptor {

|

||||

for mip in 1..bloom_texture.mip_count {

|

||||

downsampling_bind_groups.push(render_device.create_bind_group(&BindGroupDescriptor {

|

||||

label: Some("bloom_downsampling_bind_group"),

|

||||

layout: &pipelines.downsampling_bind_group_layout,

|

||||

layout: &downsampling_pipeline.bind_group_layout,

|

||||

entries: &[

|

||||

BindGroupEntry {

|

||||

binding: 0,

|

||||

resource: BindingResource::TextureView(&BloomTextures::texture_view(

|

||||

&textures.texture_a,

|

||||

mip - 1,

|

||||

)),

|

||||

resource: BindingResource::TextureView(&bloom_texture.view(mip - 1)),

|

||||

},

|

||||

BindGroupEntry {

|

||||

binding: 1,

|

||||

resource: BindingResource::Sampler(&pipelines.sampler),

|

||||

resource: BindingResource::Sampler(sampler),

|

||||

},

|

||||

BindGroupEntry {

|

||||

binding: 2,

|

||||

resource: uniforms.clone(),

|

||||

resource: uniforms.binding().unwrap(),

|

||||

},

|

||||

],

|

||||

});

|

||||

|

||||

downsampling_bind_groups.push(bind_group);

|

||||

}));

|

||||

}

|

||||

|

||||

let mut upsampling_bind_groups = Vec::with_capacity(bind_group_count);

|

||||

for mip in 1..textures.mip_count {

|

||||

let up = BloomTextures::texture_view(&textures.texture_a, mip - 1);

|

||||

let org = BloomTextures::texture_view(

|

||||

if mip == textures.mip_count - 1 {

|

||||

&textures.texture_a

|

||||

} else {

|

||||

&textures.texture_b

|

||||

},

|

||||

mip,

|

||||

);

|

||||

|

||||

let bind_group = render_device.create_bind_group(&BindGroupDescriptor {

|

||||

for mip in (0..bloom_texture.mip_count).rev() {

|

||||

upsampling_bind_groups.push(render_device.create_bind_group(&BindGroupDescriptor {

|

||||

label: Some("bloom_upsampling_bind_group"),

|

||||

layout: &pipelines.upsampling_bind_group_layout,

|

||||

layout: &upsampling_pipeline.bind_group_layout,

|

||||

entries: &[

|

||||

BindGroupEntry {

|

||||

binding: 0,

|

||||

resource: BindingResource::TextureView(&org),

|

||||

resource: BindingResource::TextureView(&bloom_texture.view(mip)),

|

||||

},

|

||||

BindGroupEntry {

|

||||

binding: 1,

|

||||

resource: BindingResource::Sampler(&pipelines.sampler),

|

||||

resource: BindingResource::Sampler(sampler),

|

||||

},

|

||||

BindGroupEntry {

|

||||

binding: 2,

|

||||

resource: uniforms.clone(),

|

||||

},

|

||||

BindGroupEntry {

|

||||

binding: 3,

|

||||

resource: BindingResource::TextureView(&up),

|

||||

resource: uniforms.binding().unwrap(),

|

||||

},

|

||||

],

|

||||

});

|

||||

|

||||

upsampling_bind_groups.push(bind_group);

|

||||

}));

|

||||

}

|

||||

|

||||

let upsampling_final_bind_group =

|

||||

render_device.create_bind_group(&BindGroupDescriptor {

|

||||

label: Some("bloom_upsampling_final_bind_group"),

|

||||

layout: &pipelines.downsampling_bind_group_layout,

|

||||

entries: &[

|

||||

BindGroupEntry {

|

||||

binding: 0,

|

||||

resource: BindingResource::TextureView(&BloomTextures::texture_view(

|

||||

&textures.texture_b,

|

||||

0,

|

||||

)),

|

||||

},

|

||||

BindGroupEntry {

|

||||

binding: 1,

|

||||

resource: BindingResource::Sampler(&pipelines.sampler),

|

||||

},

|

||||

BindGroupEntry {

|

||||

binding: 2,

|

||||

resource: uniforms.clone(),

|

||||

},

|

||||

],

|

||||

});

|

||||

|

||||

commands.entity(entity).insert(BloomBindGroups {

|

||||

prefilter_bind_group,

|

||||

downsampling_bind_groups: downsampling_bind_groups.into_boxed_slice(),

|

||||

upsampling_bind_groups: upsampling_bind_groups.into_boxed_slice(),

|

||||

upsampling_final_bind_group,

|

||||

sampler: sampler.clone(),

|

||||

});

|

||||

}

|

||||

}

|

||||

}
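The added code builds the upsampling bind groups while iterating mips from last to first, and the render node later indexes them with `mip_count - mip - 1`. A minimal sketch of that index mapping follows. `upsampling_bind_group_index` is a hypothetical helper introduced only for illustration, and plain integers stand in for the actual bind groups.

```rust
/// Hypothetical helper: index of the bind group that reads from `mip`,
/// given that bind groups are pushed for mips `mip_count - 1` down to `0`.
fn upsampling_bind_group_index(mip_count: u32, mip: u32) -> usize {
    (mip_count - mip - 1) as usize
}

fn main() {
    let mip_count = 4;
    // Stand-in for the bind group list: the mip each entry reads, in push order.
    let pushed: Vec<u32> = (0..mip_count).rev().collect();

    // Intermediate upsample passes: read mip `mip`, write into mip `mip - 1`.
    for mip in (1..mip_count).rev() {
        let idx = upsampling_bind_group_index(mip_count, mip);
        assert_eq!(pushed[idx], mip);
        println!("pass reads mip {mip} (bind group {idx}), writes mip {}", mip - 1);
    }

    // Final pass: reads mip 0 and writes onto the main texture.
    let idx = upsampling_bind_group_index(mip_count, 0);
    assert_eq!(pushed[idx], 0);
}
```

This is why the final pass in the diff uses index `mip_count - 1`: it is the last entry pushed, the one that samples mip 0.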

|

||||

|

||||

fn calculate_mip_count(min_view: u32) -> u32 {

|

||||

((min_view as f32).log2().round() as i32 - 3).max(1) as u32

|

||||

/// Calculates blend intensities of blur pyramid levels

|

||||

/// during the upsampling + compositing stage.

|

||||

///

|

||||

/// The function assumes all pyramid levels are upsampled and

|

||||

/// blended into higher frequency ones using this function to

|

||||

/// calculate blend levels every time. The final (highest frequency)

|

||||

/// pyramid level is not blended into anything, therefore this function

|

||||

/// is not applied to it. As a result, the *mip* parameter of 0 indicates

|

||||

/// the second-highest frequency pyramid level (in our case that is the

|

||||

/// 0th mip of the bloom texture with the original image being the

|

||||

/// actual highest frequency level).

|

||||

///

|

||||

/// Parameters:

|

||||