2022-05-16 13:53:20 +00:00

|

|

|

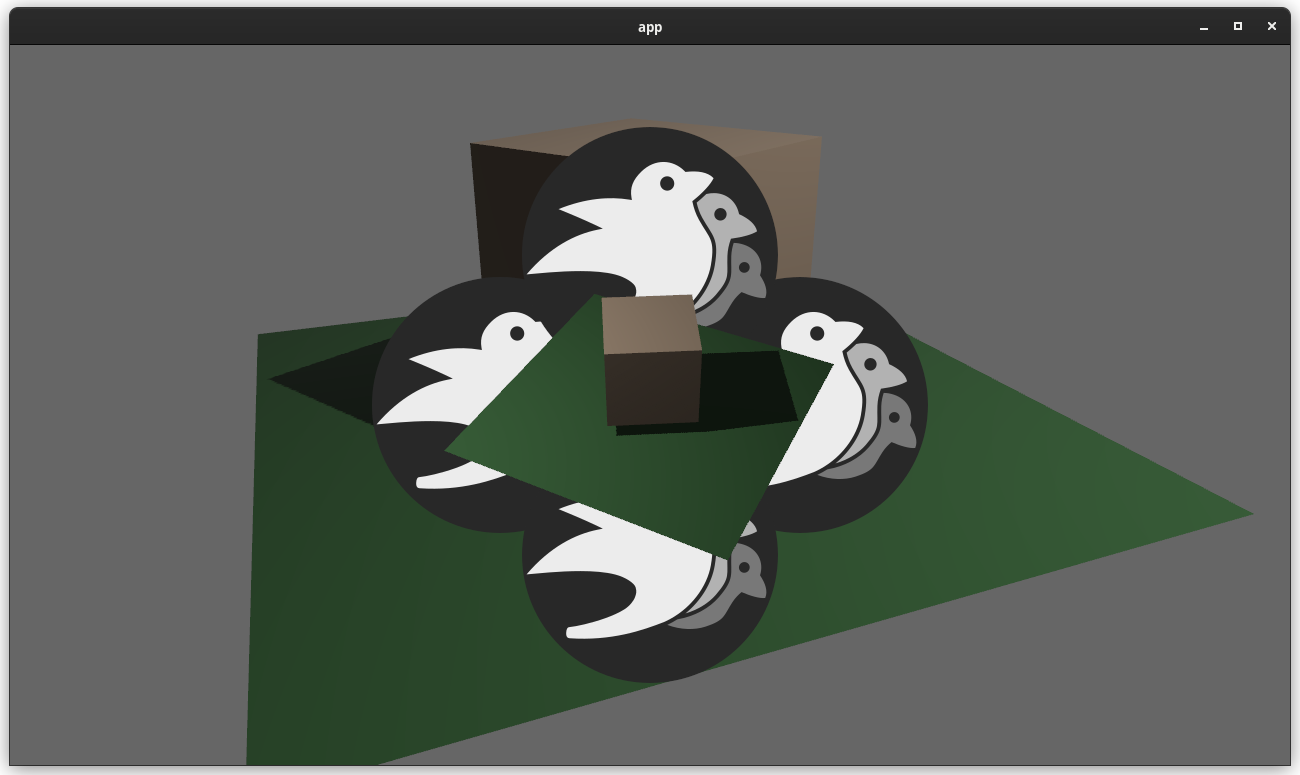

//! Simple benchmark to test rendering many point lights.
//! Run with `WGPU_SETTINGS_PRIO=webgl2` to restrict to uniform buffers and max 256 lights.

2022-08-30 19:52:11 +00:00

use std::f64::consts::PI;

2022-04-07 16:16:35 +00:00

use bevy::{
    diagnostic::{FrameTimeDiagnosticsPlugin, LogDiagnosticsPlugin},
    math::{DVec2, DVec3},
    pbr::{ExtractedPointLight, GlobalLightMeta},
    prelude::*,

2023-03-17 18:45:34 -07:00

    render::{camera::ScalingMode, Render, RenderApp, RenderSet},

2023-01-19 00:38:28 +00:00

    window::{PresentMode, WindowPlugin},

2022-04-07 16:16:35 +00:00

};

2022-04-15 02:53:20 +00:00

use rand::{thread_rng, Rng};

2022-04-07 16:16:35 +00:00

fn main() {
    App::new()

Plugins own their settings. Rework PluginGroup trait. (#6336)

# Objective

Fixes #5884 #2879

Alternative to #2988 #5885 #2886

"Immutable" Plugin settings are currently represented as normal ECS resources, which are read as part of plugin init. This presents a number of problems:

1. If a user inserts the plugin settings resource after the plugin is initialized, it will be silently ignored (and the defaults will be used instead).

2. Users can modify the plugin settings resource after the plugin has been initialized. This creates a false sense of control over settings that can no longer be changed.

(1) and (2) are especially problematic and confusing for the `WindowDescriptor` resource, but this is a general problem.

## Solution

Immutable Plugin settings now live on each Plugin struct (e.g. `WindowPlugin`). PluginGroups have been reworked to support overriding plugin values. This also removes the need for the `add_plugins_with` API, as the `add_plugins` API can use the builder pattern directly. Settings that can be changed at runtime continue to be represented as ECS resources.

Plugins are now configured like this:

```rust
app.add_plugin(AssetPlugin {
    watch_for_changes: true,
    ..default()
})
```

PluginGroups are now configured like this:

```rust
app.add_plugins(DefaultPlugins
    .set(AssetPlugin {
        watch_for_changes: true,
        ..default()
    })
)
```

This is an alternative to #2988, which is similar. But I personally prefer this solution for a couple of reasons:

* ~~#2988 doesn't solve (1)~~ #2988 does solve (1) and will panic in that case. I was wrong!

* This PR directly ties plugin settings to Plugin types in a 1:1 relationship, rather than a loose "setup resource" <-> plugin coupling (where the setup resource is consumed by the first plugin that uses it).

* I'm not a huge fan of overloading the ECS resource concept and implementation for something that has very different use cases and constraints.

## Changelog

- PluginGroups can now be configured directly using the builder pattern. Individual plugin values can be overridden by using `plugin_group.set(SomePlugin {})`, which enables overriding default plugin values.

- `WindowDescriptor` plugin settings have been moved to `WindowPlugin` and `AssetServerSettings` have been moved to `AssetPlugin`

- `app.add_plugins_with` has been replaced by using `add_plugins` with the builder pattern.

## Migration Guide

The `WindowDescriptor` settings have been moved from a resource to `WindowPlugin::window`:

```rust
// Old (Bevy 0.8)
app
    .insert_resource(WindowDescriptor {
        width: 400.0,
        ..default()
    })
    .add_plugins(DefaultPlugins);

// New (Bevy 0.9)
app.add_plugins(DefaultPlugins.set(WindowPlugin {
    window: WindowDescriptor {
        width: 400.0,
        ..default()
    },
    ..default()
}));
```

The `AssetServerSettings` resource has been removed in favor of direct `AssetPlugin` configuration:

```rust
// Old (Bevy 0.8)
app
    .insert_resource(AssetServerSettings {
        watch_for_changes: true,
        ..default()
    })
    .add_plugins(DefaultPlugins);

// New (Bevy 0.9)
app.add_plugins(DefaultPlugins.set(AssetPlugin {
    watch_for_changes: true,
    ..default()
}));
```

`add_plugins_with` has been replaced by `add_plugins` in combination with the builder pattern:

```rust

// Old (Bevy 0.8)

app.add_plugins_with(DefaultPlugins, |group| group.disable::<AssetPlugin>());

// New (Bevy 0.9)

app.add_plugins(DefaultPlugins.build().disable::<AssetPlugin>());

```
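The override mechanism described above can be modeled in plain Rust without Bevy. This is a hypothetical sketch, not Bevy's implementation: `Plugin`, `PluginGroupBuilder`, `default_plugins`, `set`, and `get` are simplified stand-ins invented for illustration, and the `width` default is arbitrary. Each plugin value carries its own settings, and `set` swaps in a configured plugin of the same type before anything is built, so there is no settings resource that could be inserted too late.

```rust
use std::any::Any;

/// Hypothetical stand-in for a plugin: its settings live on the value itself.
trait Plugin: Any {
    fn as_any(&self) -> &dyn Any;
}

#[derive(Debug, Default, Clone, PartialEq)]
struct AssetPlugin {
    watch_for_changes: bool,
}

#[derive(Debug, Clone, PartialEq)]
struct WindowPlugin {
    width: f32,
}

impl Default for WindowPlugin {
    fn default() -> Self {
        // Arbitrary default chosen for this sketch.
        WindowPlugin { width: 1280.0 }
    }
}

impl Plugin for AssetPlugin {
    fn as_any(&self) -> &dyn Any {
        self
    }
}

impl Plugin for WindowPlugin {
    fn as_any(&self) -> &dyn Any {
        self
    }
}

/// Hypothetical builder: an ordered list of plugins, each starting with default settings.
struct PluginGroupBuilder {
    plugins: Vec<Box<dyn Plugin>>,
}

impl PluginGroupBuilder {
    /// Stand-in for a `DefaultPlugins`-like group.
    fn default_plugins() -> Self {
        let plugins: Vec<Box<dyn Plugin>> = vec![
            Box::new(AssetPlugin::default()),
            Box::new(WindowPlugin::default()),
        ];
        PluginGroupBuilder { plugins }
    }

    /// Replace the plugin of type `T` in place, keeping its position in the group.
    /// Panics if the group has no plugin of that type.
    fn set<T: Plugin>(mut self, plugin: T) -> Self {
        let slot = self
            .plugins
            .iter_mut()
            .find(|p| p.as_any().is::<T>())
            .expect("plugin is not part of this group");
        *slot = Box::new(plugin);
        self
    }

    /// Read back the settings that plugin type `T` would be built with.
    fn get<T: Plugin>(&self) -> Option<&T> {
        self.plugins
            .iter()
            .find_map(|p| p.as_any().downcast_ref::<T>())
    }
}

fn main() {
    let group = PluginGroupBuilder::default_plugins().set(AssetPlugin {
        watch_for_changes: true,
    });
    println!("{:?}", group.get::<AssetPlugin>());
    println!("{:?}", group.get::<WindowPlugin>());
}
```

Keeping the replaced plugin at its original position is a deliberate choice in this sketch, because build order within a group usually matters. Panicking on a type that is not in the group makes a misconfiguration loud instead of silently ignored.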

2022-10-24 21:20:33 +00:00

        .add_plugins(DefaultPlugins.set(WindowPlugin {

2023-01-19 00:38:28 +00:00

            primary_window: Some(Window {
                resolution: (1024.0, 768.0).into(),
                title: "many_lights".into(),

2022-10-24 21:20:33 +00:00

                present_mode: PresentMode::AutoNoVsync,
                ..default()

2023-01-19 00:38:28 +00:00

            }),

2022-04-07 16:16:35 +00:00

            ..default()

2022-10-24 21:20:33 +00:00

        }))

2022-04-07 16:16:35 +00:00

        .add_plugin(FrameTimeDiagnosticsPlugin::default())
        .add_plugin(LogDiagnosticsPlugin::default())

2023-03-17 18:45:34 -07:00

        .add_systems(Startup, setup)
        .add_systems(Update, (move_camera, print_light_count))

2022-04-07 16:16:35 +00:00

        .add_plugin(LogVisibleLights)
        .run();
}

fn setup(
    mut commands: Commands,
    mut meshes: ResMut<Assets<Mesh>>,
    mut materials: ResMut<Assets<StandardMaterial>>,
) {

2022-07-13 19:13:46 +00:00

    warn!(include_str!("warning_string.txt"));

2022-04-07 16:16:35 +00:00

    const LIGHT_RADIUS: f32 = 0.3;
    const LIGHT_INTENSITY: f32 = 5.0;
    const RADIUS: f32 = 50.0;
    const N_LIGHTS: usize = 100_000;

Spawn now takes a Bundle (#6054)

# Objective

Now that we can consolidate Bundles and Components under a single insert (thanks to #2975 and #6039), almost 100% of world spawns now look like `world.spawn().insert((Some, Tuple, Here))`. Spawning an entity without any components is an extremely uncommon pattern, so it makes sense to give spawn the "first class" ergonomic api. This consolidated api should be made consistent across all spawn apis (such as World and Commands).

## Solution

All `spawn` APIs (`World::spawn`, `Commands::spawn`, `ChildBuilder::spawn`, and `WorldChildBuilder::spawn`) now accept a bundle as input:

```rust
// before:
commands
    .spawn()
    .insert((A, B, C));
world
    .spawn()
    .insert((A, B, C));

// after
commands.spawn((A, B, C));
world.spawn((A, B, C));
```

All existing instances of `spawn_bundle` have been deprecated in favor of the new `spawn` api. A new `spawn_empty` has been added, replacing the old `spawn` api.

Allowing `world.spawn(some_bundle)` to replace `world.spawn().insert(some_bundle)` opened the door to removing the initial entity allocation in the "empty" archetype / table done in `spawn()` (and the subsequent move to the actual archetype in `.insert(some_bundle)`).

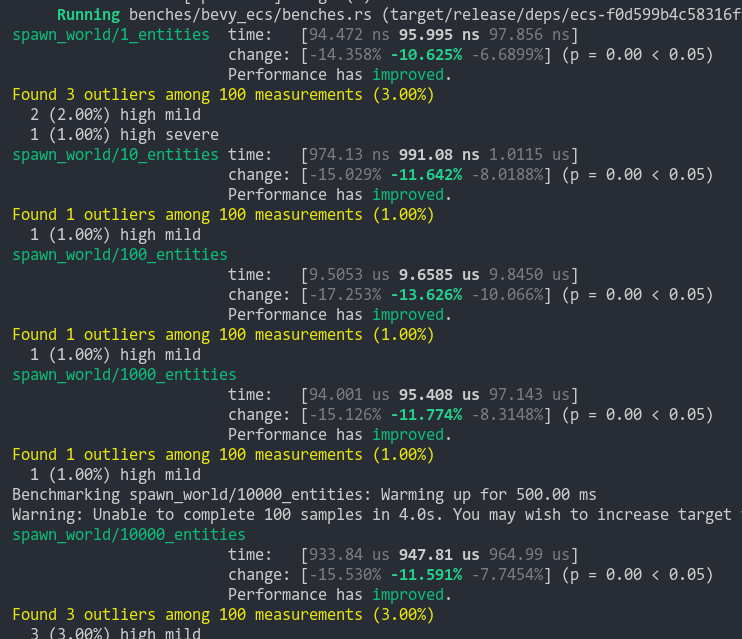

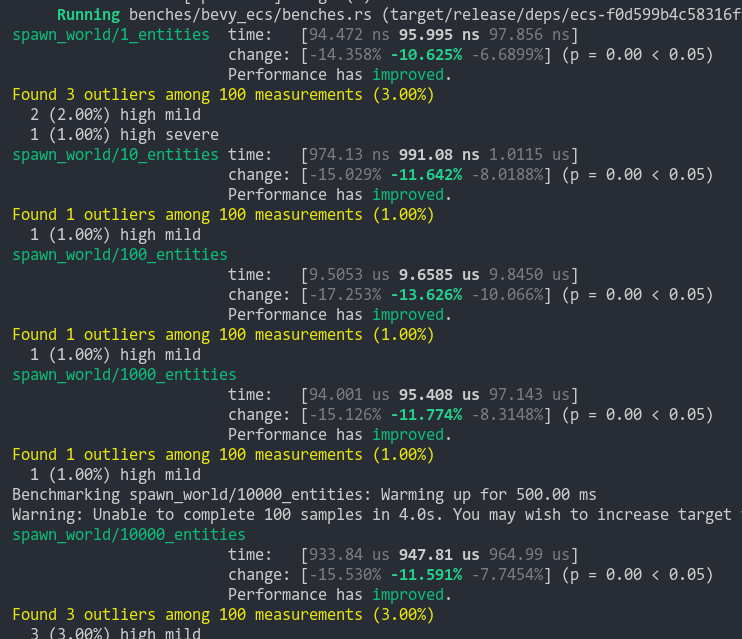

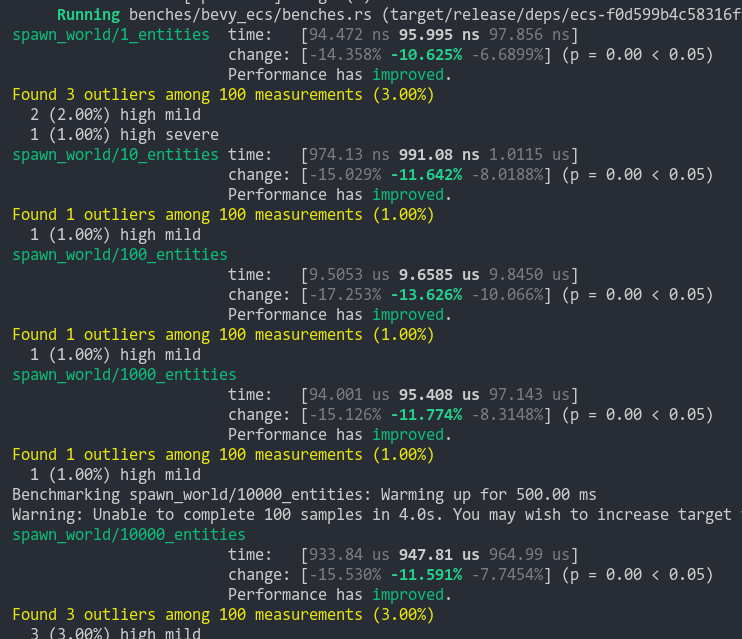

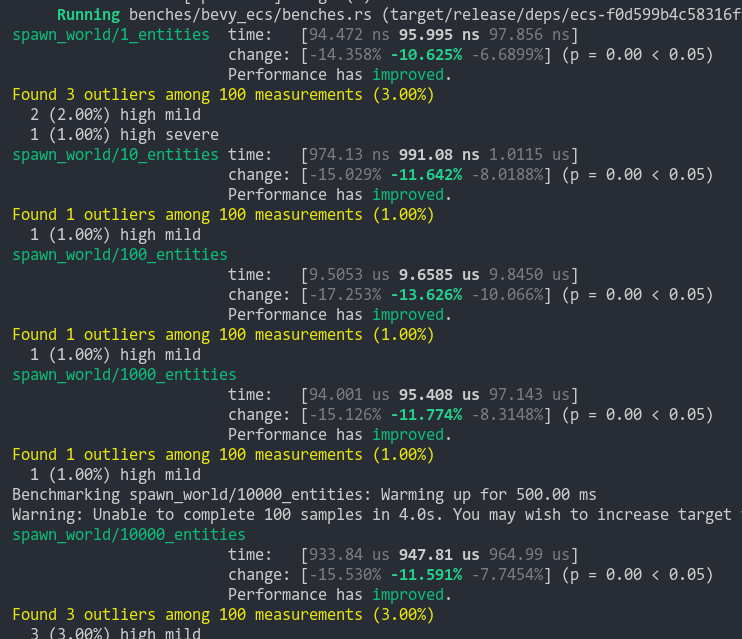

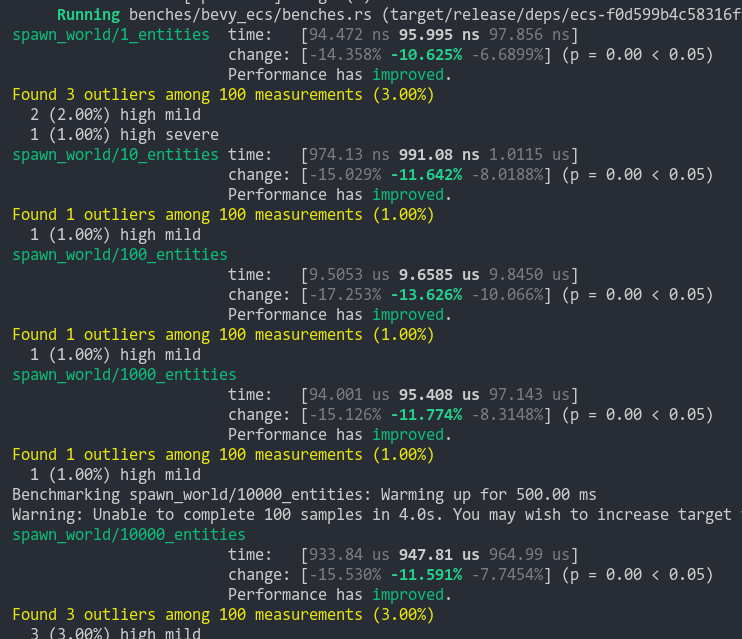

This improves spawn performance by over 10%:

To take this measurement, I added a new `world_spawn` benchmark.

Unfortunately, optimizing `Commands::spawn` is slightly less trivial, as Commands expose the Entity id of spawned entities prior to actually spawning. Doing the optimization would (naively) require assurances that the `spawn(some_bundle)` command is applied before all other commands involving the entity (which would not necessarily be true, if memory serves). Optimizing `Commands::spawn` this way does feel possible, but it will require careful thought (and maybe some additional checks), which deserves its own PR. For now, it has the same performance characteristics as the current `Commands::spawn_bundle` on main.
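The ordering concern can be illustrated with a toy deferred command queue. This is a hypothetical sketch, much simpler than Bevy's `Commands`: `Commands`, `Command`, and `apply` are invented here, entities are plain `u32` ids, and the world is a `HashMap` of component names. `spawn` hands out an id immediately, later commands can already reference that id, and applying them only works if the spawn is applied first.

```rust
use std::collections::HashMap;

/// Hypothetical deferred command: nothing touches the world until `apply` runs.
#[derive(Debug)]
enum Command {
    Spawn { entity: u32, bundle: Vec<&'static str> },
    Insert { entity: u32, component: &'static str },
}

/// Hypothetical command queue: `spawn` returns an id before the entity exists.
#[derive(Default)]
struct Commands {
    next_id: u32,
    queue: Vec<Command>,
}

impl Commands {
    fn spawn(&mut self, bundle: &[&'static str]) -> u32 {
        let entity = self.next_id; // reserved now, spawned later
        self.next_id += 1;
        self.queue.push(Command::Spawn { entity, bundle: bundle.to_vec() });
        entity
    }

    fn insert(&mut self, entity: u32, component: &'static str) {
        self.queue.push(Command::Insert { entity, component });
    }
}

/// Apply commands in order. An `Insert` that targets an entity whose `Spawn`
/// has not been applied yet is an error, so the spawn must be applied first.
fn apply(commands: Vec<Command>) -> Result<HashMap<u32, Vec<&'static str>>, String> {
    let mut world: HashMap<u32, Vec<&'static str>> = HashMap::new();
    for command in commands {
        match command {
            Command::Spawn { entity, bundle } => {
                world.insert(entity, bundle);
            }
            Command::Insert { entity, component } => match world.get_mut(&entity) {
                Some(components) => components.push(component),
                None => return Err(format!("entity {entity} does not exist yet")),
            },
        }
    }
    Ok(world)
}

fn main() {
    let mut commands = Commands::default();
    let e = commands.spawn(&["A", "B"]);
    // The id can be used right away, before the spawn has been applied.
    commands.insert(e, "C");

    // Applied in queue order, the spawn lands first and the insert succeeds.
    let world = apply(commands.queue).expect("in-order application succeeds");
    println!("{:?}", world[&e]);

    // If the spawn were applied after a command that references the entity, it fails.
    let reordered = vec![
        Command::Insert { entity: 0, component: "C" },
        Command::Spawn { entity: 0, bundle: vec!["A", "B"] },
    ];
    println!("{:?}", apply(reordered));
}
```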

**Note that 99% of this PR is simple renames and refactors. The only code that needs careful scrutiny is the new `World::spawn()` impl, which is relatively straightforward, but it has some new unsafe code (which re-uses the battle-tested `BundleSpawner` code path).**

---

## Changelog

- All `spawn` APIs (`World::spawn`, `Commands::spawn`, `ChildBuilder::spawn`, and `WorldChildBuilder::spawn`) now accept a bundle as input

- All instances of `spawn_bundle` have been deprecated in favor of the new `spawn` api

- World and Commands now have `spawn_empty()`, which is equivalent to the old `spawn()` behavior.

## Migration Guide

```rust
// Old (0.8):
commands
    .spawn()
    .insert_bundle((A, B, C));
// New (0.9)
commands.spawn((A, B, C));

// Old (0.8):
commands.spawn_bundle((A, B, C));
// New (0.9)
commands.spawn((A, B, C));

// Old (0.8):
let entity = commands.spawn().id();
// New (0.9)
let entity = commands.spawn_empty().id();

// Old (0.8)
let entity = world.spawn().id();
// New (0.9)
let entity = world.spawn_empty().id();

```
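The archetype argument above can be illustrated with a toy model in plain Rust. This is a hypothetical sketch, not Bevy's storage: `World`, `Archetype`, `place`, and the `placements` counter are invented, and components are tracked only by name (no component data is stored). Spawning empty and then inserting writes the entity into two tables (the empty one, then its final one), while spawning with the bundle writes it into its final table once.

```rust
use std::collections::{BTreeSet, HashMap};

/// A component set, identified only by component names (hypothetical simplification).
type Archetype = BTreeSet<&'static str>;

#[derive(Default)]
struct World {
    /// Archetype each entity currently lives in, indexed by entity id.
    location: Vec<Archetype>,
    /// How many entities currently live in each archetype's table.
    tables: HashMap<Archetype, usize>,
    /// Number of times an entity was written into a table (initial placement or move).
    placements: usize,
}

impl World {
    fn place(&mut self, archetype: &Archetype) {
        *self.tables.entry(archetype.clone()).or_insert(0) += 1;
        self.placements += 1;
    }

    /// Old flow, step 1: allocate the entity in the empty archetype.
    fn spawn_empty(&mut self) -> usize {
        let empty = Archetype::new();
        self.place(&empty);
        self.location.push(empty);
        self.location.len() - 1
    }

    /// Old flow, step 2: move the entity to the archetype that includes the bundle.
    fn insert(&mut self, entity: usize, bundle: &[&'static str]) {
        let old = self.location[entity].clone();
        if let Some(count) = self.tables.get_mut(&old) {
            *count -= 1;
        }
        let mut new = old;
        new.extend(bundle.iter().copied());
        self.place(&new);
        self.location[entity] = new;
    }

    /// New flow: place the entity directly in its final archetype.
    fn spawn(&mut self, bundle: &[&'static str]) -> usize {
        let archetype: Archetype = bundle.iter().copied().collect();
        self.place(&archetype);
        self.location.push(archetype);
        self.location.len() - 1
    }
}

fn main() {
    let mut old_world = World::default();
    let e = old_world.spawn_empty();
    old_world.insert(e, &["A", "B", "C"]);

    let mut new_world = World::default();
    new_world.spawn(&["A", "B", "C"]);

    println!("spawn_empty() + insert(..): {} placements", old_world.placements);
    println!("spawn(bundle):              {} placements", new_world.placements);
}
```

In this toy model the saving is exactly one table write per spawned entity; the "over 10%" figure quoted above comes from the PR's `world_spawn` benchmark, not from this sketch.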

2022-09-23 19:55:54 +00:00

    commands.spawn(PbrBundle {

2022-11-14 22:34:27 +00:00

        mesh: meshes.add(
            Mesh::try_from(shape::Icosphere {
                radius: RADIUS,
                subdivisions: 9,
            })
            .unwrap(),
        ),

2022-04-07 16:16:35 +00:00

        material: materials.add(StandardMaterial::from(Color::WHITE)),

2022-07-03 19:55:33 +00:00

        transform: Transform::from_scale(Vec3::NEG_ONE),

2022-04-07 16:16:35 +00:00

        ..default()
    });

    let mesh = meshes.add(Mesh::from(shape::Cube { size: 1.0 }));
    let material = materials.add(StandardMaterial {
        base_color: Color::PINK,
        ..default()
    });

    // NOTE: This pattern is good for testing performance of culling as it provides roughly
    // the same number of visible meshes regardless of the viewing angle.
    // NOTE: f64 is used to avoid precision issues that produce visual artifacts in the distribution
    let golden_ratio = 0.5f64 * (1.0f64 + 5.0f64.sqrt());

2022-04-15 02:53:20 +00:00

    let mut rng = thread_rng();

2022-04-07 16:16:35 +00:00

    for i in 0..N_LIGHTS {
        let spherical_polar_theta_phi = fibonacci_spiral_on_sphere(golden_ratio, i, N_LIGHTS);
        let unit_sphere_p = spherical_polar_to_cartesian(spherical_polar_theta_phi);

2022-09-23 19:55:54 +00:00

        commands.spawn(PointLightBundle {

2022-04-07 16:16:35 +00:00

            point_light: PointLight {
                range: LIGHT_RADIUS,
                intensity: LIGHT_INTENSITY,

2022-04-15 02:53:20 +00:00

                color: Color::hsl(rng.gen_range(0.0..360.0), 1.0, 0.5),

2022-04-07 16:16:35 +00:00

                ..default()
            },
            transform: Transform::from_translation((RADIUS as f64 * unit_sphere_p).as_vec3()),
            ..default()
        });
    }

    // camera

2022-04-15 02:53:20 +00:00

    match std::env::args().nth(1).as_deref() {

2022-09-23 19:55:54 +00:00

        Some("orthographic") => commands.spawn(Camera3dBundle {

Camera Driven Rendering (#4745)

This adds "high level camera driven rendering" to Bevy. The goal is to give users more control over what gets rendered (and where) without needing to deal with render logic. This will make scenarios like "render to texture", "multiple windows", "split screen", "2d on 3d", "3d on 2d", "pass layering", and more significantly easier.

Here is an [example of a 2d render sandwiched between two 3d renders (each from a different perspective)](https://gist.github.com/cart/4fe56874b2e53bc5594a182fc76f4915):

Users can now spawn a camera, point it at a RenderTarget (a texture or a window), and it will "just work".

Rendering to a second window is as simple as spawning a second camera and assigning it to a specific window id:

```rust
// main camera (main window)
commands.spawn_bundle(Camera2dBundle::default());

// second camera (other window)
commands.spawn_bundle(Camera2dBundle {
    camera: Camera {
        target: RenderTarget::Window(window_id),
        ..default()
    },
    ..default()
});
```

Rendering to a texture is as simple as pointing the camera at a texture:

```rust
commands.spawn_bundle(Camera2dBundle {
    camera: Camera {
        target: RenderTarget::Texture(image_handle),
        ..default()
    },
    ..default()
});
```

Cameras now have a "render priority", which controls the order they are drawn in. If you want to use a camera's output texture as a texture in the main pass, just set the priority to a number lower than the main pass camera (which defaults to `0`).

```rust
// main pass camera with a default priority of 0
commands.spawn_bundle(Camera2dBundle::default());

commands.spawn_bundle(Camera2dBundle {
    camera: Camera {
        target: RenderTarget::Texture(image_handle.clone()),
        priority: -1,
        ..default()
    },
    ..default()
});

commands.spawn_bundle(SpriteBundle {
    texture: image_handle,
    ..default()
});
```

Priority can also be used to layer cameras on top of each other for the same RenderTarget. This is what "2d on top of 3d" looks like in the new system:

```rust
commands.spawn_bundle(Camera3dBundle::default());

commands.spawn_bundle(Camera2dBundle {
    camera: Camera {
        // this will render 2d entities "on top" of the default 3d camera's render
        priority: 1,
        ..default()
    },
    ..default()
});
```

There is no longer the concept of a global "active camera". Resources like `ActiveCamera<Camera2d>` and `ActiveCamera<Camera3d>` have been replaced with the camera-specific `Camera::is_active` field. This does put the onus on users to manage which cameras should be active.

Cameras are now assigned a single render graph as an "entry point", which is configured on each camera entity using the new `CameraRenderGraph` component. The old `PerspectiveCameraBundle` and `OrthographicCameraBundle` (generic on camera marker components like Camera2d and Camera3d) have been replaced by `Camera3dBundle` and `Camera2dBundle`, which set 3d and 2d default values for the `CameraRenderGraph` and projections.

```rust
// old 3d perspective camera
commands.spawn_bundle(PerspectiveCameraBundle::default());

// new 3d perspective camera
commands.spawn_bundle(Camera3dBundle::default());
```

```rust
// old 2d orthographic camera
commands.spawn_bundle(OrthographicCameraBundle::new_2d());

// new 2d orthographic camera
commands.spawn_bundle(Camera2dBundle::default());
```

```rust
// old 3d orthographic camera
commands.spawn_bundle(OrthographicCameraBundle::new_3d());

// new 3d orthographic camera
commands.spawn_bundle(Camera3dBundle {
    projection: OrthographicProjection {
        scale: 3.0,
        scaling_mode: ScalingMode::FixedVertical,
        ..default()
    }
    .into(),
    ..default()
});
```

Note that `Camera3dBundle` now uses a new `Projection` enum instead of hard-coding the projection into the type. There are a number of motivators for this change: the render graph is now a part of the bundle, the way "generic bundles" work in the Rust type system prevents nice `..default()` syntax, and changing projections at runtime is much easier with an enum (e.g. for editor scenarios). I'm open to discussing this choice, but I'm relatively certain we will all come to the same conclusion here. `Camera2dBundle` and `Camera3dBundle` are much clearer than being generic on marker components / using non-default constructors.
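A reduced model shows why a single enum-typed field helps. In this hypothetical sketch (plain Rust; `PerspectiveProjection`, `OrthographicProjection`, `Projection`, and `Camera3dBundle` are simplified stand-ins with invented fields and defaults, not Bevy's definitions), the bundle has one concrete `projection` type, so it can derive `Default` and be filled in with `..Default::default()`. `.into()` converts a concrete projection into the enum through a `From` impl, and switching projections at runtime is an ordinary assignment that does not change the bundle's type.

```rust
use std::f32::consts::FRAC_PI_4;

#[derive(Debug, Clone, PartialEq)]
struct PerspectiveProjection {
    fov: f32,
}

impl Default for PerspectiveProjection {
    fn default() -> Self {
        // Arbitrary default for this sketch.
        PerspectiveProjection { fov: FRAC_PI_4 }
    }
}

#[derive(Debug, Clone, PartialEq)]
struct OrthographicProjection {
    scale: f32,
}

/// One concrete type covering both projections.
#[derive(Debug, Clone, PartialEq)]
enum Projection {
    Perspective(PerspectiveProjection),
    Orthographic(OrthographicProjection),
}

impl Default for Projection {
    fn default() -> Self {
        Projection::Perspective(PerspectiveProjection::default())
    }
}

/// Enables `OrthographicProjection { .. }.into()` wherever a `Projection` is expected.
impl From<OrthographicProjection> for Projection {
    fn from(p: OrthographicProjection) -> Self {
        Projection::Orthographic(p)
    }
}

/// Non-generic bundle, so `#[derive(Default)]` and struct-update syntax just work.
#[derive(Debug, Default)]
struct Camera3dBundle {
    projection: Projection,
    priority: isize,
}

fn main() {
    let mut bundle = Camera3dBundle {
        projection: OrthographicProjection { scale: 3.0 }.into(),
        ..Default::default()
    };
    println!("{:?}", bundle);

    // Switching projection at runtime: same field, same bundle type.
    bundle.projection = Projection::Perspective(PerspectiveProjection::default());
    println!("{:?}", bundle);
}
```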

If you want to run a custom render graph on a camera, just set the `CameraRenderGraph` component:

```rust
commands.spawn_bundle(Camera3dBundle {
    camera_render_graph: CameraRenderGraph::new(some_render_graph_name),
    ..default()
});
```

Just note that if the graph requires data from specific components to work (such as `Camera3d` config, which is provided in the `Camera3dBundle`), make sure the relevant components have been added.

Speaking of using components to configure graphs / passes, there are a number of new configuration options:

```rust
commands.spawn_bundle(Camera3dBundle {
    camera_3d: Camera3d {
        // overrides the default global clear color
        clear_color: ClearColorConfig::Custom(Color::RED),
        ..default()
    },
    ..default()
});

commands.spawn_bundle(Camera3dBundle {
    camera_3d: Camera3d {
        // disables clearing
        clear_color: ClearColorConfig::None,
        ..default()
    },
    ..default()
});
```

Expect to see more of the "graph configuration Components on Cameras" pattern in the future.

By popular demand, UI no longer requires a dedicated camera. `UiCameraBundle` has been removed. `Camera2dBundle` and `Camera3dBundle` now both default to rendering UI as part of their own render graphs. To disable UI rendering for a camera, add a `CameraUi` component with `is_enabled: false`:

```rust
commands
    .spawn_bundle(Camera3dBundle::default())
    .insert(CameraUi {
        is_enabled: false,
        ..default()
    });
```

## Other Changes

* The separate clear pass has been removed. We should revisit this for things like sky rendering, but I think this PR should "keep it simple" until we're ready to properly support that (for code complexity and performance reasons). We can come up with the right design for a modular clear pass in a follow-up PR.

* I reorganized bevy_core_pipeline into Core2dPlugin and Core3dPlugin (and core_2d / core_3d modules). Everything is pretty much the same as before, just logically separate. I've moved relevant types (like Camera2d, Camera3d, Camera3dBundle, Camera2dBundle) into their relevant modules, which is what motivated this reorganization.

* I adapted the `scene_viewer` example (which relied on the ActiveCameras behavior) to the new system. I also refactored bits and pieces to be a bit simpler.

* All of the examples have been ported to the new camera approach. `render_to_texture` and `multiple_windows` are now _much_ simpler. I removed `two_passes` because it is less relevant with the new approach. If someone wants to add a new "layered custom pass with CameraRenderGraph" example, that might fill a similar niche. But I don't feel much pressure to add that in this PR.

* Cameras now have `target_logical_size` and `target_physical_size` fields, which makes finding the size of a camera's render target _much_ simpler. As a result, the `Assets<Image>` and `Windows` parameters were removed from `Camera::world_to_screen`, making that operation much more ergonomic.

* Render order ambiguities between cameras with the same target and the same priority now produce a warning. This accomplishes two goals:

1. Now that there is no "global" active camera, by default spawning two cameras will result in two renders (one covering the other). This would be a silent performance killer that would be hard to detect after the fact. By detecting ambiguities, we can provide a helpful warning when this occurs.

2. Render order ambiguities could result in unexpected / unpredictable render results. Resolving them makes sense.
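The ordering and ambiguity rules above can be modeled in a few lines of plain Rust. This is a hypothetical sketch, not Bevy's render code: `Camera` here has only `name`, `target`, and `priority` fields, render targets are plain strings, and `plan_render_order` is an invented helper. Cameras are sorted by ascending priority (lower renders first), and any group of cameras that shares both a target and a priority is reported as ambiguous.

```rust
use std::collections::HashMap;

/// Hypothetical, simplified camera: what it renders to and in which order.
#[derive(Debug, Clone)]
struct Camera {
    name: &'static str,
    target: &'static str,
    priority: isize,
}

/// Returns cameras in render order plus the groups whose relative order is ambiguous.
fn plan_render_order(mut cameras: Vec<Camera>) -> (Vec<Camera>, Vec<Vec<&'static str>>) {
    // Stable sort: ascending priority; ties keep their original (spawn) order.
    cameras.sort_by_key(|c| c.priority);

    // Group camera names by (target, priority).
    let mut groups: HashMap<(&'static str, isize), Vec<&'static str>> = HashMap::new();
    for c in &cameras {
        groups.entry((c.target, c.priority)).or_default().push(c.name);
    }

    // More than one camera in a group means the render order is ambiguous.
    let mut ambiguous: Vec<Vec<&'static str>> = groups
        .into_values()
        .filter(|names| names.len() > 1)
        .collect();
    // HashMap iteration order is unspecified, so sort for deterministic output.
    ambiguous.sort();
    (cameras, ambiguous)
}

fn main() {
    let cameras = vec![
        Camera { name: "main_3d", target: "window", priority: 0 },
        Camera { name: "ui_2d", target: "window", priority: 1 },
        Camera { name: "to_texture", target: "image", priority: -1 },
        Camera { name: "extra_3d", target: "window", priority: 0 },
    ];
    let (order, ambiguous) = plan_render_order(cameras);
    for c in &order {
        println!("{} -> {} (priority {})", c.name, c.target, c.priority);
    }
    for names in &ambiguous {
        println!("warning: ambiguous render order between {:?}", names);
    }
}
```

The stable sort keeps tied cameras in spawn order, but nothing in the rules above promises that order, which is why ties are reported instead of being silently accepted.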

## Follow Up Work

* Per-Camera viewports, which will make it possible to render to a smaller area inside of a RenderTarget (great for something like splitscreen)

* Camera-specific MSAA config (should use the same "overriding" pattern used for ClearColor)

* Graph Based Camera Ordering: priorities are simple, but they make complicated ordering constraints harder to express. We should consider adopting a "graph based" camera ordering model with "before" and "after" relationships to other cameras (or build it "on top" of the priority system).

* Consider allowing graphs to run subgraphs from any nesting level (aka a global namespace for graphs). Right now the 2d and 3d graphs each need their own UI subgraph, which feels "fine" in the short term. But being able to share subgraphs between other subgraphs seems valuable.

* Consider splitting `bevy_core_pipeline` into `bevy_core_2d` and `bevy_core_3d` packages. There's a shared "clear color" dependency here, which would need a new home.
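One way the graph-based ordering idea above could be layered "on top" of priorities is a topological sort that uses priority only to break ties. The sketch below is hypothetical and is not an existing Bevy API:

- cameras are plain indices;
- `before` pairs are user-declared constraints;
- `camera_order` is an illustrative name.

It uses Kahn's algorithm with a min-heap keyed on `(priority, index)`.

```rust
use std::cmp::Reverse;
use std::collections::BinaryHeap;

/// Hypothetical helper: returns a render order for `priorities.len()` cameras,
/// or `None` if the `before` constraints contain a cycle. Each pair `(a, b)`
/// means "camera `a` must render before camera `b`". Among cameras whose
/// constraints are satisfied, the lowest priority (then lowest index) goes first.
fn camera_order(priorities: &[isize], before: &[(usize, usize)]) -> Option<Vec<usize>> {
    let n = priorities.len();
    let mut successors = vec![Vec::new(); n];
    let mut in_degree = vec![0usize; n];
    for &(a, b) in before {
        successors[a].push(b);
        in_degree[b] += 1;
    }
    // `Reverse` turns the max-heap into a min-heap on (priority, index).
    let mut ready: BinaryHeap<Reverse<(isize, usize)>> = (0..n)
        .filter(|&i| in_degree[i] == 0)
        .map(|i| Reverse((priorities[i], i)))
        .collect();
    let mut order = Vec::with_capacity(n);
    while let Some(Reverse((_, cam))) = ready.pop() {
        order.push(cam);
        for &next in &successors[cam] {
            in_degree[next] -= 1;
            if in_degree[next] == 0 {
                ready.push(Reverse((priorities[next], next)));
            }
        }
    }
    // If some camera never became ready, the constraints form a cycle.
    if order.len() == n { Some(order) } else { None }
}

fn main() {
    // Camera 2 has the lowest priority but must render after camera 0.
    let priorities = [0, 1, -1];
    println!("{:?}", camera_order(&priorities, &[(0, 2)]));
}
```

Returning `None` reflects that contradictory before/after constraints have no valid order. A real design would still need to decide how to report that to the user.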

2022-06-02 00:12:17 +00:00

projection: OrthographicProjection {
scale: 20.0,
scaling_mode: ScalingMode::FixedHorizontal(1.0),
..default()
}
.into(),
..default()
}),

Spawn now takes a Bundle (#6054)

# Objective

Now that we can consolidate Bundles and Components under a single insert (thanks to #2975 and #6039), almost 100% of world spawns now look like `world.spawn().insert((Some, Tuple, Here))`. Spawning an entity without any components is an extremely uncommon pattern, so it makes sense to give spawn the "first class" ergonomic api. This consolidated api should be made consistent across all spawn apis (such as World and Commands).

## Solution

All `spawn` apis (`World::spawn`, `Commands::spawn`, `ChildBuilder::spawn`, and `WorldChildBuilder::spawn`) now accept a bundle as input:

```rust

// before:

commands

.spawn()

.insert((A, B, C));

world

.spawn()

.insert((A, B, C));

// after

commands.spawn((A, B, C));

world.spawn((A, B, C));

```

All existing instances of `spawn_bundle` have been deprecated in favor of the new `spawn` api. A new `spawn_empty` has been added, replacing the old `spawn` api.

By allowing `world.spawn(some_bundle)` to replace `world.spawn().insert(some_bundle)`, this opened the door to removing the initial entity allocation in the "empty" archetype / table done in `spawn()` (and subsequent move to the actual archetype in `.insert(some_bundle)`).

This improves spawn performance by over 10%.

To take this measurement, I added a new `world_spawn` benchmark.
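The saving from skipping the empty archetype can be illustrated with a toy model. It counts only how many times an entity's row is written into some archetype table. This is a hypothetical sketch, not `bevy_ecs` code. `ToyWorld`, `Archetype` and the string component names are stand-ins. Real table moves also copy component data, which the model ignores.

```rust
use std::collections::{BTreeSet, HashMap};

/// A toy "archetype": the set of component names an entity has.
type Archetype = BTreeSet<&'static str>;

/// Hypothetical toy world that tracks which archetype each entity lives in and
/// how many row writes (allocations into an archetype's table) occurred.
#[derive(Default)]
struct ToyWorld {
    entity_archetype: Vec<Archetype>,
    rows_per_archetype: HashMap<Archetype, usize>,
    row_writes: usize,
}

impl ToyWorld {
    /// Allocates a row for a new entity directly in `archetype`.
    fn alloc(&mut self, archetype: Archetype) -> usize {
        *self.rows_per_archetype.entry(archetype.clone()).or_insert(0) += 1;
        self.row_writes += 1;
        self.entity_archetype.push(archetype);
        self.entity_archetype.len() - 1
    }

    /// Old-style `spawn()`: the entity starts in the empty archetype.
    fn spawn_empty(&mut self) -> usize {
        self.alloc(Archetype::new())
    }

    /// `insert`: moves the entity's row to the archetype that also has `components`.
    fn insert(&mut self, entity: usize, components: &[&'static str]) {
        let old = self.entity_archetype[entity].clone();
        let mut new = old.clone();
        new.extend(components.iter().copied());
        if new == old {
            return;
        }
        *self.rows_per_archetype.get_mut(&old).unwrap() -= 1;
        *self.rows_per_archetype.entry(new.clone()).or_insert(0) += 1;
        self.row_writes += 1;
        self.entity_archetype[entity] = new;
    }

    /// New-style `spawn(bundle)`: one row write, straight into the final archetype.
    fn spawn(&mut self, components: &[&'static str]) -> usize {
        self.alloc(components.iter().copied().collect())
    }
}

fn main() {
    let mut old_style = ToyWorld::default();
    let e = old_style.spawn_empty();
    old_style.insert(e, &["A", "B", "C"]);

    let mut new_style = ToyWorld::default();
    new_style.spawn(&["A", "B", "C"]);

    println!("old: {} row writes, new: {} row writes", old_style.row_writes, new_style.row_writes);
}
```

In this model the old path costs two row writes per entity: one into the empty archetype, then one move. The new path costs one, and both end in the same archetype.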

Unfortunately, optimizing `Commands::spawn` is slightly less trivial, as Commands expose the Entity id of spawned entities prior to actually spawning. Doing the optimization would (naively) require assurances that the `spawn(some_bundle)` command is applied before all other commands involving the entity (which would not necessarily be true, if memory serves). Optimizing `Commands::spawn` this way does feel possible, but it will require careful thought (and maybe some additional checks), which deserves its own PR. For now, it has the same performance characteristics as the current `Commands::spawn_bundle` on main.

**Note that 99% of this PR is simple renames and refactors. The only code that needs careful scrutiny is the new `World::spawn()` impl, which is relatively straightforward, but it has some new unsafe code (which reuses the battle-tested `BundleSpawner` code path).**

---

## Changelog

- All `spawn` apis (`World::spawn`, `Commands::spawn`, `ChildBuilder::spawn`, and `WorldChildBuilder::spawn`) now accept a bundle as input

- All instances of `spawn_bundle` have been deprecated in favor of the new `spawn` api

- World and Commands now have `spawn_empty()`, which is equivalent to the old `spawn()` behavior.

## Migration Guide

```rust

// Old (0.8):

commands

.spawn()

.insert_bundle((A, B, C));

// New (0.9)

commands.spawn((A, B, C));

// Old (0.8):

commands.spawn_bundle((A, B, C));

// New (0.9)

commands.spawn((A, B, C));

// Old (0.8):

let entity = commands.spawn().id();

// New (0.9)

let entity = commands.spawn_empty().id();

// Old (0.8)

let entity = world.spawn().id();

// New (0.9)

let entity = world.spawn_empty().id();

```

2022-09-23 19:55:54 +00:00

_ => commands.spawn(Camera3dBundle::default()),

2022-04-15 02:53:20 +00:00

};

2022-04-07 16:16:35 +00:00

// add one cube, the only one with strong handles
// also serves as a reference point during rotation

2022-09-23 19:55:54 +00:00

commands.spawn(PbrBundle {

2022-04-07 16:16:35 +00:00

mesh,
material,
transform: Transform {

2022-10-28 21:03:01 +00:00

translation: Vec3::new(0.0, RADIUS, 0.0),

2022-04-07 16:16:35 +00:00

scale: Vec3::splat(5.0),
..default()
},
..default()
});
}

// NOTE: This epsilon value is apparently optimal when optimizing for the average
// nearest-neighbor distance. See:
// http://extremelearning.com.au/how-to-evenly-distribute-points-on-a-sphere-more-effectively-than-the-canonical-fibonacci-lattice/
// for details.
const EPSILON: f64 = 0.36;

fn fibonacci_spiral_on_sphere(golden_ratio: f64, i: usize, n: usize) -> DVec2 {
DVec2::new(

2022-08-30 19:52:11 +00:00

PI * 2. * (i as f64 / golden_ratio),

2022-04-07 16:16:35 +00:00

(1.0 - 2.0 * (i as f64 + EPSILON) / (n as f64 - 1.0 + 2.0 * EPSILON)).acos(),
)
}

fn spherical_polar_to_cartesian(p: DVec2) -> DVec3 {
let (sin_theta, cos_theta) = p.x.sin_cos();
let (sin_phi, cos_phi) = p.y.sin_cos();
DVec3::new(cos_theta * sin_phi, sin_theta * sin_phi, cos_phi)
}
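The two helpers above can be checked without Bevy by restating them over plain `f64` tuples. In this standalone sketch the tuples stand in for `DVec2`/`DVec3`. The golden ratio `(1 + sqrt(5)) / 2` is assumed to be what the example passes in.

The azimuth `theta` and the polar angle `phi` (measured from the +Z axis) map every point onto the unit sphere. As `i` goes from `0` to `n - 1`, the points move from near the +Z pole to near the -Z pole.

```rust
use std::f64::consts::PI;

// Same constant as in the example above.
const EPSILON: f64 = 0.36;

/// Returns (theta, phi): azimuth and polar angle of point `i` out of `n`.
fn fibonacci_spiral_on_sphere(golden_ratio: f64, i: usize, n: usize) -> (f64, f64) {
    (
        PI * 2. * (i as f64 / golden_ratio),
        (1.0 - 2.0 * (i as f64 + EPSILON) / (n as f64 - 1.0 + 2.0 * EPSILON)).acos(),
    )
}

/// Converts (theta, phi) to a point on the unit sphere, with phi measured from +Z.
fn spherical_polar_to_cartesian((theta, phi): (f64, f64)) -> (f64, f64, f64) {
    let (sin_theta, cos_theta) = theta.sin_cos();
    let (sin_phi, cos_phi) = phi.sin_cos();
    (cos_theta * sin_phi, sin_theta * sin_phi, cos_phi)
}

fn main() {
    let golden_ratio = (1.0 + 5f64.sqrt()) / 2.0;
    let n = 8;
    for i in 0..n {
        let p = fibonacci_spiral_on_sphere(golden_ratio, i, n);
        let (x, y, z) = spherical_polar_to_cartesian(p);
        let len = (x * x + y * y + z * z).sqrt();
        println!("{i}: ({x:.3}, {y:.3}, {z:.3}), |p| = {len:.6}");
    }
}
```

Dividing `i` by the golden ratio spreads successive points around the azimuth without repeating. The `EPSILON` offset keeps the first and last points slightly away from the poles.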

// System for rotating the camera
fn move_camera(time: Res<Time>, mut camera_query: Query<&mut Transform, With<Camera>>) {
let mut camera_transform = camera_query.single_mut();

2022-07-01 03:58:54 +00:00

let delta = time.delta_seconds() * 0.15;
camera_transform.rotate_z(delta);
camera_transform.rotate_x(delta);

2022-04-07 16:16:35 +00:00

}

// System for printing the number of lights on every tick of the timer
fn print_light_count(time: Res<Time>, mut timer: Local<PrintingTimer>, lights: Query<&PointLight>) {
timer.0.tick(time.delta());

if timer.0.just_finished() {
info!("Lights: {}", lights.iter().len());
}
}

struct LogVisibleLights;

impl Plugin for LogVisibleLights {
fn build(&self, app: &mut App) {
let render_app = match app.get_sub_app_mut(RenderApp) {
Ok(render_app) => render_app,
Err(_) => return,
};

2023-03-17 18:45:34 -07:00

render_app.add_systems(Render, print_visible_light_count.in_set(RenderSet::Prepare));

2022-04-07 16:16:35 +00:00

}
}

// System for printing the number of visible and rendered point lights on every tick of the timer
fn print_visible_light_count(

2023-01-21 17:55:39 +00:00

time: Res<Time>,

2022-04-07 16:16:35 +00:00

mut timer: Local<PrintingTimer>,
visible: Query<&ExtractedPointLight>,
global_light_meta: Res<GlobalLightMeta>,
) {
timer.0.tick(time.delta());

if timer.0.just_finished() {
info!(
"Visible Lights: {}, Rendered Lights: {}",
visible.iter().len(),
global_light_meta.entity_to_index.len()
);
}
}

struct PrintingTimer(Timer);

impl Default for PrintingTimer {
fn default() -> Self {

Replace the `bool` argument of `Timer` with `TimerMode` (#6247)

As mentioned in #2926, it's better to have an explicit type that clearly communicates the intent of the timer mode rather than an opaque boolean, which can only be understood by knowing the signature or looking up the documentation.

This also opens up a way to merge different timers, such as `Stopwatch`, and possibly future ones, such as `DiscreteStopwatch` and `DiscreteTimer` from #2683, into one struct.

Signed-off-by: Lena Milizé <me@lvmn.org>

# Objective

Fixes #2926.

## Solution

Introduce `TimerMode`, which replaces the `bool` argument of `Timer` constructors. The default value of `TimerMode` is `Once`.

---

## Changelog

### Added

- `TimerMode` enum, along with variants `TimerMode::Once` and `TimerMode::Repeating`

### Changed

- Replace `bool` argument of `Timer::new` and `Timer::from_seconds` with `TimerMode`

- Replace the `repeating: bool` field of `Timer` with `mode: TimerMode`

## Migration Guide

- Replace `Timer::new(duration, false)` with `Timer::new(duration, TimerMode::Once)`.

- Replace `Timer::new(duration, true)` with `Timer::new(duration, TimerMode::Repeating)`.

- Replace `Timer::from_seconds(seconds, false)` with `Timer::from_seconds(seconds, TimerMode::Once)`.

- Replace `Timer::from_seconds(seconds, true)` with `Timer::from_seconds(seconds, TimerMode::Repeating)`.

- Change `timer.repeating()` to `timer.mode() == TimerMode::Repeating`.
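The practical difference between the two modes can be shown with a small stand-in timer. This is a hypothetical sketch, not `bevy_time`'s `Timer`. `ToyTimer`, its `tick(seconds: f64)` signature and its fields are assumptions.

It models only the behavior the migration notes rely on:

- a `Repeating` timer, as used by `PrintingTimer`, reports `just_finished()` once per elapsed period;
- a `Once` timer reports it a single time and then stays finished.

```rust
/// Mirrors the two variants named in the changelog; `Once` is the default.
#[derive(Clone, Copy, Debug, Default, PartialEq, Eq)]
enum TimerMode {
    #[default]
    Once,
    Repeating,
}

/// Hypothetical, simplified timer (not bevy_time's implementation).
struct ToyTimer {
    duration: f64,
    elapsed: f64,
    mode: TimerMode,
    just_finished: bool,
}

impl ToyTimer {
    fn from_seconds(duration: f64, mode: TimerMode) -> Self {
        Self { duration, elapsed: 0.0, mode, just_finished: false }
    }

    fn tick(&mut self, delta: f64) {
        // A one-shot timer that has already finished does nothing further.
        if self.mode == TimerMode::Once && self.elapsed >= self.duration {
            self.just_finished = false;
            return;
        }
        self.elapsed += delta;
        self.just_finished = self.elapsed >= self.duration;
        if self.just_finished {
            match self.mode {
                // Clamp so the one-shot timer stays finished.
                TimerMode::Once => self.elapsed = self.duration,
                // Keep the overshoot so the period does not drift.
                TimerMode::Repeating => self.elapsed %= self.duration,
            }
        }
    }

    fn just_finished(&self) -> bool {
        self.just_finished
    }

    fn mode(&self) -> TimerMode {
        self.mode
    }
}

fn main() {
    // Analogous to `Timer::from_seconds(1.0, TimerMode::Repeating)` in `PrintingTimer`.
    let mut repeating = ToyTimer::from_seconds(1.0, TimerMode::Repeating);
    let mut once = ToyTimer::from_seconds(1.0, TimerMode::default());
    let (mut r, mut o) = (0, 0);
    // 12 ticks of 0.25 s = 3 s of simulated time.
    for _ in 0..12 {
        repeating.tick(0.25);
        once.tick(0.25);
        r += repeating.just_finished() as u32;
        o += once.just_finished() as u32;
    }
    println!("repeating ({:?}) fired {r} times, once ({:?}) fired {o} time(s)", repeating.mode(), once.mode());
}
```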

2022-10-17 13:47:01 +00:00

Self(Timer::from_seconds(1.0, TimerMode::Repeating))

2022-04-07 16:16:35 +00:00

}
}