2022-05-30 15:57:25 +00:00

|

|

|

//! A shader that renders a mesh multiple times in one draw call.

|

2022-05-16 13:53:20 +00:00

|

|

|

|

2022-01-05 19:43:11 +00:00

|

|

|

use bevy::{

|

Camera Driven Rendering (#4745)

This adds "high level camera driven rendering" to Bevy. The goal is to give users more control over what gets rendered (and where) without needing to deal with render logic. This will make scenarios like "render to texture", "multiple windows", "split screen", "2d on 3d", "3d on 2d", "pass layering", and more significantly easier.

Here is an [example of a 2d render sandwiched between two 3d renders (each from a different perspective)](https://gist.github.com/cart/4fe56874b2e53bc5594a182fc76f4915):

Users can now spawn a camera, point it at a RenderTarget (a texture or a window), and it will "just work".

Rendering to a second window is as simple as spawning a second camera and assigning it to a specific window id:

```rust

// main camera (main window)

commands.spawn_bundle(Camera2dBundle::default());

// second camera (other window)

commands.spawn_bundle(Camera2dBundle {

camera: Camera {

target: RenderTarget::Window(window_id),

..default()

},

..default()

});

```

Rendering to a texture is as simple as pointing the camera at a texture:

```rust

commands.spawn_bundle(Camera2dBundle {

camera: Camera {

target: RenderTarget::Texture(image_handle),

..default()

},

..default()

});

```

Cameras now have a "render priority", which controls the order they are drawn in. If you want to use a camera's output texture as a texture in the main pass, just set the priority to a number lower than the main pass camera (which defaults to `0`).

```rust

// main pass camera with a default priority of 0

commands.spawn_bundle(Camera2dBundle::default());

commands.spawn_bundle(Camera2dBundle {

camera: Camera {

target: RenderTarget::Texture(image_handle.clone()),

priority: -1,

..default()

},

..default()

});

commands.spawn_bundle(SpriteBundle {

texture: image_handle,

..default()

})

```

Priority can also be used to layer cameras on top of each other for the same RenderTarget. This is what "2d on top of 3d" looks like in the new system:

```rust

commands.spawn_bundle(Camera3dBundle::default());

commands.spawn_bundle(Camera2dBundle {

camera: Camera {

// this will render 2d entities "on top" of the default 3d camera's render

priority: 1,

..default()

},

..default()

});

```

There is no longer the concept of a global "active camera". Resources like `ActiveCamera<Camera2d>` and `ActiveCamera<Camera3d>` have been replaced with the camera-specific `Camera::is_active` field. This does put the onus on users to manage which cameras should be active.

Cameras are now assigned a single render graph as an "entry point", which is configured on each camera entity using the new `CameraRenderGraph` component. The old `PerspectiveCameraBundle` and `OrthographicCameraBundle` (generic on camera marker components like Camera2d and Camera3d) have been replaced by `Camera3dBundle` and `Camera2dBundle`, which set 3d and 2d default values for the `CameraRenderGraph` and projections.

```rust

// old 3d perspective camera

commands.spawn_bundle(PerspectiveCameraBundle::default())

// new 3d perspective camera

commands.spawn_bundle(Camera3dBundle::default())

```

```rust

// old 2d orthographic camera

commands.spawn_bundle(OrthographicCameraBundle::new_2d())

// new 2d orthographic camera

commands.spawn_bundle(Camera2dBundle::default())

```

```rust

// old 3d orthographic camera

commands.spawn_bundle(OrthographicCameraBundle::new_3d())

// new 3d orthographic camera

commands.spawn_bundle(Camera3dBundle {

projection: OrthographicProjection {

scale: 3.0,

scaling_mode: ScalingMode::FixedVertical,

..default()

}.into(),

..default()

})

```

Note that `Camera3dBundle` now uses a new `Projection` enum instead of hard-coding the projection into the type. There are a number of motivators for this change: the render graph is now a part of the bundle, the way "generic bundles" work in the Rust type system prevents nice `..default()` syntax, and changing projections at runtime is much easier with an enum (e.g. for editor scenarios). I'm open to discussing this choice, but I'm relatively certain we will all come to the same conclusion here. Camera2dBundle and Camera3dBundle are much clearer than being generic on marker components / using non-default constructors.

If you want to run a custom render graph on a camera, just set the `CameraRenderGraph` component:

```rust

commands.spawn_bundle(Camera3dBundle {

camera_render_graph: CameraRenderGraph::new(some_render_graph_name),

..default()

})

```

Just note that if the graph requires data from specific components to work (such as `Camera3d` config, which is provided in the `Camera3dBundle`), make sure the relevant components have been added.

Speaking of using components to configure graphs / passes, there are a number of new configuration options:

```rust

commands.spawn_bundle(Camera3dBundle {

camera_3d: Camera3d {

// overrides the default global clear color

clear_color: ClearColorConfig::Custom(Color::RED),

..default()

},

..default()

})

commands.spawn_bundle(Camera3dBundle {

camera_3d: Camera3d {

// disables clearing

clear_color: ClearColorConfig::None,

..default()

},

..default()

})

```

Expect to see more of the "graph configuration Components on Cameras" pattern in the future.

By popular demand, UI no longer requires a dedicated camera. `UiCameraBundle` has been removed. `Camera2dBundle` and `Camera3dBundle` now both default to rendering UI as part of their own render graphs. To disable UI rendering for a camera, disable it using the CameraUi component:

```rust

commands

.spawn_bundle(Camera3dBundle::default())

.insert(CameraUi {

is_enabled: false,

..default()

})

```

## Other Changes

* The separate clear pass has been removed. We should revisit this for things like sky rendering, but I think this PR should "keep it simple" until we're ready to properly support that (for code complexity and performance reasons). We can come up with the right design for a modular clear pass in a followup pr.

* I reorganized bevy_core_pipeline into Core2dPlugin and Core3dPlugin (and core_2d / core_3d modules). Everything is pretty much the same as before, just logically separate. I've moved relevant types (like Camera2d, Camera3d, Camera3dBundle, Camera2dBundle) into their relevant modules, which is what motivated this reorganization.

* I adapted the `scene_viewer` example (which relied on the ActiveCameras behavior) to the new system. I also refactored bits and pieces to be a bit simpler.

* All of the examples have been ported to the new camera approach. `render_to_texture` and `multiple_windows` are now _much_ simpler. I removed `two_passes` because it is less relevant with the new approach. If someone wants to add a new "layered custom pass with CameraRenderGraph" example, that might fill a similar niche. But I don't feel much pressure to add that in this pr.

* Cameras now have `target_logical_size` and `target_physical_size` fields, which makes finding the size of a camera's render target _much_ simpler. As a result, the `Assets<Image>` and `Windows` parameters were removed from `Camera::world_to_screen`, making that operation much more ergonomic.

* Render order ambiguities between cameras with the same target and the same priority now produce a warning. This accomplishes two goals:

1. Now that there is no "global" active camera, by default spawning two cameras will result in two renders (one covering the other). This would be a silent performance killer that would be hard to detect after the fact. By detecting ambiguities, we can provide a helpful warning when this occurs.

2. Render order ambiguities could result in unexpected / unpredictable render results. Resolving them makes sense.
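The ambiguity check described in this list can be sketched in plain Rust. This is only an illustration of the idea, not Bevy's implementation: `Target`, `CameraInfo`, and `find_ambiguities` are hypothetical names, and the sketch assumes that an ambiguity simply means two or more cameras sharing the same (target, priority) pair.

```rust
use std::collections::HashMap;

/// Hypothetical stand-in for a camera's render target.
#[derive(Clone, Copy, PartialEq, Eq, Hash, Debug)]
enum Target {
    Window(u32),
    Texture(u32),
}

/// Hypothetical, stripped-down view of the camera data relevant to ordering.
struct CameraInfo {
    target: Target,
    priority: isize,
}

/// Returns every (target, priority, camera count) group that contains more
/// than one camera, sorted by priority. Each such group is a render order
/// ambiguity that would deserve a warning.
fn find_ambiguities(cameras: &[CameraInfo]) -> Vec<(Target, isize, usize)> {
    let mut counts: HashMap<(Target, isize), usize> = HashMap::new();
    for camera in cameras {
        *counts.entry((camera.target, camera.priority)).or_insert(0) += 1;
    }
    let mut ambiguous: Vec<(Target, isize, usize)> = counts
        .into_iter()
        .filter(|&(_, count)| count > 1)
        .map(|((target, priority), count)| (target, priority, count))
        .collect();
    ambiguous.sort_by_key(|&(_, priority, _)| priority);
    ambiguous
}
```

Two cameras with the default priority on the same window would show up as one entry here, while a camera with a distinct priority or a different target would not.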

## Follow Up Work

* Per-Camera viewports, which will make it possible to render to a smaller area inside of a RenderTarget (great for something like splitscreen)

* Camera-specific MSAA config (should use the same "overriding" pattern used for ClearColor)

* Graph Based Camera Ordering: priorities are simple, but they make complicated ordering constraints harder to express. We should consider adopting a "graph based" camera ordering model with "before" and "after" relationships to other cameras (or build it "on top" of the priority system).

* Consider allowing graphs to run subgraphs from any nest level (aka a global namespace for graphs). Right now the 2d and 3d graphs each need their own UI subgraph, which feels "fine" in the short term. But being able to share subgraphs between other subgraphs seems valuable.

* Consider splitting `bevy_core_pipeline` into `bevy_core_2d` and `bevy_core_3d` packages. There's a shared "clear color" dependency here, which would need a new home.

2022-06-02 00:12:17 +00:00

|

|

|

core_pipeline::core_3d::Transparent3d,

|

2022-11-03 16:33:05 +00:00

|

|

|

ecs::{

|

|

|

|

|

query::QueryItem,

|

|

|

|

|

system::{lifetimeless::*, SystemParamItem},

|

|

|

|

|

},

|

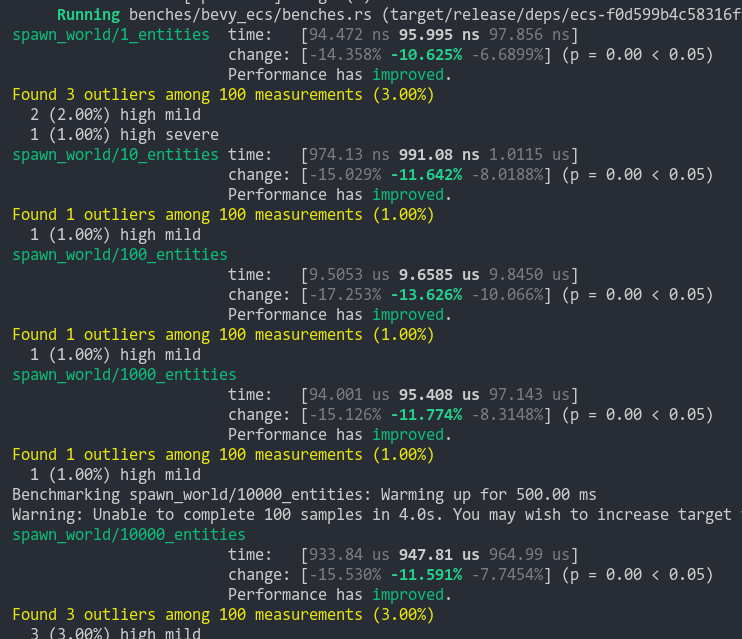

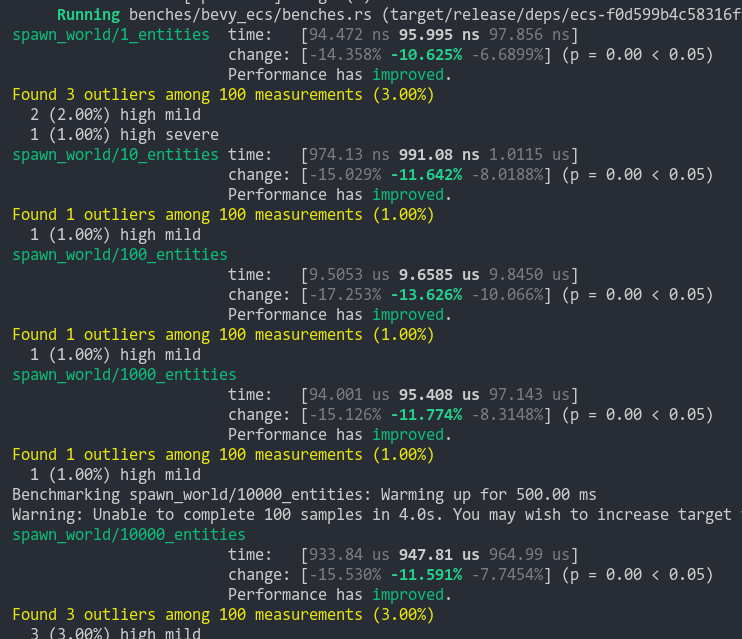

Use EntityHashMap<Entity, T> for render world entity storage for better performance (#9903)

# Objective

- Improve rendering performance, particularly by avoiding the large

system commands costs of using the ECS in the way that the render world

does.

## Solution

- Define `EntityHasher` that calculates a hash from the

`Entity.to_bits()` by `i | (i.wrapping_mul(0x517cc1b727220a95) << 32)`.

`0x517cc1b727220a95` is something like `u64::MAX / N` for N that gives a

value close to π and that works well for hashing. Thanks to @SkiFire13

for the suggestion and to @nicopap for alternative suggestions and

discussion. This approach comes from `rustc-hash` (a.k.a. `FxHasher`)

with some tweaks for the case of hashing an `Entity`. `FxHasher` and

`SeaHasher` were also tested but were significantly slower.

- Define `EntityHashMap` type that uses the `EntityHasher`

- Use `EntityHashMap<Entity, T>` for render world entity storage,

including:

- `RenderMaterialInstances` - contains the `AssetId<M>` of the material

associated with the entity. Also for 2D.

- `RenderMeshInstances` - contains mesh transforms, flags and properties

about mesh entities. Also for 2D.

- `SkinIndices` and `MorphIndices` - contains the skin and morph index

for an entity, respectively

- `ExtractedSprites`

- `ExtractedUiNodes`
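The hashing formula from the Solution list can be tried out with the standard library alone. The following is a minimal sketch, not the code from this PR: it hashes a bare `u64` standing in for `Entity::to_bits()`, and `EntityBitsHasher`, `EntityBitsMap`, and `hash_bits` are hypothetical names chosen for illustration.

```rust
use std::collections::HashMap;
use std::hash::{BuildHasherDefault, Hasher};

/// The multiplier quoted in the text above.
const MULTIPLIER: u64 = 0x517cc1b727220a95;

/// Hypothetical hasher that only supports a single `u64` key.
#[derive(Default)]
struct EntityBitsHasher {
    hash: u64,
}

impl Hasher for EntityBitsHasher {
    fn write(&mut self, _bytes: &[u8]) {
        // Assumption for this sketch: keys are always hashed via `write_u64`.
        panic!("this sketch only hashes a single u64 key");
    }

    fn write_u64(&mut self, i: u64) {
        // Keep the low 32 bits of the key as-is and mix the product into the
        // high 32 bits, exactly as the formula in the text describes.
        self.hash = i | (i.wrapping_mul(MULTIPLIER) << 32);
    }

    fn finish(&self) -> u64 {
        self.hash
    }
}

/// A `HashMap` keyed by raw entity bits that uses the hasher above.
type EntityBitsMap<V> = HashMap<u64, V, BuildHasherDefault<EntityBitsHasher>>;

/// Convenience helper that returns the hash of one key.
fn hash_bits(bits: u64) -> u64 {
    let mut hasher = EntityBitsHasher::default();
    hasher.write_u64(bits);
    hasher.finish()
}
```

Because `u64`'s `Hash` implementation calls `write_u64`, an `EntityBitsMap<V>` can be used like any other `HashMap`, for example `EntityBitsMap::<&str>::default()` followed by `insert` and `get`.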

## Benchmarks

All benchmarks have been conducted on an M1 Max connected to AC power.

The tests are run for 1500 frames. The 1000th frame is captured for

comparison to check for visual regressions. There were none.

### 2D Meshes

`bevymark --benchmark --waves 160 --per-wave 1000 --mode mesh2d`

#### `--ordered-z`

This test spawns the 2D meshes with z incrementing back to front, which

is the ideal arrangement allocation order as it matches the sorted

render order which means lookups have a high cache hit rate.

<img width="1112" alt="Screenshot 2023-09-27 at 07 50 45"

src="https://github.com/bevyengine/bevy/assets/302146/e140bc98-7091-4a3b-8ae1-ab75d16d2ccb">

-39.1% median frame time.

#### Random

This test spawns the 2D meshes with random z. This not only makes the

batching and transparent 2D pass lookups get a lot of cache misses, it

also currently means that the meshes are almost certain to not be

batchable.

<img width="1108" alt="Screenshot 2023-09-27 at 07 51 28"

src="https://github.com/bevyengine/bevy/assets/302146/29c2e813-645a-43ce-982a-55df4bf7d8c4">

-7.2% median frame time.

### 3D Meshes

`many_cubes --benchmark`

<img width="1112" alt="Screenshot 2023-09-27 at 07 51 57"

src="https://github.com/bevyengine/bevy/assets/302146/1a729673-3254-4e2a-9072-55e27c69f0fc">

-7.7% median frame time.

### Sprites

**NOTE: On `main` sprites are using `SparseSet<Entity, T>`!**

`bevymark --benchmark --waves 160 --per-wave 1000 --mode sprite`

#### `--ordered-z`

This test spawns the sprites with z incrementing back to front, which is

the ideal arrangement allocation order as it matches the sorted render

order which means lookups have a high cache hit rate.

<img width="1116" alt="Screenshot 2023-09-27 at 07 52 31"

src="https://github.com/bevyengine/bevy/assets/302146/bc8eab90-e375-4d31-b5cd-f55f6f59ab67">

+13.0% median frame time.

#### Random

This test spawns the sprites with random z. This makes the batching and

transparent 2D pass lookups get a lot of cache misses.

<img width="1109" alt="Screenshot 2023-09-27 at 07 53 01"

src="https://github.com/bevyengine/bevy/assets/302146/22073f5d-99a7-49b0-9584-d3ac3eac3033">

+0.6% median frame time.

### UI

**NOTE: On `main` UI is using `SparseSet<Entity, T>`!**

`many_buttons`

<img width="1111" alt="Screenshot 2023-09-27 at 07 53 26"

src="https://github.com/bevyengine/bevy/assets/302146/66afd56d-cbe4-49e7-8b64-2f28f6043d85">

+15.1% median frame time.

## Alternatives

- Cart originally suggested trying out `SparseSet<Entity, T>` and indeed

that is slightly faster under ideal conditions. However,

`PassHashMap<Entity, T>` has better worst case performance when data is

randomly distributed, rather than in sorted render order, and does not

have the worst-case memory usage of `SparseSet`'s dense `Vec<usize>`,

which maps from the `Entity` index to the sparse index into `Vec<T>`. This

dense `Vec` has to be as large as the largest Entity index used with the

`SparseSet`.

- I also tested `PassHashMap<u32, T>`, intending to use `Entity.index()`

as the key, but this proved to sometimes be slower and mostly no

different.

- The only outstanding approach that has not been implemented and tested

is to _not_ clear the render world of its entities each frame. That has

its own problems, though they could perhaps be solved.

- Performance-wise, if the entities and their component data were not

cleared, then they would incur table moves on spawn, and should not

thereafter, rather just their component data would be overwritten.

Ideally we would have a neat way of either updating data in-place via

`&mut T` queries, or inserting components if not present. This would

likely be quite cumbersome to have to remember to do everywhere, but

perhaps it only needs to be done in the more performance-sensitive

systems.

- The main problem to solve however is that we want to both maintain a

mapping between main world entities and render world entities, be able

to run the render app and world in parallel with the main app and world

for pipelined rendering, and at the same time be able to spawn entities

in the render world in such a way that those Entity ids do not collide

with those spawned in the main world. This is potentially quite

solvable, but could well be a lot of ECS work to do it in a way that

makes sense.
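The memory concern raised for `SparseSet` in the first alternative can be illustrated with a simplified sparse set. This is a hedged sketch rather than Bevy's `SparseSet`: `SimpleSparseSet` and its methods are hypothetical, and the only point is that the index lookup table must grow to the largest entity index ever inserted, regardless of how few values are stored.

```rust
/// Hypothetical, simplified sparse set keyed by an entity index.
struct SimpleSparseSet<T> {
    /// One slot per possible entity index, up to the largest index seen.
    index_to_slot: Vec<Option<usize>>,
    /// Tightly packed values.
    values: Vec<T>,
}

impl<T> SimpleSparseSet<T> {
    fn new() -> Self {
        Self {
            index_to_slot: Vec::new(),
            values: Vec::new(),
        }
    }

    fn insert(&mut self, entity_index: u32, value: T) {
        let i = entity_index as usize;
        // The lookup table has to cover every index up to `i`.
        if i >= self.index_to_slot.len() {
            self.index_to_slot.resize(i + 1, None);
        }
        match self.index_to_slot[i] {
            // Overwrite the value if this index is already present.
            Some(slot) => self.values[slot] = value,
            None => {
                self.index_to_slot[i] = Some(self.values.len());
                self.values.push(value);
            }
        }
    }

    fn get(&self, entity_index: u32) -> Option<&T> {
        let slot = (*self.index_to_slot.get(entity_index as usize)?)?;
        self.values.get(slot)
    }

    /// Length of the lookup table, which drives the worst-case memory usage.
    fn lookup_table_len(&self) -> usize {
        self.index_to_slot.len()
    }
}
```

Inserting a single value at index 1,000,000 leaves one stored value but a lookup table with 1,000,001 entries, which is the worst case the text refers to. A hash map only pays for the keys it actually holds.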

---

## Changelog

- Changed: Component data for entities to be drawn are no longer stored

on entities in the render world. Instead, data is stored in a

`EntityHashMap<Entity, T>` in various resources. This brings significant

performance benefits due to the way the render app clears entities every

frame. Resources of most interest are `RenderMeshInstances` and

`RenderMaterialInstances`, and their 2D counterparts.

## Migration Guide

Previously the render app extracted mesh entities and their component

data from the main world and stored them as entities and components in

the render world. Now they are extracted into essentially

`EntityHashMap<Entity, T>` where `T` are structs containing an

appropriate group of data. This means that while extract set systems

will continue to run extract queries against the main world they will

store their data in hash maps. Also, systems in later sets will either

need to look up entities in the available resources such as

`RenderMeshInstances`, or maintain their own `EntityHashMap<Entity, T>`

for their own data.

Before:

```rust

fn queue_custom(

material_meshes: Query<(Entity, &MeshTransforms, &Handle<Mesh>), With<InstanceMaterialData>>,

) {

...

for (entity, mesh_transforms, mesh_handle) in &material_meshes {

...

}

}

```

After:

```rust

fn queue_custom(

render_mesh_instances: Res<RenderMeshInstances>,

instance_entities: Query<Entity, With<InstanceMaterialData>>,

) {

...

for entity in &instance_entities {

let Some(mesh_instance) = render_mesh_instances.get(&entity) else { continue; };

// The mesh handle in `AssetId<Mesh>` form, and the `MeshTransforms` can now

// be found in `mesh_instance` which is a `RenderMeshInstance`

...

}

}

```

---------

Co-authored-by: robtfm <50659922+robtfm@users.noreply.github.com>

2023-09-27 10:28:28 +02:00

|

|

|

pbr::{

|

|

|

|

|

MeshPipeline, MeshPipelineKey, RenderMeshInstances, SetMeshBindGroup, SetMeshViewBindGroup,

|

|

|

|

|

},

|

2022-01-05 19:43:11 +00:00

|

|

|

prelude::*,

|

|

|

|

|

render::{

|

2022-05-30 18:36:03 +00:00

|

|

|

extract_component::{ExtractComponent, ExtractComponentPlugin},

|

2024-04-09 15:26:34 +02:00

|

|

|

mesh::{GpuBufferInfo, GpuMesh, MeshVertexBufferLayoutRef},

|

2022-01-05 19:43:11 +00:00

|

|

|

render_asset::RenderAssets,

|

|

|

|

|

render_phase::{

|

Implement GPU frustum culling. (#12889)

This commit implements opt-in GPU frustum culling, built on top of the

infrastructure in https://github.com/bevyengine/bevy/pull/12773. To

enable it on a camera, add the `GpuCulling` component to it. To

additionally disable CPU frustum culling, add the `NoCpuCulling`

component. Note that adding `GpuCulling` without `NoCpuCulling`

*currently* does nothing useful. The reason why `GpuCulling` doesn't

automatically imply `NoCpuCulling` is that I intend to follow this patch

up with GPU two-phase occlusion culling, and CPU frustum culling plus

GPU occlusion culling seems like a very commonly-desired mode.

Adding the `GpuCulling` component to a view puts that view into

*indirect mode*. This mode makes all drawcalls indirect, relying on the

mesh preprocessing shader to allocate instances dynamically. In indirect

mode, the `PreprocessWorkItem` `output_index` points not to a

`MeshUniform` instance slot but instead to a set of `wgpu`

`IndirectParameters`, from which it allocates an instance slot

dynamically if frustum culling succeeds. Batch building has been updated

to allocate and track indirect parameter slots, and the AABBs are now

supplied to the GPU as `MeshCullingData`.

A small amount of code relating to the frustum culling has been borrowed

from meshlets and moved into `maths.wgsl`. Note that standard Bevy

frustum culling uses AABBs, while meshlets use bounding spheres; this

means that not as much code can be shared as one might think.

This patch doesn't provide any way to perform GPU culling on shadow

maps, to avoid making this patch bigger than it already is. That can be

a followup.

## Changelog

### Added

* Frustum culling can now optionally be done on the GPU. To enable it,

add the `GpuCulling` component to a camera.

* To disable CPU frustum culling, add `NoCpuCulling` to a camera. Note

that `GpuCulling` doesn't automatically imply `NoCpuCulling`.

2024-04-28 07:50:00 -05:00

|

|

|

AddRenderCommand, DrawFunctions, PhaseItem, PhaseItemExtraIndex, RenderCommand,

|

2024-05-21 11:23:04 -07:00

|

|

|

RenderCommandResult, SetItemPipeline, TrackedRenderPass, ViewSortedRenderPhases,

|

2022-01-05 19:43:11 +00:00

|

|

|

},

|

|

|

|

|

render_resource::*,

|

|

|

|

|

renderer::RenderDevice,

|

2022-09-05 00:30:21 +00:00

|

|

|

view::{ExtractedView, NoFrustumCulling},

|

2023-03-17 18:45:34 -07:00

|

|

|

Render, RenderApp, RenderSet,

|

2022-01-05 19:43:11 +00:00

|

|

|

},

|

|

|

|

|

};

|

|

|

|

|

use bytemuck::{Pod, Zeroable};

|

|

|

|

|

|

|

|

|

|

fn main() {

|

|

|

|

|

App::new()

|

2023-06-21 22:51:03 +02:00

|

|

|

.add_plugins((DefaultPlugins, CustomMaterialPlugin))

|

2023-03-17 18:45:34 -07:00

|

|

|

.add_systems(Startup, setup)

|

2022-01-05 19:43:11 +00:00

|

|

|

.run();

|

|

|

|

|

}

|

|

|

|

|

|

|

|

|

|

fn setup(mut commands: Commands, mut meshes: ResMut<Assets<Mesh>>) {

|

Spawn now takes a Bundle (#6054)

# Objective

Now that we can consolidate Bundles and Components under a single insert (thanks to #2975 and #6039), almost 100% of world spawns now look like `world.spawn().insert((Some, Tuple, Here))`. Spawning an entity without any components is an extremely uncommon pattern, so it makes sense to give spawn the "first class" ergonomic api. This consolidated api should be made consistent across all spawn apis (such as World and Commands).

## Solution

All `spawn` apis (`World::spawn`, `Commands::spawn`, `ChildBuilder::spawn`, and `WorldChildBuilder::spawn`) now accept a bundle as input:

```rust

// before:

commands

.spawn()

.insert((A, B, C));

world

.spawn()

.insert((A, B, C));

// after

commands.spawn((A, B, C));

world.spawn((A, B, C));

```

All existing instances of `spawn_bundle` have been deprecated in favor of the new `spawn` api. A new `spawn_empty` has been added, replacing the old `spawn` api.

By allowing `world.spawn(some_bundle)` to replace `world.spawn().insert(some_bundle)`, this opened the door to removing the initial entity allocation in the "empty" archetype / table done in `spawn()` (and subsequent move to the actual archetype in `.insert(some_bundle)`).

This improves spawn performance by over 10%:

To take this measurement, I added a new `world_spawn` benchmark.

Unfortunately, optimizing `Commands::spawn` is slightly less trivial, as Commands expose the Entity id of spawned entities prior to actually spawning. Doing the optimization would (naively) require assurances that the `spawn(some_bundle)` command is applied before all other commands involving the entity (which would not necessarily be true, if memory serves). Optimizing `Commands::spawn` this way does feel possible, but it will require careful thought (and maybe some additional checks), which deserves its own PR. For now, it has the same performance characteristics of the current `Commands::spawn_bundle` on main.

**Note that 99% of this PR is simple renames and refactors. The only code that needs careful scrutiny is the new `World::spawn()` impl, which is relatively straightforward, but it has some new unsafe code (which reuses the battle-tested `BundleSpawner` code path).**

---

## Changelog

- All `spawn` apis (`World::spawn`, `Commands::spawn`, `ChildBuilder::spawn`, and `WorldChildBuilder::spawn`) now accept a bundle as input

- All instances of `spawn_bundle` have been deprecated in favor of the new `spawn` api

- World and Commands now have `spawn_empty()`, which is equivalent to the old `spawn()` behavior.

## Migration Guide

```rust

// Old (0.8):

commands

.spawn()

.insert_bundle((A, B, C));

// New (0.9)

commands.spawn((A, B, C));

// Old (0.8):

commands.spawn_bundle((A, B, C));

// New (0.9)

commands.spawn((A, B, C));

// Old (0.8):

let entity = commands.spawn().id();

// New (0.9)

let entity = commands.spawn_empty().id();

// Old (0.8)

let entity = world.spawn().id();

// New (0.9)

let entity = world.spawn_empty().id();

```

2022-09-23 19:55:54 +00:00

|

|

|

commands.spawn((

|

2024-02-08 20:01:34 +02:00

|

|

|

meshes.add(Cuboid::new(0.5, 0.5, 0.5)),

|

2022-12-25 00:39:29 +00:00

|

|

|

SpatialBundle::INHERITED_IDENTITY,

|

2022-01-05 19:43:11 +00:00

|

|

|

InstanceMaterialData(

|

|

|

|

|

(1..=10)

|

|

|

|

|

.flat_map(|x| (1..=10).map(move |y| (x as f32 / 10.0, y as f32 / 10.0)))

|

|

|

|

|

.map(|(x, y)| InstanceData {

|

|

|

|

|

position: Vec3::new(x * 10.0 - 5.0, y * 10.0 - 5.0, 0.0),

|

|

|

|

|

scale: 1.0,

|

Migrate from `LegacyColor` to `bevy_color::Color` (#12163)

# Objective

- As part of the migration process we need to a) see the end effect of

the migration on user ergonomics b) check for serious perf regressions

c) actually migrate the code

- To accomplish this, I'm going to attempt to migrate all of the

remaining user-facing usages of `LegacyColor` in one PR, being careful

to keep a clean commit history.

- Fixes #12056.

## Solution

I've chosen to use the polymorphic `Color` type as our standard

user-facing API.

- [x] Migrate `bevy_gizmos`.

- [x] Take `impl Into<Color>` in all `bevy_gizmos` APIs

- [x] Migrate sprites

- [x] Migrate UI

- [x] Migrate `ColorMaterial`

- [x] Migrate `MaterialMesh2D`

- [x] Migrate fog

- [x] Migrate lights

- [x] Migrate StandardMaterial

- [x] Migrate wireframes

- [x] Migrate clear color

- [x] Migrate text

- [x] Migrate gltf loader

- [x] Register color types for reflection

- [x] Remove `LegacyColor`

- [x] Make sure CI passes

Incidental improvements to ease migration:

- added `Color::srgba_u8`, `Color::srgba_from_array` and friends

- added `set_alpha`, `is_fully_transparent` and `is_fully_opaque` to the

`Alpha` trait

- add and immediately deprecate (lol) `Color::rgb` and friends in favor

of more explicit and consistent `Color::srgb`

- standardized on white and black for most example text colors

- added vector field traits to `LinearRgba`: ~~`Add`, `Sub`,

`AddAssign`, `SubAssign`,~~ `Mul<f32>` and `Div<f32>`. Multiplications

and divisions do not scale alpha. `Add` and `Sub` have been cut from

this PR.

- added `LinearRgba` and `Srgba` `RED/GREEN/BLUE`

- added `LinearRgba::to_f32_array` and `LinearRgba::to_u32`

## Migration Guide

Bevy's color types have changed! Wherever you used a

`bevy::render::Color`, a `bevy::color::Color` is used instead.

These are quite similar! Both are enums storing a color in a specific

color space (or to be more precise, using a specific color model).

However, each of the different color models now has its own type.

TODO...

- `Color::rgba`, `Color::rgb`, `Color::rgba_u8`, `Color::rgb_u8`,

`Color::rgb_from_array` are now `Color::srgba`, `Color::srgb`,

`Color::srgba_u8`, `Color::srgb_u8` and `Color::srgb_from_array`.

- `Color::set_a` and `Color::a` are now `Color::set_alpha` and

`Color::alpha`. These are part of the `Alpha` trait in `bevy_color`.

- `Color::is_fully_transparent` is now part of the `Alpha` trait in

`bevy_color`

- `Color::r`, `Color::set_r`, `Color::with_r` and the equivalents for

`g`, `b`, `h`, `s` and `l` have been removed due to causing silent

relatively expensive conversions. Convert your `Color` into the desired

color space, perform your operations there, and then convert it back

into a polymorphic `Color` enum.

- `Color::hex` is now `Srgba::hex`. Call `.into` or construct a

`Color::Srgba` variant manually to convert it.

- `WireframeMaterial`, `ExtractedUiNode`, `ExtractedDirectionalLight`,

`ExtractedPointLight`, `ExtractedSpotLight` and `ExtractedSprite` now

store a `LinearRgba`, rather than a polymorphic `Color`

- `Color::rgb_linear` and `Color::rgba_linear` are now

`Color::linear_rgb` and `Color::linear_rgba`

- The various CSS color constants are no longer stored directly on

`Color`. Instead, they're defined in the `Srgba` color space, and

accessed via `bevy::color::palettes::css`. Call `.into()` on them to

convert them into a `Color` for quick debugging use, and consider using

the much prettier `tailwind` palette for prototyping.

- The `LIME_GREEN` color has been renamed to `LIMEGREEN` to comply with

the standard naming.

- Vector field arithmetic operations on `Color` (add, subtract, multiply

and divide by a f32) have been removed. Instead, convert your colors

into `LinearRgba` space, and perform your operations explicitly there.

This is particularly relevant when working with emissive or HDR colors,

whose color channel values are routinely outside of the ordinary 0 to 1

range.

- `Color::as_linear_rgba_f32` has been removed. Call

`LinearRgba::to_f32_array` instead, converting if needed.

- `Color::as_linear_rgba_u32` has been removed. Call

`LinearRgba::to_u32` instead, converting if needed.

- Several other color conversion methods to transform LCH or HSL colors

into float arrays or `Vec` types have been removed. Please reimplement

these externally or open a PR to re-add them if you found them

particularly useful.

- Various methods on `Color` such as `rgb` or `hsl` to convert the color

into a specific color space have been removed. Convert into

`LinearRgba`, then to the color space of your choice.

- Various implicitly-converting color value methods on `Color` such as

`r`, `g`, `b` or `h` have been removed. Please convert it into the color

space of your choice, then check these properties.

- `Color` no longer implements `AsBindGroup`. Store a `LinearRgba`

internally instead to avoid conversion costs.

---------

Co-authored-by: Alice Cecile <alice.i.cecil@gmail.com>

Co-authored-by: Afonso Lage <lage.afonso@gmail.com>

Co-authored-by: Rob Parrett <robparrett@gmail.com>

Co-authored-by: Zachary Harrold <zac@harrold.com.au>

2024-02-29 14:35:12 -05:00

|

|

|

color: LinearRgba::from(Color::hsla(x * 360., y, 0.5, 1.0)).to_f32_array(),

|

2022-01-05 19:43:11 +00:00

|

|

|

})

|

|

|

|

|

.collect(),

|

|

|

|

|

),

|

2022-01-17 22:55:44 +00:00

|

|

|

// NOTE: Frustum culling is done based on the Aabb of the Mesh and the GlobalTransform.

|

|

|

|

|

// As the cube is at the origin, if its Aabb moves outside the view frustum, all the

|

|

|

|

|

// instanced cubes will be culled.

|

|

|

|

|

// The InstanceMaterialData contains the 'GlobalTransform' information for this custom

|

|

|

|

|

// instancing, and that is not taken into account with the built-in frustum culling.

|

|

|

|

|

// We must disable the built-in frustum culling by adding the `NoFrustumCulling` marker

|

|

|

|

|

// component to avoid incorrect culling.

|

|

|

|

|

NoFrustumCulling,

|

2022-01-05 19:43:11 +00:00

|

|

|

));

|

|

|

|

|

|

|

|

|

|

// camera

|

Spawn now takes a Bundle (#6054)

# Objective

Now that we can consolidate Bundles and Components under a single insert (thanks to #2975 and #6039), almost 100% of world spawns now look like `world.spawn().insert((Some, Tuple, Here))`. Spawning an entity without any components is an extremely uncommon pattern, so it makes sense to give spawn the "first class" ergonomic api. This consolidated api should be made consistent across all spawn apis (such as World and Commands).

## Solution

All `spawn` apis (`World::spawn`, `Commands:;spawn`, `ChildBuilder::spawn`, and `WorldChildBuilder::spawn`) now accept a bundle as input:

```rust

// before:

commands

.spawn()

.insert((A, B, C));

world

.spawn()

.insert((A, B, C);

// after

commands.spawn((A, B, C));

world.spawn((A, B, C));

```

All existing instances of `spawn_bundle` have been deprecated in favor of the new `spawn` api. A new `spawn_empty` has been added, replacing the old `spawn` api.

By allowing `world.spawn(some_bundle)` to replace `world.spawn().insert(some_bundle)`, this opened the door to removing the initial entity allocation in the "empty" archetype / table done in `spawn()` (and subsequent move to the actual archetype in `.insert(some_bundle)`).

This improves spawn performance by over 10%:

To take this measurement, I added a new `world_spawn` benchmark.

Unfortunately, optimizing `Commands::spawn` is slightly less trivial, as Commands expose the Entity id of spawned entities prior to actually spawning. Doing the optimization would (naively) require assurances that the `spawn(some_bundle)` command is applied before all other commands involving the entity (which would not necessarily be true, if memory serves). Optimizing `Commands::spawn` this way does feel possible, but it will require careful thought (and maybe some additional checks), which deserves its own PR. For now, it has the same performance characteristics of the current `Commands::spawn_bundle` on main.

**Note that 99% of this PR is simple renames and refactors. The only code that needs careful scrutiny is the new `World::spawn()` impl, which is relatively straightforward, but it has some new unsafe code (which re-uses battle tested BundlerSpawner code path).**

---

## Changelog

- All `spawn` apis (`World::spawn`, `Commands::spawn`, `ChildBuilder::spawn`, and `WorldChildBuilder::spawn`) now accept a bundle as input

- All instances of `spawn_bundle` have been deprecated in favor of the new `spawn` api

- World and Commands now have `spawn_empty()`, which is equivalent to the old `spawn()` behavior.

## Migration Guide

```rust

// Old (0.8):

commands

.spawn()

.insert_bundle((A, B, C));

// New (0.9)

commands.spawn((A, B, C));

// Old (0.8):

commands.spawn_bundle((A, B, C));

// New (0.9)

commands.spawn((A, B, C));

// Old (0.8):

let entity = commands.spawn().id();

// New (0.9)

let entity = commands.spawn_empty().id();

// Old (0.8)

let entity = world.spawn().id();

// New (0.9)

let entity = world.spawn_empty().id();

```

2022-09-23 19:55:54 +00:00

|

|

|

commands.spawn(Camera3dBundle {

|

2022-01-05 19:43:11 +00:00

|

|

|

transform: Transform::from_xyz(0.0, 0.0, 15.0).looking_at(Vec3::ZERO, Vec3::Y),

|

2022-03-01 20:52:09 +00:00

|

|

|

..default()

|

2022-01-05 19:43:11 +00:00

|

|

|

});

|

|

|

|

|

}

|

|

|

|

|

|

bevy_derive: Add derives for `Deref` and `DerefMut` (#4328)

# Objective

A common pattern in Rust is the [newtype](https://doc.rust-lang.org/rust-by-example/generics/new_types.html). This is an especially useful pattern in Bevy as it allows us to give common/foreign types different semantics (such as allowing it to implement `Component` or `FromWorld`) or to simply treat them as a "new type" (clever). For example, it allows us to wrap a common `Vec<String>` and do things like:

```rust

#[derive(Component)]

struct Items(Vec<String>);

fn give_sword(query: Query<&mut Items>) {

query.single_mut().0.push(String::from("Flaming Poisoning Raging Sword of Doom"));

}

```

> We could then define another struct that wraps `Vec<String>` without anything clashing in the query.

However, one of the worst parts of this pattern is the ugly `.0` we have to write in order to access the type we actually care about. This is why people often implement `Deref` and `DerefMut` in order to get around this.

Since it's such a common pattern, especially for Bevy, it makes sense to add a derive macro to automatically add those implementations.

## Solution

Added a derive macro for `Deref` and another for `DerefMut` (both exported into the prelude). This works on all structs (including tuple structs) as long as they only contain a single field:

```rust

#[derive(Deref)]

struct Foo(String);

#[derive(Deref, DerefMut)]

struct Bar {

name: String,

}

```

This allows us to then remove that pesky `.0`:

```rust

#[derive(Component, Deref, DerefMut)]

struct Items(Vec<String>);

fn give_sword(query: Query<&mut Items>) {

query.single_mut().push(String::from("Flaming Poisoning Raging Sword of Doom"));

}

```
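For readers curious what the derives save, the following is a hand-written sketch of roughly equivalent impls using only the standard library. The exact code the macros generate is not shown in this PR, so the "expansion" here is an assumption; `Items` mirrors the example above without the `Component` derive, and `give_sword` takes the newtype directly instead of a `Query`.

```rust
use std::ops::{Deref, DerefMut};

// Newtype from the example above, minus Bevy's `Component` derive.
struct Items(Vec<String>);

// Hand-written stand-in for `#[derive(Deref)]` (assumed shape of the expansion).
impl Deref for Items {
    type Target = Vec<String>;

    fn deref(&self) -> &Self::Target {
        &self.0
    }
}

// Hand-written stand-in for `#[derive(DerefMut)]` (assumed shape of the expansion).
impl DerefMut for Items {
    fn deref_mut(&mut self) -> &mut Self::Target {
        &mut self.0
    }
}

// Auto-deref lets us call `Vec` methods on `Items` directly, with no `.0`.
fn give_sword(items: &mut Items) -> usize {
    items.push(String::from("Flaming Poisoning Raging Sword of Doom"));
    items.len()
}
```

Because `DerefMut` requires `Deref`, a type deriving `DerefMut` also needs `Deref`, which is why `Bar` above derives both.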

### Alternatives

There are other alternatives to this such as by using the [`derive_more`](https://crates.io/crates/derive_more) crate. However, it doesn't seem like we need an entire crate just yet since we only need `Deref` and `DerefMut` (for now).

### Considerations

One thing to consider is that the Rust std library recommends _not_ using `Deref` and `DerefMut` for things like this: "`Deref` should only be implemented for smart pointers to avoid confusion" ([reference](https://doc.rust-lang.org/std/ops/trait.Deref.html)). Personally, I believe it makes sense to use it in the way described above, but others may disagree.

### Additional Context

Discord: https://discord.com/channels/691052431525675048/692572690833473578/956648422163746827 (controversiality discussed [here](https://discord.com/channels/691052431525675048/692572690833473578/956711911481835630))

---

## Changelog

- Add `Deref` derive macro (exported to prelude)

- Add `DerefMut` derive macro (exported to prelude)

- Updated most newtypes in examples to use one or both derives

Co-authored-by: MrGVSV <49806985+MrGVSV@users.noreply.github.com>

2022-03-29 02:10:06 +00:00

|

|

|

#[derive(Component, Deref)]

|

2022-01-05 19:43:11 +00:00

|

|

|

struct InstanceMaterialData(Vec<InstanceData>);

|

2022-05-16 13:53:20 +00:00

|

|

|

|

2022-01-05 19:43:11 +00:00

|

|

|

impl ExtractComponent for InstanceMaterialData {

|

2024-01-22 10:01:55 -05:00

|

|

|

type QueryData = &'static InstanceMaterialData;

|

|

|

|

|

type QueryFilter = ();

|

2022-11-21 13:19:44 +00:00

|

|

|

type Out = Self;

|

2022-01-05 19:43:11 +00:00

|

|

|

|

2024-01-22 10:01:55 -05:00

|

|

|

fn extract_component(item: QueryItem<'_, Self::QueryData>) -> Option<Self> {

|

2022-11-21 13:19:44 +00:00

|

|

|

Some(InstanceMaterialData(item.0.clone()))

|

2022-01-05 19:43:11 +00:00

|

|

|

}

|

|

|

|

|

}

|

|

|

|

|

|

2024-02-03 22:40:55 +01:00

|

|

|

struct CustomMaterialPlugin;

|

2022-01-05 19:43:11 +00:00

|

|

|

|

|

|

|

|

impl Plugin for CustomMaterialPlugin {

|

|

|

|

|

fn build(&self, app: &mut App) {

|

2023-06-21 22:51:03 +02:00

|

|

|

app.add_plugins(ExtractComponentPlugin::<InstanceMaterialData>::default());

|

2022-01-05 19:43:11 +00:00

|

|

|

app.sub_app_mut(RenderApp)

|

|

|

|

|

.add_render_command::<Transparent3d, DrawCustom>()

|

Mesh vertex buffer layouts (#3959)

This PR makes a number of changes to how meshes and vertex attributes are handled, with the goal of enabling easy and flexible custom vertex attributes:

* Reworks the `Mesh` type to use the newly added `VertexAttribute` internally

* `VertexAttribute` defines the name, a unique `VertexAttributeId`, and a `VertexFormat`

* `VertexAttributeId` is used to produce consistent sort orders for vertex buffer generation, replacing the more expensive and often surprising "name based sorting"

* Meshes can be used to generate a `MeshVertexBufferLayout`, which defines the layout of the gpu buffer produced by the mesh. `MeshVertexBufferLayouts` can then be used to generate actual `VertexBufferLayouts` according to the requirements of a specific pipeline. This decoupling of "mesh layout" vs "pipeline vertex buffer layout" is what enables custom attributes. We don't need to standardize _mesh layouts_ or contort meshes to meet the needs of a specific pipeline. As long as the mesh has what the pipeline needs, it will work transparently.

* Mesh-based pipelines now specialize on `&MeshVertexBufferLayout` via the new `SpecializedMeshPipeline` trait (which behaves like `SpecializedPipeline`, but adds `&MeshVertexBufferLayout`). The integrity of the pipeline cache is maintained because the `MeshVertexBufferLayout` is treated as part of the key (which is fully abstracted from implementers of the trait ... no need to add any additional info to the specialization key).

* Hashing `MeshVertexBufferLayout` is too expensive to do for every entity, every frame. To make this scalable, I added a generalized "pre-hashing" solution to `bevy_utils`: `Hashed<T>` keys and `PreHashMap<K, V>` (which uses `Hashed<T>` internally). Why didn't I just do the quick and dirty in-place "pre-compute hash and use that u64 as a key in a hashmap" that we've done in the past? Because it's wrong! Hashes by themselves aren't enough because two different values can produce the same hash. Re-hashing a hash is even worse! I decided to build a generalized solution because this pattern has come up in the past and we've chosen to do the wrong thing. Now we can do the right thing! This did unfortunately require pulling in `hashbrown` and using that in `bevy_utils`, because avoiding re-hashes requires the `raw_entry_mut` api, which isn't stabilized yet (and may never be ... `entry_ref` has favor now, but also isn't available yet). If std's HashMap ever provides the tools we need, we can move back to that. Note that adding `hashbrown` doesn't increase our dependency count because it was already in our tree. I will probably break these changes out into their own PR.

* Specializing on `MeshVertexBufferLayout` has one non-obvious behavior: it can produce identical pipelines for two different MeshVertexBufferLayouts. To optimize the number of active pipelines / reduce re-binds while drawing, I de-duplicate pipelines post-specialization using the final `VertexBufferLayout` as the key. For example, consider a pipeline that needs the layout `(position, normal)` and is specialized using two meshes: `(position, normal, uv)` and `(position, normal, other_vec2)`. If both of these meshes result in `(position, normal)` specializations, we can use the same pipeline! Now we do. Cool!
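The pre-hashing idea from the list above can be sketched with the standard library alone. This is not the `bevy_utils` implementation: the PR uses `hashbrown`'s `raw_entry_mut`, whereas this sketch swaps in a pass-through hasher on std's `HashMap`. The field names, `PassThroughHasher`, and `lookup_demo` are assumptions made for illustration.

```rust
use std::collections::hash_map::DefaultHasher;
use std::collections::HashMap;
use std::hash::{BuildHasherDefault, Hash, Hasher};

// A value stored together with its hash, which is computed once up front.
// Equality still compares `value`, so colliding hashes stay distinct keys.
#[derive(PartialEq, Eq)]
struct Hashed<T> {
    hash: u64,
    value: T,
}

impl<T: Hash> Hashed<T> {
    fn new(value: T) -> Self {
        let mut hasher = DefaultHasher::new();
        value.hash(&mut hasher);
        Self { hash: hasher.finish(), value }
    }
}

// Hashing a `Hashed<T>` only feeds the cached u64; `value` is not re-hashed.
impl<T> Hash for Hashed<T> {
    fn hash<H: Hasher>(&self, state: &mut H) {
        state.write_u64(self.hash);
    }
}

// Hasher that returns the u64 it was given instead of hashing it again.
#[derive(Default)]
struct PassThroughHasher(u64);

impl Hasher for PassThroughHasher {
    fn write(&mut self, _bytes: &[u8]) {
        panic!("PassThroughHasher only expects u64 writes");
    }

    fn write_u64(&mut self, value: u64) {
        self.0 = value;
    }

    fn finish(&self) -> u64 {
        self.0
    }
}

type PreHashMap<K, V> = HashMap<Hashed<K>, V, BuildHasherDefault<PassThroughHasher>>;

// Looks up one layout that was inserted and one that was not.
fn lookup_demo() -> (Option<u32>, Option<u32>) {
    let mut pipelines: PreHashMap<Vec<&str>, u32> = PreHashMap::default();
    pipelines.insert(Hashed::new(vec!["position", "normal", "uv"]), 7);
    let hit = pipelines.get(&Hashed::new(vec!["position", "normal", "uv"])).copied();
    let miss = pipelines.get(&Hashed::new(vec!["position", "normal"])).copied();
    (hit, miss)
}
```

Keeping the value alongside the hash is what separates this from using the bare `u64` as the key: two different values with the same hash remain two different entries.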

To briefly illustrate, this is what the relevant section of `MeshPipeline`'s specialization code looks like now:

```rust

impl SpecializedMeshPipeline for MeshPipeline {

type Key = MeshPipelineKey;

fn specialize(

&self,

key: Self::Key,

layout: &MeshVertexBufferLayout,

) -> RenderPipelineDescriptor {

let mut vertex_attributes = vec![

Mesh::ATTRIBUTE_POSITION.at_shader_location(0),

Mesh::ATTRIBUTE_NORMAL.at_shader_location(1),

Mesh::ATTRIBUTE_UV_0.at_shader_location(2),

];

let mut shader_defs = Vec::new();

if layout.contains(Mesh::ATTRIBUTE_TANGENT) {

shader_defs.push(String::from("VERTEX_TANGENTS"));

vertex_attributes.push(Mesh::ATTRIBUTE_TANGENT.at_shader_location(3));

}

let vertex_buffer_layout = layout

.get_layout(&vertex_attributes)

.expect("Mesh is missing a vertex attribute");

```

Notice that this is _much_ simpler than it was before. And now any mesh with any layout can be used with this pipeline, provided it has vertex positions, normals, and uvs. We even got to remove `HAS_TANGENTS` from MeshPipelineKey and `has_tangents` from `GpuMesh`, because that information is redundant with `MeshVertexBufferLayout`.

This is still a draft because I still need to:

* Add more docs

* Experiment with adding error handling to mesh pipeline specialization (which would print errors at runtime when a mesh is missing a vertex attribute required by a pipeline). If it doesn't tank perf, we'll keep it.

* Consider breaking out the PreHash / hashbrown changes into a separate PR.

* Add an example illustrating this change

* Verify that the "mesh-specialized pipeline de-duplication code" works properly

Please don't yell at me for not doing these things yet :) Just trying to get this in people's hands asap.

Alternative to #3120

Fixes #3030

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

2022-02-23 23:21:13 +00:00

|

|

|

.init_resource::<SpecializedMeshPipelines<CustomPipeline>>()

|

2023-03-17 18:45:34 -07:00

|

|

|

.add_systems(

|

|

|

|

|

Render,

|

|

|

|

|

(

|

Reorder render sets, refactor bevy_sprite to take advantage (#9236)

This is a continuation of this PR: #8062

# Objective

- Reorder render schedule sets to allow data preparation when phase item

order is known to support improved batching

- Part of the batching/instancing etc plan from here:

https://github.com/bevyengine/bevy/issues/89#issuecomment-1379249074

- The original idea came from @inodentry and proved to be a good one.

Thanks!

- Refactor `bevy_sprite` and `bevy_ui` to take advantage of the new

ordering

## Solution

- Move `Prepare` and `PrepareFlush` after `PhaseSortFlush`

- Add a `PrepareAssets` set that runs in parallel with other systems and

sets in the render schedule.

- Put prepare_assets systems in the `PrepareAssets` set

- If explicit dependencies are needed on Mesh or Material RenderAssets

then depend on the appropriate system.

- Add `ManageViews` and `ManageViewsFlush` sets between

`ExtractCommands` and Queue

- Move `queue_mesh*_bind_group` to the Prepare stage

- Rename them to `prepare_`

- Put systems that prepare resources (buffers, textures, etc.) into a

`PrepareResources` set inside `Prepare`

- Put the `prepare_..._bind_group` systems into a `PrepareBindGroup` set

after `PrepareResources`

- Move `prepare_lights` to the `ManageViews` set

- `prepare_lights` creates views and this must happen before `Queue`

- This system needs refactoring to stop handling all responsibilities

- Gather lights, sort, and create shadow map views. Store sorted light

entities in a resource

- Remove `BatchedPhaseItem`

- Replace `batch_range` with `batch_size` representing how many items to

skip after rendering the item or to skip the item entirely if

`batch_size` is 0.

- `queue_sprites` has been split into `queue_sprites` for queueing phase

items and `prepare_sprites` for batching after the `PhaseSort`

- `PhaseItem`s are still inserted in `queue_sprites`

- After sorting adjacent compatible sprite phase items are accumulated

into `SpriteBatch` components on the first entity of each batch,

containing a range of vertex indices. The associated `PhaseItem`'s

`batch_size` is updated appropriately.

- `SpriteBatch` items are then drawn skipping over the other items in

the batch based on the value in `batch_size`

- A very similar refactor was performed on `bevy_ui`
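The `batch_size` rule described above can be sketched as a small loop. This is an illustrative model, not the render phase code from this PR: `Item` and `drawn_items` are hypothetical, and the sketch assumes `batch_size` counts the drawn item itself, so a value of 1 draws a single item and advances by one.

```rust
// Hypothetical phase item: a label plus its batch size.
struct Item {
    name: &'static str,
    batch_size: usize,
}

// Returns the names of the items whose draw function would run.
fn drawn_items(items: &[Item]) -> Vec<&'static str> {
    let mut drawn = Vec::new();
    let mut index = 0;
    while index < items.len() {
        let item = &items[index];
        if item.batch_size == 0 {
            // A batch size of 0 means the item is skipped entirely.
            index += 1;
        } else {
            // Draw once, then jump over the rest of the batch
            // (assumption: `batch_size` includes the drawn item).
            drawn.push(item.name);
            index += item.batch_size;
        }
    }
    drawn
}
```

With items `a` (batch size 3), `b` (0), `c` (0), `d` (1), `e` (0), only `a` and `d` are drawn under these assumptions.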

---

## Changelog

Changed:

- Reordered and reworked render app schedule sets. The main change is

that data is extracted, queued, sorted, and then prepared when the order

of data is known.

- Refactor `bevy_sprite` and `bevy_ui` to take advantage of the

reordering.

## Migration Guide

- Assets such as materials and meshes should now be created in

`PrepareAssets` e.g. `prepare_assets<Mesh>`

- Queueing entities to `RenderPhase`s continues to be done in `Queue`

e.g. `queue_sprites`

- Preparing resources (textures, buffers, etc.) should now be done in

`PrepareResources`, e.g. `prepare_prepass_textures`,

`prepare_mesh_uniforms`

- Prepare bind groups should now be done in `PrepareBindGroups` e.g.

`prepare_mesh_bind_group`

- Any batching or instancing can now be done in `Prepare` where the

order of the phase items is known e.g. `prepare_sprites`

## Next Steps

- Introduce some generic mechanism to ensure items that can be batched

are grouped in the phase item order, currently you could easily have

`[sprite at z 0, mesh at z 0, sprite at z 0]` preventing batching.

- Investigate improved orderings for building the MeshUniform buffer

- Implementing batching across the rest of bevy

---------

Co-authored-by: Robert Swain <robert.swain@gmail.com>

Co-authored-by: robtfm <50659922+robtfm@users.noreply.github.com>

2023-08-27 07:33:49 -07:00

|

|

|

queue_custom.in_set(RenderSet::QueueMeshes),

|

|

|

|

|

prepare_instance_buffers.in_set(RenderSet::PrepareResources),

|

2023-03-17 18:45:34 -07:00

|

|

|

),

|

|

|

|

|

);

|

2022-01-05 19:43:11 +00:00

|

|

|

}

|

Webgpu support (#8336)

# Objective

- Support WebGPU

- alternative to #5027 that doesn't need any async / await

- fixes #8315

- Surprise fix #7318

## Solution

### For async renderer initialisation

- Update the plugin lifecycle:

- app builds the plugin

- calls `plugin.build`

- registers the plugin

- app starts the event loop

- event loop waits for `ready` of all registered plugins in the same

order

- returns `true` by default

- then call all `finish` then all `cleanup` in the same order as

registered

- then execute the schedule
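The ordering above can be modelled with a toy trait. This sketch is not Bevy's `App` or `Plugin` code: the trait here only mirrors the hook names, while `Named`, `run_lifecycle`, and the log of strings are hypothetical helpers used to make the order observable.

```rust
// Toy stand-in for the plugin trait; only the lifecycle hooks are modelled.
trait Plugin {
    fn build(&self, log: &mut Vec<String>);

    // Returns `true` by default, as described above.
    fn ready(&self) -> bool {
        true
    }

    fn finish(&self, _log: &mut Vec<String>) {}

    fn cleanup(&self, _log: &mut Vec<String>) {}
}

// Hypothetical plugin that records each hook it receives.
struct Named(&'static str);

impl Plugin for Named {
    fn build(&self, log: &mut Vec<String>) {
        log.push(format!("build {}", self.0));
    }

    fn finish(&self, log: &mut Vec<String>) {
        log.push(format!("finish {}", self.0));
    }

    fn cleanup(&self, log: &mut Vec<String>) {
        log.push(format!("cleanup {}", self.0));
    }
}

// Hypothetical driver that follows the order listed above.
fn run_lifecycle(plugins: &[Box<dyn Plugin>]) -> Vec<String> {
    let mut log = Vec::new();
    for plugin in plugins {
        plugin.build(&mut log);
    }
    // A real event loop would keep polling; this sketch just spins until ready.
    while !plugins.iter().all(|plugin| plugin.ready()) {
        std::thread::yield_now();
    }
    for plugin in plugins {
        plugin.finish(&mut log);
    }
    for plugin in plugins {
        plugin.cleanup(&mut log);
    }
    log.push(String::from("run schedule"));
    log
}
```

The point of the split is that every `build` has run before any `finish`, so a plugin's `finish` can rely on resources that another plugin only makes available once it is ready.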

In the case of the renderer, to avoid anything async:

- building the renderer plugin creates a detached task that will send

back the initialised renderer through a mutex in a resource

- `ready` will wait for the renderer to be present in the resource

- `finish` will take that renderer and place it in the expected

resources by other plugins

- other plugins (that expect the renderer to be available) `finish` are

called and they are able to set up their pipelines

- `cleanup` is called; the only custom implementation is still the one for pipelined rendering

### For WebGPU support

- update the `build-wasm-example` script to support passing `--api

webgpu` that will build the example with WebGPU support

- the `webgl2` feature was always enabled when building for wasm. It's now

in the default feature list and enabled on all platforms, so checks for

this feature must also check that the target_arch is `wasm32`

---

## Migration Guide

- `Plugin::setup` has been renamed `Plugin::cleanup`

- `Plugin::finish` has been added, and plugins adding pipelines should

do it in this function instead of `Plugin::build`

```rust

// Before

impl Plugin for MyPlugin {

fn build(&self, app: &mut App) {

app.init_resource::<MyResource>()

.add_systems(Update, my_system);

let render_app = match app.get_sub_app_mut(RenderApp) {

Ok(render_app) => render_app,

Err(_) => return,

};

render_app

.init_resource::<RenderResourceNeedingDevice>()

.init_resource::<OtherRenderResource>();

}

}

// After

impl Plugin for MyPlugin {

fn build(&self, app: &mut App) {

app.init_resource::<MyResource>()

.add_systems(Update, my_system);

let render_app = match app.get_sub_app_mut(RenderApp) {

Ok(render_app) => render_app,

Err(_) => return,

};

render_app

.init_resource::<OtherRenderResource>();

}

fn finish(&self, app: &mut App) {

let render_app = match app.get_sub_app_mut(RenderApp) {

Ok(render_app) => render_app,

Err(_) => return,

};

render_app

.init_resource::<RenderResourceNeedingDevice>();

}

}

```

2023-05-05 00:07:57 +02:00

|

|

|

|

|

|

|

|

fn finish(&self, app: &mut App) {

|

|

|

|

|

app.sub_app_mut(RenderApp).init_resource::<CustomPipeline>();

|

|

|

|

|

}

|

2022-01-05 19:43:11 +00:00

|

|

|

}

|

|

|

|

|

|

|

|

|

|

#[derive(Clone, Copy, Pod, Zeroable)]

|

|

|

|

|

#[repr(C)]

|

|

|

|

|

struct InstanceData {

|

|

|

|

|

position: Vec3,

|

|

|

|

|

scale: f32,

|

|

|

|

|

color: [f32; 4],

|

|

|

|

|

}

|

|

|

|

|

|

Mesh vertex buffer layouts (#3959)


2022-02-23 23:21:13 +00:00

|

|

|

#[allow(clippy::too_many_arguments)]

|

2022-01-05 19:43:11 +00:00

|

|

|

fn queue_custom(

|

|

|

|

|

transparent_3d_draw_functions: Res<DrawFunctions<Transparent3d>>,

|

|

|

|

|

custom_pipeline: Res<CustomPipeline>,

|

|

|

|

|

msaa: Res<Msaa>,

|

Mesh vertex buffer layouts (#3959)


2022-02-23 23:21:13 +00:00

|

|

|

mut pipelines: ResMut<SpecializedMeshPipelines<CustomPipeline>>,

|

2023-01-16 15:41:14 +00:00

|

|

|

pipeline_cache: Res<PipelineCache>,

|

2024-04-09 15:26:34 +02:00

|

|

|

meshes: Res<RenderAssets<GpuMesh>>,

|

Use EntityHashMap<Entity, T> for render world entity storage for better performance (#9903)

# Objective

- Improve rendering performance, particularly by avoiding the large

system commands costs of using the ECS in the way that the render world

does.

## Solution

- Define `EntityHasher` that calculates a hash from the

`Entity.to_bits()` by `i | (i.wrapping_mul(0x517cc1b727220a95) << 32)`.

`0x517cc1b727220a95` is something like `u64::MAX / N` for N that gives a

value close to π and that works well for hashing. Thanks to @SkiFire13

for the suggestion and to @nicopap for alternative suggestions and

discussion. This approach comes from `rustc-hash` (a.k.a. `FxHasher`)

with some tweaks for the case of hashing an `Entity`. `FxHasher` and

`SeaHasher` were also tested but were significantly slower.

- Define `EntityHashMap` type that uses the `EntityHasher`

- Use `EntityHashMap<Entity, T>` for render world entity storage,

including:

- `RenderMaterialInstances` - contains the `AssetId<M>` of the material

associated with the entity. Also for 2D.

- `RenderMeshInstances` - contains mesh transforms, flags and properties

about mesh entities. Also for 2D.

- `SkinIndices` and `MorphIndices` - contains the skin and morph index

for an entity, respectively

- `ExtractedSprites`

- `ExtractedUiNodes`
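The hash formula above can be tried out with a small std-only sketch. It is not the code added by this PR: a plain `u64` stands in for `Entity::to_bits()`, and the `EntityHashMap` alias and `entity_hash` helper here are simplified assumptions made for illustration.

```rust
use std::collections::HashMap;
use std::hash::{BuildHasherDefault, Hasher};

// Hasher that applies the formula from the description to a single u64.
#[derive(Default)]
struct EntityHasher {
    hash: u64,
}

impl Hasher for EntityHasher {
    fn write(&mut self, _bytes: &[u8]) {
        panic!("EntityHasher only expects u64 writes");
    }

    fn write_u64(&mut self, i: u64) {
        // Keep the original bits and mix a multiplied copy into the upper half.
        self.hash = i | (i.wrapping_mul(0x517cc1b727220a95) << 32);
    }

    fn finish(&self) -> u64 {
        self.hash
    }
}

// Simplified alias: keys are raw entity bits (assumption) rather than `Entity`.
type EntityHashMap<V> = HashMap<u64, V, BuildHasherDefault<EntityHasher>>;

// Hashes one set of entity bits, for inspecting the formula's output.
fn entity_hash(bits: u64) -> u64 {
    let mut hasher = EntityHasher::default();
    hasher.write_u64(bits);
    hasher.finish()
}
```

Since `u64`'s `Hash` impl issues a single `write_u64` call, the map does one multiply, one shift, and one OR per lookup.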

## Benchmarks

All benchmarks have been conducted on an M1 Max connected to AC power.

The tests are run for 1500 frames. The 1000th frame is captured for

comparison to check for visual regressions. There were none.

### 2D Meshes

`bevymark --benchmark --waves 160 --per-wave 1000 --mode mesh2d`

#### `--ordered-z`

This test spawns the 2D meshes with z incrementing back to front, which

is the ideal allocation order as it matches the sorted

render order which means lookups have a high cache hit rate.

<img width="1112" alt="Screenshot 2023-09-27 at 07 50 45"

src="https://github.com/bevyengine/bevy/assets/302146/e140bc98-7091-4a3b-8ae1-ab75d16d2ccb">

-39.1% median frame time.

#### Random

This test spawns the 2D meshes with random z. This not only makes the

batching and transparent 2D pass lookups get a lot of cache misses, it

also currently means that the meshes are almost certain to not be

batchable.

<img width="1108" alt="Screenshot 2023-09-27 at 07 51 28"

src="https://github.com/bevyengine/bevy/assets/302146/29c2e813-645a-43ce-982a-55df4bf7d8c4">

-7.2% median frame time.

### 3D Meshes

`many_cubes --benchmark`

<img width="1112" alt="Screenshot 2023-09-27 at 07 51 57"

src="https://github.com/bevyengine/bevy/assets/302146/1a729673-3254-4e2a-9072-55e27c69f0fc">

-7.7% median frame time.

### Sprites

**NOTE: On `main` sprites are using `SparseSet<Entity, T>`!**

`bevymark --benchmark --waves 160 --per-wave 1000 --mode sprite`

#### `--ordered-z`

This test spawns the sprites with z incrementing back to front, which is

the ideal allocation order as it matches the sorted render

order which means lookups have a high cache hit rate.

<img width="1116" alt="Screenshot 2023-09-27 at 07 52 31"

src="https://github.com/bevyengine/bevy/assets/302146/bc8eab90-e375-4d31-b5cd-f55f6f59ab67">

+13.0% median frame time.

#### Random

This test spawns the sprites with random z. This makes the batching and

transparent 2D pass lookups get a lot of cache misses.

<img width="1109" alt="Screenshot 2023-09-27 at 07 53 01"

src="https://github.com/bevyengine/bevy/assets/302146/22073f5d-99a7-49b0-9584-d3ac3eac3033">

+0.6% median frame time.

### UI

**NOTE: On `main` UI is using `SparseSet<Entity, T>`!**

`many_buttons`

<img width="1111" alt="Screenshot 2023-09-27 at 07 53 26" src="https://github.com/bevyengine/bevy/assets/302146/66afd56d-cbe4-49e7-8b64-2f28f6043d85">

+15.1% median frame time.

## Alternatives

- Cart originally suggested trying out `SparseSet<Entity, T>` and indeed
that is slightly faster under ideal conditions. However,
`PassHashMap<Entity, T>` has better worst-case performance when data is
randomly distributed, rather than in sorted render order, and does not
have the worst-case memory usage of `SparseSet`'s dense `Vec<usize>`,
which maps from the `Entity` index to the sparse index into `Vec<T>`.
This dense `Vec` has to be as large as the largest `Entity` index used
with the `SparseSet`.

- I also tested `PassHashMap<u32, T>`, intending to use `Entity.index()`

as the key, but this proved to sometimes be slower and mostly no

different.

- The only outstanding approach that has not been implemented and tested

is to _not_ clear the render world of its entities each frame. That has

its own problems, though they could perhaps be solved.

- Performance-wise, if the entities and their component data were not
cleared, they would incur table moves only on spawn. After that, only
their component data would be overwritten. Ideally we would have a neat
way of either updating data in-place via `&mut T` queries, or inserting
components if not present. This would likely be quite cumbersome to have
to remember to do everywhere, but perhaps it only needs to be done in
the more performance-sensitive systems.

- The main problem to solve, however, is that we want to maintain a
mapping between main world entities and render world entities, be able
to run the render app and world in parallel with the main app and world
for pipelined rendering, and at the same time be able to spawn entities
in the render world in such a way that those `Entity` ids do not collide
with those spawned in the main world. This is potentially quite
solvable, but could well be a lot of ECS work to do it in a way that
makes sense.
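
The memory argument in the first bullet above can be illustrated with a small, std-only sketch. `ToySparseSet` is a hypothetical stand-in written for this illustration, not Bevy's `SparseSet`; it only assumes the layout described above, where an index table addressed by entity index points into a `Vec<T>`.

```rust
use std::collections::HashMap;

/// Hypothetical toy sparse set, not Bevy's implementation.
struct ToySparseSet<T> {
    /// Addressed directly by entity index; stores the position in `values`.
    indices: Vec<Option<usize>>,
    /// Tightly packed values.
    values: Vec<T>,
}

impl<T> ToySparseSet<T> {
    fn new() -> Self {
        Self { indices: Vec::new(), values: Vec::new() }
    }

    fn insert(&mut self, entity_index: usize, value: T) {
        // The index table must cover the largest entity index ever inserted.
        if entity_index >= self.indices.len() {
            self.indices.resize(entity_index + 1, None);
        }
        match self.indices[entity_index] {
            Some(slot) => self.values[slot] = value,
            None => {
                self.indices[entity_index] = Some(self.values.len());
                self.values.push(value);
            }
        }
    }

    fn get(&self, entity_index: usize) -> Option<&T> {
        let slot = (*self.indices.get(entity_index)?)?;
        self.values.get(slot)
    }
}

/// Inserts one value under `entity_index` into both containers and returns
/// (length of the sparse set's index table, number of hash map entries).
fn sizes_after_single_insert(entity_index: usize) -> (usize, usize) {
    let mut set = ToySparseSet::new();
    set.insert(entity_index, 1.0_f32);
    let mut map = HashMap::new();
    map.insert(entity_index, 1.0_f32);
    (set.indices.len(), map.len())
}

fn main() {
    let (table_len, map_len) = sizes_after_single_insert(1_000_000);
    println!("index table slots: {table_len}, hash map entries: {map_len}");
}
```

With a single value stored under a large entity index, the toy index table grows to that index plus one, while the hash map holds one entry. Actual memory use depends on the real implementations, which this sketch does not model.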

---

## Changelog

- Changed: Component data for entities to be drawn is no longer stored
on entities in the render world. Instead, data is stored in an
`EntityHashMap<Entity, T>` in various resources. This brings significant
performance benefits due to the way the render app clears entities every
frame. Resources of most interest are `RenderMeshInstances` and
`RenderMaterialInstances`, and their 2D counterparts.

## Migration Guide

Previously the render app extracted mesh entities and their component
data from the main world and stored them as entities and components in
the render world. Now they are extracted into essentially
`EntityHashMap<Entity, T>`, where `T` is a struct containing an
appropriate group of data. This means that while systems in the extract
set will continue to run extract queries against the main world, they
will store their data in hash maps. Also, systems in later sets will
either need to look up entities in the available resources such as
`RenderMeshInstances`, or maintain their own `EntityHashMap<Entity, T>`
for their own data.

Before:

```rust
fn queue_custom(
    material_meshes: Query<(Entity, &MeshTransforms, &Handle<Mesh>), With<InstanceMaterialData>>,
) {
    ...
    for (entity, mesh_transforms, mesh_handle) in &material_meshes {
        ...
    }
}
```

After:

```rust
fn queue_custom(
    render_mesh_instances: Res<RenderMeshInstances>,
    instance_entities: Query<Entity, With<InstanceMaterialData>>,
) {
    ...
    for entity in &instance_entities {
        let Some(mesh_instance) = render_mesh_instances.get(&entity) else { continue; };
        // The mesh handle in `AssetId<Mesh>` form, and the `MeshTransforms` can now
        // be found in `mesh_instance` which is a `RenderMeshInstance`
        ...
    }
}
```
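
For systems that need to maintain their own `EntityHashMap<Entity, T>`, the pattern might look roughly like the std-only sketch below. It does not use Bevy: `Entity`, `EntityHasher`, `EntityHashMap` and `InstanceData` here are simplified stand-ins defined for illustration, and only the hash expression is taken from this PR's description.

```rust
use std::collections::HashMap;
use std::hash::{BuildHasherDefault, Hash, Hasher};

/// Stand-in for an entity id; only its 64-bit representation matters here.
#[derive(Clone, Copy, PartialEq, Eq, Debug)]
struct Entity(u64);

impl Hash for Entity {
    fn hash<H: Hasher>(&self, state: &mut H) {
        // Feed the whole id to the hasher as a single u64.
        state.write_u64(self.0);
    }
}

/// Sketch of a hasher specialised for 64-bit entity ids.
#[derive(Default)]
struct EntityHasher {
    hash: u64,
}

impl Hasher for EntityHasher {
    fn write(&mut self, _bytes: &[u8]) {
        // This sketch only supports keys that hash via `write_u64`.
        panic!("EntityHasher only supports u64 keys");
    }

    fn write_u64(&mut self, i: u64) {
        // Hash expression quoted in the PR description.
        self.hash = i | (i.wrapping_mul(0x517cc1b727220a95) << 32);
    }

    fn finish(&self) -> u64 {
        self.hash
    }
}

/// Hash map keyed by entity, using the sketch hasher above.
type EntityHashMap<K, V> = HashMap<K, V, BuildHasherDefault<EntityHasher>>;

/// Hypothetical per-entity data that a custom render feature might keep.
struct InstanceData {
    instance_count: u32,
}

/// Helper for inspecting the hash produced for one entity.
fn hash_entity(entity: Entity) -> u64 {
    let mut hasher = EntityHasher::default();
    entity.hash(&mut hasher);
    hasher.finish()
}

fn main() {
    // An "extract" step would fill the map each frame.
    let mut instances: EntityHashMap<Entity, InstanceData> = EntityHashMap::default();
    instances.insert(Entity(7), InstanceData { instance_count: 3 });

    // A later "queue" step looks entities up and skips those without data.
    for entity in [Entity(7), Entity(8)] {
        let Some(data) = instances.get(&entity) else { continue; };
        println!("{entity:?}: {} instances", data.instance_count);
    }
    println!("hash of Entity(1): {:#x}", hash_entity(Entity(1)));
}
```

The lookup loop mirrors the `let Some(..) else { continue; }` shape of the "After" example: entities with no entry in the map are skipped.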

---------

Co-authored-by: robtfm <50659922+robtfm@users.noreply.github.com>

2023-09-27 10:28:28 +02:00

|

|

|

render_mesh_instances: Res<RenderMeshInstances>,

|

|

|

|

|

material_meshes: Query<Entity, With<InstanceMaterialData>>,

|

2024-05-21 11:23:04 -07:00

|

|

|

mut transparent_render_phases: ResMut<ViewSortedRenderPhases<Transparent3d>>,

|

|

|

|

|

mut views: Query<(Entity, &ExtractedView)>,

|

2022-01-05 19:43:11 +00:00

|

|

|

) {

|

2022-11-28 13:54:13 +00:00

|

|

|

let draw_custom = transparent_3d_draw_functions.read().id::<DrawCustom>();

|

2022-01-05 19:43:11 +00:00

|

|

|

|

2023-01-20 14:25:21 +00:00

|

|

|

let msaa_key = MeshPipelineKey::from_msaa_samples(msaa.samples());

|

2022-01-05 19:43:11 +00:00

|

|

|

|

2024-05-21 11:23:04 -07:00

|

|

|

for (view_entity, view) in &mut views {

|

|

|

|

|

let Some(transparent_phase) = transparent_render_phases.get_mut(&view_entity) else {

|

|

|

|

|

continue;

|

|

|

|

|

};

|

|

|

|

|

|

2022-11-12 09:31:03 +00:00

|

|

|

let view_key = msaa_key | MeshPipelineKey::from_hdr(view.hdr);

|

2022-07-05 06:13:39 +00:00

|

|

|

let rangefinder = view.rangefinder3d();

|

Use EntityHashMap<Entity, T> for render world entity storage for better performance (#9903)

# Objective

- Improve rendering performance, particularly by avoiding the large

system commands costs of using the ECS in the way that the render world

does.

## Solution

- Define `EntityHasher` that calculates a hash from the
`Entity.to_bits()` by `i | (i.wrapping_mul(0x517cc1b727220a95) << 32)`.
`0x517cc1b727220a95` is something like `u64::MAX / N` for N that gives a
value close to π and that works well for hashing. Thanks to @SkiFire13
for the suggestion and to @nicopap for alternative suggestions and
discussion. This approach comes from `rustc-hash` (a.k.a. `FxHasher`)
with some tweaks for the case of hashing an `Entity`. `FxHasher` and
`SeaHasher` were also tested but were significantly slower.

- Define `EntityHashMap` type that uses the `EntityHasher`

- Use `EntityHashMap<Entity, T>` for render world entity storage,

including:

- `RenderMaterialInstances` - contains the `AssetId<M>` of the material

associated with the entity. Also for 2D.

- `RenderMeshInstances` - contains mesh transforms, flags and properties

about mesh entities. Also for 2D.