2021-11-19 21:16:58 +00:00

|

|

|

use crate::{

|

2023-03-02 08:21:21 +00:00

|

|

|

environment_map, prepass, EnvironmentMapLight, FogMeta, GlobalLightMeta, GpuFog, GpuLights,

|

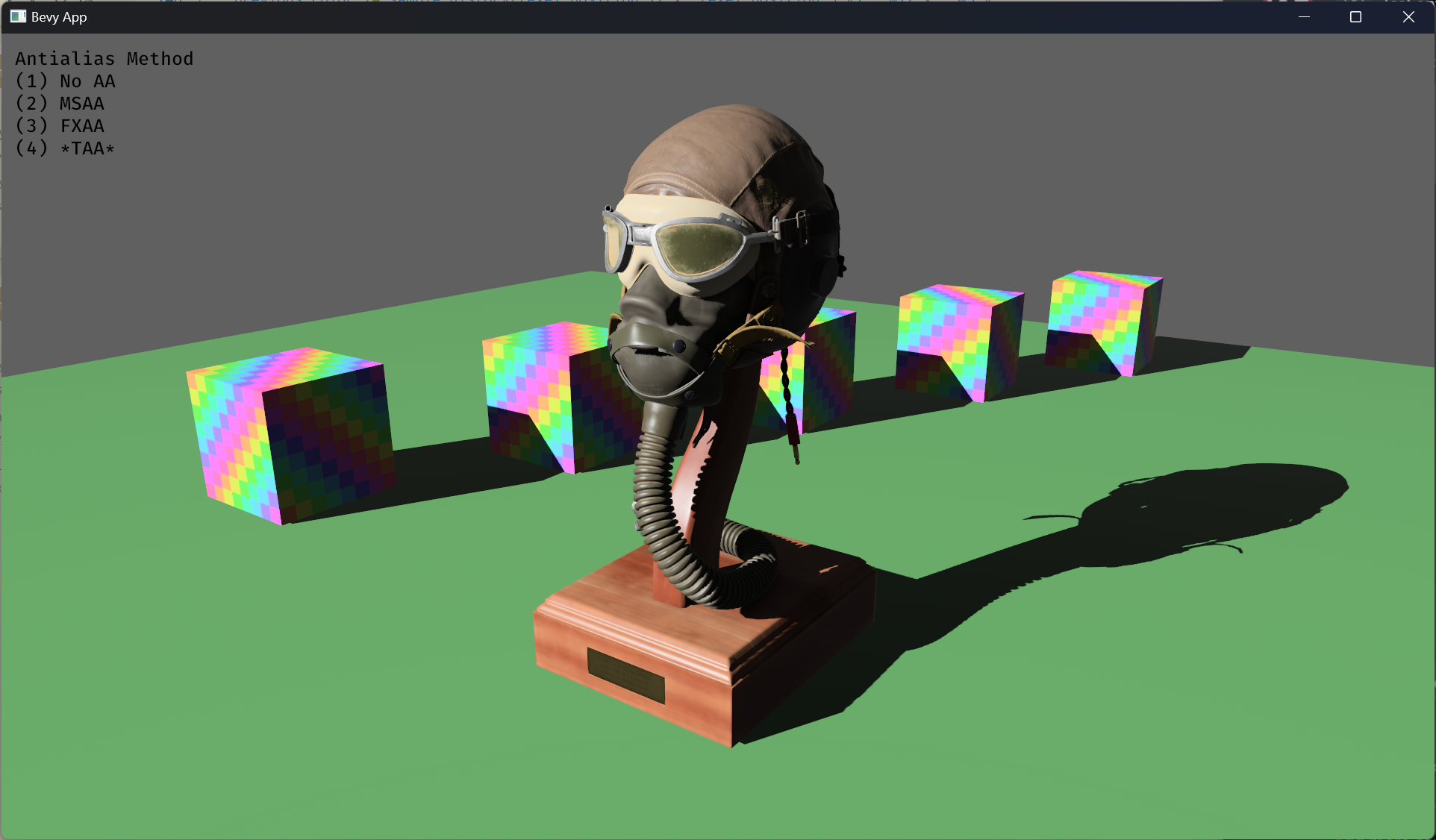

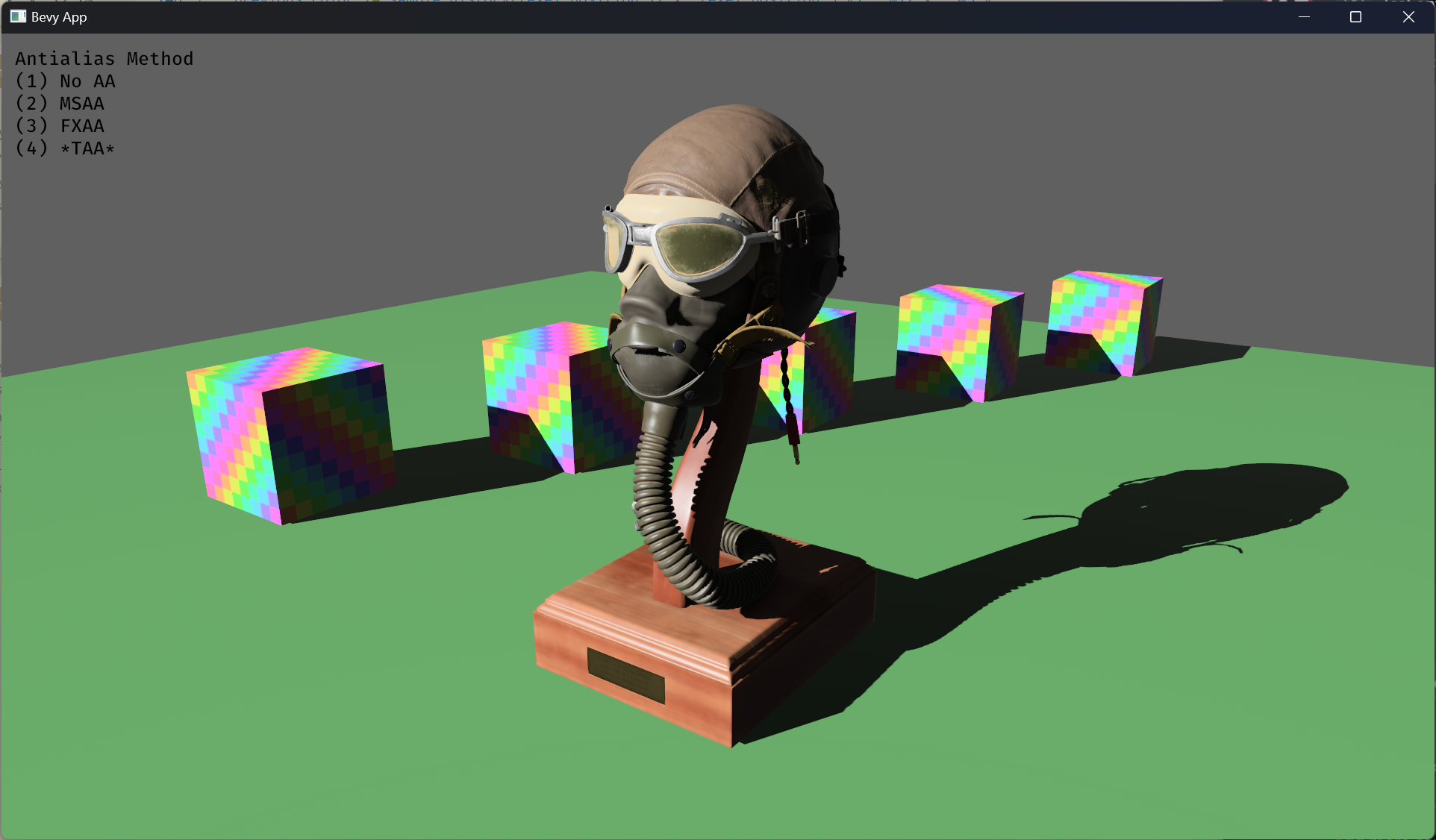

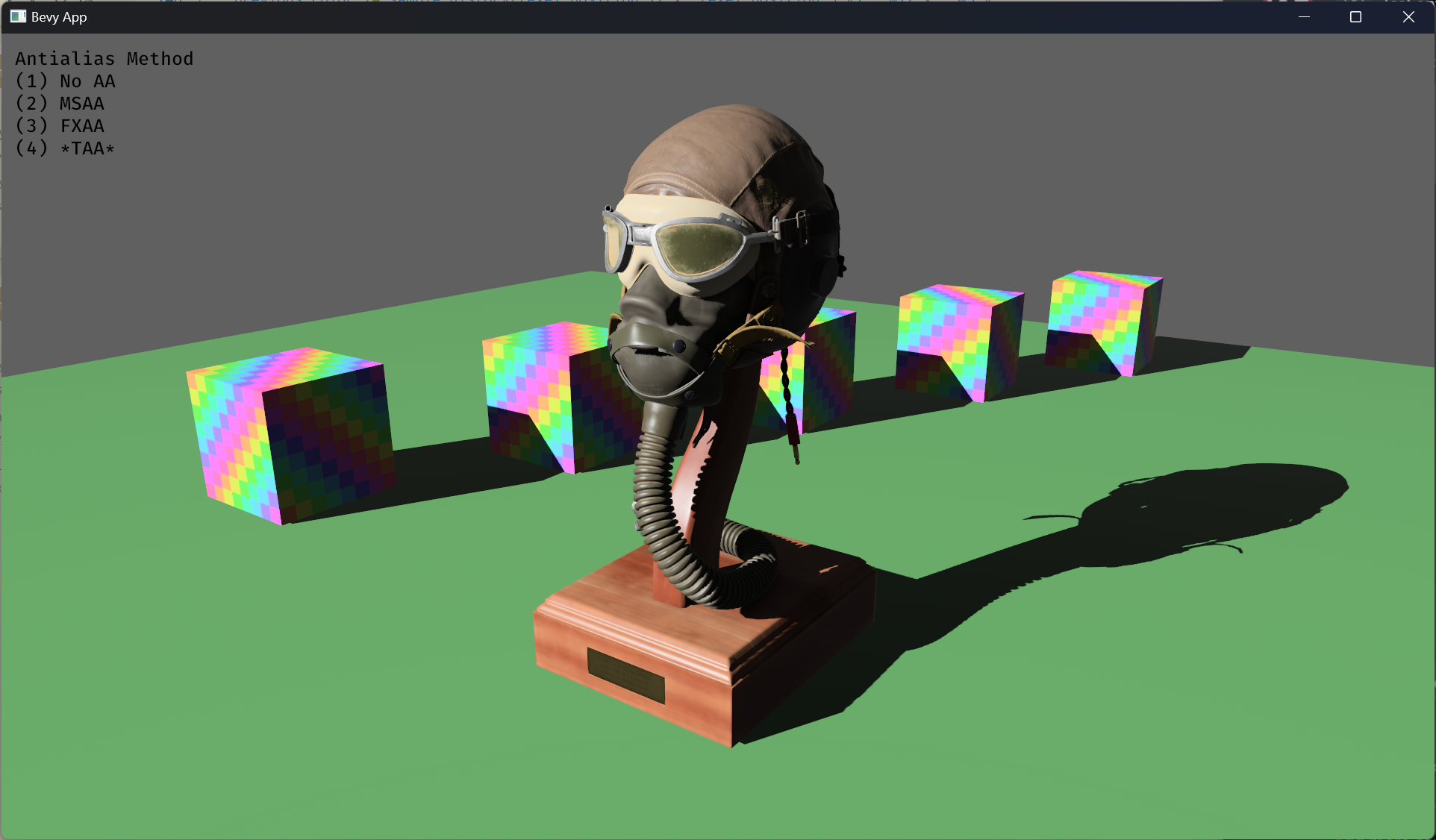

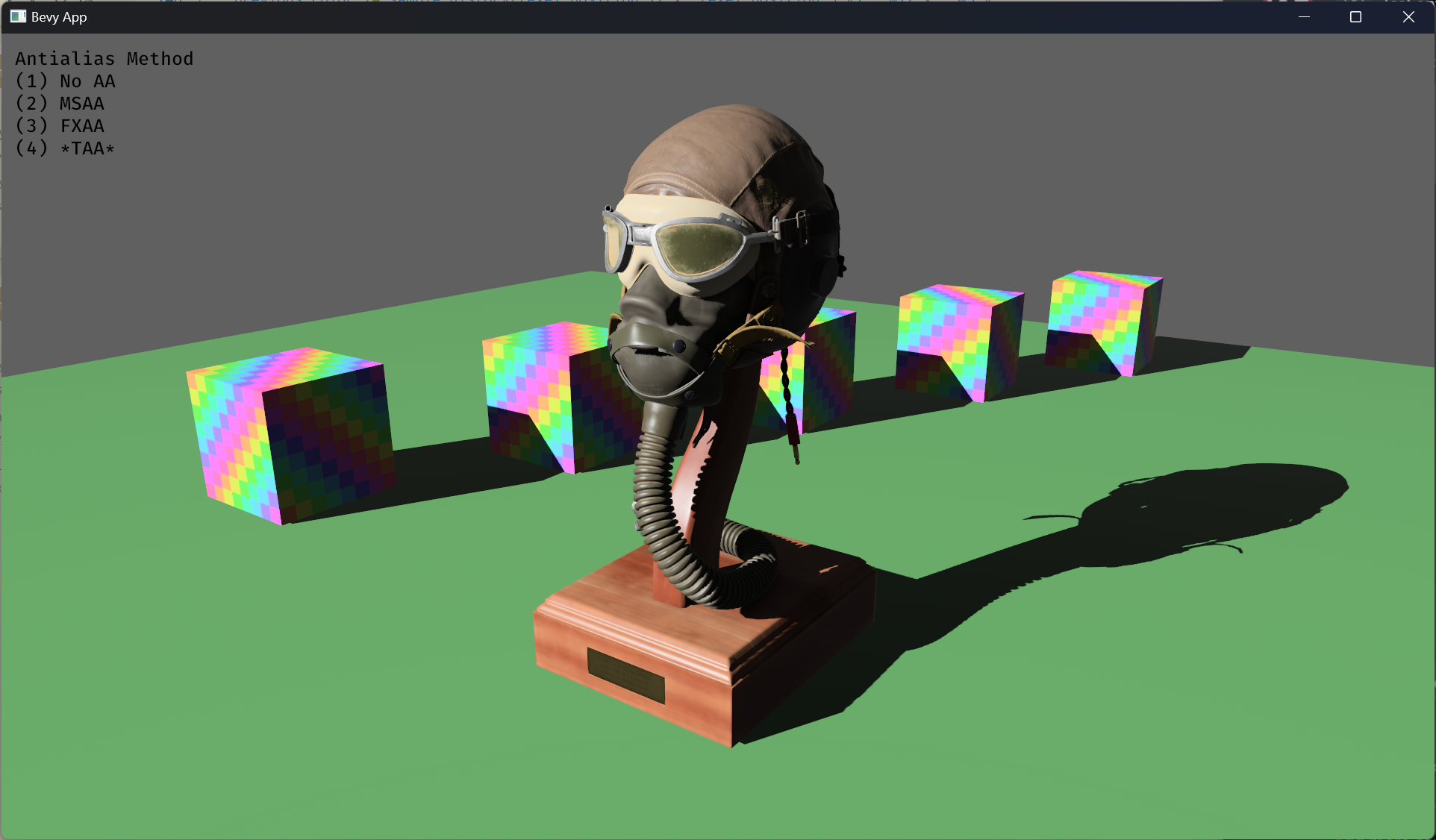

Temporal Antialiasing (TAA) (#7291)

# Objective

- Implement an alternative antialias technique

- TAA scales based off of view resolution, not geometry complexity

- TAA filters textures, firefly pixels, and other aliasing not covered

by MSAA

- TAA additionally will reduce noise / increase quality in future

stochastic rendering techniques

- Closes https://github.com/bevyengine/bevy/issues/3663

## Solution

- Add a temporal jitter component

- Add a motion vector prepass

- Add a TemporalAntialias component and plugin

- Combine existing MSAA and FXAA examples and add TAA

## Followup Work

- Prepass motion vector support for skinned meshes

- Move uniforms needed for motion vectors into a separate bind group,

instead of using different bind group layouts

- Reuse previous frame's GPU view buffer for motion vectors, instead of

recomputing

- Mip biasing for sharper textures, and/or unjittering texture UVs
https://github.com/bevyengine/bevy/issues/7323

- Compute shader for better performance

- Investigate FSR techniques

- Historical depth based disocclusion tests, for geometry disocclusion

- Historical luminance/hue based tests, for shading disocclusion

- Pixel "locks" to reduce blending rate / revamp history confidence

mechanism

- Orthographic camera support for TemporalJitter

- Figure out COD's 1-tap bicubic filter

---

## Changelog

- Added MotionVectorPrepass and TemporalJitter

- Added TemporalAntialiasPlugin, TemporalAntialiasBundle, and

TemporalAntialiasSettings

---------

Co-authored-by: IceSentry <c.giguere42@gmail.com>

Co-authored-by: IceSentry <IceSentry@users.noreply.github.com>

Co-authored-by: Robert Swain <robert.swain@gmail.com>

Co-authored-by: Daniel Chia <danstryder@gmail.com>

Co-authored-by: robtfm <50659922+robtfm@users.noreply.github.com>

Co-authored-by: Brandon Dyer <brandondyer64@gmail.com>

Co-authored-by: Edgar Geier <geieredgar@gmail.com>

2023-03-27 22:22:40 +00:00

|

|

|

GpuPointLights, LightMeta, NotShadowCaster, NotShadowReceiver, PreviousGlobalTransform,

|

Reorder render sets, refactor bevy_sprite to take advantage (#9236)

This is a continuation of this PR: #8062

# Objective

- Reorder render schedule sets to allow data preparation when phase item

order is known to support improved batching

- Part of the batching/instancing etc plan from here:

https://github.com/bevyengine/bevy/issues/89#issuecomment-1379249074

- The original idea came from @inodentry and proved to be a good one.

Thanks!

- Refactor `bevy_sprite` and `bevy_ui` to take advantage of the new

ordering

## Solution

- Move `Prepare` and `PrepareFlush` after `PhaseSortFlush`

- Add a `PrepareAssets` set that runs in parallel with other systems and

sets in the render schedule.

- Put prepare_assets systems in the `PrepareAssets` set

- If explicit dependencies are needed on Mesh or Material RenderAssets

then depend on the appropriate system.

- Add `ManageViews` and `ManageViewsFlush` sets between

`ExtractCommands` and Queue

- Move `queue_mesh*_bind_group` to the Prepare stage

- Rename them to `prepare_`

- Put systems that prepare resources (buffers, textures, etc.) into a

`PrepareResources` set inside `Prepare`

- Put the `prepare_..._bind_group` systems into a `PrepareBindGroups` set
after `PrepareResources`

- Move `prepare_lights` to the `ManageViews` set

- `prepare_lights` creates views and this must happen before `Queue`

- This system needs refactoring to stop handling all responsibilities

- Gather lights, sort, and create shadow map views. Store sorted light

entities in a resource

- Remove `BatchedPhaseItem`

- Replace `batch_range` with `batch_size` representing how many items to

skip after rendering the item or to skip the item entirely if

`batch_size` is 0.

- `queue_sprites` has been split into `queue_sprites` for queueing phase

items and `prepare_sprites` for batching after the `PhaseSort`

- `PhaseItem`s are still inserted in `queue_sprites`

- After sorting, adjacent compatible sprite phase items are accumulated
into `SpriteBatch` components on the first entity of each batch,
containing a range of vertex indices. The associated `PhaseItem`'s
`batch_size` is updated appropriately.

- `SpriteBatch` items are then drawn skipping over the other items in

the batch based on the value in `batch_size`

- A very similar refactor was performed on `bevy_ui`
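The `batch_size` rule described above can be sketched as a small draw loop. This is only an illustration, not the engine's actual render loop: `Item` and `drawn_items` are hypothetical names, and the sketch assumes that a non-zero `batch_size` counts the drawing item itself plus the items it covers.

```rust
/// Hypothetical stand-in for a sorted phase item; only `batch_size` mirrors the text.
struct Item {
    name: &'static str,
    batch_size: usize,
}

/// Walks the sorted items and returns the names of the items that issue a draw.
fn drawn_items(items: &[Item]) -> Vec<&'static str> {
    let mut drawn = Vec::new();
    let mut index = 0;
    while index < items.len() {
        let item = &items[index];
        if item.batch_size > 0 {
            // This item draws its whole batch, so jump past the items it covers.
            drawn.push(item.name);
            index += item.batch_size;
        } else {
            // A batch size of 0 means the item is skipped entirely.
            index += 1;
        }
    }
    drawn
}
```

With three batched sprites followed by a mesh, only the first sprite and the mesh would issue draws under these assumptions.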

---

## Changelog

Changed:

- Reordered and reworked render app schedule sets. The main change is

that data is extracted, queued, sorted, and then prepared when the order

of data is known.

- Refactor `bevy_sprite` and `bevy_ui` to take advantage of the

reordering.

## Migration Guide

- Assets such as materials and meshes should now be created in

`PrepareAssets` e.g. `prepare_assets<Mesh>`

- Queueing entities to `RenderPhase`s continues to be done in `Queue`

e.g. `queue_sprites`

- Preparing resources (textures, buffers, etc.) should now be done in

`PrepareResources`, e.g. `prepare_prepass_textures`,

`prepare_mesh_uniforms`

- Preparing bind groups should now be done in `PrepareBindGroups` e.g.
`prepare_mesh_bind_group`

- Any batching or instancing can now be done in `Prepare` where the

order of the phase items is known e.g. `prepare_sprites`

## Next Steps

- Introduce some generic mechanism to ensure items that can be batched

are grouped in the phase item order, currently you could easily have

`[sprite at z 0, mesh at z 0, sprite at z 0]` preventing batching.

- Investigate improved orderings for building the MeshUniform buffer

- Implementing batching across the rest of bevy

---------

Co-authored-by: Robert Swain <robert.swain@gmail.com>

Co-authored-by: robtfm <50659922+robtfm@users.noreply.github.com>

2023-08-27 14:33:49 +00:00

|

|

|

ScreenSpaceAmbientOcclusionTextures, Shadow, ShadowSamplers, ViewClusterBindings,

|

|

|

|

|

ViewFogUniformOffset, ViewLightsUniformOffset, ViewShadowBindings,

|

|

|

|

|

CLUSTERED_FORWARD_STORAGE_BUFFER_COUNT, MAX_CASCADES_PER_LIGHT, MAX_DIRECTIONAL_LIGHTS,

|

2021-11-19 21:16:58 +00:00

|

|

|

};

|

2023-03-18 01:45:34 +00:00

|

|

|

use bevy_app::Plugin;

|

Add morph targets (#8158)

# Objective

- Add morph targets to `bevy_pbr` (closes #5756) & load them from glTF

- Supersedes #3722

- Fixes #6814

[Morph targets][1] (also known as shape interpolation, shape keys, or
blend shapes) allow animating individual vertices with fine-grained
control. This is typically used for facial expressions. By specifying
multiple poses as vertex offsets, and providing a weight for each
pose, it is possible to define surprisingly realistic transitions
between poses. Blending between multiple poses also allows composition.
Morph targets are part of the [glTF standard][2] and are a feature of
Unity, Unreal, and Babylon.js, so it is only natural to implement them
in Bevy.

## Solution

This implementation of morph targets uses a 3D texture where each pixel
is a component of an animated attribute. Each layer is a different
target. We use a 2D texture for each target, because the number of
attribute×components×animated vertices is expected to always exceed the
maximum pixel row size limit of WebGL2. It closely follows the way
skinning is implemented on the CPU side, while on the GPU side, the
shader morph target implementation is a relatively trivial detail.

We add an optional `morph_texture` to the `Mesh` struct. The

`morph_texture` is built through a method that accepts an iterator over

attribute buffers.

The user-accessible `MorphWeights` component controls the blend of
poses used by mesh instances (so that multiple copies of the same mesh may
have different weights). All the weights are uploaded to a uniform
buffer of 256 `f32`. We limit to 16 poses per mesh, and a total of 256
poses.
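As a rough illustration of what blending poses by weight means, the sketch below adds weighted per-target offsets to a base vertex position on the CPU. It is not the shader code from this change: `morph_position` and its array-based signature are hypothetical, and the real implementation reads the offsets from the morph texture.

```rust
/// Blends a base vertex position with weighted per-target offsets:
/// `base + sum(weight_i * offset_i)`.
fn morph_position(base: [f32; 3], offsets: &[[f32; 3]], weights: &[f32]) -> [f32; 3] {
    let mut out = base;
    // `zip` stops at the shorter slice, so extra offsets or weights are ignored.
    for (offset, &weight) in offsets.iter().zip(weights) {
        for axis in 0..3 {
            out[axis] += weight * offset[axis];
        }
    }
    out
}
```

A weight of 0 leaves a target's offset out of the result, and a weight of 1 applies it in full.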

More literature:

* Old Babylon.js implementation (vertex attribute-based):
https://www.eternalcoding.com/dev-log-1-morph-targets/
* Babylon.js implementation (similar to ours):
https://www.youtube.com/watch?v=LBPRmGgU0PE

* GPU gems 3:

https://developer.nvidia.com/gpugems/gpugems3/part-i-geometry/chapter-3-directx-10-blend-shapes-breaking-limits

* Development discord thread

https://discord.com/channels/691052431525675048/1083325980615114772

https://user-images.githubusercontent.com/26321040/231181046-3bca2ab2-d4d9-472e-8098-639f1871ce2e.mp4

https://github.com/bevyengine/bevy/assets/26321040/d2a0c544-0ef8-45cf-9f99-8c3792f5a258

## Acknowledgements

* Thanks to `storytold` for sponsoring the feature

* Thanks to `superdump` and `james7132` for guidance and help figuring

out stuff

## Future work

- Handling of fewer or more attributes (e.g. animated UVs, animated
arbitrary attributes)
- Dynamic pose allocation (so that zero-weighted poses aren't uploaded
to the GPU, for example, which enables many more total poses)

- Better animation API, see #8357

----

## Changelog

- Add morph targets to bevy meshes

- Support up to 64 poses per mesh of individually up to 116508 vertices,

animation currently strictly limited to the position, normal and tangent

attributes.

- Load a morph target using `Mesh::set_morph_targets`

- Add `VisitMorphTargets` and `VisitMorphAttributes` traits to

`bevy_render`, this allows defining morph targets (a fairly complex and

nested data structure) through iterators (ie: single copy instead of

passing around buffers), see documentation of those traits for details

- Add `MorphWeights` component exported by `bevy_render`

- `MorphWeights` control mesh's morph target weights, blending between

various poses defined as morph targets.

- `MorphWeights` are directly inherited by direct children (single level

of hierarchy) of an entity. This allows controlling several mesh

primitives through a unique entity _as per GLTF spec_.

- Add `MorphTargetNames` component, naming each index of the loaded morph
targets.
- Load morph target weights and buffers in `bevy_gltf`
- Handle morph target animations in `bevy_animation` (previously, it
was a `warn!` log)

- Add the `MorphStressTest.gltf` asset for morph targets testing, taken

from the glTF samples repo, CC0.

- Add morph target manipulation to `scene_viewer`

- Separate the animation code in `scene_viewer` from the rest of the

code, reducing `#[cfg(feature)]` noise

- Add the `morph_targets.rs` example to show off how to manipulate morph
targets, loading `MorphStressTest.gltf`

## Migration Guide

- (very specialized, unlikely to be touched by 3rd parties)

- `MeshPipeline` now has a single `mesh_layouts` field rather than

separate `mesh_layout` and `skinned_mesh_layout` fields. You should

handle all possible mesh bind group layouts in your implementation

- You should also properly handle the new `MORPH_TARGETS` shader def and
mesh pipeline key. A new function is exposed to make this easier:
`setup_morph_and_skinning_defs`

- The `MeshBindGroup` is now `MeshBindGroups`, cached bind groups are

now accessed through the `get` method.

[1]: https://en.wikipedia.org/wiki/Morph_target_animation

[2]:

https://registry.khronos.org/glTF/specs/2.0/glTF-2.0.html#morph-targets

---------

Co-authored-by: François <mockersf@gmail.com>

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

2023-06-22 20:00:01 +00:00

|

|

|

use bevy_asset::{load_internal_asset, Assets, Handle, HandleId, HandleUntyped};

|

2023-02-19 20:38:13 +00:00

|

|

|

use bevy_core_pipeline::{

|


2023-08-27 14:33:49 +00:00

|

|

|

core_3d::{AlphaMask3d, Opaque3d, Transparent3d},

|

2023-02-19 20:38:13 +00:00

|

|

|

prepass::ViewPrepassTextures,

|

|

|

|

|

tonemapping::{

|

|

|

|

|

get_lut_bind_group_layout_entries, get_lut_bindings, Tonemapping, TonemappingLuts,

|

|

|

|

|

},

|

|

|

|

|

};

|

Shader Imports. Decouple Mesh logic from PBR (#3137)

## Shader Imports

This adds "whole file" shader imports. These come in two flavors:

### Asset Path Imports

```wgsl
// /assets/shaders/custom.wgsl
#import "shaders/custom_material.wgsl"

[[stage(fragment)]]
fn fragment() -> [[location(0)]] vec4<f32> {
    return get_color();
}
```

```wgsl
// /assets/shaders/custom_material.wgsl
[[block]]
struct CustomMaterial {
    color: vec4<f32>;
};
[[group(1), binding(0)]]
var<uniform> material: CustomMaterial;
```

### Custom Path Imports

Enables defining custom import paths. These are intended to be used by crates to export shader functionality:

```wgsl
// bevy_pbr2/src/render/pbr.wgsl
#import bevy_pbr::mesh_view_bind_group
#import bevy_pbr::mesh_bind_group

[[block]]
struct StandardMaterial {
    base_color: vec4<f32>;
    emissive: vec4<f32>;
    perceptual_roughness: f32;
    metallic: f32;
    reflectance: f32;
    flags: u32;
};

/* rest of PBR fragment shader here */
```

```rust
impl Plugin for MeshRenderPlugin {
    fn build(&self, app: &mut bevy_app::App) {
        let mut shaders = app.world.get_resource_mut::<Assets<Shader>>().unwrap();
        // Register each shader under the import path other shaders use to pull it in.
        shaders.set_untracked(
            MESH_BIND_GROUP_HANDLE,
            Shader::from_wgsl(include_str!("mesh_bind_group.wgsl"))
                .with_import_path("bevy_pbr::mesh_bind_group"),
        );
        shaders.set_untracked(
            MESH_VIEW_BIND_GROUP_HANDLE,
            Shader::from_wgsl(include_str!("mesh_view_bind_group.wgsl"))
                .with_import_path("bevy_pbr::mesh_view_bind_group"),
        );
        // ... rest of the plugin setup is not shown in this message
    }
}
```

By convention these should use rust-style module paths that start with the crate name. Ultimately we might enforce this convention.

Note that this feature implements _run time_ import resolution. Ultimately we should move the import logic into an asset preprocessor once Bevy gets support for that.
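To make the idea of run-time "whole file" import resolution concrete, here is a minimal sketch under stated assumptions. It is not Bevy's shader processor: `resolve_imports` and the `registry` map are hypothetical, each `#import` line is simply replaced by the registered source text, and import cycles are not detected.

```rust
use std::collections::HashMap;

/// Replaces each `#import <path>` line with the source registered for that path.
/// Returns an error naming the first import path that is not registered.
/// Assumption: there are no import cycles, since this sketch would recurse forever on one.
fn resolve_imports(source: &str, registry: &HashMap<&str, &str>) -> Result<String, String> {
    let mut out = String::new();
    for line in source.lines() {
        match line.trim().strip_prefix("#import ") {
            Some(path) => {
                // Asset path imports are quoted, custom path imports are not.
                let path = path.trim().trim_matches('"');
                let imported = registry
                    .get(path)
                    .ok_or_else(|| format!("unresolved import: {}", path))?;
                // Imported files may themselves contain imports.
                out.push_str(&resolve_imports(imported, registry)?);
            }
            None => {
                out.push_str(line);
                out.push('\n');
            }
        }
    }
    Ok(out)
}
```

Because the whole file is pasted in, every item in the imported shader becomes visible to the importer, which is the limitation the "partial typed imports" idea is meant to address.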

## Decouple Mesh Logic from PBR Logic via MeshRenderPlugin

This breaks out mesh rendering code from PBR material code, which improves the legibility of the code, decouples mesh logic from PBR logic, and opens the door for a future `MaterialPlugin<T: Material>` that handles all of the pipeline setup for arbitrary shader materials.

## Removed `RenderAsset<Shader>` in favor of extracting shaders into RenderPipelineCache

This simplifies the shader import implementation and removes the need to pass around `RenderAssets<Shader>`.

## RenderCommands are now fallible

This allows us to cleanly handle pipelines+shaders not being ready yet. We can abort a render command early in these cases, preventing bevy from trying to bind group / do draw calls for pipelines that couldn't be bound. This could also be used in the future for things like "components not existing on entities yet".

# Next Steps

* Investigate using Naga for "partial typed imports" (ex: `#import bevy_pbr::material::StandardMaterial`, which would import only the StandardMaterial struct)

* Implement `MaterialPlugin<T: Material>` for low-boilerplate custom material shaders

* Move shader import logic into the asset preprocessor once bevy gets support for that.

Fixes #3132

2021-11-18 03:45:02 +00:00

|

|

|

use bevy_ecs::{

|

|

|

|

|

prelude::*,

|

2023-01-04 01:13:30 +00:00

|

|

|

query::ROQueryItem,

|

2022-06-11 09:13:37 +00:00

|

|

|

system::{lifetimeless::*, SystemParamItem, SystemState},

|


2021-11-18 03:45:02 +00:00

|

|

|

};

|

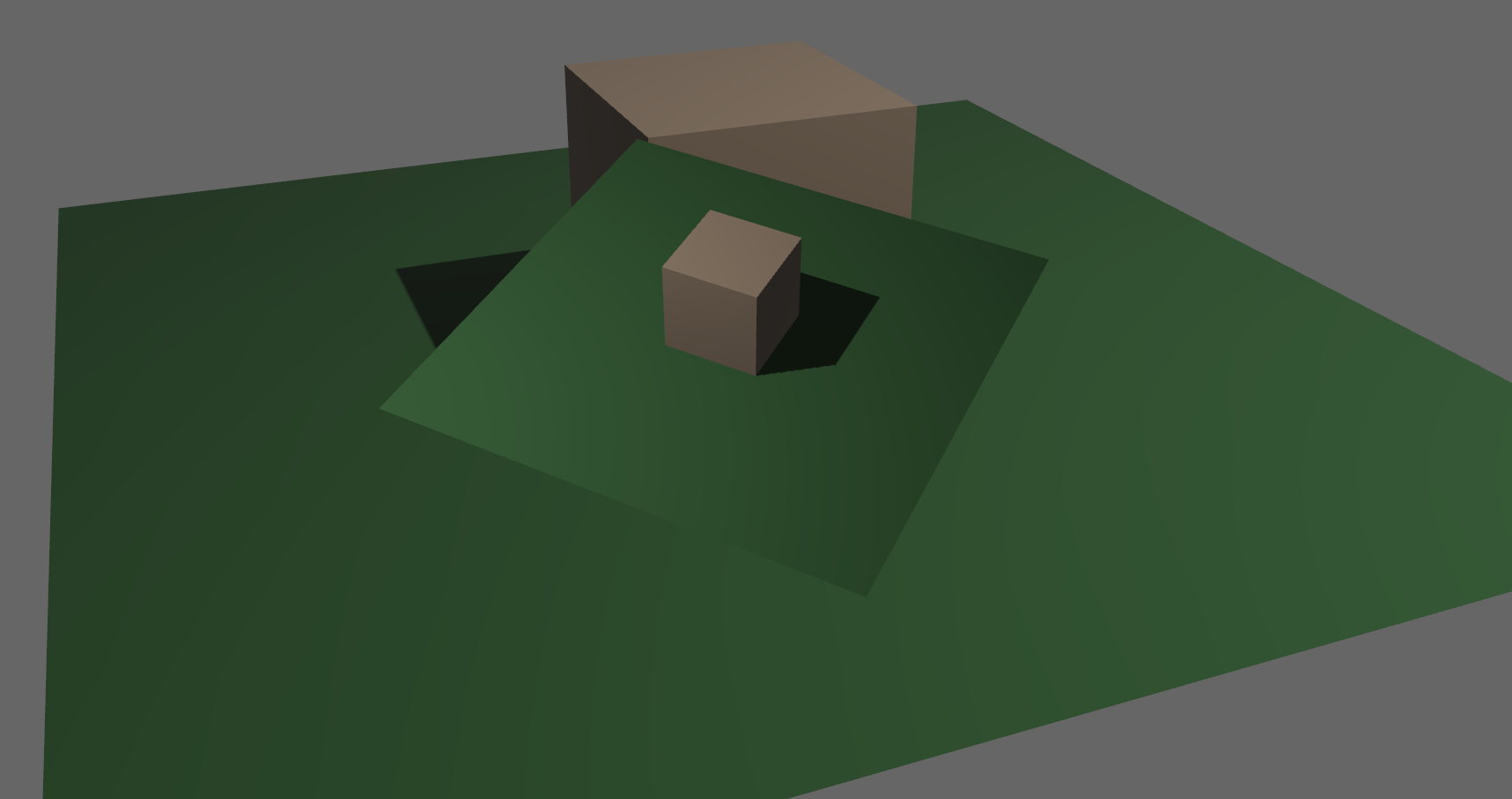

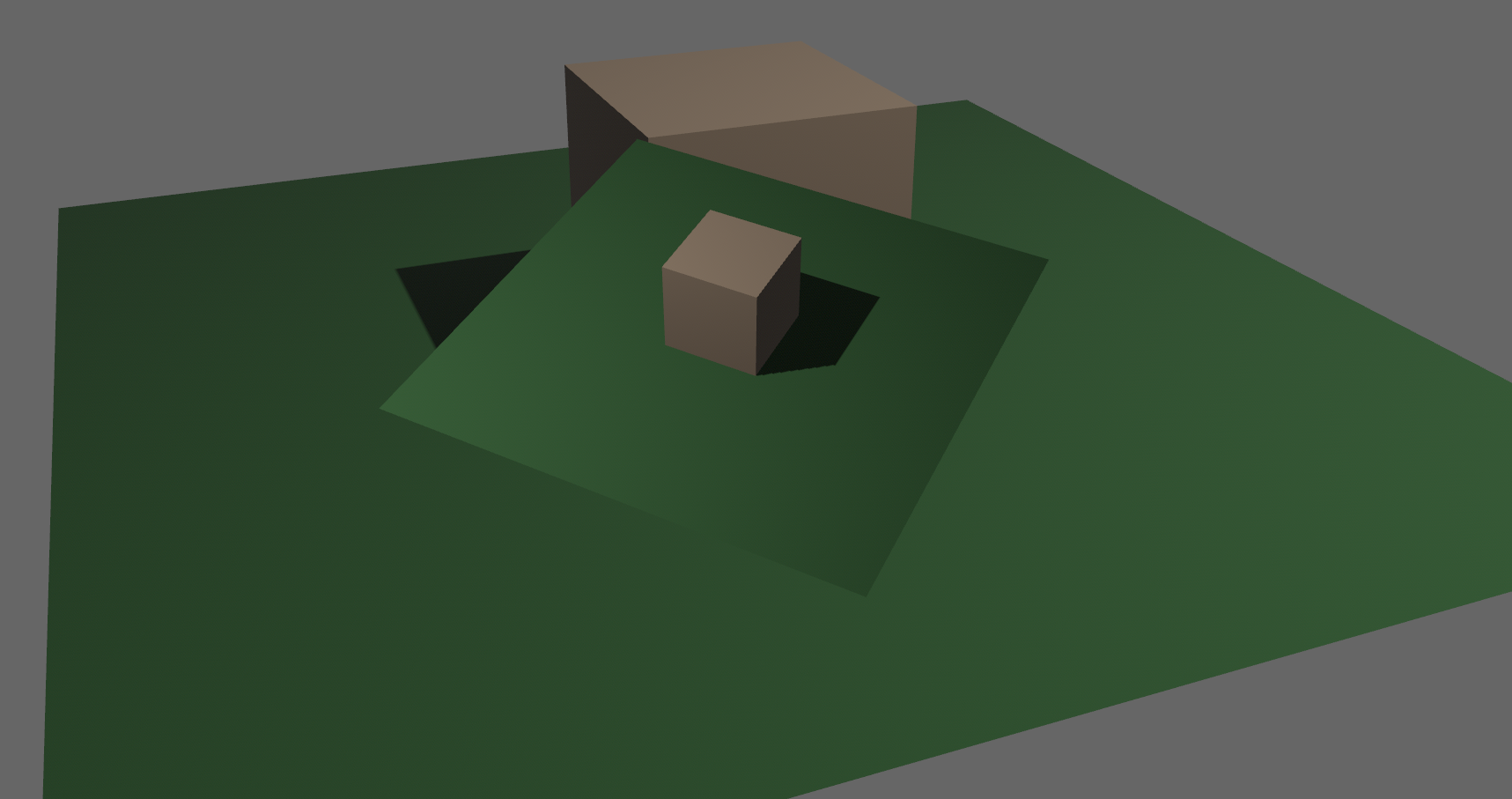

Reduce the size of MeshUniform to improve performance (#9416)

# Objective

- Significantly reduce the size of MeshUniform by only including

necessary data.

## Solution

Local-to-world model transforms are affine. This means they only need a
4x3 matrix to represent them.

`MeshUniform` stores the current, and previous model transforms, and the

inverse transpose of the current model transform, all as 4x4 matrices.

Instead we can store the current, and previous model transforms as 4x3

matrices, and we only need the upper-left 3x3 part of the inverse

transpose of the current model transform. This change allows us to

reduce the serialized MeshUniform size from 208 bytes to 144 bytes,

which is over a 30% saving in data to serialize, and VRAM bandwidth and

space.
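The packing idea can be sketched on the CPU with plain arrays. This is an illustration only: the `Mat4` and `PackedAffine` aliases and `pack_affine` are hypothetical, and `affine_to_square` here is a Rust stand-in for the shader helper of the same name, assuming a column-major 4x4 whose bottom row is `(0, 0, 0, 1)`. In terms of matrix data alone, three 4x4 matrices take 3 × 64 = 192 bytes, while two packed affine matrices plus a 3x3 take 2 × 48 + 36 = 132 bytes; the remaining bytes in the 208 and 144 totals are not modelled here.

```rust
/// Column-major 4x4 matrix, indexed as `m[column][row]`.
type Mat4 = [[f32; 4]; 4];
/// The top three rows of an affine 4x4 matrix, stored as three rows of four floats.
type PackedAffine = [[f32; 4]; 3];

/// Drops the constant bottom row `(0, 0, 0, 1)` and stores the remaining rows.
fn pack_affine(m: &Mat4) -> PackedAffine {
    let mut rows = [[0.0; 4]; 3];
    for row in 0..3 {
        for col in 0..4 {
            rows[row][col] = m[col][row];
        }
    }
    rows
}

/// Rebuilds the full 4x4 matrix by restoring the implicit bottom row.
fn affine_to_square(rows: &PackedAffine) -> Mat4 {
    let mut m = [[0.0; 4]; 4];
    for col in 0..4 {
        for row in 0..3 {
            m[col][row] = rows[row][col];
        }
    }
    // The bottom row of an affine transform is always (0, 0, 0, 1).
    m[3][3] = 1.0;
    m
}
```

Packing and then unpacking an affine matrix should return the original, since the only data dropped is the constant bottom row.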

## Benchmarks

On an M1 Max, running `many_cubes -- sphere`, main is in yellow, this PR

is in red:

<img width="1484" alt="Screenshot 2023-08-11 at 02 36 43"

src="https://github.com/bevyengine/bevy/assets/302146/7d99c7b3-f2bb-4004-a8d0-4c00f755cb0d">

A reduction in frame time of ~14%.

---

## Changelog

- Changed: Redefined `MeshUniform` to improve performance by using 4x3

affine transforms and reconstructing 4x4 matrices in the shader. Helper

functions were added to `bevy_pbr::mesh_functions` to unpack the data.

`affine_to_square` converts the packed 4x3 in 3x4 matrix data to a 4x4

matrix. `mat2x4_f32_to_mat3x3` converts the 3x3 in mat2x4 + f32 matrix

data back into a 3x3.

## Migration Guide

Shader code before:

```

var model = mesh[instance_index].model;

```

Shader code after:

```

#import bevy_pbr::mesh_functions affine_to_square

var model = affine_to_square(mesh[instance_index].model);

```

2023-08-15 06:00:23 +00:00

|

|

|

use bevy_math::{Affine3, Affine3A, Mat4, Vec2, Vec3Swizzles, Vec4};

|


2021-11-18 03:45:02 +00:00

|

|

|

use bevy_reflect::TypeUuid;

|

2021-12-14 03:58:23 +00:00

|

|

|

use bevy_render::{

|

2022-09-28 04:20:27 +00:00

|

|

|

globals::{GlobalsBuffer, GlobalsUniform},

|

2022-03-29 18:31:13 +00:00

|

|

|

mesh::{

|

|

|

|

|

skinning::{SkinnedMesh, SkinnedMeshInverseBindposes},

|


2023-06-22 20:00:01 +00:00

|

|

|

GpuBufferInfo, InnerMeshVertexBufferLayout, Mesh, MeshVertexBufferLayout,

|

|

|

|

|

VertexAttributeDescriptor,

|

2022-03-29 18:31:13 +00:00

|

|

|

},

|

2023-01-19 22:11:13 +00:00

|

|

|

prelude::Msaa,

|

Shader Imports. Decouple Mesh logic from PBR (#3137)

## Shader Imports

This adds "whole file" shader imports. These come in two flavors:

### Asset Path Imports

```wgsl

// /assets/shaders/custom.wgsl

#import "shaders/custom_material.wgsl"

[[stage(fragment)]]

fn fragment() -> [[location(0)]] vec4<f32> {

return get_color();

}

```

```wgsl

// /assets/shaders/custom_material.wgsl

[[block]]

struct CustomMaterial {

color: vec4<f32>;

};

[[group(1), binding(0)]]

var<uniform> material: CustomMaterial;

```

### Custom Path Imports

Enables defining custom import paths. These are intended to be used by crates to export shader functionality:

```wgsl

// bevy_pbr2/src/render/pbr.wgsl

#import bevy_pbr::mesh_view_bind_group

#import bevy_pbr::mesh_bind_group

[[block]]

struct StandardMaterial {

base_color: vec4<f32>;

emissive: vec4<f32>;

perceptual_roughness: f32;

metallic: f32;

reflectance: f32;

flags: u32;

};

/* rest of PBR fragment shader here */

```

```rust

impl Plugin for MeshRenderPlugin {

fn build(&self, app: &mut bevy_app::App) {

let mut shaders = app.world.get_resource_mut::<Assets<Shader>>().unwrap();

shaders.set_untracked(

MESH_BIND_GROUP_HANDLE,

Shader::from_wgsl(include_str!("mesh_bind_group.wgsl"))

.with_import_path("bevy_pbr::mesh_bind_group"),

);

shaders.set_untracked(

MESH_VIEW_BIND_GROUP_HANDLE,

Shader::from_wgsl(include_str!("mesh_view_bind_group.wgsl"))

.with_import_path("bevy_pbr::mesh_view_bind_group"),

);

}

}

```

By convention, these should use Rust-style module paths that start with the crate name. Ultimately we might enforce this convention.

Note that this feature implements _run time_ import resolution. Ultimately we should move the import logic into an asset preprocessor once Bevy gets support for that.
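The run-time resolution described above can be sketched roughly as follows. This is a minimal illustration, not Bevy's actual implementation: the `resolve_imports` function and the `registry` map (import path to shader source) are hypothetical names, and each import is spliced at most once.

```rust
use std::collections::{HashMap, HashSet};

/// Expands `#import` lines by splicing in the source registered under that path.
/// `registry` is a hypothetical stand-in for the shader asset storage.
fn resolve_imports(
    source: &str,
    registry: &HashMap<&str, &str>,
    seen: &mut HashSet<String>,
) -> Result<String, String> {
    let mut out = String::new();
    for line in source.lines() {
        if let Some(rest) = line.trim_start().strip_prefix("#import ") {
            // Accept both `#import "asset/path.wgsl"` and `#import crate::module`.
            let path = rest.trim().trim_matches('"');
            // Splice each imported file at most once.
            if seen.insert(path.to_string()) {
                let imported = registry
                    .get(path)
                    .ok_or_else(|| format!("unresolved import: {path}"))?;
                out.push_str(&resolve_imports(imported, registry, seen)?);
            }
        } else {
            out.push_str(line);
            out.push('\n');
        }
    }
    Ok(out)
}
```

An unresolved path returns an error here, which loosely mirrors the idea that a shader whose imports are not yet loaded is not ready to be used.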

## Decouple Mesh Logic from PBR Logic via MeshRenderPlugin

This breaks out mesh rendering code from PBR material code, which improves the legibility of the code, decouples mesh logic from PBR logic, and opens the door for a future `MaterialPlugin<T: Material>` that handles all of the pipeline setup for arbitrary shader materials.

## Removed `RenderAsset<Shader>` in favor of extracting shaders into RenderPipelineCache

This simplifies the shader import implementation and removes the need to pass around `RenderAssets<Shader>`.

## RenderCommands are now fallible

This allows us to cleanly handle pipelines and shaders not being ready yet. We can abort a render command early in these cases, preventing bevy from trying to set bind groups or issue draw calls for pipelines that couldn't be bound. This could also be used in the future for things like "components not existing on entities yet".
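A small sketch of the early-abort idea, assuming simplified stand-in types: `CommandResult`, `PassState`, and the three functions below are illustrative names rather than Bevy's real `RenderCommand` API.

```rust
/// Simplified stand-in for a render command result; not Bevy's actual type.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
enum CommandResult {
    Success,
    Failure,
}

/// Hypothetical pass state tracking what has been bound and drawn.
#[derive(Default)]
struct PassState {
    bound_pipeline: Option<u32>,
    draw_calls: u32,
}

/// Binds the pipeline if it is ready; fails otherwise.
fn set_pipeline(pass: &mut PassState, ready_pipeline: Option<u32>) -> CommandResult {
    match ready_pipeline {
        Some(id) => {
            pass.bound_pipeline = Some(id);
            CommandResult::Success
        }
        None => CommandResult::Failure,
    }
}

/// Records one draw call.
fn draw(pass: &mut PassState) -> CommandResult {
    pass.draw_calls += 1;
    CommandResult::Success
}

/// Runs the commands in order and aborts at the first failure,
/// so no draw call is issued for a pipeline that could not be bound.
fn run_commands(pass: &mut PassState, ready_pipeline: Option<u32>) -> CommandResult {
    if set_pipeline(pass, ready_pipeline) == CommandResult::Failure {
        return CommandResult::Failure;
    }
    draw(pass)
}
```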

# Next Steps

* Investigate using Naga for "partial typed imports" (ex: `#import bevy_pbr::material::StandardMaterial`, which would import only the StandardMaterial struct)

* Implement `MaterialPlugin<T: Material>` for low-boilerplate custom material shaders

* Move shader import logic into the asset preprocessor once bevy gets support for that.

Fixes #3132

2021-11-18 03:45:02 +00:00

|

|

|

render_asset::RenderAssets,

|

Reorder render sets, refactor bevy_sprite to take advantage (#9236)

This is a continuation of this PR: #8062

# Objective

- Reorder render schedule sets to allow data preparation when phase item

order is known to support improved batching

- Part of the batching/instancing etc plan from here:

https://github.com/bevyengine/bevy/issues/89#issuecomment-1379249074

- The original idea came from @inodentry and proved to be a good one.

Thanks!

- Refactor `bevy_sprite` and `bevy_ui` to take advantage of the new

ordering

## Solution

- Move `Prepare` and `PrepareFlush` after `PhaseSortFlush`

- Add a `PrepareAssets` set that runs in parallel with other systems and

sets in the render schedule.

- Put prepare_assets systems in the `PrepareAssets` set

- If explicit dependencies are needed on Mesh or Material RenderAssets

then depend on the appropriate system.

- Add `ManageViews` and `ManageViewsFlush` sets between

`ExtractCommands` and Queue

- Move `queue_mesh*_bind_group` to the Prepare stage

- Rename them to `prepare_`

- Put systems that prepare resources (buffers, textures, etc.) into a

`PrepareResources` set inside `Prepare`

- Put the `prepare_..._bind_group` systems into a `PrepareBindGroups` set

after `PrepareResources`

- Move `prepare_lights` to the `ManageViews` set

- `prepare_lights` creates views and this must happen before `Queue`

- This system needs refactoring to stop handling all responsibilities

- Gather lights, sort, and create shadow map views. Store sorted light

entities in a resource

- Remove `BatchedPhaseItem`

- Replace `batch_range` with `batch_size` representing how many items to

skip after rendering the item or to skip the item entirely if

`batch_size` is 0.

- `queue_sprites` has been split into `queue_sprites` for queueing phase

items and `prepare_sprites` for batching after the `PhaseSort`

- `PhaseItem`s are still inserted in `queue_sprites`

- After sorting, adjacent compatible sprite phase items are accumulated

into `SpriteBatch` components on the first entity of each batch,

containing a range of vertex indices. The associated `PhaseItem`'s

`batch_size` is updated appropriately.

- `SpriteBatch` items are then drawn skipping over the other items in

the batch based on the value in `batch_size`

- A very similar refactor was performed on `bevy_ui`
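The `batch_size` scheme described in the list above can be sketched as follows. This is an illustration under assumptions: `Item`, its `key` field (standing in for whatever makes two items batch-compatible), and both functions are hypothetical, not the actual `bevy_sprite` code.

```rust
/// Hypothetical phase item: `key` stands in for batch compatibility
/// (for example, same texture), `batch_size` follows the description above.
#[derive(Debug)]
struct Item {
    key: u32,
    batch_size: usize,
}

/// After sorting, merges runs of adjacent compatible items: the first item of
/// each run records the run length, the rest are marked with 0 (skipped).
fn batch_adjacent(items: &mut [Item]) {
    let mut start = 0;
    while start < items.len() {
        let mut end = start + 1;
        while end < items.len() && items[end].key == items[start].key {
            end += 1;
        }
        items[start].batch_size = end - start;
        for item in &mut items[start + 1..end] {
            item.batch_size = 0;
        }
        start = end;
    }
}

/// Lists the draws that would be issued: one per item with a non-zero batch size.
fn draw_calls(items: &[Item]) -> Vec<(u32, usize)> {
    items
        .iter()
        .filter(|item| item.batch_size > 0)
        .map(|item| (item.key, item.batch_size))
        .collect()
}
```

Only adjacent items are merged, so an incompatible item sorted between two compatible ones splits them into separate batches.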

---

## Changelog

Changed:

- Reordered and reworked render app schedule sets. The main change is

that data is extracted, queued, sorted, and then prepared when the order

of data is known.

- Refactor `bevy_sprite` and `bevy_ui` to take advantage of the

reordering.

## Migration Guide

- Assets such as materials and meshes should now be created in

`PrepareAssets` e.g. `prepare_assets<Mesh>`

- Queueing entities to `RenderPhase`s continues to be done in `Queue`

e.g. `queue_sprites`

- Preparing resources (textures, buffers, etc.) should now be done in

`PrepareResources`, e.g. `prepare_prepass_textures`,

`prepare_mesh_uniforms`

- Preparing bind groups should now be done in `PrepareBindGroups`, e.g.

`prepare_mesh_bind_group`

- Any batching or instancing can now be done in `Prepare` where the

order of the phase items is known e.g. `prepare_sprites`
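As a rough mental model of the ordering this commit message describes, the sets can be written as an ordered enum. This is only a sketch: the `Set` enum and `runs_before` helper are hypothetical, the list includes only sets named in the text above, and `PrepareAssets` is left out because it is described as running in parallel with the others.

```rust
/// Sets named in the commit message, declared in the order the text implies.
/// The derived `Ord` follows declaration order.
#[derive(Debug, Clone, Copy, PartialEq, Eq, PartialOrd, Ord)]
enum Set {
    ExtractCommands,
    ManageViews,
    ManageViewsFlush,
    Queue,
    PhaseSort,
    PhaseSortFlush,
    // The next two sit inside `Prepare`.
    PrepareResources,
    PrepareBindGroups,
    PrepareFlush,
}

/// True if `a` is scheduled before `b` in this simplified linear model.
fn runs_before(a: Set, b: Set) -> bool {
    a < b
}
```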

## Next Steps

- Introduce some generic mechanism to ensure items that can be batched

are grouped in the phase item order, currently you could easily have

`[sprite at z 0, mesh at z 0, sprite at z 0]` preventing batching.

- Investigate improved orderings for building the MeshUniform buffer

- Implementing batching across the rest of bevy

---------

Co-authored-by: Robert Swain <robert.swain@gmail.com>

Co-authored-by: robtfm <50659922+robtfm@users.noreply.github.com>

2023-08-27 14:33:49 +00:00

|

|

|

render_phase::{PhaseItem, RenderCommand, RenderCommandResult, RenderPhase, TrackedRenderPass},

|

Migrate to encase from crevice (#4339)

# Objective

- Unify buffer APIs

- Also see #4272

## Solution

- Replace vendored `crevice` with `encase`

---

## Changelog

Changed `StorageBuffer`

Added `DynamicStorageBuffer`

Replaced `UniformVec` with `UniformBuffer`

Replaced `DynamicUniformVec` with `DynamicUniformBuffer`

## Migration Guide

### `StorageBuffer`

removed `set_body()`, `values()`, `values_mut()`, `clear()`, `push()`, `append()`

added `set()`, `get()`, `get_mut()`

### `UniformVec` -> `UniformBuffer`

renamed `uniform_buffer()` to `buffer()`

removed `len()`, `is_empty()`, `capacity()`, `push()`, `reserve()`, `clear()`, `values()`

added `set()`, `get()`

### `DynamicUniformVec` -> `DynamicUniformBuffer`

renamed `uniform_buffer()` to `buffer()`

removed `capacity()`, `reserve()`

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

2022-05-18 21:09:21 +00:00

|

|

|

render_resource::*,

|

2022-10-26 20:13:59 +00:00

|

|

|

renderer::{RenderDevice, RenderQueue},

|

|

|

|

|

texture::{

|

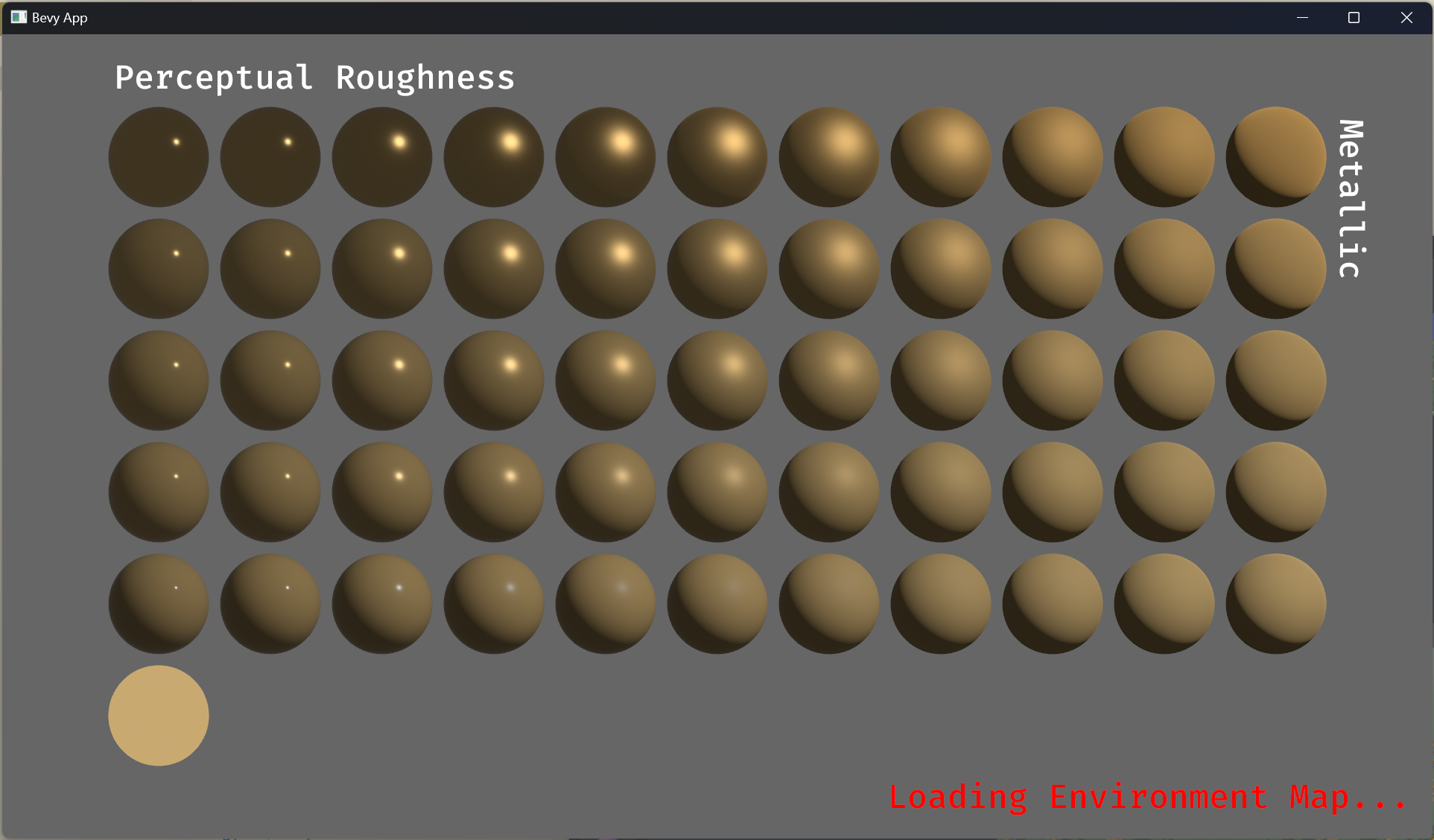

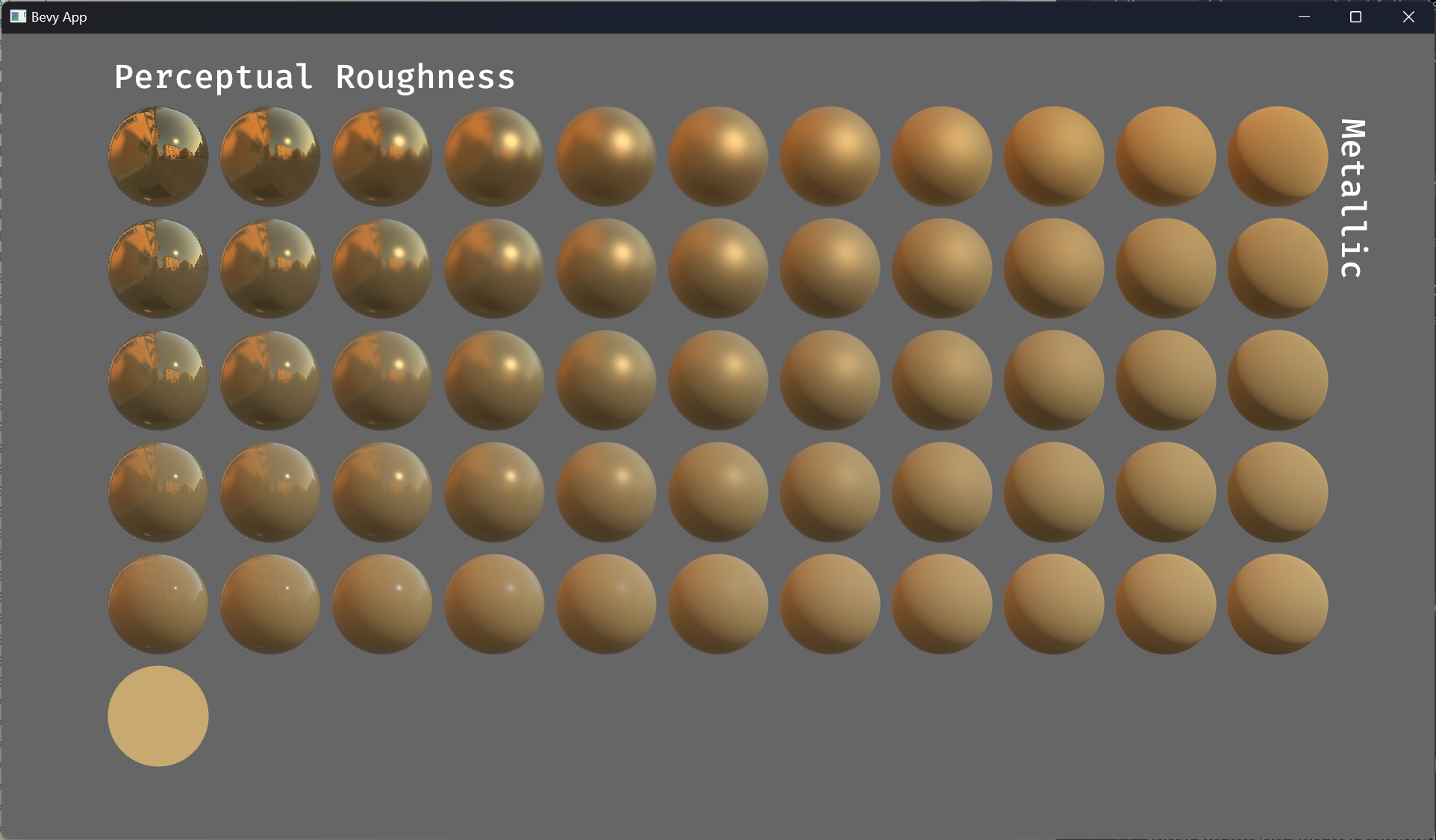

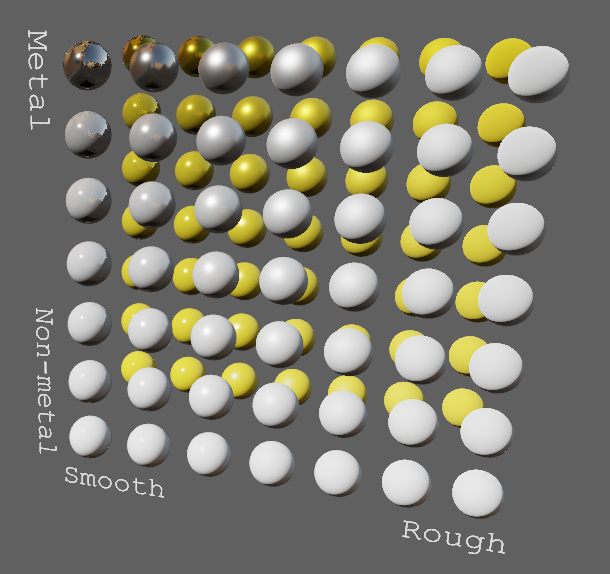

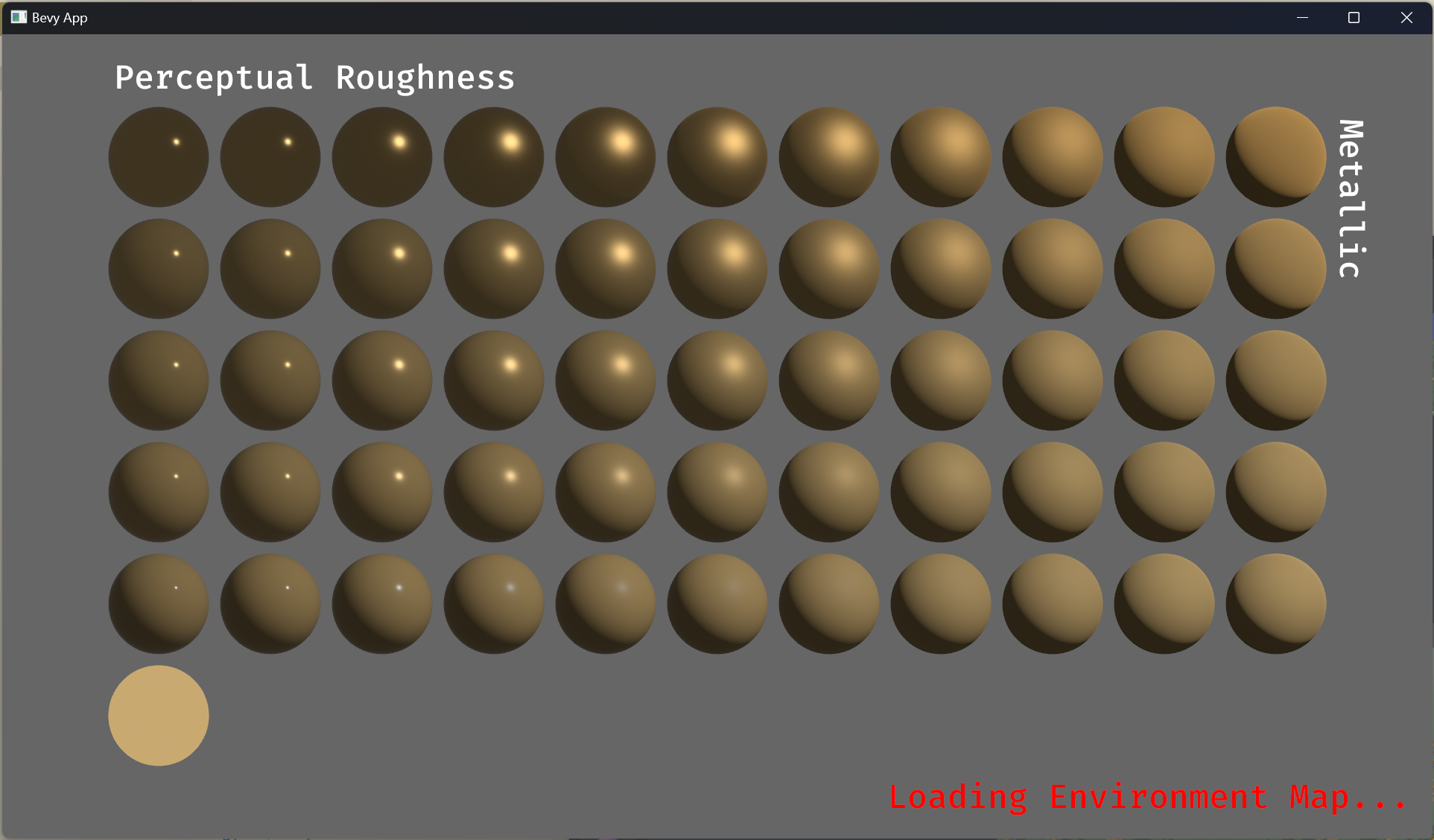

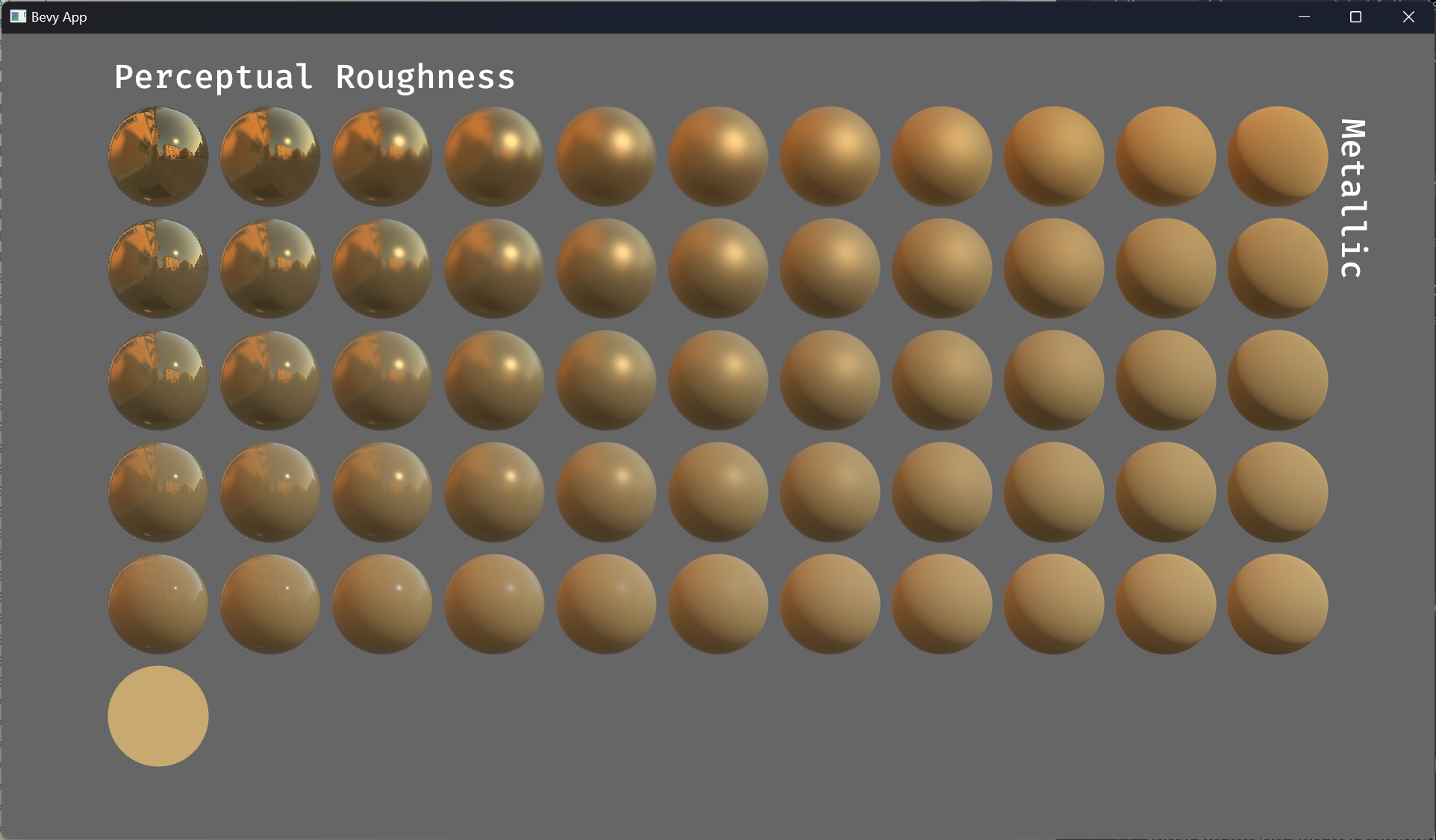

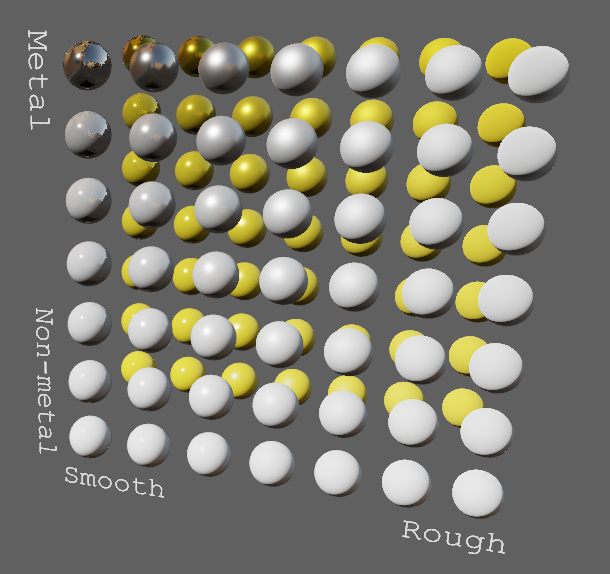

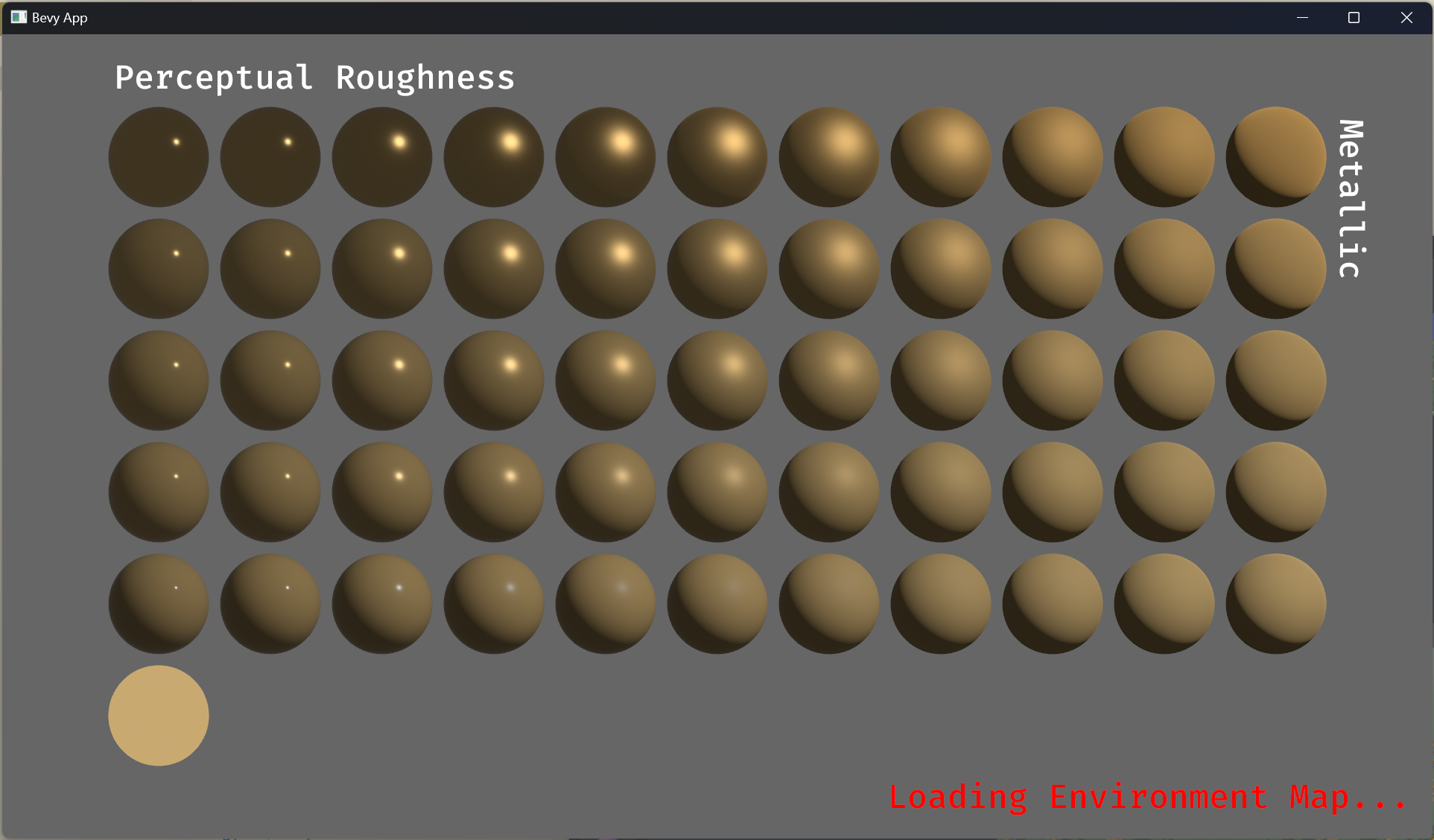

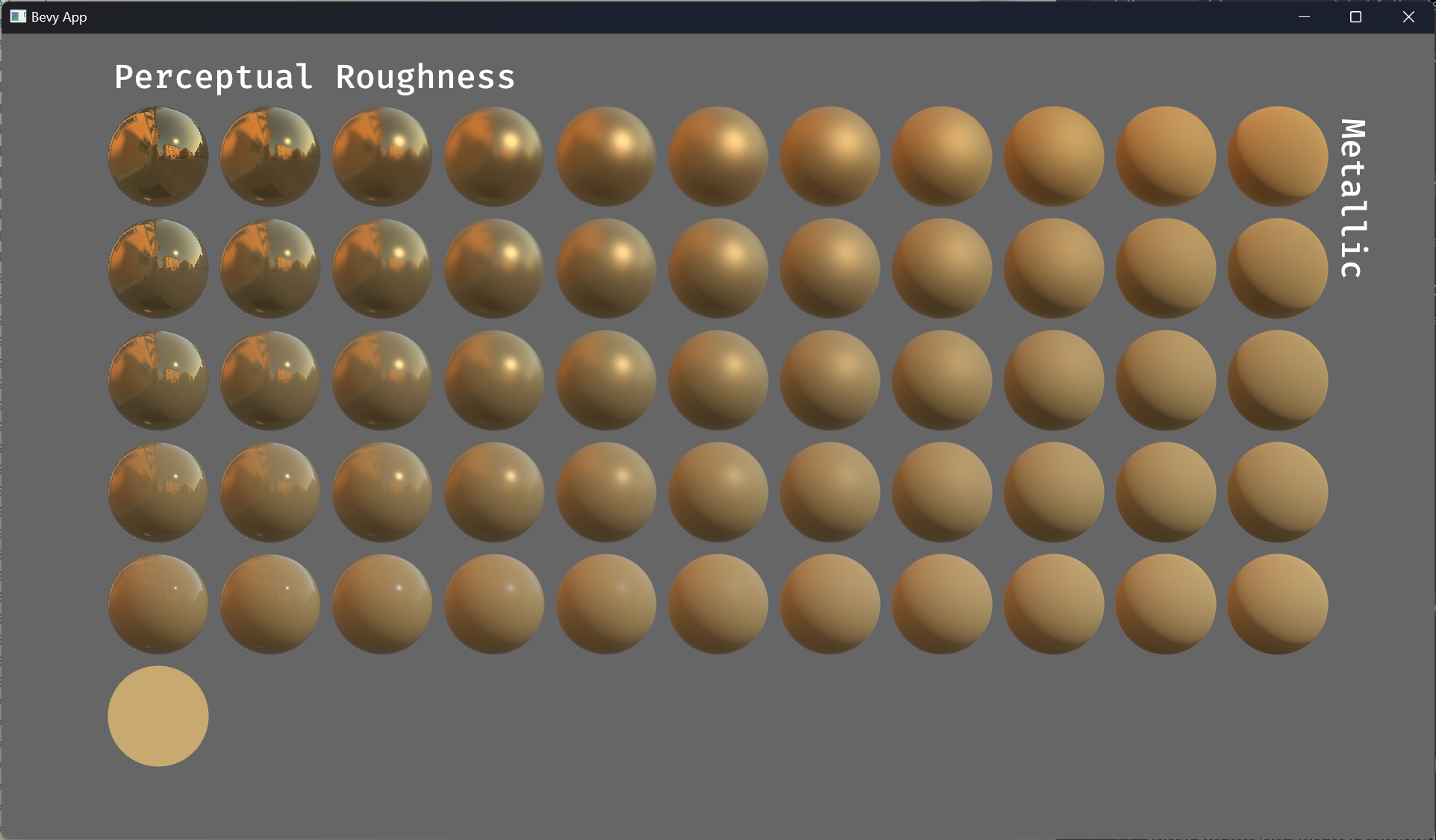

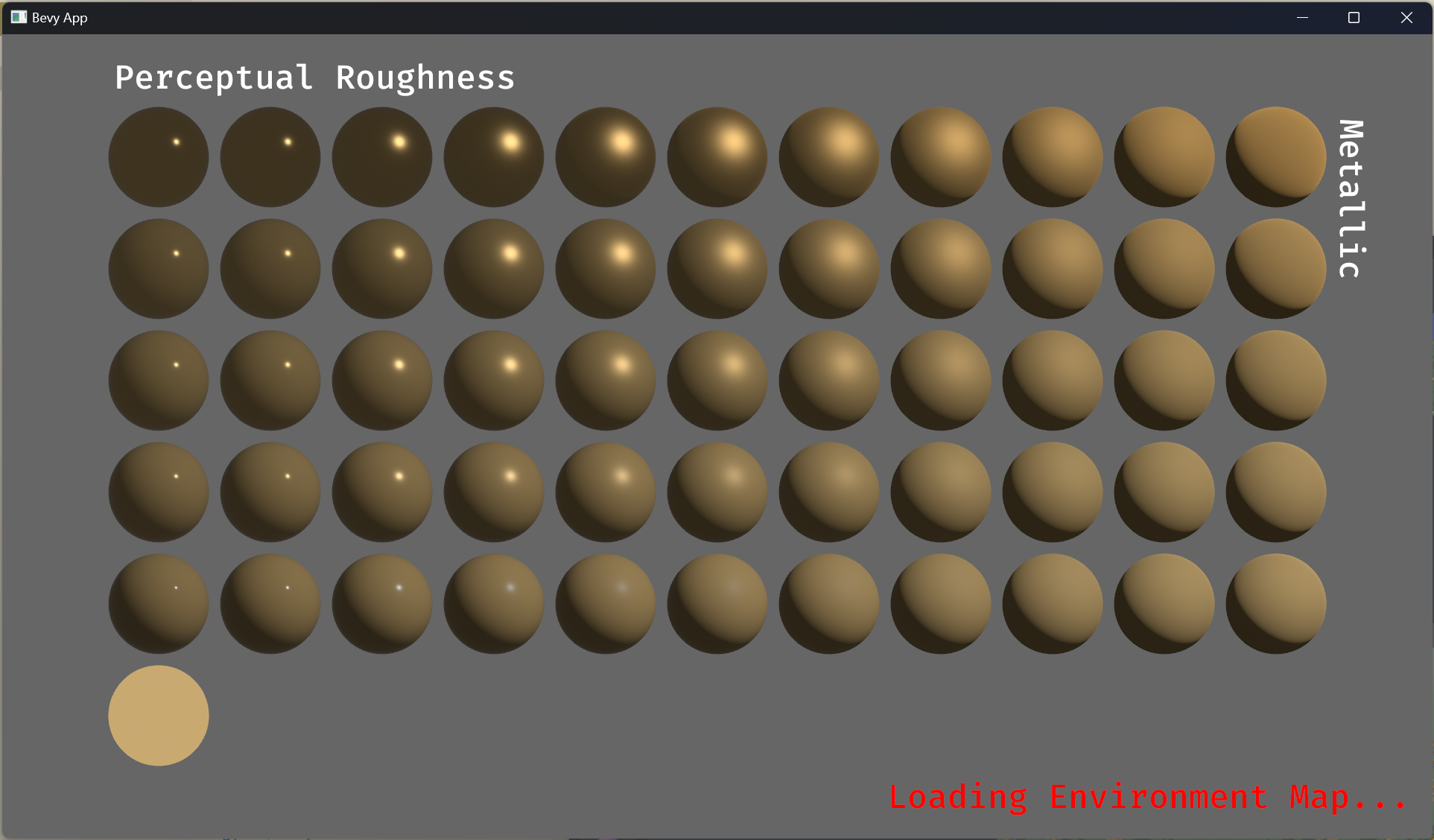

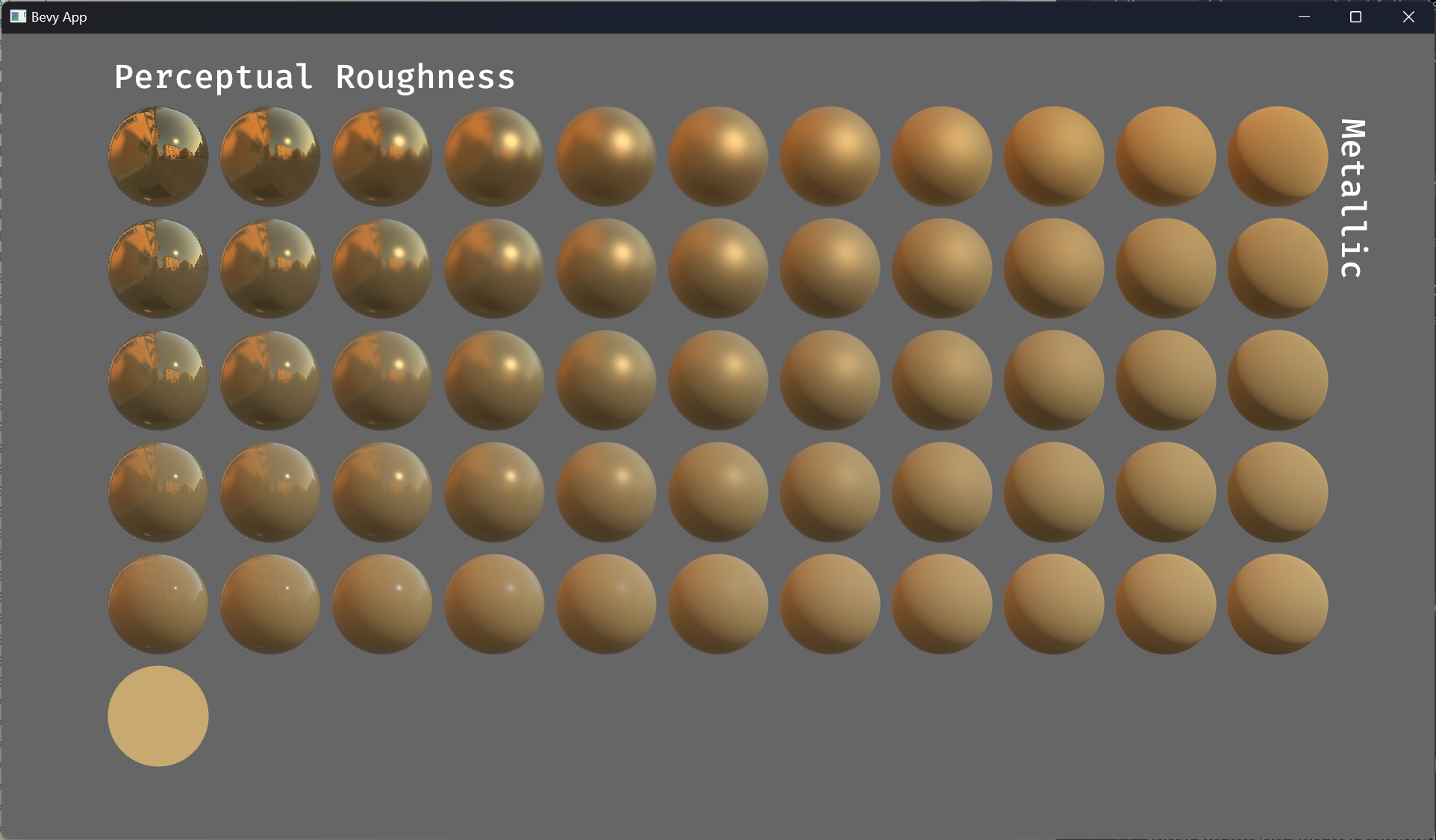

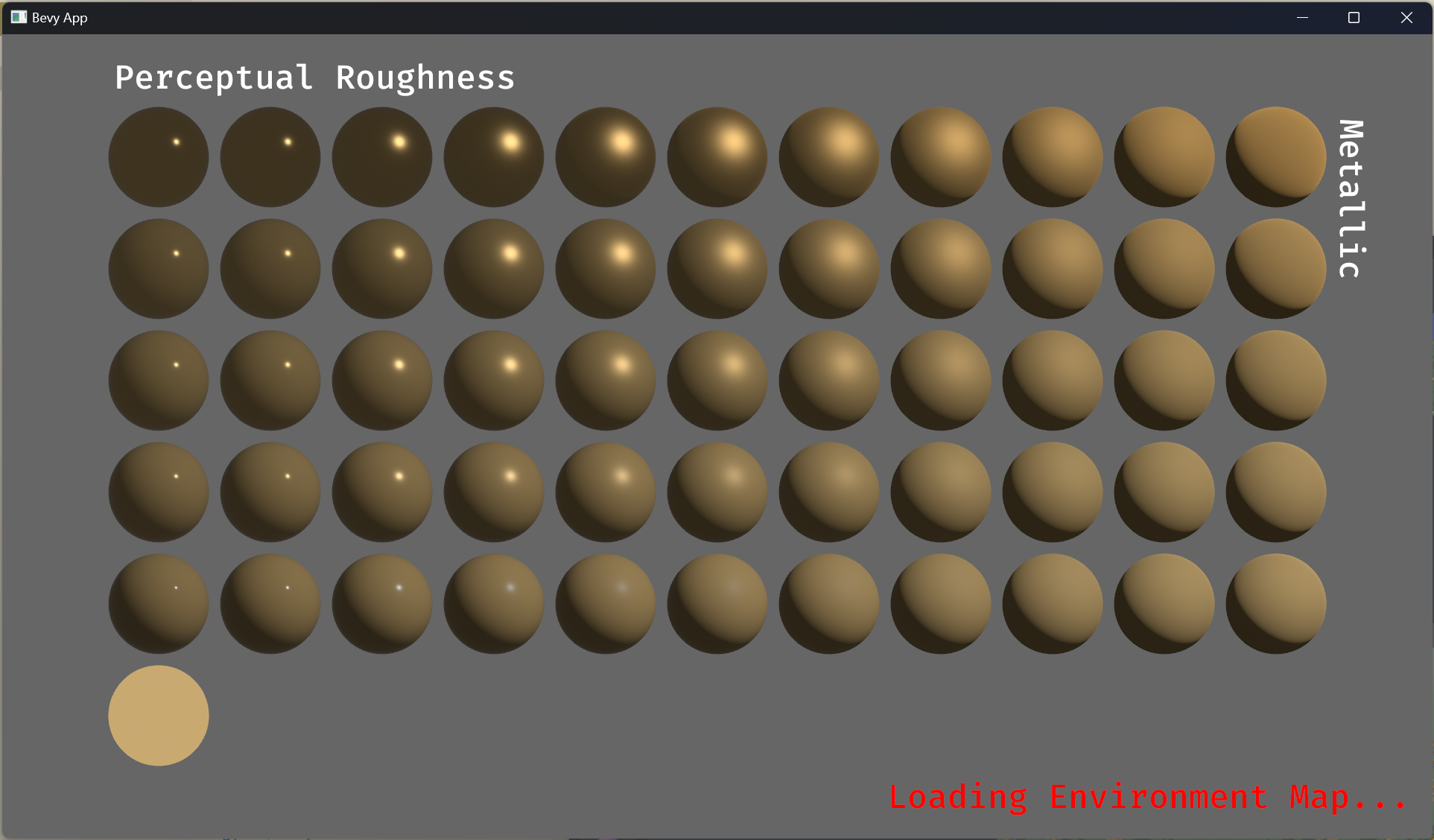

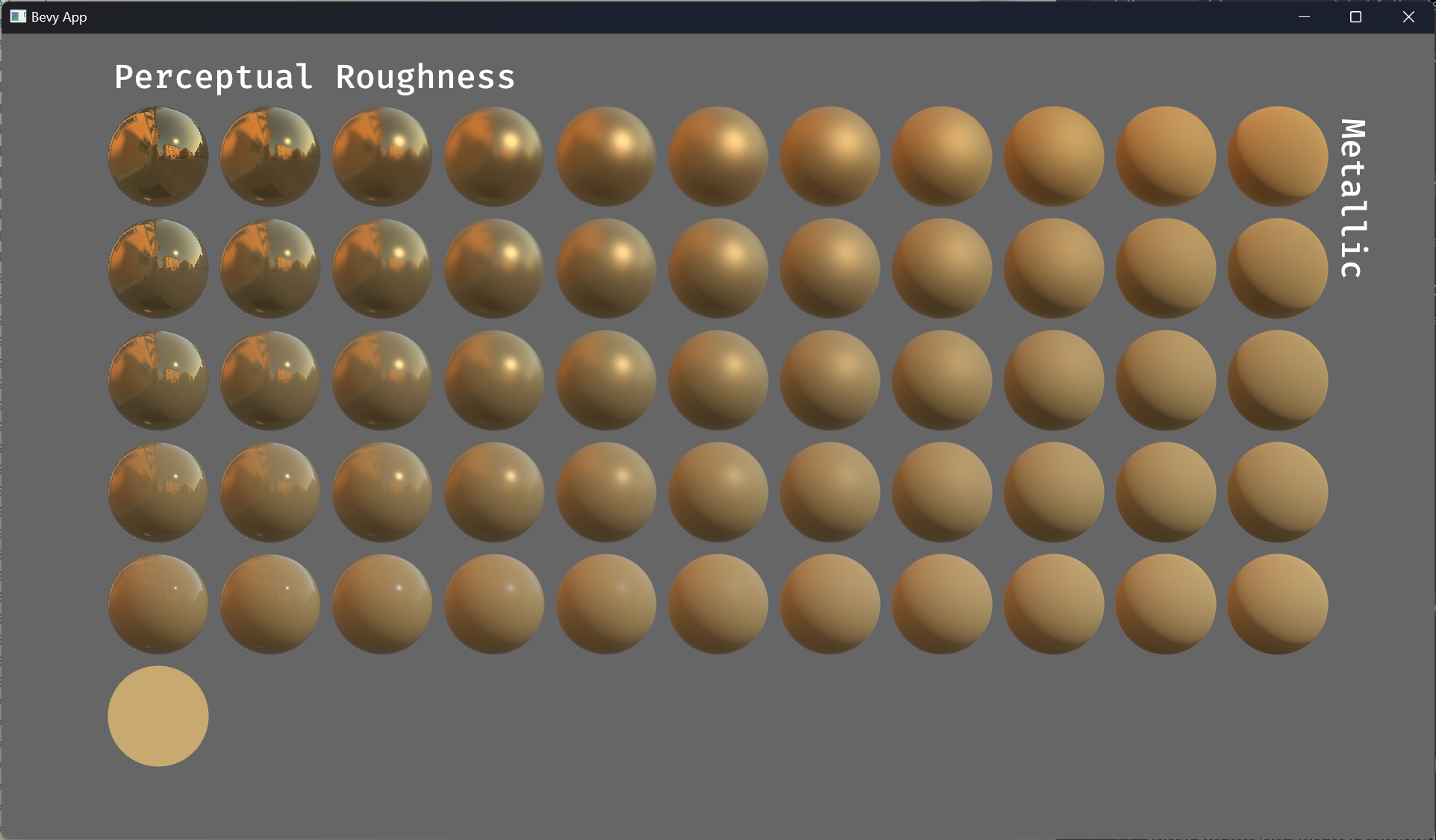

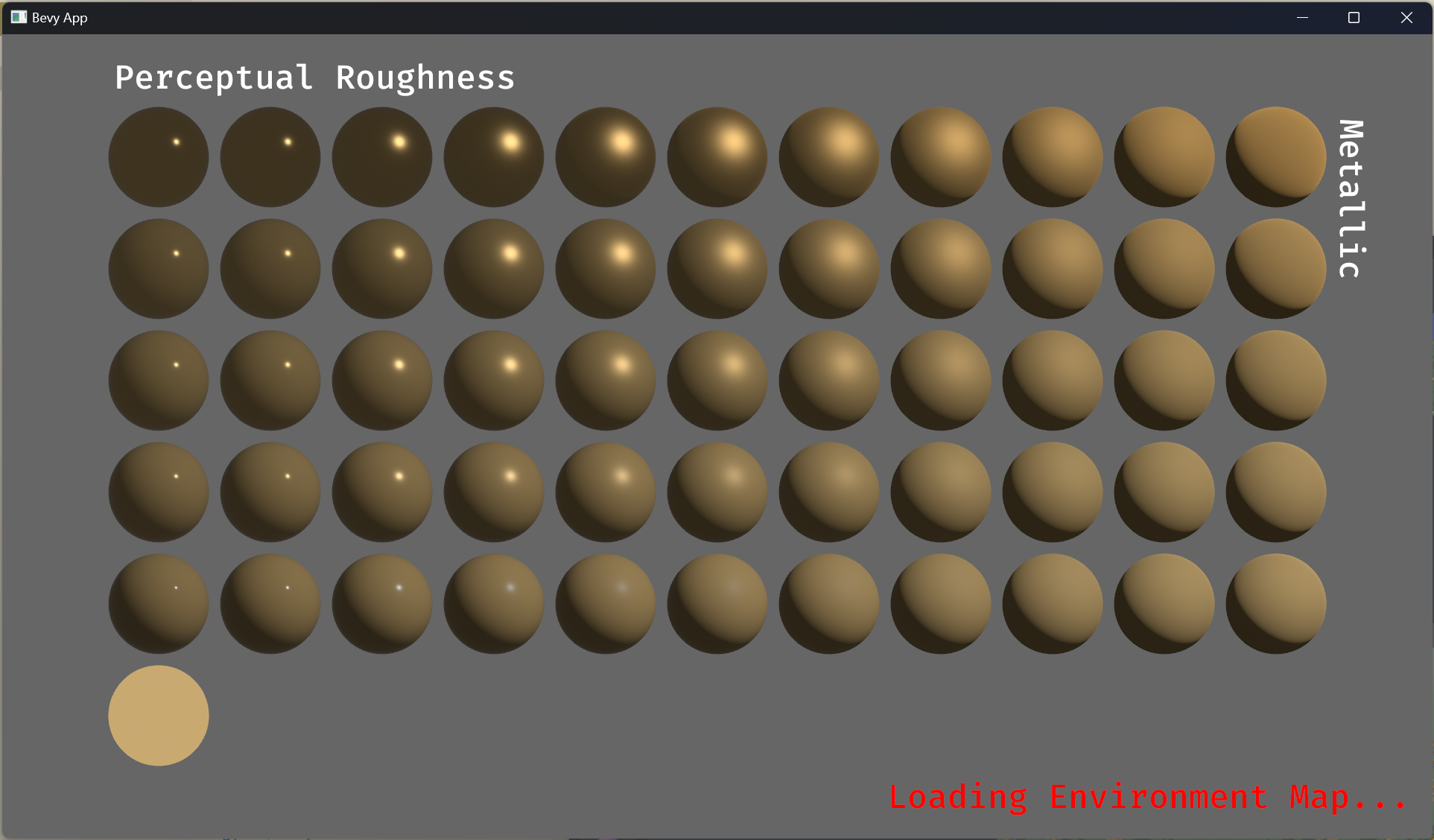

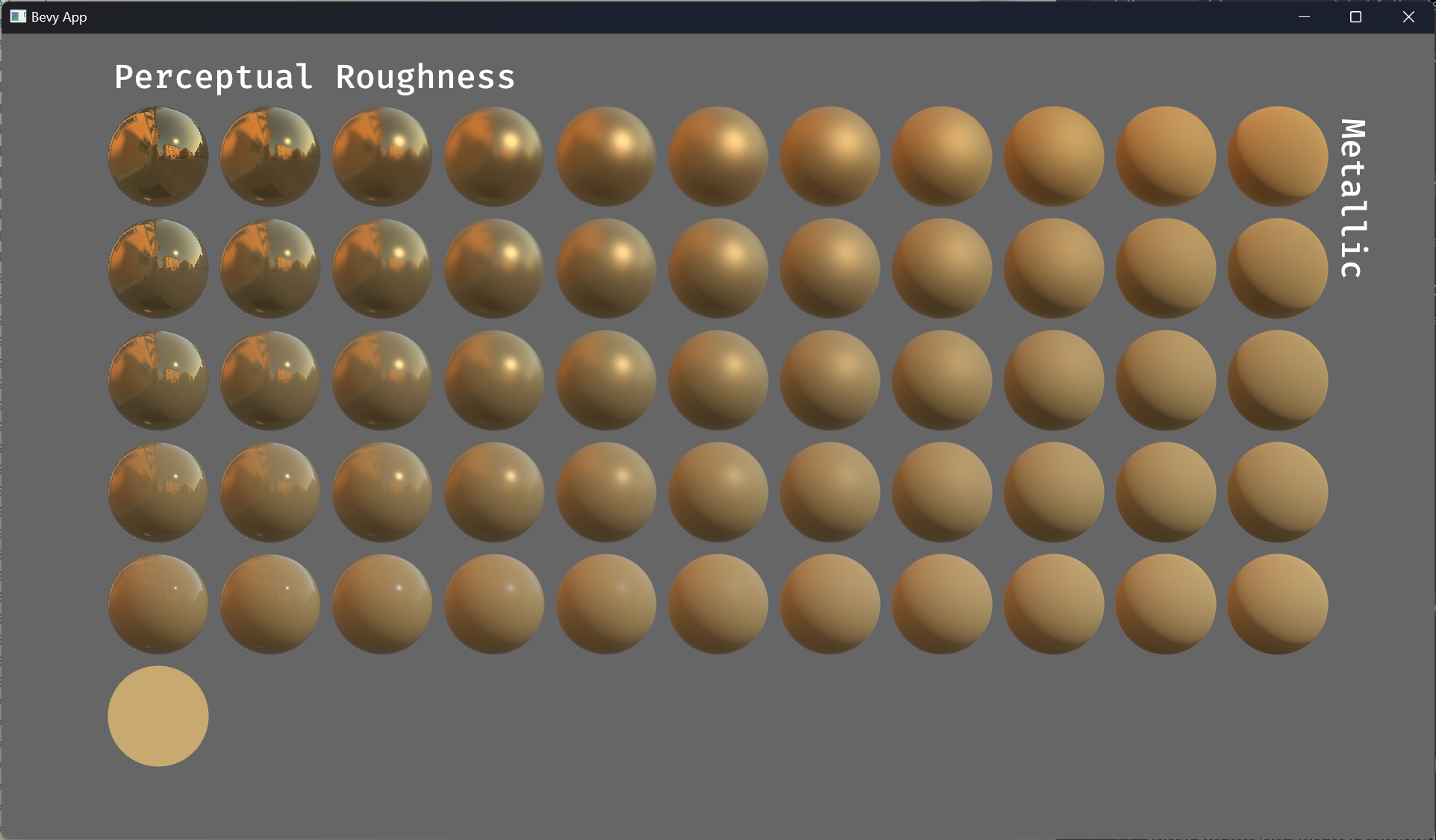

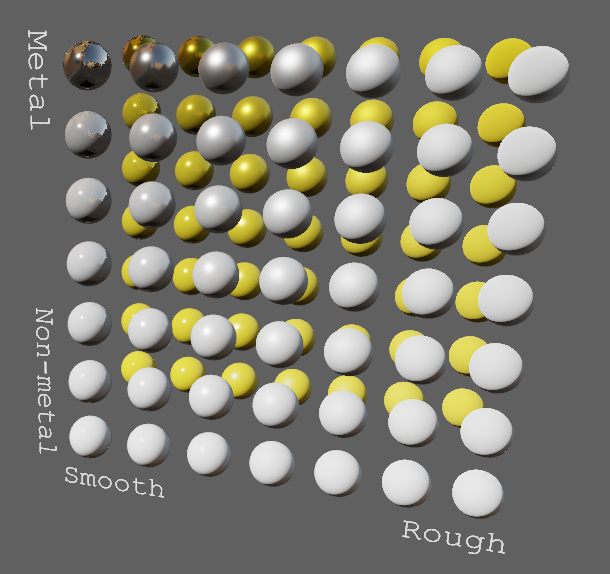

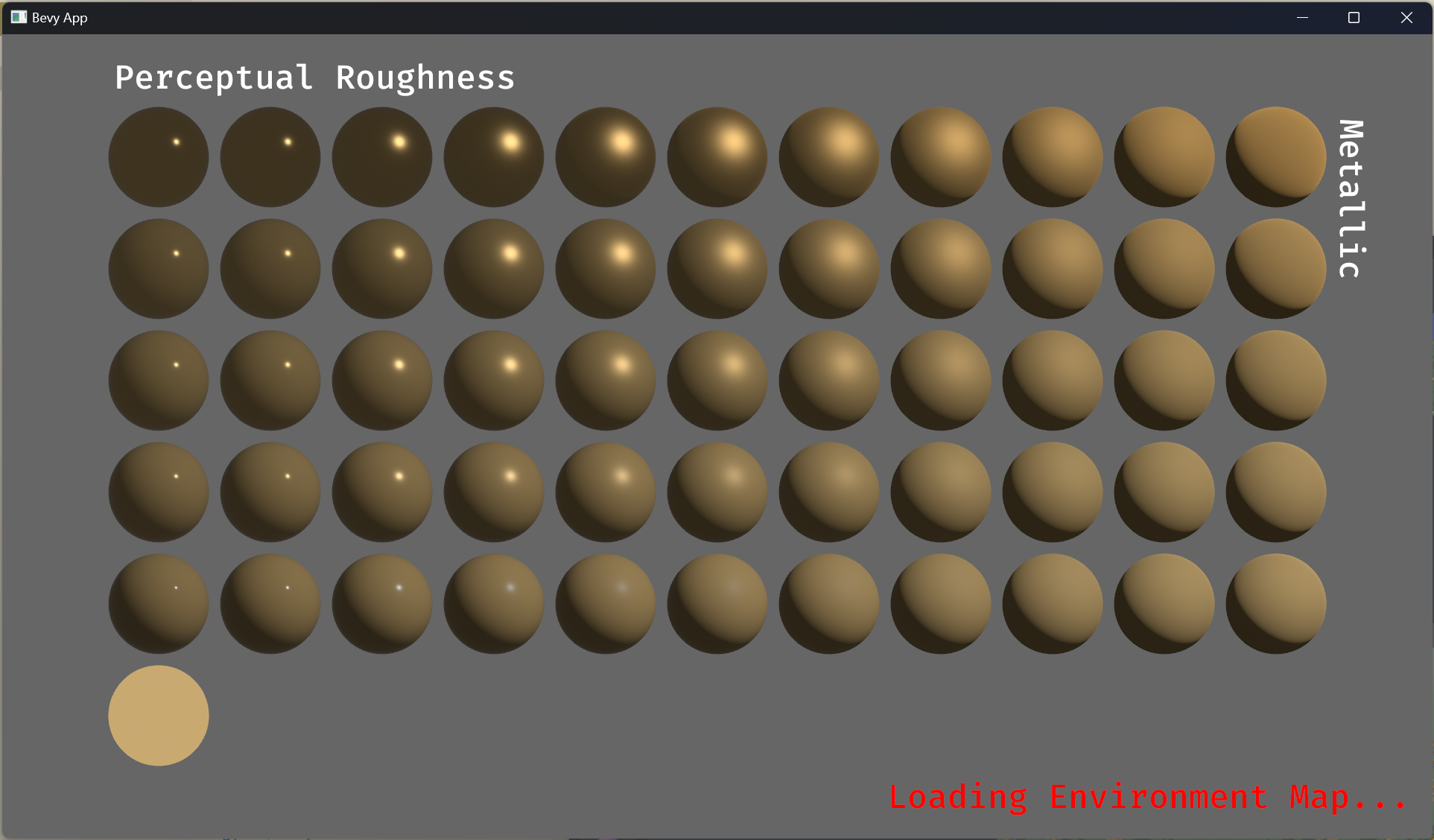

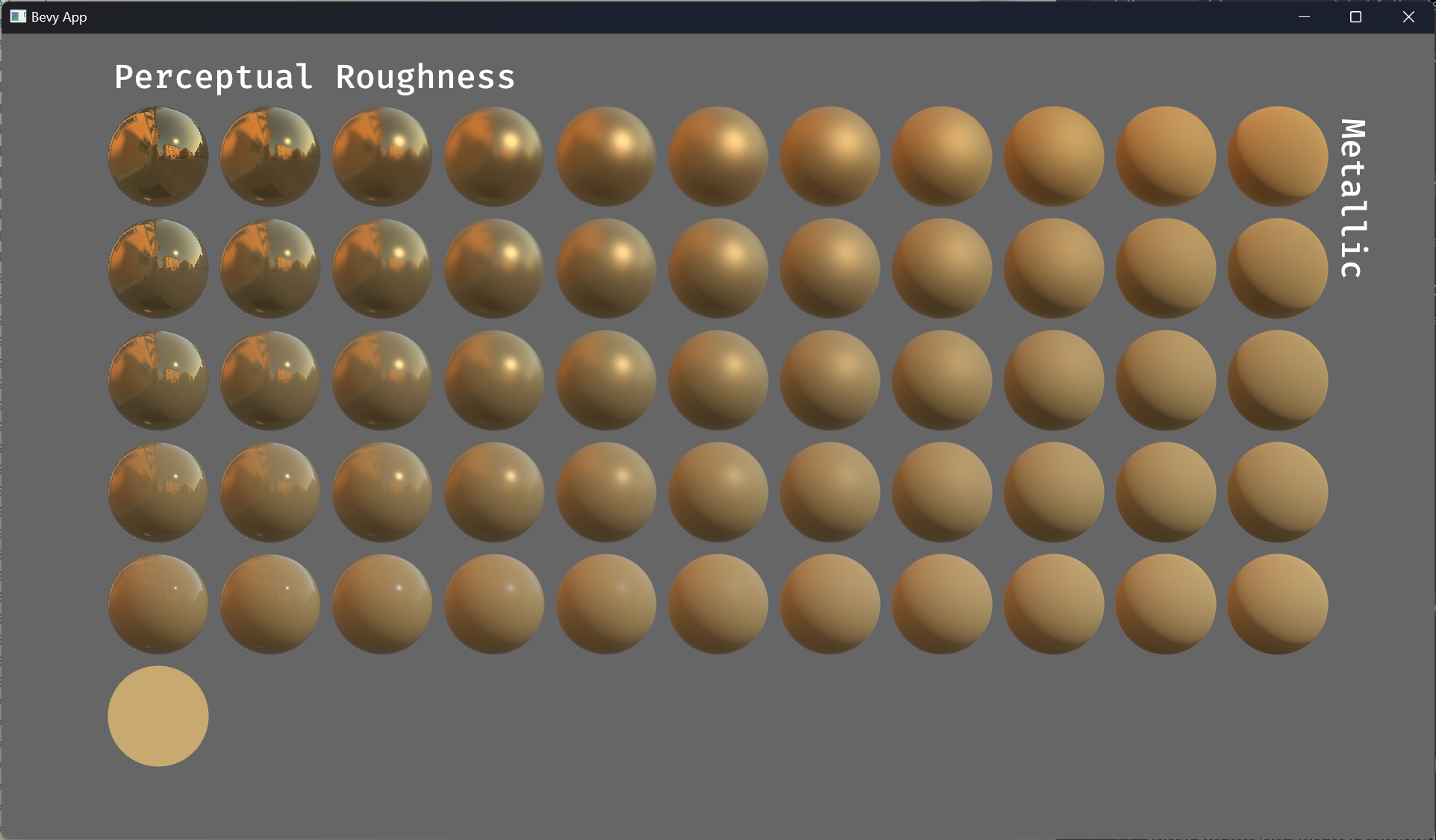

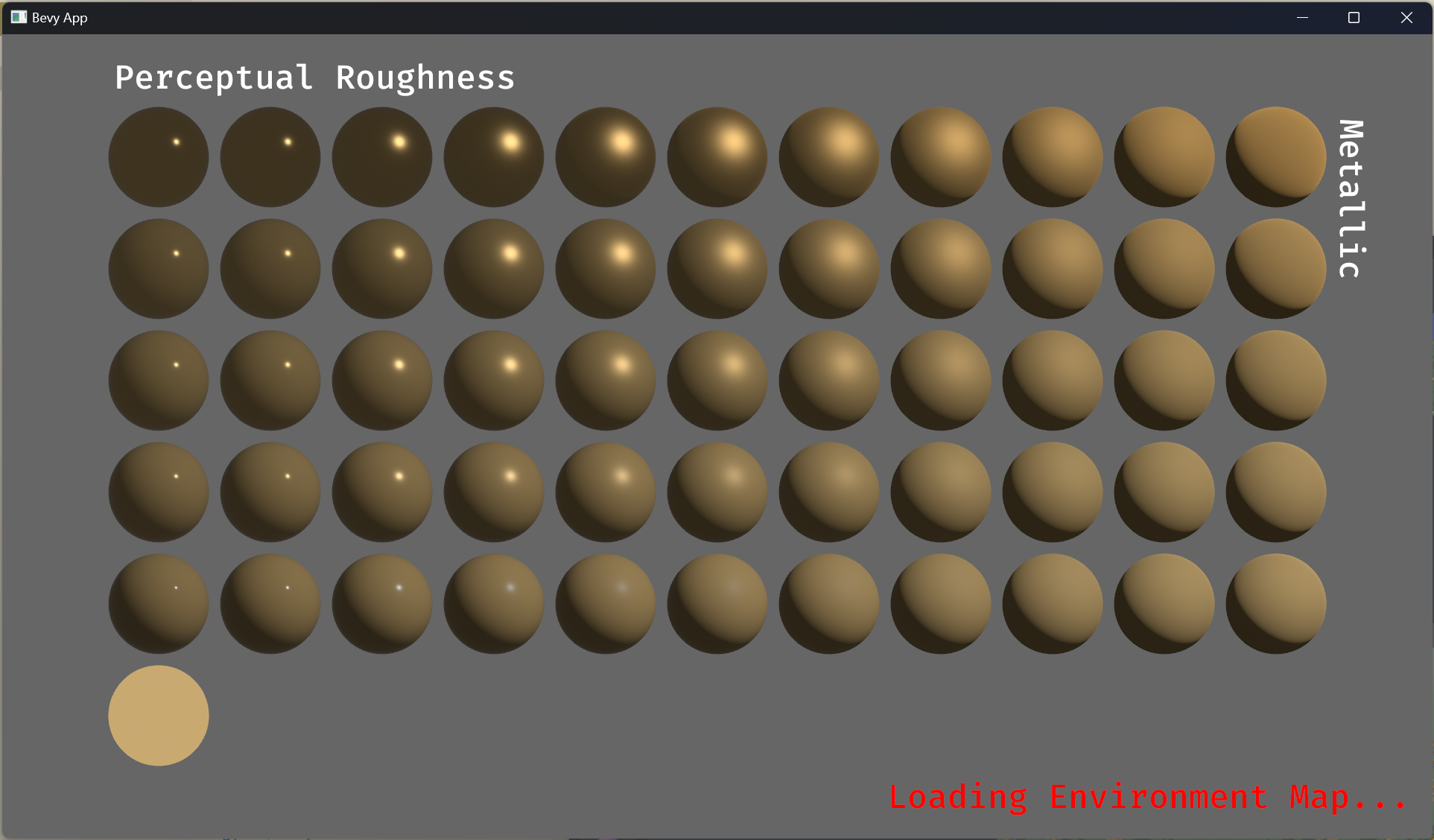

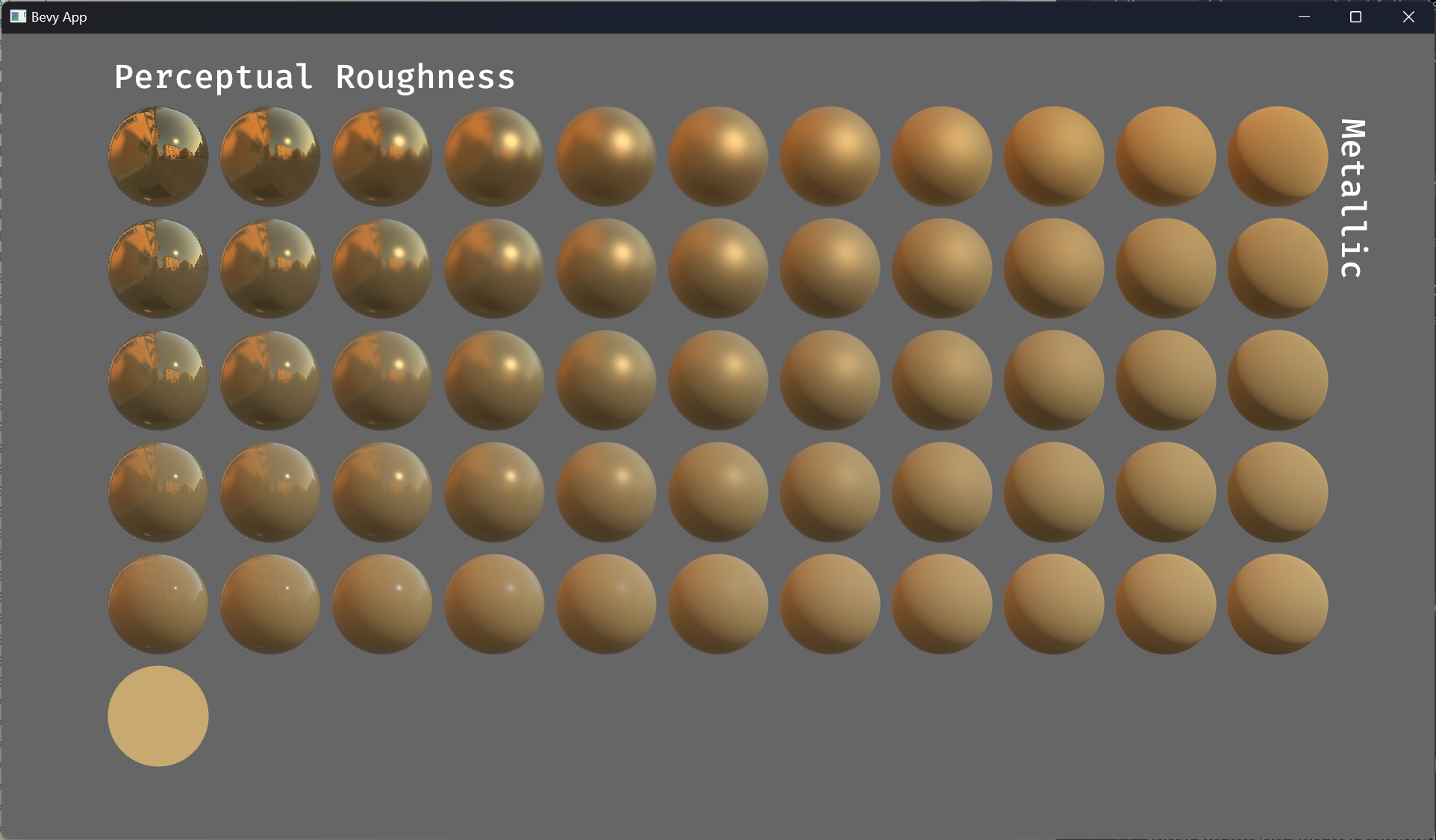

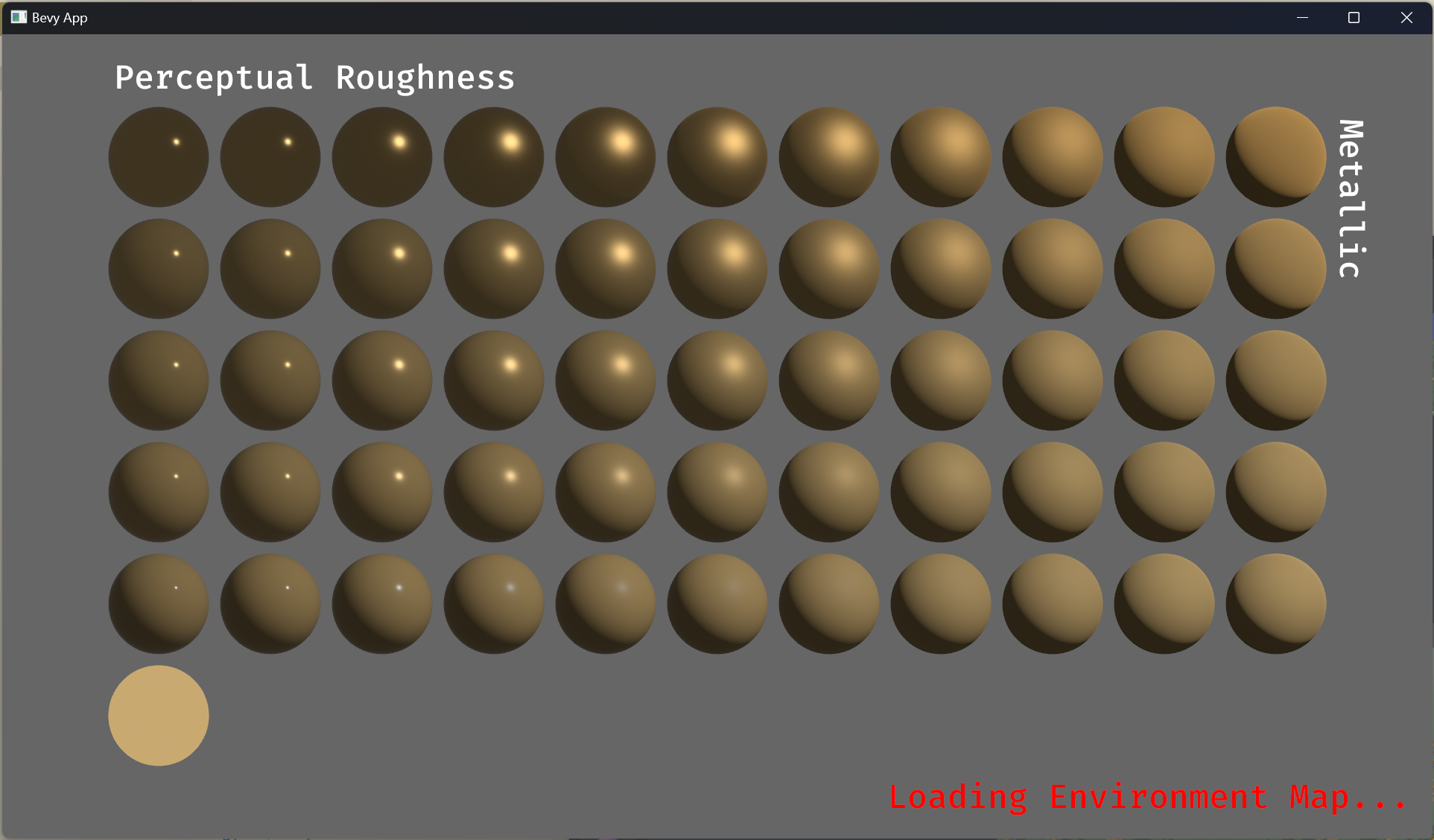

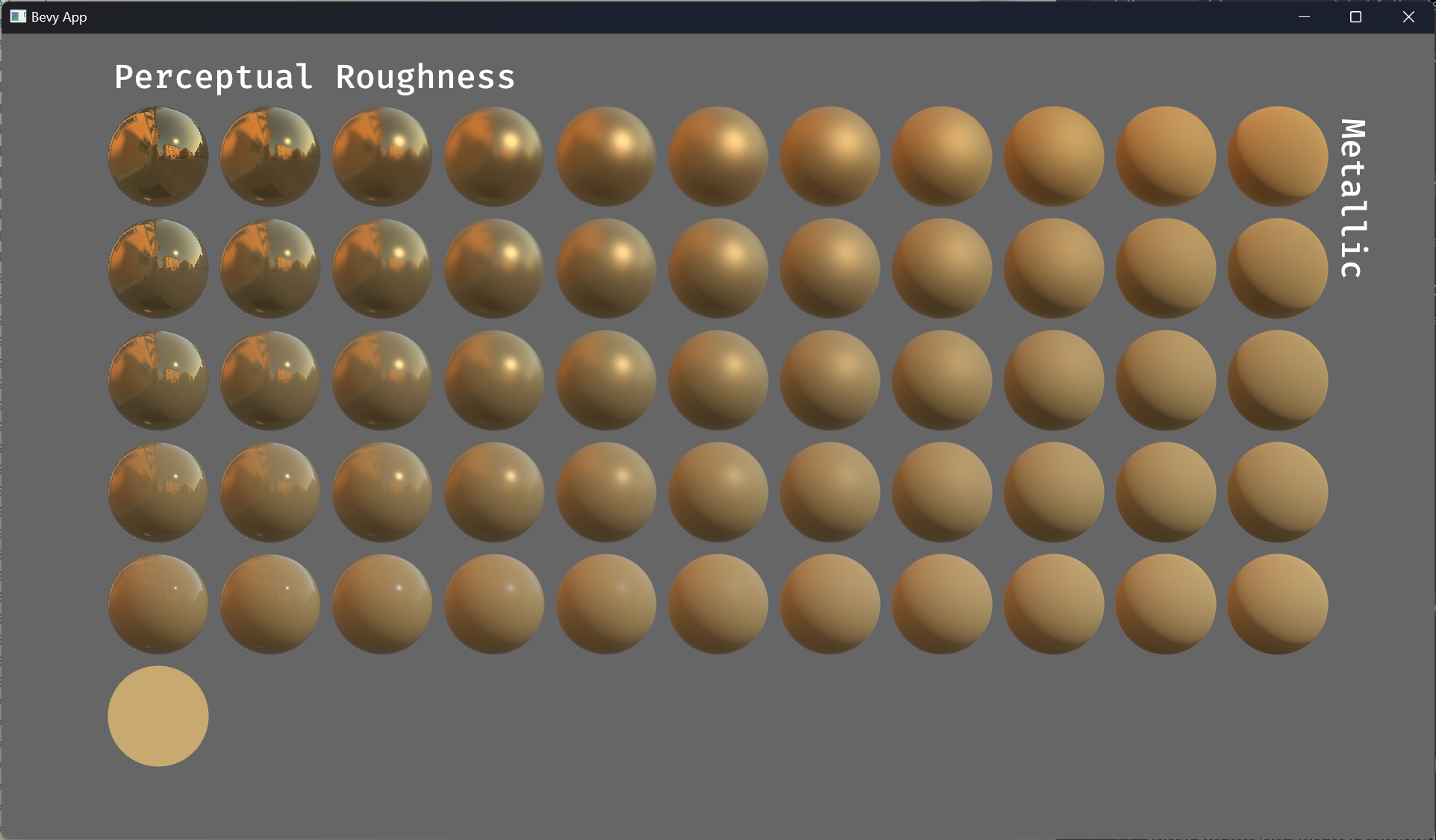

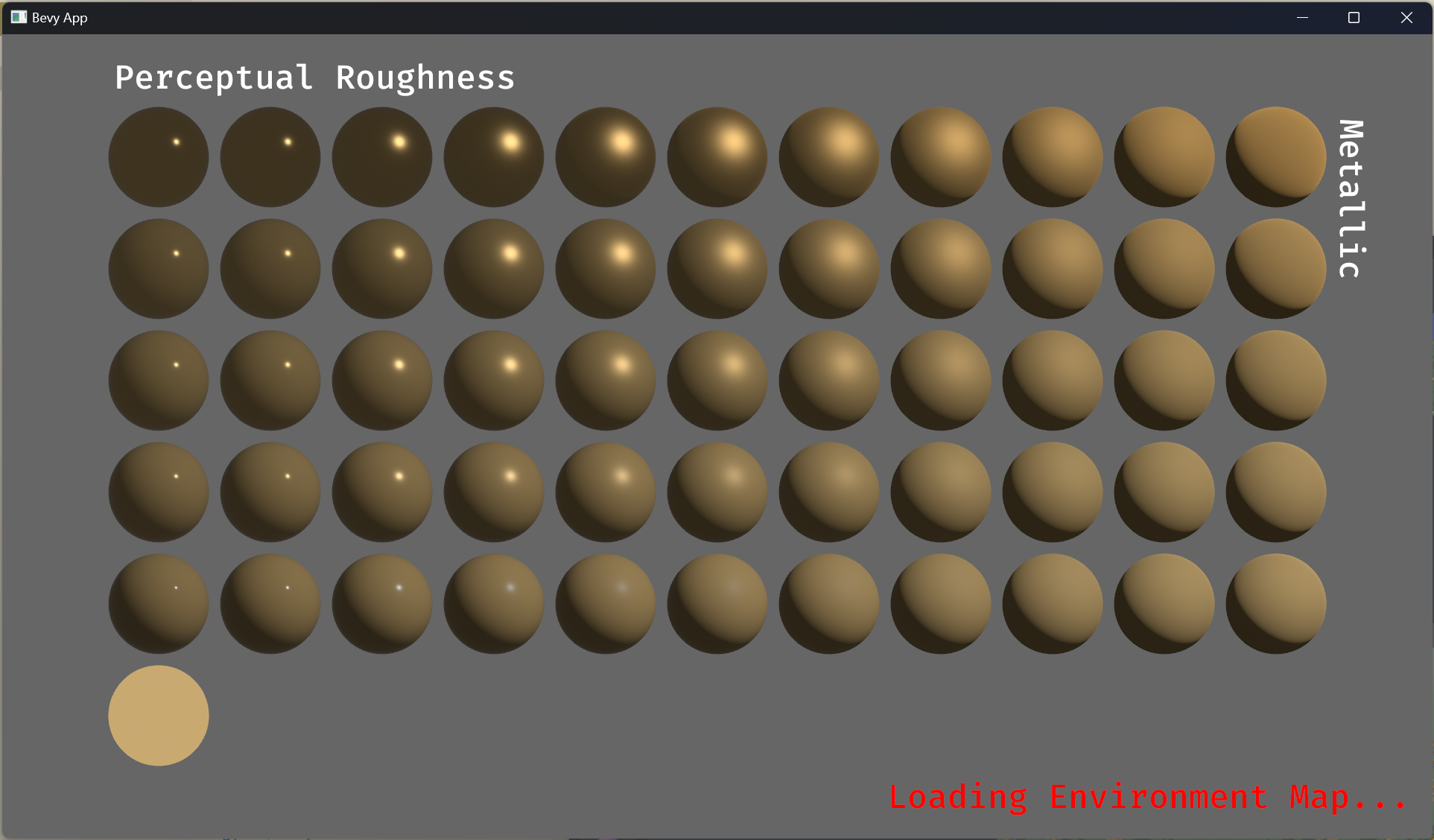

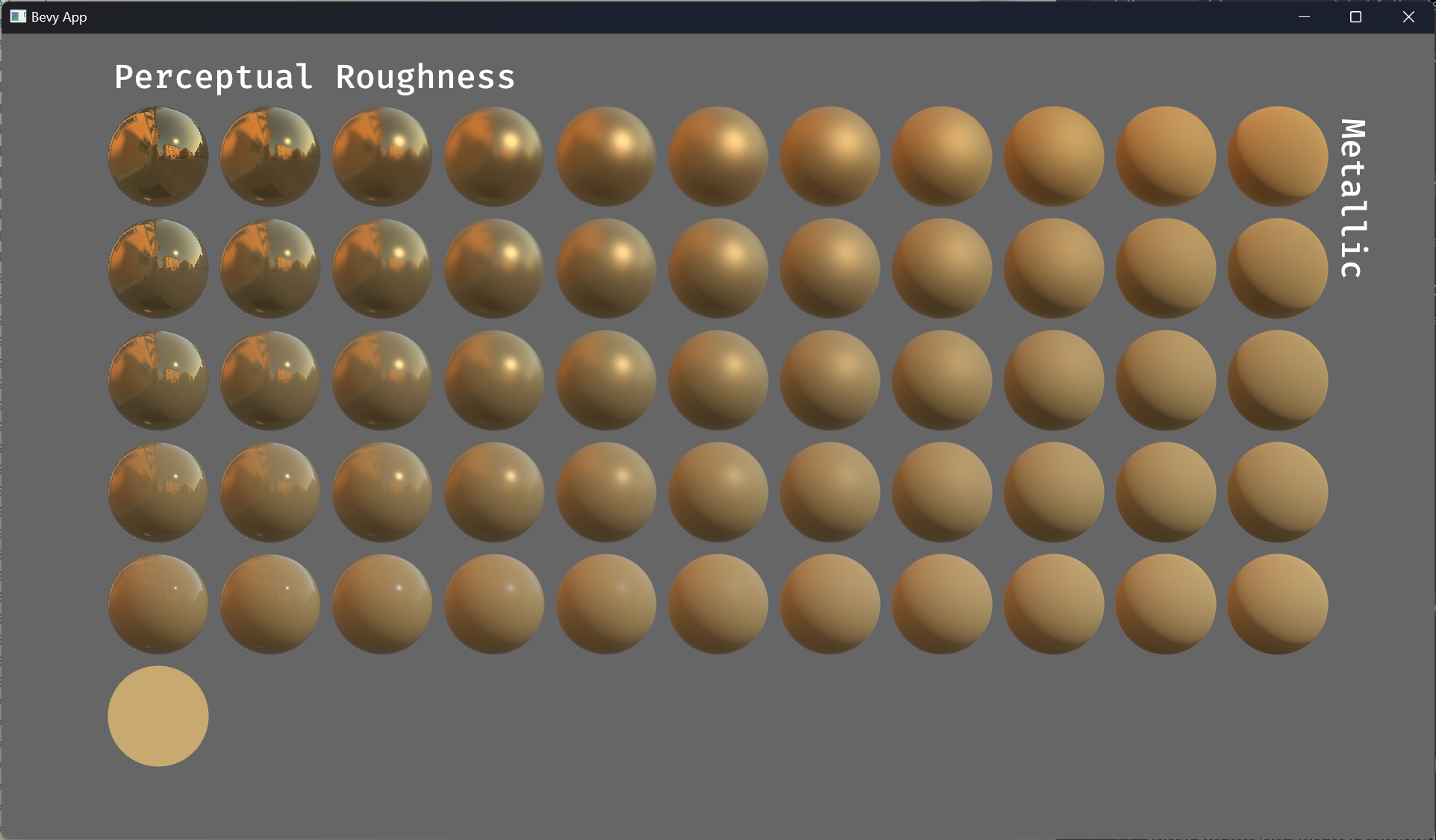

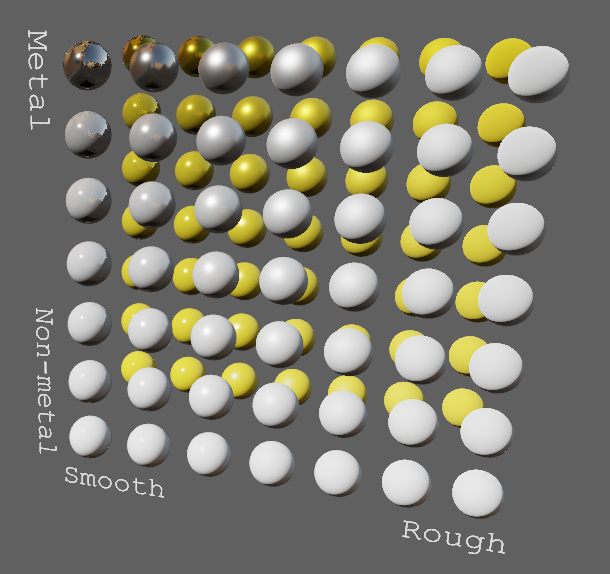

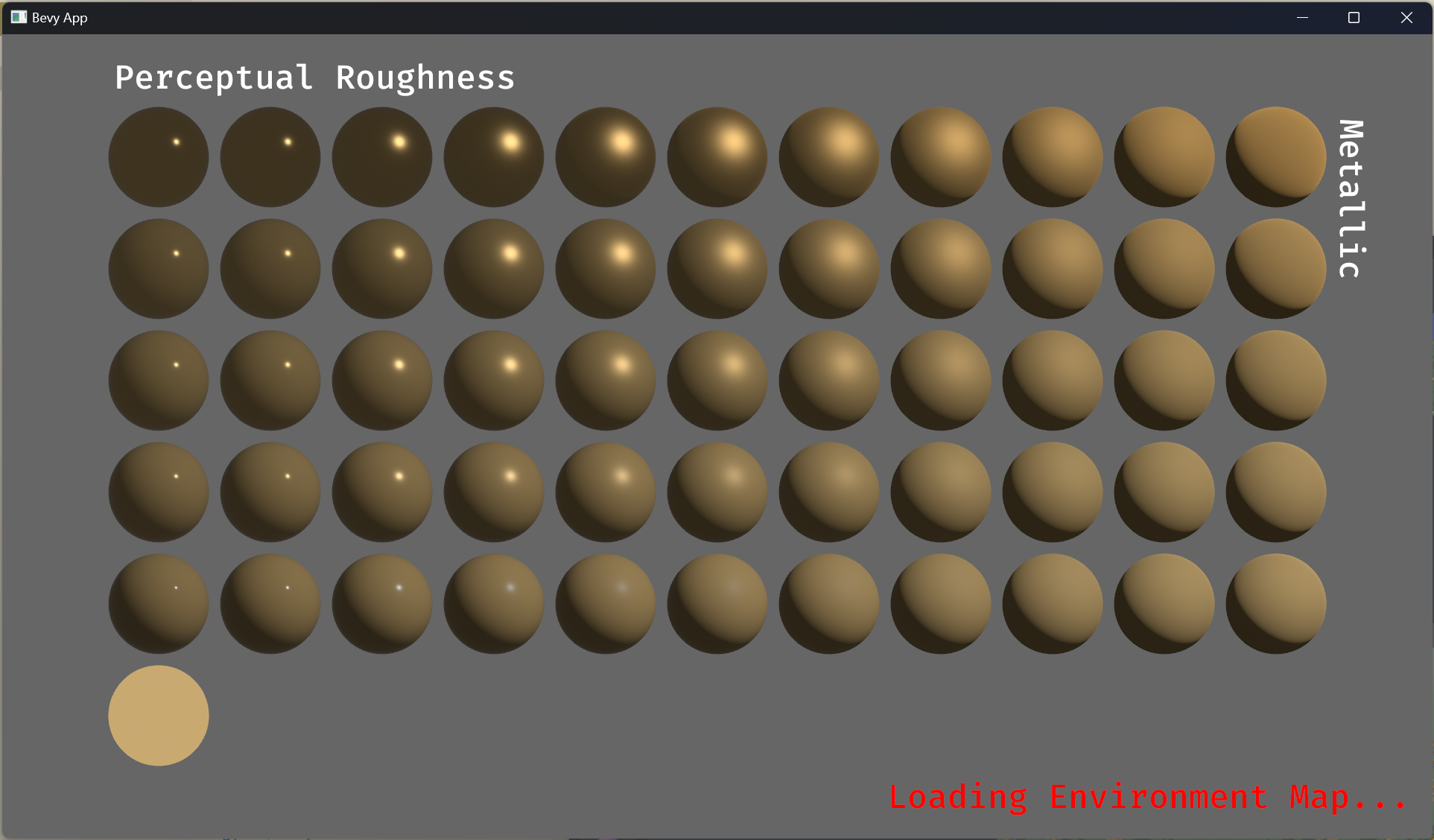

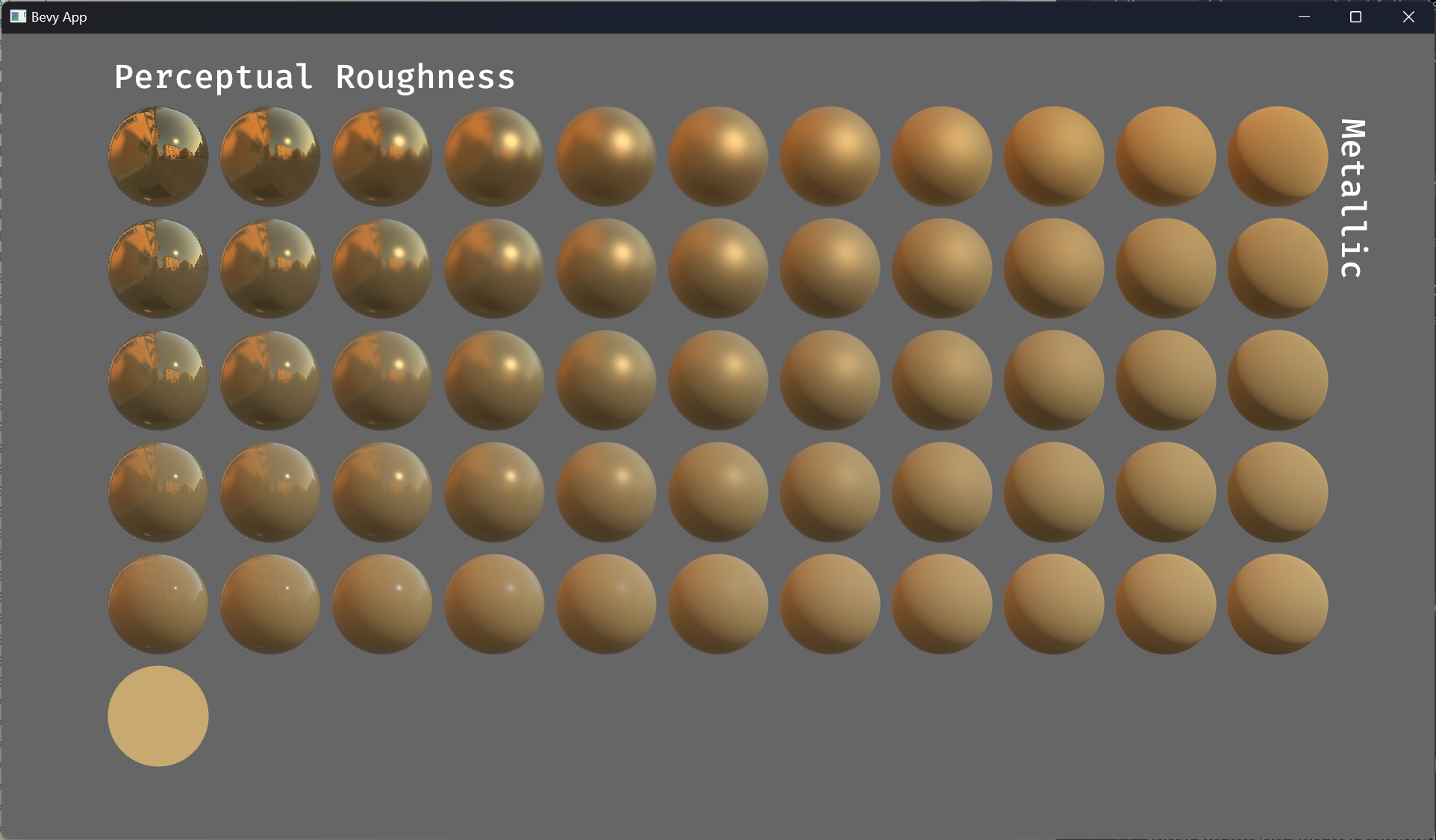

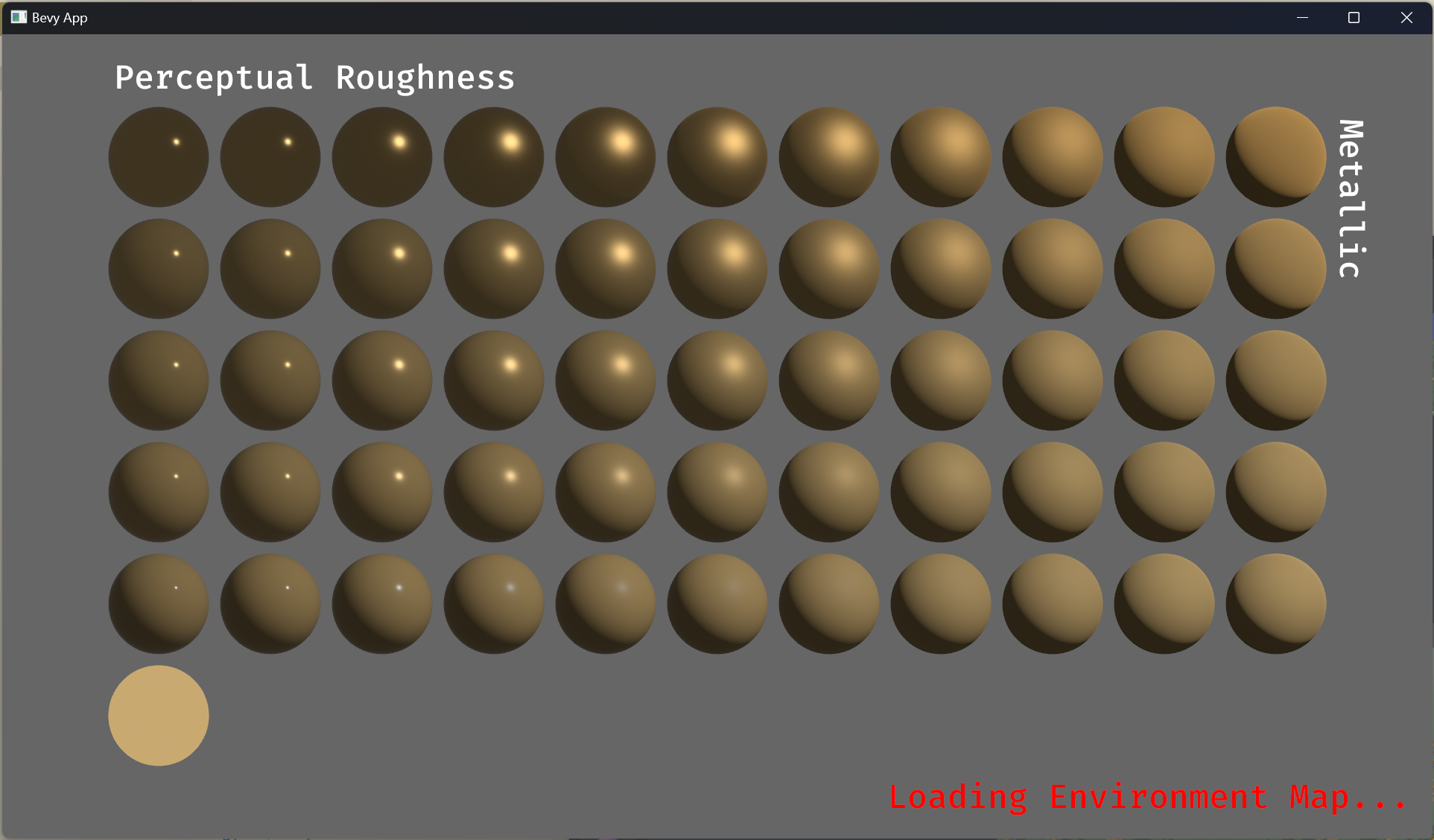

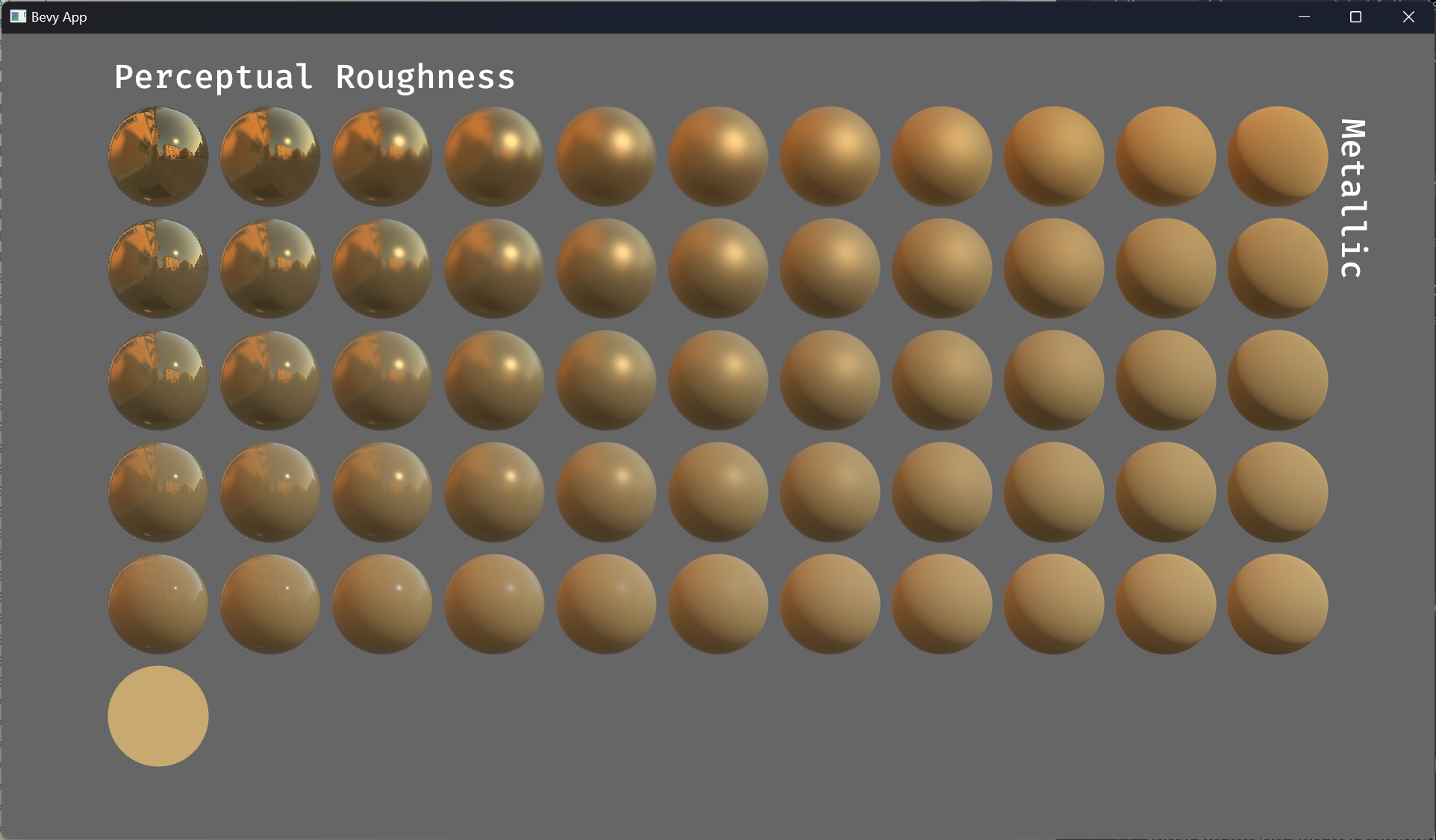

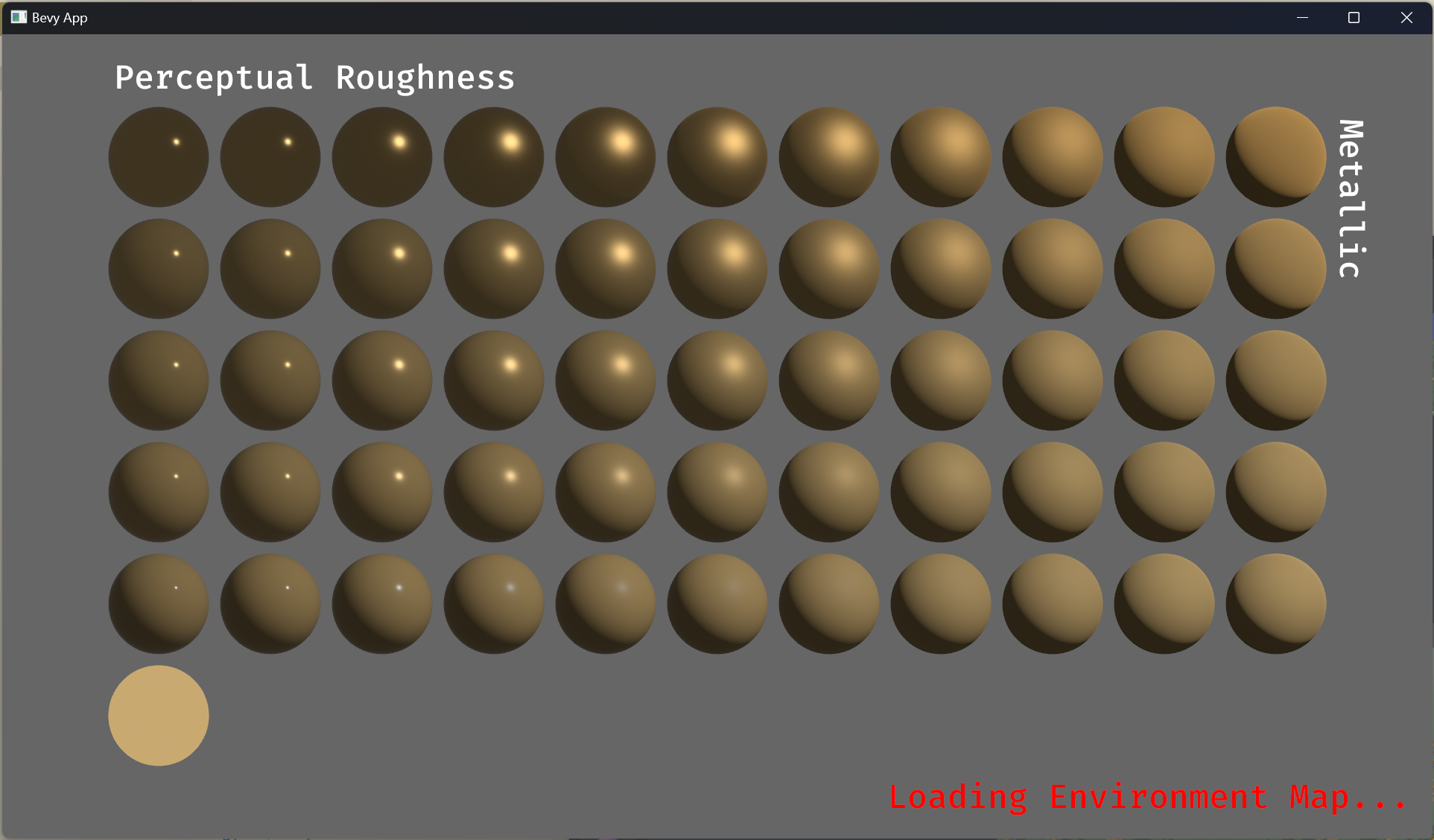

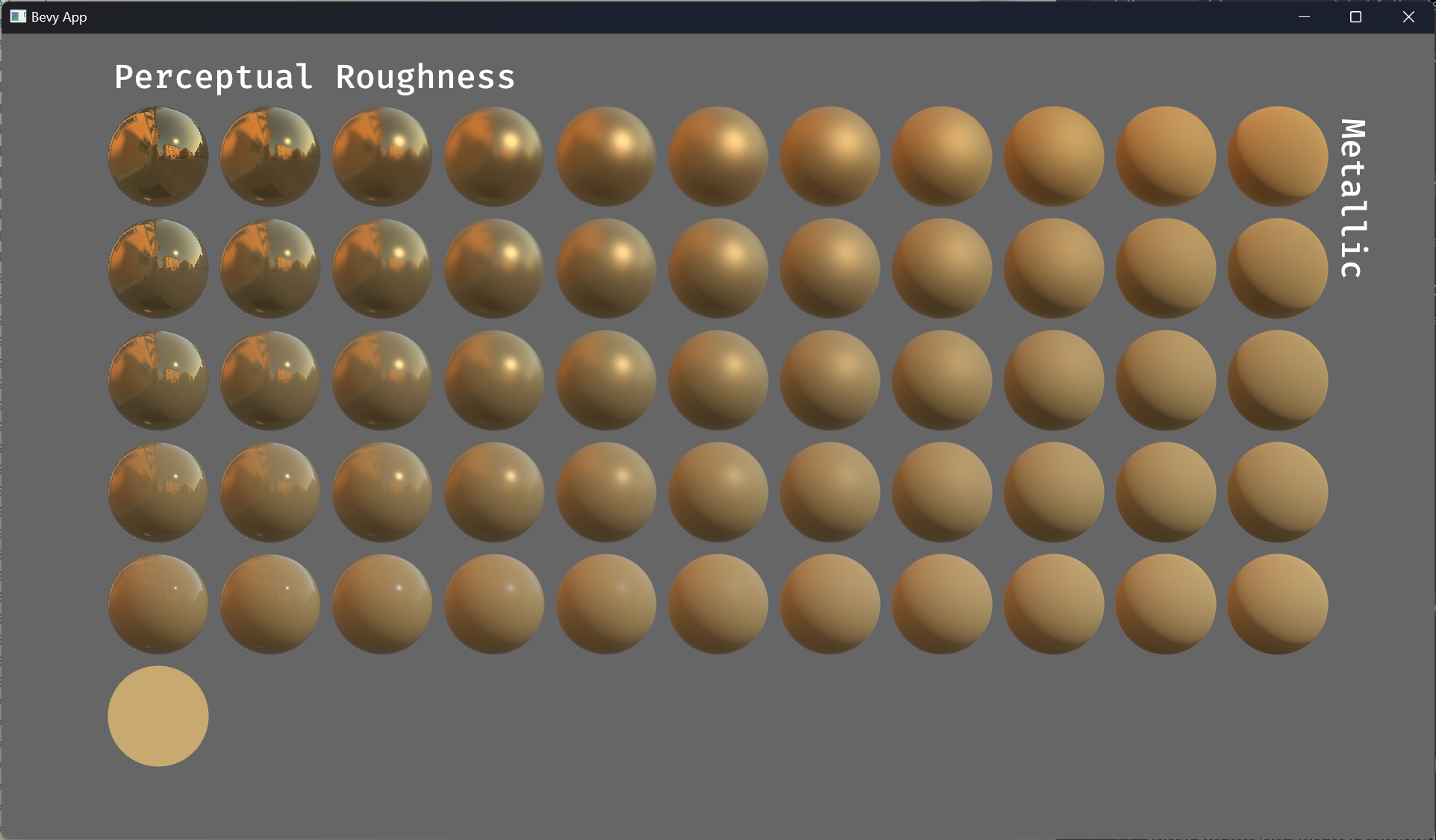

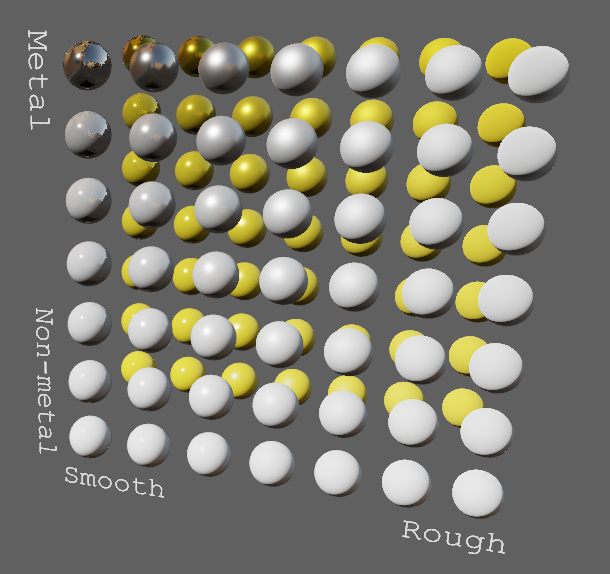

EnvironmentMapLight, BRDF Improvements (#7051)

(Before)

(After)

# Objective

- Improve lighting; especially reflections.

- Closes https://github.com/bevyengine/bevy/issues/4581.

## Solution

- Implement environment maps, providing better ambient light.

- Add microfacet multibounce approximation for specular highlights from Filament.

- Occlusion is no longer incorrectly applied to direct lighting. It now only applies to diffuse indirect light. Unsure if it's also supposed to apply to specular indirect light - the glTF specification just says "indirect light". In the case of ambient occlusion, for instance, that's usually only calculated as diffuse though. For now, I'm choosing to apply this just to indirect diffuse light, and not specular.

- Modified the PBR example to use an environment map, and have labels.

- Added `FallbackImageCubemap`.
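The occlusion change in the list above amounts to the following combination of lighting terms. This is a sketch using single scalar channels for brevity; the `Lighting` struct and `shade` function are hypothetical names, and leaving indirect specular unoccluded reflects the choice stated above rather than a settled rule.

```rust
/// Hypothetical per-pixel lighting terms (one scalar channel for brevity).
struct Lighting {
    direct: f32,
    indirect_diffuse: f32,
    indirect_specular: f32,
}

/// Applies occlusion to indirect diffuse light only; direct light and
/// indirect specular light are left untouched.
fn shade(lighting: &Lighting, occlusion: f32) -> f32 {
    lighting.direct + lighting.indirect_diffuse * occlusion + lighting.indirect_specular
}
```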

## Implementation

- IBL technique references can be found in environment_map.wgsl.

- It's more accurate to use a LUT for the scale/bias. Filament has a good reference on generating this LUT. For now, I just used an analytic approximation.

- For now, environment maps must first be prefiltered outside of bevy using a 3rd party tool. See the `EnvironmentMap` documentation.

- Eventually, we should have our own prefiltering code, so that we can have dynamically changing environment maps, as well as let users drop in an HDR image and use asset preprocessing to create the needed textures using only bevy.

---

## Changelog

- Added an `EnvironmentMapLight` camera component that adds additional ambient light to a scene.

- StandardMaterials will now appear brighter and more saturated at high roughness, due to internal material changes. This is more physically correct.

- Fixed StandardMaterial occlusion being incorrectly applied to direct lighting.

- Added `FallbackImageCubemap`.

Co-authored-by: IceSentry <c.giguere42@gmail.com>

Co-authored-by: James Liu <contact@jamessliu.com>

Co-authored-by: Rob Parrett <robparrett@gmail.com>

2023-02-09 16:46:32 +00:00

|

|

|

BevyDefault, DefaultImageSampler, FallbackImageCubemap, FallbackImagesDepth,

|

|

|

|

|

FallbackImagesMsaa, GpuImage, Image, ImageSampler, TextureFormatPixelInfo,

|

2022-10-26 20:13:59 +00:00

|

|

|

},

|

|

|

|

|

view::{ComputedVisibility, ViewTarget, ViewUniform, ViewUniformOffset, ViewUniforms},

|

2023-03-18 01:45:34 +00:00

|

|

|

Extract, ExtractSchedule, Render, RenderApp, RenderSet,

|

2021-11-18 03:45:02 +00:00

|

|

|

};

|

|

|

|

|

use bevy_transform::components::GlobalTransform;

|

Add morph targets (#8158)

# Objective

- Add morph targets to `bevy_pbr` (closes #5756) & load them from glTF

- Supersedes #3722

- Fixes #6814

[Morph targets][1] (also known as shape interpolation, shape keys, or

blend shapes) allow animating individual vertices with fine-grained

control. This is typically used for facial expressions. By specifying

multiple poses as vertex offsets, and providing a set of weights for each

pose, it is possible to define surprisingly realistic transitions

between poses. Blending between multiple poses also allows composition.

Morph targets are part of the [glTF standard][2] and are a feature of

Unity, Unreal, and Babylon.js, so it is only natural to implement them

in bevy.

## Solution

This implementation of morph targets uses a 3d texture where each pixel

is a component of an animated attribute. Each layer is a different

target. We use a 2d texture for each target, because the number of

attribute×components×animated vertices is expected to always exceed the

maximum pixel row size limit of WebGL2. It copies fairly closely the way

skinning is implemented on the CPU side, while on the GPU side, the

shader morph target implementation is a relatively trivial detail.

We add an optional `morph_texture` to the `Mesh` struct. The

`morph_texture` is built through a method that accepts an iterator over

attribute buffers.

The user-accessible `MorphWeights` component controls the blend of

poses used by mesh instances (so that multiple copies of the same mesh may

have different weights). All the weights are uploaded to a uniform

buffer of 256 `f32`. We limit to 16 poses per mesh, and a total of 256

poses.
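The blending that the weights control can be sketched on the CPU as below. This is an illustration only: the real work happens in a shader reading from the morph texture, and `Vec3` and `morph_position` are hypothetical names.

```rust
/// Stand-in for a 3-component vector.
type Vec3 = [f32; 3];

/// Returns the base position displaced by the weighted sum of per-target offsets:
/// `base + sum(weights[i] * offsets[i])`.
fn morph_position(base: Vec3, offsets: &[Vec3], weights: &[f32]) -> Vec3 {
    let mut out = base;
    for (offset, weight) in offsets.iter().zip(weights) {
        for axis in 0..3 {
            out[axis] += weight * offset[axis];
        }
    }
    out
}
```

The same weighted sum would apply to the normal and tangent attributes, which the changelog lists as the other animated attributes.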

More literature:

* Old Babylon.js implementation (vertex attribute-based):

https://www.eternalcoding.com/dev-log-1-morph-targets/

* Babylon.js implementation (similar to ours):

https://www.youtube.com/watch?v=LBPRmGgU0PE

* GPU gems 3:

https://developer.nvidia.com/gpugems/gpugems3/part-i-geometry/chapter-3-directx-10-blend-shapes-breaking-limits

* Development discord thread

https://discord.com/channels/691052431525675048/1083325980615114772

https://user-images.githubusercontent.com/26321040/231181046-3bca2ab2-d4d9-472e-8098-639f1871ce2e.mp4

https://github.com/bevyengine/bevy/assets/26321040/d2a0c544-0ef8-45cf-9f99-8c3792f5a258

## Acknowledgements

* Thanks to `storytold` for sponsoring the feature

* Thanks to `superdump` and `james7132` for guidance and help figuring

out stuff

## Future work

- Handling of fewer and more attributes (eg: animated uv, animated

arbitrary attributes)

- Dynamic pose allocation (so that zero-weighted poses aren't uploaded

to GPU, for example, which enables many more total poses)

- Better animation API, see #8357

----

## Changelog

- Add morph targets to bevy meshes

- Support up to 64 poses per mesh of individually up to 116508 vertices,

animation currently strictly limited to the position, normal and tangent

attributes.

- Load a morph target using `Mesh::set_morph_targets`

- Add `VisitMorphTargets` and `VisitMorphAttributes` traits to

`bevy_render`, this allows defining morph targets (a fairly complex and

nested data structure) through iterators (ie: single copy instead of

passing around buffers), see documentation of those traits for details

- Add `MorphWeights` component exported by `bevy_render`

- `MorphWeights` control mesh's morph target weights, blending between

various poses defined as morph targets.

- `MorphWeights` are directly inherited by direct children (single level

of hierarchy) of an entity. This allows controlling several mesh

primitives through a unique entity _as per GLTF spec_.

- Add `MorphTargetNames` component, naming each index of the loaded morph

targets.

- Load morph targets weights and buffers in `bevy_gltf`

- Handle morph target animations in `bevy_animation` (previously, it

was a `warn!` log)

- Add the `MorphStressTest.gltf` asset for morph targets testing, taken

from the glTF samples repo, CC0.

- Add morph target manipulation to `scene_viewer`

- Separate the animation code in `scene_viewer` from the rest of the

code, reducing `#[cfg(feature)]` noise

- Add the `morph_targets.rs` example to show off how to manipulate morph

targets, loading `MorphStressTest.gltf`

## Migration Guide

- (very specialized, unlikely to be touched by 3rd parties)

- `MeshPipeline` now has a single `mesh_layouts` field rather than

separate `mesh_layout` and `skinned_mesh_layout` fields. You should

handle all possible mesh bind group layouts in your implementation

- You should also properly handle the new `MORPH_TARGETS` shader def and

mesh pipeline key. A new function is exposed to make this easier:

`setup_morph_and_skinning_defs`

- The `MeshBindGroup` is now `MeshBindGroups`, cached bind groups are

now accessed through the `get` method.

[1]: https://en.wikipedia.org/wiki/Morph_target_animation

[2]:

https://registry.khronos.org/glTF/specs/2.0/glTF-2.0.html#morph-targets

---------

Co-authored-by: François <mockersf@gmail.com>

Co-authored-by: Carter Anderson <mcanders1@gmail.com>

2023-06-22 20:00:01 +00:00

|

|

|

use bevy_utils::{tracing::error, HashMap, Hashed};

|

2023-08-27 14:33:49 +00:00

|

|

|

use fixedbitset::FixedBitSet;

|

2023-06-22 20:00:01 +00:00

|

|

|

|

|

|

|

|

use crate::render::{

|

|

|

|

|

morph::{extract_morphs, prepare_morphs, MorphIndex, MorphUniform},

|

|

|

|

|

MeshLayouts,

|

|

|

|

|

};

|

Shader Imports. Decouple Mesh logic from PBR (#3137)

## Shader Imports

This adds "whole file" shader imports. These come in two flavors:

### Asset Path Imports

```rust

// /assets/shaders/custom.wgsl

#import "shaders/custom_material.wgsl"

[[stage(fragment)]]

fn fragment() -> [[location(0)]] vec4<f32> {

return get_color();

}

```

```rust

// /assets/shaders/custom_material.wgsl

[[block]]

struct CustomMaterial {

color: vec4<f32>;

};

[[group(1), binding(0)]]

var<uniform> material: CustomMaterial;

```

### Custom Path Imports

Enables defining custom import paths. These are intended to be used by crates to export shader functionality:

```rust

// bevy_pbr2/src/render/pbr.wgsl

#import bevy_pbr::mesh_view_bind_group

#import bevy_pbr::mesh_bind_group

[[block]]

struct StandardMaterial {

base_color: vec4<f32>;

emissive: vec4<f32>;

perceptual_roughness: f32;

metallic: f32;

reflectance: f32;

flags: u32;

};

/* rest of PBR fragment shader here */

```

```rust

impl Plugin for MeshRenderPlugin {

fn build(&self, app: &mut bevy_app::App) {

let mut shaders = app.world.get_resource_mut::<Assets<Shader>>().unwrap();

shaders.set_untracked(

MESH_BIND_GROUP_HANDLE,

Shader::from_wgsl(include_str!("mesh_bind_group.wgsl"))

.with_import_path("bevy_pbr::mesh_bind_group"),

);

shaders.set_untracked(

MESH_VIEW_BIND_GROUP_HANDLE,

Shader::from_wgsl(include_str!("mesh_view_bind_group.wgsl"))

.with_import_path("bevy_pbr::mesh_view_bind_group"),

);

```

By convention these should use rust-style module paths that start with the crate name. Ultimately we might enforce this convention.

Note that this feature implements _run time_ import resolution. Ultimately we should move the import logic into an asset preprocessor once Bevy gets support for that.

## Decouple Mesh Logic from PBR Logic via MeshRenderPlugin

This breaks out mesh rendering code from PBR material code, which improves the legibility of the code, decouples mesh logic from PBR logic, and opens the door for a future `MaterialPlugin<T: Material>` that handles all of the pipeline setup for arbitrary shader materials.

## Removed `RenderAsset<Shader>` in favor of extracting shaders into RenderPipelineCache

This simplifies the shader import implementation and removes the need to pass around `RenderAssets<Shader>`.

## RenderCommands are now fallible

This allows us to cleanly handle pipelines+shaders not being ready yet. We can abort a render command early in these cases, preventing bevy from trying to bind group / do draw calls for pipelines that couldn't be bound. This could also be used in the future for things like "components not existing on entities yet".

# Next Steps

* Investigate using Naga for "partial typed imports" (ex: `#import bevy_pbr::material::StandardMaterial`, which would import only the StandardMaterial struct)

* Implement `MaterialPlugin<T: Material>` for low-boilerplate custom material shaders

* Move shader import logic into the asset preprocessor once Bevy gets support for that.

Fixes #3132

2021-11-18 03:45:02 +00:00

#[derive(Default)]

pub struct MeshRenderPlugin;

2023-08-28 16:58:45 +00:00

/// Maximum number of joints supported for skinned meshes.

pub const MAX_JOINTS: usize = 256;

2022-03-29 18:31:13 +00:00

const JOINT_SIZE: usize = std::mem::size_of::<Mat4>();

pub(crate) const JOINT_BUFFER_SIZE: usize = MAX_JOINTS * JOINT_SIZE;
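
For a sense of scale, the arithmetic behind these constants can be checked with a small sketch. It assumes `Mat4` is a 4x4 matrix of `f32` and uses a hypothetical `Mat4Like` array type as a stand-in so that the snippet needs no external crate.

```rust
use std::mem::size_of;

/// Stand-in for `Mat4`, assumed to be sixteen `f32` values (4 x 4).
type Mat4Like = [[f32; 4]; 4];

const MAX_JOINTS: usize = 256;
/// 16 floats * 4 bytes = 64 bytes per joint matrix.
const JOINT_SIZE: usize = size_of::<Mat4Like>();
/// 256 joints * 64 bytes = 16384 bytes (16 KiB) per joint buffer.
const JOINT_BUFFER_SIZE: usize = MAX_JOINTS * JOINT_SIZE;
```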

2022-07-14 21:17:16 +00:00

pub const MESH_VERTEX_OUTPUT: HandleUntyped =

HandleUntyped::weak_from_u64(Shader::TYPE_UUID, 2645551199423808407);

Split mesh shader files (#4867)

# Objective

- Split PBR and 2D mesh shaders into types and bindings to prepare the shaders to be more reusable.

- See #3969 for details. I'm doing this in multiple steps to make review easier.

---

## Changelog

- Changed: 2D and PBR mesh shaders are now split into types and bindings. The following shader imports are available: `bevy_pbr::mesh_view_types`, `bevy_pbr::mesh_view_bindings`, `bevy_pbr::mesh_types`, `bevy_pbr::mesh_bindings`, `bevy_sprite::mesh2d_view_types`, `bevy_sprite::mesh2d_view_bindings`, `bevy_sprite::mesh2d_types`, `bevy_sprite::mesh2d_bindings`

## Migration Guide

- In shaders for 3D meshes:
  - `#import bevy_pbr::mesh_view_bind_group` -> `#import bevy_pbr::mesh_view_bindings`
  - `#import bevy_pbr::mesh_struct` -> `#import bevy_pbr::mesh_types`
  - NOTE: If you are using the mesh bind group at bind group index 2, you can remove those binding statements in your shader and just use `#import bevy_pbr::mesh_bindings`, which itself imports the mesh types needed for the bindings.
- In shaders for 2D meshes:
  - `#import bevy_sprite::mesh2d_view_bind_group` -> `#import bevy_sprite::mesh2d_view_bindings`
  - `#import bevy_sprite::mesh2d_struct` -> `#import bevy_sprite::mesh2d_types`
  - NOTE: If you are using the mesh2d bind group at bind group index 2, you can remove those binding statements in your shader and just use `#import bevy_sprite::mesh2d_bindings`, which itself imports the mesh2d types needed for the bindings.
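
The renames in this migration guide are mechanical, so they could be applied with a small script. The sketch below is one hypothetical way to do that: `RENAMES` and `migrate_imports` are illustrative names, not part of Bevy. It rewrites only lines that import an old path exactly, and it does not handle the NOTE cases about replacing manual bindings at bind group index 2.

```rust
/// Old import path -> new import path, taken from the migration guide.
static RENAMES: [(&str, &str); 4] = [
    ("bevy_pbr::mesh_view_bind_group", "bevy_pbr::mesh_view_bindings"),
    ("bevy_pbr::mesh_struct", "bevy_pbr::mesh_types"),
    ("bevy_sprite::mesh2d_view_bind_group", "bevy_sprite::mesh2d_view_bindings"),
    ("bevy_sprite::mesh2d_struct", "bevy_sprite::mesh2d_types"),
];

/// Hypothetical helper: rewrites `#import` lines that name an old path.
/// All other lines are passed through unchanged; a trailing newline is not kept.
fn migrate_imports(source: &str) -> String {
    source
        .lines()
        .map(|line| {
            let renamed = line
                .trim()
                .strip_prefix("#import ")
                .and_then(|path| RENAMES.iter().find(|(old, _)| *old == path.trim()));
            match renamed {
                Some((_, new)) => format!("#import {new}"),
                None => line.to_string(),
            }
        })
        .collect::<Vec<_>>()
        .join("\n")
}
```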

2022-05-31 23:23:25 +00:00

pub const MESH_VIEW_TYPES_HANDLE: HandleUntyped =

HandleUntyped::weak_from_u64(Shader::TYPE_UUID, 8140454348013264787);

pub const MESH_VIEW_BINDINGS_HANDLE: HandleUntyped =

2021-11-18 03:45:02 +00:00

HandleUntyped::weak_from_u64(Shader::TYPE_UUID, 9076678235888822571);

2022-05-31 23:23:25 +00:00

pub const MESH_TYPES_HANDLE: HandleUntyped =

Shader Imports. Decouple Mesh logic from PBR (#3137)

## Shader Imports

This adds "whole file" shader imports. These come in two flavors:

### Asset Path Imports

```rust
// /assets/shaders/custom.wgsl
#import "shaders/custom_material.wgsl"

[[stage(fragment)]]
fn fragment() -> [[location(0)]] vec4<f32> {
    return get_color();
}
```